Digital twin, physics-based model, and machine learning applied to damage detection in structures

Abstract

This work is interested in digital twins, and the development of a simplified framework for them, in the context of dynamical systems. Digital twin is an ingenious concept that helps on organizing different areas of expertise aiming at supporting engineering decisions related to a specific asset; it articulates computational models, sensors, learning, real time analysis, diagnosis, prognosis, and so on. In this framework, and to leverage its capacity, we explore the integration of physics-based models with machine learning. A digital twin is constructed for a damaged structure, where a discrete physics-based computational model is employed to investigate several damage scenarios. A machine learning classifier, that serves as the digital twin, is trained with data taken from a stochastic computational model. This strategy allows the use of an interpretable model (physics-based) to build a fast digital twin (machine learning) that will be connected to the physical twin to support real time engineering decisions. Different classifiers (quadratic discriminant, support vector machines, etc) are tested, and different model parameters (number of sensors, level of noise, damage intensity, uncertainty, operational parameters, etc) are considered to construct datasets for the training. The accuracy of the digital twin depends on the scenario analyzed. Through the chosen application, we are able to emphasize each step of a digital twin construction, including the possibility of integrating physics-based models with machine learning. The different scenarios explored yield conclusions that might be helpful for a large range of applications.

Keynotes: digital twin, physical based model, machine learning classifier, damage identification, structural dynamics.

1 Introduction

The fourth industrial revolution is related to data, artificial intelligence, robotics, internet of things, and much more [1]. Every day there are more sensors, data, and computer power at our disposal. In such a context, the interest in developing digital twins for engineering systems is rapidly growing. More specifically, here we would like to treat structural dynamic problems, such as wind turbine dynamics and drill string dynamics, operating in locations of difficult access and harsh environments.

There is no consolidated view on what digital twins are [2, 3, 4]. However, it is consensual that a digital twin is a virtual representation of a specific physical asset, which uses data collected from this asset to connect digital and physical parts. The potential benefits within such a generic framework have sparked much attention from different industrial segments. In section 2 of the present paper, a digital twin conceptual framework and its ingredients are detailed.

A virtual representation means a physics-based computational model and/or a machine learning model. The former is the usual model obtained from the combination of first principles (e.g. Newton’s second law) with closure models (e.g. constitutive model), and the later is obtained through fitting data to some mathematical structure; for instance, neural networks [5], or any other data-driven model [6, 7, 8].

Recently, lots of researches are dedicated to leverage computational models, by integrating physics-based models with machine learning [9, and references therein]. The present paper aims at tackling this integration in the context of building digital twins, and some strategies are depicted in section 3. It should be noticed that the use of a physics-based model ensures physical interpretability (very desired for engineering systems), and machine learning models are very adapted to data, and suited to real time application [10].

A simple structure, modeled as a lumped-parameter system, is the asset to be tracked by a digital twin in the present analysis. The steps of constructing a digital twin are emphasized, and a strategy to combine physics-based models with machine learning is employed. The main ingredients of our digital twin are: (i) computational model, (ii) uncertainty quantification, (iii) calibration/update of the model using data from the physical twin.

The contributions of the present paper are twofold: (i) to construct a digital twin conceptual framework, in the context of structural dynamics, to detect damage in structures, and (ii) to assess a strategy to combine physics-based models with machine learning, for the system under analysis. In this attempt, section 2 gives a general view of digital twins, and section 3 summarizes ideas related to physics-based models and machine learning. There is an effort of the research community to explore these possibilities, and their many variations, in several applications.

The strategy employed here is detailed in section 4. It considers a stochastic physics-based computational model (with different damage scenarios) to train a machine learning classifier (our digital twin). This methodology might be applied whenever a computational model is useful to understand (and catalog) different behaviors (or failures) of a dynamical system. The digital twin classifier will be connected to the physical twin, supporting real time engineering decisions.

There is an increasing body of literature in line with the main topics under analysis here. Bigoni and Hesthaven [11] employ a strategy similar to the one analyzed in the present work. They called it simulation-based anomaly detection, and propose it in the context of structural health monitoring; where Support Vector Machines are used to detect anomalies. Zhao et al [12] apply deep learning (auto-encoder, convolution networks, etc.) in machine health monitoring systems. A deep learning based framework for prognostics and health monitoring is also proposed by Booyse et al [13]. Karvea et al [14] propose a digital twin approach for damage diagnosis, damage prognosis, and optimization for crack growth. Finally, Kapteyn et al [15] develop a digital twin for an aerial vehicle, and consider a damaged structure. The computational physics-based model is updated using a machine learning classification technique called optimal trees [16]. After training the classifier with datasets from a library that contains models with different levels of degradation (damage), the machine learning classifier is able to choose the best candidate model, given new experimental data. A state-of-art digital twins for modelling and simulation in engineering dynamics applications can be found in [4].

This paper starts establishing, in section 2, the digital twin framework for our analysis. Then, alternatives to combine physics-based models with machine learning, within the context of a digital twin, are detailed in section 3. The physical twin for damage detection is developed in section 4, and it is assessed in section 5. Finally, the concluding remarks are made in the last section of the paper.

2 A Digital twin framework

Jones et al. [2] performed a systematic literature review on Digital Twin, and, after consolidation, reached thirteen main characteristics. Some of them are exposed here, adapted to our specific context. Wagg et al. [4] presents a state-of-art more related to the present work, modeling and simulation in engineering dynamics applications. Some elements exposed in [4] will be explored further in this section.

Figure 1 shows a schematic representation of a digital twin conceptual framework. Measurements are taken from the physical twin (wind turbine) to calibrate/update the digital twin. The digital twin is composed of a computational model (physics-based and/or machine learning models) and a stochastic layer to take into account uncertainties. Note that the inverse problem (calibration) is stochastic, which can be tackled with the Bayesian methodology [17], for instance.

A digital twin is well suited to real time applications [10], and it can be useful on system automation [18]. Its goal is to support engineering and operational decisions, and it can be used for: (i) feeding information to actuators and operational parameters, (ii) setting up alarms, (iii) performing diagnosis, (iv) performing prognosis (what if questions and estimate residual life), and also (v) designing a system with improved performance, (vi) optimizing sensors/actuators locations, etc.

A digital twin is more than a computational model. It is a computational model, that takes into account uncertainties, and is calibrated with data measured from its physical twin (a specific asset). It is updated whenever needed to track the life cycle of the asset; i.e., it evolves with time, replicating the history of performance and degradation of its twin. Some pretty well established disciplines are part of a digital twin framework; for example, finite element model [19], neural networks [5], digital signal processing [20], structural health monitoring [21], uncertainty quantification [22], inverse problem [23], model updating [24], and verification and validation [25]. All of these areas should be coordinated inside the digital twin framework to provide results that support decisions concerning a specific asset goals.

In this digital twin conceptual framework, we consider three mandatory ingredients: (i) computational model, (ii) uncertainty quantification, and (iii) calibration using laboratory (off-line) and field online data from the asset (physical twin). Some remarks should be made:

-

•

There are physical-to-virtual and virtual-to-physical connections. Data collected from the physical twin are used to calibrate/update the digital twin. And, the predictions of the digital twin are used to support decision concerning the physical twin operation (parameter values, control strategy, degradation evaluation, etc).

-

•

The digital twin must represent the main characteristics of the physical twin within a given confidence.

-

•

The level of confidence of the digital twin predictions should be calculated by means of a stochastic analysis (e.g. probability of failure). Note: there are other approaches to model uncertainties (e.g. fuzzy sets [26]), but they are not considered here.

-

•

The computational model might be physics-based and/or machine learning. Specifically, we are interested in leveraging the computational model integrating both types of modeling; see section 3.

- •

The framework presented in this section can be expanded to capture a wider picture. For instance (details can be found in [4, and references therein]): combination of multiple models (different physics, fidelity, scales) of the structure, laboratory tests using components from the structure, multiple teams of experts, computations of the subsystems in parallel, commissioning, process control, software integration and management, technical and organisational problems, autonomous manufacturing. The digital twin can start in the design phase of its physical counterpart, and pass through the manufacturing process. Wagg et al [4] proposed a W model for product design, where a specific virtual prototyping stage is included and used as the basis for the digital twin.

3 Integrating physics-based models with machine learning

Let us start this section commenting on some general characteristics of physics-based and machine learning models. Before, it should be mentioned that, while constructing a model, and to extract most of it, the role of the specialist (expert knowledge) is extreme valuable. The model hypotheses and goals must be clearly stated, and the characteristics of the target engineering application will determine the type and sophistication of modeling. One should ask questions, such as: How complex is the system? Is the physics well understood? What kind of data are available, and what is the acquisition rate? Is it a real time application? What is the level of uncertainty (of inputs, parameters, models, and outputs)? Etc.

A data-driven analysis is paramount in the digital twin realm. Both physics-based and machine learning models must be calibrated/trained with experimental or field data. Part of the data should be separated for calibration/training, and the remainder for validation/testing. More complex models have more parameters, what makes the calibration procedure more difficult. Depending on the problem, normalization of data and parameters, and regularization of objective functions, are highly recommended [7].

Some characteristics of a physics-based model are listed below:

-

•

It is constructed using first principles (e.g. Newton’s second law) combined with phenomenological closure models (e.g. constitutive models, friction models, damping models, boundary conditions, joints)

-

•

Each parameter has a clear physical interpretation

-

•

One can build high fidelity time consuming computational models of complex engineering systems [28]

-

•

One can calibrate the model at a given operational condition and use it to analyze different scenarios (good extrapolation capacity)

In summary, we can build high fidelity and interpretable physics-based models, that can be used to analyze a multiplicity of new scenarios. Nevertheless, uncertainty is an important issue for complex systems, with joints, friction, and multi-physics, for example. And if part of the physics is not known, another mathematical structure should take place. Finally, they can have high computational cost, which is critical for real time applications. To alleviate this last issue, reduced-order models serving as proxies for the original expensive one might be constructed [29, 30, 31].

Some characteristics of a machine learning model are listed below [7]:

-

•

It is obtained from some mathematical architecture that is not based on physical laws; it is a linear or a complicated nonlinear transformation of inputs into outputs

-

•

Usually its parameters are seldom physically interpretable

- •

-

•

Over-fitting is an issue and should be avoided

-

•

The capacity of extrapolation is limited; if the machine learning model is not trained for a specific scenario, it can not generally make predictions about it

In summary, we can build pretty fancy data-driven machine learning models, that can be used to tackle complex engineering problems. No physics laws are needed, and, if enough data is available, their predictions can be accurate. Furthermore, they run fast, amenable to real time applications. However, they are often limited to the training domain, and it is usually hard to extrapolate the results with confidence. In addition, they can not be used to design a new system. Finally, they usually need more data for the training (calibration) to compensate the absence of an initial mathematical structure (materialized as a physical law); and finding an optimal network architecture, for example, remains an art.

It seems to make a lot of sense to integrate physics-based models with machine learning to take advantage of their pros and diminish their cons. And that is exactly what a lot of researchers are doing [9, and references therein], setting the realm of Physics aware Machine Learning. There are many possibilities for this integration, some of which are out of the scope of the present work, such as using machine learning to discover governing equations [32], using machine learning to solve partial differential equations [33], incorporating physical constraints into the machine learning [34, 35], transfer learning using physics-based model to pre-train the machine learning [36], embedding physical principles into the neural network design [37], analyzing the mismatch between a physics based model and data [34].

We are particularly interested in using physics-based models for intensively simulating different damage scenarios to be tracked during the online operation of the digital twin. The resulting significant amount of data is employed to train a machine learning [15, 11, 38] that serves as a digital twin to detect damage, or to select an appropriate model, in the structural system. It is important to highlight the role played by a physics-based model in amplifying the interpretability of machine learning tools, what is crucial for the success of a digital twin in achieving the main goals of the detection system: locating and evaluating the severity of the damage. Another critical point in such a strategy for putting together an effective digital twin relies on conciliating the production of accurate information through the simulations and the required amount of data to train properly the machine learning classifier. That can be achieved by a judicious design of the computer model, as we illustrate in our example of a digital twin prototype to be introduced in the next section.

4 A Digital Twin to damage detection in structures

In the present section, we give form to the above ideas and concepts that delineates a digital twin through a prototype dedicated to damage detection. Identification of damage in structures is conveniently phrased as an inversion problem, in which the input data is obtained by sensors and the outputs are damage localization and severity. Models are used to connect inputs and outputs. The resulting mathematical problem is usually cumbersome to be solved in real time applications. Here, to cope with the need of providing online responses, we follow a nonstandard approach. We train a machine learning classifier using synthetic data produced by a physics-based model that replicates, at a certain extent, the real structure dynamic response. The role of such classifier is to relate the inputs (sensors signals) to plausible damage scenarios obtained though the model in an off-line stage.

In addition to the profile of digital twin described above, we have to consider the three main goals of damage detection: identify the presence, location and severity of damaged regions. Our proposal addresses those issues with the help of simulations employing a physics-based model. Indeed, [11] also uses synthetic data for training their classifier but differs in the sense that localization is achieved by assigning to each critical area one digital twin and positioning sensors accordingly. Moreover, one can also extend such a diagnosis perspective, by estimating the residual life of the structure, pinpointing risks to its future functioning. This prognosis phase is not addressed here.

A bar structure is used to illustrate our prototype of physical twin. Then, a discrete computational model is built to represent the physical twin, and to support the interpretability of the machine learning tools embedded in it. The physics-based model with a localized damage is used to simulate different degraded scenarios of the structure. For this purpose, a stochastic model is developed to construct datasets. We go step-by-step in the design of the prototype in the sequence of this section.

4.1 The Physical twin

The physical twin considered here consists of a slender dynamic structure that vibrates axially, as depicted in the form of a sketch in Fig. 2. Indeed, in the present application, we do not have a real physical twin. Hence, a prismatic bar governed by the following partial differential equation, considered here as a high-fidelity model of the asset, is used to emulate it. Such equation results from the combination of the balance of linear momentum with a elastic constitutive equation.

| (1) |

where is the density of the material, is the elasticity modulus, is the cross section area, is the displacement field that is a function of the independent variables (position) and (time), and if the force per unit length. This partial differential equation is discretized by means of the finite element method, from which we obtained the discretized system (linear shape functions are employed)

| (2) |

with the appropriate initial conditions, and where the subscript stands for physical twin. The usual mass, stiffness and proportional damping matrix () are shown in the equation; in which and are positive constants. A fixed-free boundary condition is considered, and the system is rewritten in the frequency domain

| (3) |

where . The system is discretized in forty finite elements (elementary matrices are in Appendix A), and , , , , , and . The first three natural frequencies, computed from the mass and stiffness matrices (generalized eigenvalue problem) are Hz and the first damping ratios are %.

4.2 The discrete computational model

The computational model is physics-based, and is constructed using a 6-DOF lumped parameter description, as displayed in (Fig. 3):

| (4) |

where the mass and the stiffness matrices, and , are described in Appendix B, and . The first three natural frequencies, considering and , are Hz and the first damping ratios are %.

A lumped parameter model fulfills the requirements raised before. It is able to furnish a significant amount of training data with reasonable computational costs, capturing the essential dynamic response of the physical counterpart, within the frequency range of interest, as will be made clear in the sequence. Moreover, it also equipped to handle the two main issues of damage detection as each spring can be associate with the average stiffness of segments in the continuum model. Indeed, that can be easily established by using in the finite element discretization a lumped mass distribution, linear elements, and a coarse grid. Such connectivity between continuum and discrete models helps on improving the interpretability of the machine learning classifier.

Figure 4 shows the frequency response of the physical twin (Eq. 3) and the computational model (Eq. 4), where a decorrelated Gaussian noise was added to the physical twin, with zero mean and standard deviation of m, to reproduce usual monitoring conditions. A force with magnitude of N is applied at the right end of the system, and the response is obtained at the same position, which corresponds to the sixth degree-of-freedom of the discrete computational model. The response of this computational model does not match the one of the physical twin, but it is yet quite representative. The amplitudes of the responses are comparable and the error in the first natural frequencies are lower than 5%.

4.3 The Stochastic computational model

To augment the predictive capability of the computational model by quantifying its confidence level, we take into account uncertainties originated from different sources endowing the original model with a stochastic structure, assuming probabilistic parametric characterizations. We admit that , , , and are independent Uniform random variables .

Therefore, mass, stiffness, and damping matrices are now random , , , as is the response of the system , denoted in bold. The stochastic model is, thus, expressed in the form of its FRF:

| (5) |

Figure 5 shows the physical twin response together with the 95% statistical envelope of the stochastic computational model, where the bounds of each random Uniform variable were taken to plus and minus five percent of the nominal value (e.g. ). The response of the computational model is now able to get closer to the physical twin response. Of course, the price we pay is to deal with a statistical envelope; which represents the level of confidence.

4.4 Damage parametrization

We assume that damage manifests, at the macroscopic scale, as a local loss of stiffness [39], and a simple damage model is considered for the analyses [40]. In the context of our computer model, damage is parametrized by means of a scalar that modulates the reduction in the spring stiffness. The stiffness value is multiplied by at the damage location (corresponding spring), where , with the percentage of the damage expressing its severity. For instance, if a 10% damage is considered at the second spring, (Eq. 6); and the stiffness matrix is recalculated, as well as the damping matrix. Moreover, we have the constraint to be consistent with the underlying physics of damage process [41]. In the present application, we do not follow damage evolution, therefore there is no need to take this constraint into account.

| (6) |

A healthy structure is characterized by in all springs (in all continuum segments), and hence its FRF provides a reference to identify damaged structures in the frequency band of interest. Figure 6 shows the response of the deterministic system without damage together with five damage situations: damage of 20% at the (1) first spring, (2) second spring, (3) third spring, (4) fourth spring, and (5) fifth spring (no damage was considered in the last spring). The response changes depending on the degree of freedom observed, and on the frequency of the applied force. For instance, at 7000 Hz, the response corresponding to the healthy structure (black) touches the curve with 20% of damage at the fourth spring (blue). But, at the same frequency, the response of the sixth DOF shows black and blue curves far apart; see Fig. 6 (b). Such initial studies justify the choice of vibration amplitudes, due to their sensitivity to damage, as principal features to be employed in the identification technique.

(a)

(b)

(b)

4.5 Constructing the training dataset

A supervised learning is employed to train the classifier, where the dataset is constructed using many samples of the displacements of our discrete system: , ,…, as needed. Other responses could be used, such as velocity, acceleration, or deformation, where the most appropriate type of response will depend on the application and on the sensors at disposal. The dataset (features) and the associated damaged scenario (labels) are the input training pair for the machine learning classifier, a central piece of our digital twin, as will be detailed in the sequence.

The dataset structure comprises random samples for each one of the damage scenarios, which are generated using our stochastic computational and damage models (Eqs. 5 and 6). The response of each sample is organized in a row of matrix . For example, the damage scenario can be 10% of damage at spring # 1. Hence,

| (7) |

which is associated to the label . The multivariate nature of the digital twin allows, partially and implicitly, to address two main issues of the damage detection: localization and severity. The final dataset is a dimension matrix, which is a composition of the random samples of each damage scenarios (including the healthy structure), with displacements measured:

| (8) |

As done in section 4.3, the parameters , , , and , are modeled as independent Uniform random variables. In addition, we will allow fluctuations of the damage variable, the forcing frequency, and the measured displacements, to allow a more generalized digital twin; because the damage of the physical twin might be a little higher or a little lower than the nominal value, the excitation frequency might be a little different, and there might be noisy measurements. So, the damage is also modeled as a Uniform random variable with bounds , the excitation frequency idem , and a zero mean Gaussian random noise is added to the amplitude of the response, .

4.6 Construction of the digital twin - machine learning classifier

As mentioned in the last section, the stochastic computational model is used to simulate the response of the system with different damage locations and corresponding intensities. From these simulations, a dataset is constructed to train the digital twin, which is a machine learning classifier, see Fig. 7. The trained classifier can be used in the digital twin platform to deploy a diagnosis and quickly warn the operation if there is damage, and where it is located.

| Classifier | Accuracy |

|---|---|

| Quadratic Discriminant | 93.3% |

| SVM (quadratic) | 93.1% |

| SVM (linear) | 92.2% |

| SVM (cubic) | 86.0% |

| Linear Discriminant | 84.8% |

| KNN | 81.8% |

| SVM (Gaussian) | 80.6% |

| Ensemble (Bagged Trees) | 77.9% |

| Decision Tree | 61.2% |

| Ensemble (RUSBoosted Trees) | 39.5% |

There are several machine learning classifiers, such as k-nearest neighbor, linear/quadratic discriminants, support vector machines, decision tree, and ensemble methods [42]. We tested a number of well established classifiers, and Table 1 shows their accuracy111The parameters of some classifiers can be tuned to achieve a better performance, but this is not the goal of the present work. The idea is to show that the Quadratic Discriminant has a good performance, and other classifiers, such as SVM, perform similarly. Note: quadratic discriminant takes 0.06s to run, while SVM (quadratic) takes 11.97s.,222For the decision Tree, the maximum number of splits is 100, and the split criterion is the Gini’s diversity index. For the Support Vector Machine (SVM), the Box constraint level is 1, and the multi-class method is one-vs-one. For the Gaussian kernel, the scale is 0.61. For the K nearest neighbor (KNN), the number of neighbors is one, and the distance metric is Euclidean. For the Bagged trees, the number of learners is 30. For the RUSBoosted trees, the maximum number of slips is 20 and the learning rate is 0.1., evaluated by the percentage of correctly classified inputs amongst the whole set of data, and where a 5-fold cross validation was employed [42]. The frequency of excitation used was Hz, and the damage =20%. This set of parameters yield good results. In the next sections, we analyze the impact of changing some parameters, on the accuracy of the machine learning model.

The classifier that best performed, observing this accuracy criterion and for the current application, was the quadratic discriminant. Therefore, we present a high-level overview of the quadratic discriminant analysis (QDA), that also naturally accommodates our probabilistic approach. A classifier aims to provide a partition of the multidimensional input domain associate to classes , to be defined by the user, accordingly with previously established objectives.

In QDA, the Bayes formula is used to compute the posterior probability of the -th class given the input vector :

| (9) |

where is the prior probability of the -th class (number of times this class is observed divided by the total number of observations) with , and is the probability density function of the -th class, which is assumed to be Gaussian:

| (10) |

in which the mean and the covariance matrix of class are estimated from the dataset. Note that there is no extra parameter to tune. To judge if a point is from class or , the probability ratio is verified, . If this ratio is greater than one, it is classified as class , i.e., the class with higher probability is chosen. If the covariance matrices of each class are not the same (which is the present case), then the discriminant function is quadratic, hence the name of the method.

To summarize, Fig. 8 shows the digital twin framework for our application. In this framework, physics-based and machine learning models are integrated as follows. Measurements are taken from the physical twin (bar structure) to calibrate a stochastic computational model (physics-based), which is used to train the digital twin (a machine learning classifier). The physics-based model has the advantage of been interpretable, and able to emulate different scenarios of damage. And the machine learning model has the advantage of running fast. The trained digital twin classifier could be used for a real time application. That is, it would be connected to the physical twin, processing new dynamic signals, and, whenever a signal is associated with a damage, there would be a warning.

5 Assessing the Digital Twin

To understand many aspects of the proposed digital twin, several analysis are performed in this section.

5.1 Dataset 1: 20% of damage at each spring

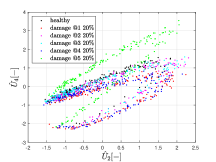

Figure 9 shows the dataset used to train the digital twin, considering a damage of 20% at each spring. We do not analyse scenarios in which two (or more) springs are damaged simultaneously. Two hundred samples were collected for each one of the six configurations analyzed: healthy and damage placed at each i-th spring (i=1,..,5). To improve the training conditions, the data is normalized by subtracting the mean and dividing by the standard deviation of all samples.

(a)

(b)

(b)

(c)

(c)

(d)

(d)

The plots in Fig. 9 display some separation within the data, and, thus, this observed clustering bears potential to an input domain partition by a classifier. Particularly, Fig.9(a), which presents the correlation between displacements of first and sixth DOFs, shows red dots (20% of damage at the first spring) at the bottom of the cloud of points. And, Fig. 9(b), which presents the displacements of the second and fourth DOFs, shows green dots (20% of damage at the third spring) at the top of the cloud of points. It is not clear, a priori, if the machine learning model will be able to classify correctly the damage location. Amazingly, the accuracy of the quadratic discriminant strategy is very high: 93.3%.

One hundred samples, not used in the training, were employed to validate the digital twin. The confusion matrix [43] is presented in Table 2. Each row represents the real damage scenario, and the columns display the results of the classifier. The first row shows that 86 out of the 100 (row sum) samples for the healthy structure are correctly classified. One sample is misclassified as (damage at the second spring), 10 incorrectly classified as , and so on. Moving to the third row, the same statistics for damage detection can be obtained for class : 97 out of the 100 samples are correctly classified, 1 sample is incorrectly classified as healthy, and 2 samples are incorrectly classified as . Therefore, the diagonal terms are related to correctly classified samples, and the off-diagonal terms are related incorrectly classified samples. There is a lot of information in this matrix, such as the quantification of true positives and false negatives. The accuracy is excellent when the damage is at the first spring (100%), at the second spring (97%), at the fourth spring (99%) and at the fifth spring (100%). The most difficult damage to locate is at the third spring (78%). Other information that can be taken from this matrix is the probability of considering a damage when the system is healthy (false-positive), which is (1-86%)=14%. And the probability of indicating a healthy structure, when the third spring is damaged (false-negative), is 19%. Overall, the results are quite satisfactory.

| healthy | ||||||

|---|---|---|---|---|---|---|

| healthy | 86 | 0 | 1 | 10 | 3 | 0 |

| 0 | 100 | 0 | 0 | 0 | 1 | |

| 1 | 0 | 97 | 2 | 0 | 0 | |

| 19 | 0 | 0 | 78 | 2 | 1 | |

| 0 | 0 | 0 | 0 | 99 | 1 | |

| 0 | 0 | 0 | 0 | 0 | 100 |

One might argue that 20% is too much damage. The level of damage will surely affect the performance of the digital twin, as it will be confirmed in the sequence. Actually, the performance of the digital twin depends on several factors, such as combinations of:

-

•

damage severity

-

•

damage location

-

•

number of sensors (and sensors arrangement)

-

•

excitation location

-

•

excitation frequency

-

•

signal to noise ratio

-

•

level of parameter (and model) uncertainties

The next sections will analyze the performance of our digital twin varying these factors.

5.2 Dataset 2: 10% of damage at each spring

If a damage of 10% is considered, the accuracy of the digital twin gets lower (80.3%), which is expected since, as damage decreases, it is harder to distinguish among the different scenarios. At the limit, when d=0, all scenarios converge to (healthy structure).

The confusion matrix is given in Table 3, showing a lower performance, but the main conclusions are similar: the accuracy is very good when the damage is at the first spring (95%), at the fourth spring (90%) and at the fifth spring (90%). The most difficult damage to locate is at the third spring (80%). The probability of considering a damage when the system is healthy (false-positive) is (1-64%)=36%. And the probability of indicating a healthy structure, when the third spring is damaged (false-negative), is 24%.

| healthy | ||||||

|---|---|---|---|---|---|---|

| healthy | 64 | 1 | 13 | 18 | 3 | 1 |

| 1 | 95 | 0 | 3 | 1 | 0 | |

| 9 | 0 | 83 | 4 | 2 | 2 | |

| 24 | 2 | 8 | 60 | 5 | 1 | |

| 1 | 2 | 1 | 4 | 90 | 2 | |

| 4 | 0 | 4 | 2 | 0 | 90 |

5.3 Other datasets: further analyses

As mention before, the accuracy and efficiency of the digital twin depends on the dataset used in the training the classifier, that depends on how the input space, comprising the modal parameters and operational conditions, is explored in the off-line phase.

Table 4 shows the accuracy for different situations. The reference case has an accuracy of 93.3%, as seen in the previous section. The table shows that the accuracy tends to decrease if: the damage is lower, there are less sensors, there is more noise, and there is more uncertainty (e.g. parameter uncertainties). That is, if we have less information, or poorer information, the accuracy will decrease. And, depending on the force location and frequency, the results will change.

| Cases | Accuracy |

|---|---|

| Reference case | 93.3% |

| lower damage (10%) | 80.3% |

| less sensors (2,3,4,5,6) | 74.8% |

| more noise (2) | 85.3% |

| more uncertainty (bounds ) | 81.5% |

| different force frequency (7000 Hz) | 74.8% |

| different force location (first DOF) | 81.0% |

Figure 10 details a little more the digital twin accuracy when some parameters vary, where the red bar represents the reference case. Figure 10(a) and (b) show the accuracy increase when the damage increases, and the uncertainty bounds of the parameters of the stochastic model decrease. Figure 10(c) shows how the accuracy changes depending on the excitation frequency . It can be noted that the accuracy is higher when the system is excited close to the natural frequencies, Hz and Hz. At these frequencies the amplitude is higher and the response is more sensitive to damage comparing to the points in the neighborhood. Of course, we would not expect the system to operate close to the natural frequencies due to resonance.

Figure 10(d) shows the accuracy decrease when less sensors are taken into account. Specifically, the first sensor is the more informative for the present analysis, since removing it leads to the highest drop in the accuracy. If we take away sensors 3 and 5, the accuracy is 89.5%; if we take away sensors 2, 3 and 5 the accuracy is 80.0%. We could enter deeper in this analysis trying to optimize the number of sensors and their locations, but this is not the idea here (another possible strategy to choose sensor locations is the effective independence distribution vector [44, 45]).

(a)

(b)

(b)

(c)

(c)

(d)

(d)

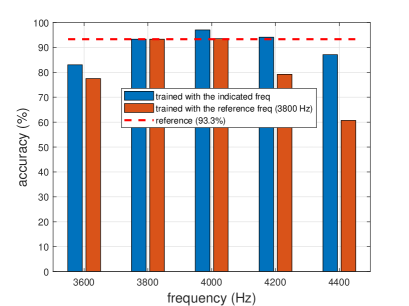

What if we try to extrapolate and employ the digital twin to identify damage in the physical twin under a operational condition different from the one it was trained? If this is done, we would expect a loss in its accuracy, which is what happens in our analysis, as observed in Fig. 11. For example, considering our reference configuration, trained with an excitation frequency Hz, the accuracy of the digital twin is 93.3%. If the digital twin is trained with Hz, the accuracy is 97.0% (a little higher), and if it is trained with Hz, the the accuracy is 83.0% (lower). Now let the reference digital twin (obtained with Hz) be used to classify data obtained for simulations with and Hz. If this is done, the accuracy drops to 93.5% ( Hz) and 77.5% ( Hz). Therefore, the generalization capacity of our digital twin is limited.

In this context, it should be noticed that we are allowing the fluctuation of several parameters of the system to construct the datasets, including the frequency of excitation . If we fix a specific frequency ( Hz) for the training, the accuracy of the digital twin remains the same (around 93.3%). However, we argue that it is interesting to allow fluctuations of the parameters because the physical twin might be, for instance, excited by a force with a frequency different from the nominal one used in the training. Hence, these fluctuations might help the digital twin aiming at achieving a little more generalization. For example, training with the fixed frequency Hz, and testing with the fixed frequency Hz, the digital twin presents an accuracy of 80.5%, which is lower than 83.0% obtained when fluctuations are allowed.

Finally, Fig. 12 shows how the precision of the digital twin changes for different values of training set size. Two thirds of the samples are used for training and one third for validation. We should ensure a minimum sample size to reach convergence. The mean and the coefficient of variation (standard deviation over the mean) of the accuracy are computed with 100 simulations; and the reference case considers 200 hundred points for testing and 100 for validation, for each one of the 6 damage scenarios. This means that the training dataset has a total of 1200 rows (), and the validation dataset has a total of 600 rows (); a total of 1800 points (). If 450 points are considered to calibrate the digital twin, the mean accuracy is a little lower and the coefficient of variation is a little higher than the reference values. But, if 90 points are taken into account, the mean accuracy and the coefficient of variation are reasonably distant from the reference. It should be remarked that it is not simple to train the digital twin using data from the physical twin. Imagine testing the physical asset (e.g. wind turbine, drill string) for each damage condition, several times. Therefore, a physical-based computational model is paramount.

6 Concluding remarks

This paper presents a digital twin conceptual framework for a dynamic structural damage problem. A Digital twin (see section 2) is a virtual representation of a specific physical asset, built to support engineering decisions, especially in real time applications. Three important ingredients of this framework are highlighted: (i) computational model, (ii) uncertainty quantification, and (iii) calibration using data from the physical twin.

To leverage the computational strategy, a physics-based model is combined with a machine learning classifier to construct a digital twin that would be connected to the physical counterpart and support decisions.

The discrete damaged structure analyzed enabled us to construct a digital twin step-by-step, and to understand its characteristics. This knowledge might be helpful to other applications and more complex systems. The accuracy of the digital twin classifier (quadratic discriminant) decreases when: there are less sensors, there is less damage, there are less training points, and there is more uncertainty and noise. We tested Several operational conditions, and their impact on the digital twin accuracy computed. The physics-based computational model is paramount to assure interpretability and to explore different damage scenarios that could not be assessed with the physical twin. Because the physics-based model can be time consuming, training a machine learning classifier allows a fast evaluation of the physical twin in a real time operation. Future investigations include model discrepancy and prognosis [14].

Acknowledgement

We would like to acknowledge that this investigation was financed in part by the Brazilian agencies: Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) - Finance code 001 - Grant PROEX 803/2018, Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPQ) - Grants 400933/2016-0, 302489/2016-9, and Fundação Carlos Chagas Filho de Amparo à Pesquisa do Estado do Rio de Janeiro (FAPERJ) - Grant E-26/201.572/2014.

References

- [1] K. Schwab, The Fourth Industrial Revolution, Currency, 2017.

- [2] D. Jones, C. Snider, A. Nassehi, J. Y, B. Hicks, Characterising the digital twin: A systematic literature review, CIRP Journal of Manufacturing Science and Technology in press (2020).

- [3] R. Ganguli, S. Adhikari, The digital twin of discrete dynamic systems: Initial approaches and future challenges, Applied Mathematical Modelling 77 (2019) 1110–1128.

- [4] D. Wagg, K. Worden, R. Barthorpe, P. Gardner, Digital twins: State-of-the-art and future directions for modelling and simulation in engineering dynamics applications, ASME in press (2020).

- [5] M. A. Nielsen, Neural networks and deep learning, Determination Press, 2015.

- [6] C. Soize, R. Ghanem, Data-driven probability concentration and sampling on manifold, Journal of Computational Physic 321 (2016) 242–258.

- [7] D. Jones, C. Snider, A. Nassehi, J. Y, B. Hicks, A high-bias, low-variance introduction to machine learning for physicists, Physics Reports 810 (2019) 1–124.

- [8] S. Brunton, J. Kutz, Data-driven science and engineering: machine learning, dynamical systems, and control, Cambridge University Press, 2019.

- [9] K. Willard, X. Jia, S. Xu, M. Steinbach, V. Kumar, Integrating physics-based modeling with machine learning: A survey, ArXiv 2003.04919v3 (2020) 1–11.

- [10] H. Kopetz, Real-Time Systems: Design Principles for Distributed Embedded Applications, 2nd Edition, Springer, 2011.

- [11] C. Bigoni, J. S. Hesthaven, Simulation-based anomaly detection and damage localization: An application to structural health monitoring, Comput. Methods Appl. Mech. Engrg. 363 (2020) 112896.

- [12] R. Zhao, R. Yan, Z. Chen, K. Mao, P. Wang, R. X. Gao, Deep learning and its applications to machine health monitoring, Mechanical Systems and Signal Processing 115 (2019) 213–237.

- [13] W. Booyse, D. N. W. a, S. Heyns, Deep digital twins for detection, diagnostics and prognostics, Mechanical Systems and Signal Processing 140 (2020) 106612.

- [14] P. M.Karve, Y. Guo, B. Kapusuzoglu, S. Mahadevan, M. A.Haileb, Digital twin approach for damage-tolerant mission planning under uncertainty, Engineering Fracture Mechanics 225 (2020) 106766.

- [15] M. K. D. Knezevic, K. Willcox, Toward predictive digital twins via component-based reduced-order models and interpretable machine learning, AIAA Scitech Forum 0418 (2020).

- [16] D. Bertsimas, J. Dunn, Optimal classification trees, Machine Learning 106 (7) (2017) 1039–1082.

- [17] D. Sivia, J. Skilling, Data Analysis: A Bayesian Tutorial, Oxford University Press, 2006.

- [18] A. Gupta, S. Arora, J. R. Westcott, Industrial Automation and Robotics, Mercury Learning and Information, 2016.

- [19] T. Hughes, The Finite Element Method, Dover Publications, 2012.

- [20] R. G. Lyons, Understanding Digital Signal Processing, Prentice Hall, 2010.

- [21] C. R. Farrar, K. Worden, An introduction to structural health monitoring, Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 365 (1851) (1991) 303–315.

- [22] C. Soize, Uncertainty Quantification: An Accelerated Course with Advanced Applications in Computational Engineering, Springer, 2017.

- [23] J. Kaipio, E. Somersalo, Statistical and Computational Inverse Problems, Springer, 2004.

- [24] M. Friswell, J. E. Mottershead, Uncertainty Quantification: An Accelerated Course with Advanced Applications in Computational Engineering, Springer Science, 2013.

- [25] C. Roy, W. Oberkampf, A comprehensive framework for verification, validation, and uncertainty quantification in scientific computing, Computer Methods in Applied Mechanics and Engineering 200 (25-28) (2011) 2131–2144.

- [26] L. A. Zadeh, Fuzzy sets, Information and Controls 8 (3) (1965) 338–353.

- [27] T. Ritto, A. Fabro, Investigation of random matrix applications on structural dynamics using a tensor decomposition, Journal of the Brazilian Society of Mechanical Sciences and Engineering 41 (352) (2019).

- [28] C. Farhat, P. Geuzaine, G. Brown, Application of a three-field nonlinear fluid–structure formulation to the prediction of the aeroelastic parameters of an f-16 fighter, Computers & Fluids 32 (1) (2003) 3–29.

- [29] P. Benner, S. Gugercin, K. Willcox, A survey of projection-based model reduction methods for parametric dynamical systems, SIAM Review 57 (4) (2015) 483–531.

- [30] R. Ohayon, C. Soize, Advanced Computational Vibroacoustics: Reduced-Order Models and Uncertainty Quantification, Cambridge University Press, 2014.

- [31] T. Ritto, F. Buezas, R. Sampaio, A new measure of efficiency for model reduction: application to a vibroimpact system, Journal of Sound and Vibration 330 (9) (2011) 1977–1984.

- [32] B. D. Silva, D. Higdon, S. Brunton, N. Kutz, Discovery of physics from data: Universal laws and discrepancies, ArXiv 1906.07906 (2020).

- [33] J. Han, A. Jentzen, W. E, Solving high-dimensional partial differential equations using deep learning (2018).

- [34] A. Karpatne, G. Atluri, J. Faghmous, M. Steinbach, A. Banerjee, A. Ganguly, S. Shekhar, N. Samatova, V. Kumar, Theory-guided data science: A new paradigm for scientific discovery from data, IEEE 29 (10) (2017) 2318–2331.

- [35] M. Raissi, P. Perdikaris, G. Karniadakis, Data-driven probability concentration and sampling on manifold, Journal of Computational Physic 378 (2016) 686–707.

- [36] X. Jia, J. Willard, A. Karpatne, J. Read, J. Zwart, M. Steinbach, V. Kumar, Physics guided rnns for modeling dynamical systems: A case study in simulating lake temperature profiles, SIAM (2019).

- [37] A. Daw, R. Thomas, C. Carey, J. Read, A. Appling, A. Karpatne, Physics-guided architecture (pga) of neural networks for quantifying uncertainty in lake temperature modeling, ArXiv 1911.02682 (2019).

- [38] D. Alves, G. Daniel, H. de Castro, T. Machado, K. Cavalca, O. Gecgel, J. Dias, S. Ekwaro-Osire, Uncertainty quantification in deep convolutional neural network diagnostics of journal bearings with ovalization fault, Mechanism and Machine Theory 149 (2020) 103835.

- [39] A. Pandey, M. Biswas, Damage detection in structures using changes in flexibility, Journal of Sound and Vibration 169 (1) (1994) 3–43176.

- [40] R. Ribeiro, T. Ritto, Damage identification in a multi-dof system under uncertainties using optimization algorithms, Journal of Applied and Computational Mechanics 4 (4) (2018) 365–374.

- [41] D. Castello, L. Stutz, F. Rochinha, A structural defect identification approach based on a continuum damage model, Computers & structures 180 (5-6) (2002) 417–436.

- [42] T. Hastie, R. Tibshirani, J. Friedman, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, second edition Edition, Springer, 2016.

- [43] G. James, D. Witten, T. Hastie, R. Tibshirani, Data-driven science and engineering: machine learning, dynamical systems, and control, Springer Texts in Statistics, 2017.

- [44] D. C. Kammer, Sensor placement for on-orbit modal identification and correlation of large space structures, Journal of Guidance Control, and Dynamics 14 (2) (1991) 251–259.

- [45] T. Ritto, Choice of measurement locations of nonlinear structures using proper orthogonal modes and effective independence distribution vector, Shock and Vibration 697497 (2014) 1–6.

Appendix A Elementary mass and stiffness matrices of the bar model

The elementary mass and stiffness matrices of the bar model are given by ( is the element length):

| (11) |

| (12) |

Appendix B Mass and stiffness matrices of the computational model

The mass and stiffness matrices of the computational model are given by:

| (13) |

| (14) |