Diffusion Models Enable Zero-Shot Pose Estimation for Lower-Limb Prosthetic Users

Abstract

Markerless 2D gait analysis has attracted growing interest in clinical settings. However, its effectiveness for lower-limb amputees has remained limited. In response, this study introduces a zero-shot method that employs image generation diffusion models to achieve markerless pose estimation for lower-limb prosthetic users, presenting a promising approach to gait analysis for this population. Our approach improves the detection of keypoints on prosthetic limbs over existing methods and enables clinicians to gain insight into the kinematics of lower-limb amputees across the gait cycle. The results serve as a proof of concept for the feasibility of this zero-shot approach and underscore its potential to advance rehabilitation through gait analysis for this population.

Keywords: transtibial, transfemoral, amputee, gait, locomotion, biomechanics, movement science

[inst1] Bioinformatics Institute, 30 Biopolis Street, 138671, Singapore

[inst2] Nanyang Technological University, 1 Nanyang Walk, 637616, Singapore

1 Introduction

Human gait, especially in lower limb amputees, is a complex motor task requiring coordinated movement of various body segments. Extensive research has established that individuals in these populations frequently exhibit distinct gait abnormalities and physiological challenges. For example, it is known that gait asymmetry [1, 2] is prevalent, where the prosthetic and intact limbs display different walking patterns. The presence of gait asymmetry may contribute to the development of degenerative joint disease [3]. Previous studies on physiological differences have also revealed that above-knee amputations lead to an increase in oxygen consumption by approximately 49% [4], in addition to an approximately 65% higher energy expenditure when compared to individuals without amputations [5]. These issues could hinder mobility and functionality, ultimately diminishing the quality of life [6]. Therefore, clinicians are often keen to understand the gait patterns of lower limb amputees both in general and at an individual level, in order to improve the quality of life of prosthetic users through better rehabilitation monitoring, providing better-fitted prosthetics, reducing gait abnormalities and more.

Gait analysis offers a systematic approach to identifying pathological gait patterns associated with neurological [7], musculoskeletal [8], and other disorders by collecting data on various gait parameters [9, 10]. This allows clinicians to identify deviations from normal gait patterns and tailor interventions to address the specific issue. Gait analysis can be broadly classified as either observational [11] or quantitative.

In observational gait analysis (OGA), clinicians typically rely on a checklist to note the presence of pathological gait characteristics [2, 11]. For instance, studies have shown that individuals with lower-limb amputations often exhibit a lateral trunk lean towards the prosthetic limb [2, 12]. When clinicians observe such characteristics during OGA, they simply mark a tick on the checklist. Subsequently, they may address these issues by implementing interventions to reduce the extent of lateral trunk lean through targeted training. These metrics are often qualitative, which may compromise inter- and intra-rater reliability [13]. Clinicians are therefore recommended to supplement their clinical assessment with some form of quantitative measurement [14, 15].

Quantitative gait assessments have been facilitated by technologies such as instrumented motion analysis [16], force platforms, electromyography [1], and wearable sensors. These tools allow clinicians to systematically quantify a spectrum of gait parameters, enabling them to effectively monitor progress throughout the rehabilitation journey. For instance, Sjödahl et al. [17] used force platforms and motion-capture systems to identify increased hip flexion at the beginning of the stance phase on the intact limb, offering timely feedback to the patient. Furthermore, intricate spatiotemporal variables such as step length and cadence could be scrutinized in meticulous detail. The study revealed a reduction in variability on the prosthetic limb, indicative of a more stabilized and symmetrical gait pattern post-treatment. Such nuanced, comprehensive and timely analysis would not be possible if clinicians were tasked with manually tracking each individual step.

Therefore, although trained clinicians may possess the expertise to identify pathological gait patterns in OGA, the introduction of quantification brings objectivity into the assessment, facilitating more accurate and precise measurements of diverse gait parameters. This also becomes crucial when comparing results among different clinicians, ensuring consistency and reducing subjective interpretation. Furthermore, by collecting standardized and objective data, researchers can analyze gait patterns across a larger sample of amputees, compare rehabilitation strategies, and evaluate the effectiveness of different prosthetic devices.

Traditionally, obtaining quantitative measurements demands specialized, often costly equipment, which confines data collection to controlled laboratory environments and limits the broader applicability of findings [18, 19]. In recent years, an alternative has arisen through high-speed video recording on commercial video cameras. Advances in deep learning have enabled rapid progress in human pose estimation from videos [20, 21, 22, 23]. Pose estimation software such as OpenPose [24] is able to estimate the position of keypoints such as joints on images of humans without the need for markers. With multiple cameras, it is possible to triangulate the keypoints to obtain 3D coordinates [25]. Such markerless pose estimation techniques may be the key to cost-effective systems for automated clinical gait analysis. Several studies have examined the accuracy of available pose estimation methods specifically for gait analysis applications [10, 26, 27, 28].

Current markerless pose estimation models face challenges in accurately localizing joints on prosthetic limbs [29]. This limitation stems from their training on datasets primarily featuring able-bodied individuals, resulting in poor generalization to unseen prosthetic limbs. Custom models tailored for prosthetic keypoint identification have been proposed, yet their applicability across diverse settings is constrained by the wide array of prosthetic appearances and limited training data [29, 30]. Achieving robust generalization across varied settings necessitates training on extensive datasets akin to those used in general human pose estimation, like the COCO dataset underlying OpenPose. However, the resource-intensive manual labelling of such datasets presents a formidable barrier in terms of cost and time and would likely be beyond the reach of rehabilitation labs.

This paper introduces a novel zero-shot pose estimation method leveraging existing pre-trained image generative diffusion models and pose estimation frameworks to achieve accurate pose estimation for lower limb prosthetic users. Recent advances in image generation artificial intelligence, notably with denoising diffusion models, have enabled the generation of remarkably realistic images conditioned on diverse inputs, including text descriptions and sample images [31]. Leveraging the capabilities of these image generation models, we transform prosthetic limbs in images into representations resembling able-bodied limbs while preserving their position and shape. This technique empowers existing pose estimation models to make accurate keypoint estimations on lower-limb prosthetics without further training.

To our knowledge, this is the first work that achieves zero-shot pose estimation for lower-limb prosthetic users. The contributions of this paper are twofold. First, we quantify the errors made by OpenPose, one of the most widely used pre-trained pose estimation tools, on lower-limb prosthetic users. Second, we demonstrate a working method for accurate zero-shot pose estimation on lower-limb prosthetic users without the need for any data collection, labelling or training.

2 Methods

We analyzed publicly accessible videos of individuals with a unilateral lower-limb prosthesis walking, obtained from the video sharing website YouTube [32]. For each video, an image generative model, ControlNet, was used to generate a synthetic image corresponding to every frame of the video. Subsequently, OpenPose was applied to these newly synthesized images to extract anatomical keypoints. Inverse kinematics was performed on the resulting 2D coordinates. We then evaluated the performance against a custom model created using DeepLabCut (DLC). Gardiner et al. [33] have shown that using publicly available videos produces results that are comparable with published data from controlled laboratory studies.

2.1 Dataset

The video search was conducted by a single researcher using the terms gait, walking, amputee, transtibial, and transfemoral. A total of 16 videos were downloaded, featuring two subjects with different amputation levels: a female transfemoral amputee and a male transtibial amputee. Each subject has two sets of four videos (Supplementary Fig. 1), recorded from different camera positions (i.e., anterior, posterior, left and right). Both subjects are amputees on the right side. As the videos came from a public source, the etiology of amputation, time since amputation and type of prosthetic used were unknown. The videos were downloaded at a resolution of 1280 x 720 and 30 frames per second. Because the initial videos included the subject changing walking direction, each video was trimmed to 6 seconds (180 frames) before being passed through the zero-shot pose estimation pipeline.

2.2 Zero-shot pose estimation pipeline

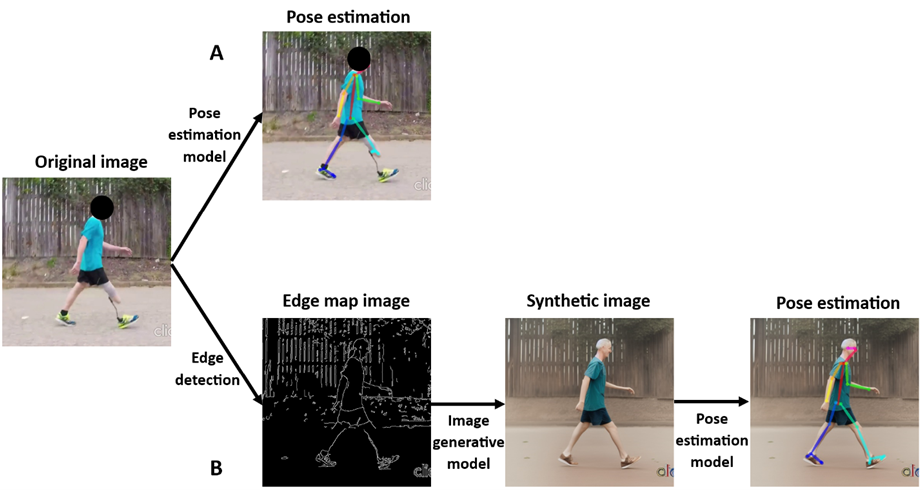

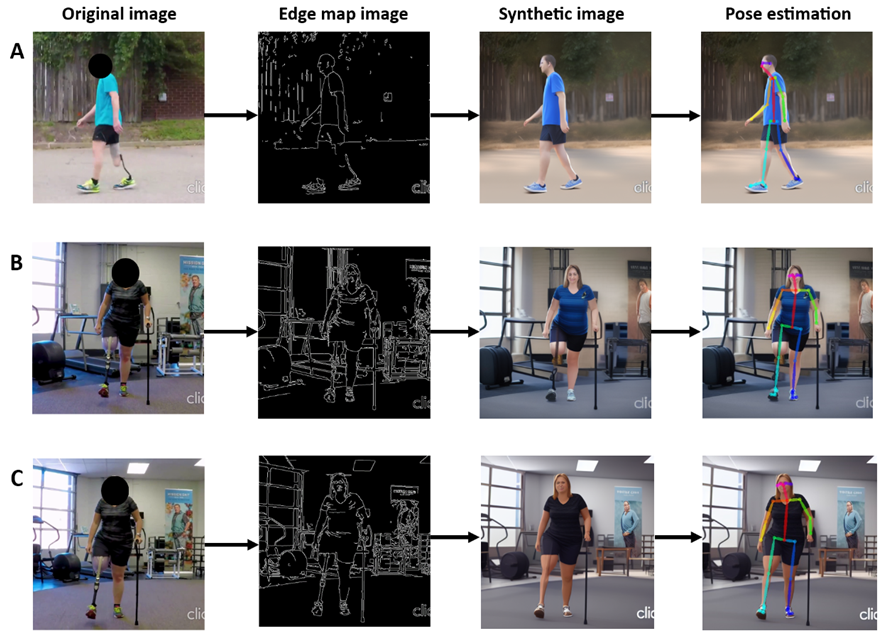

The pipeline for zero-shot pose estimation is as follows. Given a video, for each frame, we first apply Canny edge detection to generate an edge map. The edge map is then used as the conditional control for a text-to-image generative diffusion model. In this case, we use ControlNet, proposed by Zhang & Agrawala [31], which applies conditional controls such as edge maps, segmentation maps, and keypoints to pretrained large diffusion models so that they support input conditions other than prompt text. The image generated by the diffusion model conforms to the edge map to a large extent but transforms the prosthetic limbs into able-bodied limbs. The generated image is then passed to a standard pose estimation model trained on large human pose estimation datasets, for example OpenPose, which outputs the pixel locations of body keypoints. The frames were rescaled to 512 x 512 to adhere to the default ControlNet configuration.
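The per-frame data flow described above can be sketched as follows. This is only a structural sketch under stated assumptions, not the code used in this study: `zero_shot_pose`, `detect_edges`, `generate_image`, and `estimate_keypoints` are hypothetical names, and the three stages are passed in as callables so that any Canny, ControlNet, and OpenPose wrapper could be plugged in. Rescaling to 512 x 512 is assumed to happen before or inside `detect_edges`.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Keypoints = Dict[str, Tuple[float, float]]

# Prompts quoted from Section 2.3 of this paper.
POSITIVE_PROMPT = (
    "an able-body person walking, intact lower limbs, 2 legs, "
    "full-body portrait, realistic"
)
NEGATIVE_PROMPT = "cyborg, amputee, panfuturism"


def zero_shot_pose(
    frames: Sequence[Any],
    detect_edges: Callable[[Any], Any],
    generate_image: Callable[[Any, str, str], Any],
    estimate_keypoints: Callable[[Any], Keypoints],
) -> List[Keypoints]:
    """Run the three pipeline stages on every frame (hypothetical helper)."""
    results: List[Keypoints] = []
    for frame in frames:
        # 1. Edge map of the original frame (Canny in the paper).
        edge_map = detect_edges(frame)
        # 2. Synthetic image conditioned on the edge map and the prompts.
        synthetic = generate_image(edge_map, POSITIVE_PROMPT, NEGATIVE_PROMPT)
        # 3. Off-the-shelf pose estimation on the synthetic image.
        results.append(estimate_keypoints(synthetic))
    return results
```

Because the stages are injected, the same loop can be exercised with lightweight stand-ins before a GPU-backed generator is wired in.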

2.3 Edge map conditioned diffusion model

Image generative diffusion models are currently state-of-the-art models for generating synthetic images. They are inspired by the diffusion process where molecules move from high to low-concentration areas, leading to a homogenization of the distribution over time. In image generation, the diffusion process is applied to the image pixels, where random noise is added over a number of time steps until the image is indistinguishable from random Gaussian noise.

The diffusion process is typically implemented using a sequence of invertible transformations, such as Gaussian diffusion or Langevin dynamics. During training, the model learns to reverse the diffusion process given the time step and the noisy image at that time step. During inference, new images can be generated by sampling from random Gaussian noise and applying the learnt reverse diffusion step-wise to obtain a clean generated image.
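As a toy illustration of the forward (noising) process, the sketch below assumes a DDPM-style formulation with a linear variance schedule, in which a noisy sample at step t can be drawn directly from the clean image. The schedule values, the 1000-step horizon, and the function names are illustrative assumptions rather than settings used in this study.

```python
import math
import random
from typing import List


def linear_betas(num_steps: int, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances, increasing linearly (assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas: List[float]) -> List[float]:
    """Cumulative product of (1 - beta): the fraction of signal kept at each step."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def noise_image(pixels: List[float], t: int, abar: List[float],
                rng: random.Random) -> List[float]:
    """Sample x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps, eps ~ N(0, 1)."""
    signal = math.sqrt(abar[t])
    noise = math.sqrt(1.0 - abar[t])
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in pixels]


abar = alpha_bars(linear_betas(1000))
rng = random.Random(0)
image = [0.5] * 4                                # a tiny "image" of four pixels
slightly_noisy = noise_image(image, 0, abar, rng)    # still close to the image
almost_noise = noise_image(image, 999, abar, rng)    # nearly pure Gaussian noise
```

At the final step almost none of the original signal remains, which is why generation can start from pure Gaussian noise and apply the learnt reverse steps.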

To generate images conditioned upon other features such as class, text description, or sample image, the conditioning information is provided to the diffusion model as an additional input. The conditioning information is typically encoded into a fixed-length vector representation using a separate neural network, such as a text encoder, which is then used as an input to the diffusion model.

During training, the diffusion model is trained to maximize the log-likelihood of the observed data given the conditioning information. During inference, the conditioned diffusion model is used to generate images by sampling from the diffusion process given the conditioning information. The conditioning information is used to initialize the diffusion process and to guide the sampling process at each time step.

Overall, conditioning diffusion models for image generation involves modifying the training and inference procedures to incorporate the conditioning information, and training a separate network to encode the conditioning information into a fixed-length vector that can be used as input to the diffusion model.

ControlNet is a neural network architecture proposed by Zhang & Agrawala [31] that applies task-specific controls to large image diffusion models such as Stable Diffusion. It does so by changing the inputs of the diffusion network blocks. ControlNet makes a trainable copy of a network block and applies a 1x1 convolution layer before and after the copy. The weights of the original diffusion model block are frozen during training to avoid overfitting to the smaller conditional training set and to preserve the model’s pre-trained high-quality image generation capabilities. The control condition is fed to the first 1x1 convolution layer, and the output of that layer is added to the original input of the network block. This modified input is then passed through the trainable copy and the subsequent 1x1 convolution layer. The resulting output is added to the output of the original network block [31].
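The wiring of a single controlled block can be illustrated with a toy sketch. It assumes feature maps represented as lists of per-pixel channel vectors and uses hypothetical helper names (`conv1x1`, `controlled_block`); it is not the ControlNet implementation. The zero-initialised 1x1 convolutions follow the description in the original ControlNet paper [31], so that before training the control branch leaves the frozen model's output unchanged.

```python
from typing import Callable, List, Tuple

FeatureMap = List[List[float]]          # one channel vector per pixel
Conv = Tuple[List[List[float]], List[float]]  # (weight matrix, bias)


def conv1x1(fmap: FeatureMap, weight: List[List[float]],
            bias: List[float]) -> FeatureMap:
    """1x1 convolution: the same linear map applied to every pixel's channels."""
    return [
        [sum(w * x for w, x in zip(row, pixel)) + b
         for row, b in zip(weight, bias)]
        for pixel in fmap
    ]


def add(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Element-wise sum of two feature maps of the same shape."""
    return [[x + y for x, y in zip(pa, pb)] for pa, pb in zip(a, b)]


def controlled_block(x: FeatureMap, condition: FeatureMap,
                     frozen_block: Callable[[FeatureMap], FeatureMap],
                     trainable_copy: Callable[[FeatureMap], FeatureMap],
                     conv_in: Conv, conv_out: Conv) -> FeatureMap:
    """Frozen block output plus the control branch output."""
    # Condition -> first 1x1 conv -> added to the block's original input.
    modified_input = add(x, conv1x1(condition, *conv_in))
    # Trainable copy -> second 1x1 conv.
    control = conv1x1(trainable_copy(modified_input), *conv_out)
    # Added to the output of the frozen original block.
    return add(frozen_block(x), control)


def double(fmap: FeatureMap) -> FeatureMap:
    """Stand-in for a network block."""
    return [[2.0 * v for v in pixel] for pixel in fmap]


zeros: Conv = ([[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
x = [[1.0, 2.0], [3.0, 4.0]]
edges = [[0.5, 0.5], [1.0, 1.0]]
# With zero-initialised convolutions the result equals the frozen block alone.
untrained = controlled_block(x, edges, double, double, zeros, zeros)
```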

On the Stable Diffusion model, which follows a U-Net architecture, ControlNet is implemented to control each level of the U-Net. It creates trainable copies of the 12 encoding blocks and 1 middle block of Stable Diffusion. The outputs of the ControlNet are added to the 12 skip-connections and 1 middle block of the U-Net. We refer interested readers to the detailed explanation in the original paper.

ControlNets have been trained on a variety of conditions, including Canny edges, Hough lines, HED edges, human pose keypoints, segmentation maps, and depth maps.

Of particular relevance to this application, the Canny edge conditioned ControlNet was trained with edge maps obtained by processing 3M captioned images with the Canny edge detector. The positive prompts used to generate images for this study were “an able-body person walking, intact lower limbs, 2 legs, full-body portrait, realistic”, while the negative prompts were “cyborg, amputee, panfuturism”. The ControlNet code was created by Zhang & Agrawala [31] and is available at https://github.com/lllyasviel/ControlNet.

2.4 Pretrained pose estimation model

Human pose estimation models aim to estimate the pose of humans in 2D images, typically by detecting body keypoints. Deep learning has led to significant advances in human pose estimation, and models such as OpenPose and AlphaPose, which have been trained on large datasets (the COCO human keypoints dataset), have demonstrated good performance on various benchmarks. Several studies have also investigated the accuracy of these pretrained pose estimation models for clinical applications, including gait analysis. Here we introduce OpenPose in more detail, as it is a widely used pose estimation model in clinical and biomechanics studies [34, 28, 35, 36].

The OpenPose model uses a multi-stage convolutional neural network (CNN) architecture to process images and estimate human poses [24]. The keypoint detection is performed in two stages: the first stage generates a coarse heatmap of body keypoints and parts affinity field, and the second stage refines the heatmap to produce a more accurate estimate of the keypoint locations. The model then uses the key point heatmap and parts affinity field to link up the keypoints into the pose skeleton. The model can detect various body keypoints, including those of the head, neck, shoulders, elbows, wrists, hips, knees, and ankles. OpenPose is designed to work in real-time and is capable of estimating human poses in images or videos with multiple people, even in complex scenes with occlusions and overlapping body parts. It is also able to estimate the pose of people in different orientations and viewpoints.

Another pose estimation model used in this study was DeepLabCut (DLC) version 2.3.0. DLC allows users to label and train with their own keypoints. Its architecture consists of a residual neural network (ResNet-50) with deep convolutional and deconvolutional neural network layers to predict the keypoints using feature detection. We trained a DLC model using videos of the transtibial amputee. Ground truth labels for 8 lower-body keypoints (left and right hip, knee, ankle and toes) were obtained by manual annotation of every frame for one set of the transtibial videos. Using the labels of these 4 videos as ground truth, we evaluated the accuracy of the custom DLC model, which was trained on 20 labelled frames until the training loss had plateaued.

The data, expressed as mean (standard deviation in parentheses), are reported in pixels because real-world measurements were unknown and the videos lacked a calibration procedure. Results from our preliminary study were deemed satisfactory: the custom model had a mean absolute error (MAE) of 7.89 ± 3.11 pixels for the coordinates (Supplementary Table 5) and 1.61° ± 1.19° for kinematics when evaluated on one set of transtibial videos (Supplementary Table 6). Therefore, the same procedure was followed to build a custom model that provided the ground truth coordinates for the other 12 videos. The resulting keypoints were visually examined frame by frame to ensure accuracy before being treated, in effect, as manual labels.

2.5 Error quantification on keypoints coordinates

In certain frames, the visibility of keypoints may be occluded leading to lower likelihood scores given by OpenPose. Consequently, frames with a likelihood below 0.50 were removed. Cubic spline interpolation was applied to address the resulting gaps in the data, and a low-pass Butterworth filter (4th order, 6 Hz) was subsequently applied to attenuate high-frequency noise. These procedures have also been used in previous studies [37, 26].

2.6 Error quantification on joint kinematics

Assuming that the left and right camera views are perpendicular to the sagittal plane, lower-limb joint angles of the limb closest to the camera can be calculated from the keypoint coordinates via inverse kinematics [10, 28, 26, 27]. The hip angle was calculated from the hip and knee keypoints relative to a vertical line through the hip. Similarly, the knee angle was computed from the keypoint vectors of the hip, knee and ankle. In both cases, a positive value indicates flexion while a negative value indicates extension. The ankle angle was calculated from the keypoint vectors of the knee, ankle and toe, and is defined as the angle of the foot with respect to a line at 90° to the tibia. The kinematic data were first calculated for the entire time series and then time-normalized to 0-100% of the gait cycle. Gait cycles were defined by consecutive heel strikes of the same foot, which were manually identified. At least 4 gait cycles were observed in every video.
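The angle definitions above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: image coordinates have x to the right and y downward, the hypothetical `facing` argument is +1 when the subject walks toward +x and -1 otherwise, ankle dorsiflexion is taken as positive (the text does not state the ankle sign), and time normalization uses linear interpolation (the interpolation method is not specified in the text).

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def _vec(a: Point, b: Point) -> Point:
    """Vector from point a to point b."""
    return (b[0] - a[0], b[1] - a[1])


def _signed_angle(u: Point, v: Point) -> float:
    """Signed angle in degrees from vector u to vector v."""
    cross = u[0] * v[1] - u[1] * v[0]
    dot = u[0] * v[0] + u[1] * v[1]
    return math.degrees(math.atan2(cross, dot))


def hip_angle(hip: Point, knee: Point, facing: int = 1) -> float:
    """Thigh relative to the vertical through the hip; flexion is positive."""
    thigh = _vec(hip, knee)
    return math.degrees(math.atan2(facing * thigh[0], thigh[1]))


def knee_angle(hip: Point, knee: Point, ankle: Point, facing: int = 1) -> float:
    """Shank relative to the extended thigh line; flexion is positive."""
    return facing * _signed_angle(_vec(hip, knee), _vec(knee, ankle))


def ankle_angle(knee: Point, ankle: Point, toe: Point, facing: int = 1) -> float:
    """Foot relative to a line at 90 degrees to the tibia (dorsiflexion assumed positive)."""
    return -facing * _signed_angle(_vec(knee, ankle), _vec(ankle, toe)) - 90.0


def time_normalize(values: List[float], num_points: int = 101) -> List[float]:
    """Resample one gait cycle to 0-100% by linear interpolation (assumed method)."""
    n = len(values)
    out = []
    for i in range(num_points):
        pos = i * (n - 1) / (num_points - 1)
        lo = int(pos)
        hi = min(lo + 1, n - 1)
        frac = pos - lo
        out.append(values[lo] * (1.0 - frac) + values[hi] * frac)
    return out


# Upright standing posture: all three angles are zero.
hip, knee, ankle, toe = (0.0, 0.0), (0.0, 10.0), (0.0, 20.0), (5.0, 20.0)
standing = (hip_angle(hip, knee), knee_angle(hip, knee, ankle),
            ankle_angle(knee, ankle, toe))
```

Each heel-strike-to-heel-strike segment of an angle series would be passed to `time_normalize` separately so that cycles of different durations can be averaged or overlaid.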

To quantify the error of pose estimation, we use the MAE, defined here as the mean Euclidean distance between ground truth and predicted keypoints. Both coordinate and kinematic data were quantified using MAE. Several previous works have quantified the accuracy of markerless pose estimation models such as OpenPose for the purpose of gait analysis. Supplementary Table 4 summarizes the reported accuracy of clinical gait applications of markerless pose estimation on able-bodied individuals. The originally reported values are used where provided in the papers; otherwise, values were estimated by reading them off figures.
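A minimal sketch of the two error measures follows, assuming matched lists of ground truth and predicted values. The function names are illustrative, and treating the kinematic MAE as the mean absolute difference in degrees is an assumption, since the text defines the MAE explicitly only for keypoints.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def keypoint_mae(truth: Sequence[Point], predicted: Sequence[Point]) -> float:
    """Mean Euclidean distance (pixels) between matched keypoints."""
    if not truth or len(truth) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(math.dist(t, p) for t, p in zip(truth, predicted)) / len(truth)


def angle_mae(truth: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute difference (degrees) between matched joint angles."""
    if not truth or len(truth) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(truth, predicted)) / len(truth)


# One keypoint is off by a 3-4-5 triangle (5 px), the other is exact.
example = keypoint_mae([(0.0, 0.0), (10.0, 10.0)], [(3.0, 4.0), (10.0, 10.0)])
```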

3 Results

The experimental findings demonstrate significant improvement in pose estimation accuracy achieved by our zero-shot method when compared to the established pose estimation software, OpenPose. This is a crucial improvement needed for practical gait analysis, such as comparing joint angles through the gait cycle, that is of interest to clinicians. Additionally, we also discern and quantify variations in pose estimation performance across different prosthetic types.

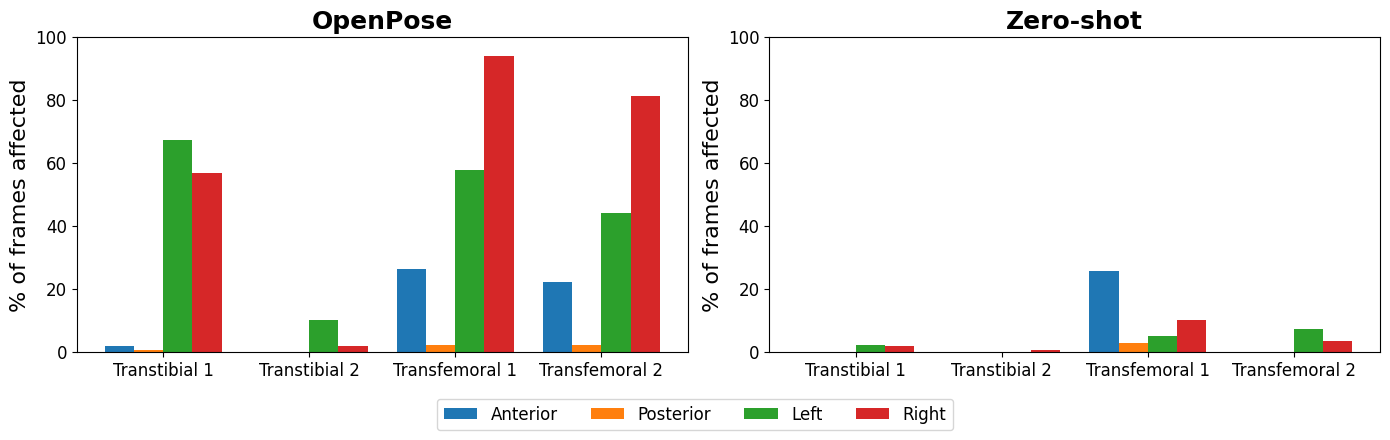

3.1 Obvious misidentification in OpenPose resolved by zero-shot method

Our findings reveal that when OpenPose is directly applied to the original image, a substantial proportion of frames exhibit either an inability to identify or a conspicuous misidentification of lower-limb keypoints (Fig. 2). Supplementary Table 1 provides a detailed breakdown of these occurrences. Specifically, transfemoral images display a higher incidence of such challenges compared to their transtibial counterparts. Notably, our zero-shot method demonstrates remarkable efficacy in mitigating the number of affected frames across all camera perspectives. It is also important to note that the majority of observed keypoint challenges are concentrated in the sagittal camera view, particularly when the prosthetic limb is in closer proximity to the camera.

3.2 Substantial improvement in accuracy of quantitative measures using zero-shot method

The OpenPose method exhibited coordinate errors that were about twice as large (Table 1) on the prosthetic limb (right) as on the intact limb (left). A detailed breakdown of the coordinate errors for individual keypoints can be found in Supplementary Table 2.

The zero-shot method consistently outperformed the OpenPose method in estimating joint coordinates on the prosthetic limb by a large margin. The mean absolute error (MAE) for joint coordinates decreased by 37% for transtibial prosthetic limbs and 76% for transfemoral prosthetic limbs. Although the OpenPose method yielded slightly lower coordinate errors on the intact limb, the differences were relatively minor, with a mean difference of less than 2 pixels.
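The reported percentages can be reproduced from the prosthetic-limb coordinate errors in Table 1. The short check below uses a hypothetical helper, `percent_reduction`; because the transfemoral OpenPose error is reported only as ">100" pixels, the sketch substitutes 100 for it, so the transfemoral figure is a lower bound on the reduction.

```python
def percent_reduction(baseline: float, improved: float) -> float:
    """Relative decrease of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


# Prosthetic-limb joint coordinate MAE from Table 1 (pixels).
transtibial = percent_reduction(24.07, 15.18)    # rounds to 37
# OpenPose error is reported as ">100", so 100 gives a lower bound.
transfemoral = percent_reduction(100.0, 23.7)    # rounds to 76
```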

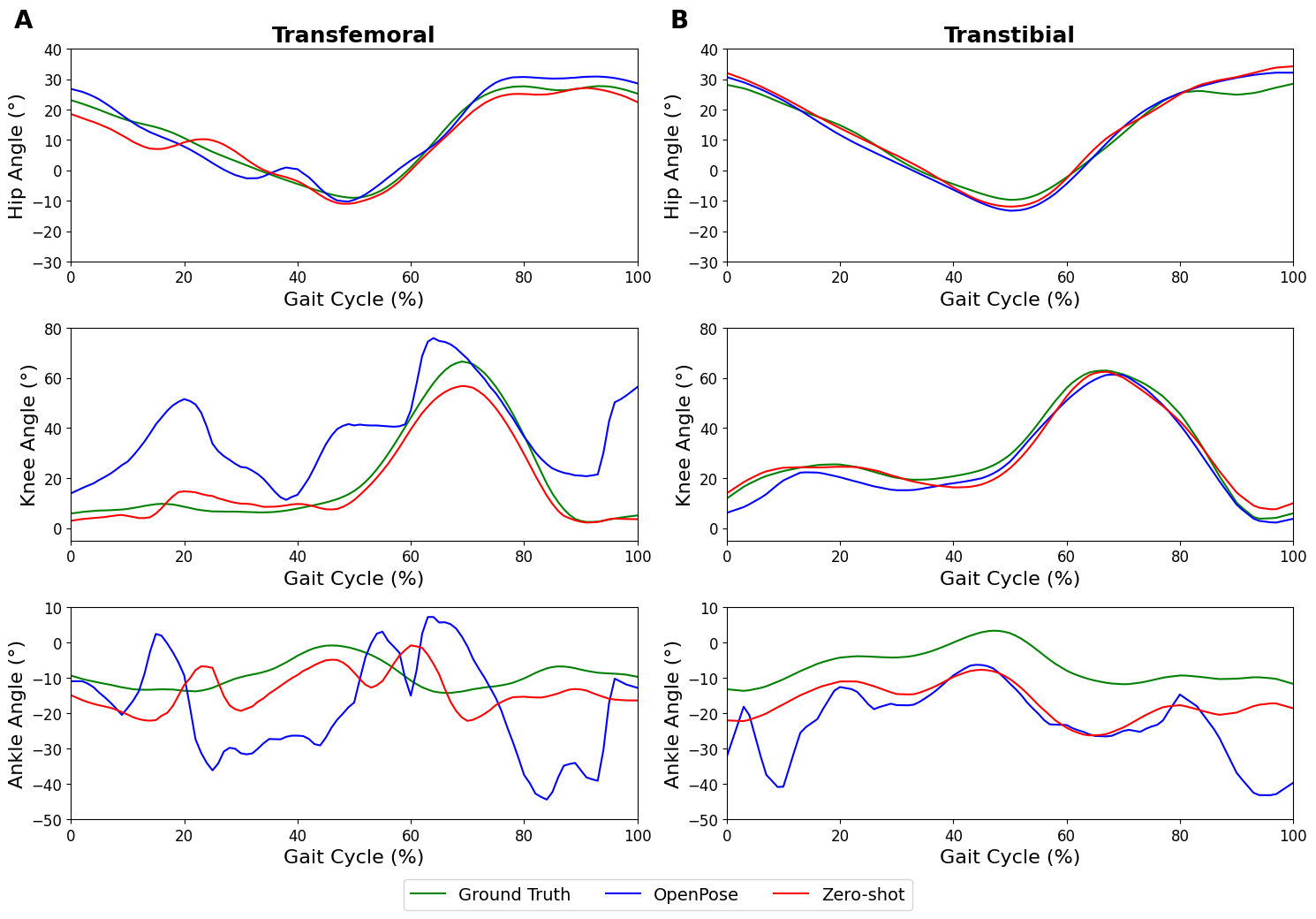

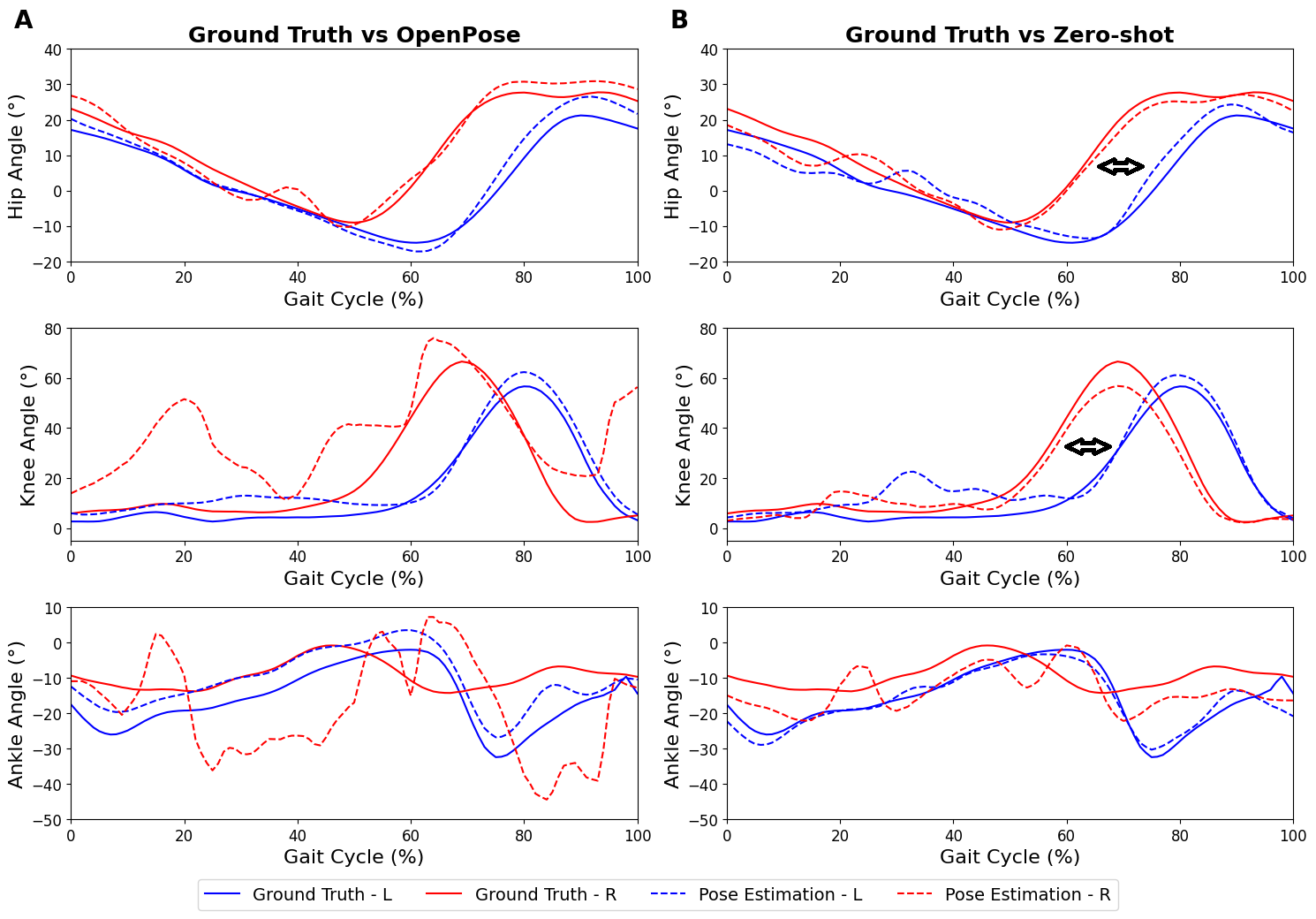

Similarly, for joint kinematics, the zero-shot method consistently outperformed the OpenPose method on the prosthetic limb by a large margin. The improvement is particularly noteworthy for ankle angles and transfemoral knee angles. The comparison of joint kinematics is shown in Fig. 4.

| Metrics (Units) | Amputation Type | Leg | OpenPose (MAE) | Zero-shot (MAE) |
|---|---|---|---|---|
| Joint Coordinates (pixels) | Transfemoral | Prosthetic | >100 (>100) | 23.7 (35.61) |
| | | Intact | 10.22 (5.36) | 11.96 (7.58) |
| | Transtibial | Prosthetic | 24.07 (15.74) | 15.18 (6.66) |
| | | Intact | 12.01 (3.54) | 12.27 (7.60) |
| Joint Kinematics (°) | Transfemoral | Prosthetic | 29.64 (26.07) | 6.66 (6.50) |
| | | Intact | 5.71 (4.77) | 5.23 (5.10) |
| | Transtibial | Prosthetic | 10.23 (9.08) | 6.32 (4.49) |
| | | Intact | 2.90 (2.11) | 3.25 (2.54) |

Although the open-source videos lacked explicit calibration or measurement information, an estimate of the subject’s height enabled us to approximate the metric error. Assuming a subject height of approximately 180 cm, we deduced that 1 pixel corresponded to approximately 0.3 cm. These assumptions enabled us to draw comparisons with previous findings. Our results for OpenPose on subjects with intact limbs are consistent with existing literature (Supplementary Table 4). Clinicians aiming to replicate our study may consider incorporating calibration steps at the beginning of the video recording. This would allow them to gather additional gait parameters, such as stride length and step width, which were not analyzed in our study due to the utilization of open-source videos.
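The pixel-to-metric approximation above amounts to a single scale factor. The sketch below uses hypothetical names and takes the stated assumptions at face value: a subject height of about 180 cm and a scale of about 0.3 cm per pixel, which together imply a subject roughly 600 pixels tall in the frame (an inferred value, not one reported in the text).

```python
ASSUMED_HEIGHT_CM = 180.0    # subject height assumed in the text
CM_PER_PIXEL = 0.3           # approximate scale stated in the text


def implied_height_px(height_cm: float = ASSUMED_HEIGHT_CM,
                      cm_per_pixel: float = CM_PER_PIXEL) -> float:
    """Subject height in pixels implied by the two assumptions."""
    return height_cm / cm_per_pixel


def pixels_to_cm(error_px: float, cm_per_pixel: float = CM_PER_PIXEL) -> float:
    """Convert a pixel error to an approximate metric error."""
    return error_px * cm_per_pixel


# Zero-shot MAE on the transtibial prosthetic limb (Table 1): 15.18 px.
approx_cm = pixels_to_cm(15.18)   # about 4.6 cm
```

Any such conversion inherits the uncertainty of the height estimate, so the metric values are best read as rough orders of magnitude.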

3.3 Comparison of performance between different prosthetic types

The two main types of lower-limb prosthetics, transfemoral and transtibial, differ in appearance and in gait characteristics. Here we compare the performance of pose estimation and gait analysis between the transfemoral and transtibial amputees.

As observed in Table 1, pose estimation of the prosthetic limb performed worse in the transfemoral amputee than in the transtibial amputee. Even with the zero-shot method, the coordinate errors on the transfemoral prosthetic limb were about twice those on the intact limb (23.7 vs. 11.96 pixels). In the transtibial amputee, by contrast, the zero-shot method reduced the coordinate errors on the prosthetic limb to a level comparable to that of the intact limb.

The hip angle curve exhibited satisfactory results for both transtibial and transfemoral amputees when employing both the OpenPose and zero-shot methods. Particularly, the zero-shot method displayed the ability to reduce the MAE on the prosthetic limb for the transfemoral condition by about 3 degrees.

For the knee angle, the OpenPose method was able to replicate the kinematic curve throughout the gait cycle in the transtibial amputee (Fig. 3) but less so in the transfemoral amputee (Fig. 3). Applying the zero-shot method improved the replication of the knee angle curve throughout the gait cycle for the transfemoral amputee (Fig. 3).

Compared with the hip and knee angles measured using OpenPose, the ankle angle exhibited higher errors. This observation is further supported by the higher error in the ankle keypoint coordinates (Supplementary Table 3). When the zero-shot method was applied, a slight improvement in replicating the ankle kinematic curve was observed for the transtibial amputee, but no improvement was evident for the transfemoral amputee.

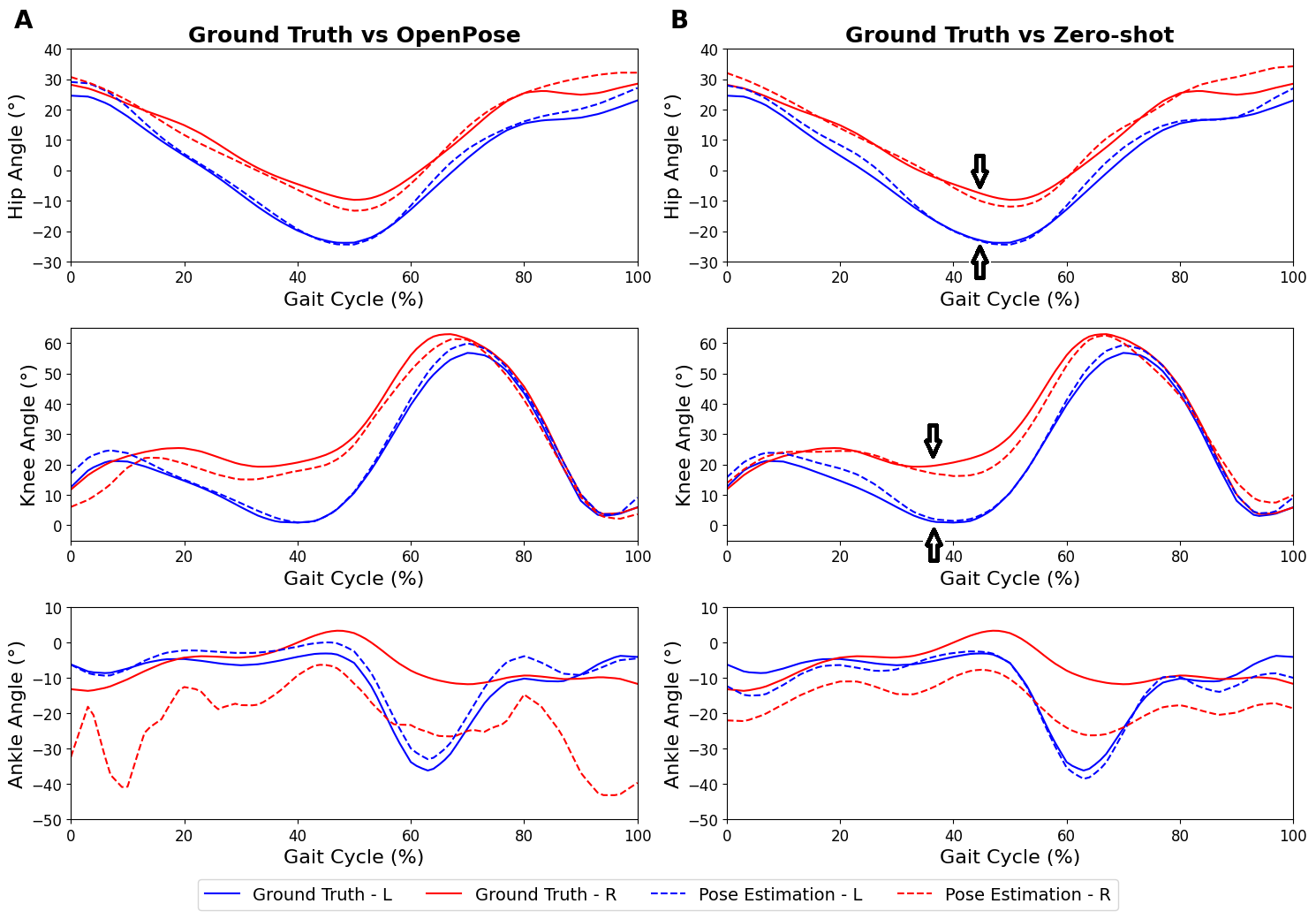

3.4 Gait anomalies in prosthetic limb revealed by zero-shot method

Previous studies have indicated significant kinematic differences between prosthetic and intact limbs in lower-limb amputees [38]. Notably, the kinematic gait cycle observed in our study exhibited a similar pattern to those reported in previous studies [39, 2, 40].

For instance, as seen in Fig. 4, our zero-shot approach revealed that the hip extension of the transtibial amputee was reduced. Additionally, within the 10-50% range of the gait cycle, a decreased range of motion was observed in the knee angle. In the case of the transfemoral amputee, as seen in Fig. 5, a noticeable phase shift in both hip and knee angles was observed, indicating a leftward displacement of the kinematic patterns throughout the gait cycle compared with the intact limb’s kinematics. In comparison, OpenPose fails to capture some of these findings, especially in the transfemoral case, owing to its less accurate joint angle estimates. This demonstrates the effectiveness of the zero-shot method over OpenPose for identifying and quantifying key anomalies during gait analysis, and it provides the means for clinicians to uncover and measure key gait markers during rehabilitation.

4 Discussion

4.1 Improved pose estimation accuracy enables quantitative analysis of gait biomechanics in lower-limb amputees

Gait analysis for lower-limb prosthetic users without the need for specialized equipment has the potential to transform rehabilitation for this population, by providing an automated, consistent and quantitative assessment of the presence and severity of pathological gait. This opens up the possibility of rapid clinical feedback, reliable longitudinal tracking of progress, objective comparison between rehabilitation strategies and more. The method proposed in this work is a step towards this goal through accurate pose estimation from markerless videos acquired from low-cost commercial cameras.

The results obtained in our investigation demonstrate the effectiveness of the zero-shot method in reducing both the coordinates and kinematics errors when compared to applying OpenPose on the original images. Although the ankle kinematics exhibited a relatively higher error value, the hip and knee kinematics generated using the zero-shot method remain valuable for clinicians. Such kinematic information enables clinicians to discern disparities in kinematic patterns between intact and prosthetic limbs, thereby facilitating the planning and monitoring of individualized rehabilitation programs. Presently, it is evident that OpenPose encounters difficulties in accurately detecting images featuring prosthetic limbs, with higher error rates observed for prosthetic limbs compared to intact limbs (Fig. 2). This observation aligns with the study by Cimorelli et al.[29], who similarly reported challenges when utilizing OpenPose for lower-limb amputees, consequently restricting the applicability of markerless motion capture in this population.

Our findings demonstrate that the zero-shot method enhances keypoint detection by greatly reducing the number of problematic frames, which in turn reduces coordinate and kinematic errors. This improvement has practical implications for gait analysis, particularly in deriving kinematic angles throughout the gait cycle. From a clinical standpoint, the analysis of kinematics throughout the gait cycle makes it possible to discern asymmetrical walking patterns between the prosthetic and intact limbs. Compared with OpenPose, we have shown the zero-shot method to be more effective in identifying and quantifying significant anomalies during gait analysis. Using the anomalies identified with the zero-shot method, the clinician can design an individualized rehabilitation regimen that addresses specific gait deviations and promotes the restoration of balanced walking patterns. This personalized approach enhances the potential for successful rehabilitation outcomes in the lower-limb amputee population.

4.2 Limitation, possible solutions and future work

One drawback of the zero-shot method is the increased swapping of lower-limb keypoints in the sagittal plane (Fig. 2). This occurs when the edge map generation process fails to preserve information about each leg’s proximity to the camera. Humans can perceive the relative positions of objects in 2D images from overlapping regions. For instance, if the left leg is closer to the camera, it will occlude the parts of the right leg directly behind it from the camera’s viewpoint. When the edge detector fails to identify the outline of the leg in the region where the two legs overlap, the resulting edge map is ambiguous, and this ambiguity may cause the two legs to be swapped in the synthetic image. Fig. 6 provides an illustrative example. Nevertheless, this issue can be readily resolved by manually identifying and correcting the swapped frames or by using other cues, such as the asynchronous movement of the ipsilateral arm and leg swing. Indeed, although the zero-shot method requires more frames to be swapped back than OpenPose does, it still leads to a decrease in error. It is worth highlighting that default OpenPose applied to the original image may also swap the lower-limb keypoints, as reported in previous studies [28, 41]. Moreover, the zero-shot method reduced the number of frames in which OpenPose failed to detect keypoints because the prosthetic limb did not resemble an intact limb. This further demonstrates the efficacy of the zero-shot method in converting the prosthetic limb to a human-like representation, thereby allowing pose estimation to be applied.

It is important to acknowledge that not all frames can be successfully transformed into images resembling able-bodied individuals (Fig. 6B), which indicates certain limitations of the edge map. Moreover, even for frames at approximately the same point in the gait cycle, the generation of synthetic images yields inconsistent results (Fig. 6C). This inconsistency is more pronounced for transfemoral amputees, likely because the dissimilarity between the prosthetic limb and a human limb makes it harder for the model to identify the appropriate image type. Even with positive prompts to ControlNet emphasizing the presence of two intact limbs, the model may still encounter difficulties. However, the majority of frames can still be successfully transformed, as evidenced by the overall reduction in coordinate error, especially for the prosthetic limb. Another limitation pertains to the wide range of prosthetic options available on the market. Our testing of the diffusion model was limited to a specific subset of prosthetic users, so results may vary for other prosthetic designs. Nevertheless, we anticipate that any such deviations from our findings will be minor.

The main advantage of the proposed method is its ability to perform zero-shot pose estimation for prosthetic users without any manual labelling or model training. However, this zero-shot ability comes at the cost of slow processing, because inference must be run on a large image-generative diffusion model. Thus, there is a trade-off between inference time and the time required to manually label prosthetic joints and to finetune or train a custom detection model. With personal computing hardware (Nvidia GTX Titan X), generating a 512 × 512 synthetic image takes approximately 8–10 seconds, which is the main bottleneck of the workflow. For a 24 FPS video, the inference time is therefore roughly 200 times the video length (24 frames × 8–10 seconds ≈ 190–240 seconds of processing per second of video). Given a walking speed of 1 m/s, a 10 m walk test requires about 10 seconds of video and may take more than 30 minutes to process, which precludes real-time analysis and limits practical clinical use.
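The arithmetic behind these figures can be checked with a short calculation. The sketch below uses only the numbers quoted above (24 FPS, 8 to 10 seconds per image, a 10-second recording); the function name `estimate_processing_time` is hypothetical, and the calculation assumes every frame is regenerated one at a time.

```python
def estimate_processing_time(video_seconds, fps, seconds_per_image):
    """Return (frame_count, total_seconds, slowdown) for frame-by-frame generation."""
    frames = round(video_seconds * fps)        # number of images to synthesize
    total_seconds = frames * seconds_per_image  # sequential generation time
    slowdown = total_seconds / video_seconds    # processing time per second of video
    return frames, total_seconds, slowdown


# 10 m walk at 1 m/s gives about 10 s of video at 24 FPS.
for seconds_per_image in (8.0, 10.0):
    frames, total, slowdown = estimate_processing_time(10.0, 24, seconds_per_image)
    print(f"{seconds_per_image:.0f} s/image: {frames} frames, "
          f"{total / 60:.0f} min, {slowdown:.0f}x video length")
```

For the quoted range this gives 240 frames, 32 to 40 minutes of processing, and a slowdown of 192 to 240 times the video length, consistent with the approximate figures stated in the text.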

To address this, we propose two methods to speed up inference. First, clinicians may run pose estimation on the original video to identify poorly estimated frames, and then apply the image-generative zero-shot workflow to those frames only. Second, clinicians may drop selected frames to reduce the number of images to be generated. For the purpose of gait analysis, the gait cycle is obtained by averaging over many gait cycles. In such use cases, it is possible to remove selected frames and perform interpolation without significantly affecting the resultant gait cycle. Even without these measures, the zero-shot method is still faster than the alternative of creating a custom training image set, which requires manual annotation, and then training a custom pose estimation model for each individual.
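Both speed-ups can be sketched in a few lines. The following is a minimal illustration, not the procedure used in this study: the criterion for a "poorly estimated" frame is assumed here to be a per-keypoint confidence below a threshold (the threshold value and both function names are hypothetical), and dropped frames are filled by simple linear interpolation of a per-frame signal such as a joint angle.

```python
def frames_needing_regeneration(confidences, threshold=0.5):
    """Indices of frames whose lowest keypoint confidence is below `threshold`.

    confidences : list of per-frame lists of keypoint confidence scores
    threshold   : assumed cut-off; would need tuning on real data
    """
    return [i for i, frame in enumerate(confidences) if min(frame) < threshold]


def interpolate_dropped(kept_indices, kept_values, n_frames):
    """Linearly interpolate a per-frame signal back onto all `n_frames` frames.

    kept_indices : sorted indices of frames that were actually processed
    kept_values  : signal value (e.g. a joint angle) at each kept index
    """
    out = []
    j = 0
    for i in range(n_frames):
        if i <= kept_indices[0]:          # before the first kept frame: hold value
            out.append(kept_values[0])
            continue
        if i >= kept_indices[-1]:         # after the last kept frame: hold value
            out.append(kept_values[-1])
            continue
        while kept_indices[j + 1] < i:    # advance to the bracketing kept frames
            j += 1
        i0, i1 = kept_indices[j], kept_indices[j + 1]
        w = (i - i0) / (i1 - i0)
        out.append(kept_values[j] + w * (kept_values[j + 1] - kept_values[j]))
    return out


# Only frames 1 and 2 contain a low-confidence keypoint.
print(frames_needing_regeneration([[0.9, 0.8], [0.9, 0.2], [0.0, 0.7]]))
# Every second frame kept; the missing frames are filled by interpolation.
print(interpolate_dropped([0, 2, 4], [0.0, 10.0, 20.0], 5))
```

Restricting generation to flagged frames and to every second frame would each reduce the number of synthetic images roughly in proportion, at the cost of relying on interpolation between processed frames.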

A potential alternative for regenerating an intact limb image for lower-limb amputees is to inpaint a masked section of the image with diffusion models [42]. This technique involves masking the specific parts of an image that require modification. However, this option was not explored in our study because the limbs move continuously from frame to frame, which necessitates manual masking of each image and thereby increases processing time. Future research could investigate methods for automating the masking of the prosthetic limb in each frame. By generating images only for smaller masked regions, it may be possible to reduce inference time.

5 Conclusion

The proposed zero-shot method presents a novel approach to applying markerless pose estimation techniques to lower-limb amputees. Our results demonstrate that this approach improves the detection of keypoints on prosthetic limbs, thereby enabling clinicians to gain valuable insights into the subject’s kinematics throughout the gait cycle. Although the method is not flawless, its successful implementation serves as a proof-of-concept for such techniques. Future investigations can focus on streamlining the workflow to enable real-time analysis, thereby enhancing its practical utility in clinical settings.

References

- [1] C. M. Powers, S. Rao, J. Perry, Knee kinetics in trans-tibial amputee gait, Gait & Posture 8 (1) (1998) 1–7.

- [2] A. Esquenazi, Gait Analysis in Lower-Limb Amputation and Prosthetic Rehabilitation 25 (1) 153–167. doi:10.1016/j.pmr.2013.09.006. URL https://linkinghub.elsevier.com/retrieve/pii/S1047965113000739

- [3] L. Yang, P. Dyer, R. Carson, J. Webster, K. B. Foreman, S. Bamberg, Utilization of a lower extremity ambulatory feedback system to reduce gait asymmetry in transtibial amputation gait, Gait & Posture 36 (3) (2012) 631–634.

- [4] C. T. Huang, J. R. Jackson, N. B. Moore, P. R. Fine, K. V. Kuhlemeier, G. H. Traugh, P. T. Saunders, Amputation: Energy cost of ambulation 60 (1) 18–24. arXiv:420566.

- [5] G. H. Traugh, P. J. Corcoran, R. L. Reyes, Energy expenditure of ambulation in patients with above-knee amputations 56 (2) 67–71. arXiv:1124978.

- [6] R. Gailey, K. Allen, J. Castles, J. Kucharick, M. Roeder, Review of secondary physical conditions associated with lower-limb, Journal of Rehabilitation Research & Development 45 (1-4) (2008) 15–30.

- [7] Y. Celik, S. Stuart, W. Woo, A. Godfrey, Gait analysis in neurological populations: Progression in the use of wearables 87 9–29. doi:10.1016/j.medengphy.2020.11.005. URL https://linkinghub.elsevier.com/retrieve/pii/S1350453320301697

- [8] I. K. Jalata, T.-D. Truong, J. L. Allen, H.-S. Seo, K. Luu, Movement Analysis for Neurological and Musculoskeletal Disorders Using Graph Convolutional Neural Network 13 (8) 194. doi:10.3390/fi13080194. URL https://www.mdpi.com/1999-5903/13/8/194

- [9] L. Lonini, Y. Moon, K. Embry, R. J. Cotton, K. McKenzie, S. Jenz, A. Jayaraman, Video-Based Pose Estimation for Gait Analysis in Stroke Survivors during Clinical Assessments: A Proof-of-Concept Study 6 (1) 9–18. doi:10.1159/000520732. URL https://www.karger.com/Article/FullText/520732

- [10] M. Moro, G. Marchesi, F. Odone, M. Casadio, Markerless gait analysis in stroke survivors based on computer vision and deep learning: A pilot study, in: Proceedings of the 35th Annual ACM Symposium on Applied Computing, ACM, pp. 2097–2104. doi:10.1145/3341105.3373963. URL https://dl.acm.org/doi/10.1145/3341105.3373963

- [11] C. Ridao-Fernández, E. Pinero-Pinto, G. Chamorro-Moriana, Observational Gait Assessment Scales in Patients with Walking Disorders: Systematic Review 2019 1–12. doi:10.1155/2019/2085039. URL https://www.hindawi.com/journals/bmri/2019/2085039/

- [12] M. J. Highsmith, C. R. Andrews, C. Millman, A. Fuller, J. T. Kahle, T. D. Klenow, K. L. Lewis, R. C. Bradley, J. J. Orriola, Gait training interventions for lower extremity amputees: a systematic literature review, Technology & Innovation 18 (2-3) (2016) 99–113.

- [13] S. J. Hillman, S. C. Donald, J. Herman, E. McCurrach, A. McGarry, A. M. Richardson, J. E. Robb, Repeatability of a new observational gait score for unilateral lower limb amputees 32 (1) 39–45. doi:10.1016/j.gaitpost.2010.03.007. URL https://linkinghub.elsevier.com/retrieve/pii/S0966636210000779

- [14] M. Saleh, G. Murdoch, In defence of gait analysis. observation and measurement in gait assessment, The Journal of bone and joint surgery. British volume 67 (2) (1985) 237–241.

- [15] J. M. Wilken, R. Marin, Gait analysis and training of people with limb loss, Care of the Combat Amputee (2009) 535–52.

- [16] D. J. Sanderson, P. E. Martin, Lower extremity kinematic and kinetic adaptations in unilateral below-knee amputees during walking, Gait & Posture 6 (2) (1997) 126–136.

- [17] C. Sjödahl, G.-B. Jarnlo, B. Söderberg, B. Persson, Kinematic and kinetic gait analysis in the sagittal plane of trans-femoral amputees before and after special gait re-education, Prosthetics and orthotics international 26 (2) (2002) 101–112.

- [18] S. Blair, M. J. Lake, R. Ding, T. Sterzing, Magnitude and variability of gait characteristics when walking on an irregular surface at different speeds 59 112–120. doi:10.1016/j.humov.2018.04.003. URL https://linkinghub.elsevier.com/retrieve/pii/S0167945717305407

- [19] B. Van Hooren, J. T. Fuller, J. D. Buckley, J. R. Miller, K. Sewell, G. Rao, C. Barton, C. Bishop, R. W. Willy, Is motorized treadmill running biomechanically comparable to overground running? a systematic review and meta-analysis of cross-over studies, Sports medicine 50 (2020) 785–813.

- [20] M. G. Bernal-Torres, H. I. Medellín-Castillo, J. C. Arellano-González, Development of a new low-cost computer vision system for human gait analysis: A case study 095441192311636. doi:10.1177/09544119231163634. URL http://journals.sagepub.com/doi/10.1177/09544119231163634

- [21] Y. Chen, Y. Tian, M. He, Monocular human pose estimation: A survey of deep learning-based methods 192 102897. doi:10.1016/j.cviu.2019.102897. URL https://linkinghub.elsevier.com/retrieve/pii/S1077314219301778

- [22] E. Insafutdinov, L. Pishchulin, B. Andres, M. Andriluka, B. Schiele, DeeperCut: A Deeper, Stronger, and Faster Multi-Person Pose Estimation Model. arXiv:1605.03170. URL http://arxiv.org/abs/1605.03170

- [23] A. Mathis, S. Schneider, J. Lauer, M. W. Mathis, A Primer on Motion Capture with Deep Learning: Principles, Pitfalls, and Perspectives 108 (1) 44–65. doi:10.1016/j.neuron.2020.09.017. URL https://linkinghub.elsevier.com/retrieve/pii/S0896627320307170

- [24] Z. Cao, G. Hidalgo, T. Simon, S.-E. Wei, Y. Sheikh, OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields 43 (1) 172–186. doi:10.1109/TPAMI.2019.2929257. URL https://ieeexplore.ieee.org/document/8765346/

- [25] S. D. Uhlrich, A. Falisse, L. Kidzinski, J. Muccini, M. Ko, A. S. Chaudhari, J. L. Hicks, S. L. Delp, OpenCap: 3D human movement dynamics from smartphone videos. doi:10.1101/2022.07.07.499061. URL http://biorxiv.org/lookup/doi/10.1101/2022.07.07.499061

- [26] B. Van Hooren, N. Pecasse, K. Meijer, J. M. N. Essers, The accuracy of markerless motion capture combined with computer vision techniques for measuring running kinematics sms.14319. doi:10.1111/sms.14319. URL https://onlinelibrary.wiley.com/doi/10.1111/sms.14319

- [27] E. P. Washabaugh, T. A. Shanmugam, R. Ranganathan, C. Krishnan, Comparing the accuracy of open-source pose estimation methods for measuring gait kinematics 97 188–195. doi:10.1016/j.gaitpost.2022.08.008. URL https://linkinghub.elsevier.com/retrieve/pii/S0966636222004738

- [28] J. Stenum, C. Rossi, R. T. Roemmich, Two-dimensional video-based analysis of human gait using pose estimation 17 (4) e1008935. doi:10.1371/journal.pcbi.1008935. URL https://dx.plos.org/10.1371/journal.pcbi.1008935

- [29] A. Cimorelli, A. Patel, T. Karakostas, R. J. Cotton, Portable in-clinic video-based gait analysis: Validation study on prosthetic users. doi:10.1101/2022.11.10.22282089. URL http://medrxiv.org/lookup/doi/10.1101/2022.11.10.22282089

- [30] A. Mathis, P. Mamidanna, K. M. Cury, T. Abe, V. N. Murthy, M. W. Mathis, M. Bethge, DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning 21 (9) 1281–1289. doi:10.1038/s41593-018-0209-y. URL https://www.nature.com/articles/s41593-018-0209-y

- [31] L. Zhang, M. Agrawala, Adding Conditional Control to Text-to-Image Diffusion Models, preprint at https://arxiv.org/abs/2302.05543. doi:10.48550/ARXIV.2302.05543.

- [32] Mission gait. URL https://www.youtube.com/@MissionGait

- [33] J. Gardiner, N. Gunarathne, D. Howard, L. Kenney, Crowd-Sourced Amputee Gait Data: A Feasibility Study Using YouTube Videos of Unilateral Trans-Femoral Gait 11 (10) e0165287. doi:10.1371/journal.pone.0165287. URL https://dx.plos.org/10.1371/journal.pone.0165287

- [34] N. Nakano, T. Sakura, K. Ueda, L. Omura, A. Kimura, Y. Iino, S. Fukashiro, S. Yoshioka, Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose With Multiple Video Cameras 2 50. doi:10.3389/fspor.2020.00050. URL https://www.frontiersin.org/article/10.3389/fspor.2020.00050/full

- [35] K. Sato, Y. Nagashima, T. Mano, A. Iwata, T. Toda, Quantifying normal and parkinsonian gait features from home movies: Practical application of a deep learning–based 2D pose estimator 14 (11) e0223549. doi:10.1371/journal.pone.0223549. URL https://dx.plos.org/10.1371/journal.pone.0223549

- [36] K. Abe, K.-I. Tabei, K. Matsuura, K. Kobayashi, T. Ohkubo, OpenPose-based Gait Analysis System For Parkinson’s Disease Patients From Arm Swing Data, in: 2021 International Conference on Advanced Mechatronic Systems (ICAMechS), IEEE, pp. 61–65. doi:10.1109/ICAMechS54019.2021.9661562. URL https://ieeexplore.ieee.org/document/9661562/

- [37] G. Serrancoli, P. Bogatikov, J. P. Huix, A. F. Barbera, A. J. S. Egea, J. T. Ribe, S. Kanaan-Izquierdo, A. Susin, Marker-Less Monitoring Protocol to Analyze Biomechanical Joint Metrics During Pedaling 8 122782–122790. doi:10.1109/ACCESS.2020.3006423. URL https://ieeexplore.ieee.org/document/9131774/

- [38] T. Varrecchia, M. Serrao, M. Rinaldi, A. Ranavolo, S. Conforto, C. De Marchis, A. Simonetti, I. Poni, S. Castellano, A. Silvetti, A. Tatarelli, L. Fiori, C. Conte, F. Draicchio, Common and specific gait patterns in people with varying anatomical levels of lower limb amputation and different prosthetic components 66 9–21. doi:10.1016/j.humov.2019.03.008. URL https://linkinghub.elsevier.com/retrieve/pii/S0167945718307577

- [39] A. D. Koelewijn, A. J. Van Den Bogert, Joint contact forces can be reduced by improving joint moment symmetry in below-knee amputee gait simulations 49 219–225. doi:10.1016/j.gaitpost.2016.07.007. URL https://linkinghub.elsevier.com/retrieve/pii/S0966636216301448

- [40] H. Bateni, S. J. Olney, Kinematic and kinetic variations of below-knee amputee gait, JPO: Journal of Prosthetics and Orthotics 14 (1) (2002) 2–10.

- [41] L. Needham, M. Evans, D. P. Cosker, L. Wade, P. M. McGuigan, J. L. Bilzon, S. L. Colyer, The accuracy of several pose estimation methods for 3D joint centre localisation 11 (1) 20673. doi:10.1038/s41598-021-00212-x. URL https://www.nature.com/articles/s41598-021-00212-x

- [42] A. Lugmayr, M. Danelljan, A. Romero, F. Yu, R. Timofte, L. Van Gool, Repaint: Inpainting using denoising diffusion probabilistic models, arXiv preprint arXiv:2201.09865 (2022).