Diffusion-Based Visual Art Creation: A Survey and New Perspectives

Abstract.

The integration of generative AI in visual art has revolutionized not only how visual content is created but also how AI interacts with and reflects the underlying domain knowledge. This survey explores the emerging realm of diffusion-based visual art creation, examining its development from both artistic and technical perspectives. We structure the survey into three phases, data feature and framework identification, detailed analyses using a structured coding process, and open-ended prospective outlooks. Our findings reveal how artistic requirements are transformed into technical challenges and highlight the design and application of diffusion-based methods within visual art creation. We also provide insights into future directions from technical and synergistic perspectives, suggesting that the confluence of generative AI and art has shifted the creative paradigm and opened up new possibilities. By summarizing the development and trends of this emerging interdisciplinary area, we aim to shed light on the mechanisms through which AI systems emulate and possibly, enhance human capacities in artistic perception and creativity.

1. Introduction

As an emerging concept and evolving field, Artificial Intelligence Generated Content (AIGC) has made significant progress and impact over the past several years, especially since the diffusion model was proposed (Ho et al., 2020). On the other hand, visual art, encompassing a wide variety of genres, media, and styles, possesses high artistic value and diverse creativity, sparking widespread interest. However, compared to general method innovations (Song et al., 2020a; Ho and Salimans, 2022) and specific model designs (Esser et al., 2021; Ramesh et al., 2021), relatively limited research focuses on diffusion-based methods for visual art creation. Fewer works thoroughly examine the problem, summarize frameworks, or provide trends and insights for future research.

Relevant surveys approach this problem from both technical and artistic perspectives. Some recent surveys focus on the intersection of artificial intelligence with content generation, examining data modalities and tasks (Li et al., 2023b; Foo et al., 2023) to methodological progressions and applications (Cao et al., 2023a; Bie et al., 2023). These surveys reviewed a series of work on artistic stylization (Kyprianidis et al., 2012), appearance editing (Schmidt et al., 2016), text-to-image transitions (Zhang et al., 2023e), and the newfound applications of AI across multiple data modalities (Zhang et al., 2023d). Methodologically, they span neural style transfer (Jing et al., 2019), GAN inversion (Xia et al., 2022) to attention mechanisms (Guo et al., 2022) and diffusion models (Chen et al., 2024b)—each contributing to the state of the art in their own right. From an application perspective, they explore the transformative integration of AIGC across various domains, and while remarkable, they also highlight challenges that call for further development and ethical consideration (Liu et al., 2023b; Xu et al., 2024). Meanwhile, surveys with an artistic focus unravel the interplay between arts and humanities within the AIGC era, probing into the processing and understanding of art through advanced computational methods (Bengamra et al., 2024; Castellano and Vessio, 2021b; Liu et al., 2023c), the generative potential of AI in creating novel art forms (DePolo et al., 2021; Zhang et al., 2021), and the applicability of its integration in enhancing educational and therapeutic experiences (Mai et al., 2023; Chen et al., 2024a). We noticed a lack of surveys that specifically focus on combining diffusion-based models with visual art creation, and aim to fill this gap with our work.

This survey aims to provide a comprehensive review of the intersection of diffusion-based generative methods and visual art creation. We define the research scope through two independent taxonomies from technical and artistic perspectives, identifying diffusion-based generative techniques as one of the key methods and art as a significant application scenario. Our research goals are to analyze how diffusion models have revolutionized visual art creation and to offer frameworks and insights for future research in this area. We address four main research questions that explore the trending topics, current challenges, employed methods, and future directions in diffusion-based visual art creation.

We first conduct a structural analysis of diffusion-based visual art creation, which highlights current hot topics and evolving trends. Through categorizing data into application, understanding, and generation, we find a concentration of research on generation, specifically in controllable, application-oriented, and historically- or genre-specific art creation (Choi et al., 2021; Wang et al., 2023e; Liu et al., 2023a). Furthermore, we present a new analytical framework that aligns artistic scenarios with data modalities and generative tasks, allowing for a structured approach to the research questions. Temporal analysis suggests a post-diffusion boom in visual art creation, with a steady rise in diffusion-based methods (Saharia et al., 2022; Rombach et al., 2022; Gal et al., 2022). Generative methods have shifted from traditional rule-based simulations to diffusion-model modifications, along with a progression from image-only inputs to more controllable conditional formats, and an increase in dataset generality and process complexity (Ruiz et al., 2023; Zhang et al., 2023c; Mou et al., 2024; Bar-Tal et al., 2023). The emerging trends point towards a technical evolution from basic model frameworks to interactive systems and a shift in focus toward user requirements for creativity and interactivity. These findings set the stage for our survey, which aims to bridge the gap between technological advancements and artistic creation, fostering a synergy that can lead to a new wave of innovation in AIGC.

The exploration from artistic requirements to technical problems forms a cornerstone of our investigation in diffusion-based visual art. We scrutinize the symbiotic relationship between application domains, artistic genres, and their correspondence with data modality and generative tasks. Supported by a robust body of work (Abrahamsen2023InventingAS; Zhang et al., 2024c; Qiao et al., 2022; Huang et al., 2022; Liao et al., 2022), we delve into how different visual art forms and domains drive the development of technical solutions. From complex artistic scenarios to specific genres like traditional Chinese painting (Lyu et al., 2024; Fu et al., 2021; Wang et al., 2024a; Li et al., 2021; Wang et al., 2023a), our approach deciphers the intersection of AI and art, translating artistic goals into computational tasks. We establish a framework that defines these relationships via data modalities—ranging from brush strokes (Li et al., 2024) to 3D models (Dong et al., 2024)—and generative tasks like quality enhancement (He et al., 2023) and controllable generation (Kawar et al., 2023). These tasks are then meticulously tied to artistic objectives, with corresponding evaluation metrics such as CLIP Score for controllability (Radford et al., 2021; Patashnik et al., 2021) and FID for fidelity (Heusel et al., 2017; Salimans et al., 2016). This multifaceted evaluation system ensures that the generated art not only meets technical standards but also fulfills the nuanced demands of artistic creation, aligning with the evolving trends in the post-diffusion era.

We also investigate the intricate designs and applications of diffusion-based methods to explore how these methods enhance the generative process in visual art. We offer a detailed classification of tasks such as controllable generation, content editing, and stylization, each bolstered by novel diffusion-based approaches that prioritize user input and artistic integrity (Choi et al., 2021; Gal et al., 2022). Innovations like ILVR (Choi et al., 2021) and ControlNet (Zhang et al., 2023c) exemplify the strides made in achieving precise control over image attributes, while advances in methods like GLIDE (Nichol et al., 2021) and InstructPix2Pix (Brooks et al., 2023) showcase the growing sophistication in content editing and the ability to adaptively respond to textual prompts. Stylization techniques, such as InST (Zhang et al., 2023b) and DiffStyler (Huang et al., 2024), demonstrate the nuanced application of artistic styles, while quality enhancement tools like eDiff-I (Balaji et al., 2022) and PIXART- (Chen et al., 2023) push the limits of image resolution and fidelity. Furthermore, we categorize these methods based on a unified diffusion model structure, highlighting advancements in individual modules such as encoder-decoders, denoisers, and noise predictors (Lu et al., 2023; Liu et al., 2022; Chefer et al., 2023). These developments manifest in trends that emphasize attention mechanisms, personalization, control, quality, modularity, multi-tasking, and efficiency (Cao et al., 2023b; Ruiz et al., 2023; Kumari et al., 2023). The synthesis of these trends reflects a dynamic evolution in diffusion-based generative models, marking a transformative era in visual art creation.

The frontiers of diffusion-based visual art creation are seen through the lens of technical evolution and human-AI collaboration. Technically, we are witnessing a leap into higher dimensions and more diverse modalities, transcending traditional boundaries to create immersive experiences (Zhang et al., 2022a, 2024a). A synergistic perspective reveals a future where human and AI collaboration is seamless, allowing for interactive systems that augment human creativity and facilitate a deeper reception and alignment with content (Chung and Adar, 2023; Dall’Asen et al., 2023; Guo et al., 2023). These approaches range from the use of human concepts as task inspiration to the generation of content that resonates emotionally and models that encapsulate the essence of creativity (Sartori, 2014; Yang et al., 2024a; Wu, 2022). This multidimensional approach is shifting paradigms, enabling a greater understanding and co-creation between humans and AI (Russo, 2022; Zheng et al., 2024; Zhong et al., 2023). It paints a future where the boundaries between human and AI creativity become blurred, leading to a new era of digital artistry.

In summary, our literature review yields the following contributions:

-

•

A comprehensive dataset and taxonomy of AIGC techniques in visual art creation, coded with multi-dimensional, fine-grained labels.

-

•

A framework for analyzing and categorizing the relationship between diffusion-based generative methods and their applications in visual art creation, with multi-faceted features and relationships as key findings.

-

•

A summary of frontiers, trends, and future outlooks from multiple interdisciplinary perspectives.

2. Background

Prior to the emergence of diffusion models, the field of machine learning in visual art creation had already gone through several significant developments. These stages were marked by various generative models that opened new chapters in image synthesis and editing. One of the earliest pivotal advancements was the introduction of Generative Adversarial Networks (GANs) by Goodfellow et al. (Goodfellow et al., 2014), which introduced a new framework where a generator network learned to produce data distributions through an adversarial process. Following closely, CycleGAN by Zhu et al. (Zhu et al., 2017) overcame the need for paired samples, enabling image-to-image translation without paired training samples. These models gained widespread attention due to their potential in a variety of visual content creation tasks. Simultaneously with the development of GANs, another important class of models was the Variational Autoencoder (VAEs) introduced by Kingma and Welling (Kingma and Welling, 2013), which offered a method to generate continuous and diverse samples by introducing a latent space distribution. This laid the groundwork for controllable image synthesis and inspired a series of subsequent works. With enhanced computational power and innovation in model design, Karras et al. pushed the quality of image generation further with StyleGAN (Karras et al., 2020), a model capable of producing high-resolution and lifelike images, driving more personalized and detailed image generation. The incorporation of attention mechanisms into generative models significantly improved the relevance and detail of generated content. The Transformer by Vaswani et al. (Vaswani et al., 2017), with its powerful sequence modeling capabilities, influenced the entire field of machine learning, and in visual art generation, the successful application of Transformer architecture to image recognition with Vision Transformer (ViT) by Dosovitskiy et al. (Dosovitskiy et al., 2020), and further for high-resolution image synthesis with Taming Transformers by Esser et al. (Esser et al., 2021), showed the immense potential of Transformers in visual generative tasks. Subsequent developments like SPADE by Park et al. (Park et al., 2019) and the time-lapse synthesis work by Nam et al. (Nam et al., 2019) marked significant steps towards more complex image synthesis tasks. These methods provided richer context awareness and temporal dimension control, offering users more powerful creative expression capabilities. The introduction of Denoising Diffusion Probabilistic Models (DDPMs) by Ho et al. (Ho et al., 2020) and the subsequent showcase by Ramesh et al. of DALL·E (Ramesh et al., 2021), which could create images from textual prompts based on such models, marked another leap forward for generative models, adding a new chapter to the history of model development. These models achieved breakthroughs in image quality and also demonstrated new possibilities in terms of controllability and diversity. These developments constitute a rich history of visual art creation in the field of machine learning, laying a solid foundation for the arrival of the diffusion era. In this survey, we will delve deeper into how diffusion models inherit and transcend the boundaries of these prior technologies, opening a new chapter in creative generation.

From an artistic perspective, the advancements in machine learning and generative models have intersected intriguingly with the domain of visual arts, which encompasses a wide variety of genres, media, and styles. Artists have traditionally held the reins of creative power, with the ability to produce works that carry significant artistic value and cultural resonance. The introduction of sophisticated generative algorithms offers a new toolkit for artists, potentially expanding the boundaries of their creativity (Mazzone and Elgammal, 2019). As these technological tools become more accessible and integrated into artistic workflows, they present an opportunity for artists to experiment with novel forms of expression, blending traditional techniques with computational processes (Elgammal et al., 2017). This fusion sparks widespread interest not only within the tech community but also among art enthusiasts who are curious about the new creative possibilities (Miller, 2019). Machine learning models, especially those capable of generating high-quality visual content, are increasingly seen as collaborators in the artistic process. Rather than replacing human creativity, they are enhancing it, enabling artists to explore complex patterns, intricate details, and conceptual depths that were previously difficult or impossible to achieve manually (Gatys et al., 2016). This symbiotic relationship between artist and algorithm is transforming the landscape of visual art. Artists are beginning to harness these models to create works that challenge our understanding of art and authorship (McCormack et al., 2019). As a result, the dialogue between technology and art is becoming richer, with machine learning models contributing to the creation of art that offers greater creative freedom and artistic value. This evolving dynamic prompts both excitement and philosophical reflection on the nature of creativity and the role of artificial intelligence in the future of artistic expression.

3. Related Work

In this section, we provide an overview of the scope of AIGC and contributions of pertinent surveys that concentrate on fields and topics relevant to diffusion-based visual art creation. We first collected 42 surveys and filtered out 30 by relevance. These surveys are primarily categorized by their focus on either technical (17) or artistic (13) aspects. Collectively, they establish the paradigm of this interdisciplinary field and create a platform for our discussion.

3.1. Relevant Surveys with Technical Focus

From a technical view, a tier of surveys focus on the advancements and implications of artificial intelligence in content generation. For example, Cao et al. (Cao et al., 2023a) provides a detailed review of the history and recent advances in AIGC, highlighting how large-scale models have improved the extraction of intent information and the generation of digital content such as images, music, and natural language. We further break down this view into data and task, method, and application perspectives.

3.1.1. Data and Task Perspectives.

A series of surveys inspect AIGC from data and modality and highlight the evolution and challenges in various tasks, including artistic stylization, appearance editing, text-to-image generation, text-to-3D transformation, and AI-generated content across multiple modalities. Prior to the diffusion era, the survey by Kyprianidis et al. (Kyprianidis et al., 2012) delves into the field of nonphotorealistic rendering (NPR), presenting a comprehensive taxonomy of artistic stylization techniques for images and video. It traces the development of NPR from early semiautomatic systems to the automated painterly rendering methods driven by image gradient analysis, ultimately discussing the fusion of higher-level computer vision with NPR for artistic abstraction and the evolution of real-time stylization techniques. Schmidt et al. (Schmidt et al., 2016) review the state of the art in the artistic editing of appearance, lighting, and material, essential for conveying information and mood in various industries. The survey categorizes editing approaches, interaction paradigms, and rendering techniques while identifying open problems to inspire future research in this complex and active area. In the era of large generative models, Bie et al.’s survey on text-to-image generation (TTI) (Bie et al., 2023) explores how the integration with large language models and the use of diffusion models have revolutionized TTI, bringing it to the forefront of machine-learning research and greatly enhancing the fidelity of generated images. The review provides a critical comparison of existing methods and proposes potential improvements and future pathways, including video and 3D generation. Li et al. conducted the first comprehensive survey on text-to-3D (Li et al., 2023b), an active research field due to advancements in text-to-image and 3D modeling technologies. The work introduces 3D data representations and foundational technologies, summarizing how recent developments realize satisfactory text-to-3D results and are used in various applications like avatar and scene generation. Finally, Foo et al.’s survey on AI-generated content (Foo et al., 2023) spans a plethora of data modalities, from images and videos to 3D shapes and audio. It reviews single-modality and cross-modality AIGC methods, discusses the representative challenges and works in each modality, and suggests future research directions.

3.1.2. Method Perspective.

A main body of recent surveys in generative AI and computer vision has been on the evolution of methodologies for style transfer, GAN inversion, attention mechanisms, and diffusion models, which have been instrumental in driving forward the state-of-the-art. Neural Style Transfer (NST) has evolved into a field of its own, with a variety of algorithms aimed at improving or extending the seminal work of Gatys et al. (Jing et al., 2019) provides a taxonomy of NST algorithms and compares them both qualitatively and quantitatively, also highlighting the potential applications and future challenges in the field. In the realm of GANs, the survey on GAN inversion (Xia et al., 2022) details the process of inverting images back into the latent space to enable real image editing and interpreting the latent space of GANs. It outlines representative algorithms, applications, and emerging trends and challenges in this area. The survey on attention mechanisms in computer vision (Guo et al., 2022) categorizes them based on their approach, including channel, spatial, temporal, and branch attention. This comprehensive review links the success of attention mechanisms in various visual tasks to the human ability to focus on salient regions in complex scenes, and it suggests future research directions. Diffusion-based image generation models have seen significant progress, paralleling advancements in large language models like ChatGPT. (Zhang et al., 2023d) examines the issues and solutions associated with these models, particularly focusing on the stable diffusion framework and its implications for future image generation modeling. Text-to-image diffusion models are also reviewed (Zhang et al., 2023e), offering a self-contained discussion on how basic diffusion models work for image synthesis. This includes a review of state-of-the-art methods on text-conditioned image synthesis, applications beyond, and existing challenges. Retrieval-Augmented Generation (RAG) for AIGC is discussed in a survey that classifies RAG foundations and suggests future directions by illuminating advancements and pivotal technologies (Zhao et al., 2024b). The survey provides a unified perspective encompassing all RAG scenarios, summarizing enhancement methods, and surveying practical applications across different modalities and tasks. Finally, an overview of diffusion models addresses their applications, guided generation, statistical rates, and optimization (Chen et al., 2024b). It reviews emerging applications and theoretical aspects of diffusion models, exploring their statistical properties, sampling capabilities, and new avenues in high-dimensional structured optimization.

3.1.3. Application Perspective.

From an application perspective, recent surveys have explored the integration and impact of AIGC across different domains such as brain-computer interfaces, education, and mobile networks, emphasizing its transformative potential. Mai et al.’s survey (Mai et al., 2023) introduces the concept of Brain-conditional Multimodal Synthesis within the AIGC framework, termed AIGC-Brain. This domain leverages brain signals as a guiding condition for content synthesis across various modalities, aiming to decode these signals back into perceptual experiences. The survey provides a detailed taxonomy for AIGC-Brain decoding models, task-specific implementations, and quality assessments, offering insights and prospects for research in brain-computer interface systems. Chen’s systematic literature review (Chen et al., 2024a) addresses AIGC’s application in education, highlighting the profound impact of technologies like ChatGPT. The review identifies key themes such as performance assessment, instructional applications, and the advantages and risks of AIGC in education. It delves into the research trends, geographical distribution, and future agendas to integrate AI more effectively into educational methods, tools, and innovation. Xu et al. (Xu et al., 2024) survey the deployment of AIGC services in mobile networks, focusing on providing personalized and customized content while preserving user privacy. The survey examines the lifecycle of AIGC services, collaborative cloud-edge-mobile infrastructure, creative applications, and the associated challenges of implementation, security, and privacy. It also outlines future research directions for enhancing mobile AIGC networks.

These technically oriented surveys characterize remarkable advancements in the field of generative AI, emphasizing the innovative algorithms and interaction paradigms that enable the creation of diverse content across various data modalities. However, they also point out the existing challenges, including the need for further technical development, the consideration of ethical issues, and the imperative to address potential negative impacts on society.

3.2. Relevant Surveys with Artistic Focus

Another tier of work adopts an artistic view by specifically focusing on arts and humanities in the AIGC era. For example, Liu et al. (Liu et al., 2023b) explore the transformational impact of artificial general intelligence (AGI) on the arts and humanities, addressing critical concerns related to factuality, toxicity, biases, and public safety, and proposing strategies for responsible deployment. We further break the view into processing and understanding, generation, and application perspectives.

3.2.1. Processing and Understanding Perspectives.

The surveys with an artistic focus shed light on the intersection of art and technology, where advanced processing techniques and computational methods are employed to understand and enhance the appreciation of visual arts. Depolo et al.’s review (DePolo et al., 2021) discusses the mechanical properties of artists’ paints, emphasizing the importance of understanding paint material responses to stress through tensile testing data and other innovative techniques. The study highlights how new methods allow for the investigation of historic samples with minimal intervention, utilizing techniques such as nanoindentation, optical methods like laser shearography, computational simulations, and non-invasive approaches to predict paint behavior. Castellano et al. (Castellano and Vessio, 2021b) provides an overview of deep learning in pattern extraction and recognition within paintings and drawings, showcasing how these technological advances paired with large digitized art collections can assist the art community. The goal is to foster a deeper understanding and accessibility of visual arts, promoting cultural diffusion. Zhang et al.’s comprehensive survey on the computational aesthetic evaluation of visual art images (Zhang et al., 2021) tackles the challenge of quantifying aesthetic perception. It reviews various approaches, from handcrafted features to deep learning techniques, and explores applications in image enhancement and automatic generation of aesthetic-guided art, while addressing the challenges and future directions in this field. Liu et al.’s review on neural networks for hyperspectral imaging (HSI) of historical paintings (Liu et al., 2023c) details the application of neural networks for pigment identification and classification. By focusing on processing large spectral datasets, the review contributes to the application of these networks, enhancing artwork analysis and preservation of cultural heritage. Lastly, Bengamra’s survey on object detection in visual art (Bengamra et al., 2024) offers a taxonomy of the methods used in the analysis of artwork images, proposing a classification based on the degree of learning supervision, methodology, and style. It outlines challenges and future directions for improving object detection performance in visual art, contributing to the overall understanding of human history and culture through art.

3.2.2. Generation Perspective.

Surveys focused on the generation of art through AI technologies underscore the transformative role AI plays in both understanding and creating visual arts. Cetinic et al. (Cetinic and She, 2022) offer an integrated review of AI’s dual application in art analysis and creation, including an overview of artwork datasets and recent works tackling various tasks such as classification and computational aesthetics, as well as practical and theoretical considerations in the generation of AI Art. Shahriar et al. (Shahriar, 2022) examine the potential of GANs in art creation, exploring their use in generating visual arts, music, and literary texts. This survey highlights the performance and architecture of GANs, alongside the challenges and future recommendations in the field of computer-generated arts. Liu’s overview of AI in painting (Liu, 2023) reveals the field’s current status and future direction, discussing how AI algorithms can produce unique art forms and automate tasks in traditional painting, thereby promising a revolution in the digital art world and traditional painting processes. Ko et al. (Ko et al., 2023) delve into Large-scale Text-to-Image Generation Models (LTGMs) like DALL-E, discussing their potential to support visual artists in creative works through automation, exploration, and mediation. The study includes an interview and literature review, offering design guidelines for future intelligent user interfaces using LTGMs. Lastly, Maerten et al.’s review on deep neural networks in AI-generated art (Maerten and Soydaner, 2023) examines the evolution of these architectures, from classic convolutional networks to advanced diffusion models, providing a comparison of their capabilities in producing AI-generated art. This review encapsulates the rapid progress and interaction between art and computer science.

3.2.3. Application Perspective.

The surveys with an artistic focus on application delve into the transformative potential of integrating art with other disciplines, particularly science education and therapy, to foster holistic learning and healing experiences. Turkka et al. (Turkka et al., 2017) investigates how art is integrated into science education, revealing through a qualitative e-survey of science teachers (n=66) that while the incorporation of art can enhance teaching, it is infrequently applied in classroom practices. The study presents a pedagogical model for art integration, which characterizes integration through content and activities, and suggests that teacher education should provide more consistent opportunities for art integration to enrich science teaching. The study on art therapy (Hu et al., 2021b) surveys the clinical applications and outcomes of art therapy as a non-pharmacological intervention for mental disorders. The systematic review of 413 literature pieces underscores the clinical effectiveness of art therapy in alleviating symptoms of various mental health conditions, such as depression, anxiety, and cognitive impairments, including Alzheimer’s and autism. It emphasizes the therapeutic power of art in assisting patients to express emotions and providing medical specialists with complementary diagnostic information.

The artistically-oriented surveys reveal how technological advancements—specifically in AI—have revolutionized not only the analysis and preservation of visual arts but also enabled the active creation of innovative art forms. These studies underscore the potential of AI to deeply understand artistic nuances and contribute creatively, thus enriching the artistic domain with new tools and methodologies. However, we observe that while there is existing literature surveying diffusion models and visual art creation individually, there is a gap in research that synthesizes both perspectives. This opens up an opportunity to explore how diffusion models can be specifically applied to the domain of visual art creation, potentially leading to innovative approaches that could transform content production and understanding. We aim to bridge this gap by merging the technical intricacies of generative AI with the creative process of art. By doing so, we seek to contribute to the ongoing dialogue between art and technology, enhancing the creative process and expanding the scope of possibilities in visual art creation.

4. Research Scope and Concepts

In this section, we first define the survey’s research scope and explain relevant concepts. Then, we summarize our research goals and target questions. Together, they establish a coherent context and lay a foundation for the following sections.

4.1. Research Scope

Based on the surveys discussed in the previous section, we identify two independent taxonomies in the technical and artistic realms. The first taxonomy, typical in surveys with a technical focus, categorizes diffusion-based generative techniques as one of the generative methods and art as an application scenario (Cao et al., 2023a; Li et al., 2023b; Foo et al., 2023; Liu et al., 2023b). On the other hand, surveys with an artistic stance commonly adopt historical or theoretical perspectives, categorize relevant research by application scenarios and artistic categories (in Sec. 5.1, we correspond them to different data modalities or applications), and focus more on the implications of generated results (Liu, 2023; Liu et al., 2023c; DePolo et al., 2021; Castellano and Vessio, 2021a; Zhang et al., 2021). We display the two taxonomies and our research scope in Fig. 1. The independent taxonomies are represented as perpendicular axes. Following our motivation, this survey lies in the intersection of these two axes.

4.2. Relevant Concepts

To clearly define our research scope and differentiate it from similar work, we provide an explanation and categorization method for the two most relevant concept realms and their sub-concepts.

4.2.1. Diffusion Model.

In Jan. 2020, Ho et al. proposed Denoising Diffusion Probabilistic Models (Ho et al., 2020) and tested its performance on multiple image synthesis tasks, proclaiming the advent of the post-diffusion era. Ten months later, Song et al. adapted the denoising process to the latent space and significantly improved the generative performance, which is called Denoising Diffusion Implicit Models (Song et al., 2020a). In 2021, different researchers optimized the method by integrating advanced text-image encoders (e.g., CLIP (Radford et al., 2021)) and conditioning methods (e.g., ILVR (Choi et al., 2021)). Another series of work systematically framed the generative task (Nichol et al., 2021) and established relevant benchmarks (Dhariwal and Nichol, 2021), demonstrating surpassing performance than previous state-of-the-art methods.

In early 2022, many technical companies released respective diffusion-based generative frameworks, including DALL·E-2 (Ramesh et al., 2022), Imagen (Saharia et al., 2022), Stable Diffusion (Rombach et al., 2022), etc. These methods feature extensive training and can generate high-quality, artistic images to meet commercial needs. From late 2022, the field has shifted from a common focus to different sub-tracks and downstream applications, by diversifying multiple tasks, introducing different methods, and adapting to various scenarios. Meanwhile, within the AIGC framework, the field of Natural Language Processing (NLP) has also witnessed significant breakthroughs. Researchers proposed foundational models (e.g., the GPT series (OpenAI, 2023)), designed adaptation methods (e.g., LoRA (Hu et al., 2021a)), and achieved comparable performance with humans in NLP tasks (Bubeck et al., 2023). Combined with these advancements, the field of Diffusion Model increased in both width and inclusiveness, becoming more expanded and more interconnected with other fields.

Fig. 2 illustrates the basic structure of how a diffusion model is used as the core structure for a complete generative process (Fig. 2-(a)), and how the model is combined with other pre-trained foundational models (such as CLIP as text-image encoder, Fig. 2-(b)) to accomplish typical text-to-image generative tasks. From a technical perspective, the structure of a diffusion-based generative model typically consists of the following five parts:

-

•

Encoder and Decoder. In image generation, the encoder and decoder connect the pixel space and latent space. During the generation process, the encoder compresses the input data into a latent representation, and the decoder subsequently reconstructs the output from this compressed form.

-

•

Denoiser. As a core component, the denoiser works to remove noise from the latent, in a step-by-step manner, by a set of learned Gaussian noises. Researchers design both new model structures and denoising processes for better performance.

-

•

Noise Predictor. This module predicts key parameters of noise distribution, which is learned during the training process. Setting proper noise can guide the generation process toward intended targets.

-

•

Post-Processor. After the initial output is generated, this module refines the results by enhancing its resolution and final quality.

-

•

Additional Modules. These may include any extra components that supplement the core functionality, such as modules to improve controllability or fulfill specific tasks.

In Sec. 5.4, we will adhere to this framework to categorize different generative methods.

4.2.2. Visual Art.

We break up the topic by three different perspectives of visual art 1) as a conceptual realm under art, 2) as visual contents created by artists, and 3) as generated results with the quality of artistic. Such perspectives will be revisited in the Discussion section (Sec. 6).

-

•

What is Visual Art? Art is broadly understood to encompass a variety of creative expressions that convey ideas, emotions, and concepts through various media. Among them, Visual Art features different visual forms as media (Lazzari and Schlesier, 2008). This field can be further split into different artistic categories, including painting, sketch, sculpture, etc. (where painting is the most common category), and different dimensions, including 1D brush stroke, 2D images, 3D scenes, etc. (where 2D images are the most common representation).

-

•

Who is the Artist? Throughout our history, different schools of criticism have adopted different views on the subject and creation of artwork. One perspective of AIGC is to mimic the role of artists with advanced generative models, which provides a possible future framework for creativity (McCormack and D’Inverno, 2014).

-

•

How is Visual Art Created? In visual art generation, a common way is to incorporate human aesthetics and expertise into both the generative and evaluation processes. Such a system includes data choice, task definition, and model design, which is parallel to the artist’s role as both creators and receivers (e.g., (Elgammal et al., 2017)). However, the process is data-driven and rule-based, which is different from perception, emotion, and creativity as artists’ driving forces.

-

•

What is Artistic? In the artistic realm, the definition of artistic features more psychological and philosophical elements and is also under debate (Dutton, 2009). However, scientific researchers often adopt a common ground and propose more acceptable standards and more approachable metrics. As will be discussed in Sec. 5.3, the commonly referred terms include high quality, stylistic/realistic, controllable, etc.

4.3. Research Goals and Questions

Following the previous discussion and prior work, we summarize two research goals of our paper:

-

G1

Analyze how diffusion-based methods have facilitated and transformed visual art creation. How are diffusion-based generative systems and models used for different Visual Art applications?

-

G2

Provide frameworks, trends, and inspirations for future research in relevant fields. How may human and generative AI inspire each other in Diffusion-Based Visual Art Creation?

Based on the two research goals, we further propose four research questions as the basis of this survey.

-

Q1

What are the most attended topics in diffusion-based Visual Art Creation? This is the basic step to identify hot issues and construct a consistent framework. The question also concerns contrasts between diffusion-based and non-diffusion-based methods, and the temporal features and evolution of this field.

-

Q2

What are current research problems/needs/requirements in diffusion-based Visual Art creation? This question ranges from an artistic/user perspective to a technical/designer perspective. In the following sections, we further break it down to artistic requirements and technical problems, featured by application scenarios, data modalities, and generative tasks, and attempt to establish connections between them.

-

Q3

What are the methods applied in diffusion-based Visual Art creation? For each technical problem, we focus on diffusion-based method design according to its modalities and tasks. As DDPM, DDIM, and their extensions follow similar model structures, we can further categorize and organize the methods based on the unified structure of an extended diffusion model.

-

Q4

What are the frontiers, trends, and future works? We are interested in the following questions: Are there any further problems to solve? How may we leverage the development of a diffusion model and its application in relevant fields to cope with the problems?

5. Findings

In this section, we aim to fulfill G1 by answering questions Q1–Q3.

5.1. Structural Analysis and Framework Construction

5.1.1. Data Classification.

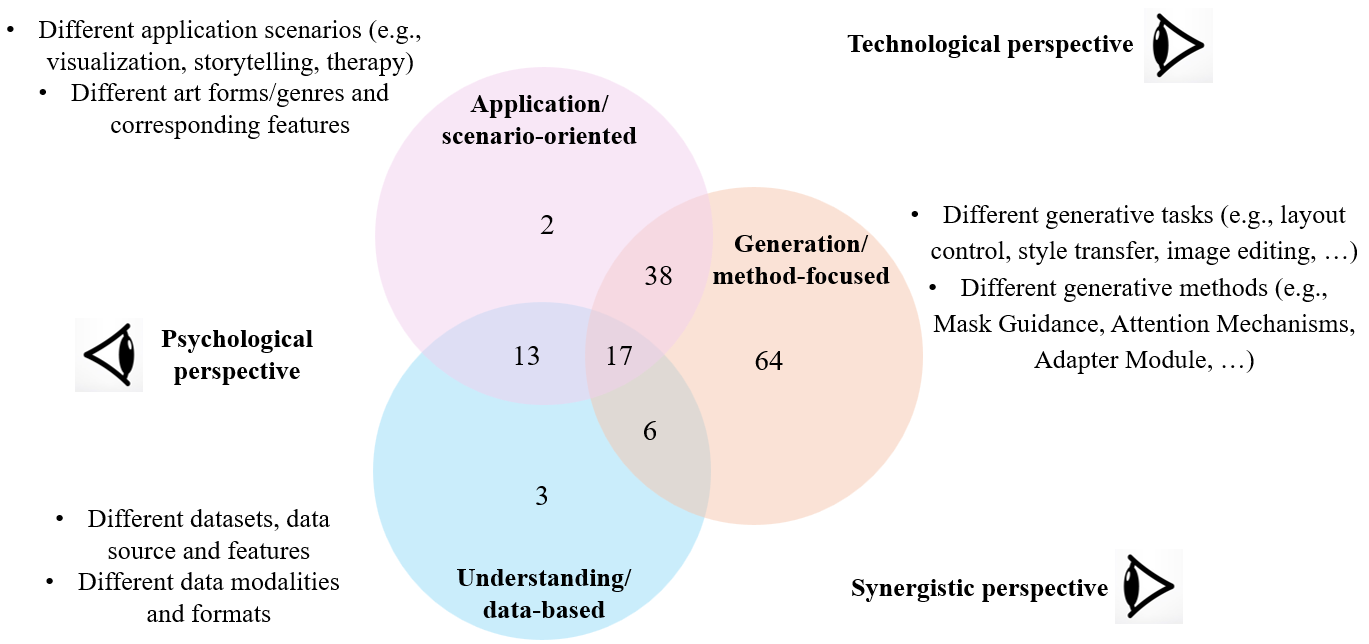

We focus on the first question: What are the currently most attended topics in diffusion-based Visual Art Creation? (Q1) We first summarized different paper codes proposed in Sec. LABEL:sec:_coding along with the index terms of each selected paper. Among them, a major part is closely related to method design, while others concern data, modalities, artistic genres, and application scenarios. We found that these terms basically form three categories and thus applied a Venn Chart to characterize different works, as shown in Fig. 3. The three categories include:

- •

-

•

Understanding. Different data forms and corresponding modalities (e.g., image series (Song et al., 2020b; Suh et al., 2022; Braude et al., 2022), 3D scenes (Dong et al., 2024; Zhang et al., 2024a; Haque et al., 2023)). From an artistic perspective, the first two categories characterize different art forms/genres with corresponding features.

-

•

Generation. Different generative tasks (e.g., style control (Liang et al., 2023; Wu et al., 2023), style transfer (Zhang et al., 2024c, b), image editing (Hertz et al., 2022; Biner et al., 2024)) and Different generative methods (e.g., ControlNet (Xue et al., 2024; Lukovnikov and Fischer, 2024), Textual Inversion (Ahn et al., 2024; Zhang et al., 2023b), LoRA (Shah et al., 2023; Chen et al., 2024c))

With such a categorization method, we can approach the dataset from different perspectives and identify corresponding hot topics. As shown in Fig. 3, most of the selected works lie in four subsets of the seven areas, including:

- •

- •

- •

- •

5.1.2. Framework Construction.

Since most of the work is concentrated at the pole of generation, we dived into the generative part and paid more attention to the intersection areas. We found that 1) Research in Diffusion-Based Visual Art Creation is typically characterized by different artistic scenarios and technical methods. 2) the artistic requirements and technical problems are basically connected by specifying data modality and extracting generative tasks. As a result, we summarized a new framework that can better characterize the current research paradigm (Fig. 4).

Based on the framework, we can further break down Q1 into a series of consequential questions and approach a generative problem from different perspectives:

-

•

Scenario. What are the common features and requirements of different artistic scenarios?

-

•

Modality. What are the data modalities applied, including training dataset, input, conditions, and output?

-

•

Task. What are popular research problems in generating Visual Arts, including their technical statements and classification?

-

•

Method. What are the methods used to augment and adapt diffusion models?

In the following sections, we will refer to this structure to analyze the relationships represented by each red dashed line.

5.2. Temporal Analysis and Trend Detection.

In this part, we investigate how the number of publications, categories, and keywords in different dimensions evolve over time in our dataset. We specifically focus on the difference between pre-diffusion and post-diffusion eras.

5.2.1. Data Distribution.

Fig. 5 displays the temporal distribution of our dataset, including the time when major diffusion-based models are proposed. According to the figure, most of the work is published after Jun. 2022, especially after Feb. 2023, when a series of landmark methods were introduced. We also calculate the proportions of different categories of work and demonstrate the result in Fig. 6. We found that while the proportion of generation research remains essentially the same, understanding research keeps growing in the four years of 2020-2023, and application research witnessed a surge in 2023. On the other hand, the proportion of diffusion-based methods increased steadily, from around 20% in 2020 to over 70% in 2023.

5.2.2. Topic Evolution.

In examining the dataset, we noticed that most methods and some tasks change and upgrade over time, while application scenarios are generally constant. We thus provide a Word Cloud Chart (Fig. 7) to compare methods and tasks in pre-diffusion and post-diffusion eras and illustrate the main application scenarios, artistic categories, method features, and user requirements. According to the figure, most generative tasks stay the same, while some traditional tasks (e.g., NPR) are less mentioned. However, generative methods have undergone a major shift, from SBR (stroke-based rendering), rule-based generation, and physical-based simulation, to a series of modifications based on diffusion models.

5.2.3. Qualitative Comparison.

From a microscopic perspective, we are also interested in how the development of diffusion-based models introduces new methods for solving traditional problems. We thus selected five artistic genres or scenarios, including robotic painting, Chinese landscape painting, ink painting, story visualization, and artistic font synthesis, to compare different approaches for similar problems and tasks.

Fig. 8 displays an example to compare different methods for similar tasks (portrait stylization) before and after the Diffusion Era. According to the similarities between the two workflows, we derived a unified, model-agnostic structure for solving the problem. As shown in Fig. 8, in solving the task of face stylization (stylized portrait generation), both methods generate the new image based on the previous two images. Meanwhile, they both refer to the input image (content reference) for details and local information, and the template image (style reference) for background and global information in the generative process. When traditional tasks meet with new methods, such frameworks provide an interesting perspective to capture the embedded human expertise. On the other hand, the two approaches display multiple differences in result quality, model complexity, computational cost, etc. Based on multiple pairs of selected work in our dataset, we summarize the following trends:

-

•

Input Format: from images only to images and masks as conditional input (controllability↑)

-

•

Dataset: from fixed database to arbitrary image (generalizability↑)

-

•

Generative Process: from explicit/pixel manipulation to implicit/latent manipulation (Complexity↑)

-

•

Method Category: from traditional rule-based image processing to diffusion-based image stylization (Computational cost/Time consumption↑)

5.2.4. A Brief Summary and Outlook.

In the previous section, we have compared methods before and after the Diffusion Era, to borrow frameworks and ideas from the Pre-Diffusion Era and inspire new method design. Here we are further interested in identifying research gaps from temporal trends and task-method relationships in Diffusion-Based Visual Art Creation.

| Year | Method Features | User Requirements |

|---|---|---|

| 2021 | Basic Model, Dataset, Metric, Evaluation | (No specific requirements) |

| 2022 | Framework, Adaptive, Sampling, Fine-tuning | Photorealistic, Multilayer, Artistic, Creative, Coherent |

| 2023 | Tuning-free, Training-free, System, Prompt | Controllable, Subject, Disentanglement, Interaction, Painterly |

| 2024 | Inversion, Dilation, Layer-aware, Step-aware | Personalization, Composition, Visualization, Concept, Context |

In Table 1, we present the top growing keywords in method features and user requirements for the post-diffusion era. We first calculate keyword frequencies for each year from 2020 to 2024 (up until May 2024). We then calculate the difference in each word’s frequency compared to the previous year. The words with the highest frequency growth are identified as ’Top Growing Key Words’. Combine Table 1 with Fig. 7, we identify some major trends in Diffusion-Based Visual Art Creation:

-

•

Technically, the research type developed from a basic model to a generative framework to an interactive system. Researchers’ design focus also shifted from developing benchmarks (dataset, metric, evaluation) to introducing generative methods (sampling, inversion, dilation), with a general trend to simplify the generative process (tuning-free and training-free).

-

•

Artistically, user requirements are diverging from higher quality (photorealistic, artistic, coherent) to multiple diversified needs (controllable, composition, visualization), and research focus has shifted from the generated visual content (multilayer, coherent) to creative subject (personalization, concept, context). The most notable requirement is creative, which emerged two years ago but has not been well resolved until now (Sec. LABEL:sec:_creativity).

-

•

Interdisciplinarily, the keywords also manifested more collaborations between human creators and AI models. On the one hand, experts introduced principles in the diffusion process (e.g., step-aware) and concepts from artistic areas (e.g., layer-aware), to boost controllability and performance. On the other hand, researchers ventured into understanding implicit latent (e.g., disentanglement), adapting the system to user inputs (e.g., prompt), and catering the diffusion model to the human thinking process (e.g., interaction).

In Sec. 6, we will go into detail to discuss the trends and future outlook from multiple perspectives.

5.3. From Artistic Requirements to Technical Problems

In this section, we focus on the upper half of the Rhombus framework (Fig. 4), to summarize current research problems/needs/requirements in Diffusion-Based Visual Art creation (Q2). Specifically, we start from application scenarios and artistic genres, analyze their corresponding data modality and generative task, and dive into their key requirements/goals and computational statements. By doing this, we aim to fill in the three connections and bridge the gap between artistic requirements and technical problems.

5.3.1. Application Domain and Artistic Category.

Among our 143 selected papers, 70 are coded as application/scenario-oriented. Within this subset, 55 papers focus on specific artistic categories (e.g., traditional paintings, human portraits, and specific art genres), and 17 focus on relevant domains (e.g., story visualization, replication prevention, human-AI collaboration). We summarize representative work in different application scenarios, focusing on how they formulate and tackle the domain issues.

The first series of works view visual art (or digital art, fine art) as a general category. Abrahamsen et al. (Abrahamsen2023InventingAS) introduce innovative methods to invent art styles using models trained exclusively on natural images, thereby circumventing the issue of plagiarism in human art styles. Their approach leverages the inductive bias of artistic media for creative expression, harnessing abstraction through reconstruction loss and inspiration from additional natural images to forge new styles. This holds the promise of ethical generative AI use in art without infringing upon human creators’ originality. In a similar vein, Zhang et al. (Zhang et al., 2024c) address the limitations of existing artistic style transfer methods, which either fail to produce highly realistic images or struggle with content preservation, by proposing ArtBank. This novel framework, underpinned by a Pre-trained Diffusion Model and an Implicit Style Prompt Bank (ISPB), adeptly generates lifelike stylized images while maintaining the content’s integrity. The added Spatial-Statistical-based self-attention Module (SSAM) further refines training efficiency, with their method surpassing contemporary artistic style transfer techniques in both qualitative and quantitative evaluations. Meanwhile, Qiao et al. (Qiao et al., 2022) explore the use of image prompts in conjunction with text prompts to enhance subject representation in multimodal AI-generated art. Their annotation experiment reveals that initial images significantly improve subject depiction, particularly for concrete singular subjects, with icons and photos fostering high-quality, aesthetically varied generations. They provide valuable design guidelines for leveraging initial images in AI art creation. Furthermore, Huang et al. (Huang et al., 2022) present the multimodal guided artwork diffusion (MGAD) model, a novel approach to digital art synthesis that leverages multimodal prompts to direct a classifier-free diffusion model, thereby achieving greater expressiveness and result diversity. The integration of the CLIP model unifies text and image modalities, with substantial experimental evidence endorsing the efficacy of the diffusion model coupled with multimodal guidance. Lastly, Liao et al. (Liao et al., 2022) contribute to the field by introducing ArtBench-10, a class-balanced, high-grade dataset for benchmarking artwork generation. It stands out with its clean annotations, high-quality images, and standardized dataset creation process, addressing the skewed class distributions prevalent in prior artwork datasets. Available in multiple resolutions and formatted for seamless integration with prevalent machine learning frameworks, ArtBench-10 facilitates comprehensive benchmarking experiments and in-depth analyses to propel generative model research forward. Collectively, these works illustrate the dynamic intersection of AI and art, where innovative methodologies and datasets are expanding the frontiers of artistic creation, opening avenues for novel styles, ethical considerations, and enhanced representation in the digital art sphere.

The second series of works focus on specific artistic genres or historical contexts, among which traditional Chinese painting is most frequently visited. Wang et al. (Wang et al., 2023e) introduce CCLAP, a pioneering method for controllable Chinese landscape painting generation. By leveraging a Latent Diffusion Model, CCLAP consists of a content generator and style aggregator that together produce paintings with specified content and style, evidenced by both qualitative and quantitative results that showcase the model’s artful composition capabilities. A dedicated dataset, CLAP, has been developed to evaluate the model comprehensively, and the code has been made accessible for broader use. Addressing the issue of low-resolution images in the digital preservation of Chinese landscape paintings, Lyu et al. (Lyu et al., 2024) propose the diffusion probabilistic model CLDiff. It employs iterative refinement steps akin to the Langevin dynamic process to transform Gaussian noise into high-quality, ink-textured super-resolution images, while a novel attention module enhances the U-Net architecture’s generative power. Fu et al. (Fu et al., 2021) tackle the challenge of generating traditional Chinese flower paintings with various styles such as line drawing, meticulous, and ink through a deep learning approach. Their Flower-Generative Adversarial Network framework, bolstered by attention-guided generators and discriminators, facilitates style transfer and overcomes common artifacts and blurs. A new loss function, Multi-Scale Structural Similarity, is introduced to enforce structural preservation, resulting in higher quality multi-style Chinese art paintings. From the perspective of generative teaching aids, Wang et al. (Wang et al., 2024a) present ”Intelligent-paint,” a method for generating the painting process of Chinese artworks. Using a Vision Transformer-based generator and an adversarial learning approach, this method emphasizes the unique characteristics of Chinese painting, such as void and brush strokes, employing loss constraints to align with traditional techniques. The coherence of the generated painting sequences with real painting processes is further validated by expert evaluations, making it a valuable tool for beginners learning Chinese painting. Finally, Li et al. (Li et al., 2021) introduce the novel task of artistically visualizing classical Chinese poems. For this, they construct the Paint4Poem dataset, comprising high-quality poem-painting pairs and a larger collection to assist in training poem-to-painting generation models. Despite the models’ capabilities in capturing pictorial quality and style, reflecting poem semantics remains a challenge. Paint4Poem opens many research avenues, such as transfer learning and text-to-image generation for low-resource data, enriching the intersection of literature and visual art. These works collectively highlight the potential of diffusion-based techniques in enriching the field of traditional Chinese painting, offering advanced tools for both creation and restoration and enhancing the educational process for aspiring artists.

With the development of diffusion-based generative methods, the application scenario has expanded to cover a wide range of artistic categories, including human images, portraits, fonts, and more. Ju et al. (Ju et al., 2023) have crafted the Human-Art dataset to bridge the gap between natural and artificial human representations. Spanning natural and artificial scenes, this dataset is comprehensive, covering 2D and 3D instances, and is poised to enable advancements in various computer vision tasks such as human detection, pose estimation, image generation, and motion transfer. Liu et al. (Liu et al., 2023a) present Portrait Diffusion, a training-free face stylization framework that utilizes text-to-image diffusion models for detailed style transformation. This novel framework integrates content and style images into latent codes, which are then delicately blended using Style Attention Control, yielding precise face stylization. The innovative Chain-of-Painting method allows for gradual redrawing of images from coarse to fine details. In the realm of secondary painting for artistic productions like comics and animation, Ai et al. (Ai and Sheng, 2023) introduce Stable Diffusion Reference Only, a method that accelerates the process with a dual-conditioning approach using image prompts and blueprint images for precise control. This self-supervised model integrates seamlessly with the original UNet architecture, enhancing efficiency and controllability without the need for complex training methods. Wang et al. (Wang et al., 2023a) tackle the challenge of creating nontypical aspect-ratio images with MagicScroll, a diffusion-based image generation framework. It addresses issues of content repetition and style inconsistency by allowing fine-grained control of the creative process across object, scene, and background levels. This model is benchmarked against mediums like paintings, comics, and cinema, demonstrating its potential in visual storytelling. Lastly, Tanveer et al. (Tanveer et al., 2023) introduce DS-Fusion, a method for generating artistic typography that balances stylization with legibility. Utilizing large language models and an unsupervised generative model with a diffusion model backbone, it creates typographies that visually convey semantics while remaining coherent. DS-Fusion is validated through user studies and stands out against prominent baselines and artist-crafted typographies. Together, these advancements signify a major leap in the application of diffusion-based methods to a myriad of artistic categories. By encompassing human-centric datasets, training-free frameworks, speed-enhancing models for artists, tools for visual storytelling, and typography generation techniques, the scope of AI in art creation is being pushed to new, previously unimagined heights.

5.3.2. Representing Scenarios as Modalities and Tasks.

Next, we attempt to structure different application scenarios by their corresponding data modalities and generative tasks. In this way, we aim to approach the embedded technical problems and establish alignment between artistic requirements and technical problems.

Following the common practice in AIGC, we first categorize artistic scenarios by different data modalities:

-

•

Thread/Brushstroke. The first series of work focus on brush stroke generation. The problem has been long-studied and technically attended since around 2000 and can be well solved by traditional rendering and rule-based methods, with little involvement of diffusion-based models (Lee et al., 2005; Yang et al., 2019; Nakano, 2019; Bidgoli et al., 2020; Fang et al., 2018).

- •

- •

- •

-

•

Others. Other artistic genres are commonly believed to possess certain modality features. For example, sketch share both a raster and a vector representation, thus inspiring researchers to take different generative approaches (Li et al., 2024; Wang et al., 2022; Wang, 2022; Wang et al., 2021; Ciao et al., 2024).

Next, we summarize typical tasks in Diffusion-Based Visual Art Creation:

-

•

Quality Enhancement. As the baseline task in content generation and the basic requirement in visual art creation, the generated content should possess higher resolution and better quality. This is commonly realized by aesthetic training data, advanced model structure, more parameters, and result optimization designs. In the post-diffusion era, these methods are integrated into training foundation models (He et al., 2023; Nichol et al., 2021; Chen et al., 2023; Zhang et al., 2024b; Rombach et al., 2022).

-

•

Controllable Generation. The requirement emerges from artists’ need to precisely control each perspective of their generated results, including context, subject, content, and style. Researchers adapt diversified ways, including additional information encoding, cross-attention mechanism, and retrieval augmentation, to support different forms of conditions (Zhang et al., 2023c; Li et al., 2023a; Ye et al., 2023b; Huang et al., 2024; Zhao et al., 2024a).

-

•

Content Editing and Stylization. This task is seen in various scenarios such as iterative generation, collaborative creation, and image inpainting. Following the understanding of high-level concept and low-level style in deep latent structure, experts are also working on decoupling the two aspects, to improve the performance of diffusion-based models on style transfer, style control, style inversion, etc (Hertz et al., 2022; Lu et al., 2023; Brack et al., 2022; Abrahamsen2023InventingAS; Kawar et al., 2023).

-

•

Specialized Tasks. According to different visual art scenarios and inspired by human concepts, experts summarized and proposed new tasks including compositional generation (e.g., concept, layout, layer) and latent manipulation. Still, more research is application-oriented, designed, and optimized for specific data types (e.g., human portrait) or specific scenarios (e.g., multiview art) (Wang et al., 2023a; Wu et al., 2023; Zhou et al., 2023; Chefer et al., 2023; Liu et al., 2022).

5.3.3. From Artistic Goals to Evaluation Metrics.

In Diffusion-Based Visual Art Creation, artistic goals drive the development of generative tasks, and the success of these tasks is measured using specific evaluation metrics. In Table 2, we summarize common artistic goals and list several evaluation metrics as an example:

-

•

Controllability. Achieving precise control over generated outcomes is measured by metrics that evaluate the adherence to user-specified prompts and directions.

-

–

CLIP Score: Assesses alignment between text prompts and generated images using CLIP (Contrastive Language-Image Pretraining) embeddings (Radford et al., 2021).

-

–

CLIP Directional Similarity: Measures the semantic similarity between changes in text prompts and corresponding changes in generated images (Patashnik et al., 2021).

-

–

-

•

Visual Quality. The quality of generated art is quantified by subjective and objective metrics that reflect the aesthetic and technical excellence of the artwork.

-

–

User studies: Subjective evaluations where users rate the visual appeal and aesthetic qualities of generated content (Wang et al., 2023b).

-

–

LAION-AI Aesthetics: A metric that uses a dataset from LAION-AI to objectively evaluate the aesthetic aspects of generated images, such as harmony, balance, and composition (Schuhmann et al., 2021).

-

–

-

•

Fidelity. The fidelity of the generated content to the target data distribution is gauged using metrics that compare the statistical properties of generated and real artwork.

- –

- –

-

•

Interpretability. For the goal of interpretability, metrics assess how well we can understand and manipulate the generative model’s inner workings.

- –

- –

| Artistic Goal | Example Evaluation Metric |

|---|---|

| Controllability | CLIP Score (Radford et al., 2021), CLIP Directional Similarity (Patashnik et al., 2021) |

| Visual Quality | User studies, LAION-AI Aesthetics (Schuhmann et al., 2021) |

| Fidelity | Fréchet Inception Distance (Heusel et al., 2017), Inception Score (Salimans et al., 2016) |

| Interpretability | Disentanglement metrics, feature attribution |

5.4. Design and Application of Diffusion-Based Methods

In the previous discussion, we gradually shifted from an artistic/user perspective to a technical/designer perspective. In this part, we focus on the lower half of the Rhombus framework (Fig. 4), to summarize specific methods applied in Diffusion-Based Visual Art Creation (Q3).

5.4.1. From Generative Tasks to Method Design.

Based on the previously summarized generative tasks, we first categorize representative diffusion-based methods applied to solve each problem. We specifically focus on controllable generation, content editing, and stylization which together take up more than 80% of research focuses in generative/method-based research.

Controllable generation. In the realm of controllable generation, various studies have presented innovative approaches to guide diffusion models effectively. The work by Choi et al. (Choi et al., 2021) introduces Iterative Latent Variable Refinement (ILVR), which conditions denoising diffusion probabilistic models (DDPM) using a reference image. The ILVR method directs a single DDPM to generate images with various attributes informed by the reference, enhancing the controllability and quality of generated images across multiple tasks like multi-domain image translation and image editing. Gal et al. (Gal et al., 2022) propose a method that personalizes text-to-image generation by learning new ”words” to represent user-provided concepts. This approach, named Textual Inversion, adapts a frozen text-to-image model to generate images of unique concepts. By embedding these unique ”words” into natural language sentences, users have the creative freedom to guide the AI in generating personalized images. In another breakthrough, Zhang et al. (Zhang et al., 2023c) present ControlNet, an architecture that adds spatial conditioning controls to pre-trained text-to-image diffusion models. ControlNet takes advantage of ”zero convolutions” and existing deep encoding layers from large models, allowing the fine-tuning of conditional controls like edges and segmentation with robust training across different dataset sizes. Building further on control mechanisms, Zhao et al. (Zhao et al., 2024a) introduce Uni-ControlNet, a unified framework that enables the simultaneous use of multiple control modes, both local and global, without the need for extensive training from scratch. The framework’s unique adapter design ensures cost-effective and composable control, enhancing both controllability and generation quality. Finally, Ruiz et al. (Ruiz et al., 2023) present DreamBooth, a fine-tuning approach that personalizes text-to-image diffusion models to generate novel renditions of subjects in varying contexts using a small reference set. This method, empowered by a class-specific prior preservation loss, maintains the subject’s defining features across different scenes, opening the door to new applications like subject recontextualization and artistic rendering. These studies collectively illustrate the evolving landscape of design and application within diffusion-based methods. They highlight the progress from generative tasks to refined method design and the ongoing pursuit of enhanced controllability in image generation.

Content Editing. The design and application of diffusion-based methods have paved the way for breakthroughs in content editing, offering enhanced photorealism and greater control in the text-guided synthesis and manipulation of images. Nichol et al. (Nichol et al., 2021) delve into text-conditional image generation using diffusion models, contrasting CLIP guidance with classifier-free guidance. The latter is favored for producing realistic images that closely align with human expectations. Their 3.5 billion parameter model outperforms DALL-E in human evaluations, and further demonstrates its flexibility in image inpainting, facilitating text-driven editing capabilities. Hertz et al. (Hertz et al., 2022) introduce an intuitive image editing framework, where modifications are steered solely by textual prompts, bypassing the need for spatial masks. Their analysis highlights the crucial role of cross-attention layers in mapping text to image layout, enabling precise control over local and global edits while preserving fidelity to the original content. Kumari et al. (Kumari et al., 2023) propose an efficient approach for incorporating user-defined concepts into text-to-image diffusion models, Custom Diffusion. By optimizing a subset of parameters, the method allows for rapid adaptation to new concepts and the combination of multiple concepts, yielding high-quality images that outperform existing methods in both efficiency and effectiveness. Brooks et al. (Brooks et al., 2023) present InstructPix2Pix, a conditional diffusion model trained on a dataset generated by combining the expertise of GPT-3 and Stable Diffusion. This model can interpret human-written instructions to edit images accurately, operating swiftly without needing per-example fine-tuning, showcasing its proficiency across a wide array of editing tasks. Lastly, Parmar et al. (Parmar et al., 2023) tackle the challenge of content preservation in image-to-image translation with pix2pix-zero. Through the discovery of editing directions in text embedding space and cross-attention guidance, their method ensures the input image’s content remains intact. They further streamline the process with a distilled conditional GAN, achieving superior performance in both real and synthetic image editing without necessitating additional training. Collectively, these advancements in diffusion-based methods signify a transformative period in content editing, where the synthesis of images is becoming increasingly controllable, customizable, and responsive to textual nuance, greatly expanding the potential for creative expression and practical applications.

Stylization. Recent advancements in diffusion-based methods have significantly enhanced the stylization capabilities in the domain of generative AI, enabling more intuitive and precise artistic expression. Zhang et al. (Zhang et al., 2023b) propose an inversion-based style transfer technique that captures the artistic style directly from a single painting, circumventing the need for complex textual descriptions. This method, named InST, efficiently captures the essence of a painting’s style through a learnable textual description and applies it to guide the synthesis process, thus achieving high-quality style transfer across diverse artistic works. Huang et al. (Huang et al., 2024) present DiffStyler, a novel architecture that leverages dual diffusion processes to control the balance between content and style during text-driven image stylization. By integrating cross-modal style information as guidance and proposing a content image-based learnable noise, DiffStyler ensures that the structural integrity of the content image is maintained while achieving a compelling style transformation. In the realm of artistic image synthesis, Ahn et al. (Ahn et al., 2024) propose DreamStyler, a framework that optimizes multi-stage textual embedding with context-aware text prompts. DreamStyler excels at both text-to-image synthesis and style transfer, providing the flexibility to adapt to various style references and producing images that exhibit high-quality and unique artistic traits. Sohn et al. (Sohn et al., 2024) develop StyleDrop, a method designed to synthesize images that adhere closely to a specific style using a text-to-image model. StyleDrop stands out for its ability to capture intricate style nuances with minimal parameter fine-tuning. It demonstrates impressive results even when provided with a single image, effectively synthesizing styles across different patterns, textures, and materials. Together, these methodologies exemplify the ongoing innovation in the field of image stylization through diffusion-based methods. They afford users an unprecedented level of control and flexibility in generating and editing images, breaking new ground in the creation of stylized artistic content. These tools not only facilitate the expression of visual art but also promise to expand the possibilities for personalized and creative digital media.

Quality Enhancement. The exploration of diffusion-based methods has led to significant enhancements in the quality of text-to-image synthesis, pushing the boundaries of resolution, fidelity, and customization. Balaji et al. (Balaji et al., 2022) propose eDiff-I, an ensemble of text-to-image diffusion models that specialize in different stages of the image synthesis process. This approach results in images that better align with the input text while maintaining visual quality. The models use various embeddings for conditioning and introduce a ”paint-with-words” feature, which allows users to control the output by applying words to specific areas of an image canvas, providing a more intuitive way to craft images. Chang et al. (Chang et al., 2023) introduce Muse, a Transformer model that surpasses diffusion and autoregressive models in efficiency. Muse achieves state-of-the-art performance with a masked modeling task on discrete tokens, informed by text embedding from a large pre-trained language model. This method allows for fine-grained language understanding and diverse image editing applications without additional fine-tuning, such as inpainting and mask-free editing. In the realm of cost-effective and environmentally conscious training, Chen et al. (Chen et al., 2023) present PIXART-, a Transformer-based diffusion model that significantly reduces training time and costs while maintaining competitive image quality. Through a decomposed training strategy, efficient text-to-image Transformer design, and higher informative data, PIXART- demonstrates superior speed, saving resources and minimizing CO2 emissions. It provides a template for startups and the AI community to build high-quality, low-cost generative models. Lastly, He et al. (He et al., 2023) delve into higher-resolution visual generation with ScaleCrafter, an approach that addresses the challenges of object repetition and structure in images created at resolutions beyond those of the training datasets. By re-dilating convolutional perception fields and implementing dispersed convolution and noise-damped classifier-free guidance, ScaleCrafter enables the generation of ultra-high-resolution images without additional training or optimization, setting a new standard for texture detail and resolution in synthesized images. Collectively, these advancements represent a paradigm shift in the quality enhancement of diffusion-based generative models, offering innovative solutions to meet the ever-growing demands for high-quality, customizable, and efficient image generation and editing in the AI-powered creative landscape.