Differentially Private Synthetic Medical Data Generation using Convolutional GANs

Abstract

Deep learning models have demonstrated superior performance in several application problems, such as image classification and speech processing. However, creating a deep learning model using health record data requires addressing certain privacy challenges that bring unique concerns to researchers working in this domain. One effective way to handle such private data issues is to generate realistic synthetic data that can provide practically acceptable data quality and correspondingly the model performance. To tackle this challenge, we develop a differentially private framework for synthetic data generation using Rényi differential privacy. Our approach builds on convolutional autoencoders and convolutional generative adversarial networks to preserve some of the critical characteristics for the generated synthetic data. In addition, our model can also capture the temporal information and feature correlations that might be present in the original data. We demonstrate that our model outperforms existing state-of-the-art models under the same privacy budget using several publicly available benchmark medical datasets in both supervised and unsupervised settings.

Index Terms:

Deep learning, differential privacy, synthetic data generation, generative adversarial networks.I Introduction

Deep learning has been successful in a wide range of application domains such as computer vision, information retrieval, and natural language processing due to its superior performance and promising capabilities. However, its success is heavily dependent on the availability of a massive amount of training data. Hence, the progress in deploying such deep learning models can be crippled in certain critical domains such as healthcare, where data privacy issues are more stringent, and large amounts of sensitive data are involved.

Hence, to effectively utilize specific promising data-hungry methods, there is a need to tackle the privacy issues involved in the medical domains. To handle the privacy concerns of dealing with sensitive information, a common method that is often used in practice is the anonymization of personally identifiable data. But, such approaches are susceptible to de-anonymization attacks [1]. That made researchers explore alternative methods. To make the system immune to such attacks, privacy-preserving machine learning approaches have been developed [2, 3]. This particular privacy challenge is usually compounded by some auxiliary ones due to the presence of complex and often noisy data that, in practice, might typically consist of a combination of multiple types of data such as discrete, continuous, and categorical.

I-A Synthetic Data Generation

One of the most promising privacy-preserving approaches is Synthetic Data Generation (SDG). Synthetically generated data can be shared publicly without privacy concerns and provides many collaborative research opportunities, including for tasks such as building prediction models and finding patterns. As SDG inherently involves a generative process, Generative Adversarial Networks (GANs) [4] attracted much attention in this research area, due to their recent success in other domains.

GANs are not reversible, i.e., one may not use a deterministic function to go from the generated samples to the real samples, as the generated samples are created using an implicit distribution of the real data. However, a naive usage of GANs for SDG does not guarantee the system being privacy-preserving by just relying on the fact that GANs are not reversible, as GANs are already proven to be vulnerable [5].

This problem becomes much more severe when such privacy violations can have serious consequences, such as when dealing with patient sensitive data in the medical domain. The primary ambiguity here is to understand how a system can claim to preserve the original data’s privacy. More precisely, how private is a system? How much information is leaked during the training process? Thus, there is a need to measure the privacy of a system – to be able to judge if a system is privacy-preserving or not.

I-B Differential Privacy

Differential Privacy (DP) [6] provides a mechanism to ensure and quantify the privacy of a system using a solid mathematical formulation. Differential privacy recently became the de facto standard for statistical exploration of databases that contain sensitive private data. The power of differential privacy is in its accurate mathematical representation, that ensures privacy, without restricting the model for accurate statistical reasoning. Furthermore, utilizing differential privacy, one can measure the privacy level of a system.

Differential privacy, as a strong notion of privacy, becomes crucial in machine learning and deep learning as the system by itself often employs sensitive information to augment the model performance for prediction. Although many different attack types can jeopardize a machine learning model’s privacy, it is the safest to assume the presence of a powerful adversary with complete knowledge of the pipeline, training, and model [7]. Hence protecting the system against such an adversary, or at the very least, measuring the upper bound of the privacy leakage in this scenario, gives us a full view of the system’s privacy. A fully differentially private system guarantees that the algorithm’s training is not dependent on an individual’s sensitive data.

One of the most adopted approaches to ensure differential privacy, under a modest privacy budget, and model accuracy, is Differentially Private Stochastic Gradient Descent (DP-SGD), proposed in [8], which is the basis for many different research works [9, 10]. At its core, DP-SGD (1) bounds the sensitivity of the algorithm on individuals by clipping the gradients, (2) adds Gaussian noise, and (3) performs the gradient descent optimization step. As of now, SGD under a DP regime is one of the most promising privacy-preserving approaches.

Due to the rapid rise in the utilization of differential privacy, practical issues related to tracking the privacy budget became a subject of many discussions. Despite its great advantages, the proposed notion of differential privacy has some disadvantages due to what is called the composition theorem [6, 11]. The composition theorem indicates that, for some composed mechanisms, the privacy cost simply adds up, leading to the discussion of privacy budget restriction. This is problematic, especially in deep learning, as the model training is an iterative process, and each iteration adds to the privacy loss. To tackle the shortcomings of the differential privacy definition, Rényi Differential Privacy (RDP) has been proposed as a natural relaxation of differential privacy [12]. As opposed to differential privacy, RDP is a more robust notion of privacy that may lead to a more accurate and numerically stable computation of privacy loss.

I-C Challenges with Medical Data

There are four primary challenges in the synthetic data generation research in the medical domain: (1) Preserving the privacy. The majority of the existing works do not train the model in a privacy-preserving manner. At best, they only try to address privacy with some statistical or machine learning-based measurements. (2) Handling discrete data. When discrete data is involved, many of the methods using GANs face some difficulty since GAN models are inherently designed for generating continuous values. In particular, they especially fail when there is a mixture of continuous and discrete data. (3) Evaluation of synthetic data quality. One of the challenging issues regarding the evaluation of GANs and the quality of synthetic data in a real-world domain is: How can we measure the quality of a synthetically generated data? However, this becomes particularly more critical in the medical domain since the use of low-quality synthetic data for building prediction models can have dire consequences and may even jeopardize human lives. (4) Temporal information and correlated features. The temporal and local correlation between the features is often ignored. Incorporating such information is important in the medical domain since the disease incidents, or individual patients’ records, often have meaningful temporal/correlation patterns. The quality of the generated data can be significantly improved by incorporating such dependencies in the data.

I-D Our Contributions

To address the problems described before, here we propose a privacy-preserving framework that employs Rényi Differential Privacy and Convolutional Generative Adversarial Networks (RDP-CGAN). Our work makes the following contributions:

-

•

We track and compute privacy loss with stable numerical computations and have tighter bounds on the privacy loss function, which leads to better performance under the sample privacy budget. We achieve this by employing Rényi differential privacy in our model.

-

•

Our model can effectively handle discrete data and capture the information from a mixture of discrete-continuous data by creating a compact, unified representation through Convolutional Autoencoders (CEs) for unsupervised feature learning.

-

•

In addition to measuring the quality of synthetic data with statistical measurements, we also employ a labeled synthetic data generation mechanism for assessing the quality of the synthetic data in a supervised manner.

-

•

To incorporate temporal and correlation dependencies in the data, we utilize one-dimensional convolutional neural networks.

We demonstrate that our model generates higher quality synthetic data under the same privacy budget. Likewise, it can provide a higher level of privacy under the same level of synthetic data quality. We also empirically show that, regardless of privacy considerations, our proposed architecture generates higher quality synthetic data quality as it captures temporal and correlated information.

The rest of this part is organized as follows: Section II investigate some prior research efforts related to synthetic medical data generation and differentiates our work from other existing works. Section III describes preliminary concepts required to comprehend our proposed work. Section IV provides the structural and implementation details of the proposed system. Section III demonstrates the comparison results with state-of-the-arts by privacy analysis and the quality of generated synthetic data. Finally, Section VI concludes our findings in this paper.

II Related Works

There are a wide variety of works utilizing Differential Privacy for generating synthetic data. A majority of them use the mechanism proposed in [8] to train a neural network with differential privacy based ipon gradient clipping for bounding the gradient norms and adding noise. It follows the general mechanism proposed in [6]. One of the most critical contributions in [8] is introducing the privacy accountant that tracks the privacy loss. Motivated by the success of this mechanism, in our approach, we extend our privacy-preserving framework using Rényi Differential Privacy (RDP) [12] as a new notion of DP to calculate the privacy loss.

The problem of synthetic data generation in the healthcare domain has seen a recent surge in the research efforts [17, 18, 19]. Perhaps one of the earlier works in generating synthetic medical data is MedGAN [13] in which there is no privacy-preserving mechanism being enforced. It only uses GAN models to create synthetic data, and it is well known that GANs are prone to be attacked. Hence, despite its good performance in generating data, it does not provide any kind of privacy guarantee. One of the primary contributions of MedGAN is to tackle the discrete data generation through denoising autoencoders [13]. One the other hand, utilization of synthetic patient population simulators such as Synthea [20] is minimal as it only relies on standard guidelines and does not model factors that may contribute to a predictive analysis [21]. Another work is CorGAN [15] that tries to generate longitudinal event sequences by capturing feature correlations and temporal information. The TableGAN approach [14] also uses convolutional GANs to synthesize data by leveraging auxiliary classifier GANs. Another work in this area is the CTGAN model presented in [22] that addresses tabular data which has a mixture of discrete and continuous features. However, none of these approaches guarantees any sort of privacy during the data generation. Hence, there is a good chance that many of these models can compromise the privacy of the original medical data, making these models vulnerable in real practice. Accordingly, we will now discuss various privacy-preserving strategies.

One of the earliest works that addressed the privacy-preserving deep learning in the healthcare domain is [23], that uses Auxiliary Classifier GANs [24] to generate synthetic data. It assumes having access to labeled data. However, we aim to develop a model able to create synthetic data from both labeled and unlabeled real data. PATE-GAN [16] is one of the successful SDG approaches that use the Private Aggregation of Teacher Ensembles (PATE) [25, 26] mechanism to ensure Differential Privacy. Another framework related to this topic is DPGAN [9], which implements a mechanism similar to the one developed in [8], with the main difference of clipping weights instead of gradients. We compare our work with PATE-GAN and DPGAN due to their success and relevance to the medical domain. One of the research effort associated with utilizing RDP is [27] in which the authors training a privacy-preserving model on MNIST dataset build on conditional GANs. The work proposed in [28] uses RDP to enforce differential privacy. However, they do not consider temporal information. Further, we claim that their system is not fully-differentially private as they only partially apply differential privacy. A general comparison for related methods is depicted in Table I. The rows in Table I describe important characteristics. First, we desire to investigate if a model preserves the privacy or not. Second, it is crucial to assess the model’s utility by checking if a model requires labels. If a model does not need labels, it can be used for unsupervised synthetic data generation. Third, we target the approaches that can handle a mixture of data types. At last, we are also interested in the models that can capture correlated and temporal information.

To assess the privacy-preserving characteristics of our model, we compare our method to two of the prominent research efforts that guarantee differential privacy in the context of synthetic data generation, namely, DPGAN and PATE-GAN. PATE-GAN uses a modified version of a PATE mechanism. One issue with the original PATE is that its privacy cost depends on the amount data it requires for a labeling process incorporated in the mechanism. Noted that PATE mechanism uses some public dataset (similar to real private data) for its labeling mechanism. Not only may this increase the privacy loss significantly, but the availability of such a labeled public dataset is a limiting assumption in a practical, real-world scenario. Although PATE-GAN changed the PATE training paradigm so that it does not require public data, its basis is to train a student model by employing the generator output that may aggregate the error, and it still needs labeled data. However, the advantage of PATE is that it provides a tighter privacy upper bound as opposed to the mechanism used in DPGAN. Regarding the privacy, the advantage of our method compared to both these methods is that we use the RDP mechanism which provides a tighter privacy upper bound. Furthermore, our approach uses convolutional architectures which typically perform better than standard multilayer perception models by capturing the correlated features and temporal information. Furthermore, we use convolutional autoencoders that, in an unsupervised manner, create a feature space that can effectively incorporate both discrete and continuous values.

III Preliminaries

III-A Autoencoders

Autoencoder are a kind of neural network architectures that consists of two encode and decode functions. and aim to transpose the input to the latent space and then reconstruct . In an ideal scenario, a perfect reconstruction of the original input data can be achieved, i.e., . The autoencoders usually employ Binary Cross Entropy (BCE) and Mean Square Error (MSE) losses for binary and continuous inputs, respectively.

| (1) |

| (2) |

We utilize autoencoders in our model for capturing low-dimensional representations for both discrete and continuous variables.

III-B Differential Privacy

Differential Privacy establishes a guarantee of individuals’ privacy via measuring the privacy loss (associated with any information release extracted from a database) by a mathematical definition [11, 6]. The most widely adopted definition is the -differential privacy.

Definition 1 (-DP)

The randomized algorithm , is -differentially private for all query outcomes and all neighbor datesets and if:

Two datasets , that only differ by one record (e.g., a patients’ record) are called neighbor datasets. The notion of neighboring datasets emphasizes the sensitivity of any individual private data. The parameters denote the privacy budget, i.e., being differentially private does not mean we have absolute privacy. It only indicates our confidence level of privacy, given the parameters. The smaller the parameters are, the more confident we become about our algorithm’s privacy as indicates the privacy loss by definition.

The -DP with is called -DP which is the initially proposed definition [11]. It provides a more substantial promise of privacy as even a tiny amount of might be a dangerous privacy violation due to the distribution shift [11]. One of the most important reasons in the usage of -DP is the applicability of advanced composition theorems [6, 29].

III-C Rényi Differential Privacy

The strong composition (see Definition 1) enables tighter upper bound for compositions of -DP steps compared to the basic composition theorem [6]. One particular problem with the advanced composition theorem is that iterating this process leads to the rapid growth of parameters as each employment of the advanced composition theorem (each step of the iterative process) lead to a various selection of possible values. To address some of the shortcomings of the -DP definition, the Rényi Differential Privacy (RDP) has been proposed in [12] based on Rényi divergence (Eq. 2).

Definition 2 (Rényi divergence of order [30])

The Rényi divergence of order of a distribution from the distribution is defined as:

The Rényi divergence, as a generalization of the Kullback–Leibler divergence, is precisely equal to the Kullback–Leibler divergence for the order . The special case of equals to:

which is the log of the maximum ratio of the probabilities over . The order of establishes the connection between the Rényi divergence and the -DP. A randomized mechanism is -differentially private if for two neighbor datasets and , we have:

Now, based on these definitions, let us define Rényi differential privacy (RDP) [12] as a new notion of differential privacy.

Definition 3 (Rényi Differential Privacy (RDP) [12])

The randomized algorithm is -RDP if, for all neighbor datasets and , we have:

There are two essential properties of the RDP definition (Definition 3) that are required here.

Proposition 1 (Composition of RDP [12])

If is -RDP and is -RDP, then the mechanism , where and , satisfies the -RDP conditions.

Proposition 2

If the mechanism is -RDP then it also obeys -DP for any [12].

The above two propositions form the basis for preserving privacy in our approach. Proposition 1 determines the calculation of the privacy cost in our framework as a composition of two structures (autoencoder and convolutional GAN) and Proposition 2 is applicable when one wants to calculate the extent to which our system is differentially private based on the traditional notion (see Definition 1).

IV Privacy-Preserving Framework

In our work, we build a privacy-preserving GAN model using Rényi differential privacy. However, since it is well known that the GAN models typically have a poor performance in generating non-continuous data [31], we utilize autoencoders [32] to aid the GAN model by creating a continuous feature space to represent the input. At the same time, the GAN aims to generate high-fidelity synthetic data in a privacy-preserving manner. The autoencoder will transform the input space into a continuous space, whether we have a discrete, continuous, or a mixture of both types as the input. Thus, the autoencoder acts as a bridge between the GAN model and the non-continuous data present in the medical domain.

Furthermore, the majority of existing research efforts in generating synthetic data [13] ignore the features’ local correlations or temporal information by using multilayer perceptrons, which are inconsistent with real-world scenarios such as disease progression. To remedy this drawback, for both autoencoder and GAN architecture (generator & discriminator), we use convolutional neural networks. In our approach, we employ One Dimensional Convolutional Neural Networks (1D-CNNs) to capture correlated input features’ patterns as well as temporal information and incorporate them within an autoencoder based framework.

The proposed framework is depicted in Fig. 1. The inputs to the generator and the discriminator are the random noise sampled from and the real data . and are the generator and discriminator domains which are usually and , respectively. In RDP-CGAN, as opposed to the regular GAN’s training procedure, the generated fake data is decoded before being fed to the discriminator. The decoding is done by feeding the fake data to a pre-trained autoencoder.

IV-A Convolutional Autoencoder

We build a one-dimensional convolutional autoencoder (1D-CAE) based architecture. The main goal of such a 1D-CAE is to: (1) capture the correlation between neighboring features, (2) represent a new compact feature space, (3) transform the possible discrete records to a new continuous space, and (4) simultaneously model discrete and continuous phenomena, which is a challenging problem [22].

We enforce privacy by adding noise, and by clipping gradients of both the encoder and decoder (see Algorithm 1, Autoencoder Pretraining Step), as in some previous research works [10, 33]. One might claim adding noise to the decoder part should suffice as the discriminator only has access to the decoder part of the CAE. We assert that such an action might jeopardize the privacy of the model since we cannot rely on the autoencoder training to be trusted and secure as it has access to the real data. The details of autoencoder pretraining, demonstrated in Algorithm 1 are as follows:

-

•

We pre-train the autoencoder for steps (which will be determined based on the privacy budget ), and hence it varies by changing the desired level of .

-

•

We divide a mini-batch into several micro-batches.

-

•

For each micro-batch of size 1, we calculate the loss (line 7), calculate the gradients (line 8), and clip the gradients to bind the model’s sensitivity to the individuals (line 9). The operation indicates the norm over the micro-batch gradients, and is an arbitrary upper bound for the gradients’ norm.

-

•

The Gaussian noise ()will then be independently added to the gradients of each micro-batch and will be aggregated (line 10).

-

•

The last step is where the optimizer performs the parameter update (line 11).

IV-B Convolutional GAN

The pseudocode for the Convolutional GAN is given in Algorithm 2, under the procedure GAN Training Step. As can be observed in Algorithm 2, we only enforce differential privacy on the discriminator as only it has access to the real data. To avoid the issue of mode collapse [34], we train the CGAN model, using Wasserstein GAN [35] since it is an efficient approximation of Earth Mover (EM) distance and is shown to be robust to the mode collapse problem during model training [35].

The Earth-Mover (EM) distance or Wasserstein-1 distance represents the minimum price in transforming the generated data distribution to the real data distribution :

| (3) |

refers to how much “mass” should be moved from to to transform to .

However, as the infimum in (3) is intractable, based on the the Kantorovich-Rubinstein duality [36], the WGAN propose the Eq. 4 optimization:

| (4) |

For simplicity of the definition, infimum and supremum indicate the greatest lower bound and the least upper bound, respectively.

Definition 4 (1-Lipschitz functions)

Given two metric spaces and , where denotes the metric (e.g., distance metric), the function is called K-Lipschitz if:

| (5) |

Using the distance metric and , Eq. (5) is equivalent to:

| (6) |

It is clear that for computing the Wasserstein distance, we should find a 1-Lipschitz function (see Definition 4 and Eq. 6.. The approach is to build a neural model to learn it. The procedure is to construct a discriminator D without the Sigmoid function, and output a scalar instead of the probability of confidence.

Regarding the privacy considerations, the generator has no access to real data directly (although it has access to the gradients from the discriminator); only the discriminator will have access to it. We propose only to train the discriminator under differential privacy for this aim. More intuitively, this approach considers the post-processing theorem in differential privacy as follows.

Theorem 2 (Post-processing [6])

Assume is an algorithm which is -differentially private and is some arbitrary function operates on . Then is -differentially private as well [6].

Following the argument above, we consider and as discriminator and generator functions, respectively. Since the generator is an arbitrarily randomized mapping on top of the discriminator, enforcing the differential privacy on the discriminator suffices to guarantee that the overall system ensures differential privacy, and we do not need to train a private generator as well. The generator training procedure is given in Algorithm 2, lines 17-20. As can be observed, we do not have a private generator and the loss function is the regular generator loss function of the WGAN method [35].

IV-C Architecture Details

For the GAN discriminator, we used five convolutional layers similar to the autoencoder (encoder), except for the last layer that we have another dense layer, of output size 1, for decision making. For all layers, we used PReLU activation [37], except for the last layer that does not use any activation. For the generator, we used transposed convolutions (also called fractionally-strided convolutions) similar to the ones used in [38]. However, we use 1-D transposed convolutions. The GAN generator has an input noise of size 100, and its output size is set to 128.

In the autoencoder and for the encoder, we used an architecture similar to the GAN discriminator, except we discard the last layer of the discriminator. For the decoder, as done in the generator, we used transposed convolutional layers in the reverse order of the ones used for the encoder. For the encoder, we used PReLU activation layers except for the last layer of the encoder where Tanh was used to match the generator output. For the decoder, we also used PReLU activation except for the last layer where a Sigmoid activation function was used to bound the range of output data to the to ideally reconstruct the discrete range of aligned with the input data. The decoder input size (the encoder output size) is equal to the GAN’s generator output dimension.

Since our model inputs sizes may change for different datasets, we modify the input pipeline of our architecture by varying the dimensions of convolution kernels, stride for the GAN discriminator, and the autoencoder, to match the new dimensionality. The GAN generator does not require any change since, for all experiments, we used the same noise dimension as mentioned above.

IV-D Privacy Loss

As tracking the RDP privacy accountant [8] is computationally more precise than the regular DP, we based our privacy loss calculation on RDP. Then, the RDP computations can be transformed to DP based on the proposition 2. The Gaussian noise additive procedure is also called Sampled Gaussian Mechanism (SGM) [39]. For tracking privacy loss, we use the following Theorem.

Theorem 3 (SGM privacy loss [39])

Assume and are two neighbor datasets and is a SGM applied to a function with -sensitivity of one. If we set the following:

where and are PDFs, then, the mechanism satisfies -RDP for:

where and .

It can be proven that and the RDP privacy computation can solely be focused on upper bounding which can be calculated with a closed-form bound and numerical calculations [39]. We use the same numerical calculations here. However, that bounds for each step. The overall bound of for the entire training process can be calculated by for any given (see proposition 1). To determine the tighter upper bound, we try multiple values and use the minimum and its associated (obtained by RDP privacy accountant) to compute the -DP employing proposition 2.

System privacy budget: One important question is: how do we calculate the -RDP for the combination of an autoencoder and GAN training? Consider proposition 1, in which , , and are the input and output spaces of the autoencoder and the output space of the discriminator. The mechanisms and are the autoencoder and discriminator, respectively. Consider is what the discriminator observes after decoding of the fake samples, and is the space of real inputs. Hence, the proposition 1 directly results in having the whole system with -RDP by fixing the . But we cannot guarantee that we can have a fixed , and the RDP has a budget curve parameterized by it. The procedure of fixing is as follows.

-

•

Assume we have two systems and such that one is the autoencoder, and the other is the GAN. Hence we have two systems which -RDP and -RDP.

-

•

Without loss of generality, we assume . We pick . Now we have system which -RDP.

-

•

For we pick and calculate that .

-

•

Now, the total system satisfies -RDP.

V Experiments

In this section, we will first present the details of the experimental setup and then report the results obtained in various experiments and compare with different methods available in the literature.

V-A Experimental Setup

Here, we split the dataset to train and test sets . We utilize to train the models, and then generate symthesized samples using the trained model. We set . Recall from Section IV that although for different datasets we alter the architectures associated with the input size, the encoder output space (decoder input space) and the generator output space will always have the same dimensionality. Furthermore, the encoder input space, the decoder output space, and the discriminator input space will also have the same dimensionality. It is worth noting that all of the reported values are associated with the -DP definition with unless otherwise stated.

Both the convolutional autoencoder and convolutional GAN were trained with the Adam optimizer [40] with the learning rate of , with a mini-batch size of 64. For both generator and discriminator we used Batch Normalization (BN) [41] to improve the training. We used one GeForce RTX 2080 NVIDIA GPU for our experiments.

We compare our framework with various different methods. Depends on the experiments, some of those methods may or may not be used for comparison depending on their characteristics. For further details, please see Table I.

V-B Datasets

To evaluate this performance, we used the following datasets for our experiments:

- 1.

-

2.

Kaggle Cervical Cancer [43]: This dataset covers patients records and aims to classify the cervical cancer. There are multiple attributes in the dataset of discrete, and continuous types and one attribute associated with the class label (e.g., Biopsy as the target for classification).

- 3.

- 4.

-

5.

Kaggle Cardiovascular Disease [46]: This Kaggle datasetis used to determine if a patient has cardiovascular disease or not from a variety of features such as age, systolic blood pressure and diastolic blood pressure. This dataset has a mixture of discrete and continuous feature types as well.

-

6.

MIT-BIH Arrhythmia [47]: The MIT-BIH Arrhythmia database [48] includes ambulatory ECG recordings, created from 47 subjects. records obtained from a mixed group of inpatients (requires overnight hospitalization) and outpatients (usually do not require overnight hospitalization) at Boston’s Beth Israel Hospital. Unlike other datasets in this set of experiments, for the MIT-BIH Arrhythmia database, we are dealing with a multicategory classification task. The ECG signals correspond to normal (negative) and abnormal cases (positive). There are a total of five classes in this dataset. Class zero is associated with normal cases. The rest of the classes are associated with different arrhythmias and myocardial infarction which we call abnormal.

V-C Baseline Comparison Methods

We compare our model with different benchmark methods (see Table I). Depends on the nature of experiments, the models are used as benchmarks. For example, if the experiments are associated with different privacy settings, the models that do not preserve the privacy, will not be used for comparison.

-

1.

MedGAN [13]: MedGAN consists of an architecture that is designed to generate discrete records. It has an autoencoder and a vanilla GAN. MedGAN does not preserve privacy. MedGAN can be used for unsupervised synthetic data generation. We used MedGAN open-source implementation111https://github.com/mp2893/medgan.

-

2.

TableGAN [14]: TableGAN consists of three components: A generator, a discriminator, and an auxiliary classifier that aims to augment the semantic integrity of synthesized data. Similar to our method, TableGAN uses Convolutional Neural Networks. TableGAN requires labeled training data and will be used in experiments with a supervised setting. We used TableGAN open-source implementation222https://github.com/mahmoodm2/tableGAN.

-

3.

DPGAN [9]: DPGAN enforces differential privacy by clipping the weights rather than the gradients. It uses WGAN to stabilize the training and use multi-layer perceptions for its architecture. DPGAN does not require labeled data and hence will be used in both supervised and unsupervised settings. We used DPGAN open-source implementation 333https://github.com/illidanlab/dpgan.

-

4.

PATE-GAN [16]: PATE-GAN proposes a differentially private model formed based on the modification of the PATE mechanism. We utilized our own implementation of the PATE-GAN as its code was not available publicly.

V-D Unsupervised Synthetic Data Generation

We chose electronic health records after converting them to a high-dimensional binary discrete dataset to demonstrate the privacy-preserving potential of the proposed model. Here, we have , , and , where represents the feature size. The goal is to capture the information from a real private dataset, and use that to synthesize a dataset with similar distribution in a privacy-sensitive manner. In this section, we can compare our method with models that do not require labeled data (for unsupervised synthetic data generation) and are also privacy-reserving method (see Table I). In the experiments of this section, to pretrain the autoencoder, we used Eq. 1 as the loss function.

Dataset Construction: We used MIMIC-III dataset [42] as an example for high-dimensional and discrete dataset. From MIMIC-III, We extracted and used 1071 unique ICD-9 codes. The dataset has different discrete variables (e.g., diagnosis codes, procedure, etc.). Assuming there are discrete variables, we can represent the patient’s record using a binary vector . The variable is set to one if it is available in the patient’s record. In our experiments, .

Evaluation Metrics: To assess the quality of synthetically generated data, we used two evaluation metrics:

-

1.

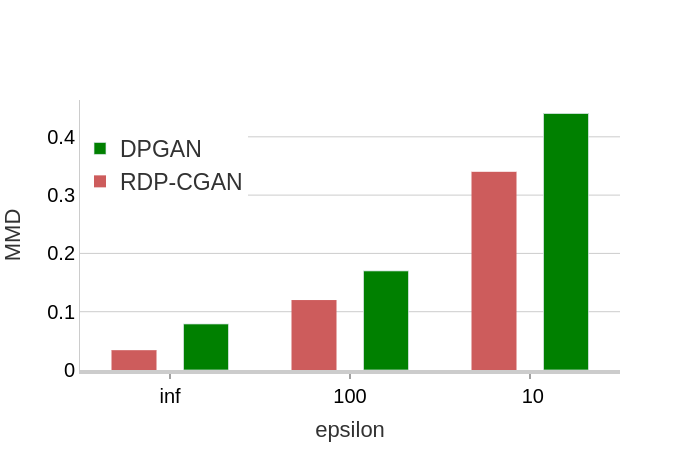

Maximum Mean Discrepancy (MMD): This statistical measure indicates the extent to which the model captures the statistical distribution of the real data. In a recent study [49], MMD demonstrated most of the desired features of an evaluation metric, especially for GANs. This is used in an unsupervised setting since there are no labeled data for statistical measurements. To report MMD, we compared two samples of real and synthetic data, each of size 10000.

-

2.

Dimension-wise prediction: This setting aims to illustrate the inter-connection between features, i.e., if we can predict missing features using the features available in the dataset. We select top-10 and top-50 most frequent features (ICD-9 codes in the patients’ history) from training, test, and synthetic sets. In each run, one testing dimension () from and will be selected as and . The remaining dimensions ( and ) will be utilized to train a classifier that aims to predict from the test set. We employed Random Forests [50], XGBoost [51], and Decision Tree [52]. We report the averaged performance over all predictive models (trained on synthetic data) and for all features using the F1-score (Figure 3). We compare models by considering different privacy budgets by fixing and varying .

As can be observed in Figure 2 and Figure 3, without enforcing privacy (), our model performs better compared to DPGAN. This is due to the fact that by using 1-D convolutional architecture, our model captures the correlated information among adjacent ICD-9 codes in the MIMIC-III dataset. Top-features indicate the most frequent features in the database.

To further investigate the effect of CGANs and CAEs in capturing correlated features, we conduct some experiments without enforcing any privacy and compare our method with existing methods. As can be observed in Table II our method outperforms other methods. Note that when the number of top features are increased, our method shows even better performance compared to other methods since capturing correlation for higher number of features becomes more feasible with convolutional layers.

| Top Features | Real Data | MedGAN | DPGAN | RDP-CGAN |

|---|---|---|---|---|

| 10 | 0.51 0.043 | 0.41 0.013 | 0.36 0.010 | 0.44 0.017 |

| 50 | 0.45 0.037 | 0.24 0.017 | 0.21 0.017 | 0.35 0.056 |

| 100 | 0.36 0.051 | 0.15 0.016 | 0.12 0.009 | 0.25 0.096 |

V-E Supervised Synthetic Data Generation

| Dataset | Samples | Features | Positive Labels | AUROC | AUPRC |

|---|---|---|---|---|---|

| MIMIC-III | 12,127 | 31 | 3272 (27%) | 0.81 0.005 | 0.74 0.007 |

| Kaggle Cervical Cancer | 858 | 36 | 52 (6%) | 0.94 0.011 | 0.69 0.003 |

| UCI Epileptic Seizure | 11,500 | 178 | 2300 (20%) | 0.97 0.008 | 0.94 0.014 |

| PTB Diagnostic | 14,552 | 118 | 10506 (62%) | 0.97 0.006 | 0.96 0.003 |

| Kaggle Cardiovascular Disease | 70,000 | 14 | 35000 (50%) | 0.80 0.017 | 0.78 0.011 |

| MIT-BIHArrhythmia | 109,444 | 188 | 18606 (17%) | 0.94 0.006 | 0.89 0.014 |

In this part, we consider a supervised setting and generate labeled synthetic data. For the experiments of this part, we need labeled data. The supervised setting here includes various classification tasks on different datasets. In the experiments of this section, to pretrain the autoencoder, we used Eq. 2 as the loss function.

Data Processing: For all the datasets except for MIMIC, we follow the same data processing steps that are described in Section V-B. For the MIMIC-III dataset, we used one of the top three codes across hospital admissions [42], which is 414.01 (associated with coronary atherosclerosis) and extracted patients diagnosed with that specific medical code. The classification task will be mortality prediction. We used 12,127 unique admissions and variables such as demographic information (Marital status, ethnicity, and insurance status), admission Information (e.g., days of admission), treatment information (cardiac defibrillator implant with/without cardiac catheterization), diagnostic information (respiratory disorder, cancer, etc.), and lab results (kidney function tests, creatine kinase, etc.) forming a total of 31 variables. Each admission is considered as one data sample. Since multiple admissions might be associated with one patient we will have multiple samples per patient. This comes from the fact that, in a 1-year interval, a patient may survive, but within the next few years and with new medical conditions, the same patient may not survive in the 1-year observation windows.

Evaluation: Let us consider the following two settings: (A) Train and test the model on the real data as the baseline (results shown in Table III). (B) Train on the generated data and test on the real data (this setting is used to quantify the quality of synthetic data). Note that the class distribution of the generated synthetic data must be identical to the real data. Setting (B) can demonstrate how well the model has performed the task of synthetic data generation. The closer the performance of setting (B) is to setting (A), the better the model is in terms of the quality of the generated synthetic data. We conducted different sets of experiments. We conduct ten runs (E=10), for each experiment, and report the averaged AUROC (Area Under the ROC curve) and AUPRC (Area Under the Precision-Recall Curve) for the models’ evaluations. The AUPRC metric is being utilized here since it operates better than AUROC for an imbalanced classification setting.

| Dataset | MedGAN | TableGAN | DPGAN | PATE-GAN | RDP-CGAN |

|---|---|---|---|---|---|

| MIMIC-III | 0.71 0.011 | 0.72 0.017 | 0.71 0.014 | 0.70 0.007 | 0.74 0.012 |

| Kaggle Cervical Cancer | 0.89 0.010 | 0.90 0.012 | 0.90 0.016 | 0.89 0.009 | 0.92 0.009 |

| UCI Epileptic Seizure | 0.87 0.013 | 0.91 0.019 | 0.88 0.024 | 0.85 0.014 | 0.90 0.011 |

| PTB Diagnostic | 0.86 0.018 | 0.94 0.009 | 0.88 0.018 | 0.91 0.006 | 0.93 0.016 |

| Kaggle Cardiovascular Disease | 0.71 0.031 | 0.73 0.016 | 0.71 0.019 | 0.72 0.008 | 0.76 0.014 |

| MIT-BIHArrhythmia | 0.90 0.021 | 0.89 0.019 | 0.88 0.012 | 0.86 0.014 | 0.90 0.009 |

| Dataset | MedGAN | TableGAN | DPGAN | PATE-GAN | RDP-CGAN |

|---|---|---|---|---|---|

| MIMIC-III | 0.68 0.011 | 0.71 0.007 | 0.69 0.006 | 0.70 0.019 | 0.72 0.009 |

| Kaggle Cervical Cancer | 0.55 0.012 | 0.60 0.019 | 0.59 0.008 | 0.58 0.020 | 0.62 0.017 |

| UCI Epileptic Seizure | 0.79 0.013 | 0.86 0.016 | 0.82 0.015 | 0.81 0.012 | 0.84 0.020 |

| PTB Diagnostic | 0.88 0.006 | 0.93 0.008 | 0.90 0.007 | 0.88 0.014 | 0.92 0.012 |

| Kaggle Cardiovascular Disease | 0.69 0.021 | 0.73 0.011 | 0.73 0.021 | 0.72 0.017 | 0.75 0.009 |

| MIT-BIHArrhythmia | 0.77 0.016 | 0.85 0.013 | 0.82 0.008 | 0.81 0.005 | 0.86 0.013 |

V-E1 The effect of architecture

In order to investigate the effect of convolutional GANs and convolutional autoencoders in our model, we perform some experiments with no privacy enforcement. The case corresponds to the setup where we do not enforce any privacy. The AUROC and AUPRC results are reported in Table IV and Table V, respectively. In the aforementioned tables, the best and second best results are indicated with bold and underline text. The classifiers are trained on the synthetic data. The closer the results are to the Real Data experiments, the higher the quality of synthetic data is and hence, we have a better model. As can be observed from those tables, for the challenging datasets where there is a mixture of continuous and discrete variables, our model outperforms others since it not only captures feature correlations but also utilizes convolutional autoencoders which allow our approach learn robust representations for the mixture of data types. For other datasets, TableGAN closely competes with our model. This is because TableGAN also uses convolutional GANs as well as auxiliary classifiers [24] for improving the performance of the GANs. We could incorporate auxiliary classifiers to improve our model, however, it would narrow down the scope of our work since using such auxiliary models would make it infeasible for unsupervised synthetic data generation.

V-E2 The effect of differential privacy

| AUROC | AUPRC | |||||||

|---|---|---|---|---|---|---|---|---|

| Dataset | DPGAN | PATE-GAN | RDP-CGAN | DPGAN | PATE-GAN | RDP-CGAN | ||

| MIMIC-III | 0.74 0.012 | 0.59 0.009 | 0.62 0.011 | 0.67 0.010 | 0.72 0.009 | 0.55 0.013 | 0.58 0.017 | 0.62 0.015 |

| Kaggle Cervical Cancer | 0.92 0.009 | 0.86 0.009 | 0.91 0.005 | 0.89 0.005 | 0.62 0.017 | 0.53 0.008 | 0.54 0.014 | 0.57 0.007 |

| UCI Epileptic Seizure | 0.90 0.011 | 0.72 0.009 | 0.74 0.014 | 0.84 0.008 | 0.84 0.020 | 0.57 0.003 | 0.63 0.016 | 0.69 0.019 |

| PTB Diagnostic ECG | 0.93 0.016 | 0.71 0.012 | 0.75 0.012 | 0.79 0.009 | 0.92 0.012 | 0.71 0.018 | 0.76 0.011 | 0.80 0.008 |

| Kaggle Cardiovascular | 0.76 0.014 | 0.61 0.019 | 0.66 0.006 | 0.69 0.013 | 0.75 0.009 | 0.60 0.001 | 0.63 0.007 | 0.66 0.016 |

| MIT-BIHArrhythmia | 0.90 0.009 | 0.69 0.004 | 0.73 0.006 | 0.77 0.003 | 0.86 0.013 | 0.68 0.023 | 0.73 0.016 | 0.78 0.008 |

We will now investigate how well different methods perform in the differential privacy setting. The base model is when we train using setting (A), which will have the highest accuracy as expected. So the question we investigate here is: how much will the accuracy drop for different models at the same level of privacy budget ? We compare our model with privacy-preserving models (see Table I). Table VI shows these results for the supervised setting described above. In the majority of the experiments, the synthetic data generated by our model, demonstrates higher quality for classification tasks compared to other models under the same privacy budget.

V-E3 The effect of privacy budget

We also investigate how models will perform under different privacy budgets. Fig. 4 demonstrates the trade-off between the privacy budget and the synthetic data quality. RDP-CGAN consistently shows better performance compared to other benchmarks in all the datasets. Our approach demonstrates it is particularly effective in lower privacy budgets. As an example, for the Kaggle Cervical Cancer dataset and for , our model achieves significantly higher AUPRC compared to the PATE-GAN.

V-E4 Ablation study

We will now conduct an ablation study to investigate the effect of each component of our model. In particular, we are interested in assessing the importance and impact of utilizing autoencoders and convolutional architectures. The results of our ablation study are reported in Table VIII and Table VII. The various scenarios reported are described below:

-

•

W/O CAE: without convolutional architecture in autoencoder.

-

•

W/O AE: without autoencoder.

-

•

W/O CG: without convolutional architecture in GAN’s generator.

-

•

W/O CD: without convolutional architecture in GAN’s discriminator.

-

•

W/O CDCG: a standard GAN architecture with MLP.

The results shown in Table VIII and Table VII demonstrate the importance of convolutional architectures. The interesting finding here is that, for the datasets with only continuous values like UCI Epileptic Seizure, PTB Diagnostic ECG, and MIT-BIHArrhythmia) (as opposed to a mixture of continuous-discrete variables), the use of autoencoders actually downgraded the system performance. Hence, we can conclude that autoencoders are very useful in the presence of discrete data or a mixture of both continuous and discrete data types.

| Dataset | W/O CAE | W/O AE | W/O CG | W/O CD | W/O CDCG | |

|---|---|---|---|---|---|---|

| MIMIC-III | 0.74 0.012 | 0.73 0.017 | 0.69 0.031 | 0.72 0.023 | 0.71 0.027 | 0.70 0.016 |

| Kaggle Cervical Cancer | 0.92 0.009 | 0.90 0.019 | 0.86 0.027 | 0.92 0.031 | 0.90 0.014 | 0.88 0.021 |

| UCI Epileptic Seizure | 0.90 0.011 | 0.89 0.016 | 0.91 0.021 | 0.88 0.022 | 0.86 0.008 | 0.85 0.026 |

| PTB Diagnostic ECG | 0.93 0.016 | 0.91 0.011 | 0.94 0.015 | 0.92 0.029 | 0.91 0.027 | 0.89 0.014 |

| Kaggle Cardiovascular | 0.76 0.014 | 0.74 0.012 | 0.71 0.041 | 0.43 0.015 | 0.72 0.021 | 0.71 0.026 |

| MIT-BIHArrhythmia | 0.90 0.009 | 0.88 0.013 | 0.90 0.014 | 0.88 0.012 | 0.86 0.017 | 0.84 0.041 |

| Dataset | W/O CAE | W/O AE | W/O CG | W/O CD | W/O CDCG | |

|---|---|---|---|---|---|---|

| MIMIC-III | 0.72 0.009 | 0.70 0.032 | 0.67 0.054 | 0.70 0.076 | 0.69 0.023 | 0.66 0.065 |

| Kaggle Cervical Cancer | 0.62 0.017 | 0.61 0.031 | 0.58 0.017 | 0.59 0.036 | 0.59 0.041 | 0.55 0.023 |

| UCI Epileptic Seizure | 0.84 0.020 | 0.82 0.034 | 0.86 0.041 | 0.79 0.024 | 0.81 0.012 | 0.80 0.018 |

| PTB Diagnostic ECG | 0.92 0.012 | 0.90 0.028 | 0.94 0.031 | 0.89 0.022 | 0.88 0.022 | 0.88 0.029 |

| Kaggle Cardiovascular | 0.75 0.009 | 0.73 0.052 | 0.71 0.017 | 0.72 0.033 | 0.71 0.041 | 0.70 0.014 |

| MIT-BIHArrhythmia | 0.86 0.013 | 0.83 0.019 | 0.87 0.026 | 0.85 0.038 | 0.84 0.023 | 0.82 0.032 |

VI Conclusion

In this work, we proposed and developed a differentially private framework for synthetic data generation using Rényi Differential Privacy. The model aimed to capture the temporal information and feature correlation using convolutional neural networks. We empirically demonstrate that by employing convolutional autoencoders we can effectively handle variables that are continuous, discrete or a mixture of both. We argue that we will need to secure both encoder and decoder parts of the autoencoder since there is no guarantee that the autoencoder’s training is being done by a trusted third-party. We show our model outperforms other models under the same privacy budget. This phenomenon may come in part from reporting a tighter bound and, in part, from utilizing convolutional networks. We reported the performance of different models by replacing real data with synthetic data for training our machine learning models.

Acknowledgements

This work was supported in part by the US National Science Foundation grants IIS-1619028, IIS-1707498, IIS-1838730 and NVIDIA Corp. We also acknowledge the support provided by NewWave Telecom Technologies during the early stages of this project through a fellowship award.

References

- [1] A. Narayanan and V. Shmatikov, “Robust de-anonymization of large sparse datasets,” in 2008 IEEE Symposium on Security and Privacy (sp 2008), pp. 111–125, IEEE, 2008.

- [2] M. Al-Rubaie and J. M. Chang, “Privacy-preserving machine learning: Threats and solutions,” IEEE Security & Privacy, vol. 17, no. 2, pp. 49–58, 2019.

- [3] Y. Li, C. Bai, and C. K. Reddy, “A distributed ensemble approach for mining healthcare data under privacy constraints,” Information sciences, vol. 330, pp. 245–259, 2016.

- [4] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative Adversarial Nets,” in Advances in Neural Information Processing Systems, pp. 2672–2680, 2014.

- [5] J. Hayes, L. Melis, G. Danezis, and E. De Cristofaro, “LOGAN: Membership inference attacks against generative models,” Proceedings on Privacy Enhancing Technologies, vol. 2019, no. 1, pp. 133–152, 2019.

- [6] C. Dwork, A. Roth, et al., “The algorithmic foundations of differential privacy,” Foundations and Trends® in Theoretical Computer Science, vol. 9, no. 3–4, pp. 211–407, 2014.

- [7] R. Shokri and V. Shmatikov, “Privacy-preserving deep learning,” in Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security, pp. 1310–1321, ACM, 2015.

- [8] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang, “Deep learning with differential privacy,” in Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security, pp. 308–318, ACM, 2016.

- [9] L. Xie, K. Lin, S. Wang, F. Wang, and J. Zhou, “Differentially private generative adversarial network,” arXiv preprint arXiv:1802.06739, 2018.

- [10] G. Acs, L. Melis, C. Castelluccia, and E. De Cristofaro, “Differentially private mixture of generative neural networks,” IEEE Transactions on Knowledge and Data Engineering, vol. 31, no. 6, pp. 1109–1121, 2018.

- [11] C. Dwork, F. McSherry, K. Nissim, and A. Smith, “Calibrating noise to sensitivity in private data analysis,” in Theory of Cryptography Conference, pp. 265–284, Springer, 2006.

- [12] I. Mironov, “Rényi Differential Privacy,” in 2017 IEEE 30th Computer Security Foundations Symposium (CSF), pp. 263–275, IEEE, 2017.

- [13] E. Choi, S. Biswal, B. Malin, J. Duke, W. F. Stewart, and J. Sun, “Generating multi-label discrete patient records using generative adversarial networks,” arXiv preprint arXiv:1703.06490, 2017.

- [14] N. Park, M. Mohammadi, K. Gorde, S. Jajodia, H. Park, and Y. Kim, “Data synthesis based on generative adversarial networks,” Proceedings of the VLDB Endowment, vol. 11, no. 10, pp. 1071–1083, 2018.

- [15] A. Torfi and E. A. Fox, “CorGAN: Correlation-capturing convolutional generative adversarial networks for generating synthetic healthcare records,” in The Thirty-Third International Flairs Conference, 2020.

- [16] J. Jordon, J. Yoon, and M. van der Schaar, “PATE-GAN: Generating synthetic data with differential privacy guarantees,” in International Conference on Learning Representations, 2018.

- [17] M. K. Baowaly, C.-C. Lin, C.-L. Liu, and K.-T. Chen, “Synthesizing electronic health records using improved generative adversarial networks,” Journal of the American Medical Informatics Association, vol. 26, no. 3, pp. 228–241, 2018.

- [18] A. H. Pollack, T. D. Simon, J. Snyder, and W. Pratt, “Creating synthetic patient data to support the design and evaluation of novel health information technology,” Journal of Biomedical Informatics, vol. 95, p. 103201, 2019.

- [19] J. Guan, R. Li, S. Yu, and X. Zhang, “Generation of synthetic electronic medical record text,” in 2018 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pp. 374–380, IEEE, 2018.

- [20] J. Walonoski, M. Kramer, J. Nichols, A. Quina, C. Moesel, D. Hall, C. Duffett, K. Dube, T. Gallagher, and S. McLachlan, “Synthea: An approach, method, and software mechanism for generating synthetic patients and the synthetic electronic health care record,” Journal of the American Medical Informatics Association, vol. 25, no. 3, pp. 230–238, 2017.

- [21] J. Chen, D. Chun, M. Patel, E. Chiang, and J. James, “The validity of synthetic clinical data: a validation study of a leading synthetic data generator (synthea) using clinical quality measures,” BMC Medical Informatics and Decision Making, vol. 19, no. 1, p. 44, 2019.

- [22] L. Xu, M. Skoularidou, A. Cuesta-Infante, and K. Veeramachaneni, “Modeling tabular data using conditional GAN,” in Advances in Neural Information Processing Systems, pp. 7333–7343, 2019.

- [23] B. K. Beaulieu-Jones, Z. S. Wu, C. Williams, R. Lee, S. P. Bhavnani, J. B. Byrd, and C. S. Greene, “Privacy-preserving generative deep neural networks support clinical data sharing,” Circulation: Cardiovascular Quality and Outcomes, vol. 12, no. 7, p. e005122, 2019.

- [24] A. Odena, C. Olah, and J. Shlens, “Conditional image synthesis with auxiliary classifier GANs,” in Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 2642–2651, JMLR. org, 2017.

- [25] N. Papernot, M. Abadi, U. Erlingsson, I. Goodfellow, and K. Talwar, “Semi-supervised knowledge transfer for deep learning from private training data,” arXiv preprint arXiv:1610.05755, 2016.

- [26] N. Papernot, S. Song, I. Mironov, A. Raghunathan, K. Talwar, and Ú. Erlingsson, “Scalable Private Learning with PATE,” arXiv preprint arXiv:1802.08908, 2018.

- [27] R. Torkzadehmahani, P. Kairouz, and B. Paten, “Dp-cgan: Differentially private synthetic data and label generation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 0–0, 2019.

- [28] U. Tantipongpipat, C. Waites, D. Boob, A. A. Siva, and R. Cummings, “Differentially private mixed-type data generation for unsupervised learning,” arXiv preprint arXiv:1912.03250, 2019.

- [29] C. Dwork, G. N. Rothblum, and S. Vadhan, “Boosting and differential privacy,” in 2010 IEEE 51st Annual Symposium on Foundations of Computer Science, pp. 51–60, IEEE, 2010.

- [30] A. Rényi et al., “On measures of entropy and information,” in Proceedings of the Fourth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Contributions to the Theory of Statistics, The Regents of the University of California, 1961.

- [31] R. D. Hjelm, A. P. Jacob, T. Che, A. Trischler, K. Cho, and Y. Bengio, “Boundary-seeking generative adversarial networks,” arXiv preprint arXiv:1702.08431, 2017.

- [32] D. P. Kingma and M. Welling, “Auto-encoding Variational Bayes,” arXiv preprint arXiv:1312.6114, 2013.

- [33] N. C. Abay, Y. Zhou, M. Kantarcioglu, B. Thuraisingham, and L. Sweeney, “Privacy preserving synthetic data release using deep learning,” in Joint European Conference on Machine Learning and Knowledge Discovery in Databases, pp. 510–526, Springer, 2018.

- [34] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. C. Courville, “Improved training of Wasserstein GANs,” in Advances in Neural Information Processing Systems, pp. 5767–5777, 2017.

- [35] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein GAN,” arXiv preprint arXiv:1701.07875, 2017.

- [36] C. Villani, Optimal transport: old and new, vol. 338. Springer Science & Business Media, 2008.

- [37] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on imagenet classification,” in Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034, 2015.

- [38] A. Radford, L. Metz, and S. Chintala, “Unsupervised representation learning with deep convolutional generative adversarial networks,” arXiv preprint arXiv:1511.06434, 2015.

- [39] I. Mironov, K. Talwar, and L. Zhang, “Rényi differential privacy of the sampled gaussian mechanism,” arXiv preprint arXiv:1908.10530, 2019.

- [40] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [41] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” arXiv preprint arXiv:1502.03167, 2015.

- [42] A. E. Johnson, T. J. Pollard, L. Shen, H. L. Li-wei, M. Feng, M. Ghassemi, B. Moody, P. Szolovits, L. A. Celi, and R. G. Mark, “MIMIC-III, a freely accessible critical care database,” Scientific data, vol. 3, p. 160035, 2016.

- [43] K. Fernandes, J. S. Cardoso, and J. Fernandes, “Transfer learning with partial observability applied to cervical cancer screening,” in Iberian Conference on Pattern Recognition and Image Analysis, pp. 243–250, Springer, 2017.

- [44] R. G. Andrzejak, K. Lehnertz, F. Mormann, C. Rieke, P. David, and C. E. Elger, “Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: Dependence on recording region and brain state,” Physical Review E, vol. 64, no. 6, p. 061907, 2001.

- [45] R. Bousseljot, D. Kreiseler, and A. Schnabel, “Nutzung der ekg-signaldatenbank cardiodat der ptb über das internet,” Biomedizinische Technik/Biomedical Engineering, vol. 40, no. s1, pp. 317–318, 1995.

- [46] S. Ulianova, “Cardiovascular disease dataset,” 2018. data retrieved from the kaggle dataset, https://www.kaggle.com/sulianova/cardiovascular-disease-dataset.

- [47] G. B. Moody and R. G. Mark, “The impact of the MIT-BIH arrhythmia database,” IEEE Engineering in Medicine and Biology Magazine, vol. 20, no. 3, pp. 45–50, 2001.

- [48] A. L. Goldberger, L. A. Amaral, L. Glass, J. M. Hausdorff, P. C. Ivanov, R. G. Mark, J. E. Mietus, G. B. Moody, C.-K. Peng, and H. E. Stanley, “PhysioBank, PhysioToolkit, and PhysioNet: components of a new research resource for complex physiologic signals,” Circulation, vol. 101, no. 23, pp. e215–e220, 2000.

- [49] Q. Xu, G. Huang, Y. Yuan, C. Guo, Y. Sun, F. Wu, and K. Weinberger, “An empirical study on evaluation metrics of generative adversarial networks,” arXiv preprint arXiv:1806.07755, 2018.

- [50] L. Breiman, “Random forests,” Machine Learning, vol. 45, no. 1, pp. 5–32, 2001.

- [51] T. Chen and C. Guestrin, “Xgboost: A scalable tree boosting system,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 785–794, 2016.

- [52] J. R. Quinlan, “Induction of decision trees,” Machine Learning, vol. 1, no. 1, pp. 81–106, 1986.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/453694e0-96bb-4447-a441-202d372426a7/torfi.jpeg) |

Amirsina Torfi holds a Ph.D. in Computer Science from Virginia Tech, and a B.S. and M.S. from Iran University of Science and Technology in Electrical Engineering and Information Technology, respectively. His research interests include Machine Learning and Deep Learning in various applications such as NLP, healthcare, and computer vision. He published several peer-reviewed. He is the founder of Instill AI, a start-up company aimed to enhance the utilization of AI in real-world applications. He is also a leading expert in open-source development as some of his Deep Learning projects are well-known worldwide. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/453694e0-96bb-4447-a441-202d372426a7/fox.jpg) |

Edward A. Fox holds a Ph.D. and M.S. in Computer Science from Cornell University, and a B.S. from M.I.T. He is a Fellow of both ACM and IEEE, cited for leadership in digital libraries and information retrieval. Since 1983 he has been at Virginia Polytechnic Institute and State University (VPI&SU or Virginia Tech), where he serves as Professor of Computer Science, and by courtesy, of ECE. He was an elected member of the Board of Directors of the Computing Research Association and was Chair (now a member) of the ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL) Steering Committee. He served on the IEEE Thesaurus Editorial Board, and was Chairman of the IEEE-CS Technical Committee on Digital Libraries. He works in the areas of digital libraries, information storage and retrieval, machine learning/AI, computational linguistics (NLP), hypertext/hypermedia/multimedia, computing education, CD-ROM and optical disc technology, electronic publishing, and expert systems. In these areas, he has participated in, organized, presented, and/or reviewed for hundreds of conferences and workshops. 131 grants have funded his research. For the Association for Computing Machinery he served 2018-2019 as a member of its Publications Board (and as co-chair of its digital libraries committee). He was editor for information retrieval and digital libraries in the ACM Books series. He was founder and co-editor-in-chief for the ACM Journal of Educational Resources in Computing, was a member of the editorial board for ACM Transactions on Information Systems, and was General Chair for JCDL 2001. He served as Program Chair for ACM DL’99, ACM DL’96, and ACM SIGIR’95 - and Program Co-chair for JCDL 2018, CIKM 2006, and ICADL 2005. He serves on the editorial boards of Journal of Educational Multimedia and Hypermedia, Journal of Universal Computer Science, and PeerJ Computer Science. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/453694e0-96bb-4447-a441-202d372426a7/reddy.jpg) |

Chandan K. Reddy is a Professor in the Department of Computer Science at Virginia Tech. He received his Ph.D. from Cornell University and M.S. from Michigan State University. His primary research interests are Data Mining and Machine Learning with applications to various real-world domains including healthcare, transportation, social networks, and e-commerce. He has published over 130 peer-reviewed articles in leading conferences and journals. He received several awards for his research work including the Best Application Paper Award at ACM SIGKDD conference in 2010, Best Poster Award at IEEE VAST conference in 2014, Best Student Paper Award at IEEE ICDM conference in 2016, and was a finalist of the INFORMS Franz Edelman Award Competition in 2011. He is serving on the editorial boards of ACM TKDD, IEEE Big Data, and DMKD journals. He is a senior member of the IEEE and distinguished member of the ACM. |