Differential Advising in Multi-Agent Reinforcement Learning

Abstract

Agent advising is one of the main approaches to improve agent learning performance by enabling agents to share advice. Existing advising methods have a common limitation that an adviser agent can offer advice to an advisee agent only if the advice is created in the same state as the advisee’s concerned state. However, in complex environments, it is a very strong requirement that two states are the same, because a state may consist of multiple dimensions and two states being the same means that all these dimensions in the two states are correspondingly identical. Therefore, this requirement may limit the applicability of existing advising methods to complex environments. In this paper, inspired by the differential privacy scheme, we propose a differential advising method which relaxes this requirement by enabling agents to use advice in a state even if the advice is created in a slightly different state. Compared with existing methods, agents using the proposed method have more opportunity to take advice from others. This paper is the first to adopt the concept of differential privacy on advising to improve agent learning performance instead of addressing security issues. The experimental results demonstrate that the proposed method is more efficient in complex environments than existing methods.

Keywords - Multi-Agent Reinforcement Learning, Agent Advising, Differential Privacy

1 Introduction

Multi-agent reinforcement learning (MARL) is one of the fundamental research topics in artificial intelligence [1]. In regular MARL methods, agents typically need a large number of interactions with the environment and other agents to learn proper behaviors. To improve agent learning speed, the agent advising technique is introduced [2, 3, 4], which enables agents to ask for advice between each other.

Existing advising methods share a common limitation that an adviser agent can offer advice to an advisee agent only if the advice is created in the same state as the advisee agent’s concerned state [5]. However, in complex environments, it is usually a strong requirement that two states are the same, because a state may be composed of multiple dimensions and two states being the same implies that all these dimensions in the two states are correspondingly identical. This requirement may hinder the application of existing methods to complex environments. For example, in a multi-robot search and rescue problem (Fig. 1), the aim of each robot is to search and rescue victims as quickly as possible. Each robot is a learning agent which can observe only its surrounding area. To improve robot learning performance, robots can ask for advice between each other. In this problem, an observation of a robot is interpreted as a state of the robot. An observation consists of eight dimensions, where each dimension stands for a small cell around the robot. The format of an observation could be: , where means an empty cell and means an obstacle in a cell. A slightly different observation could be: , as the two states, and , have only the fifth dimension in difference (referring to Definition 4 for more detail). In existing advising methods, when a robot in state asks for advice from another robot , robot can offer advice to robot only if robot has visited state a number of times.

In this paper, we relax this requirement and develop a differential advising method which enables agents to use advice in a state even if the advice is created in a slightly different state. Thus, in the above example, by using our method, even if robot has never visited state , robot can still offer advice to robot as long as robot has visited a neighboring state of state , e.g., state . However, developing this differential advising method is a difficult task due to the following two challenges. First, how to measure and delimit the difference between two states is a challenge, because the amount of the difference between two states may significantly affect the quality of advice. Second, how to use advice in a concerned state, which is created in another state, is also a challenge, because improper advice may harm the learning performance of agents [6]. To address these two challenges, the differential privacy technique is applied.

Differential privacy is a privacy model which guarantees that a query to two slightly different datasets yields almost the same results [7, 8]. This means that the query result yielded from one dataset can be considered approximately identical to the query result yielded from the other dataset. This property of differential privacy can be taken into our method. Specifically, two slightly different states are similar to two slightly different datasets. Advices generated from states are similar to results yielded from datasets. Since two results from two slightly different datasets can be considered approximately identical, two advices generated from two slightly different states can also be considered approximately identical. This property guarantees that the advice created in a state can still be used in another slightly different state.

In summary, this paper has two contributions. First, we are the first to develop a differential advising method which allows agents to use advices created in different states. This method enables agents to receive more advices than existing methods. Second, we are the first to take advantage of the differential privacy technique for agent advising to improve agent learning performance instead of addressing security issues.

2 Related Work

Torrey and Taylor [9] introduced a teacher-student framework, where a teacher agent provides advice to a student agent to accelerate the student’s learning. Their framework is teacher-initiated, where the teacher determines when to give advice to the student. They developed a set of heuristic approaches for the teacher agent to decide when to provide advice. By extending Torrey and Taylor’s framework [9], Amir et al. [10] proposed an interactive student-teacher framework, where the student agent and the teacher agent jointly determine when to ask for/provide advice. They also developed a set of heuristic methods for both the student agent and the teacher agent to decide when to ask for/provide advice.

Later, Silva et al. [2] extended Amir et al.’s [10] work by taking simultaneity into consideration, where multiple agents simultaneously learn in an environment and an agent can be both a teacher and a student at the same time. The decisions regarding advice request and provision are based on a set of heuristic methods.

Wang et al. [11] proposed a teacher-student framework to accelerate the lexicon convergence speed among agents. Their framework is similar to Silva et al.’s framework [2]. The major difference is that, in Wang et al.’s framework, instead of broadcasting a request to all the neighboring agents, a student agent uses a multicast manner to probabilistically ask each neighboring agent for advice. The asking probability is based on the distance between the student and the teacher.

Ye et al. [6] also proposed an agent advising approach in a simultaneous learning environment. Unlike other advising methods which consider only benign and cooperative agents, Ye et al. introduced malicious agents into the environment, which provide false advice to hurt the learning performance of other agents. Ye et al. then used the differential privacy technique to preserve the learning performance of benign agents and reduce the impact of malicious agents.

Omidshafiei et al. [12] presented an advising framework. Unlike existing methods which use only heuristic methods to decide when to advise, their framework enables agents to learn when to teach/request and what to teach. Specifically, an advisee agent has a student policy to decide when to request advice using advising-level observation. The advising-level observation is based on the advisee agentos task-level observation and action-value vectors. Similarly, an adviser agent has a teacher policy to decide when and what to advise using advising-level observation. This advising-level observation is based on 1) the adviser agentos own task-level knowledge in the advisee agentos state, 2) the adviser agentos measure of the advisee agentos task-level state and knowledge.

Zhu et al. [13] developed a -value sharing framework named PSAF (partaker-sharer advising framework). Unlike other advising methods where agents transfer recommended actions as advice, in PSAF, agents transfer -values as advice. Using -values as advice is more flexible than using actions as advice. This is because the policies of agents may be continuously changing and thus an advisee agent’s learning performance may be impaired by following an adviser’s recommended action. By contrast, if the advice is a -value, the advisee can update its policy by using this -value and then uses its own action selection method to pick up an action.

Ilhan et al. [14] developed an action advising method in multi-agent deep reinforcement learning (MADRL). Unlike regular MARL, in MADRL, the number of states may be very large or even infinite. Thus, it is infeasible to use the number of visits to a state to measure the confidence in that state as commonly used in existing methods. They, hence, adopt the random network distillation to measure the confidence in a state by measuring the mean squared error between two neural networks: the target network and the predictor network.

Unlike the above research which focuses on the advising process, some works have different focuses. Fachantidis et al. [15] studied the critical factors that affect advice quality. Gupta et al. [16] suggested that teacher agents should not only advise the action to take in a given state but also provide more informative advice using the synthesis of knowledge they have gained. They proposed an advising framework where a teacher augments a student’s knowledge by providing not only the advised action but also the expected long-term reward of following that action. Vanhee et al. [17] introduced Advice-MDPs which extend Markov decision processes for generating policies that take into account advising on the desirability, undesirability and prohibition of certain states and actions.

These above-mentioned works have a common limitation that an advice can be offered only if the advice is created in the same state as the given state. This limitation may deter the application of their works to complex environments. State similarity has also been researched by Castro [18] who defined a bisimulation metric. However, Castroos research focuses on computing state similarity in deterministic MDP rather than agent advising. Also, the definition of bisimulation provided in [18] may not be applicable to our research, because that definition requires an agent to have the full knowledge of reward functions, state space, and state transition functions. In our research, the environments are partially observable and thus, the full state space is unknown to any agent. Moreover, in our research, as multiple agents coexist in an environment, the state transition of an agent highly depends on the actions of other agents. Hence, state transition functions are hard to be pre-defined. In this paper, we propose a differential advising method which overcomes the common limitation of existing methods by taking advantage of differential privacy.

3 Preliminaries

3.1 Multi-agent reinforcement learning

Reinforcement learning is usually used to solve sequential decision-making problems. A sequential decision-making process can be formally modeled as a Markov decision process (MDP) [19]. An MDP is typically a tuple , where is the set of states, is a set of actions available to the agent, is the transition function, and is the reward function.

At each step, an agent observes the state of the environment, and selects an action based on its policy , which is a probability distribution over available actions. After performing the action, the agent receives a real-valued reward and gets into a new state . The agent then updates its policy based on the reward and the new state . Through this way, the agent can gradually accumulate knowledge and improve its policy to maximize its accumulated long-term expected reward.

An MDP can be extended to model a multi-agent reinforcement learning process as a tuple , where is the cartesian product of the sets of local states from each agent, , is the available action set of agent , is the transition function, and is the reward function of agent . In multi-agent reinforcement learning, state transitions are based on the joint actions of all the agents. Each agent’s individual reward is also based on the joint actions of all the agents.

3.2 Differential privacy

Differential privacy is a prevalent privacy model and has been broadly applied to various applications [20, 21, 22]. Differential privacy can guarantee an individual’s privacy independent of whether the individual is in or out of a dataset [7]. Two datasets and are neighboring datasets if they differ in at most one record. Let be a query that maps dataset to a -dimension vector in range : . The maximal difference on the results of query is defined as sensitivity of query , which determines how much perturbation is required for the privacy-preserving answer. The formal definition of sensitivity is given as follows.

Definition 1 (Sensitivity).

For a query , the sensitivity of is defined as

| (1) |

where means that two datasets and have at most one record different, and .

The aim of differential privacy is to mask the difference in the answer of query between two neighboring datasets. To achieve this aim, differential privacy provides a randomized mechanism to access a dataset. In -differential privacy, parameter is defined as the privacy budget, which controls the privacy guarantee level of mechanism . A smaller represents a stronger privacy. The formal definition of differential privacy is presented as follows.

Definition 2 (-Differential Privacy).

A randomized mechanism gives -differential privacy if for any pair of neighboring datasets and , and for every set of outcomes , satisfies:

| (2) |

One of the prevalent differential privacy mechanisms is the Laplace mechanism. The Laplace mechanism adds Laplace noise to query results. We use to represent the noise sampled from the Laplace distribution with scaling . The mechanism is described as follows.

Definition 3 (Laplace mechanism).

Given any function , the Laplace mechanism is defined as

| (3) |

where are the random noise drawn from .

3.3 Differential advising

Based on the definitions of differential privacy, we provide the definitions of differential advising. The aim of providing the definitions of differential advising is to link the properties of differential privacy to the properties of differential advising. This linkage can guarantee that the differential privacy mechanisms can be used as differential advising mechanisms, as later shown in Lemma 1 and Theorem 1 in Section 5.

The reason of applying differential privacy to advising is to ensure that the knowledge learned in a state can still be used in a different but similar state, i.e., a neighboring state (Definition 4). In previous work, knowledge can only be re-used in identical states. With the introduction of the ‘neighboring state’, a piece of knowledge has more chance to be applied by agents compared with previous work. This idea is reasonable as we human can easily borrow knowledge from a similar environment.

To achieve this goal, we can consider acquiring knowledge, i.e., asking for advice, as querying to a dataset, and consider the -values of state/action pairs constituting a dataset, i.e., a -table. Then, the differential advising process can be simulated as a differentially private query process. To implement differential advising, we apply the Laplace mechanism to mask the difference between the knowledge learned in two neighboring states. Specifically, a -table, shown as below, is a two-dimensional matrix which stores the knowledge learned by an agent. Differential advising is operating on -tables.

| States/Actions | ||||

|---|---|---|---|---|

Without loss of generality, we assume that a state consists of dimensions: . Each dimension could be either an integer or a real number representing discrete or continuous states. The difference between two states and is defined as:

Definition 4 (Difference between states).

| (4) |

Two states and are neighboring, i.e., slightly different, if their difference is at most , i.e., . The difference threshold is set to , because this is the prerequisite of using differential privacy. As described in Definition 2, differential privacy works when two datasets have at most one record in difference. In fact, Definition 4 is slightly different from the prerequisite of differential privacy. This is because data records in a dataset are discrete, and thus means that one record is different in the two datasets. In Definition 4, if states are discrete and each dimension is represented as an integer, also means that one dimension is different in the two states, such as the multi-robot example in Section 1. If states are continuous, could mean that the difference exists in multiple dimensions and the sum of the difference is less than . We leave the further research of continuous states as one of our future works.

Similarly, the advice sensitivity is defined as follows.

Definition 5 (Advice sensitivity).

For an advice generation function , where is the state set and is the number of actions, the sensitivity of is defined as

| (5) |

As an advice is a -vector, for simplicity, we use the letter “” to represent both the advice generation function and the -function. Details will be given in the next section.

Definition 6 (-differential advising).

An advising method is -differential advising, if for any pair of neighboring states and , and for every set of advice , satisfies:

| (6) |

The essence of differential privacy is to guarantee that the query results of two neighboring datasets have a very high probability to be the same. Similarly, in differential advising, we set that the query results of two neighboring -tables have a very high probability to be the same, so that an agent querying to two neighboring -tables can receive almost the same knowledge. Here, two -tables are neighboring if 1) they have one record in difference and 2) the two different records correspond to two neighboring states. The query in differential advising is known as differentially private selection [23] which produces the best answer from a space of outcomes. To implement differential advising, we follow the spirit of the Laplace mechanism by adding Laplace noise on query results to mask the difference between two neighboring -tables. The detail will be given in the next section.

Table I describes the notations and terms used in this paper.

| notations | meaning | ||

|---|---|---|---|

| a set of states of the environment | |||

| a set of actions available to an agent | |||

|

|||

|

|||

|

|||

| learning rates, both of which are in | |||

| a discount factor which is in | |||

| the sensitivity of a query | |||

| the sensitivity of a score function | |||

| privacy budget | |||

| the scale parameter in Laplace mechanism | |||

| , | privacy budget | ||

|

|||

| the communication budget of an agent | |||

| the probability that an agent asks for advice | |||

| the probability that an agent provides advice |

4 The Differential Advising Method

Our method is developed in a simultaneous learning framework, where agents are learning simultaneously and can be in both the roles of adviser and advisee. Each agent has a communication budget to control its communication overhead. Every time when an agent asks for/provides advice from/to another agent, agent ’s communication budget is deducted by till the budget is used up. Here, we use a combined communication budget for asking for and giving advice, instead of two separate budgets, because 1) using a combined budget is more suitable for the real-world applications than using two separate budgets, and 2) using a combined budget can simplify the description of our method. For example, in wireless sensor networks, the communication budget of a sensor is based only on its battery power irrespective of the communication types.

Each agent has the following knowledge:

-

1.

its available actions in each state;

-

2.

the -value of each available action;

-

3.

the existence of its neighboring agents;

Moreover, we assume that 1) a slight change in a state will not significantly change the reward function of an agent, and 2) an agent in two neighboring states has the same action set.

4.1 Overview of the method

In our method, during advising, agents have to address the following three sub-problems:

-

•

whether to ask for advice;

-

•

whether to give advice;

-

•

how to use advice.

The overview of our method is outlined in Fig. 2, and the detail of our method is formally given in Algorithm 1. In summary, Algorithm 1 describes the workflow of our method, which consists of two parts: the advising part (Lines 1-15) and the learning part (Lines 16-23). The advising part of Algorithm 1 depicts the process of an advisee agent asking for and making use of advice. The detail of making use of advice is given in Algorithm 2, where the differential privacy mechanism is introduced. The learning part of Algorithm 1 is a regular reinforcement learning process.

In Lines 1-3, at time step , agent observes a state , and decides whether to ask for advice from other agents (Section 4.2). Specifically, an advice is the -value vector of a state: . In Lines 4 and 5, if agent decides to ask for advice, agent checks whether it has a neighboring state itself. If so, agent takes as a self-advice. Agent , thereafter, uses Algorithm 2, a differentially private algorithm, to process this advice and selects an action based on this advice (Line 6). Here, allowing an agent to query itself can conserve its communication budget. This is called “self-advising”. In Lines 8 and 9, if agent has not visited any neighboring states, agent sends a broadcast message to all communication-reachable, i.e., neighboring, agents and its communication budget is deducted by . If any neighboring agent decides to offer advice, agent sends the advice back to agent and agent ’s communication budget is deducted by (Section 4.3).

In Lines 10 and 11, once agent receives the advice from agent , agent checks whether this advice is created based on state or a neighboring state of . If the advice is created based on , agent directly uses this advice to choose an action (Line 12). Otherwise, in Lines 13 and 14, agent uses Algorithm 2 to modify this advice and then chooses an action by following the modified advice (Section 4.4). In the case that agent receives advice from multiple agents, it selects the advice whose state has the minimum difference to agent ’s current state (recall Equation 4). In the case that no advice is received or agent decides not to ask for advice, agent simply chooses an action based on its own experience (Lines 16-23). Specifically, Lines 16-23 describe a regular reinforcement learning process. In Line 16, agent randomly selects an action based on the probability distribution over its available actions in state . Then, in Line 17, agent performs the selected action and receives a reward . In Line 18, agent uses reward to update the -value of the selected action. In Line 19, agent computes an average reward using the probability distribution in state to multiply the -values of actions in state . After that, in Lines 20 and 21, agent adopts the average reward to update the probability distribution . In Line 22, is normalized to be a valid probability distribution, where each element in is in and the sum of all the elements is . In Line 23, the learning rate is decayed to guarantee the convergence of the algorithm.

It has to be noted that in our method, an advice is a -vector instead of an action suggestion or a -function. This is because our method is differential advising rather than direct advising, and using -vectors enables advisee agents to properly modify the advice before they take it. By contrast, modifying -vectors is easier and more reliable than modifying action suggestions and -functions. Moreover, using -vectors as advice does not mean adviser agents must have a complete -table. As long as an adviser agent has the -values of the concerned state (or its neighboring state ), the adviser can offer advice.

4.2 Deciding whether to ask for advice

At each time step, an agent, , observes a state . Agent decides whether to ask for advice based on probability (Equation 7). A random number between and is generated to compare with . If the random number is less than , agent asks for advice.

The calculation of is based on 1) agent ’s confidence in current state , and 2) agent ’s remaining communication budget, . Agent ’s confidence in state depends on how many times agent has visited state , denoted as . A higher value means a higher confidence and results in a lower probability of asking for advice. Agent ’s remaining communication budget depends on that how many times agent has asked for/provided advice from/to others. Once agent asks for advice, its communication budget is reduced by : . A lower remaining communication budget results in a lower probability of asking for advice. Formally, the probability function takes and as input, and outputs a probability ranged in . The calculation of probability is shown in Equation 7, where square root is used to reduce the decay speed of , and the positive integer, , is a threshold used to avoid agent using up its communication budget in very early stages.

| (7) |

If agent decides to ask for advice, agent checks whether it has any neighboring states. This check is based on Equation 4 to compute the difference between the new state and the stored states of agent . If the difference between and a stored state is less than or equal to , that stored state is a neighboring state of .

On one hand, if agent has a set of neighboring states, agent selects the neighboring state , which has the minimum difference to its current state . Agent utilizes the proposed differential advising method to modify and use the modified to take an action (Section 4.4). On the other hand, if agent does not have any neighboring states, it asks for advice from its neighboring agents (Section 4.3).

4.3 Deciding whether to give advice

When an agent, , receives an advice request message from agent , agent needs to decide whether to provide advice to agent . This decision is based on probability (Equation 8). The calculation of is based on 1) agent ’s confidence in agent ’s concerned state or neighboring states of , and 2) agent ’s remaining communication budget . If agent has more than one neighboring state, agent uses the one which has the minimum difference to state . The higher the confidence of agent , the higher the probability agent will provide advice. The lower remaining communication budget of agent , the lower probability agent will provide advice.

Formally, the probability function takes and as input, and outputs a probability ranged in . Here, for simplicity, we use to denote the number of times that agent has visited either state or a neighboring state of , and to denote the remaining communication budget of agent . Once agent gives advice, its remaining communication budget is reduced by : . It should be noted that agent does not need to know the full state space to find neighboring states, because in some environments, it is infeasible for any individual agent to know the full state space, such as the multi-robot example in Section 1. Thus, agent simply checks the states which it has visited. If all the visited states are not neighboring to the concerned state, this means that agent does not have the experience related to the concerned state and should not offer any advice to agent . Formally, the calculation of is given in Equation 8.

| (8) |

In Equation 8, we set a thresholds for adviser agents. If an adviser agent has visited a state for less than times, that means the adviser agent is less experienced than the advisee agent in state and thus the adviser agent is not allowed to offer advice to the advisee agent for that state. This setting is based on the fact that in theory, an adviser agent can generate advice for any possible states, even if the adviser agent has never visited those states. For example, an adviser agent can generate advice for a never visited state using the initialized -values of actions in that state. However, since the -value of each action can be randomly initialized, such advice is useless and may even harm the learning performance of the advisee.

4.4 How to use advice

When agent receives agent ’s advice, , agent checks whether its concerned state is the same as state . The aim of this check is to decide whether this advice is created based on the same state () or a neighboring state (). If , agent directly takes agent ’s advice and selects an action based on agent ’s advice. If , agent uses the Laplace mechanism to add noise to agent ’s advice, , shown in Algorithm 2. Here is an example. Agent arrives at state s and asks for advice from agent . Agent has visited three states: and are confident in these states. After calculation, agent detects that none of the three states is the same as state and only is a neighboring state of . Thus, agent sends its advice, Q-vector , to agent . After agent receives this advice, agent identifies that this advice is created based on a neighboring state . Agent adopts Algorithm 2 to add Laplace noise to the advice , and then uses the modified advice to guide its action selection.

In Lines 2 and 3, agent ’s -values are adjusted by adding Laplace noise. Specifically, the Laplace noise is added to each of the -values: , where each noise, , is a random number based on the Laplace distribution. Based on the adjusted -values, in Lines 4-6, agent computes its average reward in state and updates its probability distribution . Note that the computation of the average reward uses the probability distribution in state to multiply the -values of the available actions in state . As we have the assumption that an agent has the same action set in two neighboring states, this multiplication is valid. In Line 7, is normalized to be a valid probability distribution.

In the Laplace mechanism in Line 3, is the sensitivity of -values (recall Definition 5). Typically, is based on -functions, and -functions are based on rewards. Thus, essentially, is based on rewards, and we can use rewards to approximately compute . We have to notice that it is infeasible to accurately compute before the learning starts, because the accurate computation of needs the final -values of all the states, and these final -values can only be obtained when the learning finishes.

We use an example to demonstrate how to approximately compute . Definition 5 states that is the maximum difference between two -vectors of any two neighboring states. In the multi-robot problem exemplified in Section 1, for any two neighboring states, the maximum reward difference is given that the maximum reward is finding a victim and the minimum reward is hitting an obstacle. This is because in any two neighboring states, only one dimension, i.e., one cell, is different. This different cell incurs a maximum reward difference, if the cell has a victim in a state while has an obstacle in the other state. Moreover, -value is usually updated based on a learning rate . Thus, in the multi-robot problem, can be approximately computed as . When we set , then .

The rationale of Algorithm 2 is discussed as follows. According to Definition 3 in Section 3.2, the Laplace mechanism is used to add Laplace noise to the numerical output of a query to a dataset , so that both the privacy and the utility of the output can be guaranteed. Typically, a query maps a dataset to real numbers: . In comparison, in advising learning, the -table of an adviser agent is interpreted as a dataset, where each entry in the -table is the -value of a pair of a state and an action, . Advice request from an advisee agent is interpreted as the query to the Q-table of the adviser agent. The output of the query is the advice of the adviser agent, which consists of real numbers, , and is the number of actions in state . As discussed in Section 3.3, we have connected the properties of differential privacy and the properties of differential advising. Hence, by adding Laplace noise to , both the validity and utility of the advice can be guaranteed, as shown in Theorem 1, 2 and 3 in Section 5. Thus, in state , agent can still use the advice , which is created based on a neighboring state .

5 Theoretical Analysis

5.1 Differential advising analysis

Lemma 1.

Any methods, which satisfy -differential privacy and meet the input and output requirements of in Definition 6, also satisfy -differential advising.

Proof.

In Definition 2, the input of can be any type of dataset, while the output can be any valid results. In Definition 6, the input of is a state which is a vector, while the output is also a vector . Thus, Definition 6 can be considered a special case of Definition 2. Formally, let and , where is a data universe with attributes and is a state space. As is a data universe and can be arbitrarily large, we have . Similarly, we also have . Therefore, any methods, which satisfy -differential privacy and meet the input and output requirements of ,also satisfy -differential advising. ∎

Lemma 1 creates a link between differential privacy and differential advising. Then, differential privacy mechanisms, e.g., the Laplace mechanism, can be used to implement differential advising.

Theorem 1.

The proposed method is -differential advising.

Proof.

According to Lemma 1, to prove this theorem, we need only to prove that the proposed method satisfies -differential privacy. In the proposed method, Algorithm 2 utilizes a differential privacy mechanism, i.e., the Laplace mechanism, in Line 3. We re-write Line 3 in the -vector form as , where are random noise sampled from and is the number of actions in state . Since the Laplace mechanism is differentially private, by comparing Equation 3 with the re-written equation of Line 3, we can conclude that satisfies -differential privacy. ∎

Theorem 1 theoretically proves that the proposed method is a valid differential advising method which enables agents to use advice created based on similar states. Moreover, the parameter is used to control the amount of noise added to an advice. The tuning of is left to users.

5.2 Average reward analysis

As shown in Line 4, Algorithm 2, the average reward of an agent is: . We will demonstrate that with a very high probability, the average reward of an agent using differential advising is greater than the average reward without using differential advising.

Definition 7 ((,)-useful).

A differential advising multi-agent system is (,)-useful if for each agent, we have , where and are the average rewards of an agent using and without using differential advising, respectively, and and .

Theorem 2.

For any , with probability at least , the average reward of an agent using differential advising is greater than the average reward without using it. The difference is bounded by , which satisfies , where is the number of learning iterations.

Proof.

The average reward of an agent is . After applying the Laplace mechanism, the average reward becomes . Now, we need to prove that .

According to calculation, we have . Thus, . Because , we have . The property of is presented in Lemma 2.

Lemma 2.

(Laplace Random Variables). For every ,

| (9) |

Proof.

The proof of this lemma can be found in [24]. ∎

Based on this property, we have .

Lemma 2 demonstrates the probability in one learning iteration. If an agent learns iterations, the probability becomes . To guarantee , we have . After calculation, we have . As , we have . ∎

In this proof, we use the average reward equation: . This equation is valid to correctly estimate an agent’s average reward, only if the agent has a good estimation of the -table. Hence, this theorem holds in late learning stages and reveals the final results.

Remark 1: The upper bound of , , relies on . Let . Hence, is monotonically decreasing with the increase of . Specifically, when , , and when , . This means that in early stages, using differential advising can significantly increase agents’ average rewards. However, as time progresses, the improvement of agents’ average rewards decreases. This situation reflects the fact that in early stages, an agent is not knowledgable, so other agents’ knowledge can give the agent significant help. As time progresses, the agent accumulates enough knowledge, and thus other agents’ knowledge is trivial to the agent.

Theorem 2 theoretically demonstrates the effectiveness of our method by analyzing lower bound of an agent’s average reward difference between using and not using our method. The analysis result shows that the lower bound of the average reward difference is positive with a very high probability. This means that the agent using our method can receive a higher average reward than not using our method with a very high probability. Given that the average reward of using and not using the proposed method is and , respectively, the parameter is used to measure the lower bound of the average reward difference, . Particularly, when , the lower bound is a small positive number; and when , the lower bound tends to be . This means that the average reward difference, , has a high probability, , to be a small positive number, while has a low probability to be very large. Certainly, the average reward difference, , cannot be . Thus, for the completeness of Theorem 2, as a supplement, Theorem 3 gives the upper bound of this difference, .

Theorem 3.

Let be the number of actions in state . Let and . Then , where is the number of learning iterations.

Proof.

As shown in Theorem 2, we have . Since each is in , we have . Thus, we have . The property of is given in Lemma 3.

Lemma 3.

(Sum of Laplace random variables) Let be independent variables with distribution , then .

Proof.

The proof of this lemma can be found in [23]. ∎

Lemma 3 demonstrates the probability in one learning iteration. If an agent learns iterations, the probability becomes . ∎

According to Theorem 2 and 3, it can be found that the performance of the proposed method is affected by the advice sensitivity , and a smaller means a better performance. Since advice sensitivity, , is based on the -values of states, we can conclude that the proposed method can work well in the environments where the -values of any pair of neighboring states have a small difference.

5.3 Convergence analysis

In Algorithm 1, let be the learning rate at time step . Since , we can conclude that . Let be the index of the th time that action is performed in state . For example, suppose that the st time that action is performed in state is at time step , then . To prove the convergence of Algorithm 1, we need the results of Lemma 4 [25] and Lemma 5 [19].

Lemma 4.

A series, , converges if and only if for any given small positive number , there is always an integer , such that when , for any positive integer , the inequality holds: .

It should be noted that in Lemma 4, if , the series, , does not converge.

Lemma 5.

Given bounded rewards and learning rate , for any pair of state and action , if series is bounded, i.e., , and series is unbounded, i.e., , then, when , converges, with probability , to the optimal -value, i.e., the expected reward.

Theorem 4.

In Algorithm 1, for any pair of state and action , as , converges, with probability , to the expected reward of an agent performing action in state .

Proof.

Based on Lemma 5, to prove the convergence, we need only to prove that in Algorithm 1, and always hold for any pair of state and action .

First, we prove that always holds. It has been known that , where means time step, a positive integer. Then, the following inequality can be concluded: .

Then, we prove that always holds. Given a small positive number and a positive integer , let . Here, is the mapping lower bound, a small positive number, used to normalize probability distribution , and is the probability with which an agent observes state . As , there is at least one integer , such that in th, th, …, th time steps, the number of times that action is performed in state is greater than or equal to . Therefore, . Based on Lemma 4, as , the series, , does not converge, which means that .

Theorem 4 theoretically proves the convergence of our method, which means that agents using our method can finally receive their expected rewards.

6 Experiments

6.1 Experimental setup

Two experimental scenarios are used to evaluate the proposed differential advising method, denoted as DA-RL (differential advising reinforcement learning).

6.1.1 Scenario 1

The first scenario is the multi-robot problem [26] shown in Fig. 1. Each agent represents a robot which has four actions: up, down, left and right. An observation of an agent is interpreted as its state which consists of dimensions. The aim of the agents is to achieve the targets on the map, which could be victims in search and rescue or rubbish in office cleaning. The reward for achieving a target is set to . The reward for hitting an obstacle is set to . The reward for moving a step is . The reward in each situation is pre-defined as knowledge to each agent. For example, when an agent achieves a target, its reward is automatically added by , while when the agent hits an obstacle, its reward is automatically reduced by . Moreover, agents cannot be at the same cell at the same time step.

The first scenario is classified as three settings. Setting 1 (Static): agents achieve fixed targets. Setting 2 (Dynamic 1): agents achieve dynamically generated targets. Setting 3 (Dynamic 2): agents achieve dynamically moving targets.

The size of environments varies from cells to cells. The number of agents varies from to . The number of targets varies from to . The number of obstacles varies from to .

In this scenario, three evaluation metrics are used.

Metric 1 (Average time): the number of time steps to achieve all targets in average in one learning round. This metric is specific for the first and third settings: Static and Dynamic 2. Here, a learning round is a period during which the agents achieve all the targets.

Metric 2 (Average time for one target): the number of time steps to achieve one target in average in one learning round. This metric is specific for the second setting: Dynamic 1. The reason of using different metrics in different settings will be given at the end of this section.

Metric 3 (Average hit): the number of hits to obstacles in average in one learning round.

6.1.2 Scenario 2

This scenario is the multi-agent load balancing [27]. Each agent represents a factory. Each factory has a backlog which stores a set of types of items. Let the number of item types be and the maximum stock for each type be . A state of an agent is the current number of items of each type in stock. A state consists of dimensions.

At each time step, a random item from each agent is processed with a given probability , and a new item arrives at each agent with another given probability . The actions of an agent include whether or not to pass an arrived item to another agent. The reward of an agent is based on its backlog: , where is the importance weight of items of type and is the current number of items of type . In addition, if agent passes an item to agent , agent ’s reward is reduced by as a cost for redistribution. The aim of agents is to maximize their accumulated reward.

The number of agents varies from to . Item types varies from to , where for two types, the weights are set to and ; for three types, the weights are set to , and ; and so on. The maximum stock for each type of item varies from to . The probability of processing an item varies from to . The probability of arriving at an item is set to .

The evaluation metric is the average reward of all the agents in one learning round. Here, a learning round means a pre-defined number of learning iterations.

6.1.3 The methods for comparison

In this experiment, two methods are involved for comparison. The first method is a regular reinforcement learning method, denoted as RL, which does not include any advising. The RL method is used as a comparison standard. The second method is from [2], denoted as SA-RL (simultaneous advising reinforcement learning). The SA-RL method is an Ad hoc TD advising method, where agents simultaneously and independently learn and share advice when needed. The SA-RL method is similar to our method. The difference includes 1) the SA-RL method allows agents to offer advice only in the same states; and 2) agents in the SA-RL method transfers recommended actions as advice. Moreover, the SA-RL method involves two parameters, and , used to control the probability of asking for and giving advice, respectively. A higher value results in a lower probability of asking for advice, while a higher results in a higher probability of giving advice. The SA-RL method is used to demonstrate the effectiveness of the differential privacy technique on agent advising.

The values of the parameters used in the experiments are set as follows: , , , , and . The values of these parameters are experimentally chosen to yield good results. Moreover, in Scenario 1, while in Scenario 2. The experimental results are obtained by averaging the results of runs.

6.2 Experimental results of scenario 1

6.2.1 The first setting: agents achieve targets

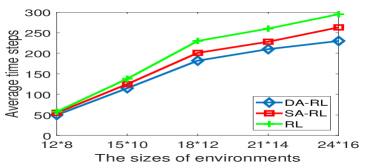

Fig. 3 demonstrates the performance of the three methods in different sizes of environments in the first setting. It can be seen that with the increase of the environmental size, in all three methods, agents take more steps to achieve targets and hit more obstacles. This is because with the increase of size, more obstacles are there in the environments, which inevitably increases the difficulty for agents to achieve targets.

In Fig. 3(a), when the environmental size is smaller than , the performance difference among the three methods is small. However, when the environmental size is larger than , the performance difference in average time steps enlarges up to between DA-RL and RL, and between DA-RL and SA-RL, respectively. In addition, in Fig. 3(b), the performance difference in average hits enlarges up to between DA-RL and RL, and between DA-RL and SA-RL, respectively. This is because when the environmental size increases, the number of targets and obstacles also increases. Thus, the number of states of each agent may increase as well. In this situation, since DA-RL and SA-RL enable agent advising, their performance is better than RL. Moreover, as DA-RL adopts the differential privacy technique, agents using DA-RL can take more advice than using SA-RL. Thus, the performance of DA-RL is better than SA-RL.

This result shows that by using agent advising, agents can ask for advice about the positions of obstacles and targets. Thus, agents’ learning performance can be improved. Moreover, in the DA-RL method, an agent’s advice, created in one state, can also be used by other agents in neighboring states, which further improves those agents’ learning performance.

Fig. 4 shows the performance of the three methods as time progresses in the first setting. The size of the environment is set to ; the number of agents is set to ; the number of targets is set to ; and the number of obstacles is set to . In Figs. 4(a), DA-RL and SA-RL methods continuously outperform RL method. Moreover, DA-RL and SA-RL methods converge faster than RL method. This can be explained by the fact that agents using DA-RL and SA-RL methods can ask for advice between each other, which can significantly improve the learning performance, especially in early stages. In Fig. 4(b), the number of average hits are almost the same among the three methods in late stages. This is because the positions of obstacles are fixed and agents using these methods have the learning ability. As time progresses, the positions of obstacles can be gradually memorized by agents. Thus, the number of hits decreases and finally converges to a stable point.

6.2.2 The second setting: agents achieve dynamically generated targets

Fig. 5 demonstrates the performance of the three methods in different sizes of environments in the second setting. It can be seen that the proposed DA-RL method outperforms the other two methods.

Compared to the first setting (Fig. 3(a)), all the three methods need more time steps to achieve targets in the second setting (Fig. 5(a)). This is because in the second setting, new targets are dynamically introduced. It is unavoidable to take more time steps to achieve these new targets.

The average number of hits in the three methods in the second setting (Fig. 5(b)) is almost the same as that in the first setting (Fig. 3(b)). In both first and second settings, the number and positions of obstacles are fixed. Therefore, the positions of obstacles can be learned by agents. Agents then can avoid the obstacles to some extent.

Fig. 6 shows the performance of the three methods as time progresses in the second setting. The size of the environment is set to ; the number of agents is set to ; the number of targets is set to ; and the number of obstacles is set to . In Fig. 6(a), as time progresses, the average number of time steps for one target in the three methods decreases in a fluctuant manner. This is caused by the introduction of new targets. The introduction of new targets implies that new knowledge is introduced in environments. Thus, agents need time to learn the new knowledge, which results in the increase of the number of time steps. In Fig. 6(b), as time progresses, the average number of hits gradually decreases in the three methods, because the positions of obstacles can be learned and thus obstacles can be avoided.

6.2.3 The third setting: agents achieve dynamically moving targets

Fig. 7 demonstrates the performance of the three methods in different sizes of environments in the third scenario. Again, the proposed DA-RL method outperforms the other two methods. Comparing Fig. 7 and Fig. 5, the performance trend of the three methods in the third setting is similar to the second setting. This is because both the second and third settings are dynamic, which means that agents need more time steps to achieve targets than in the first setting.

Fig. 8 shows the performance of the three methods as time progresses in the third setting. The size of the environment is set to ; the number of agents is set to ; the number of targets is set to ; and the number of obstacles is set to . Unlike the second scenario (Fig. 6(a)), there is no fluctuation in the three methods in the third setting (Fig. 8(a)). This is because in the third setting, although targets are moving, the number of targets does not increase. For example, there are targets in an environment. In the third setting, the experiment finishes when all the targets are achieved. In the second setting, however, there may be new targets introduced. The experiment will not finish until all the and the new targets are achieved. If new targets are generated constantly, the experiment is hard to finish. This means that in the second setting, the completion of an experiment heavily depends on the generation probability of new targets. Hence, there is more fluctuation in the second setting than in the third setting.

6.3 Experimental results of scenario 2

Fig. 9(a) demonstrates the performance of the three methods with different number of agents, where the number of item types is set to , the maximum stock of each item type is set to , and the probability of processing an item is set to . In Fig. 9(a), with the increase of the number of agents, the average reward of agents in the three methods also rises. When the number of agents increases, each agent can ask for advice from more agents. Thus, agents can learn faster and receive more reward. However, it should also be noted that when the number of agents is larger than , the average reward keeps almost steady. This can be explained by the fact that in a given environment, the amount of knowledge is limited. Agents can share knowledge but cannot create new knowledge. When the number of agents is large enough to guarantee a good learning speed, increasing the number of agents does not improve agents’ learning speed or increase agents’ reward.

Fig. 9(b) shows the performance of the three methods with different number of item types, where the number of agents is set to , the maximum stock of each item type is set to , and the probability of processing an item is set to . In Fig. 9(b), with the increase of the number of item types, the average reward of agents in the three methods reduces gradually. When the number of item types increases, the dimension of states of each agent also increases. This decreases the learning speed of agents, which reduces the average reward of agents as agents have more opportunity to make non-optimal decisions.

Fig. 9(c) displays the performance of the three methods with different maximum stock, where the number of agents is set to , the number of item types is set to , and the probability of processing an item is set to . In Fig. 9(c), with the increase of the maximum stock, the average reward of agents in the three methods increases gracefully. When the stock capacity of agents increases, agents prefer to store new items instead of redistributing them. This preference will augment agents’ average reward, because, based on the experimental setting, storing an item can incur more reward than redistributing it.

Fig. 9(d) exhibits the performance of the three methods with different probabilities of processing an item, where the number of agents is set to , the number of item types is set to , and the maximum stock of each item type is set to . In Fig. 9(d), with the increase of processing probability, the average reward of agents in the three methods rises. When the processing probability increases, items are processed faster and hence, the stock amount of each agent reduces. This improves the average reward of agents, as the reward is based on stock amount in the experimental setting. However, it should also be noted that when the processing probability is too large, e.g., larger than , the difference between the three methods reduces. As mentioned before, a large processing probability implies a small stock amount. Since the state of an agent is based on the stock amount, i.e., the number of items of each item type, small stock amount means a small number of states. Because each agent observes only a small number of states, after a short learning process, each agent is confident in these states and does not ask for advice any more. If agents do not ask for advice, the DA-RL and SA-RL methods are the same as RL method. Hence, they have similar results.

In the above four situations, the proposed DA-RL method outperforms the SA-RL and RL methods, though the performance of the three methods has a similar trend. According to the experimental results, the advantage of differential advising has been empirically proven.

Fig. 10 demonstrates the performance of the three methods as learning progresses. Fig. 10(a) shows the results in a simple environment. The number of agents is set to , the number of item types is set to , the maximum stock of each item type is set to , and the probability of processing an item is set to . Fig. 10(b) shows the results in a complex environment. The number of agents is set to , the number of item types is set to , the maximum stock of each item type is set to , and the probability of processing an item is set to .

In Fig. 10(a), the three methods converge in very early stages (around learning iterations). In comparison, in Fig. 10(b), the three methods converge much slower, where DA-RL converges at around learning iterations, SA-RL converges at around iterations, and RL converges at around iterations. This can be explained by the fact that in the complex environment, the number of states is much more than the number of states in the simple environment. As more states typically imply more knowledge, agents have to take more learning iterations to learn the knowledge. Therefore, the three methods converge slower in the complex environment than in the simple environment. However, the proposed DA-RL method in the complex environment converges faster than the SA-RL and RL methods. This result demonstrates that by using differential advising, agents in the proposed DA-RL method learn faster than the other two methods.

6.4 Discussion and summary

6.4.1 The metric used in the second setting of the first scenario

In the second setting of the first scenario (Fig. 6(a)), we use the metric, average number of time steps for one target, instead of the metric, average number of time steps for all targets, used in the first and third scenarios.

The average number of time steps for all targets in the second scenario does not converge as time progresses in the three methods. This is because agents in the three methods have learning ability. The aim of learning is to maximize agents’ long-term accumulated rewards. In the experiments, an agent can receive a positive reward only when the agent achieves a target. In the second setting, new targets are introduced dynamically. This motivates agents to stay in the experiment to keep achieving targets so as to increase their accumulated rewards. Therefore, the average number of time steps for achieving all the targets in the second scenario increases gradually. However, this does not mean that the three methods do not converge in other aspects in the second setting. Although the number of time steps in the three methods increases, the number of achieved targets also increases. Since the average number of time steps for one target is the ratio between the total number of time steps and the total number of achieved targets, the increased number of achieved targets can offset the increased number of time steps. Thus, the average number of time steps for one target still converges (Fig. 6(a)).

6.4.2 Performance of the three methods

According to the experimental results in the first scenario, the average time steps used in the DA-RL method is fewer than the other two methods in all the three settings: about and fewer than the SA-RL and RL methods, respectively. Moreover, the DA-RL converges about and faster than the SA-RL and RL methods, respectively. In the second scenario, the average reward of agents in the DA-RL method is about and more than the SA-RL and RL methods, respectively. In addition, the DA-RL method converges about and faster than the SA-RL and RL methods, respectively. In summary, the proposed DA-RL method outperforms the other two methods in both scenarios.

7 Conclusion and Future Work

This paper proposed a differential advising method for multi-agent reinforcement learning. This method is the first to allow agents in one state to use advice created in another different state. This method is also the first to take advantage of the differential privacy technique for agent advising to improving learning performance instead of preserving privacy. Experimental results demonstrate that our method outperforms other benchmark methods in various aspects.

In this paper, we have an assumption that in two similar states, the applicability and adequacy of actions to take are similar. This assumption, however, are not applicable in some situations. For example, the good actions to take in a rainy day can become the bad actions to take in a sunny day. In the future, we will relax this assumption by assigning weights to dimensions of states. Moreover, our method mainly focuses on discrete states. It is interesting to extend our method to continuous environments. An intuitive way for the extension is to discretize continuous states to discrete states. Finally, extending our method to multi-agent deep reinforcement learning is also interesting future work. A potential way is to allow agents to transfer experience samples as advice. However, if the number of transferred samples is large, how to select high-quality samples is a challenge to the adviser agent.

References

- [1] F. L. D. Silva, R. Glatt, and A. H. R. Costa, “MOO-MDP: An Object-Oriented Representation for Cooperative Multiagent Reinforcement Learning,” IEEE Trans. on Cybernetics, vol. 49, pp. 567–579, 2019.

- [2] F. L. D. Silva, R. Glatt, and A. H. R. Costa, “Simultaneously Learning and Advising in Multiagent Reinforcement Learning,” in Proc. of AAMAS, Brazil, May 2017, pp. 1100–1108.

- [3] F. L. D. Silva, M. E. Taylor, and A. H. R. Costa, “Autonomously Reusing Knowledge in Multiagent Reinforcement Learning,” in Proc. of IJCAI 2018, Sweden, July 2018, pp. 5487–5493.

- [4] F. L. D. Silva, “Integrating Agent Advice and Previous Task Solutions in Multiagent Reinforcement Learning,” in AAMAS, 2019, pp. 2447–2448.

- [5] F. L. D. Silva and A. H. R. Costa, “A Survey on Transfer Learning for Multiagent Reinforcement Learning Systems,” Journal of Artificial Intelligence Research, vol. 64, pp. 645–703, 2019.

- [6] D. Ye, T. Zhu, W. Zhou, and P. S. Yu, “Differentially Private Malicious Agent Avoidance in Multiagent Advising Learning,” IEEE Transactions on Cybernetics, vol. 50, no. 10, pp. 4214–4227, 2020.

- [7] C. Dwork, “Differential privacy,” in Proc. of ICALP, 2006, pp. 1–12.

- [8] T. Zhu, G. Li, W. Zhou, and P. S. Yu, “Differentially private data publishing and analysis: A survey,” IEEE Transactions on Knowledge and Data Engineering, vol. 29, no. 8, pp. 1619–1638, 2017.

- [9] L. Torrey and M. E. Taylor, “Teaching on A Budget: Agents Advising Agents in Reinforcement Learning,” in AAMAS, 2013, pp. 1053–1060.

- [10] O. Amir, E. Kamar, A. Kolobov, and B. Grosz, “Interactive Teaching Strategies for Agent Training,” in Proc. of IJCAI, 2016, pp. 804–811.

- [11] Y. Wang, W. Lu, J. Hao, J. Wei, and H. Leung, “Efficient Convention Emergence through Decoupled Reinforcement Social Learning with Teacher-Student Mechanism,” in Proc. of AAMAS, 2018, pp. 795–803.

- [12] S. Omidshafiei, D. Kim, M. Liu, G. Tesauro, M. Riemer, C. Amato, M. Campbell, and J. P. How, “Learning to Teach in Cooperative Multiagent Reinforcement Learning,” in AAAI, 2019, pp. 6128–6136.

- [13] C. Zhu, H. Leung, S. Hu, and Y. Cai, “A Q-values Sharing Framework for Multiple Independent Q-learners,” in AAMAS, 2019, pp. 2324–2326.

- [14] E. Ilhan, J. Gow, and D. Perez-Liebana, “Teaching on a Budget in Multi-Agent Deep Reinforcement Learning,” in Proc. of IEEE Conference on Games, 2019.

- [15] A. Fachantidis, M. E. Taylor, and I. Vlahavas, “Learning to Teach Reinforcement Learning Agents,” ML and Knowledge Extraction, vol. 1, pp. 21–42, 2018.

- [16] V. Gupta, D. Anand, P. Paruchuri, and B. Ravindran, “Advice Replay Approach for Richer Knowledge Transfer in Teacher Student Framework,” in Proc. of AAMAS, 2019, pp. 1997–1999.

- [17] L. Vanhee, L. Jeanpierre, and A.-I. Mouaddib, “Augmenting Markong Decision Processes with Advising,” in AAAI, 2019, pp. 2531–2538.

- [18] P. S. Castro, “Scalable Methods for Computing State Similarity in Deterministic Markov Decision Processes,” in AAAI, 2020, pp. 10 069–10 076.

- [19] C. J. Watkins, “Q-Learning,” Machine Learning, 1992.

- [20] T. Zhu, D. Ye, W. Wang, W. Zhou, and P. S. Yu, “More Than Privacy: Applying Differential Privacy in Key Areas of Artificial Intelligence,” IEEE Transactions on Knowledge and Data Engineering, p. DOI: 10.1109/TKDE.2020.3014246, 2020.

- [21] D. Ye, T. Zhu, S. Shen, W. Zhou, and P. S. Yu, “Differentially Private Multi-Agent Planning for Logistic-like Problems,” IEEE Transactions on Dependable and Secure Computing, p. DOI: 10.1109/TDSC.2020.3017497, 2020.

- [22] D. Ye, T. Zhu, S. Shen, and W. Zhou, “A Differentially Private Game Theoretic Approach for Deceiving Cyber Adversaries,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 569–584, 2021.

- [23] C. Dwork and A. Roth, “The Algorithmic Foundations of Differential Privacy,” Foundations and Trends in Theoretical Computer Science, vol. 9, no. 3-4, pp. 211–407, 2014.

- [24] S. Kasiviswanathan, H. Lee, K. Nissim, S. Raskhodnikova, and A. Smith, “What can we learn privately?” in Proc. of Annual IEEE Symposium on Foundations of Computer Science, 2008, pp. 531–540.

- [25] H. Anton, I. Bivens, and S. Davis, Calculus. Wiley, 2012.

- [26] Y. Wang and C. W. D. Silva, “A Machine-Learning Approach to Multi-Robot Coordination,” Engineering Applications of Artificial Intelligence, vol. 21, no. 3, pp. 470–484, 2008.

- [27] J. Sakuma, S. Kobayashi, and R. N. Wright, “Privacy-Preserving Reinforcement Learning,” in Proc. of ICML, 2008.