Differentiable Neural Networks with RePU Activation: with Applications to Score Estimation and Isotonic Regression

Abstract

We study the properties of differentiable neural networks activated by rectified power unit (RePU) functions. We show that the partial derivatives of RePU neural networks can be represented by RePUs mixed-activated networks and derive upper bounds for the complexity of the function class of derivatives of RePUs networks. We establish error bounds for simultaneously approximating smooth functions and their derivatives using RePU-activated deep neural networks. Furthermore, we derive improved approximation error bounds when data has an approximate low-dimensional support, demonstrating the ability of RePU networks to mitigate the curse of dimensionality. To illustrate the usefulness of our results, we consider a deep score matching estimator (DSME) and propose a penalized deep isotonic regression (PDIR) using RePU networks. We establish non-asymptotic excess risk bounds for DSME and PDIR under the assumption that the target functions belong to a class of smooth functions. We also show that PDIR achieves the minimax optimal convergence rate and has a robustness property in the sense it is consistent with vanishing penalty parameters even when the monotonicity assumption is not satisfied. Furthermore, if the data distribution is supported on an approximate low-dimensional manifold, we show that DSME and PDIR can mitigate the curse of dimensionality.

Keywords: Approximation error, curse of dimensionality, differentiable neural networks, isotonic regression, score matching

1 Introduction

In many statistical problems, it is important to estimate the derivatives of a target function, in addition to estimating a target function itself. An example is the score matching method for distribution learning through score function estimation (Hyvärinen and Dayan, 2005). In this method, the objective function involves the partial derivatives of the score function. Another example is a newly proposed penalized approach for isotonic regression described below, in which the partial derivatives are used to form a penalty function to encourage the estimated regression function to be monotonic. Motivated by these problems, we consider Rectified Power Unit (RePU) activated deep neural networks for estimating differentiable target functions. A RePU activation function has continuous derivatives, which makes RePU networks differentiable and suitable for derivative estimation. We study the properties of RePU networks along with their derivatives, establish error bounds for using RePU networks to approximate smooth functions and their derivatives, and apply them to the problems of score estimation and isotonic regression.

1.1 Score matching

Score estimation is an important approach to distribution learning and score function plays a central role in the diffusion-based generative learning (Song et al., 2021; Block et al., 2020; Ho et al., 2020; Lee et al., 2022). Let , where is a probability density function supported on , then -dimensional score function of is defined as , where is the vector differential operator with respect to the input .

A score matching estimator (Hyvärinen and Dayan, 2005) is obtained by solving the minimization problem

| (1) |

where denotes the Euclidean norm, is a prespecified class of functions, often referred to as a hypothesis space. However, this objective function is computationally infeasible because is unknown. Under some mild conditions given in Assumption 9 in Section 4, it can be shown that (Hyvärinen and Dayan, 2005)

| (2) |

with

| (3) |

where denotes the Jacobian matrix of and the trace operator. Since the second term on the right side of (2), , does not involve , it can be considered a constant. Therefore, we can just use in (3) as an objective function for estimating the score function . When a random sample is available, we use a sample version of as the empirical objective function. Since involves the partial derivatives of , we need to compute the derivatives of the functions in during estimation. And we need to analyze the properties of and their derivatives to develop the learning theories. In particular, if we take to be a class of deep neural network functions, we need to study the properties of their derivatives in terms of estimation and approximation.

1.2 Isotonic regression

Isotonic regression is a technique that fits a regression model to observations such that the fitted regression function is non-decreasing (or non-increasing). It is a basic form of shape-constrained estimation and has applications in many areas, such as epidemiology (Morton-Jones et al., 2000), medicine (Diggle et al., 1999; Jiang et al., 2011), econometrics (Horowitz and Lee, 2017), and biostatistics (Rueda et al., 2009; Luss et al., 2012; Qin et al., 2014).

Consider a regression model

| (4) |

where and is an independent noise variable with and In (4), is the underlying regression function, which is usually assumed to belong to certain smooth function class.

In isotonic regression, is assumed to satisfy a monotonicity property as follows. Let denote the binary relation “less than” in the partially ordered space , i.e., if for all where . In isotonic regression, the target regression function is assumed to be coordinate-wisely non-decreasing on , i.e., if The class of isotonic regression functions on is the set of coordinate-wisely non-decreasing functions

The goal is to estimate the target regression function under the constraint that based on an observed sample . For a possibly random function , let the population risk be

| (5) |

where follows the same distribution as and is independent of . Then the target function is the minimizer of the risk over i.e.,

| (6) |

The empirical version of (6) is a constrained minimization problem, which is generally difficult to solve directly. In light of this, we propose a penalized approach for estimating based on the fact that, for a smooth , it is increasing with respect to the th argument if and only if its partial derivative with respect to is nonnegative. Let denote the partial derivative of with respective to We propose the following penalized objective function

| (7) |

where with , are tuning parameters, is a penalty function satisfying for all and if . Feasible choices include , and more generally for a Lipschitz function with .

The objective function (7) turns the constrained isotonic regression problem into a penalized regression problem with penalties on the partial derivatives of the regression function. Therefore, if we analyze the learning theory of estimators in (7) using neural network functions, we need to study the partial derivatives of the neural network functions in terms of their generalization and approximation properties.

It is worth mentioning that an advantage of our penalized formulation over the hard-constrained isotonic regressions is that the resulting estimator remains consistent with proper tuning when the underlying regression function is not monotonic. Therefore, our proposed method has a robustness property against model misspecification. We will discuss this point in detail in Section 5.2.

1.3 Differentiable neural networks

A commonality between the aforementioned two quite different problems is that they both involve the derivatives of the target function, in addition to the target function itself. When deep neural networks are used to parameterize the hypothesis space, the derivatives of deep neural networks must be considered. To study the statistical learning theory for these deep neural methods, it requires the knowledge of the complexity and approximation properties of deep neural networks along with their derivatives.

Complexities of deep neural networks with ReLU and piece-wise polynomial activation functions have been studied by Anthony and Bartlett (1999) and Bartlett et al. (2019). Generalization bounds in terms of the operator norm of neural networks have also been obtained by several authors (Neyshabur et al., 2015; Bartlett et al., 2017; Nagarajan and Kolter, 2019; Wei and Ma, 2019). These generalization results are based on various complexity measures such as Rademacher complexity, VC-dimension, Pseudo-dimension, and norm of parameters. These studies shed light on the complexity and generalization properties of neural networks themselves, however, the complexities of their derivatives remain unclear.

The approximation power of deep neural networks with smooth activation functions has been considered in the literature. The universality of sigmoidal deep neural networks has been established by Mhaskar (1993) and Chui et al. (1994). In addition, the approximation properties of shallow RePU-activated networks were analyzed by Klusowski and Barron (2018) and Siegel and Xu (2022). The approximation rates of deep RePU neural networks for several types of target functions have also been investigated. For instance, Li et al. (2019, 2020), and Ali and Nouy (2021) studied the approximation rates for functions in Sobolev and Besov spaces in terms of the norm, Duan et al. (2021), Abdeljawad and Grohs (2022) studied the approximation rates for functions in Sobolev space in terms of the Sobolev norm, and Belomestny et al. (2022) studied the approximation rates for functions in Hölder space in terms of the Hölder norm. Several recent papers have also studied the approximation of derivatives of smooth functions (Duan et al., 2021; Gühring and Raslan, 2021; Belomestny et al., 2022). We will have more detailed discussions on the related works in Section 6.

Table 1 provides a summary of the comparison between our work and the existing results for achieving the same approximation accuracy on a function with smoothness index in terms of the needed non-zero parameters in the network. We also summarize the results on whether the neural network approximator has an explicit architecture, where the approximation accuracy holds simultaneously for the target function and its derivative, and whether the approximation results were shown to adapt to the low-dimensional structure of the target function.

1.4 Our contributions

In this paper, motivated by the aforementioned estimation problems involving derivatives, we investigate the properties of RePU networks and their derivatives. We show that the partial derivatives of RePU neural networks can be represented by mixed-RePUs activated networks. We derive upper bounds for the complexity of the function class of the derivatives of RePU networks. This is a new result for the complexity of derivatives of RePU networks and is crucial to establish generalization error bounds for a variety of estimation problems involving derivatives, including the score matching estimation and our proposed penalized approach for isotonic regression considered in the present work.

We also derive our approximation results of the RePU network on the smooth functions and their derivatives simultaneously. Our approximation results of the RePU network are based on its representational power on polynomials. We construct the RePU networks with an explicit architecture, which is different from those in the existing literature. The number of hidden layers of our constructed RePU networks only depends on the degree of the target polynomial but is independent of the dimension of input. This construction is new for studying the approximation properties of RePU networks.

We summarize the main contributions of this work as follows.

-

1.

We study the basic properties of RePU neural networks and their derivatives. First, we show that partial derivatives of RePU networks can be represented by mixed-RePUs activated networks. We derive upper bounds for the complexity of the function class of partial derivatives of RePU networks in terms of pseudo dimension. Second, we derive novel approximation results for simultaneously approximating smooth functions and their derivatives using RePU networks based on a new and efficient construction technique. We show that the approximation can be improved when the data or target function has a low-dimensional structure, which implies that RePU networks can mitigate the curse of dimensionality.

-

2.

We study the statistical learning theory of deep score matching estimator (DSME) using RePU networks. We establish non-asymptotic prediction error bounds for DSME under the assumption that the target score is smooth. We show that DSME can mitigate the curse of dimensionality if the data has low-dimensional support.

-

3.

We propose a penalized deep isotonic regression (PDIR) approach using RePU networks, which encourages the partial derivatives of the estimated regression function to be nonnegative. We establish non-asymptotic excess risk bounds for PDIR under the assumption that the target regression function is smooth. Moreover, we show that PDIR achieves the minimax optimal rate of convergence for non-parametric regression. We also show that PDIR can mitigate the curse of dimensionality when data concentrates near a low-dimensional manifold. Furthermore, we show that with tuning parameters tending to zero, PDIR is consistent even when the target function is not isotonic.

The rest of the paper is organized as follows. In Section 2 we study basic properties of RePU neural networks. In Section 3 we establish novel approximation error bounds for approximating smooth functions and their derivatives using RePU networks. In Section 4 we derive non-asymptotic error bounds for DSME. In Section 5 we propose PDIR and establish non-asymptotic bounds for PDIR. In Section 6 we discuss related works. Concluding remarks are given in Section 7. Results from simulation studies, proofs, and technical details are given in the Supplementary Material.

2 Basic properties of RePU neural networks

In this section, we establish some basic properties of RePU networks. We show that the partial derivatives of RePU networks can be represented by RePUs mixed-activated networks. The width, depth, number of neurons, and size of the RePUs mixed-activated network have the same order as those of the original RePU networks. In addition, we derive upper bounds of the complexity of the function class of RePUs mixed-activated networks in terms of pseudo dimension, which leads to an upper bound of the class of partial derivatives of RePU networks.

2.1 RePU activated neural networks

Neural networks with nonlinear activation functions have proven to be a powerful approach for approximating multi-dimensional functions. One of the most commonly used activation functions is the Rectified linear unit (ReLU), defined as , due to its attractive properties in computation and optimization. However, since partial derivatives are involved in our objective function (3) and (7), it is not sensible to use networks with piecewise linear activation functions, such as ReLU and Leaky ReLU. Neural networks activated by Sigmoid and Tanh, are smooth and differentiable but have been falling from favor due to their vanishing gradient problems in optimization. In light of these, we are particularly interested in studying the neural networks activated by RePU, which are non-saturated and differentiable.

In Table 2 below we compare RePU with ReLU and Sigmoid networks in several important aspects. ReLU and RePU activation functions are continuous and non-saturated111An activation function is saturating if ., which do not have “vanishing gradients” as Sigmodal activations (e.g. Sigmoid, Tanh) in training. RePU and Sigmoid are differentiable and can approximate the gradient of a target function, but ReLU activation is not, especially for estimation involving high-order derivatives of a target function.

| Activation | Continuous | Non-saturated | Differentiable | Gradient Estimation |

|---|---|---|---|---|

| ReLU | ✓ | ✓ | ✗ | ✗ |

| Sigmoid | ✓ | ✗ | ✓ | ✓ |

| RePU | ✓ | ✓ | ✓ | ✓ |

We consider the th order Rectified Power units (RePU) activation function for a positive integer The RePU activation function, denoted as , is simply the power of ReLU,

Note that when , the activation function is the Heaviside step function; when , the activation function is the familiar Rectified Linear unit (ReLU); when , the activation functions are called rectified quadratic unit (ReQU) and rectified cubic unit (ReCU) respectively. In this work, we focus on the case with , implying that the RePU activation function has a continuous th continuous derivative.

With a RePU activation function, the network will be smooth and differentiable. The architecture of a RePU activated multilayer perceptron can be expressed as a composition of a series of functions

where is the dimension of the input data, is a RePU activation function (defined for each component of if is a vector), and Here is the width (the number of neurons or computational units) of the -th layer, is a weight matrix, and is the bias vector in the -th linear transformation . The input data is the first layer of the neural network and the output is the last layer. Such a network has hidden layers and layers in total. We use a -vector to describe the width of each layer. The width is defined as the maximum width of hidden layers, i.e., ; the size is defined as the total number of parameters in the network , i.e., ; the number of neurons is defined as the number of computational units in hidden layers, i.e., . Note that the neurons in consecutive layers are connected to each other via weight matrices , .

We use the notation to denote a class of RePU activated multilayer perceptrons with depth , width , number of neurons , size and satisfying and for some , where is the sup-norm of a function .

2.2 Derivatives of RePU networks

An advantage of RePU networks over piece-wise linear activated networks (e.g. ReLU networks) is that RePU networks are differentiable. Thus RePU networks are useful in many estimation problems involving derivative. To establish the learning theory for these problems, we need to study the properties of derivatives of RePU.

Recall that a -hidden layer network activated by th order RePU can be expressed by

Let denote the th linear transformation composited with RePU activation for and let denotes the linear transformation in the last layer. Then by the chain rule, the gradient of the network can be computed by

| (8) |

where denotes the gradient operator used in vector calculus. With a differentiable RePU activation , the gradients in (8) can be exactly computed by activated layers since . In addition, the are already RePU-activated layers. Then, the network gradient can be represented by a network activated by (and possibly for ) according to (8) with a proper architecture. Below, we refer to the neural networks activated by the as Mixed RePUs activated neural networks, i.e., the activation functions in Mixed RePUs network can be for , and for different neurons the activation function can be different.

The following theorem shows that the partial derivatives and the gradient of a RePU neural network indeed can be represented by a Mixed RePUs network with activation functions .

Theorem 1 (Neural networks for partial derivatives)

Let be a class of RePU activated neural networks with depth (number of hidden layer) , width (maximum width of hidden layer) , number of neurons , number of parameters (weights and bias) and satisfying and . Then for any and any , the partial derivative can be implemented by a Mixed RePUs activated multilayer perceptron with depth , width , number of neurons , number of parameters and bound .

Theorem 1 shows that for each , the partial derivative with respect to the -th argument of the function can be exactly computed by a Mixed RePUs network. In addition, by paralleling the networks computing , the whole vector of partial derivatives can be computed by a Mixed RePUs network with depth , width , number of neurons and number of parameters .

Let be the partial derivatives of the functions in with respect to the -th argument. And let denote the class of Mixed RePUs networks in Theorem 1. Then Theorem 1 implies that the class of partial derivative functions is contained in a class of Mixed RePUs networks, i.e., for . This further implies the complexity of can be bounded by that of the class of Mixed RePUs networks .

The complexity of a function class is a key quantity in the analysis of generalization properties. Lower complexity in general implies a smaller generalization gap. The complexity of a function class can be measured in several ways, including Rademacher complexity, covering number, VC dimension, and Pseudo dimension. These measures depict the complexity of a function class differently but are closely related to each other.

In the following, we develop complexity upper bounds for the class of Mixed RePUs network functions. In particular, these bounds lead to the upper bound for the Pseudo dimension of the function class , and that of .

Lemma 2 (Pseudo dimension of Mixed RePUs multilayer perceptrons)

Let be a function class implemented by Mixed RePUs activated multilayer perceptrons with depth , number of neurons (nodes) and size or number of parameters (weights and bias) . Then the Pseudo dimension of satisfies

where denotes the pseudo dimension of a function class

With Theorem 1 and Lemma 2, we can now obtain an upper bound for the complexity of the class of derivatives of RePU neural networks. This facilitates establishing learning theories for statistical methods involving derivatives.

Due to the symmetry among the arguments of the input of networks in , the concerned complexities for are generally the same. For notational simplicity, we use

in the main context to denote the quantities of complexities such as pseudo dimension, e.g., we use instead of for .

3 Approximation power of RePU neural networks

In this section, we establish error bounds for using RePU networks to simultaneously approximate smooth functions and their derivatives.

We show that RePU neural networks, with an appropriate architecture, can represent multivariate polynomials with no error and thus can simultaneously approximate multivariate differentiable functions and their derivatives. Moreover, we show that the RePU neural network can mitigate the “curse of dimensionality” when the domain of the target function concentrates in a neighborhood of a low-dimensional manifold.

In the studies of ReLU network approximation properties (Yarotsky, 2017, 2018; Shen et al., 2020; Schmidt-Hieber, 2020), the analyses rely on two key facts. First, the ReLU activation function can be used to construct continuous, piecewise linear bump functions with compact support, which forms a partition of unity of the domain. Second, deep ReLU networks can approximate the square function to any error tolerance, provided the network is large enough. Based on these facts, the ReLU network can compute Taylor’s expansion to approximate smooth functions. However, due to the piecewise linear nature of ReLU, the approximation is restricted to the target function itself rather than its derivative. In other words, the error in approximation by ReLU networks is quantified using the norm, where or . On the other hand, norms such as Sobolev, Hölder, or others can indicate the approximation of derivatives. Gühring and Raslan (2021) extended the results by showing that the network activated by a general smooth function can approximate the partition of unity and polynomial functions, and obtain the approximation rate for smooth functions in the Sobolev norm which implies approximation of the target function and its derivatives. RePU-activated networks have been shown to represent splines (Duan et al., 2021; Belomestny et al., 2022), thus they can approximate smooth functions and their derivatives based on the approximation power of splines.

RePU networks can also represent polynomials efficiently and accurately. This fact motivated us to derive our approximation results for RePU networks based on their representational power on polynomials. To construct RePU networks representing polynomials, our basic idea is to express basic operators as one-hidden-layer RePU networks and then compute polynomials by combining and composing these building blocks. For univariate input , the identity map , linear transformation , and square map can all be represented by one-hidden-layer RePU networks with only a few nodes. The multiplication operator can also be realized by a one-hidden-layer RePU network. Then univariate polynomials of degree can be computed by a RePU network with a proper composited construction based on Horner’s method (also known as Qin Jiushao’s algorithm) (Horner, 1819). Further, a multivariate polynomial can be viewed as the product of univariate polynomials, then a RePU network with a suitable architecture can represent multivariate polynomials. Alternatively, as mentioned in Mhaskar (1993); Chui and Li (1993), any polynomial in variables with total degree not exceeding can be written as a linear combination of quantities of the form where denotes the combinatorial number and denotes the linear combination of variables . Given this fact, RePU networks can also be shown to represent polynomials based on proper construction.

Theorem 3 (Representation of Polynomials by RePU networks)

For any non-negative integer and positive integer , if is a polynomial of variables with total degree , then can be exactly computed with no error by RePU activated neural network with

-

(1)

hidden layers, number of neurons, number of parameters and width ;

-

(2)

hidden layers, number of neurons, number of parameters and width ,

where denotes the smallest integer no less than and denotes the factorial of integer .

Theorem 3 establishes the capability of RePU networks to accurately represent multivariate polynomials of order through appropriate architectural designs. The theorem introduces two distinct network architectures, based on Horner’s method (Horner, 1819) and Mhaskar’s method (Mhaskar, 1993) respectively, that can achieve this representation. The RePU network constructed using Horner’s method exhibits a larger number of hidden layers but fewer neurons and parameters compared to the network constructed using Mhaskar’s method. Neither construction is universally superior, and the choice of construction depends on the relationship between the dimension and the order , allowing for potential efficiency gains in specific scenarios.

Importantly, RePU neural networks offer advantages over ReLU networks in approximating polynomials. For any positive integers and , ReLU networks with a width of approximately and a depth of can only approximate, but not accurately represent, -variate polynomials of degree with an accuracy of approximately (Shen et al., 2020; Hon and Yang, 2022). Furthermore, the approximation capabilities of ReLU networks for polynomials are generally limited to bounded regions, whereas RePU networks can precisely compute polynomials over the entire space.

Theorem 3 introduces novel findings that distinguish it from existing research (Li et al., 2019, 2020) in several aspects. First, it provides an explicit formulation of the RePU network’s depth, width, number of neurons, and parameters in terms of the target polynomial’s order and the input dimension , thereby facilitating practical implementation. Second, the theorem presents Architecture (2), which outperforms previous studies in the sense that it requires fewer hidden layers for polynomial representation. Prior works, such as Li et al. (2019, 2020), required RePU networks with hidden layers, along with neurons and parameters, to represent -variate polynomials of degree on . However, Architecture (2) in Theorem 3 achieves a comparable number of neurons and parameters with only hidden layers. Importantly, the number of hidden layers depends only on the polynomial’s degree and is independent of the input dimension . This improvement bears particular significance in dealing with high-dimensional input spaces that is commonly encountered in machine-learning tasks. Lastly, Architecture (1) in Theorem 3 contributes an additional RePU network construction based on Horner’s method (Horner, 1819), complementing existing results based solely on Mhaskar’s method (Mhaskar, 1993) and providing an alternative choice for polynomial representation.

By leveraging the approximation power of multivariate polynomials, we can derive error bounds for approximating general multivariate smooth functions using RePU neural networks. Previously, approximation properties of RePU networks have been studied for target functions in different spaces, e.g. Sobolev space (Li et al., 2020, 2019; Gühring and Raslan, 2021), spectral Barron space (Siegel and Xu, 2022), Besov space (Ali and Nouy, 2021) and Hölder space (Belomestny et al., 2022). Here we focus on the approximation of multivariate smooth functions and their derivatives in space for defined in Definition 4.

Definition 4 (Multivariate differentiable class )

A function defined on a subset of is said to be in class on for a positive integer , if all partial derivatives

exist and are continuous on , for every non-negative integers, such that . In addition, we define the norm of over by

where for any vector .

Theorem 5

Let be a real-valued function defined on a compact set belonging to class for . For any , there exists a RePU activated neural network with its depth , width , number of neurons and size specified as one of the following architectures:

-

(1)

-

(2)

such that for each multi-index satisfying , we have

where is a positive constant depending only on and the diameter of .

Theorem 5 gives a simultaneous approximation result for RePU network approximation since the error is measured in norm. It improves over existing results focusing on norms, which cannot guarantee the approximation of derivatives of the target function (Li et al., 2019, 2020). It is known that shallow neural networks with smooth activation can simultaneously approximate a smooth function and its derivatives (Xu and Cao, 2005). However, the simultaneous approximation of RePU neural networks with respect to norms involving derivatives is still an ongoing research area (Gühring and Raslan, 2021; Duan et al., 2021; Belomestny et al., 2022). For solving partial differential equations in a Sobolev space with smoothness order 2, Duan et al. (2021) showed that ReQU neural networks can simultaneously approximate the target function and its derivative in Sobolev norm . To achieve an accuracy of , the ReQU networks require layers and neurons. Later Belomestny et al. (2022) proved that -Hölder smooth functions () and their derivatives up to order can be simultaneously approximated with accuracy in Hölder norm by a ReQU network with width , layers, and nonzero parameters. Gühring and Raslan (2021) derived simultaneous approximation results for neural networks with general smooth activation functions. Based on Gühring and Raslan (2021), a RePU neural network with constant layer and nonzero parameters can achieve an approximation accuracy measured in Sobolev norm up to th order derivative for a -dimensional Sobolev function with smoothness .

To achieve the approximation accuracy , our Theorem 5 demonstrates that a RePU network requires a comparable number of neurons, namely , to simultaneously approximate the target function up to its -th order derivatives. Our result differs from existing studies in several ways. First, in contrast to Li et al. (2019, 2020), Theorem 5 derives simultaneous approximation results for RePU networks. Second, Theorem 5 holds for general RePU networks (), including the ReQU network () studied in Duan et al. (2021) and Belomestny et al. (2022). Third, Theorem 5 explicitly specifies the network architecture to facilitate the network design in practice, whereas existing studies determine network architectures solely in terms of orders (Li et al., 2019, 2020; Gühring and Raslan, 2021). In addition, as discussed in the next subsection, Theorem 5 can be further improved and adapted to the low-dimensional structured data, which highlights the RePU networks’ capability to mitigate the curse of dimensionality in estimation problems. We again refer to Table 1 for a summary comparison of our work with the existing results.

Remark 6

Theorem 3 is based on the representation power of RePU networks on polynomials as in Li et al. (2019, 2020) and Ali and Nouy (2021). Other existing works derived approximation results based on the representation of the ReQU neural network on B-splines or tensor-product splines (Duan et al., 2021; Siegel and Xu, 2022; Belomestny et al., 2022).

3.1 Circumventing the curse of dimensionality

In Theorem 5, to achieve an approximate error , the RePU neural network should have many parameters. The number of parameters grows polynomially in the desired approximation accuracy with an exponent depending on the dimension . In statistical and machine learning tasks, such an approximation result can make the estimation suffer from the curse of dimensionality. In other words, when the dimension of the input data is large, the convergence rate becomes extremely slow. Fortunately, high-dimensional data often have approximate low-dimensional latent structures in many applications, such as computer vision and natural language processing (Belkin and Niyogi, 2003; Hoffmann et al., 2009; Fefferman et al., 2016). It has been shown that these low-dimensional structures can help mitigate the curse of dimensionality (improve the convergence rate) using ReLU networks (Schmidt-Hieber, 2019; Shen et al., 2020; Jiao et al., 2023; Chen et al., 2022). We consider an assumption of approximate low-dimensional support of data distribution (Jiao et al., 2023), and show that the RePU network can also mitigate the curse of dimensionality under this assumption.

Assumption 7

The predictor is supported on a -neighborhood of a compact -dimensional Riemannian submanifold , where

and the Riemannian submanifold has condition number , volume and geodesic covering regularity .

We assume that the high-dimensional data concentrates in a -neighborhood of a low-dimensional manifold. This assumption serves as a relaxation from the stringent requirements imposed by exact manifold assumptions (Chen et al., 2019; Schmidt-Hieber, 2019).

With a well-conditioned manifold , we show that RePU networks possess the capability to adaptively embed the data into a lower-dimensional space while approximately preserving distances. The dimensionality of the embedded representation, as well as the quality of the embedding in terms of its ability to preserve distances, are contingent upon the properties of the approximate manifold, including its radius , condition number , volume , and geodesic covering regularity . For in-depth definitions of these properties, we direct the interested reader to Baraniuk and Wakin (2009).

Theorem 8 (Improved approximation results)

Suppose that Assumption 7 holds. Let be a real-valued function defined on belonging to class for . Let be an integer satisfying for some and a universal constant . Then for any , there exists a RePU activated neural network with its depth , width , number of neurons and size as one of the following architectures:

-

(1)

-

(2)

such that for each multi-index satisfying ,

for with a universal constant , where is a positive constant depending only on and .

When data has a low-dimensional structure, Theorem 8 indicates that the RePU network can approximate smooth function up to th order derivatives with an accuracy using neurons. Here the effective dimension scales linearly in the intrinsic manifold dimension and logarithmically in the ambient dimension and the features of the manifold. Compared to Theorem 5, the effective dimensionality in Theorem 8 is instead of , which could be a significant improvement especially when the ambient dimension of data is large but the intrinsic dimension is small.

Theorem 8 shows that RePU neural networks are an effective tool for analyzing data that lies in a neighborhood of a low-dimensional manifold, indicating their potential to mitigate the curse of dimensionality. In particular, this property makes them well-suited to scenarios where the ambient dimension of the data is high, but its intrinsic dimension is low. To the best of our knowledge, our Theorem 8 is the first result of the ability of RePU networks to mitigate the curse of dimensionality. A highlight of the comparison between our result and the existing recent results of Li et al. (2019), Li et al. (2020), Duan et al. (2021), Abdeljawad and Grohs (2022) and Belomestny et al. (2022) is given in Table 1.

4 Deep score estimation

Deep neural networks have revolutionized many areas of statistics and machine learning, and one of the important applications is score function estimation using the score matching method (Hyvärinen and Dayan, 2005). Score-based generative models (Song et al., 2021), which learn to generate samples by estimating the gradient of the log-density function, can benefit significantly from deep neural networks. Using a deep neural network allows for more expressive and flexible models, which can capture complex patterns and dependencies in the data. This is especially important for high-dimensional data, where traditional methods may struggle to capture all of the relevant features. By leveraging the power of deep neural networks, score-based generative models can achieve state-of-the-art results on a wide range of tasks, from image generation to natural language processing. The use of deep neural networks in score function estimation represents a major advance in the field of generative modeling, with the potential to unlock new levels of creativity and innovation. We apply our developed theories of RePU networks to explore the statistical learning theories of deep score matching estimation (DSME).

Let be a probability density function supported on and be its score function where is the vector differential operator with respect to the input . The goal of deep score estimation is to model and estimate by a function based on samples from such that . Here belongs to a class of deep neural networks.

It worths noting that the neural network used in deep score estimation is a vector-valued function. For a -dimensional input , the output is also -dimensional. We let denote the Jacobian matrix of with its entry being . With a slight abuse of notation, we denote by a class of RePU activated multilayer perceptrons with parameter , depth , width , size , number of neurons and satisfying: (i) for some where is the sup-norm of a vector-valued function over its domain ; (ii) , , for some where is the -th diagonal entry (in the -th row and -th column) of . Here the parameters and of can depend on the sample size , but we omit the dependence in their notations. In addition, we extend the definition of smooth multivariate function. We say a multivariate function belongs to if belongs to for each . Correspondingly, we define .

4.1 Non-asymptotic error bounds for DSME

The development of theory for predicting the performance of score estimator using deep neural networks has been a crucial research area in recent times. Theoretical upper bounds for prediction errors have become increasingly important in understanding the limitations and potential of these models.

We are interested in establishing non-asymptoic error bounds for DSME, which is obtained by minimizing the expected squared distance over the class of functions . However, this objective is computationally infeasible because the explicit form of is unknown. Under proper conditions, the objective function has an equivalent formulation which is computationally feasible.

Assumption 9

The density of the data is differentiable. The expectation and are finite for any . And for any when .

Under Assumption 9, the population objective of score matching is equivalent to given in (3). With a finite sample , the empirical version of is

Then, DSME is defined by

| (9) |

which is the empirical risk minimizer over the class of RePU neural networks .

Our target is to give upper bounds of the excess risk of , which is defined as

To obtain an upper bound of , we decompose it into two parts of error, i.e. stochastic error and approximation error, and then derive upper bounds for them respectively. Let , then

where we call the stochastic error and the approximation error.

It is important to highlight that the analysis of stochastic error and approximation error for DSME estimation are unconventional. On one hand, since holds for any , the approximation error can be obtained by examining the squared distance approximation in the norm. Thus, Theorem 5 provides a bound for the approximation error . On the other hand, the empirical squared distance loss is not equivalent to the surrogate loss . In other words, the minimizer of may not be the same as the minimizer of the empirical squared distance over . Consequently, the stochastic error can only be analyzed based on the formulation of rather than the squared loss. This implies that the stochastic error is dependent on the complexities of the RePU networks class , as well as their derivatives . Based on Theorem 1, Lemma 2 and the empirical process theory, it is expected that the stochastic error will be bounded by . Finally, by combining these two error bounds, we obtain the following bounds for the mean squared error of the empirical risk minimizer defined in (9).

Lemma 10

Suppose that Assumption 9 hold and the target score function belongs to for . For any positive integer , let be the class of RePU activated neural networks with depth , width , number of neurons and size , and suppose that and . Then the empirical risk minimizer defined in (9) satisfies

| (10) |

with

where the expectation is taken with respect to and , is a universal constant, and is a constant depending only on and the diameter of .

Remark 11

Lemma 10 established a bound on the mean squared error of the empirical risk minimizer. Specifically, this error is shown to be bounded by the sum of the stochastic error, denoted as , and the approximation error, denoted as . On one hand, the stochastic error exhibits a decreasing trend with respect to the sample size , but an increasing trend with respect to the network size as determined by . On the other hand, the approximation error decreases in the network size as determined by . To attain a fast convergence rate with respect to the sample size , it is necessary to carefully balance these two errors by selecting an appropriate based on a given sample size .

Remark 12

In Lemma 10, the error bounds are stated in terms of the integer . These error bounds can also be expressed in terms of the number of neurons and size , given that we have specified the relationships between these parameters. Specifically, and size , which relate the number of neurons and size of the network to and the dimensionality of .

Lemma 10 leads to the following error bound for the score-matching estimator.

Theorem 13 (Non-asymptotic excess risk bounds)

In Theorem 13, the convergence rate in the error bound is up to a logarithmic factor. While this rate is slightly slower than the optimal minimax rate for nonparametric regression (Stone, 1982), it remains reasonable considering the nature of score matching estimation. Score matching estimation involves derivatives and the target score function value is not directly observable, which deviates from the traditional nonparametric regression in Stone (1982) where both predictors and responses are observed and no derivatives are involved. However, the rate can be extremely slow for large , suffering from the curse of dimensionality. To address this issue, we derive error bounds under an approximate lower-dimensional support assumption as stated in Assumption 7, to mitigate the curse of dimensionality.

Lemma 14

Suppose that Assumptions 7, 9 hold and the target score function belongs to for some . Let be an integer with for some and universal constant . For any positive integer , let be the class of RePU activated neural networks with depth , width , number of neurons and size . Suppose that and . Then the empirical risk minimizer defined in (9) satisfies

| (11) |

with

for , where are universal constants and is a constant depending only on and .

With an approximate low-dimensional support assumption, Lemma 14 implies that a faster convergence rate for deep score estimator can be achieved.

5 Deep isotonic regression

As another application of our results on RePU-activated networks, we propose PDIR, a penalized deep isotonic regression approach using RePU networks and a penalty function based on the derivatives of the networks to enforce monotonicity. We also establish the error bounds for PDIR.

Suppose we have a random sample from model (4). Recall is the proposed population objective function for isotonic regression defined in (7). We consider the empirical counterpart of the objective function :

| (12) |

A simple choice of the penalty function is . In general, we can take for a function with . We focus on Lipschitz penalty functions as defined below.

Assumption 16 (Lipschitz penalty function)

The penalty function satisfies if . Besides, is -Lipschitz, i.e., for any .

Let the empirical risk minimizer of deep isotonic regression denoted by

| (13) |

where is a class of functions computed by deep neural networks which may depend on and can be set to depend on the sample size . We refer to as a penalized deep isotonic regression (PDIR) estimator.

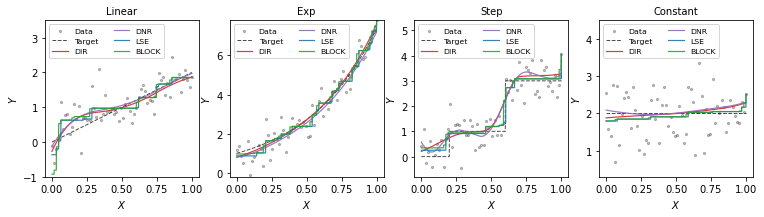

An illustration of PDIR is presented in Figure 1. In all subfigures, the data are depicted as grey dots, the underlying regression functions are plotted as solid black curves and PDIR estimates with different levels of penalty parameter are plotted as colored curves. In the top two figures, data are generated from models with monotonic regression functions. In the bottom left figure, the target function is a constant. In the bottom right figure, the model is misspecified, in which the underlying regression function is not monotonic. Small values of can lead to non-monotonic and reasonable estimates, suggesting that PDIR is robust against model misspecification. We have conducted more numerical experiments to evaluate the performance of PDIR, which indicates that PDIR tends to perform better than the existing isotonic regression methods considered in the comparison. The results are given in the Supplementary Material.

5.1 Non-asymptotic error bounds for PDIR

In this section, we state our main results on the bounds for the excess risk of the PDIR estimator defined in (13). Recall the definition of in (7). For notational simplicity, we write

| (14) |

The target function is the minimizer of risk over measurable functions, i.e., In isotonic regression, we assume that In addition, for any function , under the regression model (4), we have

We first state the conditions needed for establishing the excess risk bounds.

Assumption 17

(i) The target regression function defined in (4) is coordinate-wisely nondecreasing on , i.e., if for . (ii) The errors are independent and identically distributed noise variables with and , and ’s are independent of .

Assumption 17 includes basic model assumptions on the errors and the monotonic target function . In addition, we assume that the target function belongs to the class .

Next, we state the following basic lemma for bounding the excess risk.

Lemma 18 (Excess risk decomposition)

For the empirical risk minimizer defined in (13), its excess risk can be upper bounded by

The upper bound for the excess risk can be decomposed into two components: the stochastic error, given by the expected value of , and the approximation error, defined as . To establish a bound for the stochastic error, it is necessary to consider the complexities of both RePU networks and their derivatives, which have been investigated in our Theorem 1 and Lemma 2. To establish a bound for the approximation error , we rely on the simultaneous approximation results in Theorem 5.

Remark 19

The error decomposition in Lemma 18 differs from the canonical decomposition for score estimation in section 4.1, particularly pertaining to the stochastic error component. However, utilizing the decomposition in Lemma 18 enables us to derive a superior stochastic error bound by leveraging the properties of the PDIR loss function. A similar decomposition for least squares loss without penalization can be found in Jiao et al. (2023).

Lemma 20

Suppose that Assumptions 16, 17 hold and the target function defined in (4) belongs to for some . For any positive integer , let be the class of RePU activated neural networks with depth , width , number of neurons and size . Suppose that and . Then for , the excess risk of PDIR defined in (13) satisfies

| (15) | ||||

| (16) |

with

where the expectation is taken with respect to and , is the mean of the tuning parameters, is a universal constant and is a positive constant depending only on and the diameter of the support .

Lemma 20 establishes two error bounds for the PDIR estimator : (15) for the mean squared error between and the target , and (16) for controlling the non-monotonicity of via its partial derivatives with respect to a measure defined in terms of . Both bounds (15) and (16) are encompasses both stochastic and approximation errors. Specifically, the stochastic error is of order , which represents an improvement over the canonical error bound of , up to logarithmic factors in . This advancement is owing to the decomposition in Lemma 18 and the properties of PDIR loss function, which is different from traditional decomposition techniques.

Remark 21

In (16), the estimator is encouraged to exhibit monotonicity, as the expected monotonicity penalty on the estimator is bounded. Notably, when , the estimator is almost surely monotonic in its th argument with respect to the probability measure of . Based on (16), guarantees of the estimator’s monotonicity with respect to a single argument can be obtained. Specifically, for those where , we have , which provides a guarantee of the estimator’s monotonicity with respect to its th argument. Moreover, larger values of lead to smaller bounds, which is consistent with the intuition that larger values of better promote monotonicity of with respect to its th argument.

Theorem 22 (Non-asymptotic excess risk bounds)

By Theorem 22, our proposed PDIR estimator obtained with proper network architecture and tuning parameter achieves the minimax optimal rate up to logarithms for the nonparametric regression (Stone, 1982). Meanwhile, the PDIR estimator is guaranteed to be monotonic as measured by at a rate of up to a logarithmic factor.

Remark 23

In Theorem 22, we choose to attain the optimal rate of the expected mean squared error of up to a logarithmic factor. Additionally, we guarantee that the estimator to be monotonic at a rate of up to a logarithmic factor as measured by . The choice of is not unique for ensuring the consistency of . In fact, any choice of will result in a consistent . However, larger values of lead to a slower convergence rate of the expected mean squared error, but better guarantee for the monotonicity of .

The smoothness of the target function is unknown in practice and how to determine the smoothness of an unknown function is an important but nontrivial problem. Note that the convergence rate suffers from the curse of dimensionality since it can be extremely slow if is large.

High-dimensional data have low-dimensional latent structures in many applications. Below we show that PDIR can mitigate the curse of dimensionality if the data distribution is supported on an approximate low-dimensional manifold.

Lemma 24

Suppose that Assumptions 7, 16, 17 hold and the target function defined in (4) belongs to for some . Let be an integer with for some and universal constant . For any positive integer , let be the class of RePU activated neural networks with depth , width , number of neurons and size . Suppose that and . Then for , the excess risk of the PDIR estimator defined in (13) satisfies

| (17) | ||||

| (18) |

with

for where are universal constants and is a constant depending only on and the diameter of the support .

Based on Lemma 24, we obtain the following result.

Theorem 25 (Improved non-asymptotic excess risk bounds)

5.2 PDIR under model misspecification

In this subsection, we investigate PDIR under model misspecification when Assumption 17 (i) is not satisfied, meaning that the underlying regression function may not be monotonic.

Let be a random sample from model (4). Recall that the penalized risk of the deep isotonic regression is given by

If is not monotonic, the penalty is non-zero, and consequently, is not a minimizer of the risk when . Intuitively, the deep isotonic regression estimator will exhibit a bias towards the target due to the additional penalty terms in the risk. However, it is reasonable to expect that the estimator will have a smaller bias if are small. In the following lemma, we establish a non-asymptotic upper bound for our proposed deep isotonic regression estimator while adapting to model misspecification.

Lemma 26

Suppose that Assumptions 16 and 17 (ii) hold and the target function defined in (4) belongs to for some . For any positive integer let be the class of RePU activated neural networks with depth , width , number of neurons and size . Suppose that and . Then for , the excess risk of the PDIR estimator defined in (13) satisfies

| (19) | ||||

| (20) |

with

where the expectation is taken with respect to and , is the mean of the tuning parameters, is a universal constant and is a positive constant depending only on and the diameter of the support .

Lemma 26 is a generalized version of Lemma 20 for PDIR, as it holds regardless of whether the target function is isotonic or not. In Lemma 26, the expected mean squared error of the PDIR estimator can be bounded by three errors: stochastic error , approximation error , and misspecification error , without the monotonicity assumption. Compared with Lemma 20 with the monotonicity assumption, the approximation error is identical, the stochastic error is worse in terms of order, and the misspecification error appears as an extra term in the inequality. With an appropriate setup of for the neural network architecture with respect to the sample size , the stochastic error and approximation error can converge to zero, albeit at a slower rate than that in Theorem 22. However, the misspecification error remains constant for fixed tuning parameters . Thus, we can let the tuning parameters converge to zero to achieve consistency.

Remark 27

It is worth noting that if the target function is isotonic, then the misspecification error vanishes, leading the scenario to that of isotonic regression. However, the convergence rate based on Lemma 26 is slower than that in Lemma 20. The reason is that Lemma 26 is general and holds without prior knowledge of the monotonicity of the target function. If knowledge is available about the non-isotonicity of the th argument of the target function , setting the corresponding decreases the misspecification error and helps improve the upper bound.

Theorem 28 (Non-asymptotic excess risk bounds)

According to Lemma 26, under the misspecification model, the prediction error of PDIR attains its minimum when for , and the misspecification error vanishes. Consequently, the optimal convergence rate with respect to can be achieved by setting and for . It is worth noting that the prediction error of PDIR can achieve this rate as long as .

Remark 29

According to Theorem 28, there is no unique choice of that ensures the consistency of PDIR. Consistency is guaranteed even under a misspecified model when the for tend to zero as . Additionally, selecting a smaller value of provides a better upper bound for (19), and an optimal rate up to logarithms of can be achieved with a sufficiently small . An example demonstrating the effects of tuning parameters is visualized in the last subfigure of Figure 1.

6 Related works

In this section, we briefly review the papers in the existing literature that are most related to the present work.

6.1 ReLU and RePU networks

Deep learning has achieved impressive success in a wide range of applications. A fundamental reason for these successes is the ability of deep neural networks to approximate high-dimensional functions and extract effective data representations. There has been much effort devoted to studying the approximation properties of deep neural networks in recent years. Many interesting results have been obtained concerning the approximation power of deep neural networks for multivariate functions. Examples include Chen et al. (2019), Schmidt-Hieber (2020), Jiao et al. (2023). These works focused on the power of ReLU-activated neural networks for approximating various types of smooth functions.

For the approximation of the square function by ReLU networks, Yarotsky (2017) first used “sawtooth” functions, which achieves an error rate of with width 6 and depth for any positive integer . General construction of ReLU networks for approximating a square function can achieve an error with width and depth for any positive integers (Lu et al., 2021b). Based on this basic fact, the ReLU networks approximating multiplication and polynomials can be constructed correspondingly. However, the network complexity in terms of network size (depth and width) for a ReLU network to achieve precise approximation can be large compared to that of a RePU network since a RePU network can exactly compute polynomials with fewer layers and neurons.

The approximation results of the RePU network are generally obtained by converting splines or polynomials into RePU networks and making use of the approximation results of splines and polynomials. The universality of sigmoidal deep neural networks has been studied in the pioneering works (Mhaskar, 1993; Chui et al., 1994). In addition, the approximation properties of shallow Rectified Power Unit (RePU) activated network were studied in Klusowski and Barron (2018); Siegel and Xu (2022). The approximation rates of deep RePU neural networks on target functions in different spaces have also been explored, including Besov spaces (Ali and Nouy, 2021), Sobolev spaces (Li et al., 2019, 2020; Duan et al., 2021; Abdeljawad and Grohs, 2022) , and Hölder space (Belomestny et al., 2022). Most of the existing results on the expressiveness of neural networks measure the quality of approximation with respect to where norm. However, fewer papers have studied the approximation of derivatives of smooth functions (Duan et al., 2021; Gühring and Raslan, 2021; Belomestny et al., 2022).

6.2 Related works on score estimation

Learning a probability distribution from data is a fundamental task in statistics and machine learning for efficient generation of new samples from the learned distribution. Likelihood-based models approach this problem by directly learning the probability density function, but they have several limitations, such as an intractable normalizing constant and approximate maximum likelihood training.

One alternative approach to circumvent these limitations is to model the score function (Liu et al., 2016), which is the gradient of the logarithm of the probability density function. Score-based models can be learned using a variety of methods, including parametric score matching methods (Hyvärinen and Dayan, 2005; Sasaki et al., 2014), autoencoders as its denoising variants (Vincent, 2011), sliced score matching (Song et al., 2020), nonparametric score matching (Sriperumbudur et al., 2017; Sutherland et al., 2018), and kernel estimators based on Stein’s methods (Li and Turner, 2017; Shi et al., 2018). These score estimators have been applied in many research problems, such as gradient flow and optimal transport methods (Gao et al., 2019, 2022), gradient-free adaptive MCMC (Strathmann et al., 2015), learning implicit models (Warde-Farley and Bengio, 2016), inverse problems (Jalal et al., 2021). Score-based generative learning models, especially those using deep neural networks, have achieved state-of-the-art performance in many downstream tasks and applications, including image generation (Song and Ermon, 2019, 2020; Song et al., 2021; Ho et al., 2020; Dhariwal and Nichol, 2021; Ho et al., 2022), music generation (Mittal et al., 2021), and audio synthesis (Chen et al., 2020; Kong et al., 2020; Popov et al., 2021).

However, there is a lack of theoretical understanding of nonparametric score estimation using deep neural networks. The existing studies mainly considered kernel based methods. Zhou et al. (2020) studied regularized nonparametric score estimators using vector-valued reproducing kernel Hilbert space, which connects the kernel exponential family estimator (Sriperumbudur et al., 2017) with the score estimator based on Stein’s method (Li and Turner, 2017; Shi et al., 2018). Consistency and convergence rates of these kernel-based score estimator are also established under the correctly-specified model assumption in Zhou et al. (2020). For denoising autoencoders, Block et al. (2020) obtained generalization bounds for general nonparametric estimators also under the correctly-specified model assumption.

For sore-based learning using deep neural networks, the main difficulty for establishing the theoretical foundation is the lack of knowledge of differentiable neural networks since the derivatives of neural networks are involved in the estimation of score function. Previously, the non-differentiable Rectified Linear Unit (ReLU) activated deep neural network has received much attention due to its attractive properties in computation and optimization, and has been extensively studied in terms of its complexity (Bartlett et al., 1998; Anthony and Bartlett, 1999; Bartlett et al., 2019) and approximation power (Yarotsky, 2017; Petersen and Voigtlaender, 2018; Shen et al., 2020; Lu et al., 2021a; Jiao et al., 2023), based on which statistical learning theories for deep non-parametric estimations were established (Bauer and Kohler, 2019; Schmidt-Hieber, 2020; Jiao et al., 2023). For deep neural networks with differentiable activation functions, such as ReQU and RepU, the simultaneous approximation power on a smooth function and its derivatives were studied recently (Ali and Nouy, 2021; Belomestny et al., 2022; Siegel and Xu, 2022; Hon and Yang, 2022), but the statistical properties of differentiable networks are still largely unknown. To the best of our knowledge, the statistical learning theory has only been investigated for ReQU networks in Shen et al. (2022), where they have developed network representation of the derivatives of ReQU networks and studied their complexity.

6.3 Related works on isotonic regression

There is a rich and extensive literature on univariate isotonic regression, which is too vast to be adequately summarized here. So we refer to the books Barlow et al. (1972) and Robertson et al. (1988) for a systematic treatment of this topic and review of earlier works. For more recent developments on the error analysis of nonparametric isotonic regression, we refer to Durot (2002); Zhang (2002); Durot (2007, 2008); Groeneboom and Jongbloed (2014); Chatterjee et al. (2015), and Yang and Barber (2019), among others.

The least squares isotonic regression estimators under fixed design were extensively studied. With a fixed design at fixed points , the risk of the least squares estimator is defined by where the least squares estimator is defined by

| (21) |

The problem can be restated in terms of isotonic vector estimation on directed acyclic graphs. Specifically, the design points induce a directed acyclic graph with vertices and edges . The class of isotonic vectors on is defined by

Then the least squares estimation in (21) becomes that of searching for a target vector . The least squares estimator is actually the projection of onto the polyhedral convex cone (Han et al., 2019).

For univariate isotonic least squares regression with a bounded total variation target function , Zhang (2002) obtained sharp upper bounds for risk of the least squares estimator for . Shape-constrained estimators were also considered in different settings where automatic rate-adaptation phenomenon happens (Chatterjee et al., 2015; Gao et al., 2017; Bellec, 2018). We also refer to Kim et al. (2018); Chatterjee and Lafferty (2019) for other examples of adaptation in univariate shape-constrained problems.

Error analysis for the least squares estimator in multivariate isotonic regression is more difficult. For two-dimensional isotonic regression, where with and Gaussian noise, Chatterjee et al. (2018) considered the fixed lattice design case and obtained sharp error bounds. Han et al. (2019) extended the results of Chatterjee et al. (2018) to the case with , both from a worst-case perspective and an adaptation point of view. They also proved parallel results for random designs assuming the density of the covariate is bounded away from zero and infinity on the support.

Deng and Zhang (2020) considered a class of block estimators for multivariate isotonic regression in involving rectangular upper and lower sets under, which is defined as any estimator in-between the following max-min and min-max estimator. Under a -th moment condition on the noise, they developed risk bounds for such estimators for isotonic regression on graphs. Furthermore, the block estimator possesses an oracle property in variable selection: when depends on only an unknown set of variables, the risk of the block estimator automatically achieves the minimax rate up to a logarithmic factor based on the knowledge of the set of the variables.

Our proposed method and theoretical results are different from those in the aforementioned papers in several aspects. First, the resulting estimates from our method are smooth instead of piecewise constant as those based on the existing methods. Second, our method can mitigate the curse of dimensionality under an approximate low-dimensional manifold support assumption, which is weaker than the exact low-dimensional space assumption in the existing work. Finally, our method possesses a robustness property against model specification in the sense that it still yields consistent estimators if the monotonicity assumption is not strictly satisfied. However, the properties of the existing isotonic regression methods under model misspecification are unclear.

7 Conclusions

In this work, motivated by the problems of score estimation and isotonic regression, we have studied the properties of RePU-activated neural networks, including a novel generalization result for the derivatives of RePU networks and improved approximation error bounds for RePU networks with approximate low-dimensional structures. We have established non-asymptotic excess risk bounds for DSME, a deep score matching estimator; and PDIR, our proposed penalized deep isotonic regression method.

Our findings highlight the potential of RePU-activated neural networks in addressing challenging problems in machine learning and statistics. The ability to accurately represent the partial derivatives of RePU networks with RePUs mixed-activated networks is a valuable tool in many applications that require the use of neural network derivatives. Moreover, the improved approximation error bounds for RePU networks with low-dimensional structures demonstrate their potential to mitigate the curse of dimensionality in high-dimensional settings.

Future work can investigate further the properties of RePU networks, such as their stability, robustness, and interpretability. It would also be interesting to explore the use of RePU-activated neural networks in other applications, such as nonparametric variable selection and more general shape-constrained estimation problems. Additionally, our work can be extended to other smooth activation functions beyond RePUs, such as Gaussian error linear unit and scaled exponential linear unit, and study their derivatives and approximation properties.

Appendix

This appendix contains results from simulation studies to evaluate the performance of PDIR and proofs and supporting lemmas for the theoretical results stated in the paper.

Appendix A Numerical studies

In this section, we conduct simulation studies to evaluate the performance of PDIR and compare it with the existing isotonic regression methods. The methods included in the simulation include

-

•

The isotonic least squares estimator, denoted by Isotonic LSE, is defined as the minimizer of the mean square error on the training data subject to the monotone constraint. As the squared loss only involves the values at design points, then the isotonic LSE (with no more than linear constraints) can be computed with quadratic programming or using convex optimization algorithms (Dykstra, 1983; Kyng et al., 2015; Stout, 2015). Algorithmically, this turns out to be mappable to a network flow problem (Picard, 1976; Spouge et al., 2003). In our implementation, we compute Isotonic LSE via the Python package multiisotonic222https://github.com/alexfields/multiisotonic.

-

•

The block estimator (Deng and Zhang, 2020), denoted by Block estimator, is defined as any estimator between the block min-max and max-min estimators (Fokianos et al., 2020). In the simulation, we take the Block estimator as the mean of max-min and min-max estimators as suggested in (Deng and Zhang, 2020). The Isotonic LSE is shown to has an explicit mini-max representation on the design points for isotonic regression on graphs in general (Robertson et al., 1988). As in Deng and Zhang (2020), we use brute force which exhaustively calculates means over all blocks and finds the max-min value for each point . The computation cost via brute force is of order .

-

•

Deep isotonic regression estimator as described in Section 5, denoted by PDIR. Here we focus on using RePU activated network with . We implement it in Python via Pytorch and use Adam (Kingma and Ba, 2014) as the optimization algorithm with default learning rate 0.01 and default (coefficients used for computing running averages of gradients and their squares). The tuning parameters are chosen in the way that for .

-

•

Deep nonparametric regression estimator, denoted by DNR, which is actually the PDIR without penalty. The implementation is the same as that of PDIR, but the tuning parameters for .

A.1 Estimation and evaluation

For the proposed PDIR estimator, we set the tuning parameter for across the simulations. For each target function , according to model (4) we generate the training data with sample size and train the Isotonic LSE, Block estimator, PDIR and DNR estimators on . We would mention that the Block estimator has no definition when the input is “outside” the domain of training data , i.e., there exist no such that . In view of this, in our simulation we focus on using the training data with lattice design of the covariates for ease of presentation on the Block estimator. For PDIR and DNR estimators, such fixed lattice design of the covariates are not necessary and the obtained estimators can be smoothly extended to large domains which covers the domain of the training samples.

For each , we also generate the testing data with sample size from the same distribution of the training data. For the proposed method and for each obtained estimator , we calculate the mean squared error (MSE) on the testing data . We calculate the distance between the estimator and the corresponding target function on the testing data by

and we also calculate the distance between the estimator and the target function , i.e.

In the simulation studies, for each data generation model we generate testing data by the lattice points (100 even lattice points for each dimension of the input) where is the dimension of the input. We report the mean squared error, and distances to the target function defined above and their standard deviations over replications under different scenarios. The specific forms of are given in the data generation models below.

A.2 Univariate models

We consider three basic univariate models, including “Linear”, “Exp”, “Step”, “Constant” and “Wave”, which corresponds to different specifications of the target function . The formulae are given below.

-

(a)

Linear :

-

(b)

Exp:

-

(c)

Step:

-

(d)

Constant:

-

(e)

Wave:

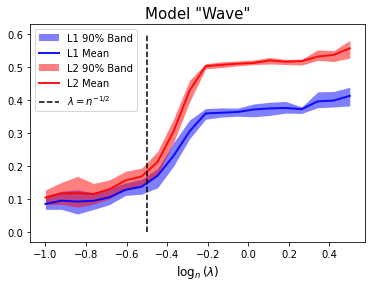

where , and follows normal distribution. We use the linear model as a baseline model in our simulations and expect all the methods perform well under the linear model. The “Step” model is monotonic but not smooth even continuous. The “Constant” is a monotonic but not strictly monotonic model. And the “Wave” is a nonlinear, smooth but non monotonic model. These models are chosen so that we can evaluate the performance of Isotonic LSE, Block estimator PDIR and DNR under different types of models, including the conventional and misspecified cases.

For these models, we use the lattice design for the ease of presentation of Block estimator, where are the lattice points evenly distributed on interval . Figure S2 shows all these univariate data generation models.

Figures S3 shows an instance of the estimated curves for the “Linear”, “Exp”, “Step” and “Constant” models when sample size . In these plots, the training data is depicted as grey dots. The target functions are depicted as dashed curves in black, and the estimated functions are represented by solid curves with different colors. The summary statistics are presented in Table S3. Compared with the piece-wise constant estimates of Isotonic LSE and Block estimator, the PDIR estimator is smooth and it works reasonably well under univariate models, especially for models with smooth target functions.

| Model | Method | ||||||

|---|---|---|---|---|---|---|---|

| MSE | MSE | ||||||

| Linear | DNR | 0.266 (0.011) | 0.101 (0.035) | 0.122 (0.040) | 0.253 (0.011) | 0.055 (0.020) | 0.068 (0.023) |

| PDIR | 0.265 (0.012) | 0.098 (0.037) | 0.118 (0.041) | 0.254 (0.012) | 0.058 (0.024) | 0.070 (0.027) | |

| Isotonic LSE | 0.282 (0.013) | 0.140 (0.027) | 0.177 (0.035) | 0.262 (0.012) | 0.088 (0.012) | 0.113 (0.017) | |

| Block | 0.330 (0.137) | 0.165 (0.060) | 0.243 (0.155) | 0.277 (0.033) | 0.106 (0.021) | 0.153 (0.060) | |

| Exp | DNR | 0.268 (0.014) | 0.103 (0.043) | 0.124 (0.049) | 0.256 (0.012) | 0.055 (0.024) | 0.068 (0.027) |

| PDIR | 0.268 (0.017) | 0.102 (0.049) | 0.124 (0.056) | 0.255 (0.012) | 0.055 (0.022) | 0.068 (0.026) | |

| Isotonic LSE | 0.312 (0.018) | 0.195 (0.028) | 0.246 (0.034) | 0.274 (0.014) | 0.120 (0.014) | 0.153 (0.018) | |

| Block | 0.302 (0.021) | 0.177 (0.028) | 0.223 (0.034) | 0.272 (0.012) | 0.115 (0.015) | 0.146 (0.017) | |

| Step | DNR | 0.375 (0.045) | 0.259 (0.059) | 0.347 (0.061) | 0.315 (0.017) | 0.169 (0.022) | 0.253 (0.018) |

| PDIR | 0.366 (0.042) | 0.245 (0.058) | 0.335 (0.057) | 0.311 (0.018) | 0.153 (0.025) | 0.245 (0.018) | |

| Isotonic LSE | 0.304 (0.020) | 0.151 (0.041) | 0.228 (0.039) | 0.275 (0.014) | 0.081 (0.022) | 0.155 (0.020) | |

| Block | 0.382 (0.217) | 0.208 (0.082) | 0.327 (0.160) | 0.295 (0.046) | 0.108 (0.035) | 0.197 (0.086) | |

| Constant | DNR | 0.266 (0.012) | 0.102 (0.038) | 0.122 (0.042) | 0.258 (0.013) | 0.057 (0.021) | 0.069 (0.023) |

| PDIR | 0.260 (0.011) | 0.080 (0.045) | 0.092 (0.049) | 0.257 (0.012) | 0.051 (0.025) | 0.060 (0.028) | |

| Isotonic LSE | 0.265 (0.013) | 0.087 (0.044) | 0.114 (0.052) | 0.258 (0.012) | 0.044 (0.020) | 0.068 (0.025) | |

| Block | 0.264 (0.012) | 0.085 (0.044) | 0.108 (0.049) | 0.258 (0.012) | 0.044 (0.020) | 0.066 (0.025) | |

| Wave | DNR | 0.289 (0.023) | 0.156 (0.039) | 0.192 (0.044) | 0.262 (0.014) | 0.089 (0.025) | 0.110 (0.029) |

| PDIR | 0.530 (0.030) | 0.398 (0.026) | 0.528 (0.018) | 0.511 (0.022) | 0.368 (0.014) | 0.510 (0.009) | |

| Isotonic LSE | 0.525 (0.027) | 0.399 (0.022) | 0.524 (0.015) | 0.495 (0.020) | 0.353 (0.009) | 0.494 (0.004) | |

| Block | 0.516 (0.024) | 0.391 (0.022) | 0.519 (0.017) | 0.497 (0.023) | 0.358 (0.012) | 0.500 (0.013) | |

A.3 Bivariate models

We consider several basic multivariate models, including polynomial model (“Polynomial”), concave model (“Concave”), step model (“Step”), partial model (“Partial”), constant model (“Constant”) and wave model (“Wave”), which correspond to different specifications of the target function . The formulae are given below.

-

(a)

Polynomial:

-

(b)

Concave:

-

(c)

Step:

-

(d)

Partial:

-

(e)

Constant:

-

(f)

Wave:

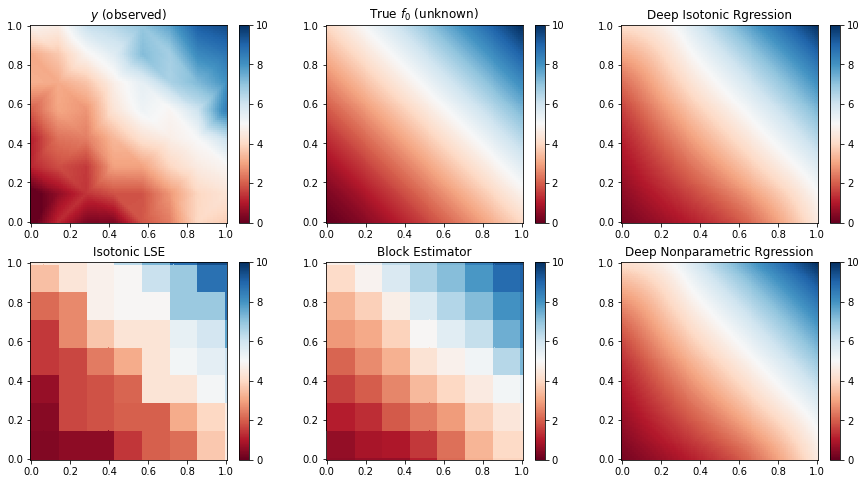

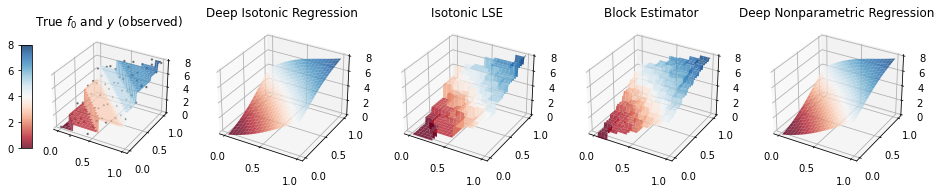

where , , and follows normal distribution. The “Polynomial” and “Concave” models are monotonic models. The “Step” model is monotonic but not smooth even continuous. In “Partial ” model, the response is related to only one covariate. The “Constant” is a monotonic but not strictly monotonic model and the “Wave” is a nonlinear, smooth but non monotonic model. We use the lattice design for the ease of presentation of Block estimator, where are the lattice points evenly distributed on interval . Simulation results over 100 replications are summarized in Table S4. And for each model, we take an instance from the replications to present the heatmaps and the 3D surface of the predictions of these estimates; see Figure S4-S15. In heatmaps, we show the observed data (linearly interpolated), the true target function and the estimates of different methods. We can see that compared with the piece-wise constant estimates of Isotonic LSE and Block estimator, the DIR estimator is smooth and works reasonably well under bivariate models, especially for models with smooth target functions.

| Model | Method | ||||||

|---|---|---|---|---|---|---|---|

| MSE | MSE | ||||||

| Polynomial | DNR | 4.735 (0.344) | 0.138 (0.041) | 0.172 (0.046) | 4.655 (0.178) | 0.078 (0.022) | 0.098 (0.025) |