Differentiable Implicit Soft-Body Physics

Abstract

We present a differentiable soft-body physics simulator that can be composed with neural networks as a differentiable layer. In contrast to other differentiable physics approaches that use explicit forward models to define state transitions, we focus on implicit state transitions defined via function minimization. Implicit state transitions appear in implicit numerical integration methods, which offer the benefits of large time steps and excellent numerical stability, but require a special treatment to achieve differentiability due to the absence of an explicit differentiable forward pass. In contrast to other implicit differentiation approaches that require explicit formulas for the force function and the force Jacobian matrix, we present an energy-based approach that allows us to compute these derivatives automatically and in a matrix-free fashion via reverse-mode automatic differentiation. This allows for more flexibility and productivity when defining physical models and is particularly important in the context of neural network training, which often relies on reverse-mode automatic differentiation (backpropagation). We demonstrate the effectiveness of our differentiable simulator in policy optimization for locomotion tasks and show that it achieves better sample efficiency than model-free reinforcement learning.

1 Introduction

Physics engines have been extensively used in robotics and computer graphics, but the increased adoption of neural networks to solve inverse problems in these domains has revealed the need for additional features in such engines (Degrave et al., 2019; Hu et al., 2019c, b, a). For example, using neural networks to solve inverse problems where physical constraints must be satisfied, such as motor control, often requires model-free reinforcement learning algorithms that approximate the derivatives of the simulator by sampling multiple trajectories using stochastic control policies. This is due to the non-differentiable nature of most existing simulators, which have traditionally focused on efficiency and stability of forward computations only. Differentiable simulators are useful when we want to incorporate physics into neural networks as a differentiable layer, which allows us to run backpropagation as commonly done in supervised learning.

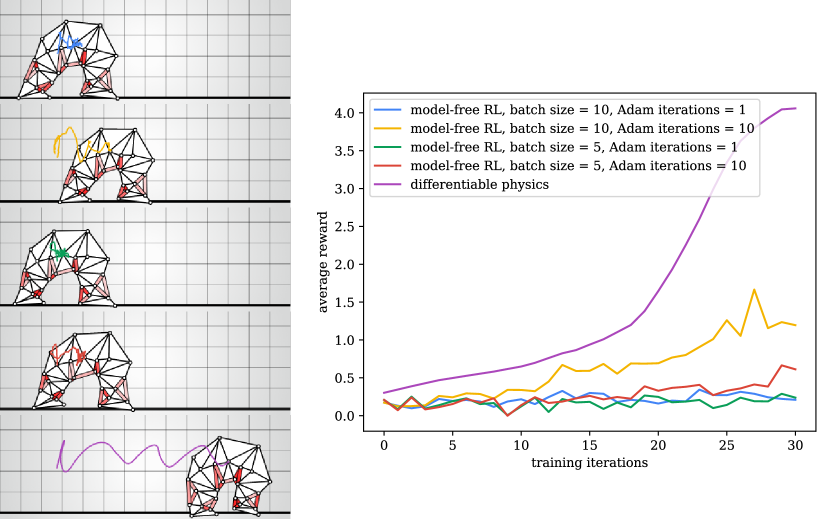

In this paper, we focus on soft-body physics and muscle-like actuation mechanisms, motivated by humans and other animals that control their bodies via storage and release of energy in their muscles to generate a wide range of motions, and we demonstrate that our differentiable simulator is effective in optimizing control policies for locomotion (Figure 1) and can achieve better sample efficiency than model-free reinforcement learning. In contrast to other differentiable soft-body physics approaches that use explicit forward models to define state transitions, we focus on implicit state transitions defined via function minimization. Implicit state transitions appear in implicit numerical integration methods, which offer the benefits of large time steps and excellent numerical stability, but require a special treatment to achieve differentiability due to the absence of an explicit differentiable forward pass.

2 Related work

2.1 Differentiable explicit soft-body physics

Recent work (Hu et al., 2019c) showed that incorporating a differentiable physics engine, ChainQueen, into machine learning workflows can achieve better sample efficiency than model-free reinforcement learning in soft robotics tasks. Besides differentiability, the key characteristic of ChainQueen is its efficient GPU implementation of soft-body dynamics using the material point method and explicit numerical integration, which has been shown to have better runtime performance than an equivalent implementation in TensorFlow, whose numerical kernels are not as optimized for sparse operations.

This then led to the development of DiffTaichi (Hu et al., 2019a), now part of the Taichi programming language (Hu et al., 2019b), which introduced an optimizing compiler for differentiable sparse operations that commonly appear in physics-based simulation. The results presented in such line of work have focused on explicit numerical integration. An explicit differentiable forward pass is required in order to compute derivatives with DiffTaichi. Although standard deep learning packages can achieve differentiability of explicit operations too, they often lack efficient implementations of sparse operations, which is one of the main problems addressed by DiffTaichi.

However, explicit differentiation is not applicable to simulators that define implicit state transitions. For example, simulators that use implicit numerical integration offer the benefits of large time steps and better numerical stability (Gast & Schroeder, 2015), but do not define an explicit differentiable forward pass. The availability of efficient implementations of sparse operations has an important effect on the runtime performance of the simulator, but this aspect is orthogonal to the problem of achieving differentiability through implicit state transitions, which we tackle in this paper.

2.2 Differentiable optimization

The implicit state transitions that we focus on in this paper are primarily driven by optimization procedures. For example, quasistatic motions are driven by potential energy minimization. There has been recent work incorporating numerical optimization into neural networks as a differentiable layer (Amos & Kolter, 2017; Agrawal et al., 2019). However, work in this area has focused on convex optimization, while the optimization problems that arise in soft-body physics are non-convex.

2.3 Implicit layers

Differentiable optimization can be considered part of a larger class of models sometimes referred to as implicit layers, which also include models such as neural ODEs (Chen et al., 2018) and deep equilibrium models (Bai et al., 2019). These are layers whose output is not defined in terms of its input via some explicit rule , but rather implicitly via some specification . Differentiating through implicit definitions requires special treatment and although there is previous work on implicit differentiation (often presented under the name of sensitivity analysis and adjoint method) for material parameter estimation and trajectory optimization in soft-body simulation (Sifakis et al., 2005; Bern et al., 2019, 2017b, 2017a), incorporating this implicit differentiation approach into neural networks for motor control and learning via backpropagation is less explored (Geilinger et al., 2020). One common pattern in these previous approaches is that they use explicit formulas for the force function and the force Jacobian matrix. In this paper, however, we take an energy-based approach that allows us to compute these derivatives automatically using reverse-mode automatic differentiation, allowing for more flexibility and productivity when defining physical models.

2.4 Energy-based models

Energy-based modeling is a framework to define dependencies between variables via scalar-valued energy functions (LeCun et al., 2006). In these models, the forward pass is also defined implicitly, via energy minimization, and the term “energy” used in the context of energy-based modeling has a particularly close connection to the notion of potential energy in quasistatic motions that we consider in Section 3.

One of the main problems studied in energy-based modeling is how to learn energy functions, which can be modeled using neural networks. However, since in this paper we consider problems in simulation where we already have access to the energy functions used to model the environment, we do not tackle this problem. Instead, we focus on using our differentiable model to train control policies, but we emphasize that the energy functions that we use can be viewed as neural networks and our simulator is essentially a collection of energy functions implemented using operations commonly available in deep learning packages. Additional quantities required for the forward and backward pass, such as forces and force Jacobians, can be derived from the energy function using automatic differentiation. Our approach is especially relevant in the context of energy-based modeling, where the premise is that the primary definition should be the energy function and everything else is derived from such definition.

3 Quasistatic motions

We first consider quasistatic motions, which are primarily driven by potential energy minimization and ignore inertial effects, which we will consider in Section 5. While typical elastic objects are passive and their motion is determined by initial conditions, in this work we focus on active objects, which combine passive elasticity with an internal actuation mechanism such as muscles or robotic actuators.

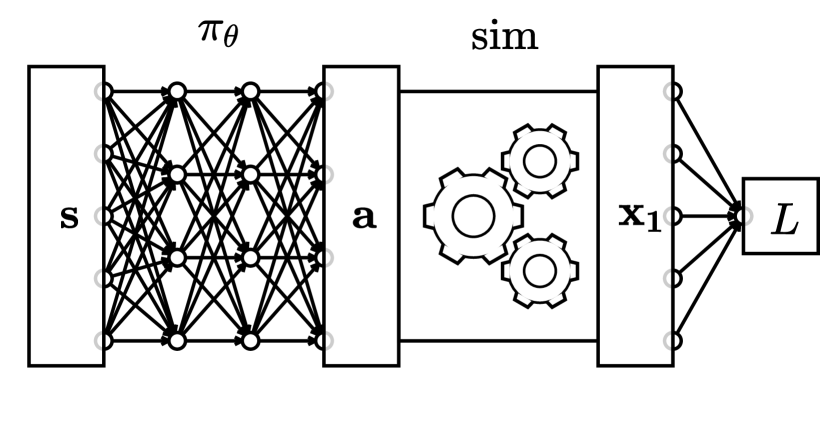

The model we use for our experiments is based on deformable meshes simulated using the finite element method (FEM) and contractile fibers modeled as springs. As seen in Figure 2, the output of the simulator depends on the action signal . More specifically, it is computed by minimizing the potential energy of the system. For soft-body physics, the potential energy is conventionally defined as a function of vertex positions , but to support actuation mechanisms we consider energy functions that also take action signals as input:

| (1) |

The energy function we use for each contractile fiber in the model shown in Figure 2 is:

| (2) |

where is the current length of the fiber, is its original rest length and is its stiffness. However, we want to emphasize that the approach we propose to achieve differentiability is not tied to this particular model. The important characteristic of the model presented in this section is that it defines the output of the simulator via energy minimization (Equation 1), but many possible energy functions exist for this purpose and our approach can achieve differentiability for arbitrary energy functions.

The energy function we use for our experiments also includes contributions from triangle deformations due to Neo-Hookean elasticity. Although there are many variants of Neo-Hookean elasticity (Smith et al., 2018), the specific model we use is based on the energy density function presented in (Sifakis & Barbič, 2012), which can be expressed in terms of the isotropic invariants and that can be computed from vertex positions :

| (3) |

The only modification we make to this model is that we use a quadratic approximation of the logarithmic function to avoid issues with non-positive values, which may appear when triangles are inverted (Irving et al., 2004). We refer to (Sifakis & Barbič, 2012) for additional details on how to compute and from vertex positions and additional background in FEM simulation. However, we want to emphasize again that our goal in this paper is not to advocate any specific elasticity model or actuation mechanism. We provide some details of the energy functions we use for our experiments for reference, but our goal is to provide a general framework to achieve differentiability in soft-body simulations where the forward pass requires a minimization procedure. The specific function that the simulator minimizes and the minimization algorithm implemented in the simulator may vary depending on application requirements.

Although the specifics may have some differences, there is a high-level interpretation of the energy functions commonly used in soft-body physics that can reveal some of their similarities with neural networks, which are not always evident in more conventional presentations of this topic in the context of continuum mechanics and partial differential equations. Many energy models used in FEM simulation such as Neo-Hookean, Saint Venant-Kirchhoff and corotated elasticity are defined as a non-linear function of the deformation gradient , a square matrix (2x2 for triangles and 3x3 for tetrahedra) that can be defined as a linear function of the vertex positions . In that sense, all these models can be understood as shallow neural networks that use different non-linearities. We can also see a similar pattern in spring energy functions. Figure 3 shows a diagram that makes this interpretation more evident.

One difference with more conventional neural network architectures is that the non-linearity in FEM models is not element-wise. For example, an FEM model on 2D triangle meshes computes the energy of every triangle by applying a non-linear function on 2x2 matrices. This approach has some similarities with capsule networks (Sabour et al., 2017), since the non-linearity acts on groups of neurons whose values represent properties of the same entity (one triangle of the mesh), and a similar observation can be made about spring energy functions.

In fact, the energy function used in our simulator is implemented using differentiable operations commonly available in deep learning packages (we used PyTorch (Paszke et al., 2019), without any additional libraries). This also allows us to automatically differentiate the energy function which is useful to implement the forward pass that requires running a minimization procedure (Equation 1).

Note, however, that the fact that we can implement the energy function using differentiable operations does not immediately provide us with a backward pass for the simulator. Although explicitly unrolling a minimization procedure (for example, gradient descent with a fixed step size) could in principle allow us to run the backward pass, this is not necessarily the best option, since it requires storing in memory all the intermediate steps of the optimizer and limits its applicability to optimization methods that only use differentiable operations in their implementation.

In the next section, we present an implicit differentation approach that allows us to differentiate through the simulator regardless of how the optimization procedure is implemented, it does not require storing intermediate steps and is applicable even if the optimizer runs non-differentiable operations such as line search, which is common in implementations of soft-body physics (Wang & Yang, 2016; Gast & Schroeder, 2015; Liu et al., 2017; Smith et al., 2018).

4 Differentiable quasistatic motions

For machine learning problems, we are interested in incorporating physics into neural networks as a layer, as shown in Figure 4. The difficulty in running backpropagation on the computation graph presented in Figure 4 is that is not readily available because is not defined explicitly as a differentiable function of . In this section, we present an implicit differentiation approach to compute this derivative, and we point out some aspects that make this approach feasible to implement in reverse-mode automatic differentiation systems commonly used for neural network training. To present an initial derivation using conventional matrix operations, we assume vector representations using one-dimensional arrays for the vertex positions (where is the number of vertices) and the action (where is the number of actuators), but we show how to generalize this derivation to support other representations for and in Section 4.1.

The output of the simulation layer is defined implicitly via energy minimization, which implies that the force (Equation 4) at the solution is zero (Equation 5).

| (4) |

| (5) |

We observe that the fact that is zero for any (Equation 5) implies that is also zero for any . Applying the chain rule, we get:

| (6) |

In the next equations, we will omit the explicit evaluations at for notational convenience. From Equation 6 we can obtain a formula for :

| (7) |

Considering the loss as a function of , we can use Equation 7 to obtain a formula for :

| (8) |

We then reorder the matrix multiplications of Equation 8 to get our final formula for the backward pass:

| (9) |

The derivation of the last equation leveraged the fact that is symmetric, since it is equal to the Hessian of the energy as can be seen via Equation 4. This Hessian is positive definite when evaluated at a quasistatic configuration , since it corresponds to a local minimum of the energy . Thus, the quantity inside the parenthesis in Equation 9, which requires solving a linear system, can be conveniently computed via a conjugate gradient solver in a matrix-free fashion. The fact that we can implement Equation 9 without explicitly constructing the Hessian matrix is particularly important in the context of neural networks. Neural networks are often implemented using reverse-mode automatic differentiation systems which do not provide an efficient way to compute Hessian matrices, but can compute Hessian-vector products in a matrix-free fashion, which is sufficient to implement Equation 9 (we used PyTorch). We provide more details of this matrix-free implementation in Section 4.1.

4.1 Matrix-free second-order differentiation via backpropagation

The formula for the backward pass (Equation 9) includes and . We can interpret these quantities as force Jacobian matrices, or matrices that contain second derivatives of the energy function . However, the matrix interpretation of these quantities is not always the most convenient for implementation purposes. In fact, to implement Equation 9 we do not need to construct these matrices, we only need a way to evaluate matrix-vector products.

For example, the quantity inside the parenthesis in Equation 9 requires solving a linear system that can be implemented using a conjugate gradient solver if we can evaluate matrix-vector products for arbitrary vectors . This can be done using the following identity:

| (10) |

This requires creating the force as a differentiable node in the computation graph (for example, in PyTorch, this can be done by running torch.autograd.grad on the energy ), and then running backpropagation on the dot product . Since the dot product is a scalar, running reverse-mode automatic differentiation (backpropagation) on it can be performed efficiently, and it effectively computes the matrix-vector product that we need. A similar approach can be used for in order to compute the full expression presented in Equation 9.

We originally assumed vectors represented as one-dimensional arrays for the action and vertex positions to present the initial derivation using conventional matrix operations, but with a matrix-free approach it becomes more clear that we do not have to restrict these quantities to one-dimensional arrays. A matrix is just one particular representation of a linear function and matrix-vector multiplication is just one particular implementation of linear function application. Instead of interpreting as a matrix, we can interpret it as a linear function that takes as input one argument of the same type of and outputs a value of the same type of ( is one possible choice, but not the only one).

We can implement this for arbitrary representations of (as well as ) if we can define the appropriate dot product used in Equation 10 to compute second derivatives in a matrix-free fashion. The dot product can be easily defined in general for multidimensional arrays of arbitrary order (or tensors, as commonly referred to in deep learning packages) via an element-wise multiplication followed by a sum of all the elements. In fact, as suggested by Figures 2 and 3, vertex positions are more naturally represented as an array of two dimensions, where one dimension indexes vertices and the other indexes spatial dimensions. This and other multidimensional array representations are naturally handled by our matrix-free approach without any modifications since we do not require to be a one-dimensional array nor do we require constructing explicitly as a matrix.

5 Differentiable implicit numerical integration

We now consider the effect of inertia, which we previously ignored in quasistatic motions. For this purpose, we consider sequences of states that evolve over time. The state of our simulation is defined by vertex positions and velocities . A good starting point to characterize the dynamics of our system is to consider the equations of motion in a continuous-time setting:

| (11) |

where is the force and is the mass. For simulation purposes, we assume a particular time discretization scheme given by a numerical integration method, defining a function that updates the state of the system after units of time:

| (12) |

Explicit methods such as symplectic Euler have been used before for differentiable soft-body physics (Hu et al., 2019c, a). However, in this paper we focus on implicit numerical integration methods that do not provide an explicit differentiable forward pass. Although implicit numerical integration methods are sometimes formulated as root finding problems using the force function, many implicit numerical integration methods can be reformulated as minimization problems using the energy function. The latter formulation is preferred in practical implementations due to its better numerical properties (Martin et al., 2011; Gast & Schroeder, 2015; Liu et al., 2017; Rojas et al., 2018). We adopt the optimization-based formulation of implicit numerical integration for our differentiable simulator. The general form that these methods have is the following:

| (13) |

where is a scalar-valued function that includes the potential energy , and is an explicit velocity update rule. For example, the optimization-based formulation of backward Euler integration commonly used in simulation (Gast & Schroeder, 2015; Liu et al., 2017) can be written as:

| (14) |

The implicit update rule for can be differentiated using the same implicit differentiation strategy presented in Section 4 and the explicit update rule for can be differentiated using standard backpropagation. This provides us with a fully-differentiable implicit numerical integration layer (Figure 5) that we can use for policy optimization.

5.1 Locomotion

To demonstrate the effectiveness of our proposed implicit numerical integration layer, we consider episodic tasks where an agent gets a reward at the end of the episode equal to its total horizontal displacement. We used backward Euler integration for our simulation and introduced energy terms to model collisions and friction with a flat terrain (see Section 5.2 for details). Since every step of the simulation is differentiable, we can run backpropagation through time to optimize control policies. Our policies are modeled as neural networks with a single hidden layer with 32 ReLU units that take as input the current state of the simulation (vertex positions and velocities) and output actions that change the rest length of the contractile fibers.

We compared the performance of our method with model-free reinforcement learning. We used the same training strategy based on PPO (Schulman et al., 2017) used in (Rojas et al., 2019) for soft-body locomotion (clipping parameter ) and found that, overall, our differentiable simulator requires fewer iterations and samples to make progress, as shown in Figure 7 and Figure 8. Note that PPO requires sampling multiple trajectories to approximate the gradient of the simulator, whose accuracy depends on the number of trajectories sampled every training iteration (batch size) and requires running a stochastic policy, while with a differentiable simulator we can train a deterministic policy using an effective batch size of 1. Also note that one training iteration of PPO actually performs multiple optimization steps (we used Adam (Kingma & Ba, 2015) with a learning rate of ), while our differentiable simulator performs a single Adam iteration per training iteration.

For model-free RL, we modeled the stochastic policy as a multivariate Gaussian distribution, where the mean is computed using the same architecture of the deterministic policy used for our differentiable simulator, and they were both initialized with the same parameters. The stochastic policy samples actions from this distribution, using a trainable standard deviation for every muscle fiber, which is initialized with the same value for all fibers. We also repeated these experiments with multiple random seeds and different initialization values for the standard deviation of the stochastic policy (Figure 9 and Figure 10) and found that the differentiable simulator can also make progress in fewer training iterations across multiple runs.

5.2 Ground collisions and friction

To handle collisions with the ground, we implemented a one-sided quadratic energy term. Assuming a flat terrain that coincides with the horizontal axis, as shown in our experiments, we can define a collision energy using the ReLU function to introduce a penalty term (scaled by a stiffness coefficient ) that is only active when a vertex with position is in contact with the ground:

| (15) |

We also implemented friction between the deformable mesh and the ground to enable locomotion. Conceptually, the value of the friction term should depend on the horizontal component of the velocity. Hence, we introduce a velocity function that can be expressed in terms of vertex positions and is consistent with the explicit velocity update rule used in backward Euler:

| (16) |

The final value of the friction term we used also depends on the amount of overlap between the ground and the vertical component of the vertex position in the previous time step (using a numerical tolerance ):

| (17) |

The effect that this energy term has is that it penalizes high speeds in the horizontal plane () for vertices that are in contact with the ground (determined by ). Since this term is part of the function that the simulator minimizes in the forward pass, it naturally tends to reduce the speed of vertices that are in contact with the ground over time, effectively modeling a type of friction. As with the other energy functions used in our simulation, this model can be easily changed to accomodate different friction models, since our energy-based formulation detailed in Section 4 allows us to compute all the required derivatives automatically via backpropagation provided that the energy function is implemented using differentiable operations.

6 Conclusion and future work

We presented a soft-body physics layer that can be differentiated even when an explicit differentiable forward pass is not available. By adopting an optimization-based approach to define state transitions, we derived a differentiation rule that is agnostic to the specific implementation of the forward pass, which allows us to achieve differentiability for implicit numerical integration methods. We showed how our implicit differentiation approach can be implemented using reverse-mode automatic differentiation, which allows us to implement and compose our simulator with neural networks using the same automatic differentiation system.

As with recent work in differentiable physics for explicit methods, such as DiffTaichi (Hu et al., 2019a), one interesting direction of future work for implicit methods is to devise performance optimizations for the simulator while keeping the same flexibility and productivity provided by automatic differentiation. Our current implementation does not take full advantage of spatial sparsity, which is one of the main reasons why simulators implemented in DiffTaichi are often faster than implementations in conventional deep learning packages. The forward pass of our simulator can also be further improved by adopting different numerical optimization strategies. We adopted a descent method with line search that only uses information of first-order derivatives, but this could be improved in the future. Our goal in this paper is not to provide a specific implementation of the forward pass, but rather to present a general approach that leverages reverse-mode automatic differentiation and allows us to use different implementations of the forward pass while keeping the simulator differentiable.

We demonstrated that our differentiable simulator is compatible with backpropagation through time and can be used to optimize control policies taking into account the effect of multiple simulation steps. However, as with other recurrent models, vanishing and exploding gradients could become an issue for very long time horizons. We ran some preliminary experiments that indicate that combining our differentiable simulator with a trainable value function can mitigate this issue, which is also common in infinite-horizon reinforcement learning, but further research is needed to properly assess the benefits of this approach. Although a differentiable physics engine allows us to optimize deterministic policies, it is possible that adding some stochasticity, for example to improve exploration during training, could mitigate potential issues with local optima, which has been reported in prior work in differentiable physics. We believe it is worth evaluating the benefits of incorporating these modifications into the training loop in future work.

Acknowledgements

We would like to thank Alexander Winkler for the useful discussions. This material is based upon work supported by the National Science Foundation under Grant Numbers IIS-2008915, IIS-2008584, IIS-1763638 and IIS-1764071. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

References

- Agrawal et al. (2019) Agrawal, A., Amos, B., Barratt, S., Boyd, S., Diamond, S., and Kolter, Z. Differentiable convex optimization layers. In Advances in Neural Information Processing Systems, 2019.

- Amos & Kolter (2017) Amos, B. and Kolter, J. Z. OptNet: Differentiable optimization as a layer in neural networks. In Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pp. 136–145. PMLR, 2017.

- Bai et al. (2019) Bai, S., Kolter, J. Z., and Koltun, V. Deep equilibrium models. In Wallach, H., Larochelle, H., Beygelzimer, A., d'Alché-Buc, F., Fox, E., and Garnett, R. (eds.), Advances in Neural Information Processing Systems 32, pp. 690–701. Curran Associates, Inc., 2019. URL http://papers.nips.cc/paper/8358-deep-equilibrium-models.pdf.

- Bern et al. (2017a) Bern, J., Kumagai, G., and Coros, S. Fabrication, modeling, and control of plush robots. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 3739 – 3746, Piscataway, NJ, 2017a. IEEE. ISBN 978-1-5386-2682-5. doi: 10.1109/IROS.2017.8206223. 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2017); Conference Location: Vancouver, Canada; Conference Date: September 24-28, 2017.

- Bern et al. (2017b) Bern, J. M., Chang, K.-H., and Coros, S. Interactive design of animated plushies. ACM Trans. Graph., 36(4):80:1–80:11, July 2017b. ISSN 0730-0301. doi: 10.1145/3072959.3073700. URL http://doi.acm.org/10.1145/3072959.3073700.

- Bern et al. (2019) Bern, J. M., Banzet, P., Poranne, R., and Coros, S. Trajectory optimization for cable-driven soft robot locomotion. Proceedings of Robotics: Science and Systems, FreiburgimBreisgau, Germany, 2019.

- Chen et al. (2018) Chen, R. T. Q., Rubanova, Y., Bettencourt, J., and Duvenaud, D. K. Neural ordinary differential equations. In Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 31, pp. 6571–6583. Curran Associates, Inc., 2018. URL https://proceedings.neurips.cc/paper/2018/file/69386f6bb1dfed68692a24c8686939b9-Paper.pdf.

- Degrave et al. (2019) Degrave, J., Hermans, M., Dambre, J., and wyffels, F. A differentiable physics engine for deep learning in robotics. Frontiers in Neurorobotics, 13:6, 2019. ISSN 1662-5218. doi: 10.3389/fnbot.2019.00006. URL https://www.frontiersin.org/article/10.3389/fnbot.2019.00006.

- Gast & Schroeder (2015) Gast, T. F. and Schroeder, C. Optimization integrator for large time steps. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA ’14, pp. 31–40, Goslar, DEU, 2015. Eurographics Association.

- Geilinger et al. (2020) Geilinger, M., Hahn, D., Zehnder, J., Bächer, M., Thomaszewski, B., and Coros, S. ADD: Analytically differentiable dynamics for multi-body systems with frictional contact. ACM Trans. Graph., 39(6), November 2020. ISSN 0730-0301. doi: 10.1145/3414685.3417766. URL https://doi.org/10.1145/3414685.3417766.

- Hu et al. (2019a) Hu, Y., Anderson, L., Li, T.-M., Sun, Q., Carr, N., Ragan-Kelley, J., and Durand, F. DiffTaichi: Differentiable programming for physical simulation, 2019a.

- Hu et al. (2019b) Hu, Y., Li, T.-M., Anderson, L., Ragan-Kelley, J., and Durand, F. Taichi: A language for high-performance computation on spatially sparse data structures. ACM Trans. Graph., 38(6):201:1–201:16, November 2019b. ISSN 0730-0301. doi: 10.1145/3355089.3356506. URL http://doi.acm.org/10.1145/3355089.3356506.

- Hu et al. (2019c) Hu, Y., Liu, J., Spielberg, A., Tenenbaum, J. B., Freeman, W. T., Wu, J., Rus, D., and Matusik, W. ChainQueen: A real-time differentiable physical simulator for soft robotics. In 2019 International Conference on Robotics and Automation (ICRA), pp. 6265–6271. IEEE, 2019c.

- Irving et al. (2004) Irving, G., Teran, J., and Fedkiw, R. Invertible finite elements for robust simulation of large deformation. In Proceedings of the 2004 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, SCA ’04, pp. 131–140, Goslar, DEU, 2004. Eurographics Association. ISBN 3905673142. doi: 10.1145/1028523.1028541. URL https://doi.org/10.1145/1028523.1028541.

- Kingma & Ba (2015) Kingma, D. P. and Ba, J. Adam: A method for stochastic optimization. In ICLR, 2015.

- LeCun et al. (2006) LeCun, Y., Chopra, S., Hadsell, R., Huang, F. J., and et al. A tutorial on energy-based learning. In Predicting Structured Data. MIT Press, 2006.

- Liu et al. (2017) Liu, T., Bouaziz, S., and Kavan, L. Quasi-Newton methods for real-time simulation of hyperelastic materials. ACM Transactions on Graphics (TOG), 36(3):23, 2017.

- Martin et al. (2011) Martin, S., Thomaszewski, B., Grinspun, E., and Gross, M. Example-based elastic materials. In ACM Transactions on Graphics (TOG), volume 30, pp. 72. ACM, 2011.

- Paszke et al. (2019) Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., and Chintala, S. PyTorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems 32. 2019.

- Rojas et al. (2018) Rojas, J., Liu, T., and Kavan, L. Average vector field integration for St. Venant-Kirchhoff deformable models. IEEE Transactions on Visualization and Computer Graphics, 2018.

- Rojas et al. (2019) Rojas, J., Coros, S., and Kavan, L. Deep reinforcement learning for 2D soft body locomotion. In NeurIPS Workshop on Machine Learning for Creativity and Design 3.0, 2019.

- Sabour et al. (2017) Sabour, S., Frosst, N., and Hinton, G. E. Dynamic routing between capsules. In Guyon, I., Luxburg, U. V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., and Garnett, R. (eds.), Advances in Neural Information Processing Systems, volume 30, pp. 3856–3866. Curran Associates, Inc., 2017. URL https://proceedings.neurips.cc/paper/2017/file/2cad8fa47bbef282badbb8de5374b894-Paper.pdf.

- Schulman et al. (2017) Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. CoRR, abs/1707.06347, 2017.

- Sifakis & Barbič (2012) Sifakis, E. and Barbič, J. FEM simulation of 3D deformable solids: A practitioner’s guide to theory, discretization and model reduction. http://www.femdefo.org, 2012.

- Sifakis et al. (2005) Sifakis, E., Neverov, I., and Fedkiw, R. Automatic determination of facial muscle activations from sparse motion capture marker data. volume 24, pp. 417–425, 2005.

- Smith et al. (2018) Smith, B., Goes, F., and Kim, T. Stable neo-hookean flesh simulation. ACM Transactions on Graphics, 37:1–15, 03 2018. doi: 10.1145/3180491.

- Wang & Yang (2016) Wang, H. and Yang, Y. Descent methods for elastic body simulation on the gpu. ACM Trans. Graph., 35(6), November 2016. ISSN 0730-0301. doi: 10.1145/2980179.2980236. URL https://doi.org/10.1145/2980179.2980236.