Differentiable and Scalable Generative Adversarial Models for Data Imputation

Abstract

Data imputation has been extensively explored to solve the missing data problem. The dramatically increasing volume of incomplete data makes the imputation models computationally infeasible in many real-life applications. In this paper, we propose an effective scalable imputation system named SCIS to significantly speed up the training of the differentiable generative adversarial imputation models under accuracy-guarantees for large-scale incomplete data. SCIS consists of two modules, differentiable imputation modeling (DIM) and sample size estimation (SSE). DIM leverages a new masking Sinkhorn divergence function to make an arbitrary generative adversarial imputation model differentiable, while for such a differentiable imputation model, SSE can estimate an appropriate sample size to ensure the user-specified imputation accuracy of the final model. Extensive experiments upon several real-life large-scale datasets demonstrate that, our proposed system can accelerate the generative adversarial model training by 7.1x. Using around 7.6% samples, SCIS yields competitive accuracy with the state-of-the-art imputation methods in much shorter computation time.

Index Terms:

Data imputation, generative adversarial network, differentiable model, large-scale incomplete dataI Introduction

Many real-life scenarios, such as e-commerce, transportion science, and health care, encounter the problem of missing data [1, 2, 3, 4, 5] as long as the data collection is involved. Data might be missing for various reasons, including collection device failure [6], instable system environment [5], privacy concerns [7], etc. The missing data problem poses a daunting challenge to the downstream data analysis.

To alleviate the missing data problem, a substantial amount of data imputation studies [8, 9] has been carried out, including the statistical ones [10], machine learning ones [11], multi-layer perceptron (MLP) based ones [12], autoencoder (AE) based ones [13], and generative adversarial network (GAN) based ones [14, 15]. Ideally, a preferable data imputation algorithm is to learn the true underlying data distribution well from the observed data, and count on the learned distribution to impute the missing data. GAN-based imputation methods [14, 15] are attempts in this direction. They maximize the distributional proximity of generated data and true underlying data by introducing an adversarial game between a generator and a discriminator. Many empirical investigations [14, 15, 16] have demonstrated the promising performance brought by GAN-based methods for data imputation.

The ubiquity of data collection technologies with unprecedented processing power and substantial storage capacity has given rise to a dramatic increase in the volume of incomplete data. For example, a real-world COVID-19 case surveillance public use dataset [17] contains 22,507,139 cases with 7 clinical and symptom features, taking a 47.62% missing rate. The large volume of incomplete data, however, means that it is expensive and unwieldy to exploit the above imputation algorithms. Although GAN-based methods achieve better imputation performance than other imputation approaches, they deplete exceedingly high training cost over large-scale incomplete data. Our experimental results show that, almost all imputation methods take more than seconds on training over the million-size incomplete data. In general, the effective and scalable data imputation over large-scale incomplete data is indispensable in many real-life scenarios.

It is challenging to apply the GAN-based imputation methods to specific real-life scenarios, particularly for large-scale incomplete data. First, there is a strong theoretical evidence showing that, the Jensen-Shannon (JS) divergence of the GAN-based imputation model fails in the case that the true underlying and generated data distributions have non-overlapping supports111The support of a distribution is the complement of the largest open set of the random variables having zero-probability.[18, 19, 20]. This makes the JS divergence based imputation loss function non-differentiable, and suffer from the “vanishing” gradient problem. Second, existing GAN-based imputation methods consume high training cost to calculate gradients with the mini-batch strategy for both generator and discriminator over the entire dataset. In general, the model complexity and training sample size are two primary factors that affect the efficiency of the GAN-based imputation.

Therefore, in this paper, we propose an effective SCalable Imputation System SCIS to optimize GAN-based imputation models. SCIS makes the GAN-based imputation model differentiable via using the optimal transport theory. Then, for the differentiable model, it pays attention to the training sample size, and estimates an appropriate sample size for efficient and accuracy-guaranteed training. The system is composed of two modules, a differentiable imputation modeling (DIM) module and a sample size estimation (SSE) module. In terms of the first challenge, the DIM module leverages a masking Sinkhorn (MS) divergence to convert a GAN-based imputation model into a differentiable one, which can always provide reliable gradients to avoid the “vanishing” gradient problem. Regarding the second challenge, the SSE module estimates the minimum sample size to enable the trained differentiable GAN-based model to meet a user-specified error tolerance. Hence, SCIS employs the minimum sample size to make the differentiable GAN-based model scalable on large-scale incomplete data through SSE. In summary, the main contributions of this paper are described as follows.

-

•

We propose an effective scalable imputation system SCIS with differentiable GAN-based imputation models, which can be effectively and efficiently trained.

-

•

In the DIM module, we put forward an MS divergence to quantify the closeness between the true underlying and generated data distributions. It employs the optimal transport theory to make the GAN-based imputation model differentiable, for avoiding the “vanishing” gradient issue.

-

•

The SSE module leverages the differentiability of the MS divergence to estimate the minimum sample size for training an approximate differentiable GAN-based model, according to a user-specified imputation accuracy. It thus significantly saves the model training cost.

-

•

Extensive experiments using several real-life large-scale incomplete datasets demonstrate the computational benefits of SCIS, compared with the state-of-the-art methods.

The rest of the paper is organized as follows. We introduce the background in Section II. Section III gives an overview of the proposed system SCIS. We elaborate the DIM and SSE modules in Section IV and Section V, respectively. Section VI reports the experimental results and findings. Finally, we conclude this work in Section VII.

II Background

II-A Existing Imputation Methods

Existing imputation algorithms can be categorized by their mainly used models, including statistical ones, machine learning ones, and deep learning ones. The statistical imputation methods substitute the missing values with the statistics [10], or the most similar ones among the training data [21, 22]. This kind of method has a limited ability to handle the missing data problem, since they ignore the data distribution analysis.

In contrast, the machine learning imputation approaches are to train parametric models in machine learning [23, 24] to estimate the missing values, including decision tree models like XGBoost imputation [25], MissFI (MissForest imputation) [11], and Baran [26], and regression models like MICE (multivariate imputation by chained equations) [8] and imputation via individual model [27]. These imputation methods employ the batch gradient descent techniques [28] to calculate the model gradient over the entire dataset. The main issue is that, the incomplete dataset may be too large to fit in memory.

Moreover, the deep learning imputation methods leverage deep learning models [29, 30] to impute missing values with the mini-batch gradient descent. This category consists of i) MLP-based ones like DataWig [31] and RRSI (round-robin Sinkhorn imputation) [12], ii) AE-based ones, e.g., MIDAE (multiple imputation denoising autoencoder) [32], VAEI (variational autoencoder imputation) [33], EDDI (efficient dynamic discovery of highvalue information framework) [34], HIVAE (heterogeneous incomplete variational autoencoder) [35], MIWAE (missing data importance-weighted autoencoder) [9], and not-MIWAE (not-missing-at-random importance-weighted autoencoder) [13], and iii) GAN-based ones, such as GINN (graph imputation neural network) [15] and GAIN (generative adversarial imputation network) [14]. The above methods calculate the model gradients with a series of random partitions of the dataset, to train the imputation models over large-scale incomplete data. Nevertheless, both the iteration times and training cost of these methods are dramatically increasing with the rising volume of incomplete data.

As analyzed earlier, the data generated by GAN-based methods tend to be closer to the true underlying data manifold than that of the other ones. Nevertheless, due to the high training cost and “vanishing” gradient problem, the GAN-based imputation methods are faced with a big challenge to deal with large-scale incomplete data imputation. In this paper, our proposed system SCIS aims to provide good effectiveness and scalability for GAN-based imputation models, so as to make them practical in real-world applications.

| Symbol | Description |

|---|---|

| an input incomplete dataset (stored in a matrix) | |

| the mask matrix w.r.t. | |

| and | an imputation model and the optimized model |

| and | the reconstructed matrix and imputed matrix of |

| and | the initial dataset matrix and its mask matrix |

| and | the validation dataset matrix and its mask matrix |

| and | the initial sample size and validation sample size |

| and | the user-tolerated error bound and confidence level |

II-B Problem Definition

The input incomplete dataset is stored in a matrix , in which each data sample with takes values from a dimensional space . For encoding its missing information, we define a mask matrix , where each mask vector corresponds to a data sample . In particular, takes value from , , and ; = 1 (resp. 0) iff the -th dimension is observed (resp. missing). Note that, we use and interchangeably throughout this paper. Table I lists the frequently used symbols throughout this paper.

In general, we formalize the data imputation problem over and in Definition 1.

Definition 1

(Data imputation). Given an incomplete dataset with its mask matrix , the data imputation problem aims to build an imputation model to find appropriate values for missing values in , i.e., the imputed matrix

| (1) |

where is the element-wise multiplication; is the reconstructed matrix predicted by over . In this way, the imputation model , (i) makes as close to the real complete dataset (if it exists) as possible, or (ii) helps downstream prediction tasks to achieve better performance if adopting than that only with original one .

In this paper, our study mission is to empower the GAN-based imputation model with efficiency and effectiveness for large-scale incomplete data, such that for the optimized model, (i) the training cost is minimized, and (ii) the imputation accuracy satisfies a user-tolerated error bound.

In particular, the GAN-based imputation model builds an adversarial training platform for two players, i.e., the generator and discriminator. The generator is applied to generate data as close to the true underlying data distribution as possible. While the discriminator distinguishes the difference between the generated data and true underlying data as correctly as possible. The objective function of GAN-based imputation is defined as a minimax problem over the generator and discriminator. As a result, the GAN-based model employs the optimized generator to impute missing values via using Eq. 1.

III System Overview

In this section, we present an overview of the proposed system SCIS. It consists of a differentiable imputation modeling (DIM) module and a sample size estimation (SSE) module.

To facilitate the effective and scalable imputation on large-scale incomplete data, our proposed system SCIS first converts a GAN-based imputation model into a differentiable one, to avoid the “vanishing” gradient problem. Then, for such a differentiable GAN-based imputation model, SCIS minimizes the training sample size under accuracy-guarantees to accelerate the imputation. Its general procedure is described in Algorithm 1. At first, SCIS samples a size- validation dataset (with the validation mask matrix ) from the incomplete input dataset (with the mask matrix ), while it samples a size- dataset (with the initial mask matrix ) from the rest (line 1). Then, SCIS invokes the DIM module to train an initial model with the masking Sinkhorn (MS) divergence imputation loss function over and (line 2).

Next, with the support of the differentiablity of MS divergence, SCIS consults the SSE module to estimate the minimum sample size (with ) based on , such that the imputation difference of the trained models over the size- dataset and the (whole) size- dataset would not exceed the user-tolerated error bound with probability at least (). In particular, if is equal to , SCIS simply outputs and the matrix imputed by . Otherwise, SCIS constructs a size- sample set and its mask matrix from and . It invokes the DIM module again to retrain using and (lines 3-5). Finally, SCIS returns the trained model and the matrix imputed by .

IV Differentiable Imputation Modeling

For the GAN-based imputation model, the intersection in the supports of the true underlying and generated data distributions is usually negligible [18]. In such case, the JS divergence makes the GAN-based imputation models non-differentiable, and even suffer from the “vanishing” gradient problem. This problem may prevent the model parameter from updating its value or even stop model from further training.

In the SCIS, the differentiable imputation modeling (DIM) module designs a masking Sinkhorn (MS) divergence by deploying the mask matrix from the imputation task and optimal transport theory, to make the GAN-based imputation models differentiable, and thus obtain reliable gradients through the model training and circumvent “vanishing” gradient problem. In this section, we first introduce the MS divergence imputation loss function. Then, we elaborate how to optimize the GAN-based imputation model via the MS divergence.

IV-A Masking Sinkhorn Divergence

The differentiable masking Sinkhorn (MS) divergence is devised by introducing the mask matrix, entropic regularizer, and corrective terms into the optimal transport metric. Specifically, in the MS divergence, we first empower the optimal transport metric with the mask matrix, to devise the masking optimal transport metric for data imputation. Then, we put forward the entropic regularizer to make the masking regularized optimal transport metric differentiable and programmable. We also equip the MS divergence with corrective terms to correct the bias from the entropic regularizer.

To be more specific, the masking optimal transport metric is introduced by deploying the mask matrix and optimal transport metric, as stated in Definition 2. It is based on a simple intuition that the observed and generated data should share the same distribution. Let , , and denote the empirical measures over a size- data matrix , its mask matrix , and the reconstructed matrix respectively, where , , and are the Dirac distributions.

Definition 2

(The masking optimal transport). The masking optimal transport metric over and is defined as the optimal transport metric over and , i.e.,

where (resp. ) stands for the product measure of (resp. ) and . The transportation plan matrix is from the set . The masking cost matrix is defined as

where is the element-wise multiplication; is the cost function; is the Frobenius dot-product of and .

Nervertheless, the masking optimal transport metric is still not differentiable [36]. Moreover, there exists a costly linear program for computing the transport plan in the masking optimal transport metric. As a result, we further introduce a masking regularized optimal transport metric, as stated in Definition 3. This metric becomes differentiable and programmable through an entropic regularization term [37].

Definition 3

(The masking regularized optimal transport). The masking regularized optimal transport metric over and is defined as

| (2) | ||||

where is a hyper-parameter.

Due to the entropic regularizer in Eq. 2, the resolution of the optimal transport plan can be tractable by using Sinkhorn’s algorithm [38], and thus the masking regularized optimal transport metric becomes algorithmically differentiable and easily programmable. This entropic regularizer makes the masking regularized optimal transport metric no longer positive. This may make the imputation models focus too much on learning the mean of the true underlying data distribution (i.e., ignoring the whole distribution). To get rid of this issue, we add corrective terms into the MS divergence to assure itself of positivity.

Definition 4

(The masking Sinkhorn divergence). The masking Sinkhorn (MS) divergence between the empirical measures and is defined as

Additionally, we instantiate how the MS divergence handles the “vanishing” gradient problem in the following example. Hereby, we also show the non-differentiable property of the JS divergence and the differentiable property of the MS divergence. Throughout this paper, we make the assumption that the data are missing completely at random (i.e., MCAR).

Example 1

For the imputation task defined in Definition 1, we consider and . Under MCAR mechanism, the mask vector is independent of the sample , i.e., . Thus, a joint distribution of and can be defined as . In particular, the true underlying and generated data distributions are defined as and with the parameter , respectively. Besides, the missingness distribution is supposed to follow a Bernoulli distribution with a constant . For simplicity, we denote and as the distributions of and , respectively. Thus, the JS divergence between and is calculated by

We can find that, is not continuous at , and thus non-differentiable. Moreover, the gradients of are 0 almost everywhere. Thus, provides useless gradient information to update the model parameter , which underlies the “vanishing” gradient problem.

In contrast, the differentiable MS divergence between and is calculated by

in particular, . is calculated by

Moreover, is defined as

where denotes the set of all joint distributions , whose marginals are and , respectively. The second equality exploits the fact that the optimal joint distribution is calculated by

Thus, . It is obvious that, is differentiable everywhere with respect to . The gradients of vary linearly, which can always provide reliable gradient information to update , and thus dispose of the “vanishing” gradient problem.

In general, the differentiable MS divergence imputation loss function can be defined as

By virtue of the differentiable MS divergence, the MS divergence imputation loss function can provide a usable and reliable gradient during the training of the GAN-based model, and thus it helps to get rid of the “vanishing” gradient issue.

It is worthwhile to note that, the proposed MS divergence from SCIS is to minimize the MS divergence between the true underlying data distribution in the observed data and generated data distribution for GAN-based imputation models. Hence, with the support of the MS divergence, the true underlying data distribution can be well preserved in the imputed data from observed values. It is different from the Sinkhorn divergence used in the round-robin Sinkhorn imputation algorithm (RRSI) [12], where the Sinkhorn divergence between any two imputed batches is minimized for the MLP-based imputation model. In essence, the data distribution predicted by RRSI easily converges to a mixed distribution of the observed data and initial imputed data, rather than the true underlying one, especially when there exist a large amount of missing data. More intuitively, at the initial step, RRSI fills the missing data by the mean value of the observed data for each incomplete feature separately. Thus, RRSI is likely to regard two samples with similar missing patterns as similar ones, while these two samples are actually different in the ground true space.

IV-B Imputation Optimization with MS Divergence

In DIM module, we convert a GAN-based imputation model into a differentiable one, by taking the MS divergence to measure the closeness between the true underlying data distribution in and the generated data distribution in . In pursuit of deriving the GAN-based imputation model to predict as similar to the observed data in as possible, our goal becomes to find the optimized parameter minimizing from a parameter space . Formally, we rewrite as . Therefore, the MS divergence imputation loss minimizer is given by

| (3) |

By using the chain rule and the barycentric transport map [39], the MS divergence gradient function can be derived by the following proposition.

Proposition 1

The MS divergence gradient function can be calculated by

where and are the derivatives of with respect to the parameter and the reconstructed matrix , respectively; is the derivative of with respect to ; is the optimal transport plan; is to transform a mask vector to a diagonal matrix.

Proof:

First, by using the barycentric transport map, we obtain that ,

Then, by using the chain rule, the gradient of can be calculated by

∎

Consequently, as depicted in Figure 1, the DIM module takes the data matrix and its mask matrix as inputs, and outputs the optimized GAN-based imputation model and the data matrix imputed by . Using the MS divergence gradient function, DIM employs a mini-batch gradient descent technique [40] to solve the optimization problem in Eq. 3 for the differentiable GAN-based imputation model. To be more specific, similar as the studies [19, 41], the discriminator is trained to maximize the MS divergence between the true underlying and generated data distributions, while the generator is trained by minimizing the MS divergence metric evaluated by the newly updated discriminator. As a result, the DIM module employs the optimized GAN-based imputation model to impute missing values in via using Eq. 1.

V Sample Size Estimation

With the support of the differentiable GAN-based imputation model in the DIM module, the goal of the sample size estimation (SSE) module is to analytically infer the minimum sample size (with ) under accuracy guarantees. With the SSE module, the imputation difference between the model trained on the size- (in)complete samples from and the model trained on the given incomplete dataset is well-controlled, i.e.,

| (4) |

where is a user-tolerated error bound; is a confidence level; is the mask vector of ; and are the vectors reconstructed by and over , respectively.

The core idea of SSE is that, the differentiablity of MS divergence enables SCIS to determine the distributions of the parameters and for GAN-based imputation models (i.e., and ), based on which we exploit an empirical estimation to calculate the probability , i.e., . Specifically, in the SSE module, we first estimate the distributions of the parameters and with the given parameter of the initial model by using the minimum distance estimator on differentiable MS divergence, as shown in Theorem 1. Then, we employ an empirical estimation to calculate the probability , as presented in Proposition 2. Finally, with the estimation of , we use binary search to find the minimum size (in)complete samples to satisfy the users’ expectation on imputation accuracy in Eq. 4.

To begin with, we study the estimation on the distribution of the parameter in an unknown differentiable GAN-based imputation model with a size- () (in)complete sample set from , by using the parameter of the initial model . Inspired by the asymptotic normality of minimum distance estimator [42], we estimate the parameter distribution of in with the given of , as stated in Theorem 1. For brevity, we make a notation that for two positive-value functions and over the same domain and upper bounded by a positive constant, iff and are bounded by a constant. In particular, the MS divergence gradient function makes Theorem 1 work for GAN-based imputation models, while the corresponding theorem in [43] is only targeted for traditional machine learning models.

Theorem 1

Given the parameter of a differentiable generative adversarial imputation model , the conditional probability distribution of with respect to satisfies

| (5) |

as and , where is a hyper-parameter in the MS divergence; is the invertible Hessian matrix of the MS divergence imputation loss function with the given parameter ; denotes a multivariate normal distribution with mean and covariance matrix .

Proof:

To estimate the conditional probability distribution of , we first derive the distribution of by the multivariate central limit theorem, where is the conceptual optimal parameter when the sample size approaches infinity. Then, we infer the distribution of based on that of . Finally, we exploit Bayes’ theorem to estimate the distribution of from the distribution of .

Specifically, since the MS divergence is differentiable, is obtained by finding the parameter at which becomes 0, i.e., satisfies . According to the mean-value theorem, there exists between and that satisfies

Besides, we note that is simply an instance from the distribution of . Therefore, we can find

| (6) | ||||

| (7) | ||||

where ; and are the information matrix and the invertible Hessian matrix of the MS divergence imputation loss function with the given parameter , respectively. The transition from Eq. 6 to Eq. 7 is based on the multidimensional central limit theorem and the fact that the simple complexity for Sinkhorn divergence is [44], where . Here, is the diameter of . is the Lipschitz constant for the cost function . In our case, both and will be 1, since the input data will be normalized to and the cost function is a squared two-norm function. Thus, . Moreover, the information matrix equality implies the last equation.

Then, with the estimated distribution of , we further infer the distribution of . To be more specific, since can be regarded as a union of and , we introduce two random variables and independently following to capture the randomness of and , respectively. Note that . In this way, we can find

Moreover, we exploit the fact that , which is the sum of two independent normal random variables, asymptotically follows a normal distribution. Thus, when both and approach infinity, follows

Finally, we exploit Bayes’ theorem to estimate the distribution of based on the distribution of . Observe that and are independent because the covariance between them is zero, i.e.,

Thus,

which implies

| with |

By using Bayes’ theorem,

for some normalization constant . Since we don’t have any extra information about the prior probability , we set a constant to . Therefore, we can find that, the conditional probability distribution of with respect to satisfies

| with |

∎

For the differentiable GAN-based imputation model , the invertible Hessian matrix in Eq. LABEL:eq:conditional can be computed by

In particular, indicates the Hessian matrix of with respect to . The approximation follows by ignoring the first term in the first equation. The first term is negligible, since the reconstructed vector happens to be very close to the observed values in , where the initial model has been trained on [45].

As a result, with the given parameter and the estimated probability distribution of the parameter , we employ an empirical estimation to determine . It is inspired by the conservative estimation developed on Hoeffding’s inequatity in [43]. We can guarantee the probability at least () by requesting the empirical estimation of no less than a certain value, as described in Proposition 2. In essence, we exploit interval estimation to derive a new lower bound for the empirical statistic of in Proposition 2. The lower bound is orthogonal to the one in [43] by considering an additional hyper-parameter to bound the error between and its empirical statistic.

Proposition 2

Let be the density function of the conditional probability distribution . Suppose are i.i.d. samples drawn from , where is the number of parameter sampling. The inequality holds, if satisfies that,

where is a hyper-parameter () and is the indicator function that returns 1 if its argument is true, otherwise returns 0.

Proof:

By applying Hoeffding’s inequality,

Hence, for a given , we can select , so as to have () probability of assuring not less than .

Furthermore, to guarantee that , we will take conservative estimation, i.e., ensuring that

Thus, we can finally have at least confidence on guaranteeing . ∎

Therefore, according to Proposition 2, the probability can be approximated by

where is a parameter pair sampled from the conditional probability distributions and , respectively. Moreover, is an increasing function with respect to , which is derived in the similar way as [43].

Based on Proposition 2, the SSE module uses binary search to find the minimum size (with ) of (in)complete samples in to satisfy the users’ expectation on imputation accuracy. The search procedure terminates, if satisfies Eq. 4 while () does not. Namely,

where is the parameter of the GAN-based imputation model trained with the size-() (in)complete samples.

VI Experiment

In this section, we evaluate the performance of our proposed system SCIS via comparisons with eleven state-of-the-art imputation methods. All algorithms were implemented in Python. The experiments were conducted on an Intel Core 2.80GHz server with TITAN Xp 12GiB (GPU) and 192GB RAM, running Ubuntu 18.04 system.

Datasets. In the experiments, we use six public real-world incomplete COVID-19 datasets over several scenarios. Table II lists the information of each dataset, including the number of samples (Samples), features (Features), and missing rate, respectively. In particular, (i) COVID-19 trials tracker222https://www.kaggle.com/frtgnn/covid19-trials-tracker?select=Covid-19+Trials Tracker.csv (Trial) dataset shows the clinical trial registries on studies of COVID-19 all around the world and tracks the availability of the studies’ results. It contains 6,433 trials with 9 features, taking an about 9.63% missing rate. (ii) Emergency declarations333https://github.com/GoogleCloudPlatform/covid-19-open-data/blob/main/docs/table-emergency-declarations.md (Emergency) dataset, which is aggregated by the Policy Surveillance Program at the Temple University Center for Public Health Law Research, contains emergency declarations and mitigation policies for each US state starting on January 20, 2020. It includes 8,364 samples with 22 features, taking an about 62.69% missing rate. (iii) Government response444https://github.com/GoogleCloudPlatform/covid-19-open-data/blob/main/docs/table-government-response.md (Response) dataset contains a summary of a government’s response to the events, including a stringency index, collected from the university of Oxford [46]. It includes 200,737 samples with 19 dimensions and a 5.66% missing rate. (iv) Symptom search trends555https://github.com/GoogleCloudPlatform/covid-19-open-data/blob/main/docs/table-search-trends.md (Search) dataset for COVID-19 shows how Google search patterns for different symptoms change based on the relative frequency of searches for each symptom in 2,792 specific regions. It totally contains 948,762 samples with 424 symptoms and an 81.35% missing rate. (v) Daily weather666https://github.com/GoogleCloudPlatform/covid-19-open-data/blob/main/docs/table-weather.md (Weather) dataset shows the 9 daily weather attributes from the nearest station reported by National Oceanic and Atmospheric Administration in specific regions. It includes 4,911,011 samples from 19,284 regions with a 21.56% missing rate. (vi) COVID-19 case surveillance public use777https://data.cdc.gov/Case-Surveillance/COVID-19-Case-Surveillance-Public-Use-Data/vbim-akqf (Surveil) dataset shows the 7 clinical and symptom features for 22,507,139 cases shared by the centers for disease control and prevention, taking a 47.62% missing rate. In particular, the licenses for Trial, Emergency, Response, Search, Weather, and Surveil datasets are ODbL, CC BY, CC BY, CC0, CC BY, and CC0, respectively.

| Name | #Samples | #Features | Missing rate |

|---|---|---|---|

| Trial | 6,433 | 9 | 9.63% |

| Emergency | 8,364 | 22 | 62.69% |

| Response | 200,737 | 19 | 5.66% |

| Search | 948,762 | 424 | 81.35% |

| Weather | 4,911,011 | 9 | 21.56% |

| Surveil | 22,507,139 | 7 | 47.62% |

| Method | Trial | Emergency | Response | ||||||

| RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | |

| MissF | 0.417 ( 0.023) | 152 | 100 | ||||||

| Baran | 0.412( 0.025) | 1,204 | 100 | ||||||

| MICE | 0.402( 0.021) | 592 | 100 | ||||||

| DataWig | 0.458 ( 0.042) | 329 | 100 | 0.389 ( 0.031) | 3,252 | 100 | |||

| RRSI | 0.398 ( 0.011) | 413 | 100 | 0.358 ( 0.025) | 4,673 | 100 | |||

| MIDAE | 0.445 ( 0.025) | 532 | 100 | 0.378 ( 0.032) | 2,321 | 100 | |||

| VAEI | 0.463 ( 0.023) | 321 | 100 | 0.398 ( 0.036) | 892 | 100 | |||

| MIWAE | 0.396 ( 0.015) | 892 | 100 | ||||||

| EDDI | 0.404 ( 0.018) | 132 | 100 | 0.363 ( 0.019) | 662 | 100 | |||

| HIVAE | 0.396 ( 0.020) | 124 | 100 | 0.357 ( 0.0.20) | 450 | 100 | 0.398 ( 0.018) | 969 | 100 |

| GINN | 0.432 ( 0.030) | 327 | 100 | 0.373 ( 0.028) | 1,572 | 100 | |||

| SCIS-GINN | 0.430 ( 0.028) | 311 | 16.72 | 0.369 ( 0.025) | 962 | 13.92 | 0.412 ( 0.023) | 7,263 | 2.53 |

| GAIN | 0.398 ( 0.024) | 90 | 100 | 0.352 ( 0.025) | 340 | 100 | 0.396 ( 0.031) | 649 | 100 |

| SCIS-GAIN | 0.386 ( 0.017) | 85 | 23.56 | 0.342 ( 0.019) | 286 | 12.32 | 0.389 ( 0.021) | 595 | 1.50 |

| Method | Search | Weather | Surveil | ||||||

| RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | |

| HIVAE | 0.174 ( 0.014) | 14,612 | 100 | 0.440 ( 0.008) | 35,041 | 100 | |||

| GINN | |||||||||

| SCIS-GINN | 0.243 ( 0.031) | 78,621 | 1.92 | ||||||

| GAIN | 0.252 ( 0.014) | 78,121 | 100 | 0.165 ( 0.014) | 9,252 | 100 | 0.440 ( 0.012) | 29,102 | 100 |

| SCIS-GAIN | 0.250 ( 0.013) | 3,534 | 0.78 | 0.163 ( 0.016) | 2,275 | 1.90 | 0.438 ( 0.013) | 3,019 | 0.67 |

Metrics. In the evaluation, we use the training time and root mean squared error (RMSE) to measure the efficiency and effectiveness of imputation models. We also report the training sample rate , i.e., how many samples are used for training models (100% for basic original ones and for SCIS). The smaller the metric value, the better the imputation performance. To obtain the RMSE values, we randomly remove 20% observed values during training for imputation, and thus we use these observed values as the ground-truth to the missing values. In evaluation, each value is reported by averaging five times of experimental results under different data random divisions.

Imputation methods. In the experiments, the baselines include eleven state-of-the-art imputation methods, namely three machine learning ones: MissF, Baran, and MICE, two MLP-based ones: DataWig and RRSI, four AE-based ones: MIDAE, VAEI, EDDI, and HIVAE, and two GAN-based ones: GINN and GAIN. We evaluate SCIS on top of the GAN-based imputation methods.

Implementation details. For all imputation methods, we thank the authors of each algorithm for providing the source codes, so that we directly utilize these source codes with default parameter settings in our experiment evaluation. Specifically, for all machine learning imputation methods, the learning rate is 0.3, and the number of iterations is 100. The number of decision trees in MissFI is 100. Baran employs AdaBoost as the prediction model. The imputation times in MICE are 20. For all deep learning imputation methods, the learning rate is 0.001, the dropout rate is 0.5, the training epoch is 100, and the batch size is 128. The ADAM algorithm is utilized to train networks. MIDAE is a 2-layer with 128 units per layer network. For VAEI, the encoder and decoder are fully connected networks with two hidden layers, each with 20 neurons per layer, and the latent space is 10-dimensional. HIVAE uses only one dense layer for all the parameters of the encoder and decoder, each with 10 neurons per layer. In GINN, the discriminator is a simple 3-layer feed-forward network trained 5 times for each optimization step of the generator. In GAIN, both generator and discriminator are modeled as 2-layer fully connected network. Moreover, in SCIS, the hyper-parameter is 130, the confidence level is 0.05, the hyper-parameter is 0.01, the number of parameter sampling in SSE is 20, the user-tolerated error bound is 0.001. The initial sample size is 500 for Trial, 500 for Emergency, 2,000 for Response, 6,000 for Search, 20,000 for Weather, and 20,000 for Surveil. The validation sample size is equal to .

VI-A Scalability Evaluation

Table III and Table IV report the performance of imputation methods over six real-world incomplete datasets. Some results are unavailable (represented by “”), since the corresponding methods are not able to finish within seconds.

One can observe that, SCIS takes less training time and smaller training sample rate than baselines, while it achieves competitive imputation accuracy (i.e., similar RMSE value). Moreover, SCIS-GAIN outperforms the other methods in most cases. Specifically, SCIS adopts only 7.58% training samples and saves 41.75% training time of GAN-based methods in average. For the last three million-size incomplete datasets in Table IV, SCIS takes only 1.53% training samples and saves 86.85% training time of the GAN-based methods in average. In particular, compared with the Sinkhorn divergence based method RRSI, SCIS-GAIN takes only 17.94% training samples and saves 86.65% training time in average, while averagely increases 3.74% accuracy. We omit MissF, Baran, MICE, RRSI, MIDAE, VAEI, MIWAE, and EDDI on the larger million-size datasets, due to the high model complexity.

Moreover, the speedup of SCIS is not very obvious on the first three dataset in Table III, compared with the other ones in Table IV. It is because that, the initial sample size for the first three datasets are significantly smaller than that of the other ones. The smaller , the larger the variance derived by Eq. LABEL:eq:conditional, resulting in higher training sample rate and training time. The training time (resp. training sample rate) even decreases to 4.52% (resp. 0.67%) on Search (resp. Surveil) for GAIN. It is attributed to the SSE module in SCIS that minimizes the required training sample size of GAN-based methods. In addition, the competitive (even better) accuracy with (than) original methods results from the MS divergence imputation loss function employed in SCIS that measures the closeness between the true underlying data and generated data distributions. Also, the accuracy guarantee in SSE benefits the accuracy of SCIS. It even increases 3.02% accuracy for GAIN on Trial. In particular, the experimental results of SCIS-GINN are unavailable over Search and Surveil datasets, since GINN has a high complexity on construction of the similarity graph. For further extensive experimental study on SCIS, we employ GAIN as the baseline, since GAIN can work on these datasets, providing a clear comparison benchmark.

VI-B Parameter Evaluation

Effect of . When varying the missing rate (i.e., how many values in original observed data are dropped) from 10% to 90%, the corresponding results are depicted in Figure 2. It also reports the time cost of the SSE module, which is the core module of SCIS. The reported SCIS training time has included the execution time of SSE. We can find that, compared with GAIN, SCIS takes much less training time and training samples to obtain a similar or even higher imputation accuracy in all cases. It is more robust with the increasing missing rate than GAIN. The SSE module takes 28.31% training time of SCIS in average. In addition, the imputation accuracy of both GAIN and SCIS is comparable, and it descends consistently with the growth of missing rate. The reason is that, as the missing rate increases, the observed information for algorithms becomes less, making imputation algorithms less effective.

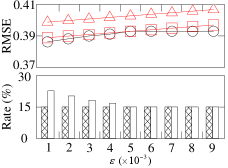

Effect of . To verify the effectiveness of the imputation accuracy guarantee, we study the effect of the user-tolerated error bound on the performance of SCIS with GAIN. With varying from 0.001 to 0.009, Figure 3 plots the corresponding results. The sample rate of the initial training set , i.e., (and that of the minimum sample set , i.e., ). The user-tolerated error is derived by + , where is the RMSE value of GAIN with the MS divergence imputation loss function trained on . In order to verify the effect of the DIM module on SCIS, we also report the imputation error + in the figure, where is the RMSE value of original GAIN model trained on . Some results are unavailable in the figure, because the corresponding methods cannot finish within seconds.

As inferred from the figure, SCIS has the higher imputation accuracy than the user-tolerated error + and the imputation error + in most cases. It means that, the SSE module can estimate an appropriate sample size to get as the good imputation accuracy as the users’ expectation, i.e., it indeed satisfies the accuracy requirement of users. Besides, the error derived by SCIS is smaller than derived by GAIN in many cases. It confirms that, the DIM module using the MS divergence does boost the imputation accuracy. Moreover, in most cases, the RMSE of SCIS increases with the growth of , while is opposite. It is because, a smaller value of signifies a lower user-tolerated error. Hence, more samples (i.e., a larger ) are needed to satisfy a lower RMSE requirement. In addition, when exceeds 0.005, the RMSE of SCIS changes slightly since equals the lower bound .

Effect of . Figure 4 depicts the experimental results of varying the initial sample size . For SCIS with GAIN, different datasets require different optimal . SCIS achieves the best imputation accuracy (in terms of RMSE) when the optimal is chosen, i.e., 500 for Trial, 500 for Emergency, 2,000 for Response, 6,000 for Search, 20,000 for Weather, and 20,000 for Surveil. Meanwhile, the time consumption and training sample rate remain reasonable and acceptable. In addition, the of SCIS increases with the decrease of in most cases. It is partially because that, the less the initial sample size , the larger the variance derived by Eq. LABEL:eq:conditional, leading to more training samples.

| Method | Trial | Emergency | Response | ||||||

|---|---|---|---|---|---|---|---|---|---|

| RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | |

| GAIN | 0.398 ( 0.024) | 90 | 100 | 0.352 ( 0.025) | 340 | 100 | 0.396 ( 0.031) | 649 | 100 |

| DIM-GAIN | 0.383 ( 0.022) | 292 | 100 | 0.340 ( 0.025) | 617 | 100 | 0.386 ( 0.028) | 7,771 | 100 |

| Fixed-DIM-GAIN | 0.389 ( 0.025) | 57 | 10 | 0.348 ( 0.028) | 125 | 10 | 0.388 ( 0.002) | 836 | 10 |

| SCIS-GAIN | 0.386 ( 0.017) | 85 | 23.56 | 0.342 ( 0.019) | 286 | 12.32 | 0.389 ( 0.021) | 595 | 1.50 |

| Method | Search | Weather | Surveil | ||||||

|---|---|---|---|---|---|---|---|---|---|

| RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | RMSE (Bias) | Time (s) | (%) | |

| GAIN | 0.252 ( 0.014) | 78,121 | 100 | 0.165 ( 0.014) | 9,252 | 100 | 0.440 ( 0.012) | 29,102 | 100 |

| DIM-GAIN | |||||||||

| Fixed-DIM-GAIN | 0.247 ( 0.007) | 27,045 | 10 | 0.158 ( 0.012) | 18,408 | 10 | 0.434 ( 0.015) | 78,508 | 10 |

| SCIS-GAIN | 0.250 ( 0.013) | 3,534 | 0.78 | 0.163 ( 0.016) | 2,275 | 1.90 | 0.438 ( 0.013) | 3,019 | 0.67 |

| Metric | Dataset | GAIN | SCIS-GAIN |

|---|---|---|---|

| AUC | Trial | 0.903 ( 0.040) | 0.905 ( 0.040) |

| Surveil | 0.949 ( 0.020) | 0.952 ( 0.025) | |

| MAE | Emergency | 122.312 ( 5.322) | 121.683 ( 4.985) |

| Response | 106.216 ( 7.689) | 105.343 ( 6.321) | |

| Search | 89.142 ( 9.543) | 88.879 ( 9.901) | |

| Weather | 100.262 ( 7.837) | 99.872 ( 9.222) |

VI-C Ablation Study

We investigate the influence of different modules of SCIS on the imputation performance. The corresponding experimental results of the RMSE, training time, and training sample rate are shown in Table V and Table VI. DIM-GAIN is the variant of SCIS-GAIN without the SSE module over GAIN. Fixed-DIM-GAIN, a variant of DIM-GAIN, randomly selects ten percentage of samples as the training data to accelerate the model training process. In Table VI, the results of DIM-GAIN are unavailable (represented by “”), since they are not able to finish within seconds.

We can observe that, DIM-GAIN gains better imputation accuracy (i.e., smaller RMSE value) than GAIN, while requires higher training time. Besides, compared with DIM-GAIN and Fixed-DIM-GAIN, SCIS-GAIN takes significantly less training time and training samples, while shows a negligible decrease in imputation accuracy, especially on the last three incomplete datasets. Specifically, DIM-GAIN increases 3.24% accuracy for GAIN in average, but its training time is 4.68x averagely of GAIN. It confirms the effectiveness of the MS divergence imputation loss function in the DIM module. SCIS-GAIN takes 6.79% (resp. 67.88%) training samples and saves 72.29% (resp. 20.27%) training time of DIM-GAIN (resp. Fixed-DIM-GAIN) in average, while only averagely decreases 0.72% (resp. 0.51%) accuracy for DIM-GAIN (resp. Fixed-DIM-GAIN). In general, these results further confirm that the sample size estimation strategy in the SSE module is valid and indispensable. Moreover, for Fixed-DIM-GAIN and SCIS-GAIN that use the same imputation model (i.e., DIM-GAIN), the more training samples (i.e., higher training sample rate ) the imputation method requires, the higher imputation accuracy it achieves. It is because that, the more the training samples, the higher the available information for imputation algorithms, making algorithms more powerful.

VI-D Evaluation on Post-imputation Prediction

The ultimate goal of imputing missing data is to benefit the downstream data analytics, e.g., regression and classification. In the last set of experiments, we verify the superiority of SCIS over GAIN on the post-imputation prediction task.

For the classification task over Trial and Surveil and the regression task over Emergency, Response, Search, and Weather, the corresponding prediction results are depicted in Table VII. The larger AUC value corresponds to the better prediction effect, while MAE is opposite. The imputation methods are first employed to impute missing values in the incomplete datasets. Then, a regression/classification model is trained with three fully-connected layers over the imputed data. The training epoch is 30, the learning rate is 0.005, the dropout rate is 0.5, and the batch size is 128.

We can observe that, the prediction performance under different imputation algorithms is consistent with the imputation performance of these algorithms, i.e., SCIS-GAIN gains competitive (even better) accuracy with (than) GAIN. Specifically, SCIS-GAIN decreases 0.51% MAE for GAIN on the regression task, while increases 0.27% AUC for GAIN on the classification task. In addition, on the regression (resp. classification) task, SCIS-GAIN achieves the larger improvement over the Weather (resp. Response) dataset. Thus, it further confirms the effectiveness of SCIS.

VII Conclusion

In this paper, we propose an effective scalable imputation system SCIS to accelerate GAN-based imputation models. It consists of a DIM module and an SSE module. DIM makes the generative adversarial imputation models differentiable via leveraging a new MS divergence. SSE estimates the minimum training sample size for the differentiable imputation model, so that the final trained model satisfies a user-tolerated error bound. Extensive experiments over several real-world datasets demonstrate that, SCIS significantly accelerates the model training and meanwhile harvests the competitive imputation accuracy with the state-of-the-art GAN-based methods.

Almost all existing GAN-based imputation algorithms are under the simple MCAR assumption. It to some extent limits the effectiveness of SCIS under the real complex missing mechanism. In the future, we intend to further explore the imputation problem under more complex missing mechanisms for large-scale incomplete data.

Acknowledgment

This work is partly supported by the Zhejiang Provincial Natural Science Foundation for Distinguished Young Scholars under Grant No.LR21F020005, the National Natural Science Foundation of China under Grants No.61902343, No.62025206, No.61972338, No.62102351, No.61825205, the National Key Research and Development Program of China under Grant No.2019YFE0126200, the National Science and Technology Major Project of China under Grant No.50-D36B02-9002-16/19, and the Fundamental Research Funds for the Central Universities under Grant No.2021FZZX001-25. Xiaoye Miao is the corresponding author of the work.

References

- [1] L. Berti-Equille, H. Harmouch, F. Naumann, N. Novelli, and S. Thirumuruganathan, “Discovery of genuine functional dependencies from relational data with missing values,” Proceedings of the VLDB Endowment, vol. 11, no. 8, pp. 880–892, 2018.

- [2] X. Miao, Y. Wu, J. Wang, Y. Gao, X. Mao, and J. Yin, “Generative semi-supervised learning for multivariate time series imputation,” in AAAI, 2021, pp. 8983–8991.

- [3] T. Rekatsinas, X. Chu, I. F. Ilyas, and C. Ré, “HoloClean: Holistic data repairs with probabilistic inference,” Proceedings of the VLDB Endowment, vol. 10, no. 11, pp. 1190–1201, 2017.

- [4] M. A. Soliman, I. F. Ilyas, and S. Ben-David, “Supporting ranking queries on uncertain and incomplete data,” The VLDB Journal, vol. 19, no. 4, pp. 477–501, 2010.

- [5] Z. Wei and S. Link, “Embedded functional dependencies and data-completeness tailored database design,” Proceedings of the VLDB Endowment, vol. 12, no. 11, pp. 1458–1470, 2019.

- [6] F. Biessmann, D. Salinas, S. Schelter, P. Schmidt, and D. Lange, “Deep learning for missing value imputationin tables with non-numerical data,” in CIKM, 2018, pp. 2017–2025.

- [7] W. Cao, D. Wang, J. Li, H. Zhou, L. Li, and Y. Li, “BRITS: Bidirectional recurrent imputation for time series,” in NeurIPS, 2018, pp. 6775–6785.

- [8] P. Royston and I. R. White, “Multiple imputation by chained equations (MICE): Implementation in Stata,” Journal of Statistical Software, vol. 45, no. 4, pp. 1–20, 2011.

- [9] P.-A. Mattei and J. Frellsen, “MIWAE: Deep generative modelling and imputation of incomplete data sets,” in ICML, 2019, pp. 4413–4423.

- [10] A. Farhangfar, L. A. Kurgan, and W. Pedrycz, “A novel framework for imputation of missing values in databases,” IEEE Transactions on Systems, Man, and Cybernetics -Part A: Systems and Humans, vol. 37, no. 5, pp. 692–709, 2007.

- [11] D. J. Stekhoven and P. Bühlmann, “MissForest non parametric missing value imputation for mixed-type data,” Bioinformatics, vol. 28, no. 1, pp. 112–118, 2011.

- [12] M. Boris, J. Julie, B. Claire, and C. Marco, “Missing data imputation using optimal transport,” in ICML, 2020, pp. 1–18.

- [13] N. B. Ipsen, P.-A. Mattei, and J. Frellsen, “not-MIWAE: Deep generative modelling with missing not at random data,” ArXiv Preprint ArXiv:2006.12871, 2020.

- [14] J. Yoon, J. Jordon, and M. van der Schaar, “GAIN: Missing data imputation using generative adversarial nets,” in ICML, 2018, pp. 5675–5684.

- [15] I. Spinelli, S. Scardapane, and A. Uncini, “Missing data imputation with adversarially-trained graph convolutional networks,” ArXiv Preprint ArXiv:1905.01907, 2019.

- [16] J. Kim, D. Tae, and J. Seok, “A survey of missing data imputation using generative adversarial networks,” in ICAIIC, 2020, pp. 454–456.

- [17] O. Wahltinez et al., “COVID-19 Open-Data: Curating a fine-grained, global-scale data repository for SARS-CoV-2,” 2020, work in Progress. [Online]. Available: https://goo.gle/covid-19-open-data

- [18] M. Arjovsky and L. Bottou, “Towards principled methods for training generative adversarial networks,” ArXiv Preprint ArXiv:1701.04862, 2017.

- [19] M. G. Bellemare, I. Danihelka, W. Dabney, S. Mohamed, B. Lakshminarayanan, S. Hoyer, and R. Munos, “The cramer distance as a solution to biased wasserstein gradients,” ArXiv Preprint ArXiv:1705.10743, 2017.

- [20] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein generative adversarial networks,” in ICML, 2017, pp. 214–223.

- [21] B. Twala, M. Cartwright, and M. Shepperd, “Comparison of various methods for handling incomplete data in software engineering databases,” in ESEM, 2005, pp. 234–239.

- [22] N. S. Altman, “An introduction to kernel and nearest -neighbor nonparametric regression,” The American Statistician, vol. 46, no. 3, pp. 175–185, 1992.

- [23] Y. Zhang, H. Zhang, Z. He, Y. Jing, K. Zhang, and X. S. Wang, “Parrot: A progressive analysis system on large text collections,” Data Science and Engineering, vol. 6, no. 1, pp. 1–19, 2021.

- [24] J. Yang, W. Yao, and W. Zhang, “Keyword search on large graphs: A survey,” Data Science and Engineering, vol. 6, no. 2, pp. 142–162, 2021.

- [25] T. Chen and C. Guestrin, “XGBoost: A scalable tree boosting system,” in SIGKDD, 2016, pp. 785–794.

- [26] M. Mahdavi and Z. Abedjan, “Baran: Effective error correction via a unified context representation and transfer learning,” Proceedings of the VLDB Endowment, vol. 13, no. 12, pp. 1948–1961, 2020.

- [27] A. Zhang, S. Song, Y. Sun, and J. Wang, “Learning individual models for imputation,” in ICDE, 2019, pp. 160–171.

- [28] S. Ruder, “An overview of gradient descent optimization algorithms,” ArXiv Preprint ArXiv:1609.04747, 2016.

- [29] G. Du, L. Zhou, Y. Yang, K. Lü, and L. Wang, “Deep multiple auto-encoder-based multi-view clustering,” Data Science and Engineering, pp. 1–16, 2021.

- [30] R. Mahajan and V. Mansotra, “Predicting geolocation of tweets: Using combination of cnn and bilstm,” Data Science and Engineering, vol. 6, no. 4, pp. 402–410, 2021.

- [31] F. Biessmann, T. Rukat, P. Schmidt, P. Naidu, S. Schelter, A. Taptunov, D. Lange, and D. Salinas, “Datawig: Missing value imputation for tables,” Journal of Machine Learning Research, vol. 20, pp. 175–1, 2019.

- [32] L. Gondara and K. Wang, “Multiple imputation using deep denoising autoencoders,” ArXiv Preprint ArXiv:1705.02737, 2017.

- [33] J. T. McCoy, S. Kroon, and L. Auret, “Variational autoencoders for missing data imputation with application to a simulated milling circuit,” IFAC-PapersOnLine, vol. 51, no. 21, pp. 141–146, 2018.

- [34] C. Ma, S. Tschiatschek, K. Palla, J. M. Hernández-Lobato, S. Nowozin, and C. Zhang, “EDDI: Efficient dynamic discovery of high-value information with partial vae,” ArXiv Preprint ArXiv:1809.11142, 2018.

- [35] A. Nazabal, P. M. Olmos, Z. Ghahramani, and I. Valera, “Handling incomplete heterogeneous data using VAEs,” ArXiv Preprint ArXiv:1807.03653, 2018.

- [36] G. Peyré, M. Cuturi et al., “Computational optimal transport: With applications to data science,” Foundations and Trends® in Machine Learning, vol. 11, no. 5-6, pp. 355–607, 2019.

- [37] A. Genevay, M. Cuturi, G. Peyré, and F. Bach, “Stochastic optimization for large-scale optimal transport,” in NeurIPS, 2016, pp. 3440–3448.

- [38] R. Sinkhorn, “A relationship between arbitrary positive matrices and doubly stochastic matrices,” The Annals of Mathematical Statistics, vol. 35, no. 2, pp. 876–879, 1964.

- [39] M. Cuturi and A. Doucet, “Fast computation of Wasserstein barycenters,” in ICML, 2014, pp. 685–693.

- [40] L. Yu, W. Zhang, J. Wang, and Y. Yu, “SeqGAN: Sequence generative adversarial nets with policy gradient,” in AAAI, 2017, pp. 2852–2858.

- [41] T. Salimans, H. Zhang, A. Radford, and D. Metaxas, “Improving gans using optimal transport,” ArXiv Preprint ArXiv:1803.05573, 2018.

- [42] W. K. Newey and D. McFadden, “Large sample estimation and hypothesis testing,” Handbook of Econometrics, vol. 4, pp. 2111–2245, 1994.

- [43] Y. Park, J. Qing, X. Shen, and B. Mozafari, “BlinkML: Efficient maximum likelihood estimation with probabilistic guarantees,” in SIGMOD, 2019, pp. 1135–1152.

- [44] A. Genevay, L. Chizat, F. Bach, M. Cuturi, and G. Peyré, “Sample complexity of sinkhorn divergences,” in AISTATS, 2019, pp. 1574–1583.

- [45] G. K. Nilsen, A. Z. Munthe-Kaas, H. J. Skaug, and M. Brun, “Efficient computation of hessian matrices in tensorflow,” ArXiv Preprint ArXiv:1905.05559, 2019.

- [46] T. Hale, N. Angrist, R. Goldszmidt, B. Kira, A. Petherick, T. Phillips, S. Webster, E. Cameron-Blake, L. Hallas, S. Majumdar et al., “A global panel database of pandemic policies (oxford covid-19 government response tracker),” Nature Human Behaviour, vol. 5, no. 4, pp. 529–538, 2021.