DiffCPS: Diffusion-based Constrained Policy Search for Offline Reinforcement Learning

Abstract

Constrained policy search (CPS) is a fundamental problem in offline reinforcement learning, which is generally solved by advantage weighted regression (AWR). However, previous methods may still encounter out-of-distribution actions due to the limited expressivity of Gaussian-based policies. On the other hand, directly applying the state-of-the-art models with distribution expression capabilities (i.e., diffusion models) in the AWR framework is intractable since AWR requires exact policy probability densities, which is intractable in diffusion models. In this paper, we propose a novel approach, Diffusion-based Constrained Policy Search (dubbed DiffCPS), which tackles the diffusion-based constrained policy search with the primal-dual method. The theoretical analysis reveals that strong duality holds for diffusion-based CPS problems, and upon introducing parameter approximation, an approximated solution can be obtained after number of dual iterations, where denotes the representation ability of the parametrized policy. Extensive experimental results based on the D4RL benchmark demonstrate the efficacy of our approach. We empirically show that DiffCPS achieves better or at least competitive performance compared to traditional AWR-based baselines as well as recent diffusion-based offline RL methods.

1 Introduction

Offline Reinforcement Learning (offline RL) aims to seek an optimal policy without environmental interactions (Fujimoto et al., 2019; Levine et al., 2020). This is compelling for having the potential to transform large-scale datasets into powerful decision-making tools and avoids costly and risky online data collection, which offers significant application prospects in fields such as healthcare (Nie et al., 2021; Tseng et al., 2017) and autopilot (Yurtsever et al., 2020; Rhinehart et al., 2018).

Notwithstanding its promise, applying contemporary off-policy RL algorithms (Lillicrap et al., 2015; Fujimoto et al., 2018; Haarnoja et al., 2018a, b) directly into the offline context presents challenges due to distribution shift (Fujimoto et al., 2019; Levine et al., 2020). Previous methods to mitigate this issue under the model-free offline RL setting generally fall into three categories: 1) value function-based approaches, which implement pessimistic value estimation by assigning low values to out-of-distribution actions (Kumar et al., 2020; Fujimoto et al., 2019), 2) sequential modeling approaches, which casts offline RL as a sequence generation task with return guidance (Chen et al., 2021; Janner et al., 2022; Liang et al., 2023; Ajay et al., 2022), and 3) constrained policy search (CPS) approaches, which regularizes the discrepancy between the learned policy and behavior policy (Peters et al., 2010; Peng et al., 2019; Nair et al., 2020). We focus on the CPS-based offline RL due to its convergence guarantee and outstanding performance in a wide range of tasks.

Prior solutions for CPS (Peters et al., 2010; Peng et al., 2019; Nair et al., 2020) primarily train a parameterized unimodal Gaussian policy through weighted regression. However, recent works (Chen et al., 2022; Hansen-Estruch et al., 2023; Shafiullah et al., 2022) show such unimodal Gaussian models in weighted regression will impair the policy performance due to limited distributional expressivity. For example, if we fit a multi-modal distribution with an unimodal Gaussian distribution, it will unavoidably result in covering the low-density area between peaks. Intuitively, we can choose a more expressive model to eliminate this issue. Nevertheless, Ghasemipour et al. (2020) shows that VAEs (Kingma & Welling, 2013) in BCQ (Fujimoto et al., 2019) do not align well with the behavior dataset, which will introduce the biases in generated actions. Chen et al. (2022) utilizes the diffusion probabilistic model (Sohl-Dickstein et al., 2015; Ho et al., 2020; Song & Ermon, 2019) to generate actions and select the action through the action evaluation model under the well-studied AWR framework. However, AWR requires an exact probability density of behavior policy, which is still intractable for the diffusion-based one. Alternatively, they leverage Monte Carlo sampling to approximate the probability density of behavior policy, which inevitably introduces estimation biases and increases the cost of inference. According to motivating examples in Section 3.1, we visually demonstrate how these issues are pronounced even on a simple bandit task.

To solve the above issues, we present Diffusion-based Constrained Policy Search (DiffCPS) which incorporates the diffusion model to solve the limited expressivity problem of unimodal Gaussian policy. To avoid the exact probability density required by AWR, we solve the diffusion-based CPS problem with the primal-dual method. Thereby, DiffCPS solves the limited policy expressivity problem while avoiding the intractable density calculation brought by AWR. Interestingly, we find that when we use diffusion-based policies, we can eliminate the policy distribution constraint in the CPS through the action distribution of the diffusion model. Then under some mild assumptions, we show that strong duality holds for the diffusion-based CPS problem, which makes it easily solvable using primal-dual methods. We also study the effect of using a finite parametrization for the policies and show that the price to pay in terms of the duality gap depends on the representation ability of the parametrization. And the expressiveness of the model is precisely the greatest strength of diffusion models.

In summary, our main contributions are three-fold: 1)We explicitly eliminate the policy distribution constraint in the CPS problem. This allows us to achieve strong duality under some assumptions. 2)We present DiffCPS, which tackles the diffusion-based constrained policy search without resorting to AWR. Our theoretical analysis shows that our method can achieve near-optimal results by solving the primal-dual problem with a parametric policy. 3)Our experimental results illustrate superior or competitive performance compared to existing offline RL methods in D4RL tasks, which also corroborates the results of our theoretical analysis. Even compared to other diffusion-based methods, DiffCPS achieves state-of-the-art (SOTA) performance in D4RL MuJoCo locomotion and AntMaze average score, which substantiates the effectiveness of our method.

2 Preliminaries

Notation. In this paper, we use or to denote the learned policy with parameter and to denote behavior policy that generated the offline data. We use superscripts to denote the diffusion time step and subscripts to denote RL trajectory timestep. For instance, denotes the -th action in -th diffusion time step.

2.1 Constrained Policy Search in Offline RL

Consider a Markov decision process (MDP): , with state space , action space , environment dynamics , reward function , discount factor , policy , where is the space of probability measures on parametrized by elements of , where are the Borel sets of , and initial state distribution . The action-value or Q-value of policy is defined as . Our goal is to get a policy to maximize the cumulative discounted reward , where is the normalized discounted state visitation frequencies induced by the policy and is the likelihood of the policy being in state after following for timesteps (Sutton & Barto, 2018; Peng et al., 2019).

In offline setting (Fujimoto et al., 2019), environmental interaction is not allowed, and a static dataset is used to learn a policy. To avoid out-of-distribution actions, we restrict the learned policy to be not far away from the behavior policy by the KL divergence constraint. Prior works (Peters et al., 2010; Peng et al., 2019) formulate the above offline RL as a constrained policy search (CPS) problem with the following form:

| (1) | ||||

Previous works (Peters et al., 2010; Peng et al., 2019; Nair et al., 2020) solve Eq. (2.1) through KKT conditions and get the optimal policy as:

| (2) |

where is the partition function. Intuitively we can use Eq. (2) to optimize policy . However, the behavior policy may be very diverse and hard to model. To avoid modeling the behavior policy, prior works (Peng et al., 2019; Wang et al., 2020; Chen et al., 2020) optimize through a parameterized policy , known as AWR:

| (3) | ||||

where being the regression weights. However, AWR requires the exact probability density of policy, which restricts the use of generative models like diffusion models. In this paper, we directly utilize the diffusion-based policy to address Eq. (2.1). Therefore, our method not only avoids the need for explicit probability densities in AWR but also solves the limited policy expressivity problem.

2.2 Diffusion Model

Diffusion Probabilistic Model (DPM). Diffusion models (Sohl-Dickstein et al., 2015; Ho et al., 2020; Song & Ermon, 2019) are composed of two processes: the forward diffusion process and the reverse process. In the forward diffusion process, we gradually add Gaussian noise to the data in steps. The step sizes are controlled by a variance schedule :

| (4) | ||||

In the reverse process, we can recreate the true sample through :

| (5) | ||||

The training objective is to maximize the ELBO of . Following DDPM (Ho et al., 2020), we use the simplified surrogate loss to approximate the ELBO. After training, sampling from the diffusion model is equivalent to running the reverse process.

Conditional DPM. There are two kinds of conditioning methods: classifier-guided (Dhariwal & Nichol, 2021) and classifier-free (Ho & Salimans, 2021). The former requires training a classifier on noisy data and using gradients to guide the diffusion sample toward the conditioning information . The latter does not train an independent but combines a conditional noise model and an unconditional model for the noise. The perturbed noise is used to later generate samples. However Pearce et al. (2023) shows this combination will degrade the policy performance in offline RL. Following Pearce et al. (2023); Wang et al. (2022) we solely employ a conditional noise model to construct our noise model ().

3 Methodology

In this section, we detail the design of our Diffusion-based Constrained Policy Search (DiffCPS). First, we show that the limited policy expressivity in the AWR will degrade the policy performance through the bandit problem (Section 3.1). Second, we formulate CPS via diffusion-based policy and get our diffusion-based CPS problem (Section 3.2). Then, we solve DiffCPS with primal-dual method and show the price to pay in terms of the duality gap depending on the representation ability of the parametrization (Sectioon 3.3). (All proofs are placed in Appendix A due to limited space.)

3.1 Is policy expressivity all you need for AWR?

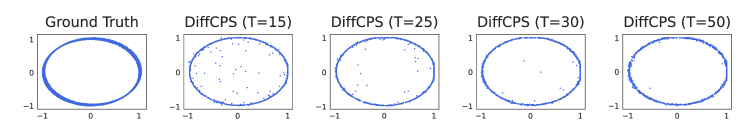

Before showing our method, we first present that the limited policy expressivity in previous Advantage Weighted Regression (AWR) methods (Peters et al., 2010; Peng et al., 2019; Nair et al., 2020) may degrade the performance through a sample 2-D bandit experiment with real-valued actions. Our offline dataset is constructed by a unit circle with noise (The first panel of Figure 1). Data on the noisy circle have a positive reward of . Note that this offline dataset exhibits strong multi-modality since there are many actions (points on the noisy circle) that can achieve the same reward of if a state is given. However, if we use unimodal Gaussian to represent the policy, although the points on the noisy circle can all receive the same reward of , the actions taken by AWR will move closer to the center of the noisy circle (AWR in Figure 1), due to the incorrect unimodal assumption. Experiment results in Figure 1 illustrate that AWR performs poorly in the bandit experiments compared to other diffusion-based methods. We also notice that compared to other diffusion-based methods, actions generated by SfBC include many points that differ significantly from those in the dataset, when . This is due to the sampling error introduced by incorporating diffusion into AWR.

Therefore, we conclude that policy expressivity is important in offline RL since most offline RL datasets are collected from multiple behavior policies or human experts, which exhibit strong multi-modality. To better model behavior policies, we need more expressive generative models to model the policy distribution, rather than using unimodal Gaussians. Furthermore, we also need to avoid the sampling errors caused by using diffusion in AWR, which is the motivation behind our algorithm.

3.2 Diffusion-Based Constrained Policy Search

In this subsection, we focus on deriving our diffusion-based CPS problem Eq. (12). Firstly, we present the form of constrained policy search (Peng et al., 2019):

| (6) | ||||

| (7) | ||||

| (8) |

where denotes our diffusion-based policy, denotes the behavior policy. Here we represent our policy via the conditional diffusion model:

| (9) | ||||

where the end sample of the reverse chain is the action used for the policy’s output and is the joint distribution of all noisy samples. According to DDPM, we can approximate the reverse process with a Gaussian distribution . We also follow the DDPM to fix the covariance matrix and predict the mean with a conditional noise model :

| (10) |

In the reverse process, we sample and then follow the reverse diffusion chain , parameterized by as

| (11) |

for The reverse sampling in Eq. (11) requires iteratively predicting times. When is large, it will consume much time during the sampling process. To work with small ( in our experiment), we follow (Xiao et al., 2021; Wang et al., 2022) and define

which is a noise schedule obtained under the variance preserving SDE of Song et al. (2020).

Theorem 3.1.

Let be a diffusion-based policy and be the behavior policy. Then, we have

-

(1)

There exists such that Eq. (7) can be transformed to

-

(2)

, .

Corollary 3.2.

Remark 3.3.

Assumption 3.4.

Suppose that is bounded and that Slaters’ condition (Boyd & Vandenberghe, 2004) holds for our offline RL setting.

Assumption 3.5.

Suppose that all policies learned from the offline dataset satisfy

| (13) |

where denotes the normalized discounted state visitation frequencies induced by the behavior policy.

Remark 3.6.

Theorem 3.7.

3.3 Primal-Dual Method for DiffCPS

In this section, the duality is used to solve the Eq. (12) and derive our method. The dual function of Eq. (12) is

| (14) | ||||

The Lagrange dual problem associated with the Eq. (12) is

| (15) |

In Eq. (14), we need to calculate the cross entropy , which is intractable in practice.

Proposition 3.8.

In the diffusion model, we can approximate the entropy with MSE-like loss through ELBO:

| (16) |

where c is a constant.

Remark 3.9.

In practice, to turn the original functional optimization problem into a tractable problem, we often use parametrized policies, such as neural networks. Next, we shift focus to solving the parametric version of Eq. (12), to find the parametrized policies that solve Eq. (14)

| (17) | ||||

| (18) |

Notice that we follow Fujimoto & Gu (2021); Wang et al. (2022) to normalize by dividing it by the target Q-net’s value. We also clip the to keep the constraint holding by , . We also find that we can improve the performance of the policy by delaying the policy update (Fujimoto et al., 2018). The algorithm given by Eq. (17)-Eq. (18) for solving Eq. (12) is summarized under Algorithm 1.

However, there is a cost for introducing such parametrization in Algorithm 1: the duality gap is no longer null. In the following Theorem 3.11, we show that after introducing parameterization, we can obtain a suboptimal solution in a number of dual iterations. Below, we first define -universal parametrization functions.

Definition 3.10.

A parametrization is an -universal parametrization of functions in if, for some , there exists for any a parameter such that

| (19) |

| Dataset | Environment | CQL | IDQL-A | QGPO | SfBC | DD | Diffuser | Diffuison-QL | IQL | DiffCPS(ours) |

|---|---|---|---|---|---|---|---|---|---|---|

| Medium-Expert | HalfCheetah | |||||||||

| Medium-Expert | Hopper | |||||||||

| Medium-Expert | Walker2d | |||||||||

| Medium | HalfCheetah | |||||||||

| Medium | Hopper | |||||||||

| Medium | Walker2d | |||||||||

| Medium-Replay | HalfCheetah | |||||||||

| Medium-Replay | Hopper | |||||||||

| Medium-Replay | Walker2d | |||||||||

| Average (Locomotion) | ||||||||||

| Default | AntMaze-umaze | - | - | |||||||

| Diverse | AntMaze-umaze | - | - | |||||||

| Play | AntMaze-medium | - | - | |||||||

| Diverse | AntMaze-medium | - | - | |||||||

| Play | AntMaze-large | - | - | |||||||

| Diverse | AntMaze-large | - | - | |||||||

| Average (AntMaze) | - | - | ||||||||

| # Diffusion steps | - | - | ||||||||

Theorem 3.11.

Let be an universal parametrization of according to Definition 3.10, Suppose that is bounded by constants and . and be the discount factor. Then, under Assumption 3.4 3.5 and for any , the sequence of updates of Algorithm 1 with step size converges in steps, with

| (20) |

to a neighborhood of (the solution of Eq. (12)), satisfying

| (21) |

where , denotes the error between the parameterized local optimal solution and the optimal solution in Eq. (17). and be the solution to the dual problem associated with Eq. (12).

Remark 3.12.

Notice that the suboptimal solution which the primal-dual algorithm convergences depends on the goodness of the solution of Eq. (17) and the representation ability of the parametrized policy, while the expressiveness of the model is precisely the greatest strength of diffusion models.

Theoretically, we need to precisely solve the Eq. (17) and Eq. (18). However, in practice, we can resort to SGD and parametrized policy to solve the equations. In this way, we can recursively optimize Eq. (12) through Eq. (17) and Eq. (18). The policy improvement described in Eq. (17) and the solution of Eq. (18) constitute the core of DiffCPS.

4 Experiments

We evaluate our DiffCPS on D4RL (Fu et al., 2020) benchmark in Section 4.1. Further, we conduct an ablation experiment to assess the contribution of different parts in DiffCPS in Section 4.2. Due to space limitations, we place the experiment setup, implementation details, and additional experiment results in the Appendix C.

4.1 Results

In Table 1, we compare the performance of DiffCPS to other offline RL methods in D4RL (Fu et al., 2020) tasks. We merely illustrate the results of the MuJoCo locomotion and AntMaze tasks due to the page limit. In traditional MuJoCo tasks, DiffCPS outperforms other methods as well as recent diffusion-based method (Wang et al., 2022; Lu et al., 2023; Hansen-Estruch et al., 2023) by large margins in most tasks, especially in the HalfCheetah medium. The medium datasets are collected by online SAC (Haarnoja et al., 2018a) agent trained to approximate the performance of the expert. Hence, it contains a lot of suboptimal trajectories, which makes the offline RL algorithms hard to learn.

Compared to the locomotion tasks, AntMaze tasks are more challenging since the datasets consist of sparse rewards and suboptimal trajectories. Even so, DiffCPS also achieves competitive or SOTA results compared with other methods. For simpler tasks like umaze, DiffCPS achieves success rate on some seeds, which shows its powerful ability to learn from suboptimal trajectories. In other AntMaze tasks, DiffCPS also shows competitive performance compared to other SOTA diffusion-based approaches. Overall, the experiments demonstrate the effectiveness of DiffCPS compared to several existing methods for offline RL.

| D4RL Tasks | DiffCPS (T=5) | DiffCPS (T=15) | DiffCPS (T=20) | SfBC (T=10) | SfBC (T=15) | SfBC (T=25) |

|---|---|---|---|---|---|---|

| Locomotion | ||||||

| AntMaze |

4.2 Ablation Study

In this section, we analyze why DiffCPS outperforms the other methods quantitatively on D4RL tasks. We conduct an ablation study to investigate the impact of three parts in DiffCPS, diffusion steps, the minimum value of Lagrange multiplier , and policy evaluation interval.

Diffusion Steps. We show the effect of diffusion steps , which is a vital hyperparameter in all diffusion-based methods. In SfBC the best is , while is best for our DiffCPS. Table 2 shows the average performance of different diffusion steps. Figure 2 shows the training curve of selected D4RL tasks over different diffusion steps .

We also note that large works better for the bandit experiment. However, for D4RL tasks, a large will lead to a performance drop. The reason is that compared to bandit tasks, D4RL datasets contain a significant amount of suboptimal trajectories. A larger implies stronger behavior cloning ability, which can indeed lead to policy overfitting to the suboptimal data, especially when combined with actor-critic methods. Poor policies result in error value estimates, and vice versa, creating a vicious cycle that leads to a drop in policy performance with a large .

The Minimum Value of Lagrange multiplier . In our method, serves as the coefficient for the policy constraint term, where a larger implies a stronger policy constraint. Although we need to restrict the according to the definition of Lagrange multiplier, we notice that we could get better results through clip in AntMaze tasks, where is a positive number, see full ablation results in Figure 3 for details.

Policy Evaluation Interval. We include the ablation of policy evaluation interval in Figure 4. We find that the policy delayed update (Fujimoto et al., 2018) has significant impacts on AntMaze large tasks. However, for other tasks, it does not have much effect and even leads to a slight performance decline. The reason is that infrequent policy updates reduce the variance of value estimates, which is more effective in tasks where sparse rewards and suboptimal trajectories lead to significant errors in value function estimation like AntMaze large tasks.

In a nutshell, the ablation study shows that the combination of these three parts, along with DiffCPS, collectively leads to producing good performance.

5 Related Work

Offline Reinforcement Learning. Offline RL algorithms need to avoid extrapolation error. Prior works usually solve this problem through policy regularization (Fujimoto et al., 2019; Kumar et al., 2019; Wu et al., 2019; Fujimoto & Gu, 2021), value pessimism about unseen actions (Kumar et al., 2020; Kostrikov et al., 2021a), or implicit TD backups (Kostrikov et al., 2021b; Ma et al., 2021) to avoid the use of out-of-distribution actions. Another line of research solves the offline RL problem through weighted regression (Peters et al., 2010; Peng et al., 2019; Nair et al., 2020) from the perspective of CPS. Our DiffCPS derivation is related but features with a diffusion model form.

Diffusion Models in RL. There exist several works that introduce the diffusion model to RL. Diffuser (Janner et al., 2022) uses the diffusion model to directly generate trajectory guided with gradient guidance or reward. DiffusionQL (Wang et al., 2022) uses the diffusion model as an actor and optimizes it through the TD3+BC-style objective with a coefficient to balance the two terms. AdaptDiffuser (Liang et al., 2023) uses a diffusion model to generate extra trajectories and a discriminator to select desired data to add to the training set to enhance the adaptability of the diffusion model. DD (Ajay et al., 2022) uses a conditional diffusion model to generate trajectory and compose skills. Unlike Diffuser, DD diffuses only states and trains inverse dynamics to predict actions. QGPO (Lu et al., 2023) uses the energy function to guide the sampling process and proves that the proposed CEP training method can get an unbiased estimation of the gradient of the energy function under unlimited model capacity and data samples. IDQL (Hansen-Estruch et al., 2023) reinterpret IQL as an Actor-Critic method and extract the policy through sampling from a diffusion-parameterized behavior policy with weights computed from the IQL-style critic. EDP (Kang & Ma, ) focuses on boosting sampling speed through approximated actions. SRPO (Chen et al., ) uses a Gaussian policy in which the gradient is regularized by a pretrained diffusion model to recover the IQL-style policy. DiffCPS is distinct from these methods because we derive it from equation 12.

The closest to our work is the method that combines AWR and diffusion models. SfBC (Chen et al., 2022) uses the diffusion model to generate candidate actions and uses the regression weights to select the best action. Our method differs from it as we directly solve the limited policy expressivity problem through the primal-dual method for DiffCPS without resorting to AWR. This makes DiffCPS simple to implement and tune hyperparameters. As shown in Table 4 we can achieve SOTA results in most tasks by merely tuning one hyperparameter.

6 Conclusion

In our work, we solve the limited expressivity problem in the weighted regression through the diffusion model. We first simplify the CPS problem with the action distribution of diffusion-based policy. Then we prove that strong duality holds for diffusion-based CPS problems, and solve it through the primal-dual method with function approximation. Experimental results on the D4RL benchmark illustrate the superiority of our method which outperforms previous SOTA algorithms in most tasks, and DiffCPS is easy to tune hyperparameters, which only needs to tune the constraint in most tasks. We hope that our work can inspire relative researchers to utilize powerful generative models, especially the diffusion model, for offline RL and decision-making.

7 Impact statements

Offline RL aims to learn a policy from an offline dataset, much like supervised learning, but due to extrapolation error and function approximation, the task of offline reinforcement learning is much more challenging compared to supervised learning and pre-training. The DiffCPS algorithm proposed in this paper utilizes diffusion-based policy and primal-dual method to address the CPS problem in offline reinforcement learning. Our method will promote the development of the field of offline reinforcement learning, thus facilitating the implementation of offline reinforcement learning in practical scenarios, such as robotic control, and consequently bring about a series of corresponding ethical issues and social outcomes.

References

- Ajay et al. (2022) Ajay, A., Du, Y., Gupta, A., Tenenbaum, J. B., Jaakkola, T. S., and Agrawal, P. Is conditional generative modeling all you need for decision making? In The Eleventh International Conference on Learning Representations, 2022.

- Borkar (1988) Borkar, V. S. A convex analytic approach to markov decision processes. Probability Theory and Related Fields, 78(4):583–602, 1988.

- Boyd & Vandenberghe (2004) Boyd, S. P. and Vandenberghe, L. Convex optimization. Cambridge university press, 2004.

- (4) Chen, H., Lu, C., Wang, Z., Su, H., and Zhu, J. Score regularized policy optimization through diffusion behavior. URL http://arxiv.org/abs/2310.07297.

- Chen et al. (2022) Chen, H., Lu, C., Ying, C., Su, H., and Zhu, J. Offline reinforcement learning via high-fidelity generative behavior modeling. In The Eleventh International Conference on Learning Representations, 2022.

- Chen et al. (2021) Chen, L., Lu, K., Rajeswaran, A., Lee, K., Grover, A., Laskin, M., Abbeel, P., Srinivas, A., and Mordatch, I. Decision transformer: Reinforcement learning via sequence modeling. Advances in neural information processing systems, 34:15084–15097, 2021.

- Chen et al. (2020) Chen, X., Zhou, Z., Wang, Z., Wang, C., Wu, Y., and Ross, K. Bail: Best-action imitation learning for batch deep reinforcement learning. Advances in Neural Information Processing Systems, 33:18353–18363, 2020.

- Dhariwal & Nichol (2021) Dhariwal, P. and Nichol, A. Diffusion models beat gans on image synthesis. Advances in neural information processing systems, 34:8780–8794, 2021.

- Fu et al. (2020) Fu, J., Kumar, A., Nachum, O., Tucker, G., and Levine, S. D4rl: Datasets for deep data-driven reinforcement learning. arXiv preprint arXiv:2004.07219, 2020.

- Fujimoto & Gu (2021) Fujimoto, S. and Gu, S. S. A minimalist approach to offline reinforcement learning. Advances in neural information processing systems, 34:20132–20145, 2021.

- Fujimoto et al. (2018) Fujimoto, S., Hoof, H., and Meger, D. Addressing function approximation error in actor-critic methods. In International Conference on Machine Learning, pp. 1587–1596. PMLR, 2018.

- Fujimoto et al. (2019) Fujimoto, S., Meger, D., and Precup, D. Off-policy deep reinforcement learning without exploration. In International Conference on Machine Learning, pp. 2052–2062. PMLR, 2019.

- Ghasemipour et al. (2020) Ghasemipour, S. K. S., Schuurmans, D., and Gu, S. S. Emaq: Expected-max q-learning operator for simple yet effective offline and online rl. arXiv preprint arXiv:2007.11091, 2020.

- (14) Gürtler, N., Blaes, S., Kolev, P., Widmaier, F., Wüthrich, M., Bauer, S., Schölkopf, B., and Martius, G. BENCHMARKING OFFLINE REINFORCEMENT LEARN- ING ON REAL-ROBOT HARDWARE.

- Haarnoja et al. (2018a) Haarnoja, T., Zhou, A., Abbeel, P., and Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In International Conference on Machine Learning, pp. 1861–1870. PMLR, 2018a.

- Haarnoja et al. (2018b) Haarnoja, T., Zhou, A., Hartikainen, K., Tucker, G., Ha, S., Tan, J., Kumar, V., Zhu, H., Gupta, A., and Abbeel, P. Soft actor-critic algorithms and applications. arXiv preprint arXiv:1812.05905, 2018b.

- Hansen-Estruch et al. (2023) Hansen-Estruch, P., Kostrikov, I., Janner, M., Kuba, J. G., and Levine, S. Idql: Implicit q-learning as an actor-critic method with diffusion policies. arXiv preprint arXiv:2304.10573, 2023.

- Ho & Salimans (2021) Ho, J. and Salimans, T. Classifier-free diffusion guidance. In NeurIPS 2021 Workshop on Deep Generative Models and Downstream Applications, 2021.

- Ho et al. (2020) Ho, J., Jain, A., and Abbeel, P. Denoising diffusion probabilistic models. Advances in neural information processing systems, 33:6840–6851, 2020.

- Janner et al. (2022) Janner, M., Du, Y., Tenenbaum, J. B., and Levine, S. Planning with diffusion for flexible behavior synthesis. arXiv preprint arXiv:2205.09991, 2022.

- (21) Kang, B. and Ma, X. Efficient diffusion policies for offline reinforcement learning.

- Kidambi et al. (2020) Kidambi, R., Rajeswaran, A., Netrapalli, P., and Joachims, T. Morel: Model-based offline reinforcement learning. Advances in neural information processing systems, 33:21810–21823, 2020.

- Kingma & Ba (2014) Kingma, D. P. and Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Kingma & Welling (2013) Kingma, D. P. and Welling, M. Auto-encoding variational bayes. arXiv e-prints, pp. arXiv–1312, 2013.

- Kostrikov et al. (2021a) Kostrikov, I., Fergus, R., Tompson, J., and Nachum, O. Offline reinforcement learning with fisher divergence critic regularization. In International Conference on Machine Learning, pp. 5774–5783. PMLR, 2021a.

- Kostrikov et al. (2021b) Kostrikov, I., Nair, A., and Levine, S. Offline reinforcement learning with implicit q-learning. In International Conference on Learning Representations, 2021b.

- Kumar et al. (2019) Kumar, A., Fu, J., Soh, M., Tucker, G., and Levine, S. Stabilizing off-policy q-learning via bootstrapping error reduction. Advances in Neural Information Processing Systems, 32, 2019.

- Kumar et al. (2020) Kumar, A., Zhou, A., Tucker, G., and Levine, S. Conservative q-learning for offline reinforcement learning. Advances in Neural Information Processing Systems, 33:1179–1191, 2020.

- Levine et al. (2020) Levine, S., Kumar, A., Tucker, G., and Fu, J. Offline reinforcement learning: Tutorial, review, and perspectives on open problems. arXiv preprint arXiv:2005.01643, 2020.

- Liang et al. (2023) Liang, Z., Mu, Y., Ding, M., Ni, F., Tomizuka, M., and Luo, P. Adaptdiffuser: Diffusion models as adaptive self-evolving planners. arXiv preprint arXiv:2302.01877, 2023.

- Lillicrap et al. (2015) Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., and Wierstra, D. Continuous control with deep reinforcement learning. arXiv preprint arXiv:1509.02971, 2015.

- Lu et al. (2023) Lu, C., Chen, H., Chen, J., Su, H., Li, C., and Zhu, J. Contrastive energy prediction for exact energy-guided diffusion sampling in offline reinforcement learning. arXiv preprint arXiv:2304.12824, 2023.

- Ma et al. (2021) Ma, X., Yang, Y., Hu, H., Liu, Q., Yang, J., Zhang, C., Zhao, Q., and Liang, B. Offline reinforcement learning with value-based episodic memory. arXiv preprint arXiv:2110.09796, 2021.

- (34) Matsushima, T., Furuta, H., Matsuo, Y., Nachum, O., and Gu, S. S. Deployment-efficient reinforcement learn-ing via model-based offline optimization. Policy, 100:1000s.

- Nair et al. (2020) Nair, A., Gupta, A., Dalal, M., and Levine, S. Awac: Accelerating online reinforcement learning with offline datasets. arXiv preprint arXiv:2006.09359, 2020.

- Nie et al. (2021) Nie, X., Brunskill, E., and Wager, S. Learning when-to-treat policies. Journal of the American Statistical Association, 116(533):392–409, 2021.

- Paternain et al. (2019) Paternain, S., Chamon, L., Calvo-Fullana, M., and Ribeiro, A. Constrained reinforcement learning has zero duality gap. Advances in Neural Information Processing Systems, 32, 2019.

- Pearce et al. (2023) Pearce, T., Rashid, T., Kanervisto, A., Bignell, D., Sun, M., Georgescu, R., Macua, S. V., Tan, S. Z., Momennejad, I., Hofmann, K., et al. Imitating human behaviour with diffusion models. arXiv preprint arXiv:2301.10677, 2023.

- Peng et al. (2019) Peng, X. B., Kumar, A., Zhang, G., and Levine, S. Advantage-weighted regression: Simple and scalable off-policy reinforcement learning. arXiv preprint arXiv:1910.00177, 2019.

- Peters et al. (2010) Peters, J., Mulling, K., and Altun, Y. Relative entropy policy search. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 24, pp. 1607–1612, 2010.

- Rashidinejad et al. (2021) Rashidinejad, P., Zhu, B., Ma, C., Jiao, J., and Russell, S. Bridging offline reinforcement learning and imitation learning: A tale of pessimism. Advances in Neural Information Processing Systems, 34:11702–11716, 2021.

- Rhinehart et al. (2018) Rhinehart, N., McAllister, R., and Levine, S. Deep imitative models for flexible inference, planning, and control. arXiv preprint arXiv:1810.06544, 2018.

- Rockafellar (1970) Rockafellar, R. Convex analysis. Princeton Math. Series, 28, 1970.

- Shafiullah et al. (2022) Shafiullah, N. M., Cui, Z., Altanzaya, A. A., and Pinto, L. Behavior transformers: Cloning modes with one stone. Advances in neural information processing systems, 35:22955–22968, 2022.

- Sohl-Dickstein et al. (2015) Sohl-Dickstein, J., Weiss, E., Maheswaranathan, N., and Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In International Conference on Machine Learning, pp. 2256–2265. PMLR, 2015.

- Song & Ermon (2019) Song, Y. and Ermon, S. Generative modeling by estimating gradients of the data distribution. Advances in neural information processing systems, 32, 2019.

- Song et al. (2020) Song, Y., Sohl-Dickstein, J., Kingma, D. P., Kumar, A., Ermon, S., and Poole, B. Score-based generative modeling through stochastic differential equations. arXiv preprint arXiv:2011.13456, 2020.

- Suh et al. (2023) Suh, H., Chou, G., Dai, H., Yang, L., Gupta, A., and Tedrake, R. Fighting uncertainty with gradients: Offline reinforcement learning via diffusion score matching. arXiv preprint arXiv:2306.14079, 2023.

- Sutton & Barto (2018) Sutton, R. S. and Barto, A. G. Reinforcement Learning: An Introduction. MIT press, 2018.

- Tseng et al. (2017) Tseng, H.-H., Luo, Y., Cui, S., Chien, J.-T., Ten Haken, R. K., and Naqa, I. E. Deep reinforcement learning for automated radiation adaptation in lung cancer. Medical physics, 44(12):6690–6705, 2017.

- Wang et al. (2020) Wang, Z., Novikov, A., Zolna, K., Merel, J. S., Springenberg, J. T., Reed, S. E., Shahriari, B., Siegel, N., Gulcehre, C., and Heess, N. Critic regularized regression. Advances in Neural Information Processing Systems, 33:7768–7778, 2020.

- Wang et al. (2022) Wang, Z., Hunt, J. J., and Zhou, M. Diffusion policies as an expressive policy class for offline reinforcement learning. arXiv preprint arXiv:2208.06193, 2022.

- Wu et al. (2019) Wu, Y., Tucker, G., and Nachum, O. Behavior regularized offline reinforcement learning. arXiv preprint arXiv:1911.11361, 2019.

- Xiao et al. (2021) Xiao, Z., Kreis, K., and Vahdat, A. Tackling the generative learning trilemma with denoising diffusion gans. arXiv preprint arXiv:2112.07804, 2021.

- Yu et al. (2020) Yu, T., Thomas, G., Yu, L., Ermon, S., Zou, J. Y., Levine, S., Finn, C., and Ma, T. Mopo: Model-based offline policy optimization. Advances in Neural Information Processing Systems, 33:14129–14142, 2020.

- Yurtsever et al. (2020) Yurtsever, E., Lambert, J., Carballo, A., and Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE access, 8:58443–58469, 2020.

In the appendix, we provide the proofs of the main theorems in the paper (Appendix A), the notation table (Appendix B), details of the experiments (Appendix C), some additional experiments (Appendix D,F,E), and a discussion on the use of the augmented Lagrangian method (Appendix G).

Appendix A Proofs

A.1 Theorem 3.1

We provide the proof of Theorem 3.1.

Proof.

(1): Since the pointwise KL constraint in Eq. (2.1) at all states is intractable, we follow the Peng et al. (2019) to relax the constraint by enforcing it only in expectation

The LHS can be transformed to

| (22) |

where

is the negative entropy of is a constant for . So the constraint in Eq. (7) can be transformed to

| (23) |

where .

A.2 Theorem 3.7

Then we prove the Theorem 3.7.

The perturbation function associated to Eq. (25) is defined as

| (26) | ||||

Lemma A.1.

Proof.

See, e.g., Rockafellar (1970)[Cor. 30.2.2] ∎

Proof.

(The proof skeleton is essentially based on Paternain et al. (2019) Theorem 1)

Condition (i) and (ii) are satisfied by the Assumption 3.4. To prove the strong duality of Eq. (25), it suffices then to show the perturbation function is concave [(iii)], i.e., that for every , and ,

| (27) |

If for either perturbation or the problem becomes infeasible then or and thus Eq. (27) holds trivially. For perturbations which keep the problem feasible, suppose and are achieved by the policies and respectively. Then, with and with . To establish Eq. (27) it suffices to show that for every there exists a policy such that and . Notice that any policy satisfying the previous conditions is a feasible policy for the slack . Hence, by definition of the perturbed function Eq. (27), it follows that

| (28) |

If such a policy exists, the previous equation implies Eq. (27). Thus, to complete the proof of the result we need to establish its existence. To do so we start by formulating the objective as a linear function.

After sufficient training, we can suppose as an unbiased approximation of ,

| (29) |

where denotes the trajectory generated by policy , start state distribution and environment dynamics .

So the objective function Eq. (6) can be written as

| (30) | ||||

which implies the objective function Eq. (6) equivalent to

| (31) |

where is the set of occupation measures, which is convex and compact.[ Borkar (1988), Theorem 3.1]

So Eq. (31) is a linear function on . Let be the occupation measures associated to and . Since, is convex, there exists a policy such that its corresponding occupation measure is and . To prove Eq. (27), it suffices to show such satisfies . Using Assumption 3.5, we can get

| (32) | ||||

This completes the proof that the perturbation function is concave and according to Lemma A.1 strong duality for Eq. (12) holds.

For Eq. (15), if strong duality holds and a dual optimal solution exists, then any primal optimal point of Eq. (12) is also a maximizer of , this is obvious through the KKT conditions.[ Boyd & Vandenberghe (2004) Ch.5.5.5.]

So if we can prove that the solution of the is unique, we can compute the optimal policy of Eq. (12) from a dual problem Eq. (15):

| (33) | ||||

According to Eq. (30) is a linear function, and negative relative entropy is concave, this implies that is a strictly concave function of with only a unique maximum value and since we have that the occupancy measure has a one-to-one relationship with policy , we can obtain the unique corresponding to . ∎

A.3 Proposition 3.8

Proof.

| (34) | ||||

is true if and only if . By letting the KL divergence between and be small enough, we can approximate the with . Furthermore, we can use the variational lower bound to approximate the KL divergence (Ho et al., 2020):

A.4 Theorem 3.11

We first claim lemma A.2 to show the error introduced by the parametrization is bound by a constant.

Lemma A.2.

Let and be occupation measures induced by the policies and respectively, where is an - parametrization of . Then, it follows that

| (38) |

See proof in Paternain et al. (2019)[Appendix A].

We next introduce Theorem A.3, which shows that the dual gap introduced after parameterization is bounded by a linear function of the model’s expressive capability.

Theorem A.3.

Note that Theorem A.3 is actually based on Theorem 2 of Paternain et al. (2019), and the proof is as follows.

Proof of Theorem A.3.

Notice that the dual functions and associated to the problems Eq. (12) and Eq. (17) respectively are such that for every we have that . The latter follows from the fact that the set of maximizers of the Lagrangian for the parametrized policies is contained in the set of maximizers of the non-parametrized policies. In particular, this holds for the solution of the dual problem associated to Eq. (12). Hence we have the following sequence of inequalities

| (40) |

where the last inequality follows from the fact that is the minimum of Eq. (18). The zero duality gap established in Theorem 3.7 completes the proof of the upper bound for . We next work towards proving the lower bound for . Let us next write the dual function of the parametrized problem Eq. (17) as

| (41) |

Let and let be an -approximation of . Then, by definition of the maximum it follows that

| (42) |

We next work towards a bound for . To do so, notice that we can write the difference in terms of the occupation measures where and are the occupation measures associated to the the policies and the policy

| (43) |

Since is by definition an approximation of it follows from Lemma A.2 that

| (44) |

Using the bounds on the reward functions and occupation measures we can upper bound the difference by

| (45) |

The second term follows from . Combining the previous bound with Eq. (42) we can lower bound as

| (46) |

Since the previous expression holds for every , in particular it holds for , the dual solution of the parametrized problem Eq. (18). Thus, we have that

| (47) |

Recall that , and use the definition of the dual function to lower bound by

| (48) |

By definition of maximum, we can lower bound the previous expression by substituting by any . In particular, we select the solution to Eq. (12)

| (49) |

Since is the optimal solution to Eq. (12) it follows that and since the previous expression reduces to

| (50) |

Which completes the proof of the result ∎

Next, we claim that the subgradient calculation error in the dual update (line in Algorithm 1) through Proposition A.5

Assumption A.4.

Let be a parametrization of functions in and let with be the Lagrangian associated to Eq. (17). Denote by the maximum of and a local maximum respectively achieved by a generic reinforcement learning algorithm. Then, there exists such that for all it holds that .

Proposition A.5.

See proof in Paternain et al. (2019) (Appendix A).

Finally, we prove Theorem 3.11.

Proof of Theorem 3.11.

We start by showing the lower bound, which in fact holds for any . Notice that for any and by definition of the dual problem it follows that . Combining this bound with the result of Theorem A.3 it follows that

| (52) |

To show the upper bound we start by writing the difference between the dual multiplier and the solution of Eq. (18) in terms of the iteration at time . Since and using the non-expansive property of the projection it follows that

| (53) |

Expanding the square and using that is a bound which is calculated by the KL divergence in Eq. (7) is nonnegative on the norm squared of it follows that

| (54) |

Using the result of Proposition A.5 we can further upper bound the inner product in the previous expression by the difference of the dual function evaluated at and plus , the error in the solution of the primal maximization,

| (55) |

Defining and writing recursively the previous expression yields

| (56) |

Since is the minimum of the dual function, the difference is always negative. Thus, when is not close to the solution of the dual problem is negative. The latter implies that the distance between and is reduced by virtue of Eq. (56). To be formal, for any , when we have that

| (57) |

Using the result of Theorem A.3 we can upper bound by which establishes the neighborhood defined in Eq. (21). We are left to show that the number of iterations required to do so is bounded by

| (58) |

To do so, let be the first iterate in the neighborhood Eq. (21). Formally, . Then it follows from the recursion that

| (59) |

Since is positive the previous expression reduces to . Which completes the proof of the result. ∎

Appendix B Notation Table

| . | ||

| , or | policy. | |

| , or | behavior policy. | |

| Probability of landing in state at time , when following policy from an initial state sampled from , in an environment with transition dynamics . | ||

| , or | transition dynamics. | |

| initial state distribution of behavior policy. | ||

| is the unnormalized discounted state visitation frequencies. | ||

| is the occupation measure of policy . | ||

| parameterized state action function. | ||

| parameterized target state action function. | ||

| parameterized policy. | ||

| parameterized target policy. | ||

| parameterized Gaussian noise predict network in diffusion model. | ||

| parameterized classifier. |

Appendix C EXPERIMENTAL DETAILS

We follow the Wang et al. (2022) to build our policy with an MLP-based conditional diffusion model. Following the DDPM, we recover the action from a residual network , and we model the as a 3-layers MLPs with Mish activations. All the hidden units have been set to 256. We also follow the Fujimoto et al. (2018) to build two Q networks with the same MLP setting as our critic network. All the networks are optimized through Adam (Kingma & Ba, 2014). We provide all the hyperparameters in Table 4.

We train for epochs ( for Gym tasks). Each epoch consists of gradient steps with batch size . The training in MuJoCo locomotion is usually quite stable as shown in Figure 2. However, the training for AntMaze is relatively unstable due to its sparse reward setting and suboptimal trajectories in the offline datasets.

| Dataset | Environment | Learning Rate | Reward Tune | max Q backup | Policy evaluation interval | ||

|---|---|---|---|---|---|---|---|

| Medium-Expert | HalfCheetah | 3e-4 | None | False | |||

| Medium-Expert | Hopper | 3e-4 | None | False | |||

| Medium-Expert | Walker2d | 3e-4 | None | False | |||

| Medium | HalfCheetah | 3e-4 | None | False | |||

| Medium | Hopper | 3e-4 | None | False | |||

| Medium | Walker2d | 3e-4 | None | False | |||

| Medium-Replay | HalfCheetah | 3e-4 | None | False | |||

| Medium-Replay | Hopper | 3e-4 | None | False | |||

| Medium-Replay | Walker2d | 3e-4 | None | False | |||

| Default | AntMaze-umaze | 3e-4 | CQL | False | |||

| Diverse | AntMaze-umaze | 3e-4 | CQL | True | |||

| Play | AntMaze-medium | 1e-3 | CQL | True | |||

| Diverse | AntMaze-medium | 3e-4 | CQL | True | |||

| Play | AntMaze-large | 3e-4 | CQL | True | |||

| Diverse | AntMaze-large | 3e-4 | CQL | True |

Runtime. We test the runtime of DiffCPS on a RTX 3050 GPU. For algorithm training, the runtime cost of training the gym Locomotion tasks is about 4h26min for epochs (2e6 gradient steps), see Table 5 for details.

| D4RL Tasks | DiffCPS (T=5) | DiffusionQL (T=5) | SfBC (T=5) |

|---|---|---|---|

| Locomotion Runtime ( epoch) | s | s | s |

| AntMaze Runtime ( epoch) | s | s | s |

From Table 5, it’s evident that the runtime of DiffCPS is comparable to other diffusion model-based methods. Further optimization like using Jax and incorporating other diffusion model acceleration tricks can improve the runtime of DiffCPS.

Appendix D More Toy experiments

In this chapter, we provide further detailed information about the toy experiments. The noisy circle is composed of a unit circle with added Gaussian noise . The offline data are collected from the noisy circle. We train all methods with 20,000 steps to ensure convergence. The network framework of SfBC remains consistent with the original SfBC paper. Both DQL and DiffCPS use the same MLP architecture. The code for DQL and SfBC is sourced from the official code provided by the authors, while the code for AWR is adopted from a PyTorch implementation available on GitHub.

In Figure 6, we put the full results of diffusion-based methods and Figure 5 displays the scores of diffusion-based algorithms under different diffusion steps, denoted as . The score calculation involves a modified Jaccard Index, which computes how many actions fall into the offline dataset (the minimum distance is less than 1e-8). The dashed line represents the score of AWR.

In Figure 7, we compare DiffCPS with prior offline RL methods, including the TD3+BC (Fujimoto & Gu, 2021) with Gaussian policy, BCQ (Fujimoto et al., 2019), and BEAR (Kumar et al., 2019). Note that both BCQ and BEAR use the VAE to model behavior policy, and then get the policy by regularizing the cloned behavior policy. However, results in Figure 7 show that VAE cannot exactly model the multi-modal data in offline datasets. TD3+BC with Gaussian policy also fails to model the optimal behavior due to the limited policy expressivity described in Section 3.1.

Appendix E Extra Related Work

Offline Model-based RL. Model-based RL methods represent another approach to address offline reinforcement learning. Similar to model-free offline RL, model-based RL also needs to address extrapolation error. Kidambi et al. (2020); Yu et al. (2020) perform pessimistic planning in the learned model, which penalizes the reward for uncertainty. BREMEN (Matsushima et al., ) uses trust region constraint to update policy in an ensemble of dynamic models to avoid the extrapolation error. VL-LCB (Rashidinejad et al., 2021) employs offline value iteration with lower confidence bound to address the extrapolation error. SGP (Suh et al., 2023) uses the score function of the diffusion model to approximate the gradient of the proposed uncertainty estimation. Our method differs from them for DiffCPS tackles the diffusion-based constrained policy search problem in offline RL through Lagrange dual and recursive optimization.

Appendix F Extra Experiments

F.1 Trajectory Stitching

The offline dataset is depicted in the Figure 9 (a). The reward function is the sum of the negative Euclidean distance from the point and a constant, with both actions and states represented as 2D coordinates. The dataset consists only of trajectories forming an X shape. During the evaluation, the starting point is at . To achieve maximum reward, the policy needs to stitch together offline data to reach the goal. The trajectories generated by our policy are shown in Figure 9 (b). We note that DiffCPS successfully combines trajectories from the dataset to reach the vicinity of the target point while avoiding low-reward areas.

F.2 TriFinger Dataset Experiment

The TriFinger dataset (Gürtler et al., ) is an offline dataset for the TriFinger robotic arm that includes both real and simulated data. The dataset primarily focuses on two tasks: Push and Lift. We utilize the Push-Sim-Expert dataset to train our DiffCPS, and the network architecture is consistent with the details provided in Appendix C. The goal of the push task is to move the cube to a target location. This task does not require the agent to align the orientation of the cube; the reward is based only on the desired and the achieved position.

When trained with 2e6 training steps (gradient steps) on the sim-expert dataset, DiffCPS (, target_kl , ) achieves a success rate of in the push task based on 100 evaluation episodes and 5 random seeds, as detailed in the Table 6.

| TriFinger-Push | BC | CRR | IQL | DiffCPS (Ours) |

|---|---|---|---|---|

| Sim-Expert |

Appendix G Augmented Lagrangian Method

In Algorithm 1, we utilize alternating optimization (dual ascent) to solve the dual problem in Eq. (15). In practice, we can employ the Augmented Lagrangian Method (ALM) to enhance the stability of the algorithm. ALM introduces a strongly convex term (penalty function) into the Lagrangian function to improve convergence. Our experimental results suggest that ALM can enhance training stability but may lead to a performance decrease. This could be attributed to (i) the introduction of the penalty function altering the optimal KL divergence constraint value and (ii) the penalty function making the policy too conservative, thereby preventing the adoption of high-reward actions. We believe that further research will benefit our algorithm by incorporating ALM and other optimization algorithms.