Diamonds are Forever in the Blockchain:

Geometric Polyhedral Point-Set Pattern Matching

Abstract

Motivated by blockchain technology for supply-chain tracing of ethically sourced diamonds, we study geometric polyhedral point-set pattern matching as minimum-width polyhedral annulus problems under translations and rotations. We provide two -approximation schemes under translations with -time for dimensions and -time for two dimensions, and we give an -time algorithm when also allowing for rotations, parameterized on , which we define as the slimness of the point set.

1 Introduction

A notable recent computational geometry application is for tracking supply chains for natural diamonds, for which the industry and customers are strongly motivated to prefer ethically-sourced provenance (e.g., to avoid so-called “blood diamonds”). For example, the Tracr system employs a blockchain for tracing the supply chain for a diamond from its being mined as a rough diamond to a customer purchasing a polished diamond [23]. (See Figure 1.)

Essential steps in the Tracr blockchain supply-chain process require methods to match point sets against geometric shapes, e.g., to guarantee that a diamond has not been replaced with one of questionable provenance [23]. Currently, the Tracr system uses standard machine-learning techniques to perform the shape matching steps. We believe, however, that better accuracy can be achieved by using computational geometry approaches. In particular, motivated by the Tracr application, we are interested in this paper in efficient methods for matching point sets against geometric shapes, such as polyhedra. Formalizing this problem, we study the problem of finding the best translation and/or rotation of the boundary of a convex polytope, (e.g., defining a polished diamond shape), to match a set of points in a -dimensional () space, where the point set is a “good” sample of the boundary of a polytope that is purported to be . Since there may be small inaccuracies in the sampling process, our aim is to compute a minimum width polyhedral annulus determined by that contains the sampled points. In the interest of optimizing running time, rather than seeking an exact solution, we seek an approximate solution that deviates from the real solution by a predefined quantity .

Related Work.

We are not familiar with any previous work on the problems we study in this paper. Nevertheless, there is considerable prior work on the general area of matching a geometric shape to a set of points, especially in the plane. For example, Barequet, Bose, Dickerson, and Goodrich [12] give solutions to several constrained polygon annulus placement problems for offset and scaled polygons including an algorithm for finding the translation for the minimum offset of an -vertex polygon that contains a set of points in time. Barequet, Dickerson, and Scharf [13] study the problem of covering a maximum number of points with an -vertex polygon (not just its boundary) under translations, rotations, and/or scaling, giving, e.g., an algorithm running in time for the general problem. There has also been work on finding a minimum-width annulus for rectangles and squares, e.g., see [19, 9, 11, 21].

Chan [15] presents a -approximation method that finds a minimum-width spherical annulus of points in dimensions in time, and Agarwal, Har-Peled, and Varadarajan [1] improve this to time via coresets [2, 22, 24, 3]. A line of work has considered computing the spherical annulus under stronger assumptions on the points samples. Most notably Devillers and Ramos [17] combine various definitions for “minimum quality assumptions” by Melhorn, Shermer and Yap [20] and Bose and Morin [14] and show that under this assumption the spherical annulus can be computed in linear time for and present empirical evidence for higher dimensions. Arya, da Fonseca, and Mount [6] show how to find an -approximation of the width of points in time, for a constant . Bae [10] shows how to find a min-width -dimensional hypercubic shell in expected time.

Our Results.

Given a set of points in , we provide an -time -approximate polytope-matching algorithm under translations, for , and time for , and we provide an -time algorithm when also allowing for rotations, where the complexity of the polytope is constant and for rotations is parameterized by , which we define as the slimness of the point set.

The paper is organized as follows. In Section 2, we set the ground for this work by providing some necessary definitions. In Section 3, we approximate the MWA under only translations. In this section, we provide a constant factor approximation scheme, a -approximation scheme and describe how to improve the running time in two dimensions. In Section 4, we consider the MWA under rotations.

2 Preliminaries

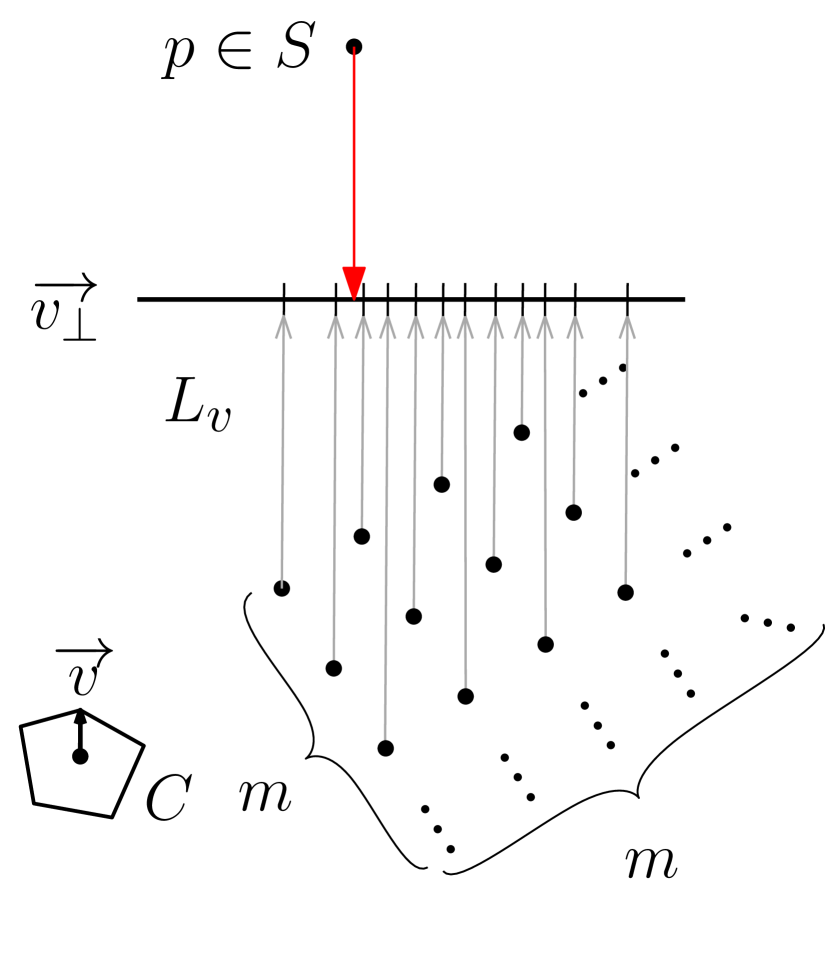

Following previous convention [4, 7, 8, 18, 5], we say that a point set is a -uniform sample of a surface if for every point , there exists a point such that . Let be a closed, convex polyhedron containing the origin in its interior. Given , and , define (the translation of by ), and for , define . A placement of is a pair , where and , representing the translated and scaled copy . We refer to and as the center and radius of the placement, respectively. Two placements are concentric if they share the same center.

Let be any closed convex body in containing the origin in its interior. The convex distance function induced by is the function , where

Thus, the convex distance between and is determined by the minimum radius placement of centered at that contains (see Figure 2). When is centrally symmetric, this defines a metric, but for general , the function may not be symmetric. We call the original shape the unit ball under the distance function . Note that when , and are collinear and appear in that order.

Define an annulus for to be the set-theoretic difference of two concentric placements , for . The width of the annulus is . Given a -uniform sample of points, , there are three placements of we are interested in:

Minimum enclosing ball (MinBall): A placement of of the smallest radius that contains all of the points in .

Maximum enclosed ball (MaxBall): A placement of of the largest radius, centered within the convex hull of , that contains no points in .

Minimum width annulus (MWA): A placement of an annulus for of minimum width, that contains all of the points in .

Note that, following the definition of the MaxBall, we require that the center of the MWA must also lie within the convex hull of . For each of the above placements, we also refer to parameterized versions, for example MinBall(), MaxBall(), or MWA(). These respectively refer to the minimum enclosing ball, maximum enclosed ball, or minimum width annulus centered at the point .

Further, we use and to denote the radius of MinBall and MaxBall, respectively, and we use to denote the width of MWA.

The ratio, , of the MinBall over the MaxBall of under distance function defines the fatness of under , such that . Also, we define the concentric fatness as the ratio of the MinBall and MaxBall centered at the MWA, such that where is the center of the MWA. Conversely, we define the slimness to be , which corresponds to the ratio of the over the MWA, i.e., .

Remark 1

In order for a -uniform sample to represent the surface, , with sufficient accuracy for a meaningful , we assume that the sample must contain at least one point between corresponding facets of the . Where corresponding facets refer to facets of the and representing the same facet of . Therefore, in the remainder of the paper, we assume we have a -uniform sample and that is small enough to guarantee this condition for even the smallest facets.

In practice, it would be easy to determine a small enough before sampling , since only sufficiently slim surfaces would benefit from finding the , and very fat surfaces would yield increasingly noisy . One easy approach would be setting to the smallest facet of the and scaling down by an arbitrary constant larger than the maximum expected fatness, such as 100. This example imposes a very generous bound on fatness since it would allow the inner shell to be 1% of the size of the outer shell, practically a single digit constant would often suffice.

Also, note that, for a given center point , is uniquely defined as the annulus centered at with inner radius and outer radius . Further, let us assume that the reference polytope defining our polyhedral distance function has facets, where is a fixed constant, since the sample size is expected to be much larger than . Thus, can be calculated in time; hence, can be found in time, which is under our assumption.

3 Approximating the Minimum Width Annulus

Let us first describe how to find a constant factor approximation of under translations. Note that, by assumption, the center of our approximation lies within the convex hull of . Let us denote the center, outer radius, inner radius, and width of the optimal MWA as , , , and .

We begin with Lemma 3.1, where we prove is within a certain distance from the center of the MinBall , providing a search region for . In Lemma 3.3, we bound the width achieved by a center-point that is sufficiently close to . We then use this in Lemma 3.5 to prove that achieves a constant factor approximation.

Lemma 3.1.

The center of the , , is within distance of the center of the , . That is, .

Proof 3.2.

Recall our assumption from Remark 1. By our assumption that at least one sample point lies on each facet, MinBall cannot shrink past any facets of MaxBall().

Suppose for contradiction that . Let be the point where a ray projected from through intersects the boundary of MaxBall(), and let denote the radius of the MinBall. Observe that must be large enough for MinBall to contain and therefore .

| by collinearity | ||||

| by assumption | ||||

| by MaxBall(). |

Thus, since , we find , which is a contradiction since must be the smallest radius of the MinBall across all possible centers. Therefore, we have that cannot be larger than .

Lemma 3.1 helps us constrain the region within which must be contained. Let us now reason about how a given center point, , would serve as an approximation. For convenience, let us define and as the radii of the MinBall and MaxBall centered at , respectively.

Lemma 3.3.

Suppose is an arbitrary center-point in our search region, and the two directed distances between and are at most , i.e., . Then, we have that .

Proof 3.4.

Knowing that all sample points must be contained within the , the cannot expand past the furthest or closest point in from under . Let us now define these two points and use them to bound the radii for and .

Let be the point where the ray from through intersects the boundary of MinBall(). cannot extend further than .

Conversely, let be the intersection point where the ray projected from through intersects the boundary of MaxBall(), in which case cannot collapse further than .

Combining these bounds with the fact that we find that .

For simplicity, let us consider two points to be -close (under ) whenever .

Lemma 3.5.

If is the center of , then is a constant factor approximation of the , that is, , for some constant , under translations.

Proof 3.6.

From Lemma 3.1, we have that . If and are -close, then we can directly apply the second part of Lemma 3.3 to find and , such that , thus proving that this is a 2-approximation. If is a metric, then and this must always be the case. However, if , then we must use the Euclidean distance to find . Let vector , and let us define unit vectors with respect to and , such that

| from Lemma 3.1. |

Under any convex distance function, is bounded from above by , which corresponds to finding the direction, , of the largest asymmetry in . Thus, by Lemma 3.3, . Under our (fixed) polyhedral distance function, is constant; hence, MWA() is a constant-factor approximation.

-approximation.

Let us now describe how to compute a -approximation of .

We begin with Lemma 3.7, which defines how close to is sufficient for a -approximation. In Theorem 3.9, we define a grid of candidate center-points so that any point in the search region has a gridpoint sufficiently close to it.

Lemma 3.7.

Suppose and are -close, where , is the center of , and is the constant from Lemma 3.5. Then, is a -approximation of under translations.

Proof 3.8.

It suffices to show that the width of our approximation only exceeds the optimal width by a factor of at most .

Assuming and are -close, and using Lemma 3.3, we require that , i.e., .

Let us then choose , knowing that from Lemma 3.5, which is sufficient for achieving a -approximation.

Knowing how close our approximation’s center must be, we can now present a -approximation algorithm to find a center satisfying this condition.

Theorem 3.9.

One can achieve a -approximation of the under translations in time.

Proof 3.10.

The MinBall can be computed in time [16]. By Lemma 3.1, we have that , where is the MinBall center. This implies that must lie within the placement or more generously in , defined as . Furthermore, from Lemma 3.7, we know that being -close to suffices for an -approximation. Therefore, overlaying a grid that covers , such that any point in is -close to a gridpoint, guarantees the existence of a point for which is a -approximation.

Since and -closeness are both defined under , we translate this to a cubic grid for simplicity. Let be the smallest cube enclosing and be the largest cube enclosed by . Let us now define a grid, , to span over with cells the size of .

This grid, , has gridpoints per direction and gridpoints in total, where corresponds to the fatness of under the cubic distance function.

Let us define the cubic distance function, , with unit cube , such that is the largest cube enclosed by .

The grid guarantees that for every point , there exists a gridpoint such that . Since the unit cube is contained within the unit polyhedron, we have that ; and since defines a metric, must also be -close under . Finding the gridpoint providing the -approximation takes time,111For metrics, MinBall provides a 2-approximation, thus . For non-metrics, we can remove this constant by first using this algorithm with in order to find a 2-approximation in linear-time, and using this approximation for gridding in the main step. which, under a fixed , is time.

Faster grid-search in two dimensions.

The algorithm of Theorem 3.9 recalculates the MWA at every gridpoint. However, small movements along the grid should not affect the MWA much. We use this insight to speed up MWA recalculations for two dimensions.

Let us first define the contributing edge of a sample point, , as the edge of intersected by the ray emanating from a gridpoint, , towards . Under this center-point, will only directly affect the placement of the contributing edge. Observe that given vectors , defined as the vectors directed from the center towards each vertex, the planar subdivision, created by rays for each originating from , separates points by their contributing edge. For any two gridpoints, and , and rays projected from them parallel to , any points within these two rays will contribute to different edges under and . We denote this region as the vertex slab of vertex , and the regions outside of this as edge slabs. Points within an edge slab contribute to the same edge under both gridpoints, maintaining the constraints this imposes on the MWA, can therefore be achieved with the two extreme points per edge slab. If we consider vertex slabs for all , we must be able to quickly calculate the strictest constraints imposed by points in a subset of vertex slabs. An example of the planar subdivision for two points is shown in Figure 3.

Given a grid , we write to be the gridpoint at index . Consider the set of all grid lines defined by rays parallel to starting at each gridpoint. defines a planar subdivision corresponding to the edge slabs between gridpoints. Before attempting to identify the extreme points for each edge slab, we first need to find a quick way to identify the slab in that contains a given sample-point, .

Lemma 3.11.

For a specific vector and an grid, we can identify which slab contains a sample point, , in time with -time preprocessing.

Proof 3.12.

Consider the orthogonal projection of grid lines in onto a line perpendicular to , the order in which these lines appear in defines the possible slabs that could contain (see Figure 4(a)). We can project a given grid line onto in constant time. With the grid lines in sorted order, we can perform a binary search through the points in time to identify the slab containing .

Using general sorting algorithms, we could sort the grid lines in time. However, since these lines belong to a grid, we can exploit the uniformity to sort them in only time. Consider the two basis vectors defining gridpoint positions and , and their sizes after orthogonal projection onto , , and . Without loss of generality, assume that , in which case grid lines originating from adjacent gridpoints in the same row must be exactly away. In addition, any region -wide, that does not start at a grid line, must contain at most a single point from each row. Furthermore, since points in the same row are always away, they must appear in the same order in each region.

We can therefore initially split into regions wide. Sorting the grid lines into their region can therefore be calculated in time. Now we can sort the points in the region containing points from every row in time. Since each region has the same order, we can place points in other regions by following the order found in our sorted region, thus taking preprocessing time for sorting the points.

Recall that points to the left of a given line contribute to the edge to the left of , i.e., all points belonging to slabs to the left of . We can therefore isolate the points in these slabs causing the largest potential change in MWA.

Lemma 3.13.

For a vertex and grid line through gridpoint , let and refer to the slabs on the subdivision imposed by immediately to the left and right of , respectively. Assuming maintains the points to the left of imposing the strictest constraints on , and to the right, one can calculate in time.

Proof 3.14.

Finding and can now be achieved by optimizing only over the set of points in and all points in edge slabs. This set would contain two points per vertex and two points per edge, yielding a constant number of points. Thus, MWA() can be found in constant time.

Theorem 3.15.

A -approximation of the in two dimensions can be found in time under translations.

Proof 3.16.

For each vertex, , we use Lemma 3.11 to identify the slab for every sample point. For each slab, we maintain only the two extreme points for each of the edges incident on . Let denote the vector describing the edge incident on from the left, and vice versa for incident from the right. For each slab, we maintain only points which when projected in the relevant direction, , cause the furthest and closest intersections with the boundary (shown for in Figure 4(b)).

With a left-to-right pass, we update a slab’s extreme points relative to to maintain the extreme points for itself and slabs to its left. With a right-to-left pass, we do the same for and maintain points in its slab and slabs to its right.

Thus, for each vertex, we create the slabs in time, place every sample point in its slab in time, and maintain only the extreme points per slab in constant time per sample point. With time to update each slab after processing the sample points, we can update the slabs such that they hold the extreme points across all slabs to their left or right (relative to and , respectively).

For each edge slab, finding the extreme points is much simpler since finding and will always be based on the same contributing facet for all points within the same edge slab .

Thus, after finding the extreme points in both vertex slabs, we can calculate in constant time as described in Lemma 3.13. Taking time to find , which by Theorem 3.9 provides a -approximation of the minimum width annulus, and considering the pre-processing time completes the proof of the claimed time bound.

4 Approximating MWA allowing rotations

In this section we consider rotations. As with Lemma 3.7, our goal is to find the maximum tolerable rotation sufficient for a -approximation. Observe that when centered about the global optimum, the solution found under both rotation and translation is at least as good as the solution found solely through rotation (i.e., under a fixed center). We will therefore first prove necessary bounds for a -approximation under rotation only with the understanding that they remain when also allowing for translation.

Consider the polyhedral cone around , and define the bottleneck angle as the narrowest angle between a point on the surface of the polyhedral cone and . Let be the smallest bottleneck angle across all . Let MWA) denote the MWA centered at , where C has been rotated by angle . Let us also use similar notations for MinBall and MaxBall.

Lemma 4.1.

Rotating by causes to grow by at most (and the reciprocal for ).

Proof 4.2.

Similarly to Lemma 3.3, all sample points must be contained within . MinBall can only expand to the furthest point within under the new rotated distance function. Let us now consider the triangle formed between , the vertex of the original MinBall, , and the rotated vertex (shown in Figure 5(a)). Since our calculations focus towards the same vertex, we can work with Euclidean distances. The quantity defines the radius of the original polyhedron, and the radius of the rotated one. With as the remaining angle in our triangle and using the sine rule, we find that

Observe that is the angle maximizing this scale difference. This applies to rotating by in any direction about (as shown in Figure 5(b)), and since this direction need not coincide with , the scaled polyhedron might not touch the original.

For MaxBall to be contained within MaxBall, the same example holds after switching references to the scaled and original. In this case, minimizes .

Let us now determine the rotation from the optimal orientation that achieves a -approximation.

Lemma 4.3.

Given a center , we have that is a -approximation when is smaller than

Proof 4.4.

Define as the ratio of the radius of MinBall() to (i.e., ). Note that corresponds to the slimness of under over all rotations of . Using Lemma 4.1, we know that

| (1) | |||

| (2) |

For a -approximation, imposing the right side of Relation (1), its left side follows by definition of , and Relation (2) by cancellation of . Since is constant, we can rearrange the above into a quadratic equation and solve for .

| (3) |

However, will find , whereas we need the obtuse angle .

Thus, proving this lemma’s titular bound,

and achieving a -approximation.

Let us now establish a more generous lower-bound that will prove helpful when developing algorithms.

Lemma 4.5.

The angular deflection required for a -approximation is larger than .

Proof 4.6.

Observe that is of the form and thus, in order for to be positive, we must have and . We will prove this is the case.

| (4) | |||

| (5) | |||

| (6) |

Equation (4) follows from Equation (3) after expanding. Relation (6) follows after using Equation (5) as a lower bound for the square root term in Equation (4) since and . This allows us to bound by using a Taylor’s series expansion to find ,

thus proving that the bound from Lemma 4.3 is greater than .

Lemma 4.7.

For fixed rotation of , assume we have an -time algorithm for the optimal minimum-width annulus under translation.

We can find a-approximation of the under rotations and translations in time.

Proof 4.8.

A -dimensional shape has a -dimensional axis of rotation. Let us evenly divide the unit circle into directions. Let us also define a collection of all possible direction combinations as a grid of directions. For each grid direction, rotate by the defined direction and calculate the MWA in time. The optimal orientation must lie between the -dimensional cube formed by grid directions.

Therefore, as long as the diagonal is smaller than , there exists a grid direction within of the optimal orientation, which implies a -approximation by Lemma 4.5. Thus, we can achieve a -approximation in time , where and are constant under a fixed distance function .

With a fixed center, Lemma 4.7 can be used to approximate under rotations in time.

Theorem 4.9.

One can find a -approximation of under rotations and translations in time for , and time for .

Proof 4.10.

Consider using an approximation algorithm (from Theorems 3.9 or 3.15) instead of an exact algorithm as in Lemma 4.7. Let us define as the approximation ratio necessary from the subroutines in order to achieve an overall approximation ratio of , such that . Since and , must be larger than , and thus, we can always pick a value for which is and achieves the desired approximation. Thus, by following Lemma 4.7, we can find a -approximation using the -approximation algorithm from Theorem 3.9 to find a -approximation in time. Alternatively, for two dimensions, we can instead use the algorithm from Theorem 3.15 to find a -approximation in time.

References

- [1] P. K. Agarwal, S. Har-Peled, and K. R. Varadarajan. Approximating extent measures of points. J. ACM, 51(4):606–635, 2004.

- [2] P. K. Agarwal, S. Har-Peled, K. R. Varadarajan, et al. Geometric approximation via coresets. Combinatorial and Computational Geometry, 52(1), 2005.

- [3] P. K. Agarwal, S. Har-Peled, and H. Yu. Robust shape fitting via peeling and grating coresets. Discrete & Computational Geometry, 39(1):38–58, 2008.

- [4] N. Amenta, D. Attali, and O. Devillers. Size of Delaunay triangulation for points distributed over lower-dimensional polyhedra: a tight bound. Neural Information Processing Systems (NeurIPS): Topological Learning, 2007.

- [5] N. Amenta and M. Bern. Surface reconstruction by Voronoi filtering. Discrete & Computational Geometry, 22(4):481–504, 1999.

- [6] S. Arya, G. D. da Fonseca, and D. M. Mount. Approximate convex intersection detection with applications to width and Minkowski sums. In 26th European Symposium on Algorithms (ESA). Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, 2018.

- [7] D. Attali and J.-D. Boissonnat. Complexity of the delaunay triangulation of points on polyhedral surfaces. Discrete & Computational Geometry, 30(3):437–452, 2003.

- [8] D. Attali and J.-D. Boissonnat. A linear bound on the complexity of the Delaunay triangulation of points on polyhedral surfaces. Discrete & Computational Geometry, 31(3):369–384, 2004.

- [9] S. W. Bae. Computing a minimum-width square annulus in arbitrary orientation. Theoretical Computer Science, 718:2–13, 2018.

- [10] S. W. Bae. Computing a minimum-width cubic and hypercubic shell. Operations Research Letters, 47(5):398–405, 2019.

- [11] S. W. Bae. On the minimum-area rectangular and square annulus problem. Computational Geometry, 92:101697, 2021.

- [12] G. Barequet, P. Bose, M. T. Dickerson, and M. T. Goodrich. Optimizing a constrained convex polygonal annulus. J. Discrete Algorithms, 3(1):1–26, 2005.

- [13] G. Barequet, M. T. Dickerson, and Y. Scharf. Covering points with a polygon. Computational Geometry, 39(3):143–162, 2008.

- [14] P. Bose and P. Morin. Testing the Quality of Manufactured Disks and Cylinders. In G. Goos, J. Hartmanis, J. van Leeuwen, K.-Y. Chwa, and O. H. Ibarra, editors, Algorithms and Computation, volume 1533, pages 130–138. Springer Berlin Heidelberg, Berlin, Heidelberg, 1998.

- [15] T. M. Chan. Approximating the diameter, width, smallest enclosing cylinder, and minimum-width annulus. In 16th Symposium on Computational Geometry (SoCG), pages 300–309, 2000.

- [16] S. Das, A. Nandy, and S. Sarvottamananda. Linear time algorithm for 1-Center in under convex polyhedral distance function. In D. Zhu and S. Bereg, editors, Frontiers in Algorithmics, volume 9711, pages 41–52. Springer, 2016.

- [17] O. Devillers and P. A. Ramos. Computing roundness is easy if the set is almost round. International Journal of Computational Geometry & Applications, 12(3):229–248, 2002.

- [18] J. Erickson. Nice point sets can have nasty Delaunay triangulations. In 17th Symposium on Computational Geometry (SoCG), pages 96–105, 2001.

- [19] O. N. Gluchshenko, H. W. Hamacher, and A. Tamir. An optimal algorithm for finding an enclosing planar rectilinear annulus of minimum width. Operations Research Letters, 37(3):168–170, 2009.

- [20] K. Mehlhorn, T. C. Shermer, and C. K. Yap. A complete roundness classification procedure. In Proceedings of the Thirteenth Annual Symposium on Computational Geometry - SCG ’97, pages 129–138, Nice, France, 1997. ACM Press.

- [21] J. Mukherjee, P. R. Sinha Mahapatra, A. Karmakar, and S. Das. Minimum-width rectangular annulus. Theoretical Computer Science, 508:74–80, 2013.

- [22] J. M. Phillips. Coresets and sketches. In Handbook of Discrete and Computational Geometry, pages 1269–1288. Chapman and Hall/CRC, 2017.

- [23] U. Thakker, R. Patel, S. Tanwar, N. Kumar, and H. Song. Blockchain for diamond industry: Opportunities and challenges. IEEE Internet of Things Journal, pages 1–1, 2020.

- [24] H. Yu, P. K. Agarwal, R. Poreddy, and K. R. Varadarajan. Practical methods for shape fitting and kinetic data structures using coresets. Algorithmica, 52(3):378–402, 2008.