Detecting Backdoor Attacks in Federated Learning via Direction Alignment Inspection

Abstract

The distributed nature of training makes Federated Learning (FL) vulnerable to backdoor attacks, where malicious model updates aim to compromise the global model’s performance on specific tasks. Existing defense methods show limited efficacy as they overlook the inconsistency between benign and malicious model updates regarding both general and fine-grained directions. To fill this gap, we introduce AlignIns, a novel defense method designed to safeguard FL systems against backdoor attacks. AlignIns looks into the direction of each model update through a direction alignment inspection process. Specifically, it examines the alignment of model updates with the overall update direction and analyzes the distribution of the signs of their significant parameters, comparing them with the principle sign across all model updates. Model updates that exhibit an unusual degree of alignment are considered malicious and thus be filtered out. We provide the theoretical analysis of the robustness of AlignIns and its propagation error in FL. Our empirical results on both independent and identically distributed (IID) and non-IID datasets demonstrate that AlignIns achieves higher robustness compared to the state-of-the-art defense methods. The code is available at https://github.com/JiiahaoXU/AlignIns.

1 Introduction

Unlike traditional centralized training methods, which require gathering and processing all data at a central location such as a server, Federated Learning (FL) [32], as a decentralized training paradigm, allows a global model to learn from data distributed across various local clients, thereby achieving the goal of privacy-preserving. During training, the server distributes the global model to local clients, and each client trains the received global model using its local dataset, and then submits its local model update to the server for global model refinement. FL has been applied in various fields, including healthcare[35], finance [28], and remote sensing [27], where local data privacy is essential.

Although promising, the distributed nature of FL systems makes them vulnerable to a range of advanced poisoning attacks [15, 26, 45]. This vulnerability primarily stems from the server’s lack of close monitoring of the local data and the training algorithm on clients. Consequently, this drawback allows attackers to compromise the data of local clients or interfere with the training algorithm, enabling them to inject malicious local model updates that distort the performance of the global model. For example, backdoor attacks [4, 48, 14, 54, 46] have gained significant attention due to their stealthiness and practical effectiveness. In detail, backdoor attacks in FL seek to preserve the performance of the global model on clean inputs (i.e., the main task), while inducing the global model to make incorrect predictions on inputs that contain a certain predefined feature (i.e., the backdoor task). As backdoor attacks maintain the main task and the backdoor task simultaneously, the malicious local model updates are statistically similar to benign ones [46, 36] (poison-coupling effect [20]), making anomaly detection more challenging on the server side.

Existing defense methods (i.e., aggregation rule) usually aim to identify malicious model updates and filter them out to achieve better robustness by magnitude-based metrics extracted from local model updates (e.g., Manhattan distance [19, 22] and Euclidean distance [6, 15]). However, magnitude-based metrics are ineffective in distinguishing stealthy backdoor attacks where benign and malicious model updates are usually similar in magnitude. Additionally, when the global model tends to converge, the magnitude of each model update becomes very small, making effective malicious manipulation on magnitude negligible. To this end, some works employ Cosine similarity to check the pair-wise directional information of model updates [11, 42, 36]. However, pair-wise Cosine similarity between two model updates only captures their general directional similarity and overlooks fine-grained information (e.g., signs of parameters), resulting in limited robustness. In addition, in FL settings with non-IID data, the pair-wise Cosine similarity of model updates can be easily perturbed by the naturally diverse benign model updates. Furthermore, there is a deficiency in theoretical analysis within the literature concerning the effects of data heterogeneity on defense methods deployed by the server in FL.

In this work, we propose a novel defense method designed to defend against backdoor attacks in FL, named AlignIns (Direction Alignment Inspection), which examines local model updates for directional alignment at different granularity levels to identify malicious updates. Specifically, after receiving all model updates from clients, AlignIns evaluates each update by (1) inspecting temporal directional alignment with the global model of the latest round with Cosine similarity and (2) assessing more fine-grained sign alignment with the principal sign across all updates with a novel metric sign alignment ratio. Particularly, when calculating the sign alignment ratio, AlignIns focuses on the signs of important parameters in each update to accurately capture alignment information. Using these two directional metrics, AlignIns performs anomaly detection with the robust which requires minimal hyperparameters to filter updates with unusual directional patterns out. Finally, AlignIns clips the remaining updates to mitigate the impact of updates with abnormally large magnitudes. We also provide a theoretical analysis of AlignIns’ robustness and its propagation error in FL. The main contributions of this work are three folds:

-

•

We present a novel defense method, AlignIns, to defend against backdoor attacks in FL. To the best of our knowledge, AlignIns is the first defense method in FL that analyzes the directional patterns of local model updates at different levels of granularity. AlignIns is fully compatible with existing FL frameworks.

-

•

To the best of our knowledge, we provide the first theoretical robustness analysis for a filtering-based defense method against backdoor attacks under non-IID data in FL. Moreover, we prove that the propagation error of AlignIns is bounded during the training of FL.

-

•

We empirically evaluate the effectiveness of AlignIns through extensive experiments on both IID and non-IID datasets against various state-of-the-art (SOTA) backdoor attacks. Compared to existing SOTA defense methods, AlignIns exhibits superior robustness.

2 Background and Related Works

Federated Learning. In a typical FL system, a central server controls a set of clients to train a global model collaboratively. The objective of FL is to solve the following optimization problem: where denotes the learning objective specific to client and denotes the local dataset for client . The commonly used method to solve this problem iteratively is FedAvg [33]. In detail, at round of FedAvg, each client downloads the current global model , updates it by optimizing its local objective, resulting in , and transmits its model update to the server. The server then refines the global model by averaging these updates as follows: . This process continues until the global model reaches convergence.

Backdoor attacks in FL. Empirical evidence has shown that FL is vulnerable to backdoor attacks [14, 4, 46, 48, 54, 9, 52, 37, 23] due to its lack of access to local training data [4]. For instance, Projected Gradient Descent (PGD) attack [46] periodically projects the local model onto a small sphere centered around the global model from the previous training round, with a predefined radius. Distributed Backdoor Attack (DBA) [48] decomposes the centralized trigger into several smaller, distributed local triggers. Each poisoned client uses one of these local triggers, but during testing, the adversary injects the full trigger into the test samples. Recently, research has focused on trigger-optimization backdoor attacks [52, 9, 37, 29, 1], which aim to search optimized triggers to enhance the effectiveness and stealthiness.

Defending against backdoor attacks in FL. Generally, based on how defense methods mitigate the impact of malicious updates, existing defense methods can be categorized into filtering-based methods [6, 42, 7, 22, 19, 36, 50, 51] and influence-reduction methods [40, 41, 11, 38, 20].

1) influence-reduction methods aim to integrate all model updates but employ strategies to reduce the impact of malicious updates. For instance, RFA [40] is proposed to use the geometric median of local models as the aggregation result, under the assumption that malicious models significantly deviate from benign models. Foolsgold [11] assumes that the malicious updates are consistent with each other. It assigns aggregation weights to model updates based on the maximum Cosine similarity between the last layers of pairwise model updates. A higher Cosine similarity value indicates a higher probability that the updates are malicious, leading to smaller aggregation weights being assigned. The effectiveness of influence-reduction methods is inherently limited because they cannot eliminate the impact of malicious activity, leading to a significant risk of compromise.

2) Filtering-based methods aim to detect and remove malicious local model updates before aggregation thus attempting to achieve the highest robustness. For example, Multi-Krum [6] selects the multiply reliable local model updates for aggregation by identifying the one with the smallest sum of squared Euclidean distances to all other updates. Multi-Metrics [19] explores the combination of Manhattan distance, Euclidean distance, and Cosine similarity for each update to collaboratively filter out outliers. However, due to the dual objectives of backdoor attacks—that is, maintaining accuracy on the main task while maximizing accuracy on the backdoor task—malicious updates must mimic benign model updates, important weights for the main task typically have large values and can dominate the magnitude of a model update. As a result, magnitude-based detection methods become ineffective against backdoor attacks. Additionally, methods that rely solely on Cosine similarity also show limited effectiveness since they capture general directional alignment and overlook finer-grained information.

3 Our Solution: AlignIns

Our method, AlignIns, detailed in Algorithm 1, mitigates the impact of malicious updates through a two-step process. First, direction alignment inspection is applied to examine each local model update comprehensively in terms of direction. Second, post-filtering model clipping is used to further enhance the robustness of AlignIns on defending potential magnitude-based attack methods before final aggregation.

Direction alignment inspection. Existing defense methods against backdoor attacks in FL primarily focus on examining the magnitude (e.g., Manhattan distance and Euclidean distance) and the overall direction (e.g., Cosine similarity) of model updates. However, backdoor attacks are designed to maintain the main task accuracy, making the magnitude difference between malicious and benign updates nearly indistinguishable. Additionally, advanced attacks such as PGD [46] and Lie [5] attacks are specifically crafted to bypass magnitude-based defenses. Therefore, AlignIns focuses on direction-based analysis to identify suspect updates, using two processes described below.

1) Temporal direction alignment checking: Since malicious clients need to maintain both the main task and the backdoor task, the optimization direction of a malicious local model tends to deviate from that of benign models. AlignIns leverages this deviation and performs a Temporal Direction Alignment () checking, which calculates the Cosine similarity between a local update and the latest global model (algorithm 1 in Algorithm 1) to assess the general alignment level of each local update. Formally, the value of a local model update is calculated as

| (1) |

We use local model updates rather than local models because our goal is to measure how closely each client’s updates align with the direction of the global model. Local model updates specifically capture these incremental adjustments. Notably, malicious clients tend to exhibit similar values, which differ from those of benign clients, creating an opportunity for detection. It is important to note that while the magnitude of model updates typically decreases as the global model converges, the value does not follow the same trend. Consequently, magnitude-based anomaly detection becomes progressively less effective throughout training due to the decreasing magnitude. In contrast, the variability in values continues to be useful for identifying malicious behavior.

2) Masked principal sign alignment checking: In backdoor attacks where the manipulations are stealthy, subtle malicious directional information can easily blend into the parameters of models with large magnitude, especially for models with large dimensions, which makes the less useful under strong backdoor attacks since the captures the overall directional information. Therefore, in addition to the , we look into the signs of parameters to provide a finer-grained directional assessment of local model updates. The signs of a vector represent its coordinate-wise direction. In the context of backdoor attacks, the distributions of the signs of malicious model updates differ from those of benign updates. This is particularly significant when the model is close to convergence, at which point the magnitude of model updates becomes very small, making large manipulations on magnitudes impractical. Therefore, manipulation of the direction, or the signs of parameters, can emerge as a more significant and effective strategy. Several works also utilize the signs of models for enhancing backdoor robustness. For example, RLR [38] assigns an opposite global learning rate to a coordinate of the averaged model update if the signs on this coordinate do not consistently align with the majority across all updates. SignGuard [49] calculates the proportions of positive, zero, and negative signs for each model update as the input of a clustering algorithm to identify malicious model updates. However, these methods utilize the signs of all parameters in the model update, regardless of their significance. Consequently, the performance of sign-based metrics can be significantly impacted by those many unimportant parameters, especially for large DNN models, leading to an inaccurate representation of the model update’s direction.

To this end, AlignIns utilizes a Masked Principle Sign Alignment () checking to inspect the sign alignment degree between the important parameters of each local update and a well-designed principle sign of all local updates. Specifically, to construct the principle sign over local updates, for each coordinate of local updates, we take the majority of the signs across all model updates as the principal sign of this coordinate, which can be mathematically formulated as where represents the vector of principal signs and is the function to take the signs of a vector. Note that the principal sign represents sign-voting results for each coordinate, making it stand for the major direction/dynamic for each coordinate. With this principle sign over local updates, we inspect the alignment of the signs of important parameters of each model update with it. More specifically, we use a Top- indicator defined as follows to identify the most important parameters that have the largest absolute values in a vector.

Definition 1 (Top- Indicator ).

For a vector and a masking parameter , where , the Top- indicator : is defined as if and otherwise, where , here is a permutation of such that for all .

The Top- indicator takes each local model update as input and outputs a mask vector in which each element is either 1 or 0 with the same size as the input. To quantify the alignment in sign distributions of each local model update and the principle sign, we define the Sign Alignment Ratio () as follows.

Definition 2 (Sign Alignment Ratio).

For vectors and , the sign alignment ratio of to is defined as where is -norm.

Here, and a larger indicate a higher degree of alignment between the signs of and . Combining and , we have the value for local update formulated as follows:

| (2) |

where is the Hadamard product, computes a sign difference vector, capturing the difference between the sign of and the principal reference sign . Since checking focuses on the important parameters, this difference vector is element-wise multiplied with the Top- mask derived from , effectively setting unimportant coordinates to zero. The -norm is then applied to count the not-aligned elements and with the masking parameter to ultimately determine the . checking effectively reveals malicious local updates by combining both magnitude and directional information from model updates, allowing for clear differentiation between malicious and benign updates. AlignIns calculates the value for each update with the principal sign iteratively (algorithm 1–1) and forward them to the following anomaly detection process.

3) Efficient anomaly detection with : W apply robust filtering to remove updates with abnormal and values. Specifically, we use the robust standardization metric named the Median-based Z-score () [50, 51], detailed in Definition 3, which is a variant of the traditional Z-score standardization metric.

Definition 3 ().

For a set of values with median and standard deviation , the of any is defined as

| (3) |

calculates the number of standard deviations an element is from the median, which may be either positive or negative. In AlignIns, the s for and values are computed for each local update (algorithm 1–1). Those with high absolute s (i.e., outliers) are excluded using two predetermined filtering radii: for and for (algorithm 1–1). The use of the allows for the adaptation to the varying range of and values during training, requiring only minimal hyper-parameters. Additionally, by configuring and , we can manage the trade-off between the robustness and main task accuracy of AlignIns. For example, when robustness is the primary concern in the FL, choosing small and values is essential to attain the highest robustness.

Post-filtering model clipping. After filtering, the remaining clients, considered benign, are included in the set (algorithm 1) and contribute to the model averaging process. However, since our filtering primarily focuses on the direction of model updates (although does consider magnitude when using the Top- indicator), there is a risk that it might overlook updates with large magnitudes, such as those updates generated by Scaling attack [4]. To this end, AlignIns re-scales model updates in by using the median of the -norms of these updates as a clipping threshold and aggregates the clipped model updates as the global model update (algorithm 1–1). It is worth noting that performing clipping before filtering does not affect the filtering results. However, clipping after filtering enhances robustness, as the clipping threshold is more likely determined by benign updates. We discuss the computational cost of AlignIns and compare it with other baselines in Appendix Section 12.

4 Robustness and Propagation Error Analysis

In this section, we conduct a theoretical analysis of the robustness of AlignIns, as well as its propagation error in FL. Before presenting our theoretical results, we make the following assumptions. Note that Assumption 1–2 are commonly used in the theoretical analysis of distributed learning systems [18, 49, 34]. Assumption 3 states a standard measure of inter-client heterogeneity in FL [2, 21, 8]. This heterogeneity complicates the problem of FL with backdoor adversaries, as it may cause the server to confuse malicious updates with flawed model updates from benign clients holding outlier data points [2].

Assumption 1 (-smoothness [34]).

Each local objective function for benign client is -Lipschitz smooth with , i.e., for any , which further gives:

Assumption 2 (Unbiased gradient and bounded variance).

The stochastic gradient at each benign client is an unbiased estimator of the local gradient, i.e., and has bounded variance, i.e., for any , where the expectation is over the local mini-batches. We also denote for convenience.

Assumption 3 (Bounded heterogeneity).

There exist a real value such that for any , where the .

Note that these assumptions apply to benign clients only since malicious clients do not need to follow the prescribed local training protocol of FL.

4.1 Robustness Analysis of AlignIns

To theoretically evaluate the efficacy of a filtering-based defense method like AlignIns, we introduce the concept of -robust filtering [50] as defined in Definition 4. Note that Definition 4 is similar to -robustness defined in [2, 3], -ARAgg defined in [21, 13, 31], and -resilient averaging defined in [10]. Our robustness definition adopts a constant upper bound and focuses on quantifying the distance between the output of a filtering-based defense method and the average of all benign updates, which represents the optimal output of such a rule.

Definition 4 (-robust filtering [50]).

A filtering-based aggregation rule is called -robust if for any vectors and a benign set of size , the output satisfies where , and refers to the robustness coefficient of .

Remark 1.

The -robust filtering guarantees that the error of a filtering-based aggregation rule in estimating the average of the benign inputs is upper-bounded by a constant . This measure provides a quantitative way to assess the robustness of the filtering-based aggregation rule. A smaller indicates a smaller discrepancy between the empirical output and the optimal output of . If identifies and removes all malicious inputs and keeps all benign inputs, we have , achieving the highest level of robustness.

Based on Definition 4, we prove that the proposed AlignIns, when applied to input models, of which are malicious, satisfies -robust filtering with , as stated in Lemma 1.

Lemma 1 (-robustness of AlignIns).

Under Assumption 2–3, assume , with a positive constant , AlignIns satisfies -robust filtering with

if the local learning rate satisfies and there exist two sufficiently large filtering radii such that . Here, and represent the gradient variance and local divergence, respectively; is the clipping threshold.

Proof.

The proof is given in Appendix Section 13.2. ∎

Remark 2.

The condition on highlights the importance of selecting appropriate filtering radii. These radii cannot be zero or too small; otherwise, only the median or a few model updates will be averaged to update the global model. This can lead to a performance drop due to the lack of model updates. Moreover, the model clipping threshold can effectively control the magnitude of potential malicious updates in the selection set, thus preventing from exploding due to updates with large magnitudes. Indeed, in the literature, model clipping has demonstrated its effectiveness in mitigating the impact of malicious model updates [53, 39, 49]. In addition, the result also shows the importance of reducing the gradient variance of stochastic gradient and local heterogeneity to enhance robustness performance. Our work is orthogonal to existing variance or divergence reduction methods [13, 30] and can be combined with them to further improve the robustness. We argue that AlignIns enjoys comparable robustness with several classical defense methods, for example, non-filtering-based method RFA [40] (), and filtering-based method Krum [6] ()111Results of RFA and Krum are taken from [2]. Note that the definition of in [2] is different from ours, but the difference part can be reduced to a constant bound. Therefore, we can safely incorporate these results into our discussion without losing generality..

4.2 Propagation Error of AlignIns in FL

Based on the -robustness of AlignIns, we analyze its propagation error during training. Specifically, let denote the model trained with Fed-AlignIns under backdoor attacks, where of the clients are malicious, and let denote a model trained exclusively with benign clients using FedAvg. Starting from the same initial model , we aim to measure the difference between these two models after rounds of training, defined as referred to as the propagation error [39]. Let represent the output of AlignIns at the -th round. If the highest level of robustness is not achieved at round , the error will propagate to the next round, resulting in a shifted starting point for local SGD at round . This discrepancy will gradually widen the gap between and . Our analysis captures this robustness error at each round and examines its cumulative effect after rounds. In Lemma 2, we show that, assuming Assumption 1–3 hold, the propagation error of AlignIns remains bounded.

Lemma 2 (Bounded Propagation Error).

Let Assumption 1–3 hold. If the local learning rate , the propagation error of AlignIns is bounded as

under backdoor attacks where out of clients are malicious. Here, is given in Lemma 1, is the cumulative global learning rate, and is a global learning rate scheduler, possibly static.

Proof.

The detailed proof is in Appendix Section 13.3. ∎

Remark 3.

When , converges to a constant for learning rate schedulers like exponential decay, which implies a constant bounded on propagation error. The result shows that besides the robustness error bounded by , the error of local gradient estimation, which is bounded by , in local SGD also propagates during the training, increasing the overall propagation error. This is because at any round , if the benign starting point for local training is the same, i.e., , then the local gradients/model updates on and will be identical for benign clients. Therefore, the gap between the updated global models and solely depends on the robustness error (i.e., the effectiveness of AlignIns in filtering out malicious updates). However, if , which means is not benign and has been poisoned in previous rounds, the local gradients/model updates on and will differ for benign clients, resulting in an error bounded by the gradient variance, even if AlignIns successfully filters out all malicious updates. Hence, to further reduce the propagation error, AlignIns can be combined with variance-reduction methods like [13, 30], which is orthogonal to AlignIns.

5 Experimental Settings

Datasets: In our experiments, we primarily use CIFAR-10 [24] and CIFAR-100 [24] datasets to evaluate the performance of various defense methods. Additionally, we present the superior performance of AlignIns on other benchmark datasets (MNIST [25], FMNIST [47], and Sentiment140 [12]) in Appendix Section 10.6. For all datasets, we simulate a cross-silo FL system with clients. Additionally, we also present the superior performance of AlignIns on a cross-device FL system with clients and client sampling. We consider both IID and non-IID settings. For IID settings, we distribute the training data evenly to local clients. For non-IID settings, we follow [17, 20, 19] to use Dirichlet distribution to simulate the non-IID settings with a default non-IID degree .

Learning Settings: We use SGD as the local solver, with the initial learning rates set as and and the number of local training epochs set as . The number of training rounds is set as for CIFAR-100 and for CIFAR-10. For AlignIns, the default filtering radii are set as and . We conduct extensive experiments to study the impact of filtering radii and present results and analysis in Appendix Section 11. The default masking parameter is set as , where is the model dimension so that the Top-% of model parameters are used for the checking.

Evaluated Attack Methods: We consider SOTA backdoor attacks, including Badnet [14], DBA [48], Scaling [4], PGD [46], and Neurotoxin [54]. We provide the detailed attack model and settings for attack methods in Appendix Section 8.1–8.2. We present the empirical performance of AlignIns under the strong trigger-optimization attack [9] in Appendix Section 10.3. Moreover, we study the potential adaptive attacks tailored to AlignIns and untargeted attacks [49] in Appendix Section 10.4–10.5, although these are beyond the primary scope of this work. To simulate effective backdoor attacks (achieving a BA over [22]), the malicious client will poison of its local data, where represents the data poisoning ratio. The attack ratio is set to % by default, which means % of the clients in the system are malicious. Experiments of AlignIns on defending backdoor attacks with various attack ratios are given in Appendix Section 10.7.

| Dataset (Model) | Methods | Clean MA | Badnet | DBA | Neurotoxin | Avg. BA | Avg. RA | |||||||||

| BA | RA | BA | RA | BA | RA | |||||||||||

| =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | |||||

| CIFAR-10 (ResNet9 [16]) | FedAvg | 89.47 | 51.56 | 67.61 | 45.79 | 31.24 | 56.21 | 70.42 | 40.62 | 27.92 | 44.89 | 79.40 | 50.41 | 19.60 | 61.68 | 35.93 |

| FedAvg* | 89.47 | 2.06 | 2.06 | 85.60 | 85.60 | 2.06 | 2.06 | 85.60 | 85.60 | 2.06 | 2.06 | 85.60 | 85.60 | 2.06 | 85.60 | |

| RLR | 79.16 | 2.32 | 2.00 | 76.72 | 73.33 | 3.01 | 3.04 | 77.09 | 77.13 | 3.12 | 3.87 | 73.98 | 73.29 | 2.89 | 35.93 | |

| RFA | 87.73 | 70.67 | 90.24 | 27.74 | 9.26 | 47.67 | 66.97 | 47.29 | 30.14 | 81.27 | 96.13 | 17.11 | 3.69 | 75.49 | 22.54 | |

| MKrum | 87.02 | 81.10 | 97.47 | 18.11 | 2.51 | 2.17 | 4.33 | 83.89 | 79.10 | 65.28 | 89.18 | 31.81 | 10.01 | 56.59 | 37.57 | |

| Foolsgold | 89.49 | 69.14 | 68.84 | 29.64 | 30.10 | 51.18 | 60.73 | 44.83 | 36.08 | 2.91 | 2.82 | 85.27 | 84.76 | 42.60 | 51.78 | |

| MM | 89.15 | 41.19 | 93.88 | 53.88 | 6.01 | 52.24 | 51.30 | 43.54 | 45.08 | 43.92 | 83.92 | 51.12 | 15.11 | 61.08 | 35.79 | |

| Lockdown | 88.56 | 6.31 | 10.82 | 81.88 | 79.50 | 11.63 | 6.03 | 78.82 | 75.77 | 3.40 | 3.27 | 82.73 | 83.14 | 6.91 | 80.31 | |

| AlignIns | 88.64 | 1.91 | 2.21 | 86.03 | 85.57 | 2.13 | 2.14 | 85.77 | 85.88 | 2.66 | 2.20 | 85.46 | 85.31 | 2.21 | 85.67 | |

| CIFAR-100 (VGG9 [44]) | FedAvg | 64.29 | 99.20 | 99.54 | 0.68 | 0.35 | 99.25 | 99.36 | 0.64 | 0.54 | 94.41 | 93.36 | 4.36 | 5.28 | 97.52 | 1.98 |

| FedAvg* | 64.29 | 0.62 | 0.62 | 53.03 | 53.03 | 0.62 | 0.62 | 53.03 | 53.03 | 0.62 | 0.62 | 53.03 | 53.03 | 0.62 | 53.03 | |

| RLR | 44.34 | 96.57 | 99.85 | 1.81 | 0.12 | 24.41 | 94.08 | 24.97 | 3.22 | 0.04 | 0.00 | 29.07 | 29.73 | 52.49 | 14.82 | |

| RFA | 53.92 | 4.32 | 1.45 | 37.60 | 39.88 | 2.15 | 0.78 | 39.73 | 41.51 | 99.74 | 89.59 | 0.21 | 6.59 | 33.01 | 27.59 | |

| MKrum | 51.28 | 1.33 | 1.54 | 38.13 | 38.49 | 1.36 | 1.54 | 37.85 | 37.91 | 99.82 | 99.87 | 0.12 | 0.10 | 36.21 | 25.49 | |

| Foolsgold | 64.13 | 99.02 | 99.30 | 0.83 | 0.57 | 99.15 | 99.39 | 0.74 | 0.51 | 21.79 | 6.21 | 42.06 | 46.40 | 70.81 | 15.19 | |

| MM | 63.26 | 99.51 | 99.87 | 0.37 | 0.11 | 99.53 | 99.70 | 0.35 | 0.19 | 98.48 | 98.97 | 1.32 | 0.83 | 99.34 | 0.53 | |

| Lockdown | 62.88 | 55.21 | 24.14 | 28.45 | 43.06 | 34.37 | 49.02 | 34.06 | 27.93 | 0.85 | 0.67 | 42.66 | 47.04 | 27.38 | 37.20 | |

| AlignIns | 63.45 | 0.79 | 0.71 | 50.45 | 51.53 | 0.45 | 0.57 | 50.81 | 52.08 | 0.49 | 0.53 | 51.11 | 50.66 | 0.59 | 51.11 | |

Evaluated Defense Methods: We present the detailed defense model in Appendix Section 9. We comprehensively compare AlignIns with the non-robust baseline FedAvg and six existing SOTA defense methods, including RLR [38], RFA [40], Multi-Krum (MKrum) [6], Foolsgold [11], Multi-Metric (MM) [19], and Lockdown [20]. Additionally, we compare our approach with an ideally perfect filtering-based robust aggregation, FedAvg*, which is assumed to perfectly identify and remove all malicious updates and average all the benign updates to update the global model.

Evaluation Metrics: We use three metrics to evaluate the performance of defense methods, including main task accuracy (MA), which measures the percentage of clean test samples that are accurately classified to their ground truth labels by the global model; backdoor attack accuracy (BA), which measures the percentage of triggered samples that are misclassified to the target label by the global model; and robustness accuracy (RA), which measures the percentage of triggered samples that are accurately classified to their ground-truth labels by the global model, despite the presence of the trigger. A good defense method should achieve high MA and RA and low BA.

6 Experimental Results

Main results in IID setting. In Table 1, we report the performance of various defense methods under no attack (denoted by “Clean”), Badnet, DBA, and Neurotoxin attacks for IID CIFAR-10 and CIFAR-100. The best results are highlighted in bold font, and the second best results are underlined. Overall, AlignIns demonstrates superior performance compared with other baselines as it achieves the best average BA and RA over three attack methods. Specifically, for CIFAR-10, while RLR offers a satisfactory degree of robustness (an average BA of ), it suffers from a notable decline in RA, with an average reduction of in comparison to AlignIns. This drop results from RLR’s strategy of flipping the global learning rate for parameters in the aggregated model update that are inconsistent with the majority’s sign, consequently resulting in the loss of benign local parameters. AlignIns, however, demonstrates outstanding performance with consistently low BA and high RA, ranking first or second among its counterparts. Notably, compared to the second-best results, AlignIns achieves an average improvement of in BA and in RA. Similarly, superior results are observed in CIFAR-100 experiments, where AlignIns significantly outperforms other methods in both BA and RA. These results underscore AlignIns’ effectiveness as a promising defense method for protecting FL from various backdoor attacks, significantly enhancing the trustworthiness of FL systems.

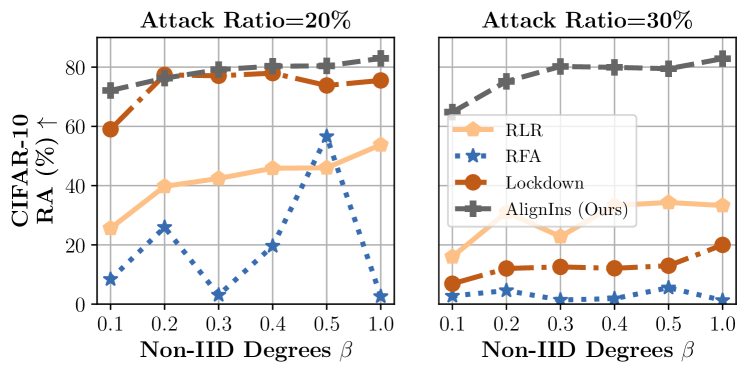

Effectiveness under various Non-IID degrees. We examine the defense performance of AlignIns across various degrees of non-IIDness, a factor that significantly complicates backdoor defense. Figure 1 presents the RA of AlignIns under different non-IID conditions on the CIFAR-10 dataset, compared with Lockdown, RFA, and RLR. The experiments were conducted using the Neurotoxin attack, with both a default attack ratio of and a higher attack ratio of . The Dirichlet parameter varies from to , where a smaller suggests a more intense non-IIDness. We observe that only AlignIns consistently attains robustness against strong Neurotoxin attacks with a varying . Specifically, as increases, the RA of AlignIns, Lockdown, and RLR increases correspondingly. However, AlignIns outperforms them with a consistently higher RA. When the attack ratio rises to , RLR, RFA, and Lockdown fail to provide satisfactory robustness. However, our method AlignIns still demonstrates its robustness under various non-IIDness, even in an extremely non-IID case when . AlignIns is designed to examine the alignment of model updates on important parameters only, hence, it mitigates the challenge of identifying malicious model updates in non-IID settings where updates are heterogeneous, thereby achieving superior performance in even extreme non-IID settings compared with existing methods. We also provide more comprehensive results of AlignIns and other baselines on non-IID datasets in Appendix Section 10.1.

Effectiveness of AlignIns in cross-device FL with client sampling. While most of our experiments focus on the cross-silo FL setting, evaluating the cross-device FL scenario is also essential given the large number of clients involved. For this purpose, we simulate a cross-device FL environment with clients, where the server randomly selects clients per round for training. We conduct experiments on IID and non-IID CIFAR-10 cases using Foolsgold, Lockdown, RLR, and AlignIns and summarize the MA, BA, and RA results in Table 2. The results show that both Foolsgold and Lockdown completely lose their effectiveness in both cases, achieving an average RA of nearly . RLR achieves a moderate level of backdoor robustness but at the cost of main task accuracy, with an average MA of only . In contrast, AlignIns performs robustly in the cross-device FL setting, achieving a significantly lower BA in both IID () and non-IID () cases compared with other methods. Furthermore, AlignIns achieves an average RA of . These results highlight AlignIns’s ability to maintain both accuracy and robustness in challenging cross-device FL scenarios, underscoring its adaptability and effectiveness in real-world applications.

| Method | CIFAR-10 (IID) | CIFAR-10 (Non-IID) | Avg. RA | ||||

| MA | BA | RA | MA | BA | RA | ||

| Foolsgold | 82.99 | 99.99 | 0.01 | 67.97 | 99.99 | 0.00 | 0.01 |

| Lockdown | 83.52 | 99.99 | 0.00 | 73.91 | 99.92 | 0.06 | 0.00 |

| RLR | 56.81 | 4.67 | 55.38 | 41.56 | 14.12 | 38.17 | 46.78 |

| AlignIns | 85.01 | 0.92 | 82.74 | 79.51 | 1.90 | 75.81 | 79.28 |

Ablation study of AlignIns. As AlignIns consists of two alignment components ( and ) to improve backdoor robustness, we conduct a detailed ablation study to investigate how each component functions. Experimental results on IID and non-IID CIFAR-10 datasets under Badnet attack are summarized in Table 3. (i) Component ablation. We observe that using or alone in IID scenarios only slightly reduces robustness compared to AlignIns, as benign updates follow consistent patterns that enable effective detection by a single metric. In non-IID settings, however, where local updates diverge, neither nor alone provides sufficient robustness. When combined, and improve BA and RA from and to and , respectively, showing their complementary strengths. AlignIns further enhances robustness by integrating , , and post-filtering model clipping, which normalizes benign update magnitudes and improves malicious update detection, yielding the highest average RA. (ii) Masking parameter ablation. We try to involve more non-essential parameters in the checking by using the Top-%% of parameters to calculate values. By doing so, the effectiveness of malicious identification is reduced. In contrast, when using the Top-% of parameters, compared to the Top-% case, BA and RA are improved by and , respectively. This demonstrates the effectiveness of focusing important parameters when calculating in improving the filtering accuracy, especially in non-IID cases. (iii) Variance reduction method further enhances robustness. Our theoretical results reveal the impact of variance reduction techniques on improving the robustness of AlignIns and reducing the propagation error of AlignIns in FL, we additionally test a variant of AlignIns named “AlignIns+”, in which local SGD with momentum is used to reduce the local gradient variance with momentum coefficient . AlignIns+ achieves a slightly better performance than AlignIns, verifying our theoretical results.

| Configuration | CIFAR-10 (IID) | CIFAR-10 (non-IID) | Avg. RA | ||||

| MA | BA | RA | MA | BA | RA | ||

| () | 88.55 | 2.88 | 85.02 | 80.65 | 94.07 | 5.79 | 45.41 |

| 88.56 | 3.82 | 83.88 | 83.86 | 77.58 | 21.31 | 52.60 | |

| (+ | 88.14 | 2.18 | 85.77 | 83.84 | 61.83 | 31.86 | 58.82 |

| ()+ | 88.05 | 2.21 | 85.46 | 84.12 | 77.93 | 19.96 | 52.71 |

| ()+ | 88.14 | 2.04 | 85.82 | 83.65 | 47.04 | 45.30 | 65.56 |

| AlignIns | 88.05 | 2.44 | 85.27 | 82.88 | 1.70 | 81.32 | 83.30 |

| AlignIns+ | 88.48 | 2.14 | 85.74 | 83.31 | 1.11 | 82.13 | 83.94 |

7 Conclusion

This paper introduces a novel defense method AlignIns to defend against backdoor attacks in FL. AlignIns examines each model update’s direction at different granularity levels, thus effectively identifying stealthy malicious local model updates and filtering them out to avoid them participating in aggregation in FL to enhance robustness. We provide a theoretical analysis of AlignIns’ robustness and its impact on propagation errors in FL. Extensive experiments demonstrate the effectiveness of AlignIns, with results showing that it outperforms SOTA defense methods against various advanced attacks.

References

- Alam et al. [2022] Manaar Alam, Esha Sarkar, and Michail Maniatakos. Perdoor: Persistent non-uniform backdoors in federated learning using adversarial perturbations. arXiv preprint arXiv:2205.13523, 2022.

- Allouah et al. [2023a] Youssef Allouah, Sadegh Farhadkhani, Rachid Guerraoui, Nirupam Gupta, Rafaël Pinot, and John Stephan. Fixing by mixing: A recipe for optimal byzantine ml under heterogeneity. In International Conference on Artificial Intelligence and Statistics, pages 1232–1300. PMLR, 2023a.

- Allouah et al. [2023b] Youssef Allouah, Rachid Guerraoui, Nirupam Gupta, Rafael Pinot, and Geovani Rizk. Robust distributed learning: Tight error bounds and breakdown point under data heterogeneity. In Thirty-seventh Conference on Neural Information Processing Systems, 2023b.

- Bagdasaryan et al. [2020] Eugene Bagdasaryan, Andreas Veit, Yiqing Hua, Deborah Estrin, and Vitaly Shmatikov. How to backdoor federated learning. In International conference on artificial intelligence and statistics, pages 2938–2948. PMLR, 2020.

- Baruch et al. [2019] Gilad Baruch, Moran Baruch, and Yoav Goldberg. A little is enough: Circumventing defenses for distributed learning. Advances in Neural Information Processing Systems, 32, 2019.

- Blanchard et al. [2017] Peva Blanchard, El Mahdi El Mhamdi, Rachid Guerraoui, and Julien Stainer. Machine learning with adversaries: Byzantine tolerant gradient descent. Advances in neural information processing systems, 30, 2017.

- Cao et al. [2020] Xiaoyu Cao, Minghong Fang, Jia Liu, and Neil Zhenqiang Gong. Fltrust: Byzantine-robust federated learning via trust bootstrapping. arXiv preprint arXiv:2012.13995, 2020.

- El-Mhamdi et al. [2021] El Mahdi El-Mhamdi, Sadegh Farhadkhani, Rachid Guerraoui, Arsany Guirguis, Lê-Nguyên Hoang, and Sébastien Rouault. Collaborative learning in the jungle (decentralized, byzantine, heterogeneous, asynchronous and nonconvex learning). Advances in Neural Information Processing Systems, 34:25044–25057, 2021.

- Fang and Chen [2023] Pei Fang and Jinghui Chen. On the vulnerability of backdoor defenses for federated learning. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 11800–11808, 2023.

- Farhadkhani et al. [2022] Sadegh Farhadkhani, Rachid Guerraoui, Nirupam Gupta, Rafael Pinot, and John Stephan. Byzantine machine learning made easy by resilient averaging of momentums. In International Conference on Machine Learning, pages 6246–6283. PMLR, 2022.

- Fung et al. [2020] Clement Fung, Chris JM Yoon, and Ivan Beschastnikh. The limitations of federated learning in sybil settings. In 23rd International Symposium on Research in Attacks, Intrusions and Defenses (RAID 2020), pages 301–316, 2020.

- Go et al. [2009] Alec Go, Richa Bhayani, and Lei Huang. Twitter sentiment classification using distant supervision. CS224N project report, Stanford, 1(12):2009, 2009.

- Gorbunov et al. [2022] Eduard Gorbunov, Samuel Horváth, Peter Richtárik, and Gauthier Gidel. Variance reduction is an antidote to byzantines: Better rates, weaker assumptions and communication compression as a cherry on the top. arXiv preprint arXiv:2206.00529, 2022.

- Gu et al. [2017] Tianyu Gu, Brendan Dolan-Gavitt, and Siddharth Garg. Badnets: Identifying vulnerabilities in the machine learning model supply chain. arXiv preprint arXiv:1708.06733, 2017.

- Guerraoui et al. [2018] Rachid Guerraoui, Sébastien Rouault, et al. The hidden vulnerability of distributed learning in byzantium. In International Conference on Machine Learning, pages 3521–3530. PMLR, 2018.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Hsu et al. [2019] Tzu-Ming Harry Hsu, Hang Qi, and Matthew Brown. Measuring the effects of non-identical data distribution for federated visual classification. arXiv preprint arXiv:1909.06335, 2019.

- Hu et al. [2023] Rui Hu, Yuanxiong Guo, and Yanmin Gong. Federated learning with sparsified model perturbation: Improving accuracy under client-level differential privacy. IEEE Transactions on Mobile Computing, 2023.

- Huang et al. [2023] Siquan Huang, Yijiang Li, Chong Chen, Leyu Shi, and Ying Gao. Multi-metrics adaptively identifies backdoors in federated learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4652–4662, 2023.

- Huang et al. [2024] Tiansheng Huang, Sihao Hu, Ka-Ho Chow, Fatih Ilhan, Selim Tekin, and Ling Liu. Lockdown: Backdoor defense for federated learning with isolated subspace training. Advances in Neural Information Processing Systems, 36, 2024.

- Karimireddy et al. [2021] Sai Praneeth Karimireddy, Lie He, and Martin Jaggi. Byzantine-robust learning on heterogeneous datasets via bucketing. In International Conference on Learning Representations, 2021.

- Krauß and Dmitrienko [2023] Torsten Krauß and Alexandra Dmitrienko. Mesas: Poisoning defense for federated learning resilient against adaptive attackers. In Proceedings of the 2023 ACM SIGSAC Conference on Computer and Communications Security, pages 1526–1540, 2023.

- Krauß et al. [2024] Torsten Krauß, Jan König, Alexandra Dmitrienko, and Christian Kanzow. Automatic adversarial adaption for stealthy poisoning attacks in federated learning. In To appear soon at the Network and Distributed System Security Symposium (NDSS), 2024.

- Krizhevsky et al. [2009] Alex Krizhevsky et al. Learning multiple layers of features from tiny images. University of Toronto, 2009.

- LeCun et al. [1998] Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Liu et al. [2022] Han Liu, Zhiyuan Yu, Mingming Zha, XiaoFeng Wang, William Yeoh, Yevgeniy Vorobeychik, and Ning Zhang. When evil calls: Targeted adversarial voice over ip network. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, pages 2009–2023, 2022.

- Liu et al. [2020] Yi Liu, Jiangtian Nie, Xuandi Li, Syed Hassan Ahmed, Wei Yang Bryan Lim, and Chunyan Miao. Federated learning in the sky: Aerial-ground air quality sensing framework with uav swarms. IEEE Internet of Things Journal, 8(12):9827–9837, 2020.

- Long et al. [2020] Guodong Long, Yue Tan, Jing Jiang, and Chengqi Zhang. Federated learning for open banking. In Federated Learning: Privacy and Incentive, pages 240–254. Springer, 2020.

- Lyu et al. [2023] Xiaoting Lyu, Yufei Han, Wei Wang, Jingkai Liu, Bin Wang, Jiqiang Liu, and Xiangliang Zhang. Poisoning with cerberus: Stealthy and colluded backdoor attack against federated learning. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 9020–9028, 2023.

- Malinovsky et al. [2022] Grigory Malinovsky, Kai Yi, and Peter Richtárik. Variance reduced proxskip: Algorithm, theory and application to federated learning. Advances in Neural Information Processing Systems, 35:15176–15189, 2022.

- Malinovsky et al. [2023] Grigory Malinovsky, Peter Richtárik, Samuel Horváth, and Eduard Gorbunov. Byzantine robustness and partial participation can be achieved simultaneously: Just clip gradient differences. arXiv preprint arXiv:2311.14127, 2023.

- McMahan et al. [2017a] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics, pages 1273–1282. PMLR, 2017a.

- McMahan et al. [2017b] Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics, pages 1273–1282. PMLR, 2017b.

- Nesterov et al. [2018] Yurii Nesterov et al. Lectures on convex optimization. Springer, 2018.

- Nguyen et al. [2022a] Dinh C Nguyen, Quoc-Viet Pham, Pubudu N Pathirana, Ming Ding, Aruna Seneviratne, Zihuai Lin, Octavia Dobre, and Won-Joo Hwang. Federated learning for smart healthcare: A survey. ACM Computing Surveys (CSUR), 55(3):1–37, 2022a.

- Nguyen et al. [2022b] Thien Duc Nguyen, Phillip Rieger, Roberta De Viti, Huili Chen, Björn B Brandenburg, Hossein Yalame, Helen Möllering, Hossein Fereidooni, Samuel Marchal, Markus Miettinen, et al. FLAME: Taming backdoors in federated learning. In 31st USENIX Security Symposium (USENIX Security 22), pages 1415–1432, 2022b.

- Nguyen et al. [2024] Thuy Dung Nguyen, Tuan A Nguyen, Anh Tran, Khoa D Doan, and Kok-Seng Wong. Iba: Towards irreversible backdoor attacks in federated learning. Advances in Neural Information Processing Systems, 36, 2024.

- Ozdayi et al. [2021] Mustafa Safa Ozdayi, Murat Kantarcioglu, and Yulia R Gel. Defending against backdoors in federated learning with robust learning rate. In Proceedings of the AAAI Conference on Artificial Intelligence, pages 9268–9276, 2021.

- Panda et al. [2022] Ashwinee Panda, Saeed Mahloujifar, Arjun Nitin Bhagoji, Supriyo Chakraborty, and Prateek Mittal. Sparsefed: Mitigating model poisoning attacks in federated learning with sparsification. In International Conference on Artificial Intelligence and Statistics, pages 7587–7624. PMLR, 2022.

- Pillutla et al. [2022] Krishna Pillutla, Sham M Kakade, and Zaid Harchaoui. Robust aggregation for federated learning. IEEE Transactions on Signal Processing, 70:1142–1154, 2022.

- Rieger et al. [2022a] Phillip Rieger, Torsten Krauß, Markus Miettinen, Alexandra Dmitrienko, and Ahmad-Reza Sadeghi. Crowdguard: Federated backdoor detection in federated learning. arXiv preprint arXiv:2210.07714, 2022a.

- Rieger et al. [2022b] Phillip Rieger, Thien Duc Nguyen, Markus Miettinen, and Ahmad-Reza Sadeghi. Deepsight: Mitigating backdoor attacks in federated learning through deep model inspection. arXiv preprint arXiv:2201.00763, 2022b.

- Shejwalkar and Houmansadr [2021] Virat Shejwalkar and Amir Houmansadr. Manipulating the byzantine: Optimizing model poisoning attacks and defenses for federated learning. In NDSS, 2021.

- Simonyan and Zisserman [2014] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- Tian et al. [2022] Zhiyi Tian, Lei Cui, Jie Liang, and Shui Yu. A comprehensive survey on poisoning attacks and countermeasures in machine learning. ACM Computing Surveys, 55(8):1–35, 2022.

- Wang et al. [2020] Hongyi Wang, Kartik Sreenivasan, Shashank Rajput, Harit Vishwakarma, Saurabh Agarwal, Jy-yong Sohn, Kangwook Lee, and Dimitris Papailiopoulos. Attack of the tails: Yes, you really can backdoor federated learning. Advances in Neural Information Processing Systems, 33:16070–16084, 2020.

- Xiao et al. [2017] Han Xiao, Kashif Rasul, and Roland Vollgraf. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

- Xie et al. [2019] Chulin Xie, Keli Huang, Pin-Yu Chen, and Bo Li. Dba: Distributed backdoor attacks against federated learning. In International conference on learning representations, 2019.

- Xu et al. [2021] Jian Xu, Shao-Lun Huang, Linqi Song, and Tian Lan. Signguard: Byzantine-robust federated learning through collaborative malicious gradient filtering. arXiv preprint arXiv:2109.05872, 2021.

- Xu et al. [2024a] Jiahao Xu, Zikai Zhang, and Rui Hu. Achieving byzantine-resilient federated learning via layer-adaptive sparsified model aggregation. arXiv preprint arXiv:2409.01435, 2024a.

- Xu et al. [2024b] Jiahao Xu, Zikai Zhang, and Rui Hu. Identify backdoored model in federated learning via individual unlearning. arXiv preprint arXiv:2411.01040, 2024b.

- Zhang et al. [2024] Hangfan Zhang, Jinyuan Jia, Jinghui Chen, Lu Lin, and Dinghao Wu. A3fl: Adversarially adaptive backdoor attacks to federated learning. Advances in Neural Information Processing Systems, 36, 2024.

- Zhang et al. [2019] Jingzhao Zhang, Tianxing He, Suvrit Sra, and Ali Jadbabaie. Why gradient clipping accelerates training: A theoretical justification for adaptivity. In International Conference on Learning Representations, 2019.

- Zhang et al. [2022] Zhengming Zhang, Ashwinee Panda, Linyue Song, Yaoqing Yang, Michael Mahoney, Prateek Mittal, Ramchandran Kannan, and Joseph Gonzalez. Neurotoxin: Durable backdoors in federated learning. In International Conference on Machine Learning, pages 26429–26446. PMLR, 2022.

Supplementary Material

8 Attack Model and Detailed Attack Settings

8.1 Attack Model

We follow the threat model in previous works [42, 6, 43]. Specifically, the attacker controls malicious clients, which can be fake injected into the system by the attacker or benign clients compromised by the attacker. These malicious clients are allowed to co-exist in the FL system. i) Attacker’s goal. The backdoor attackers in FL have two primary objectives. First, they aim to maintain the accuracy of the global model on benign inputs, ensuring that its overall performance remains unaffected. Second, they seek to manipulate the global model so that it behaves as predefined by the attacker on inputs containing a specific trigger, such as misclassifying triggered inputs to a specific backdoor label. ii) Attacker’s capability. The attacker controls malicious clients in FL. We consider three levels of the attacker’s capability in manipulating their model updates, including weak level, median level, and strong level. The malicious clients controlled by weak attackers (e.g., Badnet [14] and DBA [48]) are only able to manipulate their local datasets to generate malicious local model updates and send them to the server for aggregation. For a median attacker, malicious clients can additionally modify the training algorithm (e.g., Scaling [4] and PGD [46]) to generate malicious local model updates. These two assumptions are common in existing works for attackers who control malicious devices but do not have access to additional information from servers or benign clients. For a strong attacker (e.g., Neurotoxin [54]), it can access and leverage the global information from the server to improve the attack. Note that the defense method employed by the server is confidential to the attacker.

8.2 More Detailed Settings of Attack Methods.

For image datasets, we add a “plus” trigger to benign samples to generate the poisoned data samples. For Sentiment140 dataset, we insert a trigger sentence “This is a backdoor trigger” into benign samples to generate poisoned data samples. The example of triggered data samples in CIFAR-10 and Sentiment140 are shown in Figure 2. For DBA attack, we decompose the ”plus” trigger into four local patterns, and each malicious client only uses one of these local patterns. For Scaling attack, we use a scale factor of to scale up all malicious model updates. For PGD attack, malicious local models are projected onto a sphere with a radius equal to the -norm of the global model in the current round for all datasets, except CIFAR-10 where we make the radius of the sphere be 10 times smaller than the norm. For Neurotoxin attack, malicious model updates are projected to the dimensions that have Bottom- importance in the aggregated update from the previous round.

9 Defense Model

In this work, we assume the server to be the defender. i) Defender’s goal. As stated in [7], an ideal defense method against poisoning attacks in FL should consider the following three aspects: Fidelity, Robustness, and Efficiency. To ensure fidelity, the defense method does not significantly degrade the global model’s performance on benign inputs, thus preserving its effectiveness. For robustness, the defense method should successfully mitigate the impact of malicious model updates, limiting the global model’s malicious behavior on triggered inputs. Regarding efficiency, the defense method should be computationally efficient, ensuring that it does not hinder the overall efficiency of the training process. In this work, we assume that the server aims to achieve the highest level of robustness by removing all malicious updates without significant computational complexity and accuracy degradation on benign inputs. ii) Defender’s capability. In FL, the server has no access to the local datasets of clients, but it has the global model and all the local model updates. We assume the server has no prior knowledge of the number of malicious clients. We also assume that each client transmits their local update anonymously, making the actions of individual clients untraceable. Additionally, the server does not know the specifics of backdoor attacks, such as the type of trigger involved. To defend against backdoor attacks, the server will apply a robust aggregation rule to the local model updates received from clients and generate an aggregated model update at each training round.

10 More Superior Results of AlignIns

10.1 Comprehensive Results on non-IID Datasets

| Dataset (Model) | Methods | Clean MA | Badnet | DBA | Neurotoxin | Avg. BA | Avg. RA | |||||||||

| BA | RA | BA | RA | BA | RA | |||||||||||

| =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | =0.3 | =0.5 | |||||

| CIFAR-10 (ResNet9) | FedAvg | 85.05 | 42.34 | 86.33 | 51.60 | 13.22 | 42.24 | 71.64 | 49.63 | 25.26 | 42.29 | 76.63 | 48.76 | 20.73 | 53.57 | 36.29 |

| FedAvg* | 85.05 | 1.78 | 1.78 | 83.09 | 83.09 | 1.78 | 1.78 | 83.09 | 83.09 | 1.78 | 1.78 | 83.09 | 83.09 | 1.78 | 83.09 | |

| RLR | 59.87 | 3.27 | 0.94 | 55.54 | 55.53 | 1.98 | 1.87 | 59.98 | 59.52 | 0.21 | 0.27 | 45.60 | 46.02 | 1.92 | 53.04 | |

| RFA | 79.80 | 56.26 | 97.42 | 36.49 | 2.30 | 53.70 | 90.70 | 39.00 | 8.10 | 4.29 | 22.26 | 71.93 | 56.60 | 50.27 | 39.36 | |

| MKrum | 70.89 | 72.70 | 95.57 | 20.98 | 3.71 | 2.12 | 53.81 | 69.80 | 35.09 | 1.18 | 1.22 | 74.02 | 71.08 | 49.58 | 37.78 | |

| Foolsgold | 85.97 | 20.24 | 83.27 | 68.91 | 16.14 | 42.20 | 63.56 | 50.79 | 31.62 | 3.77 | 1.49 | 78.08 | 80.22 | 42.88 | 62.45 | |

| MM | 82.02 | 50.52 | 95.70 | 41.41 | 4.08 | 66.88 | 43.69 | 28.18 | 47.38 | 85.58 | 98.86 | 13.02 | 1.04 | 63.12 | 30.83 | |

| Lockdown | 84.05 | 6.68 | 8.01 | 75.23 | 75.73 | 7.11 | 6.03 | 76.63 | 75.77 | 1.24 | 2.19 | 73.82 | 73.81 | 5.21 | 75.07 | |

| AlignIns | 83.77 | 2.48 | 1.7 | 81.17 | 81.32 | 1.54 | 1.10 | 81.24 | 81.11 | 2.73 | 2.08 | 81.54 | 80.42 | 1.77 | 80.48 | |

| CIFAR-100 (VGG9) | FedAvg | 63.33 | 99.57 | 99.63 | 0.35 | 0.33 | 99.52 | 99.74 | 0.45 | 0.23 | 97.58 | 97.18 | 1.94 | 2.25 | 98.66 | 0.92 |

| FedAvg* | 63.33 | 0.59 | 0.59 | 50.21 | 50.21 | 0.59 | 0.59 | 50.21 | 50.21 | 0.59 | 0.59 | 50.21 | 50.21 | 0.59 | 50.21 | |

| RLR | 35.83 | 58.31 | 98.94 | 9.22 | 0.47 | 2.31 | 76.82 | 22.61 | 7.79 | 0.00 | 15.54 | 11.31 | 15.54 | 42.26 | 11.66 | |

| RFA | 34.16 | 3.19 | 0.89 | 25.07 | 26.58 | 0.91 | 4.25 | 24.68 | 25.66 | 99.47 | 8.52 | 0.36 | 22.82 | 22.51 | 20.93 | |

| MKrum | 45.10 | 99.44 | 1.84 | 0.43 | 34.89 | 99.30 | 1.22 | 0.55 | 34.05 | 99.71 | 99.20 | 0.23 | 0.49 | 54.69 | 14.69 | |

| Foolsgold | 62.77 | 99.58 | 99.56 | 0.38 | 0.38 | 99.52 | 99.67 | 0.43 | 0.29 | 11.64 | 11.06 | 43.01 | 42.20 | 70.23 | 10.27 | |

| MM | 60.22 | 99.65 | 99.93 | 0.28 | 0.04 | 99.90 | 99.94 | 0.10 | 0.06 | 99.73 | 99.82 | 0.23 | 0.14 | 99.53 | 0.18 | |

| Lockdown | 60.91 | 29.19 | 40.08 | 32.91 | 30.60 | 11.90 | 20.08 | 34.97 | 32.79 | 0.13 | 0.07 | 44.42 | 42.72 | 21.73 | 36.47 | |

| AlignIns | 59.18 | 0.66 | 0.54 | 47.51 | 44.67 | 0.19 | 0.42 | 47.33 | 48.77 | 1.20 | 1.09 | 49.17 | 45.70 | 0.64 | 47.86 | |

In non-IID settings, the divergence between benign model updates will increase, thus defense methods are hard to identify malicious model updates. From Table 4, We can conclude MM still fails to detect malicious model updates on two non-IID cases. Foolsgold can only exhibit a limited degree of robustness under Neurotoxin attack. Specifically, in the non-IID CIFAR-10 under DBA attack, Foolsgold was unable to effectively detect malicious model updates. This resulted in a BA of and and an RA of and . The reason for this lies in the feature of the Neurotoxin attack, where the malicious model updates are projected to the Bottom- parameters of the aggregated model update in the latest round. This process makes the malicious model updates generated by Neurotoxin attacks have the same Top parameters, reducing local variance between them. Foolsgold enjoys a more accurate identification of malicious model updates as it works based on the assumption that malicious model updates are consistent with each other. In contrast, AlignIns exhibits outstanding robustness in the same case as AlignIns achieves significantly superior performance, yielding the lowest BA at and , and the highest RA at and . This marks an improvement of and in BA and and in RA over Foolsgold. For CIFAR-100 dataset, AlignIns still have a lower BA and higher RA than their counterparts, underlining the enhanced detection and robustness capabilities of AlignIns in challenging non-IID conditions.

| Method | Badnet | Neurotoxin | Avg. BA | Avg. RA | ||

| BA | RA | BA | RA | |||

| RLR | 55.54 | 18.25 | 0.54 | 22.01 | 28.04 | 20.13 |

| RFA | 0.38 | 32.40 | 97.41 | 1.97 | 48.90 | 17.19 |

| MKrum | 0.36 | 32.60 | 29.37 | 25.55 | 14.87 | 29.08 |

| Foolsgold | 93.59 | 4.68 | 0.26 | 37.05 | 46.93 | 20.87 |

| MM | 97.01 | 2.11 | 90.85 | 5.27 | 93.93 | 3.69 |

| Lockdown | 72.08 | 17.09 | 0.34 | 28.18 | 36.21 | 22.64 |

| AlignIns | 0.22 | 34.55 | 0.40 | 36.30 | 0.31 | 35.43 |

10.2 Results on Larger Datasets

We also evaluate AlignIns on the Tiny-ImageNet dataset, which is typically the largest dataset considered in related works. The BA and RA results are summarized in Table 5. AlignIns demonstrates strong robustness against both BadNet and Neurotoxin attacks, achieving the lowest BA () and the highest RA (). These results highlight the practical effectiveness of AlignIns on large, real-world datasets.

10.3 Trigger-Optimization Attack

We evaluate the experimental performance of AlignIns under the strong trigger-optimization attack. Specifically, we consider the SOTA trigger-optimization attack F3BA [9] and conduct experiments on CIFAR-10 dataset under both IID and varying degrees of non-IID settings. As the results shown in Table 6, FedAvg is vulnerable to F3BA as it has a high BA and low RA. Similarly, RLR also cannot provide enough robustness to F3BA especially when the data heterogeneity is high. In contrast, AlignIns consistently achieves the highest robustness across all scenarios. Specifically, compared to Bulyan, AlignIns yields an average increase of in BA and in RA. While trigger-optimization attacks typically search for an optimal trigger to enhance their stealthiness and effectiveness, AlignIns can still identify malicious and benign model updates by inspecting their alignments.

| Method | Data Distritbuion | |||||||

| =0.3 | =0.5 | =0.7 | IID | |||||

| BA | RA | BA | RA | BA | RA | BA | RA | |

| FedAvg | 93.97 | 5.13 | 93.44 | 6.06 | 94.76 | 4.83 | 94.16 | 5.50 |

| RLR | 92.58 | 6.71 | 93.20 | 6.42 | 81.38 | 15.80 | 86.23 | 13.23 |

| Bulyan | 60.97 | 27.49 | 8.57 | 58.12 | 17.82 | 57.71 | 15.61 | 64.40 |

| AlignIns | 5.22 | 65.12 | 2.33 | 72.82 | 1.99 | 70.50 | 2.91 | 75.71 |

10.4 Effectiveness under Adaptive Attack

Recall that in our attack model, the attacker is assumed to be unaware of the defense method the server deployed. Here, we assume the attacker has such knowledge and evaluate AlignIns under attacks tailored to circumvent it. Specifically, we design two adaptive attacks: ADA_A, where each malicious client randomly selects a benign model update and mirrors its sign, and ADA_B, where each malicious client aligns with the principal sign of all model updates. Results are summarized in Table 7. In the results, AlignIns shows strong resistance to both ADA_A and ADA_B attacks. For ADA_A, although it leverages benign signs, focuses on the signs of important weights, which typically differ from those of benign models, allowing AlignIns to counter ADA_A effectively. For ADA_B, using the principal sign yields an value of , which our can readily detect. These results confirm that AlignIns effectively limits backdoor success and preserves the main task and robust accuracy, even against adaptive attack strategies tailored to exploit its defenses.

| Dataset | ADA_A | ADA_B | ||||

| MA | BA | RA | MA | BA | RA | |

| CIFAR-10 | 88.22 | 2.34 | 85.44 | 88.33 | 1.82 | 86.49 |

| CIFAR-100 | 62.10 | 0.48 | 51.87 | 62.86 | 0.37 | 53.55 |

10.5 Effectiveness under Untargeted Attack

| Method | Attack Ratio=10% | Attack Ratio=20% | ||||||

| =0.3 | =0.5 | =0.7 | IID | =0.3 | =0.5 | =0.7 | IID | |

| FedAvg | 10.95 | 13.21 | 11.66 | 20.71 | 10.85 | 12.96 | 10.33 | 18.62 |

| RFA | 77.43 | 78.26 | 80.45 | 87.03 | 77.02 | 76.93 | 79.76 | 86.03 |

| MKrum | 67.99 | 71.14 | 76.76 | 86.87 | 65.61 | 74.39 | 77.16 | 86.39 |

| SignGuard | 85.11 | 85.58 | 86.84 | 89.23 | 85.71 | 84.69 | 86.22 | 88.45 |

| AlignIns | 85.32 | 85.61 | 87.13 | 89.23 | 85.49 | 84.98 | 86.18 | 88.54 |

In this section, we conduct experiments to illustrate how AlignIns performs with respect to untargeted attacks (also known as Byzantine attacks). Byzantine attacks aim to degrade the model’s overall performance during the training as much as possible. We consider the SOTA Byzantine attack method ByzMean [49] which uses the Lie attack [5] as the backbone of the attack baseline. We also involve the SOTA Byzantine-robust method SignGuard [49] in our experiments. Table 8 reports the MA of FedAvg, RFA, MKrum, SignGuard, and our method AlignIns, in defending against ByzMean attack on CIFAR-10 dataset with attack ratios of and under different data settings. The results indicate that non-robust baseline FedAvg collapsed when facing to ByzMean attack in all cases, yielding an accuracy below . RFA and MKrum provide a certain but limited Byzantine-robustness. In contrast, AlignIns consistently achieves comparable accuracy with SOTA SignGuard across all scenarios. These results demonstrate AlignIns’ generalization ability for both backdoor and Byzantine attacks, making it a potential and potent method for practical application in real-world scenarios where there is no prior knowledge about the attack type.

10.6 Effectiveness on More Datasets

To validate that the achieved robustness by AlignIns can be generalized to other datasets, we show our evaluation results on MNIST, FMNIST, and Sentiment140 under Badnet attack in Table 9. We also involve the perfectly robust FedAvg* for comparison. Notably, AlignIns consistently aligns with FedAvg* in MA, BA, and RA, indicating AlignIns can accurately identify malicious model updates and preserve benign model updates at the same time to attain such a high robustness and model performance. Additionally, AlignIns shows SOTA defense efficacy compared to other counterparts. For example, AlignIns maintains the highest BA at , , and , with an improvement of , , and over RLR on the respective three datasets. Besides, AlignIns also achieves the highest RA across all datasets, averaging a increase compared to RFA. These findings verify the robustness and stability of AlignIns across various datasets.

| Method | MNIST | FMNIST | Sentiment140 | ||||||

| MA | BA | RA | MA | BA | RA | MA | BA | RA | |

| FedAvg | 97.66 | 99.87 | 0.13 | 88.34 | 98.40 | 1.46 | 66.16 | 85.55 | 14.45 |

| FedAvg* | 97.63 | 0.37 | 97.60 | 88.44 | 0.60 | 76.72 | 67.31 | 41.57 | 58.43 |

| RLR | 96.48 | 21.78 | 75.39 | 86.51 | 0.04 | 75.44 | 51.23 | 99.05 | 0.95 |

| RFA | 97.72 | 0.61 | 97.53 | 88.53 | 13.08 | 69.09 | 60.71 | 99.90 | 0.10 |

| AlignIns | 97.76 | 0.36 | 97.73 | 88.50 | 0.01 | 77.04 | 69.26 | 41.43 | 58.57 |

10.7 Effectiveness under Various Attack Ratios.

We further evaluate the performance of AlignIns under various attack ratios in non-IID settings. We conduct the experiments under PGD and Scaling attacks with the attack ratio varying from to on non-IID CIFAR-10 and CIFAR-100 datasets. As shown in Figure 3, the RA of RLR and MKrum generally decreases as the attack ratio increases. For instance, when the attack ratio exceeds , MKrum loses effectiveness, with RA dropping to as low as . This decline is primarily due to the PGD attack, which projects malicious model updates within a sphere centered around the global model, limiting magnitude changes and evading detection by magnitude-based methods like MKrum. Lockdown achieves comparable robustness with AlignIns at low attack ratios on the CIFAR-10 dataset. Yet, it fails to effectively protect against both types of attacks when the attack ratios are high (), resulting in considerable declines in robustness. Compared to its counterparts, AlignIns achieves a higher and more stable performance. As the attack ratio increases, AlignIns only has a minor decrease in RA.

11 Impact of Filtering Radii

Here, we dive into the impact of different configurations of filtering radii, and , on the efficacy of AlignIns. A smaller or indicates more stringent filtering and results in a smaller benign set for aggregation. We conduct the experiments on non-IID CIFAR-10 and CIFAR-100 datasets under Badnet and PGD attacks. The results, as detailed in Table 10, show the ideal configurations of and that effectively balance the filtering intensity while maximizing the robustness of the model. Specifically, for CIFAR-10 dataset, the optimal RA is attained when and are both set to under both Badnet and PGD attacks, suggesting an ideal level of filtering intensity. A reduction in either or leads to a slight drop in RA, implying that some benign updates may be erroneously discarded due to an overly stringent filtering radius. In contrast, when and are increased to , there’s a significant decline in AlignIns’ RA, due to the excessively permissive filtering threshold. As for CIFAR-100 dataset, AlignIns’ performance remains stable against variations in both radii. Specifically, under the Badnet attack, AlignIns performs best when both radii are at , while for the PGD attack, the radii at are most effective. This is mainly because PGD attack limits the large malicious model update changes, conducting a more stealthy attack than Badnet. By doing so, it makes the malicious model updates more similar to benign ones, leading to a smaller filter radius.

| Config. | CIFAR-10 | CIFAR-100 | |||||||

| Badnet | PGD | Badnet | PGD | ||||||

| BA | RA | BA | RA | BA | RA | BA | RA | ||

| 0.5 | 0.5 | 0.58 | 76.37 | 3.29 | 79.39 | 0.59 | 43.22 | 0.59 | 46.17 |

| 1.0 | 0.5 | 4.71 | 78.27 | 63.60 | 32.27 | 0.49 | 44.41 | 0.62 | 46.83 |

| 0.5 | 1.0 | 3.11 | 78.99 | 1.73 | 79.37 | 0.58 | 43.18 | 0.19 | 44.67 |

| 1.0 | 1.0 | 1.70 | 81.32 | 2.31 | 81.18 | 0.54 | 44.67 | 0.52 | 48.37 |

| 2.0 | 2.0 | 57.47 | 37.53 | 81.33 | 17.69 | 0.76 | 47.07 | 0.68 | 46.99 |

12 Computational Cost of AlignIns

We compare the computational cost of AlignIns with other counterparts. AlignIns calculates the metric using the Top- indicator, incurring a complexity of due to the use of sorting algorithms like merge sort in the parameter space of the local update. As a result, the total computational expense of AlignIns in the worst-case scenario is . Nonetheless, we argue that the computational burden of AlignIns is comparable with several robust aggregation methods such as Krum and MKrum, both of which have a complexity of , the Coordinate-wise median with , and Trmean at . Each method shows a linear dependency on , which can be considerably large in modern deep neural networks (i.e., ), and thus is the predominant factor in computational complexity. Empirically, AlignIns imposes minimal computational overhead on the server side ( seconds per round), compared to seconds for another filtering-based method MM. Other methods like Lockdown introduce additional computational overhead on local clients, which is undesirable in many scenarios.

13 Proof preliminaries

13.1 Useful Inequalities

Lemma 3.

Given any two vectors ,

Lemma 4.

Given any two vectors ,

Lemma 5.

Given arbitrary set of n vectors , ,

Lemma 6.

If the learning rate , under Assumption 2 and Assumption 3, the local divergence of benign model updates are bounded as follows:

Proof.

Given that where is the learning rate and is the local stochastic gradient over the mini-batch . We have

| (4) |

where the first inequality follows Lemma 5, and the last second follows Lemma 4. For , with Assumption 2, we have

| (5) |

For , we have

| (6) |

where the first inequality follows Lemma 5, and the last inequality follow Assumption 2. For , by Assumption 3, we have

| (7) |

Plugging Inequality (5), Inequality (6), and Inequality (7) back to Inequality (4), with , we have

| (8) |

This concludes the proof. ∎

13.2 Proof of Lemma 1

Proof.

Recall that our method is denoted by . Given that where is the selected set by in round and . Let be the average of benign updates in round , where . We have

| (9) |

where the first inequality follows Lemma 5.

Due to the use of MZ-score, models in are centered around the median within a (and ) radius. If the radius parameter or equals zero, only the median model (based on Cosine similarity or masked principal sign alignment ratio) will be selected for averaging. To maximize benign model inclusion in averaging, we assume the radius parameters and are set sufficiently large to ensure . More precisely, assume there exist two positive constants and , and if the radius parameters and in Algorithm 1 satisfy , we have . Additionally, if , we can have at least one benign clients in and the ratio of is bounded by . Consequently, we have

| (12) |

where the first inequality follows Lemma 5, the second inequality holds as the model updates in is bounded by , the third inequality follows Lemma 6.

13.3 Proof of Lemma 2

Proof.

We use to denote the model trained over which contains where is the set of benign clients and is the set of malicious clients. Obviously, and . We use to denote the clean model which is trained over . The update rules for and are as follows.

| (14) | ||||

| (15) |

With Equation (14) and Equation (15), we have

| (16) |

where the first inequality follows Lemma 4.

We now treat , , respectively. For , given that , we have

| (18) |

where the first inequality follows Lemma 1 in the paper. Define . For , we have

| (19) |

where the first inequality follows Lemma 4. For , we have

| (20) |

where the both first and second inequality follow Lemma 5, the third inequality follows Assumption 2.

Plugging Inequality (22), Inequality (21), and Inequality (20) back to Inequality (19), we have:

| (23) |

Plugging Inequality (23), Inequality (18) back to Inequality (17), we have

| (24) |

Therefore, we have

| (25) |

where the second inequality follows , and the last inequality holds as .

We inductively prove the Lemma 2, assume for the statement of Lemma holds. Let , by Inequality (25), we have

| (26) |

By Bernoulli’s inequality we have

| (27) |

which concludes the proof. ∎