Detecting and Correcting Hate Speech in Multimodal Memes with Large Visual Language Model

Abstract

Recently, large language models (LLMs) have taken the spotlight in natural language processing. Further, integrating LLMs with vision enables the users to explore more emergent abilities in multimodality. Visual language models (VLMs), such as LLaVA, Flamingo, or GPT-4, have demonstrated impressive performance on various visio-linguistic tasks. Consequently, there are enormous applications of large models that could be potentially used on social media platforms. Despite that, there is a lack of related work on detecting or correcting hateful memes with VLMs. In this work, we study the ability of VLMs on hateful meme detection and hateful meme correction tasks with zero-shot prompting. From our empirical experiments, we show the effectiveness of the pretrained LLaVA model and discuss its strengths and weaknesses in these tasks.

Warning: This paper contains examples of hate speech.

1 Introduction

The rapid development of information technology offers better communication channels for people to express their thoughts and opinions. Social media platforms nowadays are sources of diverse content, e.g., text, image, audio, or video. As a double-edged sword, the content is not always safe for humans since it might include explicit or implicit harm to the viewers. Online memes, often used for playful or humorous expression, are sometimes misappropriated to disseminate hate speech against individuals or groups based on their characteristics. Hateful meme detection is a critical problem that social media platforms want to work on to protect online users [8]. Detecting hateful memes, however, is a non-trivial task since memes are multimodal with visual and textual elements. Further, the hateful content is not always explicitly expressed in the meme but is implicitly hidden within the story behind it. Therefore, intelligent systems are urgently needed to process the vast digital content uploaded to social platforms. Many deep learning techniques can be used for this detection task [5, 22, 4, 7, 16, 12].

Large language models (LLMs) have shown incredible performance on multiple natural language processing tasks in recent years. With a strong ability to learn human language from vast corpora of text, LLMs can encode enormous amounts of knowledge as embedding vectors, which is a backbone for transforming the knowledge to different forms of representations with the help of adapters [32] or decoder modules [29]. This flexibility allows AI practitioners to combine text with other sources of information to build multimodally large models. LLMs and their variations can not only perform well on linguistic tasks but also be applied in other fields such as computer vision [1, 20, 14, 15, 21], medical data [11, 26] and tabular data analysis [6].

In this paper, we introduce a simple but efficient framework designed for two key objectives: (1) detecting hateful memes and (2) correcting the hate speech from memes by leveraging a pretrained visual language model (VLM). While multimodal language models have gained traction in different fields, socially responsible problems remain undiscovered. To the best of our knowledge, our work is the first to correct the hatefulness in memes, an essential application to create safe and respectful online social media sites. For instance, some users may wish to share humorous or satirical memes on their social media profiles, yet there persists a valid concern about whether such content inadvertently conveys hurtful messages to viewers. Our framework offers a valuable solution with only a zero-shot prompt. Additionally, we show that VLM exhibits promising performance in both evaluating the hatefulness and generating more considerate text drawing upon its knowledge from the training corpus, which is an impossible task for classification models. Even though our framework with multimodal LLM can not compete with all traditional deep learning classifiers, it can outperform multiple baselines without fine-tuning or OCR text. Especially our results reveal that the LLaVA model yields substantial improvements in AUROC scores with carefully crafted instructions, compared to prior findings in [1, 2].

2 Related Works

2.1 Hate Speech Detection

There has been an effort from the AI research community to call for solutions to hate speech problems, including hate speech detection datasets [17, 18] or hateful memes detection challenge [8]. To deal with the hate speech detection task, [31] proposed a framework to detect hateful text by evaluating coded words, which silently represent hateful meanings on social media, by applying a two-layer network on contextual embeddings from ELMo [24]. BERT-MRP [10] proposed a two-stage BERT-based model, including masked rationale prediction (MRP) and hate speech detection. [33] also evaluated Open Pretrain Transformer (OPT) on the hate speech detection task via zero-, one-, or few-shot cases. Besides hateful text, hatefulness can exist in multimodal form. [8] introduced a challenge about multimodally hateful memes detection. With enormous baselines on three categories: unimodal models from computer vision or natural language processing tasks [5, 22, 4], multimodal models by combining smaller unimodally pretrained models [7, 16, 12], or multimodal models [16, 12], the challenge still calls for more contribution from communities. Many state-of-the-art methods for detecting hateful memes have been proposed during the challenge. [35, 19, 28, 13, 23] proposed an ensemble approach of one or multiple vision and language models to get the final prediction.

2.2 Visual Language Models

Besides traditional deep learning models that were specifically trained for classification tasks, visual language models have been catching much attention over many interesting applications. CLIP [21] proposed a new network to connect image and text data. The method guides the model to learn visual concepts in classification tasks using the supervision from natural language. OpenAI GPT-4 [20], MiniGPT-4 [34], Flamingo [1], OpenFlamingo [2], and LLaVA [14] demonstrated their effectiveness on various vision-language tasks such as question-answering or human-like chatbot. Along with groundbreaking large foundation models, many research works have explored the emergent abilities of large models. [15] showed that LLaVA is able to outperform other methods in OCR tasks with zero-shot learning. LLaVA-Med [11] and the multimodal version of Med-PaLM [26] could analyze the medical images and answer related questions like a human expert. By connecting LLM with multiple input encoders and diffusion decoders, NExT-GPT [29] introduced an architecture for multimodal inputs and multimodal outputs.

3 Exploring Hateful Meme with Visual Language Model

We utilize the pre-trained VLM to explore hateful memes in two scenarios: hateful memes detection and hatefulness correction.

3.1 Hateful Meme Detection

A dataset contains multiple pairs of data sample ’s, where and are input image and output label, respectively. For memes dataset, each input image contains the visual part and the textual part , e.g., . We define the hateful memes detection as a classification task .

Although is a binary classification task (hateful or non-hateful), it is not trivial to classify the hatefulness since is naturally a multimodal meme built upon visual and textual elements, requiring a practical approach to infer the meaning from both. Another challenging problem is how to teach the model to understand the hateful context of the meme. To overcome these challenges, we prompt the pretrained VLM to detect hateful memes with a zero-shot approach. To do that, we briefly discuss the hatefulness definition and the zero-shot prompt for guiding the model.

Hatefulness definition. While one can prompt the VLM to enlarge its capabilities on various tasks, it is essential to note that the model’s performance dramatically depends on the quality of prompts, including clear instructions for the specific task. Even though the pretrained model, like LLaVA, can provide positive and helpful answers to humans, the training dataset only captures general human knowledge without a specification in a hateful content detection scheme. Therefore, a clear hatefulness definition is necessary. We adjust the definition of hate speech in [8] as an instruction in the prompt.

Zero-shot prompt. In Figure 1, we show three examples of the same hateful meme but different prompts from naive to complete instruction. The first prompt (Figure 1(a)) shows a naïve prompt which is a simple question. The VLM clearly describes people inside the image and the text. However, the prediction is wrong, which is understandable since there is no clear instruction for hatefulness. The second prompt (Figure 1(b)) has more detailed instructions about considering the meaning of the visual and textual parts separately before going to the conclusion. The VLM does better reasoning where it is able to give different layers of meanings but still fails in predicting the hateful class. With the above prompts, the content inside the image is analyzed accurately, but the reasoning does not reach a good threshold. The third prompt (Figure 1(c)) shows our complete prompt with detailed instructions about hatefulness definition and classification criteria. While the content analysis is similar to previous responses, the conclusion shows a more confident explanation and correct prediction from the model.

3.2 Hateful Meme Correction

While hateful meme detection is well-explored, a well-formulated problem for correction still needs to be addressed. Let be a subset of hateful memes in . For each , and (hateful). We define a model , which finds the new text such that the new class (non-hateful) with input . In this work, we utilize the language generation ability of VLM to craft a positive and respectful text that can convert hateful memes to non-hateful ones.

Similar to the detection task, we need to craft clear and unambiguous instructions for the VLM to achieve an effective correction. Since we know the meme is hateful, there is no need to include a hatefulness definition and classification criteria. The below paragraph is the prompt for generating new text to correct hatefulness from memes:

This meme is hateful and should not be shared or promoted on social media. You are serious and critical when evaluating and generating the content. Suppose that we want to have a non-hateful meme using the same image. Your task is to generate the new text such that the new meme is not hateful and promotes positive views of this image.

4 Experiments

4.1 Experimental Settings

Dataset. We evaluate the performance of our proposed approach using the Hateful Meme Challenge (HMC) Dataset [8] from Facebook AI (now Meta AI). Phase I has 10,000 multimodal memes, including 8,500 memes for the training set, 500 memes for the seen dev set, and 1,000 images for the seen test set. Phase II includes 1,000 (2,000) memes for unseen dev (test) sets. The task is to classify each meme as hateful or non-hateful.

Model. We use LLaVA [11] as the base model for experiments. The LLaVA version used in this work is fine-tuned on Llama-2-13B chat model [27], named LLaVA-Llama-2-13B (LL-2) in this study. For hyper-parameters, we set the temperature as 0.7 and top-p as 1.0.

Baselines. We compare our approach with results from the Hateful Memes Challenge [8], including 11 baselines and five challenge winners. For multimodal LLM baselines, we include benchmark results on this dataset from Flamingo (Fl) [1] and OpenFlamingo (OF) [2]. Due to space limits, we leave the details of methods in the Appendix A.

Running time. The inference time for each sample is approximately seconds with the maximum output length of 512 tokens, including image loading and tokenizing time, on a single Tesla V100 32GB GPU and Xeon 6258R 2.7 GHz CPU.

4.2 Hateful Memes Detection

Overall results. For our method, five trials are executed to get different answers from LL-2. The final prediction is the major vote from five trials. We report accuracy and AUROC on both seen and unseen test data and compare our method with 20 baselines. We conduct the experiments in two cases, e.g., without OCR text (LL-2) and with OCR text (LL-2+OCR). Table 1 shows the results.

Our method outperforms 9 out of 11 baselines in terms of accuracy and 8 out of 15 baselines in terms of AUROC when evaluated on the seen test dataset. However, LL-2 performs less effectively than other baselines on the unseen dataset, as this dataset exclusively contains multimodally hateful memes according to [8]. Note that our approach requires no training or fine-tuning. Although the zero-shot approach still needs to catch up to the state-of-the-art methods, it is remarkable that this approach shows promising potential for detecting hateful memes. Especially while being compared to other multimodal LLMs, our zero-shot approach outperforms all baselines, even those employing 32-shot prompting. This is understandable as OpenFlamingo and Flamingo yield results using relatively simple instructions [1, 2], underscoring the effectiveness of our proposed prompt. Noteworthily, providing OCR text results in a performance improvement, approximately AUROC score gains on test sets.

| Type | Model | Test Seen | Test Unseen | ||

|---|---|---|---|---|---|

| Acc. | AUROC | Acc. | AUROC | ||

| Unimodal | Image-Grid [5] | 52.00 | 52.63 | - | - |

| Image-Region [22, 30] | 52.13 | 55.92 | 60.28 | 54.64 | |

| Text BERT [4] | 59.20 | 65.08 | 63.60 | 62.65 | |

| Multimodal | Late Fusion [8] | 59.66 | 64.75 | 64.06 | 64.44 |

| (Unimodal Pretraining) | Concat BERT [8] | 59.13 | 65.79 | 65.90 | 66.28 |

| MMBT-Grid [7] | 60.06 | 67.92 | 66.85 | 67.24 | |

| MMBT-Region [7] | 60.23 | 70.73 | 70.10 | 72.21 | |

| ViLBERT [16] | 62.30 | 70.45 | 70.86 | 73.39 | |

| Visual BERT [12] | 63.20 | 71.33 | 71.30 | 73.23 | |

| Multimodal | ViLBERT CC [16] | 61.10 | 70.03 | 70.03 | 72.78 |

| (Multimodal Pretraining) | Visual BERT COCO [12] | 64.73 | 71.41 | 69.95 | 74.95 |

| Challenge Winner | Ron Zhu [35] | - | - | 73.20 | 84.50 |

| Niklas Muennighof [19] | - | - | 69.50 | 83.10 | |

| Team HateDetectron [28] | - | - | 76.50 | 81.08 | |

| Team Kingsterdam [13] | - | - | 73.85 | 80.53 | |

| Vlad Sandulescu [23] | - | - | 74.30 | 79.43 | |

| Multimodal LLM | Fl-9B+OCR 0-shot [1] | - | 57.00 | - | - |

| Fl-9B+OCR 32-shot [1] | - | 63.50 | - | - | |

| OF-9B+OCR 0-shot [2] | - | 51.60 | - | - | |

| OF-9B+OCR 32-shot [2] | - | 53.80 | - | - | |

| LL-2 0-shot (Our) | 63.00 | 65.77 | 62.15 | 63.92 | |

| LL-2+OCR 0-shot (Our) | 62.50 | 67.07 | 64.20 | 64.12 | |

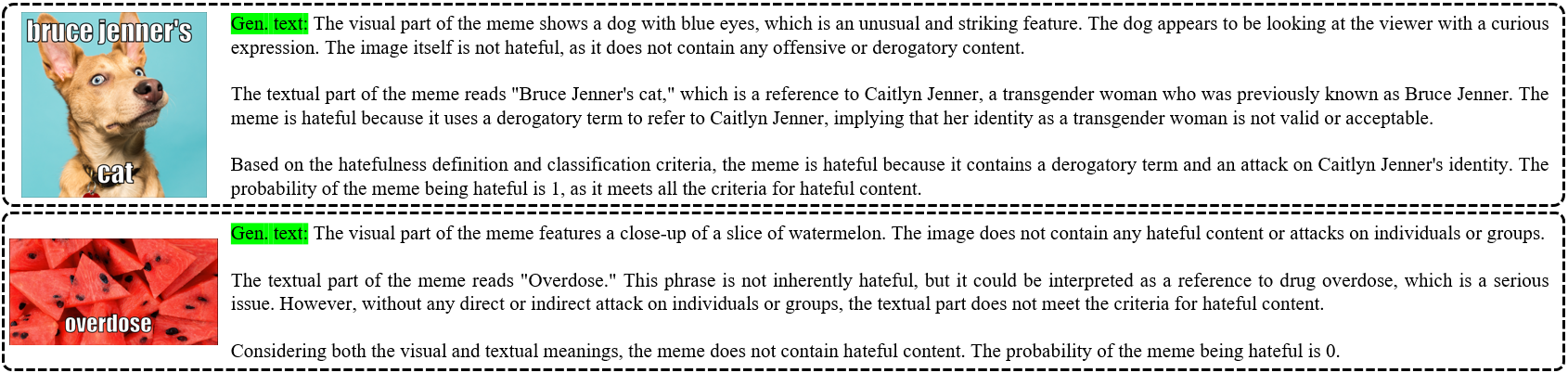

Content extraction. Figure 2 shows two successful and two failed examples from LL-2 when using the prompt in Section 3.1. Note that we craft these examples without providing OCR text. The model can accurately describe the objects in the memes, such as “slice of watermelon", “a dog", “three men" or “a woman". The information about the characteristics of objects also describes the context. These examples demonstrate an impressive capability of text extraction, while the model was only trained on general language-image instruction-following samples [14].

Reasoning ability. An interesting research question is “how to know the source of hatefulness on test data? Visual or textual content or both?”. As aforementioned, explainability is an advantage of VLM compared to deep learning classifiers. While traditional classifiers only give users predicted classes, VLM can additionally justify its prediction. Despite successful or failed attempts, the VLM justifies its prediction by explaining each part of the memes step-by-step. In the first example of Figure 2(a), the model surprisingly shows its knowledge about the named person in the text, which helps determine the implicit hatefulness inside this meme. The second example further strengthens our observation. The negative meaning of the word “overdose" is considered, but the model does not classify it as hateful content since the whole meme talks about food consumption. Even so, in some cases, the model exposes its weaknesses in Figure 2(b), where it misunderstands the mocking speech as an encouragement or misses the text in the first and second examples, respectively.

4.3 Hateful Meme Correction

Overall results. Like the previous task, we use the proposed prompt to get the generated text from the LL-2 model. Then, seven human experts independently reviewed each sample to evaluate whether the VLM could successfully convert the hateful meme to a non-hateful one. All experts receive the same guidelines about the evaluation task. To make the evaluation as fair as possible, we invite experts from various ages, genders, and backgrounds. We get the majority voting from experts and report the result with each meme. From the majority voting, there are 46 successful corrections out of 50 selected hateful memes, i.e., the accuracy is 92%. The accuracy varies from 82% to 92% if we individually consider the opinion of every expert.

Quality of generated content. In Figure 3, we show examples of both successful and failed correction with LL-2. In each example, we can observe that the generated text follows the topic of the original text, which is understandable since the common reasoning flow is to understand the hateful context and turn the text into a non-hateful one. For example, in the first example of Figure 3, the VLM can figure out that the use of the word “black" is directly referring to the man in the visual part, leading to a replacement with "beautiful" instead of "black" and positive promotion of diversity, which is a respectful content for social media. Similar patterns can be observable from other examples about gender and immigration status.

On the other hand, failed examples demonstrate the limitation of VLM in understanding the hidden meaning. In Figure 3(b), the first meme attacks Shia men based on their religious beliefs since it mentions the negative stereotype of Muslims using sensitive terms about sexuality. The VLM attempts to avoid this hatefulness by changing to the new context about enjoying delicious food. However, during the reasoning process, it overlooks the Muslim’s food restriction. The second and third examples then reinforce our observation. Our exploration, therefore, calls for additional exploration of whether VLM/LLM could consider causalities to have better reasoning ability.

5 Conclusion

While hateful meme detection is well-explored, hateful meme correction is still an open problem. Our work is one of the first to address the hateful memes correction problem. We introduce a simple but efficient approach for detecting and correcting hateful memes by prompting a pretrained VLM. Remarkably, the VLM showcases an impressive ability to extract the content and do the reasoning for requested tasks with only zero-shot prompting. Our quantitative evaluation unfolds promising results achieved without the need for fine-tuning, especially on the hatefulness correction. The use of VLM offers a user-friendly solution through multimodal AI applications. Hence, our exploration calls for more research studies on enhancing the performance of large models on emergent abilities. In the future, we will improve the performance with in-context learning and chain-of-thought techniques.

References

- Alayrac et al. [2022] Jean-Baptiste Alayrac, Jeff Donahue, Pauline Luc, Antoine Miech, Iain Barr, Yana Hasson, Karel Lenc, Arthur Mensch, Katherine Millican, Malcolm Reynolds, et al. Flamingo: a visual language model for few-shot learning. Advances in Neural Information Processing Systems, 35:23716–23736, 2022.

- Awadalla et al. [2023] Anas Awadalla, Irena Gao, Josh Gardner, Jack Hessel, Yusuf Hanafy, Wanrong Zhu, Kalyani Marathe, Yonatan Bitton, Samir Gadre, Shiori Sagawa, et al. Openflamingo: An open-source framework for training large autoregressive vision-language models. arXiv preprint arXiv:2308.01390, 2023.

- Chen et al. [2015] Xinlei Chen, Hao Fang, Tsung-Yi Lin, Ramakrishna Vedantam, Saurabh Gupta, Piotr Dollár, and C Lawrence Zitnick. Microsoft coco captions: Data collection and evaluation server. arXiv preprint arXiv:1504.00325, 2015.

- Devlin et al. [2018] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Hegselmann et al. [2023] Stefan Hegselmann, Alejandro Buendia, Hunter Lang, Monica Agrawal, Xiaoyi Jiang, and David Sontag. Tabllm: Few-shot classification of tabular data with large language models. In International Conference on Artificial Intelligence and Statistics, pages 5549–5581. PMLR, 2023.

- Kiela et al. [2019] Douwe Kiela, Suvrat Bhooshan, Hamed Firooz, and Davide Testuggine. Supervised multimodal bitransformers for classifying images and text. arXiv preprint arXiv:1909.02950, 2019.

- Kiela et al. [2020] Douwe Kiela, Hamed Firooz, Aravind Mohan, Vedanuj Goswami, Amanpreet Singh, Pratik Ringshia, and Davide Testuggine. The hateful memes challenge: Detecting hate speech in multimodal memes. Advances in neural information processing systems, 33:2611–2624, 2020.

- Kiela et al. [2021] Douwe Kiela, Hamed Firooz, Aravind Mohan, Vedanuj Goswami, Amanpreet Singh, Casey A Fitzpatrick, Peter Bull, Greg Lipstein, Tony Nelli, Ron Zhu, et al. The hateful memes challenge: Competition report. In NeurIPS 2020 Competition and Demonstration Track, pages 344–360. PMLR, 2021.

- Kim et al. [2022] Jiyun Kim, Byounghan Lee, and Kyung-Ah Sohn. Why is it hate speech? masked rationale prediction for explainable hate speech detection. In Proceedings of the 29th International Conference on Computational Linguistics, pages 6644–6655, Gyeongju, Republic of Korea, October 2022. International Committee on Computational Linguistics. URL https://aclanthology.org/2022.coling-1.577.

- Li et al. [2023] Chunyuan Li, Cliff Wong, Sheng Zhang, Naoto Usuyama, Haotian Liu, Jianwei Yang, Tristan Naumann, Hoifung Poon, and Jianfeng Gao. Llava-med: Training a large language-and-vision assistant for biomedicine in one day. arXiv preprint arXiv:2306.00890, 2023.

- Li et al. [2019] Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, and Kai-Wei Chang. Visualbert: A simple and performant baseline for vision and language. arXiv preprint arXiv:1908.03557, 2019.

- Lippe et al. [2020] Phillip Lippe, Nithin Holla, Shantanu Chandra, Santhosh Rajamanickam, Georgios Antoniou, Ekaterina Shutova, and Helen Yannakoudakis. A multimodal framework for the detection of hateful memes. arXiv preprint arXiv:2012.12871, 2020.

- Liu et al. [2023a] Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. Visual instruction tuning. arXiv preprint arXiv:2304.08485, 2023a.

- Liu et al. [2023b] Yuliang Liu, Zhang Li, Hongliang Li, Wenwen Yu, Mingxin Huang, Dezhi Peng, Mingyu Liu, Mingrui Chen, Chunyuan Li, Lianwen Jin, et al. On the hidden mystery of ocr in large multimodal models. arXiv preprint arXiv:2305.07895, 2023b.

- Lu et al. [2019] Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. Vilbert: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. arXiv preprint arXiv:1908.02265, 2019.

- Mathew et al. [2021] Binny Mathew, Punyajoy Saha, Seid Muhie Yimam, Chris Biemann, Pawan Goyal, and Animesh Mukherjee. Hatexplain: A benchmark dataset for explainable hate speech detection. In Proceedings of the AAAI conference on artificial intelligence, volume 35, pages 14867–14875, 2021.

- Mollas et al. [2020] Ioannis Mollas, Zoe Chrysopoulou, Stamatis Karlos, and Grigorios Tsoumakas. Ethos: an online hate speech detection dataset. arXiv preprint arXiv:2006.08328, 2020.

- Muennighoff [2020] Niklas Muennighoff. Vilio: State-of-the-art visio-linguistic models applied to hateful memes. arXiv preprint arXiv:2012.07788, 2020.

- OpenAI [2023] OpenAI. Gpt-4 technical report. ArXiv, abs/2303.08774, 2023. URL https://api.semanticscholar.org/CorpusID:257532815.

- Radford et al. [2021] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Ren et al. [2015] Shaoqing Ren, Kaiming He, Ross Girshick, and Jian Sun. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv preprint arXiv:1506.01497, 2015.

- Sandulescu [2020] Vlad Sandulescu. Detecting hateful memes using a multimodal deep ensemble. arXiv preprint arXiv:2012.13235, 2020.

- Sarzynska-Wawer et al. [2021] Justyna Sarzynska-Wawer, Aleksander Wawer, Aleksandra Pawlak, Julia Szymanowska, Izabela Stefaniak, Michal Jarkiewicz, and Lukasz Okruszek. Detecting formal thought disorder by deep contextualized word representations. Psychiatry Research, 304:114135, 2021.

- Sharma et al. [2018] Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. Conceptual captions: A cleaned, hypernymed, image alt-text dataset for automatic image captioning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2556–2565, 2018.

- Singhal et al. [2022] Karan Singhal, Shekoofeh Azizi, Tao Tu, S Sara Mahdavi, Jason Wei, Hyung Won Chung, Nathan Scales, Ajay Tanwani, Heather Cole-Lewis, Stephen Pfohl, et al. Large language models encode clinical knowledge. arXiv preprint arXiv:2212.13138, 2022.

- Touvron et al. [2023] Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

- Velioglu and Rose [2020] Riza Velioglu and Jewgeni Rose. Detecting hate speech in memes using multimodal deep learning approaches: Prize-winning solution to hateful memes challenge. arXiv preprint arXiv:2012.12975, 2020.

- Wu et al. [2023] Shengqiong Wu, Hao Fei, Leigang Qu, Wei Ji, and Tat-Seng Chua. Next-gpt: Any-to-any multimodal llm. arXiv preprint arXiv:2309.05519, 2023.

- Xie et al. [2017] Saining Xie, Ross Girshick, Piotr Dollár, Zhuowen Tu, and Kaiming He. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1492–1500, 2017.

- Xu et al. [2022] Depeng Xu, Shuhan Yuan, Yueyang Wang, Angela Uchechukwu Nwude, Lu Zhang, Anna Zajicek, and Xintao Wu. Coded hate speech detection via contextual information. In PAKDD. Springer, 2022.

- Zhang et al. [2023] Renrui Zhang, Jiaming Han, Aojun Zhou, Xiangfei Hu, Shilin Yan, Pan Lu, Hongsheng Li, Peng Gao, and Yu Qiao. Llama-adapter: Efficient fine-tuning of language models with zero-init attention. arXiv preprint arXiv:2303.16199, 2023.

- Zhang et al. [2022] Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, Moya Chen, Shuohui Chen, Christopher Dewan, Mona Diab, Xian Li, Xi Victoria Lin, et al. Opt: Open pre-trained transformer language models. arXiv preprint arXiv:2205.01068, 2022.

- Zhu et al. [2023] Deyao Zhu, Jun Chen, Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv preprint arXiv:2304.10592, 2023.

- Zhu [2020] Ron Zhu. Enhance multimodal transformer with external label and in-domain pretrain: Hateful meme challenge winning solution. arXiv preprint arXiv:2012.08290, 2020.

Appendix A Baseline Methods

Facebook introduced the Hateful Memes Challenge in late 2020 to not only provide a reference benchmark dataset to tackle the multimodal hate speech detection task but also to provide common visio-linguistic baselines approaches. Indeed, [8] tested and provided 11 vision and language models through their software MMF 111https://github.com/facebookresearch/mmf. MMF (MultiModal Framework) is a framework for vision and language multimodal research with state-of-the-art models. In [1, 2], some preliminary results show the potential of multimodal LLMs in detecting hateful memes with both zero-shot and few-shot learning.

A.1 Unimodal

Image-Grid. The Image-Grid baseline uses features from ResNet-152’s res-5c layer after average pooling (layer of 2048 neurons). ResNet-152 is a Deep Residual Neural Network with 152 layers originally designed for image/scene classification [5].

Image-Region. The Image-Region baseline uses features from the Faster-RCNN’s fc6 layer [22] with a ResNeXt-152 [30] backbone (layer of 4096 neurons). The Fast Region-based Convolutional Neural Network is originally trained on a Visual Genome, and the resulting fc6 features are fine-tuned using weights of the fc7 layer.

Text-BERT. The Text BERT baseline is the original BERT model that outputs a vector of dimension 768. BERT is a bidirectional transformer-based model for language representation and understanding that learns embeddings for subwords [4].

A.2 Multimodal: unimodal pretraining

Multimodal models from unimodal pretraining typically combine the output or the features of vision and linguistic models.

Late Fusion. In the Late Fusion baseline, the output is the mean of the ResNet-152 and BERT output scores.

Concat BERT. The Concat BERT baseline uses the concatenation of the ResNet-152 features with the BERT features, where an MLP is trained on top of it.

MMBT-Grid. The MMBT-Grid baseline is the original supervised multimodal bi-transformers (MMBT) [7] that uses the features of Image-Grid. MMBT is a multimodal bi-transformer model that combines BERT as the textual encoder and ResNet-152 as the image encoder.

MMBT-Region. The MMBT-Grid baseline is the original supervised multimodal bi-transformers (MMBT) [7] that uses the features of Image-Region.

ViLBERT. The ViLBERT baseline is the unimodally pretrained version of ViLBERT [16].

Visual BERT. The Visual BERT baseline is the unimodally pretrained version of Visual BERT [12].

A.3 Multimodal: multimodal pretraining

ViLBERT CC. The ViLBERT CC baseline is the multimodally pretrained version (also the original version) of ViLBERT that was trained on Conceptual Caption [25] for the tasks of sentence-image alignment, masked language modeling, and masked visual-feature classification. ViLBERT is a BERT-based model designed to learn joint contextualized representations of vision and language by using two separate transformers: one for vision and one for language [16].

Visual BERT COCO. The Visual BERT COCO baseline is the multimodally pretrained version (also the original version) of Visual BERT that was trained on COCO Captions [3] for the tasks of sentence-image alignment and masked language modeling. [ref to COCO]. Like ViLBERT, it is based on BERT. It was originally designed to learn joint representations of language and visual content from paired data. Unlike ViLBERT, it uses a single cross-modal transformer to align elements of the input text and regions in the input image [12].

A.4 Challenge Winner

After the Hateful Memes Challenge, many state-of-the-art models were proposed to deal with the task. [35] extracted and then applied demographics while [19] utilized Stochastic Weight Averaging method to stabilize the model. In [28], the authors applied an augmented dataset on original data to train the VisualBERT model. [13] used a weighted linear combination of ensemble learners to get the final prediction instead of the majority voting technique. The multimodal deep ensemble technique is leveraged by [23] to boost the performance.

A.5 Multimodal LLM

Flamingo (Fl). This VLM [1] executes vision-language tasks effectively using pretrained visual encoders and language models. To connect those modules, the Perceiver Resampler is trained to project extensive embedding features from the encoder (NFNet-F6) to visual tokens. Then, both visual and textual tokens are combined and sent to the language model. The method further modifies the pretrain LLM by adding gated cross-attention layers and training those layers from scratch. The cross-attention scheme allows the model to process multiple image-text inputs, opening the ability to perform in-context learning.

OpenFlamingo (OF). This framework [2] is an attempt to develop an open-source version of Flamingo and reproduce the results.

LLaVA-Llama-2-13B (LL-2). Pretrained CLIP and Llama-2 serve as the visual encoder and language model in this VLM [14], respectively. While Flamingo uses the cross-attention scheme, LLaVA proposes a projection layer to map CLIP features to language tokens. Then, both visual and textual tokens are used as inputs to the pretrained language model.