DESTRESS: Computation-Optimal and Communication-Efficient Decentralized Nonconvex Finite-Sum Optimization

Abstract

Emerging applications in multi-agent environments such as internet-of-things, networked sensing, autonomous systems and federated learning, call for decentralized algorithms for finite-sum optimizations that are resource-efficient in terms of both computation and communication. In this paper, we consider the prototypical setting where the agents work collaboratively to minimize the sum of local loss functions by only communicating with their neighbors over a predetermined network topology. We develop a new algorithm, called DEcentralized STochastic REcurSive gradient methodS (DESTRESS) for nonconvex finite-sum optimization, which matches the optimal incremental first-order oracle (IFO) complexity of centralized algorithms for finding first-order stationary points, while maintaining communication efficiency. Detailed theoretical and numerical comparisons corroborate that the resource efficiencies of DESTRESS improve upon prior decentralized algorithms over a wide range of parameter regimes. DESTRESS leverages several key algorithm design ideas including randomly activated stochastic recursive gradient updates with mini-batches for local computation, gradient tracking with extra mixing (i.e., multiple gossiping rounds) for per-iteration communication, together with careful choices of hyper-parameters and new analysis frameworks to provably achieve a desirable computation-communication trade-off.

Keywords: decentralized optimization, nonconvex finite-sum optimization, stochastic recursive gradient methods

1 Introduction

The proliferation of multi-agent environments in emerging applications such as internet-of-things (IoT), networked sensing and autonomous systems, together with the necessity of training machine learning models using distributed systems in federated learning, leads to a growing need of developing decentralized algorithms for optimizing finite-sum problems. Specifically, the goal is to minimize the global objective function:

| (1) |

where denotes the parameter of interest, denotes the sample loss of the sample , denotes the entire dataset, and denotes the number of data samples in the entire dataset. Of particular interest to this paper is the nonconvex setting, where is nonconvex with respect to , due to its ubiquity across problems in machine learning and signal processing, including but not limited to nonlinear estimation, neural network training, and so on.

In a prototypical decentralized environment, however, each agent only has access to a disjoint subset of the data samples, and aims to work collaboratively to optimize , by only exchanging information with its neighbors over a predetermined network topology. Assuming the data are distributed equally among all agents,111It is straightforward to generalize to the unequal splitting case with a proper reweighting. each agent thus possesses samples, and can be rewritten as

where

denotes the local objective function averaged over the local dataset at the th agent () and . The communication pattern of the agents is specified via an undirected graph , where denotes the set of all agents, and two agents can exchange information if and only if there is an edge in connecting them. Unlike the server/client setting, the decentralized setting, sometimes also called the network setting, does not admit a parameter server to facilitate global information sharing, therefore is much more challenging to understand and delineate the impact of the network graph.

Roughly speaking, in a typical decentralized algorithm, the agents alternate between (1) communication, which propagates local information and enforces consensus, and (2) computation, which updates individual parameter estimates and improves convergence using information received from the neighbors. The resource efficiency of a decentralized algorithm can often be measured in terms of its computation complexity and communication complexity. For example, communication can be extremely time-consuming and become the top priority when the bandwidth is limited. On the other hand, minimizing computation, especially at resource-constrained agents (e.g., power-hungry IoT or mobile devices), is also critical to ensure the overall efficiency. Achieving a desired level of resource efficiency for a decentralized algorithm often requires careful and delicate trade-offs between computation and communication, as these objectives are often conflicting in nature.

1.1 Our contributions

The central contribution of this paper lies in the development of a new resource-efficient algorithm for nonconvex finite-sum optimization problems in a decentralized environment, dubbed DEcentralized STochastic REcurSive gradient methodS (DESTRESS). DESTRESS provably finds first-order stationary points of the global objective function with the optimal incremental first-order (IFO) oracle complexity, i.e. the complexity of evaluating sample gradients, matching state-of-the-art centralized algorithms, but at a much lower communication complexity compared to existing decentralized algorithms over a wide range of parameter regimes.

To achieve resource efficiency, DESTRESS leverages several key ideas in the algorithm design. To reduce local computation, DESTRESS harnesses the finite-sum structure of the empirical risk function by performing stochastic variance-reduced recursive gradient updates [NvDP+19, FLLZ18, WJZ+19, Li19, LR21, LBZR21, ZXG18]—an approach that is shown to be optimal in terms of IFO complexity in the centralized setting—in a randomly activated manner to further improve computational efficiency when the local sample size is limited. To reduce communication, DESTRESS employs gradient tracking [ZM10] with a few mixing rounds per iteration, which helps accelerate the convergence through better information sharing [LCCC20]; the extra mixing scheme can be implemented using Chebyshev acceleration [AS14] to further improve the communication efficiency. In a nutshell, to find an -approximate first-order stationary points, i.e. , where is the output of DESTRESS, and the expectation is taken with respect to the randomness of the algorithm, DESTRESS requires:

-

•

per-agent IFO calls,222The big- notation is defined in Section 1.3. which is network-independent; and

-

•

rounds of communication,

where is the smoothness parameter of the sample loss, is the mixing rate of the network topology, is the number of agents, and is the local sample size.

| Algorithms | Setting | Per-agent IFO Complexity | Communication Rounds |

|---|---|---|---|

| SVRG | centralized | n/a | |

| [AZH16, RHS+16] | |||

| SCSG/SVRG+ | centralized | n/a | |

| [LJCJ17, LL18] | |||

| SNVRG | centralized | n/a | |

| [ZXG18] | |||

| SARAH/SPIDER/SpiderBoost | centralized | n/a | |

| [NvDP+19, FLLZ18, WJZ+19] | |||

| SSRGD/ZeroSARAH/PAGE | centralized | n/a | |

| [Li19, LR21, LBZR21] | |||

| D-GET | decentralized | Same as IFO | |

| [SLH20] | |||

| GT-SARAH | decentralized | Same as IFO | |

| [XKK20b] | |||

| DESTRESS | decentralized | ||

| (this paper) |

Comparisons with existing algorithms.

Table 1 summarizes the convergence guarantees of representative stochastic variance-reduced algorithms for finding first-order stationary points across centralized and decentralized communication settings.

-

•

In terms of the computation complexity, the overall IFO complexity of DESTRESS—when summed over all agents—becomes

matching the optimal IFO complexity of centralized algorithms (e.g., SPIDER [FLLZ18], PAGE [LBZR21]) and distributed server/client algorithms (e.g., D-ZeroSARAH [LR21]). However, the state-of-the-art decentralized algorithm GT-SARAH [XKK20b] still did not achieve this optimal IFO complexity for most situations (see Table 1). To the best of our knowledge, DESTRESS is the first algorithm to achieve the optimal IFO complexity for the decentralized setting regardless of network topology and sample size.

-

•

When it comes to the communication complexity, it is observed that the communication rounds of DESTRESS can be decomposed into the sum of an -independent term and an -dependent term (up to a logarithmic factor), i.e.,

similar decompositions also apply to competing decentralized algorithms. DESTRESS significantly improves the -dependent term of D-GET and GT-SARAH by at least a factor of , and therefore, saves more communications over poorly-connected networks. Further, the -independent term of DESTRESS is also smaller than that of D-GET/GT-SARAH as long as the local sample size is sufficiently large, i.e. , which also holds for a wide variety of application scenarios. To gain further insights in terms of the communication savings of DESTRESS, Table 2 further compares the communication complexities of decentralized algorithms for finding first-order stationary points under three common network settings.

| Erdős-Rényi graph | 2-D grid graph | Path graph | |

| (spectral gap) | |||

| D-GET | |||

| [SLH20] | |||

| GT-SARAH | |||

| [XKK20b] | |||

| DESTRESS | |||

| (this paper) | |||

| Improvement factors | |||

| for -independent term | |||

| Improvement factors | |||

| for -dependent term |

In sum, DESTRESS harnesses the ideas of variance reduction, gradient tracking and extra mixing in a sophisticated manner to achieve a scalable decentralized algorithm for nonconvex empirical risk minimization that is competitive in both computation and communication over existing approaches.

1.2 Additional related works

Decentralized optimization and learning have been studied extensively, with contemporary emphasis on the capabilities to scale gracefully to large-scale problems — both in terms of the size of the data and the size of the network. For the conciseness of the paper, we focus our discussions on the most relevant literature and refer interested readers to recent overviews [NRB20, XPNK20, XKK20a] for further references.

Stochastic variance-reduced methods.

Many variants of stochastic variance-reduced gradient based methods have been proposed for finite-sum optimization for finding first-order stationary points, including but not limited to SVRG [JZ13, AZH16, RHS+16], SCSG [LJCJ17], SVRG+ [LL18], SAGA [DBLJ14], SARAH [NLST17, NvDP+19], SPIDER [FLLZ18], SpiderBoost [WJZ+19], SSRGD [Li19], ZeroSARAH [LR21] and PAGE [LBZR21, Li21]. SVRG/SVRG+/SCSG/SAGA utilize stochastic variance-reduced gradients as a corrected estimator of the full gradient, but can only achieve a sub-optimal IFO complexity of . Other algorithms such as SARAH, SPIDER, SpiderBoost, SSRGD and PAGE adopt stochastic recursive gradients to improve the IFO complexity to , which is optimal indicated by the lower bound provided in [FLLZ18, LBZR21]. DESTRESS also utilizes the stochastic recursive gradients to perform variance reduction, which results in the optimal IFO complexity for finding first-order stationary points.

Decentralized stochastic nonconvex optimization.

There has been a flurry of recent activities in decentralized nonconvex optimization in both the server/client setting and the network setting. In the server/client setting, [CZC+20] simplifies the approaches in [LLMY17] for distributing stochastic variance-reduced algorithms without requiring sampling extra data. In particular, D-SARAH [CZC+20] extends SARAH to the server/client setting but with a slightly worse IFO complexity and a sample-independent communication complexity. D-ZeroSARAH [LR21] obtains the optimal IFO complexity in the server/client setting. In the network setting, D-PSGD [LZZ+17] and SGP [ALBR19] extend stochastic gradient descent (SGD) to solve the nonconvex decentralized expectation minimization problems with sub-optimal rates. However, due to the noisy stochastic gradients, D-PSGD can only use diminishing step size to ensure convergence, and SGP uses a small step size on the order of , where denotes the total iterations. [TLY+18] introduces a variance-reduced correction term to D-PSGD, which allows a constant step size and hence reaches a better convergence rate.

Gradient tracking [ZM10, QL18] provides a systematic approach to estimate the global gradient at each agent, which allows one to easily design decentralized optimization algorithms based on existing centralized algorithms. This idea is applied in [ZY19] to extend SGD to the decentralized setting, and in [LCCC20] to extend quasi-Newton algorithms as well as stochastic variance-reduced algorithms, with performance guarantees for optimizing strongly convex functions. GT-SAGA [XKK20c] further uses SAGA-style updates and reaches a convergence rate that matches SAGA [DBLJ14, RSPS16]. However, GT-SAGA requires to store a variable table, which leads to a high memory complexity. D-GET [SLH20] and GT-SARAH [XKK20b] adopt equivalent recursive local gradient estimators to enable the use of constant step sizes without extra memory usage. The IFO complexity of GT-SARAH is optimal in the restrictive range , while DESTRESS achieves the optimal IFO over all parameter regimes.

In addition to variance reduction techniques, performing multiple mixing steps between local updates can greatly improve the dependence of the network in convergence rates, which is equivalent of communicating over a better-connected communication graph for the agents, which in turn leads to a faster convergence (and a better overall efficiency) due to better information mixing. This technique is applied by a number of recent literature including [BBKW19, PLW20, BBW21, LCCC20, HAD+20, IW21], and its effectiveness is verified both in theory and experiments. Our algorithm also adopts the extra mixing steps, which leads to better IFO complexity and communication complexity.

1.3 Paper organization and notation

Section 2 introduces preliminary concepts and the algorithm development, Section 3 shows the theoretical performance guarantees for DESTRESS, Section 4 provides numerical evidence to support the analysis, and Section 5 concludes the paper. Proofs and experiment settings are postponed to appendices.

Throughout this paper, we use boldface letters to represent matrices and vectors. We use for matrix operator norm, for the Kronecker product, for the -dimensional identity matrix and for the -dimensional all-one vector. For two real functions and defined on , we say or if there exists some universal constant such that . The notation or means .

2 Preliminaries and Proposed Algorithm

We start by describing a few useful preliminary concepts and definitions in Section 2.1, then present the proposed algorithm in Section 2.2.

2.1 Preliminaries

Mixing.

The information mixing between agents is conducted by updating the local information via a weighted sum of information from neighbors, which is characterized by a mixing (gossiping) matrix. Concerning this matrix is an important quantity called the mixing rate, defined in Definition 1.

Definition 1 (Mixing matrix and mixing rate).

The mixing matrix is a matrix , such that if agent and are not connected according to the communication graph . Furthermore, and . The mixing rate of a mixing matrix is defined as

| (2) |

The mixing rate indicates the speed of information shared across the network. For example, for a fully-connected network, choosing leads to . For general networks and mixing matrices, [NOR18, Proposition 5] provides comprehensive bounds on —also known as the spectral gap—for various graphs. In practice, FDLA matrices [XB04] are more favorable because it can achieve a much smaller mixing rate, but they usually contain negative elements and are not symmetric. Different from other algorithms that require the mixing matrix to be doubly-stochastic, our analysis can handle arbitrary mixing matrices as long as their row/column sums equal to one.

Dynamic average consensus.

It has been well understood by now that using a naive mixing of local information merely, e.g. the local gradients of neighboring agents, does not lead to fast convergence of decentralized extensions of centralized methods [NO09, SLWY15]. This is due to the fact that the quantity of interest in solving decentralized optimization problems is often iteration-varying, which naive mixing is unable to track; consequently, an accumulation of errors leads to either slow convergence or poor accuracy. Fortunately, the general scheme of dynamic average consensus [ZM10] proves to be extremely effective in this regard to track the dynamic average of local variables over the course of iterative algorithms, and has been applied to extend many central algorithms to decentralized settings, e.g. [NOS17, QL18, DLS16, LCCC20]. This idea, also known as “gradient tracking” in the literature, essentially adds a correction term to the naive information mixing, which we will employ in the communication stage of the proposed algorithm to track the dynamic average of local gradients.

Stochastic recursive gradient methods.

Stochastic recursive gradients methods [NvDP+19, FLLZ18, WJZ+19, Li19] achieve the optimal IFO complexity in the centralized setting for nonconvex finite-sum optimization, which make it natural to adapt them to the decentralized setting with the hope of maintaining the appealing IFO complexity. Roughly speaking, these methods use a nested loop structure to iteratively refine the parameter, where 1) a global gradient evaluation is performed at each outer loop, and 2) a stochastic recursive gradient estimator is used to calculate the gradient and update the parameter at each inner loop. In the proposed DESTRESS algorithm, this nested loop structure lends itself to a natural decentralized scheme, as will be seen momentarily.

Additional notation.

For convenience of presentation, define the stacked vector and its average over all agents as

| (3) |

The vectors , , , , and are defined in the same fashion. In addition, for a stacked vector , we introduce the distributed gradient as

| (4) |

2.2 The DESTRESS Algorithm

Detailed in Algorithm 1, we propose a novel decentralized stochastic optimization algorithm, dubbed DESTRESS, for finding first-order order stationary points of nonconvex finite-sum problems. Motivated by stochastic recursive gradient methods in the centralized setting, DESTRESS has a nested loop structure:

-

1.

The outer loop adopts dynamic average consensus to estimate and track the global gradient at each agent in (5), where is the stacked parameter estimate (cf. (4)). This helps to “reset” the stochastic gradient to a less noisy starting gradient of the inner loop. A key property of (5)—which is a direct consequence of dynamic average consensus—is that the average of equals to the dynamic average of local gradients, i.e. .

- 2.

To complete the last mile, inspired by [LCCC20], we allow DESTRESS to perform a few rounds of mixing or gossiping whenever communication takes place, to enable better information sharing and faster convergence. Specifically, DESTRESS performs and mixing steps for the outer and inner loops respectively per iteration, which is equivalent to using

as mixing matrices, and correspondingly a network with better connectivity; see (5), (6a) and (6c). Note that Algorithm 1 is written in matrix notation, where the mixing steps are described by or and applied to all agents simultaneously. The extra mixing steps can be implemented by Chebyshev acceleration [AS14] with improved communication efficiency.

| (5) |

| (6a) | ||||

| (6b) | ||||

| (6c) | ||||

Compared with existing decentralized algorithms based on stochastic variance-reduced algorithms such as D-GET [SLH20] and GT-SARAH [XKK20b], DESTRESS utilizes different gradient estimators and communication protocols: First, DESTRESS produces a sequence of reference points —which converge to a global first-order stationary point—to “restart” the inner loops periodically using fresher information; secondly, the communication and computation in DESTRESS are paced differently due to the introduction of extra mixing, which allow a more flexible trade-off schemes between different types of resources; last but not least, the random activation of stochastic recursive gradient updates further saves local computation, especially when the local sample size is small compared to the number of agents.

3 Performance Guarantees

This section presents the performance guarantees of DESTRESS for finding first-order stationary points of the global objective function .

3.1 Assumptions

We first introduce Assumption 1 and Assumption 2, which are standard assumptions imposed on the loss function. Assumption 1 implies that all local objective functions and the global objective function also have Lipschitz gradients, and Assumption 2 guarantees the absence of trivial solutions.

Assumption 1 (Lipschitz gradient).

The sample loss function has -Lipschitz gradients for all and , namely, , and .

Assumption 2 (Function boundedness).

The global objective function is bounded below, i.e., .

Due to the nonconvexity, first-order algorithms are generally guaranteed to converge to only first-order stationary points of the global loss function , defined below in Definition 2.

Definition 2 (First-order stationary point).

A point is called an -approximate first-order stationary point of a differentiable function if

3.2 Main theorem

Theorem 1, whose proof is deferred to Appendix B, shows that DESTRESS converges in expectation to an approximate first-order stationary point, under suitable parameter choices.

Theorem 1 (First-order optimality).

Assume Assumption 1 and 2 hold. Set , , , , and to be positive and satisfy

| (7) |

The output produced by Algorithm 1 satisfies

| (8) |

If there is only one agent, i.e. , the mixing rate will be , we can choose , and Theorem 1 reduces to [NvDP+19, Theorem 1], its counterpart in the centralized setting. For general decentralized settings with arbitrary mixing schedules, Theorem 1 provides a comprehensive characterization of the convergence rate, where an -approximate first-order stationary point can be found in expectation in a total of

iterations; here, is the number of outer iterations and is the number of inner iterations. Clearly, a larger step size , as allowable by (7), hints on a smaller iteration complexity, and hence a smaller IFO complexity.

There are two conditions in (7). On one end, needs to be large enough (i.e., perform more rounds of extra mixing) to counter the effect when is small (i.e., we compute less stochastic gradients every iteration), or when is close to (i.e., the network is poorly connected). On the other end, the step size needs to be small enough to account for the requirement of the step size in the centralized setting, as well as the effect of imperfect communication due to decentralization. For well-connected networks where , the terms introduced by the decentralized setting will diminish—indicating the iteration complexity is close to that of the centralized setting. For poorly-connected networks, carefully designing the mixing matrix and other parameters can ensure a desirable trade-off between convergence speed and communication cost. The following corollary provides specific parameter choices for DESTRESS to achieve the optimal per-agent IFO complexity. The proof is deferred to Appendix C.

Corollary 1 (Complexity for finding first-order stationary points).

As elaborated in Section 1.1, DESTRESS achieves a network-independent IFO complexity that matches the optimal complexity in the centralized setting. In addition, when the accuracy , DESTRESS reaches a communication complexity of , which is independent of the sample size.

It is worthwhile to further highlight the role of the random activation probability in achieving the optimal IFO by allowing “fractional” batch size. Note that the batch size is set as , where is the local sample size, and is the number of agents.

-

1.

When the local sample size is large, i.e. , we can approximate and . In fact, Corollary 1 continues to hold with in this regime.

-

2.

However, when the number of agents is large, i.e. , the batch size and , which mitigates the potential computation waste by only selecting a subset of agents to perform local computation, compared to the case when we naively set .

Therefore, by introducing random activation, we can view as the effective batch size at each agent, which allows fractional values and leads to the optimal IFO complexity in all scenarios.

4 Numerical Experiments

This section provides numerical experiments on real datasets to evaluate our proposed algorithm DESTRESS with comparisons against two existing baselines: DSGD [NO09, LZZ+17] and GT-SARAH [XKK20b]. To allow for reproducibility, all codes can be found at

For all experiments, we set the number of agents , and split the dataset uniformly at random to each agent. In addition, since in all experiments, we set for simplicity. We run each experiment on three communication graphs with the same data assignment and starting point: Erdös-Rènyi graph (the connectivity probability is set to ), grid graph, and path graph. The mixing matrices are chosen as the symmetric fastest distributed linear averaging (FDLA) matrices [XB04] generated according to different graph topologies, and the extra mixing steps are implemented by Chebyshev’s acceleration [AS14] to save communications as described earlier. To ensure convergence, DSGD adopts a diminishing step size schedule. All the parameters are tuned manually for best performance. We defer a detailed account of the baseline algorithms as well as parameter choices in Appendix A.

4.1 Regularized logistic regression

To begin with, we employ logistic regression with nonconvex regularization to solve a binary classification problem using the Gisette dataset.444The dataset can be accessed at https://archive.ics.uci.edu/ml/datasets/Gisette. We split the Gisette dataset to agents, where each agent receives training samples of dimension . The sample loss function is given as

where represents a training tuple, is the feature vector and is the label, and is the regularization parameter. For this experiment, we set .

(a) Erdös-Rènyi graph

(b) Grid graph

(b) Grid graph

(c) Path graph

(c) Path graph

Figure 1 shows the loss and testing accuracy for all algorithms. DESTRESS significantly outperforms other algorithms both in terms of communication and computation. It is worth noting that, DSGD converges very fast at the beginning of training, but cannot sustain the progress due to the diminishing schedule of step sizes. On the contrary, the variance-reduced algorithms can converge with a constant step size, and hence converge better overall. Moreover, due to the refined gradient estimation and information mixing designs, DESTRESS can bear a larger step size than GT-SARAH, which leads to the fastest convergence and best overall performance. In addition, a larger number of extra mixing steps leads to a better performance when the graph topology becomes less connected.

(a) Erdös-Rènyi graph

(b) Grid graph

(b) Grid graph

(c) Path graph

(c) Path graph

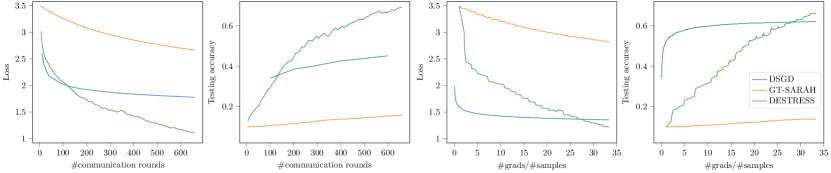

4.2 Neural network training

Next, we compare the performance of DESTRESS with comparisons to DSGD and GT-SARAH for training a one-hidden-layer neural network with hidden neurons and sigmoid activations for classifying the MNIST dataset [Den12]. We evenly split training samples to agents at random. Figure 2 plots the training loss and testing accuracy against the number of communication rounds and gradient evaluations for all algorithms. Again, DESTRESS significantly outperforms other algorithms in terms of computation and communication costs due to the larger step size and extra mixing, which validates our theoretical analysis.

5 Conclusions

In this paper, we proposed DESTRESS for decentralized nonconvex finite-sum optimization, where both its theoretical convergence guarantees and empirical performances on real-world datasets were presented. In sum, DESTRESS matches the optimal IFO complexity of centralized SARAH-type methods for finding first-order stationary points, and improves both computation and communication complexities for a broad range of parameters regimes compared with existing approaches. A natural and important extension of this paper is to generalize and develop convergence guarantees of DESTRESS for finding second-order stationary points, which we leave to future works.

Acknowledgements

This work is supported in part by ONR N00014-19-1-2404, by AFRL under FA8750-20-2-0504, and by NSF under CCF-1901199 and CCF-2007911. The authors thank Ran Xin for helpful discussions.

References

- [ALBR19] M. Assran, N. Loizou, N. Ballas, and M. Rabbat. Stochastic gradient push for distributed deep learning. In International Conference on Machine Learning, pages 344–353. PMLR, 2019.

- [AS14] M. Arioli and J. Scott. Chebyshev acceleration of iterative refinement. Numerical Algorithms, 66(3):591–608, 2014.

- [AZH16] Z. Allen-Zhu and E. Hazan. Variance reduction for faster non-convex optimization. In International conference on machine learning, pages 699–707. PMLR, 2016.

- [BBKW19] A. S. Berahas, R. Bollapragada, N. S. Keskar, and E. Wei. Balancing communication and computation in distributed optimization. IEEE Transactions on Automatic Control, 64(8):3141–3155, 2019.

- [BBW21] A. S. Berahas, R. Bollapragada, and E. Wei. On the convergence of nested decentralized gradient methods with multiple consensus and gradient steps. IEEE Transactions on Signal Processing, 2021.

- [CZC+20] S. Cen, H. Zhang, Y. Chi, W. Chen, and T.-Y. Liu. Convergence of distributed stochastic variance reduced methods without sampling extra data. IEEE Transactions on Signal Processing, 68:3976–3989, 2020.

- [DBLJ14] A. Defazio, F. Bach, and S. Lacoste-Julien. SAGA: A fast incremental gradient method with support for non-strongly convex composite objectives. In Advances in neural information processing systems, pages 1646–1654, 2014.

- [Den12] L. Deng. The MNIST database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Processing Magazine, 29(6):141–142, 2012.

- [DLS16] P. Di Lorenzo and G. Scutari. Next: In-network nonconvex optimization. IEEE Transactions on Signal and Information Processing over Networks, 2(2):120–136, 2016.

- [FLLZ18] C. Fang, C. J. Li, Z. Lin, and T. Zhang. SPIDER: Near-optimal non-convex optimization via stochastic path-integrated differential estimator. In Advances in Neural Information Processing Systems, pages 687–697, 2018.

- [HAD+20] A. Hashemi, A. Acharya, R. Das, H. Vikalo, S. Sanghavi, and I. Dhillon. On the benefits of multiple gossip steps in communication-constrained decentralized optimization. arXiv preprint arXiv:2011.10643, 2020.

- [IW21] C. Iakovidou and E. Wei. S-NEAR-DGD: A flexible distributed stochastic gradient method for inexact communication. arXiv preprint arXiv:2102.00121, 2021.

- [JZ13] R. Johnson and T. Zhang. Accelerating stochastic gradient descent using predictive variance reduction. In Advances in neural information processing systems, pages 315–323, 2013.

- [LBZR21] Z. Li, H. Bao, X. Zhang, and P. Richtárik. PAGE: A simple and optimal probabilistic gradient estimator for nonconvex optimization. In International Conference on Machine Learning, pages 6286–6295. PMLR, 2021. arXiv:2008.10898.

- [LCCC20] B. Li, S. Cen, Y. Chen, and Y. Chi. Communication-efficient distributed optimization in networks with gradient tracking and variance reduction. Journal of Machine Learning Research, 21(180):1–51, 2020.

- [Li19] Z. Li. SSRGD: Simple stochastic recursive gradient descent for escaping saddle points. In Advances in Neural Information Processing Systems, pages 1523–1533, 2019.

- [Li21] Z. Li. A short note of PAGE: Optimal convergence rates for nonconvex optimization. arXiv preprint arXiv:2106.09663, 2021.

- [LJCJ17] L. Lei, C. Ju, J. Chen, and M. I. Jordan. Non-convex finite-sum optimization via SCSG methods. In Advances in Neural Information Processing Systems, volume 30, 2017.

- [LL18] Z. Li and J. Li. A simple proximal stochastic gradient method for nonsmooth nonconvex optimization. In Advances in Neural Information Processing Systems, pages 5569–5579, 2018.

- [LLMY17] J. D. Lee, Q. Lin, T. Ma, and T. Yang. Distributed stochastic variance reduced gradient methods by sampling extra data with replacement. The Journal of Machine Learning Research, 18(1):4404–4446, 2017.

- [LR21] Z. Li and P. Richtárik. ZeroSARAH: Efficient nonconvex finite-sum optimization with zero full gradient computation. arXiv preprint arXiv:2103.01447, 2021.

- [LZZ+17] X. Lian, C. Zhang, H. Zhang, C.-J. Hsieh, W. Zhang, and J. Liu. Can decentralized algorithms outperform centralized algorithms? A case study for decentralized parallel stochastic gradient descent. In Advances in Neural Information Processing Systems, pages 5330–5340, 2017.

- [NLST17] L. M. Nguyen, J. Liu, K. Scheinberg, and M. Takáč. SARAH: A novel method for machine learning problems using stochastic recursive gradient. In International Conference on Machine Learning, pages 2613–2621, 2017.

- [NO09] A. Nedic and A. Ozdaglar. Distributed subgradient methods for multi-agent optimization. IEEE Transactions on Automatic Control, 54(1):48–61, 2009.

- [NOR18] A. Nedić, A. Olshevsky, and M. G. Rabbat. Network topology and communication-computation tradeoffs in decentralized optimization. Proceedings of the IEEE, 106(5):953–976, 2018.

- [NOS17] A. Nedić, A. Olshevsky, and W. Shi. Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM Journal on Optimization, 27(4):2597–2633, 2017.

- [NRB20] M. Nokleby, H. Raja, and W. U. Bajwa. Scaling-up distributed processing of data streams for machine learning. Proceedings of the IEEE, 108(11):1984–2012, 2020.

- [NvDP+19] L. M. Nguyen, M. van Dijk, D. T. Phan, P. H. Nguyen, T.-W. Weng, and J. R. Kalagnanam. Finite-sum smooth optimization with SARAH. arXiv preprint arXiv:1901.07648, 2019.

- [PLW20] T. Pan, J. Liu, and J. Wang. D-SPIDER-SFO: A decentralized optimization algorithm with faster convergence rate for nonconvex problems. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pages 1619–1626, 2020.

- [QL18] G. Qu and N. Li. Harnessing smoothness to accelerate distributed optimization. IEEE Transactions on Control of Network Systems, 5(3):1245–1260, 2018.

- [RHS+16] S. J. Reddi, A. Hefny, S. Sra, B. Poczos, and A. Smola. Stochastic variance reduction for nonconvex optimization. In International conference on machine learning, pages 314–323, 2016.

- [RSPS16] S. J. Reddi, S. Sra, B. Póczos, and A. Smola. Fast incremental method for smooth nonconvex optimization. In 2016 IEEE 55th Conference on Decision and Control (CDC), pages 1971–1977. IEEE, 2016.

- [SLH20] H. Sun, S. Lu, and M. Hong. Improving the sample and communication complexity for decentralized non-convex optimization: Joint gradient estimation and tracking. In International Conference on Machine Learning, pages 9217–9228. PMLR, 2020.

- [SLWY15] W. Shi, Q. Ling, G. Wu, and W. Yin. EXTRA: An exact first-order algorithm for decentralized consensus optimization. SIAM Journal on Optimization, 25(2):944–966, 2015.

- [TLY+18] H. Tang, X. Lian, M. Yan, C. Zhang, and J. Liu. : Decentralized training over decentralized data. In International Conference on Machine Learning, pages 4848–4856. PMLR, 2018.

- [WJZ+19] Z. Wang, K. Ji, Y. Zhou, Y. Liang, and V. Tarokh. SpiderBoost and momentum: Faster variance reduction algorithms. In Advances in Neural Information Processing Systems, pages 2406–2416, 2019.

- [XB04] L. Xiao and S. Boyd. Fast linear iterations for distributed averaging. Systems and Control Letters, 53(1):65–78, 2004.

- [XKK20a] R. Xin, S. Kar, and U. A. Khan. Decentralized stochastic optimization and machine learning: A unified variance-reduction framework for robust performance and fast convergence. IEEE Signal Processing Magazine, 37(3):102–113, 2020.

- [XKK20b] R. Xin, U. A. Khan, and S. Kar. Fast decentralized non-convex finite-sum optimization with recursive variance reduction. arXiv preprint arXiv:2008.07428, 2020.

- [XKK20c] R. Xin, U. A. Khan, and S. Kar. A fast randomized incremental gradient method for decentralized non-convex optimization. arXiv preprint arXiv:2011.03853, 2020.

- [XPNK20] R. Xin, S. Pu, A. Nedić, and U. A. Khan. A general framework for decentralized optimization with first-order methods. Proceedings of the IEEE, 108(11):1869–1889, 2020.

- [ZM10] M. Zhu and S. Martínez. Discrete-time dynamic average consensus. Automatica, 46(2):322–329, 2010.

- [ZXG18] D. Zhou, P. Xu, and Q. Gu. Stochastic nested variance reduction for nonconvex optimization. Advances in Neural Information Processing Systems, 31:3921–3932, 2018.

- [ZY19] J. Zhang and K. You. Decentralized stochastic gradient tracking for non-convex empirical risk minimization. arXiv preprint arXiv:1909.02712, 2019.

Appendix A Experiment details

For completeness, we list two baseline algorithms, DSGD [NO09, LZZ+17] (cf. Algorithm 2) and GT-SARAH [XKK20b] (cf. Algorithm 3), which are compared numerically against the proposed DESTRESS algorithm in Section 4. Furthermore, the detailed hyperparameter settings for the experiments in Section 4.1 and Section 4.2 are listed in Table 3 and Table 4, respectively.

| Algorithms | DSGD | DESTRESS | GT-SARAH | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Parameters | ||||||||||

| Erdös-Rènyi | ||||||||||

| Grid | ||||||||||

| Path | ||||||||||

| Algorithms | DSGD | DESTRESS | GT-SARAH | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Parameters | ||||||||||

| Erdös-Rènyi | ||||||||||

| Grid | ||||||||||

| Path | ||||||||||

Appendix B Proof of Theorem 1

For notation simplicity, let

throughout the proof. In addition, with a slight abuse of notation, we define the global gradient of an -dimensional vector , where , as follows

| (9) |

The following fact is a straightforward consequence of our assumption on the mixing matrix in Definition 1.

Fact 1.

Let , and , where . For a mixing matrix satisfying Definition 1, we have

-

1.

;

-

2.

.

To begin with, we introduce a key lemma that upper bounds the norm of the gradient of the global loss function evaluated at the average local estimates over agents, in terms of the function value difference at the beginning and the end of the inner loop, the gradient estimation error, and the norm of gradient estimates.

Lemma 1 (Inner loop induction).

Assume Assumption 1 holds. After inner loops, one has

Proof of Lemma 1.

The local update rule (6a), combined with Lemma 1, yields

By Assumption 1, we have

| (10) |

where the last equality is obtained by applying . Summing over finishes the proof. ∎

Because the output is chosen from uniformly at random, we can compute the expectation of the output’s gradient as follows:

| (11) |

where (i) follows from the change of notation using (9), (ii) follows from the Cauchy-Schwartz inequality, and (iii) follows from Assumption 1 and extending the summation to . Then, in view of Lemma 1, (11) can be further bounded by

| (12) |

where we use and .

Next, we present Lemma 2 and 3 to bound the double sum in (12), whose proofs can be found in Appendix D and Appendix E, respectively.

Lemma 2 (Sum of inner loop errors).

Assuming all conditions in Theorem 1 hold. For all , we can bound the summation of inner loop errors as

Lemma 3 (Sum of outer loop gradient estimation error and consensus error).

Assuming all conditions in Theorem 1 hold. We have

Appendix C Proof of Corollary 1

Without loss of generality, we assume . Otherwise, the problem reduces to the centralized setting with a single agent , and the bound holds trivially. We will confirm the choice of parameters in Corollary 1 in the following paragraphs, and finally obtain the IFO complexity and communication complexity.

Step size .

We first assume and , which will be proved to hold shortly, then we can verify the step size choice meets the requirement in (7) as:

Mixing steps and .

Using Chebyshev’s acceleration [AS14] to implement the mixing steps, it amounts to an improved mixing rate of , when the original mixing rate is close to . Set and . We are now positioned to examine the effective mixing rate and , as follows

where (i) follows from , (ii) follows from , , and (iii) follows from and . By a similar argument, we have .

Complexity.

Appendix D Proof of Lemma 2

This section proves Lemma 2. Sections D.1 and D.2 bounds the expected inner loop gradient estimation error and consensus errors by their previous values and the sum of inner loop gradient estimator’s norms, Section D.3 then creates a linear system to compute the summation of inner loop errors using their initial values of each inner loop, which concludes the proof.

D.1 Sum of inner loop gradient estimation errors

To begin with, note that the gradient estimation error at the -th inner loop iteration can be written as

| (14) |

where the first equality follows from (4), and the last inequality is due to Assumption 1. To continue, the expectation of the second term in (14) can be bounded as

| (15) |

Here, (i) follows from the expectation with respect to the activating indicator and random samples , conditioned on and :

| (16) |

(ii) follows by recursively applying the relation obtained from (i); and (iii) follows from the property of gradient tracking, i.e.

| (17) |

which leads to .

We now continue to bound each term in (15), which can be viewed as the variance of the stochastic gradient, as

| (18) |

where (i) follows from the update rules (6b) and (6c), (ii) follows from the independence of samples and , (iii) follows from similar argument with (16), and the last inequality follows from Assumption 1 and .

In view of (6a), the difference between inner loop variables in (18) can be bounded deterministically as

| (19) |

where (i) and (ii) follow from and for any mean vector ; and the last inequality follows from the property of the mixing matrix and .

Plugging (18) and (19) into (15), we can further obtain

Using (14) and the previous inequality, we can bound the summation of inner loop gradient estimation errors as

where the last inequality is obtained by relaxing the upper bound of the summation w.r.t. from to .

The quantity of interest can be now bounded as

| (20) |

D.2 Sum of inner loop consensus errors

Using the update rule (6a), the variable consensus error can be expanded deterministically as follows:

| (21) |

where (i) follows from the fact

and the definition of the mixing rate. The last inequality follows from the elementary inequality , so that .

Furthermore, using the update rules (6b) and (6c) and defining , the gradient consensus error can be similarly expanded as follows:

| (22) |

where the second term in (i) is obtained by Jensen’s inequality, (ii) follows from Assumption 1 and , and (iii) follows from (19).

D.3 Linear system

Let , and . By taking expectation of (21) and (22), we can construct the following linear system

| (23) |

where the second inequality is due to and . Telescope the above inequality to obtain

| (24) |

Thus, the sum of the consensus errors can be bounded by

| (25) |

where (i) follows by changing the order of summation, (ii) and (iii) follows from the nonnegativity of and respectively. To continue, we begin with the following claim about which will be proved momentarily.

Claim 1.

Under the choice of in Theorem 1, the eigenvalues of are in , and the Neumann series converges,

| (26) |

Appendix E Proof of Lemma 3

This section proves Lemma 3. In the following subsections, Sections E.1 and E.2 derive induction inequalities for the consensus errors and Section E.3 creates a linear system of consensus errors to compute the summation.

E.1 Sum of outer loop variable consensus errors

The variable consensus error can be bounded deterministically as following,

where (i) uses , (ii) uses the update rule (6a), and the last two inequalities follow from similar reasonings as (21). Apply the same reasoning to and use , we can prove

| (27) |

where the last equality identifies .

E.2 Sum of outer loop gradient estimation consensus errors

E.3 Linear system

Then, following the same argument as (25), we obtain

| (34) |

Before continuing, we state the following claim about which will be proven momentarily.

Claim 2.

Under the choice of in Theorem 1, the eigenvalues of are in , and the Neumann series converges,

With Claim 2 in hand, and the fact that , we can bound the summation of outer loop consensus errors by

| (35) |

where .

Proof of 2.

For simplicity, denote and . Then can be written as

whose characteristic polynomial is

First, note that can be bounded by

where the last inequality is due to the choice of , namely,

Combined with the trivial fact that and , all eigenvalues of are in . Consequently, the Neumann series converges, leading to

where we use the condition in (7) to prove to bound the denominator.

∎