Description and Technical specification of Cybernetic Transportation Systems: an urban transportation concept -CityMobil2 project approach-

Abstract

Cybernetic Transportation Systems (CTS) are an urban mobility concept based on two ideas: car sharing and the automation of dedicated systems with door-to-door capabilities. In the last decade, many European projects have been developed in this context; some of the most important are Cybercars, Cybercars2, CyberMove, CyberC3 and CityMobil. Different companies have developed a first fleet of CTSs in collaboration with research centers in Europe, Asia and America. Building on these previous works, the FP7 project CityMobil2 has been in progress since 2012. Its goal is to address some of the limitations found so far, including the definition of the legal framework for autonomous vehicles in urban environments. This work describes the improvements, adaptation and instrumentation of the CTS prototypes involved in European cities. Results are presented from tests in our facilities at INRIA-Rocquencourt (France) and from the first showcase at León (Spain).

I Introduction

In the last decade, several European initiatives have been carried out in the framework of Cybernetic Transportation Systems (CTSs); some of the most important are Cybercars, Cybercars2 [1], CyberMove, CityMobil [2] and CATS. A good overview of past technologies and current CTS developments is given in [3]. CTSs were defined in the first Cybercar project as follows: “CTSs are road vehicles with fully automated driving capabilities, where a fleet of such vehicles forms a transportation system, for passengers or goods, on a network of roads with on-demand and door-to-door capability” [4].

In this context, CTSs use different perception and communication technologies to recognize the environment. This information is processed for motion planning and onboard control, in order to guarantee the performance of the vehicles in dynamic scenarios (considering other agents involved in the trajectory). The system presented in [5] proposed different modules to evaluate the feasibility of this behavior in Asian cities, including a central control room, stations, road monitoring, etc.

Within the CTS framework, recent works address autonomous maneuvers and motion planning in urban areas. Obstacle avoidance based on Inevitable Collision States (ICS), i.e. states in which a collision eventually occurs no matter what future trajectory the robotic system follows, has been implemented in [6]. On the other hand, autonomous navigation of Cybercars based on Geographical Information Systems (GIS) was described in Bonnifait et al. [7]. Furthermore, localization in urban environments has been improved through Kalman filters that consider kinematic behavior [8], using GPS and Inertial Measurement Units (IMU), and through lidar-based lane marker detection using Bayesian and map matching algorithms.

CTSs are also capable of interacting with other autonomous and semi-autonomous vehicles, as demonstrated in [1]. Nevertheless, in most of these scenarios the trajectory was previously recorded. Recently, new kinds of scenarios, such as signalized and unsignalized intersections, roundabouts and high collision-risk situations, have been considered [9, 10]. The latest developments from the RITS team at INRIA (https://team.inria.fr/rits/) focus on new algorithms for dynamic path generation in urban environments, taking into account structural and sudden changes in straight and bend segments; these have recently been developed in a simulated environment [3, 11].

This paper presents the integration of these previous algorithms [3, 11] in real scenarios, as part of the CityMobil2 project. The control architecture is described in terms of software, hardware and the technical/functional specifications of the Cybercars involved in the showcases of the project. The main contribution of this work is the implementation of dynamic trajectory generation, with scheduled and emergency stops, considering other vehicles and pedestrians in real scenarios with CTSs.

The rest of the paper is organized as follows: the general architecture used for CTSs is reviewed in Section II, where details of the implementation of the global and local planning are also given. Section III explains the CTSs used at INRIA for demonstrations in urban environments. Tests and results validation are described in Section IV, covering experiments in our facilities at INRIA (France) and the first showcase of the CityMobil2 project at León (Spain). Finally, conclusions and future works are given in Section V.

II General architecture

In the literature, different approaches for control architectures in autonomous vehicles have been presented. Most of them keep a common structure. Autonomous vehicle demonstrations such as Stanley [12] (winner of the DARPA Grand Challenge), Boss [13] (winner of the DARPA Urban Challenge) and the VIAC project [14] in Europe describe similar architectures. The perception, decision, control and actuation stages are the main blocks used.

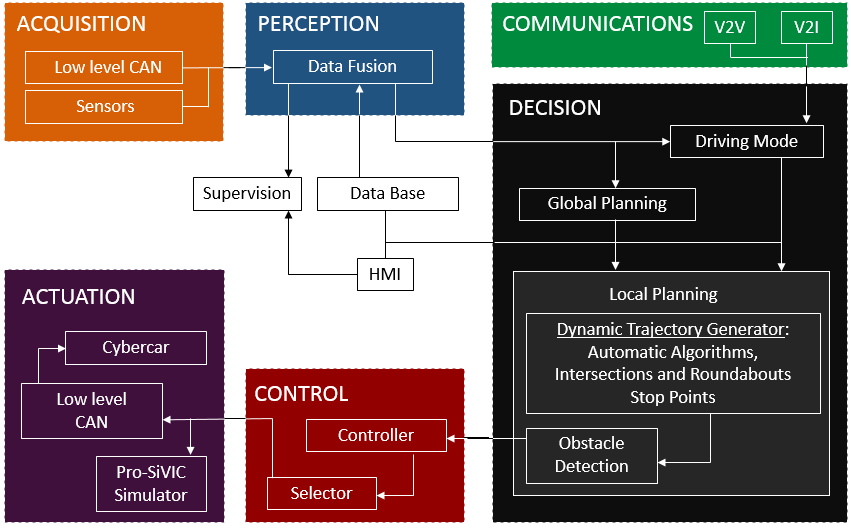

Recently, the RITS team has been improving the path planning and control stages for urban areas [3, 11]. The control architecture for CTSs is shown in Figure 1. Its main feature is its modularity, which allows it to be implemented in simulated (Pro-sivic) and real environments. The software used for the development is RTMaps (http://intempora.com/). It is a real-time, high-performance tool designed for fast and robust implementation in multitask and multivariable systems.

II-A Acquisition, Perception and Communications

The acquisition stage manages the sensors embedded in the vehicle. Through a low-level Controller Area Network (CAN), it is possible to receive the information describing the environment and the vehicle state (position, speed, accelerations, etc). A detailed description of the available sensors in the vehicle and their requirements is presented in Section III.

The information from the acquisition stage is processed in the perception stage. Here, fusion algorithms merge the information on the vehicle state (e.g. Kalman filters fuse the SLAM output with the Inertial Measurement Unit (IMU) data). Obstacle detection algorithms perceive and classify the different objects and road users in the environment [15].

Communications are provided over Wi-Fi with an Optimized Link State Routing (OLSR) ad hoc network. Information transmitted by other vehicles (V2V) includes position, speed, accelerations and planned trajectories, among others. Other, static information comes directly from the infrastructure through V2I communications.

II-B Decision

The core of the contributions presented in this work lies in the Decision stage (see Fig. 1). Here, global and local planning modules are used to generate the path and the reference speed for each trajectory.

II-B1 Global planning

The global planner performs the first planning step. A database module (center part of Figure 1) provides the information needed to create a first path, using an XML file. This planner creates a path from the vehicle position to a desired destination. The created path is formed by urban intersection points.

II-B2 Local planning

Based on the raw path given by the global planner, a safe and comfortable local trajectory is generated in this module. Based on penalty weight functions, trajectories at intersections, turns and roundabouts can be smoothly planned, as described for simulations in [11]. Trajectories are computed with Bézier curves because of their modularity, fast computation and continuous curvature, which allow smooth joints between straight and curved segments.
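As an illustration of how a Bézier segment can be evaluated for a turn, the following is a minimal sketch and not the planner of [11]. The curve degree, the control points and the function names `bezier_point` and `sample_curve` are assumptions made for this example; the placement of control points by the penalty weight functions is not reproduced.

```python
from math import comb


def bezier_point(control_points, t):
    """Evaluate a 2D Bezier curve of any degree at parameter t in [0, 1]."""
    n = len(control_points) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(control_points):
        # Bernstein basis polynomial B_{i,n}(t)
        basis = comb(n, i) * (1.0 - t) ** (n - i) * t ** i
        x += basis * px
        y += basis * py
    return x, y


def sample_curve(control_points, samples=20):
    """Return `samples` (>= 2) points spaced evenly in t along the curve."""
    return [bezier_point(control_points, k / (samples - 1)) for k in range(samples)]


# Hypothetical control points (assumption): a left turn that leaves a segment
# heading along +x and joins a segment heading along +y.
turn = [(0.0, 0.0), (6.0, 0.0), (10.0, 4.0), (10.0, 10.0)]
path = sample_curve(turn)
```

Because the first two and the last two control points lie on the incoming and outgoing straight segments, the curve leaves and joins those segments tangentially, which is the property that makes the joints smooth.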

The trajectory is planned up to the horizon of view, given by the sensor range (approximately 50 m). An obstacle avoidance module that considers static obstacles has also been developed [11]. This module also considers lateral accelerations, planning the velocity profile according to the curvature of the path and the level of comfort set by the user (levels described in the ISO 2631-1 standard [16]).
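The link between curvature, lateral acceleration and speed can be sketched with the standard relation a_lat = v² · κ, which gives a speed bound of sqrt(a_lat_max / |κ|). This is only an illustrative sketch: the acceleration limit of 1.0 m/s² and the cruise speed of 3.0 m/s are hypothetical values chosen for the example, not figures taken from this work or from the ISO standard.

```python
import math


def speed_limit_for_curvature(curvature, max_lateral_accel, max_speed):
    """Largest speed (m/s) keeping lateral acceleration v^2 * |curvature| within the limit."""
    if abs(curvature) < 1e-9:
        # Straight segment: only the cruise speed applies.
        return max_speed
    return min(max_speed, math.sqrt(max_lateral_accel / abs(curvature)))


def speed_profile(curvatures, max_lateral_accel=1.0, max_speed=3.0):
    """Reference speed for each sampled point of the path.

    The default limits are assumptions for illustration only.
    """
    return [
        speed_limit_for_curvature(k, max_lateral_accel, max_speed)
        for k in curvatures
    ]


# Curvatures (1/m) sampled along a hypothetical straight-curve-straight path.
profile = speed_profile([0.0, 0.1, 0.25, 0.1, 0.0])
```

A stricter comfort level would correspond to a smaller `max_lateral_accel`, which lowers the reference speed in curves while leaving straight segments unchanged.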

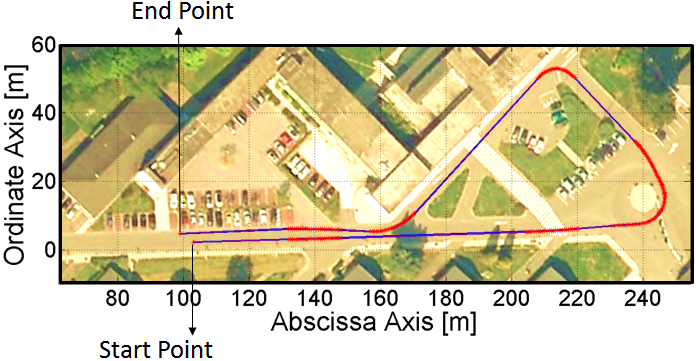

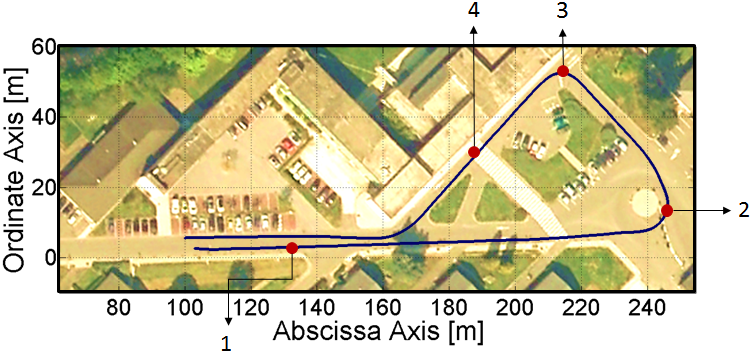

An example of the generated path is shown in Figure 2. It depicts the performance of the planner at our facilities (handling intersections, turns and roundabouts), where the red points and blue lines describe the curved and straight segments, respectively.

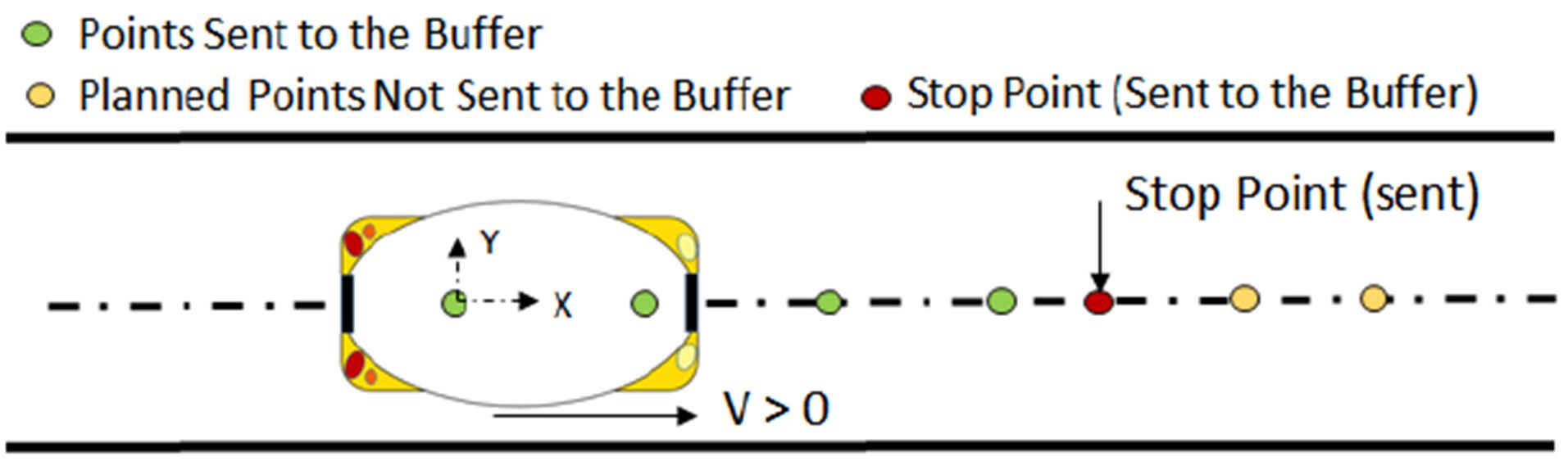

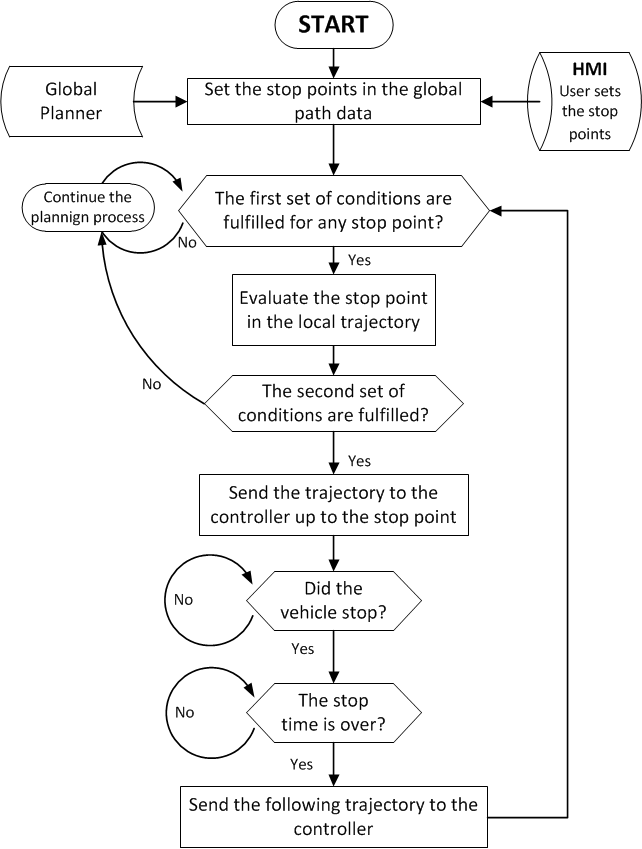

In previous works, only the start and end points of the trajectory were considered in CTS applications (Fig. 2). Pre-programmed stop points are one of the contributions presented in this work. They are considered in both the global and local trajectories (Fig. 1).

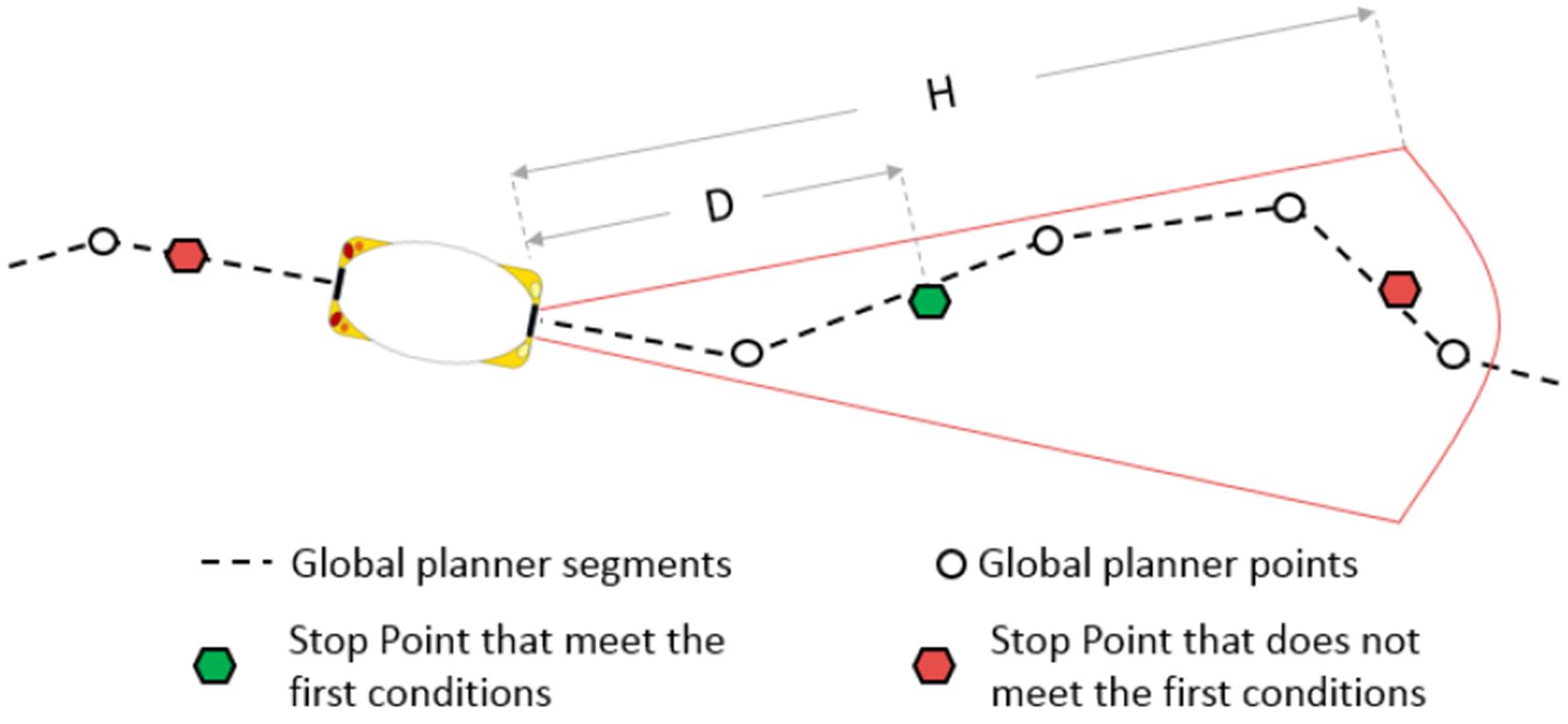

To introduce them in the trajectory, two sets of conditions are evaluated (as shown in Fig. 7). The first set evaluates the stop point with respect to the global path. Figure 3 shows an example of this evaluation, based on the following:

1. The stop point is in the current or following segment on the global path (green point in Fig. 3).

2. The distance from the vehicle to the stop point (D) is lower than the horizon of view of the vehicle (H).

3. The stop point has not yet been sent to the control stage (to avoid considering the same stop point more than once).
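The three conditions above can be sketched as a single predicate. This is a minimal illustration under stated assumptions: the `StopPoint` fields, the function name `passes_global_check`, the use of the straight-line distance for D and the 50 m default for H are all hypothetical choices for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class StopPoint:
    x: float
    y: float
    segment_index: int   # global-path segment that contains the stop point
    stop_time_s: float   # time the vehicle should remain stopped
    sent: bool = False   # True once the stop was sent to the control stage


def passes_global_check(stop, vehicle_xy, current_segment, horizon_m=50.0):
    """Evaluate the first set of conditions against the global path."""
    # 1. Stop point lies in the current or the following global segment.
    in_reach = stop.segment_index in (current_segment, current_segment + 1)
    # 2. Distance D from vehicle to stop point is below the horizon H
    #    (straight-line distance is an assumption of this sketch).
    distance = math.hypot(stop.x - vehicle_xy[0], stop.y - vehicle_xy[1])
    within_horizon = distance < horizon_m
    # 3. Stop point has not been sent to the control stage yet.
    return in_reach and within_horizon and not stop.sent


stop = StopPoint(x=30.0, y=0.0, segment_index=2, stop_time_s=10.0)
accepted = passes_global_check(stop, vehicle_xy=(0.0, 0.0), current_segment=1)
```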

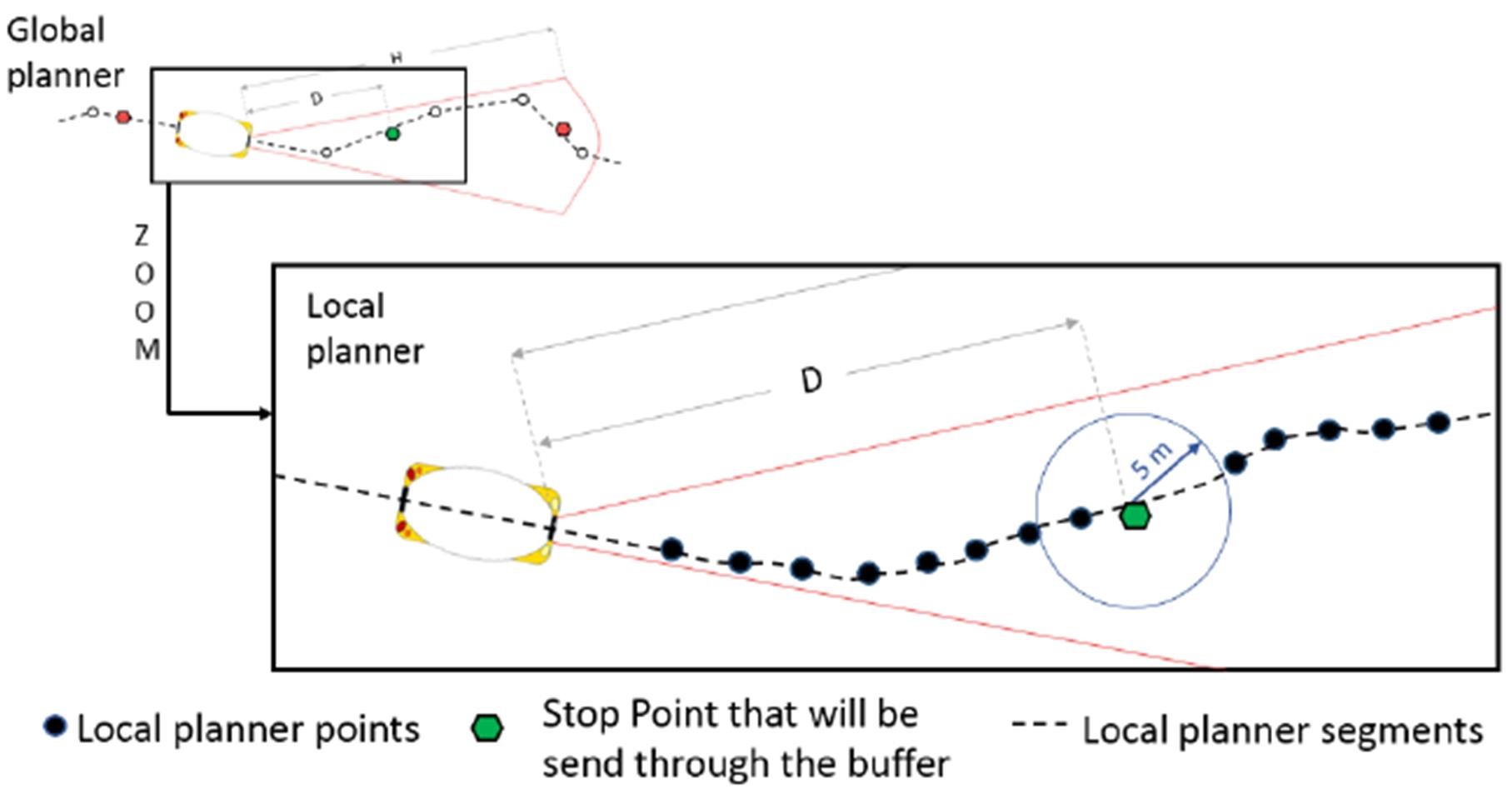

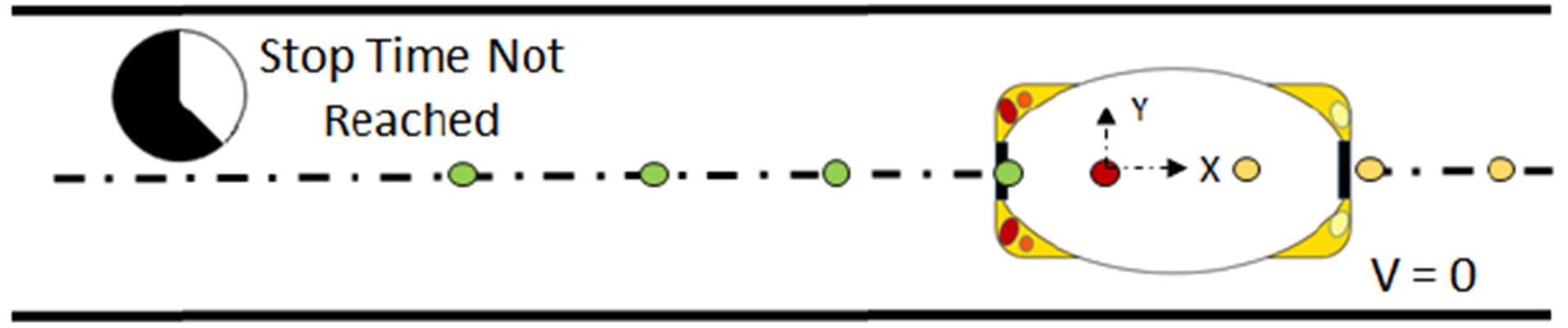

Stop points that satisfy the first set of conditions remain in a temporary buffer, where a second set of conditions is evaluated with respect to the local trajectory (Fig. 4). These conditions are:

1. The distance between the stop point coordinates and the closest local segment is lower than 5 m.

2. The segment where the stop point will be included must not be the last segment in the local planner (as the red point in Fig. 5).
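The second set of conditions can be sketched with a point-to-segment distance test. This is an illustrative sketch only: local segments are approximated here as straight lines between two endpoints, and the names `distance_to_segment` and `local_segment_for_stop` are assumptions made for the example.

```python
import math


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the straight segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Projection of p onto the segment, clamped to its endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def local_segment_for_stop(stop_xy, local_segments, max_offset_m=5.0):
    """Index of the local segment that should host the stop point, or None."""
    if not local_segments:
        return None
    distances = [distance_to_segment(stop_xy, a, b) for a, b in local_segments]
    closest = min(range(len(distances)), key=distances.__getitem__)
    # 1. The stop point must be closer than 5 m to the closest local segment.
    if distances[closest] >= max_offset_m:
        return None
    # 2. That segment must not be the last one in the local planner.
    if closest == len(local_segments) - 1:
        return None
    return closest


segments = [((0.0, 0.0), (10.0, 0.0)), ((10.0, 0.0), (20.0, 0.0)), ((20.0, 0.0), (30.0, 0.0))]
host = local_segment_for_stop((12.0, 2.0), segments)
```

A stop point rejected because it falls in the last local segment is simply evaluated again once the local plan has advanced.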

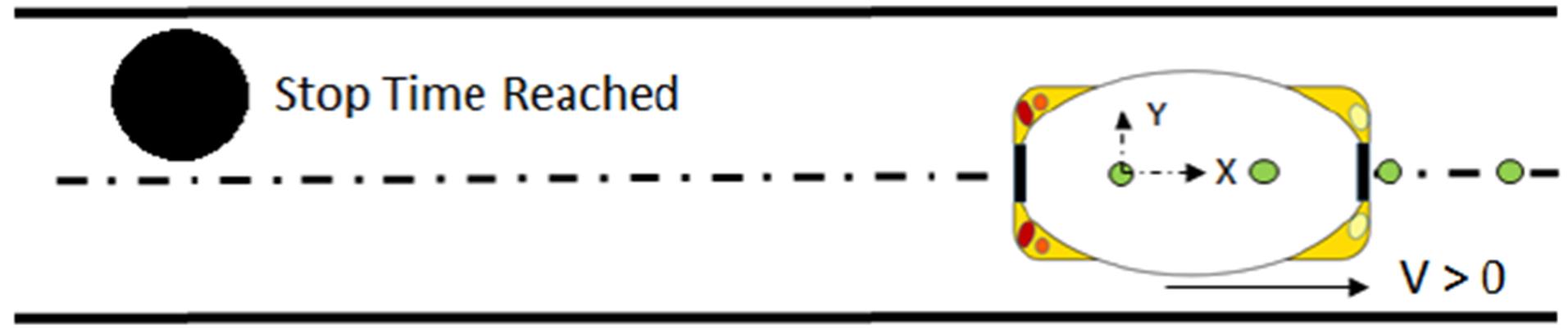

Then, if the conditions are met, the position of the stop point is saved into the trajectory with an associated stop time. The trajectory is sent to the control stage only up to the stop point (Fig. 5). Later trajectory points are not sent to the controller until the desired stop time has passed (Fig. 5).

With this method, the CTSs are capable of stopping in any part of the trajectory, in curved or straight segments alike. When the stop time has elapsed, the buffer sends the new trajectory and the vehicle returns to the planned itinerary (Fig. 5).
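The hold-and-release behaviour of the buffer can be sketched as follows. This is a simplified illustration, not the RTMaps implementation: the class name `StopBuffer`, the explicit `now_s` timestamp and the `vehicle_at_stop` flag supplied by the caller are assumptions made so that the logic can be shown without a real clock or vehicle.

```python
class StopBuffer:
    """Holds back the trajectory beyond a stop point until the stop time has passed."""

    def __init__(self, trajectory, stop_index, stop_time_s):
        self.trajectory = trajectory      # list of trajectory points
        self.stop_index = stop_index      # index of the stop point in the list
        self.stop_time_s = stop_time_s    # required standstill time in seconds
        self.arrival_time = None          # set when the vehicle reaches the stop

    def points_for_controller(self, now_s, vehicle_at_stop):
        """Return the part of the trajectory the controller may follow at time now_s."""
        if vehicle_at_stop and self.arrival_time is None:
            self.arrival_time = now_s
        waited = (
            self.arrival_time is not None
            and now_s - self.arrival_time >= self.stop_time_s
        )
        if waited:
            # Stop time elapsed: release the full trajectory.
            return self.trajectory
        # Otherwise send the trajectory only up to the stop point.
        return self.trajectory[: self.stop_index + 1]


points = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0), (15.0, 0.0), (20.0, 0.0)]
buffer = StopBuffer(points, stop_index=2, stop_time_s=10.0)
visible = buffer.points_for_controller(now_s=0.0, vehicle_at_stop=False)
```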

II-C Control

The control stage receives the trajectory through the buffer. This feature allows a new trajectory to be created in real time (e.g. for a lane change or after a pedestrian detection). The implemented control law is defined in Equation 1:

| (1) |

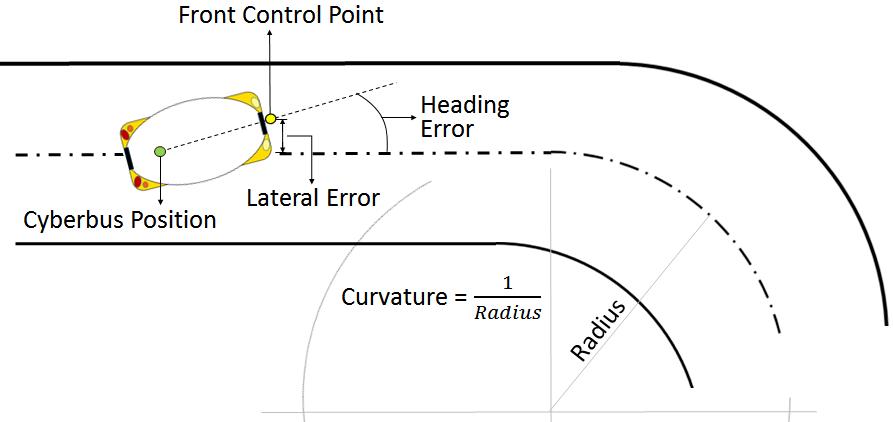

where the terms of Equation 1 are the curvature, the lateral error and the heading error (see Figure 8), and the three remaining values are the controller gains.
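The lateral and heading errors used by the controller can be computed from the vehicle pose and a reference segment as in the sketch below. It is an illustration under assumptions: the reference is taken as a straight segment, the sign conventions (lateral error positive when the vehicle is to the left of the path, heading error as vehicle heading minus path heading) and the function name `tracking_errors` are choices made for this example, and the control law itself is not reproduced.

```python
import math


def tracking_errors(vehicle_xy, vehicle_heading, seg_start, seg_end):
    """Return (lateral_error_m, heading_error_rad) relative to a straight reference segment."""
    dx = seg_end[0] - seg_start[0]
    dy = seg_end[1] - seg_start[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("reference segment has zero length")
    # Signed perpendicular distance: positive when the vehicle is left of the path.
    rx = vehicle_xy[0] - seg_start[0]
    ry = vehicle_xy[1] - seg_start[1]
    lateral = (dx * ry - dy * rx) / length
    # Heading difference wrapped to the interval [-pi, pi].
    diff = vehicle_heading - math.atan2(dy, dx)
    heading = math.atan2(math.sin(diff), math.cos(diff))
    return lateral, heading


lateral_error, heading_error = tracking_errors((5.0, 1.0), 0.1, (0.0, 0.0), (10.0, 0.0))
```

Wrapping the heading difference avoids a jump of 2π when the vehicle and path headings lie on opposite sides of the ±π boundary.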

II-D Actuation

The actuation stage receives the instructions from the control modules and performs the desired lateral and longitudinal actions on the vehicle. It manages the actuators of the CTS through the CAN protocol, to achieve the desired steering angle and speed. The modularity of the system allows an easy implementation in real platforms, as will be shown in the results section.

III CTS equipment at Inria: The Cyberbus

The Cyberbus (Figure 6) is the CTS prototype used at INRIA.

This prototype CTS was tested during a three-month demonstration, which showed that fully autonomous road vehicles are suitable for public transport [17]. These demonstrations used previously recorded routes, which could not be modified in real time.

For localization, a Simultaneous Localization and Mapping (SLAM) algorithm is used. It is capable of estimating the joint posterior probability over all past observations using real-time information for the reconstruction of a large environment, as explained in [18]. The environment data is collected by two Ibeo lasers mounted on the top front and rear parts of the Cyberbus (Figure 6).

Obstacle detection is also possible with the two Sick lasers located on the lower front and rear parts of the vehicle. These lasers are capable of detecting an obstacle within a 50 m range, so that possible collisions can be avoided. The acceleration is tracked by an IMU (located over the rear axle). Two incremental encoders are responsible for measuring the speed (attached to the motors in the rear axle) and a differential encoder tracks the steering angle of the wheels (over the front axle).

The Cyberbus is equipped with two computers. The first is the master, in charge of managing the steering, speed, obstacle detection and tracking, and control. The slave PC is in charge of executing the localization algorithms: it receives the information directly from the lasers, performs the SLAM and sends the result to the master PC.

Finally, a joystick and a touchscreen are part of the Human Machine Interface (HMI). The whole vehicle is powered by a 72 V supply made up of six Li-ion batteries. Two DC/DC converters step the voltage down to 24 V, which supplies most of the components of the vehicle. For safety, six emergency stop buttons and a Remote Radio Unit (RRU) can stop the vehicle immediately in case of emergency.

IV Tests and results validation

This section introduces the experimental validation of the proposed architecture. Several experiments were carried out at the INRIA-Rocquencourt facilities in order to validate the control architecture described, considering different scenarios with multiple stop points. The robustness of the system was tested through numerous autonomous runs with two Cyberbus vehicles. Since the experiments were performed in real scenarios, some requirements were set beforehand to guarantee safety, specifically that of vulnerable road users, based on CityMobil2 regulations [19]. The next subsection describes how these requirements were taken into account.

IV-A CTS Requirements for Perception and Control

CTSs can navigate through a whole space with the sole target of reaching the final destination safely. Functional requirements for both the control [19] and perception [20] layers were identified within the CityMobil2 project. These requirements were taken into account for the León exhibition. An open square of 40 x 27 meters, where pedestrians walk randomly in any direction, was used as the experimental driving area. Pedestrian safety was one of the key requirements when adapting the experimental platform to the driving scenario. The other critical point was the turning radius, which limited the potential areas where the Cyberbus could perform a U-turn. Based on this, the different driving scenarios are depicted in Figure 11.

IV-B Experimental Validation

The improvements of the local planning, tested on real platforms, are described in this section. Figure 9 shows the overall performance of the vehicle in our facilities, based on the path shown in Figure 2. To validate the stop point algorithm, the vehicle drove the same path, this time with four stop points added. Different zones were selected to this end: two in straight segments, one in a curve and another one inside the roundabout. The complete path is shown in Figure 9; the route is as follows:

- The Cybus starts the trajectory at the start point (as in Figure 2).

- The first stop point is set on the path (first point in Figure 9), with an associated stop time.

- The second stop point is set at the roundabout (second point in Figure 9), with an associated stop time.

- The third stop point is set at a curved segment (third point in Figure 9), with an associated stop time.

- The last stop point is set at the straight segment beside the pedestrian crossing, where the vehicle stops for its associated stop time.

- Finally, the vehicle successfully finishes the planned trajectory.

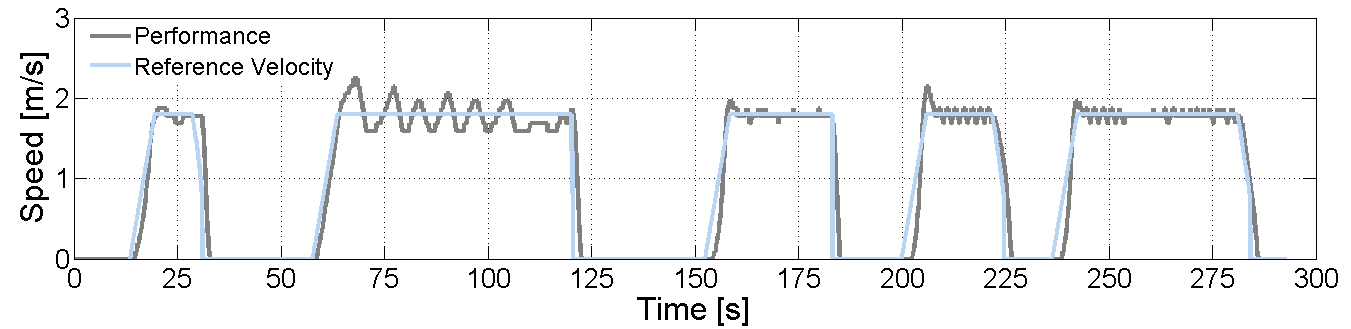

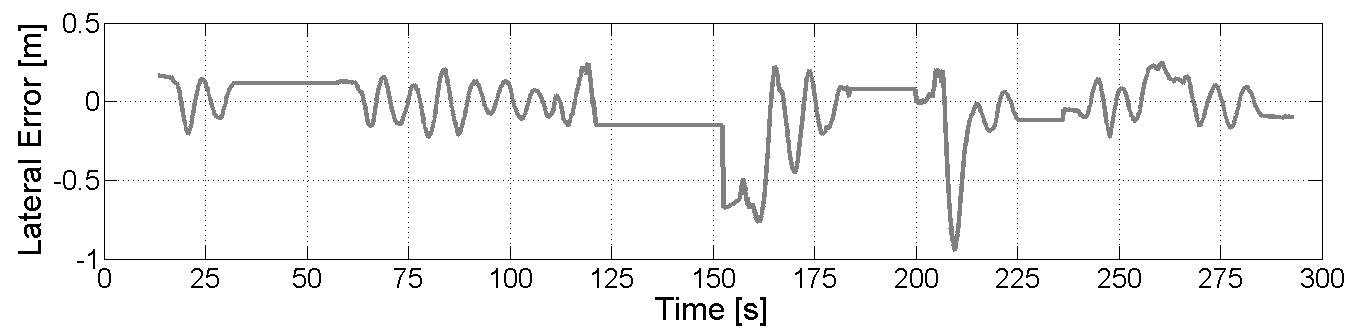

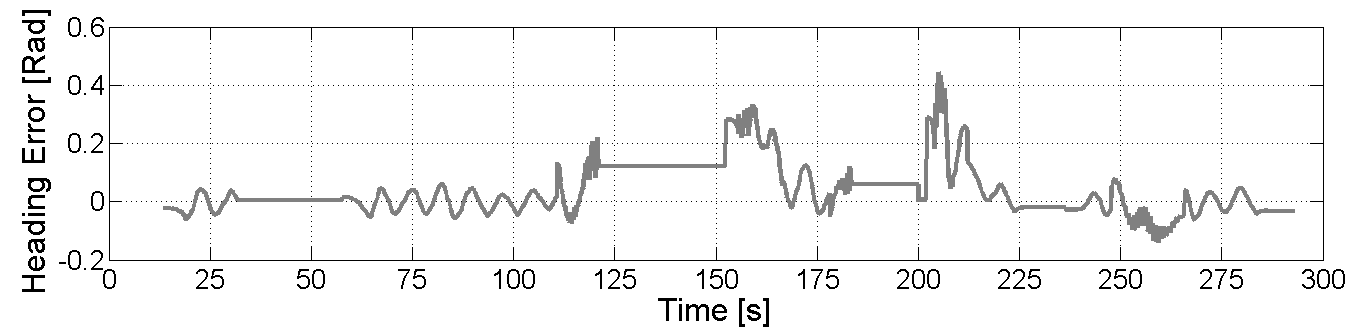

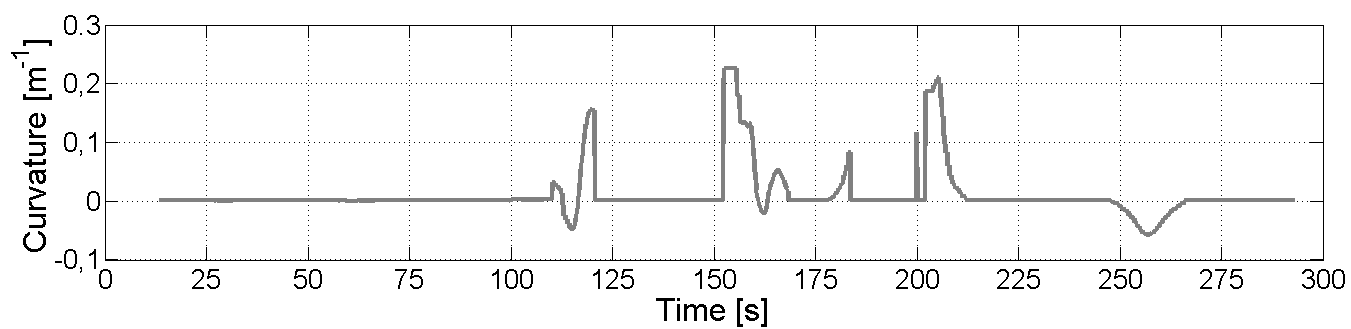

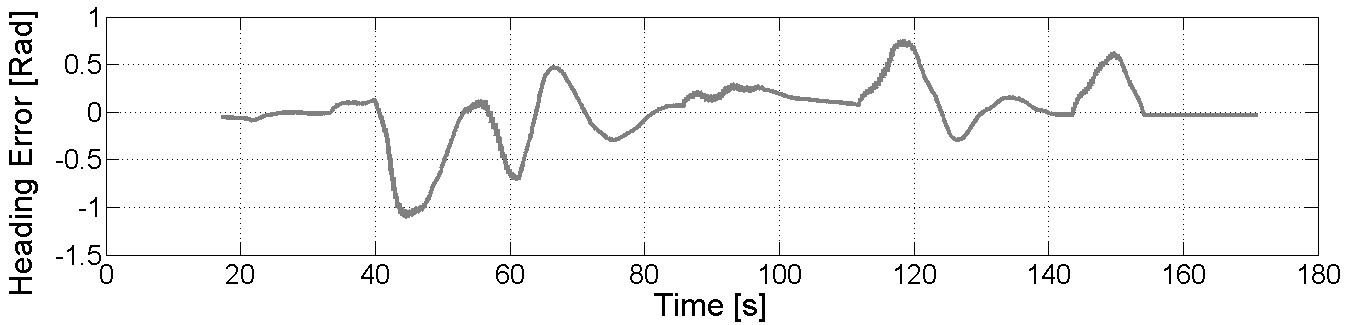

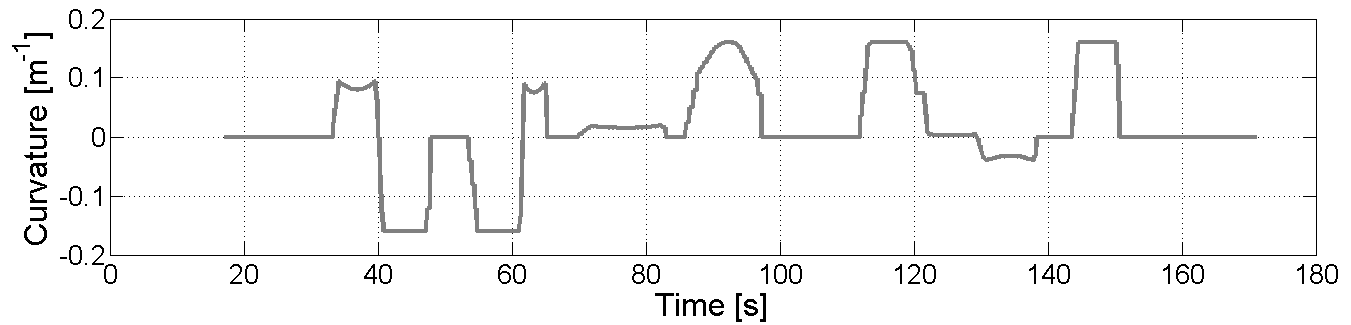

Figure 10 shows the programmed stop points and the trajectory achieved for the itinerary described in Fig. 9, together with the real speed of the vehicle. After the first stop, the actual speed presents some oscillations. This is because this part of the path is a downhill segment, so the electric motor is braking most of the time. In the other segments, the CTS reaches the desired velocity smoothly, reducing the speed only when a stop point is close. Figure 10 also shows the two errors used for the lateral control of the vehicle, as well as the curvature. It is important to notice that the errors stay constant while the vehicle is stopped at a stop point. Most of the time, the errors are close to zero. The average lateral error in curved segments is less than 0.5 m and the heading error stays between -0.2 and 0.2 rad. Finally, the curvature never reaches the steering limit of the vehicle, i.e. 0.48 1/m.

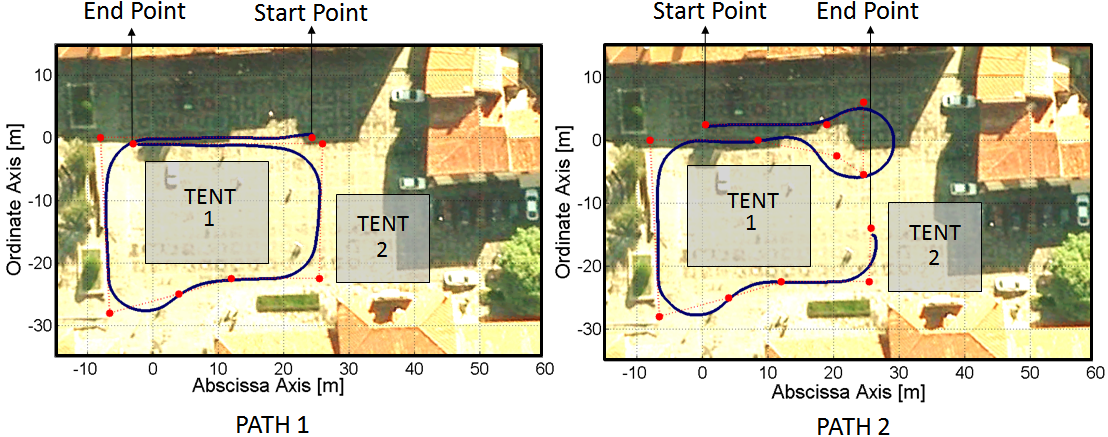

IV-C Showcase demonstration at León

Results obtained in the first showcase of the CityMobil2 project are presented in this section. Figure 11 shows two trajectories performed in a public open square. Two tents were located in the middle of the square for exhibitions related to the project, and the traffic on the adjacent street was regulated during the demonstration. Because the space was limited, two trajectories were carried out: a path around the big tent (left-hand side of Figure 11) and another performing a U-turn (right-hand side of the same figure). The first scenario shows how the global control points were defined (as explained in Section II); the blue line then shows the performance of the vehicle in autonomous driving.

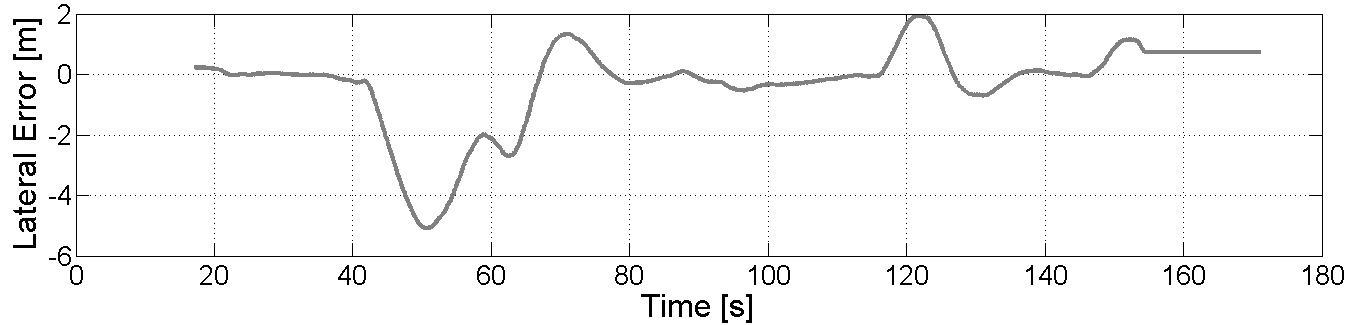

Figure 12 shows the vehicle performance. The lateral and heading errors remain small in straight segments, and only exceed the average of the first experiments around 60 seconds into the experiment. This is because the angle at this curve (the third one, at the bottom right of Figure 11) is more pronounced than the others, and at this time the vehicle is also descending from the sidewalk. Nevertheless, the oscillation is reduced by the control stage. Figure 11 shows another trajectory. In this case, due to the space limitations, the vehicle achieves the U-turn in around 13 meters. The maximum curvature of the CTS (its minimum turning radius) is reached, and the controller stabilizes the system when the vehicle exits the curve.

V Conclusions

In this paper, the control architecture for CTSs has been described in the framework of the FP7 project CityMobil2. Stop points were introduced and tested in real scenarios. Key stop points can be set throughout the circuit of the vehicle, improving the performance of the autonomous CTSs. They are an added feature of the local planning.

Two specific experiments were performed: a demonstration in our facilities and a CityMobil2 showcase at León (Spain). The results of these experiments are good; the performance of the vehicle is described in terms of lateral and heading errors, as well as curvature and longitudinal speed. The acceptability of this technology is one of the objectives of the project. In this demonstration more than 50 people tested our vehicles, with good impressions.

Future works will consider the introduction of vehicle-to-vehicle communications among CTSs in order to intelligently handle complex traffic situations such as intersections or roundabouts.

ACKNOWLEDGMENT

The authors thank the ARTEMIS project DESERVE and the FP7 project CityMobil2 for their support in the development of this work.

References

- [1] J. Naranjo, L. Bouraoui, R. García, M. Parent, and M. Sotelo, “Interoperable control architecture for cybercars and dual-mode cars,” IEEE Transactions on Intelligent Transportation Systems, vol. 10, pp. 146–154, 2009.

- [2] L. Bouraoui, S. Petti, A. Laouiti, T. Fraichard, and M. Parent, “Cybercar cooperation for safe intersections,” 2006 IEEE Intelligent Transportation Systems Conference, pp. 456–461, 2006.

- [3] D. González and J. Pérez, “Control architecture for cybernetic transportation systems in urban environments,” 2013 IEEE Intelligent Vehicles Symposium (IV), pp. 1119–1124, 2013.

- [4] M. Parent, “Cybercars, cybernetic technologies for the car in the city,” CyberCars project, 2002.

- [5] T. Xia, M. Yang, R. Yang, and C. Wang, “Cyberc3: A prototype cybernetic transportation system for urban applications,” IEEE Transactions on Intelligent Transportation Systems, vol. 11, pp. 142–152, 2010.

- [6] L. Martinez-Gomez and T. Fraichard, “Collision avoidance in dynamic environments: An ics-based solution and its comparative evaluation,” Robotics and Automation, 2009. ICRA ’09, pp. 100–105, 2009.

- [7] P. Bonnifait, M. Jabbour, and V. Cherfaoui, “Autonomous navigation in urban areas using gis-managed information,” International Journal of Vehicle Autonomous Systems, vol. 6, p. 83, 2008.

- [8] Z. Rong-hui, W. Rong-ben, Y. Feng, J. Hong-guang, and C. Tao, “Platform and steady kalman state observer design for intelligent vehicle based on visual guidance,” 2008 IEEE International Conference on Industrial Technology, pp. 1–6, 2008.

- [9] C. Premebida and U. Nunes, “A multi-target tracking and gmm-classifier for intelligent vehicles,” 2006 IEEE Intelligent Transportation Systems Conference, pp. 313–318, 2006.

- [10] J. Pérez, V. Milanés, T. de Pedro, and L. B. Vlacic, “Autonomous driving manoeuvres in urban road traffic environment: A study on roundabouts,” IFAC Proceedings Volumes, vol. 44, pp. 13 795–13 800, 2011.

- [11] J. Pérez, R. Lattarulo, and F. Nashashibi, “Dynamic trajectory generation using continuous-curvature algorithms for door to door assistance vehicles,” 2014 IEEE Intelligent Vehicles Symposium Proceedings, pp. 510–515, 2014.

- [12] S. Thrun, M. Montemerlo, H. Dahlkamp, D. Stavens, A. Aron, J. Diebel, P. Fong, J. Gale, M. Halpenny, G. Hoffmann, K. Lau, C. M. Oakley, M. Palatucci, V. Pratt, P. Stang, S. Strohband, C. Dupont, L.-E. Jendrossek, C. Koelen, C. Markey, C. Rummel, J. V. Niekerk, E. Jensen, P. Alessandrini, G. Bradski, B. Davies, S. Ettinger, A. Kaehler, A. Nefian, and P. Mahoney, “Stanley: The robot that won the darpa grand challenge,” J. Field Robotics, vol. 23, pp. 661–692, 2006.

- [13] D. Ferguson, T. Howard, and M. Likhachev, “Motion planning in urban environments,” Journal of Field Robotics, vol. 25, pp. 939–960, 2008.

- [14] A. Broggi, P. Medici, P. Zani, A. Coati, and M. Panciroli, “Autonomous vehicles control in the vislab intercontinental autonomous challenge,” Annu. Rev. Control., vol. 36, pp. 161–171, 2012.

- [15] G. Trehard, Z. Alsayed, E. Pollard, B. Bradai, and F. Nashashibi, “Credibilist simultaneous localization and mapping with a lidar,” 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 2699–2706, 2014.

- [16] R. Solea and U. Nunes, “Trajectory planning with velocity planner for fully-automated passenger vehicles,” 2006 IEEE Intelligent Transportation Systems Conference, pp. 474–480, 2006.

- [17] L. Bouraoui, C. Boussard, F. Charlot, C. Holguin, F. Nashashibi, M. Parent, and P. Resende, “An on-demand personal automated transport system: The citymobil demonstration in la rochelle,” 2011 IEEE Intelligent Vehicles Symposium (IV), pp. 1086–1091, 2011.

- [18] J. Xie, F. Nashashibi, M. Parent, and O. G. Favrot, “A real-time robust global localization for autonomous mobile robots in large environments,” 2010 11th International Conference on Control Automation Robotics & Vision, pp. 1397–1402, 2010.

- [19] J. Pérez, F. Charlot, and E. Pollard, “Guiding and control specifications -citymobil2-s,” CityMobil2 project (FP7), 2013.

- [20] E. Pollard, F. Charlot, F. Nashashibi, and J. Pérez, “Specification for obstacle detection and avoidance,” CityMobil2 project (FP7), 2013.