Deploying Foundation Model Powered Agent Services: A Survey

Abstract

Foundation model (FM) powered agent services are regarded as a promising solution to develop intelligent and personalized applications for advancing toward Artificial General Intelligence (AGI). To achieve high reliability and scalability in deploying these agent services, it is essential to collaboratively optimize computational and communication resources, thereby ensuring effective resource allocation and seamless service delivery. In pursuit of this vision, this paper proposes a unified framework aimed at providing a comprehensive survey on deploying FM-based agent services across heterogeneous devices, with the emphasis on the integration of model and resource optimization to establish a robust infrastructure for these services. Particularly, this paper begins with exploring various low-level optimization strategies during inference and studies approaches that enhance system scalability, such as parallelism techniques and resource scaling methods. The paper then discusses several prominent FMs and investigates research efforts focused on inference acceleration, including techniques such as model compression and token reduction. Moreover, the paper also investigates critical components for constructing agent services and highlights notable intelligent applications. Finally, the paper presents potential research directions for developing real-time agent services with high Quality of Service (QoS).

Index Terms:

Foundation Model, AI Agent, Cloud/Edge Computing, Serving System, Distributed System, AGI.I Introduction

The rapid advancement of artificial intelligence (AI) has positioned foundation models (FMs) as a cornerstone of innovation, driving progress in various fields such as natural language processing, computer vision, and autonomous systems. These models, characterized by their vast parameter spaces and extensive training on broad datasets, incubate numerous applications from automated text generation to advanced multi-modal question answering and autonomous robot services [1]. Some popular FMs, such as GPT, Llama, ViT, and CLIP, are pivotal in pushing the boundaries of AI capabilities, offering sophisticated solutions for processing and analyzing large volumes of data across different formats and modalities. The continuous advancement of FMs significantly enhances AI’s ability to comprehend and interact with the world in a manner akin to human cognition.

However, traditional FMs are typically confined to providing question-and-answer services and generating responses based on pre-existing knowledge, often lacking the ability to incorporate the latest information or employ advanced tools. FM-powered agents are designed to enhance the capability of FM. These agents are incorporated with dynamic memory management, long-term task planning, advanced computational tools, and interactions with the external environment [2]. For example, FM-powered agents can call different external APIs to access real-time data, perform complex calculations, and generate updated responses based on the most current information available. This approach improves the reliability and accuracy of the responses and enables more personalized interactions with users.

Developing a serving system with low latency, high reliability, high elasticity, and minimal resource consumption is crucial for delivering high-quality agent services to users. Such a system can efficiently manage varying query loads while maintaining swift response and reducing resource costs. Moreover, constructing a serving system on heterogeneous edge-cloud devices is a promising solution to leverage the idle computational resources at the edge and the abundant computational clusters available in the cloud. The collaborative inference of edge-cloud devices can enhance overall system efficiency by dynamically allocating tasks to various edge-cloud machines based on computational load and real-time network conditions.

Although many research works investigate edge-cloud collaborative inference for small models, deploying FMs under this paradigm for diverse agent services still faces several severe challenges. First, the fluctuating query load severely challenges the model serving. The rapidly growing number of users want to experience intelligent agent services with FMs. For example, as of April 2024, ChatGPT has approximately 180.5 million users, with around 100 million of users being active weekly [3]. These users access the service at different times, resulting in varying request rates. An elastic serving system should dynamically scale the system capacity according to the current system characteristics. Secondly, the parameter space of an FM is particularly large, reaching the scale of several hundred billion, which is a significant challenge to the storage system. However, the storage capacity of edge devices and the consumer GPU is limited, making it unable to accommodate an entire model. The large number of parameters results in significant inference overhead and long execution latency. Therefore, it is necessary to design model compression methods and employ different parallelism approaches in diverse execution environments. In addition, users have different service requirements and inputs in different applications. For example, some applications prioritize low latency, while others prioritize high accuracy. This necessitates dynamic resource allocation and adjustment of the inference process. Moreover, AI agents need to deal with lots of hard tasks under complex environments, which require effective management of large-scale memory, real-time processing of updated rules, and specific domain knowledge. Additionally, agents possess distinct personalities and roles, necessitating the design of an efficient multi-agent collaboration framework.

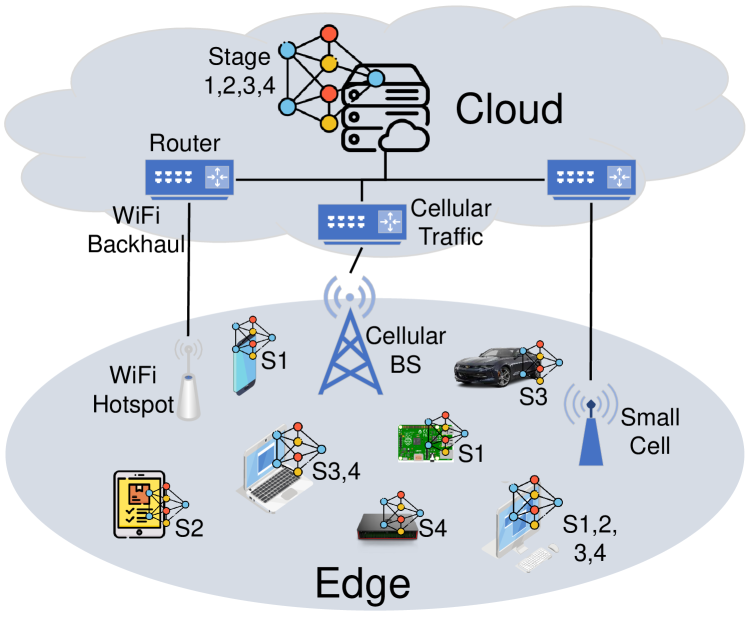

To address the aforementioned challenges and promote the development of the real-time FM-powered agent service, this survey proposes a unified framework and investigates various research works from different optimization aspects. This framework is shown in Figure 1. The bottom layer is the execution layer, where edge or cloud devices execute an inference with FMs. Joint computation optimization, I/O optimization, and communication optimization are applied to accelerate inference and promote the building of a powerful infrastructure for FMs. The resource layer, comprised of two components, facilitates the deployment of the model on various devices. Parallelism methods design different model splitting and placement strategies to utilize the available resources and improve throughput collaboratively. Resource scaling dynamically adjusts the hardware resources during runtime based on query load and resource utilization, thereby improving overall scalability. The model layer focuses on the optimization of FMs. Two lightweight methods, including model compression and token reduction, are specifically designed to promote the widespread adoption of FMs. Based on these FMs, many AI agents are constructed to accomplish various tasks. Numerous methods have been proposed to enhance the four key components of agents, which encompass the multi-agent framework, planning capabilities, memory storage, and tool utilization. Ultimately, leveraging the aforementioned techniques, all kinds of applications can be developed to deliver intelligent and low-latency agent services to users.

I-A Previous Works

Many research works focus on system optimization to deploy machine learning models in edge-cloud environments. KACHRIS reviews some hardware accelerators for Large Language Models (LLMs) to address the computational challenges [4]. Tang et al. summarize scheduling methods designed for optimizing both network and computing resources [5]. Miao et al. present some acceleration methods to improve the efficiency of LLMs [6]. This survey includes system optimizations, such as memory management and kernel optimization, as well as algorithm optimizations, such as architectural design and compression algorithms, to accelerate model inference. Xu et al. focus on the deployment of Artificial Intelligence-Generated Content (AIGC), and they provide an overview of mobile network optimization for AIGC, covering the processes of dataset collection, AIGC pre-training, AIGC fine-tuning, and AIGC inference [7]. Djigal et al. investigate the application of machine learning and deep learning techniques in resource allocation for Multi-access Edge Computing (MEC) systems [8]. The survey includes resource offloading, resource scheduling, and collaborative allocation. Many research works propose different algorithms to optimize the design of FMs and agents. [1], [9] and [10] present popular FMs, especially LLMs. [11], [12] and [13] summarizes model compression and inference acceleration methods for LLM. [2], [14], and [15] review the challenges and progress for the development of agents.

In summary, the above studies either optimize edge-cloud resource allocation and scheduling for small models or design acceleration or efficiency methods for large FMs. To the best of our knowledge, this paper is the first comprehensive survey to review and discuss the deployment of real-time FM-powered agent services in heterogeneous devices, a research direction that has gained significant importance in recent years. We design a unified framework to fill this research gap and review current research works from different perspectives. This framework not only delineates essential techniques for the deployment of FMs but also identifies key components of FM-based agents and corresponding system optimizations specifically tailored for agent services.

I-B Contribution

This paper presents a comprehensive survey on the deployment of FM-powered agent services in edge-cloud environments, covering optimization approaches spanning from hardware to software layers. For the convenience of readers, we provide an outline of the survey in Figure 2. The contributions of this survey are summarized in the following aspects:

-

•

This survey proposes the first comprehensive framework to provide a deep understanding of the deployment of FM-powered agent services within the edge-cloud environment. Such a framework holds the potential to foster the advancement of AGI greatly.

-

•

From a low-level hardware perspective, we present research on various runtime optimization methods and resource allocation and scheduling methods. These techniques are designed to establish a reliable and flexible infrastructure for FMs.

-

•

From a high-level software perspective, we elucidate research efforts focused on model optimization and agent optimization, thereby offering diverse opportunities for building intelligent and lightweight agent applications.

The remainder of this article is organized as follows: Section II presents some low-level execution optimization methods. Section III describes resource allocation and parallelism mechanisms. Section IV discusses current FMs, as well as techniques for model compression and token reduction. Section V illustrates key components for agents. Section VI presents batching methods and some related applications. Finally, Section VII discusses the future works and draws conclusions.

II Execution Optimization

II-A Computation Optimization

Edge deployment of FMs poses severe challenges due to the heterogeneity of edge devices in terms of computing ability, hardware architecture, and communication bandwidth [16]. It is important to design computation optimization methods for different devices to accelerate model inference. Figure 3 provides an overview of this chapter. This diagram provides a global perspective for algorithm design (e.g., computation optimization). It also depicts the components of an edge computing system, including heterogeneous backends and the network. Table I shows some commonly used hardware devices at the edge. Traditionally, devices with CPUs have been deployed at the edge environment. However, due to their limited parallel computing capabilities and memory constraints, various specialized accelerators are designed for Deep Learning (DL) tasks. FPGAs have gained widespread attention for their programmable and parallel computing capabilities. ASICs, while non-programmable after manufacture, offer high speed and low power consumption. Many edge devices, such as personal computers and smartphones, have different computational resources, such as GPU and CPU. Consequently, many approaches focus on optimizing computational efficiency by jointly utilizing these resources for model inference. In-memory computing, with non-Von-Neumann architecture, has emerged as a prominent research area because its intrinsic parallelism can significantly reduce the I/O latency and improve computational efficiency. To enhance the understanding of different devices, we provide some detailed information about different hardware devices as below.

| Resource Type | Features and Functions |

|---|---|

|

Field Programmable Gate Arrays

(FPGAs) |

FPGAs are integrated circuits that can be reconfigured at the hardware level after manufacturing. It can have higher performance and lower latency than GPU accelerators. |

|

Application-Specific Integrated Circuit

(ASIC) |

ASIC is a customized accelerator for a specific use scenario. It is not programmable after manufacturing and offers computing comparable to FPGAs. The producer decides the specifications. |

|

In-memory Compute

(IMC) |

IMC is a non-von-Neumann method, able to conduct computation in the memory. For instance, IMC utilizes physical processes to execute addition and multiplication, thus accelerating matrix-vector computing. |

|

Central Processing Unit

(CPU) |

CPU is a General-purpose processor; It executes basic operations and instructions and can run lowly parallel computing. |

|

Graphics Processing Unit

(GPU) |

GPU excels in parallel computing and is dominantly used as accelerators for DL. |

II-A1 FPGAs

FPGAs are widely deployed in edge computing applications and are re-configurable hardware devices that are efficient in power consumption. FMs are built upon Transformers, which contain non-linear computation components, such as layer normalization, SoftMax, and non-ReLU activation functions. Serving these models requires specific accelerator designs. On the other hand, matrix multiplications are conducted during the inference of FMs. Their substantial computational complexity poses challenges for optimizing FPGA-based accelerators. To solve these problems, a specialized hardware accelerator is designed for Multi-Head Attention (MHA) and feed-forward networks (FFN) in Transformers [17]. It incorporates a matrix partitioning strategy to optimize resource sharing between Transformer blocks, a computation flow that maximizes systolic array utilization, and optimizations of nonlinear functions to diminish complexity and latency. MnnFast provides a scalable architecture specifically for Memory-augmented Neural Networks [18]. It incorporates a column-based streaming algorithm, zero-skipping optimization, and a dedicated embedding cache to tackle the challenges posed by large-scale memory networks. NPE offers software-like programmability to developers [19]. Unlike previous FPGA designs that implement specialized accelerators for each nonlinear function, NPE can be easily upgraded for new models without requiring extensive reconfiguration, making it a more cost-effective solution. DFX is a multi-FPGA system that optimizes the latency and throughput for text generation. It leverages model parallelism and an optimized, model-and-hardware-aware dataflow to handle the sequential nature of text generation. It demonstrates the superior speed and cost-effectiveness of FPGA compared to GPU implementations[20].

Additionally, the development of Transformer-OPU (Overlay Processor)[21] provides a flexible and efficient FPGA-based processor that expedites the computation of Transformer networks. Moreover, implementing a tiny Transformer model through a Neural-ODE (Neural Ordinary Differential Equation) approach [22] leads to a substantial reduction in model size and power usage, making it ideal for edge computing devices in Internet-of-Things (IoT) applications. FlightLLM [23] addresses the computational efficiency, memory bandwidth utilization, and compilation overheads on FPGAs for LLM inference. It includes a configurable Digital Signal Processor (DSP) chain optimized for varying sparsity patterns in LLMs and always-on-chip activations during decoding and a length adaptive compilation method for dynamic sparsity patterns and input lengths.

In summary, the above designs for FPGA-based FM inference focus on several key aspects: enhancing computational efficiency through specialized architectures such as systolic arrays and DSP chains, optimizing memory usage via techniques like matrix partitioning and dedicated caches, reducing latency through efficient dataflows and model-and-hardware-aware optimizations, and improving adaptability and programmability to accommodate diverse and evolving model structures and non-linear functions.

II-A2 ASIC

An ASIC is an integrated circuit chip customized for a specific application. For example, router ASICs can handle packet processing and signal modulation. It often includes microprocessors, memory, and other components as a System-on-Chip (SoC). Recent advancements in ASIC design significantly enhance the performance and efficiency of attention mechanisms, which is crucial for applications across NLP and CV. The [24] accelerator employs algorithmic approximations and a prototype chip to significantly enhance energy efficiency and processing speed. Essentially, the attention mechanism is a content-based search to evaluate the correlation between the currently processed token and previous tokens. addresses the inefficiency of matrix-vector multiplication in the self-attention mechanism, which is sub-optimal for the content-based search, by implementing an efficient greedy candidate search method. Similarly, ELSA (Efficient, Lightweight Self-Attention) [25] tackles the quadratic complexity of self-attention by selectively filtering out less important relations in self-attention and implements an ASIC to achieve high energy efficiency.

SpAtten[26] prunes Transformer models at both the token and head levels and reduces the model size with quantization to minimize the computational and memory demands of Transformers. Sanger[27] framework enables sparse attention mechanisms through a reconfigurable architecture that supports dynamic software pruning and efficient sparse operations. Additionally, the Energon[28] co-processor, working together with other FM accelerators, introduces a dynamic sparse attention mechanism that uses a mix-precision multi-round filtering algorithm to optimize query-key pair evaluations. The above ASIC solutions demonstrate significant advancements in speed and energy efficiency compared to traditional devices while still preserving high accuracy. They pave the way for real-time, resource-efficient implementations for complex FMs.

II-A3 In-memory Compute

In-memory computing (IMC) is an emerging computational paradigm performing computational tasks directly within memory, eliminating frequent I/O requirements between processors and memory units. IMC is a scalable and energy-efficient solution to handle long sequence data in Transformers. [29] introduces ATT, a fault-tolerant Resistive Random-access Memory (ReRAM) accelerator specifically designed for attention-based neural networks. This accelerator capitalizes on the high-density storage capabilities and low leakage power of ReRAM to address the compatibility issues between traditional neural network architectures and the complex data flow of attention mechanisms. ReTransformer[30] is a ReRAM-based IMC architecture for Transformers. It accelerates the scaled dot-product attention mechanism, utilizes a matrix decomposition technique to avoid storing intermediate results, and designs the sub-matrix pipelines of MHA.

The iMCAT utilizes a combination of crossbar arrays to store the matrix in the memory array and uses Content Addressable Memories (CAM) to overcome the significant memory and computational bottlenecks in processing long sequences with MHA [30]. Recent works design different optimization methods to deploy computationally intensive Transformer models on edge AI accelerators [31]. The Google TPU, categorized as ASIC and IMC, is widely used in edge and cloud computing. Large Transformer models can be executed efficiently on the Coral Edge TPU by optimizing the computational graph and employing quantization techniques, ensuring real-time inference with minimal energy consumption. The techniques employed in IMC, including matrix decomposition and quantization, reduce the computational resources required to serve FMs, thereby facilitating the deployment of FMs at the edge.

II-A4 CPU & GPU

Current optimization algorithms, such as CPU-GPU hybrid computation, are hardware-independent and can be applied to various backends. Different methods are designed to accelerate Transformer inference by optimizing the MHA, FFN, skip connections, and normalization modules, which are identified as critical bottlenecks in Transformer architectures [32]. [33] aims to optimize the execution time of the SoftMax layer by decomposing it into multiple sub-layers and then fusing them with adjacent layers. LLMA [34] leverages the overlap between the text generated by LLMs and existing reference texts to enhance computational parallelism and speed up the inference process without compromising output quality. These algorithms are hardware-agnostic and can be applied to various backends besides CPU and GPU. UltraFastBERT optimized inference by activating only a fraction of the available neurons, thereby maintaining competitive performance levels [35]. Intel CPU clusters can serve LLMs by adopting the algorithms [36], such as quantization and optimized kernels, specifically designed to accelerate computations on CPUs.

Many optimizations have been proposed by coordinately utilizing GPUs and CPUs to improve the efficiency and speed of Transformer models. Similarly, [37] introduces PowerInfer, a high-speed LLM inference engine that leverages the high locality and power-law distribution in neuron activation to reduce GPU memory demands and CPU-GPU data transfers. [38] aims to reduce latency by coordinating CPU and GPU computing while mitigating the I/O bottlenecks by overlapping the data processing and I/O time. [39] utilizes a single GPU to serve LLMs, prioritizing throughput at the expense of latency. It designs a scheduling algorithm to schedule model parameters, KV caches, and activations between CPU, GPU, and disk. These advancements focus on hardware-independent algorithms and CPU-GPU collaborative inference strategies, signifying a robust movement towards more efficient FMs.

II-B Memory Optimization

II-B1 Vanilla FMs

Besides computational costs, memory overhead is another major challenge in deploying LLMs at resource-constrained edge devices. The inference process of the LLM involves two key stages: the prefill and decode phases. The prefill phase is responsible for creating the KV cache based on the prompts, while the decode phase focuses on generating the subsequent token autoregressively. The first stage is compute-intensive, and the primary challenge of the decoding stage lies in the memory wall. This barrier prevents the loading of the vast parameters associated with LLMs and the maintenance of the KV cache throughout the inference process. Consequently, considerable research effort has focused on memory scheduling, utilizing multiple storage levels to execute a single model. For example, jointly optimizing CPU and GPU memory usage makes it possible to deploy LLMs on personal computers, smartphones, and other weak devices.

Contextual sparsity, which refers to the input-dependent activation of a small subset of neurons in LLMs, has been exploited to reduce computational and memory costs. [40] proposes a method to predict contextual sparsity on the fly and an asynchronous, hardware-aware implementation to accelerate LLM inference. [41] explores storing model parameters in flash memory and loading only the required subset during inference, considering bandwidth, energy constraints, and read throughput.

Several studies have focused on optimizing the attention mechanism in LLMs to reduce I/O costs. [42] introduces the Multi-Query Attention to accelerate decoding by reducing memory bandwidth requirements during incremental inference. [43] proposes Grouped-Query Attention (GQA), an intermediate between MHA and Multi-Query Attention (MQA), achieving a balance between quality and speed. [44] introduces PagedAttention, which is inspired by virtual memory and paging techniques, to efficiently manage key-value cache memory and integrate it into vLLM, a high-throughput LLM serving system. [45] proposes FlashAttention, an I/O-aware attention algorithm that reduces memory accesses between High Bandwidth Memory (HBM) and on-chip Static Random-Access Memory (SRAM) in GPU, which avoids fully loading the large attention matrix, reducing I/O overhead significantly. [46] further improves FlashAttention to enhance efficiency and scale Transformers to longer sequence lengths by strategically reducing the number of non-matrix-multiplication operations and paralleling the sequence dimension. This adjustment enables improved resource utilization across the GPU thread block. FlashDecoding++ [47] introduces asynchronized partial parallel SoftMax. SoftMax requires subtracting a value from all input numbers before exponential computing to avoid overflow of the denominator. The subtracted value is usually the maximum input value, so synchronization is required to obtain this maximum value when computing softmax in parallel. However, FlashDecoding++ can avoid this synchronization by utilizing a unified max value pre-decided according to the statistical LLM-specified input distribution to the SoftMax.

BMInf[48] introduces the Big Model Inference and Tuning toolkit, which employs model quantization, parameter-efficient tuning, and CPU-GPU scheduling optimization to reduce computational and memory costs. [49] proposes Splitwise, a technique that schedules the prompt computation and token generation phases to different machines, optimizing hardware utilization and overall system efficiency. FastServe is a distributed serving system that optimizes job completion time for LLMs in interactive AI applications [50]. [51] introduces SpecInfer, a system that accelerates generative LLM serving through tree-based speculative inference and verification. LLMCad is an innovative on-device inference engine specifically designed for efficient generative NLP tasks [52]. It executes LLM on weak devices with limited memory capacity by utilizing a compact LLM that resides in memory to generate tokens and a high-precision LLM for validation.

II-B2 MoE FMs

Mixture-of-Experts (MoE), replacing an FFN with a router and multiple FFNs, is a promising approach to enhance the efficiency and scalability of LLMs. However, deploying MoE-LLMs presents challenges in memory scheduling because of their huge number of parameters and the uncertain choices of experts, particularly in resource-constrained environments. Recent research has addressed these challenges through novel architecture designs, model compression techniques, and efficient inference engines. [53] proposes DeepSpeed-MoE, an end-to-end solution for training and deploying large-scale MoE models. [54] introduces EdgeMoE, an on-device inference engine designed for MoE-LLMs that loads popular experts to GPU to prioritize memory and computational efficiency. [55] proposes a novel expert offloading strategy utilizing the intrinsic properties of MoE-LLMs. It uses Least Recently Used (LRU) caching to store experts since certain experts are reused across consecutive tokens. It also accelerates the loading process by predicting the selection of experts for future layers based on the hidden states of earlier layers.

Several studies have focused on developing efficient serving systems and inference engines for MoE-LLMs. [56] introduces MOE-INFINITY, an efficient MoE-LLMs serving system that implements cost-efficient expert offloading through activation-aware techniques, significantly reducing latency overhead and deployment costs. [57] proposes Fiddler, a resource-efficient inference engine that orchestrates CPU and GPU collaboration to accelerate the inference of MoE-LLMs in resource-constrained settings. Furthermore, [58] introduces Pre-gated MoE, an algorithm-system co-design approach that employs a novel pre-gating function to enhance the inference capabilities of MoE-LLMs by enabling more efficient activation of sparse experts and reducing the memory footprint. These advancements in MoE-LLMs deployment and inference engines demonstrate the ongoing efforts to make these powerful models more accessible and efficient across various computing environments.

II-C Communication Optimization

In addition to computation and memory optimization, communication overhead is another major challenge in deploying large models within the edge-cloud environment. The heterogeneity of edge devices and communication channels necessitates a scheduler for computation and network resources when executing FM tasks across different devices.

[59] introduces an “Intelligence-Endogenous Management Platform” for Computing and Network Convergence (CNC), which efficiently matches the supply and demand within a highly heterogeneous CNC environment. The CNC brain is prototyped using a deep reinforcement learning model. It theoretically comprises four key components: perception, scheduling, adaptation, and governance, collectively supporting the entire CNC lifecycle. Specifically, Perception perceives the incoming service request and real-time computing resources. Scheduling assigns the workload to heterogeneous computing nodes in a heterogeneous network. Adaptation is adapting to dynamic resources by ensuring the continuity of services through backup measures, and Governance is the self-governed decentralized computing nodes.

[60] discusses the integration of LLMs into 6G vehicular networks, focusing on the challenges and solutions related to computational demands and energy consumption. It proposes a framework where vehicles handle initial LLM computations locally and offloads more intensive tasks to Roadside Units (RSUs), leveraging edge computing and 6G networks’ capabilities. The authors formulate a multi-objective optimization framework that aims to minimize the cumulative cost incurred by vehicles and RSUs in processing computational tasks, including the cost associated with communication. LinguaLinked[61] is a system to deploy LLMs on distributed mobile devices. The high memory and computational demands of these models typically exceed the capabilities of a single mobile device. It utilized load balancing and ring topology to optimize the delay of computation and communication while maintaining the original model structure. The results demonstrate improvements in inference throughput on mobile devices. [62] presents MegaScale, a production system designed to train and deploy LLMs across over 10,000 GPUs. The synchronization of model parameters and gradients across GPUs can become a bottleneck as the number of GPUs increases. MegaScale addresses communication challenges through a combination of parallelism strategies, overlapping techniques, and network optimizations, resulting in improved training efficiency and fault tolerance [63].

Semantic communication enables much more efficient utilization of limited network resources at the edge by only transmitting the essential semantic information needed for communication purposes. [64] investigates multiple access designs to facilitate the coexistence of semantic and bit-based transmissions in future networks. The authors propose a heterogeneous semantic and bit communication framework where an access point simultaneously sends semantic and bit streams to a semantics-interested user and a bit-interested user. [65] presents a framework for semantic communications enabled by computing networks, aiming to provide sufficient computational resources for semantic processing and transmission. This framework leverages computing networks to support semantic communication. It introduces key techniques to optimize the network, such as semantic sampling and reconstruction, semantic-channel coding, and semantic-aware resource allocation and optimization based on cloud-edge-end computing coordination. Two use cases, an end-cloud computing-enabled video transmission system, and a semantic-aware task offloading system, are provided to demonstrate the advantages of the proposed framework.

II-D Integrated Frameworks

With the emergence of computational and memory optimization methods, numerous integrated frameworks have integrated these techniques, supporting various LLMs and lowering the barriers to LLM deployment. These frameworks leverage CPU and GPU backends, enabling the widespread local execution of LLMs.

The most popular framework to deploy LLMs at the edge is the llama.cpp[66], which pioneered the open-source implementation of LLM execution using only C++. We provide an overview of various open-source frameworks and their respective features in Table II. The frameworks can be categorized into heterogeneous and GPU-only backends, ranging from mobile and embedded devices to high-end computing systems. Different suppliers offer specialized development platforms and APIs for their hardware products (i.e., CPU and GPU). CUDA (Compute Unified Device Architecture) is a parallel computing platform developed for NVIDIA GPU. ROCm (Radeon Open Compute platform) is a software platform for high-performance computing using AMD GPU. Metal is a low-overhead hardware-accelerated 3D graphic and compute shader API developed by Apple for its own GPUs. Some frameworks like Vulkan and OpenCL (Open Computing Language) facilitate development across different hardware and operating systems. Vulkan is an API for cross-platform access to GPUs for graphics and computing. OpenCL is a framework for writing programs that execute across heterogeneous backends, including CPU, GPU, FPGA, and other hardware.

| Framework | Features and Functions | Backend |

|---|---|---|

| LLaMA.cpp[66] | High-performance inference of LLaMA and other LLMs | CPU(x64, ARM), GPU(CUDA, ROCm, Metal), OpenCL |

| MLC-LLM[67] | Accelerating LLMs inference using Machine Learning Compilation | GPU(CUDA, ROCm, Metal, Vulkan, WebGPU), OpenCL |

| MNN-LLM[68] | Inference for Mobile Neural Network LLMs | CPU(x64, ARM), GPU(CUDA), OpenCL |

| FastChat[69] | Platform for both training and inference of LLM-based chatbots | CPU(x64), GPU(CUDA, ROCm, Metal) |

| DeepSpeed[70] | DeepSpeed-Inference integrates multiple parallelisms and custom kernels, communication, and memory optimizations. | CPU(x64, ARM), GPU(CUDA, ROCm) |

| OpenVINO[71] | Convert FMs and deploy them on Intel hardware | CPU(x64), GPU(OpenCL) |

| MLLM[72] | Mobile LLMs Inference | CPU(x86, ARM) |

| FP6[73] | Mixed precision for LLMs | GPU(CUDA) |

| Colossal-AI[74] | Distributed training and inference for LLMs using multiple parallelisms | GPU(CUDA) |

| Megatron-LM[75] | Efficient LLMs inference on GPUs with system-level optimizations | GPU(CUDA) |

| TensorRT-LLM[76] | NVIDIA TensorRT for LLMs inference | GPU(CUDA) |

Recently, several novel frameworks have been specifically designed for the deployment of LLM-based applications/agents. These frameworks provide abstract interfaces that facilitate the design of complex LLM-based applications, such as chain-of-thought reasoning and retrieval-augmented LLMs. LangChain is a powerful framework aimed at simplifying the development of applications that interact with LLMs [77]. It offers a set of tools and abstractions that enable developers to construct complex, dynamic workflows by chaining various operations, such as querying a language model, retrieving documents, or processing data. Parrot is a serving system for LLM applications that optimizes performance across multiple requests [78]. It introduces the concept of a semantic variable to define the input or output information of LLM requests. To enhance system performance, Parrot schedules requests based on a predefined execution graph and latency sensitivity, and it shares the key-value cache among requests to accelerate the prefilling process. SGLang is another LLM agent framework designed to efficiently execute complex language model programs [79]. It simplifies the programming of LLM applications by providing primitives for generation and parallelism control. Additionally, it accelerates the execution of these applications by reusing key-value caches, enabling faster constrained decoding and designing API speculative execution.

III Resource Allocation and Parallelism

III-A Resource Allocation and Scaling

Edge-cloud computing leverages strong cloud servers and distributed edge devices to handle tasks near the data source. As shown in Figure 4, adaptive resource allocation is crucial for achieving an optimal balance between system performance and cost in edge-cloud environments. Resource management facilitates the optimal utilization of system resources, including processing power, storage space, and network bandwidth. Adaptive algorithms are designed to automatically adjust resource configurations based on real-time workloads and environmental changes, ensuring optimal performance under varying conditions.

Despite their numerous advantages, several challenges also arise in edge-cloud environments. 1) In edge-cloud environments, resources need to be allocated and managed between the central cloud and multiple edge nodes. Distributed resource management may increase the system’s complexity and require more fine-grained scheduling and coordination mechanisms. 2) The load in edge computing scenarios is highly dynamic. Accurately predicting this query load and dynamically adjusting resource allocation demands is difficult. 3) To meet the real-time processing requirements, the system must quickly adapt to changes in execution environments and adjust processing strategies and resource allocations in time. 4) Modern computing environments provide a variety of computing resources (CPU, GPU, TPU, etc.). Optimizing the allocation of these heterogeneous resources for various models based on their computational requirements and current workloads is a complex task.

We have summarized previous research works on resource allocation and adaptive optimization in Table III. The collected research works are divided into two categories: optimization for model services in cloud environments and optimization for IoT applications in edge computing environments. 1) Cloud Environments. This task is to efficiently deploy, manage, and scale machine learning models in the cloud. The objective is optimizing resource usage, reducing computing costs, and achieving performance goals in the face of dynamic and unpredictable query loads. This includes resource provisioning algorithms and strategies for utilizing heterogeneous computing resources. 2) Edge Environments. Because of the unique architectures and application scenarios in edge environments, data needs to be processed closer to the user or the geographic location of the data source. This requires well-designed scheduling algorithms and fault tolerance and resilience mechanisms to minimize latency and mitigate network overhead.

| Scenario | Ref. | Year | Target | Method |

|---|---|---|---|---|

| Cloud | Clipper [80] | 17 | Accuracy, Latency and Throughput | Model Containers. |

| MArk [81] | 19 | Latency and Resource Cost | Predictive scaling. | |

| Nexus [82] | 19 | Latency and Throughput | Squishy bin packing. | |

| InferLine [83] | 20 | Latency and Resource Cost | 1. The low-frequency planner. 2. The high-frequency tuner. | |

| Clockwork [84] | 20 | Latency | DNN Workers. | |

| INFaaS [85] | 21 | Latency, Throughput and Resource Cost | Dynamic Model Variant Selection and Scaling. | |

| Morphling [86] | 21 | Resource Cost | Model-Agnostic Meta-Learning. | |

| Cocktail [87] | 22 | Accuracy, Latency and Resource Cost | 1. Resource controller. 2. Autoscaler. | |

| Kairos [88] | 23 | Throughput | Query-distribution mechanism. | |

| SHEPHERD [89] | 23 | Throughput and Utilization | HERD: Planner for Resource Provisioning. | |

| SpotServe [90] | 23 | Resource Cost | Device Mapper. | |

| Edge | Na et al. [91] | 18 | Throughput and Latency | Resource allocation scheme based on Lagrangian and Karush-Kuhn-Tucker conditions. |

| Avasalcai et al. [92] | 19 | Latency | Deployment policy module. | |

| Yang et al. [93] | 19 | Latency | 1. Multi-Dimensional Search and Adjust (MDSA) Algorithm. 2. Cooperative Online Scheduling (COS) Method. | |

| Tong et al. [94] | 20 | Latency and Energy consumption | Deep Reinforcement Learning (DRL) Approach. | |

| Xiong et al. [95] | 20 | Latency and Utilization | Improved deep reinforcement learning (DQN) Algorithm. | |

| CE-IOT [96] | 20 | Resource Cost | 1. Delay-Aware Lyapunov Optimization Technique 2. Economic-Inspired Greedy Heuristic. | |

| Chang et al. [97] | 20 | Latency and Energy consumption | Online Algorithm for Real-Time Decision-Making. | |

| LaSS [98] | 21 | Latency and Utilization | Model-driven approaches. | |

| Ascigil et al. [99] | 21 | Latency and Utilization | Decentralized Strategies. | |

| KneeScale [100] | 22 | Throughput, Latency and Utilization. | Adaptive Auto-scaling with Knee Detection. | |

| CEC [101] | 22 | Latency, Utilization and Accuracy | Control-Based Resource Pre-Provisioning Algorithm (PRCT). |

1) Cloud Environments. The following articles present various advanced solutions to optimize resource allocation in the cloud. Clipper uses model containers to encapsulate the model inference process in a Docker container [80]. Clipper supports replicating these model containers across the cluster to increase the system throughput and utilize additional hardware accelerators for serving. MArk is a generic inference system built on Amazon Web Services. It utilizes serverless functions to address service delays with horizontal and vertical scaling. Horizontal scaling expands the system by adding more hardware instances, while vertical scaling expands the system by increasing the resources of a single instance [81]. MArk uses a Long Short-Term Memory (LSTM) network for multi-step workload prediction. Leveraging the workload prediction results, MArk determines the instance type and quantity required to meet the SLOs using a heuristic approach. Nexus adopts the squishy bin packing method to batch different types of tasks on the same GPU, enhancing resource efficiency by considering the latency requirements and execution costs of each task [82]. It also merges multiple tasks into the same GPU execution cycle as long as the latency constraints are not violated. InferLine utilizes a low-frequency planner and a high-frequency tuner to manage the machine learning prediction pipeline effectively [83]. The low-frequency combinatorial planner finds the cost-optimal pipeline configuration under a given latency SLO. The high-frequency auto-scaling tuner monitors the dynamic request arrival pattern and adjusts the number of replicas for each model. Clockwork achieves an adaptive resource management framework through a fine-grained central controller over worker scheduling and resource management [84, 102]. DNN workers pre-allocate all GPU memory and divide it into three categories: workspace, I/O cache, and page cache. This can avoid repeated memory allocation calls and improve predictability. To cope with changes in query load, INFaaS adopts two automatic scaling mechanisms: vertical auto-scaling at the model level and horizontal auto-scaling at the Virtual Machine (VM) level [85]. The model auto-scaling is handled by the Model-Autoscaler, which decides each model variant’s scaling operations (replication, upgrade, or downgrade) by solving an integer linear programming (ILP) problem. The vertical auto-scaling adds a new VM if the utilization of any hardware resource exceeds a configurable threshold. Morphling utilizes model-agnostic meta-learning techniques to effectively navigate the high-dimensional configuration space, such as CPU cores, GPU memory, GPU timeshare, and GPU type [86]. It can significantly reduce the cost of configuration search and quickly find near-optimal configurations. Cocktail designs a resource controller to manage CPU and GPU instances in a cost-optimized manner and a load balancer to allocate queries to appropriate instances [87]. It also proposes an autoscaler that leverages predictive models to predict future request loads and dynamically adjusts the number of instances in each model pool based on the importance weight of the models. Kairos designs a query distribution mechanism to intelligently allocate queries of different batch sizes to different instances in order to maximize throughput [88]. Kairos transforms the query distribution problem into a minimum-cost bipartite matching problem and uses a heterogeneity coefficient to represent the relative importance of different types of instances. This allows for better balancing of the resource usage of different instance types. SHEPHERD comprises a planner (HERD), a request router, and a scheduler (FLEX) for each serving group [89]. HERD is responsible for partitioning the entire GPU cluster into multiple service groups. Besides, it performs periodic planning and informs each GPU worker of their designated service group and the models it must serve. SpotServe is a serverless LLM system that adjusts the GPU instances and updates the parallelism strategy flexibly [90]. It uses a bipartite graph matching algorithm (Kuhn-Munkres algorithm) to orchestrate model layers to hardware devices, thus maximizing the reusable model parameters and key-value caches.

2) Edge Environments. Given the limited and heterogeneous nature of edge computing resources, efficient management and scaling of these resources are crucial. The following papers present efficient solutions to improve the performance and efficiency of edge systems. To optimize resource allocation and interference management, [91] proposes a resource allocation scheme with Lagrangian and Karush-Kuhn-Tucker conditions. Based on the number of associated IoT end devices (IDs) and the queue length of the edge gateways (EGs), this scheme calculates the optimal resource allocation parameters and allocates resource blocks to each EG. A decentralized resource management technique is proposed in [92] to deploy latency-sensitive IoT applications on edge devices. The key design is the deployment policy module, which is responsible for finding a task allocation scheme that meets the application’s requirements based on the bids from the bidder nodes and deploying the application to the corresponding edge nodes. The authors in [93] introduce a novel method, called MDSA, to address the challenge of joint model partitioning and resource allocation for latency-sensitive applications in mobile edge clouds. They employ the task-oriented online scheduling method, COS, to collaboratively balance the workload across computing and network resources, thereby preventing excessive wait times. [94] designs a DRL algorithm to determine whether a task needs to be offloaded and to allocate computing resources efficiently. Tasks generated by mobile user equipment (UE) will be submitted to the task queue wait for execution. The algorithm models the task queue as a Poisson distribution. Then it uses the DRL method to select the suitable computing node for each task, learning the optimal strategy during the algorithm training process. Authors of [95] formulate the resource allocation problem using a Markov decision process model. They enhance the DQN algorithm by incorporating multiple replay memories to refine the training process. This modification involves using different replay memories to store experiences under different circumstances. The CE-IoT is an online cloud-edge resource provisioning framework based on delay-aware Lyapunov optimization to minimize operational costs while meeting latency requirements of requests [96]. The framework allows for online resource allocation decisions without prior knowledge of system statistics. The authors of [97] propose a dynamic optimization scheme that coordinates and allocates resources for multiple mobile devices in fog computing systems. They introduce a dynamic subcarrier allocation, power allocation, and computation offloading scheme to minimize the system execution cost through Lyapunov optimization. LaSS is a platform designed to run latency-sensitive serverless computations on edge resources [98]. LaSS employs model-driven strategies for resource allocation, auto-scaling, fair-share allocation, and resource reclamation to efficiently manage serverless functions at the edge. In [99], the authors discuss resource provisioning and allocation in FaaS edge-cloud environments, considering decentralized approaches to optimize CPU resource utilization and meet request deadlines. This paper design resource allocation and configuration algorithms with varying degrees of centralization and decentralization. KneeScale optimizes resource utilization for serverless functions by dynamically adjusting the number of function instances until reaching the knee point, where increasing resource allocation no longer provides significant performance benefits [100]. KneeScale utilizes the Kneedle algorithm to detect the knee points of functions through online monitoring and dynamic adjustment. CEC is a containerized edge computing framework that integrates workload prediction and resource pre-provisioning [101]. It adjusts the resource allocation of the container through the PRCT algorithm to achieve zero steady-state error between the actual response time and the ideal response time.

III-B Parallelism

III-B1 Parallelism type

| Model | Ref. | Type | Target | Design |

|---|---|---|---|---|

| LLM | Megatron-lm [103] | TP | Latency | Introduce an intra-layer model parallelism method. |

| DeepSpeed-inference [70] | TP, MP, DP | Latency and Throughput | 1. Leverages heterogeneous memory systems; 2. Custom GEMM operations, kernel fusion, and memory access optimizations. | |

| Pope et al. [104] | TP, MP, DP | Latency and Throughput | Get the best partitioning strategy for a given model size with specific application requirements. | |

| AlpaServe [105] | MP | Latency | Model parallelism & Statistically multiplexing multiple devices when serving multiple models. | |

| LightSeq [106] | Sequence parallelism | Throughput | Partitioning solely the input tokens. | |

| PETALS [107] | MP, DP | Throughput | Fault-tolerant inference algorithms and load-balancing protocols. | |

| SpotServe [90] | TP, MP, DP | Latency | 1. Dynamic Re-Parallelization. 2. Instance Migration Optimization. 3. Stateful Inference Recovery. | |

| SARATHI [108] | MP, DP | Throughput | Constructs a batch using a single prefill chunk and fills the remaining batch with decode requests. | |

| DistServe [109] | MP, DP | Latency | Assigns prefill and decoding computation to different GPUs. | |

| Small Model | Zhou et al. [110] | MP | Latency | Utilizes dynamic spatial partitioning and layer fusion techniques to optimally distribute the DNN computation across multiple devices. |

| DINA [111] | MP | Latency | A fine-grained, adaptive DNN partitioning and offloading strategy. | |

| CoEdge [112] | MP | Latency and Resource | Exploiting model parallelism and adaptive workload partitioning across heterogeneous edge devices. | |

| Li et al. [113] | MP | Throughput | Partitioning the DNN model on the IOT devices and a cloudlet. | |

| JellyBean [114] | MP | Throughput | Create optimized execution plans for complex ML workflows on diverse infrastructures. | |

| PipeEdge [115] | MP, DP | Throughput | An optimal partition strategy that accounts for the heterogeneity in computing power, memory capacity, and network bandwidth. | |

| PDD [116] | MP | Latency | A multipartitioning and offloading approach for streaming tasks with a Directed Acyclic Graph topology. | |

| B&B [117] | MP | Throughput | 1. Latency Modeling and Prediction. 2. A branch and bound solver for DNN partitioning. | |

| Li et al. [118] | MP | Latency | Fine-grained model partitioning mechanism with multi-task learning based A3C approach. | |

| MoEI [119] | MP | Latency | Optimizes model partition and service migration in mobile systems. |

TP: Tensor Parallelism; MP: Model Parallelism; DP: Data Parallelism.

The large FMs, such as GPT series and Llama series, and other transformer-based architectures, have significantly advanced the capabilities of AI applications. However, these models come with a substantial increase in computational requirements due to their size and complexity. Parallelism, including data parallelism (DP), model parallelism (MP), pipeline parallelism (PP), and tensor parallelism (TP), is a critical design aspect that addresses the scalability, efficiency, and performance challenges associated with deploying and serving large-scale machine learning models. As shown in Figure 5, data parallelism is a technique where data is split into smaller batches and distributed across multiple processors. Different machines can execute the inference simultaneously, thus significantly improving the throughput. Model parallelism aims at splitting the model across different processors, with each processor responsible for a portion of the model’s layers or parameters. This approach is useful for very large models that cannot fit into the memory of a single processor. Model parallelism can be more challenging to implement than data parallelism because it requires careful partitioning of the model and management of the dependencies between layers. Pipeline parallelism includes data parallelism and model parallelism, where the data passes through the model in a sequential manner, transitioning from one processor to another as it traverses different layers of the model. Tensor parallelism is a more fine-grained approach than model parallelism. It splits the individual operations within a layer across multiple processors. For example, if a layer performs a large matrix multiplication, the computation of this matrix can be distributed across multiple processors. This approach can be particularly useful for operations that are computationally intensive and can be easily parallelized, albeit at the cost of increased communication volume. Parallelism enhances performance by enabling simultaneous processing, significantly reducing execution time and increasing the scalability of applications to handle lots of users and more complex models.

There are still some challenges when designing a parallelism strategy for FM in the edge-cloud environment. 1. Memory Constraints: Large models may not fit into the memory of a single GPU, necessitating strategies to distribute them across multiple processing units. 2. Computational Load: The volume of computations required for a single request can be substantial, requiring efficient distribution of computational tasks. 3. Latency Requirements: Applications often require real-time responses, imposing strict latency constraints on the serving infrastructure. 4. Scalability: The ability to serve a large number of concurrent requests without degradation in performance is crucial for ensuring user’s satisfaction. 5. Heterogeneous environment: Edge devices can vary widely in their computation and communication capabilities, operating systems, and available software. A parallelism strategy must be adaptable to different platforms and capable of optimizing execution depending on the specific characteristics of each device.

III-B2 Auto-parallelism

Existing research works design different automatic parallelism methods in cloud and edge scenarios. As shown in Figure 6, the key problem lies in determining an optimal partitioning scheme of a model and strategically allocating each stage to an appropriate device. We summarize some automatic parallelism methods in Table IV. NVIDIA Triton and Tensorflow-serving are two famous inference frameworks for machine learning models [120, 121]. Megatron-lm introduces an efficient intra-layer model parallel approach (i.e., tensor parallelism) that allows for the training of transformer models with billions of parameters without requiring new compilers or significant library changes, fully implementable in PyTorch [103]. DeepSpeed-inference is a comprehensive system designed to address the challenges of efficiently executing transformer model inference at large scales with heterogeneous memory systems, custom GEMM operations, and more [70]. To understand and optimize the trade-offs (e.g., efficiency and latency) for LLM inference, Pope et al. develop an abstract and powerful partitioning framework designed for model parallelism [104]. It allows for dynamically analyzing the best partitioning strategy based on the specific requirements of a given model size and application scenario. AlpaServe presents a novel approach that harnesses the power of model parallelism to scale and statistically multiplex multiple devices, enabling efficient serving of multiple models [105]. This approach is particularly beneficial in scenarios where workloads are bursty and the demand fluctuates significantly. LightSeq designs a sequence parallelism method for long-context transformers that performs partitioning solely the input tokens [106]. PETALS explores cost-efficient methods for inference and fine-tuning of LLMs on consumer GPUs that are connected by the Internet [107]. This approach could enable the pooling of idle compute resources from multiple research groups and volunteers to run LLMs efficiently. It introduces two main innovations: fault-tolerant inference algorithms and load-balancing protocols. SpotServe is a novel system designed to serve generative LLMs on preemptible GPU instances in cloud environments [90]. Preemptible instances offer a cost-effective solution for accessing spare GPU resources at significantly reduced prices, although they can be interrupted or terminated at any moment. SpotServe addresses this challenge with dynamic re-parallelization, instance migration optimization and stateful inference recovery. SARATHI detects inference bubbles in pipeline parallelism caused by the imbalance (i.e., different execution times) between two distinct phases in the LLM: the prefill phase and the decode phase [108]. To tackle this issue, SARATHI addresses it through a decode-maximal batching approach and a chunked-prefills approach. This method divides a prefill request into equal-sized chunks and constructs a batch by utilizing a single chunk from the chunked-prefills and then populates the remaining batch slots with decode requests. DistServe enhances the performance of serving LLMs by disaggregating the prefill and decoding phases [109]. The disaggregation allows each phase to be assigned to different GPUs, eliminating interferences between prefill and decoding operations and allowing for tailored resource allocation and parallelism strategies for each phase.

Some research works design parallelism methods to deploy models on edge devices [122]. Zhou et al. present a framework for deploying deep neural network (DNN) inference on edge devices using model partitioning with containers [110]. This approach utilizes dynamic model partitioning and layer fusion techniques to optimally distribute the DNN computation across multiple devices, considering the available computational resources and network conditions. DINA is a system designed to optimize the deployment of DNN across edge devices in fog computing environments [111]. DINA employs a fine-grained, adaptive DNN partitioning and offloading strategy. By leveraging matching theory, DINA dynamically adapts the partitioning and offloading process based on real-time network conditions and device capabilities, aiming to minimize total latency for DNN inference tasks. CoEdge is designed to facilitate cooperative DNN inference across heterogeneous edge devices by dynamically partitioning the DNN inference workload with an adaptive algorithm [112]. Li et al. partition DNN models and utilize parallel processing techniques to meet the real-time requirements of various applications in mobile edge computing (MEC) environments [113]. They design an approximation algorithm and an online algorithm to determine the model partitioning and placement on a cloudlet as well as the local device. JellyBean aims to optimize machine learning inference workflows across heterogeneous computing infrastructures [114]. JellyBean performs model selection and worker assignment to reduce the costs associated with computing and network resources and meet the constraints of input throughput and accuracy. Recognizing the computational and memory limitations of edge devices, PipeEdge implements a strategic partitioning approach that considers the diversity in computational power, memory size, and network speed across different devices [115]. It designs a dynamic programming algorithm to find the optimal partitioning strategy for distributing the DNN inference tasks. PDD designs an efficient partitioning and offloading method based on greedy and dichotomy principles for DNNs with directed acyclic graph in streaming tasks [116]. B&B first introduces a prediction model for predicting both inference and transmission latency in distributed DNN deployments. They formulate the optimization problem for DNN partitioning and present a branch and bound solver to tackle this problem [117]. Li et al. investigate a multi-task learning approach with asynchronous advantage actor-critic (A3C) to optimize model partitioning in MEC networks for reducing inference delay [118]. MoEI is a task scheduling framework for device-edge systems that optimizes model partition and service migration in an MEC environment [119]. They develop two algorithms: one based on game theory for offline optimization and another leveraging proximal policy optimization for online, adaptive decision-making processes in a distributed environment.

IV Foundation Model & Compression

IV-A Current Foundation Model

| Model | Time(Y.M) | AF | Attention Type | PE | ||||

|---|---|---|---|---|---|---|---|---|

| T5 | 19.10 | 60M11B | 624 | 8128 | 5121024 | ReLU | Multi-head | Relative |

| GPT-3 | 20.05 | 125M175B | 1296 | 1296 | 76812288 | GELU | Multi-head | Sinusoidal |

| PanGu- | 21.04 | 2.6B207.0B | 3264 | 40128 | 256016384 | GELU | Multi-head | Learned |

| ERNIE 3.0 | 21.07 | 10B | 48, 12 | 64, 12 | 4096, 768 | GELU | Multi-head | Relative |

| Jurassic-1 | 21.08 | 7.5B, 178B | 32, 76 | 32, 96 | 4096, 13824 | GELU | Multi-head | Sinusoidal |

| Gopher | 21.12 | 44M280B | 880 | 16128 | 51216384 | GELU | Multi-head | Relative |

| LaMDA | 22.01 | 2B137B | 1064 | 40128 | 25608192 | gated-GELU | Multi-head | Relative |

| MT-NLG | 22.01 | 530B | 205 | 128 | 20480 | GELU | Multi-head | \ |

| Chinchilla | 22.04 | 44M16.183B | 847 | 840 | 5125120 | \ | Multi-head | \ |

| PaLM | 22.04 | 8.63B540.35B | 32118 | 1648 | 409618432 | SwiGLU | Multi-query | RoPE |

| OPT* | 22.05 | 125M175B | 1296 | 1296 | 76812288 | ReLU | Multi-head? | RoPE |

| Galactica* | 22.11 | 125M120.0B | 1296 | 1280 | 76810240 | GELU | Multi-head | Learned |

| BLOOM* | 22.11 | 559M176.274B | 2470 | 16112 | 102414336 | GELU | Multi-head | Alibi |

| LLaMA* | 23.02 | 6.7B65.2B | 3280 | 3264 | 40968192 | SwiGLU | Multi-head | RoPE |

| LLaMA 2* | 23.07 | 7B70B | 3280 | 3264 | 40968192 | SwiGLU | Grouped-query | RoPE |

| Baichuan* | 23.09 | 7B, 13B | 32, 40 | 32, 40 | 4096, 5120 | SwiGLU | Multi-head | RoPE, AliBi |

| Qwen* | 23.09 | 1.8B14B | 2440 | 1640 | 20485120 | SwiGLU | Multi-head | RoPE |

| Skywork-13B* | 23.10 | 13B | 52 | 36 | 4608 | SwiGLU | Multi-query | RoPE |

| Falcon* | 23.11 | 7B170B | 3280 | 64 | 454414848 | GELU | Multi-group | RoPE |

| StarCoder* | 23.12 | 15.5B | 40 | 48 | 2048 | \ | Multi-query | Learned |

| Yi* | 24.03 | 6B, 34B | 32, 60 | 32, 56 | 4096, 7168 | SwiGLU | Grouped-query | RoPE |

| Llama 3* | 24.04 | 8B, 70B | \ | \ | \ | SwiGLU | Grouped-query | RoPE |

* indicates open-source. PE: Positional Embedding. AF: Activation Function

| Model | Time(Y.M) | Basic LM | |

|---|---|---|---|

| mT5 | 20.10 | 300M13B | T5 |

| FLAN | 21.09 | 137B | LaMDA |

| Flan-T5 | 21.10 | 80M11B | FLAN, T5 |

| Flan-cont-PaLM | 21.10 | 62B | FLAN, PaLM |

| Flan-U-PaLM | 21.10 | 540B | FLAN, U-PaLM |

| Alpaca | 23.03 | 7B | LLaMA-7B |

| FLM-101B | 23.09 | 101B | FreeLM |

| Model | Time | Language Model | Vision Model | VisionLanguage | Input | Output | ||

|---|---|---|---|---|---|---|---|---|

| Flamingo | 22.04 |

|

NFNet F6 | Perceiver Resampler | text, image, video | text | ||

| MiniGPT-4 | 23.04 | Vicuna | EVA-CLIP ViT-g/14 | Linear | text, image, box | text, box | ||

| mPLUG-owl | 23.04 | Vicuna | CLIP-ViT-L/14 | Linear | text, image | text | ||

| PandaGPT | 23.05 | Vicuna | ImageBind | Linear |

|

text | ||

| Shikra | 23.06 | Vicuna | CLIP ViT-L/14 | Linear | text, box, image, point | text, point, box | ||

| Qwen-VL | 23.08 | Qwen-7B | CLIP ViT-G/14 | Cross Attention | text, image, box | text, box | ||

| NExT-GPT | 23.09 | Vicuna | ImageBind | Linear | text. image, audio, video | text. image, audio, video | ||

| CogVLM | 23.09 |

|

EVA-CLIP Vit-E/14 | MLP | text, image | text | ||

| Ferret | 23.10 | Vicuna | CLIP-ViT-L/14 | Layer | text, image | text | ||

| OneLLM | 23.12 | LLaMA-2 | CLIP-ViT | Transformer |

|

text | ||

| NExT-Chat | 23.12 | Vicuna | CLIP-ViT | \ | text, image, box | text, box, mask | ||

| Gemini | 23.12 | \ | \ | \ | text, image, video, audio | text | ||

| Gemini 1.5 | 24.04 | \ | \ | \ | text, image, video, audio | text |

Since the release of ChatGPT, FMs, especially LLMs, have become increasingly important in daily life. Figure 7 presents a timeline of some popular LLMs. Table V highlights the structural features of several basic LLMs. Table VI and Table VII list various instruction-tuned models and multimodal models respectively.

IV-A1 Large Language Models

Current LLMs are based on Transformer, a neural network based on attention mechanisms[123]. Most LLMs use the decoder-only architecture, which is beneficial to few-shot capabilities. The researchers design different LLMs based on various Transformer architectures with different pretraining datasets.

T5 is based on the encoder-decoder transformer architecture [123]. The authors found that transfer learning can significantly enhance performance, especially when combined with a lot of high-quality data [124], so they design pre-training tasks as text-to-text tasks to pre-train a model. GPT-3 is published by OpenAI, with a major enhancement in in-context learning capabilities compared with GPT-2 [125] through model scaling [126]. To improve the multilingual understanding, Zeng et al train PanGu- on a 1.1TB high-quality Chinese text corpus, which exhibits decent performance in various Chinese NLP tasks under few-shot or zero-shot conditions [127]. ERNIE 3.0 integrates autoregressive and autoencoding networks, which allows easy customization for natural language understanding and generation tasks, solving the disadvantage of downstream language understanding in previous LLMs trained on plain text [128]. Jurassic-1 series includes the J1-Large(7.5B) and the J1-Jumbo(178B). J1-Jumbo’s architecture is improved to address the depth-to-width expressivity tradeoff found in self-attention networks [129]. At a later stage, Gopher is introduced and achieves state-of-the-art performance in most of the 152 tasks [130].

To enhance LLMs’ safety and response quality, LaMDA is fine-tuned on labeled data and can reference external knowledge sources. LaMDA generates multiple response candidates in dialogues, filters out those with lower safety scores, and outputs the one with the highest quality score [131]. MT-NLG, a 540B model with strong zero, one, and few-shot capabilities, is trained with an efficient and scalable 3D parallel system [132]. Previous studies focus on increasing the model parameters without enlarging the size of pretraining tokens. Hence, DeepMind studied how to balance the number of parameters and tokens within a given computational budget and found that the number of parameters and tokens should scale equally. Based on this, Chinchilla is trained with 1.4T tokens and demonstrates superior performance on numerous downstream tasks compared to other LLMs such as Gopher (280B) [133] and GPT-3 (175B) [126] [130]. PaLM uses a decoder-only transformer architecture instead of an encoder-decoder architecture [125] to enhance few-shot capability. OPT, comparable to GPT-3, is developed to address the problem that most LLMs are not open-sourced and have limited access to other developers [134]. To help researchers find useful information, Galactica is trained on a vast amount of scientific corpora, reference materials, and other academic databases and outperforms other LLMs on various scientific tasks [135]. To promote the transparency of LLM research, BLOOM is published and open-sourced. It is trained on a dataset with hundreds of sources in 46 natural languages and 13 programming languages. Benchmarks suggest that fine-tuning BLOOM with multitask prompts can improve its performance [136].

Based on Chinchilla’s contribution, which indicates the number of tokens and parameters should scale equally [130], LLaMA focuses on using more training tokens to achieve optimal performance. Although Hoffman et al. suggest training a 10B model on 200B tokens, LLaMA-7B is trained on 4T tokens, indicating that increasing the number of tokens can still enhance the model’s performance [137]. Building on this observation, MetaAI publishes and open-sources LLaMA 2. It utilizes a larger corpus, longer context lengths, and grouped-query attention to train the model [138]. Following the LLaMA series, Baichuan is released to enhance the performance of Chinese NLP tasks. To enhance the compression rate for Chinese, Byte-Pair Encoding is adopted as the tokenization algorithm, and the tokenization model is trained on 20M multilingual corpora. It separates all numbers into individual digits to enhance the model’s mathematical capabilities and integrates various optimizations for Chinese support, including operator, tensor partitioning, mixed-precision, training recovery, and communication technologies [139] [140]. Bai et al. publish the QWEN series of language models, which includes QWEN, Qwen-Chat, CODE-QWEN, CODE-QWEN-CHAT, and MATH-QWEN-CHAT. QWEN series shows strong performance compared to other open-source models, while a little inferior compared to the proprietary models [141]. Wei et al. develop Skywork-13B, which is trained on a corpus of over 3.2T tokens extracted from English and Chinese texts [142]. Falcon series is trained on high-quality corpora primarily assembled from web data. Almazrouei et al. release a custom distributed training codebase that allows efficient pretraining of these models on up to 4,096 A100s on cloud AWS infrastructure [143]. Given the widespread use of code-generating LLMs, StarCoder, trained in over 80 programming languages with multi-query attention, is released and open-sourced for public use [144].

The team of Yi series designs a model of 34B parameters to retain complex reasoning and emergent capabilities while enabling inference on consumer-grade hardware, such as the RTX 4090. The model is trained on a high-quality dataset with 3.1T tokens [145].

IV-A2 Instruction-tuned Models

Instruction-tuning is an approach that fine-tunes pretrained LLMs on a formatted language dataset [146]. This technique improves the model’s understanding and response to inputs with specific instructions by training them on instructional task datasets. An instance of the dataset usually consists of instruction, input, and output. For example, the instruction is “What is the answer to the formula?”, the input is “7+3”, and the output is “10”.

In this subsection, several instruction-tuned models are introduced to demonstrate the development of instruction-tuning. To improve T5’s multilingual capabilities [124], mT5 is trained on a dataset that includes 101 languages [147]. FLAN is based on LaMDA 137B [131] and instruction-tuned on over 60 datasets with natural language instruction templates, significantly improving its performance. Ablation studies reveal that the number of fine-tuning datasets, model scales, and the utilization of natural language instructions are crucial to the success of instruction tuning [148]. The process of fine-tuning Flan-T5, Flan-PaLM, Flan-cont-PaLM, and Flan-U-PaLM is expanded to include datasets from Muffin, T0-SF, NoV2, and CoT. Notably, adding nine CoT datasets greatly enhances the models’ reasoning capabilities [149].

To promote academic research of LLMs, Taori et al. train Alpaca based on LLaMA-7B, using 52k instruction-following demonstrations generated by OpenAI’s text-davinci-003. Alpaca’s performance is very similar to that of text-davinci-003, yet surprisingly, it is smaller in scale and also inexpensive to train() [150]. Based on FreeLM, different strategies are adapted to train FLM-101B on 0.31T tokens, significantly reducing training costs. With just a $100k training budget, FLM-101B is comparable to GPT-3 [126] and GLM-130B [151] [152].

IV-A3 Multimodal Models

LLMs are designed to process and generate text-based information, whereas humans interact with the world through multiple sensors, such as visual and auditory modalities. To bridge this gap, multimodal LLMs (MLLMs) are designed to handle text, images, videos, audio, points, boxes, inertial measurement unit, functional magnetic resonance imaging, etc, enabling them to process diverse tasks. Currently, the training of MLLMs typically involves three stages: pre-training, fine-tuning, and prompting. Training an MLLM from scratch is very costly. Most prior works on MLLMs have focused on aligning existing VMs with pre-trained LLMs and fine-tuning the alignment module to improve performance. We provide some popular MLLMs in Table VII.

Many research works design different methods to align a VM to an LLM. Flamingo designs new benchmarks for few-shot visual and language tasks. It uses the Perceiver Resampler and Gated Xattn-Dense to align an LLM and a VM, allowing it to process and integrate visual and textual data sequences. It demonstrates strong performance with few-shot capabilities in visual question answering and close-ended tasks [153]. Different from Flamingo, miniGPT-4, mPLUG-owl, and PandaGPT use a linear layer to align. miniGPT-4 demonstrates that correctly aligning visual features with an LLM can unlock the advanced multimodal capabilities of GPT-4 [154]. Training solely on a large-scale image-text paired dataset might lead to unnatural language outputs like repetition and fragmentation. To overcome this, MiniGPT-4 is fine-tuned on a small but higher-quality dataset with more detailed textual descriptions [155]. Meanwhile, mPLUG-owl also demonstrates impressive command and visual comprehension abilities, multi-turn dialogue, and knowledge reasoning [156]. Previous works align LLM and VM by training them on joint image-text tasks, which could compromise LLM’s language capabilities. CogVLM innovatively incorporates trainable visual expert modules between the attention and feed-forward neural network layers to align LLM and VM. This approach allows for deep integration of visual and textual elements without compromising language capabilities because all parameters of the LLM are fixed [157].

Lots of research works focus on enhancing the MLLMs to process different input and output modalities because previous models can only deal with a small set of modalities. PandaGPT utilizes ImageBind as an encoder that can embed data from different modalities into the same feature space [158]. Therefore, PandaGPT has strong cross-modal zero-shot capabilities, allowing it to naturally integrate multimodal inputs and perform complex multimodal tasks efficiently [159]. NExT-GPT is an end-to-end, general-purpose any-to-any MLLM. It connects the Vicuna with multimodal adapters and various diffusion decoders, enabling it to perceive different inputs and generate outputs in arbitrary combinations of text, images, videos, and audio. It leverages pre-trained encoders and decoders and requires only a few parameters to be tuned, thus reducing training costs and facilitating expansion to more modalities [160]. OneLLM aligns eight modalities through a universal encoder and projection modules, along with a step-by-step multimodal alignment process, pioneering a universal MLLM architecture. Initially, it connects the LLM and visual encoder via an image projection module. It then expands to additional modalities using a universal projection module and dynamic routing, demonstrating its scalability and generality [161]. Gemini is an MLLM pre-trained on a large multimodal dataset, capable of handling a variety of text inputs interleaved with audio, video, and images. Gemini 1.5 utilizes an MoE architecture, enabling it to process multimodal inputs up to 10M tokens in length, thus exhibiting exceptional performance across modalities [162, 163].