Density Weighting for Multi-Interest Personalized Recommendation

Abstract

Using multiple user representations (MUR) to model user behavior instead of a single user representation (SUR) has been shown to improve personalization in recommendation systems. However, the performance gains observed with MUR can be sensitive to the skewness in the item and/or user interest distribution. When the data distribution is highly skewed, the gains observed by learning multiple representations diminish since the model dominates on head items/interests, leading to poor performance on tail items. Robustness to data sparsity is therefore essential for MUR-based approaches to achieve good performance for recommendations. Yet, research in MUR and data imbalance have largely been done independently. In this paper, we delve deeper into the shortcomings of MUR inferred from imbalanced data distributions. We make several contributions: (1) Using synthetic datasets, we demonstrate the sensitivity of MUR with respect to data imbalance, (2) To improve MUR for tail items, we propose an iterative density weighting scheme (IDW) with user tower calibration to mitigate the effect of training over long-tail distribution on personalization, and (3) Through extensive experiments on three real-world benchmarks, we demonstrate IDW outperforms other alternatives that address data imbalance.

1 Introduction

††∗This work was done when the author was an intern at Google. Correspondence to {nikhilmehta,animasingh}@google.comRecommender systems are at the core of various online services [49, 16, 34, 38, 2, 40], allowing users to discover items that are of potential interest from a large catalog. Therefore, it is vital for recommendation systems to model user interest behavior effectively. This can be especially challenging for sequential recommender system that takes a sequence of user’s past behavior to predict user’s future interaction, since user’s behavior sequence typically reflects a diverse and dynamic interest profile.

A large scale recommendation system usually consists of two stages, the retrieval stage and the ranking stage. The retrieval stage is critical in narrowing down the candidates items that are relevant to user interests. Existing approaches formulate the retrieval task by assuming a unified low-dimensional representation space for users and items, where a user representation encodes the interest profile of the user and is used to retrieve top candidate items. Recent work [31, 10] has shown that a fixed-length representation can become a bottleneck in modeling user interests for large-scale datasets. Modern approaches [10, 27] circumvent the limitations of a single user representation (SUR) by using multiple user representations (MUR) to model the holistic interest profile from the past behaviors of users. A synthetic experiment in Figure 1 (top-row) illustrates the advantage of using MUR (with 5 representations) over SUR to model the user interests. Specifically, SUR-based model is unable to capture multiple interest regions, whereas MUR can learn multiple representations to enable this, allowing for better personalization.

While MUR can certainly address the desideratum of modeling capacity required for diverse and volatile interests, the overall imbalance in the user interest distribution can hinder the model’s ability to learn representations that reflect different interests of the user. Although there have been recent works on different approaches to learn multiple user representations [31, 10, 27], studying the effect of interest/item imbalance on the ability to capture multiple interests with MUR is largely unexplored. Without taking into account the imbalance problem, existing methods for MUR implicitly assume that the so-called interests of the users are equally represented in the dataset. Unfortunately, real-world settings rarely offer a balanced distribution of items/interests. In fact, training models over highly skewed long-tail distributions is one of the most notorious problems prevalent when building recommender systems [35]. Figure 1 (bottom-row) highlights the issues observed with imbalanced data. Both SUR and MUR dominate on the head interests, while completely ignoring other interests. Therefore, an MUR-based recommender system can be vulnerable to the imbalanced distribution in the training data. We show that a highly skewed distribution of items/interests causes the gains from using MUR over SUR to diminish since the model is unable to capture multiple interests with MUR.

In this paper, we identify and address the vulnerability of MUR to interest imbalance by making a number of technical innovations. We begin by analyzing MUR on synthetic datasets generated for the sequential recommendation task. The generative mechanism, despite its simplicity, can be used to control several characteristics that are inspired from the real-world datasets, including sparsity in items and user interests, user interest volatility, and interest exploration. The main motivation behind using a synthetic dataset is to formulate a framework to analyze the effect of interest and item imbalance on the retrieval performance. Using synthetic experiments, we derive two important insights. First, we empirically show that the relative improvement observed by using MUR over SUR decreases, especially for tails items, as we increase the skewness in the interest distribution. Second, we show, both quantitatively and qualitatively, that a model trained using MUR leads to a better clustering structure for item representations compared to a model trained with a SUR. However, as we increase the skewness in the data, the quality of clustering structure decreases due to underrepresented tail items in the training dataset.

Our findings from the analysis on synthetic data motivate our key contribution to address the vulnerability of MUR to skewness and improve its robustness to data imbalance. We propose a novel density-based weighting approach, referred to as Iterative Density Weighting (IDW), designed to reduce the sensitivity of MUR to imbalanced training distribution. IDW leverages the item representation space to mitigate the effect of training the recommender model on a highly skewed item/interest distribution. In our synthetic experiments, we show that IDW improves the robustness of MUR to distribution skewness by consistently outperforming the baseline MUR for overall and tail performance. Moreover, IDW leads to an improved clustering structure of the item representations even when the model is trained on a highly skewed interest distribution. On applying the proposed weighting method on real-world datasets, we observe improved performance relative to several other baselines.

To summarize, the main contributions of this work are as follows:

-

•

A comprehensive analysis on synthetic data, describing the sensitivity of model performance and clustering structure to data skewness for SUR and MUR. Our analysis depicts the advantage of MUR over SUR from the standpoint of the structure of the learned item representations, which could be of independent interest to the community when comparing MUR and SUR models.

-

•

We propose a novel density-based weighting mechanism that leverage improved item representation structure to make MUR robust to imbalanced interest distributions. Combination of density-based weighting with user model calibration results in improved tail item recommendations along with better clustering structure of the learned item representations.

- •

2 Related Work

Multi-Interest User Representations. Extracting multiple interest user representations for recommendation systems was first proposed in [47], where a user is modeled by a set of latent vectors, and the recommendation score of each item is defined as the maximum dot product of the item representation and each latent vector. This was extended in the Embedding-Mixture Factorization (EM-F) and the Projection-Mixture Factorization (PM-F) [27] where the item recommendation score is a weighted combination of recommendation scores obtained using each user interest representation. Recently, there has been work on sequential recommender systems where multiple interest representations are used to model a user’s item history. In [27], Mixture LSTM (M-LSTM) was proposed that combines the LSTM network with the PM-F model to extract multiple user representations in a sequential setting. ComiRec [30] and MIND [10] are recent methods that propose multi-interest extractor layers based on the capsule routing mechanism. Although there is a vast amount of work that has focused on model architectures for MUR, the robustness of MUR to imbalanced user interest distributions is largely unexplored. We analyze the effect of skewness in user interests on MUR and propose a novel density-based weighting scheme for improving the recommendation performance of tail items with MUR. While this paper focuses on a transformer encoder-based architecture to model MUR (details in Section 3.1), our work nicely complements prior advances made in modeling MUR since the proposed density-based weighting can be used with other multi-interest frameworks [30, 10, 27] proposed for recommendation systems.

Long-tail Learning. In most real-world datasets, the data distribution is highly skewed and often follows a long-tail distribution. Learning models on long-tail distribution has been studied across many areas including computer vision [12, 33], natural language processing [13], and recommendation systems [35]. In recommendation datasets, the long-tail distribution is especially apparent due to the large scale of items and highly skewed user interactions. The recommender systems are heavily influenced [14, 5] by the long-tail distribution, leading to poor performance for the tail items.

There are several approaches that can allow learning from imbalanced data. Recent methods [46, 36, 50] that perform head-to-tail knowledge transfer have gained attention in computer vision. Knowledge transfer has also been extended in recommendation systems [51]. Approaches to tackle cold-start recommendation [53, 32, 15, 29] can also improve tail item prediction. Aforementioned approaches rely on additional side information (item and user content features), which can be difficult to acquire, especially when there are heterogeneous items [52] that do not share a common feature space. In this work, we only focus on approaches that do not depend on additional side information.

Frequency-based regularizers [49, 37, 4] have been used to reduce the sampling bias when training models on long-tail distribution. Some methods modify the loss objective [33, 12, 5, 18] using sample weighting for each class/item in the dataset. However, these methods use the item frequencies in the training dataset to determine the weights, which can lead to sub-optimal performance for sequential recommendation systems as item frequencies alone lack the contextual information contained in user’s past history. Unlike the above methods, our proposed density weighting uses the learned item representations that encapsulate the contextual information from the user’s history to determine and mitigate the effective imbalance. Closest to our method is the recent work [44, 48] used in regression models, which uses density estimation in the target space to mitigate imbalance. However, in contrast to the regression datasets that have fixed labels, in recommendation system, the labels for the user tower ( item representations) are also learned using a separate model, making it a more challenging task. We make several contributions to enable density weighting for recommendations.

3 Methods

Problem Statement. Consider a set of users and items , where denotes a user and denotes an item. We will use the notation and to denote the total number of users and items respectively. Given the user’s historical behaviour in the form of a sequence of item engagements , our recommendation task is to predict the next item that the user is likely to interact with at the step. For ease of notation, we will denote the training dataset as , where refers to the item and is considered the label for the user .

3.1 Background

Neural Deep Retrieval Model. Motivated by the success of deep learning in computer vision and natural language processing, applying deep neural networks (DNNs) for recommender systems is prevalent. Deep representation learning provides a powerful framework to encode the users and the items in a low-dimensional representation space, which can be used for retrieval in recommender systems. We apply the two-tower DNN model that aims to learn a common low-dimensional representation space for users and items. Figure 2 provides an illustration of a typical two-tower model architecture used for next item prediction, where the left and right tower encode the user item history and the candidate items respectively. A user tower consists of a sequence encoder ( Transformer, LSTM, .) and a representation extraction layer that reduces the outputs of the sequence encoder to a single user representation ( by weighted aggregation or by picking the hidden output of the encoder from the latest time step). The item tower is a multi-layer perceptron (MLP) model that learns the representation of item candidates given a one-hot encoding of the item. The towers can also easily incorporate side-information of users and items, if available. We denote the user tower as and the item tower as , where and are the set of trainable parameters of the two respective architectures. The two-tower model uses the batch softmax optimization [4, 11] for training. The item probabilities are calculated over a random batch:

| (1) |

where is the set of item labels in the sampled batch and is the affinity score defined as the standard dot product. Naively using the batch softmax probabilities leads to a biased-estimate of , where popular items are overly penalized as negatives due to high probability of being included in the batch. To mitigate this bias, LogQ correction [21, 49] is used with the sampled softmax loss, where the logit scores are corrected as follows:

| (2) |

Here p(y) denotes the sampling probability of item in a random batch. Given the softmax probabilities, the recommendation task can be formalized as a multi-class classification problem with as the label for the input , and the negative log-likelihood () of the training dataset can be defined as:

| (3) |

The models are trained to minimize with respect to the parameters and . Thus, the affinity score for the user representation and the corresponding label item (also referred to as the “positive item”) is increased, while the affinity for the user representation and other item labels in the mini-batch (referred to as the “negative items”) is reduced. The optimization procedure implicitly pulls items that correspond to the same user interest close to each other in the representation space, while items belonging to different interests are pushed away.

Module for Multi-Interest Extraction. We extend the standard user tower described in Figure 2 to model MUR using a multi-interest extraction layer. The goal of such a layer is to output multiple user representations, , where denotes the number of user representations extracted. While could be learned separately using separate user towers, such an approach will be inefficient in both computation and the number of parameters. Humeau et al. [20] recently proposed an efficient and scalable approach that uses global attention to extract multiple context embeddings for the natural language sentence matching tasks. We adapt this idea of global attention for a recommender system to extract multiple representations for a given user history. Let the output of the sequence encoder be , then to obtain multi-interest user representations, we learn global query vectors , where each extracts the user representation using attention:

Given multiple user representations, we compute the affinity score for each candidate as follows:

| (4) | ||||

As the above multi-interest extraction approach uses global query parameters in attention, we refer this representation extraction layer as parametric attention. Note that there are alternate methods for multi-interest extraction layers [10, 27, 31], but in this work we only consider parametric attention to extract MUR due to its simplicity and scalability. More importantly, the focus of this work is on studying the effect of long-tail distribution on MUR in the two-tower DNN architecture [11], which has become a popular framework for recommendations in the recent years.

3.2 Synthetic Data Analysis

In this section, we discuss our analysis on the synthetic data, which forms the basis of our density weighting scheme. The synthetic data for sequential recommendation is generated using a finite state hidden Markov model [3, 22], where we consider the hidden state clusters as the so-called user interests. To define the Markovian process for the sequence, we assume a user-specific interest transition matrix which is a function of the user interests, distribution skewness, and user interest volatility i.e., probability of switching between different user interests. A detailed description of the data generating process is provided in Appendix A. Figure 3(a)-3(c) show examples of synthetically generated data distributions. The synthetic data models long-tailed distributions for both interest and item space to capture the power-law behavior of items encountered in real recommendation systems.

While the Markovian assumption may not reflect complex real-world scenarios, we find that our observations are consistent in real-world datasets as described later in Section 4. Our key motivation to use synthetic data is to study the effect of distribution skewness on modeling capabilities of MUR. Moreover, item clusters in the synthetic data can be treated as ground-truth interests for the users, allowing us to quantitatively (using mean-silhouette (MS) score [41]) and qualitatively (See Figure 1) evaluate the structure of the learned item representations for different models.

Retrieval performance. To study the sensitivity with respect to an imbalanced distribution, we evaluate the performance of the models trained with varying degree of data skewness. Specifically, we vary the imbalance in the user interest distribution and plot the recommendation performance (Hit Ratio, expressed as %) across different splits: tail/infrequent clusters (Figure 3(d)), head/frequent clusters (Figure 3(e)), and all clusters (Figure 3(f)). As seen in Figure 3(d), increasing the skewness causes the performance of MUR-based model to decrease for tail interests, which also results in decreasing average performance across all the interest clusters (Figure 3(f)). In fact, when the user interests are highly skewed, the performance of MUR-based model becomes comparable to the performance obtained using the SUR-based model. This is due to the under-representation of items in the tail interest clusters, causing the model to dominate on the head interest items. On the other hand, using our proposed density weighting with MUR, referred to as MUR-IDW (described in Section 3.3), makes the model robust to imbalance as shown in Figure 3(d) and Figure 3(f).

Clustering quality. We inspect the structure of the representation space learned using different models. A good representation space would have localized clusters representing user interests, such that the items belonging to a similar interest have item representations close to each other. First, we do a qualitative analysis of the clustering structure learned using MUR and SUR. Figure 3(h)- 3(i) shows that MUR leads to a better clustering structure of the representation space compared to the representations learned with SUR on the synthetic data.

Next, we measure the clustering quality of the item representations using the mean-silhouette (MS) [41] score. The MS score measures the average tightness and separation of the clusters. For a point belonging to the ground truth cluster , we define the silhouette as , where is the mean distance between and other points in the cluster , and is the mean distance between and the points in the closest cluster. Specifically, we can calculate and as:

The MS score is computed by taking the average over all points, . The MS score takes the value , where a higher MS depicts distinct and separated clusters, and a lower MS depicts overlapping clusters. Figure 3(g) shows the MS score for varying degrees of skewness in the synthetic dataset. While the clustering structure obtained with MUR is consistently better than with SUR

, the clustering quality in the representation space deteriorates with increasing degree of imbalance.

3.3 Iterative Density Weighting

In this section, we describe our novel density-weighted loss for the two-tower recommendation system, which advocates leveraging the structure of the item representation space to learn and mitigate the effective imbalance of the items. This proposal aims to leverage the item representation space enabled by MUR to enhance the overall recommendation performance.

To determine the effective item imbalance, we use item distribution smoothing that convolves a symmetric kernel with the empirical distribution of the items. This results in a kernel-smoothed version of the item distribution that accounts for the overlap in information of nearby items in the representation space. We then use this kernel-smoothed item distribution to learn a balanced loss that is uniformly weighted in the item representation space.

We first consider the simple frequency-balanced [18] version of the loss function described in (3):

| (5) |

corresponds to the empirical distribution of the items and denotes the item frequency. Minimizing the balanced loss in (5) aims to learn a uniform loss function across all the items in the training dataset. However, the imbalance in may not reflect the effective imbalance of the items in the representation space, and naively optimizing (5) can lead to overfitting of the model to tail items that have low . As we show in Section 4, such a frequency-based balanced loss can lead to poor overall recommendation performance.

In contrast, our density-weighted loss uses the kernel-smoothed version of the empirical item distribution:

| (6) |

is the symmetric Gaussian kernel that characterizes the distance of items in the representation space. Therefore, leverages the structure of the representation space in determining the effective imbalance of items. In particular, captures the effective weight of the region defined by the neighborhood of item in the representation space, thereby reducing the model’s sensitivity to through kernel smoothing. Note that since is determined using the item representations learned in the item tower, in practice we use . As both the kernel-smoothed density and the loss used to train the parameters of the two-tower recommender model } are inter-dependent, we propose an iterative two-phase procedure (Algorithm 1) to train the two-tower architecture. Given the model parameters and the density at iteration ,

-

•

In the Sleep Phase, the item representations from the item tower are used to update the density through kernel-smoothing.

-

•

In the Wake Phase, the two-tower model is trained with the balanced loss given by the item density from the previous iteration.

Sleep Phase: Update Item Weights. To learn a balanced loss function with respect to , we introduce item-specific weights , such that the initial . The sleep phase updates the item weights based on the item representations defined by the current state of the item tower. In particular, the density update is defined as:

| (7) |

refers to the representation of the item, is the Gaussian kernel function with the bandwidth parameter (the bandwidth is selected based on Scott’s Rule [43], which is a standard bandwidth selection method). In practice, we found using relative normalized density [44] as a better estimate:

| (8) |

The relative item density of each item is and the item weights are updated with resulting in a negative correlation with the relative item density. To avoid a drastic change in the loss function, the weights are updated gradually using a momentum parameter :

| (9) |

where we use the subscript to denote the item. Since the updates are negatively correlated with the item density, they allow the model to progressively upweight/downweight the regions with low/high item density respectively. The complete procedure followed in the sleep phase is summarized in Algorithm 2.

Wake Phase: Train Two-Tower Model. Given , the two-tower model is trained by minimizing the following weighted loss:

| (10) | ||||

| (11) |

Stopping Criterion. To detect convergence in IDW, we track . As the model is trained, the loss contributions becomes uniform in the item representation space. We visualize the evolution of the item representation space for the synthetic data in Figure 4. We observed that making the loss uniform in the representation space, progressively improves the clustering structure of the item representations. The improvement in the clustering structure is also reflected in the mean-silhouette (MS) score in Figure 4 (Top).

User Tower Calibration. While the uniform loss structure improves the clustering structure of the items, the retrieval performance over the head clusters can drop compared to when the model is trained using the unweighted likelihood. Therefore, to mitigate the performance drop for head clusters, once we improve the item clustering structure using IDW, we freeze the item tower and train only the user tower with the unweighted loss. We study the importance of user tower calibration in section 4.6.3.

4 Experiments

| Method | # User Representations (M) | MovieLens 1M | Kindle Store | Clothing, Shoes and Jewelry | |||

|---|---|---|---|---|---|---|---|

| HR@20 | NDCG@20 | HR@20 | NDCG@20 | HR@20 | NDCG@20 | ||

| SUR [20] | 1 | 80.62 0.18 | 47.24 0.14 | 63.33 0.38 | 30.87 0.56 | 32.43 0.66 | 13.44 0.54 |

| MUR [20] | 5 | 80.82 0.20 | 47.72 0.23 | 64.66 0.45 | 31.16 0.38 | 33.92 0.94 | 14.90 0.52 |

| Frequency Balance [18] | 5 | 75.75 0.32 | 40.36 0.24 | 55.96 0.77 | 23.49 0.33 | 22.38 0.52 | 7.44 0.31 |

| Focal Balance [33] | 5 | 80.88 0.22 | 45.48 0.37 | 62.17 0.94 | 28.56 0.83 | 32.39 1.16 | 13.19 0.61 |

| Qmargin [9] | 5 | 80.35 0.37 | 45.43 0.46 | 63.75 0.71 | 29.87 0.50 | 34.71 1.16 | 14.13 0.52 |

| Item Norm [25] | 5 | 81.36 0.42 | 47.19 0.47 | 65.20 0.49 | 32.19 0.24 | 34.57 0.67 | 14.59 0.71 |

| Item Norm (Post-hoc) [23] | 5 | 80.20 0.53 | 45.87 0.67 | 64.05 0.18 | 30.68 0.22 | 34.32 0.77 | 13.83 0.52 |

| SUR-IDW [Ours] | 1 | 81.85 0.40 | 48.95 0.51 | 64.62 0.28 | 31.67 0.26 | 34.88 0.38 | 15.45 0.16 |

| MUR-IDW [Ours] | 5 | 82.65 0.03 | 49.67 0.14 | 65.24 0.25 | 32.25 0.32 | 37.34 0.63 | 16.33 0.18 |

| MUR vs. SUR | - | +0.25% | +1.02% | +2.10% | +0.94% | +4.59% | +10.86% |

| MUR-IDW [Ours] vs. SUR | - | +2.52% | +5.14% | +3.02% | +4.47% | +15.14% | +21.50% |

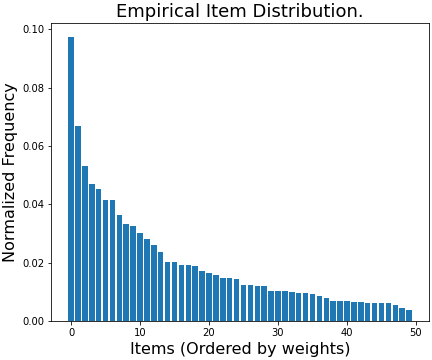

4.1 Datasets

We evaluate the proposed method on three public benchmarks that are used for recommender models. The statistics of the datasets are summarized in Table 2. In all the three datasets, the item distribution follows a highly skewed long-tail distribution (See the item distributions in Appendix C.). For each benchmark dataset, we preprocess the data to construct item sequences using the history of items that users have interacted with. We consider a maximum sequence length of 30, where padding is added if the item sequences are smaller than 30, and the item sequences larger than 30 are truncated and treated as multiple sequences.

4.2 Evaluation Metrics

We employ top-k Hit Ratio (HR@k) and NDCG@K with to evaluate the recommendation performance of all the models. Additional results with are included in the Appendix B. As the goal of IDW is to improve the performance of the tail items without hurting the overall performance across all the items, we further compare the metrics on the tail and head item sets separately. Assuming the Pareto Principle [7], the 20% most frequent items are considered head items, and the remaining items as the tail items. Following the standard evaluation protocol [24, 42], we use the leave-one-out strategy for evaluation. For each item sequence, the last item is used for testing, the item before the last is used for validation, and the rest is used for training. As the item set is typically large, we use randomly sampled negative items [24, 19, 26, 42] at evaluation. Specifically, we pair the ground-truth item with 99 randomly sampled negative items that the user has not previous interacted with to compute the evaluation metrics.

4.3 Baselines

To demonstrate the effectiveness of the proposed IDW on long-tail item distribution, we compare IDW with other commonly used weighting methods that deal with imbalance in recommender models. All the baselines use the two-tower model as the backbone, which has proven to be scalable because of efficient inference through inner products. For extracting multiple user representations to capture diverse user interests, we use parametric attention as described in Section 3.1. We list all the baselines below. We note that methods that rely on knowledge transfer of user/item features [6, 51] or augmentation methods (over/under sampling [8]) to mitigate imbalance can be easily combined with all the following baselines. Our proposed density-weighting and the baselines considered do not depend on user/item features.

-

•

SUR: We use a single user representation as the output of the user tower by using in the parametric attention [20] layer.

- •

- •

-

•

Focal Balance: A recent work by Lin et al. [33] modifies the loss function to train the model on an imbalanced dataset by down-weighting the loss of well-classified examples. This is a dynamic weighting scheme, where the item weights depend on the loss of each input during training.

-

•

Qmargin: Cao et al. [9] proposed to add a per-class (per-item) margin into the cross-entropy loss, where .

- •

4.4 Hyper-parameter Settings

We set the embedding dimension as 16 across all the models for the three benchmarks. We use the two-tower architecture as the backbone for all the models, where the user tower is a self-attention encoder [45] with two multi-head attention layers and four attention heads per layer. The item tower is an MLP with two fully connected layers each with 16 output nodes. For IDW, the momentum update of item weights (Equation 9) and are determined using grid search in the range of and . We use Adam to optimize all the models with a learning rate of 0.01. For all models, we used early stopping based on the validation dataset. The models are implemented with TensorFlow Recommenders (TFRS) [28].

4.5 Recommendation Performance

4.5.1 Overall Performance

We compare our proposed method with several representative baselines proposed for learning on imbalanced/skewed datasets in Table 1. For a fair comparison, all the methods use the same two-tower architecture described in Section 3.1. We first observe that the baseline MUR has marginal gains compared to the vanilla SUR in all the benchmarks. This is likely because of highly skewed long-tail item distributions in the real world datasets (See item distribution in Figure 9). This observation is consistent with our findings from synthetic datasets having high skewness (Figure 3(f)). Whereas, with IDW we see significant gains in performance for all real-world benchmarks we considered. In fact, the relative gains with IDW-based MUR ranged from 1.5x - 10x compared to the gains observed with the naive MUR. Our results also clearly show that IDW outperforms other imbalanced baselines that are typically used to address data imbalance. Finally, MUR coupled with IDW yield the best overall performance, highlighting that IDW is able to successfully leverage the improved clustering structure of MUR over SUR to yield further improvements.

4.5.2 Head vs. Tail Performance

We conduct a performance analysis on the head and tail items separately to get insights where the overall performance gains are coming from. We compare our method with the top three baseline methods from Table 1; namely, MUR, Qmargin, and Item Norm. The NDCG@20 metric for all the three benchmarks is shown in Figure 5111 We omit HR@20 metric for this analysis as it follows the same pattern as NDCG@20.. Compared to the naive MUR, we observe that IDW-based MUR consistently improves the performance on the tail items, while having better or comparable performance across the head items, leading to better overall performance. Whereas, both Qmargin and Item Norm focus primarily on the tail items, while sacrificing significant performance for the head items. This makes IDW a much more appealing method compared to other methods proposed for training on imbalanced datasets.

4.6 Ablation Study

4.6.1 Effect of Number of User Representations

We analyze the effect of the number of user representations () on the model performance for several representative methods. As shown in figure 6, IDW-based models not only perform better than other counterparts for the same , they are the only ones that show gains with increasing . In contrast, the baseline methods do not observe any trend with respect to . This shows that MUR and IDW nicely complement each other.

4.6.2 Sensitivity to IDW Hyperparameters

We also analyze the effect of the two hyperparameters introduced with IDW: and momentum (). The hyperparameter is used to define the stopping criterion in IDW (See Algorithm 1). Figure 7(a) shows the performance for . The model performance can be sensitive to ; a value too low increases the number of iterations causing over-fitting, whereas a value too high reduces the number of iterations causing under-fitting. We found to be the best in this ablation. We also perform an ablation for , which determines the update in item weights (Equation 9). We found that setting a low value for makes the model unstable due to an abrupt change in the loss function. In our ablation, yields the best results, followed by and . The setting with doesn’t use density-based weights and is equivalent to the baseline MUR with our proposed user tower calibration.

4.6.3 Importance of User Tower Calibration

In section 4, we proposed calibrating the user tower on the unweighted training distribution with the item tower fixed, once the model is trained with IDW. To evaluate the effectiveness of calibrating the user tower, we do an ablation study comparing IDW with and without user tower calibration (IDW w/o user calib). Figure 8 shows that the proposed calibration of user tower boosts the overall recommendation performance, making it an essential component of IDW.

5 Conclusions

In conclusion, this paper makes contributions in two key aspects. Firstly, to the best of our knowledge, this is the first study to investigate the impact of skewed item distribution on learning MUR, emphasizing the importance of loss balancing to fully realize the benefits of MUR. This finding alone could hold substantial interest for the user-modeling community. Secondly, the paper introduces a novel density-weighting framework that utilizes item representations to balance the loss, resulting in improved performance with MUR. Experimental results demonstrate that MUR with IDW yields superior overall performance on both public benchmarks and synthetic datasets, highlighting that IDW is successfully able to use improved learned item representation to improve overall recommendation performance. The presented work also sets the stage for further exploration in the field, opening avenues for the development of alternative balancing techniques and more robust MUR extraction layers. We hope that the findings in this work contribute to advancing the field of user modeling in recommendation systems and pave the way for future research endeavors in this domain.

Appendix

Appendix A Synthetic Data Generation

We generate synthetic data containing a sequence of item clicks for each user. The generated item sequences are used for training on the next item prediction task. The parameters for generating the dataset are summarized in Table 3. The data generation process is specified as follows:

-

1.

Assign clusters to users: We assign each user a set of clusters () that depict user’s interests. The cluster assignment is done by sampling from a multinomial distribution given by weights . The sampling weights are chosen to follow a power-law, which results in skewness in the user interest distribution as shown in Figure 3(a).

-

2.

Generate cluster sequence: Given a user’s interests, we assume a finite state Hidden Markov model to define the cluster transitions over time. The resulting sequence reflects a user’s interest over time. The cluster transition is given by:

(12) where depicts the probability of transition from cluster to cluster for a user . In (12), depicts the user’s interest volatility: a high would lead to frequent switching between different clusters that the user is interested in.

-

3.

Generate item sequence: Finally, we generate the item sequence using the cluster sequence generated above. We assume that the items from each cluster are drawn based on a multinomial distribution with , where is the number of items. For all clusters, we assume . Similar to cluster assignment, we induce a power-law distribution for as shown in Figure 3(b).

| Param | Description |

|---|---|

| The number of total clusters or interests. | |

| The sequence length. | |

| The number of total users. | |

| The number of total items. | |

| The set of clusters that a user is interested in. | |

| Probability of staying in the current cluster . | |

| User interest volatility: The total probability of a user switching to a new cluster in . | |

| Probability that a user stays in a “non-interesting” cluster . |

Note that the ground-truth cluster correspondences are not available to the recommendation model during training on the synthetic dataset, the model only has access to the marginal item distribution (Figure 3(c)).

Parameters for generating synthetic data: For the experiments presented in Section 3.2, we use a set of 50 items with 10 items per interest (cluster). The degree of skewness in Figure 3 is set by changing the imbalance in the cluster sampling distribution governed by : the power law exponent is increased from 0.0 to 2.0 to make the interest distribution skewed. The conditional item sampling distribution also uses power law weights, however, for all the experiments the item sampling distribution uses 0.5 as the power law exponent. For all experiments, we set the interest volatility parameters and . We set to induce exploration in the user engagements.

| Method | MovieLens 1M | Kindle Store | Clothing, Shoes … | |||

|---|---|---|---|---|---|---|

| HR@5 | NDCG@5 | HR@5 | NDCG@5 | HR@5 | NDCG@5 | |

| SUR | 54.69 0.20 | 39.72 0.14 | 32.98 0.85 | 22.13 0.66 | 11.87 0.67 | 7.76 0.55 |

| MUR | 55.21 0.29 | 40.25 0.24 | 33.44 0.58 | 22.35 0.44 | 13.13 0.63 | 9.15 0.43 |

| Frequency Bal. | 46.07 0.34 | 31.72 0.25 | 23.10 0.31 | 14.46 0.22 | 5.34 0.31 | 3.16 0.19 |

| Focal Bal. | 53.27 0.48 | 37.42 0.43 | 30.93 1.21 | 19.65 0.90 | 11.49 0.73 | 7.42 0.49 |

| Qmargin | 52.80 0.63 | 37.40 0.53 | 32.03 0.64 | 20.82 0.47 | 12.32 0.53 | 7.94 0.42 |

| Item Norm | 54.93 0.67 | 39.48 0.54 | 35.02 0.44 | 23.55 0.37 | 12.68 0.79 | 8.55 0.75 |

| Post-hoc | 53.09 0.92 | 37.98 0.77 | 32.38 0.37 | 21.66 0.32 | 11.94 0.61 | 7.64 0.50 |

| SUR-IDW | 56.88 0.69 | 41.57 0.59 | 34.29 0.31 | 23.01 0.28 | 13.79 0.18 | 9.62 0.10 |

| MUR-IDW | 57.79 0.16 | 42.42 0.15 | 35.07 0.51 | 23.62 0.39 | 14.67 0.16 | 10.43 0.20 |

Appendix B Recommendation Performance measured as HR@5 and NDCG@5

Appendix C Item distribution in the datasets

In this section, we visualize the empirical distribution of the three benchmarks that we considered in our experiments in Figure 9. In the y-axis we plot the normalized frequency of items and x-axis is the item ids arranged based on their frequency.

References

- [1]

- Abel et al. [2011] Fabian Abel, Qi Gao, Geert-Jan Houben, and Ke Tao. 2011. Analyzing User Modeling on Twitter for Personalized News Recommendations. In User Modeling, Adaption and Personalization, Joseph A. Konstan, Ricardo Conejo, José L. Marzo, and Nuria Oliver (Eds.). Springer Berlin Heidelberg, Berlin, Heidelberg, 1–12.

- Baum and Petrie [1966] Leonard E. Baum and Ted Petrie. 1966. Statistical Inference for Probabilistic Functions of Finite State Markov Chains. The Annals of Mathematical Statistics 37, 6 (1966), 1554 – 1563. https://doi.org/10.1214/aoms/1177699147

- Bengio and Senecal [2008] Yoshua Bengio and Jean-Sébastien Senecal. 2008. Adaptive Importance Sampling to Accelerate Training of a Neural Probabilistic Language Model. IEEE Transactions on Neural Networks 19 (2008), 713–722.

- Beutel et al. [2017a] Alex Beutel, Ed H. Chi, Zhiyuan Cheng, Hubert Pham, and John Anderson. 2017a. Beyond Globally Optimal: Focused Learning for Improved Recommendations. In Proceedings of the 26th International Conference on World Wide Web (WWW ’17). International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE, 203–212. https://doi.org/10.1145/3038912.3052713

- Beutel et al. [2017b] Alex Beutel, Ed H. Chi, Zhiyuan Cheng, Hubert Pham, and John Anderson. 2017b. Beyond Globally Optimal: Focused Learning for Improved Recommendations. In Proceedings of the 26th International Conference on World Wide Web, WWW 2017, Perth, Australia, April 3-7, 2017.

- Box and Meyer [1986] George E. P. Box and R. Daniel Meyer. 1986. An Analysis for Unreplicated Fractional Factorials. Technometrics 28, 1 (1986), 11–18. http://www.jstor.org/stable/1269599

- Brownlee [2020] Jason Brownlee. 2020. Imbalanced classification with Python: better metrics, balance skewed classes, cost-sensitive learning. Machine Learning Mastery.

- Cao et al. [2019] Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. 2019. Learning Imbalanced Datasets with Label-Distribution-Aware Margin Loss. Curran Associates Inc., Red Hook, NY, USA.

- Cen et al. [2020] Yukuo Cen, Jianwei Zhang, Xu Zou, Chang Zhou, Hongxia Yang, and Jie Tang. 2020. Controllable Multi-Interest Framework for Recommendation. Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (2020).

- Covington et al. [2016] Paul Covington, Jay Adams, and Emre Sargin. 2016. Deep Neural Networks for YouTube Recommendations. In Proceedings of the 10th ACM Conference on Recommender Systems (RecSys ’16). Association for Computing Machinery, New York, NY, USA, 191–198. https://doi.org/10.1145/2959100.2959190

- Cui et al. [2019] Yin Cui, Menglin Jia, Tsung-Yi Lin, Yang Song, and Serge Belongie. 2019. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. 9268–9277.

- Czarnowska et al. [2019] Paula Czarnowska, Sebastian Ruder, Edouard Grave, Ryan Cotterell, and Ann Copestake. 2019. Don’t Forget the Long Tail! A Comprehensive Analysis of Morphological Generalization in Bilingual Lexicon Induction. arXiv preprint arXiv:1909.02855 (2019).

- Domingues et al. [2012] Marcos Aurélio Domingues, Fabien Gouyon, Alípio Mário Jorge, José Paulo Leal, João Vinagre, Luís Lemos, and Mohamed Sordo. 2012. Combining Usage and Content in an Online Music Recommendation System for Music in the Long-Tail. In Proceedings of the 21st International Conference on World Wide Web (WWW ’12 Companion). Association for Computing Machinery, New York, NY, USA, 925–930. https://doi.org/10.1145/2187980.2188224

- Dong et al. [2020] Manqing Dong, Feng Yuan, Lina Yao, Xiwei Xu, and Liming Zhu. 2020. MAMO: Memory-Augmented Meta-Optimization for Cold-Start Recommendation. Association for Computing Machinery, New York, NY, USA, 688–697. https://doi.org/10.1145/3394486.3403113

- Hallinan and Striphas [2016] Blake Hallinan and Ted Striphas. 2016. Recommended for you: The Netflix Prize and the production of algorithmic culture. New Media & Society 18, 1 (2016), 117–137. https://doi.org/10.1177/1461444814538646

- Harper and Konstan [2015] F. Maxwell Harper and Joseph A. Konstan. 2015. The MovieLens Datasets: History and Context. ACM Trans. Interact. Intell. Syst. 5, 4, Article 19 (Dec. 2015), 19 pages. https://doi.org/10.1145/2827872

- He and Garcia [2009] Haibo He and Edwardo A. Garcia. 2009. Learning from Imbalanced Data. IEEE Transactions on Knowledge and Data Engineering 21, 9 (2009), 1263–1284. https://doi.org/10.1109/TKDE.2008.239

- He et al. [2017] Xiangnan He, Lizi Liao, Hanwang Zhang, Liqiang Nie, Xia Hu, and Tat-Seng Chua. 2017. Neural Collaborative Filtering. In Proceedings of the 26th International Conference on World Wide Web (WWW ’17). International World Wide Web Conferences Steering Committee, Republic and Canton of Geneva, CHE, 173–182. https://doi.org/10.1145/3038912.3052569

- Humeau et al. [2020] Samuel Humeau, Kurt Shuster, Marie-Anne Lachaux, and Jason Weston. 2020. Poly-encoders: Architectures and Pre-training Strategies for Fast and Accurate Multi-sentence Scoring. In International Conference on Learning Representations. https://openreview.net/forum?id=SkxgnnNFvH

- Jean et al. [2014] Sébastien Jean, Kyunghyun Cho, Roland Memisevic, and Yoshua Bengio. 2014. On using very large target vocabulary for neural machine translation. arXiv preprint arXiv:1412.2007 (2014).

- Juang and Rabiner [1991] B. H. Juang and L. R. Rabiner. 1991. Hidden Markov Models for Speech Recognition. Technometrics 33, 3 (1991), 251–272. http://www.jstor.org/stable/1268779

- Kang et al. [2020] Bingyi Kang, Saining Xie, Marcus Rohrbach, Zhicheng Yan, Albert Gordo, Jiashi Feng, and Yannis Kalantidis. 2020. Decoupling Representation and Classifier for Long-Tailed Recognition. In International Conference on Learning Representations. https://openreview.net/forum?id=r1gRTCVFvB

- Kang and McAuley [2018] Wang-Cheng Kang and Julian McAuley. 2018. Self-Attentive Sequential Recommendation. 197–206. https://doi.org/10.1109/ICDM.2018.00035

- Kim and Kim [2020] Byungju Kim and Junmo Kim. 2020. Adjusting Decision Boundary for Class Imbalanced Learning. IEEE Access 8 (2020), 81674–81685. https://doi.org/10.1109/ACCESS.2020.2991231

- Koren [2008] Yehuda Koren. 2008. Factorization Meets the Neighborhood: A Multifaceted Collaborative Filtering Model. In Proceedings of the 14th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’08). Association for Computing Machinery, New York, NY, USA, 426–434. https://doi.org/10.1145/1401890.1401944

- Kula [2017] Maciej Kula. 2017. Mixture-of-tastes Models for Representing Users with Diverse Interests. ArXiv abs/1711.08379 (2017).

- Kula et al. [2020] Maciej Kula, Tiansheng Yao, Maheswaran Sathiamoorthy, Yihua Chen, Xinyang Yi, Sequoia Eyzaguirre, Lichan Hong, and Ed H. Chi. 2020. TensorFlow Recommenders. https://github.com/tensorflow/recommenders.

- Lee et al. [2019] Hoyeop Lee, Jinbae Im, Seongwon Jang, Hyunsouk Cho, and Sehee Chung. 2019. MeLU: Meta-Learned User Preference Estimator for Cold-Start Recommendation. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD ’19). Association for Computing Machinery, New York, NY, USA, 1073–1082. https://doi.org/10.1145/3292500.3330859

- Li et al. [2019a] Chao Li, Zhiyuan Liu, Mengmeng Wu, Yuchi Xu, Pipei Huang, Huan Zhao, Guoliang Kang, Qiwei Chen, Wei Li, and Dik Lun Lee. 2019a. Multi-Interest Network with Dynamic Routing for Recommendation at Tmall. arXiv:cs.IR/1904.08030

- Li et al. [2019b] Chao Li, Zhiyuan Liu, Mengmeng Wu, Yuchi Xu, Huan Zhao, Pipei Huang, Guoliang Kang, Qiwei Chen, Wei Li, and Dik Lun Lee. 2019b. Multi-Interest Network with Dynamic Routing for Recommendation at Tmall. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (CIKM ’19). Association for Computing Machinery, New York, NY, USA, 2615–2623. https://doi.org/10.1145/3357384.3357814

- Liang et al. [2020] Tingting Liang, Congying Xia, Yuyu Yin, and Philip S. Yu. 2020. Joint Training Capsule Network for Cold Start Recommendation. arXiv:cs.IR/2005.11467

- Lin et al. [2018] Tsung-Yi Lin, Priya Goyal, Ross Girshick, Kaiming He, and Piotr Dollár. 2018. Focal Loss for Dense Object Detection. IEEE Transactions on Pattern Analysis and Machine Intelligence 42, 2 (2018), 318–327. https://doi.org/10.1109/TPAMI.2018.2858826

- Linden et al. [2003] G. Linden, B. Smith, and J. York. 2003. Amazon.com recommendations: item-to-item collaborative filtering. IEEE Internet Computing 7, 1 (2003), 76–80. https://doi.org/10.1109/MIC.2003.1167344

- Liu and Zheng [2020] Siyi Liu and Yujia Zheng. 2020. Long-Tail Session-Based Recommendation. In Fourteenth ACM Conference on Recommender Systems (RecSys ’20). Association for Computing Machinery, New York, NY, USA, 509–514. https://doi.org/10.1145/3383313.3412222

- Liu et al. [2019] Ziwei Liu, Zhongqi Miao, Xiaohang Zhan, Jiayun Wang, Boqing Gong, and Stella X. Yu. 2019. Large-Scale Long-Tailed Recognition in an Open World. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- Menon et al. [2021] Aditya Krishna Menon, Sadeep Jayasumana, Ankit Singh Rawat, Himanshu Jain, Andreas Veit, and Sanjiv Kumar. 2021. Long-tail learning via logit adjustment. In International Conference on Learning Representations. https://openreview.net/forum?id=37nvvqkCo5

- Messica and Rokach [2018] Asi Messica and Lior Rokach. 2018. Personal Price Aware Multi-Seller Recommender System: Evidence from eBay. Knowledge-Based Systems 150 (02 2018). https://doi.org/10.1016/j.knosys.2018.02.026

- Ni et al. [2019] Jianmo Ni, Jiacheng Li, and Julian McAuley. 2019. Justifying Recommendations using Distantly-Labeled Reviews and Fine-Grained Aspects. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). Association for Computational Linguistics, Hong Kong, China, 188–197. https://doi.org/10.18653/v1/D19-1018

- Pal et al. [2020] Aditya Pal, Chantat Eksombatchai, Yitong Zhou, Bo Zhao, Charles Rosenberg, and Jure Leskovec. 2020. PinnerSage. Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (Jul 2020). https://doi.org/10.1145/3394486.3403280

- Rousseeuw [1987] Peter J. Rousseeuw. 1987. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 20 (1987), 53–65. https://doi.org/10.1016/0377-0427(87)90125-7

- Ruiyang Ren [2020] Yaliang Li Wayne Xin Zhao Hui Wang Bolin Ding Ji-Rong Wen Ruiyang Ren, Zhaoyang Liu. 2020. Sequential Recommendation with Self-Attentive Multi-Adversarial Network. In Proceedings of the 43rd International ACM SIGIR conference on research and development in Information Retrieval, SIGIR 2020, Virtual Event, China, July 25-30, 2020.

- Scott [2012] David W. Scott. 2012. Multivariate Density Estimation and Visualization.

- Steininger et al. [2021] Michael Steininger, Konstantin Kobs, Padraig Davidson, Anna Krause, and Andreas Hotho. 2021. Density-based weighting for imbalanced regression. Machine Learning 110, 8 (01 Aug 2021), 2187–2211. https://doi.org/10.1007/s10994-021-06023-5

- Vaswani et al. [2017] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Ł ukasz Kaiser, and Illia Polosukhin. 2017. Attention is All you Need. In Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.), Vol. 30. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

- Wang et al. [2017] Yu-Xiong Wang, Deva Ramanan, and Martial Hebert. 2017. Learning to Model the Tail. In Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.), Vol. 30. Curran Associates, Inc. https://proceedings.neurips.cc/paper/2017/file/147ebe637038ca50a1265abac8dea181-Paper.pdf

- Weston et al. [2013] Jason Weston, Ron J. Weiss, and Hector Yee. 2013. Nonlinear latent factorization by embedding multiple user interests. Proceedings of the 7th ACM conference on Recommender systems (2013).

- Yang et al. [2021] Yuzhe Yang, Kaiwen Zha, Ying-Cong Chen, Hao Wang, and Dina Katabi. 2021. Delving into Deep Imbalanced Regression. In ICML. 11842–11851. http://proceedings.mlr.press/v139/yang21m.html

- Yi et al. [2019] Xinyang Yi, Ji Yang, Lichan Hong, Derek Zhiyuan Cheng, Lukasz Heldt, Aditee Ajit Kumthekar, Zhe Zhao, Li Wei, and Ed Chi (Eds.). 2019. Sampling-Bias-Corrected Neural Modeling for Large Corpus Item Recommendations.

- Yin Cui [2018] Chen Sun Andrew Howard Serge Belongie Yin Cui, Yang Song. 2018. Large Scale Fine-Grained Categorization and Domain-Specific Transfer Learning. In CVPR.

- Zhang et al. [2021] Yin Zhang, Derek Zhiyuan Cheng, Tiansheng Yao, Xinyang Yi, Lichan Hong, and Ed H. Chi. 2021. A Model of Two Tales: Dual Transfer Learning Framework for Improved Long-tail Item Recommendation. arXiv:cs.IR/2010.15982

- Zhu et al. [2021] Feng Zhu, Yan Wang, Chaochao Chen, Jun Zhou, Longfei Li, and Guanfeng Liu. 2021. Cross-Domain Recommendation: Challenges, Progress, and Prospects. arXiv:cs.IR/2103.01696

- Zhu et al. [2018] Yu Zhu, Jinhao Lin, Shibi He, Beidou Wang, Ziyu Guan, Haifeng Liu, and Deng Cai. 2018. Addressing the Item Cold-start Problem by Attribute-driven Active Learning. arXiv:cs.IR/1805.09023

- DNN

- Deep Neural Network

- DNNs

- Deep Neural Networks

- MS

- mean-silhouette

- IDW

- Iterative Density Weighting

- KDE

- Kernel Density Estimation

- MUR

- multiple user representations

- SUR

- single user representation