DEMOS: Dynamic Environment Motion Synthesis in 3D Scenes via Local Spherical-BEV Perception

Abstract

Motion synthesis in real-world 3D scenes has recently attracted much attention. However, most current methods assume a static environment, an assumption that rarely holds for real-time motion synthesis in scanned point cloud scenes containing multiple dynamic objects, e.g., moving persons or vehicles. To handle this problem, we propose the first Dynamic Environment MOtion Synthesis framework (DEMOS), which predicts future motion instantly according to the current scene and uses it to dynamically update the latent motion for final motion synthesis. Concretely, we propose a Spherical-BEV perception method to extract local scene features that are specifically designed for instant scene-aware motion prediction. Then, we design a time-variant motion blending to fuse the newly predicted motions into the latent motion, and the final motion is derived from the updated latent motion, benefiting from both motion-prior and iterative methods. We unify the data format of two prevailing datasets, PROX and GTA-IM, and use them for motion synthesis evaluation in 3D scenes. We also assess the responsiveness of the proposed method in dynamic environments from GTA-IM and Semantic3D. The results show that our method outperforms previous works significantly and handles dynamic environments well.

Index Terms:

Motion Synthesis, 3D Point Cloud Scene, Scene Perception, Dynamic Environment.

1 Introduction

Scene-aware motion synthesis, in which we generate human motion sequences in real-world 3D scanned scenes, has attracted a lot of attention recently due to its wide application in robot navigation, virtual/augmented reality, and simulated data synthesis [1, 2, 3]. However, real-time motion generation in 3D scanned scene point clouds is quite challenging due to the implicit constraints on human-scene interaction and the irregular structure of scanned scene point clouds [4, 5, 6, 7].

Recent works have paid more attention to learning human-scene interaction based on parametric human body models [8, 9] and 3D motion and scene datasets [10, 11, 12, 13, 14, 15]. Pioneering works [6, 16, 17, 18] explored the relationship between human bodies and static scenes by utilizing various scene hints such as occupancy, distance, and semantics. Further, iterative methods [19, 20] predict future poses gradually in a simulated virtual world with explicit scene constraints. As for motion synthesis in real scanned point cloud scenes, prior-based works [2, 3] attempted to generate entire motion sequences directly rather than iteratively, and added a further optimization stage to maximize the plausibility of human-scene interaction, gaining higher stability from motion priors [21, 22] when scene constraints are more implicit.

However, they mainly considered entire motion synthesis and optimization in static scanned scenes, while in the real world, the surrounding environment may change over time. A typical situation is another person moving around, as indicated in Figure 1. This requires us to (1) predict reasonable future motion (orange curve) instantly according to the scene point clouds even without an optimization stage and (2) quickly correct and update the latent motion (green to yellow curve) to handle those changes in the environment. Unfortunately, a prior-based motion synthesis framework alone is unable to handle such a situation.

In this paper, we find that the underlying principle of a stable Dynamic Environment Motion Synthesis (DEMOS) framework for real scanned point cloud scenes is a collaboration between prior-based instant motion generation and iterative motion updating through time-variant motion blending.

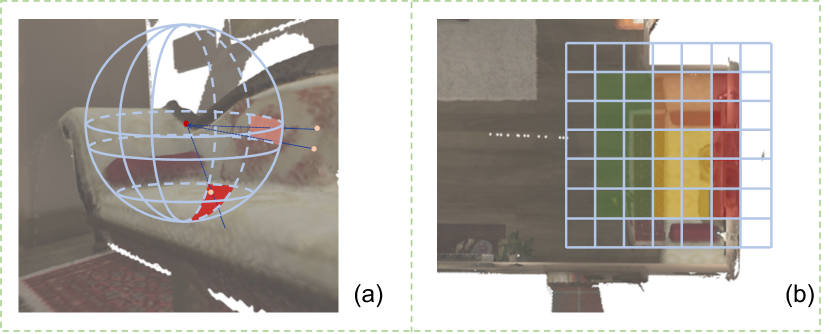

For instant scene-aware motion generation, we need to hypothesize plausible motion according to the current environment without further optimization. In contrast to the whole-scene feature extraction with PointNet [4] used in previous works [2, 3], we focus more on body-centered local scene structures, which are more relevant to human motion within seconds, especially for large-scale scene point clouds. We observe that the distances between the human and the scanned scene in different directions characterize the local scene geometry well and help predict plausible motions, and that the surrounding elevation map provides important hints for future motion trajectories. Thus, two projection-based local scene perception methods are specifically designed for scene-aware motion synthesis, as illustrated in Figure 1. The blue spherical coordinate indicates the Spherical Angular Perception and the gray mesh grid represents the Bird’s Eye View (BEV) Elevation Perception. After that, we propose a lightweight spherical convolution network to recognize the angular depth patterns and a small network for surrounding elevation feature extraction. These informative geometry hints make the generated motion consistent with the scanned scene point cloud.

To handle dynamic environments in scanned point cloud scenes (e.g. another person walks around as shown in Figure 1), we attempt to both benefit from the stability of prior-based motion generation and the responsiveness of iterative methods. We iteratively generate a future motion according to the surrounding point cloud and current start/goal information. Then, inspired by trajectory blending with user control [23], we design a time-variant blending for the newly generated motion and previous ones to update the latent motion, as indicated by the fusion of orange and green curves in Figure 1. Consequently, the synthesized long-term motion will be stable due to the intrinsic plausibility of motion prior, and responsive due to the iterative update of motion.

For performance evaluation, we unify the data format of PROX [24] and GTA-IM [1], and conduct experiments on these two 3D motion-scene datasets. Following previous works [2, 16], we compare our method with cutting-edge works in terms of motion reconstruction quality, physical metrics, and realism metrics. We also present motion synthesis in common dynamic point cloud scenes with multiple agents or moving vehicles to show the ability of our method to handle dynamic 3D environments. Comprehensive experiments show that our method outperforms prevailing ones by a large margin and works well in dynamic environments.

Overall, our major contributions can be summarized as follows: (1) We propose the first Dynamic Environment MOtion Synthesis (DEMOS) framework to handle real-time changes in 3D scanned point cloud scenes. (2) We design a Spherical-BEV perception method to recognize local geometry specifically for instant scene-aware motion generation. (3) We introduce motion blending as a bridge between motion-prior and iteration-based methods to adapt the synthesized motion to dynamic scanned scenes. (4) We align the data format of PROX and GTA-IM and achieve SOTA performance on motion prediction and synthesis in both static and dynamic environments.

2 Related Work

3D Scene Context Perception. Various scene feature extraction methods [4, 25, 26, 5, 27, 28, 29, 30, 31] have been proposed to better recognize scene geometry and semantics. Recently, these scene features have been used to synthesize scene-aware poses or motions. PLACE [6] utilized Basis Point Sets [32] to measure the distance between sampled points and human meshes, and could thus better recognize affordance information. PSI [16] utilized captured depth images to provide scene structure hints. POSA [17] attempted to place human meshes into scenes with reasonable human-scene interactions. SAMP [20] employed the voxel representation of the target object to learn the goal position and orientation. COINS [18] synthesized static human bodies in scenes given action and semantic guidance, where 3D objects are jointly exploited with human body surface points in a unified latent space. MIME [33] inversely inferred the 3D environments from human motions. PAAK [34] placed human animations in scanned scenes by optimizing the human-scene interactions in keyframes. PointNet [4] was used to extract the feature of the whole scene, which provided external hints for scene-aware motion generation [2, 3].

Compared with them, we propose to learn the local geometry patterns in the spherical and BEV depth representation projected from scene point clouds, which play more important roles in motion synthesis in scanned scenes.

Motion Synthesis. Motion synthesis, including trajectory prediction and pose generation, has long been studied in different settings [35, 36, 23, 19, 37, 22, 2, 38, 39]. Pioneering works [23, 19] attempted to predict pose sequences iteratively in simulated virtual worlds. PFNN [23] used the phase information to control network weights, and NSM [19] additionally utilized state information to mix expert networks, allowing automatic transition between different states. LMM [40] replaced the key operations in Motion Matching with networks. However, the explicit scene constraints of the simulated virtual world are usually unavailable for motion synthesis in real scanned point cloud scenes, resulting in a loss of stability. For stable motion synthesis, HMP [22] learned a motion prior space from the large-scale motion dataset AMASS [14], where the entire motion can be simply sampled from a normal distribution. Based on the motion prior, Wang et al. [2] were able to generate entire motions in real scanned point cloud scenes, pursuing better stability when scene constraints are implicit. However, further optimization was still required for motion naturalness. Later, motion diversity [3] was introduced to every aspect of the pipeline. DPP [39] used an LSTM only for coarse trajectory prediction and a VAE for fine-grained motion forecasting.

In this paper, we aim to synthesize motion in scanned point cloud scenes instantly while considering more general dynamic environments. Compared to methods that generate motion in the virtual world, we focus on motion synthesis in real scanned point cloud scenes, where scene constraints are much more implicit. Compared to existing methods for real scanned scenes, we consider the more general setting of real-time motion synthesis in dynamic scene point clouds. To handle this, we introduce local Spherical-BEV perception of scenes into instant motion prediction for higher motion plausibility and stability, and design time-variant motion blending to update the latent motion iteratively to ensure responsiveness.

Motion Fusion. Motion fusion through parameter blending has commonly been used to synthesize new motions from existing motion clips [41, 23, 42, 22]. Park et al. [41] calculated the fusion weights by estimating the similarity between example motion clips and the desired one, in order to blend the motion clips in a continuous parameter space. PFNN [23] blended the trajectories generated by networks with those from user control for a trade-off between naturalness and responsiveness. HSNMS [42] introduced a bi-directional interpolation scheme for retrieved short-range clips to synthesize long-term motions. HMP [22] also showed that it is feasible to fuse motions by interpolating the latent codes in the prior space.

Inspired by these methods, we iteratively blend the newly generated future motion with the previous latent motion using time-variant coefficients. Thus, our synthesized motion can maintain the stability of motion-prior-based methods and the responsiveness of iteration-based methods.

3 Method

3.1 Overview

Human Representation. We choose the SMPL-X [9] parameters to represent all human bodies following previous works [2, 3] to avoid irrational poses. The SMPL-X human mesh consisting of vertices is defined as where is the body root translation, is the 6D continuous rotation representation of body root orientation, is the body shape parameter, and are the compact latent codes of body pose and hand pose in prior space. Other parameters of SMPL-X are set to zeros as default for simplicity. We use point cloud to represent the scene, as it can be directly obtained from common scanning devices.

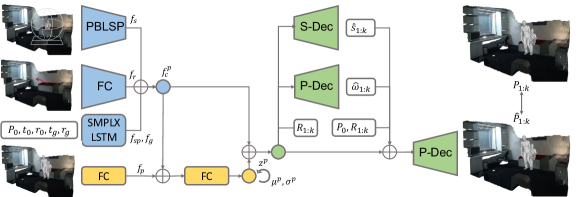

Task Definition and DEMOS Pipeline. In this paper, we attempt to synthesize long-term motions in dynamic scenes where other moving persons or vehicles may appear at any time in an online manner. We choose to decompose such a problem and handle this task in four steps, as shown in Figure 2, where Spherical-BEV scene perception makes the predicted goal&motion plausible and motion blending in a latent space makes the synthesized motion adapted to the changes in dynamic scene point clouds. The whole pipeline is formed as follows.

(a) We take the human start information and the scene point cloud as inputs, where the scene is further perceived through the Spherical-BEV projection.

(b) GoalNet first samples the future goal position and orientation of the human body given the current human and scene information.

(c) Then, we take RouteNet to predict a scene-aware route consisting of trajectory and root orientation sequence in the short-term future.

(d) Based on the start/goal information and route, we can generate the appropriate pose sequence according to the scene geometry using PoseNet.

(e) Later, we iteratively predict future motions and utilize newly predicted motions to update latent motion through blending for dynamic environment adaptation.

We will finish the process or sample a new goal once the current goal is achieved. Our iterative motion synthesis method will finally generate long-term motions in dynamic environments where the surrounding point cloud may change over time.

Concretely, we estimate the future goal position distribution based on the scene structure via a CVAE GoalNet in a Bird’s Eye View (BEV), and utilize the elevation map to filter out inaccessible areas. Then, we sample the goal position and adjust the height according to the position elevation with state hint. We also adjust the orientation according to a possible route obtained from the elevation map. Given current human&scene information at time and sampled goal, we will predict the route in the future frames according to the environment point cloud . We will then generate scene-aware pose sequence which also depends on the start-goal information and predicted route. The motion sequence consisting of routes and poses from to is marked as , termed hypothesized motion in this paper. For long-term motion synthesis, we iteratively predict and blend it with previous latent motion to obtain the updated latent motion , and finally synthesize long-term motion where is the total number of pre-defined frames and the execution frame is the route and pose in the first frame of . As for the initialization, we set . Here, we set to frames, i.e. , seconds for a video of 30 FPS.

Anchor-based State Annotation. Human pose is highly related to the current state and the progression of states, so previous works [19, 20] trained mixture-of-experts networks for different actions with manual state annotation. In contrast to manual annotation, we find that the human action state has a tight connection with the human-scene contact information for motion synthesis in scanned 3D scenes. So, we annotate the state automatically according to the body parts (i.e., anchors) that are in contact with the scene without movement.

Based on the human motions from PROX [24] and GTA-IM [1] datasets, we attempt to categorize all the poses into four states. For each pose from these two datasets, we first obtain the SMPL-X body mesh and check the contact [17] and movement [2] information of foot, gluteus, and back vertices. Thus, we can define the state of each pose according to the contact information.

Specifically, as shown in Figure 3, we define bodies with only two feet as anchors to be idle, bodies with only one foot as the anchor to be in locomotion, bodies with the gluteus as the anchor (and the back not an anchor) to be sitting, and bodies with the back as the anchor to be lying. Poses are invalid and are not used for training/testing if none of the foot, gluteus, and back are anchored body parts. To leverage the prior knowledge of periodic motion like locomotion [43], we also automatically calculate the phase based on the foot anchor information, while the phases in other states are set to zero. Thanks to these annotations, we can guide the scene-aware PoseNet to predict states and phases that correspond to local scenes, and subsequently rational poses. We can also recover the anchored parts from the predicted states, which can be used to avoid skating at contacted parts between consecutive poses.
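The labeling rule above can be sketched as a small function. This is only an illustration: the function name, the boolean inputs, and the precedence used when several parts are anchored at once (back first, then gluteus, then feet) are assumptions of this sketch, and the contact and movement tests that decide whether a part is an anchor are not reproduced.

```python
from typing import Optional


def annotate_state(left_foot: bool, right_foot: bool,
                   gluteus: bool, back: bool) -> Optional[str]:
    """Map anchored body parts to one of the four states, or None if invalid.

    Each flag says whether that body part is an anchor, i.e. in contact
    with the scene and not moving (how this is detected is out of scope).
    """
    if back:
        return "lying"        # back anchored
    if gluteus:
        return "sitting"      # gluteus anchored, back not anchored
    if left_foot and right_foot:
        return "idle"         # only the two feet are anchored
    if left_foot or right_foot:
        return "locomotion"   # exactly one foot is anchored
    return None               # no anchor: pose is excluded from training/testing


print(annotate_state(True, False, False, False))  # a single stance foot
```

Returning `None` for unanchored poses mirrors the rule that such poses are dropped rather than assigned a fallback state.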

3.2 Projection-based Local Scene Perception

To make the synthesized motion more consistent with the surroundings, we propose two projection-based local scene perception (PBLSP) methods to extract the spherical depth and BEV elevation information from environment point clouds.

Spherical Angular Perception. To better understand the geometry of the surrounding scene from the human body perspective, we propose to recognize the distance to scene points in different directions from the body mesh root, as shown in Figure 4 (a). This is attributed to the observation that the state, contact information, and moving direction of human bodies have a strong relationship with the angular depth distribution pattern.

For spherical angular perception, we first extract the local scene point clouds consisting of nearest points in environment point cloud from human root position. Then, these points are projected onto a unit sphere with pre-defined longitude bins and latitude bins to obtain the angular depth representation of the local scene. In this paper, we simply take longitude bins and latitude bins, and nearest points from the local scene are selected for the angular projection. This real-time point cloud projection is implemented in parallel by extending the PyTorch implementation of z-buffer [44, 45]. Specifically, we calculate the longitude and latitude bin for each point and then scatter the distance value into the sparse matrix with default value . Then, the angular depth in each direction can be easily obtained by finding the minimum among .
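A minimal NumPy sketch of this projection is given below. It is not the z-buffer extension used in the paper: it replaces the scatter-then-minimum step with a direct scatter-min (`np.minimum.at`), and the bin counts, the `n_nearest` argument, and the use of infinity as the default value are placeholder assumptions.

```python
import numpy as np


def spherical_depth(points, root, n_lon=36, n_lat=18, n_nearest=None):
    """Nearest-point distance per (latitude, longitude) bin around `root`.

    points: (N, 3) scene points, root: (3,) body root position.
    Returns an (n_lat, n_lon) map; bins that see no point stay at +inf.
    """
    rel = np.asarray(points, dtype=float) - np.asarray(root, dtype=float)
    dist = np.linalg.norm(rel, axis=1)

    # Keep only the closest points (all of them when n_nearest is None).
    order = np.argsort(dist)[:n_nearest]
    rel, dist = rel[order], dist[order]

    # Drop points that coincide with the root (direction undefined).
    valid = dist > 1e-9
    rel, dist = rel[valid], dist[valid]

    lon = np.arctan2(rel[:, 1], rel[:, 0])                  # [-pi, pi]
    lat = np.arcsin(np.clip(rel[:, 2] / dist, -1.0, 1.0))   # [-pi/2, pi/2]
    lon_bin = np.minimum(((lon + np.pi) / (2 * np.pi) * n_lon).astype(int), n_lon - 1)
    lat_bin = np.minimum(((lat + np.pi / 2) / np.pi * n_lat).astype(int), n_lat - 1)

    depth = np.full((n_lat, n_lon), np.inf)
    # Unbuffered scatter-min: each bin keeps the smallest distance it receives.
    np.minimum.at(depth, (lat_bin, lon_bin), dist)
    return depth
```

`np.minimum.at` is used instead of plain fancy-index assignment because several points usually fall into the same bin, and ordinary assignment would keep an arbitrary one of them.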

After that, we design a lightweight spherical convolution rather than adopting sophisticated ones like KTN [46] (the comparison is available in Section 4.5) to extract the angular depth distribution pattern from the local scene point cloud. Each layer of our spherical convolution consists of a longitude convolution and a latitude convolution. Unlike the common 2D convolution, the longitude convolution operates on a ring structure and should therefore be cyclic. So, we first wrap the feature map horizontally and then apply a 1D convolution along the longitude. As for the latitude convolution, which has no ring structure, we can directly utilize a 1D convolution. After a few layers of spherical convolution and angular pooling, we utilize several fully connected layers to extract the angular depth distribution feature .
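The cyclic longitude convolution can be illustrated on a single-channel map with NumPy. This is a sketch under assumptions: odd kernel sizes, zero padding along the latitude, and a ReLU after each layer are choices made here, not details taken from the paper, and a real layer would carry multiple channels and learned kernels.

```python
import numpy as np


def longitude_conv(feat, kernel):
    """Cyclic 1D cross-correlation along the longitude (last) axis."""
    kernel = np.asarray(kernel, dtype=float)
    pad = len(kernel) // 2  # assumes an odd kernel size
    # Wrap the map horizontally so the first and last columns are neighbours.
    wrapped = np.pad(feat, ((0, 0), (pad, pad)), mode="wrap")
    # np.convolve flips its kernel, so flip it back to get cross-correlation.
    return np.stack([np.convolve(row, kernel[::-1], mode="valid") for row in wrapped])


def latitude_conv(feat, kernel):
    """Plain 1D cross-correlation along the latitude (first) axis, zero padded."""
    kernel = np.asarray(kernel, dtype=float)
    pad = len(kernel) // 2
    padded = np.pad(feat, ((pad, pad), (0, 0)), mode="constant")
    cols = [np.convolve(col, kernel[::-1], mode="valid") for col in padded.T]
    return np.stack(cols, axis=1)


def spherical_conv_layer(feat, lon_kernel, lat_kernel):
    """One layer: longitude convolution, latitude convolution, then ReLU."""
    return np.maximum(latitude_conv(longitude_conv(feat, lon_kernel), lat_kernel), 0.0)
```

Because of the wrap padding, rotating the input around the vertical axis (a roll along the longitude) simply rolls the output by the same amount, which is the property a ring-structured convolution is meant to have.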

BEV Elevation Perception. We observe that the future trajectory and pose are highly associated with the local elevation map, as illustrated in Figure 4 (b). Taking locomotion as an example, the vertical position at different frames should correspond to the elevation map to ensure foot contact. As for sitting, the seating areas in scenes are typically designed with specific elevations. These scene hints can help the proposed method judge feasible trajectories and generate scene-aware poses.

Thus, we take a Bird’s Eye View (BEV) to extract the elevation map surrounding the current human body. In our setting, we mainly care about the elevation map of the local scene within a body-centered square with side length . This square is also discretized into bins, and surrounding points from the scene are selected for BEV depth rendering. In this paper, the side length is commonly set to meters which is further split into bins, and nearest points are taken for BEV perception. Unlike the projection onto the unit sphere, some points fall outside all the bins, and these points cannot simply be dropped in a batched operation. So, we pad the square map with one bin on each side, i.e. , bins in total (see the bins in Figure 4 (b) without color), and all such outlying points are rendered to the nearest padding bins. At this point, all rendered points can be scattered to another sparse matrix , and then we can obtain the BEV depth map by calculating the minimum depth value among and discarding the padding bins. After that, the BEV elevation map can be extracted from where is a constant hyper-parameter and set to in this paper. Finally, we build a small network consisting of several 2D convolution and pooling layers to extract the BEV elevation features .
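The padding trick can be sketched as follows. The side length, bin count, and the virtual camera height `cam_h` are placeholder values, and the depth-to-elevation conversion (elevation equals camera height minus depth) is an assumption of this sketch, since the exact relation is not reproduced above.

```python
import numpy as np


def bev_elevation(points, root, side=4.0, n_bins=8, cam_h=2.0):
    """Elevation map (relative to the root) on a body-centred square grid.

    points: (N, 3) scene points with z up, root: (3,) body root position.
    Returns an (n_bins, n_bins) map; empty bins hold -inf.
    """
    rel = np.asarray(points, dtype=float) - np.asarray(root, dtype=float)
    cell = side / n_bins

    # Bin indices in the padded grid: 0 and n_bins + 1 are the padding bins.
    ij = np.floor((rel[:, :2] + side / 2) / cell).astype(int) + 1
    ij = np.clip(ij, 0, n_bins + 1)  # outliers land in the nearest padding bin

    # Depth seen by a downward-looking virtual camera at height cam_h.
    depth = cam_h - rel[:, 2]
    grid = np.full((n_bins + 2, n_bins + 2), np.inf)
    np.minimum.at(grid, (ij[:, 1], ij[:, 0]), depth)  # keep the topmost surface

    inner = grid[1:-1, 1:-1]  # discard the padding bins
    return cam_h - inner
```

Clipping the indices keeps every point in the batch, so the scatter works on fixed-size arrays, while slicing off the border afterwards removes the influence of the out-of-range points.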

By now, we can concatenate the scene points feature extracted from MLPs with the spherical angular pattern and BEV elevation feature to obtain the scene feature . Such Spherical-BEV perception can supply the local scene cues essential for instant scene-aware motion synthesis given any input scenes (demonstrated in Section 4.5).

3.3 Networks

The fundamental architectures of the networks bear resemblance to those employed in previous works [2, 3] for motion synthesis. In this work, our networks focus more on how to model the correlation between human embedding and scene hints extracted from the PBLSP, and later attempt to sample feasible goals in the scene and infer rational future motion sequence under the guidance of designed anchor-based states with human-scene contact information.

GoalNet. Our GoalNet takes a CVAE [47] structure shown in Figure 5 (a) to model the goal distribution given the current human body and scene. We concatenate the scene feature from the PBLSP module with the human start information to exploit the human-scene relation and obtain the goal condition feature . Next, we extract the start-goal moving feature from and combine it with to predict the mean and variance for the normal distribution of the goal latent code. We then sample the goal latent code from this distribution and predict the most likely goal given the condition feature with human-scene hints.

To train GoalNet, we aim to reconstruct the goal position and orientation and to push the goal latent space close to a standard normal distribution. The supervision is formulated as:

| (1) |

| (2) |

where represents the KL divergence between these two normal distributions, and thus can be simplified as:

| (3) |
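For reference, the KL divergence between a diagonal Gaussian with mean μ and variance σ² and the standard normal has the well-known closed form ½ Σ (μ² + σ² − log σ² − 1). The helper below evaluates it; the function name and the log-variance parameterization are choices of this sketch, not notation from the paper.

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


# A latent code that already matches the prior incurs no penalty.
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))
```

Predicting the log-variance rather than the variance is a common choice because it keeps the variance positive without any explicit constraint.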

In the motion synthesis stage, we also utilize the elevation map to filter out impossible areas in BEV, which works as a filter . Specifically, we build a graph based on the elevation map with each node representing a horizontal position and recording the elevation. Given the current state and position node, we can easily judge the state transition according to the relative elevation between neighboring nodes. For instance, the transition from idle to sitting is usually accompanied by an elevation change of 0.3-0.6 meters. We can then extract the possible area according to the graph nodes with a possible state.

We then sample the final root position from the distribution given by a multiplication of the normal distribution and the filter , and is a hyper-parameter that is commonly set to in our setting. Finally, we adjust the z-axis position according to the elevation map to obtain and adjust z-axis root orientation according to a possible path from to to obtain .
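One way to picture the filtered goal distribution is on a discrete BEV grid: a flood fill over the elevation graph marks the cells that can be reached, and the Gaussian goal density is multiplied by that mask before sampling. Everything specific here is an assumption of the sketch: the 4-neighbour graph, the `max_step` elevation threshold, the isotropic Gaussian in grid units, and the function names.

```python
import math
from collections import deque


def reachable_mask(elev, start, max_step=0.2):
    """Flood fill from `start` over cells whose neighbour elevation jump is small."""
    rows, cols = len(elev), len(elev[0])
    mask = [[False] * cols for _ in range(rows)]
    mask[start[0]][start[1]] = True
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]:
                if abs(elev[nr][nc] - elev[r][c]) <= max_step:
                    mask[nr][nc] = True
                    queue.append((nr, nc))
    return mask


def filtered_goal_weights(elev, start, mean, sigma, max_step=0.2):
    """Gaussian goal density times the reachability filter, normalised to sum to 1."""
    mask = reachable_mask(elev, start, max_step)
    weights = [
        [math.exp(-((r - mean[0]) ** 2 + (c - mean[1]) ** 2) / (2 * sigma ** 2))
         if mask[r][c] else 0.0
         for c in range(len(elev[0]))]
        for r in range(len(elev))
    ]
    total = sum(map(sum, weights))
    return [[w / total for w in row] for row in weights]
```

A goal cell could then be drawn with `random.choices` over the flattened weights; cells behind a wall or on an unreachable platform get zero probability even if the network places the Gaussian mean there.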

RouteNet. RouteNet is designed to predict the future trajectories and orientations where surrounding scene point cloud , start-goal route , and initial pose are taken as network inputs. The structure of RouteNet is presented in Figure 5 (b). As shown, we again extract the scene feature using the PBLSP module and use an LSTM [48] block to extract the start-goal moving feature . Meanwhile, we take the SMPL-X parameters to represent human start pose . Then, these three features are concatenated to exploit the human-scene correlation and, after several MLPs, predict the route in the future frames. To train RouteNet, we use the L1 loss on position and orientation estimation to guide the route prediction:

| (4) |

In the inference stage, RouteNet can directly predict the future route within frames based on current human-scene information and sampled goal.

PoseNet. As shown in Figure 6, we also decide to take a CVAE network structure for PoseNet to infer the future pose sequences given the current human-scene information and predicted route. For the condition encoding, we extract scene feature , start-goal moving feature and start pose feature similarly, and take a fully-connected network to encode the route feature . These features are concatenated to obtain the pose condition feature . Meanwhile, we utilize several fully connected layers to extract the pose feature from pose sequences . Then, we take several MLPs to exploit the correlation of pose condition feature and pose feature , and later extract the mean and variance values for the normal distribution of pose latent code. After that, we can sample the pose latent vector from .

Along with latent code and human-scene condition , we use an additional LSTM to extract frame-wise route features as position queries, and then predict the state probability at each frame accordingly. As for locomotion, we take the relative transformations between consecutive routes, rather than the routes themselves, as input to predict the phase difference , and infer the phase sequence via cumulative summation.
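The cumulative summation of phase steps can be written in a few lines. The sketch assumes that the phase is normalized to the interval [0, 1) and wraps around after each gait cycle, which is a common convention but is not stated above.

```python
from itertools import accumulate


def integrate_phase(phase_steps, start_phase=0.0):
    """Turn per-frame phase increments into a wrapped phase sequence."""
    running = accumulate(phase_steps, initial=start_phase)
    next(running)  # skip the initial value; keep one phase per predicted step
    return [p % 1.0 for p in running]


print(integrate_phase([0.25, 0.25, 0.25, 0.5]))
```

Predicting increments and integrating them keeps the phase monotone within a cycle, which is harder to guarantee when the absolute phase is regressed frame by frame.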

Later, following previous works [19, 20], we use a mixture of expert networks to infer the pose sequence. The mixed network weight at each frame is controlled by the predicted probability of state at frame . The condition feature , latent code , initial pose , route , and inferred phase are then fed into the mixed network to predict the entire pose sequence .
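In mixture-of-experts pose networks of this kind, the per-frame layer weights are typically a probability-weighted sum of the expert weights. The sketch below shows that blending for a single linear layer; the shapes, the renormalization of the probabilities, and the function names are assumptions made for illustration.

```python
import numpy as np


def blend_expert_weights(state_prob, expert_weights):
    """Blend expert weight matrices with the predicted state probabilities.

    state_prob: (S,) probabilities over states for one frame.
    expert_weights: (S, out_dim, in_dim), one weight matrix per expert.
    """
    p = np.asarray(state_prob, dtype=float)
    p = p / p.sum()  # guard against probabilities that do not sum to one
    return np.tensordot(p, np.asarray(expert_weights, dtype=float), axes=1)


def mixed_linear(x, state_prob, expert_weights):
    """Apply the blended linear layer to an input feature vector."""
    return blend_expert_weights(state_prob, expert_weights) @ np.asarray(x, dtype=float)
```

With a one-hot state probability this reduces to running a single expert, while soft probabilities give a smooth transition between states such as locomotion and sitting.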

For the training of PoseNet, we supervise the network by anticipating the intermediate state&phase and reconstructing the input pose sequence, where loss can be formulated as follows:

| (5) |

where is KL Divergence for pose latent code distribution. , and are the L1 loss for body joint, mesh and parameter reconstruction. is the cross entropy loss in state classification and is the L1 loss in phase step prediction. The explicit formulation of these losses can be defined as:

| (6) | ||||

| (7) | ||||

| (8) | ||||

| (9) |

| (10) | ||||

| (11) |

Here, and are the predicted mean and variance value of the latent code in PoseNet. and indicate the SMPL-X [9] body joints and mesh vertices formed by the translation, orientation, and body shape and pose. / contains the predicted/P-GT body pose and hand pose parameter /. / is the predicted/P-GT probability of state , while / is the predicted/P-GT phase step.

3.4 Iterative Latent Motion Update

Given current human-scene information and goal, we can predict the motion in future frames, which is reasonable for a static scene. However, in dynamic scene point clouds where other characters or vehicles move around, the future motion should be updated accordingly. So, we choose to iteratively predict future motion and use it to update previous latent motion (derived from , , ) to both benefit from the stability of prior-based motion synthesis and responsiveness of iterative methods when handling dynamic 3D environments.

Here, we introduce a time-variant motion blending to iteratively update the latent motion using newly hypothesized motions, and a continuous space for motion is necessary for this motion blending. Specifically, we choose the required continuous space formed by the Cartesian product of trajectory in Euclidean space, root orientation in 6D continuous space [49] and pose feature in SMPL-X prior space [9]. For initialization, we directly set the latent motion as . Here, we first consider the latent route update. Given previous latent route and current predicted route , we can update the latent route as shown in Figure 2 (e) through blending for motion continuity:

| (12) |

where , is the time-variant blending coefficient, and is a hyper-parameter that is set to to ensure responsiveness in our implementation. Here, , which works as a placeholder in the formulation, is set to a zero vector. Inspired by previous works [20, 3] which utilize the algorithm to help path planning, we take the trajectory given by the algorithm to adjust our predicted route. Rather than directly blending the routes, we find that the low-frequency component of the velocity given by can help avoid obstacles. Therefore, we first calculate the velocity sequences and from and (trajectory of ). Then, we utilize the Fast Fourier Transform to obtain the velocity spectrum:

| (13) |

After that, we blend the velocity sequence into the spectrum space through where is a low-pass filter. The desired trajectory is derived from the blended velocity via

| (14) |

Here, we can calculate the z-axis angular difference between current trajectory and desired trajectory at the -th frame in the future where is set to . Then, the fused route can be adjusted in the matrix representation . As for the poses, the latent pose sequence is updated the same as Eq. 12, while the latent motion is updated as .
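The frequency-domain velocity blending can be sketched with NumPy's real FFT. The sketch assumes an ideal low-pass filter with a hard cutoff (`cutoff` bins, a placeholder value) and assumes that the planned trajectory contributes the low-frequency bins while the predicted trajectory keeps the remaining ones; the orientation adjustment described above is not included.

```python
import numpy as np


def blend_velocity_lowpass(pred_traj, plan_traj, cutoff=2):
    """Mix the low-frequency velocity of a planned path into a predicted path.

    pred_traj, plan_traj: (T, D) positions with the same shape and start point.
    """
    pred = np.asarray(pred_traj, dtype=float)
    plan = np.asarray(plan_traj, dtype=float)

    # Frame-to-frame velocities of both trajectories.
    v_pred = np.diff(pred, axis=0)
    v_plan = np.diff(plan, axis=0)

    # Velocity spectra along the time axis.
    f_pred = np.fft.rfft(v_pred, axis=0)
    f_plan = np.fft.rfft(v_plan, axis=0)

    # Ideal low-pass mask: 1 for the lowest `cutoff` bins, 0 elsewhere.
    lowpass = (np.arange(f_pred.shape[0]) < cutoff).astype(float)[:, None]
    f_mix = lowpass * f_plan + (1.0 - lowpass) * f_pred

    # Back to the time domain, then integrate velocities from the start point.
    v_mix = np.fft.irfft(f_mix, n=v_pred.shape[0], axis=0)
    return np.vstack([pred[:1], pred[0] + np.cumsum(v_mix, axis=0)])
```

Since the zero-frequency bin carries the summed velocity, even `cutoff=1` makes the blended path end where the planned path ends, while the higher-frequency detail of the predicted motion is preserved.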

The final synthesized motion from to is extracted as . We will terminate the generation or sample the next goal when the distance between goal and current position is smaller than a tolerance .
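The iterative update can be summarized by one blending step per executed frame. The exact time-variant coefficient is not reproduced here, so the sketch borrows a PFNN-style ramp, w_i = 1 − (1 − i/T)^τ, as a stand-in: near-future frames stay close to the previous latent motion for continuity, and far-future frames follow the new prediction. The exponent `tau` and the function name are assumptions.

```python
import numpy as np


def blend_latent(prev_latent, new_motion, tau=2.0):
    """One latent-motion update step.

    prev_latent, new_motion: (T, D) sequences in a continuous motion space.
    Returns the updated (T, D) latent motion; row 0 is the next execution frame.
    """
    prev = np.asarray(prev_latent, dtype=float)
    new = np.asarray(new_motion, dtype=float)
    n_frames = len(new)

    # The first frame of the previous latent motion has been executed, so shift
    # by one frame and pad the tail with a zero placeholder.
    shifted = np.vstack([prev[1:], np.zeros((1, prev.shape[1]))])

    # Weight of the new prediction grows with the look-ahead and reaches 1 at
    # the last frame, so the zero placeholder never contributes.
    w = 1.0 - (1.0 - np.arange(1, n_frames + 1) / n_frames) ** tau
    return (1.0 - w)[:, None] * shifted + w[:, None] * new
```

In a synthesis loop, the first row of the returned array would be appended to the output motion, and the array itself becomes `prev_latent` for the next iteration.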

Thanks to the anchor-based state and phase prediction, we can introduce a no-skating operation for contacted parts during iterative latent motion updates. Taking locomotion as an example, we know which part of the body should be in contact with the scene after checking current state and phase . We calculate the mean position of anchored body part from the execution frame of previous latent motion , and derive the new position from current latent motion . Then, the current latent motion is corrected for no-skating through a translation of , where is set to in our setting.
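As a rough illustration of the no-skating correction, the sketch below translates the root trajectory by a scaled difference between the previous and the new anchor positions. The form of the translation and the `strength` factor are assumptions of this sketch, since the exact expression and its value are not reproduced above.

```python
def no_skate_translation(prev_anchor, new_anchor, strength=1.0):
    """Offset that moves the anchored part back towards its previous position."""
    return tuple(strength * (p - n) for p, n in zip(prev_anchor, new_anchor))


def apply_translation(root_traj, offset):
    """Shift every root position of the latent motion by the same offset."""
    return [tuple(x + o for x, o in zip(frame, offset)) for frame in root_traj]


# The stance foot drifted 0.5 m along x between two updates.
offset = no_skate_translation((1.0, 2.0, 0.0), (1.5, 2.0, 0.0))
print(apply_translation([(0.0, 0.0, 0.9), (0.5, 0.0, 0.9)], offset))
```

Applying one rigid offset to the whole latent motion keeps the relative motion between frames unchanged and only removes the drift at the contact.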

4 Experiments

4.1 Datasets

PROX. We use PROX [24], a dataset of real human motion in 3D scenes, for performance evaluation. It provides motion sequences (30FPS) of different subjects in scanned scenes. All route and pose information is represented by SMPL-X [9] parameters and obtained through a fitting algorithm. Following Wang et al. [2], we take the fitted parameters as pseudo-ground truth (P-GT), and use motion sequences in 8 scenes for training and data in the remaining 4 scenes for testing. Each sample contains the current information, the goal position and orientation, and the motion in the future 2 seconds.

GTA-IM. We also use GTA-IM [1], a dataset containing GTA motions in 3D scenes, for motion synthesis in more open spaces. It contains motion sequences (30FPS) of various characters in large scenes represented by RGB-D images. Each pose is represented by the locations of the skeleton joints.

In order to align the data format of the two datasets and unify the evaluation, we extend MMHuman3D [50] to fit the SMPL-X parameters using skeleton joints from GTA-IM where SMPL-X parameters of consecutive frames are constrained to ensure continuity, and we take these fitted parameters as pseudo-ground truth (P-GT). We also build a pipeline based on Open3D [51] to obtain the scene mesh, point cloud, and Signed Distance Function (SDF) of each scene from the RGB-D images. We refer to the training/testing split given by Wang et al. [52] where sequences in scenes are used for training and sequences in scenes are used for testing. Similar to the data format of PROX, each sample contains the current information, the goal position and orientation, and the motion in the future 2 seconds, and all starting frames are sampled with a stride of 5 frames.

4.2 Implementation Details

For detailed parameter settings, the spherical angular depth is fed into a small network consisting of several layers of light-weight spherical convolution and fully connected layers to extract the spherical angular pattern . On the other side, the BEV elevation feature is extracted from the BEV elevation map through a small 2D convolution network.

In the GoalNet, the dimension of the goal condition feature is set to , and the mean and variance value of the goal latent code is set to . The start pose feature from the SMPL-X prior and the goal-based moving feature are used in the RouteNet. As for the PoseNet, an additional route feature supplies route hints, while the dimension of the pose sequence latent code is set to .

In the training procedure, are set to , , , , , , , respectively, for both PROX and GTA-IM datasets. GoalNet, RouteNet, and PoseNet are trained independently by Adam [53] optimizers with learning rates {, , }. All these networks are trained for epochs.

Based on these settings, all our networks can be trained on a machine with 16G RAM and 11G VRAM, and the total networks’ inference time is ms on a single RTX 3090 after training.

4.3 Evaluation Metrics

Reconstruction and Physical Metric. To evaluate how well different methods reconstruct the future motion given the current subject information, the goal position & orientation, and the surrounding scene, we follow Wang et al. [2] and calculate the mean L1 distance in motion translation, orientation, and pose parameters in SMPL-X latent space between the pseudo-ground truth and the reconstructed motions. Following previous works [2, 22], we also report the Mean Per Joint Position Error (MPJPE) and Mean Per Vertex Position Error (MPVPE), which correspond more closely to visual differences. For the SMPL-X human meshes of the predicted motion, we also consider physical metrics, namely the Contact and Non-collision scores [16, 17], which measure the ratio of bodies in contact with the scene and the ratio of mesh vertices in free space, respectively.
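As a rough illustration of these metrics, the sketch below computes a mean per-point position error (usable for both MPJPE on joints and MPVPE on vertices) and the two physical scores from per-vertex SDF values. The function names, the sign convention (non-negative SDF means free space), and the contact threshold `eps` are assumptions made here; the exact definitions follow [16, 17] and may differ in detail.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mean_per_point_error(
    pred: Sequence[Sequence[Vec3]], target: Sequence[Sequence[Vec3]]
) -> float:
    """Mean Euclidean distance over all frames and points.

    Pass joints to obtain MPJPE, or mesh vertices to obtain MPVPE.
    """
    total, count = 0.0, 0
    for pred_frame, target_frame in zip(pred, target):
        for p, t in zip(pred_frame, target_frame):
            total += math.dist(p, t)
            count += 1
    return total / count


def non_collision_score(sdf_per_body: Sequence[Sequence[float]]) -> float:
    """Ratio of mesh vertices in free space (SDF >= 0 assumed to mean free)."""
    free = sum(1 for body in sdf_per_body for v in body if v >= 0.0)
    total = sum(len(body) for body in sdf_per_body)
    return free / total


def contact_score(
    sdf_per_body: Sequence[Sequence[float]], eps: float = 0.0
) -> float:
    """Ratio of bodies with at least one vertex touching the scene."""
    touching = sum(1 for body in sdf_per_body if any(v <= eps for v in body))
    return touching / len(sdf_per_body)
```

The scores in the result tables are percentages, so these ratios would be multiplied by 100 before reporting.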

Naturalness and Perception Metric. In order to check the naturalness of the synthesized motion sequence, we additionally conduct a user study to rank the naturalness of generated motion given by different methods along with the pseudo-ground truth. For easier user study, we render all motions in 3D scenes into videos and design an online UI based on the Gradio framework [54] as shown in Figure 7.

Users are required to enter their username to fetch the first batch of samples given by different methods/P-GT or continue the rating procedure. These samples with the same initial information are randomly permuted and then presented to users. They are asked to give a score between to for each sample after watching all videos within the batch. After submitting their scores, they can get a new batch of samples if they have not rated all motion samples. They can also terminate the rating at any time because we will record the results once they submit the scores and usernames can be used to continue the procedure. Finally, we will collect all the results and calculate the mean scores and variance values of different methods for both PROX [24] and GTA-IM [1].
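The rating procedure above amounts to shuffling each batch before display and then aggregating the submitted scores per method. A minimal sketch is given below; the function names, the seeded shuffle, and the use of the population variance are assumptions introduced here, and the ratings in the usage example are toy values rather than results from the study.

```python
import random
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple


def shuffled_batch(samples: Sequence[str], seed: int) -> List[str]:
    """Return the samples of one batch in a random order, hiding the method."""
    batch = list(samples)
    random.Random(seed).shuffle(batch)
    return batch


def aggregate(ratings: Iterable[Tuple[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Map each method to (mean score, variance) over all submitted ratings."""
    by_method = defaultdict(list)
    for method, score in ratings:
        by_method[method].append(score)
    return {
        method: (statistics.mean(scores), statistics.pvariance(scores))
        for method, scores in by_method.items()
    }


# Toy ratings for illustration only.
toy_ratings = [("Ours", 3), ("Ours", 4), ("P-GT", 5), ("P-GT", 4), ("Ours", 2)]
summary = aggregate(toy_ratings)
```

Because each submission is recorded independently, the aggregation can be rerun at any time on whatever ratings have been collected so far.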

4.4 Experimental Results

Comparison on PROX. The results of the compared methods for motion reconstruction on the PROX [24] dataset are reported in Table I, where our method outperforms all previous works in the precision of position, orientation, and pose estimation. The contact and non-collision scores of the predicted future motions are also reported in this table. Our method achieves comparable or even better performance than previous methods on these two metrics, even without an additional optimization stage. The results given by Wang et al. [2] with the optimization stage achieve the highest non-collision score. However, this only trades contact for non-collision, and the optimization is quite time-consuming for scene-aware motion synthesis.

| Methods | Transl. | Orient. | Pose | MPJPE | MPVPE | Cont. | Non-col. |
|---|---|---|---|---|---|---|---|
| Wang et al. [2] | 11.94 | 12.88 | 41.94 | 33.39 | 31.40 | 99.84 | 93.88 |
| w/ opt [2] | 14.06 | 14.20 | 57.13 | 35.66 | 34.18 | 97.17 | 99.75 |
| Route+PSI [16, 2] | 11.94 | 12.88 | 38.21 | 32.08 | 30.66 | 99.92 | 93.06 |
| HMP [22] | 11.33 | 15.97 | 48.35 | 30.83 | 29.52 | 99.85 | 94.49 |
| Ours | 9.00 | 11.96 | 35.98 | 26.35 | 24.75 | 99.53 | 95.06 |

We also present the visual results of previous works and the proposed method in Figure 8. As can be seen in the first row, the turning around during locomotion given by our method is more continuous than the competitors. Meanwhile, the proposed method achieves a higher level of naturalness in the transition between locomotion and sitting, as indicated in the second row. In addition, our predicted sitting motions in the following three rows are more similar to the pseudo-ground truth and more compatible with surrounding scenes thanks to the specifically designed local scene perception. The generated sitting poses can change continuously according to the distance to the chair, and this is attributed to the geometry perception from the PBLSP module which guides the PoseNet to control scene-aware state coefficients and finish the sitting procedure.

Comparison on GTA-IM. Here, we also report the motion reconstruction performance of different methods on GTA-IM dataset in Table II. This table shows that the proposed method can also achieve the best motion reconstruction performance among all compared methods. As for the physical metrics, synthesized motions predicted by our method achieve the highest non-collision score. Even though the optimization stage can improve the contact score of Wang et al. [2] on this dataset, it sacrifices the non-collision metric and results in a much lower score.

| Methods | Transl. | Orient. | Pose | MPJPE | MPVPE | Cont. | Non-col. |
|---|---|---|---|---|---|---|---|
| Wang et al. [2] | 5.73 | 8.44 | 69.54 | 19.95 | 17.74 | 95.88 | 94.66 |
| w/ opt [2] | 8.40 | 8.71 | 96.83 | 24.85 | 22.59 | 99.35 | 90.93 |
| Route+PSI [16, 2] | 5.73 | 8.44 | 69.09 | 19.43 | 17.43 | 95.73 | 94.75 |
| HMP [22] | 7.28 | 9.91 | 78.98 | 21.65 | 19.67 | 97.00 | 94.63 |
| Ours | 4.88 | 7.38 | 54.28 | 15.28 | 13.86 | 97.89 | 95.82 |

The visual comparison of different methods on GTA-IM is presented in Figure 9. Given the scene point cloud and the initial human body information, all methods generate 4-second future motions with the highest probability. From the first row of Figure 9, we can see that the motion predicted by our method is more likely to circumvent the column than those of other methods, owing to its perception of obstacles. According to the second row, the phase information encoded by the discrete cosine transform in HMP [22] provides only limited phase hints for short-term motion, while the phase step information predicted by our method better guides the locomotion and ensures continuity in long-term motion synthesis. From the last few examples, we can see that the proposed method better reconstructs future motion with scene-aware actions given a goal and a starting human body in 3D scenes. This indicates that recognizing the local geometry helps our method predict the correct anchor-based state and synthesize plausible motion.

Naturalness and Perception Similarity. Human evaluations are used to judge the naturalness of the synthesized motions. For both the PROX and GTA-IM datasets, we generate samples for each method and present them to users for scoring. Finally, results are collected for each method ( results per sample). We present the results of the user study in Table III, which demonstrates that the motions synthesized by the proposed pipeline are more natural than those synthesized by previous methods. The average score of P-GT on GTA-IM is lower than that on PROX, which we attribute to the synthetic nature of GTA-IM.

| Methods | Score on PROX, mean (variance) | Score on GTA-IM, mean (variance) |
|---|---|---|
| Wang et al. [2] | 1.62(0.61) | 1.64(0.75) |
| w/ opt [2] | 2.33(0.71) | 1.86(0.74) |
| Route+PSI [16, 2] | 1.63(0.60) | 1.75(0.88) |
| HMP [22] | 1.37(0.48) | 1.38(0.62) |
| Ours | 3.33(0.90) | 3.08(1.08) |
| P-GT | 4.50(0.86) | 3.45(1.14) |

We also report the Fréchet Distance in motion feature space in Table IV which indicates the perception similarity between synthesized motions and original ones. We can see that the synthesized motions given by our method are more similar to motions in the original datasets than other competitors.

| Methods | FD on PROX | FD on GTA-IM |
|---|---|---|
| Wang et al. [2] | 23.71 | 101.46 |
| w/ opt [2] | 28.87 | 110.10 |
| Route+PSI [16, 2] | 19.33 | 85.11 |
| HMP [22] | 33.35 | 110.83 |
| Ours | 15.99 | 48.79 |
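For reference, a Fréchet Distance between two sets of motion features is commonly computed under a Gaussian assumption, as in the Fréchet Inception Distance. The form below is a sketch under that assumption; the text does not confirm that this is the exact definition used here, and the symbols are introduced only for illustration: $\mu_s, \Sigma_s$ denote the mean and covariance of features from synthesized motions, and $\mu_o, \Sigma_o$ those of features from the original motions.

$$
\mathrm{FD} = \lVert \mu_s - \mu_o \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_s + \Sigma_o - 2\left( \Sigma_s \Sigma_o \right)^{1/2} \right)
$$

Under this form, a lower value means the two feature distributions are closer, which matches the reading of the table where smaller FD indicates higher similarity to the original motions.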

Diversity in Motion Generation. Due to the randomness in the sampling procedure of goal and pose generation, we can also benefit from the diversity in the synthesized human motions. Here, we show several synthesized motions in Figure 10 that have the same initial human pose, position, and orientation, and were generated under the same surrounding environment. As can be seen, various future motions (shown in different colors) can be generated by our method given the same initial condition.

Motion Synthesis in Dynamic Environment. In this paper, we also consider that the surrounding scene point cloud may change over time. The most common situation in the real world is that other persons or vehicles are moving around in the scene. Here, we conduct experiments to evaluate the performance of the proposed method in two typical dynamic environments: (1) several other persons moving around in an indoor scene from GTA-IM [1], (2) a fork driving on the road in an outdoor scene from Semantic3D [55]. The motions synthesized in these scenes are presented in Figure 11. The results indicate that our method can perceive their movement in time and update the latent motion to adapt to these changes in the surrounding scene point clouds.

4.5 Ablation Study

In this section, we conduct further experiments to check the effectiveness of the proposed DEMOS pipeline and support our claims. Without loss of generality, our ablation studies are mainly conducted on the GTA-IM dataset.

Instant Motion Prediction. To evaluate the contribution of different components of our method to instant motion prediction, we first check the performance of the pure backbone (annotated as Backbone). For the Backbone, we take the same network structures but without supervision on state and phase, and only utilize PointNet to extract scene features. Then, we add the supervision given by the automatic annotation of Anchor-Based State (ABS) and the corresponding phase for cyclic locomotion. For the body-correlated Projection-based Local Scene Perception (PBLSP), which exploits the relationship between the scene’s local geometry and the start human body, we integrate the Spherical Angular Perception (SAP) and BEV Elevation Perception (BEP) into the PBLSP module step by step.

We report all the results in Table V. The results indicate that guiding the network to predict the state and phase of procedure can help synthesize motion with correct and unambiguous action. Moreover, recognizing the surrounding scene point cloud via Spherical-BEV perception and leveraging its correlation with human start information can enhance the precision of the predicted route and motion. Specifically, the SAP focuses more on route prediction and we think that is because SAP can better recognize the objects in different directions. On the other hand, the BEP is more effective in pose sequence prediction because the body-centered elevation recognition can further provide height hints of surrounding objects for state prediction. These functional components can help the networks to predict scene-aware motions instantly via better recognizing the surrounding environment and exploiting the correlation between the scene and the human body.

| Methods | Transl. | Orient. | Pose | MPJPE | MPVPE | Cont. | Non-col. |
|---|---|---|---|---|---|---|---|
| Backbone | 5.64 | 8.62 | 70.06 | 19.35 | 17.16 | 96.69 | 94.89 |
| +ABS | 5.64 | 8.62 | 64.78 | 17.49 | 15.98 | 95.30 | 95.78 |
| +SAP | 5.07 | 7.46 | 61.68 | 16.26 | 14.63 | 96.70 | 95.85 |
| +BEP | 4.88 | 7.38 | 54.28 | 15.28 | 13.86 | 97.89 | 95.82 |

Spherical Perception. In the PBLSP module, the proposed Spherical Angular Perception (SAP) is utilized to recognize obstacles or contact objects in different directions. Compared to common spherical convolutions, the network of SAP is designed to be more lightweight when extracting scene hints from the angular depth map. For comparison with common spherical convolution, we replace the network of SAP with KTN [46] and report the results in Table VI. The results indicate that the proposed lightweight network of SAP achieves comparable performance thanks to the pre-computed angular depth representation.

| Methods | Transl. | Orient. | Pose | MPJPE | MPVPE | Cont. | Non-col. |
|---|---|---|---|---|---|---|---|
| Ours(SAP) | 4.88 | 7.38 | 54.28 | 15.28 | 13.86 | 97.89 | 95.82 |
| Ours(KTN) | 4.86 | 7.20 | 55.35 | 15.22 | 13.80 | 96.76 | 96.05 |

Responsiveness. In the proposed pipeline, we iteratively hypothesize new motion sequences with the help of the motion prior from the auto-encoding scheme and utilize them to update the latent motion, making the final synthesized motion both stable and responsive to the dynamic environment. In previous works, it is more common to generate the motion in the future frames given the current information, and then take the final frame frames later (sub-goal in Wang et al. [2]) as the new initial information for next motion synthesis. When generating the next hypothesized motions , the surrounding scene point cloud can also be updated. Lastly, will be concatenated as the final synthesized motion. Here, we compare these two strategies to demonstrate the advantage of the proposed pipeline in responsiveness. Figure 12 illustrates the results of the two strategies when another person is moving around. The visualization indicates that naive motion sequence generation and concatenation is less likely to circumvent another moving person and cannot accommodate a dynamic scene point cloud.

Stability. Furthermore, our method demonstrates better stability on long-term motion synthesis in scanned point cloud scenes when compared to the pure iterative competitor with an identical network structure. Here, we iteratively generate the body route and pose parameter in the next frame, and combine all of them to obtain the synthesized motion . We visualize two typical samples of the compared methods in Figure 13. The results indicate that the pure iterative method is more prone to diverge from a natural trajectory or collapse to a mean solution, which is unfavorable in the context of long-term motion synthesis.

5 Conclusion

In this paper, we design a Dynamic Environment Motion Synthesis (DEMOS) framework that benefits both from the stability of prior-based motion generation and the responsiveness of iterative motion updating. We introduce a Spherical-BEV perception to make the instantly generated motion consistent with the surrounding geometry without the need for an optimization stage. Thus, we can iteratively blend new hypothesized motion with the latent motion in an online manner, allowing the synthesized motion to adapt to the dynamic environment. We align the data format of PROX and GTA-IM to unify the training and evaluation procedure. Extensive experiments demonstrate that the proposed method generates more precise and natural motions, which are also plausible in various dynamic scene point clouds. Even so, this task remains challenging and is still at an early stage; properties such as tighter method integration and easier control of motion style can be pursued on top of the current pipeline in future work.

References

- [1] Z. Cao, H. Gao, K. Mangalam, Q.-Z. Cai, M. Vo, and J. Malik, “Long-term human motion prediction with scene context,” in European Conference on Computer Vision. Springer, 2020, pp. 387–404.

- [2] J. Wang, H. Xu, J. Xu, S. Liu, and X. Wang, “Synthesizing long-term 3d human motion and interaction in 3d scenes,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 9401–9411.

- [3] J. Wang, Y. Rong, J. Liu, S. Yan, D. Lin, and B. Dai, “Towards diverse and natural scene-aware 3d human motion synthesis,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 20 460–20 469.

- [4] C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 652–660.

- [5] H. Thomas, C. R. Qi, J.-E. Deschaud, B. Marcotegui, F. Goulette, and L. J. Guibas, “Kpconv: Flexible and deformable convolution for point clouds,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 6411–6420.

- [6] S. Zhang, Y. Zhang, Q. Ma, M. J. Black, and S. Tang, “Place: Proximity learning of articulation and contact in 3d environments,” in 2020 International Conference on 3D Vision (3DV). IEEE, 2020, pp. 642–651.

- [7] Y. Guo, H. Wang, Q. Hu, H. Liu, L. Liu, and M. Bennamoun, “Deep learning for 3d point clouds: A survey,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 43, no. 12, pp. 4338–4364, 2020.

- [8] M. Loper, N. Mahmood, J. Romero, G. Pons-Moll, and M. J. Black, “Smpl: A skinned multi-person linear model,” ACM transactions on graphics (TOG), vol. 34, no. 6, pp. 1–16, 2015.

- [9] G. Pavlakos, V. Choutas, N. Ghorbani, T. Bolkart, A. A. Osman, D. Tzionas, and M. J. Black, “Expressive body capture: 3d hands, face, and body from a single image,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 10 975–10 985.

- [10] C. Ionescu, D. Papava, V. Olaru, and C. Sminchisescu, “Human3.6m: Large scale datasets and predictive methods for 3d human sensing in natural environments,” IEEE transactions on pattern analysis and machine intelligence, vol. 36, no. 7, pp. 1325–1339, 2013.

- [11] I. Armeni, O. Sener, A. R. Zamir, H. Jiang, I. Brilakis, M. Fischer, and S. Savarese, “3d semantic parsing of large-scale indoor spaces,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 1534–1543.

- [12] A. Dai, A. X. Chang, M. Savva, M. Halber, T. Funkhouser, and M. Nießner, “Scannet: Richly-annotated 3d reconstructions of indoor scenes,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 5828–5839.

- [13] A. Chang, A. Dai, T. Funkhouser, M. Halber, M. Niessner, M. Savva, S. Song, A. Zeng, and Y. Zhang, “Matterport3d: Learning from rgb-d data in indoor environments,” arXiv preprint arXiv:1709.06158, 2017.

- [14] N. Mahmood, N. Ghorbani, N. F. Troje, G. Pons-Moll, and M. J. Black, “Amass: Archive of motion capture as surface shapes,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 5442–5451.

- [15] Y. Ren, C. Zhao, Y. He, P. Cong, H. Liang, J. Yu, L. Xu, and Y. Ma, “Lidar-aid inertial poser: Large-scale human motion capture by sparse inertial and lidar sensors,” IEEE Transactions on Visualization and Computer Graphics, vol. 29, no. 5, pp. 2337–2347, 2023.

- [16] Y. Zhang, M. Hassan, H. Neumann, M. J. Black, and S. Tang, “Generating 3d people in scenes without people,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 6194–6204.

- [17] M. Hassan, P. Ghosh, J. Tesch, D. Tzionas, and M. J. Black, “Populating 3d scenes by learning human-scene interaction,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 14 708–14 718.

- [18] K. Zhao, S. Wang, Y. Zhang, T. Beeler, and S. Tang, “Compositional human-scene interaction synthesis with semantic control,” in European Conference on Computer Vision, 2022.

- [19] S. Starke, H. Zhang, T. Komura, and J. Saito, “Neural state machine for character-scene interactions.” ACM Trans. Graph., vol. 38, no. 6, pp. 209–1, 2019.

- [20] M. Hassan, D. Ceylan, R. Villegas, J. Saito, J. Yang, Y. Zhou, and M. J. Black, “Stochastic scene-aware motion prediction,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 11 374–11 384.

- [21] S. Zhang, Y. Zhang, F. Bogo, M. Pollefeys, and S. Tang, “Learning motion priors for 4d human body capture in 3d scenes,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 11 343–11 353.

- [22] J. Xu, M. Wang, J. Gong, W. Liu, C. Qian, Y. Xie, and L. Ma, “Exploring versatile prior for human motion via motion frequency guidance,” in 2021 International Conference on 3D Vision (3DV). IEEE, 2021, pp. 606–616.

- [23] D. Holden, T. Komura, and J. Saito, “Phase-functioned neural networks for character control,” ACM Transactions on Graphics (TOG), vol. 36, no. 4, pp. 1–13, 2017.

- [24] M. Hassan, V. Choutas, D. Tzionas, and M. J. Black, “Resolving 3d human pose ambiguities with 3d scene constraints,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 2282–2292.

- [25] Y. Shen, C. Feng, Y. Yang, and D. Tian, “Mining point cloud local structures by kernel correlation and graph pooling,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4548–4557.

- [26] W. Wu, Z. Qi, and L. Fuxin, “Pointconv: Deep convolutional networks on 3d point clouds,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 9621–9630.

- [27] H. Lei, N. Akhtar, and A. Mian, “Spherical kernel for efficient graph convolution on 3d point clouds,” IEEE transactions on pattern analysis and machine intelligence, vol. 43, no. 10, pp. 3664–3680, 2020.

- [28] J. Gong, J. Xu, X. Tan, H. Song, Y. Qu, Y. Xie, and L. Ma, “Omni-supervised point cloud segmentation via gradual receptive field component reasoning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 11 673–11 682.

- [29] J. Gong, J. Xu, X. Tan, J. Zhou, Y. Qu, Y. Xie, and L. Ma, “Boundary-aware geometric encoding for semantic segmentation of point clouds,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 2, 2021, pp. 1424–1432.

- [30] Y. You, Y. Lou, R. Shi, Q. Liu, Y.-W. Tai, L. Ma, W. Wang, and C. Lu, “Prin/sprin: On extracting point-wise rotation invariant features,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 12, pp. 9489–9502, 2021.

- [31] Q. Hu, B. Yang, L. Xie, S. Rosa, Y. Guo, Z. Wang, N. Trigoni, and A. Markham, “Learning semantic segmentation of large-scale point clouds with random sampling,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 44, no. 11, pp. 8338–8354, 2021.

- [32] S. Prokudin, C. Lassner, and J. Romero, “Efficient learning on point clouds with basis point sets,” in Proceedings of the IEEE/CVF international conference on computer vision, 2019, pp. 4332–4341.

- [33] H. Yi, C.-H. P. Huang, S. Tripathi, L. Hering, J. Thies, and M. J. Black, “Mime: Human-aware 3d scene generation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 12 965–12 976.

- [34] J. F. Mullen, D. Kothandaraman, A. Bera, and D. Manocha, “Placing human animations into 3d scenes by learning interaction-and geometry-driven keyframes,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2023, pp. 300–310.

- [35] A. Alahi, V. Ramanathan, and L. Fei-Fei, “Socially-aware large-scale crowd forecasting,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 2203–2210.

- [36] A. Alahi, K. Goel, V. Ramanathan, A. Robicquet, L. Fei-Fei, and S. Savarese, “Social lstm: Human trajectory prediction in crowded spaces,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 961–971.

- [37] Z. Wang, J. Chai, and S. Xia, “Combining recurrent neural networks and adversarial training for human motion synthesis and control,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 1, pp. 14–28, 2019.

- [38] M. Petrovich, M. J. Black, and G. Varol, “Action-conditioned 3d human motion synthesis with transformer vae,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 10 985–10 995.

- [39] B. Parsaeifard, S. Saadatnejad, Y. Liu, T. Mordan, and A. Alahi, “Learning decoupled representations for human pose forecasting,” in Proceedings of the IEEE/CVF International Conference on Computer Vision Workshop, 2021, pp. 2294–2303.

- [40] D. Holden, O. Kanoun, M. Perepichka, and T. Popa, “Learned motion matching,” ACM Transactions on Graphics (TOG), vol. 39, no. 4, pp. 53–1, 2020.

- [41] S. I. Park, H. J. Shin, and S. Y. Shin, “On-line locomotion generation based on motion blending,” in Proceedings of the 2002 ACM SIGGRAPH/Eurographics symposium on Computer animation, 2002, pp. 105–111.

- [42] J. Xu, H. Xu, B. Ni, X. Yang, X. Wang, and T. Darrell, “Hierarchical style-based networks for motion synthesis,” in European conference on computer vision. Springer, 2020, pp. 178–194.

- [43] B. Wandt, H. Ackermann, and B. Rosenhahn, “3d reconstruction of human motion from monocular image sequences,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 8, pp. 1505–1516, 2016.

- [44] W. Straßer, “Schnelle kurven-und flächendarstellung auf grafischen sichtgeräten,” Ph.D. dissertation, 1974.

- [45] J. Gong, F. Liu, J. Xu, M. Wang, X. Tan, Z. Zhang, R. Yi, H. Song, Y. Xie, and L. Ma, “Optimization over disentangled encoding: Unsupervised cross-domain point cloud completion via occlusion factor manipulation,” in European Conference on Computer Vision, 2022.

- [46] Y.-C. Su and K. Grauman, “Kernel transformer networks for compact spherical convolution,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 9442–9451.

- [47] K. Sohn, H. Lee, and X. Yan, “Learning structured output representation using deep conditional generative models,” Advances in neural information processing systems, vol. 28, 2015.

- [48] S. Hochreiter and J. Schmidhuber, “Long short-term memory,” Neural computation, vol. 9, no. 8, pp. 1735–1780, 1997.

- [49] Y. Zhou, C. Barnes, J. Lu, J. Yang, and H. Li, “On the continuity of rotation representations in neural networks,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019, pp. 5745–5753.

- [50] M. Contributors, “Openmmlab 3d human parametric model toolbox and benchmark,” https://github.com/open-mmlab/mmhuman3d, 2021.

- [51] Q.-Y. Zhou, J. Park, and V. Koltun, “Open3D: A modern library for 3D data processing,” arXiv:1801.09847, 2018.

- [52] J. Wang, S. Yan, B. Dai, and D. Lin, “Scene-aware generative network for human motion synthesis,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 12 206–12 215.

- [53] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [54] A. Abid, A. Abdalla, A. Abid, D. Khan, A. Alfozan, and J. Zou, “Gradio: Hassle-free sharing and testing of ml models in the wild,” arXiv preprint arXiv:1906.02569, 2019.

- [55] T. Hackel, N. Savinov, L. Ladicky, J. D. Wegner, K. Schindler, and M. Pollefeys, “Semantic3d.net: A new large-scale point cloud classification benchmark,” arXiv preprint arXiv:1704.03847, 2017.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/gjy.jpg)

Jingyu Gong received his B.S. degree in Physics from Shanghai Jiao Tong University, China in 2019. He is now a Ph.D. student at the Department of Computer Science and Engineering, Shanghai Jiao Tong University, China. His research interests cover 3D point cloud recognition and 3D motion synthesis.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/wangmin.jpg)

Min Wang received his Ph.D. degree from the Department of Computer Science and Technologies, Shanghai Jiao Tong University. He is currently a senior researcher at SenseTime, responsible for research on human-computer interaction and AI content generation. His research interests include 3D Vision and Computer Graphics.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/wentao.jpg)

Wentao Liu received the PhD degree from the School of EECS, Peking University. He is currently the research director of SenseTime, responsible for end-edge computing research. The research products are widely applied in augmented reality, smart industry, and business intelligence. His research interests include computer vision and pattern recognition.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/qianchen.jpg)

Chen Qian is currently the executive research director of SenseTime, where he is responsible for leading the team in AI content generation and end-edge computing research in 2D and 3D scenarios. The technology is widely used by the top four mobile companies in China, by apps at home and abroad in augmented reality, video sharing and live streaming, by vehicle OEMs, and in smart industry. He has published dozens of articles in top journals and dozens of papers at top conferences, such as IEEE Transactions on Pattern Analysis and Machine Intelligence, CVPR, ICCV, and ECCV, with more than 4000 citations. He has also led the team to achieve first place in the Competition of Face Identification and Face Verification in the Megaface Challenge.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/zzz.png)

Zhizhong Zhang received the Ph.D. degree in pattern recognition and intelligent systems from the Institute of Automation, Chinese Academy of Sciences (CAS), in 2020. He is currently an Associate Research Professor with the School of Computer Science and Technology, East China Normal University. His research interests include image processing, computer vision, machine learning, and pattern recognition.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/XY.png)

Yuan Xie received the PhD degree in Pattern Recognition and Intelligent Systems from the Institute of Automation, Chinese Academy of Sciences (CAS), in 2013. He is currently a full professor with the School of Computer Science and Technology, East China Normal University, Shanghai, China. His research interests include image processing, computer vision, machine learning, and pattern recognition. He has published around 90 papers in major international journals and conferences including the IJCV, IEEE TPAMI, TIP, TNNLS, TCYB, NIPS, ICML, CVPR, ECCV, ICCV, etc. He has also served as a reviewer for more than 15 journals and conferences. Dr. Xie received the National Science Fund for Excellent Young Scholars in 2022.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3a5f9242-ccbf-47a6-b536-9e4529f1f356/mlz.png)

Lizhuang Ma received his B.S. and Ph.D. degrees from Zhejiang University, China in 1985 and 1991, respectively. He is now a Distinguished Professor at the Department of Computer Science and Engineering, Shanghai Jiao Tong University, China and the School of Computer Science and Technology, East China Normal University, China. He was a Visiting Professor at the Fraunhofer IGD, Darmstadt, Germany in 1998, and a Visiting Professor at the Center for Advanced Media Technology, Nanyang Technological University, Singapore from 1999 to 2000. His research interests include computer vision, computer aided geometric design, computer graphics, scientific data visualization, computer animation, digital media technology, and theory and applications for computer graphics, CAD/CAM. He serves as a reviewer for IEEE TPAMI, IEEE TIP, IEEE TMM, CVPR, AAAI, etc.