Delving Deep into Simplicity Bias for Long-Tailed Image Recognition

Abstract

Simplicity Bias (SB) is a phenomenon that deep neural networks tend to rely favorably on simpler predictive patterns but ignore some complex features when applied to supervised discriminative tasks. In this work, we investigate SB in long-tailed image recognition and find the tail classes suffer more severely from SB, which harms the generalization performance of such underrepresented classes. We empirically report that self-supervised learning (SSL) can mitigate SB and perform in complementary to the supervised counterpart by enriching the features extracted from tail samples and consequently taking better advantage of such rare samples. However, standard SSL methods are designed without explicitly considering the inherent data distribution in terms of classes and may not be optimal for long-tailed distributed data. To address this limitation, we propose a novel SSL method tailored to imbalanced data. It leverages SSL by triple diverse levels, i.e., holistic-, partial-, and augmented-level, to enhance the learning of predictive complex patterns, which provides the potential to overcome the severe SB on tail data. Both quantitative and qualitative experimental results on five long-tailed benchmark datasets show our method can effectively mitigate SB and significantly outperform the competing state-of-the-arts.

Index Terms:

Long-Tailed Image Recognition; Simplicity Bias; Deep Learning; Self-Supervised Learning.1 Introduction

Deep neural networks have revolutionized various computer vision applications such as image recognition, object detection, and semantic segmentation, just name a few. However, even the most powerful deep model suffers when learning from long-tailed distributed data. The major bottleneck lies on the unsatisfactory performance of the tail classes which are underrepresented by limited samples. Thus, at the core of long-tailed image recognition lies the strategies to mitigating such data imbalance, where common solutions include re-sampling [1, 2], loss re-weighting [3, 4, 5, 6], and data augmentation [7], etc.

In this work, we inspect long-tailed image recognition through the lens of simplicity bias (SB) [8, 9, 10]. SB is a phenomenon that when applied to supervised discriminative tasks such as image recognition, deep neural networks tend to rely favorably on simpler predictive patterns but ignore some complex features that can be important for model generalization. To unveil if there exists correlation between SB and the amount of data available for a specific class, we investigate SB in long-tailed image recognition and uncover that, under standard supervised losses such as cross-entropy, tail classes suffer more severely from SB. As shown in Figure 1, for underrepresented classes, simple patterns such as the “digits” are activated but complex patterns such as the “truck” are ignored. This observation partially explains the poor generalization performance on tail classes.

On the other side, different from supervised learning that aims to identify image patterns that are sufficient to distinguish the limited training samples of each particular class, self-supervised learning (SSL) is expected to encourage more comprehensive features to learn augmentation-invariant image representations [11]. Motivated by this, in our preliminary study we combine off-the-shelf SSL and supervised training together on long-tailed image recognition and empirically report that the SSL component can mitigate SB and performs in complementary to the supervised counterpart by enriching the features from tail samples and consequently taking better advantage of such rare samples. However, standard SSL methods are designed without explicitly considering the inherent distribution of the data in terms of classes and thus may not function optimally for long-tailed distributed data. To address such limitation, we propose a novel SSL method termed as “Triple-Level Self-Supervised Learning” (3LSSL) which is tailored to imbalanced data. More specifically, 3LSSL first performs the holistic-level SSL to enhance the learning of comprehensive patterns in the holistic level w.r.t. the raw input. It then develops a partial-level SSL by leveraging a masking manner to force the models to learn more comprehensive (complementary) information from the partial level w.r.t. the holistic level. To further consider the severe SB on tail data, it provides pseudo positive samples for an anchor sample based on the prediction results from the classifier, which essentially plays the role of augmenting the tail classes and forms as the augmented-level SSL.

To evaluate our method, we conduct extensive experiments on five benchmark long-tailed recognition datasets, i.e., long-tailed CIFAR-10/long-tailed CIFAR-100 [12], ImageNet-LT [13], Places-LT [14] and iNaturalist 2018 [15]. Quantitative results of classification accuracy on these datasets show that our 3LSSL method consistently surpasses existing state-of-the-art methods by a large margin. Also, the ablation studies of these crucial components in our method also validate their own effectiveness. In particular, to justify the effects of alleviation of simplicity bias, we demonstrate various qualitative results for visualization analyses. Beyond that, we expect our work can provide new understanding about learning from long-tailed data and inspire new ideas to address SB in long-tailed or rare data.

The rest of the paper is organized as follows. Section 2 retrospects the related work. Section 3 elaborates our investigation about simplicity bias in long-tailed image recognition tasks. Section 4 introduces the details of our proposed method. Experiments and analyses of both qualitative and quantitative aspects are provided in Section 5. Section 6 presents conclusions and future work.

2 Related Work

We briefly review the related work in the following three aspects, i.e., long-tailed image recognition, simplicity bias in neural networks, and self-supervised learning.

2.1 Long-Tailed Image Recognition

Long-tailed image recognition is a fundamental research topic in machine learning and computer vision, where it aims to overcome the data imbalance challenge [16, 1, 4]. In general, existing long-tailed image recognition methods can be roughly separated into the following paradigms. 1) Class re-balancing strategies: Re-balancing strategies, e.g., data re-sampling [17, 18] and loss re-weighting [19, 20], are conventional solutions for handling long-tailed distributed data. The popular re-sampling methods involve over-sampling by simply repeating data of minority classes [17, 2, 21] and under-sampling by abandoning data of dominant classes [18, 2, 1]. But with re-sampling, duplicated tailed samples might lead to over-fitting upon minority classes [22, 4], while discarding precious data will inevitably impair the generalization ability of deep networks. On the other hand, re-weighting belongs to another line of class re-balancing strategies, which works by allocating large weights for training samples of tail classes in loss functions, e.g., [20, 23]. 2) Decoupled learning: It is a recent trend towards effective long-tailed image recognition, which decouples the image representation learning and classifier learning to improve long-tailed classification performance. Specifically, Zhou et al. [24] and Kang et al. [25] decoupled classifier and representation learning and found that uniform sampling could benefit representation learning while classifier learning favors class-balance sampling. Later, Wang et al. [26] further introduced supervised contrastive learning into decoupled learning to improve the discriminative ability of image representations. Recently, Alshammari et al. [27] developed a sequential decoupled learning method with weight balancing strategies and achieved promising performance. 3) Ensembling: Handling different parts of the long-tailed data with multiple experts shows promising performance for long-tailed image recognition, which works essentially as ensembling by integrating these experts to obtain the optimal model over the entire dataset [28, 29, 30]. Concretely, [29] distilled multiple teacher models into a unified model, and each teacher focused on a relatively balanced group such as many-shot, medium-shot and few-shot classes. While for [31], it learned multiple distribution-aware experts by a dynamic expert routing module.

2.2 Simplicity Bias in Neural Networks

In the literature, simplicity bias (SB), i.e., the tendency of supervised neural networks to find simple patterns, was proposed to analyze the generalization of neural networks [8, 10]. In particular, in [8], it originally showed that neural networks trained with SGD are biased to learning the simplest predictive features in the data, while overlooking the complex but equally-predictive patterns. Inspired by this, Teney et al. [9] demonstrated that SB can be mitigated through a diversity constraint and explored how to improve out of distribution generalization by overcoming SB. In this paper, to our best knowledge, we are the first to investigate the SB problem in the long-tailed image recognition task, and propose methods that can effectively mitigate SB in long-tailed distribution learning.

2.3 Self-Supervised Learning

Self-supervised learning (SSL) has attracted increasing attention thanks to its ability to learn meaningful data representations without human annotation. It is capable of adopting self-defined pseudo labels as supervision and employs the learned representations for various downstream tasks, e.g., object detection, segmentation in computer vision. In particular, contrastive learning has recently become a dominant research area in SSL, which aims at learning augmentation-invariant representations. The basic idea thereof is contrasting the agreement between positive pairs, e.g., images under different augmentations, against those from negative pairs. More specifically, in SimCLR [32], a successful end-to-end model consisting of two encoders was proposed, where one encoder generates representations for positive samples and the other learns representations for negative samples. However, SimCLR required a large batch size to achieve satisfactory performance. The idea of memory bank [33] provides a potential solution for alleviating this limitation by pre-storing encodings of negative samples. Later, in MoCo [34], it followed a dictionary look-up perspective for conducting contrastive learning by designing a dynamic dictionary with a queue and a moving-averaged encoder. SwAV [35] was proposed by utilizing a clustering algorithm to group similar feature together for similarity calculation. Very recently, [36] investigated SSL under the dataset imbalance setting and studied the problem of robustness to imbalanced training of self-supervised representations. In this paper, we empirically validate that SSL can learn more comprehensive features from input images, which can mitigate simplicity bias, especially for the tail data in long-tailed distribution. Moreover, we further propose a novel SSL method tailored for imbalanced data to boost the quality of representations learned from long-tailed data.

3 The Pitfalls of Simplicity Bias in Long-Tailed Image Recognition

Simplicity bias (SB) [8] is recently observed from neural networks trained with stochastic gradient descent, which shows the networks rely preferentially on few simple predictive features while ignoring more complex predictive features. The SB phenomenon partially explains the lack of robustness and generalization of deep neural networks in various vision problems, e.g., out of distribution [9], confidence calibration [37], fooling examples [38], etc. In this section, we investigate SB in the long-tailed image recognition task by conducting a series of preliminary experiments, as well as performing a simple baseline method for preliminary empirical verification.

3.1 Preliminary Empirical Studies

We present the dataset and preliminary results in the following, as well as discussing the investigation of simplicity bias in long-tailed image recognition.

3.1.1 Dataset

| Test data | 100 | 10 | 1 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Head | Medium | Tail | All | Head | Medium | Tail | All | Head | Medium | Tail | All \bigstrut[t] | |

| MNIST-CIFAR-LT | 99.90 | 99.40 | 98.50 | 99.33 | 99.90 | 99.80 | 99.63 | 99.79 | 99.87 | 99.93 | 99.90 | 99.90 \bigstrut[t] |

| MNIST-only | 99.60 | 98.40 | 94.90 | 97.83 | 99.55 | 98.93 | 98.23 | 98.97 | 99.72 | 99.47 | 98.96 | 99.42 |

| CIFAR-only | 61.67 | 59.00 | 32.16 | 52.02 | 66.10 | 69.80 | 68.16 | 67.83 | 64.40 | 80.50 | 84.46 | 75.25 |

| CIFAR-only (Oracle) | 88.17 | 73.16 | 69.53 | 78.08 | 87.92 | 85.83 | 88.40 | 87.44 | 85.58 | 92.06 | 95.03 | 91.16 |

| Accuracy gap | -26.50 | -14.16 | -37.37 | -26.06 | -21.82 | -16.03 | -20.24 | -19.61 | -21.18 | -11.56 | -10.57 | -15.91 |

In order to explicitly inspect the simplicity bias (SB) phenomenon on long-tailed image recognition, we construct a long-tailed toy dataset by vertically concatenating MNIST [39] images onto CIFAR-10 [12] images. The dataset contains 10 classes, where each class is a combination of a unique MNIST class and CIFAR-10 class111The constructed dataset has the following correspondences of the original classes of MNIST and CIFAR-10: “0-airplane”, “1-automobile”, “2-bird”, “3-cat”, “4-deer”, “5-dog”, “6-frog”, “7-horse”, “8-ship”, and “9-truck”., cf. Figure 1a. More importantly, these concatenated images follow a long-tailed distribution in terms of classes, where the number of images per class decreases monotonically from class with digit “0” to class with digit “9”. The imbalance ratio222The imbalance ratio , where and denote the number of training samples for the most and least frequent classes, respectively. is varied by 100, 10 and 1, which are similar to the previous work [4, 5]. We term this constructed dataset as MNIST-CIFAR-LT. More specifically, its training and test splits comprise 50,000 and 10,000 images of size , where the training set is long-tailed and the test set is class-balanced. Note that, the images in MNIST are duplicated across three channels to match CIFAR dimensions before concatenation.

3.1.2 Preliminary results and discussions

We first conduct standard supervised training, i.e., network training of ResNet-32 [40] with cross-entropy, on MNIST-CIFAR-LT. After the network converges, we visualize the activations of input MNIST-CIFAR-LT images in the test set. Figure 1a shows ten example images from head, medium, and tail classes in the descending order of image quantity. From the visualization results in Figure 1b we can clearly observe that the MNIST parts are activated in all of the cases while the activations of CIFAR parts are reduced with decreasing number of images, or even nearly completely ignored. This observation indicates SB indeed happens because in this dataset digit patterns of MNIST are simpler than the object patterns of CIFAR. More importantly, the SB phenomenon becomes more significant on the tail data. For example, the “truck” is almost not activated in the “9-truck” example. This empirical study shows there exists correlation between SB and the amount of training data available to a specific class.

To inspect the SB in long-tailed image recognition quantitatively, we leave the training data of MNIST-CIFAR-LT intact, while proposing different setups of test data, i.e., MNIST-CIFAR-LT, MNIST-only, and CIFAR-only. MNIST-only/CIFAR-only means that we only use the MNIST/CIFAR part for testing. The results are reported in Table I. As presented, compared with the testing accuracy on MNIST-CIFAR-LT, the classification accuracy on MNIST-only has a slight drop. However, the test data of CIFAR-only shows a large overall accuracy reduction, which confirms the existence of SB again.

But, when further investigating the influence of SB on the tail data, we face a bias issue in the training set. As seen, when the imbalance ratio is 1, i.e., no long-tailed training distribution, the classification accuracy on CIFAR-only of “head”, “medium” and “tail”333In fact, there are no “head”, “medium” and “tail” classes in a balanced data distribution (i.e., the imbalance ratio is 1). is 64.40%, 80.50%, and 84.46%, respectively. There is a significantly large accuracy gap between these classes based on such a balanced training set. Speaking directly, on a balanced training set of the aforementioned MNIST-CIFAR-LT, the CIFAR parts of “tail” (whose accuracy is 84.46%) are easier for classification than the CIFAR parts of “head” (whose accuracy is 64.40%). To gain a clearer and more quantitative understanding of how much tail classes suffer from SB in long-tailed distribution data, we create an oracle model which trains on randomized blocks of MNIST parts and intact blocks of CIFAR parts in the constructed MNIST-CIFAR-LT dataset by following [9]. Since the oracle trains on randomized blocks of MNIST, it is forced to only learn the patterns of CIFAR parts. Thus, the classification accuracy of such a model on the test set of CIFAR-only will act as the oracle performance on head/medium/tail data, i.e., the results in the row of “CIFAR-only (Oracle)” in Table I.

In concretely, by comparing the results of “CIFAR-only” and “CIFAR-only (Oracle)”, we can get the accuracy reduction gap caused by SB while removing the training bias as much as possible, cf. “Accuracy gap” in Table I. Apparently, due to SB, the accuracy on the test set of CIFAR-only under different imbalance ratio has dropped by at least 10%, which again quantitatively confirms the influence of SB. More importantly, when comparing the accuracy gap results of different imbalance ratio horizontally, we find that as the long-tailed distribution imbalance increases, the tail data is more seriously affected by SB. For example, when the imbalance ratio is 1, the accuracy reduction gap caused by SB on “head” is 21.18%, and the accuracy gap on “tail” is 10.57%. With the increment of imbalance ratios, the accuracy reduction gap on head data grows to 21.82% and 26.50% with imbalance ratios of 10 and 100, respectively. It can be said that the accuracy reduction on head data is basically not affected by the long-tailed distribution (21.18% vs. 21.82%/26.50%). However, the accuracy reduction gap on tail data increases dramatically from 10.57% to 20.24% and 37.37% with imbalance ratios of 10 and 100, which is quite significant. On the other side, from the perspective of absolute accuracy comparison, when the imbalance ratio is 100, even if the CIFAR parts of “tail” are easier to classify due to the aforementioned training bias, its classification accuracy only achieves 32.16% under the influence of more serious SB on tail data under the long-tailed distribution. While also, the classification accuracy of head data on the test set of CIFAR-only is basically stable at about 60% for different imbalance ratios.

From the aforementioned observations, we can have the conclusion that the tail data suffers more severely from SB.

3.2 A Baseline Method Incorporating Self-Supervised Learning

As aforementioned, SB does appear in long-tailed image recognition and has a more dramatic effect on the tail data. To alleviate this problem, we propose to use self-supervised learning (SSL) to learn more comprehensive features/patterns, especially complex features/patterns.

As preliminary verification, we design an experiment with a baseline method incorporating SSL. Concretely, we utilize different approaches to pre-train a ResNet-32 [40] network on the long-tailed CIFAR-100 [5] dataset with imbalance ratio of 100, and then fix the backbone but fine-tune the classifier via class-balanced sampling. In this way, we compare the generalization ability of the learned image representations, which can implicitly reflect the degree of SB [8].

| Approach | Head | Medium | Tail | All |

|---|---|---|---|---|

| CE | 62.83 | 56.85 | 45.36 | 55.50 \bigstrut[t] |

| Ours (CE w. SSL) | 64.29 | 56.37 | 48.20 | 56.69 |

| SSL | 31.23 | 30.43 | 24.37 | 28.89 |

These pre-trained approaches as well as the classification accuracy obtained thereof are presented in Table II. More specifically, when comparing to pre-training with cross-entropy, we further equip self-supervised learning into pre-training by applying MoCo-v2 [41] in a multi-task learning framework, which is denoted by “Ours (CE w. SSL)”, as our SSL baseline in Table II. As shown, combining SSL, the classification accuracy obtains an obvious improvement on the tail data (about 3% improvements). In addition, we also report the results of only using SSL for pre-training, and we can see that it achieves inferior classification accuracy. These phenomena indicate that supervised learning and self-supervised learning are complementary to each other in this case.

Furthermore, we also perform the SSL baseline method on MNIST-CIFAR-LT and show the learning curves with cross-entropy and our SSL baseline in Figure 2. As shown, after applying SSL, the final classification accuracy of CIFAR-only has been improved. These observations in Figure 2 show that SB has been greatly alleviated by the self-supervised fashion. Also, we visualize the activations by performing our SSL baseline in Figure 1c. It can also be clearly observed that the network tends to attend more complex patterns of the CIFAR part thanks to SSL.

4 Methodology

Inspired by the preliminary experiments, we propose a simple but effective method, i.e., Triple-Level Self-Supervised Learning (3LSSL), to incorporate SSL into long-tailed image recognition. It leverages SSL by triple different levels to enhance the learning of predictive complex patterns, providing the potential to overcome the severe SB on tail data.

4.1 Holistic-Level SSL

We follow the classic SSL framework, e.g., MoCo [34, 41], to realize the holistic-level self-supervised learning in our 3LSSL method. More specifically, for an image , we augment it in different ways and obtain and as inputs of the encoder and momentum encoder in SSL. Then, after performing two projectors, the image representations of and are obtained, i.e., and . Different from previous SSL methods using InfoNCE as the loss function [34, 41], we employ -normalization upon and and calculate the cosine similarity to drive the network training, which is as follows:

| (1) |

where we omit -normalization for simplification. The holistic-level SSL is presented as the flow with black arrows in Figure 3, which aims to enhance the learning of comprehensive patterns in the holistic level w.r.t. .

Regarding the update of momentum encoder in our method, we follow [34] by conducting

| (2) |

where represents the current step, and means the previous step. and represent the parameters of encoder and momentum encoder, respectively. is the updating rate of the momentum encoder, which is fixed as .

4.2 Partial-Level SSL

Through the visualized activations in the preliminary experiments on MNIST-CIFAR-LT, cf. Figure 1c vs. Figure 1b, it verifies that holistic SSL (i.e., the SSL baseline in Section 3.2) is able to make models learn more sufficient patterns (i.e., alleviating simplicity bias) than vanilla models to some extent. However, there are still some important but complex image regions that are ignored due to SB, e.g., the upper part of the ship or the head of the truck in Figure 1c. To solve the problem that holistic-level SSL might ignore complex patterns, we develop a partial-level SSL by leveraging a masking manner to force the models to learn more comprehensive information from the partial level w.r.t. the whole image. The partial-level SSL is illustrated as the flow with yellow arrows in Figure 3.

Concretely, for the input , we can obtain its deep feature map by performing the encoder, where , and represent the height, width and depth of the feature map, respectively. Then, we employ an off-the-shelf localization approach, i.e., Class Activation Mapping (CAM) [42], upon with the re-balanced classifier (elaborated lated in Section 4.3) to indicate the class-specific discriminative image regions used by CNNs to identify that class. It could also expose the implicit attention of CNNs w.r.t. a target class on an image. Obeying the notations in [42], we conduct CAM by projecting back the weights of the output layer on to the convolutional feature maps as

| (3) |

where is the weight of w.r.t. class for neural unit in the last convolutional layer of the encoder, and represent the activation of unit at spatial location . Thus, directly indicates the importance of the activation at spatial grid leading to the classification of an image to class , and corresponds to the so-called class activation map. Intuitively, is simply a weighted linear sum of the presence of these visual patterns at different spatial locations. By further simply upsampling to the size of the input image, it can identify the image regions most relevant to the particular class. Therefore, to achieve the goal of our partial-level SSL as aforementioned, we deploy as a mask for filtering out those class-specific image regions that are most likely to be attended by deep networks, and force it to learn more comprehensive information from the remaining image regions. In our implementation, we normalize by

| (4) |

where forms the normalized mask whose values are in the range of . and are the minimum and maximum value of , respectively.

After that, the input w.r.t. the partial-level SSL is obtained by

| (5) |

where is the unit matrix with all elements, is the element-wise product, and represents the upsampling operation to the size of original image resolution. Thus, as shown by the yellow arrows in Figure 3, the embedding of , i.e., , is obtained through the momentum encoder followed by the projector. Similar to the loss in the holistic-level SSL, we calculate the similarity between the -normalized and by

| (6) |

to realize the partial-level SSL in our method.

4.3 Augmented-Level SSL

To further consider the severe SB on tail data, we enhance the similarity-based representation learning of tail classes by borrowing pseudo samples from other classes informed by classifier outputs, which is termed as the augmented-level SSL. As the flow of blue arrows shown in Figure 3, we first build a re-balanced classifier upon and then produce an augmented queue containing augmented samples from for achieving the aforementioned goal.

More specifically, we equip a supervised component by training a classifier upon the encoder’s output for recognizing . It also complies with the conclusion that supervised learning and self-supervised learning complement each other in the case of long-tailed recognition, cf. Table II. The classification loss w.r.t. hereby is the cross-entropy loss function on the ground truth and the calibrated predicted logits which are obtained by adding up re-balanced factors of to original predicted logits (where is the number of samples in class ).

To enhance the similarity-based representation learning of the tail data , in the augmented-level SSL, we use to predict the class affiliation of and select samples from class as pseudo positives of . If we denote the embedding of such pseudo positive as , we add another similarity-based loss on such a pair . By doing this, we augment the tail classes by providing chances to see more semantic-relevant samples for representation learning and consequently learn features that generalize better.

Furthermore, in order to achieve better accuracy and make the training more stable, we develop an augmented queue strategy to pre-store embeddings (i.e., a general form of as aforementioned) and their predicted pseudo labels . In concretely, for such a size- augmented queue , we initially set the feature vectors as and their predictions as . After obtaining a pseudo-label of at each time step, the embedding-pseudo label pair in the earliest time step will be removed, and it adds the newly combing embedding-pseudo label pair to the queue. Then, we collect all embeddings associated with class in the augmented queue to obtain an aggregated feature embedding, which is formulated by

| (7) |

where . After that, the augmented-level SSL is optimized by computing the similarity between the -normalized and , which also follows the holistic- and partial-level SSL:

| (8) |

Overall, the final loss function of our 3LSSL method is optimized by

| (9) |

where denotes the classification loss w.r.t. . All trade-off parameters are for all experiments, which reveals the good potential of our method in practice and its simplicity.

5 Experiments

In this section, we present the empirical settings, implementation details, main results, ablation studies and also some qualitative analyses of visualization.

5.1 Datasets, Settings, and Implementation Details

We conduct experiments on five long-tailed image recognition benchmark datasets, including long-tailed CIFAR-10/long-tailed CIFAR-100 [12], ImageNet-LT [13], Places-LT [14] and iNaturalist 2018 [15]. Furthermore, we also report the results on the constructed MNIST-CIFAR-LT dataset. For quantitative comparisons, we evaluate the methods on the corresponding balanced validation datasets, and report the top-1 accuracy over all classes, which is denoted as “All”. Besides, we follow [25, 13] to split the classes into three subsets and report the average accuracy in these three subsets, i.e., “Many”-shot (100 images), “Medium”-shot (20100 images), and “Few”-shot (20 images), which are also termed as head, medium and tail data, respectively.

-

•

Long-tailed CIFAR-10/long-tailed CIFAR-100: Both CIFAR-10 and CIFAR-100 [12] contain 60,000 images, 50,000 for training and 10,000 for validation with category number of 10 and 100, respectively. For fair comparisons, we use the long-tailed versions of CIFAR datasets as the same as those used in [5] with controllable degrees of data imbalance. We use an imbalance factor to describe the severity of the long tail problem with the number of training samples for the most frequent class and the least frequent class, e.g., . Imbalance factors we use in experiments are , and .

-

•

ImageNet-LT: The ImageNet-LT dataset [13] is a long-tailed version of the original ImageNet-2012 [43], which is constructed by sampling a subset following the Pareto distribution with the power value as . It contains 115.8K large-scale images from 1,000 categories. The number of training samples for each class ranges from 5 to 1,280. The validation set is the same as ImageNet 2012.

-

•

Places-LT: The Places-LT dataset is also a large-scale long-tailed dataset artificially created from the balanced Places-2 [14] dataset. It contains 184.5K images from 365 diverse scene categories. The distribution of labels in the training set is also extremely long-tailed, where the sample number of each class ranges from 5 to 4,980.

-

•

iNaturalist 2018: iNaturalist 2018 [15] is a large-scale real-world dataset with 437.5K images from 8,142 categories. It naturally follows a severe long-tailed distribution with an imbalance factor of 512. Besides the extreme imbalance, it also faces the fine-grained problem [44, 45, 46]. In this paper, the official splits of training and validation images are utilized for fair comparisons.

| Method | Long-tailed CIFAR-10 | Long-tailed CIFAR-100 | ||||

|---|---|---|---|---|---|---|

| 100 | 50 | 10 | 100 | 50 | 10 | |

| LDAM-DRW [5] | 77.0 | 81.0 | 88.2 | 42.0 | 46.6 | 58.7 \bigstrut[t] |

| BBN [24] | 79.8 | 82.2 | 88.3 | 42.6 | 47.0 | 59.1 |

| UniMix [47] | 82.8 | 84.3 | 89.7 | 45.5 | 51.1 | 61.3 |

| RIDE (3 experts) [31] | – | – | – | 48.0 | 51.7 | 61.8 |

| DiVE [48] | – | – | – | 45.4 | 51.1 | 62.0 |

| SSD [49] | – | – | – | 46.0 | 50.5 | 62.3 |

| MiSLAS [50] | 82.1 | 85.7 | 90.0 | 47.0 | 52.3 | 63.2 |

| GCL [51] | 82.7 | 85.5 | – | 48.7 | 53.6 | – |

| DRO-LT [52] | – | – | – | 47.3 | 57.6 | 63.4 |

| SADE [53] | – | – | – | 49.8 | 53.9 | 63.6 |

| ResLT [54] | 82.4 | 85.2 | 89.7 | 49.7 | 54.5 | 63.7 |

| PaCo [55] | – | – | – | 52.0 | 56.0 | 64.2 |

| BCL [56] | 84.3 | 87.2 | 91.1 | 51.9 | 56.6 | 64.9 |

| Our 3LSSL | 85.2 | 88.2 | 92.1 | 54.6 | 58.5 | 66.2 |

| Method | ResNet-50 | ResNeXt-50 | ||||||

|---|---|---|---|---|---|---|---|---|

| Many | Med. | Few | All | Many | Med. | Few | All | |

| MiSLAS [50] | 61.7 | 51.3 | 35.8 | 52.7 | – | – | – | – \bigstrut[t] |

| ResLT [54] | – | – | – | – | 63.0 | 50.5 | 35.5 | 53.0 |

| DiVE [48] | – | – | – | – | 64.1 | 50.4 | 31.5 | 53.1 |

| DRO-LT [52] | 64.0 | 49.8 | 33.1 | 53.5 | – | – | – | – |

| SSD [49] | – | – | – | – | 66.8 | 53.1 | 35.4 | 56.0 |

| GCL [51] | – | – | – | 54.9 | – | – | – | – |

| RIDE (4 experts) [31] | 66.2 | 52.3 | 36.5 | 55.4 | 68.2 | 53.8 | 36.0 | 56.8 |

| BCL [56] | – | – | – | 56.0 | 67.9 | 54.2 | 36.6 | 57.1 |

| RIDE (3 experts)+CMO [57] | 66.4 | 53.9 | 35.6 | 56.2 | – | – | – | – |

| PaCo [55] | 65.0 | 55.7 | 38.2 | 57.0 | 67.5 | 56.9 | 36.7 | 58.2 |

| SADE [53] | – | – | – | – | 66.5 | 57.0 | 43.5 | 58.8 |

| Our 3LSSL | 68.5 | 57.6 | 38.3 | 59.1 | 70.0 | 57.8 | 38.7 | 59.9 |

| Method | Many | Med. | Few | All |

|---|---|---|---|---|

| GistNet [58] | 42.5 | 40.8 | 32.1 | 39.6 \bigstrut[t] |

| MiSLAS [50] | 39.6 | 43.3 | 36.1 | 40.4 |

| GCL [51] | – | – | – | 40.6 |

| SADE [53] | – | – | – | 40.9 |

| ResLT [54] | 40.3 | 44.4 | 34.7 | 41.0 |

| PaCo [55] | 37.5 | 47.2 | 33.9 | 41.2 |

| Our 3LSSL | 42.6 | 44.5 | 34.3 | 42.0 |

| Method | Many | Med. | Few | All |

|---|---|---|---|---|

| DiVE [48] | 70.6 | 70.0 | 67.6 | 69.1 \bigstrut[t] |

| BBN [24] | – | – | – | 69.6 |

| DRO-LT [52] | – | – | – | 69.7 |

| ResLT [54] | 68.5 | 69.9 | 70.4 | 70.2 |

| SSD [49] | – | – | – | 71.5 |

| MiSLAS [50] | 70.4 | 72.4 | 73.2 | 71.6 |

| BCL [56] | – | – | – | 71.8 |

| GCL [51] | – | – | – | 72.0 |

| RIDE (4 experts) [31] | 70.9 | 72.4 | 73.1 | 72.6 |

| RIDE (3 experts)+CMO [57] | 68.7 | 72.6 | 73.1 | 72.8 |

| SADE [53] | – | – | – | 72.9 |

| PaCo [55] | 70.3 | 73.2 | 73.6 | 73.2 |

| Our 3LSSL | 71.0 | 75.7 | 76.2 | 75.8 |

In our 3LSSL, the encoder and the momentum encoder are of the same architecture with the same parameter initialization. We follow previous work, e.g., [24, 47, 50, 51, 53, 54, 55, 56], to employ the corresponding backbone deep model to realize the encoders for fair comparisons. The two projectors are also of the same structure which consists of a two-layer perceptron head (hidden layer 2048-d, with ReLU). Both projectors have the same parameter initialization. While, during network training, two encoders and two projectors update its own parameters independently. The classifier component in our 3LSSL is a linear classification layer. More specifically, for fair comparisons to prior art, we follow the protocol in [24, 47, 50, 51, 53, 54, 55, 56] by employing ResNet-32 [40] as the backbone for long-tailed CIFAR-10/long-tailed CIFAR-100, ResNet-50 [40] and ResNeXt-50 [59] for ImageNet-LT, ResNet-152 [40] for Places-LT, and ResNet-50 [40] for iNaturalist 2018. Regarding other empirical settings, we follow the recent state-of-the-art, e.g., [56, 55], to conduct model training. Regarding the size of the augmented queue, we set for long-tailed CIFAR and for large-scale ImageNet-LT, Places-LT and iNaturalist 2018. All experiments are conducted with four GeForce RTX 3090 Ti GPUs.

5.2 Main Results

5.2.1 Comparisons on Benchmark Long-Tailed Recognition Datasets

We report the classification accuracy on long-tailed CIFAR-10/long-tailed CIFAR-100, ImageNet-LT, Places-LT and iNaturalist in Table III, Table IV, Table V, and Table VI, respectively. On these benchmarks, our 3LSSL method is able to consistently obtain significant accuracy boost over state-of-the-arts.

Comparisons on long-tailed CIFAR. In Table III, we compare our method with state-of-the-art methods with different imbalance ratios (i.e., , , ) on the long-tailed CIFAR datasets. It is obviously to see that the proposed 3LSSL method surpasses the competing methods, including the effective ResLT [54], PaCo [55], BCL [56], etc. In particular, our method achieves 2.7%, 1.9%, 1.3% improvement over BCL [56] on long-tailed CIFAR-100 with the imbalance ratio of , , , respectively.

Comparisons on ImageNet-LT. ImageNet-LT is the most basic large-scale dataset in the comparisons of long-tailed recognition experiments. Different backbones are also performed on this dataset for generalization ability testing. We present the classification accuracy results of ResNet-50 and ResNeXt-50 on ImageNet-LT in Table IV. As shown, equipped with ResNet-50, our 3LSSL obtains 59.1% (having 2.1% improvement over PaCo [55]). Equipped with ResNeXt-50, our method achieves 59.9%, which still outperforms the previous best result 58.8% from SADE [53] by 1.1%.

Comparisons on Places-LT. The comparisons of classification results on Places-LT are summarized in Table V. We can observe that the improvement of the previous methods on this dataset is relatively small, e.g., 0.20.3%. This also illustrates the challenges of Places-LT. In contrast, our method achieves a relatively large accuracy boost of 0.8% over PaCo [55], which proves the effectiveness of the proposed 3LSSL method.

Comparisons on iNaturalist 2018. The iNaturalist 2018 dataset is a natural large-scale long-tailed dataset. Therefore, the comparisons on this dataset, especially the comparisons on the tail data, can better illustrate the effectiveness of the methods. As shown in Table VI, our 3LSSL again achieves the best classification accuracy, i.e., 75.8%, which significantly outperforms SADE [53] and PaCo [55] by 2.9% and 2.6%, respectively. More importantly, on tail data (cf. the results of “Few” in Table VI), our method still obtains 2.6% improvement over PaCo [55].

5.2.2 Comparisons on the Constructed MNIST-CIFAR-LT Dataset

We report the classification accuracy of our method on the constructed MNIST-CIFAR-LT dataset with different imbalance ratios in Table VII. The comparison of the results on this dataset requires to be combined with Table I at the same time. By comparisons of CIFAR-only between these two tables, our 3LSSL method brings significant improvement, i.e., 10.39%, 5.79%, 4.89%, on MNIST-CIFAR-LT with different imbalance ratios of , and , respectively. It is apparent to observe that as the degree of imbalance increases, the accuracy boost by our method becomes more significant. In particular, for tail data, the improvement is 33.76%, 11.46% and 5.81% for the imbalance ratio of , and . In addition, our method can also improve the classification accuracy in the case of MNIST-only by 0.82%, 0.44%, 0.21% for the imbalance ratio of , and on such a high basis of 98%/99%. These observations could further justify the effectiveness of our method, especially for the tail data. Also, they show that our method does learn more comprehensive information to alleviate simplicity bias in long-tailed data thanks to its SSL designs. Further qualitative visualization can be found in Section 5.4.3.

| Test data | 100 | 10 | 1 | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Head | Medium | Tail | All | Head | Medium | Tail | All | Head | Medium | Tail | All \bigstrut[t] | |

| MNIST-CIFAR-LT | 99.86 | 99.67 | 99.24 | 99.60 | 99.92 | 99.90 | 99.88 | 99.90 | 99.97 | 99.94 | 99.97 | 99.96 \bigstrut[t] |

| MNIST-only | 99.18 | 99.03 | 97.60 | 98.65 | 99.52 | 99.47 | 99.23 | 99.41 | 99.66 | 99.79 | 99.40 | 99.63 |

| CIFAR-only | 53.82 | 66.21 | 65.92 | 62.41 | 71.94 | 70.38 | 79.62 | 73.62 | 78.95 | 73.44 | 90.27 | 80.14 |

5.3 Ablation Studies and Discussions

In this section, we analyze and discuss our proposed 3LSSL by conducting ablation studies on the ImageNet-LT dataset.

5.3.1 Effects of Triple Levels of SSL

For the crucial components, i.e., triple levels of SSL, in our method, we present the ablation studies of classification accuracy in Table VIII, where different settings are created by adding these triple levels of SSL on top of the baseline. More concretely, comparing #2 with #1, we can find that the holistic-level SSL improves the overall accuracy by 3.3%. While, only equipping the partial-level SSL with the baseline obtains 2.7% improvement, i.e., #3 vs. #1. But, when performing both the holistic- and partial-level SSL, it brings 4.5% accuracy boost (cf. #4 vs. #1). These results demonstrate that the holistic-level SSL is fundamental in our 3LSSL, and the partial-level SSL can contribute well as a counterpart w.r.t. the holistic-level SSL. Furthermore, by additionally equipping with the augmented-level SSL, further improvement of 2.1% is achieved, cf. #5 vs. #4. More importantly, the improvement of 2.6% on tail data on ImageNet-LT is obtained, which justifies the effectiveness of the augmented-level SSL w.r.t. tail data.

| Number | Settings | ImageNet-LT | ||||||

|---|---|---|---|---|---|---|---|---|

| Baseline (CE) | H-SSL | P-SSL | A-SSL | Many | Med. | Few | All | |

| #1 | ✓ | 62.7 | 49.3 | 31.6 | 52.5 \bigstrut[t] | |||

| #2 | ✓ | ✓ | 65.9 | 54.3 | 34.2 | 55.8 | ||

| #3 | ✓ | ✓ | 64.8 | 53.7 | 34.4 | 55.2 | ||

| #4 | ✓ | ✓ | ✓ | 66.8 | 55.4 | 35.7 | 57.0 | |

| #5 | ✓ | ✓ | ✓ | ✓ | 68.5 | 57.6 | 38.3 | 59.1 |

5.3.2 Augmented Queue in the Augmented-level SSL

We hereby conduct different configurations of the augmented queue in the augmented-level SSL. As aforementioned, the augmented queue is designed to enhance the similarity-based representation learning of tail classes by borrowing pseudo samples from other classes. We consider three configurations, including augmented 1) with a single example of class in the queue, 2) with all examples of class in the queue (i.e., our proposal), and 3) with all examples of class (i.e., the ground truth class of ).

The corresponding results on the ImageNet-LT dataset are reported in Table IX. Apparently, augmented with a single example will harm the classification accuracy due to the sample randomization and lacking of generalization. More interesting observations can be found by comparing “2)” with “3)”. Compared with our proposal in the augmented queue, augmented with examples belonging to the ground truth obtains 0.2% overall accuracy drop. In particular, for tail data, a larger drop of 0.5% is observed, which can clearly demonstrate our effectiveness on tail data by providing chances to see more semantic-relevant samples for learning more robust and comprehensive representations via our augmented-level SSL.

| Setting | ImageNet-LT | |||

|---|---|---|---|---|

| Many | Med. | Few | All | |

| With a single example of class | 67.4 | 56.3 | 36.9 | 57.8 \bigstrut[t] |

| With examples of class | 68.6 | 57.4 | 37.8 | 58.9 |

| With examples of class | 68.5 | 57.6 | 38.3 | 59.1 |

5.3.3 Multi-stage Partial-level SSL

For the main results of our 3LSSL method, we employ the partial-level SSL by performing only one stage to mask out the class-specific image regions. In the ablation studies of this section, multiple masking stages of the partial-level SSL are conducted, and we report the results in Table X. As seen, as the number of stages increases, the classification accuracy shows a slight downward trend. The reason might be that with the progress of the masking operation in the partial-level SSL, more and more informative regions are focused and then discarded, and the information in the image regions that are paid attention to in the later stages gradually decreases. Even though our method can force the model to learn comprehensive information from the remaining image regions, the rest information content of these regions is less and less or even non-existent, which could cause redundant and accuracy drop. Therefore, performing one-stage partial-level SSL is both effective and efficient.

| # stages | ImageNet-LT | |||

|---|---|---|---|---|

| Many | Med. | Few | All | |

| 1 | 68.5 | 57.6 | 38.3 | 59.1 \bigstrut[t] |

| 2 | 68.2 | 57.5 | 38.6 | 59.0 |

| 3 | 68.0 | 57.2 | 38.3 | 58.7 |

5.4 Visualization Analyses

To further analyze our 3LSSL method, we provide qualitative visualization analyses from the following aspects, i.e., showing feature embedding visualization by -SNE, demonstrating the distribution of classes in the augmented queue, as well as presenting the activation visualization of the learned models.

5.4.1 Feature Embedding Visualization

In order to intuitively understand the effects of representation learning in 3LSSL, we show -SNE visualization of the learned feature embedding [60] in Figure 4. We compare the feature embedding learned by the baseline method (i.e., a vanilla backbone) and our 3LSSL of both training and test sets. As shown, the decision boundaries and class separations of the baseline on training and test are all greatly altered by the head classes. While, regardless of the training set or test set, our 3LSSL is able to produce more compact feature embedding and clear class separations, which leads to better discriminative representation learning, especially between adjacent head and tail classes.

5.4.2 Distribution of Classes in the Augmented Queue

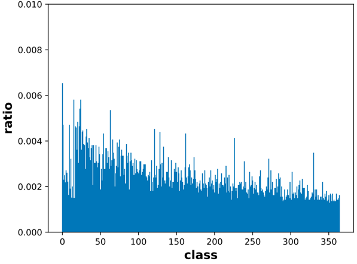

As aforementioned in Section 5.3.2, we demonstrate the effectiveness of the proposed augmented queue. Hereby, we present the distribution of classes in such an augmented queue for in-depth analyses. After the models are convergence during training, we collect the corresponding augmented queue and show the class distribution in Figure 5. Note that, since iNaturalist 2018 contains too many categories (i.e., 8,142 fine-grained classes), not all can be presented in the figure. Thus, we uniformly sample 2,000 categories for illustration. As shown in Figure 5, it can be found that compared to their original distribution (being severe long-tailed), the class distribution in the augmented queue is relatively more balanced, especially the iNaturalist 2018 dataset. More importantly, for the tail data, their proportion in the queue significantly increases, which explains why the augmented queue mechanism of our augmented-level SSL favors tail data.

5.4.3 Activation Visualization

To explicitly demonstrate the alleviation of simplicity bias of our method, we visualize the activations of the test images from MNIST-CIFAR-LT and three real-world datasets, i.e., ImageNet-LT, Places-LT and iNaturalist 2018, in Figure 6. From these activation visualization, it is obvious to see that our 3LSSL can effectively mitigate the simplicity bias in long-tailed recognition by learning more comprehensive patterns covering the whole informative regions, particularly for the tail data. Such an observation is even more pronounced on the Places-LT dataset. We know that scene context plays a crucial role in recognizing scenes. From the visualization results, our method can indeed help the model focus on a more comprehensive scene context region, thereby improving the accuracy of scene recognition.

6 Conclusion

In this work, we investigated simplicity bias (SB) in long-tailed image recognition and uncovered underrepresented classes suffer more severely from SB. We empirically reported that self-supervised learning (SSL) can be used as a remedy to mitigate SB and the associated adverse effects through learning more comprehensive features. Following this observation, we proposed a simple but effective method consisting triple levels of SSL (termed as 3LSSL) which is explicitly tailored for long-tailed data to learn image representations that generalize better. Experiments on five long-tailed benchmark datasets show the advantage of the proposed method as well as other appealing properties. We expect our work can provide new understanding about long-tailed learning and inspire new explorations towards SB, including solutions to evade SB, especially in tasks with insufficient data such as few-shot learning.

There still lacks theoretical support why SSL can mitigate SB. In-depth analyses of the nature of representations learned from SSL is also needed. We will leave such problems in our future work.

References

- [1] H. He and E. A. Garcia, “Learning from imbalanced data,” IEEE Trans. Knowl. Data Eng., vol. 21, no. 9, pp. 1263–1284, 2009.

- [2] M. Buda, A. Maki, and M. A. Mazurowski, “A systematic study of the class imbalance problem in convolutional neural networks,” Neural Netw., vol. 106, pp. 249–259, 2018.

- [3] C. Huang, Y. Li, C. C. Loy, and X. Tang, “Deep imbalanced learning for face recognition and attribute prediction,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 42, no. 11, pp. 2781–2794, 2020.

- [4] Y. Cui, M. Jia, T.-Y. Lin, Y. Song, and S. Belongie, “Class-balanced loss based on effective number of samples,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2019, pp. 9268–9277.

- [5] K. Cao, C. Wei, A. Gaidon, N. Arechiga, and T. Ma, “Learning imbalanced datasets with label-distribution-aware margin loss,” in Advances in Neural Inf. Process. Syst., 2019, pp. 1567–1578.

- [6] K. Salman, H. Munawar, Z. S. Waqas, S. Jianbing, and S. Ling, “Striking the right balance with uncertainty,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2019, pp. 103–112.

- [7] P. Chu, X. Bian, S. Liu, and H. Ling, “Feature space augmentation for long-tailed data,” in Proc. Eur. Conf. Comp. Vis., 2020, pp. 694–710.

- [8] H. Shah, K. Tamuly, A. Raghunathan, P. Jain, and P. Netrapalli, “The pitfalls of simplicity bias in neural networks,” in Advances in Neural Inf. Process. Syst., 2020, pp. 9573–9585.

- [9] D. Teney, E. Abbasnejad, S. Lucey, and A. van den Hengel, “Evading the simplicity bias: Training a diverse set of models discovers solutions with superior OOD generalization,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2022, pp. 16 761–16 772.

- [10] M. Huh, H. Mobahi, R. Zhang, B. Cheung, P. Agrawal, and P. Isola, “The low-rank simplicity bias in deep networks,” arXiv preprint arXiv:2103.10427, 2021.

- [11] S. Gidaris, A. Bursuc, N. Komodakis, P. Pérez, and M. Cord, “Boosting few-shot visual learning with self-supervision,” in Proc. IEEE Int. Conf. Comp. Vis., 2019, pp. 8059–8068.

- [12] A. Krizhevsky and G. Hinton, “Learning multiple layers of features from tiny images,” Citeseer, Tech. Rep., 2009.

- [13] Z. Liu, Z. Miao, X. Zhan, J. Wang, B. Gong, and S. X. Yu, “Large-scale long-tailed recognition in an open world,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2019, pp. 2537–2546.

- [14] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, “Places: A 10 million image database for scene recognition,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 40, no. 6, pp. 1452–1464, 2017.

- [15] G. Van Horn, O. Mac Aodha, Y. Song, Y. Cui, C. Sun, A. Shepard, H. Adam, P. Perona, and S. Belongie, “The iNaturalist species classification and detection dataset,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2018, pp. 8769–8778.

- [16] Z.-H. Zhou and X.-Y. Liu, “Training cost-sensitive neural networks with methods addressing the class imbalance problem,” IEEE Trans. Knowl. Data Eng., vol. 18, no. 1, pp. 63–77, 2006.

- [17] L. Shen, Z. Lin, and Q. Huang, “Relay backpropagation for effective learning of deep convolutional neural networks,” in Proc. Eur. Conf. Comp. Vis., 2016, pp. 467–482.

- [18] N. Japkowicz and S. Stephen, “The class imbalance problem: A systematic study,” Intell. Data Anal., vol. 6, no. 5, pp. 429–449, 2002.

- [19] X.-Y. Liu and Z.-H. Zhou, “The influence of class imbalance on cost-sensitive learning: An empirical study,” in Proc. IEEE Int. Conf. Data Mining, 2006, pp. 970–974.

- [20] C. Huang, Y. Li, C. Change Loy, and X. Tang, “Learning deep representation for imbalanced classification,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2016, pp. 5375–5384.

- [21] J. Byrd and Z. Lipton, “What is the effect of importance weighting in deep learning?” in Proc. Int. Conf. Mach. Learn., 2019, pp. 872–881.

- [22] N. V. Chawla, K. W. Bowyer, L. O. Hall, and W. P. Kegelmeyer, “SMOTE: Synthetic minority over-sampling technique,” J. Arti. Intell. Res., vol. 16, pp. 321–357, 2002.

- [23] Y.-X. Wang, D. Ramanan, and M. Hebert, “Learning to model the tail,” in Advances in Neural Inf. Process. Syst., 2017, pp. 7029–7039.

- [24] B. Zhou, Q. Cui, X.-S. Wei, and Z.-M. Chen, “BBN: Bilateral-branch network with cumulative learning for long-tailed visual recognition,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2020, pp. 9719–9728.

- [25] B. Kang, S. Xie, M. Rohrbach, Z. Yan, A. Gordo, J. Feng, and Y. Kalantidis, “Decoupling representation and classifier for long-tailed recognition,” in Proc. Int. Conf. Learn. Representations, 2020, pp. 1–16.

- [26] P. Wang, K. Han, X.-S. Wei, L. Zhang, and L. Wang, “Contrastive learning based hybrid networks for long-tailed image classification,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2021, pp. 943–952.

- [27] S. Alshammari, Y. Wang, D. Ramanan, and S. Kong, “Long-tailed recognition via weight balancing,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2022.

- [28] Y. Zhang, B. Hooi, L. Hong, and J. Feng, “Test-agnostic long-tailed recognition by test-time aggregating diverse experts with self-supervision,” arXiv preprint arXiv:2107.09249, 2021.

- [29] L. Xiang, G. Ding, and J. Han, “Learning from multiple experts: Self-paced knowledge distillation for long-tailed classification,” in Proc. Eur. Conf. Comp. Vis., 2020, pp. 247–263.

- [30] J. Cai, Y. Wang, and J.-N. Hwang, “ACE: Ally complementary experts for solving long-tailed recognition in one-shot,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 112–121.

- [31] X. Wang, L. Lian, Z. Miao, Z. Liu, and S. Yu, “Long-tailed recognition by routing diverse distribution-aware experts,” in Proc. Int. Conf. Learn. Representations, 2021, pp. 1–15.

- [32] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in Proc. Int. Conf. Mach. Learn., 2020, pp. 1597–1607.

- [33] Z. Wu, Y. Xiong, S. X. Yu, and D. Lin, “Unsupervised feature learning via non-parametric instance discrimination,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2018, pp. 3733–3742.

- [34] K. He, H. Fan, Y. Wu, S. Xie, and R. Girshick, “Momentum contrast for unsupervised visual representation learning,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2020, pp. 9729–9738.

- [35] M. Caron, I. Misra, J. Mairal, P. Goyal, P. Bojanowski, and A. Joulin, “Unsupervised learning of visual features by contrasting cluster assignments,” in Advances in Neural Inf. Process. Syst., 2020, pp. 9912–9924.

- [36] H. Liu, J. Z. HaoChen, A. Gaidon, and T. Ma, “Self-supervised learning is more robust to dataset imbalance,” in Proc. Int. Conf. Learn. Representations, 2022, pp. 1–24.

- [37] C. Guo, G. Pleiss, Y. Sun, and K. Q. Weinberger, “On calibration of modern neural networks,” in Proc. Int. Conf. Mach. Learn., 2017, pp. 1321–1330.

- [38] A. Nguyen, J. Yosinski, and J. Clune, “Deep neural networks are easily fooled: High confidence predictions for unrecognizable images,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2015, pp. 427–436.

- [39] D. C. Ciresan, U. Meier, J. Masci, L. M. Gambardella, and J. Schmidhuber, “High-performance neural networks for visual object classification,” arXiv preprint arXiv:1102.0183, pp. 1–12, 2011.

- [40] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2016, pp. 770–778.

- [41] X. Chen, H. Fan, R. Girshick, and K. He, “Improved baselines with momentum contrastive learning,” arXiv preprint arXiv:2003.04297, pp. 1–3, 2020.

- [42] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba, “Learning deep features for discriminative localization,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2016, pp. 2921–2929.

- [43] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei, “ImageNet Large Scale Visual Recognition Challenge,” Int. J. Comput. Vision, vol. 115, no. 3, pp. 211–252, 2015.

- [44] X.-S. Wei, Y.-Z. Song, O. M. Aodha, J. Wu, Y. Peng, J. Tang, J. Yang, and S. Belongie, “Fine-grained image analysis with deep learning: A survey,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 44, no. 12, pp. 8927–8948, 2022.

- [45] X.-S. Wei, P. Wang, L. Liu, C. Shen, and J. Wu, “Piecewise classifier mappings: Learning fine-grained learners for novel categories with few examples,” IEEE Trans. Image Process., vol. 28, no. 12, pp. 6116–6125, 2019.

- [46] X.-S. Wei, J.-H. Luo, J. Wu, and Z.-H. Zhou, “Selective convolutional descriptor aggregation for fine-grained image retrieval,” IEEE Trans. Image Process., vol. 26, no. 6, pp. 2868–2881, 2017.

- [47] Z. Xu, Z. Chai, and C. Yuan, “Towards calibrated model for long-tailed visual recognition from prior perspective,” in Advances in Neural Inf. Process. Syst., 2021, pp. 7139–7152.

- [48] Y.-Y. He, J. Wu, and X.-S. Wei, “Distilling virtual examples for long-tailed recognition,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 235–244.

- [49] T. Li, L. Wang, and G. Wu, “Self supervision to distillation for long-tailed visual recognition,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 630–639.

- [50] Z. Zhong, J. Cui, S. Liu, and J. Jia, “Improving calibration for long-tailed recognition,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2021, pp. 16 489–16 498.

- [51] M. Li, Y. ming Cheung, and Y. Lu, “Long-tailed visual recognition via gaussian clouded logit adjustment,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2022, pp. 6929–6938.

- [52] D. Samuel and G. Chechik, “Distributional robustness loss for long-tail learning,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 9495–9504.

- [53] S.-S. A. of Diverse Experts for Test-Agnostic Long-Tailed Recognition, in Advances in Neural Inf. Process. Syst., 2022.

- [54] J. Cui, S. Liu, Z. Tian, Z. Zhong, and J. Jia, “ResLT: Residual learning for long-tailed recognition,” IEEE Trans. Pattern Anal. Mach. Intell., DOI: 10.1109/TPAMI.2022.3174892, 2022.

- [55] J. Cui, Z. Zhong, S. Liu, B. Yu, and J. Jia, “Parametric contrastive learning,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 715–724.

- [56] J. Zhu, Z. Wang, J. Chen, Y.-P. P. Chen, and Y.-G. Jiang, “Balanced contrastive learning for long-tailed visual recognition,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2022, pp. 6908–6917.

- [57] S. Park1, Y. Hong, B. Heo, S. Yun, and J. Y. Choi, “The majority can help the minority: Context-rich minority oversampling for long-tailed classification,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2022, pp. 6887–6896.

- [58] B. Liu, H. Li, H. Kang, and G. Hua, “GistNet: A geometric structure transfer network for long-tailed recognition,” in Proc. IEEE Int. Conf. Comp. Vis., 2021, pp. 8209–8218.

- [59] S. Xie, R. Girshick, P. Dollár, Z. Tu, and K. He, “Aggregated residual transformations for deep neural networks,” in Proc. IEEE Conf. Comp. Vis. Patt. Recogn., 2017, pp. 1492–1500.

- [60] L. van der Maaten and G. Hinton, “Visualizing data using -SNE,” J. Mach. Learn. Res., vol. 9, pp. 2579–2605, 2008.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/XS.png) |

Xiu-Shen Wei is a Professor with the School of Computer Science and Engineering, Nanjing University of Science and Technology, China. He was a Program Chair for the workshops associated with ICCV, IJCAI, ACM Multimedia, etc. He has also served as an Area Chair or Senior Program Member at AAAI, IJCAI, ICME, BMVC, a Guest Editor of Pattern Recognition Journal, and a Tutorial Chair for Asian Conference on Computer Vision (ACCV) 2022. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/XH.jpeg) |

Xuhao Sun is a Master Student with School of Computer Science and Engineering, Nanjing University of Science and Technology, China. His main research interests are Computer Vision and Machine Learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/Yang.jpeg) |

Yang Shen is a Ph.D Candidate under the supervision of Prof. Xiu-Shen Wei with School of Computer Science and Engineering, Nanjing University of Science and Technology, China. His research interests lie in deep learning and computer vision. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/Xu.png) |

Anqi Xu is currently studying in Mathematical and Physical Sciences at the University of Toronto, majoring in Statistics and Economics. Anqi graduated from the Shanghai World Foreign Language Academy in which she participated in Yau Science Awards (Economic and Financial) and her paper was accepted by the IEEE-CS (Manuscript title: Industrial Structure Factor Analysis of Complex Network Basis). |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/Peng.jpeg) |

Peng Wang is a lecturer (assistant professor) with School of Computing and Information Technology at University of Wollongong. Prior to joining UOW, he was a research fellow with Australian Institute for Machine Learning (AIML), The University of Adelaide. He obtained his PhD from School of Information Technology and Electrical Engineering, The University of Queensland. His major research interest lies in computer vision and deep learning, with special interest in data-efficient deep learning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5296c656-94d8-4e78-9cf5-1678b201f860/Zhang.png) |

Faen Zhang received the bachelor’s degree in software engineering from Jilin University and the master’s degree in computer science and theory from Institute of Software, Chinese Academy of Sciences. He is currently the CTO of AInnovation, the Chief Architect of the AI Institute at Sinovation Ventures and Honorary Professor of Ningbo Nottingham University. He holds 10+ US and 30+ Chinese patents. He has more than 15 years of experience in technology development and management in the IT industry, including enterprise-level software, Internet search engine, knowledge-based maps, big data analysis and storage, machine learning, deep learning. |