Deletion and Insertion Tests in Regression Models

Abstract

A basic task in explainable AI (XAI) is to identify the most important features behind a prediction made by a black box function . The insertion and deletion tests of Petsiuk et al., (2018) can be used to judge the quality of algorithms that rank pixels from most to least important for a classification. Motivated by regression problems we establish a formula for their area under the curve (AUC) criteria in terms of certain main effects and interactions in an anchored decomposition of . We find an expression for the expected value of the AUC under a random ordering of inputs to and propose an alternative area above a straight line for the regression setting. We use this criterion to compare feature importances computed by integrated gradients (IG) to those computed by Kernel SHAP (KS) as well as LIME, DeepLIFT, vanilla gradient and inputgradient methods. KS has the best overall performance in two datasets we consider but it is very expensive to compute. We find that IG is nearly as good as KS while being much faster. Our comparison problems include some binary inputs that pose a challenge to IG because it must use values between the possible variable levels and so we consider ways to handle binary variables in IG. We show that sorting variables by their Shapley value does not necessarily give the optimal ordering for an insertion-deletion test. It will however do that for monotone functions of additive models, such as logistic regression.

1 Introduction

Explainable AI methods are used help humans learn from patterns that a machine learning or artificial intelligence model has found, or to judge whether those patterns are scientifically reasonable or whether they treat subjects fairly. As Hooker et al., (2019) note, there is no ground truth for explanations. Mase et al., (2022) attribute this to the greater difficulty of identifying causes of effects compared to effects of causes (Dawid and Musio,, 2021). Lacking a ground truth, researchers turn to axioms and sanity checks to motivate and vet explanatory methods. There are also some numerical measures that one can use to compute a quality measure for methods that rank variables from most to least important. These include the Area Over Perturbation Curve (AOPC) of Samek et al., (2016) and the Area Under the Curve (AUC) measure of Petsiuk et al., (2018) that we focus on. They have the potential to augment intuitive and philosophical distinctions among methods with precise numerical comparisons. In this paper we make a careful study of the properties of those measures and we illustrate their use on two datasets.

Insertion and deletion tests were used by Petsiuk et al., (2018) to compare variable importance methods for black box functions. In their specific case they had an image classifier that would, for example, conclude with high confidence that a given image contains a mountain bike. Then the question of interest was to identify which pixels are most important to that decision. They propose to delete pixels, replacing them by a plain default value (constant values such as black or the average of all pixels from many images) in order from most to least important for the decision that the image was of the given class. If they have ordered the pixels well, then the confidence level of the predicted class should drop quickly as more pixels are deleted. By that measure, their Randomized Input Sampling for Explanation (RISE) performed well compared to alternatives such as GradCAM (Selvaraju et al.,, 2017) and LIME (Ribeiro et al.,, 2016) when explaining outputs of a ResNet50 classifier (Zhang et al.,, 2018). For instance, Figure 2 of Petsiuk et al., (2018) has an example where occlusion of about 4% of pixels, as sorted by their RISE criterion can overturn the classification of an image. They also considered an insertion test starting from a blurred image of the original one and inserting pixels from the real image in order from most to least important. An ordering where the confidence rises most quickly is then to be preferred. Petsiuk et al., (2018) scored their methods by an area under the curve (AUC) metric that we will describe in detail below. The idea to change features in order of importance and score how quickly predictions change is quite natural, and we expect many others have used it. We believe that our analysis of the methods in Petsiuk et al., (2018) will shed light on other similar proposals.

Figure 1 shows an example where an image is correctly and confidently classified as an albatross by an algorithm described in Appendix A.1. The integrated gradients (IG) method of Sundararajan et al., (2017) that we define below can be used to rank the pixels by importance. In this instance the deletion AUC is which can be interpreted as meaning that about 27% of the pixels have to be deleted before the algorithm completely forgets that the image is of an albatross. The model we used accepts square images of size pixels with 3 color channels. As a preprocessing step, we cropped the leftmost image in Figure 1 to its central pixels. The IG feature attributions from each pixel (summed over red, green and blue channels) of this square image are presented in the rightmost panel of Figure 1. A saliency map shows that pixels in the bird’s face, especially the eye and beak are rated as most important.

In this paper we study insertion and deletion metrics for uses that include regression problems in addition to classification. We consider a function such as an estimate of a response given predictors represented as components of . The regression context is different from classification. The trajectory taken by as inputs are switched one by one from a point to a baseline point can be much less monotone than in the image classification problems that motivated Petsiuk et al., (2018). We don’t generally find that either or is near zero. There are also use cases where and are both actual observations; it is not necessary for one of them to be an analogue of a completely gray image or otherwise null data value. Sometimes and yet it can still be interesting to understand what happens to when some components of are changed to corresponding values of .

Our main contributions are as follows. Despite these differences between classification and regression, we find that insertion and deletion metrics can be naturally extended to regression problems. We then develop expressions for the resulting AUC in terms of certain main effects and interactions in building on the anchored decomposition from Kuo et al., (2010) and others. This anchored decomposition is a counterpart to the better known analysis of variance (ANOVA) decomposition. The anchored decomposition does not require a distribution on its inputs, much less the independence of those inputs that the ANOVA requires. In the regression context we prefer to change the AUC computation replacing the horizontal axis by a straight line connecting to . We obtain an expression for the average AUC in a case where variables were inserted in a uniform random order over all possible permutations. In settings without interactions the area between the variable change curve (that we define below) and the straight line has expected value zero under those permutations, but interactions change this. We also show that the expected area between the insertion curve and an analogous deletion curve that we define below does have expected value zero, even in the presence of interactions of any order. Some other contributions described below show that in some widely used models the same ordering that optimizes an area criterion also optimizes a Shapley value.

We take a special interest in the integrated gradients (IG) method of Sundararajan et al., (2017) because it is very fast. The number of function or derivative evaluations that it requires grows only linearly in the number of input variables, for any fixed number of evaluation nodes in the Riemann sum it uses to approximate an integral. The cost of exact computation for kernel SHAP (KS) of Lundberg and Lee, (2017) grows exponentially with the number of variables, although it can be approximated by sampling. We also include LIME of Ribeiro et al., (2016), DeepLIFT of Shrikumar et al., (2017), Vanilla Grad of Simonyan et al., (2013) and input times gradient method of Shrikumar et al., (2016). In the datasets we considered, KS is generally best overall. We note that the term ‘Vanilla’ is not used by Simonyan et al., (2013) to describe their methods but it has been used by others, such as Agarwal et al., (2022).

It is very common for machine learning functions to include binary inputs. For this reason we discuss how to extend IG to handle some dichotomous variables and then compare it to the other methods, especially KS. Simply extending the domain of for such variables from to is easy to do and it avoids the exponential cost that some more principled choices have.

The remainder of this paper is organized as following. Section 2 cites related works, introduces some notation and places our paper in the context of explainable AI (XAI), while also citing some works that express misgivings about XAI. Section 3 defines the AUC and gives an expression for it in terms of main effects and interactions derived from an anchored decomposition of the prediction function . The expected AUC is obtained for a random ordering of variables. We also introduce an area between the curves (ABC) quantity using a linear interpolation baseline curve instead of the horizontal axis. We show that arranging input variables in decreasing order by Shapley value does not necessarily give the order that maximizes AUC, due to the presence of interactions. Models that, like logistic regression are represented by an increasing function of an additive model do get their greatest AUC from the Shapley ordering depsite the interactions introduced by the increasing function. When that function is differentiable, then IG finds the optimal order. Section 4 discusses how to extend IG to some dichotomous variables. We consider three schemes: simply treating binary variables in as if they were continuous values in , multilinear interpolation of the function values at binary points, and using paths that jump from to . The simple strategy of casting the binary inputs to , which many prediction functions can do, is preferable on grounds of speed. Section 5 presents some empirical work. We choose a regression problem about explaining the value of a house in Bangalore using some data from Kaggle (Section 5.1). This is a challenging prediction problem because the values are heavily skewed. It is especially challenging for IG because all but two of the input variables are binary and IG is defined for continuous variables. The model we use is a multilayer perceptron. We compare variable rankings from six methods. KS is overall best but IG is much faster and nearly as good. Section 5.2 looks at a problem from high energy physics using data from CERN. Section 6 includes a ROAR analysis that compares how well the KS and IG measures rank the importance of variables in a setting where the model is to be retrained without its most important variables. Section 7 has some final comments. Appendix A gives some details of the data and models we study. Theorem 1 is proved in Appendix B.

2 Related Work

The insertion and deletion measures we study are part of XAI. Methods from machine learning and artificial intelligence are being deployed in electronic commerce, finance and other industries. Some of those applications are mission critical (e.g., in medicine, security or autonomous driving). When models are selected based on their accuracy on holdout sets, the winning algorithms can be very complicated. There is then a strong need for human understanding of how the methods work in order to reason about their accuracy on future data. For discussions on the motivations and methods for XAI see recent surveys such as Liao and Varshney, (2021), Saeed and Omlin, (2021) and Bodria et al., (2021).

One of the most prominent XAI tasks is attribution in which one quantifies and compares importance of the model inputs to the resulting prediction. Since there is no ground-truth for explanations, these attributions are usually compared based on theoretical justifications. From this viewpoint, SHAP (SHapley Additive exPlanations) (Lundberg and Lee,, 2017) and IG (Sundararajan et al.,, 2017) are popular feature importance methods due to their grounding in cooperative game theory. Given some reasonable axioms, game theory can produce unique variable importance measures in terms of Shapley values and Aumann-Shapley values respectively.

As a complementary method to theoretical a priori justification, one can also examine the outputs of attribution methods numerically, either applying sanity checks or computing quantitative quality measures. The insertion and deletion tests of Petsiuk et al., (2018) that we study are of this type. Quantitative metrics for XAI were recently surveyed in Nauta et al., (2022) who also discuss metrics based on whether the importance ratings align with those of human judges.

As an example of sanity checks, Adebayo et al., (2018) find that some algorithms to produce saliency maps are nearly unchanged when one scrambles the category labels on which the algorithm was trained, or when one replaces trained weights in a neural network by random weights. They also find that some saliency maps are very close to what edge detectors would produce and therefore do not make much use of the predicted or actual class of an image. Another example from Adebayo et al., (2020) checks whether saliency mathods can detect spurious correlation in a setting where the backgrounds in training images are artificially correlated to output labels and the model learns this spurious correlation correctly.

One important method is the ROAR (RemOve And Retrain) approach of Hooker et al., (2019). It sequentially removes information from the least important columns in each observation in the training data and retrains the model with these partially removed training data iteratively. A good variable ranking will show rapidly decreasing performance of the classifier as the most important inputs are removed. The obvious downside of this method is that it requires a lot of expensive retraining that insertion and deletion methods avoid.

The insertion and deletion tests we study have been criticized by Gomez et al., (2022) who note that the synthesized images generated in these tests are unnatural and do not resemble the images on which the algorithms were trained. The same issue of unnatural inputs was raised by Mase et al., (2019). Gomez et al., (2022) also point out that insertion and deletion tests only compare the rankings of the inputs.

Fel et al., (2021) noted that scores on insertion tests can be strongly influenced by the first few pixels inserted into a background image. They then note that images with only a few non-background pixels are quite different from the target image. The policy choice of what baseline to compare an image to affects whether the result can be manipulated. An adversarily selected image might differ from a target image in only a few pixels, and yet have a very different classification. At the same time both of these images differ greatly from a neutral background image. An insertion test comparing the target image to a blurred background might show that the classification depends on many pixels, while a deletion test with the adversarial image will show that only a few pixels need to change. The two tests will thus disagree on whether the classification was influenced by few or many pixels. Both are correct because they address different issues.

A crucial choice in using insertion and deletion tests is which reference input to use when deleting or inserting variables. Petsiuk et al., (2018) decided to insert real pixels into a blurred image instead of inserting them into a solid gray image because, when the most important pixels form an ellipsoidal shape, then inserting them into a gray background might lead a spurious classification (such as ‘balloon’). On the other hand, deleting pixels via blurring might underestimate the salience of those pixels if the algorithm is good at inferring from blurred images. In our albatross example of Figure 1 we used an all black image with zeros. We call such choices ‘policies’ and note that a good policy choice depends on the scientific goals of the study and the strengths and weaknesses of the algorithm under study.

Haug et al., (2021) study different kinds of baseline images for image classification problems noting that the choice of background affects how well a method performs. Among the backgrounds they mention are constants, blurred images, uniform or Gaussian noise, average images and baseline images maximally distant from the target image. They also mention the neutral backgrounds of Izzo et al., (2020) that lie on a decision boundary. Sundararajan and Najmi, (2020) discuss taking every available data point as a baseline and averaging the resulting Shapley values. Sturmfels et al., (2020) note the importance of choosing baselines carefully, pointing out that zero would be a bad baseline for blood sugar and that if a solid color image is used as a baseline in image classification, then the result cannot attribute importance to pixels of that color. They discuss many of the baselines that Haug et al., (2021) do and they propose the ‘farthest image’ baseline.

There are also broader criticisms of XAI methods. Kumar et al., (2020) point out some difficulties in formulating a variable importance problem as a game suitable for use in Shapley value. Their view is that those explanations do not match what people expect from an explanation. They also identify a catch-22 where either allowing or disallowing a variable not used by a model to be important causes difficulties. Rudin, (2019) says that one should not use a black box model to make high stakes decisions but should instead only use interpretable models. She also disputes that this would necessarily cause a loss in accuracy. We see a lot of value in that view, and we know that there are examples where interpretable models perform essentially as well as the best black boxes. However black boxes are very widely used. There is then value in XAI methods that can reveal and quantify any of their flaws. Furthermore, an explanation depends on not just the form of the model but also on the joint distribution of the predictor variables. For example an interpretable model that does not use the race of a subject might still have a discriminatory impact due to associations among the predictors and XAI methods can be used to evaluate such bias.

Prior uses of insertion and deletion tests have mostly been about image or text classification. There have been a few papers using them for tabular data or time series data, such as Cai et al., (2021), Hsieh et al., (2021), Parvatharaju et al., (2021), and Ismail et al., (2020).

Ancona et al., (2017) also describe a strategy of changing variables one at a time and observing how the quality of a prediction changes in response independently of Petsiuk et al., (2018). Their Figure 3c compares the trajectories taken by several different methods on some image classification problems. They have insertion and deletion curves to compare an occlusion method to integrated gradients. Unlike Petsiuk et al., (2018), they do not report an AUC quantity.

The work of Samek et al., (2016) precedes Petsiuk et al., (2018) and uses the same global ablation strategy of deleting information in order from most to least important. Their deletions involve replacing a whole block of pixels (e.g., a block) by uniformly distributed noise. One motivation for using such noise was to generate images outside the manifold of natural images. Their average over a perturbation curve is comparable to the average under the deletion curve of Petsiuk et al., (2018). Where Petsiuk et al., (2018) focus on curves for individual images, Samek et al., (2016) study the average of such curves over many images. In their numerical work they only make the first 100 such perturbations, affecting about of the pixels.

For us the advantage of the approach in Petsiuk et al., (2018) is that their AUC is defined by running the variable substitution to completion, changing every feature to the baseline value . Then we use two orders, one that seeks to increase as fast as possible and one that seeks to decrease it as fast as possible, and study the area between those two curves.

3 The AUC for Regression

The AUC method for comparing variable rankings has not had much theoretical analysis yet. This section develops some of its properties. The development is quite technical in places. We begin with a non technical account emphasizing intuition. Readers may use the intuitive development as orientation to the technical parts or they may prefer to skip the technical parts.

If we order the inputs to a function and then change them one at a time from the value in point to that in a baseline value , the resulting function values trace out a curve over the interval . If we have tried to order the variables starting with those that will most increase and ending with those that least increase (most decrease) then a better ordering is one that gives a larger area under the curve (AUC). We will find it useful to consider the signed area under this curve but above a straight line connecting the end points. This area between the curves (ABC) is the original AUC minus the area under the line segment.

A deletion measure orders the variables from those thought to be most decreasing of to those that are least decreasing, i.e., the opposite order to an insertion test. For deletion we like to use the area ABC below the straight line but above the curve that the deletion process traces out. When we need to refer to insertion and deletion ABCs in the same expression we use ABC′ for the deletion case.

Additive functions are of special interest because additivity simplifies explanation and such models often come close to the full predictive power of a more complicated model. For an additive model, the incremental change in from replacing by , call it , does not depend on for . In this case the AUC is maximized by ordering the variables so that . In this case the Shapley values are and so ordering by Shapley value maximizes both AUC and ABC. We also show that if one orders the predictors randomly, then the expected value of ABC under this randomization is zero for an additive function.

Prediction functions must also capture interactions among the input variables. We study those using some higher order differences of differences. This way of quantifying interactions comes from an anchored decomposition that we present. This anchored decomposition is a less well known alternative to the analysis of variance (ANOVA) decomposition. The interaction quantity for a set of variables is denoted . This interaction does not contribute to any points along the curve until the ‘last’ member of the set , denoted , has been changed. It is thus present only in the final points of the insertion curve. A large AUC comes not just from bringing the large main effects to the front of the list. It also helps to have all elements in a positive interaction included early, and at least one element in a negative interaction appear very late in the ordering.

When there are interactions present, it is no longer true that must be zero under random ordering. We show in Appendix B.1 that the area between the insertion and deletion curves satisfies under random ordering of inputs whether or not interactions are present.

Section 3.4 shows that if we sort variables in decreasing order of their Shapley values, then we do not necessarily get the ordering that maximizes the AUC. This is natural: the Shapley value of variable is defined as a weighted sum of incremental values for changing , while the AUC, uses only one of those incremental values for variable . The anchored decomposition that we present below makes it simple to construct an example with where the Shapley values are while the order has greater AUC than the order . This is not to say that insertion AUCs are somehow in error for not being optimized by the Shapley ordering, nor that Shapley value is in error for not optimizing the AUC. The two measures have different definitions and interpretations. They can reasonably be considered proxies for each other, but the Shapley value weights a variable’s interactions in a different way than the AUC does.

In Appendix B.2 we consider the logistic regression model . Because of the curvature of the logistic transformation, this function has interactions of all orders. At the same time is additive so on this scale the Shapley ordering does maximize AUC. The AUC on the original probability scale is perhaps the more interpretable choice. We show that due to the monotonicity of the logistic transformation, the Shapley ordering for is the same as for and so it also maximizes the for . Because is strictly monotone the Shapley ordering also optimizes AUC for loglinear models and for naive Bayes. It is also shown there that for a differentiable increasing function of an additive function that integrated gradients will compute the optimal order.

The next subsections present the above findings in more detail. Some of the derivations are in an appendix. We use well known properties of the Shapley value. Those are discussed in many places. We can recommend the recent reference by Plischke et al., (2021) because it also discusses Harsanyi dividends and is motivated by variable importance.

3.1 ABC Notation

We study an algorithm that makes a prediction based on input data . The points are written . In most applications . While some attribution methods require real-valued features, the AUC quantity we present does not require it. For classification, could be the estimated probability that a data point with features belongs to class , or it could be that same probability prior to a softmax normalization. Our emphasis is on regression problems.

The set of variable indices is . For any we write for the components that have . We write for . We often need to merge indices from two or more points into one hybrid point. For this is the point with for and for . That is, the parts of and have been properly assembled in such a way that we can pass the hybrid to getting . More generally for disjoint with the point has components , and for in , and respectively.

The cardinality of is denoted . We also write with by convention. It is typographically convenient to shorten the singleton to just where it could only represent a set and not an integer, especially within subscripts.

Suppose that we have two points and are given a method to attribute the difference to the variables . This method produces attribution values with the interpretation that is a measure of the effect on of changing to . We can then sort the variables according to their attribution values . In an insertion test we insert the variables from into in order from ones thought to most increase (i.e., largest ) to ones thought to most decrease (smallest ). Let the constructed points be for with and . If we have chosen a good order there will be a large area under the curve for and consequently also a large (signed) area between that curve and a straight line connecting its endpoints. The left panel in Figure 2 illustrates ABC for insertion.

In a deletion measure we order the variables from the ones thought to have the most negative effect on to the ones thought to have the most positive effect. Those variables are changed from to in that order and a good ordering creates a curve with a small area under it. We keep score by using the signed area above that curve but below the straight line connecting to . Note that we are still inserting variables from into but, in an analogy to what happens in images we are deleting the information that we think would make large, which in that setting made the algorithm confident about what was in the image. Let be the point we get after placing the elements of thought to most decrease into . Our ABC criterion for deletion is the signed area above the curve but below the straight line connecting connecting the endpoints. The right panel in Figure 2 illustrates ABC for deletion.

We also considered taking insertion to mean replacing components by in increasing order of predicted change to when and taking deletion to mean replacement starting with the most negative changes when . This convention may seem like a natural extension of the uses in image classification, but it has two difficulties for regression. First, it is not well defined when . Second, while this exact equality might seldom hold, that definition makes cases very different from those with , for small .

When and are two randomly chosen data points there is a natural symmetry between insertion and deletion. In many settings however, one of the points or is not an actual observation but is instead a reference value such as the gray images discussed above. As mentioned above, choices of and to pair with each other are called policies. Section 5.2 has some example policies on our illustrative data. We include a counterfactual policy with motivation similar to counterfactual XAI. The relation between counterfactual XAI and choice of background data are also discussed in Albini et al., (2021) in detail.

A formal description of the curve is as follows. For a permutation of , and define with . Now let , that is

For our theoretical study it is convenient to define

| (1) |

If we connect the points by line segments then the area we get is a sum of trapezoidal areas

The difference between this trapezoidal area and (1) is unaffected by the ordering permutation because and are invariant to the permutation. One could similarly omit either or (or both) from the sum in (1) without changing the difference between areas attributed to any two permutations.

Our primary measure is the area below the curve but above a straight line from to . It is the (signed) area between those curves, that is

| (2) | ||||

is a measure of the area under the straight line connecting to compatible with our AUC formula from (1). The difference between ABC and AUC is also unaffected by the ordering of variables. The AUC and ABC going from to is the same as that from to . That is, it only depends on the two selected points. The reason for this is that going from to equals when we go from to . For the same reason the deletion areas are the same in both directions, but generally not equal to their insertion counterparts.

Both AUC and ABC have the same units that has. Then for instance if is measured in dollars then is in dollars explained per feature. This normalization is different from that of Petsiuk et al., (2018) whose curve is over the interval instead of and whose AUC is then interpreted in terms of a proportion of pixels.

3.2 Additive Functions

The AUC measurements above are straightforward to interpret when takes the additive form for a constant and functions . We then easily find that

| (3) | ||||

| (4) |

The best ordering is, unsurprisingly, the one that sorts in decreasing values of . If is additive then the insertion and deletion ABCs are the same. Also, the Shapley value for variable is proportional to and so ordering variables by decreasing Shapley value maximizes the AUC and ABC.

3.3 Interactions

The effect of interactions is more complicated, but we only need interactions involving points and . We define the differences and iterated differences of differences that we need via

for with corresponding to no differencing at all. From Theorem 1 of Appendix B we get

We can interpret this as follows: the interaction for variables when represented as differences of differences, takes effect in its entirety once the last element of has been changed from to . It then contributes to of the summands. Thus, in addition to ordering the main effects from largest to smallest, the quality score for a permutation takes account of where large positive and large negative interactions are placed.

It is easy to see from (4) that for an additive function and a uniformly random permutation we have because under such random sampling the expected rank of variable is .

Now suppose that we permute the variables through into a random permutation . A fixed subset is then mapped to . Under this randomization

The next proposition gives .

Proposition 1.

For and let be a simple random sample of elements from . Then

| (5) |

Proof.

The result holds trivially for , so we suppose that . For , because there are equally probable ways to select the elements of and of those have . Subtracting we get

Then using the hockey stick identity,

Next we work out the expected value of . Using the decomposition in Appendix B.1 we have

Then with Proposition 1 we find that

As noted above, if has no interactions because , but otherwise it need not be zero because for . The contribution to from a given interaction has the opposite sign of that interaction because for . We show in Appendix B.1 that under random permutation of the indices.

3.4 AUC Versus Shapley

If we order variables in decreasing order by Shapley value, that does not necessarily maximize the AUC. We can see this in a simple setup for by constructing certain values of . We will exploit the delay with which an interaction gets ‘credited’ to an AUC to find our example.

Consider a setting with and , , , to be chosen later and all other . Because the values are also Harsanyi dividends (Harsanyi,, 1959), the Shapley value shares them equally among their members. Therefore

So long as , the Shapley values are ordered . The for this ordering is

If we order the variables then the AUC is

As a result we see that if , then

It is easy to show that there can be no counterexamples for .

4 Incorporating Binary Features into Integrated Gradients

IG avoids the exponential computational costs that arise for Shapley value. However, as defined it is only available for variables with continuous values. Many problems have binary variables and so we describe here some approaches to including them.

IG is based on the Aumann-Shapley value from Aumann and Shapley, (1974) who present an axiomatic derivation for it. We omit those axioms. See Sundararajan et al., (2017) and Lundberg and Lee, (2017) for the axioms in a variable importance context.

We orient our discussion around Figure 3 that shows a setting with 3 variables. Panel (a) shows a target data point that differs from a baseline point in three coordinates. Panel (b) shows the diagonal path taken by integrated gradients. The gradient of is integrated along that path to get the IG attributions:

If one of the variables is binary then one approach, shown in panel (c) is to simply jump from one value to another at some intermediate point, such as the midpoint. For differentiable the integral of the gradient along the line segment given by the jump would, by the fundamental theorem of calculus, be the difference between at the ends of that interval (times the Euclidean basis vector corresponding to that variable). Such a difference is computable for binary variables even though the points on the path are ill defined. Finally, the vector of Shapley values is an average over paths making jumps from baseline to target in all possible variable orders (Sundararajan et al.,, 2017). Panel (d) shows two of those paths. For differentiable one could integrate gradients along those paths as a way to compute the jumps, again by the fundamental theorem of calculus, and then average the path integrals.

Now suppose that we have binary variables in a set . Without loss of generality suppose that . Then any data and are in and for IG we need to consider arguments to in . We consider three choices that we describe in more detail below:

-

a)

Use the fitted as if the binary , for .

-

b)

Replace by a multilinear interpolation

and compute the integrated gradients of .

-

c)

Take paths that jump for binary as shown in Figure 3(c).

We call these choices, casting, interpolating and jumping, respectively.

Option a is commonly available as many machine learning models cast binary variables to real values when fitting. Option b interpolates: if then . Sundararajan et al., (2017) show that integrated gradients match Shapley values for functions that are a sum of a multilinear interpolation like above plus a differentiable additive function. Unfortunately, the cost of evaluating is , which is exponential in the number of binary inputs. For option c we have to choose where to make the jumps. For we would naturally jump half way along the path though there is not an axiomatic reason for that choice. For we have to choose points on the curve at which to jump. Even if we decide that all of those jumps should be at the midpoint, we are left with possible orders in which to make those jumps. By symmetry we might want to average over those orders but that produces a cost which is exponential in .

Based on the above considerations we think that the best way to apply IG to binary variables is also the simplest. We cast the corresponding booleans to floats.

5 Experimental Results

In this section we illustrate insertion and deletion tests for regression. We compare our variance importance measures on two tabular datasets. The first one is about predicting the value of houses in India. It has mostly binary predictors and two continuous ones. The second dataset is from CERN and it computes the invariant mass produced from some electron collisions.

The methods we compare are Kernel SHAP (Lundberg and Lee,, 2017), integrated gradients (Sundararajan et al.,, 2017), DeepLIFT (Shrikumar et al.,, 2017), Vanilla Grad (Simonyan et al.,, 2013), InputGradient (Shrikumar et al.,, 2016) and LIME (Ribeiro et al.,, 2016). Ancona et al., (2017) make a detailed study of backpropagation-based gradient methods including all of the above ones (this excludes LIME) as well as layer-wise relevance propagation (LRP) of Bach et al., (2015) that we did not include in our computations.

5.1 Bangalore Housing Data

The dataset we use lists the value in Indian rupees (INR) for houses in India. The data are from Kaggle at this URL:

www.kaggle.com/ruchi798/housing-prices-in-metropolitan-areas-of-india.

We use 38 of the 39 other columns in this dataset

(excluding the “Location” column that contains various place names

for simplicity),

and we treat the “Area” and “No. of Bedrooms” columns as continuous variables.

We use only the data from Bangalore (6,207 records). Most of those data points were missing almost every predictor. We use only 1,591 complete data points. We normalized the output value by dividing by 10,000,000 INR. We centered the continuous variables at their means and then divided them by their standard deviations. We selected 80% of the data points at random to train a multilayer perceptron (MLP). The hyperparameters such as number of layers and ratio of dropouts are determined from a search described in Appendix A.2.

For the 20% of points that were held out (391 observations) we computed the ABC from (2) using our collection of variable importance methods. We also included a random variable ordering as a check. For each of those points we made a careful selection of a reference point from holdout points as follows:

-

•

the point had to differ from in at least features,

-

•

it had to be among the smallest such values of , and

-

•

among those it had to have the greatest response difference .

Having numerous different features makes the attribution problem more challenging. Having small brings less exposure to the problems of unrealistic data hybrids. Finally, having large , the absolute value of the sum of feature attributions for XAI algorithms with the completeness axiom, identifies data pairs in most need of an attribution. Despite having close feature vectors those pairs have quite different predicted responses.

Our implementation of KS used 120,000 samples. Our implementation of IG used a Riemann sum on 500 points to approximate the line integrals. The hyperparameters for other XAI methods are summarized in Appendix A.3.

The ABCs and their differences are summarized in Table 1. There we see numerically that KS was best for the insertion ABC and IG was second best. For the deletion measure it was essentially a three way tie for best among KS, IG and DeepLift.

The simple gradient based methods were disappointing. In particular, vanilla grad was worse than random. We note that vanilla grad uses a default variable scaling. We used the standard deviation of each input while another choice is to scale each variable to the interval . Neither of these choices use the specific baseline-target pair and this could cause poor performance.

The difference between KS and IG was not very large. Thus even in this setting where there are lots of binary predictors, IG was able to closely mimic KS. We see in Table 1 that insertion ABCs are on average higher than deletion ABCs for this policy.

While KS and IG and LIME and DeepLIFT make use of reference values in computation of feature attributions, vanilla grad and InputGradient do not require one to specify reference values. They are determined only by local information around the target data. Since our ABC criterion is defined in terms of reference values it is not surprising that methods which use those reference values get larger ABC values. It is interesting that a method like InputGradient that does not even know the baseline we compare to can do as well as it does here. We note that DeepLIFT does reasonably well compared to KS and IG, even though DeepLIFT is derived without any axiomatic properties such as the Aumann-Shapley axioms. Ancona et al., (2017) pointed out a connection wherein DeepLIFT can be interpreted as the approximation of a Riemann sum of IG by a single step with average value in spite of the difference in their computational procedures. Their implementation details are also summarized in Appendix A.3.

KS performed well and IG is a fast approximation to it. Therefore we compare the ABC of insertion and deletion tests for KS and IG in Figure 4. In both cases the left panel shows that the ABC for KS has a long tail. This is also true for IG, but to save space we omit that histogram. Instead we show in the middle panels that the ABC for IG is almost the same as that for KS point by point, with KS usually attaining a somewhat better (larger) ABC than IG. The right panels there show that ABCs for random orderings have nearly symmetric distributions with insertion tests having a few more outliers than deletion tests do.

| Test Mode | Method | Mean | Std. Error |

|---|---|---|---|

| Insertion | Kernel SHAP | 0.628 | 0.034 |

| Integrated Gradients | 0.572 | 0.033 | |

| DeepLIFT | 0.548 | 0.032 | |

| Vanilla Grad | 0.093 | 0.026 | |

| InputGradient | 0.206 | 0.027 | |

| LIME | 0.499 | 0.028 | |

| Random | 0.020 | 0.023 | |

| Kernel SHAP Integrated Gradients | 0.057 | 0.007 | |

| Deletion | Kernel SHAP | 0.423 | 0.032 |

| Integrated Gradients | 0.422 | 0.032 | |

| DeepLIFT | 0.425 | 0.031 | |

| Vanilla Grad | 0.098 | 0.029 | |

| InputGradient | 0.185 | 0.029 | |

| LIME | 0.395 | 0.032 | |

| Random | 0.023 | 0.012 | |

| Kernel SHAP Integrated Gradients | 0.001 | 0.003 |

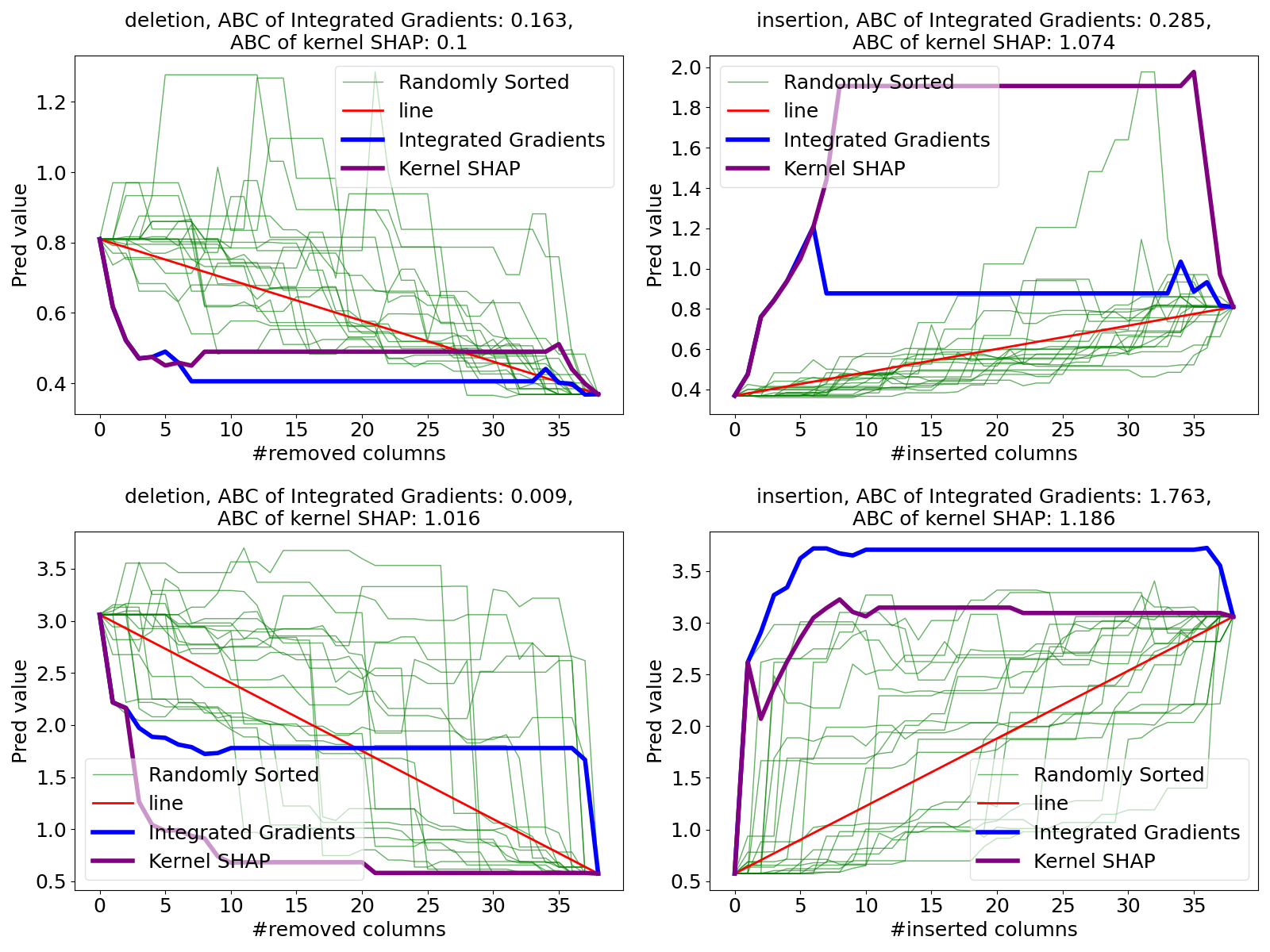

Figure 5 shows some insertion and deletion curves comparing a randomly chosen data point to a counterfactual reference point. Figure 6 shows analogous plots for the data with the greatest differences in ABC between KS and IG. It shows that a very large ABC difference between methods in the insertion test need not have a large difference in the deletion tests and vice versa.

5.2 CERN Electron Collision Data

The CERN Electron Collision Data (McCauley,, 2014) is a dataset about dielectron collision events at CERN. It includes continuous variables representing the momenta and energy of the electrons, as well as discrete variables for the charges of the electrons (: positrons or electrons). Only the data whose invariant mass of two electrons (or positrons) was in the range from to GeV were collected. We treat it as a regression problem to predict their invariant mass from the other 16 features.

The data contains the physical observables of two electrons after the collisions whose tracks are reconstructed from the information captured in detectors around the beam. The features are as follows: The total energy of the two electrons, the three directional momenta, the transverse momentum, the pseudorapidity, the phi angle and the charge of each electron. They are highly dependent features because some of them are calculated from the others. For instance, since a beam line is aligned on the -axis as usual in particle physics, the transverse momenta for are composed of and such that . The phi angle is the angle between and where . The total energy of them is also calculated relativistically. Since the momenta are recorded in GeV unit, which overwhelms the static mass of electrons ( keV in natural unit), the total energies are . The pseudorapidities are given as angles from a beam line. The definition is for each . Regarding these definitions, only 8 (three directional momenta and the charges of each electrons) of the 16 are independent features, and the other features are used as convenient transformed coordinates in particle physics. Actually, the invariant mass , the prediction target feature, is also approximated arithmetically from the momenta as within 0.6% residual error on average for this dataset. From this viewpoint, users of machine learning might confirm that the models properly exclude the charges from evidence of the predictions via XAI. This aspect is, in a sense, a case where ground truth in XAI can be obtained from domain knowledge. We have made such investigations but omit them to save space and because they are not directly related to insertion and deletion testing.

We omit data with missing values, and randomly select 80% of the complete observations (79,931 data points) to construct an MLP. Embedding layers are not placed in this MLP, and all variables are Z-score normalized. Hyperparameters such as the number of units in each layer of the MLP are determined by a hyperparameter search described in Appendix A.4.

The predictions for the 2,000 held out data points were inspected with both KS and IG. The reference data used in XAI methods are collected from these 2,000 data under this policy:

-

•

for all including charges,

-

•

is among the smallest such values, and

-

•

it maximizes subject to the above.

This policy is called the counterfactual policy below. It has similar motivations to the policy we used for the Bangalore housing data. In this case it was possible to compute KS exactly using function evaluations.

The results are given in Table 2. KS is best for both insertion and deletion measures. IG and DeepLIFT are close behind. LIME is nearly as good and the simple gradient methods once again do poorly. As we did for the Bangalore housing data, we make graphical comparisons between KS and IG.

| Test Mode | Methods | Mean | Std. Error |

|---|---|---|---|

| Insertion | Kernel SHAP | 18.535 | 0.215 |

| Integrated Gradients | 18.289 | 0.213 | |

| DeepLIFT | 18.118 | 0.211 | |

| Vanilla Grad | 1.310 | 0.252 | |

| InputGradient | 7.620 | 0.216 | |

| LIME | 17.319 | 0.209 | |

| Random | 0.380 | 0.175 | |

| Kernel SHAP Integrated Gradients | 0.246 | 0.025 | |

| Deletion | Kernel SHAP | 16.752 | 0.176 |

| Integrated Gradients | 16.315 | 0.173 | |

| DeepLIFT | 16.646 | 0.176 | |

| Vanilla Grad | 0.226 | 0.256 | |

| InputGradient | 7.940 | 0.187 | |

| LIME | 15.845 | 0.170 | |

| Random | 0.268 | 0.179 | |

| Kernel SHAP Integrated Gradients | 0.437 | 0.025 |

The results for the insertion test are shown in Figure 7. The deletion test results were very similar and are omitted. These results are similar to what we saw in the previous experiment. As a meaningful XAI metric, KS provides a larger ABC than the other orderings we tried. Also, even in this case where differentiability with respect to charges cannot be assumed, IG does nearly as well as KS.

Although most of data are close to the 45 degree line in the center panel of Figure 7 there are a few cases where KS gets a much larger ABC than IG does. Two such points are shown in Figure 8. Similarly to what we saw in the Bangalore housing example, the comparisons where KS and IG differ greatly in the insertion test has them similar in the deletion test and vice versa. A scatterplot of deletion versus insertion areas is given in Figure 9. Here and in the following we again only pick KS and IG as representative examples. Most of the data are near the diagonal in that plot but there are some exceptional outlying points where the two ABCs are quite different from each other.

Next we consider two more policies, different from our counterfactual policy. In a ‘one-to-one policy’, observations are paired up completely at random. They almost always get different values of the continuous parameters and they get, on average one different value among the two binary values. We also consider an ‘average policy’ where the reference point has the average value for all features. Such points are necessarily unphysical, for instance, they have near zero charge.

The results of these two policies are shown in Table 3. KS attains the best ABC value in all four comparisons there and IG is always close but not always second, even though it treats the particle charges as continuous quantities. One striking feature of that table is that the insertion ABCs are much larger than the deletion ABCs. There is a simple explanation. The invariant masses must be positive and their distribution is positively skewed. The model never predicted a negative value and the predictions also have positive skewness. Because the predicted values satisfy a sharp lower bound, that reduces the maximum possible deletion ABC. Because they are positively skewed, higher insertion ABCs are possible. We see LIME does very well on the one-to-one policy examples as it did on the counterfactual policy, but it does not do well on the (unphysical) average policy comparisons.

| One-to-One | Average | ||||

|---|---|---|---|---|---|

| Test Mode | Method | Mean | Std. Error | Mean | Std. Error |

| Insertion | Kernel SHAP | 30.392 | 0.535 | 23.518 | 0.216 |

| Integrated Gradients | 28.430 | 0.515 | 21.549 | 0.251 | |

| DeepLIFT | 26.740 | 0.433 | 21.333 | 0.238 | |

| Vanilla Grad | 1.707 | 0.215 | 4.803 | 0.194 | |

| InputGradient | 14.677 | 0.397 | 21.610 | 0.236 | |

| LIME | 27.008 | 0.510 | 11.892 | 0.221 | |

| Random | 3.795 | 0.266 | 4.242 | 0.156 | |

| Deletion | Kernel SHAP | 11.806 | 0.299 | 7.621 | 0.149 |

| Integrated Gradients | 10.643 | 0.310 | 6.856 | 0.128 | |

| DeepLIFT | 11.485 | 0.294 | 7.305 | 0.134 | |

| Vanilla Grad | 5.571 | 0.323 | 4.025 | 0.135 | |

| InputGradient | 1.839 | 0.296 | 5.957 | 0.139 | |

| LIME | 10.877 | 0.269 | 1.420 | 0.160 | |

| Random | 3.797 | 0.301 | 4.323 | 0.157 | |

Figure 10 shows results for the one-to-one policy. Unlike Figure 9 for the counterfactual policy the points for are exactly the same as those that had .

One data pair attaining an extreme difference in Figure 10 is inspected in Figure 11. This data gains over 150 GeV in the insertion ABC, which is anomalously large as this dataset is composed of events with invariant mass between and GeV. The figure shows that this large output is due to an extraordinary response to artificial data which appear on the path connecting and . Both XAI methods (IG and KS) correctly identify the features that bring on this effect for the insertion test. Since the one-to-one policy can make long distance pairs compared to the counterfactual policy, synthetic data in the insertion and deletion processes can be far from the data manifold.

The result for the average policy where the reference data is common to all test data is shown in Figure 12. This is a setting where the reference data are very far out of distribution, just like a single color image is for an image classification task. In this case the deletion test, replacing real pixels in order by average ones, attains much smaller ABC values than the insertion test that starts with the average values. From the above results in Figure 9, 10 and 12 it is easy to observe that the relational distributions of insertion and deletion tests are totally different depending on the reference data policy, even for the same XAI algorithm. One cause is asymmetry in the response: if is sharply bounded below but not above then insertion ABCs can be much larger than deletion ones.

| Number of Different Cols | Correlation Coefficients | ||

|---|---|---|---|

| Policy | (Total Number ) | KS | IG |

| Counterfactual | 16 | 0.820 | 0.799 |

| One-to-One | 14.991 | 0.276 | 0.297 |

| Average | 16 | 0.587 | 0.499 |

The ABC including other XAI methods are aggregated in Table 3. The relationships among their magnitudes are almost same as those in the counterfactual policy of Table 2. It is also confirmed that insertion tests have larger ABC values than deletion tests with same setup in general and their differences are larger than those of the counterfactual policy. This point supports our previous discussion about their asymmetry. The computation of InputGradient does not take the reference data into account, and this might explain why InputGradient has comparatively scores compared to other methods in the average policy.

The average number of different columns between reference data and target data and correlation coefficients of ABCs in two kinds of tests are summarized in Table 4. All sixteen columns have different values in the counterfactual policy by definition. The deviation from sixteen in the value in one-to-one policy is mostly from the two charges of the data, which can take only two levels, or . In this sense, the reference data in average policy is unphysical data since it has charges that are near zero. The correlation coefficients between two tests also vary between policies. We note also that the behavior of the two ABCs in the average policy strongly depends on whether or as seen in the scatter plots Figure 12.

6 RemOve and Retrain Methods

In this section we compare KS and IG via ROAR (RemOve And Retrain) (Hooker et al.,, 2019). ROAR is significantly more expensive to study than the other methods we consider as it requires retraining the models, and so we did not apply it to all of the methods. We opted to apply it just to Kernel SHAP and IG. We chose IG as our representative fast method because IG can be used on more general models than DeepLIFT can. We chose Kernel SHAP as the other method because it had best or near best ABC values on our numerical examples. The task in ROAR is about which variables are important to the model’s accuracy and not about which variables are important to any specific prediction. As a result the values in ROAR are not comparable to the other AUC and ABC values that we have computed.

The original proposal of ROAR measures the drop in accuracy for image classification tasks and applying it to regression tasks with tabular data raises the same issues as extending insertion/deletion tests to regression. We measure the test loss on held out data as a measure of retrained model performance. Retraining procedures are taken with an increasing number of removed features at each quantile in , where means that all features are removed. The model architecture and hyperparameters of retrained models are the original models as described in Sections A.2 and A.4.

To use ROAR we must decide how to remove features in the data. Removing in the original ROAR algorithm means padding pixels of the original images with noninformative values such as gray levels. In our experiments, the important features are overwritten by average values for continuous features and by modes for discrete features. These average and modal values are taken from the training data.

The results of our ROAR calculations are shown in Figure 13. Features to be removed are sorted both by their absolute values and by their original signed values for both KS and IG. The error bars show plus or minus one standard error computed from five replicates.

Since ROAR in this experiment measures the Huber loss on the test points, it should be unaffected by the signs of the attributions and sensitive to their magnitude. For this reason, sorting features by the absolute values of their attributions should give a better score than sorting by their signed values. This is a contrasting point to insertion and deletion tests.

The results in Figure 13 are surprising to us. The curves we see are very nearly straight lines connecting the loss with all features present to the loss with no features present, so there is very little area between them and a straight line connecting the end points. This means that the loss in accuracy from variables deemed most important is about the same as those deemed less important. This could be because neither KS nor IG are able to identify important predictors for this task. It could also be that the majority of predictor variables in this data can be replaced by a combination of some other predictors which then prevents a large reduction in accuracy from removing a subset of predictors prior to retraining.

A second surprise is that the signed ordering, that we used as a control that should have been beaten by the absolute ordering was nearly as good as the absolute ordering.

As before KS and IG are comparable, though here both seem disappointing. Using IG with variables sorted by their absolute values even came out superior to KS in the Bangalore housing dataset.

7 Conclusion

In this paper we have extended insertion and deletion tests to regression problems. That includes getting formulas for the effects of interactions on the AUC and ABC measures, finding the expected area between the insertion/deletion curve under random variable ordering, and replacing the horizontal axis by a more appropriate straight line reference. We gave a condition under which sorting variables by their Shapley value will optimize ABC as well as constructing an example where that does not happen.

We compared six methods and several policies on two datasets. We find that overall the Kernel SHAP gave the best areas. The much faster Integrated Gradients method was nearly as good. In order to even run IG in settings with binary variables, some strategy for using continuum values must be employed. We opted for the simplest choice of just casting the booleans to real values.

A very natural policy question is whether to prefer insertion or deletion. Petsiuk et al., (2018) consider both and do not show a strong preference for one over the other. They use deletion when comparing a real image to a blank image (deleting real pixels by replacing them with zeros). They use insertion when comparing a real image to a blurred one (inserting real pixels into the blurred image). In other words the choice between insertion and deletion is driven by the counterfactual point. In the regression setting both inputs points could be real data. By studying both insertion and deletion we have seen that they can differ. A natural way to break the tie is to sum the ABC values for both insertion and deletion. Under a completely random permutation the expected value of that sum is zero. See Appendix B.1. In our examples, IG closely matches KS for both insertion and deletion, so it also matches their sum.

Acknowledgement

This work was supported by the U.S. National Science Foundation grants IIS-1837931 and DMS-2152780, and by Hitachi, Ltd. We thank Benjamin Seiler for helpful comments. Comments from three anonymous reviewers have helped us improve this paper.

References

- Adebayo et al., (2018) Adebayo, J., Gilmer, J., Muelly, M., Goodfellow, I., Hardt, M., and Kim, B. (2018). Sanity checks for saliency maps. Advances in neural information processing systems, 31.

- Adebayo et al., (2020) Adebayo, J., Muelly, M., Liccardi, I., and Kim, B. (2020). Debugging tests for model explanations. Advances in Neural Information Processing Systems, 33:700–712.

- Agarwal et al., (2022) Agarwal, C., Krishna, S., Saxena, E., Pawelczyk, M., Johnson, N., Puri, I., Zitnik, M., and Lakkaraju, H. (2022). OpenXAI: Towards a transparent evaluation of model explanations. Technical report.

- Akiba et al., (2019) Akiba, T., Sano, S., Yanase, T., Ohta, T., and Koyama, M. (2019). Optuna: A next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD international conference on knowledge discovery & data mining, pages 2623–2631.

- Albini et al., (2021) Albini, E., Long, J., Dervovic, D., and Magazzeni, D. (2021). Counterfactual Shapley additive explanations. Technical report, arXiv:2110.14270.

- Aliş and Rabitz, (2001) Aliş, Ö. F. and Rabitz, H. (2001). Efficient implementation of high dimensional model representations. Journal of Mathematical Chemistry, 29(2):127–142.

- Ancona et al., (2017) Ancona, M., Ceolini, E., Öztireli, C., and Gross, M. (2017). Towards better understanding of gradient-based attribution methods for deep neural networks. Technical report, arXiv:1711.06104.

- Aumann and Shapley, (1974) Aumann, R. J. and Shapley, L. S. (1974). Values of Non-Atomic Games. Princeton University Press, Princeton, NJ.

- Bach et al., (2015) Bach, S., Binder, A., Montavon, G., Klauschen, F., Müller, K.-R., and Samek, W. (2015). On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one, 10(7):e0130140.

- Bodria et al., (2021) Bodria, F., Giannotti, F., Guidotti, R., Naretto, F., Pedreschi, D., and Rinzivillo, S. (2021). Benchmarking and survey of explanation methods for black box models. Technical report, arXiv:2102.13076.

- Cai et al., (2021) Cai, Y., Zimek, A., and Ntoutsi, E. (2021). XPROAX-local explanations for text classification with progressive neighborhood approximation. In 2021 IEEE 8th International Conference on Data Science and Advanced Analytics (DSAA), pages 1–10. IEEE.

- Dawid and Musio, (2021) Dawid, A. P. and Musio, M. (2021). Effects of causes and causes of effects. Annual Review of Statistics and Its Application, 9.

- Efron and Stein, (1981) Efron, B. and Stein, C. (1981). The jackknife estimate of variance. Annals of Statistics, 9:586–596.

- Fel et al., (2021) Fel, T., Cadene, R., Chalvidal, M., Cord, M., Vigouroux, D., and Serre, T. (2021). Look at the variance! efficient black-box explanations with Sobol-based sensitivity analysis. Advances in Neural Information Processing Systems, 34.

- Fisher and Mackenzie, (1923) Fisher, R. A. and Mackenzie, W. A. (1923). The manurial response of different potato varieties. Journal of Agricultural Science, xiii:311–320.

- Gomez et al., (2022) Gomez, T., Fréour, T., and Mouchère, H. (2022). Metrics for saliency map evaluation of deep learning explanation methods. Technical report, arXiv:2201.13291.

- Harsanyi, (1959) Harsanyi, J. C. (1959). A bargaining model for cooperative n-person games. In Contributions to the Theory of Games IV, volume 2, pages 325–355. Princeton University Press, Princeton, NJ.

- Haug et al., (2021) Haug, J., Zürn, S., El-Jiz, P., and Kasneci, G. (2021). On baselines for local feature attributions. Technical report, arXiv:2101.00905.

- He et al., (2015) He, K., Zhang, X., Ren, S., and Sun, J. (2015). Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE international conference on computer vision, pages 1026–1034.

- Hoeffding, (1948) Hoeffding, W. (1948). A class of statistics with asymptotically normal distribution. Annals of Mathematical Statistics, 19(3):293–325.

- Hooker et al., (2019) Hooker, S., Erhan, D., Kindermans, P.-J., and Kim, B. (2019). A benchmark for interpretability methods in deep neural networks. Advances in neural information processing systems, 32.

- Hsieh et al., (2021) Hsieh, C.-Y., Yeh, C.-K., Liu, X., Ravikumar, P., Kim, S., Kumar, S., and Hsieh, C.-J. (2021). Evaluations and methods for explanation through robustness analysis. In International Conference on Learning Representation (ICLR).

- Ismail et al., (2020) Ismail, A. A., Gunady, M., Corrada Bravo, H., and Feizi, S. (2020). Benchmarking deep learning interpretability in time series predictions. Advances in neural information processing systems, 33:6441–6452.

- Izzo et al., (2020) Izzo, C., Lipani, A., Okhrati, R., and Medda, F. (2020). A baseline for Shapley values in MLPs: From missingness to neutrality. Technical report, arXiv:2006.04896.

- Kokhlikyan et al., (2020) Kokhlikyan, N., Miglani, V., Martin, M., Wang, E., Alsallakh, B., Reynolds, J., Melnikov, A., Kliushkina, N., Araya, C., Yan, S., and Reblitz-Richardson, O. (2020). Captum: A unified and generic model interpretability library for pytorch. Technical report, arXiv:2009.07896.

- Kumar et al., (2020) Kumar, I. E., Venkatasubramanian, S., Scheidegger, C., and Friedler, S. (2020). Problems with Shapley-value-based explanations as feature importance measures. In Proceedings of the 37th International Conference on Machine Learning (ICML 2020).

- Kuo et al., (2010) Kuo, F., Sloan, I., Wasilkowski, G., and Woźniakowski, H. (2010). On decompositions of multivariate functions. Mathematics of computation, 79(270):953–966.

- Liao and Varshney, (2021) Liao, Q. V. and Varshney, K. R. (2021). Human-centered explainable AI (XAI): From algorithms to user experiences. Technical report, arXiv:2110.10790.

- Lundberg and Lee, (2017) Lundberg, S. M. and Lee, S.-I. (2017). A unified approach to interpreting model predictions. Advances in neural information processing systems, 30.

- Mase et al., (2019) Mase, M., Owen, A. B., and Seiler, B. (2019). Explaining black box decisions by Shapley cohort refinement. Technical report, arXiv:1911.00467.

- Mase et al., (2022) Mase, M., Owen, A. B., and Seiler, B. B. (2022). Variable importance without impossible data. Technical report, arXiv:2205.15750.

- McCauley, (2014) McCauley, T. (2014). Events with two electrons from 2010. CERN Open Data Portal.

- Nauta et al., (2022) Nauta, M., Trienes, J., Pathak, S., Nguyen, E., Peters, M., Schmitt, Y., Schlötterer, J., van Keulen, M., and Seifert, C. (2022). From anecdotal evidence to quantitative evaluation methods: A systematic review on evaluating explainable AI. ACM Computing Surveys.

- O’Donnell, (2014) O’Donnell, R. (2014). Analysis of Boolean functions. Cambridge University Press, New York.

- Parvatharaju et al., (2021) Parvatharaju, P. S., Doddaiah, R., Hartvigsen, T., and Rundensteiner, E. A. (2021). Learning saliency maps to explain deep time series classifiers. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, pages 1406–1415.

- Petsiuk et al., (2018) Petsiuk, V., Das, A., and Saenko, K. (2018). RISE: Randomized input sampling for explanation of black-box models. Technical report, arXiv:1806.07421.

- Plischke et al., (2021) Plischke, E., Rabitti, G., and Borgonovo, E. (2021). Computing Shapley effects for sensitivity analysis. SIAM/ASA Journal on Uncertainty Quantification, 9(4):1411–1437.

- Ribeiro et al., (2016) Ribeiro, M. T., Singh, S., and Guestrin, C. (2016). Why should I trust you? explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pages 1135–1144.

- Rudin, (2019) Rudin, C. (2019). Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nature Machine Intelligence, 1(5):206–215.

- Russakovsky et al., (2015) Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., Berg, A. C., and Fei-Fei, L. (2015). ImageNet large scale visual recognition challenge. International journal of computer vision, 115(3):211–252.

- Saeed and Omlin, (2021) Saeed, W. and Omlin, C. (2021). Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Technical report, arXiv:2111.06420.

- Samek et al., (2016) Samek, W., Binder, A., Montavon, G., Lapuschkin, S., and Müller, K.-R. (2016). Evaluating the visualization of what a deep neural network has learned. IEEE transactions on neural networks and learning systems, 28(11):2660–2673.

- Selvaraju et al., (2017) Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2017). Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626.

- Shrikumar et al., (2017) Shrikumar, A., Greenside, P., and Kundaje, A. (2017). Learning important features through propagating activation differences. In International conference on machine learning, pages 3145–3153. PMLR.

- Shrikumar et al., (2016) Shrikumar, A., Greenside, P., Shcherbina, A., and Kundaje, A. (2016). Not just a black box: Learning important features through propagating activation differences. Technical report, arXiv:1605.01713.

- Simonyan et al., (2013) Simonyan, K., Vedaldi, A., and Zisserman, A. (2013). Deep inside convolutional networks: Visualising image classification models and saliency maps. Technical report, arXiv:1312.6034.

- Smilkov et al., (2017) Smilkov, D., Thorat, N., Kim, B., Viégas, F., and Wattenberg, M. (2017). SmoothGrad: removing noise by adding noise. Technical report, arXiv:1706.03825.

- Sobol’, (1969) Sobol’, I. M. (1969). Multidimensional Quadrature Formulas and Haar Functions. Nauka, Moscow. (In Russian).

- Sobol’, (2003) Sobol’, I. M. (2003). Theorems and examples on high dimensional model representation. Reliability Engineering & System Safety, 79(2):187–193.

- Sturmfels et al., (2020) Sturmfels, P., Lundberg, S., and Lee, S.-I. (2020). Visualizing the impact of feature attribution baselines. Distill, 5(1):e22.

- Sundararajan and Najmi, (2020) Sundararajan, M. and Najmi, A. (2020). The many Shapley values for model explanation. In International Conference on Machine Learning, pages 9269–9278. PMLR.

- Sundararajan et al., (2017) Sundararajan, M., Taly, A., and Yan, Q. (2017). Axiomatic attribution for deep networks. In International Conference on Machine Learning, pages 3319–3328. PMLR.

- Tan and Le, (2019) Tan, M. and Le, Q. (2019). Efficientnet: Rethinking model scaling for convolutional neural networks. In International conference on machine learning, pages 6105–6114. PMLR.

- Wah et al., (2011) Wah, C., Branson, S., Welinder, P., Perona, P., and Belongie, S. (2011). The Caltech-UCSD Birds-200-2011 Dataset. California Institute of Technology.

- Zhang et al., (2018) Zhang, J., Bargal, S. A., Lin, Z., Brandt, J., Shen, X., and Sclaroff, S. (2018). Top-down neural attention by excitation backprop. International Journal of Computer Vision, 126(10):1084–1102.

Appendix A Detailed Model Descriptions of the Experiments

This appendix provides some background details on the experiments conducted in this article.

A.1 The Example in Image Classification

Here we summarize how the example of insertion and deletion tests in image classification shown in Figure 1 was computed. The image is from Wah et al., (2011) and the model is pretrained for classification in ImageNet (Russakovsky et al.,, 2015) whose architecture is EfficientNet-B0 (Tan and Le,, 2019). The preprocessing of the model includes cropping the center of the image to make it square as shown in the saliency map of Figure 1.

The saliency map is computed using SmoothGrad of Smilkov et al., (2017). It averages IG computations over 300 randomly generated baseline images of Gaussian noise. The model output are distributed to the latent features in the first convolutional layer (implemented in Captum (Kokhlikyan et al.,, 2020) as layer integrated gradient). That layer has 32 blocks each of which has a grid of pixels times pixels. The effect of any pixel is summed over those 32 blocks and over all patterns that contain it. In the insertion and deletion test, this saliency map is then the same size, , as the preprocessed original image. The reference image for both insertion and deletion tests was a black image.

The AUCs for several different choices of reference data are summarized in Table 5. Note that the definition of AUCs are different from the main material of this paper and small deletion AUC is better in this situation. The reference image has a very significant effect on the AUCs.

A.2 Model for the Bangalore Housing Data

The detail of the model used in Section 5.1 is summarized in Table 6. Those hyperparameters, including the number of intermediate layers were obtained from a hyperparameter search using Optuna (Akiba et al.,, 2019). The model is optimized with Huber loss. Each intermediate layer is accompanied with parametric ReLU (He et al.,, 2015) and dropout layers. The dropout ratio is common in all of them.

| Hyperparameter | Value |

|---|---|

| Dropout Ratio | 0.10031 |

| Learning Rate | |

| Number of Neurons | [333–465–86–234] |

| Huber Parameter |

A.3 Other XAI methods in Tables 1, 2 and 3

The implementation details of the other XAI methods than KS and IG which appear in Tables 1, 2 and 3 are summarized in this subsection.

We use the implementations on Captum (Kokhlikyan et al.,, 2020) for those methods, DeepLIFT (Shrikumar et al.,, 2017), Vanilla Grad (Simonyan et al.,, 2013), InputGradient (Shrikumar et al.,, 2016) and LIME (Ribeiro et al.,, 2016) with default set arguments. Inputs of InputGradient are those after applying Z-score normalizing in the electron-electron collision data from CERN. Zeros in binary vectors are replaced by a small negative value () in InputGradient to avoid degeneration in the Metropolitan Areas of India dataset. As we use parametric ReLU as the activation functions in our model, it is also treated as a usual nonlinear function in the DeepLIFT calculations. The reference values that can be set in LIME and DeepLIFT are chosen as same data to KS and IG, depending on their policy.

A.4 CERN Electron Collision Data

The hyperparameters for the model used in Section 5.2 are given in Table 7. They were obtained from a hyperparameter search using Optuna (Akiba et al.,, 2019). Each intermediate layer is a parametric ReLU with dropout. The dropout ratio is common to all of the layers. The performance for test data is depicted in Figure 14. The model is overall very accurate but the very highest values are systematically underestimated.

| Hyperparameter | Value |

|---|---|

| Dropout Ratio | 0.11604 |

| Learning Rate | |

| Number of Neurons | [509–421–65–368–122–477] |

| Huber Parameter |

Appendix B Proof of Theorem 1

Here we prove that . We use the anchored decomposition that we define next. We also connect that decomposition to some areas of the literature.

The anchored decomposition is a kind of high dimensional model representation (HDMR) that represents a function of variables by a sum of functions, one per subset of where the function for depends on only through . The best known HDMR is the ANOVA of Fisher and Mackenzie, (1923), Hoeffding, (1948), Sobol’, (1969) and Efron and Stein, (1981) but there are others. See Kuo et al., (2010).

The anchored decomposition goes back at least to Sobol’, (1969). It does not require a distribution on the inputs. Instead of centering higher order interaction terms by subtracting expectations, which don’t exist without a distribution, it centers by subtracting values at default or anchoring input points. We only need it for functions on and without loss of generality we take the anchor to be all zeros.

We use for vectors of all ones and all zeros, respectively. For we write generalizing the standard basis vectors . The function we need to study is where

gives the values in the curve we study.

The anchored decomposition of is

The main effect in an anchored decomposition is and the two factor term for indices is

There is an inclusion-exclusion-Möbius formula

See for instance Kuo et al., (2010). The anchored decomposition is also called cut-HDMR (Aliş and Rabitz,, 2001) in chemistry, and finite differences-HDMR in global sensitivity analysis (Sobol’,, 2003). When is the value function in a Shapley value context, the values are known as Harsanyi dividends (Harsanyi,, 1959). Many of the quantities we use here also feature prominently in the study of Boolean functions (O’Donnell,, 2014).

The next Lemma is from Mase et al., (2019). We include the short proof for completeness.

Lemma 1.

For integer , let have the anchored decomposition . Then for ,

where .

Proof.

The inclusion-exclusion formula for the binary anchored decomposition is

Suppose that for . Then, splitting up the alternating sum

because and are the same point when . It follows that if .

Now suppose that . First because only depends on through . From we have . Then , completing the proof. ∎

We are now ready to state and prove our theorem expressing the AUC in terms of the anchored decomposition. Without loss of generality it takes to be the identity permutation.

Theorem 1.

Let and let be defined by , and let . Then the given by (1) satisfies

| (6) |

Proof.

B.1 ABC for Deletion

Now suppose that we use the deletion strategy of replacing by in the opposite order from that used above, meaning that we change variables thought to most decrease first. Then letting be the index of the smallest element of , with by convention, we get by the argument in Theorem 1,

Our area between the curves, ABC, measure for deletion is

If we sum the two ABC measures we get