Delay-Effective Task Offloading Technology in Internet of Vehicles: From the Perspective of the Vehicle Platooning

Abstract

The task offloading technology plays a crucial vital role in the Internet of Vehicle (IoV) with the demands of delay minimum, by jointly optimizing the heterogeneous computing resources supported by the vehicles, roadside units (RSUs), and macro base stations (MBSs). In previous works, on the one hand, they ignored the wireless interference among the exchange and sharing of the task data. On the other hand, the available resources supported by the vehicles that have similar driving behaviors, which can form a vehicle platooning (VEH-PLA) and effectively integrate the resources of individual vehicle, has not been addressed. In addition, as a novel resource management paradigm, the VEH-PLA should consider the task categorization, since vehicles in VEH-PLA may have the same task offloading requests, which also has not attracted enough attention. In this paper, considering the wireless interference, mobility, VEH-PLA, and task categorization, we propose four kinds of task offloading models for the purpose of the processing delay minimum. Furthermore, by utilizing centralized training and decentralized execution (CTDE) based on multi-agent deep reinforcement learning (MADRL), we present a task offloading decision-making method to find the global optimal offloading decision, resulting in a significant enhancement in the load balancing of resources and processing delay. Finally, the simulations demonstrate that the proposed method significantly outperforms traditional task offloading methods in terms of the processing delay minimum while keeping the resource load balancing.

Index Terms:

IoV, task offloading, vehicle platooning, CTDE and MADRL, processing delay minimumI Introduction

In the era of intelligent transportation systems, the Internet of Vehicles (IoV) emerges as a transformative technology paradigm, reshaping the way we perceive and interact with transportation infrastructure and vehicles [1, 2], followed by large amounts of computing tasks that requires significant computational resources to process this data in real-time. However, the limited computing resources and energy constraints of vehicles still pose challenges for executing complex task offloading, which refers to the local task processing or the process of transferring computational tasks from vehicles to nearby edge servers and cloud infrastructure. Accordingly, an effective method of task offloading helps alleviate the computational burden on vehicles by leveraging the computing resources available in the network infrastructure.

In the context of task offloading, the most two crucial aspect lie in the offloading decision and resource management. On the one hand, tasks should be offloaded to a suitable offloading destination, based on factors such as task characteristics, network conditions, performance requirement, and resource availability. On the other hand, computational resource allocation in edge servers or cloud data centers should accommodate task offloading requests and ensure timely task execution with minimal resource contention. Currently, heuristic algorithms [3], game-theoretic [4], and methods in [5] are popular ones to solve the issue. But these methods failed to work well in a dynamic environment, since they rely on pre-defined rules and complex computations that may not reflect real-time data accurately. The deep reinforcement learning (DRL) can continuously optimize offloading schemes and achieve the global performance optimization. In particular, centralized training and decentralized execution (CTDE) is a typical framework in DRL and utilizes the strengths of both by training a global model centrally while allowing agents to execute decisions locally, and corresponding offloading schemes based on DRL and CTDE framework can better address the problem of task offloading in the context of IoV.

However, due to the size difference of resources and the dynamic nature of IoV environments, above studies on the optimization of the offloading decision and resource management mainly face the following challenges: 1) resource type: In fact, vehicles moving on the same road typically exhibit similar behaviors, which can form a platooning mode and be regarded as a novel resource management paradigm; 2) wireless interference: The data exchange and sharing among different devices involves wireless communication interference, which further deteriorates the processing delay and success probability of the task offloading; 3) task categorization: The vehicles moving on the same road potentially have similar task offloading requirements, the public features of task offloading should be considered. In this way, combining with the concept of VEH-PLA, the resource utilization can be further enhanced.

In view of the above three limitations on the current works and three challenges for the problem of task offloading, combining with the concept of VEH-PLA, we provide a CTDE-based DRL task offloading decision by optimizing the computational resources to minimize the processing delay and keep the load balancing of the resources, and make a comparison with the popular offloading decision schemes. The main contributions of the paper are summarized as follows.

-

•

For the problem of task offloading in an context of the IoV, we establish an innovative heterogeneous resource management paradigm with the concept of VEH-PLA. Based on above paradigm, both the minimum processing delay and load balancing of resources are achieved by optimizing computational resources with proposed four kinds of task offloading schemes, under constraints on the vehicular mobility, task categorization, and interference.

-

•

The established resource management paradigm can be modeled as a decentralized partially observable markov decision process (Dec-POMDP). Furthermore, we propose an effective task offloading method by using MADRL based on CTDE, called as a heterogeneous computational resources allocation (CTDE-MADRL-HCRA), to find the global optimal offloading decision, which integrates the advantages of VEH-PLA, dynamic learning capability of MADRL, leading to a significant performance enhancement in the accuracy of offloading decisions, resource allocation and processing delay.

-

•

Simulation results demonstrate the effectiveness and correctness of the proposed schemes. By making a performance comparison between the schemes based on value decomposition networks (VDN), independent Q-learning (IQL) and Q-Mixing networks (QMIX) with the consideration of VEH-PLA and task categorization or not, the CTDE-MADRL-HCRA shows a superior performance on the processing delay minimum and load balancing of resources, and provides an effective solution for task offloading in complex scenarios caused by the vehicular mobility and wireless interference.

The remainder of this paper is organized as follows. Section II summarizes the related work on vehicular network task offloading conducted domestically and internationally. Section III describes the system model and formulates the VEC task offloading optimization problem. The problem is transformed into a Dec-POMDP, and a learning algorithm based on CTDE is proposed in Section IV. Comprehensive simulation results demonstrating the performance are presented in Section V. Finally, Section VI concludes the paper.

II Related Works

The effective computational resource allocation affects the processing delay and success probability of a task offloading. Usually, the computational resources can be supported by the edge computation power (such as the MBSs, RSUs and vehicles) and even cloud center. Due to the mobility of the vehicles, huge amounts of task offloading, and limited size of computational resources in an IoV, on the one hand, it is challenging to ensure the processing delay and success probability of task offloading. Subsequently, in terms of the computational resources provided by different edge servers, task offloading decision methods, performance metrics of system cost, processing delay, and energy consumption, we survey the methods for task offloading decision in the IoV.

II-A Computational Resources Supported by RSUs/MBSs

The mobile edge computing (MEC) functions can be realized by integrating edge servers of RSUs/MBSs. In this way, the data processing and storage can be performed directly on these servers and the processing delays can be reduced. Subsequently, in [6], Luo et al. deployed RSUs based edge computing along roads to provide enough bandwidth for computing task offloading requiring by vehicles, for the purpose of minimizing the data processing costs while ensuring delay constraints. In particular, they modeled the data scheduling as a DRL problem, which can be solved by an improved deep Q-network (DQN) algorithm. By integrating the resources of MBS and RSU, In [7], Sonmez et al. proposed a vehicle edge orchestrator based on two-stage machine learning, under the constraints of the task offloading success probability and task processing delay. Similar works were also done in [8].

Due to the high-speed mobility of the vehicles, the effective communication duration between the vehicles and MBSs/RSUs cannot be enough to support achieve task offloading, since the corresponding processing delay may be higher than the effective communication duration.

II-B Computational Resources Supported by vehicles

Achieving task offloading by exploiting the computational resources of multiple vehicles is effective. For example, by offloading the task to the vehicles moving along the same direction of the road, it is possible to achieve task offloading while ensuring a longer effective communication duration. In [9], Qiao et al. regarded the moving vehicles as mobile edge computing resources with the purpose of establishing a collaborative distributed computing architecture, under which a collaborative task offloading and output transmission mechanism was proposed to ensure the processing delay and application-level performance. In [10], Xiong et al. proposed a heterogeneous intelligent task offloading framework based on three types of V2X communication technologies.

Considering the computational resources provided by the vehicle edge computing (VEC) server, local vehicles and other vehicles, to enhance the delay benefits and energy cost benefits, in [11], Huang et al. proposed a task offloading and resource allocation method by jointly designing of task type and vehicle speed, under which the utility function of the vehicle can be derived based on the energy costs and task execution revenue. Finally, a joint optimization scheme for task offloading and resource allocation was developed to maximize the utility level of vehicles constrained by the processing delay, computing resources, and wireless resources. Similar works were also done in [12].

Above works have considered the computational resources supported by the vehicles, and provided an available solution to deal with task offloading interrupt existing in offloading centered on the RSUs. But the coordinated task offloading framework among vehicles and collaboration were ignored.

II-C Computational Resources Supported by VEH-PLA

Different from randomly moving vehicles, the vehicles in VEH-PLA are featured by the same driving behaviors, such as same driving direction, smooth speeds, and tight following distance [13]. As an innovative method through V2V communication technology that enables the coordinated operation among multiple vehicles, the VEH-PLA offers a stable topology to provide a larger size of computational resources and effective communication duration [14]. In addition, the task offloading by using the vehicular resources can further decrease the corresponding processing delay.

In [13], Xiao et al. redistributed complex tasks via VEH-PLA, thereby optimizing the utilization of VEH-PLA’s idle resources for enhanced task processing. This approach benefited from the inherent stable connectivity, adjustable maneuverability, and sufficient charging capabilities characteristic of VEH-PLA. Moreover, they introduce a Stackelberg game model to describe the interplay between mobile edge platoon cloud (MEPC) platforms and vehicles requiring task offloading, focusing on maximizing their combined utility. In [15], Zheng et al. analyzed the processing delay and energy consumption of local offloading and VEH-PLA offloading when offloading the task to VEH-PLA members, and proposed a method to minimize the long-term maximum task queue by optimizing the allocation of communication and computing resources. Finally, the effectiveness of the proposed method was demonstrated through online algorithms using Lyapunov optimization and successive convex approximation (SCA) methods. In [16], Chao et al. designed a contract based incentive mechanism to incentivize vehicles to form VEH-PLA and share their computing resources. Then, for maximizing vehicle utility, a joint optimization of offloading decisions, communication, and computing resource allocation schemes of the vehicle and BS was formulated. The problem of task offloading failure caused by potential disconnections among vehicles was studied in [17], a multi-leader multi-follower Stackelberg game was introduced for the co-optimization of pricing strategies and computational resource distribution, with the goal of maximizing participant revenues. Similar works were also done in [18].

However, for one thing, all above studies ignored wireless interference over the processing of task offloading, which affects the QoS of communication channel and the processing delay, resulting a failure of the task offloading. For another thing, facing a large number of task offloading requests, there has been no analysis of task types, particularly the differences between tasks managed in a queue environment and those performed by traditional road vehicles. VEH-PLA, as a new type of computational resource allocation framework, allows its members to drive collaboratively, collectively completing public tasks such as environmental perception, decision-making, and emergency response. This can effectively reduce the frequency of task offloading and alleviate the pressure of insufficient computing resources in the IoV.

III SYSTEM MODEL AND PRELIMINARIES

III-A System Model

III-A1 Computational resource architecture

As shown in Fig. 1, the management architecture of computational resources in the context of the IoV includes a set of MBSs, denoted by , a set of RSUs, denoted by and a set of moving vehicles, denoted by , where , , denote the number of MBSs, RSUs and vehicles, respectively. The MBSs are distributed randomly at different locations keeping away from the road, while the RSUs are placed equidistantly along the roadside. In addition, the vehicles, RSUs, and MBSs poss limited computational resources. Without losing generality, let , , and denote the maximum computing capabilities of individual vehicle, RSU, and MBS (i.e., the maximum CPU cycle frequency), respectively.

III-A2 Task offloading

To improve the driving safety and traffic efficiency, moving vehicles generate the tasks needed being computed by occupying computational resources. Generally speaking, the generated tasks usually are classified into public tasks, such public demands of traffic sign recognition and road obstacle perception, as well as personalized tasks for satisfying individual differentiated demands. Because of limited computational resources and affordable processing delay of individual vehicle, vehicles requesting for a task can resort to local computational resources or available ones of other vehicles, RSUs as well as MBSs, as shown in Fig. 1.

III-A3 VEH-PLA based resource management

It is worth mentioning that, considering the available computational resources provided by other vehicles, VEH-PLA is a significant resource management architecture to effectively integrate the resources of the individual vehicle. Constrained by traffic regulations (such as driving direction, safe distance, speed limits), vehicles driving on the same road typically exhibit similar behaviors. Based on this, these vehicles moving a local range can form a platooning mode, driving together in an orderly manner according to certain rules and distances (especially on highways during peak hours or long-distance journeys). In addition, the VEH-PLA can expand its size through lane changing and merging, achieving more efficient communication and resource coordination optimization. Let be the set of vehicles in the same VEH-PLA, where () is the number of vehicles in the platooning. In this way, considering task types and VEH-PLA, feasible task offloading decisions can be provided by the individual vehicle, VEH-PLA, RSUs and MBSs, as shown in Fig. 2.

In addition, for better describing task offloading, we discretize the time duration into decision episodes, indexed by , with the length of . It is assumed that the vehicles generate a task at most at the episode .

III-B Vehicle Speed Model

Without loss of generality, we employ a Cartesian coordinate system and define the positive direction as moving towards the right of the Fig. 1, with coordinates measured in meters. The position of -th vehicle at time slot is . Similarly, the locations of -th RSU and -MBS can be denoted by and , respectively.

Using a truncated Gaussian distribution model to simulate vehicle traffic is more in line with the actual movement characteristics of vehicles [19], where the mean is and the standard deviation is . Let and be the minimum and maximum truncation ranges for speed, and let the speed be represented by . To ensure the consistency of speed among members of VEH-PLA, the generated speeds need to be synchronized. In this way, the speed of member-vehicles, denoted by , is set to the average speed of the whole platooning, namely .

III-C Advantages of VEH-PLA and Task Offloading Methods

Vehicles driving on the same road usually have similar task processing requirements (e.g., the road condition information, road congestion information, and weather information etc). The framework VEH-PLA has a stable topology structure and tight following distance. Accordingly, on the one hand, tasks requesting from vehicles in the platooning can be regarded as public tasks, and can be handled uniformly by using the same resources. While the individual differentiated task requests can be called as personalized tasks, which need allocate additional resources to process the tasks. On the other hand, the VEH-PLA framework also extends the available computing resources of individual vehicle, increasing the success probability of task offloading. Based on the advantages of VEH-PLA, when the vehicle requests for a task offloading, based on the constraints of the task types, size of available computing resources and processing delay provided by different offloading models, the vehicle will select the best offloading decision among the LOCAL offloading, VEH-PLA offloading, RSU offloading, and RELAY offloading, as shown in Fig. 3.

In detail, the VEH-PLA offloading model can support two kinds of task types, i.e., public tasks and personalized tasks. On the one hand, the vehicle requesting for a task offloading belongs to the platooning, it first determines whether the task is public or not. If the task is public, it does not occupy any resources to compute the task and directly gets the result from the lead vehicle of the VEH-PLA. Otherwise, it will perform task offloading. On the other hand, when the -th vehicle does not belong to the platooning, if effective communication duration between and any one of vehicles in the platooning is less than or equal to the affordable offloading delay of -th vehicle, and the latter has enough computing resources satisfying the demand of -th vehicle’s task, then can execute the VEH-PLA offloading model.

III-D The Delay Analysis of the Task Offloading Model

As a result of these diverse offloading models, differentiated demand of vehicles, different scale of computing resources, the processing delay of a task offloading consists of different types of delays for LOCAL, VEH-PLA, RSU and RELAY offloading models.

III-D1 Transmission Rate between Vehicles and RSUs

Given that -th RSU can keep effective communication duration with -th moving vehicle . In other words, -th vehicle can offload the computing task to -th RSU before moving out the latter’s coverage area. Combining with the conclusions in [20, 21], the transmission rate between and , denoted by , can be represented as

| (1) |

where denotes the transmission bandwidth of the vehicles, is the transmission power of -th vehicle, and are the channel fading coefficient and the distance between -th vehicle and -th RSU. In particular, follows an exponential distribution with unit mean. is the path-loss exponent, and is the additive Gaussian white noise (AGWN) power at the -th RSU. Moreover, the indicator function represents whether the -th vehicle is associated with -th RSU or not. Specifically, , if the -th vehicle requests a task offloading and is within the coverage of the -th RSU; otherwise, , namely the -th vehicle does not be with no need for a task offloading, or is not within the coverage range of -th RSU.

III-D2 Transmission Rate among Vehicles

Given that -th vehicle (in the VEH-PLA or not) offloads its task to an available vehicle, denoted by -th one, in the VEH-PLA. That is, the latter can provide enough computing resources to the former one. Transmission rate between the -th vehicle and the -th vehicle, denoted by , can be represented as

| (2) |

where denotes the AWGN power at the -th vehicle, and the indicator function denotes whether the -th vehicle and the -th vehicle are both associated with the -th vehicle. Specifically, , if the -th vehicle sends a task offloading to the -th vehicle, which has been assigned its parts of resources to the -th vehicle for achieving the task data processing; otherwise, .

III-D3 The Processing Delay Analysis

Given the scenario of binary task offloading, based on the constraints of available computing resources and offloading delay, the vehicles with task offloading demands have the option to offload the task locally, to RSUs, to platoon member vehicles or to other RSUs or MBSs by using a RSU as the relay. Due to the mobility characteristics of the vehicles, the supported transmission rate for a given vehicle with the demands of task offloading, denoted as the -th vehicle, are dynamic, resulting in selecting different offloading schemes. Let represent the set of candidate offloading decisions for the -th vehicle at time slot , including decisions generated by LOCAL, VEH-PLA, RSU, and MBS offloading models. Consequently,

| (3) | ||||

where is a binary random variable and denotes a offloading decision, namely -th vehicle offloads its computing task to -th node with enough resources. In detail, indicates that the task of the -th vehicle is offloaded to the -th unoccupied resource node; otherwise, . In addition, denotes the set of candidate offloading decisions for all vehicles at time slot . Furthermore, we establish the offloading delay models for four offloading models. Specially, the RSUs and the MBSs are linked through fiber optics, so the offloading delay includes sending delay, rather than transmission delay.

The task generated by a vehicle can be modeled as , where denotes the size of the computing task, denotes the processing density or the number of CPU cycles required for per unit of data, and represents the affordable maximum delay requirement of the -th vehicle. Next, we present the corresponding framework of delay analyses for offloading models of LOCAL, VEH-PLA, RSU, and RELAY.

1) Processing delay in LOCAL offloading model: Given the -th vehicle with the demands of task offloading during episode , if it has sufficient resources and satisfies the minimum delay requirement, a LOCAL offloading decision is executed. Then, the processing delay for LOCAL at episode , denoted by , is calculated as .

2) Processing delay in VEH-PLA Offloading Model: If the -th vehicle is within the communication range of the vehicle platoon and the communication delay is less than the effective communication duration, then the task can be offloaded to the -th vehicle that belongs to the vehicle platoon, where the communication delay is . Consequently, the processing delay of the vehicle in the platooning by using VEH-PLA offloading model, denoted by , is calculated as .

3) Processing delay in RSU offloading model: Enabled by dynamic frequency and voltage scaling techniques for RSUs [22], allowing for dynamic allocation of CPU frequency for each task. Let denote the total computational resource demands of multiple tasks served by the -th RSU . Then, the size of computational resources for -th vehicle allocated by -th RSU, denoted by, , can be obtained as . Therefore, the offloading delay is given by . Furthermore, from Eq. (1), the communication delay caused by offloading the task to -th RSU is . To sum up, the processing delay to the RSU can be expressed as

| (4) |

Through optical fiber links, the task is transferred from the -th RSU to the -th RSU. Let be the transmission rate between the -th RSU and the -th RSU, then the processing delay can be expressed as

| (5) |

4) Processing delay in RELAY offloading model: The modeling of the computing resources provided by the -th MBS to vehicle users as a Poisson distribution is denoted by , where represents the resources used by non-vehicle users. The rest of the content remains consistent with the relay RSU. Therefore, the processing delay of the k-th vehicle when offloading its task to the j-th MBS can be expressed as

| (6) |

In summary, the processing delay can be universally represented as a summation of delays associated with each offloading model, and can be calculated as follows:

| (7) |

III-E Problem Formulation: Delay-effective Task Offloading

Based on the above description, the purpose of this paper is to optimize the task offloading decisions of all vehicles demanding for a task offloading at episode , denoted by

Formally, the optimization problem of a delay-effective task offloading can be written as follows

| Objective: | (8a) | |||

| s.t. | (8b) | |||

| (8c) | ||||

| (8d) | ||||

| (8e) | ||||

where constraints of Eq. (8b) and Eq. (8c) indicate that the -th vehicle with demand of a task offloading only chooses one of four available offloading models at episode , as described in Subsection III-C. Eq (8d) ensures that the processing delay satisfies the maximum affordable delay requirements of -th vehicle, while Eq. (8e) can achieve the load balancing of the resources on the RSUs, by allowing to serve vehicles simultaneously at most over all episode.

To obtain a satisfactory task offloading decision, a search must be required in the decision space with the size of . Based on this fact, traditional methods, such as [23], typically necessitate adjusting decisions iteratively to achieve the global optimization, but do not work well in rapidly moving vehicular environments. Facing the challenging in acquiring a priori knowledge of the dynamic environment, the DRL has been shown that it allows for learning optimal offloading schemes via continual interaction with the stochastic vehicular environments [24]. Therefore, in Section IV, based on a CTDE, we present a multi-agent DRL (MADRL) algorithm to strike a balance between computational complexity and convergence performance to find a long-term optimal solution, satisfying the processing delay and resource load balancing requirements.

IV Distributed Computation Offloading Algorithm Based on CTDE

The optimal task offloading decisions of the vehicles demanding task offloading in Eq. (8) depend on the global observation of the state information for all vehicles. However, due to complex behaviors among vehicles caused by their mobility, it is challenging to obtain the global observation of the state formation. Accordingly, in this section, we first model the task offloading decision problem as a Dec-POMDP, then based on MADRL, which are constrained by local state information observation, affordable processing delay and load balancing, we present a CTDE-MADRL based task offloading method in the context of VEH-PLA to determine the optimal offloading decisions for minimizing the processing delay while keeping the load balancing of resource.

IV-A Distributed Multi-Agent System Design

First of all, each agent in IoV can collect or observe the local information in its surrounding environment at episode . Subsequently, the agent independently makes an action choice for maximizing the overall expected shared reward. Formally, based on Dec-POMDP, a distributed vehicular task offloading can be represented as a tuple of where is the set of agents, represents the set of all possible states of the environment, including all states that the system can reach at any episode, represents the environmental state at episode , is the local observation space possessed by -th agent at episode , is the specific instance of the local observation, is the state transition function, is the action spaces available for -th agent at episode , represents the action selected by -th agent, is the reward of -th agent at episode , and is the discount factor that balances the present and future rewards. Due to limited observations achieved by each agent, it cannot fully observe the environmental state space and instead use the local observation as a substitute for the state . In addition, the state transition function can be ignored, namely can be removed from the tuple, since the task offloading decision problem is modeled as a distributed MADRL task. Therefore, the configurations of distributed vehicular task offloading are rewritten as follows.

1) Local Observations: In terms of the location, task size, computing resource demand, processing delay, and relationship with vehicular platooning, the local observation of -th agent at episode can be represented as

| (9) |

where denotes the location of -th agent at episode , represents the task size, signifies the computing resources needed by the task, is the corresponding processing delay required for executing LOCAL offloading, and indicates that whether -th agent makes a connection with -th agent belonging to the vehicular platooning. If the -th agent cannot be connected to any agents existing in the VEH-PLA, then .

2) Action Space: The action space of the -th agent consists of potential offloading decisions. In this way, the set of offloading decisions available to the -th agent at episode can be represented as

| (10) | ||||

3) Reward: To update task offloading decisions to maximize the whole system rewards, all agents share a common team reward. By using similar methods to deal with actions of agents that cannot satisfy the constraints [25], we set a negative penalty for agents to describe the results when violating constraints of Eq. (8b) - Eq. (8e). Then the reward for the -th agent based on the objective function and constraints to shape the training process is given by

| (11) |

where is the penalty coefficient, while and are penalties corresponding to constraints of Eq. (8d) and Eq. (8e), respectively. A well-designed penalty structure for the training process is crucial to accelerate the algorithm convergence.

Under the framework of the cooperative MADRL, the agents collectively execute task offloading decisions, with the purpose of the whole system reward maximization, i.e., optimizing the global reward. Mathematically, the global reward, denoted by , can be represented as

| (12) |

However, the global reward is obtained only at the end of the episode, resulting in a low training efficiency. To solve this problem, we extract the rewards of all agents in each decision episode to as the instantaneous global reward

| (13) |

Furthermore, in the context of Dec-POMDP, the scheduling of computing tasks in each decision episode aims to maximize the whole system reward. In addition, the decisions provided by the agents are also effected by each other. Accordingly, above computing task scheduling process can be regarded as an exact potential game (EPG) [26], which can be defined as a scenario that the change of individual agent’s utility by updating its offloading strategy, and be exactly equivalent to the change of all system’s potential utilities. With the help of EPG, we conclude that the instantaneous reward maximization of an individual agent can lead to the maximization of the whole system’s reward. That is, when the instantaneous reward of -th agent, i.e., , is maximized, the total rewards of all agents in each decision episode, i.e. , is also maximized. Mathematically, the instantaneous global reward can be maximized by updating offloading decisions of all agents as follows

| (14) |

where and and denotes the set of actions determined by all agents and the set of local spaces observed by all agents at episode , respectively.

Given a local observation of -th agent at episode , i.e., , the expected reward when it selects the action can be calculated by the corresponding Q-value function. That is, with the configurations of and , the expected reward of -th agent can be represented as

| (15) |

where is the expectation function, and is a policy rule that guides agents for choosing corresponding actions based on their local observations.

Due to the dynamic nature and uncertainty of the environment, it is difficult to derive the optimal Q-value function for each agent. To solve this problem, we introduce the Bellman equation into the decision-making process of the agents, since it can simplify multi-stage decision problems into a series of single-state problems by using recursive decomposition. In this way, the optimal Q-value function, denoted by , can be derived, which denotes the maximum expected reward by taking action within a given local observation and following the optimal policy, the expression is shown in Eq. (16).

| (16) |

Furthermore, by implementing a greedy selection mechanism based on the optimal Q-value function of in Eq. (16), which prioritizes the action that is anticipated to provide the highest reward in the current state, thereby we can derive the corresponding optimal policy for the local observations, which is given by

| (17) |

However, finding the optimal policy by using Eq. (17) ignores the potential long-term benefits of actions that do not be the optimal in the current context, resulting in an inadequate exploration for potential long-term benefits. To solve this problem, we introduce a -greedy strategy, under which the agent can either select the best action known currently with the probability of , or choose any feasible action randomly with the probability of .

Under the condition of limited strategy space for all agents, the EPG can converge to a pure strategy Nash equilibrium, so the expected maximum cumulative reward can be obtained. However, finding the global optimal action of a task offloading strategy is a challenging problem, due to the dynamic environment in which the agents. In the next subsection, we utilize a MADRL method based on CTDE to search for an approximate optimal action for the EPG.

IV-B CTDE-based DRL Architecture

The CTDE, as a cutting-edge framework that integrates a hybrid MARL framework, allows for centralized training of agents using global state information, while enabling agents to act in a decentralized manner based on local observations. However, extracting the policies from the centralized training process that can be decentralized for execution remains a challenge for implementing CTDE in MADRL, since the discrepancy between global information used during training and local observations available during execution leads to issues of policy effectiveness and stability. To overcome this challenge, the VDN algorithm is introduced to solve the problem of aligning decentralized execution with centralized training outcomes, from the perspective of facilitating individual agent decisions that collectively contribute to achieving the same global optimal policy.

Therefore, in this section, based on the framework of CTDE-MADRL, we propose an effective task offloading method empowered by VEH-PLA, called as a heterogeneous computational resources allocation (CTDE-MADRL-HCRA), which integrates the advantages of VEH-PLA coordination, dynamic learning capability of MADRL, efficient collaboration mechanism of CTDE strategies, and decision optimization techniques of VDN to address challenges of online offloading.

To narrow the gap between global state information and local state information, the method of storing historical observations as an auxiliary information to construct the Q-value function model has been proven to be effective [26]. Accordingly, we adopt to represent the collection of historical observations of all agents, where is joint action-observation history of -th agent.

The CTDE-based framework aims to learn a joint Q-value function with global state information by using centralized training process. Once is learned, which cannot be obtained by using distributed DRL algorithms, distributed agents based on CTDE can make more reasonable independent decisions based on individual local observations. The proposed CTDE-based computational offloading architecture is shown in Fig. 4, and can address the aforementioned challenges. It can be noticed that the architecture consists of individual agent modules, a central training mechanism, and a policy execution framework. In detail, individual agent modules are responsible for processing local observations and selecting corresponding actions based on their current policies. The central training mechanism can solve the challenge of learning a joint Q-value function that incorporates global state information, enabling the optimization of collective outcomes. The policy execution framework makes it possible for agents to act independently in a decentralized manner during execution, relying on their local observations while still aligning their actions with the learned global objectives.

To achieve the decentralized executions executed from centralized training outcomes, based on the VDN technology, we can decompose the joint Q-value function at episode into the Q-value functions of individual agent, denoted by . That is,

| (18) |

In practical application, constructing or maintaining directly a Q-value table for each state-action pair is impractical, as the state space gets very large with the increasing of agents, making the Q-value function difficult to be stored or computed directly. Therefore, we use a deep Q-value network (DQN) to approximate the above function, that is, we adopt , where is the parameter of -th agent’s Q-value network. Therefore, Eq. (18) can be rewritten as

| (19) |

In this way, the decomposition from joint Q-value function to that of individual agent can be simplified for the learning task. Furthermore, with the help of VDN technique, the joint Q-value function obtained from centralized learning can be embedded in the distributed execution process. In particular, to maintain the consistency in decision-making process, it is necessary to ensure that the results obtained by globally optimizing the joint Q-value function (i.e., the global argmax operation) are consistent with the results set obtained by independently optimizing the Q-value function for each -th agent, i.e.,

| (20) |

To facilitate the agents’ learning from their interaction histories and enhance decision-making accuracy, the historical observations can be stored in a Gated Recurrent Unit (GRU) and serve as a valuable supplementary information for enhancing the agent’s Q-value function model. By minimizing the difference between the joint target Q-values and their current joint Q-values, we can obtain the optimal joint Q-value function. Let be the target Q-value of -th agent. Based on the result of Eq. (19), we get . Accordingly, the loss function of is defined as

| (21) |

where is the difference between a difference target and a joint Q-value . The temporal difference target is an estimate by an agent of future rewards obtainable from current state and action, denoted as , is number of samples.

The loss function of the joint Q-value function in Eq. (21) cannot properly deal with the gradient estimation, since the interaction among multiple agents leads to a non-stationary target for optimization. With the help of the back propagation method, we can obtain an implicit update of individual agent networks based on global rewards rather than single reward of each agent. Furthermore, by updating individual Q-value functions of the agents and sharing the weights of the agent networks, the training complexity can be further simplified. To sum up, the pseudo-code of CTDE-MADRL-HCRA is shown in Algorithm 1, where denotes the maximum number of epochs in the whole training, is the maximum number of episodes in each epoch. Within each episode, all agents engage in a complete interaction with the environment. Following each epoch, sample collection and gradient updates are performed.

IV-C Convergence and Complexity Analysis

IV-C1 Pre-training for Algorithm Convergence

Considering the real-world IoV scenarios, there could be hundreds of vehicles/agents per kilometer of road, and the number of vehicles may fluctuate over time. Therefore, achieving a stable policy convergence requires a considerable amount of episodes. To address this issue, the collaboration of multiple powerful edge servers for pre-training is considered. Based on empirical statistical methods, setting up a large-scale number of agents beforehand can achieve an approximate convergence of the policy. In practical applications, if the number of agents does not reach the scale setting beforehand, the environmental and action information of the redundant agents is set to null. When new agents join the scene, their information can be directly filled into the preset agents with no actions, effectively adapting to the complexity and dynamism of the VEC network and significantly improving the efficiency of the decision-making process and the overall performance of the system.

IV-C2 Complexity Analysis

The centralized training of CTDE can be achieved before the beginning of CTDE-MADRL-HCRA, then corresponding complexity depends on the distributed execution of CTDE. Let , , and denote the number of neurons in the input layer, hidden layer and output layer, respectively, and denote the sequence length of historical observations, which can affect the computation of the GRU layer belonging to the hidden layer. In line 8 of CTDE-MADRL-HCRA, the input layer, without involving any data processing, is responsible for transferring the input data to the hidden layer, corresponding computational complexity can be ignored. Furthermore, the hidden layer includes fully connected layer and GRU layer for solving Q-value function. Computational complexity of the fully connected layer is , since each one of output neurons needs to be connected to and compute with every neuron in the previous layer. Based on the assumption that each unit within the GRU interacts with other units at each timestep, the computational complexity of the GRU layer is . The computational complexity of the output layer is , since it not only is a fully connected layer, but also is tasked with transforming the output of the GRU layer into an action policy. To sum up, considering the computational complexity caused by input layer, hidden layer and output layer, overall computational complexity of CTDE-MADRL-HCRA is .

V Evaluations

V-A Simulation Sep

| Notation | Definition | Value |

| Number of VEH-PLA | 2 | |

| Number of VEH-PLA members | 5 | |

| Number of vehicles connected to a RSU | 6 | |

| Coverage of each RSU | 400 m | |

| Transmission power of each vehicle | 20 dBm[27] | |

| Mean of the truncated Gaussian probability distribution | 60[27] | |

| Standard deviation of the truncated Gaussian probability distribution | 5[27] | |

| Bandwidth of sub-6G band | 30 MHz[27] | |

| Shadowing standard deviation | 3.5 dB [27] | |

| Channel power gain per unit distance | -65 dB[27] | |

| Computing resource of a vehicle | 0.5 GHz[27] | |

| Computing resource of a RSU | 6 GHz[27] | |

| Computing resource of a MBS | 10 GHz[27] | |

| Task bit size | 1.5 Mbits | |

| Task computation density | 100 cycles/bit | |

| Discount factor | 0.9 | |

| Learning rate | 0.0003 | |

| Exploration factor | [0.05, 1] | |

In this section, we validate the effectiveness and correctness of the proposed method through Python 3.11 and TensorFlow 2.14.0 simulator. In detail, a bi-directional lane with the length of 1200 meters is constructed, and the vehicles’ position are reset to the starting point when they reach the end of the road for avoiding offloading disruptions caused by the vehicles leaving the environment. Three RSUs are fixed at the position of 200m, 600m, and 1000m, and 15 moving vehicles are randomly distributed. To reduce the size of the action space and achieve load balancing, if the number of vehicles served by the same RSU is above , the RSU will no longer provide the sources to other vehicles with the demand of task offloading, which can be transferred to the adjacent RSUs. In addition, the related simulation parameters are given in Table I. In addition, to model the task categorization (i.e., public tasks and personalized tasks) for the VEH-PLA members and non-platooning members, the ratio of public tasks for the latter is 20%, while that of VEH-PLA members is set to 30%, since the vehicles in VEH-PLA usually have similar task requirements.

V-B Parameter Settings of DRL Model

In the training processing of the DRL model, the discount factor is set to 0.9 for reflecting the relative importance of future rewards to immediate ones. The learning rate is determined to be 0.0003 to regulate the pace of model weight updates, ensuring stability and convergence speed in the learning progression. The exploration factor is initially set to 1 and decays exponentially with an increase in exploration attempts, diminishing ultimately to 0.05. These settings allow the model to extensively explore potential action strategies initially and gradually focus on enhancing the best strategies discovered. A buffer with the size of 2000 provides the learning model with an ample experiential reservoir. An update interval for the target, occurring once every 100 episodes, contributes to the stability of target values during learning. Furthermore, the mini-batch size is set to 64, optimizing the efficiency of the training process and data utilization.

V-C Baseline Schemes

To validate the effectiveness and correctness of task offloading decisions based on VEH-PLA proposed in the paper, i.e., CTDE-MADRL-HCRA, which is based on VDN, VEH-PLA and task categorization, we adopt offloading baseline schemes based on VDN, IQL and QMIX, respectively. In particular, with the consideration of the VEH-PLA and task type or not, baseline schemes can be classified as follows.

-

•

[27]: Offloading schemes based on the VDN, and these based on VEH-PLA and task categorization, denoted by VDN-NoVP-NoTC, VDN-VEH-PLA and VDN-TC;

-

•

[28]: Offloading schemes based on the QMIX, and along with both VEH-PLA and task categorization, denoted by QMIX-NoVP-NoTC, QMIX-VEH-PLA-TC;

-

•

[29]: Offloading schemes based on the IQL, and along with both VEH-PLA and task categorization, denoted by IQL-NoVP-NoTC and IQL-VEH-PLA-TC;

-

•

[30]: LOCAL offloading;

-

•

[31]: LOCAL and RSU offloading.

In the following, we investigate the impact of the numbers of episodes and vehicles, the sizes of VEH-PLA and task data on the convergence rate, average reward, and processing delay.

| Scheme Name | Acc. Reward | Diff. Previous | Diff. First |

| CTDE-MADRL-HCRA | 95.562 | — | — |

| VDN-NoVP-NoTC [27] | 84.673 | 10.23% ↑ | 12.86% ↑ |

| IQL-VEH-PLA-TC [29] | 91.298 | — | 04.96% ↑ |

| IQL-NoVP-NoTC [29] | 83.646 | 09.15% ↑ | 14.25% ↑ |

With the settings of 15 vehicles, consisting of two VEH-PLA with 5 vehicles respectively, and 5 non-platooning vehicles, other simulated parameters are set based on Table I, Fig. 5 demonstrates the relationship between the accumulated average reward nd the number of training episodes. On the one hand, it can be noticed that, with the increment of the number of training episodes, CTDE-MADRL-HCPA, along with other baseline schemes, shows a fast convergence speed and good stability, which validates the effectiveness analyzed in Subsection IV-C. On the other hand, the average rewards of CTDE-MADRL-HCRA, as well as QMIX-VEH-PLA-TC and IQL-VEH-PLA-TC employing the offloading model proposed in this paper, are significantly higher than these of baseline schemes. The corresponding reason is that the VEH-PLA and task categorization reduce the number of task offloading requests, and further reduce computational resource consumption of vehicles belonging to VEH-PLA, which can be used to satisfy the demand of task offloading requiring by other vehicles. In particular, as shown in Table II, compared with VDN-NoVP-NoTC [27], QMIX-VEH-PLA-TC [28], QMIX-NoVP-NoTC [28], IQL-VEH-PLA-TC [29], and IQL-NoVP-NoTC [29], average reward achieved by CTDE-MADRL-HCPA is increased by 11.45%, 2.38%, 12.86%, 4.96% and 14.25%, respectively, which suggests that the CTDE-MADRL-HCRA possesses a superior offloading decision optimization capabilities by using task categorization and VEH-PLA, making it particularly suitable for IoV systems that demand high stability and efficiency.

Next, with the same parameter settings of Fig. 5, average processing delay of task offloading is considered, since the processing delay in Eq. (8a) cannot fully reflect the exploratory nature of individual vehicle decisions within the processing of DRL, while the average processing delay can smooth out this episodic variability, offering a more consistent measure for assessing the overall system efficiency. Consequently, in Fig. 6, we observe the influence of the number of episodes on the average processing delay. It can be observed that, apart from LOCAL offloading scheme [30], the average processing delay is relatively low in the initial phase of other schemes. This is because that, in the initial phase, the computational resources of RSUs and vehicles in VEH-PLA are not occupied by vehicles requesting for task offloading, and thus have abundant computational resources. Accordingly, the tasks can be offloaded directly successfully. While in LOCAL offloading scheme, the computational resource of individual vehicle is less than these of other offloading schemes, resulting in a larger average processing delay. However, as the number of episodes increases, more task offloading requests exist and available computational resources become less, resulting in a gradual rise in average processing delay. Among all schemes, CTDE-MADRL-HCRA has the lowest average processing delay followed by the QMIX-VEH-PLA-TC scheme [28], which further demonstrates the effectiveness of proposed offloading model based on EH-PLA. On the average, compared with other offloading schemes VDN-NoVP-NoTC [27], QMIX-VEH-PLA-TC [28], IQL-VEH-PLA-TC [29], the processing delay achieved by CTDE-MADRL-HCRA is decreased by 14.52%, 6.46% and 13.52% at least, respectively. In addition, compared to the offloading schemes based on IQL (i.e., IQL-VEH-PLA-TC, IQL-NoVP-NoTC [29]), which independent updating policies of multiple agents lead to difficulties in coordinating the optimization of average processing delay, both CTDE-MADRL-HCRA and QMIX-VEH-PLA-TC are more suitable for cooperative environments and therefore exhibit a more better performance.

| Schemes | 13 to 15 | 15 to 17 | 17 to 19 | 13 to 19 |

| CTDE-MADRL-HCRA | 10.76% | 19.07% | 20.95% | 59.52% |

| VDN-NoVP-NoTC [27] | 10.37% | 20.73% | 18.59% | 58.02% |

| QMIX-VEH-PLA-TC [28] | 11.01% | 19.89% | 19.52% | 59.06% |

| QMIX-NoVP-NoTC [28] | 10.81% | 21.33% | 18.13% | 58.82% |

| IQL-VEH-PLA-TC [29] | 13.13% | 24.85% | 16.54% | 64.61% |

| IQL-NoVP-NoTC [29] | 10.59% | 21.29% | 20.93% | 62.20% |

Furthermore, for the better observation, the results are averaged with the number of episodes varying from 500 to 1000. Considering different number of non-platooning vehicles and other simulated parameter settings keep the same as before, Fig. 7 illustrates the performance comparison of all offloading schemes in terms of the average processing delay. It can be noticed that from Fig. 7 the average processing delay increases with the increment of the number of vehicles. On the one hand, CTDE-MADRL-HCRA shows the best performance with the consideration of VEH-PLA and tesk categorization. On the other hand, as shown in Table III, compared with CTDE-MADRL-HCRA and QMIX-VEH-PLA-TC, the growth rate of IQL-VEH-PLA-TC is about 5%. Compared with offloading schemes based on IQL, such as IQL-VEH-PLA-TC [29], average processing delays achieved by CTDE-MADRL-HCRA and these based on QMIX-VEH-PLA-TC [28] increase more stably, which can be attributed to the fact that, for offloading schemes based on the IQL, the vehicles only learn independently without cooperation. When the number of vehicles becomes large, offloading schemes based on VDN and QMIX perform more effectively.

Considering the case of two VEH-PLAs with the number of moving vehicles varying in the range of [3, 4, 5, 6, 7], and the case of no-VEH-PLA (i.e., the number of vehicles in VEH-PLA is 1), Fig. 8 illustrates the relationship between the size of VEH-PLA and average processing delay, where the number of vehicles represented at x-axis is that of a single VEH-PLA. It can be noticed that with the increment of VEH-PLA members, on the one hand, the average processing delay shows a downward trend. This is because the fact that over the size of VEH-PLA increasing, the vehicles with the demand of public task offloading can directly utilize the results of the VEH-PLA, which can avoid overlapping offloading for the same public task, decreasing the average processing delay. On the other hand, total decrement of average processing delay between any two number of VEH-PLA members becomes less significant. In the CTDE-MADRL-HCRA scheme, the average processing delays with the size of VEH-PLA being 5, 6, 7 are 2.254s, 2.223s, and 2.211s, respectively. Compared with average processing delays achieved by 5 and 6 VEH-PLA members, that of VEH-PLA with 7 members is decreased 1.44% and 1.91%. One possible is that the marginal benefit of adding additional vehicles to the VEH-PLA diminishes as the number of vehicles increases, indicating a plateau in efficiency gains. Therefore, in the case of Fig. 8, maintaining a VEH-PLA with the size of 5 or 6 members is a more appropriate choice, in terms of average processing delay, VEH-PLA stability and offloading feasibility.

The number of moving vehicles on the road is set to 15, and other parameters are set as before. I particular, considering 12 VEH-PLA members, which are classified into 1, 2, 3, 4, and 6 platooning respectively, and 3 non-platooning members, Fig. 9 describes the performance comparison of average processing delay under different number of platooning, The results clearly indicate that CTDE-MADRL-HCRA is more applicable for various platoon modes, followed by QMIX-VEH-PLA-TC, the corresponding reason is similar as that of Fig. 5, Fig. 6, and Fig. 7. Similarly, IQL-VEH-PLA-TC exhibits the worst performance. Given a certain number of moving vehicles, decreasing the number of VEH-PLA (i.e., increasing the number of vehicles in a single VEH-PLA) can have actually helped reduce the average processing delay. This is attributed to the fact that more fewer VEH-PLA can lead to more fewer number of public task offloading. However, the size of VEH-PLA is not more larger the better, one possible reason is that increased communication overhead and coordination difficulties among a larger number of vehicles can lead to inefficiencies and delays in the platooning network, and has been concluded in [32], [33]. Therefore, in this case, keeping the size of VEH-PLA with the number of 6 members can make a better trade-off between the average processing delay and platooning stability.

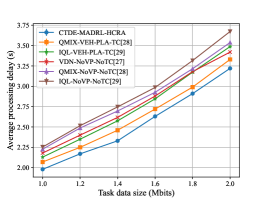

The size of of task data varies in range of [1.0, 2.0]Mbits, and other parameters are set as before. Fig. 10 validates the impact of task size on the average processing delay. CTDE-MADRL-HCRA shows the best performance as expected. In particular, given a task data with the size of 2 Mbits, compared with QMIX-VEH-PLATC and IQL-VEH-PLA-TC, the average processing delay achieved by CTDE-MADRL-HCRA is decreased 110 ms and 240 ms, respectively.

VI CONCLUSION AND FUTURE WORK

In this paper, by integrating the resources of individual vehicle with the concept of VEH-PLA, along with the resources of RSUs and MBSs, we investigated the task offloading in the IoV characterized by the mobility and wireless interference, with the purpose of minimizing the processing delay and keeping the resource load balancing. A DRL framework based on CTDE was introduced to enhance the accuracy of task offloading and resource utilization. The effectiveness of proposed offloading scheme was further demonstrated by simulation results, which made a comprehensive comparison with other baseline offloading schemes by considering the influences of the number of episodes and vehicles, the size of VEH-PLA and task data, respectively. In particular, the proposed scheme showed the lowest processing delay.

Owing to space limitations, this paper aimed to minimize the processing delay of task offloading and keep the load balancing of resources. In fact, it can be noticed that the performance of task offloading depends on the vehicular mobility and energy consumption. Therefore, in our future work, we will devote into joint optimization of the resource allocation and vehicle trajectory prediction to minimize the processing delay and energy consumption simultaneously. In addition, the integration of unmanned aerial vehicles (UAV) into the IoV can further enhance the network coverage and adjust computational resource allocation more flexibly, which also deserves further investigation.

References

- [1] B. Hazarika, K. Singh, S. Biswas, and C.-P. Li, “Drl-based resource allocation for computation offloading in iov networks,” IEEE Transactions on Industrial Informatics, vol. 18, no. 11, pp. 8027–8038, 2022.

- [2] X. He, H. Lu, M. Du, Y. Mao, and K. Wang, “Qoe-based task offloading with deep reinforcement learning in edge-enabled internet of vehicles,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 4, pp. 2252–2261, 2021.

- [3] X. Hou, Z. Ren, J. Wang, W. Cheng, Y. Ren, K.-C. Chen, and H. Zhang, “Reliable computation offloading for edge-computing-enabled software-defined iov,” IEEE Internet of Things Journal, vol. 7, no. 8, pp. 7097–7111, 2020.

- [4] X. Song, B. Xu, X. Zhang, S. Wang, T. Song, G. Xing, and F. Liu, “Everyone-centric heterogeneous multi-server computation offloading in its with pervasive ai,” IEEE Network, vol. 37, no. 2, pp. 62–68, 2023.

- [5] Y. Sun, Z. Wu, K. Meng, and Y. Zheng, “Vehicular task offloading and job scheduling method based on cloud-edge computing,” IEEE Transactions on Intelligent Transportation Systems, vol. 24, no. 12, pp. 14 651–14 662, 2023.

- [6] Q. Luo, C. Li, T. H. Luan, and W. Shi, “Collaborative data scheduling for vehicular edge computing via deep reinforcement learning,” IEEE Internet of Things Journal, vol. 7, no. 10, pp. 9637–9650, 2020.

- [7] C. Sonmez, C. Tunca, A. Ozgovde, and C. Ersoy, “Machine learning-based workload orchestrator for vehicular edge computing,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 4, pp. 2239–2251, 2021.

- [8] Y. Cui, H. Li, D. Zhang, A. Zhu, Y. Li, and H. Qiang, “Multiagent reinforcement learning-based cooperative multitype task offloading strategy for internet of vehicles in b5g/6g network,” IEEE Internet of Things Journal, vol. 10, no. 14, pp. 12 248–12 260, 2023.

- [9] G. Qiao, S. Leng, K. Zhang, and Y. He, “Collaborative task offloading in vehicular edge multi-access networks,” IEEE Communications Magazine, vol. 56, no. 8, pp. 48–54, 2018.

- [10] K. Xiong, S. Leng, C. Huang, C. Yuen, and Y. L. Guan, “Intelligent task offloading for heterogeneous v2x communications,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 4, pp. 2226–2238, 2021.

- [11] X. Huang, L. He, X. Chen, L. Wang, and F. Li, “Revenue and energy efficiency-driven delay-constrained computing task offloading and resource allocation in a vehicular edge computing network: A deep reinforcement learning approach,” IEEE Internet of Things Journal, vol. 9, no. 11, pp. 8852–8868, 2022.

- [12] J. Lin, S. Huang, H. Zhang, X. Yang, and P. Zhao, “A deep-reinforcement-learning-based computation offloading with mobile vehicles in vehicular edge computing,” IEEE Internet of Things Journal, vol. 10, no. 17, pp. 15 501–15 514, 2023.

- [13] T. Xiao, C. Chen, Q. Pei, and H. H. Song, “Consortium blockchain-based computation offloading using mobile edge platoon cloud in internet of vehicles,” IEEE Transactions on Intelligent Transportation Systems, vol. 23, no. 10, pp. 17 769–17 783, 2022.

- [14] T. Xiao, C. Chen, and S. Wan, “Mobile-edge-platooning cloud: A lightweight cloud in vehicular networks,” IEEE Wireless Communications, vol. 29, no. 3, pp. 87–94, 2022.

- [15] D. Zheng, Y. Chen, L. Wei, B. Jiao, and L. Hanzo, “Dynamic noma-based computation offloading in vehicular platoons,” IEEE Transactions on Vehicular Technology, vol. 72, no. 10, pp. 13 000–13 010, 2023.

- [16] C. Yang, W. Lou, Y. Liu, and S. Xie, “Resource allocation for edge computing-based vehicle platoon on freeway: A contract-optimization approach,” IEEE Transactions on Vehicular Technology, vol. 69, no. 12, pp. 15 988–16 000, 2020.

- [17] X. Ma, J. Zhao, Q. Li, and Y. Gong, “Reinforcement learning based task offloading and take-back in vehicle platoon networks,” in 2019 IEEE International Conference on Communications Workshops (ICC Workshops), 2019, pp. 1–6.

- [18] Z. Zhang and F. Zeng, “Efficient task allocation for computation offloading in vehicular edge computing,” IEEE Internet of Things Journal, vol. 10, no. 6, pp. 5595–5606, 2023.

- [19] J. Zhao, Q. Li, Y. Gong, and K. Zhang, “Computation offloading and resource allocation for cloud assisted mobile edge computing in vehicular networks,” IEEE Transactions on Vehicular Technology, vol. 68, no. 8, pp. 7944–7956, 2019.

- [20] K. Yu, J. Yu, Z. Feng, and H. Chen, “A reassessment on applying protocol interference model under rayleigh fading: From perspective of link scheduling,” IEEE/ACM Transactions on Networking, vol. 32, no. 1, pp. 238–252, 2024.

- [21] K. Yu, J. Yu, X. Cheng, D. Yu, and A. Dong, “Efficient link scheduling solutions for the internet of things under rayleigh fading,” IEEE/ACM Transactions on Networking, vol. 29, no. 6, pp. 2508–2521, 2021.

- [22] Y. Sun, X. Guo, J. Song, S. Zhou, Z. Jiang, X. Liu, and Z. Niu, “Adaptive learning-based task offloading for vehicular edge computing systems,” IEEE Transactions on Vehicular Technology, vol. 68, no. 4, pp. 3061–3074, 2019.

- [23] K. Zhang, Y. Mao, S. Leng, A. Vinel, and Y. Zhang, “Delay constrained offloading for mobile edge computing in cloud-enabled vehicular networks,” in 2016 8th International Workshop on RNDM, 2016, pp. 288–294.

- [24] X. Liu, Y. Wang, D. Chen, D. Li, and Z. Feng, “An multi-resources integration empowered task offloading in internet of vehicles,” IEEE Transactions on Communications, 2024, under review.

- [25] W. Zhan, C. Luo, J. Wang, C. Wang, G. Min, H. Duan, and Q. Zhu, “Deep-reinforcement-learning-based offloading scheduling for vehicular edge computing,” IEEE Internet of Things Journal, vol. 7, no. 6, pp. 5449–5465, 2020.

- [26] Z. Sun, H. Yang, C. Li, Q. Yao, D. Wang, J. Zhang, and A. V. Vasilakos, “Cloud-edge collaboration in industrial internet of things: A joint offloading scheme based on resource prediction,” IEEE Internet of Things Journal, vol. 9, no. 18, pp. 17 014–17 025, 2022.

- [27] L. Zhu, Z. Zhang, L. Liu, L. Feng, P. Lin, and Y. Zhang, “Online distributed learning-based load-aware heterogeneous vehicular edge computing,” IEEE Sensors Journal, vol. 23, no. 15, pp. 17 350–17 365, 2023.

- [28] T. Rashid, M. Samvelyan, C. S. De Witt, G. Farquhar, J. Foerster, and S. Whiteson, “Monotonic value function factorisation for deep multi-agent reinforcement learning,” Journal of Machine Learning Research, vol. 21, no. 178, pp. 1–51, 2020.

- [29] A. Tampuu, T. Matiisen, D. Kodelja, I. Kuzovkin, K. Korjus, J. Aru, J. Aru, and R. Vicente, “Multiagent cooperation and competition with deep reinforcement learning,” PloS one, vol. 12, no. 4, p. e0172395, 2017.

- [30] Z. Zhou, J. Feng, Z. Chang, and X. Shen, “Energy-efficient edge computing service provisioning for vehicular networks: A consensus admm approach,” IEEE Transactions on Vehicular Technology, vol. 68, no. 5, pp. 5087–5099, 2019.

- [31] H. Liao, Z. Zhou, W. Kong, Y. Chen, X. Wang, Z. Wang, and S. Al Otaibi, “Learning-based intent-aware task offloading for air-ground integrated vehicular edge computing,” IEEE Transactions on Intelligent Transportation Systems, vol. 22, no. 8, pp. 5127–5139, 2021.

- [32] X. Ge, Q.-L. Han, Q. Wu, and X.-M. Zhang, “Resilient and safe platooning control of connected automated vehicles against intermittent denial-of-service attacks,” IEEE/CAA Journal of Automatica Sinica, vol. 10, no. 5, pp. 1234–1251, 2023.

- [33] X. Ge, Q.-L. Han, X.-M. Zhang, and D. Ding, “Communication resource-efficient vehicle platooning control with various spacing policies,” IEEE/CAA Journal of Automatica Sinica, vol. 11, no. 2, pp. 362–376, 2024.