Delay and Packet-Drop Tolerant Multi-Stage Distributed Average Tracking in Mean Square

Abstract

This paper studies the distributed average tracking problem pertaining to a discrete-time linear time-invariant multi-agent network, which is subject to, concurrently, input delays, random packet-drops, and reference noise. The problem amounts to an integrated design of delay and packet-drop tolerant algorithm and determining the ultimate upper bound of the tracking error between agents’ states and the average of the reference signals. The investigation is driven by the goal of devising a practically more attainable average tracking algorithm, thereby extending the existing work in the literature which largely ignored the aforementioned uncertainties. For this purpose, a blend of techniques from Kalman filtering, multi-stage consensus filtering, and predictive control is employed, which gives rise to a simple yet comepelling distributed average tracking algorithm that is robust to initialization error and allows the trade-off between communication/computation cost and stationary-state tracking error. Due to the inherent coupling among different control components, convergence analysis is significantly challenging. Nevertheless, it is revealed that the allowable values of the algorithm parameters rely upon the maximal degree of an expected network, while the convergence speed depends upon the second smallest eigenvalue of the same network’s topology. The effectiveness of the theoretical results is verified by a numerical example.

Distributed average tracking, reference noise, input delay, packet-drop, multi-agent system.

1 Introduction

For a multi-agent plant operating through a network of devices, the capability of distributed average tracking (DAT), measured by the tracking error between each agent’s state and the average of a set of reference signals via a distributed protocol, depends on the agent dynamics, the network topology, the class of control algorithms, as well as the reference signals. It has been recognized that when the agent dynamics, the reference signals, and the network topology are given, and the control algorithm has been designed, the reference noise, input delay, and network transmission failures will also lead to degrading control performance. This paper considers the DAT problem for discrete-time multi-agent systems, in which both the agents’ states and the control inputs are updated in a discrete-time manner. The effect of linear time-invariant agent dynamics, noise-corrupted reference signals, and unreliable transmission networks subject to random packet-drop are investigated. Each agent’s local input will be implemented via a multi-stage algorithm. The objective is to investigate what may affect the tracking error in this setting, and whether it is possible to achieve practical DAT, i.e., the stationary-state tracking error can be made arbitrarily small, and how.

The capability of distributed average tracking is a significant attribute of multi-agent systems, which has proven useful for distributed sensor fusion [2, 3, 4], distributed optimization [5], and multi-agent coordination [6, 7, 8, 9, 10]. For single-integrator plants, a consensus algorithm and a proportional-integral algorithm are investigated respectively in [11] and [12], wherein both algorithms could track the average of stationary references with zero tracking errors. The proportional-integral control offers additional robustness against initialization errors. Meanwhile, more advanced design methods have been exploited to track time-varying references [13], sinusoid references with unknown frequencies [14], and arbitrary references with bounded derivatives [15]. Recently, the study on DAT has been expanded to handle complicated agent dynamics, e.g., double-integrator dynamics [16, 17], generic linear dynamics [8, 18], and nonlinear dynamics [19, 20], with performance analysis [21, 22, 23], privacy requirements [24], and for balanced directed networks [25, 26]. By introducing a “damping” factor, the algorithm of [27] ensures DAT with small errors while being robust against initialization errors. Inspired by the proportional algorithm, a multi-stage DAT algorithm was lately proposed in [28] based upon a cascade of DAT filters, which is capable of achieving DAT with bounded errors. For more details on DAT, a recent tutorial is available [29].

In spite of significant progress on DAT, the study on practical issues, such as delay and noise, is only to emerge [30]. Indeed, it is generally recognized, and intuitively clear, that the convergence of DAT algorithms can be constrained by transmission failures, input delays, as well as reference noise, which all likely result in negative effect on the convergence of the closed-loop system. For linear systems with small input delays, the control algorithm without delay compensation might still work, since linear systems possess a certain robustness margin to small delays [31]; yet the convergence will generically fail for relatively large delays. In practice, a reference signal might represent a target or a dynamic process, for which the measurement is inevitably corrupted by random noise. However, the effect of reference noise has been commonly ignored. Apart from that, since the communication network is shared by all agents, packet-drops ubiquitously exist, and therefore should be fully addressed, particularly when the data transmission rate is large.

This paper proposes a practical DAT design which can concurrently tolerate input delays, random packet-drops, and reference noise. For this purpose, a blend of the techniques from multi-stage consensus filtering, predictive control, and Kalman filtering is employed. This work extends the existing work to a more realistic setting where the idealized assumptions, which are seldom possible in practice, are removed. To the best of the authors’ knowledge, this work presents the first multi-stage design that takes reference noise, input delays, packet-drops all into consideration, which are perceived as main sources of control design difficulty for multi-agent systems. With this defining feature, the analysis reveals that an expected network topology plays a central role in ensuring the convergence of the proposed DAT algorithm, wherein the allowable values of the algorithm parameters rely upon the maximal degree of the expected network, while the convergence speed depends on the second smallest eigenvalue of the same network’s topology. It should be noted that due to the additional algorithm components and their inherent couplings, the convergence analysis is significantly more challenging than the existing ones.

The rest of this paper is organized as follows. In Section 2, notation and mathematical preliminaries are presented. In Section 3, the problem is defined. In Section 4, the DAT algorithm is designed with the aid of Kalman filtering, predictive control, and multi-stage consensus filtering. The performance of the proposed algorithm is analyzed in Section 5. Section 6 presents numerical examples to verify the theoretical results. Finally, Section 7 concludes the paper.

2 Preliminaries

2.1 Notation

Let denote the set of real numbers and the set of positive integers. Let and denote respectively the set of -dimensional real vectors and the set of real matrices. Let be the -dimensional identity matrix, the -dimensional vector with all ones, and the matrix with all ones. For a vector , the norm used is defined as . The transpose of matrix is denoted by . The diagonal matrix with being its th diagonal element is denoted by . Let denote the inverse of matrix . The smallest and largest eigenvalues of are given respectively by and . Let . It is assumed that all the vectors and matrices have compatible dimensions, which may not be shown if clear from the context. For a set , let denote its cardinality, i.e., the number of elements in . Let be the mathematical expectation and be the probability function. The normal probability distribution is denoted by .

2.2 Graph Theory

A graph is defined by , where is the set of nodes and is the set of edges. A graph is undirected if for all . This paper considers undirected graphs. For node , the set of its neighbors is defined as . The adjacency matrix of is given by , where if , and otherwise. The degree of node is defined as . The maximum degree of is given by . The degree matrix is given by . The Laplacian matrix of is defined as . A graph is connected if, for any pair , there exists a path connecting to . For graphs and with the same node set, their union is given by .

2.3 Observability and Controllability

Definition 1 ([32])

A matrix pair with is said to be completely observable if the observability Gramian

defined for , is positive definite for some and . Furthermore, the pair is said to be uniformly (completely) observable if there exists a positive integer and positive constants and such that

for all .

Definition 2 ([32])

A matrix pair with is said to be completely controllable if the controllability Gramian

defined for , is positive definite for some and . Furthermore, the pair is said to be uniformly (completely) controllable if there exists a positive integer and positive constants and such that

for all .

3 Problem Description

Consider a network of agents, labeled from to . Agent , , follows the following discrete-time multi-stage dynamics:

| (1) |

where is the state of agent at stage , is the input of agent at stage subject to an input delay , and is the time variable. A graph is used to describe the information flows among the agents, where is the node set and is the edge set. Throughout this paper, it is assumed that graph is connected.

Because information is exchanged through the network, there usually exist packet-drops particularly when the data transmission rate is high. It is common and reasonable to delineate packet-drops by independent Bernoulli processes. Specifically, let be a random variable indicating whether the transmission between two neighboring agents and is successful, i.e.,

| (2) |

where is the packet-drop rate. For non-neighboring agents, with probability . Due to the random packet-drops, the de facto information exchange network, denoted by , is by nature a random network. At each time instant, takes a value from the set , , with the probability

| (3) |

where and and means that it takes values from the graph indexed by .

Each agent has a reference signal , which is governed by

| (4) |

where is the input, is the measurement, and is the measurement gain. The process noise and measurement noise follow independent normal probability distributions, i.e.,

where is the variance of the process noise, and is the variance of the measurement noise. The following assumption is made on the reference signals.

Assumption 1

The reference signals satisfy the following properties:

-

(i)

the expectation approaches a constant, as ;

-

(ii)

and , where .

The primary objective of this paper is to design distributed input sequence for the system (3) such that all agents can track the average of the noisy reference inputs in the sense that, for all ,

| (5) |

where is a pre-desired constant, which can be arbitrarily small.

4 Algorithm Design

In this section, the control input sequence is designed for the multi-stage system (3) to track the average signal of the noisy references (4), which gives the following DAT algorithm:

| (6) |

where and are respectively the predicted states of agent and reference at time instant using the measurement information up to time instant , and and are two gain parameters to be designed.

The agent state predictor is given by

| (7) |

where is the predictor gain, while the states of agent from to are predicted by

| (8) |

Because the reference signals are subject to both input delay and noise, its design needs a combination of the predictive control and Kalman filtering techniques. Specifically, the following predictor for the reference signals is designed:

where is a predictor gain and is the estimate obtained via the Kalman filter

| (9a) | ||||

| (9b) | ||||

| (9c) | ||||

| (9d) | ||||

| (9e) | ||||

in which is the Kalman gain, and are, respectively, the priori and posteriori estimates of , is the mean-squared priori estimate error, and is the mean-squared posteriori estimate error. The initial states of the Kalman filter, and , are chosen randomly.

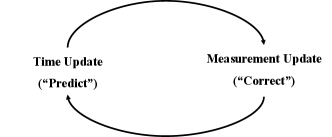

The estimate process alluded to above is delineated in Fig. 1. As shown, the Kalman filter performs two operations: time update and measurement update. The time update equations are (9a) and (9b), while the measurement update equations are (9c)–(9e). The time update equations “predict” the state and estimate errors at time from those at time , while the measurement update equations adjust the priori estimate by using the measurement according to the Kalman gain , which is obtained by minimizing .

Substituting the input (4) into system (3) leads to the closed-loop system

| (10) |

which can be written in a vector format as

| (11) |

where

Here, is a stochastic Laplacian matrix, changing within the possible set , where is the Laplacian matrix associated with , i.e.,

For future use, let

The following gives a useful result regarding the expecation of the Laplacian matrix . The result will be employed in the convergence analysis in the next section.

Lemma 1 ([33])

For multi-agent system (10) with a connected communication network, the expected Laplacian matrix, , has only one zero eigenvalue, i.e.,

The advantages of the multi-stage DAT system (10) are as follows: firstly, it does not involve integral control actions or input derivatives, thus exhibits robustness to initialization errors; secondly, the proposed multi-state scheme enables the possibility of making a trade-off among the communication/computation cost (i.e., the number of stages), the tracking error and the convergence time; thirdly, the proposed scheme takes input delay, packet-drops, and reference noise into consideration, making it more feasible for practical applications.

5 Convergence Analysis

In this section, the convergence of the proposed algorithm embedded in system (10) is analyzed. It is first to show that the Kalman filter (9) is stable.

Proof 5.1.

In what follows, the performance of the multi-stage DAT system (10) is analyzed. Let

where . Note that is well defined due to Assumption 1. The following result characterizes agents’ stationary states in terms of .

Lemma 5.2.

Proof 5.3.

Without loss of generality, only the first-stage state is analyzed here. The proof for the other stages is similar and is hence omitted.

Replacing with in (7) yields

| (13) |

Let . Subtracting (13) from (10) leads to

| (14) |

Applying (8) recursively gives

| (15) |

Similarly, applying (10) recursively yields

| (16) |

Subtracting (16) from (15) leads to

| (17) |

It follows from (14) and (17) that

| (18) |

For the reference signals, define . It then follows that

| (19) |

and

| (20) |

Using (18) and (20), it follows from (10) that

| (21) |

Since and are independent of at time , taking mathematical expectation on both sides of (21) yields

| (22) |

It then follows from (2) that , which together with (22) leads to

Let

It follows that

| (23) |

Define

Eq. (23) can be written as

| (24) |

Using (14) and (19), Eq. (24) can be rewritten in a compact form as

| (25) |

where

| (26) |

Taking mathematical expectation on both sides of (4) leads to

Based on Assumption 1 and (12), one has

| (27) |

which further leads to .

Due to (27), as , Eq. (25) becomes

| (28) |

It follows that the system (28) is asymptotically stable if and only if all the eigenvalues of the matrix lie within the unit circle. Furthermore, (26) suggests that the eigenvalues of the matrix coincide with the eigenvalues of the matrices , and .

For the matrices and , by choosing , all the eigenvalues of the two matrices are within the unit circle. For the matrix , it follows from (3) that . Denote the th eigenvalue of by . According to the Gerschgorin disc theorem, the expected Laplacian matrix has all its eigenvalues located within , where is the maximum degree of the expected graph . It thus follows that

Since is a symmetric matrix, the matrix is symmetric. Consequently, all the eigenvalues are real and are given by

| (29) |

Since and , it follows that the eigenvalues of (29) satisfy . As all eigenvalues of are within the unit circle, the dynamical system (28) is asymptotically stable.

Let denote the expected steady state. That is,

where , represents the expected steady state at stage of agent . As the asymptotic convergence of (28) is ensured, is well defined. The system (28) is asymptotically stable, i.e., , , as . As a result,

| (30) |

It follows from (17) and (19) that and as , which further leads (18) and (20) to

| (31) |

Eq. (11) gives

| (32) |

Using (27), (30) and (31), it follows from (32) that

The expected steady-state equilibrium at the first stage is then given by

| (33) |

For the remaining stages, it can be shown similarly that

| (34) |

where , represents the expected steady state at stage and . Combining (33) and (34) gives

The proof is thus completed.

The main result of this section is the following theorem.

Theorem 5.4.

Proof 5.5.

On the one hand, Theorem 5.4 shows the possibility of achieving mean-squared DAT in the presence of input delay, reference noise, and packet-drops. As the stage number goes to infinity, the tracking error will approach zero, i.e, the control objective (5) will be achieved. On the other hand, a larger stage number will induce a higher communication/computation cost for the agents, indicating that there exists trade-off between the tracking error and communication/computation cost.

6 Simulation

In this section, a numerical example is presented to verify Theorem 5.4.

A system consisting of nodes is considered. The communication network topology is given in Fig. 2.

Assume that the packet-drop probability , . The actual network topology can vary from one of the eight network topologies shown in Fig. 3 due to packet-drops.

Their corresponding Laplacian matrices are given respectively by

| (44) | ||||||

| (53) | ||||||

| (62) | ||||||

| (71) |

The expected Laplacian matrix is given by

Fig. 4 shows the expected network topology , the network topology corresponding to .

The reference signals are governed by (4), with and . Finally, the parameters are chosen as , , and . The input delay is set to .

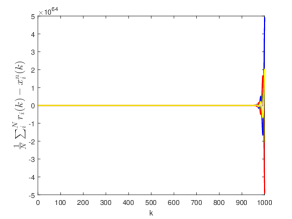

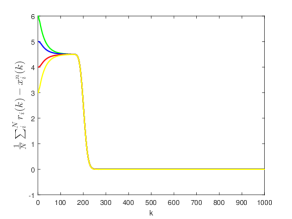

Fig. 5 shows the tracking error under the proposed algorithm embedded in system (10) without employing the Kalman filter and state predictor to handle the input delays, packet-drops, and noisy reference signals. It can be observed that the system cannot track the average of the reference signals; instead, the tracking error diverges eventually.

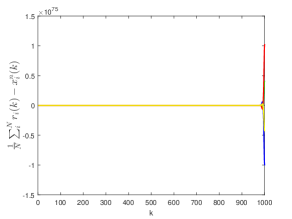

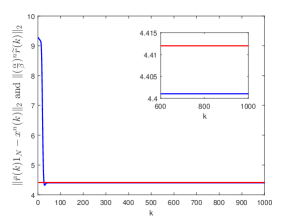

Fig. 6 shows the tracking error of the proposed DAT algorithm with the Kalman filter and state predictor for different stage number . It can be seen that in both cases the tracking error will finally reach a steady value. Furthermore, as the stage number gets larger, the tracking error gets smaller, which is consistent with Theorem 5.4.

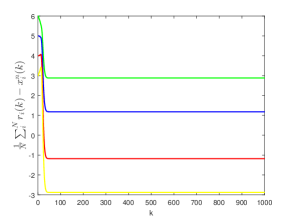

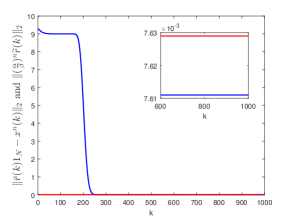

Fig. 7 shows respectively the trajectories of and . The red line corresponds to the trajectory of , while the blue line to the trajectory of . It can be seen that as , the inequality holds eventually.

7 Conclusion

This paper demonstrates that DAT algorithms can be seriously hampered by reference noise, packet-drops, and input delays; however, it is still possible to achieve practical DAT by employing appropriate control techniques, such as Kalman filtering and predictive control, to deal with those negative effects.

In summary, the following statements are drawn from this work.

-

•

An explicit expression of the expected stationary states of the agents is obtained and given in terms of the expected values of the references, which also depends on the control gains as well as the number of processing stages.

-

•

The mean-squared tracking error is ultimately upper bounded by the average difference among the reference signals, and as the number of stages goes to infinity, the tracking error will varnish, achieving practical DAT in the sense of mean square.

These results shed new lights on the studies of distributed average tracking and cooperative control of multi-agent systems.

References

- [1] C. Chen and F. Chen, “Discrete-time distributed average tracking for noisy reference signals,” in 2019 Chinese Control Conference (CCC). IEEE, 2019, pp. 5405–5410.

- [2] D. P. Spanos, R. Olfati-Saber, and R. M. Murray, “Distributed sensor fusion using dynamic consensus,” in Proceedings of the 16th IFAC World Congress, 2005, paper code. We-E04-TP/3.

- [3] R. Olfati-Saber and J. S. Shamma, “Consensus filters for sensor networks and distributed sensor fusion,” in Proceedings of the 44th IEEE Conference on Decision and Control (CDC), 2005, pp. 6698–6703.

- [4] H. Bai, R. A. Freeman, and K. M. Lynch, “Distributed kalman filtering using the internal model average consensus estimator,” in Proceedings of the American Control Conference (ACC), 2011, pp. 1500–1505.

- [5] S. Rahili and W. Ren, “Distributed continuous-time convex optimization with time-varying cost functions,” IEEE Transactions on Automatic Control, vol. 62, no. 4, pp. 1590–1605, 2017.

- [6] P. Yang, R. A. Freeman, and K. M. Lynch, “Multi-agent coordination by decentralized estimation and control,” IEEE Transactions on Automatic Control, vol. 53, no. 11, pp. 2480–2496, 2008.

- [7] Y. Sun and M. Lemmon, “Swarming under perfect consensus using integral action,” in Proceedings of the American Control Conference(ACC), 2007, pp. 4594–4599.

- [8] F. Chen and W. Ren, “A connection between dynamic region-following formation control and distributed average tracking,” IEEE transactions on cybernetics, vol. 48, no. 6, pp. 1760–1772, 2017.

- [9] F. Chen and J. Chen, “Minimum-energy distributed consensus control of multi-agent systems: A network approximation approach,” IEEE Transactions on Automatic Control, to be published. [Online]. Available: https://doi.org/10.1109/TAC.2019.2917279

- [10] J. Mei, W. Ren, and J. Chen, “Distributed consensus of second-order multi-agent systems with heterogeneous unknown inertias and control gains under a directed graph,” IEEE Transactions on Automatic Control, vol. 61, no. 8, pp. 2019–2034, 2015.

- [11] D. P. Spanos, R. Olfati-Saber, and R. M. Murray, “Dynamic consensus on mobile networks,” in Proceedings of the 16th IFAC world congress, 2005, paper code. We-A18-TO/1.

- [12] R. A. Freeman, P. Yang, and K. M. Lynch, “Stability and convergence properties of dynamic average consensus estimators,” in Proceedings of the 45th IEEE Conference on Decision and Control (CDC), 2006, pp. 338–343.

- [13] H. Bai, R. A. Freeman, and K. M. Lynch, “Robust dynamic average consensus of time-varying inputs,” in Proceedings of the 49th IEEE Conference on Decision and Control (CDC), 2010, pp. 3104–3109.

- [14] H. Bai, “A two-time-scale dynamic average consensus estimator,” in Proceedings of the 55th IEEE Conference on Decision and Control (CDC), 2016, pp. 75–80.

- [15] F. Chen, Y. Cao, and W. Ren, “Distributed average tracking of multiple time-varying reference signals with bounded derivatives,” IEEE Transactions on Automatic Control, vol. 57, no. 12, pp. 3169–3174, 2012.

- [16] S. Ghapani, W. Ren, F. Chen, and Y. Song, “Distributed average tracking for double-integrator multi-agent systems with reduced requirement on velocity measurements,” Automatica, vol. 81, pp. 1–7, 2017.

- [17] F. Chen, W. Ren, W. Lan, and G. Chen, “Distributed average tracking for reference signals with bounded accelerations,” IEEE Transactions on Automatic Control, vol. 60, no. 3, pp. 863–869, 2015.

- [18] Y. Zhao, Y. Liu, Z. Li, and Z. Duan, “Distributed average tracking for multiple signals generated by linear dynamical systems: An edge-based framework,” Automatica, vol. 75, pp. 158–166, 2017.

- [19] F. Chen, G. Feng, L. Liu, and W. Ren, “Distributed average tracking of networked euler-lagrange systems,” IEEE Transactions on Automatic Control, vol. 60, no. 2, pp. 547–552, 2014.

- [20] Y. Zhao, Y. Liu, G. Wen, X. Yu, and G. Chen, “Distributed average tracking for lipschitz-type of nonlinear dynamical systems,” IEEE transactions on cybernetics, vol. 49, no. 12, pp. 4140–4152, 2018.

- [21] B. Van Scoy, R. A. Freeman, and K. M. Lynch, “Optimal worst-case dynamic average consensus,” in Proceedings of the American Control Conference (ACC), 2015, pp. 5324–5329.

- [22] F. R. A. Van Scoy, Bryan and K. M. Lynch, “Exploiting memory in dynamic average consensus,” in Proceedings of the 53rd Annual Allerton Conference on Communication, Control, and Computing (Allerton), 2015, pp. 258–265.

- [23] Y. Yuan, J. Liu, R. M. Murray, and J. Gonçalves, “Decentralised minimal-time dynamic consensus,” in Proceedings of the American control conference (ACC), 2012, pp. 800–805.

- [24] S. S. Kia, J. Cortés, and S. Martinez, “Dynamic average consensus under limited control authority and privacy requirements,” International Journal of Robust and Nonlinear Control, vol. 25, no. 13, pp. 1941–1966, 2015.

- [25] C. Li, H. Xin, J. Wang, M. Yu, and X. Gao, “Dynamic average consensus with topology balancing under a directed graph,” International Journal of Robust and Nonlinear Control, vol. 29, no. 10, pp. 3014–3026, 2019.

- [26] S. Li and Y. Guo, “Dynamic consensus estimation of weighted average on directed graphs,” International Journal of Systems Science, vol. 46, no. 10, pp. 1839–1853, 2015.

- [27] E. Montijano, J. I. Montijano, C. Sagüés, and S. Martínez, “Robust discrete time dynamic average consensus,” Automatica, vol. 50, no. 12, pp. 3131–3138, 2014.

- [28] M. Franceschelli and A. Gasparri, “Multi-stage discrete time and randomized dynamic average consensus,” Automatica, vol. 99, pp. 69–81, 2019.

- [29] S. S. Kia, B. Van Scoy, J. Cortes, R. A. Freeman, K. M. Lynch, and S. Martinez, “Tutorial on dynamic average consensus: The problem, its applications, and the algorithms,” IEEE Control Systems Magazine, vol. 39, no. 3, pp. 40–72, 2019.

- [30] H. Moradian and S. S. Kia, “On robustness analysis of a dynamic average consensus algorithm to communication delay,” IEEE Transactions on Control of Network Systems, vol. 6, no. 2, pp. 633–641, 2018.

- [31] S.-I. Niculescu, “On delay robustness analysis of a simple control algorithm in high-speed networks,” Automatica, vol. 38, no. 5, pp. 885–889, 2002.

- [32] T. Karvonen, “Stability of linear and non-linear kalman filters,” Master’s thesis, University of Helsinki, 2014.

- [33] W.-M. Zhou and J.-W. Xiao, “Dynamic average consensus and consensusability of general linear multiagent systems with random packet dropout,” Abstract and Applied Analysis, vol. 2013, 2013, article no. 412189.