Degree assortativity in networks of spiking neurons

Abstract.

Degree assortativity refers to the increased or decreased probability of connecting two neurons based on their in- or out-degrees, relative to what would be expected by chance. We investigate the effects of such assortativity in a network of theta neurons. The Ott/Antonsen ansatz is used to derive equations for the expected state of each neuron, and these equations are then coarse-grained in degree space. We generate families of effective connectivity matrices parametrised by assortativity coefficient and use SVD decompositions of these to efficiently perform numerical bifurcation analysis of the coarse-grained equations. We find that of the four possible types of degree assortativity, two have no effect on the networks’ dynamics, while the other two can have a significant effect.

Key words and phrases:

1. Introduction

Our nervous system consists of a vast network of interconnected neurons. The network structure is dynamic and connections are formed or removed according to their usage. Much effort has been put into creating a map of all neuronal interconnections; a so-called brain atlas or connectome. Given such a network there are many structural features and measures that one can use to characterise it, e.g. betweenness, centrality, average path-length and clustering coefficient [27].

Obtaining these measures in actual physiological systems is challenging to say the least; nevertheless, insights into intrinsic connectivity preferences of neurons were observed via their growth in culture [8, 35]. Neurons with similar numbers of processes (e.g., synapses and dendrites) tend to establish links with each other – akin to socialites associating in groups and vice-versa. Such an assortativity, typically referred to as a positive assortativity, or a tendency of elements with similar properties to mutually connect, emerges as a strong preference throughout the stages of the cultured neuron development. Furthermore, this preferential attachment between highly-connected neurons is suggested to fortify the neuronal network against disruption or damage [35]. Moreover, a similar positive assortativity is inferred in human central nervous systems as well [8] at both a structural and functional level, where a central “core” in the human cerebral cortex may be the basis for shaping overall brain dynamics [14]. It seems that in no instance, however, is the directional flow of information (e.g., from upstream neuron via axon to synapse and downstream neuron) observed – either in culture or in situ.

Little is known about if and how this positive assortativity or its negative analogue (a tendency for highly connected elements to link with sparsely connected – akin to a “hub-and-spoke” system) influences the dynamics of the neurons networked together. We here consider these effects of assortativity with a network of idealised mathematical neurons known as theta neurons, and represent their connections of downstream (outbound) and upstream (inbound) synapses in a directed graph of nodes and edges. Each neuron then is a node with in- and out-degrees depicting the number of such connections within the network. Assortativity in this context refers to the probability that a neuron with a given in- and out-degree connects to another neuron with a given in- and out-degree. If this probability is what one would expect by chance, given the neurons’ degrees (and this is the case for all pairs of neurons), the network is referred to as neutrally assortative. If the probability is higher (lower) than one would expect by chance — for all pairs — the network is assortative (disassortative). Interchangeably, we will use the term postive assortativity (negative assortativity).

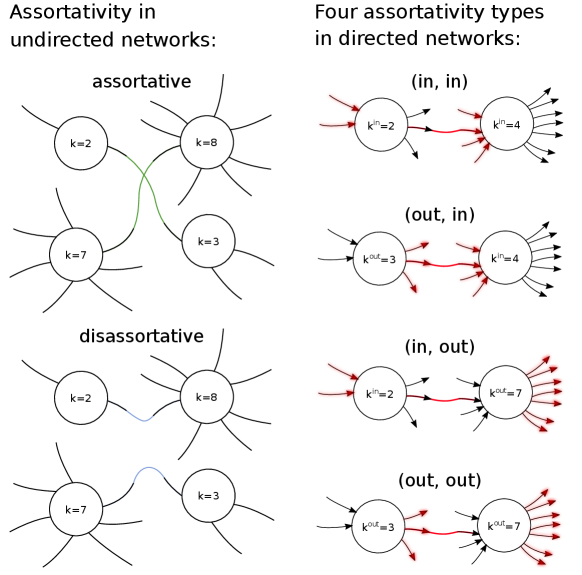

Assortativity has often been studied in undirected networks, where a node simply has a degree, rather than in- and out-degrees (the number of connections to and from a node, respectively) [30, 27, 26]. Since neurons form directed connections, there are four types of assortativity to consider [12]: between either the in- or out-degree of a presynaptic neuron, and either the in- or out-degree of a postsynaptic neuron (Figure 1).

We are aware of only a small number of previous studies in this area [31, 16, 1, 7]. Kähne et al. [16] considered networks with equal in- and out-degrees and investigated degree assortativity, effectively correlating both in- and out-degrees of pre- and post-synaptic neurons. They mostly considered networks with discrete time and a Heaviside firing rate, i.e. a McCulloch-Pitts model [24]. They found that positive assortativity created new fixed points of the model dynamics. Schmeltzer et al. [31] also consider networks with equal in- and out-degrees and investigated degree assortativity. These authors considered leaky integrate-and-fire neurons and derived approximate self-consistency equations governing the steady state neuron firing rates. They found, among other things, that positive assortativity increased the firing rates of high-degree neurons and decreased that of low-degree ones. Positive assortativity also seemed to make the network more capable of sustained activity when the external input to the network was low. De Fransciscis et al. [7] considered assortative mixing of a field of binary neurons, or Hopfield networks. They concluded that assortativity of such simple model neurons exhibited associative memory (similar to bit fields of a magnetic storage medium), and robustly so in the presence of noise that negatively assortative networks failed to withstand. Avalos-Gaytan et al. [1] considered the effects of dynamic weightings between Kuramoto oscillators — effectively a dynamically evolving network — on assortativity. They observed that if the strength of connections between oscillators increased when they were synchronised, a strong positive assortativity evolved in the network, suggesting a potential mechanism for the creation of assortative networks, as observed in cultured neurons mentioned above, and as we study here.

To briefly summarise our results, we find that only two out of the four types of degree assorativity have any influence on the network’s dynamics: those when the in-degree of a presynaptic neuron is correlated with either the in- or out-degree of a postsynaptic neuron. Of these two, (in,in)-assortativity has a greater effect than (in,out)-assortativity. For both cases, negative assortativity widens the parameter range for which the network is bistable (for excitatory coupling) or undergoes oscillations in mean firing rate (for inhibitory coupling), and positive assortativity has the opposite effect.

Our work is similar in some respects to that of Restrepo and Ott [30] who considered degree assortativity in a network of Kuramoto-type phase oscillators. They found that for positive assortativity, as the strength of connections between oscillators was increased the network could undergo bifurcations leading to oscillations in the order parameter, in contrast to the usual scenario that occurs for no assortativity. However, their network was undirected, and thus there is only one type of degree assortativity possible.

The outline of the paper is as follows. In Sec. 2 we present the model and then derive several approximate descriptions of its dynamics. In Sec. 3 we describe the method for creating networks with prescribed types of degree assortativity, and in Sec. 4 we discuss aspects of the numerical implementation of the reduced model. Results are given in Sec. 5 and we conclude with a discussion in Sec. 6. Appendix A contains the algorithms we use to generate networks with prescribed assortativity.

2. Model description and simplifications

We consider a network of theta neurons:

| (1) |

for where

| (2) |

is a constant current entering the th neuron, randomly chosen from a distribution , is strength of coupling, is mean degree of the network, and the connectivity of the network is given by the adjacency matrix , where if neuron connects to neuron , and zero otherwise. The connections within the network are either all excitatory (if ) or inhibitory (if ). Thus we do not consider the more realistic and general case of a connected population of both excitatory and inhibitory neurons, although it would be possible using the framework below.

The theta neuron is the normal form of a Type I neuron which undergoes a saddle-node on an invariant circle bifurcation (SNIC) as the input current is increased through zero [10, 11]. A neuron is said to fire when increases through , and the function

| (3) |

in (2) is meant to mimic the current pulse injected from neuron to any postsynaptic neurons when neuron fires. is a normalisation constant such that independent of .

The in-degree of neuron is defined as the number of neurons which connect to it, i.e.

| (4) |

while the out-degree of neuron is the number of neurons it connects to, i.e.

| (5) |

Since each edge connects two neurons, we can define the mean degree

| (6) |

Networks such as (1) have been studied by others [22, 3, 17], and note that under the transformation the theta neuron becomes the quadratic integrate-and-fire neuron with infinite thresholds [25, 21].

2.1. An infinite ensemble

As a first step we consider an infinite ensemble of networks with the same connectivity, i.e. the same , but in each member of the ensemble, the value of associated with the th neuron is randomly chosen from the distribution [2]. Thus we expect a randomly chosen member of the ensemble to have values of in the ranges

| (7) | ||||

with probability . The state of this member of the ensemble is described by the probability density

| (8) |

which satisfies the continuity equation

| (9) |

If we define the marginal distribution for the th neuron as

| (10) |

we can write

| (11) |

where we have now evaluated as an average over the ensemble rather than from a single realisation (as in (2)). This is reasonable in the limit of large networks [2].

Multiplying (9) by and integrating we obtain

| (12) |

A network of theta neurons is known to be amenable to the use of the Ott/Antonsen ansatz [29, 22, 17] so we write

| (13) |

The dependence on is written as a Fourier series where the th coefficient is the th power of a function . Substituting this into (12) and (11) we find that satisfies

| (14) |

and

| (15) |

where

| (16) |

where an overbar indicates complex conjugate, and

| (17) |

Assuming that is a Lorentzian:

| (18) |

we can use contour integration to evaluate the integral in (15), and evaluating (14) at the appropriate pole of we obtain

| (19) |

where

| (20) |

and , where the expected value is taken over the ensemble.

Now (19) is a set of coupled complex ODEs, so we have not simplified the original network (1) in the sense of decreasing the number of equations to solve. However, the states of interest are often fixed points of (19) (but not of (1)), and can thus be found and followed as parameters are varied. At this point the network we consider, with connectivity given by , is arbitrary. If was a circulant matrix, for example, this would represent a network of neurons on a circle, where the strength of coupling between neurons depends only on the distance between them [17].

2.2. Lumping by degree

The next step is to assume that for a large enough network, the dynamics of neurons with the same degrees will behave similarly [3]. Such an assumption has been made a number of times in the past [16, 15, 30]. We thus associate with each neuron the degree vector and assume that the value of for all neurons with a given are similar. There are distinct degrees where and are the number of distinct in- and out-degrees, respectively. We define to be the order parameter for neurons with degree , where , and now derive equations for the evolution of the .

Let be the vector of ensemble states , where and the degree index of neuron be , such that is its degree. We assume that for all neurons with the same degree the ensemble state is similar in sufficiently large networks and thus we only care about the mean value with . We say that degree occurs times and thus write

| (21) |

where the matrix has entries in row , each of value , at positions where and zeros elsewhere, i.e. with being the Kronecker delta.

To find the time derivative of we need to express in terms of , which we do with an matrix which assigns to the corresponding value, such that

| (22) |

with components . Note that , the identity matrix. Differentiating (21) with respect to time, inserting (19) into this and writing in terms of using (22) we obtain

| (23) |

Considering that for all there is only a single non-zero entry , equal to 1, the identity

| (24) |

holds for any power . Further we find that

| (25) |

Thus, the local term in (23) is

| (26) |

For the non-local term we write

| (27) | ||||

where describes the synaptic current of the ensemble equations averaged over nodes sharing the same degree . The identity (24) also applies to (20), so that

| (28) |

and the current can be written as

| (29) |

The effective connectivity between neurons with different degrees is therefore expressed in the matrix and we end up with equations governing the :

| (30) |

where

| (31) |

These equations are of the same form as (19)-(20) except that has been replaced by . Note that the connectivity matrix is completely general; we have only assumed that neurons with the same degrees behave in the same way. We are not aware of a derivation of this form being previously presented.

3. Network assembly

We are interested in the effects of degree assortativity on the dynamics of the network of neurons. We will choose a default network with no assortativity and then introduce one of the four types of assortativity and investigate the changes in the network’s dynamics. Our default network is of size neurons where in- and out-degrees for each neuron are independently drawn from the interval with probability (i.e. a power law, as found in [9] and used in [3]). We create networks using the configuration model [27], then modify them using algorithms which introduce assortativity and then remove multiple connections between nodes (or multi-edges) (described in Appendix A). Further, to observe any influence of multi-edges on the dynamics investigated here, we also developed a novel network assembly technique permitting introduction of very high densities of multi-edges also described in the Appendix; we refer to this novel technique as the “permutation” method. We choose as our default parameters , for which a default network approaches a stable fixed point. The sharpness of the synaptic pulse function is set to for all simulations.

We first check the validity of averaging over an infinite ensemble. We assemble 20 different default networks and for each, run (19)-(20) to a steady state and calculate the order parameter , the mean of . The real part of is plotted in orange in Fig. 2. For each of these networks we then generated 50 realisations of the ’s and ran (1)-(2) for long enough that transients had decayed, and then measured the corresponding order parameter for the network of individual neurons

| (32) |

and plotted its real part in blue in Fig. 2. Note that the orange circles always lie well within the range of values shown in blue. The fact that deviations within the 50 realisations are small relative to the value obtained by averaging over an infinite ensemble provide evidence for the validity of this approach, at least for these parameter values.

We also investigate the influence of multi-connections (i.e. more than one connection) between neurons on the network dynamics. The configuration model creates a network in which the neuron degrees are exactly those specified by the choice from the appropriate distribution, but typically results in both self-connections and multiple connections between neurons. We have an algorithm for systematically removing such connections while preserving the degrees, and found that removing such edges has no significant effect (results not shown). We also have an algorithm (see “Permutation Method” in Appendix A) for increasing the number of multi-edges from the number found using the default configuration model. This novel network assembly method meets specified neuron degrees and also produces specified densities of multi-connections ranging from none to 97%; see Fig. 3 for the results of such calculations. We see that only when the fraction of multi-edges approaches do we see a significant effect. However, in our simulations we use simple graphs without multi-edges.

3.1. Assortativity

For a given matrix we can measure its assortativity by calculating the four Pearson correlation coefficients with which read

| (33) |

where

| (34) | and |

being the number of connections and the leading superscript or refers to the sending or receiving neuron of the respective edge. For example the sending node’s in-degree of the second edge would be . Note that there are four mean values to compute.

We introduce assortativity by randomly choosing two edges and swapping postsynaptic neurons when doing so would increase the target assortativity coefficient [31]. An edge is directed from neuron to neuron . In order to know whether the pair and should be rewired or left untouched, we compare their contribution to the covariance in the numerator of (33):

| (35) | ||||

| (36) |

If we replace the edges and by and , respectively, otherwise we do not, and continue by randomly choosing another pair of edges. Algorithm 1 (see Appendix A) demonstrates a scheme for reaching a certain target assortativity coefficient.

We investigate the effects of different types of assortativity (see Fig 1) in isolation. We thus need a family of networks parametrised by the relevant assortativity coefficient. Algorithm 1 is used to create a network with a specific value of one of the assortativity coefficients, but especially for high values of assortativity it may be that in doing so a small amount of assortativity of a type other than the intended one is introduced. Accordingly, it may be necessary to examine all types of assortativity and apply the mixing scheme to reduce other types back to zero, and then (if necessary) push the relevant value of assorativity back to its target value. We do multiple iterations of these mixing rounds until all assortativities are at their target values (which may be 0) within a range of . We use Algorithm 1 with a range of target assortativities , and for each value, store the connectivity matrix and thus form the parametrised family . We do this for the four types of assortativity.

We have chosen to use the configuration model to create networks with given degree sequences and then introduced assortativity by swapping edges. Although we developed our novel “permutation” method as well (see Appendix A), that method was designed for assembling adjacency matrices with desired multi-edge densities and was applied only for that aspect. By contrast, another common adjacency network assembly technique, that of Chung and Lu [5] together with an analytical expression for assortativity (as in [3]), proved inadequate. We found that the latter approach significantly alters the degree distribution for large assortativity, whereas the configuration model combined with our mixing algorithm does not change degrees at all. For our default network this approach allows us to introduce assortativity of any one kind up to .

4. Implementation

For networks of the size we investigate it is impractical to consider each distinct in- and out-degree (because will be very large and sparse). Due to the smoothness of the degree dependency of we coarse-grain in degree space by introducing “degree clusters” — lumping all nodes with a range of degrees into a group with dynamics described by a single variable. Let there be clusters in in-degree and clusters in out-degree, with a total of degree clusters. The matrix then is an matrix and constructed as previously, except that is not the degree index of neuron , but the cluster index and is the cluster index running from 1 to . Similarly for the matrix . There are multiple options for how to combine degrees into a cluster. The cluster index of a neuron can be computed linearly, corresponding to clusters of equal size in degree space. However, with this approach, depending on the degree distribution, some of the clusters may be empty or hardly filled, resulting in poor statistics. To overcome this issue, the cumulative sum of in- and out-degree distribution can be used to map degrees to cluster indices. Thus, clusters are more evenly filled and at the same time regions of degree space with high degree probability are more finely sampled. The dynamical equations (30)-(31) are equally valid for describing degree cluster dynamics with and , where and are cluster versions of their previous definitions.

To check the effect of varying the number of clusters we generate 20 default matrices and then generate the corresponding matrix with varying numbers of clusters ( and are equal), then run (30)-(31) to a steady state and plot the real part of in Fig. 4. We see that the order parameter is well approximated using as little as about degree clusters. Beyond that, fluctuations between different network realisations exceed the error introduced by clustering. In our simulations we stick to the choice of 10 degree clusters per in- and out-degree space.

Having performed this clustering, we find that it is possible to represent using a low-rank approximation, calculated using singular value decomposition. Thus for a fixed we have

| (37) |

where is a diagonal matrix with decreasing entries, called singular values, and and are unitary matrices. In Fig. 5 we plot the largest 6 singular values of as function the assortativity coefficient, for the 4 types of assortativity. Even for large the singular values decay very quickly, thus a low-rank approximation is possible. We choose a rank-3 approximation, so approximate by

| (38) |

where is the th column of , similarly for and , and is the th singular value. We have such a decomposition at discrete values of and use cubic interpolation to evaluate for any . This decomposition means that the multiplication in (31) can be evaluated quickly using 3 columns of and rather than the full matrix .

We note that the components for the approximation of are calculated once and then stored, making it very easy to systematically investigate the effects of varying any of the parameters and (governing the sharpness of the pulse function (3)).

5. Results

5.1. Excitatory coupling

We take to model a network with only excitatory connections. To study the dynamical effect of assortativity we generate positive and negative () assortative networks of the four possible kinds and follow fixed points of (30)-(31) as a function of , and compare results with a neutral () network. We use pseudo-arc-length continuation [18, 13].

To calculate the mean frequency over the network we evaluate and then use the result that if the order parameter at a node is , then the frequency of neurons at that node is [19, 25]

| (39) |

Averaging these gives the mean frequency.

Results are shown in Figure 6, where we see quite similar behaviour in each case: apart from a bistable region containing two stable and one unstable fixed point, there is only a single stable fixed point present. Further, the two assortativity types (out,in) and (out,out) apparently do not affect the dynamics, whereas the saddle-node bifurcations marking the edges of the bistable region move slightly for (in,out) and significantly for (in,in) assortativity. Following the saddle-node bifurcations for the latter two cases we find the results shown in Figure 7. We have performed similar calculations for different networks with the same values of assortativity and found similar results.

5.2. Inhibitory coupling

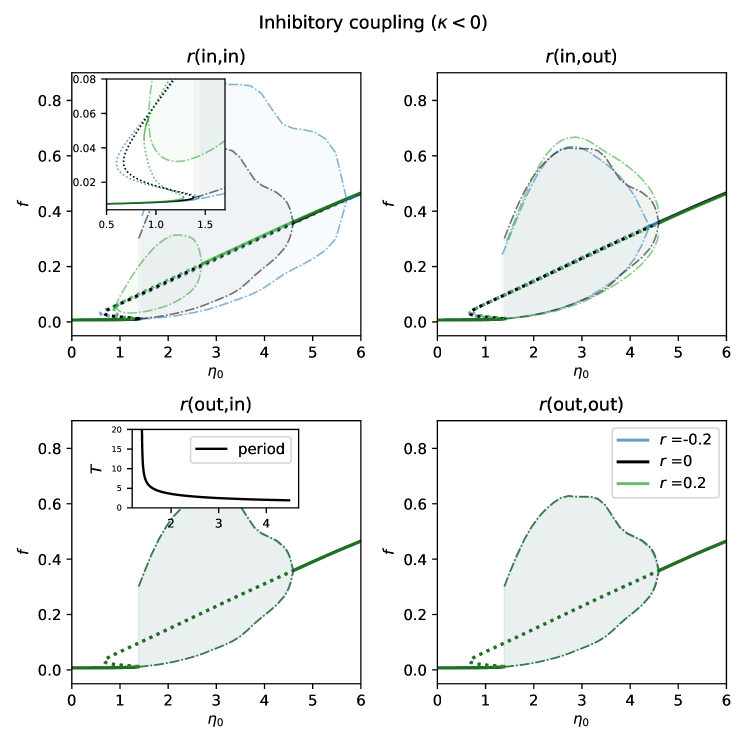

We choose to model a network with only inhibitory coupling. Again, we numerically continue fixed points for zero, positive and negative assortativity () as is varied and obtain the curves shown in Figure 8. Consider the lower left plot. For large the system has a single stable fixed point which undergoes a supercritical Hopf bifurcation as is decreased, creating a stable periodic orbit. This periodic orbit is destroyed in a saddle-node bifurcation on an invariant circle (SNIC) bifurcation at lower , forcing the oscillations to stop. Decreasing further, two unstable fixed points are destroyed in a saddle-node bifurcation. In contrast with the case of excitatory coupling, oscillations in the average firing rate are seen. These can be thought of as partial synchrony, since some fraction of neurons in the network have the same period and fire at similar times to cause this behaviour. The period of this macroscopic oscillation tends to infinity as the SNIC bifurcation is approached, as shown in the inset of the lower left panel in Fig. 8.

As in the excitatory case, we see that assortativities of type (out,in) and (out,out) have no influence on the dynamics in this scenario. However, type (in,out) does have a small effect, slightly moving bifurcation points (top right panel in Fig. 8). Type (in,in) has the strongest effect, resulting in a qualitative change in the bifurcation scenario for large enough assortativity: there is a region of bistability between either two fixed points or a fixed point and a periodic orbit. This is best understood by following the bifurcations in the top panels of Fig. 8 as is varied, as shown in Figure 9. There is one fixed point in regions A, B and D, and three in region C. For (in,out) assortativity there is a stable periodic orbit in region B and never any bistability.

We now describe the case for (in,in) assortativity. For negative and zero the scenario is the same as for the other three types, but as is increased there is a Takens-Bogdanov bifurcation where regions C,D,E and F meet, leading to the creation of a curve of homoclinic bifurcations, which is destroyed at another codimension-two point where there is a homoclinic connection to a non-hyperbolic fixed point [4]. There are stable oscillations in region E, created or destroyed in supercritical Hopf or homoclinic bifurcations. In region F there is bistability between two fixed points.

6. Discussion

We investigated the effects of degree assortativity on the dynamics of a network of theta neurons. We used the Ott/Antonsen ansatz to derive evolution equations for an order parameter associated with each neuron, and then coarse-grained by degree and then degree cluster, obtaining a relatively small number of coupled ODEs, whose dynamics as parameters varied could be investigated using numerical continuation. We found that degree assortativity involving the out-degree of the sending neuron, i.e. (out,in) and (out,out), has no effect on the networks’ dynamics. Further, (in,out) assortativity moves bifurcations slightly, but does not lead to substantial differences in dynamical behaviour. The most significant effects were caused by creating correlation between in-degrees of the sending and receiving neurons. For our excitatorially coupled example, positive (in,in) assortativity narrows the bistable region, whereas negative assortativity widens it (see Fig. 7). In the inhibitory case introducing negative assortativity increased the amplitude of network oscillations and extended their range to slightly larger . On the contrary, positive (in,in) assortativity in this network has an opposite effect and eventually stops oscillations (see Fig. 9).

The most similar work to ours is that of [3]. These authors also considered a network of the form (1)-(2) and by assuming that the dynamics depend on only a neuron’s degree and that the are chosen from a Lorentzian, and using the Ott/Antonsen ansatz, they derived equations similar to (30)-(31). The difference in formulations is that rather than a sum over entries of (in (31)), [3] wrote the sum as

| (40) |

where is the degree distribution and is the assortativity function, which specifies the probability of a link from a node with degree to one with degree (given that such neurons exist). They then chose a particular functional form for and briefly presented the results of varying one type of assortativity (between and ). In contrast, our approach is far more general (since any connectivity matrix can be reduced to the corresponding , the only assumption being that the dynamics are determined by a neuron’s degree). We also show the results of a wider investigation into the effects of assortativity.

This alternative presentation also explains why can be well approximated with a low-rank approximation. If the in- and out-degrees of a single neuron are independent, , and with neutral assortativity, . Thus

| (41) |

This term contributes to the input current to a neuron with degree , but is independent of . Thus the state of a neuron depend only on its in-degree, so

| (42) |

Comparing with (31) we see that where and , i.e. is a rank-one matrix. Varying assortativity within the network is then a perturbation away from this, with the effects appearing in the second (and third) singular values in the SVD decomposition of .

A limitation of our study is that we considered only networks of fixed size with the same distributions of in- and out-degrees, and a specific distribution of these degrees. However, our approach does not rely on this and could easily be adapted to consider other types of networks, although we expect it to become less valid as both the average degree and number of neurons in the network decrease. We have also only considered theta neurons, but since a theta neuron is the normal form of a Type I neuron, we expect similar networks of other Type I neurons to behave similarly to the networks considered here. The approach presented here could also be used to efficiently investigate the effects of correlated heterogeneity, where either the mean or width of the distribution of the is correlated with a neuron’s in- or out-degree [33, 34, 6]. We could also consider assortativity based on a neuron’s intrinsic drive () [32] rather than its degrees, or correlations between an individual neuron’s in- and out-degree [36, 20, 23, 37, 28]. We are currently investigating such ideas.

Acknowledgements: This work is partially supported by the Marsden Fund Council from Government funding, managed by Royal Society Te Apārangi. We thank the referees for their helpful comments.

Appendix A Algorithms

We present here the algorithms developed and utlised for adjacency matrix assembly and modification. These include modifications to resulting adjacency matrices produced by the familiar configuration method, and our novel matrix assembly technique we christen the “permutation” method.

A.1. Assortativity

Algorithm 1 is used to create a network with a specified degree distribution and values of the four different assortativity coefficients.

Randomly pair up all edges of the network with adjacency matrix and reconnect them at once where preferable with respect to target assortativity . Repeat the process until the assortativity coefficient lies within the tolerance. Once overshooting the target coefficient, interpolate the length of a shortened list of edge pairs and reconnect those.

A.2. Permutation Method

The well-known configuration model for generating adjacency matrices [27] typically includes auto-connections and multiple edge connections with no control over their appearance or proportion, often forcing post-processing removal if none are desired. We developed a novel adjacency matrix assembly technique that, given predefined sequences of in- and out-degrees, permits designating not only whether multiple edges appear but also their proportion — with no post-processing required. Additionally, auto-connections can be included or omitted without manipulating the resulting . These ’s exhibit generally neutral assortativities over all types with exceptions emerging for the highest multi-edge densities we assembled: e.g., 98-99% multi-edges exceed our target neutral assortativity range of , so for purposes of this study these were discarded.

This permutation method is a two-phase approach requiring only two sequences of in- and out-degrees, or and , respectively, and a target multiple edge connection density, . We describe this technique briefly here, and with more detail in a subsequent companion publication. These phases are as follows:

-

(1)

Generate an initial matrix, designated , with each node’s inbound edge counts (row sums) satisfying , yet ignoring . Each row of is filled with nonzero entries comprised of solo- and multiple-edge connections whose sum is for each node. Remaining entries along each row are simply filled with zeros out to the column. This resulting thus adheres to the designated and , but violates : all the column sums are incorrect (see Fig. (10)).

-

(2)

Randomly permute each row of , distributing the non-zero entries of solo- and multiple-edge connections into a first sequence of permuted matrices, . Calculate an error distance for from the designated via . Each entry in is used to classify nodes: too many out-bound edges (“donor” nodes), those with too few (“recipient” nodes) and those at their target out-degree (“inert” nodes). We then loop over all the donor nodes, randomly pick a recipient node and exchange edges from the donor to the recipient — if suitable. After each exchange, we update the current permuted matrix, , its corresponding error distance, , and repeat the process until this error is zero. The final matrix, , after updates, then satisfies all the designated characteristics if we performed suitable edge exchanges along the way.

Pseudo-code for generating initial permutation matrix satisfying and yet violating .

Pseudo-code for permuting initial matrix into final matrix satisfying all constraints, , and .

References

- [1] V. Avalos-Gayt n, J.A. Almendral, D. Papo, S.E. Schaeffer, and S. Boccaletti. Assortative and modular networks are shaped by adaptive synchronization processes. Physical Review E - Statistical, Nonlinear, and Soft Matter Physics, 86(1), 2012. cited By 17.

- [2] Gilad Barlev, Thomas M Antonsen, and Edward Ott. The dynamics of network coupled phase oscillators: An ensemble approach. Chaos, 21(2):025103, 2011.

- [3] Sarthak Chandra, David Hathcock, Kimberly Crain, Thomas M. Antonsen, Michelle Girvan, and Edward Ott. Modeling the network dynamics of pulse-coupled neurons. Chaos, 27(3):033102, 2017.

- [4] Shui-Nee Chow and Xiao-Biao Lin. Bifurcation of a homoclinic orbit with a saddle-node equilibrium. Differential and Integral Equations, 3:435–466, 01 1990.

- [5] Fan Chung and Linyuan Lu. Connected components in random graphs with given expected degree sequences. Annals of combinatorics, 6(2):125–145, 2002.

- [6] BC Coutinho, AV Goltsev, SN Dorogovtsev, and JFF Mendes. Kuramoto model with frequency-degree correlations on complex networks. Physical Review E, 87(3):032106, 2013.

- [7] S. De Franciscis, S. Johnson, and J.J. Torres. Enhancing neural-network performance via assortativity. Physical Review E - Statistical, Nonlinear, and Soft Matter Physics, 83(3), 2011. cited By 19.

- [8] Daniel de Santos-Sierra, Irene Sendiña-Nadal, Inmaculada Leyva, Juan A Almendral, Sarit Anava, Amir Ayali, David Papo, and Stefano Boccaletti. Emergence of small-world anatomical networks in self-organizing clustered neuronal cultures. PloS one, 9(1):e85828, 2014.

- [9] Victor M. Eguíluz, Dante R. Chialvo, Guillermo A. Cecchi, Marwan Baliki, and A. Vania Apkarian. Scale-free brain functional networks. Phys. Rev. Lett., 94:018102, Jan 2005.

- [10] Bard Ermentrout. Type I membranes, phase resetting curves, and synchrony. Neural Computation, 8(5):979–1001, 1996.

- [11] G B Ermentrout and N Kopell. Parabolic bursting in an excitable system coupled with a slow oscillation. SIAM Journal on Applied Mathematics, 46(2):233–253, 1986.

- [12] Jacob G. Foster, David V. Foster, Peter Grassberger, and Maya Paczuski. Edge direction and the structure of networks. PNAS USA, 107(24):10815–10820, 2010.

- [13] Willy JF Govaerts. Numerical methods for bifurcations of dynamical equilibria, volume 66. Siam, 2000.

- [14] P. Hagmann, L. Cammoun, X. Gigandet, R. Meuli, C.J. Honey, J. Van Wedeen, and O. Sporns. Mapping the structural core of human cerebral cortex. PLoS Biology, 6(7):1479–1493, 2008. cited By 2374.

- [15] Takashi Ichinomiya. Frequency synchronization in a random oscillator network. Physical Review E, 70(2):026116, 2004.

- [16] M Kähne, IM Sokolov, and S Rüdiger. Population equations for degree-heterogenous neural networks. Physical Review E, 96(5):052306, 2017.

- [17] C. R. Laing. Derivation of a neural field model from a network of theta neurons. Physical Review E, 90(1):010901, 2014.

- [18] C. R. Laing. Numerical bifurcation theory for high-dimensional neural models. The Journal of Mathematical Neuroscience, 4(1):1, 2014.

- [19] C. R. Laing. Exact neural fields incorporating gap junctions. SIAM Journal on Applied Dynamical Systems, 14(4):1899–1929, 2015.

- [20] M Drew LaMar and Gregory D Smith. Effect of node-degree correlation on synchronization of identical pulse-coupled oscillators. Physical Review E, 81(4):046206, 2010.

- [21] Peter E Latham, BJ Richmond, PG Nelson, and S Nirenberg. Intrinsic dynamics in neuronal networks. i. theory. Journal of neurophysiology, 83(2):808–827, 2000.

- [22] Tanushree B Luke, Ernest Barreto, and Paul So. Complete classification of the macroscopic behavior of a heterogeneous network of theta neurons. Neural Computation, 25:3207–3234, 2013.

- [23] Marijn B Martens, Arthur R Houweling, and Paul HE Tiesinga. Anti-correlations in the degree distribution increase stimulus detection performance in noisy spiking neural networks. Journal of Computational Neuroscience, 42(1):87–106, 2017.

- [24] Warren S. McCulloch and Walter Pitts. The statistical organization of nervous activity. Biometrics, 4(2):91–99, 1948.

- [25] Ernest Montbrió, Diego Pazó, and Alex Roxin. Macroscopic description for networks of spiking neurons. Physical Review X, 5(2):021028, 2015.

- [26] M. E. J. Newman. Assortative mixing in networks. Phys. Rev. Lett., 89:208701, Oct 2002.

- [27] M.E.J. Newman. The structure and function of complex networks. SIAM Review, 45(2):167–256, 2003.

- [28] Duane Q. Nykamp, Daniel Friedman, Sammy Shaker, Maxwell Shinn, Michael Vella, Albert Compte, and Alex Roxin. Mean-field equations for neuronal networks with arbitrary degree distributions. Phys. Rev. E, 95:042323, Apr 2017.

- [29] E. Ott and T.M. Antonsen. Low dimensional behavior of large systems of globally coupled oscillators. Chaos, 18:037113, 2008.

- [30] Juan G Restrepo and Edward Ott. Mean-field theory of assortative networks of phase oscillators. Europhysics Letters, 107(6):60006, 2014.

- [31] Christian Schmeltzer, Alexandre Hiroaki Kihara, Igor Michailovitsch Sokolov, and Sten Rüdiger. Degree correlations optimize neuronal network sensitivity to sub-threshold stimuli. PloS One, 10:e0121794, 2015.

- [32] Per Sebastian Skardal, Juan G Restrepo, and Edward Ott. Frequency assortativity can induce chaos in oscillator networks. Physical Review E, 91(6):060902, 2015.

- [33] Per Sebastian Skardal, Jie Sun, Dane Taylor, and Juan G Restrepo. Effects of degree-frequency correlations on network synchronization: Universality and full phase-locking. Europhysics Letters, 101(2):20001, 2013.

- [34] Bernard Sonnenschein, Francesc Sagués, and Lutz Schimansky-Geier. Networks of noisy oscillators with correlated degree and frequency dispersion. The European Physical Journal B, 86(1):12, 2013.

- [35] S. Teller, C. Granell, M. De Domenico, J. Soriano, S. Gómez, and A. Arenas. Emergence of assortative mixing between clusters of cultured neurons. PLoS Computational Biology, 10(9), 2014. cited By 31.

- [36] JC Vasquez, AR Houweling, and P Tiesinga. Simultaneous stability and sensitivity in model cortical networks is achieved through anti-correlations between the in- and out-degree of connectivity. Frontiers in Computational Neuroscience, 7:156, 2013.

- [37] Marina Vegué and Alex Roxin. Firing rate distributions in spiking networks with heterogeneous connectivity. Phys. Rev. E, 100:022208, Aug 2019.