Degradation-Guided Meta-Restoration Network for Blind Super-Resolution

Abstract

Blind super-resolution (SR) aims to recover high-quality visual textures from a low-resolution (LR) image, which is usually degraded by down-sampling blur kernels and additive noise. This task is extremely difficult because image degradations in the real world are complicated. Existing SR approaches assume either a predefined blur kernel or a fixed noise level, which limits them in challenging cases. In this paper, we propose a Degradation-guided Meta-restoration network for blind Super-Resolution (DMSR) that facilitates image restoration for real cases. DMSR consists of a degradation extractor and meta-restoration modules. The extractor estimates the degradations in LR inputs and guides the meta-restoration modules to predict restoration parameters for different degradations on-the-fly. DMSR is jointly optimized by a novel degradation consistency loss and reconstruction losses. Through such an optimization, DMSR outperforms state-of-the-art (SOTA) methods by a large margin on three widely-used benchmarks. A user study including 16 subjects further validates the superiority of DMSR in real-world blind SR tasks.

1 Introduction

Image super-resolution (SR) is a fundamental computer vision task, which aims to recover high-resolution textures from a degraded low-resolution (LR) image [12]. Deep neural networks have recently achieved great success in SR tasks, and numerous architectures have been proposed to improve image quality [5, 6, 17, 22, 37]. Such achievements enable SR methods to be applied in practical applications, such as digital zoom algorithms for mobile cameras [8], medical imaging [23] and satellite imaging [32].

In general, an image degradation process can be formulated as follows:

$$I^{LR} = (I^{HR} \otimes k)\downarrow_s + n \tag{1}$$

where $I^{HR}$ and $I^{LR}$ are the HR image and its degraded LR counterpart, $\otimes$ indicates the convolution operation, and $k$ is the blur kernel. $\downarrow_s$ usually represents bicubic down-sampling with scale factor $s$, and $n$ indicates additive noise. To generate super-resolved images, most existing SR methods assume either a predefined down-sampling blur kernel or a fixed noise level. In particular, earlier works on single image super-resolution (SISR) usually assume a fixed bicubic down-sampling kernel without considering any blur or noise. Recent progress has been made by blind super-resolution methods, which aim to super-resolve real-world LR images without degradation knowledge. However, current blind SR methods still assume limited degradations. For example, ZSSR [25] and KernelGAN [2] assume that the degradation is restricted to the LR image, and they try to learn the internal distribution of the LR image itself. Gu et al. [9] mainly assume a fixed noise level of zero. They propose an iterative corrected kernel estimator, IKC, to handle different blur degradations. Helou et al. [7] propose a new module built on existing SR backbones such as [9], which shares the limitations of previous works. Nonetheless, in real-world scenarios, the degradation process typically involves varied and complicated down-sampling kernels (e.g. Gaussian blur and motion blur) and noises (e.g. Gaussian noise and salt noise). The performance of SR methods trained on a predefined degradation drops severely when facing different degradation types [9]; thus these methods are still limited in many real-world applications.
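The degradation process described above (blur, then down-sampling, then additive noise) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names `blur` and `degrade` are hypothetical, a single-channel image is assumed, and plain strided subsampling stands in for bicubic down-sampling.

```python
import numpy as np

def blur(image, kernel):
    """Convolve a 2-D image with an odd-sized 2-D kernel (edge padding, same-size output)."""
    kh, kw = kernel.shape
    flipped = kernel[::-1, ::-1]  # flip so this is a true convolution
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out

def degrade(hr, kernel, scale, noise_sigma, rng):
    """Blur, down-sample and add white Gaussian noise; returns (LR image, noise map)."""
    blurred = blur(hr, kernel)
    lr_clean = blurred[::scale, ::scale]  # assumption: strided subsampling, not bicubic
    noise = rng.normal(0.0, noise_sigma, size=lr_clean.shape)
    return lr_clean + noise, noise

# Toy usage: a constant image stays constant when the kernel sums to one.
hr = np.ones((8, 8))
box_kernel = np.full((3, 3), 1.0 / 9.0)
lr, noise_map = degrade(hr, box_kernel, scale=2, noise_sigma=0.0, rng=np.random.default_rng(0))
```

The function also returns the noise map because, later in the paper, the degradation extractor is supervised with the ground-truth noise map rather than only a scalar noise level.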

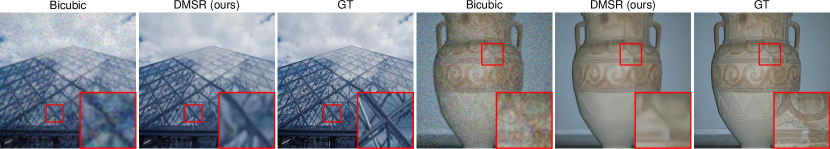

To solve the above problems, we propose a Degradation-guided Meta-restoration network for blind Super-Resolution (DMSR), which effectively super-resolves LR images with arbitrary real-world degradations. The blur and noise degradations are estimated on-the-fly and are further used to guide the meta-restoration process in SR networks. Specifically, DMSR consists of a degradation extractor and meta-restoration modules. The degradation extractor estimates the blur kernel and the noise map from the degraded LR image. This extractor is optimized by a degradation reconstruction loss. In addition, a novel degradation consistency loss is proposed to enhance the accuracy of degradation estimation. Such a design plays a key role in guiding the meta-restoration modules to generate accurate restoration parameters for different degradations. The proposed meta-restoration modules include a Meta-deNoise Module (MNM) and a Meta-deBlur Module (MBM) for noise removal and blur recovery, respectively. MNM and MBM take advantage of the estimated degradations and generate meta-biases and meta-weights, respectively. These meta-biases and meta-weights then serve as the restoration parameters in the network to effectively restore high-resolution image features. In summary, to generate super-resolved results, our DMSR model adjusts network parameters according to the degradation at hand, so that it can handle different real-world degradations. As shown in Figure 1, our model is capable of handling images with complicated blur and noise degradations in real-world scenarios.

The main contributions can be summarized as:

- To the best of our knowledge, we are the first to address variant blur and noise degradations in one end-to-end model for blind SR. We propose two specially-designed meta-restoration modules MBM and MNM to handle blur and noise degradations in the real-world.
- We design a degradation extractor in which the degradations are estimated dynamically and are further used to guide the meta-restoration process for SR networks. A degradation consistency loss is further proposed to enhance the estimation accuracy.
- Evaluations on three widely-used benchmarks and real images demonstrate that our DMSR model achieves the state-of-the-art performance in blind SR tasks.

2 Related Work

Single image super-resolution. SISR aims to super-resolve the single LR image to the HR image. Most SISR methods assume the down-sampling blur kernel is predefined (usually bicubic) without any blur and noise consideration. SRCNN [5] is the first CNN based SR method while Dong et al. [6] further accelerated SR inference process by putting most of the layers in the low-resolution scale. With larger networks or novel optimizations, performance improvement has been achieved in [13, 14, 16, 17, 24, 26, 27, 29, 30, 38]. EDSR [17] further improved performance by removing normalization layers in residual blocks. Tong et al. [29] and Zhang et al. [38] used dense blocks [10] for SR task. RCAN [37] adds channel attention in residual blocks as a basic block to achieve the SOTA results. Soon afterwards, some methods which consider internal or hierarchical feature correlations were proposed in [4, 18, 21, 22]. In spite of the above achievements on SISR, these methods are still far from the real-world scenarios as they fail to handle the LR image beyond the assumed degradation.

Blind super-resolution. To address real-world super-resolution, some non-blind SR methods, which assume the degradation parameters are known, were first proposed [31, 34, 35, 36]. Such a task can be regarded as an intermediate step and an upper bound of blind SR, but it is still limited in practical applications because the degradation parameters are unknown in real-world scenarios. Blind SR aims to super-resolve real-world LR images whose degradation information is unavailable. ZSSR [25] and KernelGAN [2] learned the internal distribution of the degraded LR image to construct training pairs by treating the LR image as the training target. Nonetheless, the degradation assumption was restricted to the LR image itself, and the degraded LR image was not an optimal training target under severe degradation. KMSR [39] used a GAN to augment the blur-kernel pool for more SR training pairs. Hussein et al. [11] proposed a closed-form correction filter that transformed the LR image to suit existing leading SISR methods. However, these two methods only addressed blur degradation under a noise-free assumption. Gu et al. [9] proposed an iterative corrected kernel estimator, IKC, for their non-blind SR method SFTMD, but they mainly focused on blur degradation under a fixed noise level. Helou et al. [7] further addressed the overfitting problem from the perspective of the frequency domain to improve blind SR performance. However, their module was based on current SR backbones such as Gu et al. [9], and thus had the same drawbacks as previous works.

Up till now, most existing SR methods either assume a predefined down-sampling blur kernel or a fixed noise level, which is still far from actual applications. Different from that, we propose a DMSR model which can handle LR images with arbitrary blur and noise degradations.

3 Approach

In this section, we present the details of the proposed Degradation-guided Meta-restoration network for blind Super-Resolution (DMSR). DMSR takes as input a degraded LR image and outputs a restored HR image. As shown in Figure 2, DMSR consists of a degradation extractor and three restoration modules for denoising, upsampling and deblurring, respectively. The degradation extractor (DE) estimates the degradations of the LR input. The estimated degradations are then leveraged by the meta-restoration modules (MNM and MBM) to restore the image. The full model is optimized by a novel degradation consistency loss and reconstruction losses. We introduce the details of the meta-restoration modules in Section 3.1. The degradation extractor is introduced in Section 3.2 and the loss functions are discussed in Section 3.3.

3.1 Meta-Restoration Modules

As described in Eq. (1), an HR image is sequentially degraded by blur, down-sampling and noise. Inspired by this process, we attach the MNM to the head of the network and the MBM to the end of the network, so that the network handles the degradations in the inverse order.

Meta-denoise module (MNM). There is a meta-layer at the beginning of the meta-denoise module. The guidance for the meta-layer in MNM is the estimated noise map from our degradation extractor instead of a noise level which is commonly used in [31, 35]. Considering the widely-used noise type, Additive White Gaussian Noise (AWGN), the probability density for each pixel is denoted as:

$$p(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{x^2}{2\sigma^2}\right) \tag{2}$$

where $\sigma$ is the noise level parameter, $x$ is the noise value at a pixel and $p$ represents the probability density. From this formulation, we can see that the parameter $\sigma$ only influences the probability density of the noise at that pixel. However, it cannot express the exact noise value at that pixel. Therefore, we choose the noise map, which provides denser information, in our model setting. Ideally, the estimated noise map is the additive noise of the input LR image and the network parameters need to learn an inverse operation. In such a case, the noise map can be regarded as the biases for each spatial position. A concatenation operation followed by a convolution layer is adopted to implement MNM, which can be formulated as:

$$F_0 = \mathrm{Conv}(\mathrm{Concat}(I^{LR}, \hat{n})) \tag{3}$$

where “Conv” and “Concat” represent the convolution layer and the concatenation operation, respectively. $I^{LR}$ is the input degraded LR image and $\hat{n}$ indicates the estimated noise map from our degradation extractor. $F_0$ denotes the output feature maps of MNM. When the convolution kernel size is set to 1, each value in the noise map only influences the bias at the corresponding position in this convolution. In other words, the meta-layer in MNM generates meta-biases according to the estimated noise map so that the network can better handle noise removal.
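The meta-bias interpretation can be checked numerically. The sketch below is an illustration only: the helper names `conv1x1` and `mnm`, the channel counts and the random weights are all assumptions. It shows that a 1×1 convolution over the concatenated image and noise map splits, by linearity, into an image term plus a per-pixel bias computed from the noise map.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in), bias is (C_out,)."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def mnm(lr, noise_map, weight, bias):
    """Conv(Concat(LR image, estimated noise map)) with a 1x1 kernel."""
    return conv1x1(np.concatenate([lr, noise_map], axis=0), weight, bias)

rng = np.random.default_rng(0)
lr = rng.random((3, 4, 4))                  # hypothetical 3-channel LR image
noise_map = rng.normal(0.0, 0.1, (3, 4, 4)) # hypothetical estimated noise map
weight = rng.normal(size=(8, 6))            # 6 input channels: 3 image + 3 noise
bias = np.zeros(8)

features = mnm(lr, noise_map, weight, bias)

# Split the weights: the image part is an ordinary 1x1 convolution, while the
# noise part contributes a position-dependent bias (the "meta-bias").
image_term = conv1x1(lr, weight[:, :3], bias)
meta_bias = np.einsum("oc,chw->ohw", weight[:, 3:], noise_map)
```

Here `features` equals `image_term + meta_bias` up to floating-point error, which is the sense in which each noise-map value only shifts the bias at its own spatial position.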

Deep feature learning and up-sampling. Between MNM and MBM, there is a network for deep feature learning and up-sampling. We adopt the residual group structure proposed in SOTA SISR method RCAN [37]. Each residual group is composed of several sequential residual channel attention blocks (RCABs) with a long skip connection. The residual path in each RCAB consists of “convolution + ReLU + convolution + channel attention”. Finally a convolution layer and a pixelshuffle layer enlarge the feature resolution according to the magnification scale factor.

Meta-deblur module (MBM). In general, a Gaussian blur process can be formulated as a convolution process:

$$F_{blur} = F \otimes k \tag{4}$$

where $F$ and $F_{blur}$ are the input features and the blurred features, respectively. $k$ is the blur kernel of size $l \times l$ and $\otimes$ represents the convolution operation. Since convolution operates on a local area, each pixel value in $F_{blur}$ is calculated from a local patch of size $l \times l$ in $F$. Considering deblurring as the inverse of the above convolution, each pixel value in $F$ is likewise related to a local patch in $F_{blur}$, which can also be expressed by a convolution.

In our blind SR network, MBM performs this inverse process. To further enhance the expressive capability of the module, we make the convolution kernels spatially variant, and each kernel can be regarded as a meta-weight generated by the meta-layer in MBM. The estimated blur kernel serves as the guidance of this meta-layer because the blur kernel influences the deblurring process.

Therefore, the target of the meta-layer in MBM is to generate proper meta-weights for this dynamic convolution process. Specifically, the estimated blur kernel is first reduced in dimension by a fully connected layer. This fully connected layer is initialized by the PCA matrix [35], which records the principal information estimated from a series of randomly sampled blur kernels. We then repeat the reduced vector at every spatial position to match the feature resolution $H \times W$. The stretched features are concatenated with the network features to generate the meta-weights of this dynamic convolution layer. The meta-weights provide, for each of the $H \times W$ spatial positions, the weights of the convolution kernel applied at that position. In such a design, the meta-layer in MBM predicts dynamic convolution parameters according to the estimated blur kernel so that the network can address blur recovery.
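The two mechanical steps of MBM, stretching the reduced kernel code over the feature map and applying a different kernel at each position, can be sketched as follows. This is a hedged illustration rather than the authors' implementation: the function names, the meta-weight layout `(H, W, m*m)`, and the choice to share each per-pixel kernel across channels are assumptions, and the layers that map the concatenated tensor to meta-weights are omitted.

```python
import numpy as np

def stretch_and_concat(kernel_code, features):
    """Repeat a reduced kernel vector at every position and concatenate it to the features.
    kernel_code: (t,), features: (C, H, W) -> (C + t, H, W)."""
    _, h, w = features.shape
    stretched = np.broadcast_to(kernel_code[:, None, None], (kernel_code.shape[0], h, w))
    return np.concatenate([features, stretched], axis=0)

def dynamic_conv(features, meta_weights):
    """Spatially variant convolution: one m x m kernel per spatial position.
    features: (C, H, W); meta_weights: (H, W, m*m), shared across channels (assumption)."""
    _, h, w = features.shape
    m = int(round(np.sqrt(meta_weights.shape[-1])))
    pad = m // 2
    padded = np.pad(features, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    out = np.zeros(features.shape, dtype=np.float64)
    for i in range(m):
        for j in range(m):
            # The (H, W) weight slice broadcasts over the channel axis.
            out += meta_weights[:, :, i * m + j] * padded[:, i:i + h, j:j + w]
    return out

rng = np.random.default_rng(0)
features = rng.random((2, 5, 5))
guided = stretch_and_concat(np.array([0.3, -0.1, 0.7]), features)

# Sanity check: if every per-pixel kernel is a centered delta, the output equals the input.
identity_weights = np.zeros((5, 5, 9))
identity_weights[:, :, 4] = 1.0
restored = dynamic_conv(features, identity_weights)
```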

3.2 Degradation Extractor

It is essential to extract accurate degradation for blind SR task, since degradation mismatch will produce unsatisfactory results [9]. The goal of the degradation extractor is to estimate accurate degradation which can provide solid guidance to the meta-restoration modules (MNM and MBM).

The structure of the degradation extractor is shown in Figure 3. One convolution layer followed by two residual blocks is first used to extract features of the input LR image. Two branches then extract the noise map and the blur kernel, respectively. The upper branch estimates the noise map of the input LR image with an additional convolution layer, while the lower branch extracts the blur kernel using two convolution layers and a global average pooling layer. The resulting features are reshaped to the size of the blur kernel. We use a softmax layer at the end of the blur branch to ensure there is no value shift before and after blur degradation. To accurately estimate blur kernels and noise maps, we introduce a degradation reconstruction loss and a degradation consistency loss. These two losses are discussed in Section 3.3.
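One way to read the role of the softmax at the end of the blur branch is that it forces the estimated kernel to be non-negative and to sum to one, so blurring with it does not change the overall intensity level. The snippet below is a small sketch of that reading; the function name `kernel_from_logits` and the toy logits are hypothetical.

```python
import numpy as np

def kernel_from_logits(logits, size):
    """Turn raw branch outputs into a size x size kernel with a softmax over all entries."""
    flat = logits.reshape(-1)
    flat = flat - flat.max()            # subtract the max for numerical stability
    weights = np.exp(flat)
    weights = weights / weights.sum()   # entries are positive and sum to one
    return weights.reshape(size, size)

logits = np.array([[0.0, 1.0, 0.0],
                   [1.0, 2.0, 1.0],
                   [0.0, 1.0, 0.0]])
kernel = kernel_from_logits(logits, 3)
```

Because the entries sum to one, convolving a constant region with `kernel` returns the same constant, which matches the "no value shift" requirement stated above.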

3.3 Loss Function

There are three types of loss functions in our model. The overall loss is denoted as:

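$$\mathcal{L} = \lambda_{rec}\mathcal{L}_{rec} + \lambda_{dr}\mathcal{L}_{dr} + \lambda_{dc}\mathcal{L}_{dc} \tag{5}$$

where $\mathcal{L}_{rec}$, $\mathcal{L}_{dr}$ and $\mathcal{L}_{dc}$ are the reconstruction loss, the degradation reconstruction loss and the degradation consistency loss, and the $\lambda$ terms are their weight coefficients. The reconstruction loss is:

$$\mathcal{L}_{rec} = \frac{1}{CHW}\left\| I^{HR} - I^{SR} \right\|_1 \tag{6}$$

where ($C$, $H$, $W$) is the size of the HR image and $I^{SR}$ is the super-resolved output. We utilize the $L_1$ loss, which has been demonstrated to produce sharper results compared to the $L_2$ loss.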

The last two losses are shown in Figure 3. $\hat{n}$ and $\hat{k}$ are the estimated noise map and blur kernel, while $n$ and $k$ represent the ground truth noise map and the ground truth blur kernel, respectively. The degradation reconstruction loss can be written as:

$$\mathcal{L}_{dr} = \left\| \hat{n} - n \right\| + \left\| \hat{k} - k \right\| \tag{7}$$

The degradation reconstruction loss directly constrains the estimated noise map and blur kernel to be close to the ground truth, which provides direct supervision. To further enhance the accuracy of degradation estimation, we propose a novel degradation consistency loss. We degrade the original HR image by the estimated degradation ($\hat{k}$, $\hat{n}$) to get a simulated degraded LR image $\hat{I}^{LR}$, and then use the degradation extractor again to estimate the blur kernel $\tilde{k}$ and noise map $\tilde{n}$ from this simulated LR image. The degradation consistency loss can be described as:

$$\mathcal{L}_{dc} = \left\| \hat{I}^{LR} - I^{LR} \right\| + \left\| \tilde{n} - \hat{n} \right\| + \left\| \tilde{k} - \hat{k} \right\| \tag{8}$$

where the first term constrains the consistency between the input LR image and the simulated degraded LR image. The similarity between $I^{LR}$ and $\hat{I}^{LR}$ indicates the accuracy of the degradation estimation to some extent. Similarly, the second and third terms constrain the consistency of the estimated noise map and blur kernel, which enhances the accuracy of the degradation estimation.
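The two degradation losses can be sketched as a single function that takes the extractor and the degradation operator as callables. This is an illustrative sketch under assumptions: the names `degradation_losses`, `extractor` and `degrade` are hypothetical, and a mean absolute error is used for every term, which the text above does not specify for these two losses.

```python
import numpy as np

def l1(a, b):
    """Mean absolute error (assumed distance for all terms in this sketch)."""
    return float(np.mean(np.abs(a - b)))

def degradation_losses(hr, lr, n_gt, k_gt, extractor, degrade):
    """Return (degradation reconstruction loss, degradation consistency loss).
    extractor(lr) -> (noise_map, kernel); degrade(hr, kernel, noise_map) -> simulated LR."""
    n_hat, k_hat = extractor(lr)
    loss_dr = l1(n_hat, n_gt) + l1(k_hat, k_gt)        # direct supervision

    lr_sim = degrade(hr, k_hat, n_hat)                 # re-degrade HR with the estimates
    n_tilde, k_tilde = extractor(lr_sim)               # estimate again on the simulated LR
    loss_dc = l1(lr_sim, lr) + l1(n_tilde, n_hat) + l1(k_tilde, k_hat)
    return loss_dr, loss_dc

# Toy check with an oracle extractor: a perfect estimate gives zero for both losses.
hr = np.arange(16.0).reshape(4, 4)
n_gt = np.full((2, 2), 0.5)
k_gt = np.full((3, 3), 1.0 / 9.0)
oracle = lambda image: (n_gt, k_gt)
toy_degrade = lambda image, kernel, noise: image[::2, ::2] + noise  # blur omitted for brevity
lr = toy_degrade(hr, k_gt, n_gt)
loss_dr, loss_dc = degradation_losses(hr, lr, n_gt, k_gt, oracle, toy_degrade)
```

Note that the consistency loss needs the HR image and is therefore a training-time signal only; at inference the extractor runs once on the LR input.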

4 Experiment

We conduct experiments on both quantitative and qualitative evaluations. We introduce the implementation details in Section 4.1. Evaluations on benchmarks and real cases are presented in Section 4.2 and Section 4.3, followed by ablation study experiments in Section 4.4.

4.1 Implementation Details

Training setups. To synthesize degraded images for training, we use isotropic blur kernels, bicubic down-sampling and additive white Gaussian noise following the common settings used in previous works [31, 35]. Specifically, the blur kernel size is set to , and the kernel width is randomly and uniformly sampled from the range of [0.2, 3.0]. The noise level varies in the range of [0, 75]. During training, we augment images by random horizontal flipping and rotating , and . Each mini-batch contains 32 LR patches with size .
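Sampling one training degradation under these settings might look like the sketch below. The function names are hypothetical, the kernel size is left as a required argument, the example call uses an arbitrary illustrative size, and uniform sampling of the noise level is an assumption (the text only states its range).

```python
import numpy as np

def isotropic_gaussian_kernel(size, width):
    """Normalized isotropic Gaussian kernel of shape (size, size) with standard deviation `width`."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    kernel = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * width ** 2))
    return kernel / kernel.sum()

def sample_degradation(rng, size):
    """Draw a kernel width from [0.2, 3.0] and a noise level from [0, 75]."""
    width = rng.uniform(0.2, 3.0)
    noise_level = rng.uniform(0.0, 75.0)  # assumption: uniform over the stated range
    return isotropic_gaussian_kernel(size, width), width, noise_level

rng = np.random.default_rng(0)
kernel, width, noise_level = sample_degradation(rng, size=21)  # 21 is an arbitrary example size
```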

We set the channel number of residual blocks in the degradation extractor as 64 and the kernel size of all the convolution layers as . There are 5 residual groups with each containing 20 RCABs in our full model. The global average pooling is used at the end of the blur branch. The weight coefficients for , and are 1, 10 and 1, respectively. Adam optimizer with , and is used with initial learning rate of . We train the model for iterations and the learning rate is halved every iterations.

Datasets and Metrics. For fair comparisons, we use DF2K [1, 28] as training set following the common settings used in previous works [9, 31]. All models are evaluated on both standard benchmarks (i.e., Set5 [3], Set14 [33] and B100 [20]) and real-world cases. Specifically, the real-world cases we used for comparisons include the commonly-used real image Flower [15] and the test set of “NTIRE 2020 Real World Super-Resolution” challenge [19]. The test set of the challenge contains 100 unknown-degraded test images without ground truth.

We report quantitative results in terms of PSNR and SSIM metrics, which are calculated on Y channel of YCbCr space. Since the ground truths of the degraded images of real cases are unavailable, we conduct qualitative evaluations and a user study for fair comparisons.
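For reference, PSNR on the Y channel is commonly computed as in the following sketch. It assumes 8-bit RGB inputs in [0, 255] and the ITU-R BT.601 luma conversion that is standard in SR evaluation code; the function names are hypothetical, and details such as border cropping, which evaluation scripts often apply, are omitted.

```python
import numpy as np

def rgb_to_y(img):
    """Luma (Y of YCbCr, BT.601, range [16, 235]) from an (H, W, 3) RGB image in [0, 255]."""
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0

def psnr_y(sr, hr):
    """PSNR in dB between two RGB images, measured on the Y channel only."""
    diff = rgb_to_y(sr.astype(np.float64)) - rgb_to_y(hr.astype(np.float64))
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(255.0 ** 2 / mse))

hr_img = np.zeros((4, 4, 3))
sr_img = hr_img + 1.0  # every channel off by one gray level
score = psnr_y(sr_img, hr_img)
```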

| Methods | [kernel width, noise level] | Set5 [3] ×2 | Set5 [3] ×3 | Set5 [3] ×4 | Set14 [33] ×2 | Set14 [33] ×3 | Set14 [33] ×4 | B100 [20] ×2 | B100 [20] ×3 | B100 [20] ×4 |
|---|---|---|---|---|---|---|---|---|---|---|
| ZSSR [25] | [0.2, 15] | 26.60 / .5972 | 25.62 / .5836 | 24.58 / .5600 | 25.73 / .5893 | 24.48 / .5382 | 23.62 / .5091 | 25.31 / .5667 | 24.19 / .5079 | 23.43 / .4724 |
| IKC [9] | [0.2, 15] | 26.74 / .7582 | 26.96 / .6546 | 23.73 / .5357 | 25.25 / .6732 | 25.36 / .5943 | 21.47 / .4312 | 25.38 / .6404 | 24.93 / .5540 | 21.16 / .3852 |
| SFM [7] | [0.2, 15] | 28.13 / .6856 | 24.60 / .5502 | 20.80 / .4333 | 26.81 / .6561 | 23.76 / .5213 | 18.42 / .3251 | 26.47 / .6315 | 23.33 / .4802 | 18.46 / .2924 |
| IKC [9]-vn | [0.2, 15] | 32.54 / .8980 | 29.84 / .8551 | 28.79 / .8261 | 30.04 / .8382 | 27.76 / .7618 | 26.54 / .7122 | 29.17 / .8066 | 26.97 / .7221 | 25.93 / .6629 |
| SFM [7]-vn | [0.2, 15] | 32.30 / .8962 | 30.26 / .8595 | 28.63 / .8232 | 29.68 / .8350 | 27.87 / .7621 | 26.57 / .7102 | 28.89 / .8054 | 26.93 / .7182 | 25.92 / .6608 |
| DMSR (ours) | [0.2, 15] | 32.79 / .9009 | 30.64 / .8637 | 29.06 / .8285 | 30.30 / .8417 | 28.12 / .7662 | 26.74 / .7122 | 29.33 / .8109 | 27.23 / .7197 | 26.05 / .6626 |
| ZSSR [25] | [1.3, 15] | 25.33 / .5346 | 25.06 / .5478 | 24.28 / .5374 | 24.29 / .4891 | 23.95 / .4909 | 23.40 / .4833 | 24.06 / .4546 | 23.77 / .4554 | 23.28 / .4469 |
| IKC [9] | [1.3, 15] | 25.69 / .7128 | 25.77 / .6085 | 23.46 / .5038 | 24.25 / .6152 | 24.47 / .5400 | 21.82 / .4111 | 24.54 / .5827 | 24.26 / .4980 | 20.60 / .3343 |
| SFM [7] | [1.3, 15] | 26.15 / .6168 | 24.59 / .5233 | 21.64 / .4400 | 24.84 / .5523 | 23.53 / .4738 | 19.23 / .3243 | 24.78 / .5187 | 23.27 / .4337 | 19.39 / .2933 |
| IKC [9]-vn | [1.3, 15] | 30.56 / .8602 | 29.13 / .8278 | 28.26 / .8072 | 28.15 / .7654 | 26.97 / .7195 | 26.24 / .6881 | 27.34 / .7178 | 26.34 / .6705 | 25.65 / .6381 |
| SFM [7]-vn | [1.3, 15] | 30.66 / .8622 | 29.15 / .8304 | 28.00 / .8016 | 28.27 / .7717 | 27.11 / .7242 | 26.19 / .6885 | 27.45 / .7260 | 26.42 / .6780 | 25.64 / .6384 |
| DMSR (ours) | [1.3, 15] | 31.13 / .8698 | 29.65 / .8403 | 28.48 / .8116 | 28.51 / .7735 | 27.24 / .7261 | 26.29 / .6895 | 27.54 / .7252 | 26.46 / .6738 | 25.70 / .6389 |
| ZSSR [25] | [2.6, 15] | 23.42 / .4378 | 23.40 / .4643 | 23.26 / .4826 | 22.53 / .3761 | 22.50 / .3989 | 22.43 / .4202 | 22.63 / .3436 | 22.64 / .3683 | 22.54 / .3888 |
| IKC [9] | [2.6, 15] | 24.33 / .6511 | 23.83 / .5079 | 21.60 / .3993 | 23.21 / .5606 | 22.85 / .4443 | 20.15 / .3058 | 23.71 / .5334 | 23.01 / .4111 | 19.58 / .2569 |
| SFM [7] | [2.6, 15] | 24.13 / .5255 | 23.17 / .4442 | 19.67 / .3246 | 23.07 / .4523 | 22.29 / .3820 | 18.27 / .2453 | 23.35 / .4225 | 22.28 / .3458 | 18.51 / .2192 |
| IKC [9]-vn | [2.6, 15] | 27.57 / .7849 | 26.52 / .7523 | 26.38 / .7503 | 25.52 / .6645 | 24.86 / .6373 | 24.79 / .6318 | 25.25 / .6182 | 24.74 / .5935 | 24.64 / .5875 |
| SFM [7]-vn | [2.6, 15] | 27.08 / .7686 | 26.80 / .7602 | 26.18 / .7425 | 25.38 / .6578 | 25.02 / .6423 | 24.71 / .6287 | 25.19 / .6141 | 24.94 / .6040 | 24.60 / .5855 |
| DMSR (ours) | [2.6, 15] | 28.81 / .8171 | 27.79 / .7943 | 27.10 / .7743 | 26.43 / .6916 | 25.81 / .6672 | 25.30 / .6478 | 25.86 / .6428 | 25.33 / .6173 | 24.93 / .6005 |
| ZSSR [25] | [0.2, 50] | 17.33 / .2164 | 17.06 / .2142 | 16.89 / .2265 | 17.09 / .2167 | 16.75 / .1982 | 16.54 / .1936 | 16.95 / .2011 | 16.63 / .1777 | 16.43 / .1700 |
| IKC [9] | [0.2, 50] | 23.41 / .5976 | 17.49 / .2372 | 14.54 / .1766 | 22.71 / .5137 | 17.21 / .2191 | 12.13 / .1013 | 23.14 / .4876 | 16.74 / .1898 | 11.77 / .0811 |
| SFM [7] | [0.2, 50] | 18.36 / .2468 | 15.52 / .1873 | 11.93 / .1112 | 17.66 / .2346 | 15.31 / .1723 | 11.88 / .0955 | 17.69 / .2227 | 15.35 / .1563 | 11.58 / .0812 |
| IKC [9]-vn | [0.2, 50] | 28.35 / .8176 | 26.28 / .7678 | 25.12 / .7323 | 26.75 / .7278 | 25.10 / .6633 | 23.99 / .6195 | 26.06 / .6763 | 24.67 / .6138 | 23.81 / .5754 |
| SFM [7]-vn | [0.2, 50] | 28.25 / .8165 | 26.42 / .7715 | 25.10 / .7317 | 26.44 / .7251 | 25.02 / .6614 | 23.98 / .6196 | 25.77 / .6753 | 24.55 / .6125 | 23.78 / .5752 |
| DMSR (ours) | [0.2, 50] | 28.58 / .8226 | 26.66 / .7763 | 25.38 / .7417 | 26.93 / .7296 | 25.30 / .6659 | 24.21 / .6232 | 26.20 / .6796 | 24.83 / .6158 | 23.94 / .5779 |
| ZSSR [25] | [1.3, 50] | 17.12 / .1814 | 16.88 / .1906 | 16.71 / .2034 | 16.84 / .1641 | 16.65 / .1697 | 16.46 / .1764 | 16.70 / .1446 | 16.54 / .1483 | 16.40 / .1548 |
| IKC [9] | [1.3, 50] | 23.07 / .5762 | 17.20 / .2094 | 13.37 / .1298 | 22.46 / .4969 | 17.01 / .1880 | 12.52 / .0991 | 22.91 / .4709 | 16.68 / .1611 | 11.89 / .0758 |
| SFM [7] | [1.3, 50] | 18.59 / .2243 | 15.42 / .1665 | 11.88 / .0991 | 17.51 / .1817 | 15.24 / .1475 | 11.87 / .0857 | 17.56 / .1654 | 15.29 / .1307 | 11.59 / .0713 |
| IKC [9]-vn | [1.3, 50] | 27.27 / .7862 | 25.87 / .7466 | 24.81 / .7171 | 25.73 / .6766 | 24.65 / .6345 | 23.80 / .6071 | 25.24 / .6267 | 24.36 / .5883 | 23.67 / .5633 |
| SFM [7]-vn | [1.3, 50] | 27.32 / .7877 | 25.82 / .7472 | 24.78 / .7155 | 25.69 / .6762 | 24.64 / .6361 | 23.79 / .6074 | 25.23 / .6281 | 24.32 / .5904 | 23.66 / .5633 |
| DMSR (ours) | [1.3, 50] | 27.62 / .7942 | 25.96 / .7533 | 24.98 / .7256 | 25.86 / .6776 | 24.76 / .6369 | 23.90 / .6088 | 25.30 / .6279 | 24.42 / .5897 | 23.71 / .5643 |
| ZSSR [25] | [2.6, 50] | 16.67 / .1354 | 16.61 / .1525 | 16.44 / .1693 | 16.40 / .1127 | 16.29 / .1252 | 16.20 / .1433 | 16.36 / .0976 | 16.28 / .1103 | 16.22 / .1276 |
| IKC [9] | [2.6, 50] | 22.42 / .5433 | 16.62 / .1616 | 14.26 / .1412 | 21.91 / .4660 | 16.66 / .1428 | 11.72 / .0608 | 22.48 / .4434 | 16.40 / .1209 | 11.75 / .0590 |
| SFM [7] | [2.6, 50] | 18.05 / .1753 | 15.12 / .1255 | 11.73 / .0752 | 17.25 / .1336 | 14.84 / .1023 | 11.72 / .0627 | 17.28 / .1185 | 15.04 / .0937 | 11.51 / .0532 |
| IKC [9]-vn | [2.6, 50] | 25.59 / .7272 | 24.57 / .6925 | 23.85 / .6763 | 24.25 / .6135 | 23.49 / .5875 | 23.07 / .5742 | 24.17 / .5703 | 23.56 / .5484 | 23.20 / .5371 |
| SFM [7]-vn | [2.6, 50] | 25.44 / .7212 | 24.72 / .6995 | 23.77 / .6712 | 24.20 / .6113 | 23.57 / .5905 | 23.06 / .5733 | 24.16 / .5693 | 23.65 / .5525 | 23.20 / .5370 |
| DMSR (ours) | [2.6, 50] | 26.05 / .7467 | 24.86 / .7139 | 24.21 / .6967 | 24.56 / .6240 | 23.83 / .5999 | 23.23 / .5818 | 24.36 / .5788 | 23.75 / .5566 | 23.27 / .5422 |
| ZSSR [25] | [0.2, 0] | 36.98 / .9567 | 32.23 / .8982 | 29.40 / .8264 | 32.77 / .9101 | 29.12 / .8206 | 27.16 / .7451 | 31.44 / .8910 | 28.26 / .7858 | 26.66 / .7063 |
| IKC [9] | [0.2, 0] | 37.26 / .9572 | 34.02 / .9261 | 31.55 / .8931 | 33.06 / .9116 | 30.09 / .8421 | 28.20 / .7830 | 31.94 / .8933 | 28.89 / .8066 | 27.43 / .7376 |
| SFM [7] | [0.2, 0] | 37.53 / .9581 | 33.45 / .9249 | 32.11 / .8950 | 33.23 / .9129 | 30.12 / .8424 | 28.48 / .7833 | 31.93 / .8928 | 28.92 / .8061 | 27.44 / .7378 |
| DMSR-nf (ours) | [0.2, 0] | 37.96 / .9617 | 34.49 / .9289 | 32.37 / .8977 | 33.48 / .9174 | 30.34 / .8448 | 28.73 / .7860 | 32.14 / .8995 | 29.14 / .8087 | 27.65 / .7398 |

4.2 Evaluations on Benchmarks

In this section, we compare our model with SOTA blind SR methods, i.e., ZSSR [25], IKC [9] and SFM [7], on standard benchmarks. ZSSR is the SOTA method that learns the internal distribution of the LR image. IKC is the SOTA blind SR method, and SFM achieves a performance improvement on top of the IKC backbone. For IKC and SFM, which assume a fixed noise level, we additionally train IKC-vn and SFM-vn models with variant noise for a fairer comparison. The implementations of the methods used in this blind SR comparison are:

- ZSSR [25]: https://github.com/assafshocher/ZSSR
- IKC [9]: https://github.com/yuanjunchai/IKC
- SFM [7]: https://github.com/majedelhelou/SFM

For fair comparisons, we train IKC and SFM with blur kernels on DF2K dataset. We use IKC as the backbone of SFM and the percent rate of training for SFM is 50%. We sample isotropic Gaussian blur kernels from {0.2, 1.3, 2.6} and AWGN levels from {15, 50}.

Quantitative Comparisons. In Table 1, ZSSR, which learns the internal distribution of the LR image, performs poorly because the noise and blur degradations in the LR image severely harm its performance. For IKC and SFM, which assume a fixed noise level of zero, the performance drops significantly as the noise level becomes larger. Variant noise training improves the performance of these SOTA methods, but the gain is limited since they do not have specially-designed modules to handle variant noises. Among these blind SR methods, our DMSR achieves the best PSNR in every degradation setting and on every benchmark.

To further validate the superior performance of our model, we also conduct experiments in the noise-free situation to meet the assumption of IKC and SFM, where IKC and SFM have significantly better performance. To get the noise-free version of our model “DMSR-nf”, we fine-tune the DMSR where we fix the noise level to 0. We train another iterations with learning rate . As shown in the bottom row in Table 1, even in this narrow range of degradations, our model can still achieve the best performance. This further verifies the real-world super-resolution capability of our model.

Qualitative Comparisons. We also show visual comparison results among different blind SR methods in Figure 4, where the degradation parameters are set to an isotropic blur kernel width of 2.6 and a noise level of 15, with scale factor 4. ZSSR learns the noise distribution of the LR image, so its SR results obviously contain noise. IKC and SFM fail to handle such degraded images, which lie beyond their degradation assumption. With variant noise training, IKC-vn and SFM-vn generate better SR results, but they are not as clear as ours. Our DMSR model handles both noise and blur degradation well and achieves the best visual performance.

4.3 Evaluations on Real Cases

In this section, we further evaluate different methods on real cases. We compare our DMSR model with different kinds of SR methods to show its overall practical applicability. These methods include two SOTA blind SR methods with variant noise training, IKC [9]-vn and SFM [7]-vn. We also include a SOTA SISR method, RCAN [37], and a SOTA non-blind SR method, UDVD [31], in the comparison. For the non-blind SR method UDVD, a manual grid search over degradation parameters is usually performed and the best result is chosen. However, such a procedure is time-consuming in real-world applications and unfair to the other methods, since the best result is manually picked from all the generated results. For a fair comparison, we therefore draw a degradation parameter window which contains different degradation types applied to one image. It has 24 cases of degradations, with 6 different noise levels and 4 different blur kernels, for each of the scale factors 2, 3 and 4, where the window for scale factor 4 is shown in the Supplementary. During inference, we first manually choose the most similar degraded image in the window and adopt its parameters as the input for UDVD. Such a design saves real-world inference time and is fairer for comparison.

Performance on real images. We first conduct an evaluation on the real image Flowers [15]. Since there is no ground truth HR image for this image, we only show the visual comparison results in Figure 5. The degradation parameters [kernel width, noise level] of UDVD are selected as [2.1, 60] for this image. As we can see, our DMSR model achieves the best visual performance among these methods. The visual quality of RCAN is severely influenced by the noise degradation. IKC-vn and SFM-vn fail to remove the influence of the noise. Even with manually chosen degradation parameters, the SOTA non-blind SR method UDVD cannot generate textures as natural as ours, and there are some artifacts in its result.

Performance on real-world image challenge. In addition to the real image, we also run our model on the test set of the “NTIRE 2020 Real World Super-Resolution” challenge [19]. There are 100 unknown-degraded test images in Track 1 and we use these images for evaluation. The degradation parameters of UDVD for this test set are set to [1.2, 15]. A visual comparison is shown in Figure 6. As shown in this figure, our DMSR model generates results with clearer and more realistic textures. To further validate the superiority of our model, we conduct a user study on this challenge test set in which our DMSR is compared with RCAN, IKC-vn, SFM-vn and UDVD. We collect 3,200 votes from 16 subjects, where each subject is invited to compare our model with two other methods. Therefore, each of the four comparison combinations is evaluated by 8 subjects. In each comparison, the users are provided with two images, a DMSR result and another method’s result, and are asked to select the one with better visual quality. The user study results are shown in Figure 7, where the values on the Y-axis indicate the percentage of users that prefer our DMSR model over other methods. As we can see, DMSR significantly outperforms the SOTA SISR method RCAN, with over 97% of users voting for ours. Against the SOTA blind and non-blind SR methods IKC-vn, SFM-vn and UDVD, our model is still preferred in over 88% of the votes. Such results validate the favorable visual quality of our DMSR model and indicate that the meta-restoration modules in our model can generate proper network parameters on-the-fly for different real-world degradation situations.
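The vote counts reported above are mutually consistent, as the short calculation below shows. It assumes that every subject casts one vote per test image in each of the two pairings they evaluate, which the text does not state explicitly.

```latex
\begin{aligned}
16 \text{ subjects} \times 2 \text{ pairings per subject} &= 32 \text{ evaluation sessions},\\
32 \text{ sessions} \,/\, 4 \text{ pairings} &= 8 \text{ subjects per pairing},\\
3200 \text{ votes} \,/\, 32 \text{ sessions} &= 100 \text{ votes per session}.
\end{aligned}
```

Under that assumption, the 100 votes per session correspond to the 100 test images of the challenge track, and each pairing receives 800 votes in total.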

4.4 Ablation Study

Degradation Extractor. In this part, we verify the effectiveness of the proposed degradation reconstruction (DR) loss and degradation consistency (DC) loss. As shown in Table 2, without any loss on the degradation extractor, the PSNR / SSIM performance is 24.87 / 0.7194. When adding the DR loss to directly supervise degradation estimation, the performance increases to 24.92 / 0.7220. Applying the DC loss on the LR image raises it to 24.95 / 0.7239, and further applying the DC loss on the degradation (blur kernel and noise map) gives the final performance of 24.98 / 0.7256. Such an ablation demonstrates the effectiveness of the losses in our degradation extractor. In addition, Figure 8 shows the blur kernel estimation results with and without the degradation consistency loss. With the DC loss, the estimated blur kernel is more accurate.

| DR loss | DC loss (LR) | DC loss (KN) | PSNR / SSIM |
|---|---|---|---|
| | | | 24.87 / .7194 |
| ✓ | | | 24.92 / .7220 |
| ✓ | ✓ | | 24.95 / .7239 |
| ✓ | ✓ | ✓ | 24.98 / .7256 |

Meta-restoration Modules. We also conduct ablation experiments on the two types of meta-restoration modules, MNM and MBM. The results are reported in Table 3. For the meta-denoise module (MNM), we first verify that the noise map is superior to a single scalar noise level. We run a comparison model in which the degradation extractor predicts the noise level, and this number is spatially repeated to form the “noise map” used in MNM. The table shows that using the noise map brings about a 0.1 dB PSNR improvement, which demonstrates the superiority of the noise map. For the meta-deblur module (MBM), adding MBM to the model further increases the performance. These ablation experiments verify the effectiveness of our two types of meta-restoration modules.

| MNM- (noise level) | MNM-map | MBM | PSNR / SSIM |
|---|---|---|---|
| ✓ | | | 28.61 / .8119 |
| ✓ | | ✓ | 28.70 / .8148 |
| | ✓ | | 28.72 / .8156 |
| | ✓ | ✓ | 28.81 / .8171 |
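To make the comparison model concrete, the following NumPy sketch shows how a single predicted noise level could be spatially repeated into a constant “noise map” and stacked with the LR image. The function names are hypothetical, and the 3-channel map is an assumption inferred from the `Conv(64,3)` noise branch and the `Conv(6, 64)` input layer listed in Appendix B; it is not the authors' implementation.

```python
import numpy as np

def repeat_noise_level(sigma, height, width, channels=3):
    """Tile one scalar noise level into a constant (channels, H, W) map.

    Hypothetical helper; channels=3 is an assumption based on Appendix B.
    """
    return np.full((channels, height, width), sigma, dtype=np.float32)

def mnm_input(lr_image, noise_map):
    """Stack the LR image and the noise map along the channel axis."""
    return np.concatenate([lr_image, noise_map], axis=0)

lr = np.zeros((3, 8, 8), dtype=np.float32)      # placeholder LR image
constant_map = repeat_noise_level(15.0, 8, 8)   # scalar level, repeated
x = mnm_input(lr, constant_map)
print(x.shape)  # (6, 8, 8)
```

A per-pixel noise map has the same shape as `constant_map` but may differ at every location, which is information the repeated scalar cannot carry.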

Number of MBMs. We also analyze the model performance with different numbers of MBMs. As shown in Table 4, we conduct experiments with one, two and three MBMs. Once one MBM is in place, adding more MBMs does not bring an obvious performance increase. This is because each MBM is a linear operation, so a stack of MBMs is equivalent to a single MBM. Considering the additional memory cost, our final model contains only one MBM by default.

| 1 MBM | 2 MBM | 3 MBM | PSNR / SSIM |
|---|---|---|---|
| ✓ | | | 25.96 / 0.7533 |
| | ✓ | | 25.97 / 0.7545 |
| | | ✓ | 25.95 / 0.7537 |
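The linearity argument can be illustrated with a small numerical check. The sketch below is a deliberate simplification: it uses 1-D, spatially invariant kernels and full convolution, whereas MBM applies dynamically generated kernels to images. It only shows the underlying property that two successive linear filters collapse into one.

```python
import numpy as np

rng = np.random.default_rng(0)
signal = rng.normal(size=32)
k1 = rng.normal(size=5)   # first linear filter
k2 = rng.normal(size=5)   # second linear filter

# Apply the two filters one after the other (np.convolve defaults to "full").
two_steps = np.convolve(np.convolve(signal, k1), k2)

# Merge the filters first, then apply the merged filter once.
merged_kernel = np.convolve(k1, k2)
one_step = np.convolve(signal, merged_kernel)

same_result = bool(np.allclose(two_steps, one_step))
print(same_result, merged_kernel.size)
```

Note that the merged kernel has a larger support (9 taps here) than either factor, so the equivalence is exact only if the single filter is allowed that support.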

5 Conclusion

In this paper, we propose a Degradation-guided Meta-restoration network for blind Super-Resolution (DMSR), which aims to restore a real-world LR image to an HR image. DMSR consists of two types of meta-restoration modules and a degradation extractor optimized by a tailored degradation consistency loss. The blur and noise degradations are estimated online by the extractor and guide the meta-restoration modules to generate restoration parameters. Therefore, our DMSR model can handle real-world LR images with a wide range of blur and noise degradations. Extensive experiments on benchmarks and real cases demonstrate the real-world applicability of our model. Nevertheless, we still observe failure cases on some images with complicated degradations. As future work, we will consider more types of degradations, such as motion blur, salt-and-pepper noise and JPEG block artifacts, to enhance the practical applicability of our model.

References

- [1] Eirikur Agustsson and Radu Timofte. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In CVPRW, pages 126–135, 2017.

- [2] Sefi Bell-Kligler, Assaf Shocher, and Michal Irani. Blind super-resolution kernel estimation using an internal-GAN. In NeurIPS, pages 284–293, 2019.

- [3] Marco Bevilacqua, Aline Roumy, Christine Guillemot, and Marie Line Alberi-Morel. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In BMVC, 2012.

- [4] Tao Dai, Jianrui Cai, Yongbing Zhang, Shu-Tao Xia, and Lei Zhang. Second-order attention network for single image super-resolution. In CVPR, pages 11065–11074, 2019.

- [5] Chao Dong, Chen Change Loy, Kaiming He, and Xiaoou Tang. Image super-resolution using deep convolutional networks. TPAMI, 38(2):295–307, 2015.

- [6] Chao Dong, Chen Change Loy, and Xiaoou Tang. Accelerating the super-resolution convolutional neural network. In ECCV, pages 391–407, 2016.

- [7] Majed El Helou, Ruofan Zhou, and Sabine Süsstrunk. Stochastic frequency masking to improve super-resolution and denoising networks. In ECCV, pages 749–766, 2020.

- [8] Sina Farsiu, Michael Elad, and Peyman Milanfar. Multiframe demosaicing and super-resolution of color images. TIP, 15(1):141–159, 2005.

- [9] Jinjin Gu, Hannan Lu, Wangmeng Zuo, and Chao Dong. Blind super-resolution with iterative kernel correction. In CVPR, pages 1604–1613, 2019.

- [10] Gao Huang, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q Weinberger. Densely connected convolutional networks. In CVPR, pages 4700–4708, 2017.

- [11] Shady Abu Hussein, Tom Tirer, and Raja Giryes. Correction filter for single image super-resolution: Robustifying off-the-shelf deep super-resolvers. In CVPR, pages 1428–1437, 2020.

- [12] Michal Irani and Shmuel Peleg. Improving resolution by image registration. CVGIP, 53(3):231–239, 1991.

- [13] Jiwon Kim, Jung Kwon Lee, and Kyoung Mu Lee. Accurate image super-resolution using very deep convolutional networks. In CVPR, pages 1646–1654, 2016.

- [14] Jiwon Kim, Jung Kwon Lee, and Kyoung Mu Lee. Deeply-recursive convolutional network for image super-resolution. In CVPR, pages 1637–1645, 2016.

- [15] Marc Lebrun, Miguel Colom, and Jean-Michel Morel. The noise clinic: a blind image denoising algorithm. IPOL, 5:1–54, 2015.

- [16] Christian Ledig, Lucas Theis, Ferenc Huszár, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, et al. Photo-realistic single image super-resolution using a generative adversarial network. In CVPR, pages 4681–4690, 2017.

- [17] Bee Lim, Sanghyun Son, Heewon Kim, Seungjun Nah, and Kyoung Mu Lee. Enhanced deep residual networks for single image super-resolution. In CVPRW, pages 136–144, 2017.

- [18] Jie Liu, Wenjie Zhang, Yuting Tang, Jie Tang, and Gangshan Wu. Residual feature aggregation network for image super-resolution. In CVPR, pages 2359–2368, 2020.

- [19] Andreas Lugmayr, Martin Danelljan, and Radu Timofte. NTIRE 2020 challenge on real-world image super-resolution: Methods and results. In CVPRW, pages 494–495, 2020.

- [20] David Martin, Charless Fowlkes, Doron Tal, and Jitendra Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In ICCV, pages 416–423, 2001.

- [21] Yiqun Mei, Yuchen Fan, Yuqian Zhou, Lichao Huang, Thomas S Huang, and Honghui Shi. Image super-resolution with cross-scale non-local attention and exhaustive self-exemplars mining. In CVPR, pages 5690–5699, 2020.

- [22] Ben Niu, Weilei Wen, Wenqi Ren, Xiangde Zhang, Lianping Yang, Shuzhen Wang, Kaihao Zhang, Xiaochun Cao, and Haifeng Shen. Single image super-resolution via a holistic attention network. In ECCV, pages 191–207, 2020.

- [23] Ozan Oktay, Wenjia Bai, Matthew Lee, Ricardo Guerrero, Konstantinos Kamnitsas, Jose Caballero, Antonio de Marvao, Stuart Cook, Declan O’Regan, and Daniel Rueckert. Multi-input cardiac image super-resolution using convolutional neural networks. In MICCAI, pages 246–254, 2016.

- [24] Wenzhe Shi, Jose Caballero, Ferenc Huszár, Johannes Totz, Andrew P Aitken, Rob Bishop, Daniel Rueckert, and Zehan Wang. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In CVPR, pages 1874–1883, 2016.

- [25] Assaf Shocher, Nadav Cohen, and Michal Irani. “Zero-shot” super-resolution using deep internal learning. In CVPR, pages 3118–3126, 2018.

- [26] Ying Tai, Jian Yang, and Xiaoming Liu. Image super-resolution via deep recursive residual network. In CVPR, pages 3147–3155, 2017.

- [27] Ying Tai, Jian Yang, Xiaoming Liu, and Chunyan Xu. MemNet: A persistent memory network for image restoration. In ICCV, pages 4539–4547, 2017.

- [28] Radu Timofte, Eirikur Agustsson, Luc Van Gool, Ming-Hsuan Yang, and Lei Zhang. NTIRE 2017 challenge on single image super-resolution: Methods and results. In CVPRW, pages 114–125, 2017.

- [29] Tong Tong, Gen Li, Xiejie Liu, and Qinquan Gao. Image super-resolution using dense skip connections. In ICCV, pages 4799–4807, 2017.

- [30] Xintao Wang, Ke Yu, Shixiang Wu, Jinjin Gu, Yihao Liu, Chao Dong, Yu Qiao, and Chen Change Loy. ESRGAN: Enhanced super-resolution generative adversarial networks. In ECCVW, pages 63–79, 2018.

- [31] Yu-Syuan Xu, Shou-Yao Roy Tseng, Yu Tseng, Hsien-Kai Kuo, and Yi-Min Tsai. Unified dynamic convolutional network for super-resolution with variational degradations. In CVPR, pages 12496–12505, 2020.

- [32] Deniz Yıldırım and Oğuz Güngör. A novel image fusion method using IKONOS satellite images. Journal of Geodesy and Geoinformation, 1(1):75–83, 2012.

- [33] Roman Zeyde, Michael Elad, and Matan Protter. On single image scale-up using sparse-representations. In International conference on curves and surfaces, pages 711–730, 2010.

- [34] Kai Zhang, Luc Van Gool, and Radu Timofte. Deep unfolding network for image super-resolution. In CVPR, pages 3217–3226, 2020.

- [35] Kai Zhang, Wangmeng Zuo, and Lei Zhang. Learning a single convolutional super-resolution network for multiple degradations. In CVPR, pages 3262–3271, 2018.

- [36] Kai Zhang, Wangmeng Zuo, and Lei Zhang. Deep plug-and-play super-resolution for arbitrary blur kernels. In CVPR, pages 1671–1681, 2019.

- [37] Yulun Zhang, Kunpeng Li, Kai Li, Lichen Wang, Bineng Zhong, and Yun Fu. Image super-resolution using very deep residual channel attention networks. In ECCV, pages 286–301, 2018.

- [38] Yulun Zhang, Yapeng Tian, Yu Kong, Bineng Zhong, and Yun Fu. Residual dense network for image super-resolution. In CVPR, pages 2472–2481, 2018.

- [39] Ruofan Zhou and Sabine Süsstrunk. Kernel modeling super-resolution on real low-resolution images. In ICCV, pages 2433–2443, 2019.

Supplementary

In this supplementary material, Section A compares the running time and the number of parameters. Section B describes the network structure of our proposed DMSR in detail. Section C shows the degradation parameter window that is used to choose the parameters for non-blind SR methods. Finally, Section D presents more visual results.

Appendix A Running Time and Parameter Number

In this section, we discuss the inference time and the number of parameters of DMSR. Our DMSR model is compared with the SOTA blind SR approaches adopted in our paper, namely ZSSR [25], IKC [9] and SFM [7]. Because we choose the blind SR SOTA method IKC as the backbone of SFM, their inference time and number of parameters are the same. For running time, all the models are run on a single RTX 2080 GPU with an input LR image of the size . Table B.5 shows the results with scale factor 2. ZSSR has the fewest parameters, but its inference time is extremely high. This is because, for every input LR image, ZSSR must first train on that image before generating the SR result, which restricts its practical application. IKC and SFM take more time than our DMSR model at inference because of their iterative strategy. Our DMSR model achieves significantly better performance than the SOTA methods with fewer parameters and less inference time.

Appendix B Details of Network Structure

Our DMSR model contains a degradation extractor and an SR network. The structure of the degradation extractor is illustrated in Table B.6. A convolution layer and two residual blocks are adopted to extract image features. In the noise branch, an additional convolution layer predicts the noise map. In the blur branch, two convolution layers, a global pooling layer and a softmax layer are adopted sequentially to estimate the blur kernel with the size of .

Our SR network is composed of two types of meta-restoration modules (MNM and MBM) together with a deep feature learning and up-sampling part. The structure of the SR network is shown in Table B.7. We divide the SR network into three parts; the last two layer columns list the layers for MNM and MBM.

| Id | Layer name(s) (shared / blur branch) | Layer name(s) (noise branch) |
|---|---|---|
| 0 | Conv(3, 64), ReLU | |
| 1 | ResBlock(64) | |
| 2 | ResBlock(64) | |
| 3 | Conv(64, 128), ReLU | Conv(64,3) |
| 4 | Conv(128, 225), ReLU | |
| 5 | GlobalPool | |
| 6 | Softmax | |
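The last two layers of the blur branch (GlobalPool followed by Softmax) can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the pooling is taken to be global average pooling, and the 225 output channels are interpreted as a flattened 15 × 15 kernel because 225 = 15². Neither detail is confirmed by the table itself, and `blur_kernel_head` is a hypothetical name.

```python
import numpy as np

def blur_kernel_head(features, kernel_size=15):
    """Turn a (kernel_size**2, H, W) feature map into a normalized kernel.

    Assumptions: global *average* pooling, and a square kernel whose
    flattened length equals the number of feature channels.
    """
    pooled = features.mean(axis=(1, 2))       # GlobalPool -> (225,)
    shifted = pooled - pooled.max()           # numerically stable softmax
    weights = np.exp(shifted)
    weights /= weights.sum()                  # Softmax -> sums to 1
    return weights.reshape(kernel_size, kernel_size)

rng = np.random.default_rng(0)
features = rng.normal(size=(225, 12, 12))     # stand-in for Conv(128, 225) output
kernel = blur_kernel_head(features)
print(kernel.shape)
```

The softmax guarantees a non-negative kernel whose entries sum to one, which is the usual constraint on a blur kernel.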

| Id | Layer name(s) | Id | Layer name(s) for MNM | Id | Layer name(s) for MBM | Output size |
|---|---|---|---|---|---|---|
| | | 1-0 | Concat() | | | |
| | | 1-1 | Conv(6, 64) | | | |
| 0-0 | Conv(64, 64)(#1-1) | | | | | |
| 0-1 | Residual Group | | | | | |
| 0-2 | Conv, PixelShuffle, ReLU | | | | | |
| 0-3 | Conv(64, 3) | | | | | |
| | | | | 2-0 | FC(225, 15)() | |
| | | | | 2-1 | Repeat Spatially | |
| | | | | 2-2 | Concat(#0-3 #2-1) | |
| | | | | 2-3 | Conv(18, 225) | |
| 0-4 | DynamicConv(#2-3) | | | | | |
| 0-5 | Conv(3, 3) | | | | | |
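As a rough illustration of the `DynamicConv` step, the sketch below filters every pixel with its own kernel. It assumes that the 225-channel output of layer 2-3 holds one flattened 15 × 15 kernel per spatial location and that this kernel is shared across the three color channels; both points, the edge padding, and the function name `dynamic_conv` are assumptions rather than details given in the table.

```python
import numpy as np

def dynamic_conv(image, kernels, kernel_size):
    """Filter each pixel with its own kernel (cross-correlation form).

    image:   (C, H, W) array.
    kernels: (kernel_size**2, H, W) array, one flattened kernel per pixel,
             shared across channels (assumption).
    """
    _, h, w = image.shape
    pad = kernel_size // 2
    padded = np.pad(image, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    out = np.zeros_like(image)
    for dy in range(kernel_size):
        for dx in range(kernel_size):
            weight = kernels[dy * kernel_size + dx]          # (H, W)
            out += weight * padded[:, dy:dy + h, dx:dx + w]  # shifted view
    return out

rng = np.random.default_rng(0)
image = rng.random((3, 10, 10))
k = 15
# Per-pixel identity kernels: only the center tap is 1.
identity = np.zeros((k * k, 10, 10))
identity[(k // 2) * k + k // 2] = 1.0
restored = dynamic_conv(image, identity, k)
```

With identity kernels the output equals the input, which is a convenient check that the indexing of the flattened kernel is consistent.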

Appendix C Degradation Parameter Window

In this part, we show the degradation parameter window used to choose the degradation parameters for non-blind SR methods. As shown in Figure C.9, during inference we choose the most similar degraded image in the window and adopt its parameters as the input for non-blind SR methods.

Appendix D More Visual Results

In this section, we show more blind SR results on the commonly used datasets Set5 [3], Set14 [33] and B100 [20] in Figure C.10. These results further demonstrate the effectiveness of our DMSR model over SOTA models. In addition, to verify the robustness of our proposed DMSR model, we show SR results under different degradations in Figure D.11. We choose a wide range of blur kernel widths and noise levels as degradations with scale factor 4. The results demonstrate that our model can handle a wide range of degradations in real-world scenarios.

We also observe some failure cases in our experiments, which are shown in Figure D.12. Under severe noise and blur degradation, our DMSR model fails to generate accurate textures in some special cases. We will study more effective models to address such cases.