Defense Against Adversarial Attacks on No-Reference Image Quality Models with Gradient Norm Regularization

Abstract

The task of No-Reference Image Quality Assessment (NR-IQA) is to estimate the quality score of an input image without additional information. NR-IQA models play a crucial role in the media industry, aiding in performance evaluation and optimization guidance. However, these models are found to be vulnerable to adversarial attacks, which introduce imperceptible perturbations to input images, resulting in significant changes in predicted scores. In this paper, we propose a defense method to improve the stability in predicted scores when attacked by small perturbations, thus enhancing the adversarial robustness of NR-IQA models. To be specific, we present theoretical evidence showing that the magnitude of score changes is related to the norm of the model’s gradient with respect to the input image. Building upon this theoretical foundation, we propose a norm regularization training strategy aimed at reducing the norm of the gradient, thereby boosting the robustness of NR-IQA models. Experiments conducted on four NR-IQA baseline models demonstrate the effectiveness of our strategy in reducing score changes in the presence of adversarial attacks. To the best of our knowledge, this work marks the first attempt to defend against adversarial attacks on NR-IQA models. Our study offers valuable insights into the adversarial robustness of NR-IQA models and provides a foundation for future research in this area.

1 Introduction

Deep Neural Networks (DNNs) have demonstrated remarkable performance across various domains [11, 16, 22], and Image Quality Assessment (IQA) is one of them. IQA aims to predict the quality of images consistent with human perception. And it could be categorized as Full-Reference (FR) and No-Reference (NR) according to the access to the reference images. While FR-IQA models specialize in assessing the perceptual disparities between two images, NR-IQA models focus on estimating a quality score for a single input image. The importance of IQA extends to many applications such as image transport systems [8], image in-painting [14] and so on [6, 45, 27]. Leveraging the capabilities of DNNs, recent IQA models have achieved remarkable consistency with human opinion scores [32].

However, the reliability of DNNs is challenged since they are found to be susceptible to adversarial perturbations. Attackers would mislead DNNs to make decisions inconsistent with human perception by adding carefully designed perturbations to inputs. This manipulation technique is called the adversarial attack, and the perturbed inputs are called adversarial examples. The initial discovery of DNNs’ vulnerability to adversarial attacks was in the context of classification tasks [33]. Subsequently, the threats of adversarial attacks are explored in various tasks, including object detection [34], segmentation [26], natural language processing [49], and many others [1, 19].

Recently, adversarial attacks on IQA models have garnered significant attention. Several attack methods targeting NR-IQA models have been proposed, where attackers aim to significantly change the predicted scores with small adversarial perturbations to input images. For instance, Zhang et al. [47] generated adversarial examples using the Lagrange multiplier method, imposing several constraints on the quality of adversarial examples. Besides, Shumitskaya et al. [30, 31] and Korhonen et al. [17] trained an individual model to generate adversarial examples.

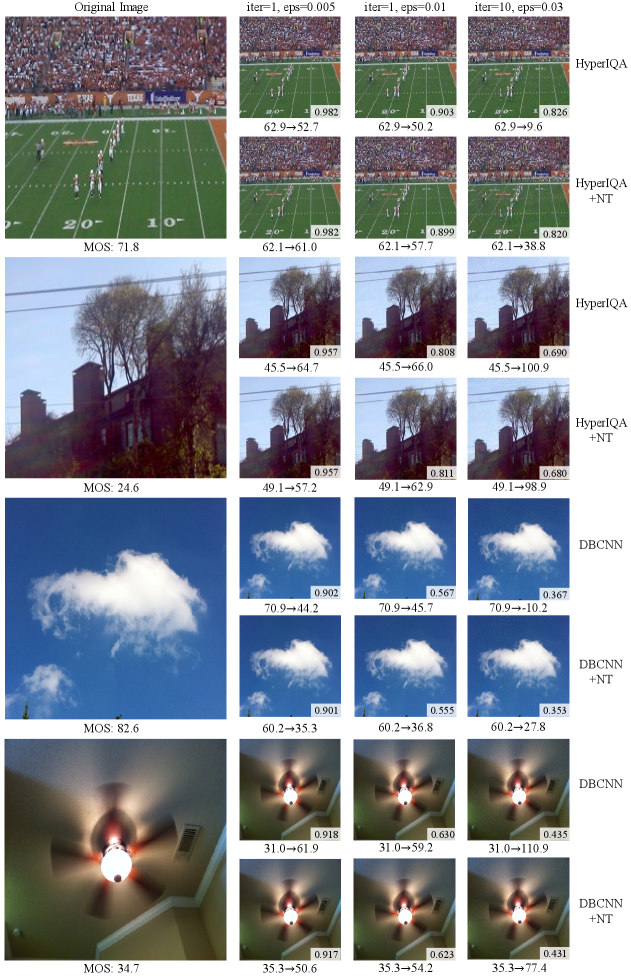

However, despite these proposed attack techniques highlighting vulnerabilities in NR-IQA models, no methods have been put forth to defend against attacks and improve the adversarial robustness of NR-IQA methods. Training robust IQA models is essential for improving the reliability of these models in real-world applications. For instance, in online advertising, the quality of ad images can significantly impact viewer engagement. Adversarial attacks on NR-IQA metrics could result in low-quality ad images being rated highly or high-quality ad images being rated lowly, as cases shown in Figure 2, potentially reducing the effectiveness of online advertising campaigns. Therefore, there is an impending need to train robust NR-IQA models, which is crucial for ensuring both the reliability and security of NR-IQA models in practical applications.

In this paper, we propose a defense method to improve the adversarial robustness of NR-IQA models in terms of reducing the quality score changes before and after adversarial attacks, which is supported by both theoretical foundations and empirical evidence. We analyze existing attacks on NR-IQA models and establish a theoretical foundation demonstrating the strong relationship between the adversarial robustness of an NR-IQA model and the norm of its gradient concerning the input image . We found that for an NR-IQA model, a smaller implies a more robust model. Drawing upon the theoretical analysis, we propose the regularization of the gradient’s norm to enhance the adversarial robustness of NR-IQA models. A direct way to regularize is adding it to the loss function in the training phase, which needs double backpropagation to compute the gradient of the regularization term with respect to model parameters considering the calculation mechanism of DNNs. However, double backpropagation is not currently scalable for large-scale DNNs [12]. Therefore, we approximate by finite differences [38] instead of using it directly. The approximation result is utilized as the regularization term, effectively constraining .

To further verify our methodology, we conduct experiments on four baseline NR-IQA models and four attack methods. The results show the effectiveness of the norm regularization strategy in boosting baseline models’ robustness against adversarial attacks. To the best of our knowledge, this is the first work to propose a defense method against adversarial attacks on NR-IQA models, which uses the norm of the gradient as a regularization term. This paper establishes a theoretical connection between the robustness of the NR-IQA model against adversarial attacks and the gradient norm with respect to the input image. To support reproducible scientific research, we release the code at https://github.com/YangiD/DefenseIQA-NT.

2 Related Work

Adversarial attacks were first studied in classification tasks, so we introduce these attacks along with defense methods in Sec. 2.1. Sec. 2.2 and Sec. 2.3 provide a brief overview of NR-IQA models and attacks on these models.

2.1 Adversarial Attacks and Their Defenses in Classification Tasks

Based on the available knowledge of the target model, adversarial attacks can be divided into white-box attacks and black-box attacks. In white-box scenarios, attackers possess comprehensive knowledge about the target model. Some classic attacks treated the problem of generating adversarial examples as an optimization task [10, 3, 25]. Alternatively, some attacks proposed to train a new model to generate adversarial examples [48, 39, 20]. Conversely, in the case of black-box attacks, attackers are restricted to accessing only the output of the target model. A predominant strategy for executing black-box attacks involves generating adversarial examples on a known source model and subsequently transferring them to the target model [40, 13, 21].

To defend against adversarial attacks in classification tasks, a widely used method is adversarial training [33] and its variants [35, 43, 29, 49]. Adversarial training involves the generation of adversarial examples using specific attacks, which are incorporated into the training dataset so that the model can learn from these adversarial examples in the training phase. It acts as a form of data augmentation and helps to improve the robustness of the network.

2.2 IQA Tasks and Models

IQA tasks aim to predict image quality scores consistent with human perception (i.e., Mean Opinion Score, MOS for short), which could be divided into FR and NR. For FR-IQA, it involves comparing a distorted image and its reference image to predict the quality score of the distorted image. Due to the difficulty of obtaining reference images in some authentic scenes, NR-IQA proposes to predict the quality score with only the distorted image.

NR-IQA methods extract features related to human perception of image quality. Some methods [24, 23] considered the hand-craft feature from Natural Scene Statistics. Further works explored the impact of image semantic information on human perception of image quality. HyperIQA [32] used a hypernetwork to obtain different quality estimators for images with different content. DBCNN [46] extracted distorted information and semantic information of images by two independent neural networks and combined them with a bi-linear pooling. LinearityIQA [18] proposed the normalization of scores in the loss function for faster convergence of the model. Meanwhile, some methods explored the effectiveness of different network architectures. MANIQA [41] and MUSIQ [15] utilized vision transformers [5] and verified their effectiveness in NR-IQA tasks.

2.3 Adversarial Attacks on NR-IQA Models

The issue of adversarial attacks within the context of IQA tasks has garnered some attention, although research in this area remains somewhat limited. Recently, some attack methods have been designed for NR-IQA models.

In white-box scenarios, the Perceptual Attack [47] modeled NR-IQA attacks as an optimization problem, where it employed the Lagrange multiplier method to solve this optimization problem. Perceptual Attack had tried different constraints on the image quality of adversarial examples, including the Chebyshev distance, LPIPS [44], SSIM [37] and DISTS [4]. Shumitskaya et al. [30] proposed to update a universal perturbation through a set of images and added it to clean images to attack NR-IQA models.

In black-box scenarios, the Kor. Attack [17] adapted ideas from attacks in classification tasks, creating adversarial examples through ResNet50 [11] and transferring them to the unknown target model. Likewise, Shumitskaya et al. [31] proposed to train a U-Net [28] to generate different adversarial perturbations for each image.

Although Korhonen et al. [17] adapted basic defense mechanisms from classification models to NR-IQA models, they did not investigate defense methods that are specifically designed for NR-IQA tasks.

3 Preliminary

3.1 Definition of Attacks on NR-IQA Models

Adversarial attacks on NR-IQA models aim to manipulate the predicted score of an input image by an NR-IQA model so that the objective score by models is inconsistent with the subjective score by humans. As for a successful attack on an NR-IQA model, there are imperceptible differences to the human eye between original images and adversarial examples, but these subtle perturbations result in large changes in the predicted scores generated by NR-IQA models. Examples are shown in Figure 2 (a) and (b). This attack can be mathematically described as follows:

| (1) |

where symbolizes the perturbation added to , while the function quantifies the perceptual distance between two images, and characterizes the tolerance of human eyes for image differences. An assumption is that when , the subjective score of is the same as . In our methodology, we take as defined by:

| (2) |

for the convenience of our theoretical analysis. Moreover, it has been used in attacks for IQA tasks [47, 17].

3.2 Robustness Evaluations of NR-IQA Models

The adversarial robustness of NR-IQA measures the stability of the NR-IQA model to imperceptible perturbations of input images generated by attacks. For example, when the original image and its adversarial example have the same appearance, an NR-IQA model should give both the same quality scores. Figure 2 presents instances where DBCNN fails in this aspect. Researchers tend to assess the adversarial robustness of NR-IQA models by evaluating their IQA performance on adversarial examples [17, 47]. A better performance implies that the model is more robust.

Typically, the performance of an NR-IQA model is measured using four metrics: Root Mean Square Error (RMSE), Pearson’s Linear Correlation Coefficient (PLCC), Spearman Rank-Order Correlation Coefficient (SROCC), and Kendall Rank-Order Correlation Coefficient (KROCC).111Formulations of these metrics are in the supplementary material. RMSE and PLCC are indicators of prediction accuracy, while SROCC and KROCC assess the prediction monotonicity [36]. When an NR-IQA model is attacked, greater robustness is indicated by smaller RMSE and larger PLCC, SROCC, and KROCC values. In this paper, we provide a theoretical analysis of robustness in terms of RMSE, and test all these metrics in the experimental part.

4 Methodology

In this section, we offer a theoretical exposition on improving NR-IQA models’ adversarial robustness in terms of the magnitude of changes in predicted scores. We show that the robustness can be enhanced by regularizing the norm of the gradient. We also propose a method for training a robust NR-IQA model using the norm regularization method.

4.1 Why to Regularize Gradient Norm?

In this subsection, we will outline the theoretical foundations regarding the relationship between the robustness in terms of score changes and the norm of the gradient. It raises the necessity of regularizing the norm of the input gradient of the predicted score. We prove that the magnitude of changes in predicted scores can be effectively approximated by the norm of , with the assumption that the norm of perturbations is bounded.

Theorem 1.

Suppose represents an NR-IQA model, is the strength of an attack, and denotes an input image. The maximum change in predicted scores of by against -bounded attacks is highly correlated to , which can be formulated as

| (3) |

Proof.

To begin, we apply the first-order Taylor expansion to the function in the vicinity of , yielding:

| (4) |

Then, . Meanwhile, has the maximum value when , and this leads to Eq. (3). ∎

This theorem establishes the connection between changes in predicted scores and the norm of the gradient. According to Theorem 1, suppose the strength of attacks is fixed, then the extent of score changes is primarily determined by the norm of the gradient . In practical terms, this signifies that the regularization of will lead to smaller fluctuations in predicted scores and thereby improve the adversarial robustness of against imperceptible attacks.

4.2 How to Regularize Gradient Norm?

To train a robust NR-IQA model incorporating gradient norm regularization, a direct way is to add the norm of gradients to the loss function, i.e.,

| (5) |

The loss function comprises two components: the loss tailored to the specific NR-IQA task, and the norm regularization term with a positive weight .

However, directly adding the term to the loss function leads to the requirement of double backpropagation for computing the gradient of this term with respect to model parameters, which is time-consuming and currently not suitable for large-scale DNNs [7]. Therefore, we employ an approximation technique for the regularization term. Drawing inspiration from the methodology presented in the work [12], we leverage the finite difference [38] technique to estimate , i.e.,

| (6) |

where is the step size and .

Finally, the loss function with the regularization of the norm of the gradient is as follows:

| (7) |

5 Experiments

In this section, we present extensive experiments conducted on various NR-IQA baseline models to validate the efficacy of our proposed Norm regularization Training (NT) strategy. We briefly overview our experimental setup in Sec. 5.1. Subsequently, in Sec. 5.2, we demonstrate the enhancement in robustness achieved by the NT strategy against a diverse set of attacks. Furthermore, we illustrate the role of the finite difference approximation in reducing the norm of the gradients (Sec. 5.3), as well as the relationship between attack intensity and robustness (Sec. 5.4). We also perform ablation studies on hyperparameters and in Sec. 5.5.

5.1 Experimental Settings

Experiments were carried out on the popular LIVEC dataset [9]. We randomly selected 80% of the images for training and the remaining 20% for testing and attacks.

Our experiments to assess the robustness of NR-IQA models are structured along three key dimensions, as depicted in Figure 3. The first dimension revolves around the choice of the baseline models. We evaluate our NT strategy on four prominent NR-IQA baseline models: HyperIQA [32], DBCNN [46], LinearityIQA [18], and MANIQA [41]. Each of these baseline models is referred to as “baseline,” while the models trained with NT are denoted as “baseline+NT.” The NT strategy is applied to HyperIQA with the weight , DBCNN, and LinearityIQA with and MANIQA with . For all models, the step size . Further training settings are provided in the supplementary material.

The second dimension involves the selection of attack methods. We employ four attack methods designed for NR-IQA tasks. These attacks include two white-box attacks: FGSM222FGSM was originally designed for classification tasks, but we modify its loss to for NR-IQA tasks (refer to the supplementary material). [10] and Perceptual Attack [47], as well as two black-box attacks: UAP333UAP is proposed as a white-box attack. We employ its perturbation generated on PaQ-2-PiQ model [42], and serve UAP as a black-box attack. [30] and Kor. Attack [17]. To ensure fairness in our evaluations, each attack method uses the same setting (i.e., employing the same hyperparameters in attack) when targeting different models. We set different hyperparameters for different attacks and ensure the majority of attacked images’ SSIM [37] was above 0.9 to satisfy the assumption that the MOS is the same for both images before and after the attack. Detailed hyperparameter information for these attacks can be found in the supplementary material. Black-box attacks are indicated with an asterisk in the tables of this paper.

The third dimension pertains to the evaluation metrics for NR-IQA models. As detailed in Sec. 3.2, we follow the evaluations in previous works and consider four metrics in this paper, i.e., RMSE, PLCC, SROCC, and KROCC. Additionally, we incorporate the robustness [47] into our analysis. This metric is proposed to assess the model robustness by measuring the relative score changes before and after attacks. Formulations of these metrics are shown in the supplementary material. Except for robustness, other metrics are conventionally computed by comparing the predicted scores by models against MOS provided by humans.444We normalize MOS to for a straightforward comparison. In our evaluation, we extend the analysis of these metrics to assess predicted scores by a model both before and after attacks, since attackers only possess prediction scores before attacks. Notably, in accordance with the attack definition in Eq. (1), the NT strategy primarily focuses on the magnitude of changes in predicted scores between predicted scores before and after attacks. It could be measured by RMSE between predicted scores before and after attacks.

5.2 Robustness Improvement

In this subsection, we present the performance of NR-IQA models on unattacked images (where R robustness is not applicable), as well as their adversarial robustness against different attack methods. Our experimental results are summarized into four key observations. We provide additional analysis of adversarial robustness improvement in the supplementary material.

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| RMSE | 9.913 12.575 | 10.897 13.140 | 12.730 13.173 | 26.082 23.830 |

| SROCC | 0.899 0.859 | 0.866 0.856 | 0.832 0.820 | 0.876 0.871 |

| PLCC | 0.916 0.868 | 0.892 0.849 | 0.840 0.827 | 0.870 0.876 |

| KROCC | 0.728 0.670 | 0.688 0.666 | 0.641 0.627 | 0.696 0.692 |

Observation 1.

The NT strategy results in a slight decrease in the performance of NR-IQA models on clean images.

The performance of both the baseline models and their NT-enhanced versions on unattacked images are shown in Table 1. These metrics are calculated between MOS values and predicted scores on unattacked images. We can see that the NT strategy leads to a slight decrease in RMSE, SROCC, PLCC, and KROCC compared with baseline models. Similar trends were reported in the context of classification models that defense methods would cause a decline in classification accuracy on clean images. [33, 35, 43]. These findings suggest that enhanced robustness is often achieved at the cost of reducing performance on unattacked images. Nonetheless, the performance decline induced by the NT strategy is marginal and well within acceptable limits.

| MOS & Predicted Score After Attack | Score Before Attack & Score After Attack | |||||||

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| FGSM | 25.729 16.828 | 36.758 24.711 | 50.823 40.104 | 24.899 25.712 | 19.174 7.885 | 32.778 19.065 | 48.128 36.988 | 15.549 6.562 |

| Perceptual | 13.565 12.593 | 88.864 51.961 | 115.395 80.949 | 22.745 21.998 | 6.360 0.130 | 63.991 14.524 | 115.732 80.857 | 0.079 0.189 |

| UAP∗ | 17.765 16.363 | 19.775 17.188 | 16.997 16.847 | 23.109 27.832 | 10.583 8.131 | 14.833 10.922 | 20.813 19.434 | 5.795 5.592 |

| Kor.∗ | 18.564 17.667 | 12.617 12.707 | 19.500 17.865 | 18.423 17.395 | 13.698 10.107 | 6.514 5.298 | 14.807 12.407 | 7.759 6.680 |

| MOS & Predicted Score After Attack | Score Before Attack & Score After Attack | |||||||

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| FGSM | 0.021 0.810 | -0.318 0.200 | -0.375 -0.347 | 0.417 0.772 | 0.043 0.941 | -0.333 0.227 | -0.429 -0.426 | 0.428 0.878 |

| Perceptual | 0.815 0.858 | -0.127 0.643 | 0.477 0.567 | 0.876 0.871 | 0.938 1.000 | -0.160 0.773 | 0.542 0.685 | 1.000 1.000 |

| UAP∗ | 0.736 0.822 | 0.705 0.760 | 0.715 0.739 | 0.773 0.839 | 0.825 0.941 | 0.836 0.887 | 0.836 0.869 | 0.923 0.976 |

| Kor.∗ | 0.808 0.802 | 0.863 0.856 | 0.775 0.775 | 0.828 0.847 | 0.892 0.922 | 0.978 0.983 | 0.936 0.936 | 0.942 0.969 |

Observation 2.

The NT strategy significantly improves the robustness of NR-IQA models in most cases, where the robustness is in terms of RMSE, SROCC, PLCC, KROCC or robustness.

Due to space constraints, we only present the robustness results for RMSE (Table 2) and SROCC (Table 3) in this subsection. Comprehensive results for PLCC, KROCC and robustness can be found in the supplementary material. In both tables, columns 2-5 display the IQA metric calculated between MOS values of unattacked images and predicted scores on adversarial examples, while columns 6-9 showcase the metric calculated between predicted scores on unattacked images and scores on adversarial examples.

As shown in Table 2, when RMSE is computed between predicted scores before and after attacks, NR-IQA models trained with the NT strategy exhibit smaller score changes under nearly all attack scenarios compared to baseline models. These results confirm the correctness of our theoretical analysis in Sec. 4.1. The robustness improvement is especially significant when models are attacked by FGSM. The only exception is MANIQA when attacked by the Perceptual Attack. In this case, the RMSE of MANIQA is smaller than that of the NT-trained model, where the difference is only 0.11. We think this phenomenon can be attributed to inherent biases among test images. Furthermore, MANIQA and its NT-enhanced version exhibit significant robustness against the Perceptual Attack, as indicated by an SROCC value of 1 between predicted scores before and after the attack. This signifies that the Perceptual Attack has minimal impact on MANIQA and MANIQA+NT, resulting in a reasonably small difference in RMSE between the two models.

When considering RMSE results measured between MOS values and predicted scores after attacks, the robustness of NT-trained models is also improved. For example, the RMSE value of DBCNN under the FGSM attack is about 36.758, whereas that of DBCNN+NT is just 24.711. There are only 3 out of 16 cases where baseline+NT models perform worse than baseline models. Such occurrences are expected because the NT strategy does not leverage MOS information but relies on the original predicted scores.

Results shown in Table 3 demonstrate that the NT strategy can also enhance the robustness in terms of SROCC, although we are not clear about the theoretical connection between the NT strategy and SROCC. The improvement is particularly pronounced in white-box scenarios. Taking the HyperIQA model as an example, the robustness of the baseline model measured by SROCC is notably deficient under the FGSM attack. The SROCC value between MOS values and predicted scores is a mere 0.021, while the SROCC value between predicted scores before and after the FGSM attack is only 0.043. However, with the inclusion of the NT strategy, there is a significant enhancement in SROCC. The SROCC value between MOS values and predicted scores increases to 0.810, while the SROCC between scores before and after the FGSM attack rises to 0.941. This exemplifies the effectiveness of the NT strategy in boosting the SROCC robustness of NR-IQA models.

Observation 3.

In IQA tasks, the robustness in terms of distinct metrics is not completely the same.

In Table 2 and Table 3, it is evident that a model showing robustness in terms of RMSE when subjected to an attack method may not necessarily exhibit robustness in SROCC. Take the left part of the two tables as an example, for baseline models, we can see that HyperIQA achieves much better robustness in terms of RMSE than MANIQA against the UAP attack (17.765 vs. 23.109), but it performs worse in SROCC (0.736 vs. 0.773). Similar phenomena also occur with NT-trained models where LinearityIQA+NT shows better RMSE robustness but worse SROCC robustness than DBCNN+NT against the UAP attack. How to make a trade off between the adversarial robustness from different perspectives brings challenges in IQA tasks, potentially opening up new avenues for further exploration and research.

Observation 4.

The NT strategy exhibits a more effective defense against white-box attacks compared to black-box attacks.

Table 2 and Table 3 demonstrate that the improvement in RMSE / SROCC from the baseline to baseline+NT models is generally greater under white-box attacks than under black-box attacks. This trend is more clear from Table S7 in the supplementary material by comparing the averaged metrics of improvement.

This happens because the attack capability of existing black-box attacks is generally weaker than that of white-box attacks on NR-IQA models. Hence, the baseline models exhibit better robustness against black-box attacks compared to white-box attacks, making the robustness improvement brought by NT less evident in the black-box scenario. For instance, when attacked by black-box methods, the SROCC values between predicted scores before and after attacks for all baseline models exceed 0.8, while these values for most baseline models under white-box attacks are below 0.6. This observation highlights the importance of exploring effective black-box attacks on IQA models.

5.3 Norm Reduction

To validate the effectiveness of the NT strategy in reducing the norm of the gradient, as well as the accuracy of Eq. (6) in approximating the norm, we generate distribution plots of . Here, represents samples from the test set.

Figure 4 compares the norm distribution between baseline and baseline+NT models. We can see that the gradient norms of the baseline+NT models are all shifted towards the left compared to the baseline models. This indicates that models trained with the NT strategy exhibit a smaller gradient norm concerning the input image compared to the baseline models. These results confirm that Eq. (6) serves as a reliable approximation of the gradient norm.

To further demonstrate that a smaller enhances the robustness of an NR-IQA model against adversarial attacks, we draw a scatter plot to show the relationship between the adversarial robustness and the gradient norm. In Figure 5, the horizontal axis represents the logarithm of the average value across all test images. Points in the left part of Figure 5 generally follow a diagonal distribution from bottom left to top right, indicating that models with smaller gradient norms tend to exhibit better robustness in terms of RMSE. Moreover, points in the right part of Figure 5 are generally distributed from the top left to the bottom right. This reflects that models with smaller gradient norms tend to exhibit better robustness in terms of SROCC.

5.4 Attack Intensity and Robustness

To evaluate the robustness of the baseline model and the baseline+NT model under different attack intensities, we adjust the strength of the iterative FGSM attack (illustrated in the supplementary material) with different iterations and the norm of perturbations. Generally, a higher number of iterations and larger values correspond to more potent attacks. The attack intensity is quantified using SSIM, with smaller SSIM values signifying greater attack intensity.

Figure 6 presents the performance of HyperIQA and its NT-trained versions under attacks with varying intensities. As the attack intensities increase, the RMSE and SROCC values for HyperIQA and its NT version tend to get worse in general. This reflects that stronger attacks lead to decreased performance for both normally-trained and NT-trained models in most cases. Meanwhile, HyperIQA+NT model consistently keeps lower RMSE values than HyperIQA at the same attack intensity, regardless of the intensity levels. This demonstrates the effectiveness of the NT strategy against attacks with varying intensities.

5.5 Ablation Study

We conduct additional experiments to test the impact of hyperparameters in Eq. (7) for the NT strategy: the weight of the gradient norm and the step size in the finite difference. Due to the space limit, we present partial results and full results are shown in the supplementary material.

In Figure 7, we fix and vary in Eq. (7) in the range from to . Our analysis focuses on two aspects of an NR-IQA model: its performance on unattacked images and its robustness against attacks. For the former, we utilize SROCC on unattacked images across MOS values and predicted scores, and for the latter, we employ the RMSE between predicted scores before and after the FGSM attack. As increases, SROCC values on unattacked images tend to decrease on all baseline+NT models, while the RMSE values under the FGSM attack tends to decrease consistently. This implies that increasing enhances the robustness of NR-IQA models but leads to a performance decline on unattacked images.

To explore the effect of the step size in Eq. (7) on the performance of IQA models, we fix and vary in for DBCNN. In Table 4, we present the SROCC and RMSE values across MOS values and predicted scores for unattacked images, and SROCC and RMSE values between predicted scores before and after the FGSM attack for adversarial examples. In theory, a large cannot sufficiently represent the neighborhood of , so the approximation of the norm is inaccurate. The experimental results also confirm this point where the robustness of the model is worse when and . Conversely, an exceedingly small , such as , achieves effective defense performance but leads to a significant performance decline on unattacked images.

| 0.001 | 0.01 | 0.1 | 1 | ||

| Unattacked | SROCC | 0.788 | 0.856 | 0.846 | 0.844 |

| RMSE | 16.099 | 14.138 | 12.417 | 14.809 | |

| Attacked | SROCC | 0.577 | 0.200 | -0.3832 | -0.4406 |

| RMSE | 7.356 | 19.065 | 28.785 | 18.767 | |

6 Conclusion

To the best of our knowledge, this is the first work designing IQA-specific defense methods against adversarial attacks. Our work offers a rigorous theoretical proof that the score changes of NR-IQA models are related to the norm of the gradient when the perturbation is small. Furthermore, models trained with the proposed NT strategy exhibit significant improvement in adversarial robustness against both white-box and black-box attacks.

Limitations and future work. In this study, our primary theoretical analysis is on enhancing the prediction accuracy of NR-IQA models, i.e., reducing the changes in predicted scores when NR-IQA models are exposed to attacks. Nevertheless, an interesting and valuable avenue for future research is the development of NR-IQA models that demonstrate robustness in terms of prediction monotonicity like SROCC. Besides, we intend to explore our method applied to FR-IQA models in future work.

Acknowledgements. This work is partially supported by Sino-German Center (M 0187) and the NSFC under contract 62088102. Thank Ruohua Shi for supports during rebuttal. Thank Zhaofei Yu and Yajing Zheng for their valuable suggestions on the writing. Thank High-Performance Computing Platform of Peking University for providing computational resources.

References

- Abdullah et al. [2022] Hadi Abdullah, Aditya Karlekar, Vincent Bindschaedler, and Patrick Traynor. Demystifying limited adversarial transferability in automatic speech recognition systems. In ICLR, pages 1–17, 2022.

- Antsiferova et al. [2023] Anastasia Antsiferova, Khaled Abud, Aleksandr Gushchin, Sergey Lavrushkin, Ekaterina Shumitskaya, Maksim Velikanov, and Dmitriy Vatolin. Comparing the robustness of modern no-reference image-and video-quality metrics to adversarial attacks. arXiv preprint arXiv:2310.06958, 2023.

- Carlini and Wagner [2016] Nicholas Carlini and David Wagner. Towards evaluating the robustness of neural networks. In IEEE Symposium on Security and Privacy, pages 39–57, 2016.

- Ding et al. [2022] Keyan Ding, Kede Ma, Shiqi Wang, and Eero P. Simoncelli. Image quality assessment: Unifying structure and texture similarity. IEEE TPAMI, 44(5):2567–2581, 2022.

- Dosovitskiy et al. [2020] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Duanmu et al. [2017] Zhengfang Duanmu, Kede Ma, and Zhou Wang. Quality-of-experience of adaptive video streaming: Exploring the space of adaptations. In ACM MM, pages 1752–1760, 2017.

- Finlay and Oberman [2021] Chris Finlay and Adam M. Oberman. Scaleable input gradient regularization for adversarial robustness. Machine Learning with Applications, 3:100017, 2021.

- Fu et al. [2023] Haisheng Fu, Feng Liang, Jie Liang, Binglin Li, Guohe Zhang, and Jingning Han. Asymmetric learned image compression with multi-scale residual block, importance scaling, and post-quantization filtering. IEEE TCSVT, 33(8):4309–4321, 2023.

- Ghadiyaram and Bovik [2016] Deepti Ghadiyaram and Alan C. Bovik. Massive online crowdsourced study of subjective and objective picture quality. IEEE TIP, 25:372–387, 2016.

- Goodfellow et al. [2015] Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. In ICLR, pages 1–11, 2015.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- Ilyas et al. [2019] Andrew Ilyas, Logan Engstrom, and Aleksander Madry. Prior convictions: Black-box adversarial attacks with bandits and priors. In ICLR, pages 1–23, 2019.

- Inkawhich et al. [2019] Nathan Inkawhich, Wei Wen, Hai (Helen) Li, and Yiran Chen. Feature space perturbations yield more transferable adversarial examples. In CVPR, pages 7066–7074, 2019.

- Isogawa et al. [2019] Mariko Isogawa, Dan Mikami, Kosuke Takahashi, Daisuke Iwai, Kosuke Sato, and Hideaki Kimata. Which is the better inpainted image? Training data generation without any manual operations. IJCV, 127(11-12):1751–1766, 2019.

- Ke et al. [2021] Junjie Ke, Qifei Wang, Yilin Wang, Peyman Milanfar, and Feng Yang. MUSIQ: Multi-scale image quality transformer. In ICCV, pages 5148–5157, 2021.

- Khan et al. [2023] Manzoor Ahmed Khan, Hesham El-Sayed, Sumbal Malik, Talha Zia, Jalal Khan, Najla Alkaabi, and Henry Ignatious. Level-5 autonomous driving - Are we there yet? A review of research literature. ACM Computing Surveys, 55:27:1–27:38, 2023.

- Korhonen and You [2022] Jari Korhonen and Junyong You. Adversarial attacks against blind image quality assessment models. In Proceedings of the 2nd Workshop on Quality of Experience in Visual Multimedia Applications, pages 3–11, 2022.

- Li et al. [2020] Dingquan Li, Tingting Jiang, and Ming Jiang. Norm-in-norm loss with faster convergence and better performance for image quality assessment. In ACM MM, pages 789–797, 2020.

- Liang et al. [2021] Kaizhao Liang, Jacky Y. Zhang, Boxin Wang, Zhuolin Yang, Sanmi Koyejo, and Bo Li. Uncovering the connections between adversarial transferability and knowledge transferability. In ICML, pages 1–11, 2021.

- Liu et al. [2019] Aishan Liu, Xianglong Liu, Jiaxin Fan, Yuqing Ma, Anlan Zhang, Huiyuan Xie, and Dacheng Tao. Perceptual-sensitive GAN for generating adversarial patches. In AAAI, pages 1028–1035, 2019.

- Liu et al. [2022] Yujia Liu, Ming Jiang, and Tingting Jiang. Transferable adversarial examples based on global smooth perturbations. Computers & Security, 121:1–10, 2022.

- Mishra et al. [2021] Sushruta Mishra, Anuttam Dash, and Lambodar Jena. Use of deep learning for disease detection and diagnosis. In Bio-inspired Neurocomputing, pages 181–201. Springer Singapore, 2021.

- Mittal et al. [2012] Anish Mittal, Anush K. Moorthy, and Alan C. Bovik. No-reference image quality assessment in the spatial domain. IEEE TIP, 21(12):4695–4708, 2012.

- Moorthy and Bovik [2011] Anush K. Moorthy and Alan C. Bovik. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE TIP, 20(12):3350–3364, 2011.

- Moosavi-Dezfooli et al. [2016] Seyed Mohsen Moosavi-Dezfooli, Alhussein Fawzi, and Pascal Frossard. DeepFool: A simple and accurate method to fool deep neural networks. In CVPR, pages 2574–2582, 2016.

- Ozbulak et al. [2019] Utku Ozbulak, Arnout Van Messem, and Wesley De Neve. Impact of adversarial examples on deep learning models for biomedical image segmentation. In MICCAI, pages 300–308, 2019.

- Rippel et al. [2019] Oren Rippel, Sanjay Nair, Carissa Lew, Steve Branson, Alexander G. Anderson, and Lubomir D. Bourdev. Learned video compression. In ICCV, pages 3453–3462, 2019.

- Ronneberger et al. [2015] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-Net: Convolutional networks for biomedical image segmentation. In MICCAI, pages 234–241, 2015.

- Shafahi et al. [2019] Ali Shafahi, Mahyar Najibi, Amin Ghiasi, Zheng Xu, John P. Dickerson, Christoph Studer, Larry S. Davis, Gavin Taylor, and Tom Goldstein. Adversarial training for free! In NeurIPS, pages 3353–3364, 2019.

- Shumitskaya et al. [2022] Ekaterina Shumitskaya, Anastasia Antsiferova, and Dmitriy S. Vatolin. Universal perturbation attack on differentiable no-reference image- and video-quality metrics. In BMVC, pages 1–12, 2022.

- Shumitskaya et al. [2023] Ekaterina Shumitskaya, Anastasia Antsiferova, and Dmitriy S. Vatolin. Fast adversarial CNN-based perturbation attack on No-Reference image-and video-quality metrics. In The First Tiny Papers Track at ICLR, pages 1–4, 2023.

- Su et al. [2020] Shaolin Su, Qingsen Yan, Yu Zhu, Cheng Zhang, Xin Ge, Jinqiu Sun, and Yanning Zhang. Blindly assess image quality in the wild guided by a self-adaptive hyper network. In CVPR, pages 3664–3673, 2020.

- Szegedy et al. [2014] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. In ICLR, pages 1–10, 2014.

- Thys et al. [2019] Simen Thys, Wiebe Van Ranst, and Toon Goedemé. Fooling automated surveillance cameras: Adversarial patches to attack person detection. In CVPR Workshops, pages 1–7, 2019.

- Tramèr et al. [2018] Florian Tramèr, Alexey Kurakin, Nicolas Papernot, Ian J. Goodfellow, Dan Boneh, and Patrick D. McDaniel. Ensemble adversarial training: Attacks and defenses. In ICLR, pages 1–22, 2018.

- VQEG [2000] VQEG. Final report from the Video Quality Experts Group on the validation of objective models of video quality assessment, 2000.

- Wang et al. [2004] Zhou Wang, Alan C. Bovik, Hamid R. Sheikh, and Eero P. Simoncelli. Image quality assessment: From error visibility to structural similarity. IEEE TIP, 13(4):600–12, 2004.

- Wilmott et al. [1995] Paul Wilmott, Sam Howison, and Jeff Dewynne. The Mathematics of Financial Derivatives: A Student Introduction. Cambridge University Press, 1995.

- Xiao et al. [2018] Chaowei Xiao, Bo Li, Jun-Yan Zhu, Warren He, Mingyan Liu, and Dawn Song. Generating adversarial examples with adversarial networks. In IJCAI, pages 3905–3911, 2018.

- Xie et al. [2019] Cihang Xie, Zhishuai Zhang, Yuyin Zhou, Song Bai, Jianyu Wang, Zhou Ren, and Alan L. Yuille. Improving transferability of adversarial examples with input diversity. In CVPR, pages 2730–2739, 2019.

- Yang et al. [2022] Sidi Yang, Tianhe Wu, Shuwei Shi, Shanshan Lao, Yuan Gong, Mingdeng Cao, Jiahao Wang, and Yujiu Yang. MANIQA: Multi-dimension attention network for no-reference image quality assessment. In CVPR Workshops, pages 1190–1199, 2022.

- Ying et al. [2020] Zhenqiang Ying, Haoran Niu, Praful Gupta, Dhruv Mahajan, Deepti Ghadiyaram, and Alan C. Bovik. From patches to pictures (PaQ-2-PiQ): Mapping the perceptual space of picture quality. In CVPR, pages 3575–3585, 2020.

- Zhang et al. [2019a] Dinghuai Zhang, Tianyuan Zhang, Yiping Lu, Zhanxing Zhu, and Bin Dong. You only propagate once: Accelerating adversarial training via maximal principle. In NeurIPS, pages 227–238, 2019a.

- Zhang et al. [2018] Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The unreasonable effectiveness of deep features as a perceptual metric. In CVPR, pages 586–595, 2018.

- Zhang et al. [2019b] Wenlong Zhang, Yihao Liu, Chao Dong, and Yu Qiao. RankSRGAN: Generative adversarial networks with ranker for image super-resolution. In ICCV, pages 3096–3105, 2019b.

- Zhang et al. [2020] Weixia Zhang, Kede Ma, Jia Yan, Dexiang Deng, and Zhou Wang. Blind image quality assessment using a deep bilinear convolutional neural network. IEEE TCSVT, 30(1):36–47, 2020.

- Zhang et al. [2022] Weixia Zhang, Dingquan Li, Xiongkuo Min, Guangtao Zhai, Guodong Guo, Xiaokang Yang, and Kede Ma. Perceptual attacks of no-reference image quality models with human-in-the-loop. In NeurIPS, pages 2916–2929, 2022.

- Zhao et al. [2018] Zhengli Zhao, Dheeru Dua, and Sameer Singh. Generating natural adversarial examples. In ICLR, pages 1–15, 2018.

- Zhu et al. [2020] Chen Zhu, Yu Cheng, Zhe Gan, Siqi Sun, Tom Goldstein, and Jingjing Liu. FreeLB: Enhanced adversarial training for natural language understanding. In ICLR, pages 1–14, 2020.

Supplementary Material

In the supplementary material, we offer the formulation of NR-IQA metrics (Sec. 3.1), detailed proofs of the finite difference in Eq. (6) (Sec. 4.2), additional implementation details (Sec. 5.1), further robustness analysis (Sec. 5.2), and supplementary ablation study results (Sec. 5.5). Additionally, we present more visualization results.

S7 Formulations of RMSE, SROCC, KROCC, PLCC and Robustness

In this section, we will introduce IQA-specific metrics RMSE, SROCC, KROCC, PLCC, and robustness mentioned in Sec 3.2.

RMSE measures the difference between MOS values and predicted scores, which is represented as

| (S8) |

In this equation, is the number of images. and represent the MOS and predicted score of the image, respectively. The smaller the RMSE value is, the smaller the differences between the two groups of scores.

SROCC measures the correlation between MOS values and predicted scores to what extent the correlation can be described by a monotone function. The specific formulation is as follows:

| (S9) |

where denotes the difference between orders of the image in subjective and objective quality scores. The closer the SROCC value is to 1, the more consistent the ordering is between two groups of scores.

KROCC measures the degree of concordance in the ranking of MOS values and predicted scores. The formulation is:

| (S10) |

In this equation, and represent the number of image pairs in the test dataset with consistent and inconsistent ranking of subjective and objective quality scores, respectively. The closer the KROCC value is to 1, the more consistent the ordering is between two groups of scores.

PLCC measures the linear correlation between MOS values and predicted scores, which is formulated as

| (S11) |

The closer the PLCC value is to 1, the higher the positive correlation between the two groups of scores.

robustness was recently proposed by Zhang et al. [47]. It takes the maximum allowable change in quality prediction into consideration:

| (S12) |

where is the number of images, is the image to be attack, is the attacked version of . is the IQA model for quality prediction. and are the maximum MOS and minimum MOS among all MOS values. A larger value means better robustness.

S8 Proof of Eq. (6)

Eq. (6) in the main context illustrates how to approximate the norm of by the finite difference, which is expressed as

In this formula, is a small step size and . We provide a proof of this approximation in this section.

Proof.

We expand at point by the first order Taylor estimation, i.e.,

| (S13) |

Since , we have

| (S14) |

Therefore,

| (S15) |

and can be approximated by

| (S16) |

∎

S9 Experimental Settings

In Sec. 5.1 in the main manuscript, part of the experimental settings are reported. In this section, we report the experimental environment and detailed experimental settings in our experiments.

S9.1 Experimental Environment

We conducted all the training, test, and attack on an NVIDIA GeForce RTX 2080 GPU with 11GB of memory.

S9.2 Training Settings

For the four NR-IQA models considered in our study, namely, HyperIQA [32], DBCNN [46], LinearityIQA [18], and MANIQA [41], we used publicly available code provided by their respective authors to train these models on the same training dataset.

Due to the memory requirements associated with approximating the norm, the batch size used for training the NR-IQA models had to be adjusted to prevent memory overflow. Other training settings are shown in Table S5. To ensure consistency and fairness in our comparisons, the same setting is utilized when training both the baseline and NT versions of each NR-IQA model.

| Model | Architecture | Input Size | Patches per Image | Batch Size | Training Epochs |

| HyperIQA / HyperIQA+NT | ResNet50 | 224224 | 25 | 16 | 16 |

| DBCNN / DBCNN+NT | VGG and Its Variant | 500500 | - | 6 | 50 |

| LinearityIQA / LinearityIQA+NT | ResNet34 | 498664 | - | 4 | 30 |

| MANIQA / MANIQA+NT | ViT-B/8 | 224224 | 20 | 1 | 30 |

S9.3 Normalization of MOS

In our selected 4 NR-IQA models, MANIQA [41] is a special NR-IQA model in which MOS is scaled to the range of , and it leads to the predicted score in the range of . Furthermore, the different scales of MOS in different NR-IQA models result in a difference in the RMSE metric.

For a fair comparison across different NR-IQA models, we normalize the MOS into the range . The normalization formula is depressed as follows:

| (S17) |

In this formula, and represent the minimal and maximal MOS of the training data, respectively. In this paper, and .

S10 (I-)FGSM for NR-IQA Tasks

We mention the FGSM attack in Sec. 5.1 in the main manuscript. We will introduce the details of the setting of the FGSM attack in this section. FGSM [10] is first proposed for classification tasks, which is concise and efficient in attacking classification models. In our paper, we perceive FGSM as a white-box attack for NR-IQA models with a redesigned loss function. We will first introduce the FGSM attack in classification tasks and then the FGSM attack adapted to NR-IQA models below.

In the context of classification, FGSM is a straightforward non-iterative attack method, which is expressed as follows:

| (S18) |

In this equation, represents the adversarial example, is the original image, denotes the norm bound of perturbations, is the loss function, signifies the neural network function, and represents the true label of . A common use of is cross-entropy loss.

I-FGSM is an iterative extension of FGSM, which is described as follows:

| (S19) |

where is the current iteration step and is the step size, the total number of iteration steps is . The operator projects the adversarial examples onto the space of the neighborhood in the -ball around .

In the NR-IQA task, we take as the predicted score of the clean image . In this paper, we choose the optimization object according to the predicted score of the image and define the loss function as follows:

| (S20) |

where represents the predicted score of the attacked image. The object is to maximize the predicted score for a low-quality image, thereby misleading the IQA model into assigning a high score to the adversarial example. Conversely, for a high-quality image, the goal is to minimize the predicted score to generate effective adversarial examples.

There is an interesting observation emerged from the experiment. We find that the choice of the loss function has a significant impact on the efficacy of the FGSM attack. Specifically, we also try the mean absolute error loss:

| (S21) |

and the mean squared error loss:

| (S22) |

Taking the DBCNN as an example, we report the RMSE, SROCC, PLCC, and KROCC after the FGSM attack with different loss functions in Table S6555As for attack methods, larger RMSE and smaller SROCC, KROCC, PLCC represents stronger attack ability. where . It is obvious that the effect of the FGSM attack is notably diminished when the loss function is or . Especially when the mean absolute loss is used, the changes of RMSE, SROCC, PLCC, and KROCC for all models are very minimal.

Investigating the relationship between the loss function and the ability of attacks is an interesting domain of research.

| MOS & Predicted Score After Attack | ||||

| RMSE | SROCC | PLCC | KROCC | |

| 10.0734 | 0.8994 | 0.8844 | 0.7177 | |

| 24.354 | 0.2795 | 0.2092 | 0.2096 | |

| 36.758 | -0.318 | -0.383 | -0.146 | |

| Predicted Scores Before & After Attack | ||||

| RMSE | SROCC | PLCC | KROCC | |

| 13.0829 | 0.754 | 0.705 | 0.6065 | |

| 14.5819 | 0.6351 | 0.5886 | 0.4689 | |

| 32.778 | -0.333 | -0.418 | -0.071 | |

S11 Hyperparameters of Attacks

In Sec. 5 in the main manuscript, 4 attack methods are utilized. For each attack method, there are hyperparameters which affect the strength of the attack. Table S7 summarizes the chosen hyperparameters in tested attack methods in the main experiment, i.e., experiments in Sec. 5.

| Method | Hyperparameters |

| FGSM | one step, |

| Perceptual Attack | constraint: SSIM, weight =1,000,000 |

| UAP∗ | scale |

| Kor.∗ Attack | learning rate: |

S12 Further Robustness Analysis

In this section, we will further analyze the effectiveness of the NT strategy in improving the robustness of NR-IQA models. In Sec. S12.1, we present the robustness of baseline models and their NT-enhanced versions measured by KROCC, PLCC, and robustness [47]. In Sec. S12.2, we report the average metrics of RMSE and SROCC improvement for both baseline models and their NT-enhanced versions. For each model, we provide the scatter plots of predicted scores before and after the perceptual attack in Sec. S12.3, which intuitively show the effectiveness of the NT strategy.

S12.1 Robustness in Terms of KROCC, PLCC and Robustness

In Sec. 5.2 in the main manuscript, the robustness performances in terms of RMSE and SROCC are reported. Table S8, Table S9 and Table S10 show the robustness performances of NR-IQA models in terms of KROCC, PLCC, and robustness against different attack methods, respectively. Specifically, We evaluate robustness on four baseline methods as well as their NT-trained models with .

In Table S8, NR-IQA models with the NT strategy outperform their baseline models under all attacks when KROCC is measured between predicted scores before and after attacks. Among them, HyperIQA+NT witnesses a larger improvement in KROCC compared to its baseline under the FGSM attack, with KROCC increasing from of HyperIQA to using the NT strategy. Meanwhile, MANIQA demonstrates strong robustness against the Perceptual Attack, achieving a KROCC (scores before and after the attack) value of . This means Perceptual Attack could not change the rank order of predicted scores before and after the attack on MANIQA. This phenomenon is also observed in the results of SROCC robustness.

| MOS & Predicted Score After Attack | Score Before Attack & Score After Attack | |||||||

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| FGSM | 0.020 0.610 | -0.146 0.136 | -0.197 -0.184 | 0.296 0.584 | 0.043 0.806 | -0.071 0.217 | -0.156 -0.171 | 0.332 0.749 |

| Perceptual | 0.627 0.669 | -0.079 0.471 | 0.350 0.415 | 0.870 0.876 | 0.837 0.997 | -0.091 0.628 | 0.440 0.566 | 1.000 1.000 |

| UAP∗ | 0.548 0.628 | 0.510 0.568 | 0.526 0.543 | 0.578 0.651 | 0.634 0.797 | 0.643 0.708 | 0.664 0.694 | 0.766 0.871 |

| Kor.∗ | 0.614 0.615 | 0.6780.669 | 0.585 0.587 | 0.637 0.658 | 0.724 0.777 | 0.874 0.895 | 0.777 0.786 | 0.790 0.850 |

| MOS & Predicted Score After Attack | Score Before Attack & Score After Attack | |||||||

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| FGSM | -0.009 0.801 | -0.383 0.251 | -0.497 -0.387 | 0.599 0.861 | 0.042 0.926 | -0.418 0.196 | -0.569 -0.439 | 0.535 0.929 |

| Perceptual | 0.830 0.868 | -0.030 0.585 | 0.487 0.528 | 0.696 0.691 | 0.937 1.000 | -0.005 0.719 | 0.522 0.582 | 0.998 0.995 |

| UAP∗ | 0.733 0.817 | 0.701 0.776 | 0.694 0.729 | 0.766 0.837 | 0.826 0.943 | 0.811 0.884 | 0.805 0.876 | 0.928 0.978 |

| Kor.∗ | 0.801 0.806 | 0.875 0.868 | 0.774 0.774 | 0.838 0.856 | 0.875 0.933 | 0.972 0.980 | 0.914 0.933 | 0.942 0.969 |

In Table S9, NR-IQA models with the NT strategy perform better than their baseline models in most cases when PLCC is measured between predicted scores before and after attacks. For example, when the attack method is the Percepural Attack, the PLCC of DBCNN is -0.005 while the PLCC of DBCNN+NT is 0.719. The only exception is MANIQA where MANIQA+NT performs worse than MANIQA when attacked by the Perceptual Attack. This trend is consistent with the results reported in RMSE robustness.

From Table S8 and Table S9, we can conclude that the robustness of NR-IQA models in terms of RMSE and PLCC have similar trends, while robustness in terms of SROCC and KROCC show similar patterns. Additionally, the robustness improvement caused by the NT strategy is more obvious when NR-IQA models are attacked in white-box scenarios than in black-box scenarios.

From Table S10, we can see that NR-IQA methods with our NT strategy generally perform better than their baselines. However, it’s essential to note that the definition of robustness assigns a higher weight to images with extremely large scores (close to ) or extremely small scores (close to ), whereas RMSE treats each image equally. Consequently, in scenarios where the DBCNN model is attacked by Kor. attack, the NT model shows improvement in the RMSE metric but a decrease in the robustness compared to its baseline. Similar trends are observed when the LinearityIQA model is attacked by UAP. Although different metrics focus on different aspects, the proposed NT strategy improves all robustness metrics in most cases.

| HyperIQA base / +NT | DBCNN base / +NT | LinearityIQA base / +NT | MANIQA base / +NT | |

| FGSM | 0.659 1.099 | 0.328 0.671 | 1.011 1.389 | 2.957 3.864 |

| Perceptual | 2.249 3.047 | 0.938 2.076 | 1.011 1.398 | 5.492 4.784 |

| UAP∗ | 1.180 1.285 | 1.054 1.067 | 1.161 1.096 | 3.459 3.464 |

| Kor.∗ | 0.980 1.092 | 1.333 1.323 | 0.883 1.000 | 3.299 3.319 |

S12.2 Averaged Metrics of RMSE and SROCC Improvement

For an NR-IQA model subjected to an attack method, we calculate the difference in RMSE (or SROCC) between its NT version and the original model, denoted as RMSE (or SROCC). We then average RMSE and SROCC for both white-box and black-box attacks, as shown in Table S11, to corroborate Observation 4 presented in the main manuscript. These results further confirm the effectiveness of NT in mitigating both white-box and black-box attacks.

| HyperIQA | DBCNN | LinearityIQA | MANIQA | |

| White | -8.7595 / 0.4800 | -31.5900 / 0.7465 | -23.0075 / 0.0730 | -4.4385 / 0.2250 |

| Black | -3.0215 / 0.0730 | -2.5635 / 0.0280 | -1.8895 / 0.0165 | -0.6410 / 0.0400 |

| Overall | -5.8905 / 0.2765 | -17.0768 / 0.3873 | -12.4485 / 0.0448 | -2.5398 / 0.1325 |

S12.3 Distributions of Predicted Scores

Figure S8–S11 illustrate the absolute differences between predicted scores before and after various attacks for all test images (from the first row to the last row: FGSM, Perceptual Attack, UAP, and Kor. attack). The fitted distribution is presented on the right side of each image.

It is evident that all models trained with the NT strategy exhibit smaller score changes compared to their corresponding baseline models. Additionally, we observe an interesting trend: the NT strategy enhances robustness for different NR-IQA models at various image quality levels.

For example, considering the Perceptual Attack, all points for HyperIQA+NT closely align with the line “difference of predicted scores = 0”. This highlights the significant effectiveness of the NT strategy in minimizing score changes with small perturbations for HyperIQA. For the DBCNN model, it is clear that the NT strategy brings about more reduction in score changes for images with MOS between and .

Conversely, in the case of LinearityIQA, the effectiveness of the NT strategy is more obvious on high-quality images with MOS in , while it proves more effective on low-quality images with MOS in for MANIQA. This discovery reflects that the NT strategy has varying impacts on images with different quality levels, and these impacts are closely tied to the NR-IQA models. Exploring the enhancement of adversarial robustness in NR-IQA models across different image quality levels represents a valuable avenue for research. Such investigations can shed light on the properties of NR-IQA models in predicting scores for images of differing quality.

S13 Full Results of Ablation Studies

In Figure S12, we show the full results of the ablation study of (mentioned in Sec. 5.5 in the main manuscript). Our analysis focuses on two aspects of an NR-IQA model: its performance on unattacked images and its robustness against attacks. For the former, we utilize SROCC on unattacked images across MOS values and predicted scores, and for the latter, we employ the RMSE between predicted scores before and after the FGSM attack.

As increases, the performance of baseline+NT models has the following trend on unattacked images. SROCC values generally decrease with the rising , while RMSE values exhibit an upward trend (except for MANIQA). It is an interesting observation that the RMSE value of MANIQA fluctuates as changes, and the RMSE tends to decrease with larger . When attacked by the FGSM attack, the RMSE values of all baseline+NT models decrease consistently with the increase of . Except for LinearityIQA, the SROCC values of other models increase as becomes larger. This implies that increasing tends to enhance the robustness of NR-IQA models but leads to a performance decline on unattacked images.

S14 Visualization Results

In this section, we present visualization results to illustrate the effectiveness of the NT strategy under FGSM attack with different attack intensities and UAP. Under FGSM attack with different attack intensities, for each pair of baseline and baseline+NT models, we provide two sets of visualization results: one for high-quality images and the other for low-quality images. We show the normalized MOS of the original image. Under the UAP attack, we provide one adversarial sample for an NR-IQA model. We display adversarial examples for both the baseline model and the baseline+NT model, along with the corresponding score changes (predicted score before attack predicted score after attack).

Figure S13 shows visualization results of FGSM attack for HyperIQA, DBCNN, and their NT versions. Figure S14 displays visualization results of FGSM attack for LinearityIQA, MANIQA, and their NT versions. Figure S15 shows visualization results of UAP attack for HyperIQA, DBCNN, LinearityIQA, MANIQA, and their NT versions.

From Figure S13 and Figure S14, we can see that the imperceptibility of adversarial perturbations for images gets worse as the attack intensity increases. However, despite this, the NT models consistently exhibit smaller score changes than the baseline models in most cases. Consider HyperIQA and its NT version as an example. When attacked by the strongest FGSM attack (iter=10, eps=0.01), the score change for HyperIQA+NT on the high-quality image is , whereas the score change for HyperIQA is . Similarly, for the low-quality image, the score change for the NT version is , while the change for the baseline model is . From Figure S15, we can see that with the same adversarial sample, baseline and their NT versions have different defense performances. For example, the score change for HyperIQA+NT on its adversarial sample is , whereas HyperIQA on the same adversarial sample is .