DeepParticle: learning invariant measure by a deep neural network minimizing Wasserstein distance on data generated from an interacting particle method

Abstract

We introduce DeepParticle, a method to learn and generate invariant measures of stochastic dynamical systems with physical parameters based on data computed from an interacting particle method (IPM). We utilize the expressiveness of deep neural networks (DNNs) to represent the transform of samples from a given input (source) distribution to an arbitrary target distribution, neither assuming distribution functions in closed form nor the sample transforms to be invertible, nor the sample state space to be finite. In the training stage, we update the weights of the network by minimizing a discrete Wasserstein distance between the input and target samples. To reduce the computational cost, we propose an iterative divide-and-conquer (a mini-batch interior point) algorithm, to find the optimal transition matrix in the Wasserstein distance. We present numerical results to demonstrate the performance of our method for accelerating the computation of invariant measures of stochastic dynamical systems arising in computing reaction-diffusion front speeds in 3D chaotic flows by using the IPM. The physical parameter is a large Péclet number reflecting the advection-dominated regime of our interest.

AMS subject classification: 35K57, 37M25, 49Q22, 65C35, 68T07.

Keywords: physically parameterized invariant measures, interacting particle method, deep learning, Wasserstein distance, front speeds in chaotic flows.

1 Introduction

In recent years, deep neural networks (DNNs) have achieved unprecedented levels of success in a broad range of areas such as computer vision, speech recognition, natural language processing, and health sciences, producing results comparable or superior to human experts [31, 18]. The impacts have reached physical sciences where traditional first-principle based modeling and computational methodologies have been the norm. Thanks in part to the user-friendly open-source computing platforms from industry (e.g. TensorFlow and PyTorch), there have been vibrant activities in applying deep learning tools for scientific computing, such as approximating multivariate functions, solving ordinary/partial differential equations (ODEs/PDEs) and inverse problems using DNNs; see e.g. [29, 52, 13, 14, 21, 45, 26, 2, 72, 50, 60, 73, 51, 25, 37, 36, 49, 66, 71, 1, 64, 39, 58, 70] and references therein.

There are many classical works on the approximation power of neural networks; see e.g. [12, 23, 15, 48]. For recent works on the expressive (approximation) power of DNNs; see e.g. [10, 55, 69, 45, 39, 58]. In [21], the authors showed that DNNs with rectified linear unit (ReLU) activation function and enough width/depth contain the continuous piece-wise linear finite element space.

Solving ODEs or PDEs by a neural network (NN) approximation is known in the literature dating back at least to the 1990’s; see e.g. [32, 44, 30]. The main idea in these works is to train NNs to approximate the solution by minimizing the residual of the ODEs or PDEs, along with the associated initial and boundary conditions. These early works estimate neural network solutions on a fixed mesh, however. Recently DNN methods are developed for Poisson and eigenvalue problems with a variational principle characterization (deep Ritz, [14]), for a class of high-dimensional parabolic PDEs with stochastic representations [20], and for advancing finite element methods [22, 5, 4]. The physics-informed neural network (PINN) method [51] and a deep Galerkin method (DGM) [60] compute PDE solutions based on their physical properties. For parametric PDEs, a deep operator network (DeepONet) learns operators accurately and efficiently from a relatively small dataset based on the universal approximation theorem of operators [40]; a Fourier neural operator method [34] directly learns the mapping from functional parametric dependence to the solutions (of a family of PDEs). Deep basis learning is studied in [43] to improve proper orthogonal decomposition for residual diffusivity in chaotic flows [42], among other works on reduced order modeling [29, 62, 8]. In [71, 1], weak adversarial network methods are studied for weak solutions and inverse problems, see also related studies on PDE recovery from data via DNN [37, 36, 49, 66] among others. In the context of surrogate modeling and uncertainty quantification (UQ), DNN methods include Bayesian deep convolutional encoder-decoder networks [72], deep multi-scale model learning [63], physics-constrained deep learning method [73], see also [26, 55, 25, 68] and references therein. For DNN applications in mean field games (high dimensional optimal control problems) and various connections with numerical PDEs, see [6, 7, 53, 3, 35] and references therein. In view of the above literature, the DNN interactions with numerical PDE methods mostly occur in the Eulerian setting with PDE solutions defined in either the strong or weak (variational) sense.

Our goal here is to study deep learning in a Lagrangian framework of multi-scale PDE problems, coming naturally from our recent work ([41], reviewed in section 4.2 later) on a convergent interacting particle method (IPM) for computing large-scale reaction-diffusion front speeds through chaotic flows. The method is based on a genetic algorithm that evolves a large ensemble of uniformly distributed particles at the initial time to another ensemble of particles obeying an invariant measure at a large time. The front speed is readily computed from the invariant measure. Though the method is mesh-free, the computational costs remain high as advection becomes dominant at large Péclet numbers. Clearly, it is desirable to initialize particle distribution with some resemblance of the target invariant measure instead of starting from the uniform distribution. Hence learning from samples of invariant measure at a smaller Péclet number with more affordable computation to predict an invariant measure at a larger Péclet number becomes a natural problem to study.

Specifically, we shall develop a DNN to map a uniform distribution (source) to an invariant measure (target), where is the reciprocal of Péclet number, and consists of network weights and . The network is deep from input to output with Sigmoid layers while the first three layers are coupled to a shallow companion network to account for the effects of parameter on the network weights. In addition, we include both local and nonlocal skip connections along the deep direction to assist information flow. The network is trained by minimizing the 2-Wasserstein distance (2-WD) between two measures and [61]. We consider a discrete version of 2-WD for finitely many samples of and , which involves a linear program (LP) optimizing over doubly stochastic matrices [59]. Directly solving the LP by the interior point method [65] is too costly. We devise a mini-batch interior point method by sampling smaller sub-matrices while preserving row and column sums. This turns out to be very efficient and integrated well with the stochastic gradient descent (SGD) method for the entire network training.

We shall conduct three numerical experiments to verify the performance of the proposed DeepParticle method. The first example is a synthetic data set on , where is a uniform distribution and is a normal distribution with zero mean and variance . In the second example, we compute the Kolmogorov-Petrovsky-Piskunov (KPP) front speed in a 2D steady cellular flow and learn the invariant measures corresponding to different ’s. In this experiment, is a uniform distribution on , and an empirical invariant measure obtained from IPM simulation of reaction-diffusion particles in advecting flows with Péclet number . Finally, we compute the KPP front speed in a 3D time-dependent Kolmogorov flow with chaotic streamlines and study the IPM evolution of the measure in three-dimensional space. In this experiment, is a uniform distribution on , and is an empirical invariant measure obtained from IPM simulation of reaction-diffusion particles of the KPP equation in time-dependent Kolmogorov flow. Numerical results show that the proposed DeepParticle method efficiently learns the dependent target distributions and predicts the physically meaningful sharpening effect as becomes small.

We remark that though there are other techniques for mapping distributions such as Normalizing Flows (NF) [27], Generative Adversarial Networks (GAN) [19], entropic regularization and Sinkhorn Distances [11, 47], Fisher information regularization [33], our method differs either in training problem formulation or in imposing fewer constraints on the finite data samples. For example, our mapping is not required to be invertible as in NF, the training objective is not min-max as in GAN for image generation, there is no regularization effect such as blurring or noise. We believe that our method is better tailored to our problem of invariant measure learning by using a moderate amount of training data generated from the IPM. Detailed comparison will be left for future work.

The rest of the paper is organized as follows. In Section 2, we review the basic idea of DNNs and their properties, as well as Wasserstein distance. In Section 3, we introduce our DeepParticle method for learning and predicting invariant measures based on the 2-Wasserstein distance minimization. In Section 4, we present numerical results to demonstrate the performance of our method. Finally, concluding remarks are made in Section 5.

2 Preliminaries

2.1 Artificial Neural Network

In this section, we introduce the definition and approximation properties of DNNs. There are two ingredients in defining a DNN. The first one is a (vector) linear function of the form , defined as , where , and . The second one is a nonlinear activation function . A frequently used activation function, known as the rectified linear unit (ReLU), is defined as [31]. In the neural network literature, the sigmoid function is another frequently used activation function, which is defined as . By applying the activation function in an element-wise manner, one can define (vector) activation function .

Equipped with those definitions, we are able to define a continuous function by a composition of linear transforms and activation functions, i.e.,

| (1) |

where with be undetermined matrices and be undetermined vectors, and is the element-wise defined activation function. Dimensions of and are chosen to make (1) meaningful. Such a DNN is called a -layer DNN, which has hidden layers. Denoting all the undetermined coefficients (e.g., and ) in (1) as , where is a high-dimensional vector and is the space of . The DNN representation of a continuous function can be viewed as

| (2) |

Let denote the set of all expressible functions by the DNN parameterized by . Then, provides an efficient way to represent unknown continuous functions, in contrast with a linear solution space in classical numerical methods, e.g. the space of linear nodal basis functions in the finite element methods and orthogonal polynomials in the spectral methods.

2.2 Wasserstein distance and optimal transportation

Wasserstein distances are metrics on probability distributions inspired by the problem of optimal mass transportation. They measure the minimal effort required to reconfigure the probability mass of one distribution in order to recover the other distribution. They are ubiquitous in mathematics, especially in fluid mechanics, PDEs, optimal transportation, and probability theory [61].

One can define the -Wasserstein distance between probability measures and on a metric space with distance function by

| (3) |

where is the set of probability measures on satisfying and for all Borel subsets . Elements are called couplings of the measures and , i.e., joint distributions on with marginals and on each axis.

In the discrete case, the definition (3) has a simple intuitive interpretation: given a and any pair of locations , the value of tells us what proportion of mass at should be transferred to , in order to reconfigure into . Computing the effort of moving a unit of mass from to by yields the interpretation of as the minimal effort required to reconfigure mass distribution into that of . A cost function on tells us how much it costs to transport one unit of mass from location to location . When the cost function , the -Wasserstein distance (3) reveals the Monge-Kantorovich optimization problem, see [61] for more exposition.

In a practical setting [47], the closed-form solution of and may be unknown, instead only independent and identically distributed (i.i.d.) samples of and are available. We approximate the probability measures and by empirical distribution functions:

| (4) |

Any element in can clearly be represented by an doubly stochastic matrix [59], denoted as transition matrix, satisfying:

| (5) |

The empirical distribution functions allow us to approximate different measures. And the doubly stochastic matrix provides a practical tool to study the transformation of measures via minimizing the Wasserstein distance between different empirical distribution functions. Notice that when the number of particles becomes large, it is expensive to find the transition matrix to calculate discrete Wasserstein distance. We propose an efficient submatrix sampling method to overcome this difficulty in the next section.

3 Methodology

3.1 Physical parameter dependent neural networks

Compared with general neural networks, we propose a new architecture to learn the dependency of physical parameters. Such type of network is expected to have independently batched input, and the output should also rely on some physical parameter that is shared by the batched input. Thus, in addition to concatenating the physical parameters as input, we also include some linear layers whose weights and biases are generated from a shallow network; see Fig. 1 for the layout of the proposed network used in later numerical experiments.

To be more precise, we let the network take on two kinds of input, and . Their batch sizes are denoted as and respectively. Then, there are sets of , such that in each set shares the same physical parameter . From to are general linear layers in which the weights and biases are randomly initialized and updated by Adams descent during training. They are in width and Sigmoid function is applied as activation between adjacent layers. Arrows denote the direction in forward propagation. From to are linear layers with in width, but every element of weight matrix and bias vector is individually generated from the specified on the right. Input of is the shared parameter, namely . From to are general linear layers with in width. For example, given the physical parameter is dimension, to generate a weight matrix, we first tile to tensor (boldface for tensor). Here denotes the shape of input batches which are independently forwarded in the network. In our numerical examples, . Then, we introduce a tensor as weight matrix in and do matrix multiplication of and on the last two dimensions while keeping dimensions in front. The third dimension in is the width of the . Shapes of weight tensor in and are and respectively. The weight parameters of the are randomly initialized and updated by gradient descent during training. We also include some skip connections as in to improve the performance of deeper layers in our network. In addition to standard short cuts, we design a linear propagation path directly from the input to the output . In numerical experiment shown later, such connection helps avoid output being over-clustered. There is no activation function between the last layer and output.

3.2 DeepParticle Algorithms

Given distributions and defined on metric spaces and , we aim to construct a transport map such that , where star denotes the push forward of the map. On the other hand, given function , the -Wasserstein distance between and is defined by:

| (6) |

where denotes the collection of all measures on with marginals and on the first and second factors respectively and denotes the metric (distance) on . A straightforward derivation yields:

| (7) |

where denotes the collection of all measures on with marginals and on the first and second factors respectively and still denotes the metric (distance) on .

Network Training Objective

Our DeepParticle algorithm does not assume the knowledge of closed form distribution of and , instead we have i.i.d. samples of and namely, and , , as training data. Then a discretization of (7) is:

| (8) |

where denotes all doubly stochastic matrices.

Let the map (represented by neural network in Fig. 1) be where is the input, is the shared physical parameter and denotes all the trainable parameters in the network. Clearly . In case of equipped with Euclidean metric, we take . The training loss function is

| (9) |

Iterative method in finding transition matrix

To minimize the loss function (9), we update parameters of with the classical Adams stochastic gradient descent, and alternate with updating the transition matrix .

We now present a mini-batch linear programming algorithm to find the best for each inner sum of (9), while suppressing dependence in . Notice that the problem (9) is a linear program on the bounded convex set of vector space of real matrices. By Choquet’s theorem, this problem admits solutions that are extremal points of . Set of all doubly stochastic matrix can be referred to as Birkhoff polytope. The Birkhoff–von Neumann theorem [54] states that such polytope is the convex hull of all permutation matrices, i.e., those matrices such that for some permutation of , where is the Kronecker symbol.

The algorithm is defined iteratively. In each iteration, we select columns and rows and solve a linear programming sub-problem under the constraint that maintains column-wise and row-wise sums of the corresponding sub-matrix of . To be precise, let , () denote the index chosen from without replacement. The cost function of the sub-problem is

| (10) |

subject to

| (14) |

where are from the previous step. This way, the column and row sums of are preserved by the update. The linear programming sub-problem of much smaller size is solved by the interior point method [65].

We observe that the global minimum of in (9) is also the solution of sub-problems (10) with arbitrarily selected rows and columns, subject to the row and column partial sum values of the global minimum. The selection of rows and columns can be one’s own choice. In our approach, in each step after gradient descent, apart from random sampling of rows and columns, we also perform a number of searches for rows or columns with the largest entries to speed up computation; see Alg. 1.

The cost of finding optimal increases as increases, however, the network itself is independent of . After training, our network acts as a sampler from some target distribution without assumption of closed-form distribution of . At this stage, the input data is no longer limited by training data, an arbitrarily large amount of samples approximately obeying can be generated through (uniform distribution).

Update of training data

Note that given any fixed set of and (training data), we have developed the iterative method to calculate the optimal transition matrix in (9) and update network parameter at the same time. However, in more complicated cases, more than one set of data (size of which is denoted as ) should be assimilated. The total number of data set is denoted . For the second and later set of training data, the network is supposed to establish some accuracy. So before updating the network parameter, we first utilize our iterative method to find an approximated new for the new data feed to reach a preset level measured by the normalized Frobenius norm of ( divided by the square root of its row number). The full training process is outlined in Alg. 2.

4 Numerical Examples

4.1 Mapping uniform to normal distribution

For illustration, we first apply our algorithm to learn a map from 1D uniform distribution on to 1D normal distribution with zero mean and various standard derivation . The refers to one dimensional in the network of Fig.1. As training data, we independently generate sets of uniformly distributed points, also normally distributed sample points with zero mean and standard derivation equally spaced in . During training, we aim to find a -dependent network such that the output from the input data sets along with their values approximate normal distributions with standard derivation , respectively. The layout of network is shown in Fig. 1. The total number of training steps is with initial learning rate and data set. After each update of parameters, we solve the optimization problem of transition matrix , i.e. Eq.(10) under Eq.(14), with selection of rows and columns by Alg. 1 for times. After training, we generate uniformly distributed test data points and apply the networks with , , , .

In Fig. 2, we plot the output histograms. Clearly, the empirical distributions vary with and fit the reference normal probability distribution functions (pdfs) in red lines. We would like to clarify that even though the dependence of in this example is ‘linear’, the linear propagation directly from input to output does not depend on and output is no longer a linear function of . In addition, we plot the input-output pairs at the bottom of each empirical distribution. Each line connects the input (for better visualization we scale it to ) and its corresponding output from the network. In one dimensional case, it is well known that the inverse of the cumulative distribution function is the optimal transport map. It is a monotone function that maps uniform distribution to the quantile of the target distribution. In the plotted input-output pair, we can see there are no lines crossing. It verifies that our network finds the monotone transport map. Our experiments here and below are all carried out on a quad-core CPU desktop with an RTX2080 8GB GPU at UC Irvine.

4.2 Computing front speeds in complex fluid flows

Front propagation in complex fluid flows arises in many scientific areas such as turbulent combustion, chemical kinetics, biology, transport in porous media, and industrial deposition processes [67]. A fundamental problem is to analyze and compute large-scale front speeds. An extensively studied model problem is the reaction-diffusion-advection (RDA) equation with Kolmogorov-Petrovsky-Piskunov (KPP) nonlinearity [28]:

| (15) |

where is diffusion constant, v is an incompressible velocity field (precise definition given later), and is the concentration of reactant. Let us consider velocity fields to be -periodic in space and time, which contain the celebrated Arnold-Beltrami-Childress (ABC) and Kolmogorov flows as well as their variants with chaotic streamlines [9, 24]. The solutions from compact non-negative initial data spread along direction e with speed [46]:

where is the principal eigenvalue of the parabolic operator with:

| (16) |

on the space domain (periodic boundary condition). It is known [46] that is convex in , and superlinear for large . The operator in (16) is a sum , with

| (17) |

where is the generator of a Markov process, and acts as a potential. The Feynman-Kac (FK) formula [16] gives a stochastic representation:

| (18) |

In Eq.(18), satisfies the following stochastic differential equation

| (19) |

where the drift term and is the standard -dimensional Wiener process. The expectation in (18) is over . Directly applying (18) and Monte Carlo method to compute is unstable as the main contribution to comes from sample paths that visit maximal or minimal points of the potential function , resulting in inaccurate or even divergent results. A computationally feasible alternative is a scaled version, the FK semi-group:

Acting on any initial probability measure , converges weakly to an invariant measure (i.e. ) as , for any smooth test function . Moreover,

| (20) |

Given a time discretization step , an interacting particle method (IPM) proceeds to discretize as , (, where ) by Euler’s method, approximates the evolution of probability measure by a particle system with a re-sampling technique to reduce variance. Let

| (21) |

Then sampled FK semi-group actions on :

where is an invariant measure of the discrete map , thanks to being -periodic in time. There exists so that [41]:

| (22) |

The IPM algorithm below (Alg. 3) approximates the evolving measure as an empirical measure of a large number of genetic particles that undergo advection-diffusion () and mutations (). The mutation relies on fitness and its normalization defined as in the FK semi-group. In Alg.3, the evolution is phrased in generations, each moving and mutating times in a life span of . The index in (21) is changed to .

Much progress has been made in the finite element computation [57, 56, 74] of the KPP principal eigenvalue problem (16) particularly in steady 3D flows . However, when is small and the spatial dimension is 3, adaptive FEM can be extremely expensive. For 2D time periodic cellular flows, adaptive basis deep learning is found to improve the accuracy of reduced order modeling [42, 43]. Extension of deep basis learning to 3D in the Eulerian setting has not been attempted partly due to the costs of generating a sufficient amount of training data.

An advantage of the IPM is that given the same particle number, the computational cost of generating training data scales linearly with the dimension of spatial variables. As a comparison, in the Eulerian framework, one needs to solve the principal eigenvalue of a parabolic operator by discretizing the eigenvalue problem on mesh grids. The number of mesh grids depends exponentially on the spatial dimension, which becomes expensive for 3D problems. Though adaptive mesh techniques can alleviate the computational burden, their design and implementation are challenging for time dependent 3D problems when large gradient regions are dynamically changing and repeated mesh adaptations must be performed. In contrast, the IPM is spatially mesh-free and self-adaptive. The computational bottleneck remains in IPM at small when we need a large number of particles running for many generations to approach the invariant measure. To address this issue, we apply our DeepParticle method to generate particle samples by initiating at a learned distribution resembling the invariant measure and accelerating Alg. 3 (i.e. reduce to reach convergence).

2D steady cellular flow

We first consider a 2D cellular flow . In this case, we apply the physical parameter dependent network described in Section 3.1 to learn the invariant measure corresponding to different values. To generate training data, we first use the IPM, Alg. 3 with , , and particle evolution for , , , to get samples of invariant measure at different values. The value of is chosen so that the direct IPM simulation of principal eigenvalue converges, see blue line of Fig.4(d). From each set of sample points with different ’s, we randomly pick sample points without replacement, denoted as . Under such setting, we then seek neural networks such that given and a set of i.i.d. uniformly distributed points on , the network output is distributed near in distance. The set is called one mini-batch of training data and in total we have mini-batches through steps of network training. As stated in Alg. 2, after each mini-batch of network training (10000 steps of gradient descent), we re-optimize until its normalized Frobenius norm is greater than . Note that only a quarter of the 40,000 particles in the IPM simulations have been used in training.

During training, we apply Adams gradient descent with initial learning rate and a weight decay with hyper-parameter for trainable weights in the network. In each step, we select columns and rows by Alg. 1 in solving the sub-optimization problem (10) and repeat the linear programming for times.

In Fig. 3, we present the performance of our algorithm in sampling distribution in the original training data. Each time, the training algorithm only has access to at most samples of each target distribution with , . After training, we generate uniformly distributed points as input of the network. We compare the histogram of network output and that of the sample points obeying the invariant measure from the IPM Alg.3. We see that our trained parameter-dependent network indeed reproduces the sharpening effect on the invariant measure when becomes small.

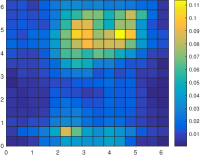

In Fig. 4, we present the performance of algorithm in predicting the invariant measure of IPM with . First we compare the invariant measure generated from direct simulation with IPM (Fig. 4(a)) and from the trained network (Fig. 4(b)). In Fig. 4(c), we show the distance between the generated distribution and the target distribution of the training data. The distance goes up when we re-sample the training data. This is because at this moment, the transition matrix in the definition of the distance is inaccurate. Note that the test value is no longer within the training range , . Our trained network predicts an invariant measure. Such prediction also serves as a ‘warm start’ for IPM and can accelerate its computation to quickly reach a more accurate invariant measure. As the invariant measure has no closed-form analytical solution, we plot in Fig. 4(d) the principal eigenvalue approximations by IPM with warm and cold starts, i.e. one generated from DeepParticle network (warm) and the i.i.d. samples from a uniform distribution (cold). The warm start by DeepParticle achieves 4x to 8x speedup.

Fig. 5 displays histograms of samples generated from our DeepParticle algorithm at different stage of training. In Fig. 5(d) with only one set of data, we already get a rough prediction of target distribution. With more data sets added, the prediction quality improves; see Fig. 5(e) to Fig. 5(i).

3D time-dependent Kolmogorov flow

Next, we compute KPP front speed in a 3D time-dependent Kolmogorov flow [17]:

The hyper-parameter setting for network training remains the same as in the 2D case. The physical parameter-dependent network in Section 3.1 learns the invariant measure corresponding to eight different training values. Besides the input and output are both in 3 dimensions, the network layout and training procedure are the same as in the 2D example.

Fig. 6 shows the performance of the network interpolating training data from to . Fig. 7 displays network prediction at . All the histograms here are 2D projections of the 3D histogram to the second and third dimensions. Results of the projection to other choices of two dimensions are similar and are not shown here for brevity.

5 Conclusions

We developed a DeepParticle method to generate invariant measures of stochastic dynamical (interacting particle) systems by a physically parameterized DNN that minimizes the Wasserstein distance between the source and target distributions. Our method is very general in the sense that we do not require distributions to be in closed-form and the generation map to be invertible. Thus, our method is fully data-driven and applicable in the fast computation of invariant measures of other interacting particle systems. During the training stage, we update network parameters based on a discretized Wasserstein distance defined on finite samples. We proposed an iterative divide-and-conquer algorithm that allows us to significantly reduce the computational cost in finding the optimal transition matrix in the Wasserstein distance. We carried out numerical experiments to demonstrate the performance of the proposed method. Numerical results show that our method is very efficient and helps accelerate the computation of invariant measures of interacting particle systems for KPP front speeds. In the future, we plan to apply the DeepParticle method to learn and generate invariant measures of other stochastic particle systems.

CRediT authorship contribution statement

Zhongjian Wang: Conceptualization, Programming, Methodology, Writing-Original draft preparation. Jack Xin: Conceptualization, Methodology, Writing-Reviewing and Editing. Zhiwen Zhang: Conceptualization, Writing-Reviewing and Editing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The research of JX is partially supported by NSF grants DMS-1924548 and DMS-1952644. The research of ZZ is supported by Hong Kong RGC grant projects 17300318 and 17307921, National Natural Science Foundation of China No. 12171406, Seed Funding Programme for Basic Research (HKU), and Basic Research Programme (JCYJ20180307151603959) of The Science, Technology and Innovation Commission of Shenzhen Municipality.

References

- [1] G. Bao, X. Ye, Y Zang, and H. Zhou. Numerical solution of inverse problems by weak adversarial networks. Inverse Problems, 36(11):115003, 2020.

- [2] Y. Bar-Sinai, S. Hoyer, J. Hickey, and M. Brenner. Learning data-driven discretizations of PDEs. Bulletin of the American Physical Society, 63, 2018.

- [3] M. Burger, L. Ruthotto, and S. Osher. Connections between deep learning and partial differential equations. European Journal of Applied Mathematics, 32(3):395–396, 2021.

- [4] Z. Cai, J. Chen, and M. Liu. Least-squares ReLU neural network (LSNN) method for linear advection-reaction equation. Journal of Computational Physics, page 110514, 2021.

- [5] Z. Cai, J. Chen, M. Liu, and X. Liu. Deep least-squares methods: An unsupervised learning-based numerical method for solving elliptic PDEs. Journal of Computational Physics, 420:109707, 2020.

- [6] R. Carmona and M. Lauriere. Convergence analysis of machine learning algorithms for the numerical solution of mean field control and games: I – The ergodic case. arXiv:1907.05980, 13 July 2019.

- [7] R. Carmona and M. Lauriere. Convergence analysis of machine learning algorithms for the numerical solution of mean field control and games: II – The finite horizon case. arXiv:1908.01613, 5 August 2019.

- [8] W. Chen, Q. Wang, J. Hesthaven, and C. Zhang. Physics-informed machine learning for reduced-order modeling of nonlinear problems. Journal of Computational Physics, 446:110666, 2021.

- [9] S. Childress and A. Gilbert. Stretch, Twist, Fold: The Fast Dynamo. Lecture Notes in Physics Monographs, No. 37, Springer, 1995.

- [10] N. Cohen, O. Sharir, and A. Shashua. On the expressive power of deep learning: A tensor analysis. In Conference on Learning Theory, pages 698–728, 2016.

- [11] M. Cuturi. Sinkhorn distances: Lightspeed computation of optimal transport. Advances in neural information processing systems, 26:2292–2300, 2013.

- [12] G. Cybenko. Approximation by superpositions of a sigmoidal function. Mathematics of control, signals and systems, 2(4):303–314, 1989.

- [13] W. E, J. Han, and A. Jentzen. Deep learning-based numerical methods for high-dimensional parabolic partial differential equations and backward stochastic differential equations. Communications in Mathematics and Statistics, 5(4):349–380, 2017.

- [14] W. E and B. Yu. The deep Ritz method: A deep learning-based numerical algorithm for solving variational problems. Communications in Mathematics and Statistics, 6(1):1–12, 2018.

- [15] S. Ellacott. Aspects of the numerical analysis of neural networks. Acta Numerica, 3:145–202, 1994.

- [16] M. Freidlin. Functional Integration and Partial Differential Equations. Princeton Univ. Press, 1985.

- [17] D. Galloway and M. Proctor. Numerical calculations of fast dynamos in smooth velocity fields with realistic diffusion. Nature, 356(6371):691–693, 1992.

- [18] I. Goodfellow, Y. Bengio, A. Courville, and Y. Bengio. Deep learning, volume 1. MIT press Cambridge, 2016.

- [19] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. Advances in neural information processing systems, 27, 2014.

- [20] J. Han, A. Jentzen, and W. E. Solving high-dimensional partial differential equations using deep learning. Proceedings of the National Academy of Sciences, 115(34):8505–8510, 2018.

- [21] J. He, L. Li, J. Xu, and C. Zheng. Relu deep neural networks and linear finite elements. Journal of Computational Mathematics, 38(3):502–527, 2020.

- [22] J. He and J. Xu. Mgnet: A unified framework of multigrid and convolutional neural network. Science China Mathematics, 62(7):1331–1354, 2019.

- [23] K. Hornik, M. Stinchcombe, and H. White. Multilayer feedforward networks are universal approximators. Neural networks, 2(5):359–366, 1989.

- [24] C. Kao, Y-Y Liu, and J. Xin. A Semi-Lagrangian Computation of Front Speeds of G-equation in ABC and Kolmogorov Flows with Estimation via Ballistic Orbits. arXiv:2012.11129, to appear in SIAM J. Multiscale Modeling and Simulation, 2021.

- [25] S. Karumuri, R. Tripathy, I. Bilionis, and J. Panchal. Simulator-free solution of high-dimensional stochastic elliptic partial differential equations using deep neural networks. Journal of Computational Physics, 404:109120, 2020.

- [26] Y. Khoo, J. Lu, and L. Ying. Solving parametric PDE problems with artificial neural networks. European Journal of Applied Mathematics, Special Issue 3: Connections between Deep Learning and Partial Differential Equations, 32:421–435, 2021.

- [27] I. Kobyzev, S. Prince, and M. Brubaker. Normalizing flows: An introduction and review of current methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- [28] A. Kolmogorov, I. Petrovsky, and N. Piskunov. Investigation of the equation of diffusion combined with increasing of the substance and its application to a biology problem. Bull. Moscow State Univ. Ser. A: Math. Mech, 1(6):1–25, 1937.

- [29] J.N. Kutz. Deep learning in fluid dynamics. Journal of Fluid Mechanics, 814(1-4), 2017.

- [30] I. Lagaris, A. Likas, and D. Fotiadis. Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans. Neural Netw., 9(5):987–1000, 1998.

- [31] Y. LeCun, Y. Bengio, and G. Hinton. Deep learning. Nature, 521(7553):436, 2015.

- [32] H. Lee. Neural algorithm for solving differential equations. Journal of Computational Physics, 91:110–131, 1990.

- [33] W. Li, P. Yin, and S. Osher. Computations of optimal transport distance with Fisher information regularization. Journal of Scientific Computing, 75(3):1581–1595, 2018.

- [34] Z. Li, N. Kovachki, K. Azizzadenesheli, B. Liu, K. Bhattacharya, A. Stuart, and A. Anandkumar. Fourier neural operator for parametric partial differential equations. arXiv preprint arXiv:2010.08895, 2020.

- [35] A. Lin, S. Fung, W.Li, L. Nurbekyana, and S. Osher. Alternating the population and control neural networks to solve high-dimensional stochastic mean-field games. PNAS, 118(31), e2024713118, 2021.

- [36] Z. Long, Y. Lu, and B. Dong. PDE-Net 2.0: Learning PDEs from data with a numeric-symbolic hybrid deep network. Journal of Computational Physics, 399:108925, 2019.

- [37] Z. Long, Y. Lu, X. Ma, and B. Dong. PDE-Net: Learning PDEs from data. International Conference on Machine Learning, pages 3208–3216, 2018.

- [38] Z. Long, P. Yin, and J. Xin. Global convergence and geometric characterization of slow to fast weight evolution in neural network training for classifying linearly non-separable data. Inverse Problems and Imaging, 15(1):41–62, 2021.

- [39] J. Lu, Z. Shen, H. Yang, and S. Zhang. Deep network approximation for smooth functions. SIAM Journal on Mathematical Analysis, 53(5):5465–5506, 2021.

- [40] L. Lu, P. Jin, and G. Karniadakis. Deeponet: Learning nonlinear operators for identifying differential equations based on the universal approximation theorem of operators. arXiv:1910.03193, 2019.

- [41] J. Lyu, Z. Wang, J. Xin, and Z. Zhang. A convergent interacting particle method and computation of KPP front speeds in chaotic flows. to appear in SIAM J. Numer. Anal., 2021.

- [42] J. Lyu, J. Xin, and Y. Yu. Computing residual diffusivity by adaptive basis learning via spectral method. Numerical Mathematics: Theory, Methods and Applications, 10(2):351–372, 2017.

- [43] J. Lyu, J. Xin, and Y. Yu. Computing residual diffusivity by adaptive basis learning via super-resolution deep neural networks. In: H. A. Le Thi, et al (eds), Advanced Computational Methods for Knowledge Engineering. ICCSAMA 2019. Advances in Intelligent Systems and Computing, 1121:279–290, 2020.

- [44] A. Meade and A. Fernandez. The numerical solution of linear ordinary differential equations by feedforward neural networks. Math. Comput. Model., 19(12):1–25, 1994.

- [45] H. Montanelli and Q. Du. New error bounds for deep ReLU networks using sparse grids. SIAM Journal on Mathematics of Data Science, 1(1):78–92, 2019.

- [46] J. Nolen, M. Rudd, and J. Xin. Existence of KPP fronts in spatially-temporally periodic advection and variational principle for propagation speeds. Dynamics of PDEs, 2(1):1–24, 2005.

- [47] G. Peyré and M. Cuturi. Computational optimal transport. Foundations and Trends in Machine Learning, 11(5-6):355–607, 2019.

- [48] A. Pinkus. Approximation theory of the MLP model in neural networks. Acta numerica, 8:143–195, 1999.

- [49] T. Qin, K. Wu, and D. Xiu. Data driven governing equations approximation using deep neural networks. Journal of Computational Physics, 395:620–635, 2019.

- [50] M. Raissi, P. Perdikaris, and G. Karniadakis. Multistep Neural Networks for Data-driven Discovery of Nonlinear Dynamical Systems. arXiv:1801.01236, 2018.

- [51] M. Raissi, P. Perdikaris, and G. Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, 2019.

- [52] S. Rudy, J.N. Kutz, and S. Brunton. Deep learning of dynamics and signal-noise decomposition with time-stepping constraints. Journal of Computational Physics, 396:483–506, 2019.

- [53] L. Ruthotto, S. Osher, W. Li, L. Nurbekyan, and S. Fung. A machine learning framework for solving high-dimensional mean field game and mean field control problems. Proceedings of the National Academy of Sciences, 117(17):9183–9193, 2020.

- [54] A. Schrijver. Combinatorial optimization: polyhedra and efficiency, volume 24. Springer Science & Business Media, 2003.

- [55] C. Schwab and J. Zech. Deep Learning in High Dimension. Research Report, vol. 2017, 2017.

- [56] L. Shen, J. Xin, and A. Zhou. Finite element computation of KPP front speeds in 3D cellular and ABC flows. Mathematical Modelling of Natural Phenomena, 8(3):182–197, 2013.

- [57] L. Shen, J. Xin, and A. Zhou. Finite element computation of KPP front speeds in cellular and cat’s eye flows. Journal of Scientific Computing, 55(2):455–470, 2013.

- [58] Z. Shen, H. Yang, and S. Zhang. Deep network with approximation error being reciprocal of width to power of square root of depth. Neural Computation, 33(4):1005–1036, 2021.

- [59] R. Sinkhorn. A relationship between arbitrary positive matrices and doubly stochastic matrices. The annals of mathematical statistics, 35(2):876–879, 1964.

- [60] J. Sirignano and K. Spiliopoulos. DGM: A deep learning algorithm for solving partial differential equations. Journal of Computational Physics, 375:1339–1364, 2018.

- [61] C. Villani. Topics in optimal transportation, volume 58. American Math. Soc., 2021.

- [62] Q. Wang, N. Ripamonti, and J. Hesthaven. Recurrent neural network closure of parametric POD-Galerkin reduced-order models based on the Mori-Zwanzig formalism. Journal of Computational Physics, 410:109402, 2020.

- [63] Y. Wang, S. Cheung, E. Chung, Y. Efendiev, and M. Wang. Deep multiscale model learning. Journal of Computational Physics, 406:109071, 2020.

- [64] Z. Wang and Z. Zhang. A mesh-free method for interface problems using the deep learning approach. Journal of Computational Physics, 400:108963, 2020.

- [65] S. Wright. Primal-dual interior-point methods. SIAM, 1997.

- [66] K. Wu and D. Xiu. Data-driven deep learning of partial differential equations in modal space. Journal of Computational Physics, page 109307, 2020.

- [67] J. Xin. An introduction to fronts in random media, volume 5. Springer Science & Business Media, 2009.

- [68] L. Yang, X. Meng, and G.E. Karniadakis. B-PINNS: Bayesian physics-informed neural networks for forward and inverse PDE problems with noisy data. J. Comp. Physics, 425:109913, 2021.

- [69] D. Yarotsky. Error bounds for approximations with deep ReLU networks. Neural Networks, 94:103–114, 2017.

- [70] G. Yoo and H. Owhadi. Deep regularization and direct training of the inner layers of neural networks with kernel flows. arXiv:2002.08335, 2020.

- [71] Y. Zang, G. Bao, X. Ye, and H. Zhou. Weak adversarial networks for high-dimensional partial differential equations. Journal of Computational Physics, 411:109409, 2020.

- [72] Y. Zhu and N. Zabaras. Bayesian deep convolutional encoder-decoder networks for surrogate modeling and uncertainty quantification. Journal of Computational Physics, 366:415–447, 2018.

- [73] Y. Zhu, N. Zabaras, P. Koutsourelakis, and P. Perdikaris. Physics-constrained deep learning for high-dimensional surrogate modeling and uncertainty quantification without labeled data. Journal of Computational Physics, 394:56–81, 2019.

- [74] P. Zu, L. Chen, and J. Xin. A computational study of residual KPP front speeds in time-periodic cellular flows in the small diffusion limit. Physica D, 311:37–44, 2015.