\ul

11institutetext: Deakin University, Australia

11email: {yanbo,changtsun.li,xuequan.lu}@deakin.edu.au

Deepfake Detection via Joint Unsupervised Reconstruction and Supervised Classification

Abstract

Deep learning has enabled realistic face manipulation for malicious purposes (i.e., deepfakes), which poses significant concerns over the integrity of the media in circulation. Most existing deep learning techniques for deepfake detection can achieve promising performance in the intra-dataset evaluation setting (i.e., training and testing on the same dataset), but are unable to perform satisfactorily in the inter-dataset evaluation setting (i.e., training on one dataset and testing on another). Most of the previous methods use the backbone network to extract global features for making predictions and only employ binary supervision (i.e., indicating whether the training instances are fake or authentic) to train the network. Classification merely based on the learning of global features often leads to weak generalizability to deepfakes attributed to unseen manipulation methods. Previous methods also use a reconstruction process to improve the learned representation. However, they did not consider the merit of combining the reconstruction task with the classification task. In this paper, we introduce a novel approach for deepfake detection, which considers the reconstruction and classification tasks simultaneously to address these problems. This method shares the information learned by one task with the other, each focusing on different aspects, and hence boosts the overall performance. In particular, we design a two-branch Convolutional AutoEncoder (CAE), in which the Convolutional Encoder used to compress the feature map into a latent representation is shared by both branches. The latent representation of the input data is then fed to a simple classifier and the unsupervised reconstruction component simultaneously. Our network is trained end-to-end. Experiments demonstrate that our method achieves state-of-the-art performance on three commonly-used datasets, particularly in the cross-dataset evaluation setting.

Keywords:

Deepfake detection Unsupervised Joint learning.1 Introduction

Recent deep learning techniques have enabled the generation of realistic face images and the synthesis of tampered videos. The evolution of such techniques allows attackers or malicious users to forge videos/images by replacing the original content with alternative versions, which poses security and privacy threats to the society. Therefore, it is of great importance to investigate innovative approaches to detect manipulated faces.

Several existing detection methods for face manipulation are based on visual cues produced by traditional manipulation methods. Yang et al. [34] proposed a detection approach that analyzes the abnormality of head poses to differentiate the original and deepfakes. Li et al. [21] suggested that the frequency of eye blinking is a good hint to determine if an image/video is manipulated. Li et al. [20] utilized the biological characteristic (eye movement) of the target face to detect deepfake video. Although these methods show promising performance on some benchmark datasets, they are designed for specific visual artifacts caused by the manipulation process. As a result, the performance of their methods drops dramatically when the clues are removed or when the manipulation artifacts are different from the specific artifacts the methods are designed to detect. With the technical evolution of manipulation, a main focus on deepfake detection is deep learning. The method proposed in [4, 5, 9] has shown that deep neural networks are effective in distinguishing tampered images from the original ones by capturing discriminative features and local patterns of images. These local patterns are then transformed by the Global Average Pooling into a scalar, resulting in losing the local information. This scalar value is then used for classification in the final stage, meaning the local features do not really contribute to the final decision-making. Therefore, the detection accuracy of those methods mentioned above degrades significantly when tested on cross datasets because the learned features are strongly related to the specific manipulations used to generate the training data. It also incurs huge time delay to retrain the model. The afore-mentioned observations suggest that it is crucial to exploit not only global features of the faces [37, 28] but also integrate the local face information learned from auxiliary supervision, e.g., unsupervised learning [16] into classification.

In this paper, we propose a novel method called Joint DeepFake Detector(JDFD) that employ the Convolutional Neural Network (CNN)-based autoencoder for reconstruction and a shallow linear network for classification, respectively. In our framework, we use both supervised and unsupervised learning in a complementary fashion. Supervised learning is used to minimise the classification task loss, while unsupervised learning is used for reconstructing the input data without knowing the labels. The reconstruction task uses the shared encoder to learn the representation of the input images’ features and helps to enhance the generalization ability across different datasets (i.e., cross-dataset evaluation). The framework can be trained end-to-end in a jointly supervised and unsupervised manner. Comprehensive experiments on three datasets allowing (3 intra-dataset evaluations and 6 cross-dataset evaluations) demonstrate the effectiveness of our method, and show that our method achieves state-of-the-art performance on deepfake detection.

In summary, our main contributions are as follows:

-

•

We introduce a two-branch Convolutional Autoencoder framework for deepfake detection, which considers both supervised classification and unsupervised reconstruction. Auxiliary supervision is adopted to assist the encoder to extract robust feature representation.

-

•

We propose to combine the learning of local and global spatial information from the reconstruction and classification tasks, which promotes the ability of mapping the feature representation of the shared encoder. This improves the generalizability of the network to unknown manipulated patterns.

2 Related Work

2.1 Face Manipulation Methods

Computer vision approaches are widely used for transferring facial expression and features by reconstructing 3D models for both source and target faces, and then exploring the correspondence among face geometry to warp between these two faces. Thies et al. [31] proposed a method to swap facial expression with an RGB-D camera. Face2Face [32] is a face reenactment system that uses only an RGB camera to manipulate facial expression in real-time. The extended work [14] even transfers more attributes other than expressions such as head position, eye blinking, and rotation from the source individual to a target individual in a video. FaceSwap [17] transfers the source individual’s features into the target face while preserving the expressions and head pose. Recently, deep learning-based techniques have attracted lots of attention in synthesizing or manipulating face images. Zhmoginov et al. [38] proposed a deep learning approach that utilizes the neural network to invert low-dimension face embedding for producing realistic face images. This technique is further extended to the mobile application called FaceApp [1], which can selectively edit facial attributes. Another branch of deep learning techniques is GAN-based methods. For example, ProGAN [13] achieves high-resolution face image synthesis by using progressively growing generator and detector. Although intended for age-oriented face synthesis, the Conditional Discriminator Pool proposed by Wang et al. [33] can be easily adapted for deepfake creation. Shao et al. [29] propose a facial expression transfer framework that specifically swaps the fine-grained expression of two unpaired images while well preserving other natural attributes. In [10], the authors introduce a stage-wise framework with an auto-encoder semi-learning manner. It predicts the image target boundary using pose and expression vector, where the boundary and input image are encoded into a latent space using two encoders. Then the input structure and texture are separated using the LightCNN network, and the concatenation of the boundary and input representation is decoded to produce the target synthesis. They considerably reduce the correlation bias in transferring poses and expressions for high-resolution input.

2.2 Deepfake Detection Methods

With the increasing magnitude of negative impacts on privacy and information security due to face forgery, researchers are racing to develop effective countermeasures. Some early works consider artifacts left by face manipulation methods as visual cues, e.g., unnatural eye blinking [21], abnormality of head pose [34], and biological signals from synthesized video [7]. Li et al. [22] proposed a detection method based on exploiting face warping artifacts from the manipulation pipeline. However, all these methods share a common drawback that these artifacts become invalid once the manipulation methods evolve. As the learning-based methods become popular, a recent study [27] proposes the deep learning-based framework that learns the features from the spatial domain and achieves outstanding performance on specific datasets. Zhou et al. [39] proposed a two-stream network to extract steganalysis features for tampered face detection. The work presented in [35] divides the original image into many patches where each patch is a particular facial region. The learned sub-feature maps of these patches are taken as input to the patch-based detector for classification. But their methods only consider a specific manipulation during training, thus, the model is not robust enough when tested on unseen face forgery datasets. Face X-ray [19] improves the generalization ability to detect fake faces without using fake images generated by existing manipulation methods. However, this method focuses on analyzing the artifacts left in the image by the post-processing step only: the blending step. While the artifacts due to the blending process are useful, other useful artifacts due to the processes at the prior stages in the manipulation pipeline are ignored. Discovering the intrinsic representation between samples from the same class is more useful for detecting general deepfake. By integrating Xception [6] with a triplet loss in [9], Feng et al. demonstrated the feasibility of their method in detecting deepfakes. In [11], Feng et al. applies the concept of unsupervised contrastive learning to manipulated face forensics by employing Xception as an encoder network. This method is divided into two training stages: 1) pre-training of the encoder using the original face data and its transformed version as a pair with a contrastive loss, followed by 2) the training of a simple classifier using the output from the encoder. It’s worth noting one merit of this method is that they train the encoder without using the ground truth. Although good performance can be observed in intra-data settings, there is still a significant performance gap when their method is applied in cross-data settings. Zhang et al. [36] introduced a self-supervised decoupling network(SDNN) to learn compression and authenticity features. The goal is to normalise the model using various compression rates in order to improve the classification performance of the authenticity classifier. However, the model might not be robust to unseen compression rates since the range of the compression ratios is variable and difficult to predict. In [18], a frequency-aware discriminative feature learning framework (FDFL) is proposed to minimize intra-class variations of authentic faces while increasing inter-class distance in the embedding space and compensate for the low efficacy of handcrafted features for forgery detection. The single-centre loss (SCL) is presented to drag real face features to the centre and push the manipulated features away. However, the model does not perform well on unseen datasets.

3 Proposed Method

Our proposed method produces two outputs including the probabilities and the reconstructed sample of the input. The framework consists of two steps: data pre-processing, and coupled supervised training (classification) and unsupervised training (reconstruction). Previous methods mentioned in Section 2.2 are dedicated to exploiting the imperfections of a specific manipulation for deepfake detection and neglect the generalization ability in detecting new manipulations. Thus, extracting the discriminative feature representation of the images is essential for differentiating their authenticity. As such, we utilize supervised and unsupervised learning in a complementary fashion to enable the framework to learn more discriminative features which could be explicitly classified by a linear layer network. This approach makes the network robust to unseen face data even if the tampered method is different from the training data. Face-centred images are extracted in the pre-processing step and resized to pixels. Each input is encoded in a high-level latent space through a Convolutional Autoencoder (CAE). We then input the high-level features to the decoder and the classifier simultaneously. Fig. 1 shows the overview of our method.

3.1 Data Pre-processing

Most of the public face manipulation datasets are stored in the format of video. The face area only takes up a small proportion section in manipulated videos, which would raise difficulties for learning features and predicting probabilities. Since the purpose of deepfake is to swap the face or edit the attributes in the face region, locating the face region of each frame in the video and narrowing down the range of the bounding box to the face centre is necessary. In this paper, we use a popular face extractor Dlib [15] to locate the face region in each frame sampled from videos according to 68 facial landmarks and crop the image using a bounding box. To preserve as many tampered traces as possible, the faces are aligned at a centre position to incorporate the spatial information and eliminate the variance of head poses. Finally, the cropped-out faces will go through the transformation procedures (e.g., resize, normalization). The pre-processing allows our framework to work with different resolutions and postures.

3.2 Two-Branch Autoencoder

Extracting representations effectively is critical for determining whether face images are real or fake. Different from conventional autoencoder, Convolutional Autoencoder (CAE) applies convolutional layers to learn representation by sharing the same weights among all locations in each input channel, which can preserve spatial locality [24]. As such, we employ a CAE to extract more distinguishable representations, which are beneficial in separating true and fake images in a high-dimensional space. To force the network to disentangle two different tasks, we feed the latent vector to a shallow linear network to handle the decision while the decoder restores the latent vector to reconstruct the original input by learning the local features of the input. With the combined supervised and unsupervised training, the distinguishable information associated with their classes (real or fake) is separated accordingly. The decoder upsamples the latent vector and reconstructs a realistic sample by learning the local spatial features of input data. Hence, the reconstruction task reinforces the shared encoder to extract robust features. The two-branch autoencoder design ensures the network shares useful information from both the reconstruction and classification tasks, yielding more robust forgery detection.

In this work, the CAE, denoted by g, consists of two sub-components, namely the encoder () and the decoder (). The face dataset, denoted as X, includes real and fake face images and their corresponding labels , where , is the size, and . Each training image is taken as input to the encoder for conversion into different low-dimensional feature maps that are projected to the latent vector space to produce the latent vector , where z is the dimension of v.

| (1) |

where denotes parameters for the encoder. Then, the latent vector z is sent to the decoder and a linear classifier C(), simultaneously as shown in Fig 1. The decoder decodes the latent vector v to reconstruct the input image while the linear classifier estimates the probabilities of v being real or fake.

| (2) |

where and are weights of the decoder and classifier, respectively. Note that we only adopt a shallow linear neural network for the classifier because the full-connected layers have a large number of trainable parameters, which are capable of fitting global spatial features rather than relying on the local features generated by convolutional layers. To this end, it is suitable to classify the distributed feature representation encoded in the latent space. Then the loss information is back-propagated to the encoder to support the extraction of effective representations.

3.3 Loss Function

During the training phase, we employ the jointly supervised and unsupervised fashion to optimize the two-branch autoencoder. We enforce the cross-entropy loss on the predicted authenticity label of the images and the Mean Square Error (MSE) loss on the images reconstructed from the autoencoder:

| (3) |

where and weigh the influence of each loss term. The network is end-to-end trainable with .

The cross-entropy loss is to measure the difference between the predicted label and the ground-truth label corresponding to input . The classifier network is used to predict the probability of each image : . Let denote the number of instances. This loss is defined as:

| (4) |

The reconstruction loss measures the Euclidean distance between the original image and the reconstructed image , which is produced by :

| (5) |

4 Experiments

4.1 Datasets

We evaluate our proposed method on three different face manipulation datasets: UADFV [22], FaceForensics++ (FF++) [27], and Celeb-DF (version2) [23]. The UADFV is a small but commonly used dataset, which includes 49 pristine videos and 49 manipulated videos. Celeb-DF is a relatively large face forgery dataset with the forged images generated with advanced Deepfake algorithms to minimize the perceptibility of the artifacts, such as temporal flickering frames and color inconsistency. FF++ dataset contains 1,000 real videos collected from online archives and their corresponding forged versions generated with four different manipulation methods (DeepFake [2], Face2Face, FaceSwap [3], and NeuralTexture [30]). For simplicity, we use light compression (c23) [27] as our training data.

The number of images extracted from each dataset is set to 300 frames per video using the pre-processing introduced in Section 3.1. Table 1 shows the detailed volume of each dataset we used for training in our experiments.

| Datasets | Train | Test | ||

|---|---|---|---|---|

| Real | Fake | Real | Fake | |

| Celeb-DF | 152786 | 147901 | 26834 | 26108 |

| FaceForensics++ | 117218 | 124429 | 20795 | 21995 |

| UADFV | 9881 | 9886 | 1740 | 1723 |

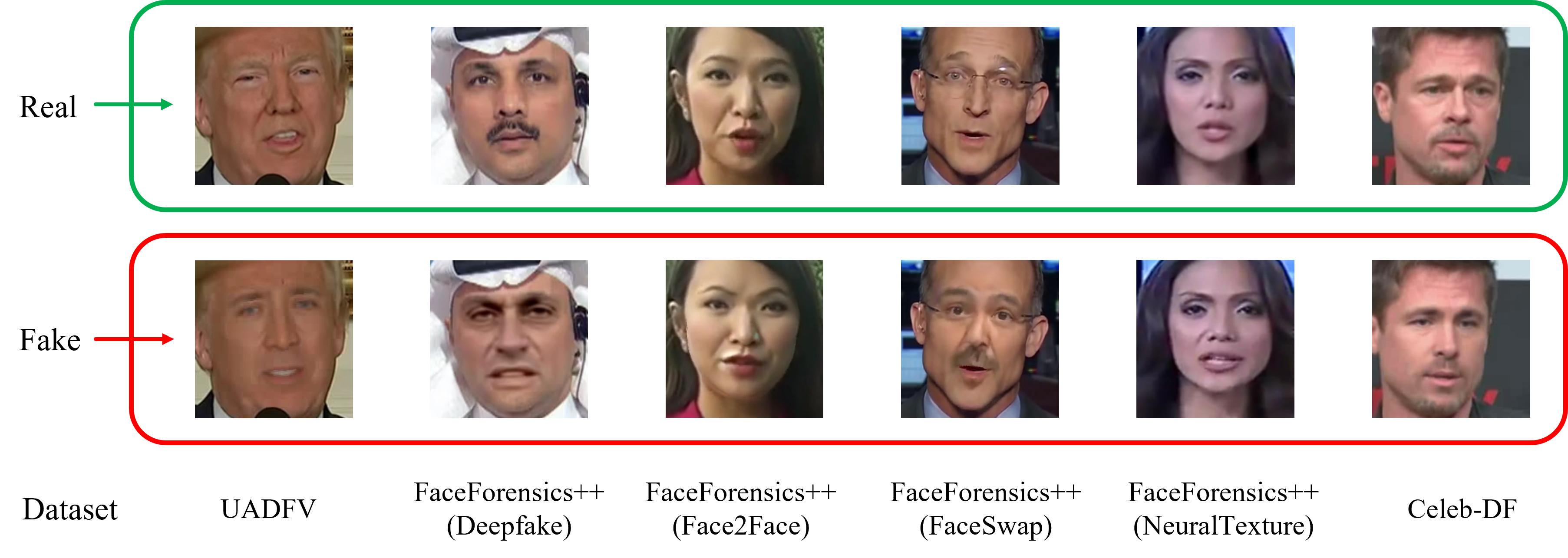

We show some examples of pre-processed images from UADFV, Celeb-DF, and each category of the FF++ dataset in Fig. 2. The listed images show that they are at the similar visual quality level (e.g., brightness, image resolution). However, the fake images in UADFV are easier to be distinguished by human eyes because of the blending boundary discrepancies, unnatural facial expressions, and color inconsistency.

4.2 Implementation Details

For image pre-processing, we use Dlib [15] as explained in Section 3.1 to detect face regions, crop the faces, and resize the images to . In this work, we adopt Xception [6] as the backbone of the shared encoder. The structure of the decoder and shallow linear classifier are pre-defined. Following each deconvolutional layer are a batch normalization [12] and a rectified linear unit (ReLU) [25] except the last deconvolutional layer. We resize the reconstructed images to 299 299 using interpolation with the bi-linear mode. For the classifier network, we apply ReLU after each fully connected layer excluding the last dense layer. The network is trained with the SGD optimizer with the learning rates of the convolutional autoencoder and the classification network set to 0.005 and 0.0004, respectively. We also add a scheduler on our optimizer with step size 5 and descending rate. The batch size is set to in our experiments. We empirically set and .

4.3 Comparison with Previous Methods

In this section, we compare our proposed framework, JDFD with state-of-the-art face forgery detection methods. We provide intra-dataset and cross-dataset evaluations on UADFV, FF++ and Celeb-DF. AUC (area under receiver operating characteristic curve) is adopted as the metric to evaluate the performance.

| Methods | Train data | FF++ | UADFV | Celeb-DF |

|---|---|---|---|---|

| Xception[27] | FF++ | 99.7 | 80.4 | 48.2 |

| Capsule[26] | FF++ | 96.6 | 61.3 | 57.5 |

| Xception+Tri.[9] | FF++ | \ul99.9 | 74.3 | 61.7 |

| Xception[23] | UADFV | - | 96.8 | 52.2 |

| Xception+Reg.[8] | UADFV | - | 98.4 | 57.1 |

| Xception+Tri.[9] | UADFV | 61.3 | \ul99.9 | 60.0 |

| Headpose[34] | UADFV | 47.3 | 89.0 | 54.6 |

| FWA[22] | UADFV | 80.1 | 97.4 | 56.9 |

| Xception+Tri. [9] | Celeb-DF | 60.2 | 88.9 | \ul99.9 |

| JDFD | FF++ | 98.2 | 92.9 | 78.0 |

| JDFD | UADFV | 61.0 | \ul99.9 | 64.9 |

| JDFD | Celeb-DF | 61.1 | 88.4 | 97.1 |

From Table 2, we can observe the AUC values by different approaches. Regarding the evaluation result from the model trained on FF++, our method is weaker than Xception and weaker than Xception+Tri when tested on the FF++ dataset. For the cross-dataset setting, we observe a dramatic performance improvement of our method when tested on UADFV and Celeb-DF (e.g., outperforming Xception by on UADFV and Xception+Tri by on Celeb-DF). Because the decoder models the distribution of the latent vector corresponding to each input data, the reconstruction loss reinforces the learning of input data’s inherent features of the category it belongs to. To this end, our joint supervised and unsupervised learning enables the encoder to learn robust representations, allowing it to generalize better to unknown forged patterns. Celeb-DF is a challenging Deepfake dataset among these three in terms of quality, quantity, and diversity. For results from the model trained on UADFV, we reach the state-of-the-art performance in the intra-dataset setting. Also, our method outperforms the best-performing method of others by on Celeb-DF. However, FWA [22] achieves excellent AUC on the FF++ dataset which is higher than our method. This is similar to [9], which is because their method [22] focuses on specific face discrepancies due to the affine transformations employed by various deepfake generation methods used to generate some benchmarking datasets (e.g., UADFV, FF++). To enhance our method’s generalization power, we do not design it to detect specific manipulations but to learn both local batch patterns and global spatial information. Therefore, it might be slightly weaker than these methods in some specific cases. Given the fact that the deepfake generation algorithms evolve, countermeasures designed for detecting specific manipulations will soon become unfit for purposes in the near future. Celeb-DF is a more challenging dataset for deepfake detection. When trained on Celeb-DF, the result of our method is still competitive when compared to Xception+Tri [9]. With the performance on the cross-dataset setting, our method is 0.9% higher on FF++ and weaker on UADFV. As our method considers the learned local features into classification rather than just determining global features, the performance is better on FF++ (containing four different manipulation methods) demonstrating that our method improved the generalizability of the model. In summary, our method generally achieves better results on the cross-dataset evaluation setting (e.g., it is the best performer in 4 of the 6 tests).

The ROC curves of the corresponding AUCs of our method are also shown in Fig. 3. The diagonal line indicates the baseline result from random classification (50% in binary classification). Thus, the curve further away from the diagonal line from above indicates better performance.

4.4 Ablation Study

In this section, we show two ablation studies: the effect of unsupervised learning, and the effect of data augmentation from other datasets.

4.4.1 Effect of unsupervised learning

To evaluate the contributions of unsupervised learning, we set up a baseline model to compare with our proposed framework. The baseline model has the same architecture but without the decoder. In Table 3, we report the results of these two models. With the unsupervised learning branch, our model has achieved obviously better performance on the intra-dataset evaluation of UADFV, FF++, and Celeb-DF, with 0.4%, 0.8%, and 1.5% improvement, respectively. The generalization capability to new forms of manipulation becomes stronger, as seen by the results of our method outperforming the baseline model on all the cross-dataset settings. When trained on UADFV, the AUCs of our method are 2.6 % and 1.5% higher when tested on FF++ and Celeb-DF, respectively. There is a significant increase (12.2%) when trained on FF++ and tested on UADFV, and a moderate increase (2.6%) when trained on FF++ and tested on Celeb-DF. The performance of our method increases to 88.4% when trained on Celeb-DF and tested on UADFV, which is 5.3% higher than the results of the baseline model. So, the proposed combination of supervised learning and unsupervised learning enables the shared encoder to not only extract the local feature by sliding the window but also learn the relationship between samples by mining the inherent representation of the data. Then the compressed latent vector is beneficial to determine the authenticity of the input sample.

| Train data | Method | UADFV | FF++ | Celeb-DF |

|---|---|---|---|---|

| UADFV | Baseline | 99.5 | 58.4 | 63.7 |

| JointDeepfake | 99.9 | 61.0 | 65.2 | |

| FF++ | Baseline | 80.7 | 97.4 | 75.4 |

| JointDeepfake | 92.9 | 98.2 | 78.0 | |

| Celeb-DF | Baseline | 83.0 | 59.7 | 95.6 |

| JointDeepfake | 88.4 | 61.1 | 97.1 |

4.4.2 Data augmentation from other datasets

It is interesting to evaluate the generalization capability of the proposed method when new data is added. We add more images from the other two datasets other than the current dataset used for training. Note that the labels of the added images are assumed to be unknown during training. This study involves three schemes. 1) We randomly choose 5 images from each of the other two datasets (e.g., around 15,000 images from FF++). 2) We increase the augmented foreign data to 10. 3) The augmented foreign data is increased to 15. The results are shown in Fig. 4. In each augmented data group from each dataset, the ratio of real/fake images is around 50%. We train the model on FF++ with the configuration demonstrated in Section 4.2. The results demonstrate that the AUC generally increases with respect to the amount of augmented data. When 15% foreign data is added to our framework for training, the performance on UADFV improves by 6.5 when compared to the case of 5 cross data. Similarly, the performance for Celeb-DF improves significantly to 79.6%, which is 4.9% higher. When tested on the FF++ itself, the result of adding 15% foreign data drops by around 0.8% compared with the best result achieved in this study. This is understandable as adding foreign data will affect the training due to the added diversity introduced into FF++, but will naturally enhance the cross-dataset evaluations due to the augmentation of foreign data itself.

5 Conclusion

In this paper, we introduce a two-branch autoencoder with jointly unsupervised and supervised learning for deepfake detection. The shared encoder is optimized by receiving the back-propagated information from the reconstruction task and classification task, which enables the encoder to learn the representations effectively. Experimental results demonstrate that joint supervised and unsupervised learning can effectively promote the encoder’s efficacy in projecting discriminative features onto the latent space and differentiate the representations between real and forgery samples. These latent vectors are fed into the shallow linear network for prediction. It is able to perform well in deepfake detection, in particular in the inter-dataset evaluation settings. Future work will focus on developing an attention module in our framework to locate different forgery regions, which helps to disentangle more potential clues in the latent space for the detector and improve the ability of the framework to cope with seen and unseen face manipulation.

References

- [1] Faceapp. https://www.faceapp.com (2018), accessed on:15/12/2021

- [2] Deepfakes github. https://github.com/deepfakes/faceswap (2019), accessed on:15/12/2021

- [3] Faceswap github. https://github.com/MarekKowalski/FaceSwap (2019), accessed on:15/12/2021

- [4] Afchar, D., Nozick, V., Yamagishi, J., Echizen, I.: Mesonet: a compact facial video forgery detection network. In: 2018 IEEE international workshop on information forensics and security (WIFS). pp. 1–7. IEEE (2018)

- [5] Bayar, B., Stamm, M.C.: A deep learning approach to universal image manipulation detection using a new convolutional layer. In: Proceedings of the 4th ACM workshop on information hiding and multimedia security. pp. 5–10 (2016)

- [6] Chollet, F.: Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1251–1258 (2017)

- [7] Ciftci, U.A., Demir, I., Yin, L.: Fakecatcher: Detection of synthetic portrait videos using biological signals. IEEE Transactions on Pattern Analysis and Machine Intelligence (2020)

- [8] Dang, H., Liu, F., Stehouwer, J., Liu, X., Jain, A.K.: On the detection of digital face manipulation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern recognition. pp. 5781–5790 (2020)

- [9] Feng, D., Lu, X., Lin, X.: Deep detection for face manipulation. In: International Conference on Neural Information Processing. pp. 316–323. Springer (2020)

- [10] Fu, C., Hu, Y., Wu, X., Wang, G., Zhang, Q., He, R.: High-fidelity face manipulation with extreme poses and expressions. IEEE Transactions on Information Forensics and Security 16, 2218–2231 (2021)

- [11] Fung, S., Lu, X., Zhang, C., Li, C.T.: DeepfakeUCL: Deepfake detection via unsupervised contrastive learning. In: Proceedings of the International Joint Conference on Neural Networks (IJCNN). pp. 1–8. IEEE (2021)

- [12] Ioffe, S., Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning. pp. 448–456. PMLR (2015)

- [13] Karras, T., Aila, T., Laine, S., Lehtinen, J.: Progressive growing of gans for improved quality, stability, and variation. arXiv preprint arXiv:1710.10196 (2017)

- [14] Kim, H., Garrido, P., Tewari, A., Xu, W., Thies, J., Niessner, M., Pérez, P., Richardt, C., Zollhöfer, M., Theobalt, C.: Deep video portraits. ACM Transactions on Graphics (TOG) 37(4), 1–14 (2018)

- [15] King, D.E.: Dlib-ml: A machine learning toolkit. The Journal of Machine Learning Research 10, 1755–1758 (2009)

- [16] Kong, C., Chen, B., Li, H., Wang, S., Rocha, A., Kwong, S.: Detect and locate: Exposing face manipulation by semantic-and noise-level telltales. IEEE Transactions on Information Forensics and Security 17, 1741–1756 (2022)

- [17] Korshunova, I., Shi, W., Dambre, J., Theis, L.: Fast face-swap using convolutional neural networks. In: Proceedings of the IEEE international conference on computer vision. pp. 3677–3685 (2017)

- [18] Li, J., Xie, H., Li, J., Wang, Z., Zhang, Y.: Frequency-aware discriminative feature learning supervised by single-center loss for face forgery detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 6458–6467 (2021)

- [19] Li, L., Bao, J., Zhang, T., Yang, H., Chen, D., Wen, F., Guo, B.: Face x-ray for more general face forgery detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 5001–5010 (2020)

- [20] Li, M., Liu, B., Hu, Y., Wang, Y.: Exposing deepfake videos by tracking eye movements. In: 2020 25th International Conference on Pattern Recognition (ICPR). pp. 5184–5189. IEEE (2021)

- [21] Li, Y., Chang, M.C., Lyu, S.: In ictu oculi: Exposing ai generated fake face videos by detecting eye blinking. arXiv preprint arXiv:1806.02877 (2018)

- [22] Li, Y., Lyu, S.: Exposing deepfake videos by detecting face warping artifacts. arXiv preprint arXiv:1811.00656 (2018)

- [23] Li, Y., Sun, P., Qi, H., Lyu, S.: Celeb-DF: A Large-scale Challenging Dataset for DeepFake Forensics. In: IEEE Conference on Computer Vision and Patten Recognition (CVPR). Seattle, WA, United States (2020)

- [24] Masci, J., Meier, U., Cireşan, D., Schmidhuber, J.: Stacked convolutional cuto-encoders for hierarchical feature extraction. In: International conference on artificial neural networks. pp. 52–59. Springer (2011)

- [25] Nair, V., Hinton, G.E.: Rectified linear units improve restricted boltzmann machines. In: Icml (2010)

- [26] Nguyen, H.H., Yamagishi, J., Echizen, I.: Capsule-forensics: Using capsule networks to detect forged images and videos. In: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 2307–2311. IEEE (2019)

- [27] Rossler, A., Cozzolino, D., Verdoliva, L., Riess, C., Thies, J., Nießner, M.: Faceforensics++: Learning to detect manipulated facial images. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 1–11 (2019)

- [28] Schwarcz, S., Chellappa, R.: Finding facial forgery artifacts with parts-based detectors. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 933–942 (2021)

- [29] Shao, Z., Zhu, H., Tang, J., Lu, X., Ma, L.: Explicit facial expression transfer via fine-grained semantic representations (2019)

- [30] Thies, J., Zollhöfer, M., Nießner, M.: Deferred neural rendering: Image synthesis using neural textures. ACM Transactions on Graphics (TOG) 38(4), 1–12 (2019)

- [31] Thies, J., Zollhöfer, M., Nießner, M., Valgaerts, L., Stamminger, M., Theobalt, C.: Real-time expression transfer for facial reenactment. ACM Trans. Graph. 34(6), 183–1 (2015)

- [32] Thies, J., Zollhofer, M., Stamminger, M., Theobalt, C., Nießner, M.: Face2face: Real-time face capture and reenactment of rgb videos. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2387–2395 (2016)

- [33] Wang, H., Sanchez, V., Li, C.T.: Age-oriented face synthesis with conditional discriminator pool and adversarial triplet loss. IEEE Transactions on Image Processing 30, 5413–5425 (2021)

- [34] Yang, X., Li, Y., Lyu, S.: Exposing deep fakes using inconsistent head poses. In: ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). pp. 8261–8265. IEEE (2019)

- [35] Yu, M., Ju, S., Zhang, J., Li, S., Lei, J., Li, X.: Patch-dfd: Patch-based end-to-end deepfake discriminator. Neurocomputing (2022)

- [36] Zhang, J., Ni, J., Xie, H.: Deepfake videos detection using self-supervised decoupling network. In: 2021 IEEE International Conference on Multimedia and Expo (ICME). pp. 1–6. IEEE (2021)

- [37] Zhao, H., Zhou, W., Chen, D., Wei, T., Zhang, W., Yu, N.: Multi-attentional deepfake detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 2185–2194 (2021)

- [38] Zhmoginov, A., Sandler, M.: Inverting face embeddings with convolutional neural networks. arXiv preprint arXiv:1606.04189 (2016)

- [39] Zhou, P., Han, X., Morariu, V.I., Davis, L.S.: Two-stream neural networks for tampered face detection. In: 2017 IEEE conference on computer vision and pattern recognition workshops (CVPRW). pp. 1831–1839. IEEE (2017)