oddsidemargin has been altered.

textheight has been altered.

marginparsep has been altered.

textwidth has been altered.

marginparwidth has been altered.

marginparpush has been altered.

The page layout violates the UAI style.

Please do not change the page layout, or include packages like geometry,

savetrees, or fullpage, which change it for you.

We’re not able to reliably undo arbitrary changes to the style. Please remove

the offending package(s), or layout-changing commands and try again.

Deep Sigma Point Processes

Abstract

We introduce Deep Sigma Point Processes, a class of parametric models inspired by the compositional structure of Deep Gaussian Processes (DGPs). Deep Sigma Point Processes (DSPPs) retain many of the attractive features of (variational) DGPs, including mini-batch training and predictive uncertainty that is controlled by kernel basis functions. Importantly, since DSPPs admit a simple maximum likelihood inference procedure, the resulting predictive distributions are not degraded by any posterior approximations. In an extensive empirical comparison on univariate and multivariate regression tasks we find that the resulting predictive distributions are significantly better calibrated than those obtained with other probabilistic methods for scalable regression, including variational DGPs—often by as much as a nat per datapoint.

1 INTRODUCTION

As machine learning becomes utilized for increasingly high risk applications such as medical treatment suggestion and autonomous driving, calibrated uncertainty estimation becomes critical. As a result, substantial effort has been devoted to the development of flexible probabilistic models. Gaussian Processes (GPs) have emerged as an important class of models in cases where predictive uncertainty estimates are essential (Rasmussen, 2003). Unfortunately, the simplest GP models often fail to match the expressive power of their neural network counterparts.

This limited flexibility has motivated the introduction of Deep Gaussian Processes (DGPs), which compose multiple layers of latent functions to build up more flexible function priors (Damianou and Lawrence, 2013). While this class of models has shown considerable promise, inference remains a serious challenge, with empirical evidence suggesting—not surprisingly—that posterior approximations (e.g. factorizing across layers) can degrade the performance of the predictive distribution (Havasi et al., 2018). Indeed, targeting posterior approximations as an optimization objective may fail both to achieve good predictive performance and to faithfully approximate the exact posterior. Clearly, this motivates investigating alternative approaches.

In this work we take a different approach to constructing flexible probabilistic models. In particular we formulate a class of fully parametric models that retain many of the attractive features of variational deep GPs, while posing a much easier (maximum likelihood) inference problem. This pragmatic approach is motivated by the recognition that—especially in settings where predictive performance is paramount—it is essential to consider the interplay between modeling and inference. In particular, as motivated above, a compelling class of models may be of limited predictive utility if approximations in the inference procedure severely degrade the posterior predictive distribution. Conversely, it can be a significant advantage if a class of models admits a simple inference procedure.

Similarly to variational deep GPs, our regression models utilize predictive distributions that are mixtures of Normal distributions whose mean and variance functions make use of hierarchical composition and kernel interpolation. In contrast to deep GPs, however, our parametric perspective allows us to directly target the predictive distribution in the training objective. As we show empirically in Sec. 5 the model we introduce—the Deep Sigma Point Process (DSPP)—exhibits excellent predictive performance and dramatically outperforms a number of strong baselines, including Deep Kernel Learning (Calandra et al., 2016; Wilson et al., 2016) and variational Deep Gaussian Processes (Salimbeni and Deisenroth, 2017).

2 BACKGROUND

This section is organized as follows. In Sec. 2.1-2.2 we review the basics of Gaussian Processes and inducing point methods. In Sec. 2.3 we review Deep Gaussian Processes. In Sec. 2.4 we review PPGPR (Jankowiak et al., 2020), as it serves as motivation for DSPPs in Sec. 3. We also use this section to establish our notation.

2.1 GAUSSIAN PROCESS REGRESSION

In probabilistic modeling Gaussian Processes offer flexible non-parametric function priors that are useful in various regression and classification tasks (Rasmussen, 2003). For a given input space GPs are entirely specified by a kernel and a mean function . Different choices of and permit the modeler to encode prior information about the generative process. In the prototypical case of univariate regression111Note that here and throughout this work we focus on the regression case. the joint density takes the form

| (1) |

where are the real-valued targets, are the latent function values, are the inputs with , is a multivariate Normal distribution with covariance , and is the variance of the Normal likelihood . The marginal likelihood takes the form

| (2) |

Eqn. 2 can be computed analytically, but doing so is computationally prohibitive for large datasets, necessitating approximate methods when is large.

2.2 SPARSE GAUSSIAN PROCESSES

Recent years have seen significant progress in scaling Gaussian Process inference to large datasets. This advance has been enabled by the development of inducing point methods (Snelson and Ghahramani, 2006; Titsias, 2009; Hensman et al., 2013), which we now review. One begins by introducing inducing variables that depend on variational parameters , with each and where . One then augments the GP prior with the auxiliary variables

and then appeals to Jensen’s inequality to lower bound the log joint density over the inducing variables and the targets:

| (3) |

where is given by

| (4) |

and

| (5) |

Eqn. 3 can be used to construct a variety of algorithms for scalable GP inference; here we limit our discussion to SVGP (Hensman et al., 2013).

2.2.1 VARIATIONAL INFERENCE: SVGP

If we apply standard techniques from variational inference to the lower bound in Eqn. 3, we obtain SVGP, a popular algorithm for scalable GP inference. In more detail, SVGP proceeds by introducing a multivariate Normal variational distribution and computing the ELBO, which is the expectation of Eqn. 3 w.r.t. together with an entropy term term :

| (6) |

Here KL denotes the Kullback-Leibler divergence, is the predictive mean function given by

| (7) |

and denotes latent function variance

| (8) |

Note that the complete variational distribution used in SVGP is given by

| (9) |

with a marginal distribution given by

| (10) |

where is the covariance matrix

| (11) |

The objective , which depends on and the various kernel hyperparameters, can then be maximized with gradient methods. Since the expected log likelihood in Eqn. LABEL:eqn:svgp factorizes as a sum over data points it is amenable to stochastic gradient methods, and thus SVGP can be applied to very large datasets.

2.3 DEEP GAUSSIAN PROCESSES

Deep Gaussian Processes (Damianou and Lawrence, 2013) are a natural generalization of GPs in which a sequence of GP layers form a hierarchical model in which the outputs of one GP layer become the inputs of the subsequent layer, resulting in a flexible, compositional function prior. In the simplest case of a 2-layer DGP with a univariate continuous output and with a GP layer of width fed into the topmost GP, the joint likelihood for a dataset is given by222We limit our discussion to this case to simplify notation; generalizations to multiple outputs and multiple layers are straightforward.

| (12) |

Here is a matrix of latent function values of size , i.e. denotes the latent function value of the GP in the first layer evaluated at the input . For the vector of dimension then serves as the input to the second GP denoted by . See Fig. 1 for an illustration. Throughout this work we assume that the prior over factorizes as , with each GP governed by its own kernel. Inference in this model is intractable, necessitating approximate methods. A popular approach is variational inference, which we now briefly review.

2.3.1 DOUBLY STOCHASTIC VARIATIONAL INFERENCE

The stochastic variational inference approach described in Sec. 2.2.1 can be generalized to the DGP setting (Salimbeni and Deisenroth, 2017). Proceeding in analogy to SVGP, we introduce inducing variables and inducing point locations for each GP and form a factorized variational distribution with each factor of the form in Eqn. 9. The variational distribution can then be used to form the ELBO

| (13) |

where denotes a sum over the various KL divergences for the inducing variables . Crucially, is easy to sample from since it suffices to sample from the various marginals and so the expected log likelihood term in Eqn. LABEL:eqn:dsvi factorizes333Since e.g. only depends on . into a sum over data points , enabling mini-batch training. As in SVGP, the topmost layer of latent function values can be integrated out analytically. All remaining latent variables (namely ) must be sampled, necessitating the use of the reparameterization trick (Price, 1958; Salimans et al., 2013) and resulting in ‘doubly’ stochastic gradients. For more details we refer the reader to (Salimbeni and Deisenroth, 2017).

2.3.2 DGP PREDICTIVE DISTRIBUTIONS

We review the structure of the posterior predictive distribution that results from the variational inference procedure described in the previous section, as this will serve as motivation for the introduction of Deep Sigma Point Processes in Sec. 3. We first note that in the (single-layer) SVGP case the predictive distribution at input is given by the Normal distribution

| (14) |

where is the predictive mean function in Eqn. 7 and is the latent function variance in Eqn. 8. The predictive distribution for the DGP is an immediate generalization of Eqn. 14. In particular in the DGP case the predictive distribution is given by a continuous mixture of Normal distributions of the form in Eqn. 14. In more detail, for the 2-layer DGP in Eqn. 12, the predictive distribution is given by

| (15) |

Since this expectation is intractable, in practice it must be approximated with Monte Carlo samples, i.e. as a finite mixture of Normal distributions.

2.4 PPGPR

In this section we review a recently introduced approach to scalable GP regression (PPGPR; Jankowiak et al. (2020)), as it will serve as motivation for Deep Sigma Point Processes in Sec. 3. In PPGPR one takes the family of predictive distribution in Eqn. 14—parameterized by , , , and the kernel hyperparameters—as the model class, and fits the model using gradient-based maximum likelihood estimation. Jankowiak et al. (2020) argue that this class of models achieves good performance because the training objective directly targets the distribution used to make predictions at test time. In more detail the PPGPR objective is given by

| (16) |

where is a hyperparameter and serves as a regularizer. Note that the objective in Eqn. 16 looks deceptively similar to the SVGP objective in Eqn. LABEL:eqn:svgp; the crucial difference is where appears. This difference is a result of the fact that the PPGPR objective directly targets the predictive distribution, while the SVGP objective targets minimizing a KL divergence between the variational distribution and the exact posterior. In SVGP the posterior predictive is then formed in a second step and does not directly enter the training procedure. PPGPR will serve as one of our baselines in Sec. 5.

3 DEEP SIGMA POINT PROCESSES

We now describe the class of models that is the focus of this work. Our approach is motivated by the good empirical performance exhibited by PPGPR, a class of parametric GP models explored in (Jankowiak et al., 2020) and reviewed in Sec. 2.4. Crucially, this approach to scalable GP regression relies on an objective function that is formulated in terms of the predictive distribution. We would like to apply this approach to the DGP setting but, unfortunately, the form of the predictive distribution for DGPs (see Eqn. 15) presents an immediate obstacle: Eqn. 15 is a continuous mixture of Normal distributions and cannot be computed in closed form. In other words, the analog of the PPGPR objective in Eqn. 16 would involve the logarithm of the expectation in Eqn. 15. While this quantity could be approximated with a Monte Carlo estimator, because of the outer logarithm the result would be a biased estimator. Instead of trying to address this obstacle directly and construct an unbiased (gradient) estimator, we instead adopt a simpler solution: we replace the continuous mixture with a (parametric) finite mixture.444In Sec. C we report empirical results comparing to the ‘direct’ (biased) approach in which the predictive distribution is given as a continuous mixture. We find that this model variant is outperformed by the DSPP, presumably because of the additional model flexibility that results from the learned quadrature rules we adopt in Sec. 3.2.

To define a finite parametric family of mixture distributions, we essentially apply a sigma point approximation or quadrature-like integration rule to Eqn. 15. To make this concrete, suppose the width of the first GP layer in Eqn. 15 is . Then for an input we can write

| (17) |

The simplest ansatz for converting Eqn. 17 into a finite mixture uses Gauss-Hermite quadrature, i.e. we approximate with a -component mixture of Dirac delta distributions controlled by weights and quadrature points

| (18) |

with an analogous ansatz for . For example for we would have

| (19) |

for both and . Making these replacements in Eqn. 17 then yields a mixture with components:

| (20) |

Thus for a 2-layer model where the first GP layer has width this particular quadrature rule leads to a predictive distribution that is a mixture of Normal distributions, each of which is parameterized by compositition of mean and variance functions of the form in Eqn. 7 and Eqn. 8. This exponential growth in the number of mixture components is potentially problematic; we defer a more detailed discussion of alternative—in particular more compact—quadrature rules to Sec. 3.2.

3.1 TRAINING OBJECTIVE

Now that we have defined the class of parametric regression models we are interested in, we can define our training objective. As in Jankowiak et al. (2020), we define an objective function that corresponds to regularized maximum likelihood estimation

| (21) |

where is an optional regularization constant and is a sum over KL divergences of inducing variables (one for each GP) just as in the DGP objective, Eqn. LABEL:eqn:dsvi. Just as in ‘Doubly Stochastic Variational Inference’ for DGPs (Sec. 2.3.1), this objective—which depends on as well as parameters and various kernel hyperparameters for each GP—can be optimized using stochastic gradient methods. In contrast to DGPs, this optimization is only ‘singly stochastic,’ i.e. we only subsample data points and not latent function values.

3.2 QUADRATURE RULES

The Gauss-Hermite quadrature rule used to motivate DSPPs in Eqn. 18 is intuitive, but is of limited practical use, since it leads to an exponential blow-up in the number of mixture components used to define the DSPP. It is therefore essential to consider alternative quadrature rules that are more compact. We emphasize at the outset that our goal is not to accurately estimate the intractable expectation in Eqn. 17. Rather, our goal is to construct a flexible family of parametric distributions that are governed by well-behaved mean and variance functions that benefit from compositional structure. Consequently, there is no need to restrict ourselves to quadrature rules derived from gaussian integrals—indeed empirically we find that the quadrature rule in Eqn. 18 is too rigid. We now describe three more flexible alternatives.

QR1

In the first quadrature rule we choose quadrature points that follow the same factorized structure that is evident in the double sum in Eqn. 20, i.e. we still use a grid of quadrature points for a 2-layer DSPP where the first GP layer has width . That is we make the substitution

| (22) |

where is a multi-index. In contrast to the Gauss-Hermite quadrature rule, however, we choose the quadrature points to be learnable parameters (for a total of real parameters). In addition we replace the Gauss-Hermite weights for each mixture—which are given as a product over the GPs in the first layer, i.e. for each multi-index —by -many learnable parameters that sum to unity .

QR2

The second rule is identical to QR1 except the quadrature points are forced to be symmetric, i.e. for and .

QR3

In the third quadrature rule we abandon the factorized structure of QR1 and QR2, thus liberating us from the exponential growth of mixture components. To do this we effectively ‘line-up’ the quadrature points across the different GPs , making the substitution

| (23) |

where there is a set of learnable quadrature weights and learnable quadrature points defined by real parameters. Here is a parameter that we control; in particular it has no relationship to and can be as small as . We explore the empirical performance of these quadrature rules in Sec. 5.1.

3.3 DISCUSSION

We use this section to clarify the relationship between variational DGPs and DSPPs. DSPPs differ from DGPs in two important respects (also see Fig. 1):

-

1.

Objective function: The DGP is trained with an ELBO (Eqn. LABEL:eqn:dsvi) and the DSPP is trained via a regularized maximum likelihood objective (Eqn. 21) that directly targets the DSPP predictive distribution.

-

2.

Treatment of latent function values: In the DGP latent function values not at the top of the hierarchy (e.g. in Eqn. 12) are sampled while in the DSPP they are parameterized via a learnable quadrature rule as in Eqn. LABEL:eqn:qr3.

Indeed this latter point is made explicit by our quadrature rules—see Eqn. LABEL:eqn:qr1 and Eqn. LABEL:eqn:qr3—which should be compared to the reparameterization trick used during DGP training, which implicitly makes the substitution

and approximates the expectation using Monte Carlo samples with .

Note that in other respects variational DGPs and DSPPs are very similar. For example, apart from the parameters defining the learned quadrature rule in a DSPP, they make use of the same parameters. Similarly, their predictive distributions have similar forms, with the difference that the DSPP utilizes a finite mixture of Normal distributions, while the DGP predictive distribution is a continuous mixture of Normal distributions.

4 RELATED WORK

We discuss some work related to DSSPs, noting that we review some of the relevant literature in Sec. 2. The use of pseudo-inputs and inducing point methods to scale-up Gaussian Process inference has spawned a large literature, especially in the context of variational inference (Snelson and Ghahramani, 2006; Titsias, 2009; Hensman et al., 2013; Cheng and Boots, 2017). While variational inference remains the most popular inference algorithm for scalable GPs, researchers have also explored different variants of Expectation Propagation (Hernández-Lobato and Hernández-Lobato, 2016) as well as Stochastic gradient Hamiltonian Monte Carlo (Havasi et al., 2018).

Deep Gaussian Processes were introduced in (Damianou and Lawrence, 2013), with recent approaches to variational inference for DGPs described by Salimbeni and Deisenroth (2017). Cutajar et al. (2017) introduce a hybrid model formulated with random feature expansions that combines features of DGPs and neural networks. This class of models bears some resemblance to DSPPs; this is especially true for the VAR-FIXED variant, in which spectral frequencies are treated deterministically. Importantly, since Cutajar et al. (2017) rely on variational inference, their training objective does not directly target the predictive distribution. As we show empirically in Sec. 5, the mismatch between the training objective and test time predictive distributions for variational DGPs severely degrades predictive performance; we expect this is also true of the approach in (Cutajar et al., 2017).

DSPPs can also be motivated by Direct Loss Minimization, which emerges from a view of approximate inference as regularized loss minimization (Sheth and Khardon, 2017). This connection is somewhat loose, however, since our fully parametric models dispose of approximate posterior (or quasi-posterior) distributions entirely.

5 EXPERIMENTS

| DSPP-QR1 | DSPP-QR2 | DSPP-QR3 | ||

| NLL | ||||

| RMSE | ||||

| CRPS |

| OD-SVGP | PPGPR | DGP | -DGP | DSPP | ||

| NLL | ||||||

| RMSE | ||||||

| CRPS |

In this section we explore the empirical performance of the Deep Sigma Point Process introduced in Sec. 3. Throughout DSPPs use diagonal (i.e. mean field) covariance matrices . Except for Sec. 5.4 where we consider 3-layer models, all DGPs and DSPPs considered in our experiments have two layers. For details about kernels, mean functions, layer widths, numbers of inducing points, etc., refer to Sec. A in the appendix.

5.1 QUADRATURE RULE ABLATION STUDY

We begin by exploring the impact of the three different quadrature rules defined in Sec. 3.2. To do so we train 2-layer DSPPs on the 8 smallest univariate regression datasets described in the next section. In particular we compare QR1 and QR2 with555Since we consider this corresponds to mixture components. to QR3 with . The results are summarized in Table 1, with more detailed results to be found in Sec. C in the appendix. The upshot is that the three quadrature rules give largely comparable performance, with some preference for the most flexible quadrature rule, QR3. Since this quadrature rule avoids exponentially many quadrature points—and the computational cost can be carefully controlled by choosing appropriately—we use QR3 in the remainder of our experiments. Indeed this choice is essential for training 3-layer DSPPs in Sec. 5.4, which would otherwise be too computationally expensive.

5.2 UNIVARIATE REGRESSION

We reproduce a univariate regression experiment described in (Jankowiak et al., 2020). In particular we consider consider twelve datasets from the UCI repository (Dua and Graff, 2017), with the number of data points in the range and the number of input dimensions in the range .

We consider four strong GP baselines—two single-layer models: (OD-SVGP) the orthogonal basis decoupling method described in Cheng and Boots (2017) and Salimbeni et al. (2018); (PPGPR) the Parametric Predictive GP regression method described in Sec. 2.4; as well as two 2-layer models: (DGP) a variational DGP as described in Sec. 2.3; and (-DGP) the robust DGP described in Knoblauch (2019); Knoblauch et al. (2019).666This robust DGP can be viewed as a variant of the DGP described in Sec. 2.3 in which the inference procedure is modified by replacing the expected log likelihood loss with a gamma divergence in which the likelihood is raised to a power: . Results for OD-SVGP and PPGPR are reproduced from (Jankowiak et al., 2020).

Our results are summarized in Figs. 2-4 and Table 2. We find that in aggregate the DSPP outperforms all the baselines in terms of log likelihood, RMSE, and CRPS (also see Table 6 in Sec. C in the appendix). In particular, averaging across all twelve datasets, the DSPP outperforms the DGP by nats and the PPGPR by nats w.r.t. log likelihood. Strikingly, the second strongest baseline is the (single-layer) PPGPR described in Sec. 2.4. The fact that the PPGPR is able to outperform a 2-layer DGP highlights the advantages of a training procedure that directly targets the predictive distribution. Since the DSPP is in effect a much more flexible version of the PPGPR, it yields even better predictive performance, especially with respect to log likelihood. Indeed while the DGP achieves good RMSE performance on most datasets, posterior approximations degrade the calibration of the test time predictive distribution (as measured by log likelihood and CRPS).

5.3 MULTIVARIATE REGRESSION

| SVGP | PPGPR | DGP | -DGP | DSPP | ||

| NLL | ||||||

| MRMSE |

We conduct a multivariate regression experiment using five robotics datasets, two of which were collected from real-world robots and three of which were generated using the MuJoCo physics simulator (Todorov et al., 2012). In all five datasets the input and output dimensions correspond to various joint positions/velocities/etc. of the robot, with the number of data points in the range , the number of input dimensions in the range , and the number of output dimensions in the range . These datasets have been used in a number of papers, including (Vijayakumar and Schaal, 2000; Cheng and Boots, 2017).

All of our models follow the structure of the ‘linear model of coregionalization’ (Alvarez et al., 2012), specifically along the lines of ‘Semiparametric latent factor models’ (Seeger et al., 2005); for more details see Sec. A.3 in the supplementary materials.

We consider four strong GP baselines. In particular we consider two single-layer models: (SVGP) as described in Sec. 2.2.1 and (Hensman et al., 2013); (PPGPR) the Parametric Predictive GP regression method described in Sec. 2.4; as well as two 2-layer models: (DGP) a variational DGP as described in Sec. 2.3; and (-DGP) the robust DGP described in Knoblauch (2019); Knoblauch et al. (2019).

Our results are summarized in Fig. 5 and Table 3; see Sec. C in the appendix for additional results. We find that the DSPP outperforms all the baselines w.r.t. NLL, and achieves comparable MRMSE performance to the DGP. Averaged across all five datasets, the DSPP outperforms the DGP by nats and PPGPR by nats w.r.t. log likelihood. Note that while the PPGPR achieves good performance on log likelihoods, its MRMSE performance is substantially worse than the DSPP.

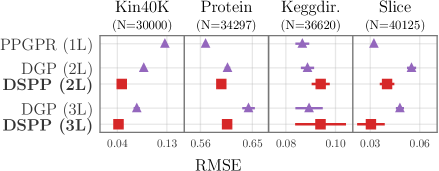

5.4 MULTILAYER MODELS

In the above experiments we have limited ourselves to 2-layer DSPPs. Here we investigate the predictive performance of 3-layer models, in particular comparing to 3-layer DGPs. Our results for four univariate regression datasets are summarized in Fig. 6. For both DGPs and DSPPs we find that, depending on the dataset, adding a third layer can improve NLLs, but that these gains are somewhat marginal compared to the gains from moving from single-layer to two-layer models. DSPPs exhibit the best log likelihoods, while RMSE results are somewhat more mixed, with the DSPP and PPGPR obtaining the lowest RMSE depending on the dataset.

5.5 COMPARISON WITH DEEP KERNEL LEARNING

From the previous sets of results we see that DSPP models tend to outperform their single-layer counterparts, both in terms of NLL and RMSE. A natural question is whether or not these performance improvements can be obtained with other classes of hierarchical models. More specifically, could we achieve similar results if the hierarchical features are extracted using traditional neural networks rather than GPs? To answer this question, we consider deep kernel learning (DKL) variants of SVGP, PPRPR, DPG, and DSPP in which the input features to each model are extracted by a neural network (Calandra et al., 2016; Wilson et al., 2016). The neural network parameters are optimized end-to-end alongside the GP/DGP/DSPP parameters. We conduct experiments on 4 medium-sized UCI regression datasets. For all DKL models we use a 5-layer neural network architecture proposed by Wilson et al. (2016) to extract features (see Sec. A.5 for details).

In Fig. 7 we compare the neural network modulated models (denoted by “NN+”) with their standard counterparts. We find that adding a neural network to DSPP models has limited impact on performance, improving on some datasets and not on others. For SVGP/PPGPR/DGP models, neural networks improve RMSE but have mixed effects on NLL. On three of the four datasets, none of the NN + SVGP/PPGPR/DGP models matches the NLL of the standard DSPP. This is particularly notable for the NN+PPGPR model, as it only differs from the standard DSPP model in terms of its “feature extractor” layer (neural networks versus GP/quadrature). These results suggests that hidden GP layers in DSPPs extract features that are complementary to those extracted by neural networks, while enjoying favorable regularization properties.

6 DISCUSSION

We motivated Deep Sigma Point Processes as a finite family of parametric distributions whose structure mirrors the DGP predictive distribution in Eqn. 15. It would be interesting to consider model variants that retain a continuous mixture distribution as in Eqn. 15 while leveraging the flexibility inherent in the learned quadrature rules described in Sec. 3.2. Another open question is whether DSPPs can be fruitfully applied to other likelihoods, for example those that arise in classification. We leave the exploration of these directions to future work.

References

References

- Alvarez et al. (2012) Mauricio A Alvarez, Lorenzo Rosasco, Neil D Lawrence, et al. Kernels for vector-valued functions: A review. Foundations and Trends® in Machine Learning, 4(3):195–266, 2012.

- Calandra et al. (2016) Roberto Calandra, Jan Peters, Carl Edward Rasmussen, and Marc Peter Deisenroth. Manifold gaussian processes for regression. In International Joint Conference on Neural Networks, 2016.

- Cheng and Boots (2017) Ching-An Cheng and Byron Boots. Variational inference for gaussian process models with linear complexity. In Advances in Neural Information Processing Systems, 2017.

- Cutajar et al. (2017) Kurt Cutajar, Edwin V Bonilla, Pietro Michiardi, and Maurizio Filippone. Random feature expansions for deep gaussian processes. In International Conference on Machine Learning, 2017.

- Damianou and Lawrence (2013) Andreas Damianou and Neil Lawrence. Deep gaussian processes. In Artificial Intelligence and Statistics, 2013.

- Dua and Graff (2017) Dheeru Dua and Casey Graff. UCI machine learning repository, 2017. URL http://archive.ics.uci.edu/ml.

- Gneiting and Raftery (2007) Tilmann Gneiting and Adrian E Raftery. Strictly proper scoring rules, prediction, and estimation. Journal of the American Statistical Association, 102(477):359–378, 2007.

- Havasi et al. (2018) Marton Havasi, José Miguel Hernández-Lobato, and Juan José Murillo-Fuentes. Inference in deep gaussian processes using stochastic gradient hamiltonian monte carlo. In Advances in Neural Information Processing Systems, 2018.

- Hensman et al. (2013) James Hensman, Nicolo Fusi, and Neil D Lawrence. Gaussian processes for big data. In Uncertainty in Artificial Intelligence, 2013.

- Hernández-Lobato and Hernández-Lobato (2016) Daniel Hernández-Lobato and José Miguel Hernández-Lobato. Scalable gaussian process classification via expectation propagation. In Artificial Intelligence and Statistics, 2016.

- Ioffe and Szegedy (2015) Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International Conference on Machine Learning, 2015.

- Jankowiak et al. (2020) Martin Jankowiak, Geoff Pleiss, and Jacob R Gardner. Parametric gaussian process regressors. In International Conference on Machine Learning, 2020.

- Kingma and Ba (2015) Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In International Conference on Learned Representations, 2015.

- Knoblauch (2019) Jeremias Knoblauch. Robust deep gaussian processes. arXiv preprint arXiv:1904.02303, 2019.

- Knoblauch et al. (2019) Jeremias Knoblauch, Jack Jewson, and Theodoros Damoulas. Generalized variational inference. arXiv preprint arXiv:1904.02063, 2019.

- Price (1958) Robert Price. A useful theorem for nonlinear devices having gaussian inputs. IRE Transactions on Information Theory, 4(2):69–72, 1958.

- Rasmussen (2003) Carl Edward Rasmussen. Gaussian processes in machine learning. In Summer School on Machine Learning, pages 63–71. Springer, 2003.

- Salimans et al. (2013) Tim Salimans, David A Knowles, et al. Fixed-form variational posterior approximation through stochastic linear regression. Bayesian Analysis, 8(4):837–882, 2013.

- Salimbeni and Deisenroth (2017) Hugh Salimbeni and Marc Deisenroth. Doubly stochastic variational inference for deep gaussian processes. In Advances in Neural Information Processing Systems, 2017.

- Salimbeni et al. (2018) Hugh Salimbeni, Ching-An Cheng, Byron Boots, and Marc Deisenroth. Orthogonally decoupled variational gaussian processes. In Advances in neural information processing systems, 2018.

- Seeger et al. (2005) Matthias Seeger, Yee-Whye Teh, and Michael Jordan. Semiparametric latent factor models. Technical report, 2005.

- Sheth and Khardon (2017) Rishit Sheth and Roni Khardon. Excess risk bounds for the bayes risk using variational inference in latent gaussian models. In Advances in Neural Information Processing Systems, 2017.

- Snelson and Ghahramani (2006) Edward Snelson and Zoubin Ghahramani. Sparse gaussian processes using pseudo-inputs. In Advances in neural information processing systems, 2006.

- Titsias (2009) Michalis Titsias. Variational learning of inducing variables in sparse gaussian processes. In Artificial Intelligence and Statistics, 2009.

- Todorov et al. (2012) Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In International Conference on Intelligent Robots and Systems, 2012.

- Vijayakumar and Schaal (2000) Sethu Vijayakumar and Stefan Schaal. Locally weighted projection regression: An o (n) algorithm for incremental real time learning in high dimensional space. In International Conference on Machine Learning, 2000.

- Wilson et al. (2016) Andrew G Wilson, Zhiting Hu, Russ R Salakhutdinov, and Eric P Xing. Stochastic variational deep kernel learning. In Advances in Neural Information Processing Systems, 2016.

Appendix A EXPERIMENTAL DETAILS

We use Matérn kernels with independent length scales for each input dimension throughout. Throughout we discard input or output dimensions that have negligible variance.

A.1 QUADRATURE RULE ABLATION STUDY

The experimental procedure for this experiment follows that described in the next section, with the difference that the quadrature rule ablation study described in Sec. 5.1 only makes use of the 8 smallest UCI datasets and we consider .

A.2 UNIVARIATE REGRESSION

We follow the experimental procedure outlined in (Jankowiak et al., 2020). In particular we use the Adam optimizer for optimization with an initial learning rate of that is progressively decreased during the course of training (Kingma and Ba, 2015). We use a mini-batch size of for the Buzz, Song, 3droad and Houseelectric datasets and for all other datasets. We train for 400 epochs except for the Houseelectric dataset where we train for 150 epochs and the Buzz, Song, and 3droad datasets where we train for 250 epochs. We do 10 train/test/validation splits on all datasets, always in the proportion 15:3:2. All datasets are standardized in both input and output space. For all 2-layer models we use inducing points for each GP; we initialize with kmeans. For the DSPP we use quadrature rule QR3 with S=10, while for the DGP and -DGP we use 10 Monte Carlo samples to approximate the ELBO training objective. We use the validation set to determine a small set of hyperparameters. In particular for the DGP we search over (where, as elsewhere, is a constant that scales the KL regularization term). For the -DGP we search over (with ). For the DSPP we search over . For all 2-layer models we also search over the hyperparameter , which controls the width of the first layer. For all 2-layer models the mean function in the first layer is linear with learned weights, and the mean function in the second (final) layer is constant with a learned mean. As mentioned in the main text, for the DSPP we use diagonal covariance matrices to define variance functions. In contrast, for the DGP and -DGP we use full rank covariance matrices parameterized by Cholesky factors, as in the original references. To evaluate log likelihoods for the DGP and -DGP we use a Monte Carlo estimator with samples. For all models we do multiple restarts (3) and only train the best initialization to completion (as judged by training NLL); this makes the optimization more robust. As mentioned in the main text, results for PPGPR and OD-SVGP are taken from (Jankowiak et al., 2020).

A.3 MULTIVARIATE REGRESSION

We first describe the linear model of coregionalization (LMC) model structure used by all our multivariate models. We focus on the topmost layer of GPs of width , as the deeper layers (here only , since this is a 2-layer model) are structured identically as in the univariate case. Our models are specified by the generative process

| (24) |

where represents a -dimensional matrix of GP latent function values, is a -dimensional matrix of outputs and is the set of -dimensional inputs. Here is a matrix of learned coefficients that mixes the topmost GPs. The likelihood is a Normal likelihood with a (block-)diagonal covariance matrix specified by learnable parameters. Throughout our experiments we choose and treat (the width of the first GP layer) as a hyperparameter.

Our experimental procedure for the multivariate regression experiments follows that of the univariate regression experiments described in the previous section, with the following differences. For the DSPP we use quadrature points. For the 2-layer models we use inducing points, while for the single-layer models we use inducing points. We use a mini-batch size of for all datasets and train for 300 epochs. To evaluate log likelihoods for the DGP and -DGP we use a Monte Carlo estimator with samples. We use 5 random train/test/validation splits for each dataset.

A.4 MULTILAYER MODELS

The experimental procedure used for the multilayer experiments in Sec. 5.4 follows the procedure described in Sec. A.2, with the following differences. For 3-layer models we use 5 instead of 10 random train/test/validation splits. In addition to searching over and the layer width 777Note that here is the width of the first as well as the second layer. for 3-layer models we also search over several discrete topology choices. In particular the first layer always uses a linear mean function and the final layer always uses a constant mean function, but the structure of the second layer—in particular how it depends on the outputs of the first layer—differs:

-

1.

the layer mean function is linear and depends on the outputs of the layer (i.e. vanilla feedforward)

-

2.

the layer mean function is linear and depends on the inputs (but the kernel function only depends on the outputs of the layer)

-

3.

the layer mean function is linear and depends on the outputs of the layer and the inputs (but the kernel function only depends on the outputs of the layer)

-

4.

the layer mean function is linear and both the mean function and kernel function depend on the inputs and the outputs of the layer

Note that this search over topologies applies to both DSPPs and DGPs.

A.5 DEEP KERNEL LEARNING REGRESSION

The neural network variants of SVGP / PPGPR / DGP / DSPP all use the same 5-layer feature extractor proposed by Wilson et al. (2016). The layers have , , , , and hidden units (respectively). The inputs to the SVGP / PPGPR / DGP / DSPP models are the -dimensional extracted features. We apply batch normalization (Ioffe and Szegedy, 2015) and a ReLU non-linearity after the first four layers. We z-score the final set of extracted features (out of the layer), which we accomplish using a batch normalization layer without any learned affine transformation. The neural network parameters are trained jointly with the SVGP / PPGPR / DGP / DSPP parameters using the Adam optimizer. We apply weight decay only to the neural network parameters. For all models and datasets, we use the validation set to search over the regularization parameter and the amount of weight decay . For the DGP/DSPP models we only consider 2-layer models with . The rest of the training details (learning rate, number of epochs, mini-batch sizes, etc.) match those outlined in Sec. A.2.

Appendix B TIME AND SPACE COMPLEXITY

We briefly describe the time and space complexity of 2-layer univariate DSPP models that utilize quadrature rule QR3. (Extending to deeper and multivariate models is straightforward.) Our analysis is similar to that of doubly-stochastic Deep Gaussian Processes (Salimbeni and Deisenroth, 2017). In particular, when using QR3, the running time and space complexities of DSPP are identical to that of the doubly stochastic DGP if the number of quadrature sites for DSPP is taken to be equal to the number of samples used for the DGP.

We first note that computing the marginal distribution of a single-layer sparse Gaussian Process—as in Eqn. 10—is . Both the predictive mean (Eqn. 7) and variance (Eqn. 8) require computing which is a cubic operation. After this operation all other terms can be computed in time. If the inverse (or, more practically, its Cholesky factor) is cached, all subsequent predictive distributions also require time.

Training complexity.

Each DSPP training iteration requires computing Eqn. 21. Again, let be the width of the hidden GP layer, be the number of quadrature points, and be the number of inducing points (which, for simplicity, we assume is the same for both layers). The right term in Eqn. 21 is the sum of KL divergences between -dimensional multivariate Normal distributions (one for each hidden-layer GP and one for the final-layer GP), for a total of computation. To compute the log likelihood term, we compute , quadrature points , and . This requires computation for the terms of each GP, and then multiplies against matrices of size , where is the minibatch size. In total the computational complexity is . The space complexity is —the size of storing each as well as the additional vectors in Eqns. 7 and 8 for each marginal distribution .

Prediction complexity.

We use nearly the same set of computations at test time as we do during training. The only difference is that, since the parameters are constant, we do not need to recompute the matrices at every iteration. Consequentially, after the one-time cost to compute these inverses, the remaining terms can be computed in time. The space complexity is still .

Appendix C ADDITIONAL RESULTS

Quadrature weights.

In Fig. 9 we depict a histogram of learned quadrature weights for 96 DSPPs trained using quadrature rule QR3. In particular we follow the experimental procedure described in Sec. A.2 with quadrature points. We average over 3 train/test/validation splits for the 8 smallest UCI regression datasets with 4 different values of , for a total of 96 model runs. We then depict distributions over the largest, second largest, and third largest quadrature weights. We see that while a significant amount of the probability mass is put on the leading one or two mixture components, DSPP training does not result in degenerate weights: the final predictive distribution reflects a diversity of mean and variance functions.

Using MC integration instead of quadrature.

Rather than using a quadrature scheme or learned weights to evaluate the integral in Eq. 15, we could alternatively use Monte Carlo sampling. As described in Sec. 3, this would result in a biased estimate of the predictive objective function. In Fig. 8 we depict the NLL and RMSE of DSPP models that use biased MC sampling to evaluate Eq. 15 rather than quadrature. As this approach bypasses the finite mixture approximation made by DSPP models, we refer to this class of model as Biased Predictive Deep GPs (or BPDGPs). These models are trained following the procedure described in Sec. A.2.888 Integrals are evaluated with 32 MC samples. We find that DSPP models (trained with QR3) tend to outperform the biased BPDGP models, both in terms of NLL and RMSE. In fact, the biased models achieve even worse RMSE than single-layer SVGP or PPGPR models.

Quadrature ablation study.

Table 4 displays the average NLL, RMSE, and CRPS results across all datasets and train/test/validation splits.

Univariate regression.

Multivariate regression.

Multilayer models.

Comparison with Deep Kernel Learning.

Results compilations.

Tables 9, 10, and 11 report numbers for all experiments in Sec. 5. Numbers are averages standard errors over train/test/validation splits.

| DSPP-QR1 | DSPP-QR2 | DSPP-QR3 | ||

| NLL | ||||

| RMSE | ||||

| CRPS |

| OD-SVGP | PPGPR | DGP | -DGP | DSPP | ||

| NLL | ||||||

| RMSE | ||||||

| CRPS |

| SVGP | PPGPR | -DGP | DGP | DSPP | ||

| NLL | ||||||

| MRMSE | ||||||

| RMSE |

| PPGPR (1L) | DGP (2L) | DSPP (2L) | DGP (3L) | DSPP (3L) | ||

| NLL | ||||||

| RMSE | ||||||

| CRPS |

| SVGP | NN+SVGP | PPGPR | NN+PPGPR | DGP | NN+DGP | DSPP | NN+DSPP | ||

| NLL | |||||||||

| RMSE | |||||||||

| CRPS |

| OD-SVGP | PPGPR | -DGP (2L) | DGP (2L) | DGP (3L) | DSPP-QR1 (2L) | DSPP-QR2 (2L) | DSPP-QR3 (2L) | DSPP-QR3 (3L) | ||||

| Metric | Dataset | |||||||||||

| NLL | Pol | — | — | |||||||||

| Elevators | — | — | ||||||||||

| Bike | — | — | ||||||||||

| Kin40K | ||||||||||||

| Protein | ||||||||||||

| Keggdir. | ||||||||||||

| Slice | ||||||||||||

| Keggundir. | — | — | ||||||||||

| 3Droad | — | — | — | — | ||||||||

| Song | — | — | — | — | ||||||||

| Buzz | — | — | — | — | ||||||||

| Houseelectric | — | — | — | — | ||||||||

| RMSE | Pol | — | — | |||||||||

| Elevators | — | — | ||||||||||

| Bike | — | — | ||||||||||

| Kin40K | ||||||||||||

| Protein | ||||||||||||

| Keggdir. | ||||||||||||

| Slice | ||||||||||||

| Keggundir. | — | — | ||||||||||

| 3Droad | — | — | — | — | ||||||||

| Song | — | — | — | — | ||||||||

| Buzz | — | — | — | — | ||||||||

| Houseelectric | — | — | — | — | ||||||||

| CRPS | Pol | — | — | |||||||||

| Elevators | — | — | ||||||||||

| Bike | — | — | ||||||||||

| Kin40K | ||||||||||||

| Protein | ||||||||||||

| Keggdir. | ||||||||||||

| Slice | ||||||||||||

| Keggundir. | — | — | ||||||||||

| 3Droad | — | — | — | — | ||||||||

| Song | — | — | — | — | ||||||||

| Buzz | — | — | — | — | ||||||||

| Houseelectric | — | — | — | — |

| SVGP | PPGPR | -DGP (2L) | DGP (2L) | DSPP-QR3 (2L) | ||||

| Metric | Dataset | |||||||

| NLL | Reacher | |||||||

| R.-Baxter | ||||||||

| Sarcos | ||||||||

| Mujoco | ||||||||

| Swimmer | ||||||||

| MRMSE | Reacher | |||||||

| R.-Baxter | ||||||||

| Sarcos | ||||||||

| Mujoco | ||||||||

| Swimmer | ||||||||

| RMSE | Reacher | |||||||

| R.-Baxter | ||||||||

| Sarcos | ||||||||

| Mujoco | ||||||||

| Swimmer |

| SVGP | NN+SVGP | PPGPR | NN+PPGPR | DGP (2L) | NN+DGP | DSPP | NN+DSPP | ||||

| Metric | Dataset | ||||||||||

| NLL | Kin40K | ||||||||||

| Protein | |||||||||||

| Keggdir. | |||||||||||

| Slice | |||||||||||

| RMSE | Kin40K | ||||||||||

| Protein | |||||||||||

| Keggdir. | |||||||||||

| Slice | |||||||||||

| CRPS | Kin40K | ||||||||||

| Protein | |||||||||||

| Keggdir. | |||||||||||

| Slice |