Deep Partial Multi-Label Learning with Graph Disambiguation

Abstract

In partial multi-label learning (PML), each data example is equipped with a candidate label set, which consists of multiple ground-truth labels and other false-positive labels. Recently, graph-based methods, which demonstrate a good ability to estimate accurate confidence scores from candidate labels, have been prevalent to deal with PML problems. However, we observe that existing graph-based PML methods typically adopt linear multi-label classifiers and thus fail to achieve superior performance. In this work, we attempt to remove several obstacles for extending them to deep models and propose a novel deep Partial multi-Label model with grAph-disambIguatioN (PLAIN). Specifically, we introduce the instance-level and label-level similarities to recover label confidences as well as exploit label dependencies. At each training epoch, labels are propagated on the instance and label graphs to produce relatively accurate pseudo-labels; then, we train the deep model to fit the numerical labels. Moreover, we provide a careful analysis of the risk functions to guarantee the robustness of the proposed model. Extensive experiments on various synthetic datasets and three real-world PML datasets demonstrate that PLAIN achieves significantly superior results to state-of-the-art methods.

1 Introduction

The rapid growth of deep learning allows us to tackle increasingly sophisticated problems. Among them, multi-label learning (MLL), which assigns multiple labels to an object, is a fundamental and intriguing task in various real-world applications such as image retrieval Lai et al. (2016), autonomous vehicles Chen et al. (2019a), and sentiment analysis Yilmaz et al. (2021). Typically, these tasks require precisely labeled data, which is expensive and time-consuming due to the complicated structure and the high volume of the output space.

To address this issue, plenty of works have studied different weakly-supervised settings of MLL, including semi-supervised MLL Shi et al. (2020), multi-label with missing labels Durand et al. (2019); Huynh and Elhamifar (2020), and single-labeled MLL Xu et al. (2022). While these learning paradigms mainly battle the rapid growth of data volume, they overlook the intrinsic difficulty and ambiguity in multi-label annotation. For example, it is hard for a human annotator to identify an Alaskan Malamute from a Siberian Husky. One potential solution is to randomly decide the binary label value. However, it may involve uncontrollable label noise that has a negative impact on the training procedure. Another strategy is to leave it missing, but then, we may lose critical information. Recently, partial multi-label learning (PML) Xie and Huang (2018) has been proposed to alleviate this problem. In PML, the annotators are allowed to provide a candidate label set that contains all potentially relevant labels as well as false-positive labels.

A straightforward approach to learning from PML data is to regard all candidate labels as valid ones, and then, off-the-shelf multi-label methods can be applied. Nevertheless, the presence of false-positive labels can highly impact predictive performance. To cope with this issue, many PML methods Xie and Huang (2018); Yu et al. (2018); He et al. (2019); Sun et al. (2019); Li et al. (2020); Lyu et al. (2020); Xie and Huang (2020); Yan et al. (2021); Xie and Huang (2022) have been developed. Amongst them, graph-based PML methods Wang et al. (2019); Li and Wang (2020); Xu et al. (2020); Lyu et al. (2020); Zhang and Fang (2021) have been popular. In practice, graph-based algorithms demonstrate a good ability to obtain relatively precise confidence of candidate labels by aggregating information from the nearest neighbors. However, we observe that most of them adopt linear classifier, which is less powerful to deal with complicated tasks and high data volumes.

Consequently, there is an urgent need for developing an effective deep model to boost the performance of graph PML methods. However, there are two main challenging issues. First, existing graph-based PML methods typically require propagating labels over the whole training dataset. It restricts their scalability when employing deep classifiers since the deep models are trained in batch mode. Second, it is well acknowledged that there exist label correlations in multi-label datasets, which also helps disambiguate the candidate labels. For example, if labels Amusement Park and Mickey Mouse are identified as true, it is very likely that label Disney is also a ground-truth label. Thus, it is required to exploit the label correlations to improve the disambiguation ability in a unified framework.

In this work, we propose a novel deep Partial multi-Label model with grAph disambIguatioN (PLAIN). In PLAIN, we involve both instance- and label-level similarities to disambiguate the candidate labels set, which simultaneously leverages instance smoothness as well as label correlations. Then, we build a deep multi-label classifier to fit the relatively credible pseudo-labels generated through disambiguation. Instead of separately training in two stages like previous works Wang et al. (2019); Zhang and Fang (2021), we propose an efficient optimization framework that iteratively propagates the labels and trains the deep model. Moreover, we give a careful analysis of the risk functions such that proper risk functions are chosen for improved robustness. Empirical results on nine synthetic datasets and three real-world PML datasets clearly verify the efficiency and effectiveness of our method. More theoretical and empirical results can be found in Appendix.

2 Related Works

Multi-Label Learning (MLL)

Liu et al. (2022); Wei et al. (2022) aims at assigning each data example multiple binary labels simultaneously. An intuitive solution, the one-versus-all method (OVA) Zhang and Zhou (2014), is to decompose the original problem into a series of single-labeled classification problems. However, it overlooks the rich semantic information and dependencies among labels, and thus fails to obtain satisfactory performance. To solve this problem, many MLL approaches are proposed, such as embedding-based methods Yu et al. (2014); Shen et al. (2018), classifier chains Read et al. (2011), tree-based algorithms Wei et al. (2021), and deep MLL models Yeh et al. (2017); Chen et al. (2019b); Bai et al. (2020). Nevertheless, these methods typically require a large amount of fully-supervised training data, which is expensive and time-consuming in MLL tasks. To this end, some works have studied different weakly-supervised MLL settings. For example, semi-supervised MLL Niu et al. (2019); Shi et al. (2020) leverages information from both fully-labeled and unlabeled data. Multi-label with missing labels Durand et al. (2019); Wei et al. (2018); Xu et al. (2022) allows providing a subset of ground-truth. In noisy MLL Veit et al. (2017), the binary labels may be flipped to the wrong one. Nevertheless, though these settings relieve the burden of multi-label annotating, they ignore the inherent difficulty of labeling, i.e. the labels can be ambiguous.

Partial Multi-Label Learning (PML)

learns from a superset of ground-truth labels, where multiple labels are possible to be relevant. To tackle the ambiguity in the labels, the pioneering work Xie and Huang (2018) estimates the confidence of each candidate label being correct via minimizing confidence-weighted ranking loss. Some works Yu et al. (2018); Sun et al. (2019); Li et al. (2020); Gong et al. (2022) recover the ground-truth by assuming the true label matrix is low-rank. Recently, graph-based methods Wang et al. (2019); Xu et al. (2020); Lyu et al. (2020); Xie and Huang (2020); Zhang and Fang (2021); Xu et al. (2021); Tan et al. (2022) have attracted much attention from the community due to the good disambiguation ability. For instance, PARTICLE Zhang and Fang (2021) identifies the credible labels through an iterative label propagation procedure and then, applies pair-wise ranking techniques to induce a multi-label predictor. Although graph-based models have shown promising results, we observe that most of them adopt linear classifiers, which significantly limits the model expressiveness. Besides, they typically separate the graph disambiguation and model training into two stages, which makes the multi-label classifier error-prone; in contrast, the proposed PLAIN is an end-to-end framework.

Some studies have also developed deep PML models, such as adversarial PML model Yan and Guo (2019) and mutual teaching networks Yan et al. (2021). However, it remains urgent to combine the graph and deep models together such that the model enjoys high expressiveness as well as good disambiguation ability. It should also be noted that the recent popular graph neural networks (GNN) model Wu et al. (2021) is not suitable for PML. The reason is that GNN aims at better representation learning (at an instance-level), while in PML, the graph structure is used to alleviate the label ambiguity.

3 Method

We denote the -dimensional feature space as , and the label space as with class labels. The training dataset contains examples, where is the instance vector and is the candidate label set. Without loss of generality, we assume that the instance vectors are normalized such that . Besides, we denote as the ground-truth label set, which is a subset of . For simplicity, we define , as the binary vector representations of and . When considering the whole dataset, we also denote the candidate label matrix by .

In what follows, we first introduce the learning target of our model that integrates label correlations to boost disambiguation ability. Then, we provide an efficient optimization framework for our deep propagation network.

3.1 Learning Objective

Our ultimate goal is to estimate a multi-label predictor from . In this work, we assume that its output satisfies the following properties,

-

1.

is close to the candidate vector ;

-

2.

satisfies the instance-level similarity. That is, if two instances are close to each other, then their label vectors are likely to be close too;

-

3.

satisfies the label-level similarity. That is, if two labels co-occur in the training data frequently, then they tend to co-exist at many instances.

These properties coincide with our goals. The first property is natural since the only thing we can trust is the candidate label set. The second one has been widely adopted to disambiguate the ground-truth label from the false-positive labels. The last one exploits the label correlations to further promote the performance. In this paper, we adopt a graph-based model to achieve these goals. Generally speaking, the graph structure can be collected in various ways. One direct strategy is to use prior knowledge. For instance, for data mining tasks, social networks Qiu et al. (2018) can be used to depict instance similarity. The label similarity can also be estimated using an external knowledge graph Lee et al. (2018).

However, we notice that there is no available graph structure in existing public PML datasets. Hence, we propose to construct the instance graph and the label graph in a data-driven way. To obtain , we adopt a monomial kernel Iscen et al. (2017) as the distance metric and calculate a sparse instance affinity matrix with elements,

Here saves the indices of -nearest neighbors of . controls the weights of similarity. Then, we get a symmetrized instance graph by .

Inspired by HALE Lyu et al. (2020), we build the label graph by means of the label co-occurrence statistics from the training data, whose elements are given by,

where is an indicator function returning if the event is true and otherwise. It is worth noting that HALE simply calculates the average co-occurrence by dividing the instance number, which overlooks the existence of false-positive labels. In our method, we penalize it by the counts of instances that are assigned the labels as the candidates. In other words, if one label is assigned to too many instances, its co-occurrence statistics are less reliable.

Next, we impose the instance-level similarity property on the model output by means of a Laplacian regularizer,

| (1) |

where is the predicted label matrix. is the Laplacian matrix of instance graph. is a diagonal matrix with . The objective in Eq. (1) enforces two data examples to have similar predicted labels if they have a large affinity score. Similarly, we can introduce the label-level similarity by,

Finally, we involve a candidate consistency term to get the following objective,

where is the Frobenius norm. , , and are all positive hyperparameters.

In summary, the main goal of our objective is to utilize the instance- and label-level similarity to recover reliable label confidences and address the problem of false positives, while simultaneously enjoying an extra performance improvement by exploiting label correlations.

3.2 Deep Propagation Network

If a linear model is adopted, the parameters can be directly obtained by optimizing with gradient descent. However, in deep models, it is not the case. When we update the model using a mini-batch of data, the instance-level manifold regularizer can involve unexpected gradient updating on the nearest neighbors of these data. Then, the training time will increase rapidly. To remedy this problem, we propose an efficient optimization framework for training a deep model from PML data. Specifically, we maintain a smooth pseudo-label matrix , which minimizes the propagation error while being close to the model output . In the sequel, we elaborate the training procedure of our model. The training procedure is also summarized in Algorithm 1.

3.2.1 Propagation Step

At -th epoch, we first propagate the label information from candidates and the model output to the intermediate ones. In graph-based methods, the output label is required to be smooth enough. Therefore, we use the Frobenius norm as the measure to ensure the closeness of and . Formally, we estimate by,

| (2) |

We can derive its gradient by,

| (3) |

which is followed by the matrix properties that and . We have because is symmetric. The optimal condition typically requires solving a Sylvester system, which can be time-consuming. Therefore, we solve this problem by gradient descent , where is the learning rate. Note that in MLL datasets, negative labels usually dominate, which makes all the pseudo-labels tend to concentrate at a small value. To cope with this issue, we further perform column-wise min-max normalization on to obtain a balanced output.

3.2.2 Model Updating Step

Given an instance vector , we first feed it into a deep neural model . Then, we update the model parameters to fit the pseudo-labels,

| (4) |

where is the loss function. Recall that in Eq. (2), we enforce to be close to using the Frobenius norm. Hence, a natural choice of loss is to reuse the mean squared error (MSE), which is adopted by most graph-based PML algorithms Wang et al. (2019); Xu et al. (2020); Zhang and Fang (2021). However, in PML tasks, the disambiguated labels can still be noisy and the MSE loss leads to heavy overfitting. This is largely overlooked by previous works.

To tackle this problem, we shall have a closer look at the risk function. While MSE penalize too much on prediction mistakes, other robust surrogate losses can be considered, such as mean absolute error (MAE). However, we notice that MAE violates our smooth prediction assumption. To this end, we adopt the widely-used binary cross-entropy loss (BCE) for multi-label learning,

where is the sigmoid function. On one hand, BCE loss approximately grows linearly for largely negative values, which makes it less sensitive to outliers. On the other hand, BCE outputs relatively smooth output such that the smoothness is preserved.

In effect, we can go further on the risk. A plethora of works Ghosh et al. (2017); Feng et al. (2020) has demonstrated that BCE is still less robust since it is unbounded. Let us consider the probabilistic output of the deep model. Then the BCE loss is equivalent to a negative log loss on . If the predicted probability of the label is close to zero, the loss can grow quickly to infinity. Previous works have suggested that bounded loss on the probabilistic output is theoretically more robust than unbounded ones. One common choice of bounded loss is the probabilistic MSE loss (PMSE), i.e. . In that case, the training criterion is consistent with our propagation error. Empirically, both PMSE and BCE loss perform well, and thus, we instantiate our model using BCE loss when comparing it with other methods. In Section B.2, we present a comparison between these losses, which clearly verifies our conjectures.

Fast Implementation.

To efficiently build the instance graph from training data, we employ a quick similarity search python package Faiss Johnson et al. (2017) to find the nearest neighbors. The sparse matrix multiplication can be efficiently implemented by the Scipy package. In Appendix A, we provide a detailed analysis of the complexity and convergence properties to show the efficacy of our method.

| Datasets | PLAIN | PML-NI | HALE | ML-KNN | GLOCAL | PML-lc | PARTICLE |

| Ranking Loss (the lower the better) | |||||||

| Music-emotion | 0.2170.006 | 0.2440.007 | 0.2990.008 | 0.3650.010 | 0.3440.025 | 0.3100.010 | 0.2610.007 |

| Music-style | 0.1360.005 | 0.1380.009 | 0.2710.015 | 0.2290.010 | 0.2530.007 | 0.2690.016 | 0.3510.014 |

| Mirflickr | 0.0880.006 | 0.1230.004 | 0.1430.009 | 0.1780.013 | 0.2120.003 | 0.2060.008 | 0.2130.011 |

| Average Precision (the higher the better) | |||||||

| Music-emotion | 0.6640.007 | 0.6100.011 | 0.5430.005 | 0.5050.010 | 0.5130.010 | 0.5390.013 | 0.6260.011 |

| Music-style | 0.7420.012 | 0.7400.013 | 0.6870.013 | 0.6590.014 | 0.6450.007 | 0.5980.009 | 0.6210.016 |

| Mirflickr | 0.8470.012 | 0.7920.004 | 0.7180.005 | 0.6980.013 | 0.6790.007 | 0.6750.010 | 0.6900.012 |

| Hamming Loss (the lower the better) | |||||||

| Music-emotion | 0.1910.004 | 0.2540.013 | 0.2190.003 | 0.3640.011 | 0.2190.001 | 0.2210.008 | 0.2060.003 |

| Music-style | 0.1120.003 | 0.1570.010 | 0.1220.005 | 0.8440.010 | 0.1440.001 | 0.1620.006 | 0.1370.007 |

| Mirflickr | 0.1620.003 | 0.2230.005 | 0.1560.003 | 0.2170.006 | 0.2530.001 | 0.1930.006 | 0.1790.008 |

| Metrics | Emotions | Birds | Medical | Image | Corel5k | Bibtext | Eurlex-dc | Eurlex-sm | NUS-WIDE | Sum |

| Ranking Loss | 18/0/0 | 15/0/3 | 15/0/3 | 15/1/2 | 18/0/0 | 18/0/0 | 15/0/3 | 14/4/0 | 16/0/2 | 144/5/13 |

| Average Precision | 18/0/0 | 16/1/1 | 15/0/3 | 18/0/0 | 16/0/2 | 16/1/1 | 18/0/0 | 18/0/0 | 18/0/0 | 153/2/7 |

| Hamming Loss | 15/1/2 | 14/1/3 | 11/4/3 | 11/1/6 | 5/13/0 | 11/7/0 | 8/10/0 | 14/4/0 | 14/4/0 | 103/45/14 |

| Sum | 51/1/2 | 45/2/7 | 41/4/9 | 44/2/8 | 39/13/2 | 45/8/1 | 41/10/3 | 46/8/0 | 48/4/2 | 400/52/34 |

4 Experiments

In this section, we present the main empirical results of PLAIN compared with state-of-the-art baselines. More experimental results are shown in Appendix B.

4.1 Datasets

We employe a total of twelve synthetic as well as real-world datasets for comparative studies. Specifically, the synthetic ones are generated from the widely-used multi-label learning datasets. A total of nine benchmark multi-label datasets111http://mulan.sourceforge.net/datasets-mlc.html are used for synthetic PML datasets generation, including Emotions, Birds, Medical, Image, Bibtex, Corel5K, Eurlex-dc, Eurlex-sm, NUS-WIDE. Note that NUS-WIDE is a large-scale dataset, and we preprocess it as in Lyu et al. (2020). For each data example in MLL datasets, we randomly select irrelevant labels and aggregate them with the ground-truth labels to obtain a candidate set. For example, given an instance and its ground-truth label set , we select labels from its complementary set . If there are less than irrelevant labels, i.e. , we simply set the whole training set as the candidate set for . Following the experimental settings in Lyu et al. (2020), we choose , resulting in synthetic datasets. Furthermore, we conducted experiments on three real-world PML datasets Zhang and Fang (2021), including Music-emotion, Music-style, Mirflickr. These three datasets are derived from the image retrieval task Huiskes and Lew (2008), where the candidate labels are collected from web users and then further verified by human annotators to determine the ground-truth labels.

4.2 Baselines and Implementation Details

We choose six benchmark methods for comparative studies, including two MLL methods ML-KNN Zhang and Zhou (2007), GLOCAL Zhu et al. (2018), and four state-of-the-art PML methods PML-NI Xie and Huang (2022), PARTICLE Zhang and Fang (2021), HALE Lyu et al. (2020), and PML-lc Xie and Huang (2018). In particular, PARTICLE and HALE are two advanced graph-based PML methods. PARTICLE Zhang and Fang (2021) adopts an instance-level label propagation algorithm to disambiguate the candidate labels. HALE Lyu et al. (2020) regards the denoising procedure as a graph-matching procedure.

The parameter setups for the used methods are as follows. We fix for all NN-based methods, including PLAIN. For the baselines, we fix or fine-tune the hyperparameters as the suggested configurations in respective papers. For our PLAIN method, the deep model is comprised of three fully-connected layers. The hidden sizes are set as for those datasets with less than labels, and for those datasets with more than and less than labels. For the remaining datasets, we set the hidden sizes as . The trade-off parameters and are hand-tuned from . is selected from . Following Iscen et al. (2017), we set . We train our deep model via stochastic gradient descent and empirically set the learning rate as for both propagation and deep model training procedures. The number of maximum iterations is set as for small-scale datasets and for the large-scale NUS-WIDE dataset. Besides, weight decay is applied with a rate of to avoid overfitting.

For performance measure, we use three widely-used multi-label metrics, ranking loss, average precision and hamming loss Zhang and Zhou (2014). Finally, we perform ten-fold cross-validation on each dataset and the mean metric values as well as the standard deviations are reported.

| Datasets | PLAIN | PML-NI | HALE | ML-KNN | GLOCAL | PML-lc | PARTICLE |

| Ranking Loss (the lower the better) | |||||||

| Emotions | 0.1580.024 | 0.2140.029 | 0.2350.037 | 0.2410.026 | 0.3220.053 | 0.4590.035 | 0.2590.019 |

| Birds | 0.2050.036 | 0.1870.035 | 0.2710.061 | 0.3040.048 | 0.3020.041 | 0.3210.021 | 0.3010.032 |

| Medical | 0.0500.011 | 0.0390.013 | 0.1690.025 | 0.0880.019 | 0.0680.005 | 0.0560.012 | 0.1000.021 |

| Image | 0.1900.015 | 0.2890.018 | 0.1920.015 | 0.3420.026 | 0.2640.010 | 0.4670.025 | 0.3150.073 |

| Corel5K | 0.1200.004 | 0.2050.006 | 0.2590.011 | 0.1390.007 | 0.1640.003 | 0.1690.013 | 0.3330.050 |

| Bibtext | 0.0790.005 | 0.1260.010 | 0.6010.009 | 0.2320.006 | 0.1310.006 | 0.3420.005 | 0.2870.010 |

| Eurlex-dc | 0.0330.002 | 0.0300.003 | 0.0790.002 | 0.0860.012 | 0.1500.003 | 0.0710.013 | 0.0610.023 |

| Eurlex-sm | 0.0270.001 | 0.0280.002 | 0.0400.002 | 0.0430.003 | 0.0710.003 | 0.0820.013 | 0.0530.002 |

| NUS-WIDE | 0.2110.003 | 0.2210.002 | 0.2390.012 | 0.3010.011 | 0.3110.020 | - | 0.2400.015 |

| Average Precision (the higher the better) | |||||||

| Emotions | 0.8120.043 | 0.7540.016 | 0.7510.035 | 0.7410.029 | 0.6470.030 | 0.5730.025 | 0.7450.024 |

| Birds | 0.6060.037 | 0.5800.062 | 0.5050.054 | 0.4530.052 | 0.3900.060 | 0.3880.035 | 0.4310.051 |

| Medical | 0.7810.039 | 0.8490.035 | 0.7690.023 | 0.6720.039 | 0.7510.009 | 0.7130.012 | 0.7200.044 |

| Image | 0.7740.020 | 0.6680.018 | 0.7620.016 | 0.6270.022 | 0.6920.008 | 0.5230.019 | 0.6890.096 |

| Corel5K | 0.3190.010 | 0.2890.011 | 0.2310.008 | 0.2510.007 | 0.2840.007 | 0.2470.012 | 0.1350.041 |

| Bibtext | 0.5500.014 | 0.5370.012 | 0.3530.010 | 0.3060.006 | 0.4380.011 | 0.2830.010 | 0.3130.012 |

| Eurlex-dc | 0.7400.008 | 0.7000.011 | 0.6730.006 | 0.6030.012 | 0.2780.005 | 0.6020.012 | 0.6300.016 |

| Eurlex-sm | 0.8020.007 | 0.7180.011 | 0.7390.013 | 0.7610.012 | 0.5560.008 | 0.5820.013 | 0.6950.012 |

| NUS-WIDE | 0.2860.004 | 0.2740.003 | 0.2300.007 | 0.1710.015 | 0.1770.024 | - | 0.2060.017 |

| Hamming Loss (the lower the better) | |||||||

| Emotions | 0.2230.033 | 0.4550.059 | 0.2970.071 | 0.6070.209 | 0.2810.015 | 0.4370.027 | 0.2330.018 |

| Birds | 0.0880.010 | 0.0950.013 | 0.0960.009 | 0.0530.006 | 0.0960.008 | 0.1320.012 | 0.1420.018 |

| Medical | 0.0190.001 | 0.0140.002 | 0.0170.001 | 0.0220.002 | 0.0280.001 | 0.0630.003 | 0.0240.002 |

| Image | 0.2070.009 | 0.2730.028 | 0.1890.017 | 0.7530.005 | 0.2370.009 | 0.4430.014 | 0.4030.042 |

| Corel5K | 0.0090.000 | 0.0110.000 | 0.0140.001 | 0.0090.000 | 0.0090.000 | 0.0190.002 | 0.0090.000 |

| Bibtext | 0.0130.000 | 0.0150.000 | 0.0160.001 | 0.0140.000 | 0.0150.001 | 0.0210.001 | 0.0170.000 |

| Eurlex-dc | 0.0020.001 | 0.0100.001 | 0.0040.003 | 0.0090.005 | 0.0020.000 | 0.0130.005 | 0.0040.000 |

| Eurlex-sm | 0.0060.002 | 0.0110.001 | 0.0080.005 | 0.0120.001 | 0.0110.000 | 0.0190.002 | 0.0100.001 |

| NUS-WIDE | 0.0210.000 | 0.0350.002 | 0.0310.008 | 0.0490.016 | 0.0220.001 | - | 0.2900.006 |

-

- means over long time consumption, and thus, the experimental results are not reported.

4.3 Experimental Results

Table 1 reports the experimental results on real-world datasets. Table 3 lists the results on synthetic datasets, where the parameter is configured with . The results of are consistent with those of and thus are omitted. To better understand the superiority of the proposed method, we summarize the win/tie/loss counts on all synthetic datasets in Table 2. We also report the statistical testing results in Appendix B for analyzing the relative performance amongst competing algorithms. Based on these results, we can draw the following observations:

-

•

The proposed PLAIN method significantly outperforms all other competing approaches. For example, on the Music-emotion and Mirflicker datasets, in terms of average precision, PLAIN achieved results superior to those of the best baselines by % and % respectively.

-

•

According to Table 2, out of 486 statistical comparisons, six competing algorithms, and three evaluation metrics, PLAIN beats the competing methods in % cases. In particular, PLAIN wins or ties the baselines in % cases regarding average precision.

-

•

ML-KNN and GLOCAL obtain inferior performance on many datasets, such as Medical and Eurlex-dc. The reason is that these tailored MLL models regard all the candidate labels as the ground-truth and thus tend to overfit the false-positive labels.

-

•

Two PML methods PML-NI and HALE are strong baselines. In particular, PML-NI achieved the second-best results on most datasets. However, PLAIN performs significantly better than them, since their performance is restricted to the linear classifier.

-

•

On large-scale dataset NUS-WIDE, PLAIN still outperforms other methods on all evaluation metrics. It demonstrates the effectiveness of PLAIN in handling high-volume of data.

4.4 Further Analysis

Complexity Analysis

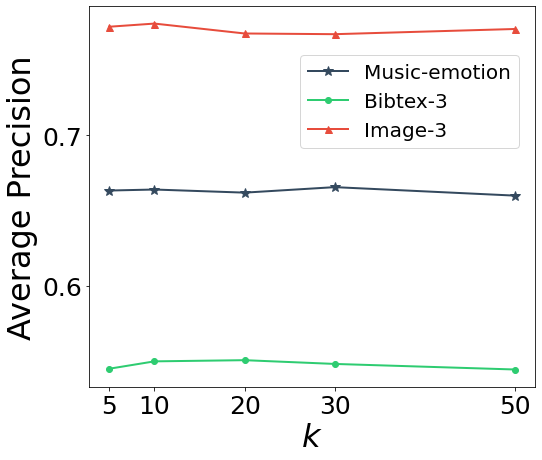

In Table 4, we report the running time of different training stages of PLAIN, including the graph building step, label propagation step (one epoch) and the deep model training step (one epoch), on datasets with various scales. First of all, we can see that the instance graph can be fast built in a few microseconds on all datasets, with the help of Faiss package Johnson et al. (2017). Moreover, by utilizing the sparse property of instance graph and the fast computation ability of GPUs, the time complexity of label propagation is at the same magnitude as that of deep model training. In particular, on those datasets with fewer labels, such as Music-emotion and Image, the propagation phase is as fast as the deep model traveling the whole training dataset. In Figure 2, (a) and (b) show the parameter sensitivities of PLAIN to and . We can observe that PLAIN is stable with varying values of and . Specifically, PLAIN obtains high average precision even with small and small . But when (without label propagation), our PLAIN model degenerates to the trivial DNN model and thus, the performance degrades. It should also be noted that when is too large, the performance of PLAIN slightly drops because the model tends to overfit. In summary, the proposed PLAIN model requires only a few gradient steps in the label propagation phase and can be efficiently trained.

Comparison between Loss Functions

As discussed in section 3.2.2, mean square error (MSE) is error-prone to the label noise. Hence, we conducted experiments to compare the performance of different loss functions, including MSE, MAE, BCE and probabilistic MSE (PMSE). The results are shown in Figure 2 (c). We can see that MAE demonstrates inferior performance and low convergence. Despite its robustness, it tends to produce harsh predictions and is opposite to the graph techniques which generate smooth outputs. MSE obtains good performance, but underperforms BCE and PMSE, since it easily overfits the false-positive labels. Finally, both BCE and PMSE demonstrate good empirical results. These observations suggest that a robust loss function is essential in successful learning from PML data.

| Datasets | Graph Building | Label Propagation | Model Training |

| Image | 1.0 | 150.2 | 154.4 |

| Medical | 1.1 | 344.0 | 112.2 |

| Music-emotion | 5.6 | 449.6 | 480.7 |

| Bibtex | 26.7 | 2498.7 | 600.8 |

| Eurlex-sm | 355.7 | 8911.2 | 1902.2 |

| NUS-WIDE | 1829.3 | 17255.2 | 11251.6 |

Ablation Analysis

An ablation study would be informative to see how different components of the proposed PLAIN contribute to the prediction performance. Thus, we compare PLAIN with three variants: 1) a pure deep neural network model (DNN) that treats the candidate labels as valid ones; 2) the PLAIN model without considering label-level similarity (PLAIN w/o LS); 3) the PLAIN model without considering instance-level similarity (PLAIN w/o IS). Figure 3 reports the results on one real-world dataset as well as two synthetic datasets. In general, both instance- and label-level graph regularizers improve the simple DNN model on most datasets and evaluation metrics. Then, by integrating the two regularizers, our PLAIN model consistently achieves the best performance. These results clearly verify the superiority of the proposed method.

5 Conclusion

In this work, we proposed a novel deep partial multi-label learning model PLAIN that copes with several limitations in existing graph-based PML methods. At each training epoch, we first involve both the instance- and label-level similarities to generate pseudo-labels via label propagation. We then train the deep model to fit the disambiguated labels. Moreover, we analyzed the time complexity and the robustness of PLAIN from both theoretical and empirical perspectives. Comprehensive experiments on synthetic datasets as well as real-world PML datasets clearly validate the efficiency, robustness, and effectiveness of our proposed method. Our work demonstrates that combining the graph and deep models together leads to high expressiveness as well as good disambiguation ability. We hope our work will increase attention toward a broader view of graph-based deep PML methods.

Acknowledgments

This work is supported by the National Key R&D Program of China (No. 2022YFB3304100) and by the Zhejiang University-China Zheshang Bank Co., Ltd. Joint Research Center. Gengyu Lyu is supported by the National Key R&D Program of China (No. 2022YFB3103104) and the China Postdoctoral Science Foundation (No. 2022M720320).

References

- Bai et al. [2020] Junwen Bai, Shufeng Kong, and Carla P. Gomes. Disentangled variational autoencoder based multi-label classification with covariance-aware multivariate probit model. In IJCAI, pages 4313–4321. ijcai.org, 2020.

- Chen et al. [2019a] Long Chen, Wujing Zhan, Wei Tian, Yuhang He, and Qin Zou. Deep integration: A multi-label architecture for road scene recognition. IEEE Trans. Image Process., 28(10):4883–4898, 2019.

- Chen et al. [2019b] Zhao-Min Chen, Xiu-Shen Wei, Peng Wang, and Yanwen Guo. Multi-label image recognition with graph convolutional networks. In CVPR, pages 5177–5186. Computer Vision Foundation / IEEE, 2019.

- Demsar [2006] Janez Demsar. Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res., 7:1–30, 2006.

- Durand et al. [2019] Thibaut Durand, Nazanin Mehrasa, and Greg Mori. Learning a deep convnet for multi-label classification with partial labels. In IEEE Conf. Comput. Vis. Pattern Recog., pages 647–657. IEEE, 2019.

- Feng et al. [2020] Lei Feng, Takuo Kaneko, Bo Han, Gang Niu, Bo An, and Masashi Sugiyama. Learning with multiple complementary labels. In ICML, volume 119, pages 3072–3081. PMLR, 2020.

- Ghosh et al. [2017] Aritra Ghosh, Himanshu Kumar, and P. S. Sastry. Robust loss functions under label noise for deep neural networks. In AAAI, pages 1919–1925. AAAI Press, 2017.

- Gong et al. [2022] Xiuwen Gong, Dong Yuan, and Wei Bao. Partial multi-label learning via large margin nearest neighbour embeddings. In AAAI, pages 6729–6736. AAAI Press, 2022.

- He et al. [2019] Shuo He, Ke Deng, Li Li, Senlin Shu, and Li Liu. Discriminatively relabel for partial multi-label learning. In ICDM, pages 280–288. IEEE, 2019.

- Huiskes and Lew [2008] Mark J. Huiskes and Michael S. Lew. The MIR flickr retrieval evaluation. In SIGMM, pages 39–43. ACM, 2008.

- Huynh and Elhamifar [2020] Dat Huynh and Ehsan Elhamifar. Interactive multi-label CNN learning with partial labels. In IEEE Conf. Comput. Vis. Pattern Recog., pages 9420–9429. IEEE, 2020.

- Iscen et al. [2017] Ahmet Iscen, Giorgos Tolias, Yannis Avrithis, Teddy Furon, and Ondrej Chum. Efficient diffusion on region manifolds: Recovering small objects with compact CNN representations. In IEEE Conf. Comput. Vis. Pattern Recog., pages 926–935. IEEE Computer Society, 2017.

- Johnson et al. [2017] Jeff Johnson, Matthijs Douze, and Hervé Jégou. Billion-scale similarity search with gpus. arXiv preprint arXiv:1702.08734, 2017.

- Lai et al. [2016] Hanjiang Lai, Pan Yan, Xiangbo Shu, Yunchao Wei, and Shuicheng Yan. Instance-aware hashing for multi-label image retrieval. IEEE Trans. Image Process., 25(6):2469–2479, 2016.

- Lee et al. [2018] Chung-Wei Lee, Wei Fang, Chih-Kuan Yeh, and Yu-Chiang Frank Wang. Multi-label zero-shot learning with structured knowledge graphs. In IEEE Conf. Comput. Vis. Pattern Recog., pages 1576–1585. IEEE, 2018.

- Li and Wang [2020] Ximing Li and Yang Wang. Recovering accurate labeling information from partially valid data for effective multi-label learning. In IJCAI, pages 1373–1380. ijcai.org, 2020.

- Li et al. [2020] Ziwei Li, Gengyu Lyu, and Songhe Feng. Partial multi-label learning via multi-subspace representation. In IJCAI, pages 2612–2618. ijcai.org, 2020.

- Liu et al. [2022] Weiwei Liu, Haobo Wang, Xiaobo Shen, and Ivor W. Tsang. The emerging trends of multi-label learning. IEEE Trans. Pattern Anal. Mach. Intell., 44(11):7955–7974, 2022.

- Lyu et al. [2020] Gengyu Lyu, Songhe Feng, and Yidong Li. Partial multi-label learning via probabilistic graph matching mechanism. In KDD. ACM, 2020.

- Niu et al. [2019] Xuesong Niu, Hu Han, Shiguang Shan, and Xilin Chen. Multi-label co-regularization for semi-supervised facial action unit recognition. In Adv. Neural Inform. Process. Syst., pages 907–917, 2019.

- Qiu et al. [2018] Jiezhong Qiu, Jian Tang, Hao Ma, Yuxiao Dong, Kuansan Wang, and Jie Tang. Deepinf: Social influence prediction with deep learning. In KDD. ACM, 2018.

- Read et al. [2011] Jesse Read, Bernhard Pfahringer, Geoff Holmes, and Eibe Frank. Classifier chains for multi-label classification. Mach. Learn., 85(3):333–359, 2011.

- Shen et al. [2018] Xiaobo Shen, Weiwei Liu, Ivor W. Tsang, Quan-Sen Sun, and Yew-Soon Ong. Multilabel prediction via cross-view search. IEEE Trans. Neural Networks Learn. Syst., 29(9):4324–4338, 2018.

- Shi et al. [2020] Wanli Shi, Victor S. Sheng, Xiang Li, and Bin Gu. Semi-supervised multi-label learning from crowds via deep sequential generative model. In KDD, pages 1141–1149. ACM, 2020.

- Sun et al. [2019] Lijuan Sun, Songhe Feng, Tao Wang, Congyan Lang, and Yi Jin. Partial multi-label learning by low-rank and sparse decomposition. In AAAI, pages 5016–5023. AAAI Press, 2019.

- Tan et al. [2022] Anhui Tan, Jiye Liang, Wei-Zhi Wu, and Jia Zhang. Semi-supervised partial multi-label classification via consistency learning. Pattern Recognit., 131:108839, 2022.

- Veit et al. [2017] Andreas Veit, Neil Alldrin, Gal Chechik, Ivan Krasin, Abhinav Gupta, and Serge J. Belongie. Learning from noisy large-scale datasets with minimal supervision. In IEEE Conf. Comput. Vis. Pattern Recog., pages 6575–6583. IEEE Computer Society, 2017.

- Wang et al. [2019] Haobo Wang, Weiwei Liu, Yang Zhao, Chen Zhang, Tianlei Hu, and Gang Chen. Discriminative and correlative partial multi-label learning. In IJCAI, pages 3691–3697. ijcai.org, 2019.

- Wei et al. [2018] Tong Wei, Lan-Zhe Guo, Yu-Feng Li, and Wei Gao. Learning safe multi-label prediction for weakly labeled data. Mach. Learn., 107(4):703–725, 2018.

- Wei et al. [2021] Tong Wei, Jiang-Xin Shi, and Yu-Feng Li. Probabilistic label tree for streaming multi-label learning. In KDD, pages 1801–1811. ACM, 2021.

- Wei et al. [2022] Tong Wei, Zhen Mao, Jiang-Xin Shi, Yu-Feng Li, and Min-Ling Zhang. A survey on extreme multi-label learning. CoRR, abs/2210.03968, 2022.

- Wu et al. [2021] Zonghan Wu, Shirui Pan, Fengwen Chen, Guodong Long, Chengqi Zhang, and Philip S. Yu. A comprehensive survey on graph neural networks. IEEE Trans. Neural Networks Learn. Syst., 32(1):4–24, 2021.

- Xie and Huang [2018] Ming-Kun Xie and Sheng-Jun Huang. Partial multi-label learning. In AAAI, pages 4302–4309. AAAI Press, 2018.

- Xie and Huang [2020] Ming-Kun Xie and Sheng-Jun Huang. Semi-supervised partial multi-label learning. In ICDM, pages 691–700. IEEE, 2020.

- Xie and Huang [2022] Ming-Kun Xie and Sheng-Jun Huang. Partial multi-label learning with noisy label identification. IEEE Trans. Pattern Anal. Mach. Intell., 44(7):3676–3687, 2022.

- Xu et al. [2020] Ning Xu, Yun-Peng Liu, and Xin Geng. Partial multi-label learning with label distribution. In AAAI, pages 6510–6517. AAAI Press, 2020.

- Xu et al. [2021] Ning Xu, Yun-Peng Liu, Yan Zhang, and Xin Geng. Progressive enhancement of label distributions for partial multilabel learning. IEEE Trans. Neural Networks Learn. Syst., pages 1–12, 2021.

- Xu et al. [2022] Ning Xu, Congyu Qiao, Jiaqi Lv, Xin Geng, and Min-Ling Zhang. One positive label is sufficient: Single-positive multi-label learning with label enhancement. CoRR, abs/2206.00517, 2022.

- Yan and Guo [2019] Yan Yan and Yuhong Guo. Adversarial partial multi-label learning. CoRR, abs/1909.06717, 2019.

- Yan et al. [2021] Yan Yan, Shining Li, and Lei Feng. Partial multi-label learning with mutual teaching. Knowl. Based Syst., 212:106624, 2021.

- Yeh et al. [2017] Chih-Kuan Yeh, Wei-Chieh Wu, Wei-Jen Ko, and Yu-Chiang Frank Wang. Learning deep latent space for multi-label classification. In AAAI, pages 2838–2844. AAAI Press, 2017.

- Yilmaz et al. [2021] Selim F. Yilmaz, E. Batuhan Kaynak, Aykut Koç, Hamdi Dibeklioğlu, and Suleyman Serdar Kozat. Multi-label sentiment analysis on 100 languages with dynamic weighting for label imbalance. IEEE Trans. Neural Networks Learn. Syst., pages 1–13, 2021.

- Yu et al. [2014] Hsiang-Fu Yu, Prateek Jain, Purushottam Kar, and Inderjit S. Dhillon. Large-scale multi-label learning with missing labels. In ICML, volume 32 of JMLR Workshop and Conference Proceedings, pages 593–601. JMLR.org, 2014.

- Yu et al. [2018] Guoxian Yu, Xia Chen, Carlotta Domeniconi, Jun Wang, Zhao Li, Zili Zhang, and Xindong Wu. Feature-induced partial multi-label learning. In ICDM, pages 1398–1403. IEEE Computer Society, 2018.

- Zhang and Fang [2021] Min-Ling Zhang and Jun-Peng Fang. Partial multi-label learning via credible label elicitation. IEEE Trans. Pattern Anal. Mach. Intell., 43(10):3587–3599, 2021.

- Zhang and Zhou [2007] Min-Ling Zhang and Zhi-Hua Zhou. ML-KNN: A lazy learning approach to multi-label learning. Pattern Recognit., 40(7):2038–2048, 2007.

- Zhang and Zhou [2014] Min-Ling Zhang and Zhi-Hua Zhou. A review on multi-label learning algorithms. IEEE Trans. Knowl. Data Eng., 26(8):1819–1837, 2014.

- Zhu et al. [2018] Yue Zhu, James T. Kwok, and Zhi-Hua Zhou. Multi-label learning with global and local label correlation. IEEE Trans. Knowl. Data Eng., 30(6):1081–1094, 2018.

Appendix A Theoretical Analysis

A.1 Complexity Analysis

It is worth noting that the propagation step can be efficient. First of all, we have disentangled the propagation step from the deep model training, which allows us to perform simple algebra operations. Moreover, since is close to both and , either one can be used to initialize and only a few gradient steps are required to get a satisfactory solution.

Theoretically, in Eq. (3), calculating the term requires time. To compute , since the instance graph is highly sparse, we need , where denotes the number of non-zero entries in a matrix. In our data-driven graph-construction case, we have . Therefore, at each epoch, we require time in the graph propagation procedure, where is the number of gradient steps. In our implementation, we use the widely-used matrix computation package Scipy to perform sparse matrix multiplication . The remaining calculations, including the deep model training, are done on GPUs using PyTorch. According to our empirical analysis, the graph propagation procedure is indeed as fast as the deep training and be efficiently computed.

A.2 Convergence Analysis

It is also of interest to see the convergence performance of PLAIN method. Take the Probabilistic MSE risk as an example, the overall objective can be formulated as follows,

with a constant factor ignored on the deep model objective. We further assume that the gradient descent algorithm is used as the function in Algorithm 1 and the learning rates are selected properly. The following Proposition ensures the convergence of our method and proves the value of the objective function is non-increasing at each updating step.

| Datasets | EXPs† | FEAs | CLs | M-GT | A-GT | DOM |

| Emotions | 593 | 72 | 6 | 3 | 1.87 | music |

| Birds | 645 | 260 | 19 | 6 | 1.01 | audio |

| Medical | 978 | 1,449 | 45 | 3 | 1.25 | text |

| Image | 2,000 | 294 | 5 | 3 | 1.23 | image |

| Corel5K | 5,000 | 499 | 374 | 5 | 3.52 | image |

| Bibtext | 7,395 | 1,836 | 159 | 28 | 2.40 | text |

| Eurlex-dc | 19,348 | 5,000 | 412 | 7 | 1.29 | text |

| Eurlex-sm | 19,348 | 5,000 | 201 | 12 | 2.21 | text |

| NUS-WIDE† | 133,441 | 500 | 81 | 20 | 1.76 | image |

| Music-emotion | 6,833 | 98 | 11 | 7 | 2.42 | music |

| Music-style | 6,839 | 98 | 10 | 4 | 1.44 | music |

| Mirflickr | 10,433 | 100 | 7 | 5 | 1.77 | image |

-

‡

For each PML dataset, the number of examples (EXPs), features (FEAs), class labels (CLs), the maximum number of ground-truth labels (M-GT), the average number of ground-truth labels (A-GT) and its corresponding domain (DOM) are recorded.

-

†

The original number of instances is 269,648 but some of them are unlabeled w.r.t the 81 class labels, thus we only utilized the remaining 133,441 instances to conduct experiments.

Proposition 1.

The updating rules listed in Algorithm 1 guarantee finding a stationary point of the objective .

Proof.

Let be the solution at the -th epoch and be the corresponding objective function value. In the propagation step, is fixed and the remaining objective, i.e. Eq. (2), is a standard convex optimization problem. Thus, our gradient descent algorithm guarantees to decrease the objective function value. Next, in the deep model training step, is fixed and the objective in Eq. (4) is non-convex. However, the gradient descent algorithm still decreases the objective until a first-order stationary point is achieved. We can conclude that,

Consequently, the objective function will monotonically decrease and finally converge to a stationary point. ∎

Appendix B Additional Experiments and Implementation Details

In this section, we present additional empirical results. All the computations are carried out on a workstation with an Intel E5-2680 v4 CPU, a Quadro RTX 5000 GPU, and 500GB main memory running the Linux platform. We summarize the characteristics of the used datasets in Table 5.

| Metrics | Critical Value | |

| Ranking Loss | 31.88 | 2.15 (Methods: 7, Data sets: 30) |

| Average Precision | 49.26 | |

| Hamming Loss | 2.87 |

B.1 Statistical Tests

Following previous works Lyu et al. [2020]; Zhang and Fang [2021], to comprehensively evaluate the superiority of PLAIN, we further utilized Friedman test Demsar [2006] as the statistical test to analyze the relative performance among the competing methods. The experimental results are reported in Table 6. At a 0.05 significance level, the null hypothesis of indistinguishable performance of PLAIN among all competing methods is clearly rejected. Subsequently, we employ the Bonferroni-Dunn test as the posthoc test by regarding PLAIN as the control approach. Figure 4 reports the CD diagrams on each evaluation metric, where the average rank of the competing approaches is marked along the axis. The performance of the control method and one learning method is deemed to be significantly different if their average ranks differ by at least one CD. According to Figure 4, we can observe that PLAIN achieves highly superior results to other baseline methods.

B.2 Further Analysis

Convergence Curve

We also conducted experiments on Music-emotion to show the convergence curve of the objective function. The results in Figure 5 (a) further support our proposition. Besides, the experimental results in Figure 2 (c) also agree with our theoretical findings. When the binary cross-entropy loss is used, our deep model is trained in an asymmetric fashion, and then there is no overall objective for our algorithm. However, as shown in Figure 2 (c), our method still tends to converge to a good solution.

| Datasets | PLAIN | APML | PML-MT♯ |

| Ranking Loss (the lower the better) | |||

| Music-emotion | 0.2150.005 | 0.2420.007 | 0.2360.007 |

| Music-style | 0.1340.007 | 0.1450.006 | - |

| Mirflickr | 0.0880.008 | 0.1240.014 | 0.1260.016 |

| Average Precision (the higher the better) | |||

| Music-emotion | 0.6640.007 | 0.6210.013 | 0.6270.010 |

| Music-style | 0.7430.009 | 0.7320.010 | - |

| Mirflickr | 0.8450.013 | 0.7770.027 | 0.8070.034 |

| Hamming Loss (the lower the better) | |||

| Music-emotion | 0.1920.003 | 0.2000.004 | 0.2070.011 |

| Music-style | 0.1140.004 | 0.1150.002 | - |

| Mirflickr | 0.1660.003 | 0.1700.003 | 0.1730.011 |

-

The results of PML-MT on Music-style are not reported in the corresponding paper.

Comparing with Deep PML Methods

To demonstrate the effectiveness of PLAIN, we further compare with two state-of-the-art deep PLL methods APML Yan and Guo [2019] and PML-MT Yan et al. [2021] on three real-world datasets according to the reported results in their papers. Since their experimental settings are different from our work, we follow Yan and Guo [2019]; Yan et al. [2021] to split the datasets to 80% training and 20% testing. We then rerun the proposed PLAIN on these datasets and the experimental results are reported in Table 7. We can observe that PLAIN outperforms APML and PML-MT on all datasets and all evaluation metrics. For instance, on the Music-emotion, Music-style, and Mirflicker datasets, in terms of average precision, PLAIN achieves results superior to those of the best-competing methods by 5.90%, 1.50% and 4.71% respectively. These results demonstrate that the graph technique improves the disambiguation ability of deep PML models and further confirms the effectiveness of our proposed PLAIN.

Comparing with Two-stage Variant

To show the superiority of our end-to-end design, we further experiment with a two-stage variant of PLAIN which first propagates labels on the graph until converges and then trains the deep model to fit the pseudo-labels. The results are shown in 8. We found the two-stage method underperforms PLAIN because it fails to disambiguate labels in the first stage, making deep model overfits. In contrast, PLAIN incorporates accurate model prediction into propagation for refined pseudo-labels. We will add complete results in the revision.

| Metrics | Rloss | AP | Hloss |

| Two-Stage | 0.236 | 0.637 | 0.201 |

| PLAIN | 0.217 | 0.664 | 0.191 |

Parameter Analysis of

Furthermore, we study the sensitivity analysis of PLAIN with respect to the critical parameter . In Figure 5 (b), we show the performance of PLAIN changes as increases from 5 to 50 on three datasets. We can observe that PLAIN is robust in terms of the parameter and thus, we empirically fix in our experiments.

| Data | |

| Number of data examples | |

| Dimensions of features and labels | |

| Average number of false-positive labels in datasets | |

| Training dataset | |

| Feature space and label space | |

| Candidate label set and true label set | |

| Candidate label vector, true label vector, and predicted label vector | |

| Candidate label matrix and predicted label matrix | |

| Pseudo-Label matrix | |

| Algorithm | |

| Indices of -nearest neighbors of | |

| Sparse instance affinity matrix | |

| Instance and label graphs | |

| Diagonal instance and label degree matrices | |

| Instance and label Laplacian matrices | |

| Identity matrix | |

| Function | |

| Neural network | |

| Parameters of the neural network | |

| Instance and label graph regularizers | |

| Overall propagation objective | |

| Sigmoid function | |

| Loss function | |

| Overall objectives of propagation and deep model training | |

| Trace of a matrix | |

| Indicator function for equivalance testing | |

| Hyper-parameter | |

| Number of nearest neighbors | |

| Weights of graph similarity | |

| Loss weighting factors of candidate consistency, instance regularizer, and label regularizer | |

| Learning rate of gradient descent for label propagation | |

| Number of propagation gradient updating steps | |

Appendix C Notations and Terminology

The notations are summarized in Table 9.