1Graduate School of Life and Medical Sciences, Doshisha University, Kyoto, Japan

2Department of Biomedical Sciences and Informatics, Doshisha University, Kyoto, Japan

(Tel: +81-774-65-6020; E-mail: [email protected])

Deep-learning models in medical image analysis:

Detection of esophagitis from the Kvasir Dataset

Abstract

Early detection of esophagitis is important because this condition can progress to cancer if left untreated. However, the accuracies of different deep learning models in detecting esophagitis have yet to be compared. Thus, this study aimed to compare the accuracies of convolutional neural network models (GoogLeNet, ResNet-50, MobileNet V2, and MobileNet V3) in detecting esophagitis from the open Kvasir dataset of endoscopic images. Results showed that among the models, GoogLeNet achieved the highest F1-scores. Based on the average of true positive rate, MobileNet V3 predicted esophagitis more confidently than the other models. The results obtained using the models were also compared with those obtained using SHapley Additive exPlanations and Gradient-weighted Class Activation Mapping.

keywords:

Kvasir dataset, Deep Learning, Convolutional Neural Networks, Gradient-Weighted Class Activation Mapping, SHAP, SHapley Additive exPlanation1 Introduction

With the development of artificial intelligence (AI), several studies have focused on the application of this technology in the medical field. In gastroenterology, AI is used to detect inflammation, polyps, and stomach cancer and develop systems that can automatically determine the severity of symptoms [1] [2] [3] [4]. AI models are expected to improve diagnostic accuracy and reduce medical costs by preventing misdiagnosis by humans.

Various deep learning and AI models, including deep learning convolutional neural network (CNN) models, have been proposed and used for medical image recognition and analysis. However, these models differ in accuracy, and comparing this aspect is important to identify which model is suitable for a specific application in endoscopic imaging.

The z-line is an anatomic landmark located posterior to the stomach and esophagus. Esophagitis is an inflammation of the esophagus that appears as a break in the esophageal mucosa relative to the z-line [5]. The z-line and esophagitis can be described as normal and diseased conditions, respectively. Early detection of esophagitis is necessary because this condition can cause complications (e.g., esophageal ulcer, bleeding, and stricture) and progress to cancer if left untreated. Therefore, distinguishing between the z-line and esophagitis is necessary. However, this procedure is difficult [6]. In addition, the accuracies of various models in detecting esophagitis have yet to be compared.

Thus, this study aimed to compare the accuracies of several CNN models, including GoogLeNet [7], ResNet-50 [8], MobileNet V2 [9], and MobileNet V3 [10], in identifying z-lines and esophagitis in endoscopic images from the open Kvasir dataset. These models have received considerable attention in recent years after winning in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a competition using a large image recognition dataset. The results obtained by the four CNN models were compared. The training models were also compared with the explainable artificial intelligence (XAI) methods Gradient-weighted Class Activation Mapping (Grad-CAM) [11] and SHapley Additive exPlanations (SHAP) [12].

2 Deep learning in medical image analysis

2.1 Typical architecture for image classification

CNN is a deep learning method specialized for image recognition. It is widely used for identifying lesion sites in medical images. It combines a convolutional layer with a pooling layer and finally iterates through all the combined layers to generate the results. In this study, we compared the results of different CNN models used for site identification in medical images. The CNN models used included GoogleNet and ResNet, the successive winning models of ILSVRC, and MobileNet V2 and MobileNet V3, which have attracted considerable attention in recent years because of their small computational and memory.

2.1.1 GoogLeNet

GoogLeNet was the winning model at ILSVRC in 2014 The model consists of an Inception module, 11 convolution, auxiliary loss, and global average pooling. GoogLeNet can be multi-layered using the Inception module, but 11 convolution is performed before each convolution calculation to reduce dimensionality resulting from the large number of parameters. The Inception module helps process data using multiple filters in parallel. The fully connected layer is removed to increase the width and depth of the network, average pooling is used instead of the fully connected layer to avoid gradient loss, and class classification is performed on sub-networks branched from the middle of the network by auxiliary loss [7].

2.1.2 ResNet

ResNet was the winning model at the ILSVRC in 2015. The problem of learning not progressing due to gradient loss and degradation problems was solved using a method called Residual Block, which uses 152 very deep layers to solve the problem. The key features of this model are residual block and batch normalization using shortcut connection. ResNet has several models with different layer depths. ResNet-50 shows higher accuracy than GoogLeNet in ImageNet classification [8]. However, ResNet-50 requires about twice as many parameters as GoogLeNet.

2.1.3 MobileNet V2

MobileNet is a small computationally and memory model that can adjust the trade-off between accuracy and computational load. Depthwise separable convolution decomposes the convolution layer into depthwise and pointwise convolution for computation. This mechanism reduces the computation cost. Furthermore, V2 introduces expand/projection layers and inverted residual blocks. Expand/projection layers rapidly increase or decrease the number of channels. MobileNet V2 achieves comparable accuracy to GoogLeNet and ResNet-50 in ImageNet classification while significantly reducing the number of parameters [9].

2.1.4 MobileNet V3

MobileNet V3 is an improved version of MobileNet V2, introducing a squeeze-and-excite structure (SE-block) in the inverted residual block, one of the features of MobileNet V2. SE-block improves the expressiveness of the model by weighting information in the channel direction [13]. Compared with V2, MobileNet V3 shows more accurate ImageNet classification while shortening total inference time [10].

2.2 Explainable AI (XAI)

The CNN models were compared with XAI methods Grad-CAM and SHAP. The Discussion section explains the results obtained using these techniques.

2.2.1 Grad CAM

Grad-CAM displays a color map of the area the CNN is gazing at for classification [11]. It is based on the fact that variables with large gradients in the output values of the predicted class are essential for classification prediction. The gradient of each input image pixel with respect to the output value of the prediction class in the last convolution layer is used.

2.2.2 SHAP

SHAP calculates, for each predicted value, how each characteristic variable affects that prediction [12]. This analysis allows us to visualize the impact of an increase or decrease in the value of a given characteristic variable.

3 Materials and Methods

CNN models GoogLeNet, ResNet-50, MobileNet V2, and MobileNet V3 were employed to detect esophagitis from the open Kvasir dataset of endoscopic images, and their results were compared.

3.1 Kvasir dataset

The Kvasir dataset is a collection of endoscopic images of the gastrointestinal tract. It was annotated and validated by certified endoscopists. The dataset was made available in the fall of 2017 through the Medical Multimedia Challenge provided by MediaEval. It includes anatomical landmarks (pylorus, z-line, and cecum), disease states (esophagitis, ulcerative colitis, and polyps), and medical procedures (dyed lifted polyps and dyed resection margins). The resolution of the images from the Kvasir dataset with these eight classes varies from 720576 pixels to 19201072 pixels. Each image has a different shooting angle, resolution, brightness, magnification, and center point.

3.2 Prepossessing

Image prepossessing was performed before training the models. Edge artifacts and annotations that interfere with learning during the analysis of medical images were removed. A mask image was created, where pixels with luminance values below a certain threshold were set to 0. The opening process was applied to the mask image to remove the annotations. The image was cropped using this final mask image to obtain the target area. This process was performed on all data.

Each image in the dataset has a different resolution. All images were resized to 224224 pixels by bilinear completion and optimized for deep learning input. In addition to these processes, data augmentation was performed on the data used for learning. We applied two types of data augmentation: horizontal and vertical flip.

3.3 Cross Validation

A total of 1000 image data sets containing z-lines and esophagitis were partitioned into test, training, and validation data. First, 25% (n = 250) of the total data were randomly selected to generate test data. Of the remaining data (75%, n = 750), 50% (n = 500) was used for training and 25% (n = 250) for validation.

The inner loop consisted of training and validation data. The model was trained using the training data, and parameters such as the optimal number of epochs were determined using the validation data. Thus, four training models were generated. The test data of each model were evaluated, and the average of discrimination accuracy of the four times was used as the evaluation value of the CNN model. The test, training, and validation data were each partitioned to maintain the class proportions.

3.4 CNN models

PyTorch was used for the implementation of GoogLeNet, ResNet-50, MobileNet V2, and MobileNet V3. The initial values of all model parameters were pre-trained by ImageNet, and the models were trained by fine tuning.

For all models, the Adam optimizer was used for training. The batch size was five, and the maximum number of epochs was 100. The cross-entropy error shown in equation (1) was used as the loss function.

| (1) |

3.5 Evaluation Function

Five evaluation indices were used in this experiment: accuracy, precision, recall, specificity, and F1-score. These metrics were calculated using the confusion matrix shown in Table 1.

|

|

|||||

|---|---|---|---|---|---|---|

|

True Positive | False Negative | ||||

|

False Positive | True Negative |

In this experiment, the z-line and esophagitis were judged as the negative and positive classes, respectively. In other words, data judged to be esophagitis and z-line by the learning model were designated true positive (TP) and false negative (FN), respectively. Meanwhile, data determined to be esophagitis and z-line by the training model were designated false positive (FP) and true negative (TN), respectively. Based on the values of TP, FP, TN, and FN obtained from the confusion matrix, the accuracy, precision, recall, specificity, and F1-score of the models were calculated using Equations(2) to (6).

| (2) |

| (3) |

| (4) |

| (5) |

| (6) |

4 Results and Discussions

4.1 Performance comparison between different architecture

The evaluation indices obtained from the experiments are shown in Table 2.

| Model |

|

|

|

|

|

|||||

|---|---|---|---|---|---|---|---|---|---|---|

| GoogLeNet | 0.846 | 0.859 | 0.830 | 0.862 | 0.843 | |||||

| MobileNet V3 | 0.842 | 0.901 | 0.776 | 0.908 | 0.831 | |||||

| ResNet-50 | 0.833 | 0.865 | 0.792 | 0.874 | 0.826 | |||||

| MobileNet V2 | 0.830 | 0.852 | 0.800 | 0.860 | 0.825 |

The F1-score results in Table 2 show that GoogLeNet was the best among the four models. In other words, GoogLeNet was more reliable in predicting esophagitis than the other models. Meanwhile, MobileNet V3 showed the highest precision and specificity. In other words, MobileNet V3 was the most accurate among the tested models for z-line prediction. From a medical point of view, an ideal model should be likely to distinguish esophagitis with severe symptoms from the z-line.

The average of TP rate were 0.950, 0.923, 0.892, and 0.841 for MobileNet V3, MobileNet V2, GoogLeNet, and ResNet-50, respectively. MobileNet V3 predicted esophagitis with more confidence than the other models.

4.2 GoogLeNet analysis

Grad-CAM and SHAP were applied to the learned model, and what kind of the model was created was discussed.

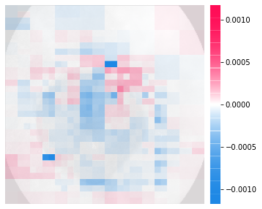

Figure1 shows an example of the image results in the case of TP predicted by GoogLeNet. In the Grad-CAM results, red indicates the most potent activation, and blue indicates the weakest activation. In the SHAP results, the SHAP values of the patches were computed and rendered in a color map: a positive SHHAP value (red) indicates that the class is supported. By contrast, a negative SHAP value (blue) indicates that the class is rejected.

Tearing the esophageal mucosa against the z-line is a feature of esophagitis. According to Figure1, the results of Grad-CAM and SHAP showed that the learned model of GoogLeNet can makes predictions focusing on the clinically significant aspects of esophagitis images. The GoogLeNet model learned the findings that are important for diagnosing esophagitis. Comparison results showed that SHAP captured the location of multiple mucosal tears in the image more accurately than Grad-CAM.

Figure2 shows the results of applying Grad-CAM and SHAP in the FN case. The following can be observed from the results of Grad-CAM and SHAP for Figure2, respectively. In the Grad-CAM results, most areas in the image are shown as activated regions. Areas that provide the basis for the prediction are difficult to identify because of the gradient saturation in the Grad-CAM calculation. In the SHAP results, the inflammatory areas of the input image are indicated by blue pixels. Blue pixels indicate features that have a negative contribution to the prediction. In other words, although the model incorrectly identified esophagitis as a z-line, the model recognized that areas in the image negatively contributed to the z-line decision.

4.3 MobileNet V3 analysis

One hundred images were determined to be TP in the MobileNet V3 model. The SHAP results for the images judged to have the highest and lowest probabilities of being esophagitis are shown in Figure3.

As shown in Figure3, in cases with a high prediction probability, some features may have a negative contribution to the prediction. Many features showing negative contributions can be identified in the images with low prediction probability for Figure3. In this case, the prediction probability may be low.

5 Conclusions

We compared the accuracies of CNN models, including GoogLeNet, ResNet-50, MobileNet V2, and MobileNet V3, in identifying z-line and esophagitis in endoscopic images from the open Kvasir dataset. Among the four models, GoogLeNet had the highest F1-score, and MobileNet V3 had the highest average TP rate. These results suggest that GoogLeNet performs better than state-of-the-art CNN models in medical image recognition. In addition, MoblieNet V3 is a cost-effective model because of its low memory and short training time. Each model was analyzed and compared with Grad-CAM, and SHAP. Other models, datasets, and model analyses are warranted for verification.

References

- [1] Peng-Jen Chen, Meng-Chiung Lin, Mei-Ju Lai, Jung-Chun Lin, Henry Horng-Shing Lu, and Vincent S Tseng. Accurate classification of diminutive colorectal polyps using computer-aided analysis. Gastroenterology, 154(3):568–575, 2018.

- [2] Toshiaki Hirasawa, Kazuharu Aoyama, Tetsuya Tanimoto, Soichiro Ishihara, Satoki Shichijo, Tsuyoshi Ozawa, Tatsuya Ohnishi, Mitsuhiro Fujishiro, Keigo Matsuo, Junko Fujisaki, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer, 21(4):653–660, 2018.

- [3] Pedro Guimarães, Andreas Keller, Tobias Fehlmann, Frank Lammert, and Markus Casper. Deep-learning based detection of gastric precancerous conditions. Gut, 69(1):4–6, 2020.

- [4] Yaqiong Zhang, Fengxia Li, Fuqiang Yuan, Kai Zhang, Lijuan Huo, Zichen Dong, Yiming Lang, Yapeng Zhang, Meihong Wang, Zenghui Gao, et al. Diagnosing chronic atrophic gastritis by gastroscopy using artificial intelligence. Digestive and Liver Disease, 52(5):566–572, 2020.

- [5] Konstantin Pogorelov, Kristin Ranheim Randel, Carsten Griwodz, Sigrun Losada Eskeland, Thomas de Lange, Dag Johansen, Concetto Spampinato, Duc-Tien Dang-Nguyen, Mathias Lux, Peter Thelin Schmidt, et al. Kvasir: A multi-class image dataset for computer aided gastrointestinal disease detection. In Proceedings of the 8th ACM on Multimedia Systems Conference, pages 164–169, 2017.

- [6] Timothy Cogan, Maribeth Cogan, and Lakshman Tamil. Mapgi: accurate identification of anatomical landmarks and diseased tissue in gastrointestinal tract using deep learning. Computers in biology and medicine, 111:103351, 2019.

- [7] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1–9, 2015.

- [8] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [9] Mark Sandler, Andrew Howard, Menglong Zhu, Andrey Zhmoginov, and Liang-Chieh Chen. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4510–4520, 2018.

- [10] Andrew Howard, Mark Sandler, Grace Chu, Liang-Chieh Chen, Bo Chen, Mingxing Tan, Weijun Wang, Yukun Zhu, Ruoming Pang, Vijay Vasudevan, et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF international conference on computer vision, pages 1314–1324, 2019.

- [11] Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- [12] Scott M Lundberg and Su-In Lee. A unified approach to interpreting model predictions. Advances in neural information processing systems, 30, 2017.

- [13] Jie Hu, Li Shen, and Gang Sun. Squeeze-and-excitation networks. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 7132–7141, 2018.