Deep Learning for Plant Identification and Disease Classification from Leaf Images: Multi-prediction Approaches

Abstract.

Deep learning plays an important role in modern agriculture, especially in plant pathology using leaf images, where convolutional neural networks (CNNs) are attracting a lot of attention. While numerous reviews have explored the applications of deep learning within this research domain, there remains a notable absence of empirical studies offering insightful comparisons, because existing evaluations employ varied datasets. Furthermore, a majority of these approaches tend to address the problem as a single prediction task, overlooking the multifaceted nature of predicting various aspects of plant species and disease types. Lastly, there is an evident need for a more profound consideration of the semantic relationships that underlie plant species and disease types. In this paper, we start our study by surveying current deep learning approaches for plant identification and disease classification. We categorise the approaches into multi-model, multi-label, multi-output, and multi-task, in which different backbone CNNs can be employed. Furthermore, based on the survey of existing approaches in plant pathology and the study of available approaches in machine learning, we propose a new model named Generalised Stacking Multi-output CNN (GSMo-CNN). To investigate the effectiveness of different backbone CNNs and learning approaches, we conduct an intensive experiment on three benchmark datasets: Plant Village, Plant Leaves, and PlantDoc. The experimental results demonstrate that InceptionV3 can be a good choice for a backbone CNN, as its performance is better than that of AlexNet, VGG16, ResNet101, EfficientNet, MobileNet, and a custom CNN developed by us. Interestingly, there is empirical evidence to support the hypothesis that using a single model for both tasks can be comparable to or better than using two models, one for each task. Finally, we show that the proposed GSMo-CNN achieves state-of-the-art performance on the three benchmark datasets.

1. Introduction

Deep learning (DL) has been a major disruptor in a wide range of real-life applications, from autonomous vehicles to clinical decision support. In agriculture, deep learning approaches have been emerging as a revolutionary tool for sustainable production. In particular, they play an important role in precision agriculture (PA)/smart agriculture (SA) (Vijaykanth Reddy and Sashi Rekha, 2021; Gajjar et al., 2021; Chouhan et al., 2021; Mureşan et al., 2020; Chouhan et al., 2020), including, but not limited to, pest, weed and irrigation control, automatic harvesting, yield estimation, and plant disease/fruit detection. Deep learning within the field of plant pathology has garnered significant attention from both the research and industrial sectors. In these domains, the identification of plants and the classification of diseases based on leaf images have witnessed extensive study and practical application (Azlah et al., 2019; Othman et al., 2022; Shelke and Mehendale, 2022; Kanda et al., 2021; Wu et al., 2007; Agarwal et al., 2019; Ashok et al., 2020; Agarwal et al., 2020; Saraswathi et al., 2021; G. and J., 2019; Sunil et al., 2020; Lee et al., 2021). Leaf images are commonly used because leaves are an important part of plants: they carry out photosynthesis and are the most visible part of most plants throughout their growth (Chouhan et al., 2020). Leaf colour, texture, and shape can characterise plant species and, therefore, are useful in plant identification for large-scale plant and crop management (Wu et al., 2007). In addition, many plant diseases are visible on leaves. Leaf disease is one of the important factors that disrupt the health of plants as a whole and is one of the main causes of reduced crop yield. Therefore, it is critical for farmers to detect the occurrence of leaf disease as early as possible and minimise its negative impact or, at least, keep it under control. In general, there is a rising demand from the industry for effective methods to accurately detect and/or classify leaf diseases.

Deep learning is revolutionising traditional methods for SA/PA, especially in plant and disease classification. Although popular in the past, traditional approaches have several obvious limitations, mostly caused by manual costs such as labour training, the need for human involvement in many stages of the prediction process, and reliance on expert knowledge. With manual methods, timely detection is difficult and the diagnosis may rest on subjective judgment. Nowadays, with the assistance of computer vision, the Internet of Things (IoT) and machine learning, leaf diseases can be detected in real time through various devices, e.g., mobile phone applications (Paymode et al., 2021), websites (Wadhawan et al., 2020), IoT applications (Chen et al., 2020) and smart glasses (Ponnusamy et al., 2020). For example, (Chen et al., 2020) introduced a combined approach of IoT and AI models to detect rice blast disease. That approach uses a custom convolutional neural network (CNN), similar to the first model we implement in Section 3.3.1, but it works on different input data: in (Chen et al., 2020), an IoT platform for soil cultivation was utilised to extract non-image data, while our study focuses on image data.

With these advanced technologies, the difficulty of the production operation can be greatly reduced, and accuracy and efficiency can be improved. Among them, machine learning has been emerging as a key player, leading the innovation pathway for more effective prediction solutions in plant identification and disease classification. In the earlier stage, researchers employed traditional machine learning approaches, combining feature extraction and classification, with limited success (Kirti and Rajpal, 2020; Barburiceanu et al., 2020; Singh et al., 2020b; Das et al., 2020; Gadade and Kirange, 2020; JAYAPRAKASH and BALAMURUGAN, 2021; Shahidur Harun Rumy et al., 2021; Bharate and Shirdhonkar, 2020). The key issue is that such approaches rely heavily on an independent step that crafts features from the images in order to classify plant species and disease types. The features are normally defined by domain experts or generated by general image processing techniques such as Scale-Invariant Feature Transform (SIFT) (JAYAPRAKASH and BALAMURUGAN, 2021) and grey-level co-occurrence matrix (GLCM) (Dang-Ngoc et al., 2021; Bharate and Shirdhonkar, 2020; Shahidur Harun Rumy et al., 2021; Tulshan and Raul, 2019; Kumar et al., 2020b).

Because they are produced independently of the later step of learning a classifier, these handcrafted features may not be optimal for prediction. Deep learning has emerged recently as an effective solution. For example, CNNs can provide an end-to-end classification pipeline to identify plants and classify diseases directly from leaf images. An advantage of CNNs is their ability to learn distinctive features tailored to specific tasks. Furthermore, their adaptability allows models to be fine-tuned to maximise effectiveness on a particular dataset. Finally, their distributed computation capability makes them an ideal choice for large-scale solutions, enabling efficient processing and analysis of substantial datasets.

Although the application of DL to plant identification and disease classification is not new, we found that most current studies develop CNN models as single-prediction classifiers. A multi-prediction approach covering both plant species and disease types would be more convenient, as the two targets have different indicative features in leaf images. More importantly, besides the common types of disease, different plant species are prone to diseases of their own. Therefore, we hypothesise that by incorporating the two tasks in one single model, we can improve the prediction performance for each task. To investigate this hypothesis, we employ multi-label, multi-output and multi-task approaches with deep learning as the core. The main constraint is that each image must carry multiple labels to enable the training of the deep models. Let us formally define the problem as follows.

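Problem Statement: Given a data set $\mathcal{D} = \{(x_i, y_i^{s}, y_i^{d})\}_{i=1}^{N}$, where $x_i \in \mathbb{R}^{W \times H \times C}$ is an image with a width $W$, height $H$, and $C$ channels; $y_i^{s}$ is a plant species; and $y_i^{d}$ is a plant disease, how can we train a deep learning model to accurately identify the plant species and the disease type from an unseen image $x$?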

A plethora of deep learning models, mostly CNNs, have been employed separately for plant identification and leaf disease classification, including AlexNet (G. and J., 2019; Ashok et al., 2020; Agarwal et al., 2019; ANANDHAKRISHNAN and JAISAKTHI, 2020), GoogLeNet (Vijaykanth Reddy and Sashi Rekha, 2021; Zhang et al., 2018), VGG (Agarwal et al., 2020; ANANDHAKRISHNAN and JAISAKTHI, 2020; Bir et al., 2020; Agarwal et al., 2019; Huang et al., 2020; Thet et al., 2020), Inception (Agarwal et al., 2020; KRISHNAMOORTHY and PARAMESWARI, 2021; Hassan et al., 2021; Sai, 2021), ResNet (ANANDHAKRISHNAN and JAISAKTHI, 2020; Guan, 2021; Kumar et al., 2020a; Vijaykanth Reddy and Sashi Rekha, 2021), and MobileNet (Mwebaze et al., 2019; Surya and Gautama, 2020; Agarwal et al., 2020; Huang et al., 2020). However, several questions have not been studied properly, including (i) which backbone CNNs are most useful for plant identification and disease classification; (ii) what other deep learning approaches can be employed for this task; (iii) whether separate models for plant identification and disease classification perform better than a single model for both tasks; and (iv) whether their performance comparison is consistent across different datasets.

In this paper, we aim to answer the above questions, and finally verify our hypothesis, by surveying, developing, and comparing a wide range of CNN architectures. To this end, we solve the problem of plant identification and disease classification by employing and evaluating a variety of deep learning approaches. We conduct an empirical study to analyse the usefulness of current deep learning models for plant species identification and leaf disease detection from leaves. We categorise the deep learning approaches into:

- Multi-model: This is an ensemble of two CNN models, one tasked with predicting plant species from leaf images and the other with predicting diseases. For completeness, we use and compare different backbone CNNs for these models, including our custom CNN, AlexNet, VGG16, ResNet101, EfficientNet, InceptionV3, and MobileNetV2.

- Multi-label: This is a single CNN model whose output covers multiple labels. Different from standard multi-label learning (Madjarov et al., 2012), in this case we combine the labels into a power-set label for the prediction. In other words, we use one combined label to represent multiple labels. We also employ different backbone CNNs for this approach.

- Multi-output: This is a single CNN model with multiple output layers, one for each prediction target. Similarly, different backbone CNNs are used for this model as well.

- Multi-task: We can also adapt multi-task learning to this problem. In this case, the target tasks are different but the input data for the tasks come from the same distribution. Multi-task learning has been employed effectively in many computer vision problems (Yuan et al., 2012; Zhang et al., 2013), and deep learning can bolster this class of approaches because one task’s features may benefit other tasks’ learning (Zhang and Yang, 2021; Zhang et al., 2014).
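To make the difference between the multi-model and multi-output settings more concrete, the sketch below computes a joint training objective for a model with two output layers, assuming (as is common, though not stated above) that each head is trained with softmax cross-entropy and that the two losses are summed. The function names and the per-head weights are hypothetical and serve illustration only.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-likelihood of the target class under softmax(logits)."""
    return -math.log(softmax(logits)[target_index])

def multi_output_loss(species_logits, disease_logits,
                      species_target, disease_target,
                      species_weight=1.0, disease_weight=1.0):
    """Weighted sum of the per-head losses (the weights are an assumption)."""
    return (species_weight * cross_entropy(species_logits, species_target)
            + disease_weight * cross_entropy(disease_logits, disease_target))

# Toy example: 3 species and 4 diseases. In a real model the two logit
# vectors would come from two output layers on top of one shared backbone.
loss = multi_output_loss([2.0, 0.5, -1.0], [0.1, 0.2, 3.0, -0.5],
                         species_target=0, disease_target=2)
```

In a multi-model ensemble, by contrast, each of the two terms would be minimised by its own network, so no parameters are shared between the tasks.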

Furthermore, based on the theoretical analysis of the above approaches, we propose a new method to improve the performance of plant identification and disease classification. After that, we review and select suitable benchmark datasets for an extensive experiment. For the empirical analysis, we use three datasets: Plant Village (Hughes and Salathe, 2016), Plant Leaves (Chouhan et al., 2019), and PlantDoc (Singh et al., 2020a). The experimental results show that InceptionV3 is the best CNN backbone for both plant identification and disease classification. Interestingly, there is empirical evidence to support the hypothesis stated earlier, as single models for both tasks can achieve better performance than an ensemble of independent models. Finally, our proposed model achieves state-of-the-art results on the benchmark datasets studied in this paper. The contribution of this paper is threefold:

- We conduct a detailed literature review through appropriate selection criteria, covering recent common machine learning methods and available public datasets in the field of plant identification and disease classification. We generalise the methods into two classes of multi-prediction paradigm (multi-model CNNs and multi-label CNNs) and extend the study by re-introducing several methods that are available in the machine learning literature (multi-output CNNs and multi-task learning) but have not been studied deeply in plant pathology research. As far as we know, this is the first study to survey and compare different deep multi-prediction techniques.

- We propose a novel model, namely Generalised Stacking Multi-output CNN (GSMo-CNN), to improve the performance of plant identification and disease classification. Our model creates multiple output layers and stacks them one on top of another to form a chain of classification in a hierarchical manner. By doing this, we aim to represent the relationship between plant species and disease types during the inference process. The experimental results show that GSMo-CNN achieves state-of-the-art performance on all datasets studied in this paper.

- Our study reveals several important empirical findings. First, we show that the selection of the backbone CNN is critical for achieving good performance, and that InceptionV3 has the best performance in our study. Second, we demonstrate the advantage of single models for multi-prediction over multiple models. This is an interesting finding, as single models are more compact and easier to train. Third, we showcase the effectiveness of combining labels in a hierarchical manner: together with transfer learning, it can significantly improve the prediction performance for both plant species and leaf diseases. These findings offer valuable insights for researchers and practitioners in agriculture. They can save time in the search for suitable approaches to more accurate and efficient plant species identification and disease classification, ultimately contributing to improved crop management and disease control. For the sake of reproducibility, we share the source code and datasets in this study at: https://github.com/funzi-son/plant_pathology_dl.
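The idea of stacking output layers into a chain can be illustrated with a minimal sketch. It assumes, purely for illustration, that the disease output layer receives the shared features concatenated with the predicted species probabilities; this is one plausible reading of the description above and is not taken from the authors' implementation or repository. All function names, weights and dimensions are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def linear(weights, bias, inputs):
    """Dense layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]

def chained_heads(features, species_w, species_b, disease_w, disease_b):
    """Species head first, then a disease head conditioned on its output."""
    species_probs = softmax(linear(species_w, species_b, features))
    # Assumption: the second head sees the backbone features plus the
    # species prediction, forming a two-step classification chain.
    disease_input = features + species_probs
    disease_probs = softmax(linear(disease_w, disease_b, disease_input))
    return species_probs, disease_probs

# Toy dimensions: 2 backbone features, 2 species, 3 diseases.
features = [0.5, -1.0]
species_w = [[1.0, 0.0], [0.0, 1.0]]
species_b = [0.0, 0.0]
disease_w = [[0.2, 0.1, 1.0, 0.0],
             [0.0, 0.3, 0.0, 1.0],
             [0.1, 0.1, 0.5, 0.5]]
disease_b = [0.0, 0.0, 0.0]

species_probs, disease_probs = chained_heads(
    features, species_w, species_b, disease_w, disease_b)
```

The point of the chain is that the disease prediction can depend on the species prediction, which is one way to encode the fact that different species are prone to different diseases.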

2. Machine Learning for Plant Identification and/or Disease Classification

2.1. Article Selection Criteria

| Year | Paper | Tech | Review | Total |
|---|---|---|---|---|
| 2021 - 2023 | (Metre and Sawarkar, 2021), (Sujatha et al., 2021), (Vijaykanth Reddy and Sashi Rekha, 2021), (Shahidur Harun Rumy et al., 2021), (Dang-Ngoc et al., 2021), (Raina and Gupta, 2021)†, (Paymode et al., 2021), (Guan, 2021), (KRISHNAMOORTHY and PARAMESWARI, 2021), (Chowdhury et al., 2021), (Saraswathi et al., 2021), (JANA et al., 2021), (Mukhopadhyay et al., 2021), (Li et al., 2021b)†, (Chouhan et al., 2021), (Gajjar et al., 2021), (Hu et al., 2021), (JAYAPRAKASH and BALAMURUGAN, 2021), (Hassan et al., 2021), (Ekanayake and Nawarathna, 2021)†, (Sai, 2021), (Kathiresan et al., 2021), (Metre and Sawarkar, 2022)†, (Ariyapadath, 2021), (Qin et al., 2021), (Vandenhende et al., 2021b), (Hassanin et al., 2021), (Lee et al., 2021), (Yousef Methkal Abd et al., 2023), (Pandey et al., 2023), (Thangaraj et al., 2023) | 27 | 4 | 31 |
| 2020 | (Xie et al., 2020), (Singh et al., 2020a), (Kirti and Rajpal, 2020), (Bharate and Shirdhonkar, 2020), (Barburiceanu et al., 2020), (Huang et al., 2020), (Thet et al., 2020), (Singh et al., 2020b), (Bhowmik et al., 2020), (Ashok et al., 2020), (Kumar et al., 2020a), (lakshmi and Nickolas, 2020), (Kawatra et al., 2020), (Kumar et al., 2020b), (Surya and Gautama, 2020), (Wadhawan et al., 2020), (Rajesh et al., 2020), (Sunil et al., 2020), (Chaudhari and Patil, 2020), (Bir et al., 2020), (Das et al., 2020), (Ponnusamy et al., 2020), (Mureşan et al., 2020)†, (Gadade and Kirange, 2020), (Sharma et al., 2020), (Agarwal et al., 2020), (ANANDHAKRISHNAN and JAISAKTHI, 2020), (Chouhan et al., 2020)†, (Fu et al., 2020), (Chen et al., 2020) | 28 | 2 | 30 |
| 2015 - 2019 | (G. and J., 2019), (Agarwal et al., 2019), (Tulshan and Raul, 2019), (Mwebaze et al., 2019), (Zhang et al., 2018), (Sardogan et al., 2018), (Padol and Yadav, 2016), (Hughes and Salathé, 2015), (Hughes and Salathe, 2016), (dos Santos Ferreira et al., 2017), (Jasitha et al., 2019), (Misra et al., 2016), (Shinohara, 2016), (Wang et al., 2016) | 14 | 0 | 14 |

† Review article (counted in the Review column).

The academic papers for this study were selected according to three key criteria. Firstly, the publication timeline: as illustrated in Table 1, over 80% of the research articles in this study were published between 2020 and 2023. This timeframe was chosen to ensure the relevance and timeliness of this research. Secondly, the degree of citation and the impact factor or ranking of the venue (such as Q1 for journals and CORE A/A* for conferences) were considered. Lastly, the relevance to this research was determined through keyword searches, including "leaf disease", "plant disease", "machine learning", "deep learning", "classification", and "detection". These keywords were used to search reputable databases such as EBSCOhost, Scopus, and Google Scholar.

2.2. Traditional Machine Learning versus Deep Learning

In the earlier years, traditional (shallow) machine learning was used for plant identification and leaf disease classification (Ariyapadath, 2021; Xie et al., 2020; Agarwal et al., 2020; Thet et al., 2020; Sardogan et al., 2018; Bhowmik et al., 2020; lakshmi and Nickolas, 2020). This machine learning paradigm in plant pathology consists of two different steps: feature extraction (Raina and Gupta, 2021; Li et al., 2021b; Metre and Sawarkar, 2022, 2021) and classifier training (Kirti and Rajpal, 2020; Barburiceanu et al., 2020; Tulshan and Raul, 2019; Bharate and Shirdhonkar, 2020). In some cases, researchers also include data segmentation after collecting and pre-processing the data and before applying feature extraction (Metre and Sawarkar, 2022, 2021). Among the many feature extraction techniques, K-means clustering (Kirti and Rajpal, 2020; Padol and Yadav, 2016; Kumar et al., 2020b; Chaudhari and Patil, 2020) and grey-level co-occurrence matrix (GLCM) (Bharate and Shirdhonkar, 2020; Tulshan and Raul, 2019; Dang-Ngoc et al., 2021; Kumar et al., 2020b; Shahidur Harun Rumy et al., 2021) are the most common. In terms of shallow learning classifiers, Support Vector Machines (SVMs) (Kirti and Rajpal, 2020; Barburiceanu et al., 2020; Singh et al., 2020b; Das et al., 2020; Gadade and Kirange, 2020; Shahidur Harun Rumy et al., 2021; Padol and Yadav, 2016; Bharate and Shirdhonkar, 2020; Dang-Ngoc et al., 2021; Kumar et al., 2020b; Chaudhari and Patil, 2020; Mukhopadhyay et al., 2021) were the most popular, followed by K-Nearest Neighbor (KNN) (Tulshan and Raul, 2019; Bharate and Shirdhonkar, 2020), Random Forest (RF) (Shahidur Harun Rumy et al., 2021), Multilayer Perceptron (MLP) (JAYAPRAKASH and BALAMURUGAN, 2021), and Decision Tree (Rajesh et al., 2020). They are all popular in machine learning applications and achieve good performance in leaf disease detection and/or classification. Where data segmentation is used, the region of interest must be detected in the data before it is fed to the feature extractors and then to the classifiers. As we can see, the whole process is complex, involving several consecutive steps such as data acquisition, data processing, feature extraction, and prediction (Raina and Gupta, 2021; Li et al., 2021b; Metre and Sawarkar, 2022, 2021).
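As an illustration of the handcrafted feature step, the following minimal sketch computes a grey-level co-occurrence matrix and two texture features derived from it. It follows the general textbook definition of GLCM rather than the pipeline of any cited paper; the function names, the 4-level toy image and the horizontal offset are assumptions made for the example.

```python
def glcm(image, levels, dx=1, dy=0):
    """Count how often grey level i occurs next to grey level j at offset (dx, dy)."""
    matrix = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                matrix[image[y][x]][image[ny][nx]] += 1
    return matrix

def normalise(matrix):
    """Turn co-occurrence counts into joint probabilities."""
    total = sum(sum(row) for row in matrix)
    return [[count / total for count in row] for row in matrix]

def contrast(p):
    """Texture contrast: large when neighbouring pixels differ strongly."""
    n = len(p)
    return sum(((i - j) ** 2) * p[i][j] for i in range(n) for j in range(n))

def homogeneity(p):
    """Texture homogeneity: large when neighbouring pixels are similar."""
    n = len(p)
    return sum(p[i][j] / (1 + (i - j) ** 2) for i in range(n) for j in range(n))

# Toy 4x4 image quantised to 4 grey levels (0..3).
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]

counts = glcm(image, levels=4)          # horizontal neighbour pairs
probabilities = normalise(counts)
features = [contrast(probabilities), homogeneity(probabilities)]
```

In a traditional pipeline, a feature vector of this kind would then be passed to a separately trained classifier such as an SVM, which is exactly the decoupling that end-to-end CNNs avoid.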

| Paper | Year | Dataset | Non-DL accuracy | DL accuracy |
|---|---|---|---|---|
| (Sharma et al., 2020) | 2020 | Plant Village (Part) | 66.4% | 98% |
| (Saraswathi et al., 2021) | 2021 | Plant Village (Part) | 90% | 96% |
| (Agarwal et al., 2019) | 2019 | Plant Village (Grape) | 97.5% | 99% |
| (G. and J., 2019) | 2019 | Plant Village (Whole) | 87.87% | 97.87% |
| (Kumar et al., 2020a) | 2020 | Plant Village (Modified) | 88.06% | 96.51% |
| (Vijaykanth Reddy and Sashi Rekha, 2021) | 2021 | Plant Village (Apple) | 68.73% | 97.62% |
| (Ashok et al., 2020) | 2020 | Tomato Leaves | 92.94% | 98.12% |
| (Sujatha et al., 2021) | 2021 | Citrus Leaves (Rauf et al., 2019) | 87% | 89.5% |
| (Yousef Methkal Abd et al., 2023) | 2023 | Citrus Leaves (Rauf et al., 2019) | 86% | 99.98% |

The rise of deep learning has brought substantial changes in technology adoption. As Table 2 shows, recent studies have affirmed that deep learning can achieve better performance than traditional (non-deep) learning approaches (Sujatha et al., 2021; Sharma et al., 2020; Saraswathi et al., 2021; Agarwal et al., 2019; G. and J., 2019; Kumar et al., 2020a; Vijaykanth Reddy and Sashi Rekha, 2021). A class of deep learning models, known as convolutional neural networks (CNNs), has been widely applied to plant identification and/or leaf disease classification. In (dos Santos Ferreira et al., 2017), the authors employed a popular CNN model named AlexNet for plant classification and showed that it can classify grass and broadleaf with an average accuracy of up to 99%. For leaf disease classification, AlexNet achieved 91.19% accuracy on apple leaf images (Vijaykanth Reddy and Sashi Rekha, 2021), 86.5% on grape leaves (Agarwal et al., 2019) and 95.75% on tomato leaf images (Ashok et al., 2020). Note that these are separate models, one for each task. A deeper architecture, known as very deep convolutional neural networks (VGG or VGGNet), has shown better performance than AlexNet in image classification tasks. When applied to plant identification, VGG achieved 97% accuracy on the Leaf1 dataset (8 species), 96.57% on the Flavia dataset (32 species) and 85.37% on the D-Leaf dataset (43 species) (Jasitha et al., 2019). VGG has also produced promising results for leaf disease classification. For example, VGG-16 (16 weight layers) has been applied to grape leaf disease classification on several datasets (Agarwal et al., 2019; Huang et al., 2020; Thet et al., 2020). Notably, in (Thet et al., 2020), VGG-16 with a global average pooling (GAP) layer achieved 98.4% accuracy. Another common VGG variant, VGG-19 (19 weight layers), achieved 96.86% accuracy in tomato leaf disease classification (Bir et al., 2020).

Another CNN architecture, known as Residual Networks or ResNets, has shown remarkable results in image classification tasks in recent years (it won the 2015 ImageNet competition). In (Qin et al., 2021), a set of ResNet variants was employed for plant identification. On a real leaf dataset collected by the authors, with 15207 images of 201 species, the reported accuracies are 91.83% (ResNet-50), 92.71% (Res2Net-50) and 92.95% (Res2Net-101). For leaf disease classification, ResNet-50 achieved 98.40% accuracy on tomato leaves (ANANDHAKRISHNAN and JAISAKTHI, 2020), a customised ResNet model reached 82.78% accuracy on a modified Plant Village dataset (Guan, 2021), and ResNet-34 achieved 99.40% accuracy and a 0.9651 F1-score in betel vine leaf disease (Kumar et al., 2020a). In (Vijaykanth Reddy and Sashi Rekha, 2021), ResNet-20 was tested on apple leaf images and achieved 92.76% accuracy. Last but not least, InceptionV3, the third version of Google’s Inception CNN, has recently emerged as a good model for classification tasks with leaf images. In (Agarwal et al., 2020), it achieved 63.4% accuracy on tomato leaf diseases, and in (KRISHNAMOORTHY and PARAMESWARI, 2021), it achieved 95.41% in rice leaf disease classification. InceptionV3 has also been applied to the well-known Plant Village dataset with very promising results, as shown in (Hassan et al., 2021) (98.42% accuracy) and in (Sai, 2021) (99.74% accuracy).

Besides the very deep and complex models discussed above, several studies have also shown the advantages of light-weight CNNs in plant identification and leaf disease classification. One advantage of light-weight CNNs is that they can run on low-resource devices, enabling a wider range of applications in smart agriculture and precision agriculture. For example, MobileNet has been deployed on smartphones and IoT devices. In (Agarwal et al., 2020), it achieved 63.75% accuracy in tomato leaf disease classification, and in (Huang et al., 2020) it achieved 86% accuracy in grape leaf disease classification. Another light-weight CNN model is EfficientNet, whose variants (B0, B4, B7) have been used to classify tomato leaf diseases (Plant Village) (Chowdhury et al., 2021). To enable high performance for EfficientNet, the authors relabelled the dataset into three subtasks: task 1: healthy & unhealthy; task 2: 5 classes of leaf state, including bacterial & fungal; task 3: 1 healthy & 9 diseases. The results show that B7 performed best in task 1 (99.95%) and task 2 (99.12%), and B4 performed best in task 3 (99.89%).

The above related work applied CNNs separately for plant identification and disease classification from leaf images. Each paper evaluates the CNNs on a different dataset, and sometimes on a modified dataset, making it difficult to benchmark their performance. Different from them, this paper sets up a comprehensive evaluation to provide a comparative view of the CNNs, using multiple datasets.

Several attempts in recent years have shown promising approaches that use a single CNN for multiple tasks (Misra et al., 2016; Fu et al., 2020; Vandenhende et al., 2021b; Shinohara, 2016; Hassanin et al., 2021; Wang et al., 2016). In the case of plant pathology, a CNN can learn to predict both plant species and diseases at the same time with leaf images as input. For example, in (Lee et al., 2021) the authors showed the effectiveness of conditional multi-task learning for the simultaneous identification of plant species and classification of diseases. Unlike other studies on large-scale multi-task learning, the approach adapts the multi-task learning idea to interrelated labels, where the input data for the different tasks come from the same distribution. In the Plant Village and PlantDoc datasets, the labels are combinations of both species and diseases, e.g., Apple Black Rot in Plant Village and Tomato Leaf Late Blight in PlantDoc. This combination deals with the multi-prediction problem by creating a combined label known as a power-set label, which transforms a multi-task or multi-label problem into a large-scale multi-class task. A trained model predicts the species and diseases simultaneously, and the predicted results are joint species-disease labels. Several examples that employ this idea are shown in Table 3. In (Sharma et al., 2020; Saraswathi et al., 2021; Sunil et al., 2020) the authors applied power-set CNNs to a subset of the Plant Village dataset, and in (G. and J., 2019; Kumar et al., 2020a; Guan, 2021; Kawatra et al., 2020) the authors worked on the whole or a modified Plant Village dataset. Most of these studies report best accuracies above 90%. By contrast, according to a study on the PlantDoc dataset, with the support of image segmentation and power-set labelling, VGG-16 achieved 60.41% accuracy, InceptionV3 achieved 62.06% accuracy, and InceptionResNet V2 achieved 70.53% accuracy (Singh et al., 2020a).
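The power-set transformation described above can be sketched in a few lines: every distinct (species, disease) pair becomes one class of an ordinary multi-class problem, and a predicted class index is decoded back into the pair. The helper name and the example annotations (including the "Healthy" label) are hypothetical and only illustrate the encoding.

```python
def build_power_set(pairs):
    """Map each distinct (species, disease) pair to a single class index."""
    classes = sorted(set(pairs))
    to_index = {pair: index for index, pair in enumerate(classes)}
    return classes, to_index

# Hypothetical per-image annotations in the style of joint dataset labels.
annotations = [("Apple", "Black Rot"),
               ("Tomato", "Late Blight"),
               ("Apple", "Healthy"),
               ("Apple", "Black Rot")]

classes, to_index = build_power_set(annotations)

# Training targets for a standard multi-class classifier.
targets = [to_index[pair] for pair in annotations]

# Decoding a predicted class index recovers both predictions at once.
species, disease = classes[targets[1]]
```

Note that the number of classes equals the number of species-disease pairs that actually occur in the data, not the product of the number of species and the number of diseases.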

| Paper | Year | Dataset | Categories | Size/Ratio (training/test) | Methods & Accuracy |

| Plant Identification | |||||

| (Othman et al., 2022) | 2022 | Coriander & Parsley (Private) | 2 | 100 (70%/ 30%) | CNN (90%) |

| (Shelke and Mehendale, 2022) | 2022 | Private Dataset | 79 | 2591 (80%/20%) | DenseNet-161 (97.3%) |

| Middle European Woody Plants (Novotný and Suk, 2013) | 119 | (80%/20%) | LR(98.72%) | ||

| Flavia Dataset (Wu et al., 2007) | 32 | 1703 (80%/20%) | LR(99.58%) | ||

| MalayaKew (MK) Leaf Dataset (Lee et al., 2015) | 44 | 2816 (80%/20%) | LR(89.35)% | ||

| (Kanda et al., 2021) | 2021 | MK Leaf (Lee et al., 2015) + Synthetic Dataset | 44 | (80%/20%) | LR(93.33)% |

| Folio Dataset (Munisami et al., 2015) | 32 | (80%/20%) | LR(98.75%) | ||

| Amazon Forest (Vizcarra et al., 2021) | 9 | 59,441 (80%/20%) | LR(98.87%) | ||

| LeafSnap Dataset (Kumar et al., [n. d.]) | 185 | 23,147 (80%/20%) | LR(89.27%) | ||

| Swedish Dataset (Söderkvist, 2001) | 15 | 1125 (80%/20%) | LR(100%) | ||

| Swedish Dataset | 15 | 1125 (70%/15%/15%111Acc & F1) | ANN (98.99%), KNN (96.68%) & RF (97.12%) | ||

| (Ariyapadath, 2021) | 2021 | Flavia Dataset | 32 | 1907 (70%/15%/15%111Training/Validation/Test Amount) | ANN (96.29%), KNN (93.79%) & RF (95.24%) |

| D-Leaf Dataset(TAN and Chang, 2018) | 43 | 1290 (70%/15%/15%111Training/Validation/Test Amount) | ANN (95.31%), KNN (86.3%) & RF (91.5%) | ||

| Leaf1 Dataset | 8 | 75 (80%/20%) | GoogLeNet (98%) & VGG-16 (97%) | ||

| (Jasitha et al., 2019) | 2019 | Flavia Dataset | 32 | 1879 (80%/20%) | GoogLeNet (94%) & VGG-16 (96.57%) |

| D-Leaf Dataset(TAN and Chang, 2018) | 43 | 1290 (80%/20%) | GoogLeNet (88.74%) & VGG-16 (85.37%) | ||

| Disease Classification | |||||

| (Agarwal et al., 2019) | 2019 | Plant Village (Grape) | 4 | 3800/200 | CNN (99%), Alexnet (86.5%), VGG16 (97.5%) |

| (Vijaykanth Reddy and Sashi Rekha, 2021) | 2021 | Plant Village (Apple) | 4 | 10888/2801 | CNN (97.62%), AlexNet (91.19%), GoogLeNet (95.69%), ResNet-20 (92.76%) & VGG-16 (96.32%) |

| (Ashok et al., 2020) | 2020 | Tomato Leaves (Self) | 4 | N/A | CNN (98.12%), AlexNet (95.75%) & ANN (92.94%) |

| (Agarwal et al., 2020) | 2020 | Plant Village (Tomato) | 10 | 10,000/7,000/500111Training/Validation/Test Amount | CNN (91.2%), Mobilenet (63.75%), VGG-16 (77.2%)& InceptionV3 (63.4%) |

| (Sardogan et al., 2018) | 2018 | Plant Village (Tomato) | 5 | 500(80%/20%) | CNN (86%) |

| (Chowdhury et al., 2021) | 2021 | Plant Village (Tomato) | 2, 6, 10 | 5-fold | EfficientNet B0, B4, B7 (97% - 99%) |

| (ANANDHAKRISHNAN and JAISAKTHI, 2020) | 2020 | Plant Village (Tomato) | 10 | 80%/20% | Xception V4 (99.45%), AlexNet (90.1%), Lenet (88.3%), Resnet (98.40%) & VGG-16 (90.1%) |

| (Yousef Methkal Abd et al., 2023) | 2023 | Citrus Leaves (Rauf et al., 2019) | 5 | 609 | C-GAN(99.6% & 97%),CNN(99.97%&99.98%), SGD(85%&86%) & ACO-CNN (99.98%&99.99%)222Accuracy & F1-score |

| (Pandey et al., 2023) | 2023 | Cotton Leaf Disease Dataset | 4 | 1661 | SVM(98.7%&98.7%), CNN(98.8%&98.8%) & Hybrid(98.9%&98.9%)222Accuracy & F1-score |

| (Thangaraj et al., 2023) | 2023 | Plant Village (Tomato) | 10 | 80%/20% | InceptionV3(94.58%), MobileNetV1(82.7%), MobileNetV2(92.1%) & MX-MLF2(99.61%) |

| Single model for Plant Identification & Disease Classification | |||||

| (Sharma et al., 2020) | 2020 | Plant Village (Part) | 19 | N/A | CNN (98%) |

| (Saraswathi et al., 2021) | 2021 | Plant Village (Part) | 15 | 80%/20% | CNN (96%) |

| (G. and J., 2019) | 2019 | Plant Village (Whole) | 38 | 55,636/1950 | CNN (97.87%), AlexNet (87.34%), ResNet (92.56%), VGG16 (92.87%) & InceptionV3 (94.32%) |

| (Kumar et al., 2020a) | 2020 | Plant Village (Modified) | 38 | 15,200 (80%/20%) | ResNet34 (99.40% & 96.51%)222Accuracy & F1-score |

| (Singh et al., 2020a) | 2020 | PlantDoc (Cropped) | 28 | 80%/20% | VGG-16 (60.41%), InceptionV3 (62.06%) & InceptionResNet V2 (70.53%) |

| (Guan, 2021) | 2021 | Plant Village (Modified) | 61 | 31718/4540 | Stacking Model (87%), ResNet (82.78%), InceptionNet (82.22%), DenseNet (83.44%)& InceptionResNet (84.07%) |

| (Kawatra et al., 2020) | 2020 | Plant Village (Whole) | 38 | N/A | Hybrid (AlexNet + Linear SVM) Model (99.98%), Basic AlexNet (96.34%) & AlexNet with GAP Layer (97.29%) |

| (Sunil et al., 2020) | 2020 | Plant Village (Peach, Pepper & Strawberry) | 6 | 70%/30% | Multi Convolutional Layered-based CNN (87.47% - 99.25%) with different epochs (50, 75, 100 & 125) |

| (Lee et al., 2021) | 2021 | Plant Village + Digipathos (Garcia Arnal Barbedo et al., 2018) + Web images | 1146 (311 species, 289 diseases) | 10324 (80%/20%) | InceptionV3 & Conditional Multi-task Learning (CMTL), Total Top-1 Accuracy: 69.43%, Total Avg Accuracy: 64.79%, Disease Top-1: 82.96%, Species Top-1: 78.64% |

The above studies all treat the problem as a multi-class classification task, either directly or indirectly through the use of power-set labelling. Recently, researchers have also begun to adopt multi-task learning methods (different from power-set labelling) to directly address the classification of both species and diseases. For example, in (Lee et al., 2021) the authors proposed a conditional multi-task learning (CMTL) approach with InceptionV3 as the backbone CNN to predict both leaf species and diseases. In addition, the paper showed that the predicted plant species can be used to help improve the prediction of disease. The dataset for the evaluation of CMTL consists of Plant Village, Digipathos (Garcia Arnal Barbedo et al., 2018) and Web images, which add up to 12,290 leaf images with 1146 joint species-disease labels (311 species & 289 diseases). The experiment showed that the total Top-1 accuracy for joint prediction of species-disease labels is 69.43%, the total average accuracy is 64.79%, the disease Top-1 accuracy is 82.96%, and the species Top-1 accuracy is 78.64%. Although power-set multi-label CNNs and CMTL are promising, many other multi-prediction approaches that could benefit plant identification and disease classification have not been explored in depth.

In this paper, we survey, implement, and evaluate a wide range of multi-prediction approaches with different CNN backbones that can be employed for predicting both plant species and diseases. We also propose a new deep learning architecture with a learning strategy to improve prediction performance.

3. Methodology

3.1. Datasets

| ID | Dataset | Year | Species | Disease | Link |

|---|---|---|---|---|---|

| 1 | Plant Village | 2016 | 14 | 22 | https://data.mendeley.com/datasets/tywbtsjrjv/1 |

| 2 | Plant Leaves | 2019 | 12 | 22 | https://data.mendeley.com/datasets/hb74ynkjcn/1 |

| 3 | Plantae_k | 2019 | 8 | 9 | https://data.mendeley.com/datasets/t6j2h22jpx/1 |

| 4 | PlantDoc | 2020 | 13 | 17 | https://github.com/pratikkayal/PlantDoc-Dataset |

| 5 | Plant Pathology 2021 - FGVC8 | 2021 | 1 | 6 | https://www.kaggle.com/c/plant-pathology-2021-fgvc8/overview |

| 6 | Maize Leaf (NLB) | 2018 | 1 | 2 | https://osf.io/p67rz/ |

| 7 | Citrus Leaves | 2019 | 1 | 5 | https://data.mendeley.com/datasets/3f83gxmv57/2 |

| 8 | Rice Diseases Image Dataset | 2019 | 1 | 4 | https://www.kaggle.com/minhhuy2810/rice-diseases-image-dataset |

| 9 | JMuBEN (Arabica Coffee Leaf Images) | 2021 | 1 | 3 | https://data.mendeley.com/datasets/t2r6rszp5c/1 |

| 10 | JMuBEN2 | 2021 | 1 | 2 | https://data.mendeley.com/datasets/tgv3zb82nd/1 |

| 11 | Cassava Diseases | 2019 | 1 | 5 | https://www.kaggle.com/c/cassava-disease/data |

| 12 | UCI Rice Leaf Diseases | 2017 | 1 | 3 | https://archive.ics.uci.edu/ml/datasets/Rice+Leaf+Diseases |

Data plays a central role in modern AI technologies, including machine learning, deep learning, and computer vision. In this study, data is also necessary for comparing different methods. This section surveys the available datasets and selects suitable ones for benchmarking in the experiments. Unlike previous studies, which in most cases use only one dataset, we select three data sources and build four evaluation sets from them to provide a comprehensive comparison of different approaches. Image datasets are clearly important for computer vision in plant pathology. In (Chouhan et al., 2020), the authors showed that the foremost problem facing most researchers in this field is the lack of available datasets, which greatly restricts research on machine learning for plant identification and disease classification from leaf images. Fortunately, in recent years researchers have devoted considerable effort to collecting plant disease data, helping to fill the data availability gap in this area. Table 4 lists recent public datasets of plant leaf diseases for computer vision research. In the table, “Year” denotes the year of publication; “Species” denotes the number of plant species in the data; and “Disease” denotes the number of diseases available in a dataset, which varies because different plants may have different sets of diseases. In the experiment, we select three datasets and resize their images to the input shape appropriate for each model, for example, the input size for CNN and AlexNet will be .

In Table 4, the datasets can be divided into two groups: multi-prediction datasets (Plant Village, Plant Leaves, PlantDoc & Plant Pathology 2021) and single-prediction datasets (Maize Leaf, Rice Diseases Image, JMuBEN, Cassava Diseases & UCI Rice Leaf). A multi-prediction dataset has different plant species (as shown in the “Species” column in Table 4) and different types of diseases (as shown in the “Disease” column in Table 4). These datasets are useful for both species and disease classification, and they are employed in this study to explore the usefulness of multi-prediction approaches and to verify our hypothesis. A single-prediction dataset normally has only a single plant species and a set of disease types for that plant. The benchmark datasets selected for our study are detailed as follows:

3.1.1. Plant Village Dataset

The Plant Village dataset is currently one of the most widely used public datasets for research on leaf disease identification and classification. It has different versions, including an original version and a data augmentation version. The original dataset was published in 2016 (Hughes and Salathe, 2016). It consists of images of diseased and healthy leaves from 14 plant species (Apple, Blueberry, Cherry, Corn, Grape, Orange, Peach, Bell Pepper, Potato, Raspberry, Soybean, Squash, Strawberry & Tomato). Each species has 1 to 10 classes of related diseases, resulting in 22 unique disease categories in total, with some species sharing several diseases. In this dataset, there are 38 unique combinations of species and diseases (e.g. Apple Black Rot), plus one additional category for images without leaves (background images). The data augmentation version was released in 2019 (Hughes and Salathé, 2015). In this version, the creators applied six augmentation methods, namely image flipping, Gamma correction, noise injection, principal component analysis (PCA) colour augmentation, rotation, and scaling, to improve the quality and quantity of the data. As a result, the number of samples in this dataset is , which increased from in the original version. In our study, we carry out the experiment on the original version. We split the data into -- for training, validation, and test sets respectively. Figures 1(a) and 2(a) show the class distribution of Plant Village: because Tomato has 9 disease groups and 1 healthy group, it has the largest number of images. Figure 3(a) shows the relationships between species and diseases in Plant Village.

3.1.2. Plant Leaves Dataset

The Plant Leaves dataset consists of images of healthy and unhealthy leaves divided into 22 categories by species and health condition. The images are in high-resolution JPG format. The dataset has 12 plant species: Alstonia Scholaris, Arjun, Bael, Basil, Chinar, Guava, Jamun, Jatropha, Lemon, Mango, Pomegranate, and Pongamia Pinnata. We partition the data samples into three different sets with for training, for validation and for testing. Figures 1(b) and 2(b) show the class distribution of Plant Leaves: because each species has 1 disease group and 1 healthy group, the healthy category has the largest number of images. The relationships between species and diseases in Plant Leaves are shown in Figure 3(b).

3.1.3. PlantDoc Dataset

Compared to the Plant Village dataset, the PlantDoc dataset aims to establish a challenging benchmark with real-field images. The images in Plant Village were taken in a laboratory setup rather than in real cultivation fields, which affects the efficacy of trained models in practice (Singh et al., 2020a). Plant Village may therefore be of limited use for developing applications that identify real-world leaf diseases. PlantDoc is a large-scale non-lab dataset for leaf disease detection, and its images have cluttered and diverse backgrounds. It has similar categories of plant species and disease types to Plant Village, with leaf images, 13 plant species, and 17 unique diseases. There are 38 classes for a combination of species and diseases (e.g., Apple Scab Leaf). Originally, the data was partitioned into a training set of samples and a small test set of samples. We refer to this set as PlantDoc-1.0. To facilitate deeper comparison (with other works and for future study), we re-partition the data to create another dataset from PlantDoc: we mix and shuffle the whole dataset and split it into -- for training, validation, and testing respectively. This data is referred to as PlantDoc-0.2. We use both versions of PlantDoc in this study. Figures 1(c) and 2(c) show PlantDoc’s class distribution: because Tomato has 7 disease groups and 1 healthy group, it has the largest number of leaf images. Figure 3(c) shows the relationships between species and diseases in PlantDoc.
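A minimal sketch of the shuffle-and-split procedure described above. The proportions are an assumption taken from the setup in Section 3.4 (an 80:20 train/test split, with 10% of the training portion held out for validation, i.e. roughly 72%/8%/20% overall); the function name `split_dataset`, the fixed seed, and the file names are hypothetical. The sketch does not stratify by class, which may or may not match the authors' procedure.

```python
import random

def split_dataset(samples, test_ratio=0.2, val_ratio_of_train=0.1, seed=0):
    """Shuffle samples and split them into train/validation/test lists.

    Assumption: 20% test first, then 10% of the remaining data for validation.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)  # seeded shuffle so the split is repeatable
    n_test = round(len(items) * test_ratio)
    test, train_val = items[:n_test], items[n_test:]
    n_val = round(len(train_val) * val_ratio_of_train)
    val, train = train_val[:n_val], train_val[n_val:]
    return train, val, test

# Hypothetical file names standing in for leaf images.
images = [f"leaf_{i:04d}.jpg" for i in range(1000)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))
```

A stratified variant (splitting each class separately) would keep the class distribution similar across the three sets, which can matter for the imbalanced classes shown in Figures 1 and 2.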

3.2. Models

In this section, we survey different deep learning approaches that have been or can be applied to plant identification and disease classification. The deep learning models currently employed for plant identification or disease classification are CNN models, which we describe in Section 3.2.1. Most of the other approaches presented below have not been widely applied to plant pathology, although they are highly relevant and already exist in the machine learning literature.

3.2.1. Backbone CNNs

In recent years, many architectures based on Convolutional Neural Networks (CNN) have been designed to deal with spatial data such as images and videos, especially in computer vision tasks. With their flexible and computationally efficient architectures, CNNs can be adapted to different scenarios and tasks. In what follows, we re-introduce several popular CNN models that have demonstrated excellent performance on image classification tasks. They have been tested on benchmark datasets such as CIFAR-100 and ImageNet, and are also the state-of-the-art approaches for plant identification and disease classification in recent research, as shown in Table 3.

Convolutional Neural Networks (CNN). CNNs are a class of neural networks that employ convolutional operators to propagate information from layer to layer. The convolutional operation is useful for image analysis as it helps neural networks learn local features, which makes CNNs popular for image data (Lecun et al., 1998). It is also the foundation for a series of later deep neural network structures. A CNN has an input layer and an output layer, and between these two layers there are several hidden layers in which connections between a lower layer and an upper layer are formed by convolutional operators. The number of hidden layers is chosen depending on the complexity of the task. Recent techniques can improve the performance of CNNs and allow them to scale to larger datasets. These include Rectified Linear Units (ReLU) and other activation functions, pooling (average and max pooling), normalisation (Batch Norm and Layer Norm), etc. Normally, after a series of convolutional layers, a CNN architecture has several fully-connected layers before the outputs. A fully-connected layer is an ordinary layer with dense connections (instead of convolutional connections). These layers process and combine the features from the preceding convolutional layers to make accurate predictions. One of the advantages of a CNN is that it can process the raw pixel values of images and learn discriminative features in an end-to-end fashion.

Based on our study of neural networks, we design a custom CNN which can be applied to plant identification or leaf disease classification. The structure of our CNN is similar to (Chen et al., 2020); however, unlike that work, we apply our structure to image data. The input size of our CNN is set as and it has 4 convolutional layers in total. There is a batch normalisation layer after each convolutional layer. After 2 convolutional layers and 2 batch normalisation layers, we place a max-pooling layer (this combination is repeated twice). The activation functions for all units are ReLU. On top of these layers, depending on the task, we add output layers to perform prediction. The units in each of these layers are constrained together as a softmax group. Besides the custom CNN, in what follows we present the most common off-the-shelf CNNs which have been used for image classification in general and plant pathology in particular.
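To make the building blocks named above concrete, the following is a small pure-Python sketch of one convolution, ReLU, and max-pooling step on a single-channel toy image. It is illustrative only: real backbones use optimised multi-channel tensor operations, and the toy image and kernel values are made up for this example.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation of a single-channel image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    """Element-wise rectified linear unit."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool2x2(fmap):
    """Non-overlapping 2x2 max pooling (a trailing odd row/column is dropped)."""
    return [[max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
             for j in range(0, len(fmap[0]) - 1, 2)]
            for i in range(0, len(fmap) - 1, 2)]

# Toy 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Kernel that responds to a dark-to-bright transition from left to right.
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d_valid(image, kernel)   # 4x4 feature map
pooled = max_pool2x2(relu(features))     # 2x2 map after ReLU and pooling
print(pooled)
```

The strong response appears only where the local window covers the edge, which is the sense in which convolution learns local features.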

AlexNet. This CNN architecture starts with five convolutional layers, with two max-pooling layers between the first three convolutional layers. In the later stage, AlexNet has three fully connected layers. An interesting feature of AlexNet is that its activation functions are non-saturating ReLUs. AlexNet was one of the early CNN models that made a breakthrough in image classification, notably being the first CNN to win the ImageNet challenge, in 2012.

VGG16. This CNN architecture has 16 layers with multiple kernel-size filters for the convolution. This is different from the first and second large kernel-sized filters in AlexNet. VGG was designed to increase the depth of CNNs where it has several max-pooling layers. In VGG16, there are three large fully connected layers, one with units and another with units in the later stage of its architecture. In image classification, VGG16 achieved 92.7% top-5 test accuracy in the 2014 ImageNet challenge.

ResNet101. This architecture makes it possible to design and train models with a large number of layers to gain better performance. The key component of ResNet is its “skip connections”, which skip one or several layers before rejoining the following layer. This idea helps mitigate the vanishing gradient issue and the degradation issue. ResNet can help reduce the training error when more layers are added to the CNN, hence providing a good structure for scalable learning (He et al., 2016). ResNet was the winner of the ILSVRC 2015 challenge (a subset of ImageNet).

InceptionV3. Inception is a class of CNNs that utilises Inception modules for deeper structures with more efficient computation. The motivation of Inception is to prevent the number of parameters from becoming too large while building deeper neural networks (Szegedy et al., 2015). Inception consists of asymmetric and symmetric construction blocks. Different layers are employed, including convolution layers, average and max-pooling layers, concatenate layers, dropout layers and fully connected layers. Each Inception module in this architecture consists of four operations in parallel, and the modules are linked by concatenate layers. Batch normalisation is applied to the outputs of convolutional layers and is widely used throughout the model. In this study, we use the most popular version of Inception, i.e., InceptionV3.

MobileNetV2. MobileNet is one of the most popular light-weight CNN architectures. It aims to significantly reduce the number of parameters and to increase the computational speed while maintaining accuracy. It is designed around the inverted residual structure. However, unlike other residual models, the input and output of its residual block are thin bottleneck layers. In addition, a light-weight depthwise convolution operator is used in its intermediate expansion layer to reduce the number of parameters (Sandler et al., 2018). The depthwise convolution reduces not only the complexity of the model, i.e. its size, but also its computational cost. MobileNet is therefore popular for low-resource devices, especially mobile devices. In this paper, we use MobileNetV2.

EfficientNet. Similar to MobileNet, this is a light-weight CNN architecture. EfficientNet is based on a scaling approach that uses a compound coefficient with a fixed set of scaling coefficients to scale the depth, width, and resolution dimensions uniformly. In this study, we employ EfficientNet with two core parts: inverted bottleneck residual blocks (adopted from MobileNetV2) and Squeeze-and-Excitation blocks (SENet). EfficientNet achieved 77.3% top-1 accuracy on the ImageNet dataset.

Vision Transformer (ViT). The Vision Transformer (ViT) model (Dosovitskiy et al., 2021) was released in 2021 and is based on the Transformer architecture (Vaswani et al., 2017) developed for natural language processing. ViTs can handle a wide range of image sizes without requiring architectural changes. ViTs have a mechanism to capture global context information: they can attend to all image patches simultaneously, allowing them to understand the relationships between distant parts of an image. ViTs leverage the self-attention mechanism, which can capture complex relationships between image patches. They have shown advantages over CNNs in several tasks; for example, ViTs perform better than ResNet in image classification on ImageNet and CIFAR-10 (Dosovitskiy et al., 2021).
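The parameter saving from depthwise convolution can be illustrated with simple arithmetic. The sketch below compares a standard convolution with a depthwise separable one (a depthwise k×k convolution followed by a 1×1 pointwise convolution), ignoring biases and batch normalisation. The layer sizes are illustrative assumptions, and the full MobileNetV2 block additionally contains a 1×1 expansion convolution that is not modelled here.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights of a depthwise k x k convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution mixing the channels
    return depthwise + pointwise

k, c_in, c_out = 3, 32, 64    # illustrative layer sizes, not taken from the paper
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 2))
```

For a 3×3 kernel the reduction approaches a factor of nine as the number of output channels grows, which is why this operator suits low-resource devices.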

We have presented popular CNN models used in this study. Those models can work alone to predict plant species or disease types, or they can be the backbone in a multi-prediction model to predict these two labels simultaneously, as shown in what follows.

3.2.2. Multi-model CNNs

This is the most straightforward application of CNNs for multi-prediction. A deep learning model can consist of two independent CNNs, one for each task. In this study we use two independent CNNs, one for predicting plant species and the other for predicting disease types, as shown in Figure 4. The two CNNs have the same architecture but each has a different set of parameters.

3.2.3. Multi-label (power-set) CNNs

The second approach for multi-prediction is multi-label, where the two tasks (plant prediction and disease prediction) are encoded in a single output layer. The most feasible way to do this is to join the labels, also known as power-set labelling. This transforms a multi-label task into a multi-class task to which we can directly apply the backbone CNNs above. In particular, the plant species label and the disease type label are combined into a joint label representing both plant and disease. For example, a power-set label “apple_scab” can be created from the plant label “apple” and the disease label “scab”. This is the most common method for plant identification and disease classification in the literature. However, our paper is the first to apply and compare different backbone CNNs. Despite being simple, this multi-label approach has a scalability issue when facing a large number of classes for each label. In the worst case, the power-set label will consist of $P \times D$ classes, where $P$ is the number of plants and $D$ is the number of diseases, which may lead to growth in computational complexity.
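Power-set labelling can be sketched in a few lines. The helper name `build_powerset_index` and the toy annotations below are hypothetical; the sketch only shows how (plant, disease) pairs map to joint class ids and back, and how the worst-case class count compares with the number of pairs actually observed.

```python
def build_powerset_index(pairs):
    """Map each observed (plant, disease) pair to one joint class id, and back."""
    unique = sorted(set(pairs))
    to_id = {pair: i for i, pair in enumerate(unique)}
    to_pair = {i: pair for pair, i in to_id.items()}
    return to_id, to_pair

# Toy annotations; the label names are illustrative only.
annotations = [
    ("apple", "scab"), ("apple", "healthy"),
    ("tomato", "late_blight"), ("tomato", "healthy"),
    ("apple", "scab"),
]
to_id, to_pair = build_powerset_index(annotations)

joint_name = "_".join(("apple", "scab"))             # the joint label "apple_scab"
class_id = to_id[("apple", "scab")]                   # multi-class target for training
plant, disease = to_pair[class_id]                    # decode a prediction back

n_plants = len({p for p, _ in annotations})
n_diseases = len({d for _, d in annotations})
worst_case_classes = n_plants * n_diseases            # every plant with every disease
print(len(to_id), worst_case_classes)
```

Keeping the pair-to-id mapping explicit avoids having to split joint names on an underscore, which would be ambiguous for labels such as "late_blight".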

3.2.4. Multi-output CNNs

This is a class of CNN models in which we have an input layer and multiple output layers, one for each task, as shown in Figure 4 (third model). Theoretically, compared to multi-label CNNs, multi-output CNNs have fewer parameters because they have fewer connections to the output layer(s). The total number of output units in multi-output CNNs for plant & disease prediction is $P + D$, where $P$ is the number of plants and $D$ is the number of diseases. Learning in these CNNs is done by optimising the model for all tasks simultaneously. This is also different from the multi-task learning we discuss below.
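As a rough worked example of the output-layer sizes, the sketch below counts the parameters of the final dense softmax layer(s) for a power-set head and for a multi-output head. The species and disease counts are those reported for Plant Village in Section 3.1.1; the feature size of 2048 is an assumption chosen only for illustration, and the rest of the network is ignored.

```python
def head_params(n_features, n_outputs):
    """Weights plus biases of one dense softmax layer."""
    return n_features * n_outputs + n_outputs

n_features = 2048        # assumed size of the shared feature vector (illustrative)
P, D = 14, 22            # Plant Village: plant species and disease categories
observed_joint = 38      # species-disease combinations present in Plant Village

powerset_worst = head_params(n_features, P * D)            # every plant x every disease
powerset_observed = head_params(n_features, observed_joint)
multi_output = head_params(n_features, P) + head_params(n_features, D)

print(powerset_worst, powerset_observed, multi_output)
```

Under these assumptions the multi-output head is much smaller than the worst-case power-set head, while the gap to a power-set head restricted to the observed combinations is small, so the saving depends on how many combinations a dataset actually contains.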

3.2.5. Multi-task Deep Learning

Finally, we can adapt multi-task deep learning architectures for leaf disease and plant type classification. Originally, a multi-task learning problem is to learn a model from different (related) domains, where each task is associated with its own dataset. Multi-task learning is well suited to deep learning models, as the features learned for one task may benefit the learning of another (Zhang and Yang, 2021). We can utilise these structures for plant identification (task 1) and disease classification (task 2) by sharing the input data among the tasks, i.e. both tasks take their inputs from the same plant leaf dataset in our problem statement. Although they have the same outputs as multi-output CNNs, multi-task models learn differently: for each data point (an image), they optimise for only one task. In this study, instead of directly using the backbone CNNs (which would require us to implement the learning strategies), we employ state-of-the-art multi-task models, as described below.

Cross-stitch Network. Cross-stitch is a multi-task approach to learning shared and task-specific representations (Misra et al., 2016). To this end, cross-stitch units are designed with a soft-parameter sharing mechanism. These units integrate the features from the outputs of multiple networks, each of which can represent different patterns of the tasks. In other words, they provide soft feature fusion among multiple single-task networks through a linear combination of every layer’s activations. As a result, cross-stitch networks are able to fuse discriminative features across multiple tasks to improve performance, even with a small number of training examples. In (Vandenhende et al., 2021a), the authors found that each single-task network should be pre-trained before the networks are stitched together for better performance. In our study, the cross-stitch units are deployed in the middle and at the end of two CNNs, one for plant prediction and the other for disease prediction.

Multi-Task Attention Network (MTAN). Unlike cross-stitch networks, which learn shared and task-specific features in parallel, MTAN first learns shared (global) features from the images and then allows task-specific features to be learned from those global features via soft-attention modules (Liu et al., 2019). MTAN is built upon a single shared network with a global feature pool and associates each task with a task-specific soft-attention module. Compared to cross-stitch networks, MTAN shares a general feature pool among the single-task networks and is therefore not affected by the scalability issue. However, its limitation is the lack of diversity in task-specific features, as they are all produced from the shared pool (Vandenhende et al., 2021a).

Task Switching Network (TSN). TSN is based on a task-conditional single-encoder-single-decoder architecture which works with one task at a time while switching between the tasks (Ronneberger et al., 2015). TSN’s decoder is based on a U-Net architecture and its encoder is a ResNet-based backbone (ResNet-18). In TSN, the tasks are switched, one after another, by a small task embedding network. Meanwhile, the single encoder-decoder shares all of its parameters among the tasks.

Model-Contrastive Learning (MOON). MOON is a recent model from the federated learning paradigm that aims to leverage the similarity of different tasks’ representations to enhance the local training of each task (Li et al., 2021a). In (Li et al., 2021a), it is shown that the global features are more useful than the local features learned from each task’s dataset. Therefore, MOON proposes a contrastive learning strategy to fine-tune the local representations at the model level by maximising the agreement between the representations of the local model and the global model. The advantages of MOON are its simplicity, effectiveness, and ability to deal with the non-iid data issue. Compared to state-of-the-art approaches, MOON achieves a significant improvement on various image classification tasks.
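The linear combination performed by a cross-stitch unit can be sketched as follows. This is a simplified illustration on plain activation vectors, following the 2×2 mixing form described by Misra et al. (2016); the mixing values are made up, and in a real network they are learned parameters applied to feature maps.

```python
def cross_stitch(x_a, x_b, alpha):
    """Apply one cross-stitch unit to the activations of two task networks.

    alpha is a 2x2 matrix [[a_AA, a_AB], [a_BA, a_BB]]; each output is a
    linear combination of the two inputs at the same position.
    """
    out_a = [alpha[0][0] * a + alpha[0][1] * b for a, b in zip(x_a, x_b)]
    out_b = [alpha[1][0] * a + alpha[1][1] * b for a, b in zip(x_a, x_b)]
    return out_a, out_b

plant_act = [1.0, 2.0]      # activations from the plant network (toy values)
disease_act = [3.0, 4.0]    # activations from the disease network (toy values)

# Mostly task-specific, with a small amount of sharing (illustrative values).
alpha = [[0.9, 0.1],
         [0.1, 0.9]]
mixed_plant, mixed_disease = cross_stitch(plant_act, disease_act, alpha)
print(mixed_plant, mixed_disease)
```

With the identity matrix as `alpha` the two networks stay fully independent, so the amount of sharing between the plant and disease networks is controlled entirely by the off-diagonal entries.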
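The agreement-maximising objective can be sketched as a contrastive loss over three representations of the same image: the one from the local model being trained, the one from the global model (pulled closer), and the one from the previous local model (pushed away). This follows the general form described for MOON in (Li et al., 2021a) as far as we understand it; the temperature value and the toy vectors are illustrative assumptions, and how the representations are obtained for the plant and disease tasks is not shown here.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def model_contrastive_loss(z, z_global, z_prev, temperature=0.5):
    """Low when z agrees with z_global and disagrees with z_prev."""
    pos = math.exp(cosine(z, z_global) / temperature)
    neg = math.exp(cosine(z, z_prev) / temperature)
    return -math.log(pos / (pos + neg))

z = [1.0, 0.0]                       # toy representation from the local model
loss_aligned = model_contrastive_loss(z, z_global=[1.0, 0.0], z_prev=[-1.0, 0.0])
loss_misaligned = model_contrastive_loss(z, z_global=[-1.0, 0.0], z_prev=[1.0, 0.0])
print(loss_aligned < loss_misaligned)
```

In MOON this term is added to the usual supervised loss with a weighting coefficient, so it acts as a regulariser on the learned representation rather than as a replacement for the classification loss.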

3.2.6. Our model: Generalised Stacking Multi-output CNNs (GSMo-CNNs)

For completeness, we propose a new model for plant identification and disease classification. The CNN architecture of our model is inspired by the relationship between plant species and disease types. It is commonly known that some diseases may only appear in particular plants and, therefore, information about diseases can be useful for the prediction of plants. The same reasoning applies in the other direction: plant information can be used to predict diseases. We realise this idea by stacking the output (softmax) layers for plant identification and disease classification one on top of another. We generalise the effect of the relationships between plant species and disease types by adding weights on the different loss functions at each level of the stack for each output. In what follows, we show the details of our model.

Architecture. The structure of our model is shown in Figure 5. The model is based on the multi-output approach, and all convolutional layers can be reused from the backbone CNNs presented earlier. The changes here are (1) the split of dense layers for different tasks; and (2) the stacking of prediction layers. The motivation behind the split of layers is that we can use the convolutional layers to learn global features while the dense layers learn task-specific features. For the stacking of prediction layers, GSMo-CNNs temporarily infer the probability of plant species and the probability of diseases in the first prediction level. After that, we apply cross connections, i.e. we concatenate the probability of predicted plants and the CNN features to make the final prediction of diseases, and similarly, we concatenate the probability of predicted diseases with the CNN features to make the final prediction of plants.

In particular, the proposed model has two sets of fully connected layers (called “branches” here) after the flatten layer, and each branch connects to a concatenate layer at a later stage. These two branches first produce a pair of preliminary plant and disease predictions, referred to here as the temporary plant prediction and the temporary disease prediction. We do not make an actual prediction at this level; instead, we extract the prediction probabilities from the two softmax layers for the next step. At each concatenate layer, the image features from the flatten layer of the CNN are combined with the probabilities from a softmax layer in the temporary prediction level (plant or disease, depending on the branch used). The two softmax layers thus connect to two different concatenate layers, where they join the shared CNN’s flatten layer. On top of each concatenate layer, we have another softmax layer for cross prediction. In other words, our GSMo-CNN uses the temporary plant probabilities to make the final prediction of leaf diseases and the temporary disease probabilities to make the final prediction of plant species from leaf images. Note that any of the four output layers (the two temporary and the two final layers) can be used for prediction, but we will show in the experiments that, by stacking the softmax layers, the final output layers give better performance. Using probabilities in the temporary prediction layer (with the softmax function), instead of the predicted values (with the argmax function), helps smooth the propagation of gradients during learning. Our model is inspired by the classifier chain (Read et al., 2009), but the difference is that the “chain” in GSMo-CNNs is implemented in a stacking fashion with parallel inference instead of sequential inference.

Training. For training, as we have four outputs, we aim to optimise the prediction at every output layer. This is because a good estimate of plant species in the temporary prediction layer helps improve the prediction of diseases in the final prediction (top) layer. Similarly, a good estimate of diseases in the temporary prediction layer helps the prediction of plants in the final layer. As a result, we need to minimise four loss functions. Normally, we can train our model to minimise the losses simultaneously to take advantage of the underlying relationships between plant species and leaf diseases. This also allows us to impose the logical negation constraint that some diseases will not appear on specific plants and vice versa. Furthermore, we are interested in how much these relationships and constraints affect the learning. However, such relationships and constraints become an issue when the temporary layers do not learn well and pass wrong predictions to the top layers. This will inevitably happen at the beginning of training where, having just been initialised, the model has very low performance and makes many mistakes. Therefore, in order to control this impact, we associate each loss with a balance weight. In particular, we train our model by minimising the following total loss function:
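The forward pass of the stacked prediction layers can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the authors' implementation: the backbone is replaced by a given feature vector, the branch-specific fully connected layers are omitted, each output is a bias-free dense layer followed by softmax, and all sizes and weights are made up.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def dense(x, weights):
    """Bias-free linear layer; weights holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def random_weights(n_out, n_in, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]

def gsmo_head(features, w):
    """Two-level stacked prediction with cross connections."""
    # Level 1: temporary plant and disease probabilities from the shared features.
    p_tmp = softmax(dense(features, w["plant_l1"]))
    d_tmp = softmax(dense(features, w["disease_l1"]))
    # Level 2: concatenate features with the *other* task's probabilities.
    d_final = softmax(dense(features + p_tmp, w["disease_l2"]))
    p_final = softmax(dense(features + d_tmp, w["plant_l2"]))
    return p_tmp, d_tmp, p_final, d_final

rng = random.Random(0)
n_feat, n_plants, n_diseases = 8, 3, 4      # toy sizes, illustrative only
weights = {
    "plant_l1": random_weights(n_plants, n_feat, rng),
    "disease_l1": random_weights(n_diseases, n_feat, rng),
    "disease_l2": random_weights(n_diseases, n_feat + n_plants, rng),
    "plant_l2": random_weights(n_plants, n_feat + n_diseases, rng),
}
features = [rng.uniform(0.0, 1.0) for _ in range(n_feat)]  # stand-in for flattened CNN features
outputs = gsmo_head(features, weights)
print([len(o) for o in outputs])
```

Because the second level consumes probabilities rather than hard decisions, both final outputs can be computed in parallel from one pass over the backbone, which is the difference from a sequential classifier chain noted above.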

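$$\mathcal{L} = \lambda_{p}^{1}\,\ell\big(y_{p}^{1}, \hat{y}_{p}^{1}\big) + \lambda_{d}^{1}\,\ell\big(y_{d}^{1}, \hat{y}_{d}^{1}\big) + \lambda_{p}^{2}\,\ell\big(y_{p}^{2}, \hat{y}_{p}^{2}\big) + \lambda_{d}^{2}\,\ell\big(y_{d}^{2}, \hat{y}_{d}^{2}\big) \tag{1}$$

where $\ell$ is a cross-entropy loss function; $y_{p}^{1}$, $y_{p}^{2}$ are the ground truth for the plant species at level 1 and level 2 in the stack respectively; $\hat{y}_{p}^{1}$, $\hat{y}_{p}^{2}$ are the corresponding predicted plant species; $y_{d}^{1}$, $y_{d}^{2}$ are the ground truth for the disease types at level 1 and level 2 in the stack respectively; $\hat{y}_{d}^{1}$, $\hat{y}_{d}^{2}$ are the corresponding predicted diseases; and $\lambda_{p}^{1}$, $\lambda_{d}^{1}$, $\lambda_{p}^{2}$, $\lambda_{d}^{2}$ are the balance weights. In the experiment, $\lambda_{p}^{1}$, $\lambda_{d}^{1}$, $\lambda_{p}^{2}$, and $\lambda_{d}^{2}$ are treated as hyper-parameters. The reason for using balance weights for the losses in each level is that we want to fine-tune the learning in the upper level based on what we have in the lower level. We also apply different balance weights to the losses on different outputs to see how they influence each other. We would anticipate that these balance weights would be similar, as the prediction of plants would help the prediction of diseases, and vice versa.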

Inference. Our model performs two stages of inference for plant species and disease types, but only the outcomes of the final stage are used for the final prediction. However, by using the balance weights in the training, we generalise the multi-output architecture, allowing control of the interactions between different outputs and between outputs in different levels. As a result, the multi-model and multi-output approaches are just special cases of our model, as follows.

- If only the balance weight of the first-level plant loss is non-zero and the other three balance weights are 0, our model becomes a single CNN for plant identification. In this case the layer for plant identification at the first level is used as the output.

- If only the balance weight of the first-level disease loss is non-zero and the other three balance weights are 0, our model becomes a single CNN for disease classification. In this case the layer for disease classification at the first level is used as the output.

- If both first-level balance weights are non-zero and both second-level balance weights are 0, our model becomes a regular multi-output CNN, as shown earlier. The outputs are the two layers in the first prediction level.
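The weighted sum of four cross-entropy losses described under Training, and the special cases listed above, can be sketched as follows. The ordering of the weights (first-level plant, first-level disease, second-level plant, second-level disease), the function names, and the toy probabilities are assumptions made for this illustration.

```python
import math

def cross_entropy(probs, target_index, eps=1e-12):
    """Cross-entropy of one prediction against an integer class label."""
    return -math.log(max(probs[target_index], eps))

def gsmo_total_loss(outputs, plant_target, disease_target, weights):
    """Balance-weighted sum of the four output losses.

    outputs = (plant level 1, disease level 1, plant level 2, disease level 2)
    weights = balance weights in the same order (an assumed convention).
    """
    p_tmp, d_tmp, p_final, d_final = outputs
    w_p1, w_d1, w_p2, w_d2 = weights
    return (w_p1 * cross_entropy(p_tmp, plant_target)
            + w_d1 * cross_entropy(d_tmp, disease_target)
            + w_p2 * cross_entropy(p_final, plant_target)
            + w_d2 * cross_entropy(d_final, disease_target))

# Toy predicted probabilities for one image (3 plants, 3 diseases).
outputs = ([0.7, 0.2, 0.1], [0.1, 0.6, 0.3], [0.8, 0.1, 0.1], [0.05, 0.9, 0.05])
plant_target, disease_target = 0, 1

full = gsmo_total_loss(outputs, plant_target, disease_target, (0.5, 0.5, 1.0, 1.0))
plant_only = gsmo_total_loss(outputs, plant_target, disease_target, (1.0, 0.0, 0.0, 0.0))
multi_output = gsmo_total_loss(outputs, plant_target, disease_target, (1.0, 1.0, 0.0, 0.0))
print(round(full, 4), round(plant_only, 4), round(multi_output, 4))
```

Setting weights to zero removes the corresponding outputs from training, which is how the single-CNN and regular multi-output configurations arise from the same model.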

3.3. Evaluation Metrics

Based on the study of related work and classification tasks, we found that Accuracy and F1-score are the most common evaluation metrics for plant identification and disease classification. Both metrics can be calculated from a confusion matrix (Chicco and Jurman, 2020). The accuracy metric indicates how accurate a machine learning model is. A prediction is correct if its output is the same as the actual label (ground truth) and accuracy is the ratio of the number of correct predictions to the total number of samples. Generally, accuracy is a sensible metric for classification tasks, however, when facing the data imbalance issue accuracy will become more biased towards the class with the most number of samples. For example, if 95 out of 100 images are apple leaves, then we can make a simple guess to achieve 95% accuracy. Although not all datasets are imbalanced and sometimes the imbalance issue is not severe, for completeness, we also employ F1-score as another evaluation metric. Accuracy and F1-score are calculated as follows: , , , , .

Here, TP, TN, FP, FN, and FPR denote True Positives, True Negatives, False Positives, False Negatives, and False Positive Rate respectively. As we can see, F1-score is based on both Recall and Precision. It is their harmonic mean, so it is high only when both precision and recall are high, which makes it more reliable than accuracy on imbalanced data. In this study, F1-score is the key evaluation metric, e.g. it is used for model selection. Together, Accuracy and F1-score provide a comprehensive view of the performance of a model. We evaluate a model based on its performance (Accuracy & F1-score) on plant prediction, disease prediction, and (combined) plant-disease prediction. False Positive Rates are used to assist in evaluating the optimal models for all approaches. FPR is the fraction of actual negatives that are wrongly predicted as positive: FP counts the false positive predictions, and the sum of FP and TN is the total number of actual negatives.
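The standard confusion-matrix metrics named above can be checked on the imbalanced example from the text (95 apple-leaf images out of 100). The following is a minimal sketch, not the evaluation code used in this study. The function name `confusion_metrics`, the convention of reporting an undefined ratio as 0.0, and the one-vs-rest treatment of the two classes are assumptions made for illustration.

```python
def confusion_metrics(tp, tn, fp, fn):
    """Compute Accuracy, Precision, Recall, F1 and FPR from confusion-matrix counts."""

    def safe_div(num, den):
        # Assumed convention: an undefined ratio (zero denominator) is reported as 0.0.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "fpr": safe_div(fp, fp + tn),
    }


# 95 apple images and 5 images of another class; the classifier always says "apple".
# One-vs-rest view with "apple" as the positive class:
apple = confusion_metrics(tp=95, tn=0, fp=5, fn=0)
# One-vs-rest view with the minority class as the positive class:
minority = confusion_metrics(tp=0, tn=95, fp=0, fn=5)

# Unweighted mean of the per-class F1-scores (the averaging scheme is an assumption).
macro_f1 = (apple["f1"] + minority["f1"]) / 2

print(apple["accuracy"], minority["f1"], apple["fpr"], round(macro_f1, 3))
```

Accuracy is 0.95 in both views, while the F1-score of the minority class is 0 and the FPR of the "apple" class is 1.0, so the trivial classifier is exposed by the metrics that accuracy alone would hide.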

3.4. Setup

With the models ready, we are now in a position to prepare for the empirical study. A collection of common and recent CNN models for plant species and diseases with various learning approaches (multi-model, multi-label, multi-output, multi-task, and GSMo-CNNs) is tested. Backbone models (i.e., our custom CNN, AlexNet, VGG16, ResNet101, EfficientNet, InceptionV3 & MobileNetV2) are implemented in TensorFlow 2.6, and multi-task models are in PyTorch (obtained from the related authors' papers and GitHub repositories, which we cite). We perform the experiments on three public datasets, namely Plant Village, Plant Leaves and PlantDoc, as detailed in Section 3.1. The ratio of training to test sets is 80:20, and 10% of the training set is used as a validation set. All hyper-parameters in the experiments are set uniformly across the models and approaches. During training, we adopt early stopping to avoid over-fitting: if a model's validation loss has not decreased for 50 epochs, we stop the training. The input size of leaf images is set to pixels and all samples are in RGB format. Model selection is done by measuring F1-score on the validation set. For GSMo-CNNs, we search the balance weights using a coarse grid [0.2, 0.4, 0.6, 0.8] and then narrow down the search to find the best combination for all datasets. We find that , , , are generally good for all three datasets and we report the results of GSMo-CNNs under this configuration. We run each experiment 10 times and report the average accuracy & F1-score with standard deviation.
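The protocol described above (an 80:20 split with 10% of the training portion held out for validation, early stopping after 50 epochs without improvement, a coarse grid over the balance weights, and mean with standard deviation over 10 runs) can be sketched in framework-independent Python. This is an illustrative sketch only, not the code used in the experiments. All names (`split_sizes`, `EarlyStopping`, `weight_candidates`, `summarise`) are hypothetical, the grid is assumed to cover four balance weights, and the use of the sample standard deviation is an assumption.

```python
import itertools
import statistics


def split_sizes(n_samples, test_frac=0.2, val_frac_of_train=0.1):
    """Return (train, validation, test) sizes for an 80:20 split with 10% of train as validation."""
    n_test = round(n_samples * test_frac)
    n_train_full = n_samples - n_test
    n_val = round(n_train_full * val_frac_of_train)
    return n_train_full - n_val, n_val, n_test


class EarlyStopping:
    """Signal a stop once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=50):
        self.patience = patience
        self.best = float("inf")
        self.wait = 0

    def should_stop(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.wait = 0
        else:
            self.wait += 1
        return self.wait >= self.patience


# Coarse grid from the text; four balance weights are assumed, giving 4**4 candidates.
coarse_grid = [0.2, 0.4, 0.6, 0.8]
weight_candidates = list(itertools.product(coarse_grid, repeat=4))


def summarise(scores):
    """Mean and sample standard deviation over repeated runs (e.g. 10 runs)."""
    return statistics.mean(scores), statistics.stdev(scores)


print(split_sizes(1000))       # sizes of the train, validation and test sets
print(len(weight_candidates))  # number of coarse-grid weight combinations
```

With these fractions the effective split is 72% training, 8% validation, and 20% test. Each candidate weight tuple would be scored by validation F1-score before the search is narrowed around the best region.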

4. Experimental Results

In this section, we report the results of different models on the benchmark datasets and analyse their performance. We divide the results into "Plant Identification", "Disease Classification", and both (Plant Identification & Disease Classification). In multi-model, multi-label (power-set), multi-output, and our GSMo-CNNs, we evaluate different CNN backbones, including AlexNet, VGG16, ResNet101, EfficientNet, InceptionV3, MobileNetV2, and our custom CNN. We test two versions of our GSMo-CNNs, one without balance weights (i.e. all four loss weights set to 1:1:1:1), and the other with balance weights. To save time in model selection, we reuse the best backbone CNN found in the former version (GSMo-CNNs without balance weights) for the latter. For completeness, we apply transfer learning to our GSMo-CNNs to see if improvement can be achieved. We implement transfer learning by initialising our model with backbone CNNs pre-trained on the ImageNet dataset and fine-tuning it on a leaf image dataset.

4.1. Plant Identification

We first discuss the plant identification task, which evaluates the ability of the models and approaches to predict plant species from leaf images. This is a classification task in which we identify the plant type among a fixed set of categories (species). Unlike previous work that identifies plants from healthy leaves only, the challenge in our study is that the images include both healthy and diseased leaves. It is worth noting that identifying plant species from corrupted or damaged leaves is non-trivial. Nevertheless, we expect an effective classification model to distinguish them accurately. Table 5 shows all the plant identification results from the experiment. The results contain the accuracy and F1-scores of multi-model, multi-label (power-set), multi-output, multi-task models, and GSMo-CNNs on the benchmark datasets with different CNN backbones.

Table 5 and Figure 6 show that all approaches achieve good performance (more than accuracy & F1-score) on Plant Village and Plant Leaves. These results confirm the effectiveness of deep learning approaches for classification tasks with image inputs. For PlantDoc (both PlantDoc-0.2 and PlantDoc-1.0), the performance is lower. We anticipated this outcome because the images in PlantDoc are more complex, with noisy backgrounds, and there can be multiple leaves in one image. Note that, for the sake of fair evaluation, we do not apply any data processing or augmentation techniques, even though previous research shows that they can improve the performance greatly (Saraswathi et al., 2021; G. and J., 2019; Agarwal et al., 2019).

In terms of backbone CNNs, the multi-model approach shows no single backbone that performs best on all datasets. In particular, MobileNetV2 has the highest accuracy and F1-score on Plant Village, VGG16 is the best on PlantDoc-0.2, while InceptionV3 has the best results on both Plant Leaves and PlantDoc-1.0. Unlike the multi-model approach, in the multi-label and multi-output approaches InceptionV3 clearly shows its advantage, with higher performance than the other backbones. On PlantDoc-0.2 and PlantDoc-1.0, InceptionV3 achieves the best performance by a wide margin. In particular, its accuracy/F1-score on PlantDoc-0.2 are / and on PlantDoc-1.0 are /, respectively. In the case of GSMo-CNN, InceptionV3 is also better than the other backbone CNNs on Plant Village, Plant Leaves, PlantDoc-0.2, and PlantDoc-1.0.

In terms of common approaches (multi-model, multi-label, multi-output, multi-task), multi-label (power-set) achieves the best results on Plant Village (Accuracy: 99.469% & F1-score: 0.99469) and multi-model has the highest average accuracy and F1-score (99.175% & 0.99175) on the Plant Leaves dataset. Multi-output outperforms multi-model, multi-label, and multi-task on PlantDoc-0.2 (Accuracy: , F1-score: ) and PlantDoc-1.0 (Accuracy: , F1-score: ). Multi-task is inferior to the other approaches. This is not surprising, as both tasks (plant identification and disease classification) share the same input data, which differs from the original multi-task learning paradigm where different tasks have different sets of data.

For our proposed model GSMo-CNN (denoted as "Our methods (Plant)" in Table 5), we applied 7 backbone CNNs to our new model structure without the balance weight (BW) of each loss (i.e. set ::: as 1:1:1:1). InceptionV3 is also the best backbone in this case. This is the backbone we use for GSMo-CNN with balance weights. Even without the balance weights, the performance of the GSMo-CNNs is promising, as it achieves better results than multi-model, multi-label, multi-output, and multi-task on Plant Village (Accuracy: , F1-score: ) and on PlantDoc-1.0 (Accuracy: , F1-score: ). With the balance weights, GSMo-CNNs achieve the best performance in three cases: Plant Leaves, PlantDoc-0.2, and PlantDoc-1.0. The accuracy and F1-score are as follows, Plant Village: & ; Plant Leaves: & ; PlantDoc-0.2: & ; and PlantDoc-1.0: & .

Finally, when transfer learning is applied, we can see improvement in all cases, especially for PlantDoc-0.2 and PlantDoc-1.0. On Plant Village, the best results without transfer learning are accuracy and F1-score, while transfer learning achieves accuracy and F1-score. A large improvement can be seen on the PlantDoc sets. In particular, our model with transfer learning increases the performance from accuracy & F1-score to & on PlantDoc-0.2, and from accuracy & F1-score to & on PlantDoc-1.0.

4.2. Disease Classification

The disease classification task aims to predict the diseases of plants from leaf images. Note that "healthy" is also treated as a category of plant diseases. The task not only identifies whether a plant has a disease or not but also needs to accurately categorise the disease types across different plants. A model should be able to focus on the diseases and not be confused by the common patterns shared by leaves of the same type. Table 6 shows the results of different models and approaches, including all the disease classification results from multi-model CNNs, multi-label (power-set) CNNs, multi-output CNNs, multi-task learning approaches and our new model.

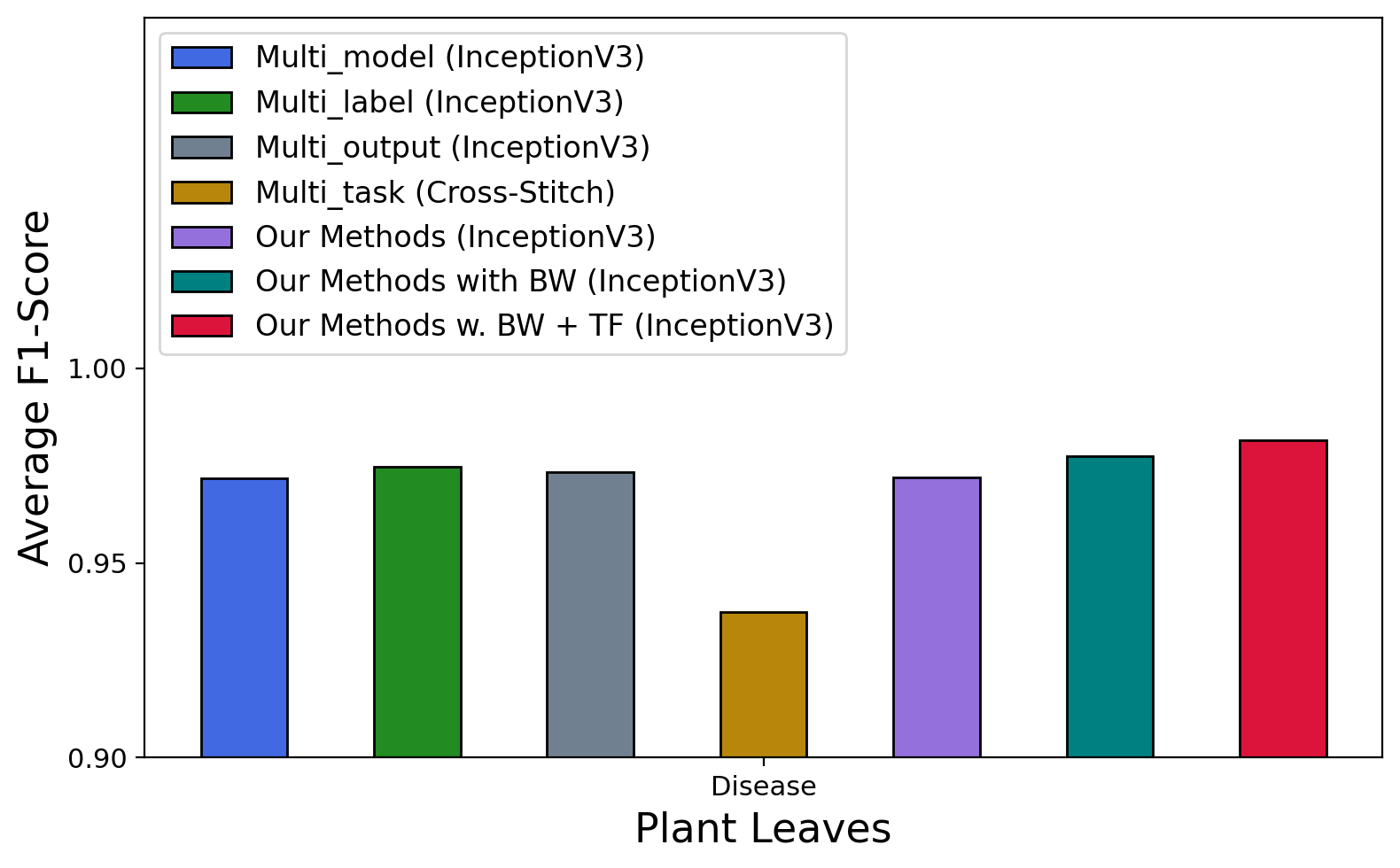

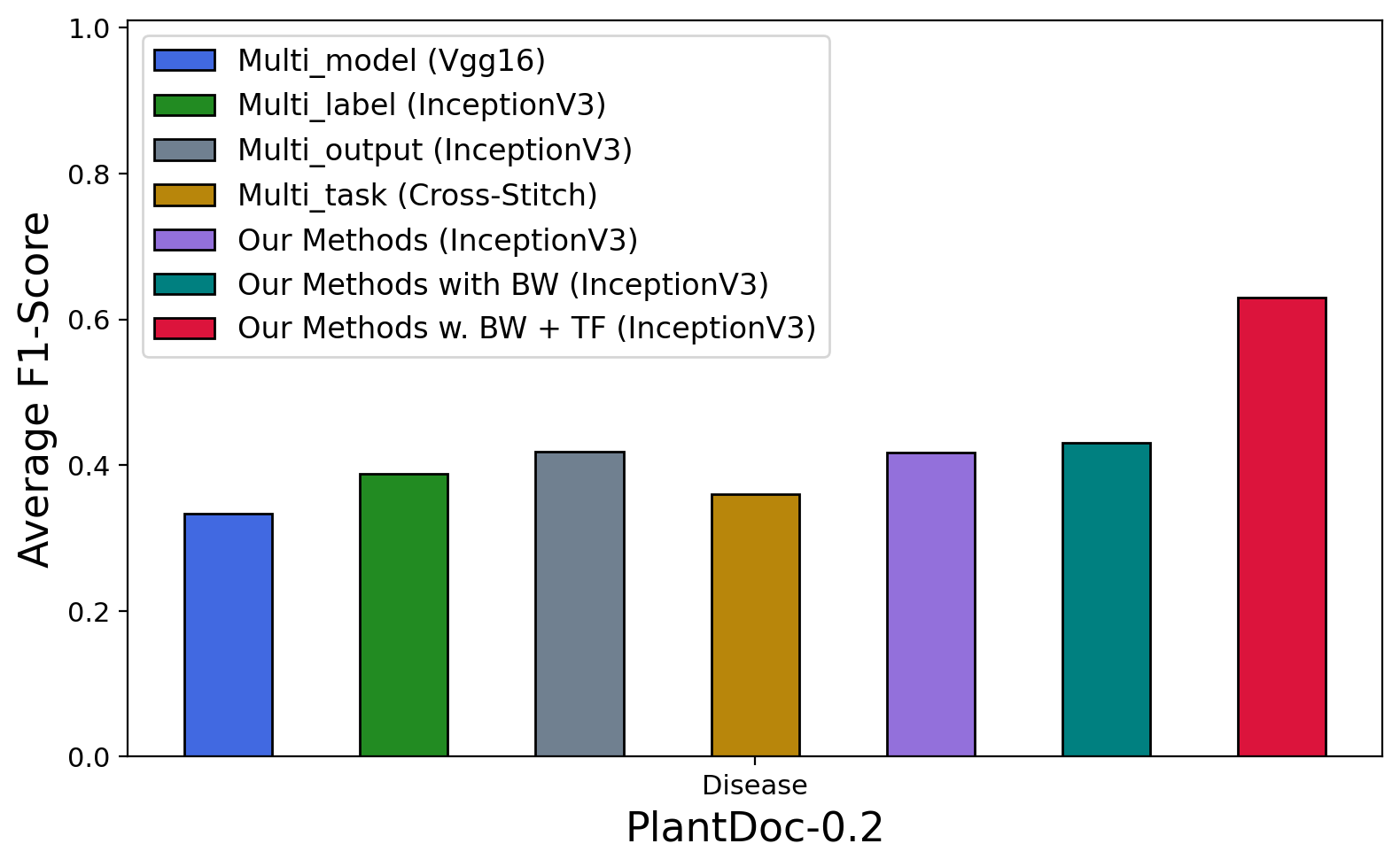

As we can see in Table 6 and Figure 7, all approaches achieve good performance on the Plant Village and Plant Leaves datasets (most accuracy & F1-score over 80% & 0.80). In terms of backbone CNNs, we observe a similar trend as in plant identification, where InceptionV3 performs better than other CNNs in most of the approaches and datasets. The only exceptions are in the multi-model approach, where MobileNetV2 obtains the best results on Plant Village ( accuracy & F1-score) and VGG16 achieves accuracy and F1-score on PlantDoc-0.2. In terms of common approaches (multi-model, multi-label, multi-output, multi-task), multi-model (with InceptionV3) achieves the best accuracy () and F1-score () on PlantDoc-1.0; multi-output (with InceptionV3) is better than the others on Plant Village ( accuracy and F1-score) and on PlantDoc-0.2 ( accuracy and F1-score); multi-label (with InceptionV3) achieves the highest accuracy () and F1-score () on Plant Leaves.

Our proposed model also performs well in the case of disease classification. Without the balance weights, GSMo-CNN achieves better results than the multi-model, multi-label, multi-output, and multi-task approaches on Plant Village and PlantDoc-0.2. The accuracy and F1-score of our new model without balance weights and with InceptionV3 as the backbone are as follows: Plant Village (99.418% & 0.99417), Plant Leaves (97.292% & 0.97201), PlantDoc-0.2 (45.029% & 0.41716) and PlantDoc-1.0 (47.542% & 0.46156). When balance weights are used, with InceptionV3 as the best backbone, GSMo-CNN achieves the best results in 3 out of 4 cases. In particular, it has accuracy and F1-score on the Plant Village dataset; accuracy and F1-score on Plant Leaves; and accuracy and F1-score on PlantDoc-0.2. Only on PlantDoc-1.0 does GSMo-CNN rank second; here, multi-model obtains the best accuracy () and F1-score (). If we use InceptionV3 pre-trained weights from ImageNet as the backbone for GSMo-CNN, we can achieve much higher performance. As we can see, the accuracy and F1-score of our model improve on all datasets, most markedly on PlantDoc, as follows: Plant Village (99.615% accuracy & 0.99615 F1-score), Plant Leaves (98.149% accuracy & 0.98146 F1-score), PlantDoc-0.2 (64.544% accuracy & 0.62968 F1-score) and PlantDoc-1.0 (63.305% accuracy & 0.63757 F1-score).

4.3. Plant Identification & Disease Classification

For completeness, we evaluate the models and approaches on predicting plant species and disease types together. This is the combination of the plant identification task and the disease classification task, as discussed earlier. However, instead of evaluating each task separately, we are interested in studying how well deep learning models can predict both tasks at once. A prediction is accurate if and only if both the plant species and the disease type are inferred correctly. This would be the key criterion for users selecting a model for their applications, as usually we want information about both plants and diseases to find a suitable treatment. For evaluation, plant species and disease type are combined into joint species-disease labels for the calculation of average accuracy and F1-score.
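The joint criterion described above (a prediction counts only if both the species and the disease are right) can be made concrete with a small sketch. It is illustrative only: the function names and the toy labels are hypothetical, and it covers accuracy only, since the averaging scheme for the joint F1-score is not specified here.

```python
def task_accuracy(true_pairs, pred_pairs, index):
    """Accuracy of one task: index 0 is the plant species, index 1 is the disease type."""
    correct = sum(t[index] == p[index] for t, p in zip(true_pairs, pred_pairs))
    return correct / len(true_pairs)


def joint_accuracy(true_pairs, pred_pairs):
    """A sample is correct if and only if both species and disease match."""
    correct = sum(t == p for t, p in zip(true_pairs, pred_pairs))
    return correct / len(true_pairs)


# Toy (species, disease) labels, invented for illustration.
y_true = [("apple", "scab"), ("apple", "healthy"), ("tomato", "blight"), ("tomato", "healthy")]
y_pred = [("apple", "scab"), ("apple", "scab"), ("potato", "blight"), ("tomato", "healthy")]

plant_acc = task_accuracy(y_true, y_pred, index=0)
disease_acc = task_accuracy(y_true, y_pred, index=1)
both_acc = joint_accuracy(y_true, y_pred)

print(plant_acc, disease_acc, both_acc)
```

In this toy case each task is right on three of four samples, but only two samples are right on both, which illustrates why the joint score can never exceed the lower of the two per-task accuracies.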

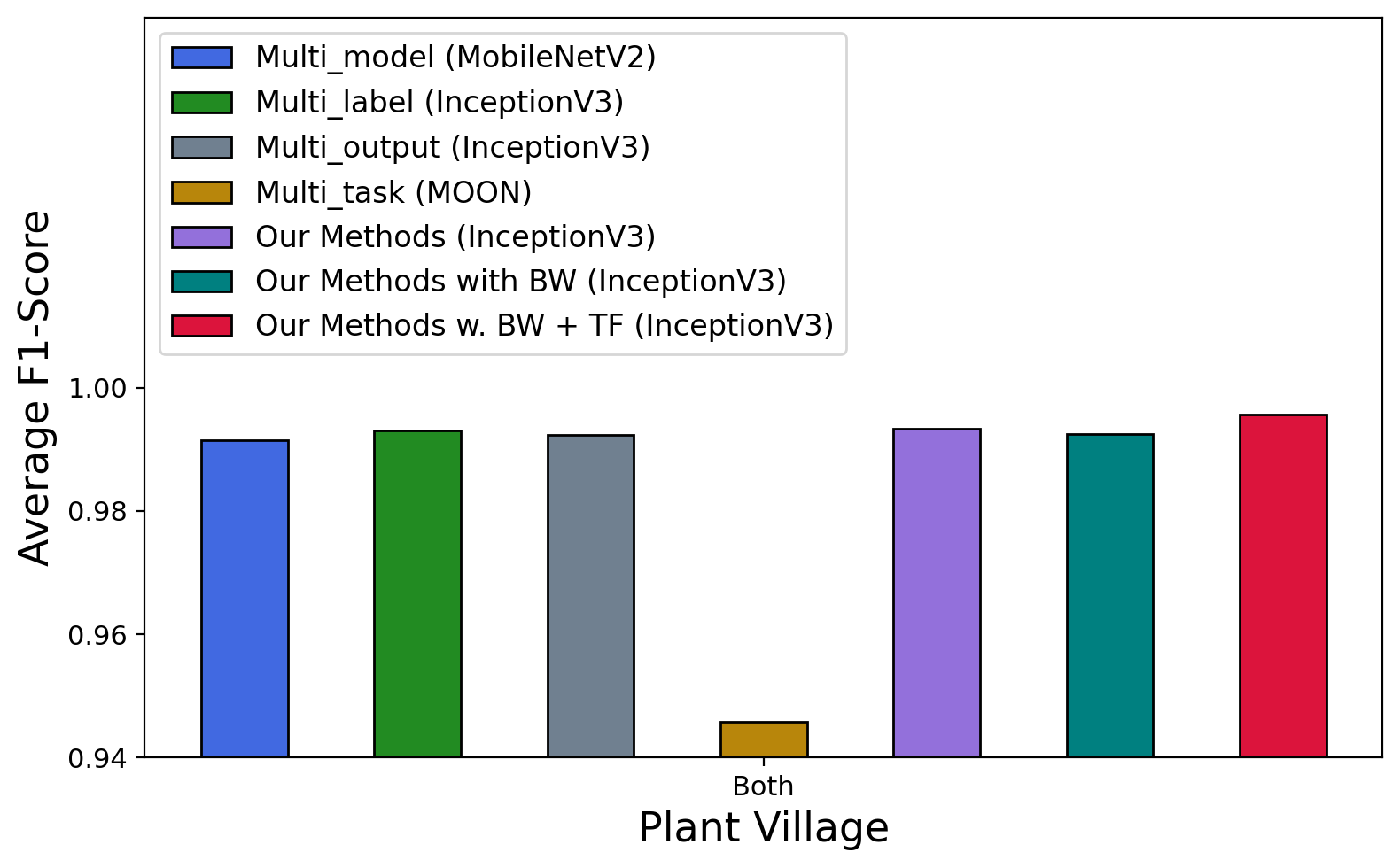

Table 7 and Figure 8 show the results of all models and approaches. Although the combined prediction of plant species and disease types is more complex than the previous two tasks, all approaches achieve promising results, with more than average accuracy and F1-score on Plant Village and Plant Leaves. In terms of backbone CNNs, the multi-model approach shows that MobileNetV2 achieves the best performance ( accuracy and F1-score) on Plant Village and VGG16 achieves the highest results on PlantDoc-0.2 ( accuracy & F1-score). The multi-output approach shows that AlexNet has the highest accuracy of and F1-score of on PlantDoc-1.0. Apart from these three cases, InceptionV3 achieves higher performance than the other backbone CNNs.

Among the common multi-prediction approaches (multi-model, multi-label, multi-output, and multi-task), multi-label (power-set) performs the best on Plant Village, Plant Leaves, and PlantDoc-1.0, while multi-output has the best results on PlantDoc-0.2. This makes sense, as the power-set labelling in the multi-label approach combines the two labels into one, so the learning directly optimises the prediction of the combined label (plant & disease). Interestingly, multi-output performs well on PlantDoc-0.2 even though there is no communication between the two labels during learning. One possible explanation is that the back-propagation of gradients from the two branches to the shared CNN acts as a regulariser for the shared features. Such regularisation would help the model learn more general features rather than task-specific ones. The multi-task learning models (TSNs, MOON, Cross Stitch & MTAN) show good results on Plant Village and Plant Leaves; however, they are not competitive with the other approaches.
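To illustrate the power-set labelling discussed above, the sketch below maps each (species, disease) pair to a single class index, so that one softmax output can be trained directly on the combined label. It is a minimal illustration under stated assumptions: the helper name `build_powerset_classes` and the toy labels are hypothetical, and only the pairs that actually occur in the data are enumerated, which is one common choice rather than a detail confirmed by the text.

```python
def build_powerset_classes(pairs):
    """Enumerate the distinct (species, disease) pairs and assign each a class index."""
    classes = sorted(set(pairs))
    to_index = {pair: i for i, pair in enumerate(classes)}
    return classes, to_index


# Toy (species, disease) annotations, invented for illustration.
labels = [("apple", "scab"), ("apple", "healthy"), ("tomato", "healthy"), ("apple", "scab")]

classes, to_index = build_powerset_classes(labels)

# Encode: each sample gets one combined class index.
encoded = [to_index[pair] for pair in labels]

# Decode: a predicted class index maps back to both labels at once.
decoded = [classes[i] for i in encoded]

print(classes)
print(encoded)
```

Because every combined class is a separate output unit, the number of classes grows with the number of observed species-disease pairs, whereas the multi-output approach keeps one head per task.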