Deep Learning Enhanced Dynamic Mode Decomposition

Abstract

Koopman operator theory shows how nonlinear dynamical systems can be represented as an infinite-dimensional, linear operator acting on a Hilbert space of observables of the system. However, determining the relevant modes and eigenvalues of this infinite-dimensional operator can be difficult. The extended dynamic mode decomposition (EDMD) is one such method for generating approximations to Koopman spectra and modes, but the EDMD method faces its own set of challenges due to the need of user defined observables. To address this issue, we explore the use of autoencoder networks to simultaneously find optimal families of observables which also generate both accurate embeddings of the flow into a space of observables and submersions of the observables back into flow coordinates. This network results in a global transformation of the flow and affords future state prediction via the EDMD and the decoder network. We call this method the deep learning dynamic mode decomposition (DLDMD). The method is tested on canonical nonlinear data sets and is shown to produce results that outperform a standard DMD approach and enable data-driven prediction where the standard DMD fails.

1 Introduction:

One particular collection of methods that has garnered significant attention and development is those built around the approximation of Koopman operators [1], which are broadly described as dynamic mode decomposition (DMD). These methods in some sense fit within the larger context of modal decompositions [2, 3], but in several ways, they go further insofar as they also readily generate proxies for the flow maps connected to the otherwise unknown but underlying dynamical systems generating the data in question. The first papers in this area [4, 5] showed across a handful of problems that with a surprisingly straightforward approach, one could readily generate accurate models with nothing but measured time series. Further extensions soon appeared by way of the extended DMD (EDMD) and the kernel DMD (KDMD) [6, 7] which generalized the prior approaches in such a way to better exploit the approximation methodologies used in Reproducing Kernel Hilbert Spaces. These methods were used to develop an all purpose approach to model discovery [8]. See [9] for a textbook presentation and summary of what one might describe as the first wave of DMD literature.

However, in the above methods, user choices for how to represent data are necessary, and as shown in [10], even what appear to be natural choices can produce misleading or even outright incorrect results. In response, researchers have brought several supervised learning, or what one might broadly call machine learning (ML), approaches to bear on the problem of finding optimal representations of data for the purpose of approximating Koopman operators. These approaches have included dictionary learning approaches [11, 12, 13] and related kernel learning methods [14]. Likewise, building on theoretical results in [15, 16], more general methods seeking the discovery of optimal topological conjugacies of the flow have been developed [17, 18]. Related work on discovering optimal embeddings of chaotic time series [19] and ML driven model discovery [20, 21, 22] have also recently appeared.

Between the dictionary learning and topological conjugacy approaches then, in this work we present a hybrid methodology that uses ML to discover optimal dictionaries of observables which also act as embeddings of the dynamics that enforce both one-time step accuracy as well as global stability in the approximation of the time series. A similar philosophy is used in [23]. However, in that work autoencoders are used to obtain low-dimensional structure from high-dimensional data; see also the results in [20, 24]. In contrast, in this paper we look at low-dimensional dynamical systems and use autoencoders to find optimal embeddings of the phase-space coordinates. Running EDMD on the embedded coordinates then generates global linearizations of the flow which are characterized by a single set of eigenvalues that govern the dynamics of a given trajectory. Our spectral results may be contrasted with that of [17], in which their autoencoder is paired with an auxiliary network that parameterizes new eigenvalues at each point along the trajectory. We forego this additional parameterization and obtain a more clearly global spectral representation for any given path in the flow.

To assess the validity and robustness of our approach, we explore a number of examples motivated by several different phase-space geometries. We examine how our method deals with the classic harmonic oscillator, the Duffing system, the multiscale Van der Pol oscillator, and the chaotic Lorenz-63 equations. This presents a range of problems in which one has a global center, two centers, slow/fast dynamics on an attractive limit cycle, and finally a strange attractor. As we show, our method is able to learn across these different geometries, thereby establishing confidence that it should be adaptable to more complex problems. While accuracy for test data is high for the planar problems, as expected, the chaotic dynamics in Lorenz-63 cause prediction accuracy to degrade relatively quickly. Nevertheless, with a minimal number of assumptions and guidance in our learning algorithm, we are able to generate a reasonable approximation to the strange attractor, which shows our method should be applicable in a wider range of use cases than those explored here.

In Section 2, we introduce the Koopman operator and outline the DMD for finding a finite-dimensional approximation to the Koopman operator. The role of deep autoencoders in our method is presented in Section 3, and our algorithm and implementation details are explained in Section 4. Results are presented in Section 5. Discussion of results and explorations of future directions are presented in Section 6.

2 The Koopman Operator and Dynamic Mode Decomposition

In this work, we seek to generate approximate predictive models for time series, say , which are generated by an unknown dynamical system of the form

| (1) |

where is some connected, compact subset of . We denote the affiliated flow of this equation as , and observables of the state as , where . To build these approximate predictive models, we build on the seminal work in [1] which shows that there exists a linear representation of the flow map given by the Koopman operator where

| (2) |

Linearity is gained at the cost of turning the problem of predicting a finite-dimensional flow into one of examining the infinite-dimensional structure of the linear operator . To wit, if we consider the observables to be members of the associated Hilbert space of observables, say , if where is given by

For concreteness, we have chosen to integrate with respect to Lebesgue measure, though of course others could be more convenient or appropriate. We see then that this makes the Koopman operator an infinite-dimensional map such that

In this case, one has

where

Based on the above computations, we observe that the Koopman operator is an isometry for volume preserving flows.

If we further suppose that is invariant with respect to the flow, or for , we can simplify the above statement so that

We will assume this requirement going forward since it is necessary to make much in the way of concrete statements about the analytic properties of the Koopman operator. In particular, using Equation (2), if we further suppose that we have an observable such that then we see that

where denotes the supremum norm over the compact subset . From this computation, we find that the infinitesimal generator, say , affiliated with is given by

and we likewise can use the Koopman operator to solve the initial-value problem

so that . From here, the central question in Koopman analysis is to determine the spectrum and affiliated modes of since these then completely determine the behavior of . This can, of course, involve solving the classic eigenvalue problem

However, as the reader may have noticed, there has been no discussion of boundary conditions. Therefore, while one can get many useful results focusing on this problem, one must also allow that continuous spectra are a natural feature, and eigenfunctions can have pathological behavior as well as being difficult to categorize completely and in detail; see [16] and [25]. As this issue represents an evolving research area, we do not attempt to make any further claim and simply follow in the existing trend of the literature and focus on trying to numerically solve the classic eigenvalue problem.

To this end, if we can find the Koopman eigenfunctions with affiliated eigenvalues , where

then for any observable one, in principle, has a modal decomposition such that

as well as an analytic representation of the associated dynamics

| (3) |

Note, that the analytic details we provide regarding the Koopman operator in this section are those we find most essential to understanding the present work and for providing a reasonably complete reading experience. Essentially all of it exists, and more detail and depth can be found, in [26, 27, 28], among many other sources.

Now, the challenge of determining the modes and eigenvalues of the infinite-dimensional operator, , remains. In general, this is impossible to obtain in an analytic way, however, the DMD and its extensions, the EDMD and the KDMD [4, 5, 9, 6, 7], allow for the numerical determination of a finite number of the Koopman modes and eigenvalues. In this work, we focus on the EDMD since it most readily aligns with the methodologies of ML.

The EDMD begins with the data set where

where is the time step at which data are sampled. Note, per this definition, we have that . Following [6, 7], given our time snapshots , we suppose that any observable of interest lives in a finite-dimensional subspace described by a given basis of observables so that

| (4) |

Given this ansatz, we then suppose that

| (5) | ||||

where is the associated error which results from the introduction of the finite-dimensional approximation of the Koopman operator represented by the matrix .

While we ultimately find a matrix to minimize the error relative to the choice of observables, for this approach to make any real computational sense, we tacitly make the following assumption when using the EDMD

Ansatz 1.

We have chosen observables such that the space

is invariant under the action of the Koopman operator , i.e.

Equivalently, we suppose that there exists a set of observables for which the affiliated error in Equation (4) is identically zero.

We see that if this condition holds for , then it also holds for , where is an integer such that ; therefore, this Ansatz is stable with respect to iteration of the discrete time Koopman operator. If this condition does not hold, or at least hold up to some nominal degree of error, then one should not imagine that the EDMD method is going to provide much insight into the behavior of the Koopman operator.

One can then demonstrate, in line with the larger DMD literature, that finding the error minimizing matrix is equivalent to solving the optimization problem

| (6) |

where the matrices are given by

| (7) |

where each column in the above matrices is a vector of observables of the form

where is the Frobenius norm. In practice, we solve (6) using the Singular Value Decomposition (SVD) of so that

This then gives us

where denotes the Moore–Penrose pseudoinverse. The corresponding error in the Frobenius norm is given by

We see that serves as a proxy for the error function .

Following existing methodology [6], if we wished to find Koopman eigenfunctions and eigenvalues, then after diagonalizing so that

then one can show that the quantity should be an approximation to an actual Koopman eigenvalue and

| (8) |

From here, in the traditional EDMD algorithm, one approximates the dynamics via the reconstruction formula

where the Koopman modes in principle solve the initial-value problem

In the original coordinates of the chosen observables, using Equation (8) we obtain the equivalent formula

where is the matrix whose columns are the Koopman modes and is the diagonal matrix with diagonal entries . In practice, we find the Koopman modes via the fitting formula

| (9) |

where is any complex matrix and the norm refers to the standard Euclidean norm. Of course, the appropriateness of this fit is completely contingent on the degree to which Ansatz 1 holds. We address this issue in Section 3.

Finally, we note that the standard DMD is given by letting and . In this case, we have that . Due to its popularity and ease of implementation, we use the DMD for reference in Section 5.

3 The Deep Learning Dynamic Mode Decomposition

The central dilemma when using the EDMD is finding suitable collections of observables relative to the data stream . To accomplish this in an algorithmic way, we suppose that an optimal set of observables can be found using a deep neural network where

and where across dimensions we define

We call this the encoder network and the transformed coordinates, , the latent variables. Given that the neural network representing consists of almost everywhere smooth transformations, we note that by Sard’s Theorem [29], we can generically assume that has a Jacobian of full rank at almost all points in the domain of the encoder. We generically assume that the latent dimension , making an immersion [29] from the flow space into the space of observables. In this case, the matrices from equation (7) are now given by

so that we compute in the latent-space coordinates . Beyond the lower bound given above, we do not enforce any constraint on the number of dimensions of the latent space. Instead, is treated as a hyperparameter. This is a key feature of our encoder networks as they are allowed to lift the data into higher-dimensional latent spaces, giving the EDMD a rich, and flexibly defined, space of observables with which to work.

Corresponding to the immersion , we introduce the decoder network which is meant to act as the inverse of , i.e. we seek a decoder mapping acting as a submersion

so that

| (10) |

Upon finding such a mapping, we can show the following lemma.

Lemma 1.

If for immersion there exists corresponding submersion such that

then is injective and therefore an embedding.

Proof.

Suppose

Then we see by identity that

and therefore . Thus is an embedding. ∎

As shown in [27, 16], flows which are diffeomorphic to one another share Koopman eigenvalues and have eigenfunctions that are identical up to diffeomorphism. In our case, and are typically not invertible since the reverse composition, , does not necessarily yield the identity. However, we can define the affiliated flow such that

Immediately, we find that there must of course be an affiliated Koopman operator, , corresponding to this encoded, or lifted, flow. This then allows us to show the following theorem.

Theorem 1.

With and as above, if are a spectral pair for the Koopman operator , then are a spectral pair for the Koopman operator where .

Proof.

For the operator , if we suppose it has eigenfunction with corresponding eigenvalue , then we have the identities

We then have the affiliated identities

and so in particular we see that

∎

Thus we see that every Koopman mode and eigenvalue in the original flow space is lifted up by the embedding . That said, it is of course possible then that new spectral information affiliated with can appear, and if we perform the EDMD in the lifted variables, there is no immediate guarantee we are, in fact, computing spectral information affiliated with the primary Koopman operator . That said, if in fact Ansatz 1 holds for the given choice of observables, which is to say we have made the right choice of embedding , then we can prove the following theorem.

Theorem 2.

Assuming Ansatz 1 holds relative to some choice of observables

, suppose that the action of on each observable is given by the connection matrix where

with denoting the entries of the connection matrix. If is diagonalizable, then the EDMD algorithm only computes spectra and eigenfunctions of , .

Proof.

For , we have that

If Ansatz 1 holds, it is then the case that

and likewise we must have that

Thus, the action of the Koopman operator is now recast in terms of the following matrix problem

Likewise, if we ask for the corresponding connection matrix of higher powers of so that for integer we have

Then we see that

or, defining with being the identity matrix,

Moreover, referring to the EDMD algorithm, clearly , so that if , then

Choosing then the vector of coefficients to be the column of or , we see

so that we have using the EDMD algorithm we see we have computed eigenvalues and eigenvectors of . Passing to the infinitesimal generator allows us to then extend the result for the Koopman operator for . ∎

So we see on the one hand that the EDMD left to its own devices is prone to introducing perhaps spurious spectral information, and of course, without recourse to a known reference, we have no way in advance of knowing how to tell which results generated via the EDMD produce relevant spectra. We note that this issue was numerically illustrated in [10]. On the other hand, if we can somehow ensure Ansatz 1 holds, then the EDMD is guaranteed to produce meaningful results with essentially zero error. Of course, what remains in either case is the fundamental dilemma of how to choose observables such that Ansatz 1 is enforced, or at least such that the error is guaranteed to be controlled in some uniform way.

To address this dilemma then, we propose the deep learning dynamic mode decomposition (DLDMD), in which we determine the encoder/decoder pair and by minimizing the following loss function , where

| (11) |

such that

and contains the weights of the and networks making the final term in a regularization term. The hyperparameters and provide appropriate scalings for each loss component. The autoencoder reconstruction loss, , demands that approximates the inverse of . We see then that in effect replaces Equation (9). The DMD loss, , keeps as small as possible relative to advancing one timestep. In contrast, we see makes the overall method remain stable under repeated applications of the finite-dimensional approximation to . Thus plays the role of ensuring we have a consistent time-stepping scheme, while ensures we have a globally stable method, and so by combining the two, we address the fundamental dilemma presented by Ansatz 1. It is this ensured global stability that motivates us to call the diagonalization of the matrix from our algorithm’s EDMD the global linearization of the flow.

We further see that this loss function implements the diagram in Figure 1. Through this diagram we impose a one-to-one mapping between trajectories in the original coordinates, , and trajectories in the latent space, . Note that this diagram allows us to circumvent the unknown flow map, , which is equivalent to the Koopman operator of interest, , from Equations (4) and (5).

4 The DLDMD Algorithm: Implementation Details

We build the autoencoder in the Python programming language using Tensorflow version 2. The deep neural networks we construct that act as and from Equation (10) must transform each vector of coordinates along a sample trajectory to and from the latent-space coordinates , respectively. We chose to use dense layers in each network, though other layer types should suffice so long as they encode each point along the trajectory separately, are densely connected, and output the correct dimensions.

As is the case when training any type of neural network, there are a number of hyperparameters that the researcher must take care in selecting. However, we found that the encoder and decoder networks did not require significantly different hyperparameters from dataset to dataset. Notably, the architecture of the neural networks remained identical across all examples. We found that 3 hidden layers each with 128 neurons were sufficient for all of the test problems presented in Section 5. The primary tunable parameter was the dimension of the latent space, , which was tuned manually. We used Rectified Linear Units (ReLU) for the activation functions and chose the Adam optimizer with a learning rate of for the harmonic oscillator, and for Duffing, Van der Pol, and Lorenz 63. For the loss function hyperparameters in Equation (11), , and were all set to , while . The harmonic oscillator was trained with a batch size of 512 while all other systems had batch sizes of 256. All systems were trained for 1,000 epochs. The hardware used was an Nvidia Tesla V100.

See Algorithm 1 for the complete pseudocode for the DLDMD training method. The trained DLDMD model is applied by sending a trajectory through the encoder network, performing the EDMD using the encoded coordinates as observables, then using the modes, eigenvalues, and eigenfunctions to reconstruct the full length of the trajectory and beyond in the latent space. The decoder network then allows us to map the entire EDMD reconstruction back into the original coordinate system.

5 Results

We test the DLDMD method on several datasets generated from dynamical systems that each exhibit some unique flow feature. In Sections 5.1 - 5.3, we examine much of the range of planar dynamics by way of studying the harmonic oscillator, Duffing, and Van der Pol equations. For example, when limited to the first separatrix, the harmonic oscillator gives closed orbits about a single center. Proceeding in complexity, the Duffing equation is comprised of not only closed orbits but also a homoclinic connection that separates two distinct regions of the phase space, requiring now that the DLDMD compute trajectories on either side of a separatrix. The Van der Pol oscillator has trajectories both growing and decaying onto a limit cycle and exhibits multi-scale, slow/fast dynamics. Finally, in Section 5.4, we demonstrate the DLDMD on chaotic trajectories from the Lorenz-63 system, which extends our approach to three-dimensional results evolving over a strange attractor.

The training, validation, and test data for each example were generated using a fourth-order Runge-Kutta scheme. To generate test-data, for the harmonic oscillator and the Duffing system, we chose a time step of and ran simulations out to in order to get closed orbits for all initial conditions. The Van der Pol system used and . This system required a shorter integration step size to sufficiently sample the slow and fast parts of each trajectory. Finally, the Lorenz-63 system used and . For testing, we applied the trained encoder and decoder with an EDMD decomposition on the latent trajectories and generated reconstructions up to the simulation times used for training. Then, in each case we ran the trajectories out further in time in their latent-space coordinates using only the spectral information from their respective EDMD to evaluate stability. The prediction time for the harmonic oscillator and Duffing system was from to , for the Van der Pol equation it was to , and finally for the Lorenz-63 equations, it was from to .

We generate 15,000 trajectories for each system, using 10,000 for training, 3,000 for validation, and 2,000 for testing. Each trajectory is generated by uniform random sampling of initial conditions from some pre-defined region of phase space. For the harmonic oscillator, we used , , and we limited our choice of trajectories to those within the first separatrix using the potential function . The Duffing system was generated over initial conditions sampled from and . The Van der Pol system used and to generate trajectories and was then scaled by its standard deviation. The Lorenz-63 system used , , and .

5.1 The Harmonic Oscillator: One Center

The first system we consider is a nonlinear oscillator described by the undamped pendulum system,

This system exhibits nearly linear dynamics near the origin and becomes increasingly nonlinear towards the separatrix. We limited the dataset to just those trajectories that lie below the separatrix in order to test the DLDMD on a system with only closed Hamiltonian orbits about a single center.

Figure 2 shows the DLDMD has found a mapping to and from coordinates in which it can apply the EDMD with fair precision and stability. This is achieved outside the linear regime corresponding to small angle displacements of the pendulum. These outer trajectories exhibit increasing nonlinearities; yet, nevertheless, the DLDMD is able to adapt to them with minimal assumptions in the model. For this example, we found that a latent dimension of produced the most parsimonious results. Taking the mean-squared error (MSE) for each trajectory and then averaging across all 2000 in the test set, we obtain a DLDMD loss of .

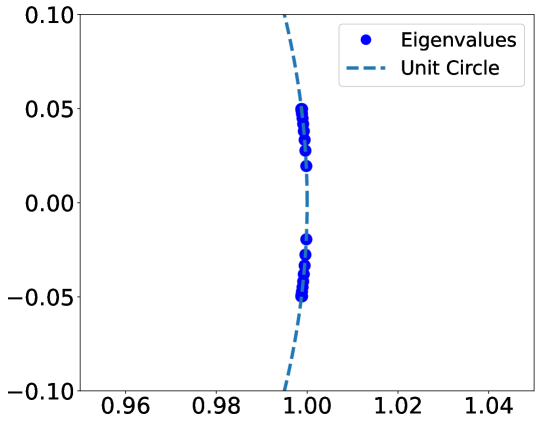

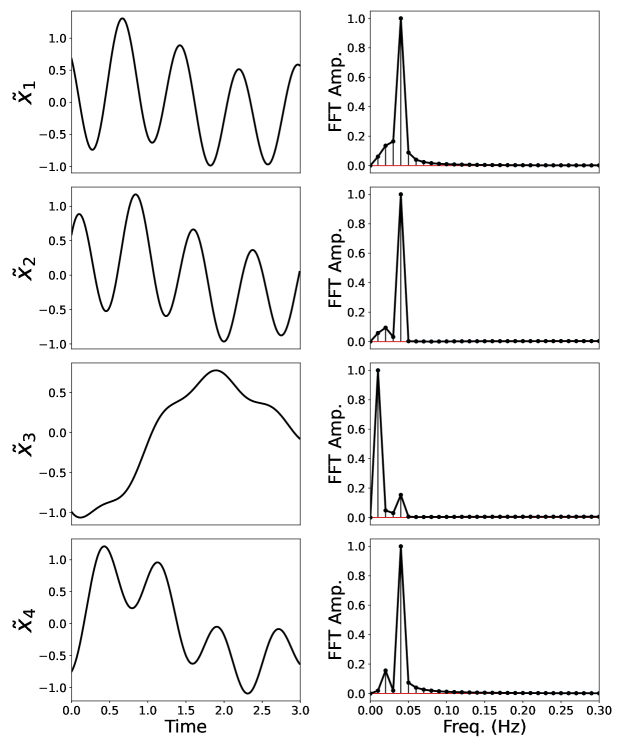

Figure 3 plots the latent-space coordinates used in the EDMD step of the DLDMD. Here we see how the method has used the encoder network to morph the original test trajectories into a system that has less nonlinearity for the EDMD to overcome, in particular for the orbits near the separatrix. Indeed, by examining in Figure 4 the Fourier spectrum of each of the encoded coordinates, , we arrive at a fundamental innovation of the DLDMD method. As we see, the embedded trajectory has a nearly monochromatic Fourier transform, showing that our neural network has learned embeddings and corresponding submersions which nonlinearly map the dynamics onto what would often be described in the dynamical systems literature as fundamental modes. Note, the trajectory illustrated in this figure corresponds to that in Figure 2 which is the one that is closest to the separatrix and exhibits the most nonlinearity in the test set. We emphasize here that these latent-space coordinates are learned with no parameterization or assumptions from the user other than those in the loss function; see Equation (11). Moreover, we see in Figure 5 plots of the eigenvalues for the 10 test trajectories from Figure 2. Each eigenvalue and its conjugate pair is essentially on the unit circle, so that this plot shows us how each embedded trajectory is governed by a single frequency of oscillation for all time. This in part echoes the relatively monochromatic Fourier spectra seen in Figure 4.

|

| (a) |

|

| (b) |

Of course, the harmonic oscillator only consists of closed orbits around a single center. Therefore, it only contains one family of trajectories. As is shown in the next sections, when the dynamical system increases in complexity to include saddles, limit cycles, or chaos, we are still able to successfully generate global linearizations of the flow by increasing the embedding dimension .

5.2 The Duffing Equation: Two Centers

The Duffing system is another weakly nonlinear oscillator with more complex behavior than the undamped pendulum. Without a driving force, the Duffing system is described by the double-well potential Hamiltonian system,

Here we are testing whether the DLDMD can cope with closed orbits that are not all oriented about a single center. Figure 6 shows the reconstruction capability of the DLDMD over the unforced Duffing oscillator. For this system, we found that a latent dimension of produced the best results. Note, more on choosing the appropriate latent dimension is discussed in Section 6. Because , we are still able to easily visualize the embedding, see Figure 7, and find that the trajectories now follow nearly circular orbits on this higher-dimensional manifold. As we see, it appears that the homoclinic connections require an additional latent dimension in order to separate the three types of motion in the phase-space coordinates. These three types are paths orbiting the left center, paths orbiting the right center, and paths orbiting outside the separatrix.

As seen in Figure 8, by simply adding one additional embedding dimension, we again find that the latent-space coordinates have nearly monochromatic Fourier spectra. Furthermore, when we examine in Figure 9 the affiliated eigenvalues for several of the test trajectories, we see that while each orbit is characterized by a unit-magnitude complex-conjugate pair of eigenvalues, as well as an eigenvalue exactly equal to one. This strictly real eigenvalue corresponds to the EDMD accurately computing the temporal average of the time series, which for the Duffing system is determined by which fixed point an orbit oscillates around. Thus, the higher embedding dimension here allows the EDMD to accurately account for this difference between trajectories.

|

| (a) |

|

| (b) |

5.3 The Van der Pol Oscillator: Attraction to a Slow/Fast Limit Cycle

The Van der Pol oscillator, described by the parameterized dynamical system

has for positive values of a globally attractive limit cycle. All presented results use ; however, the DLDMD has been tested out to with no modifications to the algorithm or hyperparameters. The limit cycle itself is made up of slow and fast submanifolds thereby producing multiscale behavior. This system pushes the DLDMD much further than the harmonic oscillator and Duffing systems, for it must now account for the attraction onto the limit cycle as well as the multiscale periodic motion. Furthermore, the attraction onto the limit cycle involves a transient growth or decay term for initial conditions starting inside or outside the limit cycle respectively. Because of this complexity, we found that the DLDMD performed well with , though for this system the choice was not as stringent as for the previous two cases. Our choice of was due to its performance after 1,000 epochs, which was the maximum used for all of the dynamical systems studied in this paper. However, a reasonable reconstruction and prediction error could likely be obtained with slightly fewer or slightly more embedding dimensions given enough training epochs.

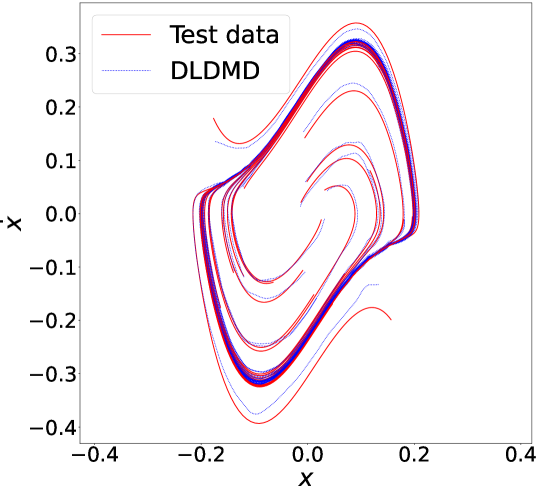

Figure 10 shows the DLDMD reconstruction of the Van der Pol system for several test trajectories. The MSE loss averaged over all 2000 test trajectories is . Figure 11 again illustrates how an encoded trajectory is transformed from one with a relatively large spread in its Fourier spectrum to a set of coordinates whose spread in Fourier space is much reduced, and Figure 12 plots the corresponding eigenvalues. However, unlike the previous two cases, we do see some slight deviations away from strictly periodic motion, reflecting the transients in the underlying dynamics. These transient phenomena are also reflected by five of the eigenvalues being off the unit circle, indicating that dynamics along the affiliated coordinates decay in time leaving only the oscillatory modes.

|

| (a) |

|

| (b) |

5.4 The Lorenz-63 system: Chaos

The Lorenz-63 system, described by the equations

with parameters , , and generates chaotic trajectories with a strange attractor. This system provides categorically different dynamics than the previous three examples, but the DLDMD is able to discover the attractor structure even though its overall pointwise prediction is poor; see Figure 13. This is seen more readily in Figure 14 by examining each component of the test versus predicted trajectory. We see, in particular, that the DLDMD predicted trajectory exhibits a lobe switching pattern that is close to that of the test trajectory. This is an especially pleasing result given that the model was trained on trajectories of length , while the test trajectory shown here was extended to twice that to . The latent dimension used to get these results was . This is somewhat surprising in that a system with chaotic trajectories only required one additional dimension in order to obtain decent global linear representations via EDMD whereas the limit cycle of Van der Pol required upward of six more embedding dimensions. The choice of was quite inflexible compared to that used for the Van der Pol equation, with the total loss during training increasing by several orders of magnitude for .

Moreover, as seen by comparing Figures 15 (a) and (b), the DLDMD finds a set of latent-space coordinates for the Lorenz-63 system that, while no longer monochromatic due to clearly visible two-frequency or beating phenomena, are far more sparsely represented in their affiliated Fourier spectrum than the original phase-space coordinates. That said, the coordinate would seem to track some aperiodic behavior in the dynamics, though longer simulation times would be necessary to determine what exactly is being represented via this transformation. Overall though, these plots further reinforce the result that the DLDMD can generally find embeddings in which the Fourier spectral representation of a given trajectory is far more sparse. Likewise, as seen in Figure 16, we see the DLDMD again finds eigenvalues either on or nearly on the unit circle, reflecting the largely oscillatory behavior of the latent-space trajectories. For those eigenvalues just inside the unit circle, the implied weak transient behavior could be more of an artifact of limited simulation time. Resolving this issue is a subject of future work.

|

| (a) |

|

| (b) |

6 Conclusions and Future Directions

In this paper, we have developed a deep learning extension to the EDMD, dubbed DLDMD, which ensures, through the inclusion in our loss function of the terms and , both the local one step accuracy and global stability, respectively, in the generated approximations. This keeps us numerically close to satisfying the requirements stated in Ansatz (1), which is necessary for the success of the EDMD in the latent variables. Likewise, by constructing a loss function to train the autoencoder using the diagram in Figure 1, the DLDMD learns mappings to and from the latent space which are one-to-one. Thus we ensure that all Koopman modes and eigenvalues are captured in this latent space as well. These results taken together ensure that the DLDMD finds a global linearization of the flow, facilitating a straightforward spectral characterization of any analyzed trajectory and an accurate prediction model for future dynamics.

| System | Avg. Loss (MSE) | # Params | sec./epoch | |

|---|---|---|---|---|

| Pendulum | 2 | 67K | 6 | |

| Duffing | 3 | 67K | 8 | |

| Van der Pol | 8 | 68K | 14 | |

| Lorenz 63 | 3 | 68K | 7 |

Moreover, we have developed a ML approach which requires a relatively minimal number of assumptions to ensure successful training. We have demonstrated this across a relatively wide array of dynamical phenomena including chaotic dynamics; see Table 1 for a performance summary of the DLDMD across all presented examples. We are able to cover so much ground by way of implementing relatively straightforward higher-dimensional embeddings via the encoder network, . The latent-space coordinates of each trajectory generally show that the encoder produces modes with near monochromatic Fourier spectra; again see Figures 4, 8, 11, and 15. This is an especially compelling result of the DLDMD, and it speaks to the power of deep learning methods that such elegant representations can be discovered with so little user guidance. However, by lifting into higher-dimensional latent spaces we do lose the strict topological equivalence in [16] and cannot guarantee that all spectra are invariant. This issue does not seem to manifest in any measurable way in our results, but it should be kept in mind when pursuing future work.

The DLDMD is not without other drawbacks. In particular, the latent dimension parameter is critical to the success or failure of the DLDMD. Unfortunately, there is no readily apparent method for choosing this embedding dimension before training. Therefore, the optimal had to be determined by simply training the model at successively larger values for and stopping once the error became too large or grew unstable. This approach is time consuming and an obvious disadvantage to the method. Determining more optimal ways to approach the latent dimensionality will be the subject of future research.

Another drawback of the DLDMD is the number of trajectories used during training. For each example we generated 10,000 initial conditions sampled uniformly over the phase space of interest for training. Rarely do real-world datasets provide such a uniform sampling of the space. Methods to cope with sparse observations could potentially add far more utility to the method. Very high-dimensional systems that exhibit low-rank structure also present problems for DLDMD and the more conventional use of autoencoder networks for compression rather than lifting [20, 23] may be more applicable. The chaotic trajectories of the Lorenz-63 system were clearly the most challenging for DLDMD to reproduce. This is evident in the low average MSE loss and in the relatively poor agreement between the test and predicted trajectory. For this reason, chaotic systems will likely require additional innovations, such as incorporating delay embeddings into the EDMD step [30] and is the topic of future work.

Lastly, systems with noisy observations will pose significant challenges to DLDMD as it is reliant on the one-step prediction to enforce consistency in the latent spectra. In this regard, the method would seem to benefit from some of the alternative DMD approaches as found in [31], an issue we will also pursue in future work.

Data Availability Statement

The data that support the findings of this study are openly available at

https://github.com/JayLago/DLDMD.git

Acknowledgements

This work was supported in part by the Naval Information Warfare Center Pacific (NIWC Pacific) and the Office of Naval Research (ONR).

The authors would like to thank Dr. Erik M. Bollt from the Department of Electrical and Computer Engineering at Clarkson University for his feedback and insights, as well as Joseph Diaz and Robert Simpson for their insightful comments.

References

- [1] B.O. Koopman. Hamiltonian systems and transformation in Hilbert space. Proc. Nat. Acad. Sci., 17:315–318, 1931.

- [2] P. Benner, S. Gugercin, and K. Willcox. A survey of projection-based model reduction methods for parametric dynamical systems. SIAM Review, 57:483–531, 2015.

- [3] K. Taira, S.L. Brunton, S.T.M. Dawson, C.W. Rowley, T. Colonius, B.J. McKeon, O.T. Schmidt, S. Gordeyev, V. Theofilis, and L.S. Ukeiley. Modal analysis of fluid flows: An overview. AIAA, 55:4013–4041, 2017.

- [4] P. Schmid. Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech., 656:5–28, 2010.

- [5] I. Mezić. Spectral properties of dynamical systems, model reduction, and decompositions. Nonlinear Dyn., 41:309–325, 2005.

- [6] M.O. Williams, I. G. Kevrekidis, and C. W. Rowley. A data-driven approximation of the Koopman operator: extending dynamic mode decomposition. J. Nonlin. Sci., 25:1307–1346, 2015.

- [7] M.O. Williams, C. W. Rowley, and I. G. Kevrekidis. A kernel-based method for data driven Koopman spectral analysis. J. Comp. Dyn., 2:247–265, 2015.

- [8] S.L. Brunton, J.L. Proctor, and J.N. Kutz. Discovering governing equations from data by sparse identification of nonlinear dynamical systems. PNAS, 113:3932–3937, 2016.

- [9] J.N. Kutz, S.L. Brunton, B.W. Brunton, and J.L. Proctor. Dynamic Mode Decomposition: Data-driven modeling of complex systems. SIAM, Philadelphia, PA, 2016.

- [10] J.N. Kutz, J.L. Proctor, and S.L. Brunton. Applied Koopman theory for partial differential equations and data-driven modeling of spatio-temporal systems. Complexity, 2018:1–16, 2018.

- [11] Q. Li, F. Dietrich, E. Bollt, and I. Kevrekidis. Extended dynamic mode decomposition with dictionary learning: A data-driven adaptive spectral decomposition of the Koopman operator. Chaos, 27:103111, 2017.

- [12] N. Takeishi, Y. Kawahara, and T. Yairi. Learning Koopman invariant subspaces for dynamic mode decomposition. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 30. Curran Associates, Inc., 2017.

- [13] E. Yeung, S. Kundu, and N.O. Hodas. Learning deep neural network representations for Koopman operators of nonlinear dynamical systems. In American Control Conference, 2019.

- [14] A. M. Degenarro and N. M. Urban. Scalable extended dynamic-mode decomposition using random kernel approximation. SIAM J. Sci. Comp., 41:1482–1499, 2019.

- [15] M. Budisić, R. Mohr, and I. Mezić. Applied koopmanism. Chaos, 22(047510), 2012.

- [16] E. Bollt, Q. Li, F. Dietrich, and I. Kevrekidis. On matching, and even rectifying, dynamical systems through koopman operator eigenfunctions. SIAM J. Appl. Dyn. Sys., 17.2:1925–1960, 2018.

- [17] B. Lusch, J. N. Kutz, and S. L. Brunton. Deep learning for universal linear embeddings of nonlinear dynamics. Nature Comm., 9:4950, 2018.

- [18] Jason J. Bramburger, Steven L. Brunton, and J. Nathan Kutz. Deep learning of conjugate mappings. arXiv, 2104.01874, 2021.

- [19] W. Gilpin. Deep reconstruction of strange attractors from time series. In 34th Conference on NeurIPS, 2020.

- [20] K. Champion, B. Lusch, J. N. Kutz, and S. L. Brunton. Data-driven discovery of coordinates and governing equations. PNAS, 116:22445–22451, 2019.

- [21] Andreas Mardt, Luca Pasquali, Hao Wu, and Frank Noé. Vampnets for deep learning of molecular kinetics. Nature Communications, 9(1):5, 2018.

- [22] N. B. Erichson, M. Muehlebach, and M. Mahoney. Physics-informed autoencoders for lyapunov-stable fluid flow prediction. In Machine Learning and the Physical Sciences Workshop, Conference on Neural Information Processing Systems, 2019.

- [23] O. Azencot, N. B. Erichson, V. Lin, and M. Mahoney. Forecasting sequential data using consistent koopman autoencoders. In Hal Daumé III and Aarti Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 475–485. PMLR, 13–18 Jul 2020.

- [24] Yunzhu Li, Hao He, Jiajun Wu, Dina Katabi, and Antonio Torralba. Learning compositional Koopman operators for model-based control. In International Conference on Learning Representations, 2020.

- [25] I. Mezić. Spectrum of the koopman operator, spectral expansions in functional spaces, and state-space geometry. Journal of Nonlinear Science, 30.5:2091–145, 2019.

- [26] A. Lasota and M. C. Mackey. Chaos, Fractals, and Noise. Springer, New York, NY, 2nd edition, 1994.

- [27] M. Budivsić, R. Mohr, and I. Mezić. Applied koopmanism. chaos, 22:047510, 2012.

- [28] Efrain Gonzalez, Moad Abudia, Michael Jury, Rushikesh Kamalapurkar, and Joel A. Rosenfeld. Anti-koopmanism, 2021.

- [29] R. Abraham, J.E. Marsden, and T. Ratiu. Manifolds, Tensor Analysis, and Applications. Springer, New York, NY, 2nd edition, 2001.

- [30] H. Arbabi and I. Mezić. Ergodic theory, dynamic mode decomposition, and computation of spectral properties of the koopman operator. SIAM Journal on Applied Dynamical Systems, 16:2096–2126, 2017.

- [31] S. L. Brunton, B. W. Brunton, J. L. Proctor, E. Kaiser, and J. N. Kutz. Chaos as an intermittently forced system. Nature Comm., 8(1), 2017.