Deep Learning Chromatic and Clique Numbers of Graphs

Abstract

Deep neural networks have been applied to a wide range of problems across different application domains with great success. Recently, research into combinatorial optimization problems in particular has generated much interest in the machine learning community. In this work, we develop deep learning models to predict the chromatic number and maximum clique size of graphs, both of which represent classical NP-complete combinatorial optimization problems encountered in graph theory. The neural networks are trained using the most basic representation of the graph, the adjacency matrix, as opposed to undergoing complex domain-specific feature engineering. The experimental results show that deep neural networks, and in particular convolutional neural networks, obtain strong performance on this problem.

1 Introduction

Computing the chromatic number and clique number of a graph are classical NP-complete combinatorial optimization problems that have many applications to every day life. Recently, deep neural networks (DNN) have been utilized to develop heuristics in solving combinatorial optimization problems. DNNs offer several advantages as they naturally run in parallel on GPUs with many cores, and can be self-learning. Domain knowledge of the problem is not always necessary as the neural network can learn directly from supervised data and examples.

In [AMSB19] the combinatorial optimization problem of solving Rubik’s cube is studied. Here an A* search algorithm is implemented to unscramble a Rubik’s cube that relies on a heuristic function to determine which node of the search tree to expand. The heuristic function is a DNN that is trained on a large set of examples, where each example results from scrambling the solved cube and recording how many turns it took to reach that scrambled state. The essence is that the neural network learns to look at a scrambled cube and determine how far it is from being solved. The A* search utilizes this information to reach the solved cube goal. Two crucial points of [AMSB19] are that there is a unique goal state, and the problem is not NP-hard. Supervised examples can be generated efficiently to feed the neural network.

In [SGT+08] the Graph Neural Network (GNN) is introduced. Nodes in a graph represent objects or concepts, and edges represent their relationships. Each concept is naturally defined by its features and the related concepts. A learning algorithm is given which estimates the parameters of the GNN model on a set of given training examples. In our paper we represent graphs as adjacency matrices and feed them to standard neural networks. Though the GNN is not as sensitive to node ordering as an adjacency matrix, our hope is that by feeding our neural networks enough training data, it can learn more invariant properties of graphs.

[LPAL19] uses Graph Neural Networks (GNNs) to address the graph coloring problem. Their algorithm seeks to determine if a given graph admits a -coloring, hence it is presented as a binary classification problem. The authors considered graphs with a vertex set size , and chromatic number .

Inspired by the AlphaGoZero [SSS+17] algorithm, [HPD19] uses a deep reinforcement learning algorithm for the graph coloring problem. By formulating the problem as a Markov Decision Process, AlphaGoZero with graph embeddings is adapted to learn new heuristics in graph coloring.

[VSPRB20] provides a survey of machine learning approaches across an array of combinatorial optimization problems on graphs such as maximum cut, graph coloring, and maximal clique. Both supervised and reinforcement learning methods are evaluated and compared.

In [XT20] a GNN is used to guide a Monte Carlo tree search in solving the traveling salesman problem (TSP) in the spirit of AlphaGoZero. Their results show shorter tours than other state of the art learning algorithms.

Our main goal is to use Deep Neural Networks (DNN) to approximate the chromatic number and clique number of a graph. Ultimately a goal would be to develop an algorithm analogous to [AMSB19] that can constructively color a graph and find the set of maximal cliques. However formidable obstacles make it difficult to directly apply [AMSB19]. Given a scrambled Rubik’s cube, the minimal number of turns needed to unscramble the cube is a well defined function since there is a unique goal state to focus upon. In graph coloring there are many possible optimal colorings so it would be difficult to define a function that could measure how far a partially colored graph is from an optimal coloring. Another key difference is graph coloring is NP-complete, while the Rubik’s cube is not. This makes it difficult to generate learning examples.

Let be a finite graph, where is the set of vertices, and is the set of edges; with order and size We consider vertex colorings of and let denote the chromatic number of the minimal number of colors needed so that no two adjacent vertices are assigned the same color. Let denote the clique number, the maximum number of vertices in a maximum clique of

It is not clear as to the best way to input a graph to a neural network without losing its topological properties. Our hope was that the neural network would learn to see the edges connecting vertices, like a human would. As the adjacency matrices is square, and convolutional neural networks (CNN) are known to excel at computer vision, we felt they were a good place to start. We study various DNN architectures and measure how well they can learn or for a graph We generate a large number of examples of random graphs of order with and computed exactly. See §2.

After experimentation we were able to train CNNs to approximate the chromatic number and clique number with roughly 90% accuracy (we defined success if we were off by one or less). See §4.

The complete code to generate our data and to implement the neural networks can be found at github.com/mathprofessor/coloring1.

2 Generating Training Data

2.1 Generating Random Graphs

Let Denote the set of graphs of order by When generating graphs of order we feel it is more natural for the DNN to also encounter graphs of all orders less than so we actually generate graphs of various orders and embed them in Let

Let denote the complete graph of order Note that and Let be a uniformly random number between and To generate a random graph of order we shuffle the set of edges and delete of the edges. Denote this graph by

Next append the vertices to and do not modify the edges Denote this new graph by Note that it contains vertices that are isolated. It is of order but we wish to embed it in a more random manner into Note that each of the vertices of has no edges incident with it.

Choose a random permutation and define where Next define

Finally for a given and we generate different graphs This will generate a training set with examples. The Python code to generate our data sets is available at github.com/mathprofessor/coloring1.

2.2 Calculating the Chromatic Number and Clique Number

Let be a graph. To compute we utilize the algorithm of Bron and Kerbosch [BK73]. More specifically we encode our graph using the Python library NetworkX [HSS08] which implements an updated version [BK73, TTT06, CK08].

Though computing maximal cliques and graph colorings are independent problems, we can utilize the maximal clique as a heuristic to speed up finding an optimal coloring using a SAT solver. Specifically we use the open source constraint programming solver Google OR-Tools: CP-SAT [PF19].

Let and let For let be the color of vertex where is integer valued with values also in Let be an integer valued variable, also with values in Suppose is a maximal clique of

We consider the following constraint programming problem with objective:

-

1.

For each in arbitrarily assign to a unique color in

-

2.

For each edge we add the constraint

-

3.

For each vertex we have

-

4.

Finally we add an objective to minimize the variable

We summarize these steps in Algorithm 1. The Python code to implement the above algorithm can be found at github.com/mathprofessor/coloring1.

3 Exploratory Data Analysis

Data was generated as described previously to create three disjoint datasets for training, validation and testing. The training set consists of 249,999 records, while the validation and test datasets each contain 24,999 records.

Figure 1 shows the distribution of the chromatic number and maximum clique size , respectively, for the training dataset. As can be seen from these graphs, the majority of graphs have and less than 10, though there is a long tail of larger values as well. The distributions for the validation and test datasets are very similar to what is presented in Figure 1 and hence are omitted.

4 Empirical Results

4.1 Performance Metrics

To measure and compare performance of the different models, the empirical study will use the mean absolute error (mae) and the percentage of records with an absolute error less than or equal to , (typically, ):

where is the number of records in the dataset and and are the actual and predicted values of the dependent variable for record . The absolute error for a single record is denoted as .

Training for the linear regression model uses only the training dataset. Parameters for the deep neural network models are estimated using the training dataset, and the validation dataset is used to determine when to terminate the training process. The test set is only used to estimate the generalization performance. mae is the metric used to train the neural network models, and is computed post-training for evaluation purposes.

4.2 Linear Regression

To set a simple baseline for performance, we built a regression model with the independent variable as the number of edges in the graph, and the dependent variable either or ). The scatterplots for the test data are in Figure 2, along with the regression line.

Intuitively, there should be a positive correlation between the number of edges in a graph and both the chromatic number and maximum clique size. As can be seen from the scatterplot, for a fixed number of edges, there is a wide range of values for and ).

| Metric | ||

|---|---|---|

| mae | 2.31 | 2.62 |

| 26.3% | 24.0% | |

| 42.3% | 40.1% |

Table 1 summarizes the performance metrics of the regression model on test data. The mean absolute error on test data for predicting the chromatic number is 2.31, while the mean absolute error for predicting the maximum clique size is 2.62. There is certainly opportunity for substantial improvement over this very simplistic and naive model.

4.3 Dense Deep Neural Network

The objective of this work is to determine if a deep neural network can be built to predict and ) without performing domain-specific feature engineering, hence using only the adjacency matrix of a graph. Our initial attempt is to use a dense network created using the Sequential API in Keras. For each input graph, the adjacency matrix was flattened into a input vector. We experimented with various hyperparameters, including the number of hidden layers and the number of hidden nodes per layer, activation function, learning rate and optimizer.

Generally speaking, lower values for the number of layers and number of hidden nodes per layer performed worse than higher values, while the choice of activation function, learning rate and optimizer did not substantially change the performance of the dense neural network. Therefore, our final dense model consisted of 13 hidden layers with 1000 nodes per layer, ReLU activation function, and Adam optimizer with the default learning rate. The final layer contains a single node with a linear activation function to produce a single output, either the chromatic number or maximum clique size. Note that all deep learning models were built in Tensorflow version 2.5 using the Keras API.

| Metric | ||

|---|---|---|

| mae | 1.59 | 1.81 |

| 37.5% | 35.1% | |

| 60.7% | 57.7% |

The results for this dense model are presented in Table 2. When predicting the chromatic number, the mae on the test dataset was 1.59, with and . Results for maximum clique size are similar: mae of 1.81, with and . Compared to the results in Table 1, this dense model presents significant lift over the regression model, as would be expected.

4.4 Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are commonly used with images, which are often represented as a 2-dimensional tensor of pixel values (in the case of greyscale images). In our case, the adjacency matrix of a graph is also 2-dimensional, with entries of 1 in the row and column if the two vertices and are connected, and 0 otherwise. Given this representation of the graph, CNNs are a natural choice for the architecture of the neural network.

The input into the CNN is a tensor with dimensions , representing the height (rows), width (columns), and number of channels. We experimented with a number of different architectures, varying the number of convolutional and maximum pooling layers, the number of filters and size of the convolution kernel, in addition to the number of dense layers and hidden nodes per layer.

Section 4.4.1 describes the Sequential CNN model that obtained solid performance on the test data, while Section 4.4.2 describes a more refined architecture that we refer to as a wide convolutional neural network.

| Metric | ||

|---|---|---|

| mae | 0.43 | 0.55 |

| 68.0% | 56.5% | |

| 92.6% | 81.6% |

4.4.1 Sequential Convolutional Neural Network

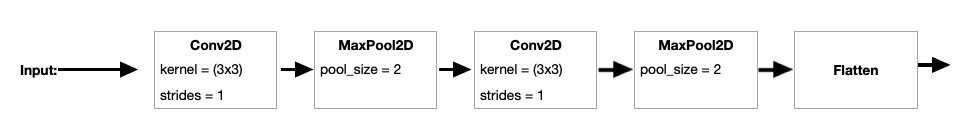

The architecture of the sequential CNN consists of a layer with a kernel and 512 filters, followed by a layer with . A second layer with 64 filters and another layer follow. The output is flattened and fed into a dense network consisting of seven layers and 300 hidden nodes per layer. Finally, the activation function was set to for every layer in the network with the exception of the final dense layer, which consists of a single node with a linear activation function.

The performance of this model, trained separately for the chromatic number and maximum clique size, is shown in Table 3. As can be seen from this table, the performance has improved substantially compared to the dense neural network: mae = for predicting and for .

4.4.2 Wide Convolutional Neural Network

The high level architecture of the wide CNN model is shown in Figure 3. The second stage (after the input) consists of five parallel convolution pipelines with different size filters and strides, described in more detail below. The output of each of these five pipelines is flattened, and the results are concatenated together. The output of the concatenation layer is fed into a dense sequential network with seven hidden layers and 200 hidden nodes per layer, followed by a single dense layer with one node (and linear activation function) to create the output prediction.

Figure 4 shows the first pipeline of the convolution stage of the wide CNN model. The input data is processed through a layer with and , followed by a layer with (note that we use activation functions throughout this network and hence omit from the diagram for simplicity). Additional and layers follow with the same parameters as before. The output is then flattened, and will be combined with the other convolution pipeline branches.

The second and third pipelines in the convolution stage are shown in Figure 5. The second branch uses a by kernel with for the first layer, followed by a layer. The second layer also uses a 5 by 5 kernel, but this time with a stride of 1. As before, the output of this layer is flattened. The third branch is similar to the second, except the kernel size is set to 10 by 10 with in the first layer.

The fourth and fifth branches in the convolution pipeline are shows in Figure 6. Each layer uses very large kernels - 25 by 25 and 50 by 50, respectively. Finally, within all of the convolution pipelines, the first layer uses 512 filters, while the second uses filters.

Compared to the sequential CNN in Section 4.4.1, the wide CNN takes the foundational component of 2 convolutional layers with a kernel size of 3 by 3, fed into a dense network, and adds additional parallel convolutional layers with varying kernel sizes to the network. The idea is to use these varying kernel sizes to capture different relationships between vertices in the graph; indeed by considering only 3 by 3 kernels, relationships between larger subsets of vertices may be missed.

Table 4 shows the results of the wide CNN model. When predicting the chromatic number, the model obtains an mae of 0.38 and of 90.9%; in other words, over 90% of the records in the test dataset had a prediction for the chromatic number within 1 of the true chromatic number. The mae of the wide CNN model for predicting the maximum clique size is 0.47, with .

| Metric | ||

|---|---|---|

| mae | 0.38 | 0.47 |

| 68.7% | 60.1% | |

| 90.9% | 88.0% |

4.5 Discussion of Results

The performance of the four different models - a baseline regression model, a Dense Sequential Neural Network and two different Convolutional Neural Networks - is summarized in Figure 7 and in Table 5.

Figure 7 shows the significant improvement in both metrics and towards the convolutional neural networks. Clearly the CNN architecture is detecting the relationships between nodes in the graph directly from the adjacency matrix in a way that is useful for predicting and , showing substantial lift over a dense neural network.

| Model | mae | % Impr. | mae | % Impr. |

|---|---|---|---|---|

| Regression | 2.31 | - | 2.62 | - |

| Dense NN | 1.59 | 31.2% | 1.81 | 30.9% |

| Sequential CNN | 0.43 | 81.4% | 0.55 | 79.0% |

| Wide CNN | 0.38 | 83.5% | 0.47 | 82.1% |

Table 5 summarizes the mae values obtained on test data for each of the models. In addition, the column titled ‘% Impr.’ shows the percentage improvement in the mean absolute error versus the baseline (regression) model. For example, the Sequential CNN obtained an mae of 0.43, which is 81.4% lower than the mae of the regression model. As with the data shown in Figure 7, convolutional neural networks significantly outperform other approaches. Further, the wide CNN, which combines convolution filters with different kernel sizes and strides, is able to combine information in a way that allows it to outperform a more standard CNN.

To provide a more in-depth understanding of the performance of the wide CNN, Figure 8 shows the distribution of the absolute error for predicting on the test data. To improve readability, records in the test data were first divided into groups by the value of (e.g., ). A boxplot showing the distribution of absolute error for each group of records is then plotted.

Each box shows three quartile values (e.g., percentile, median, and percentile) with whiskers that extend to 1.5 times the interquartile range (IQR). As expected, the distribution of errors increases as the chromatic number increases, however performance of the model is very good regardless of the chromatic number.

To further analyze performance, an additional metric, the absolute percentage error (ape), is considered. ape divides the absolute error by the actual target value, and is typically expressed as a percentage:

Similar to mae, the mape is the mean absolute percentage error over all records in the test data:

Absolute percentage error is useful for counteracting the phenomenon observed in Figure 8, namely that as the value for the target variable increases, the observed absolute errors also tend to increase, but relative to the value of the target variable, the increases in error are not as meaningful. For example, if and , but . Compare this to a situation where and . Records and have the same absolute error (), however the absolute percentage error for is , compared to for . We note that there are many additional performance metrics proposed in the literature (e.g., weighted mape, symmetric mape), however a full consideration of these metrics does not have a significant impact on this work.

| Model | ||

|---|---|---|

| Sequential CNN | 5.49% | 7.41% |

| Wide CNN | 4.63% | 6.69% |

Table 6 shows the mape for both CNN models on the test data. All models achieve a mape significantly less than 10, which we would characterize as strong performance. Furthermore, Figure 9 shows the distribution of ape obtained by the wide CNN across different ranges of , similar to Figure 8. As you can see from Figure 9, the IQR is relatively stable across groups, but as expected, there is a higher level of skew in ape when is small (i.e., when dividing by a small actual value, small absolute errors can become large percentage errors).

5 Conclusion

Combinatorial optimization problems are of growing interest in the machine learning community, and in the context of graphs, graph coloring and clique search are two important areas of study. Searching for an optimal coloring and determining the maximum clique in a graph are known to be NP-complete, and research has begun to look at ways to improve heuristics to address these challenges.

In this research, we have trained deep learning models to directly predict the chromatic number and maximum clique size from the graph adjacency matrix. Since this is a supervised learning application, our training set consisted of graphs with known values for and . The experimental results demonstrated conclusively that deep neural networks, and in particular convolutional neural networks, were able to learn from the training set and generalize well to previously unseen graphs of the same size. In particular, learning occurred without the need for domain-specific feature engineering of the graph input.

Future work will look to expand the scope of investigation to larger networks, where training data may be more limited due to the time involved in generating labeled data from large graphs. In addition, future work will consider the problem of graph coloring itself, i.e., coloring each node in a graph, as opposed to only predicting the number of colors needed.

References

- [AMSB19] Forest Agostinelli, Stephen McAleer, Alexander Shmakov, and Pierre Baldi, Solving the rubik?s cube with deep reinforcement learning and search, Nature Machine Intelligence 1 (2019), no. 8, 356–363.

- [BK73] Coen Bron and Joep Kerbosch, Algorithm 457: finding all cliques of an undirected graph, Communications of the ACM 16 (1973), no. 9, 575–577.

- [CK08] Frédéric Cazals and Chinmay Karande, A note on the problem of reporting maximal cliques, Theoretical computer science 407 (2008), no. 1-3, 564–568.

- [HPD19] Jiayi Huang, Mostofa Patwary, and Gregory Diamos, Coloring big graphs with alphagozero, arXiv preprint arXiv:1902.10162 (2019).

- [HSS08] Aric A. Hagberg, Daniel A. Schult, and Pieter J. Swart, Exploring network structure, dynamics, and function using networkx, Proceedings of the 7th Python in Science Conference (Pasadena, CA USA) (Gaël Varoquaux, Travis Vaught, and Jarrod Millman, eds.), 2008, pp. 11 – 15.

- [LPAL19] Henrique Lemos, Marcelo Prates, Pedro Avelar, and Luis Lamb, Graph colouring meets deep learning: Effective graph neural network models for combinatorial problems, 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), IEEE, 2019, pp. 879–885.

- [PF19] Laurent Perron and Vincent Furnon, Or-tools, developers.google.com/optimization (2019).

- [SGT+08] Franco Scarselli, Marco Gori, Ah Chung Tsoi, Markus Hagenbuchner, and Gabriele Monfardini, The graph neural network model, IEEE transactions on neural networks 20 (2008), no. 1, 61–80.

- [SSS+17] David Silver, Julian Schrittwieser, Karen Simonyan, Ioannis Antonoglou, Aja Huang, Arthur Guez, Thomas Hubert, Lucas Baker, Matthew Lai, Adrian Bolton, et al., Mastering the game of go without human knowledge, nature 550 (2017), no. 7676, 354–359.

- [TTT06] Etsuji Tomita, Akira Tanaka, and Haruhisa Takahashi, The worst-case time complexity for generating all maximal cliques and computational experiments, Theoretical computer science 363 (2006), no. 1, 28–42.

- [VSPRB20] Natalia Vesselinova, Rebecca Steinert, Daniel F Perez-Ramirez, and Magnus Boman, Learning combinatorial optimization on graphs: A survey with applications to networking, IEEE Access 8 (2020), 120388–120416.

- [XT20] Zhihao Xing and Shikui Tu, A graph neural network assisted monte carlo tree search approach to traveling salesman problem, IEEE Access 8 (2020), 108418–108428.

Jason Van Hulse PhD

e-mail: [email protected]

Joshua S. Friedman PhD

Department of Mathematics and Science

United States Merchant Marine Academy

300 Steamboat Road

Kings Point, NY 11024

U.S.A.

e-mail: [email protected], [email protected]