
Deep Learning Based Walking Tasks Classification in Older Adults using fNIRS

Abstract

Decline in gait features is common in older adults and is an indicator of increased risk of disability, morbidity, and mortality. Under dual task walking (DTW) conditions, further degradation in the performance of both the gait and the secondary cognitive task has been found in older adults, and this degradation was significantly correlated with falls history. Cortical control of gait, specifically in the pre-frontal cortex (PFC) as measured by functional near infrared spectroscopy (fNIRS), during DTW in older adults has recently been studied. However, the automatic classification of differences in cognitive activations under single and dual task gait conditions has not yet been extensively studied. In this paper, we formulate this as a classification task and leverage deep learning to automatically classify single task walking (STW), DTW and the single cognitive task (STA). Our analysis of the data samples reveals characteristic differences between HbO2 and Hb values, which are subsequently used as additional features. We perform feature engineering to formulate the fNIRS features as a 3-channel image and apply various image processing techniques for data augmentation to enhance the performance of deep learning models. Experimental results show that pre-trained deep learning models fine-tuned on the collected fNIRS dataset together with gender and cognitive status information can achieve around 81% classification accuracy, which is about 10% higher than traditional machine learning algorithms. We further perform an ablation study to rank features such as the fNIRS levels and/or voxel locations by their contribution to the classification task.

Keywords: functional near infrared spectroscopy, neural networks, walking tasks classification, deep learning, aging

1 Introduction

Mobility impairments are common in older adults, affecting their functional independence and leading to increased risk of disability, morbidity, and mortality [1]. Studies have shown that attention, which is sub-served by the Pre-Frontal Cortex (PFC) and its related circuits, plays a key role in the higher order cognitive control of mobility [2, 3, 4, 5, 6]. Especially under complex and more taxing locomotion tasks such as dual task walking (DTW), allocating attention to competing task demands requires additional attentional resources [7], can result in degradation in both the gait and the secondary task performances, and is sensitive to aging, posing a key risk factor for incident frailty and falls [8, 9]. Hence, assessment and identification of cognitive resource allocation together with gait performance under simple and attention demanding walking conditions can be critical for incident risk assessment and prevention of falls in normal aging as well as in disease populations.

Motor control models of locomotion and robust associations between structural changes in frontal and subcortical brain regions with mobility outcomes have been established [10, 11, 12]. Even though converging evidence suggests a role for cognitive processes, specifically the executive functions, in explaining mobility performance and decline in older adults [2, 3], studies on the real time assessment and specific detection of functional neural correlates of simple and attention-demanding locomotion tasks are scarce. This gap could be due in part to the requirements of subject immobility and supine positioning during scanning in traditional neuroimaging modalities, which make functional imaging of real, on the ground walking unattainable.

Recent studies began to increasingly utilize an emerging neuroimaging modality, namely functional near infrared spectroscopy (fNIRS) to assess cortical control and functional correlates of mobility under simple and attention demanding dual task walking conditions in aging populations [4, 21, 22, 23, 24, 25, 26, 27, 28, 13, 14, 15, 16, 17, 18, 19, 20]. fNIRS is an optics-based non-invasive, safe, portable, and wearable neuroimaging technique [29, 30, 31, 32, 33], which can monitor relative changes in oxygenated-hemoglobin (HbO2) and deoxygenated-hemoglobin (Hb) associated with cognitive activity in real world tasks such as walking and talking.

While the tasks used in the investigation of functional brain mechanisms of mobility using fNIRS technology vary across studies, the most commonly implemented ones involve balance tasks, running, climbing stairs, and STW and DTW conditions [4, 21, 22, 23, 24, 25, 26, 27, 28, 13, 14, 15, 16, 17, 18, 19, 20]. Specifically, prior fNIRS studies have found reproducible and statistically significant increases in HbO2 obtained from the PFC in DTW as compared to STW, due to the greater cognitive demands on attentional resources and gait performance that are inherent in the DTW condition [27, 28, 13, 14, 15, 16, 17, 18, 19, 20]. Furthermore, it was found that cortical responses to task demands, specifically in the DTW condition, were moderated by age [28], gender and stress [13], fatigue level [14], medication use [16], and disease status including diabetes [17], Multiple Sclerosis (MS) [18], mild cognitive impairments [19], and neurological gait abnormalities [26].

Even though a growing number of studies that utilized fNIRS measures on older adults have repeatedly shown that hemodynamic biomarkers from the PFC can provide significant differences between STW and DTW conditions in healthy and disease populations, automatic classification of these tasks using machine learning algorithms has not yet been studied. Automatic detection of attentionally more demanding vs simple walking tasks using discriminative hemodynamic features extracted from HbO2 and Hb can provide information on an individual’s use of his/her attentional resources during active walking. Such automated detection of attentional load during active walking can help in real time identification or prediction of cognitive overload, loss of gait control, reduction in gait performance and even prevention of falls. Moreover, identification of selective features that can discriminate walking task conditions can also lead to further diagnosis, monitoring and automatic classification of different age-related disease conditions where PFC activations in DTW were found to differ.

fNIRS measures have been used in the classification of a wide range of tasks and disease populations in different age groups in prior studies. Some of these applications involve monitoring of mental workload, motor imagery, auditory and visual perception, various brain computer interfaces, pain assessment, anesthesia monitoring, attention deficit and hyperactivity disorder (ADHD) diagnosis, cognitive decline in traumatic brain injury, and diagnosis of various mental illnesses such as schizophrenia [34, 35, 36, 37, 38, 39, 40, 41, 42]. However, there are very few studies on the classification of gait related tasks. Existing studies primarily monitored motor areas and investigated classification of intention or preparation for different types of gait in healthy young adults, primarily for gait rehabilitation applications involving control of assistive devices, where classification accuracy was found to be around 80% [43, 44, 45, 46]. In this small number of prior studies, fNIRS measures from the PFC during single and attentionally demanding dual task active walking conditions, which are indicative of different attentional states and cognitive load conditions in elderly populations, were not studied with machine learning models.

In this study our aim is to achieve automatic classification of walking tasks requiring different levels of cognitive resources. Specifically, we develop a comprehensive pipeline for processing and engineering the collected fNIRS data to efficiently extract the features. We fine-tune pre-trained state-of-the-art deep learning models over the fNIRS dataset and obtain up to 81% accuracy, which is about 10% higher than the traditional machine learning algorithms. We also conduct ablation studies for identifying critical features when using fNIRS for classifying walking tasks of older adults.

This paper is organized as follows: In Section 2, we introduce the participants and our task protocol. In Section 3, we explain our proposed methods in detail. We present the results of our comprehensive experiments in Section 4 and, finally, we provide concluding remarks in Section 5. To the best of our knowledge, we are the first to apply deep learning methods in fNIRS-based walking task classification for older adults.

2 Participants and Task Protocol

2.1 Participants

The study involved a total of community dwelling older adults in Lower Westchester county, NY of age 65 years and older (76.16 ± 6.67, 223 females) who were originally enrolled in a longitudinal cohort study entitled “Central Control of Mobility in Aging” (CCMA) [4, 25]. Recruitment procedures started with the identification of potential participants from population lists and then conducting a structured telephone interview to obtain verbal assent, assess medical history, mobility and cognitive functioning. Participants with significant loss of vision and/or hearing, inability to ambulate independently, current or history of severe neurological or psychiatric disorders, and recent or anticipated medical procedures that may affect mobility were excluded from the study. Individuals who agreed to participate in the study, met the inclusion/exclusion criteria and passed the phone interview were invited to two annual in-person study visits each lasting around 3 hours at the research center at Albert Einstein College of Medicine, Bronx, NY. During these visits, participants received a structured neurological examination and comprehensive neuropsychological, psychological, functional, and mobility assessments. Functional brain monitoring using fNIRS during the STW and DTW protocol was completed in one session. Cognitive status was determined at consensus diagnostic case conferences [47]. The Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) was used to characterize overall level of cognitive function [48]. The sample was relatively healthy (Global Health Status mean score = 1.62 ± 1.09) and in the average range of overall cognitive function (RBANS mean Index score = 91.77 ± 11.71). The work described in this manuscript has been executed in adherence with The Code of Ethics of the World Medical Association (Declaration of Helsinki) and the APA ethical standards set for research involving human participants.
Written informed consents were obtained at the first clinic visit according to study protocols approved by the Institutional Review Board at Albert Einstein College of Medicine, Bronx, NY (Protocol #2010-224; Date: 03/03/2022).

2.2 Task Protocol

The task protocol used in this study involved two single-task conditions and one dual-task condition presented in a counterbalanced order using a Latin-square design to minimize task order effects on the outcome measures. The single task conditions were 1) single-task walking (STW) and 2) the single task alpha cognitive interference task (STA). In the STW condition, participants were asked to walk at their “normal pace” around a 4 × 20 foot electronic walkway (Zenometrics system with Zeno electronic walkway using ProtoKinetics Movement Analysis Software (PKMAS), Zenometrics, LLC; Peekskill, NY). In the Alpha (STA) condition, participants were asked to stand still on the electronic walkway while reciting alternate letters of the alphabet out loud (A, C, E…) for 30 seconds. In the dual-task walking (DTW) condition, participants were required to perform the two single tasks at the same time by walking around the walkway at their normal pace while reciting alternate letters of the alphabet. Participants were specifically asked to pay equal attention to both the walking and cognitive interference tasks to minimize task prioritization effects. In both the STW and DTW conditions, participants were asked to walk on the instrumented walkway in three continuous loops that consisted of six straight walks and five left-sided turns. The duration of each task condition varied depending on the individual’s walking speed. Reliability and validity for this walking paradigm have been well established [49].

3 Methods

An overview of the proposed methods utilized in this work is illustrated in Fig. 1. We have four major steps: data collection, data pre-processing, feature engineering and deep learning:

- Data Collection: In data collection, participants were asked to complete the task protocol as instructed, during which their hemodynamic activations were collected using fNIRS. In addition, we also collected subject-related data (gender and RBANS).

- Data Pre-processing: In data pre-processing, we applied different methods such as visual inspection, wavelet denoising, hemodynamic data conversion, and spline and low-pass filtering to obtain the HbO2 and Hb data of participants in the time domain for the different task conditions.

- Feature Engineering: We formulated the pre-processed data as an image tensor. The first two channels of the image tensor represent the pre-processed Hb and HbO2 data, respectively. We also added another channel to the image tensor, which is the difference between HbO2 and Hb, i.e., HbO2 - Hb, referred to as the oxygen index [50].

- Deep Learning: We applied various deep learning algorithms using the PyTorch framework [51]. We used pre-trained deep neural network and vision transformer architectures that are available open-source, fine-tuned them with the engineered fNIRS data, and evaluated the model performance.

3.1 Data Collection

fNIRS System. We utilized the fNIRS Imager 1100 (fNIRS Devices, LLC, Potomac, MD) in this study to collect the hemodynamic activations in the PFC while participants were performing the task protocol [25, 29, 30, 52]. In this fNIRS device, the sensor consists of 4 LED light sources and 10 photodetectors configured as shown in Fig. 2, where each source-detector separation is set to 2.5 cm. The light sources on the sensor (Epitex Inc. type L4X730/4X805/4X850-40Q96-I) contain three built-in LEDs having peak wavelengths at 730, 805, and 850 nm, with an overall outer diameter of 9.2 ± 0.2 mm. The photodetectors (Texas Instruments, Inc., type OPT101) are monolithic photodiodes with a single supply transimpedance amplifier. With the given source-detector configuration and the serial data collection regime of the device, hemodynamic changes in the PFC can be monitored at a sampling rate of 2 Hz with 16 voxels as shown in Fig. 2.

During the fNIRS data collection procedure, the fNIRS sensor was first placed on the forehead of the participant. A standardized sensor placement procedure based on landmarks from the international 10–20 system was implemented [52, 53], where the middle of the sensor was aligned with the nose horizontally and the bottom of the sensor was placed above the eyebrows vertically. Testing was conducted in a quiet room. Participants wore comfortable footwear and performed the task protocol with the fNIRS sensor attached to their forehead throughout the data collection period.

3.2 Data Pre-processing

First, visual inspection was performed on individual data from all voxels to identify and eliminate those with saturation, dark current conditions or extreme noise. Then, to eliminate spike-type noise, wavelet denoising with the Daubechies 5 (db5) wavelet was applied to the raw intensity measurements at the 730 and 850 nm wavelengths, as proposed in [54] and widely applied in fNIRS studies [55]. The artifact-removed raw intensity measurements were then converted to changes in HbO2 and Hb using the modified Beer-Lambert law (MBLL) [20, 30, 56]. In the MBLL, previously published values for the conversion parameters, i.e., the wavelength and chromophore dependent molar extinction coefficients ($\varepsilon$) and the age and wavelength adjusted differential pathlength factor (DPF), were used [20, 30, 57]. Finally, we applied spline filtering [58] followed by a finite impulse response low-pass filter with a cut-off frequency of 0.08 Hz [20, 59] to the HbO2 and Hb data separately to remove possible baseline shifts and to suppress physiological artifacts such as respiration and Mayer waves.
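For reference, the MBLL conversion can be written in its commonly stated two-wavelength form as below. This is a sketch rather than the exact formulation used in the cited works: the notation ($I_0$ for the baseline intensity, $d$ for the source-detector separation, the matrix $E$) is an assumption introduced here, and whether a base-10 or natural logarithm applies depends on the convention of the published extinction coefficients.

```latex
% Change in optical density at each wavelength \lambda \in \{730, 850\} nm
\Delta OD(\lambda) = -\log_{10}\frac{I(\lambda)}{I_{0}(\lambda)}
  = \bigl(\varepsilon_{HbO_2}(\lambda)\,\Delta[HbO_2]
        + \varepsilon_{Hb}(\lambda)\,\Delta[Hb]\bigr)\, d \,\mathrm{DPF}(\lambda)

% Solving the resulting 2 x 2 linear system for the concentration changes
\begin{pmatrix} \Delta[HbO_2] \\ \Delta[Hb] \end{pmatrix}
  = \frac{1}{d}\, E^{-1}
    \begin{pmatrix}
      \Delta OD(730) / \mathrm{DPF}(730) \\
      \Delta OD(850) / \mathrm{DPF}(850)
    \end{pmatrix},
\qquad
E = \begin{pmatrix}
      \varepsilon_{HbO_2}(730) & \varepsilon_{Hb}(730) \\
      \varepsilon_{HbO_2}(850) & \varepsilon_{Hb}(850)
    \end{pmatrix}
```

With two wavelengths and two chromophores the system is exactly determined, which is why the measurements at 730 and 850 nm suffice to recover both concentration changes.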

Data epochs corresponding to each task condition (STW, STA and DTW) were extracted for use in feature extraction and machine learning model generation for automatic activity classification. fNIRS data acquisition and the electronic walkway system for gait analysis were synchronized using a central “hub” computer with E-Prime 2.0 software, where time stamps of the start and end points for each baseline and task condition were marked and recorded [27, 28, 13, 14, 15, 16, 17, 18, 19, 20]. In order to correctly extract the data epochs during the exact walking task execution periods, a second-level time synchronization method was implemented. For the STW and DTW conditions, the extracted HbO2 and Hb data epochs correspond to the time interval from the first recorded foot contact with the walkway until the end of the 6th and final straight walk, as algorithmically determined by PKMAS and previously described in [26]. Finally, proximal 10-second baselines administered prior to each experimental task were used to determine the relative task-related changes in the extracted HbO2 and Hb data epochs for each task condition using the previously described baseline correction method (subtracting the average value of the proximal baseline region data from the following task epoch data) [27, 28, 13, 14, 15, 16, 17, 18, 19, 20]. We then used the HbO2 and Hb data epochs in the DTW, STW and STA tasks for feature extraction and machine learning model development to automatically classify these three tasks.
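The baseline correction described above can be illustrated with a short sketch. The array layout (voxels along the first axis, time along the second), the function name and the synthetic data are assumptions made for illustration; only the 2 Hz sampling rate, the 10-second baseline and the mean-subtraction rule come from the text.

```python
import numpy as np

FS_HZ = 2            # fNIRS sampling rate stated in the text
BASELINE_SEC = 10    # proximal baseline duration stated in the text


def baseline_correct(baseline: np.ndarray, task: np.ndarray) -> np.ndarray:
    """Subtract the per-voxel mean of the baseline from the task epoch.

    baseline: (n_voxels, n_baseline_samples)
    task:     (n_voxels, n_task_samples)
    """
    baseline_mean = baseline.mean(axis=1, keepdims=True)  # one value per voxel
    return task - baseline_mean


# Synthetic stand-in data: 16 voxels, 10 s baseline, 30 s task epoch.
rng = np.random.default_rng(0)
baseline = rng.normal(0.5, 0.1, size=(16, FS_HZ * BASELINE_SEC))
task = rng.normal(0.8, 0.1, size=(16, FS_HZ * 30))
corrected = baseline_correct(baseline, task)
```

The same function would be applied to the HbO2 and Hb epochs separately, once per task condition.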

3.3 Feature Engineering

In this sub-section, we first show the statistical analysis across and within subjects, then present the feature engineering including input formulation for subsequent deep learning algorithms.

Analysis across Subjects. We first illustrate the distribution of task completion time across subjects in Fig. 3. The task completion time differs between subjects and task conditions due to individual variability and normal pace. Note that completion time of the STA is 30 seconds as defined in the task protocol. For the other two tasks, we notice that DTW on average requires more time to complete than STW, since DTW could be a more challenging task for older adults.

Additionally, we investigate the distribution of Hb and HbO2 values under the three task conditions, as provided in the histogram plots in Fig. 4. For Hb levels, all three task conditions exhibit a Gaussian-like distribution whose averages are negative and close to 0, suggesting decreases relative to baseline conditions. The DTW condition shows a higher standard deviation than the two single tasks, suggesting more individual variability in this condition. For HbO2, the distribution of STW still shows a Gaussian-like pattern with an average that is positive and close to 0. However, for DTW and STA, the distributions are shifted to more positive values, suggesting more cognitive activation relative to baseline in these task conditions as compared to STW. Comparatively, levels in DTW show a larger shift than those in STA. Such distribution differences between Hb, HbO2 and HbO2 - Hb levels motivate us to use them as additional features for the machine learning model.

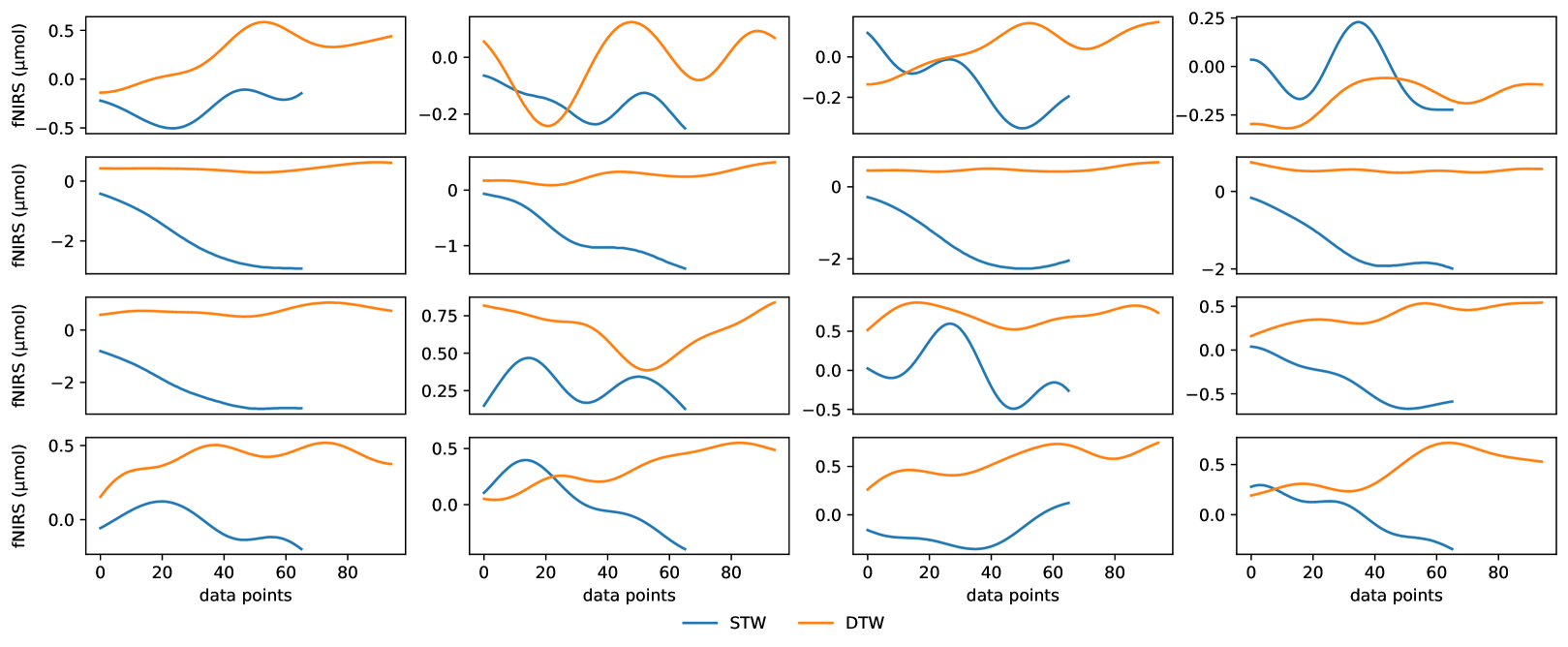

Analysis within Subject. Although Fig. 4 indicates that the difference in hemodynamic activity levels of STW and DTW conditions can be leveraged as features, they could be different at the granularity of each voxel location for each individual subject. A representative subject HbO2 recording under STW and DTW conditions based on each voxel location is shown in Fig. 5. Note that, for this case, the time to complete DTW is higher than STW. We can observe from the plots that, although HbO2 levels in DTW are in general higher than those of STW, for each voxel location the range of values can drastically vary. In some channels and sample points, it can also be observed that HbO2 levels in STW are similar or even higher than DTW. Hence, there is a need to leverage the power of deep learning models beyond mere visual or statistical analysis to enable more accurate and individualized study, particularly to leverage the rich information revealed with each voxel location.

Input Formulation. Based on the observations from the analysis, we aggregate the HbO2, Hb and HbO2 - Hb levels and formulate each sample as a 3-channel image that serves as input to the subsequent machine learning model. The first and the second channels are the HbO2 and Hb levels, respectively, and the third channel is the difference between the HbO2 and Hb levels. For the $i$-th sample in the dataset of each subject and task condition, the size is $3 \times V \times T_i$, where $V$ refers to the number of forehead voxel locations and $T_i$ refers to the number of sample points, which is twice the task completion time in seconds since we use a sampling frequency of 2 Hz.

Although there are 16 voxel locations, fNIRS data from one or a few voxel locations are missing in some samples because of excessive artifacts that could not be cleaned. We screen the samples and accept those with no more than 3 missing voxel locations; any sample with more than 3 missing voxel locations is regarded as invalid and is not used in training or inference. The number of valid samples in the dataset after removing is . For the missing data we use interpolation to reconstruct the sample into an image of size $3 \times 16 \times T_i$, with $T_i$ the number of sample points.

Since the dataset is relatively small compared with general computer vision datasets, which usually contain more than tens of thousands of images, we use pre-trained deep learning models rather than training models afresh to prevent overfitting. Additionally, due to the differences in task completion time, the dimensions of the images are not consistent. Therefore, we perform further image processing to make the input image dimensions conform to the input of deep learning models that are usually pre-trained with the ImageNet dataset [60], i.e., $3 \times 224 \times 224$. Although such processing or resizing of inputs is common in deep learning, how the images are resized into $224 \times 224$ can potentially have an impact on the learning performance [61]. Note that, for each sample, data beyond the first 224 points are truncated, while data with fewer than 224 points are padded with 0. We intentionally avoid row-wise interpolation because we want the fNIRS data to keep the information on the task completion time and pace of each subject, which can be an important marker.
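The channel stacking and the truncate-or-pad rule described above can be sketched as follows. The axis order (channel, voxel, time), the function name and the constant names are assumptions for illustration; the source only specifies the three channels, the 16 voxel locations and the 224-point limit.

```python
import numpy as np

N_VOXELS = 16      # voxel locations on the sensor
TARGET_LEN = 224   # number of time samples kept per image


def make_image(hbo2: np.ndarray, hb: np.ndarray) -> np.ndarray:
    """Stack HbO2, Hb and HbO2 - Hb into a (3, n_voxels, TARGET_LEN) array.

    hbo2, hb: (n_voxels, n_samples) arrays for one subject and task condition.
    """
    image = np.stack([hbo2, hb, hbo2 - hb], axis=0)    # (3, n_voxels, n_samples)
    n_samples = image.shape[-1]
    if n_samples >= TARGET_LEN:
        return image[..., :TARGET_LEN]                 # truncate long recordings
    pad = TARGET_LEN - n_samples
    return np.pad(image, ((0, 0), (0, 0), (0, pad)))   # zero-pad short recordings


# Synthetic stand-in data: a 30 s epoch (60 samples) and a 150 s epoch (300 samples).
rng = np.random.default_rng(0)
short = make_image(rng.normal(size=(N_VOXELS, 60)), rng.normal(size=(N_VOXELS, 60)))
long_ = make_image(rng.normal(size=(N_VOXELS, 300)), rng.normal(size=(N_VOXELS, 300)))
```

Because short recordings are zero-padded rather than stretched, the position where the padding begins still encodes the task completion time.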

In order to find the optimal processing configuration, we sweep across 3 parameters of commonly used resizing techniques for deep learning model inputs [61], which gives us 5 different configurations (bilinear or bicubic interpolation, each with or without corner alignment, plus interleave repeat). The 3 parameters are visualized in Fig. 6 and explained below:

- Interpolation mode: Interpolation inserts data between the original data points to enlarge the input size to $224 \times 224$. The available options are bilinear or bicubic interpolation.

- Corner alignment: This parameter decides whether to force corner alignment during interpolation. If true, the levels from the first and last voxel locations will be used as corners so that no interpolated values appear at the corners of the processed image.

- Interleave repeat: As we have 16 voxel locations, we can interleave-repeat the data of each location 14 times to achieve the required dimension of 224 (row-wise) as an alternative to interpolation. Interleave repeat duplicates the data exactly row-wise, which shows up visually as clear borders between voxel locations, as illustrated in Fig. 6.
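The five configurations can be sketched with standard PyTorch calls. This is an illustrative sketch, not the authors' code: the helper names and the assumed input layout of (3, 16, 224) (channel, voxel, time) are hypothetical, while `torch.nn.functional.interpolate` and `torch.repeat_interleave` are existing PyTorch functions.

```python
import torch
import torch.nn.functional as F

TARGET = 224


def resize_by_interpolation(image: torch.Tensor, mode: str,
                            align_corners: bool) -> torch.Tensor:
    """Interpolate a (3, 16, 224) image to (3, 224, 224)."""
    batch = image.unsqueeze(0)                     # interpolate expects (N, C, H, W)
    out = F.interpolate(batch, size=(TARGET, TARGET), mode=mode,
                        align_corners=align_corners)
    return out.squeeze(0)


def interleave_repeat(image: torch.Tensor) -> torch.Tensor:
    """Repeat each voxel row so that 16 rows become 224 (16 * 14 = 224)."""
    repeats = TARGET // image.shape[1]
    return torch.repeat_interleave(image, repeats, dim=1)


image = torch.randn(3, 16, 224)                    # synthetic stand-in sample
configs = {
    "bilinear, aligned": resize_by_interpolation(image, "bilinear", True),
    "bilinear, not aligned": resize_by_interpolation(image, "bilinear", False),
    "bicubic, aligned": resize_by_interpolation(image, "bicubic", True),
    "bicubic, not aligned": resize_by_interpolation(image, "bicubic", False),
    "interleave": interleave_repeat(image),
}
```

With corner alignment enabled, the first and last rows of the output reproduce the first and last voxel rows of the input, whereas interleave repeat copies every voxel row verbatim into a band of 14 identical rows.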

In summary, after feature engineering, we are able to process and enhance the original fNIRS samples, which have missing data and inconsistent dimensions, into images of shape $3 \times 224 \times 224$ that are ready for the deep learning models.

3.4 Deep Learning

We leverage state-of-the-art deep learning models for machine vision and/or image classification. We first train the models afresh, i.e., without any pre-training. However, since the fNIRS dataset is rather small, the models easily overfit: the accuracy on the training set exceeds 90% while the accuracy on the inference set is only around 70%.

This is a common issue in medical imaging and bioinformatics applications, where clinical datasets are usually much smaller than large general image datasets. Thus, for domain specific applications with proprietary datasets, transfer learning [62] is usually favored: the model is first pre-trained on a general, public image dataset and then fine-tuned on the proprietary dataset. In this work, using ResNet as an example, we obtain an open-source, publicly available ResNet model that was trained on the ImageNet dataset, which has 1000 classes. We then change the output dimensionality of the last classifier (the fully connected layer) from 1000 to 3 to match the three task conditions. We fine-tune this model with the processed images to fit it to the fNIRS dataset for classifying the three task conditions.

We implement our deep learning model using various architectures that are basically in two families: deep convolutional neural networks and vision transformer attention networks. Model details and configurations are introduced in the experimental results section Sec. 4.

4 Experimental Results

In this section, we present the experimental results on the machine learning model for the classification of tasks in older adults. We evaluate the impact of different feature combinations as well as different machine learning algorithms on classification accuracy and computational efficiency.

4.1 Experimental Setup

Environment. We use PyTorch, a machine learning framework for Python [51], to implement the machine learning models. We split the samples into training and inference sets with an 8:2 ratio, and the results are obtained with 5-fold cross validation. We use the Adam optimizer with a learning rate of 0.001 and halt fine-tuning when the accuracy no longer increases; thus the total number of epochs as well as the fine-tuning time can vary across models.
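A minimal sketch of this evaluation protocol is given below. It makes several assumptions not stated in the text: the split is done at the sample level with a random permutation, and the stopping rule uses a hypothetical `patience` parameter, since the text only says that fine-tuning halts when accuracy stops increasing.

```python
import numpy as np


def five_fold_splits(n_samples: int, n_folds: int = 5, seed: int = 0):
    """Yield (train_idx, test_idx) pairs; each fold holds out about 1/n_folds."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    folds = np.array_split(order, n_folds)
    for k in range(n_folds):
        test_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        yield train_idx, test_idx


def should_stop(acc_history, patience: int = 3) -> bool:
    """Return True once the best accuracy is at least `patience` epochs old."""
    if len(acc_history) <= patience:
        return False
    best_epoch = int(np.argmax(acc_history))
    return len(acc_history) - 1 - best_epoch >= patience


splits = list(five_fold_splits(100))   # 100 is a placeholder dataset size
```

With five folds, every split has the 8:2 training-to-inference ratio, and each sample appears in the inference set exactly once.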

Models. We use the state-of-the-art deep learning and/or machine vision models from two architectures: deep neural networks and vision transformer attention networks. We sweep across different architectures including deep convolutional neural networks such as ResNet [63], VGG [64], MobileNet [65], EfficientNet [66], and TinyNet [67]. We also evaluate on recently emerging attention based vision transformer models [68]. All the pretrained deep learning models are fetched from online open source repositories via PyTorch Image Models (timm) package [69].

As a comparative study, we also implement several baseline machine learning models, including traditional machine learning models of decision tree, random forest (with 25 trees), and k-nearest neighbors (k = 5) which are implemented via Scikit-Learn package [70].
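The baseline configuration can be sketched with scikit-learn as follows. The hyperparameters (25 trees, k = 5) come from the text; flattening each image into a feature vector, the synthetic data and the fixed 120/30 split are assumptions made purely for illustration.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
# Synthetic stand-in data: 150 flattened 3 x 16 x 224 images, 3 task labels.
X = rng.normal(size=(150, 3 * 16 * 224)).astype(np.float32)
y = rng.integers(0, 3, size=150)
X_train, X_test, y_train, y_test = X[:120], X[120:], y[:120], y[120:]

baselines = {
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(n_estimators=25, random_state=0),
    "k-Nearest Neighbors": KNeighborsClassifier(n_neighbors=5),
}

scores = {}
for name, clf in baselines.items():
    clf.fit(X_train, y_train)
    # Store (training accuracy, inference accuracy) for each baseline.
    scores[name] = (clf.score(X_train, y_train), clf.score(X_test, y_test))
```

On random data such as this, an unconstrained decision tree memorizes the training set while performing at chance level on held-out samples, which illustrates the kind of overfitting that tree-based baselines are prone to on small datasets.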

4.2 Classification Results

Comparison with Baselines. The results of this classification task, including accuracy and error bars for the different learning models (baseline and deep learning), are presented in Table 1.

We first observe that the deep learning models outperform all the baseline traditional learning algorithms on the inference set, in most cases by more than 10%. In particular, the decision tree and random forest are extremely overfit to the training data, with higher than 99% training accuracy but poor performance on the inference set. K-nearest neighbors can hardly learn efficiently, as its accuracy on the training set is only around 77%, which is considerably lower than that of all the other models.

Some of the deep learning models also experience different degrees of overfitting. Particularly for larger models such as VGG, ResNet and ViT-Base, the accuracy on the training set is mostly 94% or higher, except for VGG. Smaller models like MobileNetV2, TinyNet and EfficientNet are usually less overfit, as their accuracy on the training set is 90% – 93%.

The top three models according to accuracy on inference set are: ResNet-18, VGG-13 and TinyNet-E. All the three models can achieve accuracy near 81%. We use these three models for the following ablation study and efficiency analysis.

| Models | Train Acc. | Infer. Acc. |
|---|---|---|
| Decision Tree | 0.994 ± 0.002 | 0.628 ± 0.01 |
| Random Forest (n_tree = 25) | 0.997 ± 0.001 | 0.662 ± 0.011 |
| k-Nearest Neighbors (k=5) | 0.767 ± 0.028 | 0.646 ± 0.004 |
| ResNet-18 | 0.940 ± 0.019 | 0.810 ± 0.023 |
| ResNet-26 | 0.944 ± 0.018 | 0.800 ± 0.016 |
| VGG-13 | 0.905 ± 0.022 | 0.808 ± 0.022 |
| VGG-16 | 0.899 ± 0.008 | 0.800 ± 0.006 |
| ViT-Base | 0.962 ± 0.007 | 0.794 ± 0.017 |
| TinyNet-E | 0.906 ± 0.012 | 0.810 ± 0.017 |
| MobileNetV2-050 | 0.923 ± 0.015 | 0.791 ± 0.024 |
| EfficientNet-b0 | 0.927 ± 0.036 | 0.751 ± 0.106 |
| ViT-Tiny | 0.968 ± 0.009 | 0.796 ± 0.013 |

4.3 Ablation Study

Dimension. We perform an ablation study to identify the contribution of features. First, we rank the contribution of each dimension in the input sample, i.e., Hb, HbO2 and the difference between HbO2 and Hb, to the classification performance. To do so, we remove one dimension of the image while keeping the data in the other two dimensions intact and observe the change in accuracy on the inference set. According to Fig. 7, removing any of the three dimensions causes accuracy degradation. However, model accuracy shows different sensitivity to different dimensions. Specifically, removing the HbO2 or the HbO2 - Hb dimension induces a larger accuracy drop than removing Hb. This aligns with the statistical analysis in Fig. 4: the HbO2 levels differ in distribution across task conditions, which indicates more discriminative features, while the Hb levels preserve a similar Gaussian distribution under the three task conditions and hence are not very selective. Our results are also in line with prior findings that HbO2, and hence the oxygenation, is more reliable and sensitive to locomotion-related changes in cerebral blood flow [71] and therefore provides the most distinctive features.
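One way to realize this kind of ablation is sketched below. The text does not state how a dimension is removed, so zeroing the channel is an assumption, as are the channel order (taken from the Input Formulation paragraph) and the function name.

```python
import numpy as np

# Channel order assumed from the input formulation: HbO2, Hb, HbO2 - Hb.
CHANNELS = {"HbO2": 0, "Hb": 1, "HbO2 - Hb": 2}


def drop_channel(images: np.ndarray, name: str) -> np.ndarray:
    """Return a copy of (N, 3, H, W) images with one channel set to zero."""
    ablated = images.copy()
    ablated[:, CHANNELS[name]] = 0.0
    return ablated


rng = np.random.default_rng(0)
images = rng.normal(size=(4, 3, 224, 224))        # synthetic stand-in batch
without_hb = drop_channel(images, "Hb")
```

Zeroing keeps the input shape at three channels, so the same pre-trained architecture can be fine-tuned on each ablated variant and the resulting inference accuracies compared directly.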

| Models | bilinear, aligned | bilinear, not aligned | bicubic, aligned | bicubic, not aligned | interleave |
|---|---|---|---|---|---|
| ResNet-18 | 0.81 | 0.8 | 0.808 | 0.797 | 0.802 |
| VGG-13 | 0.806 | 0.808 | 0.798 | 0.804 | 0.798 |
| TinyNet-E | 0.799 | 0.795 | 0.81 | 0.806 | 0.802 |

Image Processing. As shown in Fig. 6 (images are normalized for visualization purposes), we apply 5 different image processing configurations in total. As an ablation study, we analyze the performance impact of image processing by comparing the inference set accuracy under the different configurations in Table 2. Although the accuracy can vary by up to 2% across configurations, no single configuration dominates the others. Based on this observation, we conclude that the best configuration varies across deep learning models and must be selected by evaluating each model individually.

Hemisphere. We also characterize hemispheric contributions to the classification accuracy. We prune the input samples by removing all data from the voxel locations of one hemisphere (Channels 1 - 8 for left and 9 - 16 for right), use only the remaining data for deep learning, and evaluate the model performance. Based on Fig. 8, we observe that for the TinyNet-E model, data from the left hemisphere seem to contribute more, while for the ResNet-18 and VGG-13 models, removing data from the right hemisphere causes a larger accuracy drop. In general, removing data from either hemisphere results in accuracy degradation of up to 8%, indicating that it is preferable to use the data from all voxel locations for better model performance.
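The hemisphere pruning can be sketched in the same spirit. The snippet assumes that the last axis of each sample indexes the 16 voxel channels in the order given above, and that pruning is done by zeroing so that the input shape expected by the pre-trained models is preserved; both are assumptions for illustration, and `HEMISPHERE` and `remove_hemisphere` are hypothetical names.

```python
import numpy as np

# Channels 1-8 are left and 9-16 are right (1-based in the text, 0-based here).
HEMISPHERE = {"left": slice(0, 8), "right": slice(8, 16)}


def remove_hemisphere(samples: np.ndarray, side: str) -> np.ndarray:
    """Return a copy of `samples` with the 8 channels of one hemisphere zeroed.

    The last axis of `samples` must have size 16 (one entry per voxel channel).
    """
    if samples.shape[-1] != 16:
        raise ValueError("expected 16 voxel channels on the last axis")
    out = samples.copy()
    out[..., HEMISPHERE[side]] = 0.0
    return out
```

Evaluating a fine-tuned model on `remove_hemisphere(x, "left")` and on `remove_hemisphere(x, "right")` and comparing both against the accuracy on the full input yields the kind of per-hemisphere comparison reported in Fig. 8.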

4.4 Model Efficiency

We also provide insights into the cost and overhead of the three models, including the number of parameters, model size, time for model fine-tuning and inference, and throughput (samples per second), in Table 3. Time and throughput data are obtained with an NVIDIA Tesla P100 GPU.

ResNet-18 and VGG-13 have relatively larger model sizes and show lower throughput. In particular, although VGG-13 is quite large, with around 130M parameters, it does not show more competitive accuracy than the other two models. For TinyNet-E, since the classifier layer (fully connected layer) output is reduced to 3, the model becomes drastically more compact, and its throughput is nearly triple that of ResNet-18 and more than five times that of VGG-13. However, in our experiments, TinyNet-E requires more epochs to achieve comparable accuracy; thus, even though it is the smaller model, it takes more time to fine-tune than ResNet-18.

| Models | Params | Model Size (MB) | Finetune/Infer Time (sec) | Throughput (samples/sec) |
|---|---|---|---|---|
| ResNet-18 | 11.18M | 42.7 | 55.1/0.250 | 4.9K |
| VGG-13 | 129M | 491.9 | 180.5/0.459 | 2.6K |
| TinyNet-E | 0.8M | 2.9 | 78.4/0.089 | 13.7K |
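As a quick consistency check on Table 3, the short script below derives the throughput ratios and the storage cost per parameter from the reported numbers. It is only a worked calculation on the table values; interpreting MB as 2^20 bytes is an assumption, and the dictionary `table3` and the helper names are hypothetical.

```python
# Values copied from Table 3 (parameters in millions, throughput in thousands).
table3 = {
    "ResNet-18": {"params_m": 11.18, "size_mb": 42.7, "throughput_k": 4.9},
    "VGG-13": {"params_m": 129.0, "size_mb": 491.9, "throughput_k": 2.6},
    "TinyNet-E": {"params_m": 0.8, "size_mb": 2.9, "throughput_k": 13.7},
}


def throughput_ratio(model: str, baseline: str) -> float:
    """How many times faster `model` processes samples than `baseline`."""
    return table3[model]["throughput_k"] / table3[baseline]["throughput_k"]


def bytes_per_param(model: str) -> float:
    """Stored bytes per parameter, assuming 1 MB = 2**20 bytes."""
    row = table3[model]
    return row["size_mb"] * 2**20 / (row["params_m"] * 1e6)


for name in table3:
    print(f"{name}: {bytes_per_param(name):.2f} bytes per parameter")
print(f"TinyNet-E vs ResNet-18: {throughput_ratio('TinyNet-E', 'ResNet-18'):.1f}x")
print(f"TinyNet-E vs VGG-13: {throughput_ratio('TinyNet-E', 'VGG-13'):.1f}x")
```

Under that assumption, ResNet-18 and VGG-13 come out at about 4 bytes per parameter, which would be consistent with 32-bit weights, and TinyNet-E processes samples about 2.8 times as fast as ResNet-18 and about 5.3 times as fast as VGG-13.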

5 Conclusion

Functional near infrared spectroscopy (fNIRS) is an optics-based, non-invasive neuroimaging modality that is increasingly used as a safe and portable method to assess the cortical control of gait. Notably, fNIRS studies repeatedly show increased activation in the prefrontal cortex from single task walk (STW) to dual-task walk (DTW) conditions in older adults due to the increased attentional demands of DTW, which is also an established risk factor for incident frailty, disability, and mortality. In this paper, we introduce and integrate emerging deep learning methods into the pipeline of using fNIRS measures based on oxygenated (HbO2) and deoxygenated hemoglobin (Hb) to detect and classify task conditions in older adults and to assess their cognitive capabilities during single and dual task locomotion. We develop an extensive framework for data collection, pre-processing, feature engineering, and deep learning, and we leverage the learning capabilities of deep neural network models, which surpass traditional machine learning models by at least 10% in terms of classification accuracy. To the best of our knowledge, this is the first study to introduce deep learning methods into fNIRS-based single and dual task walking classification in older adults.

References

- [1] S. Studenski et al. “Gait speed and survival in older adults” In JAMA 305.1, 2011, pp. 50–58

- [2] R. Holtzer et al. “The relationship between attention and gait in aging: facts and fallacies” In Motor Control 16, 2012, pp. 64–80

- [3] G. Yogev-Seligmann et al. “The role of executive function and attention in gait” In Mov. Disord. 23.2, 2008, pp. 329–42

- [4] R. Holtzer et al. “Neuroimaging of mobility in aging: a targeted review” In Journals of Gerontology Series A: Biomedical Sciences and Medical Sciences 69.11, 2014, pp. 1375–88

- [5] Marianna Amboni et al. “Cognitive contributions to gait and falls: evidence and implications” In Movement disorders 28.11 Wiley Online Library, 2013, pp. 1520–1533

- [6] Ulman Lindenberger et al. “Memorizing while walking: increase in dual-task costs from young adulthood to old age.” In Psychology and aging 15.3 American Psychological Association, 2000, pp. 417

- [7] Doug Rohrer et al. “When two memories can and cannot be retrieved concurrently” In Memory & cognition 26.4 Springer, 1998, pp. 731–739

- [8] Roee Holtzer et al. “Age-related differences in executive control of working memory” In Memory & cognition 32.8 Springer, 2004, pp. 1333–1345

- [9] J. Verghese et al. “Mobility stress test approach to predicting frailty, disability, and mortality in high-functioning older adults” In J Am Geriatr Soc. 60.10, 2012, pp. 1901–5

- [10] T. Drew et al. “Cortical and brainstem control of locomotion” In Prog Brain Res 143, 2004, pp. 251–261

- [11] M. Lucas et al. “Moderating effect of white matter integrity on brain activation during dual-task walking in older adults” In J Geront. Ser. A 74.4, 2019, pp. 435–441

- [12] M. Wagshul et al. “Multi-modal neuroimaging of dual-task walking: Structural MRI and fNIRS analysis reveals prefrontal grey matter volume moderation of brain activation in older adults” In NeuroImage 189, 2019, pp. 745–754

- [13] R. Holtzer et al. “Stress and gender effects on prefrontal cortex oxygenation levels assessed during single and dual‐task walking conditions” In European Journal of Neuroscience. 45.5, 2017, pp. 660–70

- [14] R. Holtzer et al. “Interactions of subjective and objective measures of fatigue defined in the context of brain control of locomotion” In The Journals of Gerontology: Series A. 72.3, 2017, pp. 417–23

- [15] M. Chen et al. “Neural correlates of obstacle negotiation in older adults: An fNIRS study” In Gait & posture 58, 2017, pp. 130–5

- [16] C. George et al. “The effect of polypharmacy on prefrontal cortex activation during single and dual task walking in community dwelling older adults” In Pharmacological research 139, 2019, pp. 113–9

- [17] R. Holtzer et al. “The effect of diabetes on prefrontal cortex activation patterns during active walking in older adults” In Brain and cognition 125, 2018, pp. 14–22

- [18] M. Hernandez et al. “Brain activation changes during locomotion in middle-aged to older adults with multiple sclerosis” In Journal of the neurological sciences 370, 2016, pp. 277–83

- [19] R. Holtzer and M. Izzetoglu “Mild cognitive impairments attenuate prefrontal cortex activations during walking in older adults” In Brain Sci 10, 2020, pp. 415–31

- [20] M. Izzetoglu and R. Holtzer “Effects of processing methods on fNIRS signals assessed during active walking tasks in older adult” In IEEE Trans. on Neural Systems and Rehab. Eng. 28.3, 2020, pp. 699–709

- [21] R. Vitorio et al. “fNIRS response during walking—Artefact or cortical activity? A systematic review” In Neuroscience & Biobehavioral Reviews 1.83, 2017, pp. 160–72

- [22] F. Herold et al. “Functional near-infrared spectroscopy in movement science: a systematic review on cortical activity in postural and walking tasks” In Neurophotonics. 4, 2017, pp. 4

- [23] D.R. Leff et al. “Assessment of the cerebral cortex during motor task behaviours in adults: a systematic review of functional near infrared spectroscopy (fNIRS) studies” In Neuroimage 54.4, 2011, pp. 2922–36

- [24] D. Hamacher et al. “Brain activity during walking: a systematic review” In Neuroscience & Biobehavioral Reviews 57, 2015, pp. 310–27

- [25] R. Holtzer et al. “Online fronto-cortical control of simple and attention-demanding locomotion in humans” In Neuroimage. 112, 2015, pp. 152–9

- [26] R. Holtzer et al. “Neurological gait abnormalities moderate the functional brain signature of the posture first hypothesis” In Brain topo. 29.2, 2016, pp. 334–43

- [27] R. Holtzer et al. “Distinct fNIRS-Derived HbO2 Trajectories During the Course and Over Repeated Walking Trials Under Single-and Dual-Task Conditions: Implications for Within Session Learning and Prefrontal Cortex Efficiency in Older Adults” In J. of Geront.: Ser. A, 2018

- [28] R. Holtzer et al. “fNIRS study of walking and walking while talking in young and old individuals” In J Geront Ser. A: Biomed Sci. Med. Sci. 66.8, 2011, pp. 879–87

- [29] S. Bunce et al. “Functional near-infrared spectroscopy” In IEEE engineering in medicine and biology magazine 25.4, 2006, pp. 54–62

- [30] M. Izzetoglu et al. “Functional near-infrared neuroimaging” In IEEE Trans. on Neural Systems and Rehab. Eng. 13.2, 2005, pp. 153–9

- [31] F. Scholkmann et al. “A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology” In Neuroimage. 85, 2014, pp. 6–27

- [32] S. Cutini et al. “Functional near infrared optical imaging in cognitive neuroscience: an introductory review” In J. of Near Infrared Spectroscopy 20.1, 2012, pp. 75–92

- [33] V. Quaresima and M. Ferrari “Functional near-infrared spectroscopy (fNIRS) for assessing cerebral cortex function during human behavior in natural/social situations: a concise review” In Org. Res. Met. 428116658959 1094, 2016

- [34] Y. Liu et al. “Multisubject ‘learning’ for mental workload classification using concurrent EEG, fNIRS, and Physiological measures” In Front. in Hum. Neurosci. 11, 2017, pp. 389

- [35] Felix Putze et al. “Hybrid fNIRS-EEG based classification of auditory and visual perception processes” In Frontiers in neuroscience 8 Frontiers, 2014, pp. 373

- [36] A. Chiarelli et al. “Deep learning for hybrid EEG-fNIRS brain–computer interface: application to motor imagery classification” In J Neural Eng 15.3, 2018, pp. 036028

- [37] N. Naseer and K. Hong “fNIRS-based brain-computer interfaces: a review” In Front. in Hum. Neurosci. 9, 2015, pp. 3

- [38] A. Pourshoghi et al. “Application of functional data analysis in classification and clustering of functional near-infrared spectroscopy signal in response to noxious stimuli” In J of Biomed. Optics 21.10, 2016, pp. 101411

- [39] G. Hernandez-Meza et al. “Investigation of optical neuro-monitoring technique for detection of maintenance and emergence states during general anesthesia” In J Clin. Monit. Comp. 32.1, 2018, pp. 147–163

- [40] A. Guven et al. “Combining functional near-infrared spectroscopy and EEG measurements for the diagnosis of attention-deficit hyperactivity disorder” In Neural Comp. Appl. 3, 2019, pp. 1–4

- [41] A. Merzagora et al. “Functional near-infrared spectroscopy and electroencephalography: a multimodal imaging approach” In Int. Conf. on Found. of Aug. Cog. Berlin: Springer, 2019, pp. 417–426

- [42] H. Song et al. “Automatic schizophrenic discrimination on fNIRS by using complex brain network analysis and SVM” In BMC medical informatics and decision making 17.3, 2017, pp. 166

- [43] H. Jin et al. “Pilot study on gait classification using fNIRS signals” In Comp. Intel. and Neurosci., 2018

- [44] C. Li et al. “Detecting self-paced walking intention based on fNIRS technology for the development of BCI” In Medical & Biological Engineering & Computing 21, 2020, pp. 1–9

- [45] M. Rea et al. “Lower limb movement preparation in chronic stroke: a pilot study toward an fNIRS-BCI for gait rehabilitation” In Neurorehabilitation and neural repair 28.6, 2014, pp. 564–75

- [46] R. Khan et al. “fNIRS-based Neurorobotic Interface for gait rehabilitation” In J Neuroeng. Rehab. 15.1, 2018, pp. 7

- [47] R. Holtzer et al. “Within-person across-neuropsychological test variability and incident dementia” In JAMA 300.7, 2008, pp. 823–830

- [48] K. Duff et al. “Utility of the RBANS in detecting cognitive impairment associated with Alzheimer’s disease: sensitivity, specificity, and positive and negative predictive powers” In Archives of clinical neuropsychology : the official journal of the National Academy of Neuropsychologists 23, 2008, pp. 603–612

- [49] R. Holtzer et al. “Performance variance on walking while talking tasks: theory, findings, and clinical implications” In Age (Dordr) 36.1, 2014, pp. 373–381

- [50] Meltem Izzetoglu et al. “Motion artifact cancellation in NIR spectroscopy using Wiener filtering” In IEEE Transactions on Biomedical Engineering 52.5 IEEE, 2005, pp. 934–938

- [51] Adam Paszke et al. “Pytorch: An imperative style, high-performance deep learning library” In Advances in neural information processing systems 32, 2019

- [52] H. Ayaz et al. “Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation” In JoVE (Journal of Visualized Experiments). 8.56, 2011, pp. e3443

- [53] H. Ayaz et al. “Registering fNIR data to brain surface image using MRI templates” In Conf Proc IEEE Eng Med Biol Soc, 2006, pp. 2671–4

- [54] B. Molavi and G. Dumont “Wavelet-based motion artifact removal for functional near-infrared spectroscopy” In Phys. Meas. 33.2, 2012, pp. 259–70

- [55] A. Chiarelli et al. “A kurtosis-based wavelet algorithm for motion artifact correction of fNIRS data” In Neuroimage 112, 2015, pp. 128–137

- [56] M. Cope and D. Delpy “System for long-term measurement of cerebral blood and tissue oxygenation on newborn infants by near infra-red transillumination” In Med. Biol. Eng. Comp. 26.3, 1988, pp. 289–294

- [57] F. Scholkmann and M. Wolf “General equation for the differential pathlength factor of the frontal human head depending on wavelength and age” In Journal of biomedical optics 18.10, 2013, pp. 5004

- [58] F. Scholkmann et al. “How to detect and reduce movement artifacts in near-infrared imaging using moving standard deviation and spline interpolation” In Physiological measurement 31.5, 2010, pp. 649

- [59] M. Yücel et al. “Mayer waves reduce the accuracy of estimated hemodynamic response functions in functional near-infrared spectroscopy” In Biomedical optics express 7.8, 2016, pp. 3078–3088

- [60] Jia Deng et al. “Imagenet: A large-scale hierarchical image database” In 2009 IEEE conference on computer vision and pattern recognition, 2009, pp. 248–255 IEEE

- [61] Hossein Talebi and Peyman Milanfar “Learning to resize images for computer vision tasks” In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 497–506

- [62] Fuzhen Zhuang et al. “A comprehensive survey on transfer learning” In Proceedings of the IEEE 109.1 IEEE, 2020, pp. 43–76

- [63] Kaiming He et al. “Deep residual learning for image recognition” In Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778

- [64] Karen Simonyan and Andrew Zisserman “Very deep convolutional networks for large-scale image recognition” In arXiv preprint arXiv:1409.1556, 2014

- [65] Mark Sandler et al. “Mobilenetv2: Inverted residuals and linear bottlenecks” In Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4510–4520

- [66] Mingxing Tan and Quoc Le “Efficientnet: Rethinking model scaling for convolutional neural networks” In International conference on machine learning, 2019, pp. 6105–6114 PMLR

- [67] Kai Han et al. “Model rubik’s cube: Twisting resolution, depth and width for tinynets” In Advances in Neural Information Processing Systems 33, 2020, pp. 19353–19364

- [68] Alexey Dosovitskiy et al. “An image is worth 16x16 words: Transformers for image recognition at scale” In arXiv preprint arXiv:2010.11929, 2020

- [69] Ross Wightman “PyTorch Image Models” In GitHub repository GitHub, https://github.com/rwightman/pytorch-image-models, 2019 DOI: 10.5281/zenodo.4414861

- [70] Fabian Pedregosa et al. “Scikit-learn: Machine learning in Python” In the Journal of machine Learning research 12 JMLR. org, 2011, pp. 2825–2830

- [71] Taeko Harada et al. “Gait capacity affects cortical activation patterns related to speed control in the elderly” In Experimental brain research 193.3 Springer, 2009, pp. 445–454