Deep Intrinsic Decomposition with Adversarial Learning for Hyperspectral Image Classification

Abstract

Convolutional neural networks (CNNs) have demonstrated a powerful ability to extract discriminative features for hyperspectral image classification. However, general deep learning methods for CNNs ignore the influence of complex environmental factors, which enlarge the intra-class variance and decrease the inter-class variance. This makes it more difficult to extract discriminative features. To overcome this problem, this work develops a novel deep intrinsic decomposition with adversarial learning, namely AdverDecom, for hyperspectral image classification to mitigate the negative impact of environmental factors on classification performance. First, we develop a generative network for hyperspectral images (HyperNet) to extract the environmental-related feature and the category-related feature from the image. Then, a discriminative network is constructed to distinguish different environmental categories. Finally, an environmental and category joint learning loss is developed for adversarial learning to make the deep model learn discriminative features. Experiments are conducted on three commonly used real-world datasets, and the comparison results show the superiority of the proposed method. The implementation of the proposed method and the compared methods is available at https://github.com/shendu-sw/Adversarial_Learning_Intrinsic_Decomposition for the sake of reproducibility.

Index Terms:

Adversarial Learning, Deep Intrinsic Decomposition, Environmental-related Feature, Category-related Feature, Hyperspectral Image Classification.

I Introduction

Hyperspectral images, which contain a multitude of spectral bands covering the visible and non-visible parts of the electromagnetic spectrum [1], can provide an extensive and detailed view of the Earth’s surface and play a crucial role in various fields, including agriculture, geology, ecology, and disaster management [2]. The plentiful spectral and spatial information of hyperspectral data allows for precise discrimination and characterization of materials, terrain, and environmental features, facilitating applications such as land cover mapping [3], mineral identification [4], vegetation health assessment [5], and pollution monitoring [6]. However, different objects often exhibit great spectral similarity, which makes them difficult to discriminate. Another challenge arises from the complexity of handling a vast amount of spectral information across numerous narrow bands. The high dimensionality of the data poses difficulties for effective feature selection and model training and raises computational demands. Additionally, atmospheric effects, mixed pixels, and the need for extensive, accurately labeled training data make hyperspectral classification a formidable task. Therefore, there is a strong demand for effective methods to extract discriminative features from hyperspectral images. Two considerations are fundamental to meeting this demand:

1. A robust and effective feature extraction backbone network is required to understand and represent the complex spectral-spatial correlation of hyperspectral images. Generally, a well-designed network has great potential to capture relevant patterns and characteristics from the training samples.

2. A proper learning strategy is imperative to truly harness the discriminative information of the hyperspectral image. In particular, by considering the unique characteristics of the hyperspectral image, the model’s representational ability can be enhanced to extract valuable latent information from the complex data.

Following these two fundamental considerations, there have been increasing efforts to explore impressive methods for hyperspectral image classification.

Faced with the first problem, conventional methods design hand-crafted spectral features to represent the hyperspectral image. These well-established techniques usually include spectral feature extraction (e.g., principal component analysis (PCA) [7] and linear discriminant analysis (LDA) [8]), statistical classifiers [9], and dimensionality reduction (e.g., non-negative matrix factorization (NMF) [10] and t-distributed stochastic neighbor embedding (t-SNE) [11]), which cannot adapt to the complex latent correlation within the image. To pursue more representative methods, much effort has been devoted to machine learning algorithms, including support vector machines (SVM) [12], decision trees [13], k-Nearest Neighbors (k-NN) [14], and random forests [15], to optimize feature extraction and classification processes. These methods are generally “shallow” methods with only one or two layers, which limits their ability to capture the intricate patterns and spectral information embedded in hyperspectral data.

Recently, deep learning with multiple hidden layers has gained prominence in hyperspectral image classification [16]. Deep models can automatically extract hierarchical features from the data and capture complex relationships, which can further enhance classification accuracy. Generally, based on different architectural paradigms, these deep learning methods can be broadly divided into several classes, such as recurrent neural networks (RNNs), graph convolutional networks (GCNs), CNNs, Transformers, and others. RNNs are good at capturing temporal and spectral dependencies within the hyperspectral data. As a representative, Hang et al. designed a cascaded RNN for hyperspectral image classification, taking advantage of the ability of RNNs to model sequentiality in order to represent the relations of neighboring spectral bands effectively [17]. GCNs can effectively capture and propagate information across the spectral graph [18], allowing for the modeling of complex relationships and contextual dependencies within hyperspectral data. MiniGCN [19], which provides a feasible solution for addressing the issue of large graphs in GCNs, is a representative of this class of methods. Transformers excel at capturing long-range dependencies in the data, which is especially useful when hyperspectral information is distributed across a wide spectral range. ViT [20], Transformer in Transformer (TNT) [21], and SpectralFormer [22] are typical Transformers that can be applied to hyperspectral image classification. CNNs, as the most widely used deep architectures for hyperspectral image classification, can capture local spatial relationships while efficiently processing the spectral information. Representative CNNs, such as Noise CNN [23], HybridSN [24], PResNet [25], and 3-D CNN [26], can make full use of both the spatial and spectral information, and present comparable or even better performance than other paradigms.

While these architectures exhibit promising potential for hyperspectral images, they tend to overlook the intrinsic properties of hyperspectral images, thereby limiting their classification performance. Through analysis of the intrinsic structure of hyperspectral images, this work proposes a deep intrinsic decomposition framework for hyperspectral image classification. The framework contains a generative network (HyperNet) and a discriminative network to extract the environmental-related features and category-related features, which can mitigate the influence of environmental factors and better discriminate different objects.

In order to deal with the second one, this work develops a novel adversarial learning method for deep intrinsic decomposition that utilizes the intrinsic physical property. Prior works mainly focus on designing specific training losses to learn a better model. The common training loss quantifies the disparity between the predicted outputs of the model and the actual ground truth labels during the training process, such as the generally used softmax loss [27]. Some other works also construct training loss functions for hyperspectral remote sensing images by incorporating inter-sample relationships [28, 29]. This approach harnesses the spectral similarities and differences between samples in the dataset to improve the performance of deep models. Furthermore, a more advanced avenue of research explores the incorporation of the physical properties inherent to categories within hyperspectral data for the construction of training loss functions, such as the Statistical loss [30] and the DMEM loss [31]. By considering the unique spectral characteristics and physical attributes of materials or objects of the same category, it becomes possible to design loss functions that promote a deeper understanding and better exploitation of these intrinsic properties. While these training loss functions for developing hyperspectral remote sensing image classification models have become capable of harnessing intra-class structural information, they still disregard the influence of environmental factors on hyperspectral imaging.

When dealing with hyperspectral images, it is essential to acknowledge the significant impact that environmental factors have on classification performance. The intricate interplay of these factors can introduce variations in spectral signatures, potentially leading to misclassification or reduced accuracy in the analysis of hyperspectral data. Researchers try to isolate the unique spectral characteristics or intrinsic properties of the materials or objects within the image through hyperspectral intrinsic decomposition [32]. However, prior works on hyperspectral intrinsic decomposition predominantly relied on general spectral analysis techniques [33, 34, 35]. Their classification performance is limited by the models’ restricted ability to express complex spectral information effectively.

Motivated by [36], this work endeavors to implement deep intrinsic decomposition by leveraging a dedicated adversarial learning method. The intention is to harness the power of deep neural networks to capture the intricate interplay between spectral and spatial information in hyperspectral data. By incorporating adversarial learning, which involves the training of a generator and discriminator network, the model can learn to disentangle intrinsic components more effectively.

Considering the merits of both hyperspectral deep intrinsic decomposition and adversarial learning, this work develops a new deep intrinsic decomposition with adversarial learning for hyperspectral image classification. First, we design an adversarial network which contains the HyperNet and a discriminative network to extract the environmental-related feature and the category-related feature. Then, an environmental and category joint learning loss is developed for adversarial learning of the model. Finally, we implement deep intrinsic decomposition through this specific adversarial learning framework. In summary, this paper makes the following contributions.

- We revisit the intrinsic property of hyperspectral images and propose a new adversarial network comprising a HyperNet and a discriminative network that jointly extract environmental-related and category-related features from hyperspectral data. This innovation enables a more comprehensive understanding of complex scenes.

- We develop a new adversarial learning method based on the environmental and category joint learning loss to make the model learn discriminative environmental-related features and category-related features. This loss function encourages the effective disentanglement of intrinsic components, thereby improving the model’s performance in hyperspectral decomposition tasks.

- We qualitatively and quantitatively evaluate the classification performance of the proposed AdverDecom on three representative hyperspectral image datasets, i.e., Pavia University data, Indian Pines data, and Houston2013 data. Comparisons with other state-of-the-art methods show that the proposed method achieves significantly better performance (an increase of at least 3% in OA).

The remainder of this paper is organized as follows. Section II details the proposed AdverDecom, including hyperspectral intrinsic decomposition, adversarial network and adversarial learning for deep intrinsic decomposition, and implementation details, for hyperspectral image classification. Extensive experiments are conducted over three real-world datasets for quantitative and qualitative evaluation of the proposed method in Section III. Section IV concludes the work with a brief outlook on future directions.

II Proposed Method

Given a specific hyperspectral image, the goal of the classification task is to assign a unique land-cover label to each pixel of the image. Denote $X = \{x_1, x_2, \ldots, x_N\}$ as the set of training samples of a given hyperspectral image, where $N$ is the number of training samples, and $y_i$ is the corresponding label of $x_i$. $y_i \in \{1, 2, \ldots, K\}$, where $K$ represents the class number of the image.

II-A Hyperspectral Intrinsic Decomposition

The intrinsic information coupling model is designed to model the mutual coupling process of intensity and color of light during the imaging process. This model aims to elucidate the intricate interplay between light intensity and color, providing valuable insights into the underlying dynamics of image formation.

As for a natural red-green-blue (RGB) image, the intrinsic image decomposition can be described as [37, 38]

$$I = R \circ S \tag{1}$$

where $I$ denotes the original image, and $R$ and $S$ represent the reflectance component and the shading component, respectively. $\circ$ stands for the elementwise multiplication operator.

In contrast to RGB images, hyperspectral images are typically acquired using passive imaging sensors that primarily capture energy reflected from solar radiation. Due to variations in sensitivity to scene radiance changes across different spectral bands, the pixel values in different bands undergo non-proportional changes with scene radiance variations. Therefore, the shading component of hyperspectral images affects each wavelength differently. Considering the varying effects, the hyperspectral intrinsic decomposition model can be formulated as [39]

$$I(\lambda) = R(\lambda) \circ S(\lambda) \tag{2}$$

where $\lambda$ denotes the wavelength, and $R(\lambda)$ and $S(\lambda)$ represent the reflectance component and the shading component, respectively. $R(\lambda)$ determines the spectral reflectance signature, which is the unique spectral response of each pixel in the image. $S(\lambda)$ describes the influence of environmental factors on the hyperspectral image. Based on this property, we define $R(\lambda)$ and $S(\lambda)$ as the category-related feature and the environmental-related feature, respectively.
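To make the band-wise coupling in Eq. 2 concrete, the following minimal sketch shows how two pixels with the same reflectance signature produce different observed spectra under different shading, and how the multiplicative model becomes additive in the log domain. All values and names (`reflectance`, `shading_sun`, `shading_shadow`, `observe`) are hypothetical and chosen for illustration; they are not taken from the paper or its code.

```python
import math

# Hypothetical 4-band reflectance signature shared by two pixels of one class.
reflectance = [0.20, 0.35, 0.50, 0.40]

# Hypothetical band-dependent shading for two illumination conditions.
shading_sun = [1.00, 0.95, 0.90, 0.85]
shading_shadow = [0.40, 0.45, 0.55, 0.60]


def observe(r, s):
    """Elementwise product of reflectance and shading, one value per band."""
    return [ri * si for ri, si in zip(r, s)]


pixel_sun = observe(reflectance, shading_sun)
pixel_shadow = observe(reflectance, shading_shadow)

# Same material, different observed spectra: intra-class variance grows.
spectral_gap = max(abs(a - b) for a, b in zip(pixel_sun, pixel_shadow))

# In the log domain the model is additive: log I = log R + log S.
log_residual = max(
    abs(math.log(i) - (math.log(r) + math.log(s)))
    for i, r, s in zip(pixel_sun, reflectance, shading_sun)
)

# Dividing out the (here known) shading recovers the shared reflectance.
recovered = [i / s for i, s in zip(pixel_shadow, shading_shadow)]
```

In practice the shading is unknown, which is why the decomposition has to be learned rather than computed by a simple division as in this toy case.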

For the task at hand, the objective of hyperspectral intrinsic image decomposition is to decrease the influence of complex environmental factors and to extract and represent the intrinsic spectral and spatial information of hyperspectral images accurately, so as to improve the performance of hyperspectral image classification. In the following, we introduce the proposed deep intrinsic decomposition method based on this assumed model.

II-B Adversarial Network for Deep Intrinsic Decomposition

As shown in Fig. 1, this work constructs a novel adversarial network to realize the deep intrinsic decomposition for hyperspectral image. The adversarial network consists of the HyperNet and the discriminative network.

II-B1 HyperNet

The aim of HyperNet is to decompose the learned feature into the environmental-related and category-related parts. Under the assumption in subsection II-A, the original image can be divided into the category-related feature and the environmental-related feature.

Consider a sample $x$ in the image. Define $f_c(\cdot)$ as the function to extract the category-related feature and $f_e(\cdot)$ as the function to extract the environmental-related feature. Then, based on Eq. 2, the problem can be formulated as

$$f(x) = f_c(x) \circ f_e(x) \tag{3}$$

where $f(x)$ denotes the overall feature learned from $x$. $f_c(\cdot)$ and $f_e(\cdot)$ are fundamentally about learning mapping relationships and extracting features from the image. Deep neural networks are widely recognized for their exceptional nonlinear fitting capabilities, making them a prime choice for implementing the functions $f_c(\cdot)$ and $f_e(\cdot)$ in this study. Deep learning enables the parallel processing of different spectral bands in hyperspectral remote sensing imagery, allowing deep models to model all bands simultaneously. Moreover, leveraging deep neural networks allows us to harness the complex, hierarchical representations within hyperspectral data, enabling us to capture intricate patterns and relationships.

Fig. 1 introduces the framework of the developed HyperNet. Two sub-networks are used for the learning of the category-related and environmental-related feature extractors, respectively. The first halves of the two sub-networks consist of a common CNN backbone network model with shared parameters, allowing them to collectively extract and learn essential hierarchical features from the image. This shared architecture ensures that both networks benefit from a shared understanding of low-level spectral-spatial features present in the data. In the latter halves of these networks, distinct MLP models are employed, which specialize in different objectives. This design enables the networks to leverage the same foundational feature representations while tailoring their respective output layers to extract the environmental-related and the category-related features.
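The shared-backbone design can be written compactly. In the sketch below, $f_c$ and $f_e$ denote the category-related and environmental-related feature extractors, while $b(\cdot)$ (the shared CNN backbone) and $h_c(\cdot)$, $h_e(\cdot)$ (the two MLP heads) are symbols introduced here purely for illustration and are not the paper's notation:

$$
f_c(x) = h_c\big(b(x)\big), \qquad f_e(x) = h_e\big(b(x)\big).
$$

Because both heads read the same $b(x)$, gradients from either head update the shared backbone parameters, while $h_c$ and $h_e$ remain free to specialize.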

II-B2 Discriminative Network

The discriminative network takes the environmental-related features as input and learns to discriminate the environmental pseudo class among the predefined environmental categories. This work uses a specific multi-layer perceptron (MLP) as the discriminative network. Denote $D(\cdot)$ as the mapping function of the discriminative network. Then, the extracted features can be formulated as $D(f(x))$ with $D: \mathbb{R}^{d} \rightarrow \mathbb{R}^{d'}$, where $f(\cdot)$ is the representation function, $d$ represents the dimension of the environmental-related and category-related features from the image, and $d'$ stands for the dimension of the features extracted by the discriminative network.

II-C Adversarial Learning for Deep Intrinsic Decomposition

In this subsection, we present the methodology and details of learning the representation function of the image. In particular, we first introduce the goal of deep intrinsic decomposition, then motivate the proposed AdverDecom by general adversarial learning, and finally discuss key algorithmic details.

II-C1 Deep Intrinsic Decomposition Goal

Given a sample $x$ from the hyperspectral image, the goal of deep intrinsic decomposition is to learn a representation $f(x)$ such that, for any environmental factors, there exists a latent mapping function $g$ that makes $f(x)$ sufficiently distinctive to distinguish different land-cover classes. Formally, an optimal representation, $f^{*}$, solves the following optimization problem:

$$f^{*} = \arg\min_{f}\,\min_{g}\ \sum_{i=1}^{N} L\big(g(f(x_i)),\,y_i\big) \tag{4}$$

where $f: \mathbb{R}^{s \times s \times c} \rightarrow \mathbb{R}^{d}$ is the representation function, $g: \mathbb{R}^{d} \rightarrow \mathbb{R}^{K}$ is the mapping from the features to classification probabilities over the $K$ land-cover classes, and $L$ denotes a classification loss function evaluated on the $N$ training pairs $(x_i, y_i)$. $s$ represents the spatial size used for better classification performance and $c$ stands for the number of channels of the hyperspectral image. As shown in the previous subsection, we use specific deep neural networks (DNNs) to represent $f$ and $g$, respectively.

II-C2 Adversarial Learning

One challenge of the optimization in Eq. 4 is the intra-class spectral variability caused by environmental factors. These fluctuations can lead to great differences in the spectral signatures of objects or materials belonging to the same category, which increases the difficulty of learning $f$ and $g$. Owing to its strong representational ability, the DNN may memorize the distributions of samples from a specific class under different environmental factors. The optimization in Eq. 4 may therefore lead to over-fitting and may not properly find an environmentally invariant representation.

To address the aforementioned issue, this work employs an adversarial learning framework to separately acquire environmental-related and category-related features. First, based on the training samples, we construct environmental pseudo classes in an unsupervised manner. Then, based on the environmental pseudo classes, we construct the adversarial optimization problem.

Construction of Environmental Pseudo Classes. Given the training samples of the hyperspectral image, we group them into a number of environmental pseudo classes in an unsupervised manner.

In hyperspectral imaging, distinct objects or materials can exhibit similar spectral signatures in the hyperspectral data. This phenomenon generally arises due to the effects of environmental factors. Therefore, directly conducting clustering analysis on hyperspectral pixels can yield valuable insights into the influence of environmental variables on classification. This approach involves grouping pixels with similar spectral properties, potentially revealing patterns of spectral variability driven by environmental factors.

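Denote $M$ as the number of predefined environmental pseudo classes. Denote $\{c_1, c_2, \ldots, c_M\}$ as the centers of different environmental factors. Iteratively, we calculate the centers of the groups, minimizing the error as follows:

$$\min_{c_1, \ldots, c_M}\ \sum_{i=1}^{N} \sum_{k=1}^{M} \mathbb{1}\Big(k = \arg\min_{j} \|x_i - c_j\|_2^2\Big)\, \|x_i - c_k\|_2^2 \tag{5}$$

where $\mathbb{1}(\cdot)$ denotes the indicator function, with $\mathbb{1}(\cdot) = 1$ if the condition is true and $0$ otherwise.

Given a training sample $x_i$ in the hyperspectral image, denote $e_i$ as the corresponding environmental pseudo class of $x_i$; then the environmental pseudo class can be obtained by calculating

$$e_i = \arg\min_{k \in \{1, \ldots, M\}} \|x_i - c_k\|_2^2 \tag{6}$$

For convenience, in the following we use $(x_i, e_i)$ to represent the sample $x_i$ with environment index $e_i$.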
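The grouping step described above can be sketched with a plain Lloyd-style k-means. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation: the function names (`squared_distance`, `assign`, `build_pseudo_classes`), the random initialization from the samples, the fixed iteration count, and the toy spectra are all hypothetical.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two spectra."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def assign(samples, centers):
    """Nearest-center assignment: one pseudo-class index per sample."""
    return [
        min(range(len(centers)), key=lambda k: squared_distance(x, centers[k]))
        for x in samples
    ]


def build_pseudo_classes(samples, num_classes, num_iters=20, seed=0):
    """Lloyd-style k-means: alternate assignment and center updates."""
    rng = random.Random(seed)
    centers = [list(c) for c in rng.sample(samples, num_classes)]
    for _ in range(num_iters):
        labels = assign(samples, centers)
        for k in range(num_classes):
            members = [x for x, e in zip(samples, labels) if e == k]
            if members:  # keep the old center if a group becomes empty
                centers[k] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign(samples, centers)


# Toy spectra: two brightness regimes standing in for two environmental conditions.
bright = [[0.80, 0.90, 0.85], [0.82, 0.88, 0.86], [0.79, 0.91, 0.84]]
dark = [[0.20, 0.25, 0.22], [0.21, 0.24, 0.23], [0.19, 0.26, 0.21]]
centers, pseudo_labels = build_pseudo_classes(bright + dark, num_classes=2)
```

On this toy input the three bright spectra end up in one pseudo class and the three dark spectra in the other, regardless of their land-cover labels, which is the behavior the pseudo classes are meant to capture.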

Adversarial Optimization. To solve the intra-class spectral variability problem, we propose the following adversarial optimization framework:

$$\max_{D}\,\min_{f,\,g}\ \sum_{i=1}^{N} \Big[ L_c\big(g(f(x_i)),\,y_i\big) - \alpha\, L_e\big(D(f(x_i)),\,e_i\big) \Big] \tag{7}$$

where $D$ represents the DNN serving as the discriminator in subsection II-B2 to predict the environment index $e_i$ out of the $M$ environmental pseudo classes. $L_c$ and $L_e$ represent the classification loss functions (e.g., the cross entropy loss) for the land-cover classes and the environmental pseudo classes, respectively, and $\alpha$ is a hyperparameter to control the degree of regularization. Intuitively, $f$, $g$, and $D$ play a zero-sum max-min game: the goal of $D$ is to predict the environmental index directly from $f(x_i)$ (achieved by the outer $\max$); the goal of $f$ is to approximate the label while making the job of $D$ harder (achieved by the inner $\min$). In other words, $D$ is a learned regularizer to remove the environmental information contained in $f(x_i)$.

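In our experiments, the output of $g$ is a $K$-dimensional vector of class probabilities for the $K$ land-cover classes, and we use the cross entropy loss for $L_c$, which is given as

$$L_c\big(g(f(x_i)),\,y_i\big) = -\sum_{k=1}^{K} \mathbb{1}(y_i = k)\, \log\big(\mathbf{u}_k^{\top}\, g(f(x_i))\big) \tag{8}$$

where $\mathbb{1}(y_i = k) = 1$ if $y_i = k$ and $0$ otherwise, and $\mathbf{u}_k$ stands for the $k$-th standard basis vector. Similarly, $D(f(x_i))$ represents an $M$-dimensional vector of class probabilities for the $M$ environmental pseudo classes, and we also use the cross entropy loss for $L_e$, which is given as

$$L_e\big(D(f(x_i)),\,e_i\big) = -\sum_{j=1}^{M} \mathbb{1}(e_i = j)\, \log\big(\mathbf{v}_j^{\top}\, D(f(x_i))\big) \tag{9}$$

where $\mathbb{1}(e_i = j) = 1$ if $e_i = j$ and $0$ otherwise, and $\mathbf{v}_j$ stands for the $j$-th standard basis vector.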
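To make the roles in the zero-sum game concrete, the sketch below evaluates an objective of the form "category cross entropy minus a weighted environmental cross entropy" on toy probabilities. The sign convention, the `weight` parameter (standing in for the regularization hyperparameter), the function names, and all numbers are assumptions made for illustration, not values or code from the paper.

```python
import math


def cross_entropy(probabilities, target_index):
    """Cross entropy of one sample: -log of the probability of the true class."""
    return -math.log(probabilities[target_index])


def joint_objective(class_probs, labels, env_probs, env_labels, weight):
    """Sum over samples of (category loss) - weight * (environmental loss)."""
    total = 0.0
    for p_cls, y, p_env, e in zip(class_probs, labels, env_probs, env_labels):
        total += cross_entropy(p_cls, y) - weight * cross_entropy(p_env, e)
    return total


# Hypothetical softmax outputs: 2 samples, 3 land-cover classes, 2 pseudo classes.
class_probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
labels = [0, 1]
env_labels = [0, 1]

confident_discriminator = [[0.9, 0.1], [0.1, 0.9]]
confused_discriminator = [[0.5, 0.5], [0.5, 0.5]]

value_confident = joint_objective(
    class_probs, labels, confident_discriminator, env_labels, weight=0.5
)
value_confused = joint_objective(
    class_probs, labels, confused_discriminator, env_labels, weight=0.5
)
```

With the classifier outputs held fixed, the objective is larger when the discriminator is confident and smaller when it is confused. Under this sign convention the discriminator therefore benefits from maximizing the objective, while the feature extractor benefits from minimizing it.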

II-D Implementation of Deep Intrinsic Decomposition with Adversarial Learning

Finally, we solve the optimization problem in Eq. 7 by the proposed AdverDecom (described in Algorithm 1 and Fig. 1). As shown in the algorithm, AdverDecom contains three steps: (1) construct the environmental pseudo classes of different samples (Line 4); (2) update the category-related representation based on the training batch (Line 5); (3) update the discriminator on the training batch (Line 6). By iterating steps (2) and (3), we obtain the final environment-invariant features.
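Steps (2) and (3) are commonly realized as alternating gradient updates. The following is a sketch under that assumption and is not a transcription of Algorithm 1: $\theta_{f,g}$ and $\theta_{D}$ (the parameters of the representation and classifier, and of the discriminator), the learning rate $\eta$, the weight $\alpha$ (the regularization hyperparameter), and the batch $B$ are notation introduced here for illustration, with $L_c$ and $L_e$ denoting the category and environmental cross entropy losses.

$$
\begin{aligned}
\theta_{f,g} &\leftarrow \theta_{f,g} - \eta\, \nabla_{\theta_{f,g}} \sum_{i \in B} \Big[ L_c\big(g(f(x_i)),\,y_i\big) - \alpha\, L_e\big(D(f(x_i)),\,e_i\big) \Big], \\
\theta_{D} &\leftarrow \theta_{D} - \eta\, \nabla_{\theta_{D}} \sum_{i \in B} \alpha\, L_e\big(D(f(x_i)),\,e_i\big).
\end{aligned}
$$

The first update lowers the category loss while raising the discriminator's loss; the second lets the discriminator recover by lowering its own loss, which corresponds to ascending the shared objective.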

| (10) |

| (11) |

III Experimental Results

III-A Experimental Datasets

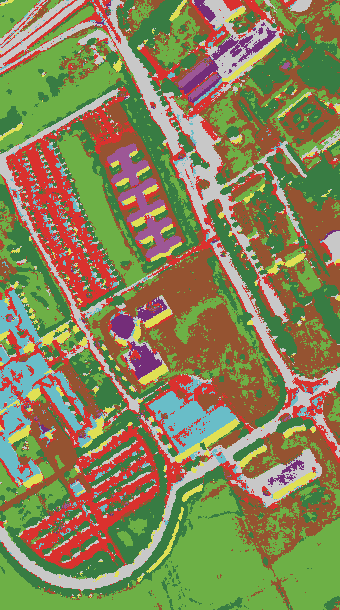

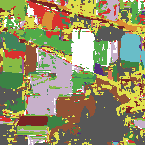

The classification performance of the proposed AdverDecomCNN is evaluated on three datasets, i.e., the Pavia University dataset [40], the Indian Pines dataset [40], and the Houston2013 dataset [41].

Pavia University (PU) data was obtained by the reflective optics system imaging spectrometer (ROSIS-3) over the city of Pavia, Italy, with a spatial resolution of 1.3 m. It consists of $610 \times 340$ pixels and each pixel possesses 115 bands with a spectral coverage ranging from 0.43 to 0.86 μm. 12 spectral bands are discarded due to water absorption and noise, and the remaining 103 channels are used. A total of 43923 labeled samples divided into nine classes have been chosen for experiments (see Table I for details). The numbers of training and testing samples per class are also listed in the table.

Indian Pines (IP) data was gathered by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test site in northwestern Indiana at a ground sampling distance (GSD) of 20 m. It consists of $145 \times 145$ pixels with spectral bands ranging from 0.4 to 2.5 μm. 24 bands covering the region of water absorption are removed and the remaining 200 spectral bands are used. 16 land cover classes with a total of 10366 labeled samples are selected for experiments. Table II shows the detailed training and testing samples in the experiments.

Houston 2013 (HS) data was collected by the National Center for Airborne Laser Mapping (NCALM) over the University of Houston campus and the neighboring urban area through the ITRES CASI 1500 sensor at a spatial resolution of 2.5 m. The cube consists of $349 \times 1905$ pixels with 144 spectral bands ranging from 380 nm to 1050 nm. 15 land cover classes with a total of 15029 labeled samples are selected for experiments. Table III presents the details of the training and testing samples of the dataset for experiments.

Table I: Number of training and testing samples per class in the Pavia University dataset.

| Class | Class Name | Color | Training | Testing |
|---|---|---|---|---|
| C1 | Asphalt | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc1.png) | 548 | 6304 |
| C2 | Meadows | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc2.png) | 540 | 18146 |
| C3 | Gravel | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc3.png) | 392 | 1815 |
| C4 | Trees | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc4.png) | 524 | 2912 |
| C5 | Metal sheet | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc5.png) | 265 | 1113 |
| C6 | Bare soil | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc6.png) | 532 | 4572 |
| C7 | Bitumen | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc7.png) | 375 | 981 |
| C8 | Brick | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc8.png) | 514 | 3364 |
| C9 | Shadow | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/pc9.png) | 231 | 795 |
| Total | | | 3921 | 40002 |

Table II: Number of training and testing samples per class in the Indian Pines dataset.

| Class | Class Name | Color | Training | Testing |
|---|---|---|---|---|
| C1 | Corn-notill | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c1.png) | 50 | 1384 |
| C2 | Corn-mintill | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c2.png) | 50 | 784 |
| C3 | Corn | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c3.png) | 50 | 184 |
| C4 | Grass-pasture | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c4.png) | 50 | 447 |
| C5 | Grass-trees | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c5.png) | 50 | 697 |
| C6 | Hay-windrowed | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c6.png) | 50 | 439 |
| C7 | Soybean-notill | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c7.png) | 50 | 918 |
| C8 | Soybean-mintill | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c8.png) | 50 | 2418 |
| C9 | Soybean-clean | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c9.png) | 50 | 564 |
| C10 | Wheat | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c10.png) | 50 | 162 |
| C11 | Woods | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c11.png) | 50 | 1244 |
| C12 | Buildings-Grass-Trees-Drives | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c12.png) | 50 | 330 |
| C13 | Stone-Steel-Towers | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c13.png) | 50 | 45 |
| C14 | Alfalfa | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c14.png) | 15 | 39 |
| C15 | Grass-pasture-mowed | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c15.png) | 15 | 11 |
| C16 | Oats | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/c16.png) | 15 | 5 |
| Total | | | 695 | 9671 |

Table III: Number of training and testing samples per class in the Houston2013 dataset.

| Class | Class Name | Color | Training | Testing |
|---|---|---|---|---|
| C1 | Grass-healthy | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc1.png) | 198 | 1053 |
| C2 | Grass-stressed | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc2.png) | 190 | 1064 |
| C3 | Grass-synthetic | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc3.png) | 192 | 505 |
| C4 | Tree | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc4.png) | 188 | 1056 |
| C5 | Soil | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc5.png) | 186 | 1056 |
| C6 | Water | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc6.png) | 182 | 143 |
| C7 | Residential | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc7.png) | 196 | 1072 |
| C8 | Commercial | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc8.png) | 191 | 1053 |
| C9 | Road | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc9.png) | 193 | 1059 |
| C10 | Highway | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc10.png) | 191 | 1036 |
| C11 | Railway | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc11.png) | 181 | 1054 |
| C12 | Parking-lot1 | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc12.png) | 192 | 1041 |
| C13 | Parking-lot2 | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc13.png) | 184 | 285 |
| C14 | Tennis-court | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc14.png) | 181 | 247 |
| C15 | Running-track | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/0c9c2d9e-00f0-4026-8645-9f19f1319890/hc15.png) | 187 | 473 |
| Total | | | 2832 | 12197 |
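The three splits differ considerably in how much labeled data is used for training. The short sketch below only re-derives the training fractions from the totals listed in the tables above; the dictionary layout and variable names are illustrative choices.

```python
# (training, testing) totals copied from the three tables above.
splits = {
    "Pavia University": (3921, 40002),
    "Indian Pines": (695, 9671),
    "Houston2013": (2832, 12197),
}

# Fraction of all labeled samples that is used for training.
train_fraction = {
    name: train / (train + test) for name, (train, test) in splits.items()
}

for name, fraction in train_fraction.items():
    print(f"{name}: {fraction:.1%} of labeled samples used for training")
```

The fractions come out at roughly 9%, 7%, and 19% for Pavia University, Indian Pines, and Houston2013, respectively, so the Indian Pines experiments operate in the most label-scarce regime of the three.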

III-B Experimental Setups

All the experiments in this paper are implemented with PyTorch 1.9.1 and CUDA 11.2. The learning rate, number of training epochs, and training batch size are set to 0.01, 500, and 64, respectively. The dimension of extracted features is set to 128. The structure of the discriminative network in the experiments is set as 128-64-64-$M$, where $M$ denotes the number of pseudo classes. Unless otherwise specified, a fixed-size spatial neighborhood around each pixel is used to incorporate the spatial information. We adopt stochastic gradient descent (SGD) as the optimizer of the deep model. The code will be made publicly available for easy replication at https://github.com/shendu-sw/Adversarial_Learning_Intrinsic_Decomposition.

III-B1 Evaluation Metrics

We use the overall accuracy (OA), average accuracy (AA), and Kappa coefficient ($\kappa$) as measurements to evaluate performance. Furthermore, the classification accuracy per class is used to provide a thorough comparison. In addition, classification maps are visualized for a qualitative comparison.
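For reference, the three summary metrics can be computed from a confusion matrix as in the following minimal pure-Python sketch. The function names and the toy labels are illustrative assumptions; the definitions used are the standard ones (OA as the fraction of correct predictions, AA as the mean per-class recall, and Cohen's kappa as agreement corrected for chance).

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the true class, columns the predicted class."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def evaluate(y_true, y_pred, num_classes):
    """Return (OA, AA, kappa) for integer labels in [0, num_classes)."""
    cm = confusion_matrix(y_true, y_pred, num_classes)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(num_classes))
    oa = correct / total
    # AA: mean of per-class recalls, skipping classes absent from y_true.
    recalls = [cm[k][k] / sum(cm[k]) for k in range(num_classes) if sum(cm[k]) > 0]
    aa = sum(recalls) / len(recalls)
    # Kappa: observed agreement corrected for chance agreement p_e.
    p_e = sum(
        sum(cm[k]) * sum(row[k] for row in cm) for k in range(num_classes)
    ) / (total * total)
    kappa = (oa - p_e) / (1 - p_e)
    return oa, aa, kappa


# Toy example with three classes and ten test samples.
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]
oa, aa, kappa = evaluate(y_true, y_pred, num_classes=3)
```

AA weights every class equally, so it is more sensitive than OA to errors on small classes such as Oats or Grass-pasture-mowed in the Indian Pines data.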

III-B2 Baseline Methods

Several representative baselines and backbone networks are selected for comparison. They cover state-of-the-art CNNs (e.g., 3-D CNN [26], PResNet [25], HybridSN [24]), RNNs (e.g., RNN [17]), GCNs (e.g., miniGCN [19]), and Transformers (e.g., ViT [20], SpectralFormer [22], SSFTTNet [42]) for hyperspectral image classification.

- Support vector machine (SVM) is implemented with the sklearn package using a radial basis function (RBF) kernel. SVM is chosen as the representative of traditional, non-deep-learning methods.

- The 3-D CNN [26] consists of four consecutive convolutional blocks, each followed by a ReLU activation function. A softmax layer with a cross-entropy loss is added on top of the 3-D CNN to classify the samples.

- PResNet [25] is composed of several blocks of stacked convolutional layers with a bottleneck architecture (pyramidal bottleneck residual units), in which the output layer is larger than the input layer.

- HybridSN was developed in [24]. In our implementation, the architecture comprises three three-dimensional convolution layers, one two-dimensional convolution layer, and two fully connected layers. Each convolutional layer is followed by batch normalization and a ReLU layer.

- RNN [17] consists of two recurrent layers with gated recurrent units (GRUs), where each layer has 128 neural units.

- The miniGCN follows the implementation in [19], which successively contains a BN layer, a graph convolutional layer with 128 neuron units, and a ReLU layer.

- Like ViT [20], SpectralFormer [22] consists of five encoder blocks. Each encoder block consists of a four-head self-attention (SA) layer, an MLP with 8 hidden dimensions, and a GELU nonlinear activation layer. In addition, SpectralFormer contains the group-wise spectral embedding (GSE) and cross-layer adaptive fusion (CAF) modules.

- SSFTTNet [42] adopts the architecture of the released code at https://github.com/zgr6010/HSI_SSFTT.

III-C Evaluation of the Computational Performance

We first test the computational performance of the proposed method against other methods. In this set of experiments, HybridSN is chosen as the backbone CNN to extract features. To demonstrate the general usability of the proposed method, a common machine with an Intel(R) Xeon(R) Gold 6226R CPU, 128 GB RAM, and a Quadro RTX 6000 24 GB GPU is used for the evaluation. The training and testing costs of 3-D CNN, PResNet, HybridSN, and SpectralFormer are selected for comparison.

Table IV shows the computational performance over the three datasets. Training the proposed AdverDecom took about 618.9 s, 141.5 s, and 382.6 s on the Pavia University, Indian Pines, and Houston2013 data, respectively. Its training cost is comparable to that of 3-D CNN and HybridSN and lower than that of PResNet and SpectralFormer. Testing with the proposed AdverDecom took about 1.87 s, 0.58 s, and 0.61 s, respectively, which satisfies the computational efficiency requirements of most applications.

Table IV: Training and testing time (s) of different methods over the three datasets.

| Methods | Metrics | Pavia University | Indian Pines | Houston2013 |
|---|---|---|---|---|
| 3-D CNN | Training(s) | 471.24 | 112.7 | 308.9 |
| | Testing(s) | 1.225 | 0.45 | 0.425 |
| PResNet | Training(s) | 1752.4 | 364.0 | 961.24 |
| | Testing(s) | 7.1 | 2.1 | 2.2 |
| HybridSN | Training(s) | 536.2 | 123.9 | 320.5 |
| | Testing(s) | 1.7 | 0.56 | 0.55 |
| SpectralFormer | Training(s) | 1061.2 | 232.9 | 621.3 |
| | Testing(s) | 2.52 | 0.92 | 0.77 |
| AdverDecom | Training(s) | 618.9 | 141.5 | 382.6 |
| | Testing(s) | 1.87 | 0.58 | 0.61 |
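Wall-clock costs such as those in Table IV can be collected with a small timing helper. This is a minimal sketch: `timed` and `placeholder_workload` are hypothetical names, and the paper does not state how its timings were actually measured.

```python
import time


def timed(fn, *args, **kwargs):
    """Run fn(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


def placeholder_workload(n):
    # Stand-in for a training or inference loop; replace with the real routine.
    return sum(i * i for i in range(n))


result, seconds = timed(placeholder_workload, 100_000)
print(f"workload finished in {seconds:.4f}s")
```

When timing GPU code in PyTorch, calling `torch.cuda.synchronize()` before reading the clock avoids under-counting kernels that are still running asynchronously.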

III-D Evaluation of the Models Trained with Different Backbone CNNs

The backbone CNN influences the quality of the extracted environmental-related and category-related features, and thus has a significant effect on the classification performance on hyperspectral images. In this set of experiments, we test the performance of the proposed method with different backbone CNNs, namely 3-D CNN, PResNet, and HybridSN. The structures of these backbone CNNs follow the setups in subsection III-B.

Tables V, VI, and VII show the comparison results of the proposed method and the vanilla CNNs over the three datasets. Two observations can be made from these tables.

First, the performance based on PResNet and HybridSN is better than that based on 3-D CNN. For Pavia University data, the proposed method obtains 93.94% and 94.13% OA with PResNet and HybridSN as backbones, which is better than that with 3-D CNN (88.64%). For Indian Pines data, the proposed method obtains 88.03%, 88.50%, and 91.07% OA with 3-D CNN, PResNet, and HybridSN as backbones, respectively. For Houston2013 data, the proposed method obtains 86.30%, 88.60%, and 90.03% OA with 3-D CNN, PResNet, and HybridSN as backbones, respectively. Second, the proposed deep intrinsic decomposition with adversarial learning remarkably improves the performance of the vanilla CNN. For Pavia University data, the proposed method improves the OA by 1.12, 3.83, and 3.86 percentage points with 3-D CNN, PResNet, and HybridSN as the backbone model, respectively. For Indian Pines data, the improvements are 10.81, 5.53, and 12.35 percentage points with the three backbones. For Houston2013 data, the proposed method improves the OA by 1.59, 3.01, and 3.14 percentage points, respectively.

Table V: Classification performance of the vanilla and proposed methods with different backbone CNNs on Pavia University data.

| Method | Metrics | 3-D CNN | PResNet | HybridSN |
|---|---|---|---|---|
| Vanilla | OA(%) | 87.52 | 90.11 | 90.27 |
| | AA(%) | 89.01 | 89.43 | 91.79 |
| | $\kappa$(%) | 83.37 | 86.68 | 87.03 |
| Proposed | OA(%) | 88.64 | 93.94 | 94.13 |
| | AA(%) | 82.34 | 93.19 | 93.63 |
| | $\kappa$(%) | 84.51 | 91.82 | 92.11 |

Table VI: Classification performance of the vanilla and proposed methods with different backbone CNNs on Indian Pines data.

| Method | Metrics | 3-D CNN | PResNet | HybridSN |
|---|---|---|---|---|
| Vanilla | OA(%) | 77.22 | 82.97 | 78.72 |
| | AA(%) | 86.83 | 90.19 | 88.15 |
| | $\kappa$(%) | 74.21 | 80.65 | 75.81 |
| Proposed | OA(%) | 88.03 | 88.50 | 91.07 |
| | AA(%) | 92.09 | 92.94 | 95.45 |
| | $\kappa$(%) | 86.30 | 86.82 | 89.79 |

Table VII: Classification performance of the vanilla and proposed methods with different backbone CNNs on Houston2013 data.

| Method | Metrics | 3-D CNN | PResNet | HybridSN |
|---|---|---|---|---|
| Vanilla | OA(%) | 84.71 | 85.59 | 86.89 |
| | AA(%) | 85.53 | 87.45 | 88.92 |
| | $\kappa$(%) | 83.40 | 84.35 | 85.77 |
| Proposed | OA(%) | 86.30 | 88.60 | 90.03 |
| | AA(%) | 88.34 | 89.91 | 91.66 |
| | $\kappa$(%) | 85.12 | 87.63 | 89.18 |
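The gains quoted in this subsection are differences in OA between the proposed method and the vanilla backbone. The short check below recomputes them from the OA rows of the three tables above; the dictionary layout and the name `oa_gains` are illustrative only.

```python
# OA (%) per backbone, copied from the vanilla and proposed rows of the tables above.
BACKBONES = ("3-D CNN", "PResNet", "HybridSN")
OA = {
    "Pavia University": {"vanilla": (87.52, 90.11, 90.27), "proposed": (88.64, 93.94, 94.13)},
    "Indian Pines": {"vanilla": (77.22, 82.97, 78.72), "proposed": (88.03, 88.50, 91.07)},
    "Houston2013": {"vanilla": (84.71, 85.59, 86.89), "proposed": (86.30, 88.60, 90.03)},
}


def oa_gains(dataset):
    """Return the OA gain (percentage points) of the proposed method per backbone."""
    rows = OA[dataset]
    return {
        name: round(proposed - vanilla, 2)
        for name, vanilla, proposed in zip(BACKBONES, rows["vanilla"], rows["proposed"])
    }


for dataset in OA:
    print(dataset, oa_gains(dataset))
```

The largest gains appear on Indian Pines, which is also the dataset with the fewest training samples.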

III-E Evaluation of the Models Trained with Different Number of Pseudo Classes

The construction of pseudo classes is an important factor in the learning of the discriminative network and can therefore also influence the classification performance. The number of pseudo classes defines the number of environmental-factor categories. When it is set to 1, all the samples are assumed to share the same environmental factor. In the experiments, the number of pseudo classes is chosen from {1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 20, 30}. Table VIII presents the results of the proposed method with different numbers of pseudo classes over the three datasets.

From the table, we can conclude that a proper number of pseudo classes guarantees good performance of the proposed method. For Pavia University data, the performance is best when the number is set to 5. For Indian Pines data, the best OA of 91.07% is achieved when it is set to 2. For Houston2013 data, the performance is best (90.03%) when it is set to 4. Even though the proposed method performs differently with different numbers of pseudo classes, there is a wide range to select from, since several settings perform similarly. That is, within a certain range, the classification performance is not sensitive to the number of pseudo classes. For example, for Pavia University data, the proposed method achieves similar performance when the number is set to 3 (93.58%), 4 (93.91%), 5 (94.13%), 6 (93.37%), or 7 (93.00%). If there is a specific requirement for high accuracy, cross-validation can be used to select a proper number.

Table VIII: Classification performance of the proposed method with different numbers of pseudo classes (PU: Pavia University, IP: Indian Pines, HS: Houston2013).

| Data | Metrics | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 20 | 30 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PU | OA(%) | 91.34 | 92.49 | 93.58 | 93.91 | 94.13 | 93.37 | 93.00 | 92.87 | 92.34 | 93.48 | 93.74 | 93.97 |
| | AA(%) | 90.95 | 91.95 | 92.05 | 93.59 | 93.63 | 93.39 | 92.77 | 92.73 | 92.64 | 93.51 | 92.50 | 93.28 |
| | $\kappa$(%) | 88.32 | 89.88 | 91.31 | 91.82 | 92.11 | 91.10 | 90.61 | 90.39 | 89.72 | 91.20 | 91.57 | 91.90 |
| IP | OA(%) | 88.34 | 91.07 | 91.01 | 90.88 | 89.65 | 90.51 | 87.67 | 88.83 | 90.07 | 90.20 | 87.17 | 84.23 |
| | AA(%) | 94.30 | 95.45 | 94.92 | 95.09 | 94.05 | 95.42 | 92.93 | 94.13 | 94.73 | 94.74 | 93.33 | 91.75 |
| | $\kappa$(%) | 86.70 | 89.79 | 89.69 | 89.57 | 88.13 | 89.17 | 85.91 | 87.25 | 88.67 | 88.78 | 85.36 | 81.98 |
| HS | OA(%) | 86.55 | 86.14 | 87.01 | 90.03 | 89.60 | 89.42 | 89.08 | 88.93 | 88.86 | 89.11 | 86.06 | 86.09 |
| | AA(%) | 88.61 | 88.47 | 89.15 | 91.66 | 91.24 | 91.05 | 90.62 | 90.72 | 90.83 | 90.26 | 87.84 | 88.27 |
| | $\kappa$(%) | 85.40 | 84.96 | 85.89 | 89.18 | 88.71 | 88.51 | 88.15 | 87.99 | 87.90 | 88.18 | 84.87 | 84.90 |
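To make the insensitivity claim concrete, the sketch below finds the best number of pseudo classes per dataset and lists every setting whose OA lies within a chosen tolerance of the best. The OA values are copied from the table above; the 1-point tolerance and the name `best_and_near_best` are assumptions made for illustration.

```python
# OA (%) from the table above, indexed by the number of pseudo classes.
NUM_PSEUDO_CLASSES = (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 20, 30)
OA_BY_DATASET = {
    "PU": (91.34, 92.49, 93.58, 93.91, 94.13, 93.37, 93.00, 92.87, 92.34, 93.48, 93.74, 93.97),
    "IP": (88.34, 91.07, 91.01, 90.88, 89.65, 90.51, 87.67, 88.83, 90.07, 90.20, 87.17, 84.23),
    "HS": (86.55, 86.14, 87.01, 90.03, 89.60, 89.42, 89.08, 88.93, 88.86, 89.11, 86.06, 86.09),
}


def best_and_near_best(dataset, tolerance=1.0):
    """Return (best setting, settings whose OA is within `tolerance` points of the best)."""
    scores = OA_BY_DATASET[dataset]
    best_oa = max(scores)
    best = NUM_PSEUDO_CLASSES[scores.index(best_oa)]
    # The small epsilon guards against floating-point round-off at the boundary.
    near = [
        k for k, oa in zip(NUM_PSEUDO_CLASSES, scores)
        if best_oa - oa <= tolerance + 1e-9
    ]
    return best, near


for name in OA_BY_DATASET:
    print(name, best_and_near_best(name))
```

A tighter tolerance shrinks the near-best set, so the threshold should reflect how much accuracy a given application can afford to lose.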

III-F Evaluation of the Models Trained with Different Tradeoff Parameters

As mentioned in Section II, the tradeoff parameter balances the adversarial error against the classification error. It can significantly affect the learning of environmental-related and category-related features, and thus influences the classification performance. Generally, a larger value leads to better performance, whereas excessively large values decrease the classification performance and can even prevent the deep model from converging. The reason is that a larger value puts a higher weight on adversarial learning, which reduces the excessive intra-class variation caused by environmental factors. As a result, the learned features discriminate different classes more easily, which increases the classification performance. An excessively large value, however, makes the model pay too much attention to the environmental-related features and ignore the category-related features, which in turn decreases the classification performance. Fig. 2 shows the tendencies of the performance with different values of the tradeoff parameter over the three datasets.

Here, we evaluate several values of the tradeoff parameter. It should be noted that when it is set to 10, the deep model cannot converge on any of the three datasets. As the figure shows, a larger value provides better performance, while excessively large values decrease the performance. Since the tradeoff parameter can significantly affect the performance of the model, a proper value is essential for the current task. Cross-validation can be used to choose a proper value for different tasks.

Besides, we can conclude from Fig. 2 that on Pavia University and Houston2013 data, the proposed method performs best when the tradeoff parameter is set to 1, while on Indian Pines data it performs best (90.90%) when the parameter is set to 0.1.
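The cross-validation suggested in this subsection could be organised as follows. This is a sketch only: `k_fold_indices`, `select_by_cross_validation`, and the `score_fn` callback (which would train the model on the training indices with the given tradeoff value and return a validation accuracy) are hypothetical names, and the candidate values in the usage line are illustrative.

```python
def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size.

    Contiguous folds assume the samples were shuffled beforehand.
    """
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def select_by_cross_validation(candidates, n_samples, k, score_fn):
    """Return (candidate, mean score) with the highest mean validation score.

    score_fn(candidate, train_idx, val_idx) must return a number (higher is better).
    """
    folds = k_fold_indices(n_samples, k)
    best_candidate, best_score = None, float("-inf")
    for candidate in candidates:
        scores = []
        for i, val_idx in enumerate(folds):
            # Training indices are all folds except the held-out one.
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            scores.append(score_fn(candidate, train_idx, val_idx))
        mean_score = sum(scores) / len(scores)
        if mean_score > best_score:
            best_candidate, best_score = candidate, mean_score
    return best_candidate, best_score


def dummy_score(candidate, train_idx, val_idx):
    # Placeholder that peaks at 1.0; a real score_fn would train and validate the model.
    return -abs(candidate - 1.0)


print(select_by_cross_validation([0.01, 0.1, 1.0, 10.0], n_samples=100, k=5, score_fn=dummy_score))
```

Because each candidate requires k full training runs, the candidate grid is usually kept coarse, and values that fail to converge can simply return a very low score.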

III-G Evaluation of the Models Trained with Different Size of Spatial Neighbors

The size of the spatial neighborhood can significantly affect the classification performance on hyperspectral images. Therefore, in this subsection, we further investigate the effect of the neighbor size on the classification performance. Five neighbor sizes are evaluated. Fig. 3 shows the tendencies of classification accuracies under the different neighbor sizes.

As shown in Fig. 3, the proposed method improves the classification accuracy under all the tested neighbor sizes. For Pavia University data, the proposed method reaches its best accuracy of 94.46%, a 2.54% improvement over the vanilla CNN. For Houston2013 data, the best performance of the proposed method is 90.68%. For Indian Pines data, the performance increases with the neighbor size and the accuracy reaches 95.36%. Generally, samples with larger neighbor sizes contain more spatial information and thus provide better classification performance, as is the case for Indian Pines data. However, larger neighbor sizes imply a more complex physical model, which increases the difficulty of model training. Therefore, for Pavia University and Houston2013 data, a smaller neighborhood can provide better classification accuracy than a larger one.
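As an illustration of how a spatial neighborhood can be attached to each pixel, the sketch below extracts a square patch centred on a pixel of a hyperspectral cube. Handling borders by clamping indices to the image edge is an assumption made here, since the paper does not specify it, and `extract_patch` is a hypothetical helper.

```python
def extract_patch(cube, row, col, size):
    """Return the size x size neighborhood of pixel (row, col).

    cube is a nested list indexed as cube[row][col] -> list of band values.
    Out-of-range coordinates are clamped to the nearest valid pixel
    (edge replication), so border pixels still yield a full patch.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so that the patch has a centre pixel")
    height, width = len(cube), len(cube[0])
    half = size // 2
    patch = []
    for dr in range(-half, half + 1):
        r = min(max(row + dr, 0), height - 1)
        patch_row = []
        for dc in range(-half, half + 1):
            c = min(max(col + dc, 0), width - 1)
            patch_row.append(cube[r][c])
        patch.append(patch_row)
    return patch


# Toy 3 x 3 image with 2 bands per pixel; band values encode the pixel position.
cube = [[[r, c] for c in range(3)] for r in range(3)]
corner = extract_patch(cube, 0, 0, 3)
print(corner[0][0], corner[2][2])
```

The number of values per sample grows quadratically with the patch size, which is one reason larger neighborhoods make training harder.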

III-H Evaluation of the Models Trained with Different Number of Samples

The prior subsections conduct the experiments with the fixed training and testing splits listed in Tables I, II, and III. This subsection further evaluates the performance of the developed method under different numbers of training samples. As shown in Tables I-III, 3921 training and 40002 testing samples for Pavia University data, 695 training and 9671 testing samples for Indian Pines data, and 2832 training and 12197 testing samples for Houston2013 data are used in the experiments. In this set of experiments, 6.25%, 12.5%, 25%, 50%, and 100% of the original training samples are selected to evaluate the performance with different numbers of training samples. That is, 245, 490, 980, 1960, and 3921 training samples are selected for Pavia University data; 43, 86, 173, 347, and 695 samples for Indian Pines data; and 177, 354, 708, 1416, and 2832 samples for Houston2013 data. Fig. 4 shows the tendencies of classification performance with different numbers of training samples over the three datasets.
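The subset sizes listed above are consistent with taking each fraction of the full training set and rounding down. That rounding rule is inferred here from the reported numbers and is not stated in the paper; the names below are illustrative.

```python
import math

# Full training-set sizes reported for the three datasets.
FULL_TRAINING_SIZES = {"Pavia University": 3921, "Indian Pines": 695, "Houston2013": 2832}
FRACTIONS = (0.0625, 0.125, 0.25, 0.5, 1.0)


def subset_sizes(n_train, fractions=FRACTIONS):
    """Number of training samples kept for each fraction, rounded down."""
    return [math.floor(n_train * f) for f in fractions]


for dataset, n_train in FULL_TRAINING_SIZES.items():
    print(dataset, subset_sizes(n_train))
```

Each step doubles the training set, so the horizontal axis of such an experiment is naturally read on a logarithmic scale.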

We can find that the proposed method remarkably improves the accuracy compared with the vanilla CNN. For Pavia University data, the accuracy increases by about 3%-4%. For Houston2013 data, the accuracy increases by about 1.5%-3%. For Indian Pines data, the accuracy increases by more than 10%. This is because the proposed method decomposes the environmental-related features and the category-related features, which improves the discrimination of the category-related features and reduces the impact of environmental factors on hyperspectral image classification. Besides, the classification performance of the learned model improves significantly as the number of training samples increases. More training samples provide additional information for the deep model to learn, allowing it to better extract discriminative features for hyperspectral image classification.

Furthermore, we show the classification maps over the different datasets in Figs. 5-7 under the setups in Tables I-III, respectively. Comparing the corresponding maps within Figs. 5, 6, and 7, we can find that the classification error is significantly decreased by the proposed AdverDecom method. This also indicates that the proposed method with deep intrinsic decomposition through adversarial learning provides more discriminative features by decomposing the environmental-related and category-related features.

III-I Comparison with State-of-the-art Methods

To further validate the effectiveness of the proposed method for hyperspectral image classification, we compare its classification results with those of the state-of-the-art methods. Tables IX, X, and XI present the comparisons over the three datasets, respectively. All the experimental results in these tables come from the same experimental setups.

From Table IX, the proposed method obtains 94.13% OA on Pavia University data, which outperforms the CNNs (e.g., 3-D CNN (87.52%), PResNet (90.11%), HybridSN (90.27%)), RNN (80.61%), miniGCN (83.23%), and Transformers (e.g., ViT (86.27%), SpectralFormer (90.04%), SSFTTNet (82.56%)). As listed in Table X, for Indian Pines data, the proposed method provides an accuracy of 91.07%, which outperforms that of the CNNs (e.g., 3-D CNN (77.22%), PResNet (82.97%), HybridSN (78.72%)), RNN (81.11%), miniGCN (74.71%), and Transformers (e.g., ViT (65.16%), SpectralFormer (83.38%), SSFTTNet (80.29%)). Furthermore, for Houston2013 data, the proposed method also provides better classification performance than the other state-of-the-art methods (see Table XI for details). These comparison results show the effectiveness of the proposed method for the current task.

Besides, from the classification maps in Figs. 5-7, we can also find that the proposed AdverDecom decreases the classification error and thus clearly improves the accuracy. In particular, the results of the proposed method contain fewer noisy points than those of the other state-of-the-art methods.

To sum up, the proposed method significantly improves the representational ability of the deep model and the classification accuracy when compared not only with handcrafted methods and CNN-based deep models, but also with other state-of-the-art deep methods.

Table IX: Classification accuracies (%) of different methods on Pavia University data. 3-D CNN, PResNet, and HybridSN are CNNs; ViT, SpectralFormer, and SSFTTNet are Transformers.

| Methods | SVM | 3-D CNN | PResNet | HybridSN | RNN | miniGCN | ViT | SpectralFormer | SSFTTNet | AdverDecom |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 77.08 | 82.95 | 80.11 | 83.49 | 85.56 | 91.55 | 82.55 | 84.72 | 75.89 | 92.43 |
| C2 | 79.22 | 88.32 | 94.81 | 91.52 | 75.00 | 84.62 | 96.57 | 95.86 | 81.46 | 95.32 |
| C3 | 77.52 | 73.55 | 85.62 | 81.76 | 71.63 | 74.27 | 56.42 | 66.72 | 75.54 | 80.22 |
| C4 | 94.61 | 93.96 | 98.49 | 98.80 | 94.16 | 71.22 | 95.98 | 96.53 | 83.72 | 98.73 |
| C5 | 98.74 | 99.55 | 99.91 | 100.0 | 91.46 | 99.55 | 93.62 | 99.19 | 100.0 | 98.83 |
| C6 | 93.68 | 79.11 | 79.48 | 85.39 | 72.66 | 67.61 | 49.43 | 73.16 | 83.44 | 89.57 |
| C7 | 85.12 | 90.21 | 79.00 | 91.85 | 92.25 | 86.75 | 79.61 | 79.71 | 99.80 | 91.64 |
| C8 | 93.82 | 98.63 | 91.77 | 94.41 | 95.54 | 86.15 | 93.79 | 97.74 | 88.61 | 99.20 |
| C9 | 92.58 | 94.84 | 95.72 | 98.87 | 93.21 | 100.0 | 91.07 | 93.33 | 95.60 | 96.73 |
| OA(%) | 83.76 | 87.52 | 90.11 | 90.27 | 80.61 | 83.23 | 86.27 | 90.04 | 82.56 | 94.13 |
| AA(%) | 88.04 | 89.01 | 89.43 | 91.79 | 85.72 | 84.64 | 82.12 | 87.44 | 87.12 | 93.63 |
| $\kappa$(%) | 78.98 | 83.37 | 86.68 | 87.03 | 74.83 | 77.44 | 81.16 | 86.51 | 77.30 | 92.11 |

Table X: Classification accuracies (%) of different methods on Indian Pines data. 3-D CNN, PResNet, and HybridSN are CNNs; ViT, SpectralFormer, and SSFTTNet are Transformers.

| Methods | SVM | 3-D CNN | PResNet | HybridSN | RNN | miniGCN | ViT | SpectralFormer | SSFTTNet | AdverDecom |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 72.11 | 67.85 | 72.76 | 66.04 | 72.76 | 70.52 | 59.68 | 78.97 | 83.37 | 86.71 |
| C2 | 71.43 | 77.04 | 87.50 | 79.46 | 84.82 | 53.19 | 37.76 | 85.08 | 71.39 | 93.88 |
| C3 | 86.96 | 93.48 | 94.02 | 94.57 | 78.26 | 91.85 | 55.43 | 85.33 | 94.57 | 97.28 |
| C4 | 95.97 | 92.84 | 94.85 | 91.28 | 88.14 | 93.74 | 65.10 | 94.18 | 93.93 | 98.43 |
| C5 | 88.67 | 83.21 | 91.97 | 90.96 | 83.50 | 95.12 | 86.51 | 84.36 | 95.38 | 98.85 |
| C6 | 95.90 | 98.63 | 97.49 | 100.0 | 91.80 | 99.09 | 97.95 | 98.63 | 98.40 | 99.77 |
| C7 | 75.60 | 74.51 | 84.20 | 79.30 | 87.58 | 63.94 | 51.85 | 63.94 | 72.66 | 90.52 |
| C8 | 59.02 | 62.66 | 73.57 | 68.07 | 74.28 | 68.40 | 62.45 | 83.95 | 71.63 | 84.45 |
| C9 | 76.77 | 69.68 | 75.18 | 70.21 | 75.35 | 73.40 | 41.13 | 73.58 | 50.18 | 88.12 |
| C10 | 99.38 | 99.38 | 100.0 | 100.0 | 99.38 | 98.77 | 96.30 | 99.38 | 98.15 | 100.0 |
| C11 | 93.33 | 93.25 | 93.41 | 76.94 | 93.89 | 88.83 | 91.00 | 97.19 | 91.94 | 94.21 |
| C12 | 73.94 | 96.06 | 80.61 | 90.30 | 63.03 | 46.06 | 52.12 | 64.55 | 86.63 | 97.58 |
| C13 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 97.78 | 95.56 | 97.78 | 95.45 | 100.0 |
| C14 | 87.18 | 89.74 | 97.44 | 92.31 | 66.67 | 46.15 | 48.72 | 76.92 | 82.05 | 97.44 |
| C15 | 100.0 | 90.91 | 100.0 | 100.0 | 100.0 | 72.73 | 81.82 | 100.0 | 100.0 | 100.0 |
| C16 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 80.00 | 100.0 | 100.0 | 100.0 | 100.0 |
| OA(%) | 76.53 | 77.22 | 82.97 | 78.72 | 81.11 | 74.71 | 65.16 | 83.38 | 80.29 | 91.07 |
| AA(%) | 86.02 | 86.83 | 90.19 | 88.15 | 84.97 | 77.47 | 70.21 | 86.49 | 86.61 | 95.45 |
| $\kappa$(%) | 73.42 | 74.21 | 80.65 | 75.81 | 78.51 | 71.21 | 60.26 | 80.93 | 77.40 | 89.79 |

Table XI: Classification accuracies (%) of different methods on Houston2013 data. 3-D CNN, PResNet, and HybridSN are CNNs; ViT, SpectralFormer, and SSFTTNet are Transformers.

| Methods | SVM | 3-D CNN | PResNet | HybridSN | RNN | miniGCN | ViT | SpectralFormer | SSFTTNet | AdverDecom |
|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 82.62 | 83.76 | 81.67 | 83.57 | 81.67 | 96.20 | 82.53 | 83.29 | 83.29 | 82.62 |
| C2 | 98.78 | 95.49 | 99.91 | 100.0 | 95.39 | 96.90 | 99.06 | 98.97 | 90.51 | 99.53 |
| C3 | 90.30 | 95.05 | 90.89 | 98.02 | 95.05 | 99.41 | 91.49 | 96.63 | 98.61 | 99.60 |
| C4 | 97.06 | 99.24 | 86.74 | 95.55 | 96.02 | 97.63 | 95.64 | 96.02 | 96.97 | 99.81 |
| C5 | 99.81 | 99.43 | 99.43 | 99.72 | 97.63 | 97.73 | 99.34 | 100.0 | 99.53 | 97.82 |
| C6 | 82.52 | 90.21 | 92.31 | 95.80 | 91.61 | 95.10 | 94.41 | 94.40 | 91.61 | 96.50 |
| C7 | 89.65 | 86.85 | 90.49 | 90.67 | 89.92 | 65.86 | 91.04 | 83.21 | 67.91 | 86.10 |
| C8 | 57.74 | 82.05 | 75.31 | 81.01 | 70.09 | 65.15 | 60.68 | 80.72 | 55.08 | 80.72 |
| C9 | 61.19 | 76.49 | 80.93 | 81.59 | 73.84 | 69.88 | 71.20 | 77.43 | 54.25 | 85.93 |
| C10 | 67.66 | 53.96 | 70.27 | 46.33 | 65.93 | 67.66 | 52.51 | 58.01 | 81.56 | 83.49 |
| C11 | 72.68 | 82.35 | 84.91 | 94.12 | 70.40 | 82.83 | 78.75 | 80.27 | 90.51 | 89.66 |
| C12 | 70.41 | 78.48 | 71.85 | 80.50 | 79.73 | 68.40 | 81.27 | 84.44 | 84.73 | 81.94 |
| C13 | 61.05 | 75.44 | 89.47 | 94.74 | 74.39 | 57.54 | 65.96 | 73.33 | 81.75 | 92.98 |
| C14 | 94.33 | 91.90 | 97.57 | 96.36 | 98.79 | 99.19 | 95.14 | 99.60 | 99.19 | 99.19 |
| C15 | 80.13 | 92.18 | 100.0 | 95.78 | 98.31 | 98.73 | 92.39 | 99.15 | 99.79 | 98.94 |
| OA(%) | 80.16 | 84.71 | 85.59 | 86.89 | 83.55 | 82.31 | 82.22 | 85.55 | 82.46 | 90.03 |
| AA(%) | 80.40 | 85.53 | 87.45 | 88.92 | 85.25 | 83.88 | 83.43 | 87.03 | 85.02 | 91.66 |
| $\kappa$(%) | 78.44 | 83.40 | 84.35 | 85.77 | 82.15 | 80.84 | 80.68 | 84.32 | 80.97 | 89.18 |
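The comparison in this subsection can be condensed into the OA margin of AdverDecom over the strongest competing method on each dataset. The sketch below uses the OA rows of the three tables above; the data layout and the name `margin_over_best_baseline` are illustrative.

```python
METHODS = ("SVM", "3-D CNN", "PResNet", "HybridSN", "RNN", "miniGCN",
           "ViT", "SpectralFormer", "SSFTTNet", "AdverDecom")
# OA (%) rows copied from the three comparison tables above.
OA = {
    "Pavia University": (83.76, 87.52, 90.11, 90.27, 80.61, 83.23, 86.27, 90.04, 82.56, 94.13),
    "Indian Pines": (76.53, 77.22, 82.97, 78.72, 81.11, 74.71, 65.16, 83.38, 80.29, 91.07),
    "Houston2013": (80.16, 84.71, 85.59, 86.89, 83.55, 82.31, 82.22, 85.55, 82.46, 90.03),
}


def margin_over_best_baseline(dataset, proposed="AdverDecom"):
    """Return (best competing method, OA margin of the proposed method in points)."""
    scores = dict(zip(METHODS, OA[dataset]))
    ours = scores.pop(proposed)
    best_name = max(scores, key=scores.get)
    return best_name, round(ours - scores[best_name], 2)


for dataset in OA:
    print(dataset, margin_over_best_baseline(dataset))
```

The strongest baseline differs across datasets, so the margin is a stricter summary than a comparison against any single fixed method.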

IV Conclusions

In this work, based on the intrinsic properties of hyperspectral images, we develop a deep intrinsic decomposition with adversarial learning for hyperspectral image classification. We develop an adversarial network to decompose the learned feature into category-related and environmental-related features. Then, with the proposed adversarial learning method, the network is trained adversarially and provides discriminative features of the hyperspectral image. Experimental results over different CNN backbones show that the proposed method remarkably improves the classification performance. Besides, the comparisons with other state-of-the-art methods also show the superiority of the proposed method.

In future work, it would be interesting to investigate the effects of the proposed AdverDecom on other tasks, such as anomaly detection and target identification. Besides, exploring the performance of AdverDecom when integrated with other training strategies, such as metric learning, is another interesting topic.

References

- [1] Z. Gong, P. Zhong, Y. Yu, et al., “A CNN with multiscale convolution and diversified metric for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 6, pp. 3599–3618, 2019.

- [2] Y. Gu, J. Chanussot, X. Jia, and J. A. Benediktsson, “Multiple kernel learning for hyperspectral image classification: A review,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 11, pp. 6547–6565, 2017.

- [3] H. Sun, X. Zheng, and X. Lu, “A supervised segmentation network for hyperspectral image classification,” IEEE Transactions on Image Processing, vol. 30, pp. 2810–2825, 2021.

- [4] N. Okada, Y. Maekawa, N. Owada, K. Haga, A. Shibayama, and Y. Kawamura, “Automated identification of mineral types and grain size using hyperspectral imaging and deep learning for mineral processing,” Minerals, vol. 10, no. 9, p. 809, 2020.

- [5] B. P. Banerjee, S. Raval, H. Zhai, and P. J. Cullen, “Health condition assessment for vegetation exposed to heavy metal pollution through airborne hyperspectral data,” Environmental Monitoring and Assessment, vol. 189, pp. 1–11, 2017.

- [6] J. Zhao, L. Liu, Y. Zhang, X. Wang, and F. Wu, “A novel way to rapidly monitor microplastics in soil by hyperspectral imaging technology and chemometrics,” Environmental Pollution, vol. 238, pp. 121–129, 2018.

- [7] W. Sun, G. Yang, J. Peng, and Q. Du, “Lateral-slice sparse tensor robust principal component analysis for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 1, pp. 107–111, 2019.

- [8] T. V. Bandos, L. Bruzzone, and G. Camps-Valls, “Classification of hyperspectral images with regularized linear discriminant analysis,” IEEE Transactions on Geoscience and Remote Sensing, vol. 47, no. 3, pp. 862–873, 2009.

- [9] A. Villa, J. A. Benediktsson, J. Chanussot, and C. Jutten, “Hyperspectral image classification with independent component discriminant analysis,” IEEE Transactions on Geoscience and Remote Sensing, vol. 49, no. 12, pp. 4865–4876, 2011.

- [10] M. S. Karoui, Y. Deville, F. Z. Benhalouche, and I. Boukerch, “Hypersharpening by joint-criterion nonnegative matrix factorization,” IEEE Transactions on Geoscience and Remote Sensing, vol. 55, no. 3, pp. 1660–1670, 2016.

- [11] L. Gao, D. Gu, L. Zhuang, J. Ren, D. Yang, and B. Zhang, “Combining t-distributed stochastic neighbor embedding with convolutional neural networks for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 8, pp. 1368–1372, 2019.

- [12] J. Peng, Y. Zhou, and C. P. Chen, “Region-kernel-based support vector machines for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 53, no. 9, pp. 4810–4824, 2015.

- [13] J. Xia, P. Du, X. He, and J. Chanussot, “Hyperspectral remote sensing image classification based on rotation forest,” IEEE Geoscience and Remote Sensing Letters, vol. 11, no. 1, pp. 239–243, 2013.

- [14] L. Ma, M. M. Crawford, and J. Tian, “Local manifold learning-based $k$-nearest-neighbor for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 48, no. 11, pp. 4099–4109, 2010.

- [15] J. Xia, P. Ghamisi, N. Yokoya, and A. Iwasaki, “Random forest ensembles and extended multiextinction profiles for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 1, pp. 202–216, 2017.

- [16] S. Li, W. Song, L. Fang, Y. Chen, P. Ghamisi, and J. A. Benediktsson, “Deep learning for hyperspectral image classification: An overview,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 9, pp. 6690–6709, 2019.

- [17] R. Hang, Q. Liu, D. Hong, and P. Ghamisi, “Cascaded recurrent neural networks for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 8, pp. 5384–5394, 2019.

- [18] X. Chen, Z. Gong, X. Zhao, W. Zhou, and W. Yao, “A machine learning surrogate modeling benchmark for temperature field reconstruction of heat source systems,” Science China Information Sciences, vol. 66, no. 5, pp. 1–20, 2023.

- [19] D. Hong, L. Gao, J. Yao, et al., “Graph convolutional networks for hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 7, pp. 5966–5978, 2021.

- [20] A. Dosovitskiy, L. Beyer, A. Kolesnikov, et al., “An image is worth 16x16 words: Transformers for image recognition at scale,” International Conference on Learning Representations, 2021.

- [21] K. Han, A. Xiao, E. Wu, et al., “Transformer in Transformer,” Advances in Neural Information Processing Systems, vol. 34, pp. 15908–15919, 2021.

- [22] D. Hong, Z. Han, J. Yao, et al., “SpectralFormer: Rethinking hyperspectral image classification with transformers,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–15, 2022.

- [23] Z. Gong, P. Zhong, W. Yao, et al., “A CNN with Noise Inclined Module and Denoise Framework for Hyperspectral Image Classification,” IET Image Processing, 2022.

- [24] S. K. Roy, G. Krishna, S. R. Dubey, and B. B. Chaudhuri, “HybridSN: Exploring 3-D–2-D CNN feature hierarchy for hyperspectral image classification,” IEEE Geoscience and Remote Sensing Letters, vol. 17, no. 2, pp. 277–281, 2019.

- [25] M. E. Paoletti, J. M. Haut, R. Fernandez-Beltran, et al., “Deep pyramidal residual networks for spectral–spatial hyperspectral image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 2, pp. 740–754, 2018.

- [26] A. B. Hamida, A. Benoit, P. Lambert, and C. B. Amar, “3-D deep learning approach for remote sensing image classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 8, pp. 4420–4434, 2018.

- [27] X. Yang, Y. Ye, X. Li, R. Y. Lau, X. Zhang, and X. Huang, “Hyperspectral image classification with deep learning models,” IEEE Transactions on Geoscience and Remote Sensing, vol. 56, no. 9, pp. 5408–5423, 2018.

- [28] K. K. Huang, C. X. Ren, H. Liu, Z. R. Lai, Y. F. Yu, and D. Q. Dai, “Hyperspectral image classification via discriminative convolutional neural network with an improved triplet loss,” Pattern Recognition, vol. 112, p. 107744, 2021.

- [29] A. J. Guo and F. Zhu, “Spectral-spatial feature extraction and classification by ann supervised with center loss in hyperspectral imagery,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 3, pp. 1755–1767, 2018.

- [30] Z. Gong, P. Zhong, and W. Hu, “Statistical loss and analysis for deep learning in hyperspectral image classification,” IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 1, pp. 322–333, 2021.

- [31] Z. Gong, W. Hu, X. Du, et al., “Deep manifold embedding for hyperspectral image classification,” IEEE Transactions on Cybernetics, vol. 52, no. 10, pp. 10430–10443, 2022.

- [32] X. Jin, Y. Gu, and W. Xie, “Intrinsic Hyperspectral Image Decomposition With DSM Cues,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–13, 2021.

- [33] R. Hang, Q. Liu, and Z. Li, “Spectral super-resolution network guided by intrinsic properties of hyperspectral imagery,” IEEE Transactions on Image Processing, vol. 30, pp. 7256–7265, 2021.

- [34] W. Xie, Y. Gu, and T. Liu, “Hyperspectral Intrinsic Image Decomposition Based on Physical Prior Driven Unsupervised Learning,” IEEE Transactions on Geoscience and Remote Sensing, 2023.

- [35] T. Xie, S. Li, and B. Sun, “Hyperspectral images denoising via nonconvex regularized low-rank and sparse matrix decomposition,” IEEE Transactions on Image Processing, vol. 29, pp. 44–56, 2023.

- [36] M. O’Connell, G. Shi, X. Shi, K. Azizzadenesheli, A. Anandkumar, Y. Yue, and S. J. Chung, “Neural-fly enables rapid learning for agile flight in strong winds,” Science Robotics, vol. 7, no. 66, pp. 1–15, 2022.

- [37] X. Kang, S. Li, L. Fang, and J. A. Benediktsson, “Intrinsic image decomposition for feature extraction of hyperspectral images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 53, no. 4, pp. 2241–2253, 2014.

- [38] Y. Gu, W. Xie, X. Li, and X. Jin, “Hyperspectral intrinsic image decomposition with enhanced spatial information,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–14, 2022.

- [39] X. Jin, Y. Gu, and T. Liu, “Intrinsic image recovery from remote sensing hyperspectral images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 57, no. 1, pp. 224–238, 2018.

- [40] Hyperspectral data, https://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_Sensing_Scenes, accessed Oct., 2023.

- [41] C. Debes, A. Merentitis, R. Heremans, et al., “Hyperspectral and LiDAR data fusion: Outcome of the 2013 GRSS data fusion contest,” IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, vol. 7, no. 6, pp. 2405–2418, 2014.

- [42] L. Sun, G. Zhao, Y. Zheng, and Z. Wu, “Spectral–Spatial Feature Tokenization Transformer for Hyperspectral Image Classification,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–14, 2022.