Deep Generative Models in Engineering Design: A Review

Abstract

Automated design synthesis has the potential to revolutionize the modern engineering design process and improve access to highly optimized and customized products across countless industries. Successfully adapting generative Machine Learning to design engineering may enable such automated design synthesis and is a research subject of great importance. We present a review and analysis of Deep Generative Machine Learning models in engineering design. Deep Generative Models (DGMs) typically leverage deep networks to learn from an input dataset and synthesize new designs. Recently, DGMs such as feedforward Neural Networks (NNs), Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and certain Deep Reinforcement Learning (DRL) frameworks have shown promising results in design applications like structural optimization, materials design, and shape synthesis. The prevalence of DGMs in engineering design has skyrocketed since 2016. Anticipating continued growth, we conduct a review of recent advances to benefit researchers interested in DGMs for design. We structure our review as an exposition of the algorithms, datasets, representation methods, and applications commonly used in the current literature. In particular, we discuss key works that have introduced new techniques and methods in DGMs, successfully applied DGMs to a design-related domain, or directly supported the development of DGMs through datasets or auxiliary methods. We further identify key challenges and limitations currently seen in DGMs across design fields, such as design creativity, handling constraints and objectives, and modeling both form and functional performance simultaneously. In our discussion, we identify possible solution pathways as key areas on which to target future work.

1 Introduction

The human design process is a ubiquitous element of modern society, playing a critical role in the technologies producing the food we eat, the products we use, and the spaces in which we live. Accelerating the design process through automation can reduce cost and increase industrial productivity, which would be immensely beneficial to global prosperity. Integrating AI into the design process can alleviate dependence on human experts and revolutionize user customizability, providing specialized products for individual users without the prohibitive cost of manual design. Driven by the widespread potential to advance global equity and prosperity through design automation, methods such as “generative design” have recently emerged alongside advanced computing and automation technologies.

“Generative design” is the process in which algorithms directly synthesize designs either via explicit programming or implicit learning. Early generative design methods leaned heavily on explicit programming of human design expertise through manually defined design representation methods like grammars [1]. While practical for explicitly encoding design constraints and objectives, these rule-based frameworks ignored opportunities for implicitly learning from the information and knowledge encoded in the vast expanse of existing designs. As the availability of computational resources increased over the past decade, data-intensive methods like deep learning opened doors to successfully automating complex human tasks such as image processing and natural language processing.

In deep learning, data is propagated through sequential layers to learn progressively higher-level meaning, an architecture generally known as an Artificial Neural Network (ANN) or just Neural Network (NN) [2]. Most of the deep learning-based approaches pioneered during the 2010s leveraged extensive quantities of data to avoid explicit feature engineering. This trend is mirrored in engineering design with algorithms learning data distributions instead of requiring them to be predefined. Among these algorithms are Deep Generative Models (DGMs) — deep learning models that can approximate complicated, high-dimensional probability distributions using a large dataset. In this review paper, we specifically define “Deep Generative Models” as algorithms that are capable of generating new samples using deep learning. Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are two classes of DGMs that have demonstrated compelling synthesis of images, text, and tabular data in numerous domains. Considering that images, text, and tabular data are all common representation methods for design, one might assume that DGMs should be capable of synthesizing full designs as well with relative ease. However, several unique properties of the generative design task pose particular challenges for DGMs. Many of these challenges are so fundamental that the future success of DGMs in engineering design is largely contingent on the ability to overcome them. We list four of these challenges below:

1. Modeling design performance: Real-world functional performance is critical in many engineering design tasks. Developing performance-aware DGMs capable of synthesizing designs for a given set of target requirements (a process termed inverse design) is challenging, and the difficulty is exacerbated by the computational cost of numerical simulation and the even greater cost of real-world evaluation.

2. Data sparsity: Compared to other research fields like Computer Vision, which have massive publicly available datasets, engineering severely lacks large, well-annotated, public datasets. Furthermore, even when data is available, it often does not cover the design space evenly, leaving large regions sparsely sampled.

3. The creativity gap: In conventional DGM applications, the overarching goal is to mimic the training data and emulate existing designs. In engineering design, emulation of existing products is often undesirable. Designers typically aim to introduce products with novel features to target new market segments.

4. Usability and feasibility: For synthesized designs to be fabricated, they must be physically feasible. Furthermore, designs must be encoded in a data representation that contains enough parametric detail to be converted into a representation usable for fabrication.

Over the past few years, the design community has made substantial progress in using generative machine learning (ML) models to create new designs. DGMs have been applied to a broad range of design tasks such as structural optimization, materials design, and shape synthesis. Over time, researchers have introduced increasingly advanced methods, which have begun to address some of the above challenges. For example, many works have proposed approaches to incorporate design performance and optimization into DGM training. Other works have explored incorporating novelty and creativity into DGMs. Despite these advancements, DGMs for engineering design are still in their infancy and will require further efforts to effectively overcome these fundamental challenges.

Our primary goal in this work is to help build cohesion between the countless active researchers in the design field working with DGMs and furthermore provide a starting point for researchers entering the field. In particular, we seek to provide researchers with a reference guide in planning projects in the data-driven generative design space. To this end, we provide an overview of common methods and tools (Sec. 2), a discussion of different data parameterization methods (Sec. 3), a review of potentially relevant research across various design domains (Sec. 5), an overview of relevant datasets (Sec. 6), and an analysis of common challenges in the field (Sec. 7). Figure 1 provides an overview of the standard process to apply DGMs in engineering design.

2 Overview of Deep Generative Models

Deep generative machine learning approaches share the goal of high-quality synthesis but vary significantly in methodology. In practice, we identify four common approaches to generating designs: direct generation using deep neural networks (DNNs), adversarial generation with Generative Adversarial Networks (GANs), generation from embedding vectors using Variational Autoencoders (VAEs), and sequential generation using Reinforcement Learning (RL). In the design community, we observe that GANs, VAEs, and RL are most commonly used for design synthesis. While DNNs, as well as extensions like recurrent neural networks (RNNs), are occasionally used for direct design synthesis, they are more frequently used for non-generative tasks. In this section, we briefly discuss the background and methodology of GANs, VAEs, and RL.

2.1 Generative Adversarial Networks

Originally introduced in 2014, Generative Adversarial Networks (GANs) [3] found initial success with convincing image synthesis performance [4, 5, 6, 7]. We provide an introduction to GANs but refer the reader to [8] for a detailed overview. A generative adversarial network [3] consists of two models, a generator $G$ and a discriminator $D$. The generator maps an arbitrary noise distribution to the data distribution (in our case, the distribution of designs) and can thus generate new data; simultaneously, the discriminator learns to distinguish between real and generated data. Both models are usually built with deep neural networks. As $D$ improves, $G$ also improves as it learns to generate data that fools $D$.
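To make the adversarial objective concrete, the sketch below computes the usual GAN losses from discriminator outputs. It assumes the discriminator already returns probabilities in (0, 1) and uses the "non-saturating" generator loss suggested in the original GAN paper rather than the literal minimax term; the network code itself is omitted.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for D: push D(x) -> 1 on real designs and D(G(z)) -> 0 on generated ones.

    d_real, d_fake: lists of discriminator probabilities in (0, 1).
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that is unsure about everything (p = 0.5) has loss 2 * ln(2).
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863
# When D confidently rejects the fakes (p close to 0), the generator's loss is large.
print(generator_loss([0.01]) > generator_loss([0.4]))         # True
```

In training, the two losses are minimized alternately with respect to the parameters of $D$ and $G$, respectively.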

Common challenges in training GAN models:

GANs are often considered difficult to train, suffering from training instability that stems from several sources [9, 10]. One common issue occurs when the discriminator overpowers the generator: it easily distinguishes generated samples, causing the generator’s gradients to vanish and effectively halting its training. Many researchers have addressed this issue. For example, the WGAN replaces the discriminator with a critic, modifies the GAN’s loss function to estimate the Wasserstein (Earth Mover’s) distance between the real and generated data distributions, and modifies the training process [11, 12].
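A small numeric illustration of why the Wasserstein distance helps: for two 1D empirical distributions with the same number of samples, the Earth Mover's distance reduces to the mean absolute difference between the sorted samples. It keeps growing smoothly as generated samples drift away from real ones, even when the two sets never overlap, whereas the Jensen-Shannon divergence implicit in the original GAN loss stays constant at log 2 in that situation. This sketch only computes the distance itself; a WGAN estimates it with a learned critic.

```python
def wasserstein_1d(real, fake):
    """Earth Mover's (W1) distance between two equal-size 1D empirical samples."""
    if len(real) != len(fake):
        raise ValueError("this shortcut assumes equal sample counts")
    pairs = zip(sorted(real), sorted(fake))
    return sum(abs(r - f) for r, f in pairs) / len(real)

real = [0.0, 1.0, 2.0]
for shift in (5.0, 10.0):
    fake = [x + shift for x in real]   # the supports never overlap
    print(shift, wasserstein_1d(real, fake))
# 5.0 5.0
# 10.0 10.0
```

The distance tells the generator not only that its samples are wrong but how far they are from the data, which is the informative gradient signal the WGAN exploits.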

Another problem that GANs face is the issue of “mode collapse,” where the generator fails to encompass all modes in the data distribution or even generates only a handful of unique samples that are capable of fooling the discriminator. To overcome these issues, researchers have developed novel algorithmic techniques [13, 14, 15] to reward diversity in samples.
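One way to reward diversity is to score a batch of samples by how non-redundant it is. The sketch below follows the spirit of determinantal point process (DPP) diversity terms: it takes the log-determinant of an RBF similarity kernel over the batch, so near-duplicate samples (a symptom of mode collapse) drive the determinant toward zero. The kernel, bandwidth, and jitter are assumptions chosen for illustration, not the exact loss of any cited work.

```python
import math

def rbf_kernel(points, bandwidth=1.0):
    """Similarity matrix K[i][j] = exp(-||x_i - x_j||^2 / (2 * bandwidth^2))."""
    n = len(points)
    K = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d2 = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            K[i][j] = math.exp(-d2 / (2.0 * bandwidth ** 2))
    return K

def log_det_spd(K, jitter=1e-6):
    """Log-determinant of a symmetric positive-definite matrix via Cholesky factorization."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] + (jitter if i == j else 0.0) - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return 2.0 * sum(math.log(L[i][i]) for i in range(n))

def diversity_score(samples, bandwidth=1.0):
    """Higher means more diverse; near-duplicate samples push the score toward -infinity."""
    return log_det_spd(rbf_kernel(samples, bandwidth))

spread = [[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]]
collapsed = [[0.0, 0.0], [0.01, 0.0], [0.0, 0.01]]
print(diversity_score(spread) > diversity_score(collapsed))  # True
```

In a training loop, a term like this would be added (with a weight) to the generator's objective so that a batch of near-identical designs is penalized.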

GAN conditioning:

In the design domain, we often have design constraints, requirements, and objectives that any generated design should satisfy. To use DGMs for such problems, these requirements must be imposed on the model. For example, we may train a DGM to generate bikes but, depending on the user, want to constrain it to generate only road bikes or mountain bikes without retraining for each generation task. Model conditioning is one way to do this. Several proposed approaches condition the GAN on a condition vector that is intended to be interpretable. Typically, GANs are conditioned on discrete labels by feeding the condition vector into both the generator and the discriminator, a configuration known as a Conditional GAN (cGAN) [16]. Alternatively, instead of feeding the condition vector into the discriminator, an auxiliary network and a cross-entropy loss can be used to reconstruct the condition vector from the generated samples, a configuration known as an Information Maximizing GAN (InfoGAN) [17]. Conditioning is also essential in design applications where inverse design is performed on performance metrics, which often exist in continuous spaces (e.g., stiffness, lift coefficient, drag coefficient, density). Researchers have proposed continuous conditioning solutions for GANs such as the Regressional GAN [18], the continuous conditional GAN (CcGAN) [19], and the performance conditioned diverse GAN (PcDGAN) [20].
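In a cGAN, conditioning is often implemented by plain concatenation. The sketch below builds the generator and discriminator inputs for the bike example, assuming a hypothetical two-label condition set and a design given as a short list of floats; real implementations perform the same concatenation on tensors inside the networks.

```python
import random

BIKE_TYPES = ["road", "mountain"]  # hypothetical condition labels

def one_hot(label, classes=BIKE_TYPES):
    return [1.0 if c == label else 0.0 for c in classes]

def generator_input(noise, label):
    """The cGAN generator sees [noise | condition]."""
    return list(noise) + one_hot(label)

def discriminator_input(design, label):
    """The cGAN discriminator sees [design | condition], so it can reject
    a realistic road bike that was requested as a mountain bike."""
    return list(design) + one_hot(label)

rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(4)]
print(len(generator_input(z, "mountain")))       # 6
print(discriminator_input([0.7, 0.2], "road"))   # [0.7, 0.2, 1.0, 0.0]
```

Swapping the label at sampling time is what lets a single trained model produce either bike type without retraining.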

2.2 Variational Autoencoders

Introduced in 2013, Variational Autoencoders have found significant success in many machine learning applications. Autoencoders are unsupervised embedding algorithms consisting of an encoder that maps an input design into a (typically) lower-dimensional latent space and a decoder that reconstructs the design as accurately as possible from its latent representation. The encoder and decoder are conventionally implemented using deep neural networks. To generate new samples, latent vectors are sampled from the latent space and fed through the decoder. Typically, the distribution of real data mapped to the latent space of an autoencoder is sparse, meaning that sampling a realistic latent vector is difficult. This limitation is addressed by the Variational Autoencoder (VAE), first proposed by Kingma and Welling [21]. The VAE adds probabilistic sampling in the latent space, which regularizes the latent distribution. In practice, the VAE’s encoder outputs $d$ means and $d$ variances, from which $d$-dimensional latent vectors are sampled before decoding. To maintain a predictable latent space distribution, the VAE adds a Kullback-Leibler (KL) divergence [22] loss between the latent distribution and a standard Gaussian. Interested readers are encouraged to refer to the literature [23] for a more detailed overview.
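The two VAE-specific ingredients described above, sampling latent vectors from the encoder's means and variances and the KL penalty toward a standard Gaussian, fit in a few lines. This sketch assumes a diagonal Gaussian encoder that outputs log-variances (a common parameterization) and uses the closed-form KL divergence for that case; the encoder and decoder networks are omitted.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I); the randomness is isolated in eps
    so that gradients can flow through mu and log_var during training."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(42)
mu, log_var = [0.5, -1.0], [0.0, math.log(0.25)]
z = reparameterize(mu, log_var, rng)     # a 2-dimensional latent vector
print(len(z))                            # 2
print(kl_to_standard_normal([1.0], [0.0]))  # 0.5 (mean shifted one unit from the prior)
```

The full VAE loss adds this KL term to a reconstruction loss between the input design and the decoder's output.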

Conditional VAEs:

Just as with GANs, we may seek to condition VAE training on design constraints or user preferences. The VAE has a natural advantage over the GAN in that its latent space is typically already structured. However, since this structure may be fairly weak and difficult to interpret, explicitly conditioning VAEs may still be desirable. The Conditional VAE (cVAE) [24] achieves this by extending the conventional VAE with a conditioning vector that is input to both the encoder and the decoder.

2.3 Reinforcement Learning

Reinforcement Learning fundamentally differs from the other DGMs discussed in that it learns without a dataset, through a large number of trial-and-error interactions between an actor and an environment [25]. After taking an action, the actor typically receives a reward signal based on the action’s effect on the environment. The actor’s goal is to choose actions that maximize the total reward it receives. From this point of view, reinforcement learning is similar to optimization, with the cumulative reward as the objective being optimized.

One of the first attempts to combine deep learning with reinforcement learning was made in 2013 by Mnih et al., who applied deep learning to a reinforcement learning method known as Q-Learning [26]. Q-Learning learns the state-action value function, or Q-function, which is a progressively updated estimate of the expected (discounted) cumulative reward obtained by taking a particular action in a particular state. Mnih et al. learned the Q-function using convolutional neural networks (CNNs). Many deep RL techniques have been introduced since this first work; we leave further exploration of their details to the reader.
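For reference, the tabular Q-Learning update that deep variants approximate with a neural network is shown below in standard notation (not taken from the cited works), with learning rate $\alpha$, discount factor $\gamma$, reward $r$, and next state $s'$:

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$

In deep Q-learning, $Q$ becomes a network $Q_\theta$, and the bracketed temporal-difference error is reduced by gradient descent on the parameters $\theta$ instead of by updating a table entry.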

In practice, when applying RL to design applications, the design process is usually broken down into a sequence of steps that build a design or alter an existing one (i.e., actions taken to alter or expand the current state of the design being generated), and the environment returns a reward based on the quality or performance of the resulting design. While RL requires no dataset, this advantage is balanced by a dependence on meaningful and reliable reward signals, which may require a high-fidelity simulation environment. One major benefit of RL over GANs and VAEs is that the reward function can be based on any objective, which need not be differentiable. In contrast, any objective added to the loss function of a GAN or VAE must be differentiable, since GANs and VAEs are trained using gradient-based optimization [15].
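The sequential framing can be illustrated with a toy problem. In the hypothetical environment below, a "design" is built by placing or skipping material in four slots, one decision per step, and a black-box, non-differentiable scorer (a made-up weighted sum with a hard material limit) returns a reward only once the design is complete. Tabular Q-Learning then discovers the best design purely by trial and error; a deep RL method would replace the table with a neural network.

```python
import random
from collections import defaultdict

WEIGHTS = [3.0, -1.0, 2.0, 1.0]   # hypothetical per-slot performance contributions
MAX_MATERIAL = 3                  # hypothetical hard feasibility limit
N_SLOTS = len(WEIGHTS)

def evaluate(design):
    """Black-box 'simulator': infeasible designs score 0 and no gradient is ever needed."""
    if sum(design) > MAX_MATERIAL:
        return 0.0
    return sum(w * b for w, b in zip(WEIGHTS, design))

def train(episodes=2000, alpha=0.5, gamma=1.0, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)                 # Q[(state, action)]; state = tuple of choices so far
    for _ in range(episodes):
        state = ()
        while len(state) < N_SLOTS:
            action = rng.choice((0, 1))    # purely exploratory behaviour (Q-Learning is off-policy)
            next_state = state + (action,)
            done = len(next_state) == N_SLOTS
            reward = evaluate(next_state) if done else 0.0
            future = 0.0 if done else max(Q[(next_state, a)] for a in (0, 1))
            Q[(state, action)] += alpha * (reward + gamma * future - Q[(state, action)])
            state = next_state
    return Q

def greedy_design(Q):
    """Build a design by always taking the action with the highest learned value."""
    state = ()
    while len(state) < N_SLOTS:
        state += (max((0, 1), key=lambda a: Q[(state, a)]),)
    return state

Q = train()
best = greedy_design(Q)
print(best, evaluate(best))   # (1, 0, 1, 1) 6.0
```

Note that the material limit acts as a hard constraint inside the reward, something that would be awkward to express in a differentiable GAN or VAE loss.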

3 Overview of Design Representation Methods

In this section, we discuss common design representation methods seen in DGMs for engineering design which are visualized in Section 2 of Figure 1. We include a definition and discuss the pros and cons of each method.

3.1 Images

Design data often comes in the form of images (e.g., microstructure scans) or can be represented in image form (e.g., Topology Optimization). An image consists of a rectangular grid of pixels, each of which contains one or more color values. Images are commonly represented by third-order tensors of size $H \times W \times C$ (height, width, and number of color channels). Common color schemes are black-and-white (a single boolean channel), grayscale (a single integer channel), and color (3-4 integer channels). Pros: The image is an information-rich representation and can capture many details of a design. The use of convolution/convolution-transpose filters in deep learning provides a convenient tool for learning and generating both high-level and low-level features, as well as for upsampling/downsampling. Many cutting-edge ML techniques are pioneered in the computer vision domain and are often directly applicable to images. Cons: Because designs are represented by pixels, generated design images can be unusable for downstream tasks. Accurately fabricating designs based on images can be difficult or impossible. Even performance evaluation using conventional simulation tools like FEA or CFD can require an intermediate conversion from an image to a 3D model. The poor usability of images is exacerbated by the prevalence of artifacts in many applications (hanging pixels, disconnected geometry, etc.). Artifacts are especially common when training on the (typically) small datasets of the design domain, since training is often terminated early due to overfitting concerns. All in all, images can be considered surrogate representations of engineering designs and may lack domain knowledge and information about the physical realization of the design. Therefore, DGMs that use image representations often leave a gap between the generated images and the actual designs they represent.
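Artifacts such as disconnected geometry can at least be detected cheaply on boolean images. The sketch below counts 4-connected material regions in a binary image with a breadth-first flood fill; a count above one flags floating pixels or islands that could not be fabricated as a single part. The 4-connectivity choice and the toy image are assumptions for illustration.

```python
from collections import deque

def count_material_regions(image):
    """Count 4-connected regions of 1s in a binary image given as a list of rows."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] and not seen[r][c]:
                regions += 1
                queue = deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < rows and 0 <= nx < cols and image[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return regions

generated = [
    [1, 1, 1, 0, 0],
    [1, 0, 1, 0, 1],   # the pixel at the right edge is a 'hanging' artifact
    [1, 1, 1, 0, 0],
]
print(count_material_regions(generated))  # 2
```

A check like this can be used to filter generated samples or to report the fraction of disconnected outputs as a quality metric.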

3.2 Voxelizations

Voxels are 3D grid points that are effectively the 3D equivalent of pixels. As such, voxelizations share many characteristics with images. In practice, voxels are not conducive to ‘color’ parameterization and are typically represented as booleans (empty space vs. object). This effectively makes them third-order tensors of size $H \times W \times D$ (height, width, and depth). Pros: Voxelizations support 3D convolution, which can learn high-level and low-level features in 3D. Cons: Compared to images and other representations, the curse of dimensionality is especially pronounced with voxels, as the number of parameters scales with the cube of spatial resolution. Voxelizations also share the same issues as images: their usability in downstream tasks is limited, and artifacts are very prevalent. Like images, voxelizations serve as surrogate representations (often representing CAD models, which originate from parametric representations, or 3D shapes, which originate from meshes). Like many other representations such as point clouds and Signed Distance Fields, voxelizations often require conversion before they can be used in downstream tasks. For example, they are often converted to Boundary Representation (BRep) or polygonal representations, which are often the native parameterizations of rendering and graphics software, Finite Element Analysis, and Computational Fluid Dynamics simulation.
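The cubic scaling is easy to quantify. Assuming one boolean per pixel or voxel, the short calculation below compares element counts for 2D images and 3D voxel grids at the same per-axis resolution.

```python
def element_counts(n):
    """Number of cells in an n x n image and in an n x n x n voxel grid."""
    return n ** 2, n ** 3

for n in (32, 64, 128, 256):
    pixels, voxels = element_counts(n)
    print(f"{n:>4} per axis: {pixels:>9,} pixels vs {voxels:>12,} voxels")
```

At 256 cells per axis, a single boolean image has 65,536 entries while the voxel grid has 16,777,216; each doubling of resolution multiplies the voxel count by 8 rather than 4.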

3.3 Point Clouds

Point Clouds are simple collections of points, often in 3D space, that are sampled on the surface of (or within) some object. Pros: Point Clouds can represent arbitrarily complex geometry with a finite number of points, though fidelity may vary. Point Clouds are often the native output of 3D scanning software, making them relatively easy to create [27]. Cons: Like voxelizations and Signed Distance Functions, Point Clouds often require conversion to BRep or polygonal representations such as meshes [28] for downstream tasks.
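Conversions between these representations are frequent. As one example, the sketch below turns a point cloud into a boolean occupancy grid by marking every voxel that contains at least one point. It assumes an axis-aligned bounding cube and a hypothetical resolution, and it ignores the surface reconstruction that would be needed to obtain a solid, filled voxel model.

```python
def voxelize(points, resolution, lo, hi):
    """Map 3D points inside the cube [lo, hi]^3 to a set of occupied (i, j, k) voxel indices."""
    size = (hi - lo) / resolution
    occupied = set()
    for p in points:
        # Clamp so that points lying exactly on the upper boundary fall in the last voxel.
        idx = tuple(min(int((coord - lo) / size), resolution - 1) for coord in p)
        occupied.add(idx)
    return occupied

cloud = [(0.05, 0.05, 0.05), (0.06, 0.04, 0.01), (0.95, 0.55, 0.55), (1.0, 1.0, 1.0)]
grid = voxelize(cloud, resolution=10, lo=0.0, hi=1.0)
print(sorted(grid))  # [(0, 0, 0), (9, 5, 5), (9, 9, 9)]
```

The first two points collapse into the same voxel, which illustrates how fidelity depends on the chosen resolution.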

3.4 Meshes

Meshes are a common method to represent objects in 3D space. Triangular meshes are by far the most commonly used form. Triangular meshes are the native representation used in many computer graphics algorithms and software, as well as many Finite Element tools. Pros: Meshes can be directly visualized and simulated in many FEA or CFD tools, enabling easy pipelines for performance evaluation using numerical methods. A mesh can be considered a specialized type of graph and can leverage graph operators like graph convolutional operators. Cons: In contrast to other representations like voxelizations and point clouds, meshes are more challenging to directly generate using Machine Learning methods, despite recent advances in algorithms that directly generate meshes [29, 30, 31].
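The mesh-as-graph view is direct: every triangle contributes three undirected edges between its vertices, and edges shared by neighbouring triangles are merged. The sketch below extracts that edge set and the vertex adjacency from a face list, which is the structure a graph convolution would then operate on; the face-list format (vertex-index triples) is an assumption.

```python
from collections import defaultdict

def mesh_to_graph(faces):
    """Return the undirected edge set and the adjacency lists of a triangle mesh."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))   # canonical ordering merges shared edges
    adjacency = defaultdict(set)
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)
    return edges, adjacency

# Two triangles sharing the edge (1, 2): a unit square split along a diagonal.
faces = [(0, 1, 2), (1, 3, 2)]
edges, adjacency = mesh_to_graph(faces)
print(sorted(edges))          # [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]
print(sorted(adjacency[1]))   # [0, 2, 3]
```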

3.5 Signed Distance Functions

The Signed Distance Function/Field (SDF) is a representation method that consists of a (typically 3D) functional map from a coordinate point to an SDF value. The magnitude of this value indicates the distance to the nearest point on the surface of the object and the sign indicates whether the point is inside or outside the object. SDFs themselves can be represented in many ways, for example, as a rasterized grid in which each ‘voxel’ contains a continuous numerical value denoting the SDF value at that point. Pros: SDFs can serve as a convenient intermediate parameterization for many learning tasks. Cons: Like point clouds or voxels, SDFs are difficult to use in downstream tasks without first converting to BRep or polygonal representations.
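As a concrete example, the SDF of a circle has a closed form. The sketch below uses the common convention that the SDF is negative inside and positive outside (the opposite sign convention also appears in practice) and rasterizes it onto a coarse grid, as described above; the object's surface is the zero level set.

```python
import math

def circle_sdf(x, y, cx=0.0, cy=0.0, radius=1.0):
    """Signed distance to a circle: negative inside, zero on the boundary, positive outside."""
    return math.hypot(x - cx, y - cy) - radius

def rasterize(sdf, n, lo=-2.0, hi=2.0):
    """Sample an SDF at the centres of an n x n grid, giving a 'voxel'-style SDF array."""
    step = (hi - lo) / n
    centres = [lo + (i + 0.5) * step for i in range(n)]
    return [[sdf(x, y) for x in centres] for y in centres]

print(circle_sdf(0.0, 0.0))   # -1.0 (centre, one unit inside)
print(circle_sdf(3.0, 0.0))   # 2.0 (two units outside)
grid = rasterize(circle_sdf, 4)
inside = sum(v < 0 for row in grid for v in row)
print(inside)                 # 4
```

Recovering a mesh from such a grid requires an extra step (e.g., extracting the zero level set), which is the conversion cost noted above.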

3.6 Parameterizations

We use the term “parametric” data to encompass any design representation consisting of a collection of design parameters where any spatial or temporal significance of parameters is unknown. Most parametric data can be organized in tabular form with each row being a collection of parameters representing a single design and each column describing a design parameter. Tabular design data often consists of a collection of mixed-datatype parameters where relations between these parameters may be unclear or nonexistent. Since parametric data may come in many varieties, the pros and cons discussed may not apply to every case. Pros: Quality parametric data is typically very information-dense (i.e. requiring fewer parameters to encode the same level of geometric detail). Whereas spatially-organized representations such as pixels or voxels encode designs with uniform information density, parametric data can contain more detail in design-critical areas without the need for upsampling the entire representation. This information density often comes with a lower dimensionality which can make optimization of parametrically represented designs significantly easier. Parametric data may also be more supportive of downstream tasks, especially if design parameters are human-interpretable. For example, a detailed enough design parameterization may allow generated designs to be directly fabricated using conventional (non-additive) manufacturing techniques. Human-interpretable parameterizations can also give human designers a tractable method to interact with generative methods to allow for human-in-loop design. Finally, design parameters can sometimes be directly linked to the latent space of a generative method, as demonstrated in numerous works [32, 33], creating a pipeline to directly condition design generation on high-level design goals. 
Linking design parameters with latent variables has several potential advantages, such as enabling more effective optimization or inverse design using generative methods. Cons: Learning parametric data can be particularly challenging. Parametric data commonly uses mixed datatypes and inherits the training challenges of the constituent components. Multimodal distributions, skewed categories, non-Gaussian distributions, data sparsity, and poor data scaling additionally make the application of DGMs and training very difficult. Since methods that are robust to all of the mentioned challenges are difficult to come by, successfully applying existing methods to the parametric data domain can be hard. Finally, since parametric data may be nontrivial to convert to 3D models, generated parametric designs may be challenging to evaluate using numerical simulations or through qualitative visualization.
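A typical preprocessing step for such mixed-datatype tabular designs is to one-hot encode categorical parameters and rescale continuous ones before training, then invert the mapping for generated samples. The sketch below does this for two hypothetical bike parameters (frame material and seat-tube length); the column names, categories, and ranges are illustrative assumptions, and min-max scaling is only one of several reasonable choices.

```python
MATERIALS = ["steel", "aluminum", "carbon"]   # hypothetical categorical parameter
TUBE_RANGE_MM = (400.0, 650.0)                # hypothetical bounds of a continuous parameter

def encode(design):
    """Turn {'material': str, 'seat_tube_mm': float} into a flat numeric vector."""
    onehot = [1.0 if design["material"] == m else 0.0 for m in MATERIALS]
    lo, hi = TUBE_RANGE_MM
    scaled = (design["seat_tube_mm"] - lo) / (hi - lo)   # maps the range to [0, 1]
    return onehot + [scaled]

def decode(vector):
    """Invert encode: argmax for the category, un-scale the continuous value."""
    onehot, scaled = vector[:len(MATERIALS)], vector[-1]
    material = MATERIALS[max(range(len(MATERIALS)), key=lambda i: onehot[i])]
    lo, hi = TUBE_RANGE_MM
    return {"material": material, "seat_tube_mm": lo + scaled * (hi - lo)}

print(encode({"material": "aluminum", "seat_tube_mm": 525.0}))  # [0.0, 1.0, 0.0, 0.5]
print(decode([0.1, 0.2, 0.7, 0.5]))  # {'material': 'carbon', 'seat_tube_mm': 525.0}
```

The argmax in `decode` handles the soft, non-one-hot values a generator typically outputs for categorical columns.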

3.7 Grammars

Grammars are representation methods consisting of variables, terminal symbols, nonterminal symbols, and a set of rules. Rules describe how nonterminal symbols can expand into other terminal and nonterminal symbols. The most prevalent grammars in engineering design are graph and spatial grammars [1], which have been applied in a wide variety of applications, such as the design of satellites and electro-mechanical systems. Grammars can be especially useful for dictating feasible assembly hierarchies of design components, as in [34]. Pros: Grammars can explicitly constrain design spaces to feasible or desirable regions by nature of their construction, thereby encoding domain knowledge. Cons: Grammars are challenging to implicitly learn and often must be manually defined. Grammars can also restrict the exploration of the design space. A survey on grammar-based design synthesis approaches is provided in [1].
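A minimal string-rewriting grammar shows the mechanics: nonterminals are expanded by rules until only terminals remain, so every derived design respects the structure the rules encode. The rule set below describes a hypothetical truss-like assembly invented purely for illustration; it is not taken from [1] or [34].

```python
import random

# Hypothetical rules: uppercase = nonterminal, lowercase = terminal component.
RULES = {
    "STRUCTURE": [["SUPPORT", "SPAN", "SUPPORT"]],
    "SPAN": [["beam"], ["beam", "joint", "SPAN"]],   # recursion allows longer spans
    "SUPPORT": [["column"], ["column", "brace"]],
}

def derive(symbol, rng, depth=0, max_depth=6):
    """Expand `symbol` into a list of terminals by randomly applying rules."""
    if symbol not in RULES:
        return [symbol]                  # terminal: emit as-is
    options = RULES[symbol]
    if depth >= max_depth:
        options = options[:1]            # force the first (non-recursive) rule so derivation ends
    expansion = rng.choice(options)
    result = []
    for s in expansion:
        result.extend(derive(s, rng, depth + 1, max_depth))
    return result

design = derive("STRUCTURE", random.Random(3))
print(design)
```

Whatever random choices are made, every derived design starts and ends with a support, which is the kind of feasibility guarantee that grammars provide by construction.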

3.8 Graphs

Graphs are a highly flexible representation method consisting of nodes and edges, which can be directed or undirected. Graph-based representations have proven successful for many different aspects of design generation and optimization and have provided avenues for describing complex systems efficiently. Pros: Graphs are highly adaptable and can represent many different kinds of complex systems and designs [35, 36, 37, 38]. Graphs also provide representations for design processes and for modeling complex interactions in systems [39, 40], which may enable methods for automating the design of systems or modeling inter-part dependencies. Graph neural networks (GNNs) [41, 42, 43, 44, 45] provide an excellent tool for machine learning on graphs. Cons: Despite the development of GNNs in the computer science community [46, 47, 48, 49] and their success in molecular graph generation [50, 51], graph-based DGMs are used less in the design community, possibly due to the lack of graph-based design datasets.
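The core operation behind many GNNs is message passing: each node updates its features by aggregating those of its neighbours. The sketch below performs one mean-aggregation step on a small hypothetical component graph with scalar features and fixed weights; learned GNN layers use trainable weight matrices and nonlinearities, which are omitted here.

```python
def message_passing_step(features, edges, w_self=0.5, w_neigh=0.5):
    """h_i' = w_self * h_i + w_neigh * mean(h_j for neighbours j of i)."""
    neighbours = {node: [] for node in features}
    for u, v in edges:                  # undirected graph: pass messages both ways
        neighbours[u].append(v)
        neighbours[v].append(u)
    updated = {}
    for node, h in features.items():
        nbrs = neighbours[node]
        agg = sum(features[j] for j in nbrs) / len(nbrs) if nbrs else 0.0
        updated[node] = w_self * h + w_neigh * agg
    return updated

# Hypothetical system: a motor connected to a gearbox, which drives a wheel.
features = {"motor": 1.0, "gearbox": 0.0, "wheel": 0.0}
edges = [("motor", "gearbox"), ("gearbox", "wheel")]
print(message_passing_step(features, edges))
# {'motor': 0.5, 'gearbox': 0.25, 'wheel': 0.0}
```

After one step, only the motor's direct neighbour is affected; a second step propagates information to the wheel, which is how stacked layers capture longer-range inter-part dependencies.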

4 Literature Review Methodology

Sec. 5 discusses specific works that apply Deep Generative Models to engineering design or make advancements to existing generative ML methods in the context of engineering design. We consider works based on a predefined scope, with each work we discuss meeting the following selection criteria. Note that these criteria only apply to the engineering design papers presented and that the works we cite to add context to or substantiate the discussion of fundamentals, applications, and datasets need not adhere to these rules.

1. We limit our consideration to papers involving Deep Generative Models, focusing in particular on Variational Autoencoders, Generative Adversarial Networks, and Reinforcement Learning. Works must use deep learning. Works that only consider design optimization are excluded.

2. We only consider work specifically relevant to engineering design and not to other domains (such as computer science).

3. We consider only work published between Jan. 2014 and Sep. 2021, when we concluded our review, since pivotal deep generative methods such as VAEs and GANs were introduced around the start of this period.

To identify works, we searched Google Scholar for “Generative Adversarial Network,” “Variational Autoencoder,” and “Reinforcement Learning.” We initially confined our search to a set of known design venues, specifically the Journal of Mechanical Design, the Proceedings of the International Design Engineering Technical Conferences, the Computer-Aided Design journal, the International Conference on Engineering Design, and the Artificial Intelligence for Engineering Design, Analysis, and Manufacturing journal. This helped us identify an initial set of seed papers: from all search results identified through these methods, 41 papers were deemed relevant and included. Relevance was assessed independently by two raters, who are also authors of this paper; papers on which the raters disagreed were discussed by all authors, who mutually decided on their classification. Next, papers cited in these seed papers were considered, and a further 22 papers from other venues were deemed relevant and included. Of the 63 papers, 48 were published between Jan. 2019 and Aug. 2021, while only 15 were published between Jan. 2016 and Dec. 2018, indicating strong growth in the field in recent years.

| Method | Topology Optimization | Materials | 2D Shape Synthesis | 3D Shape Synthesis | Other Domains |
| Plain NN | [52]i [53]vi [54]p∗ [55]i | [56]p [57]i [58]i | |||
| GAN | [59]i [60]i [61]i [62]i | [63][64]i [65]vi [66]v [67]i [68]vi | [69]c | ||
| GAN+Conditioning | [70]i[71]i[72]i [73]i | [74]p[75]p[76]p[33]ph | |||
| New GAN-based | [72]i | [15]p | [77]v | [78]i | |
| AE/VAE | [79]i | [80]i [81]i [82]i [32]i [83]i | [84]v | [85]p, [86]p,[87]p | |
| cVAE | [88] [89]i | [85]p[90]i | |||
| New VAE-based | [91]i | [92]s | [93]ip | ||
| RL-based | [94]p [95]p | [96]ip [34]g [97]ip [98]p [99]p | |||
| Other Method | [100]v [101]i | [102]i [103]i [67]i [104]i [105]i [106]i | [95]p | [100]v[107]p [34]g [108]ip [109]ip [110]g | |
| +Style Transfer | [79]i | [64]i[65]vi[80]i [57]i [104]i | |||
| +Genetic Alg. | [82]i [105]i | [92]s | |||
| +Bayesian Opt. | [64]i [83]i | [76]p |

*Model-based reconstruction using parameters in a lower-dimensional space

Technically not images, but use an image-like parameterization structure

5 Application Domains in Engineering Design

Engineering design encompasses a wide variety of applications, ranging from designing aircraft models to small-scale metamaterials. To structure different types of applications using DGMs, we grouped them into a few categories of application areas, which are discussed in this section and are reported in Table 1.

5.1 Deep Generative Models in Topology Optimization

Topology Optimization (TO) is a well-established research field with a long history. TO searches a design space to find an ideal spatial distribution of material that optimizes some predefined objective. Common areas of application include solid mechanics [111, 112], fluid dynamics [113, 114], additive manufacturing [115, 116], and heat transfer [117, 118]. While Topology Optimization is considered a method for generative design, standard TO does not leverage deep learning and is not considered a DGM. In recent years, however, several papers have proposed methods to use TO and DGMs together, in many cases to address the computational cost of TO on large amounts of data. Despite differences between architectures, we found that many DGMs for TO share certain characteristics. In particular, due to the predominant use of voxelized or pixelized representations, many hybrid TO-Generative ML models use methods originally developed for computer vision applications, such as convolutional neural networks and super-resolution [59, 53].
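For readers new to TO, the density-based SIMP (Solid Isotropic Material with Penalization) scheme is a widely used way to make the material distribution continuous: each element receives a density in [0, 1], and its stiffness is interpolated with a penalty exponent that pushes the optimizer toward 0/1 designs. The sketch below shows only this interpolation and the volume-fraction value that is usually constrained; the finite element analysis and the optimizer are omitted, and the parameter values are conventional defaults rather than values from the cited works.

```python
def simp_modulus(density, e0=1.0, e_min=1e-9, penal=3.0):
    """Modified SIMP interpolation: E(rho) = E_min + rho**p * (E0 - E_min)."""
    return e_min + density ** penal * (e0 - e_min)

def volume_fraction(densities):
    """Fraction of the design domain occupied by material (the usual TO constraint)."""
    return sum(densities) / len(densities)

densities = [1.0, 0.5, 0.0, 1.0]
print([round(simp_modulus(r), 3) for r in densities])  # [1.0, 0.125, 0.0, 1.0]
print(volume_fraction(densities))                       # 0.625
```

An element at density 0.5 consumes half the material but contributes only 12.5% of the stiffness, so intermediate "gray" densities are uneconomical and the optimizer is driven toward crisp topologies. These pixelized density fields are what image-based DGMs for TO learn from.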

Supervised generation of optimized topologies:

To avoid the computational cost of Topology Optimization, DGMs have been used to predict final optimized topologies directly. This approach can also be used as an initialization technique for conventional TO, which allows conventional TO algorithms to rapidly converge, saving computational cost. We consider a baseline for DGMs in TO, in which an existing generative architecture is applied to a dataset of TO-generated designs and the network is trained to generate samples mimicking the training data. A few papers fall within this category of methods: Rawat and Chen [60] train a WGAN on a dataset generated through TO and train an auxiliary network to additionally predict performance metrics. Sharpe and Seepersad [70] expand on this baseline with a cGAN conditioned on volume fraction and load location. Guo et al. [79] train a VAE with an additional style transfer [119, 120] loss on a TO-generated dataset for heat transfer and propose iterative strategies for targeted design optimization using the VAE’s latent space. Style transfer is discussed further in Sec. 5.2.

Iterative DGM training, filtering, and human guidance in TO:

Oh et al. [62, 61] propose a method that expands on the baseline generative-network-fitting approach by iteratively synthesizing new designs, optimizing them with TO, and then discarding designs that are too similar to those already in the collection. The authors use a modified Boundary Equilibrium GAN (BEGAN) [121], which builds on the WGAN, and begin training on a collection of existing TO-generated topologies. Unlike the generative-network-fitting approaches discussed above [60, 70, 79], this retraining and re-optimization allows the framework to generate, optimize, and explore new areas of the design space. The proposed method is applied to the problem of wheel design, with the key motivation being to find a trade-off between the aesthetics of real designs and the structural performance of designs found through TO. The authors present strong empirical results on this wheel design problem.
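The generate, optimize, filter, and retrain loop described above can be illustrated with a deliberately simplified sketch. It is not Oh et al.'s implementation: a Gaussian fitted to the dataset stands in for the BEGAN, a few gradient steps on a hypothetical toy objective stand in for TO, and the similarity filter uses an assumed Euclidean distance threshold (`min_dist`). All function names are illustrative.

```python
import numpy as np

def toy_objective_grad(x):
    # Hypothetical stand-in for TO: f(x) = (||x|| - 1)^2, minimized on the unit circle.
    r = np.linalg.norm(x, axis=1, keepdims=True) + 1e-12
    return 2.0 * (r - 1.0) * x / r

def optimize(x, steps=20, lr=0.1):
    # Stand-in for running TO on each synthesized design: plain gradient descent.
    for _ in range(steps):
        x = x - lr * toy_objective_grad(x)
    return x

def fit_generator(data):
    # Stand-in "generative model": a Gaussian fitted to the current dataset.
    return data.mean(axis=0), np.cov(data, rowvar=False)

def filter_similar(candidates, data, min_dist):
    # Keep a candidate only if it is at least min_dist from every design kept so far.
    pool = list(data)
    kept = []
    for c in candidates:
        if min(np.linalg.norm(p - c) for p in pool) >= min_dist:
            kept.append(c)
            pool.append(c)
    return np.array(kept).reshape(-1, data.shape[1])

def iterative_generation(data, rounds=5, n_samples=50, min_dist=0.2, seed=0):
    rng = np.random.default_rng(seed)
    for _ in range(rounds):
        mean, cov = fit_generator(data)                               # (re)train
        samples = rng.multivariate_normal(mean, cov, size=n_samples)  # synthesize
        optimized = optimize(samples)                                 # optimize
        new = filter_similar(optimized, data, min_dist)               # filter
        data = np.vstack([data, new])                                 # augment
    return data
```

Because each round refits the generator to a dataset that now includes optimized, non-redundant designs, the sampled region can drift away from the initial training data, which is the mechanism that lets such frameworks explore new areas of the design space.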

The issue of gaps in the design space of topologies is also addressed by Fujita et al. [101], who propose an approach leveraging a Variational Deep Embedding (VaDE) [122]. An initial dataset is generated using TO. The proposed method sequentially identifies voids in the design space using the VaDE, decodes designs from the void, optimizes them using TO, then adds them to the training dataset.

Although the above works propose methods with automated retraining steps, human input can also be injected into the training process. For example, Valdez et al. [73] propose a cGAN-based human-in-the-loop topology design generation framework in which the designer iteratively selects design clusters to gradually home in on preferable designs.

DGMs for TO that utilize super-resolution:

Since one of the key limitations of TO is its computational cost, which scales with resolution, a major focus of DGMs for TO has been attaining high-resolution, TO-like results. A common approach uses super-resolution, a computer vision technique for converting low-resolution images into high-resolution ones. Several works expand on the baseline DGM-in-TO framework by learning from a low-resolution TO-generated dataset and then performing super-resolution on the synthesized topologies. For example, Yu et al. [72] add a cGAN for upscaling. In a similar approach, Li et al. [59] generate low-resolution topologies for heat transfer problems with one GAN, then use a Super-Resolution GAN (SRGAN) [123] to produce high-resolution optimal topologies. Other researchers have used transfer learning for the super-resolution task instead of a GAN or VAE. For example, Behzadi et al. [53] train a feedforward NN-based model with an MSE loss, and without any discriminator, to predict optimized topologies directly. Once the model is trained on low-resolution data, they freeze its weights, append a few layers that increase the output resolution, and train only those layers to obtain high-resolution samples. This avoids the need for a large quantity of high-resolution data and the extra time required to train a high-resolution model from scratch. Many papers that apply super-resolution techniques demonstrate that, after initial training, their framework consistently generates near-optimal topologies many times faster than classic TO [72, 59].

Improving DGM-based topology generation using physical properties:

Several papers have improved on the baseline performance of DGMs for topology generation by using physical properties of the design domain. For example, Nie et al. [71] adapt the cGAN architecture Pix2Pix [124] to generate topologies from spatial fields of various physical quantities (displacement, strain energy density, and von Mises stress), which are given as input to the generator. Topologies generated by TO are taken as ground truth for training. The authors also propose a new generator architecture combining the Squeeze-and-Excitation ResNet [125, 126] with U-Net [127]. Cang et al. [55] use a neural network to generate optimized topologies from loading conditions. They propose an approach to rapidly evaluate how far proposed solutions deviate from the problem’s optimality conditions, and they progressively augment their dataset during training by computing optimal solutions (using TO) for the proposed solutions that most strongly violate these conditions.

Other DGM approaches in topology generation:

Sosnovik and Oseledets [52] propose using gradient information from an intermediate density distribution to estimate the final topology with a DNN. Their DNN takes both the density distribution from an intermediate iteration of a TO run and the gradient of this density distribution, and outputs an estimate of the final topology without waiting for TO to converge. The authors evaluate the binary accuracy of predictions of the final topology made after only five iterations of TO, whereas the full TO process takes 100 iterations to complete.
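A minimal sketch of how the network input and the reported metric might be assembled is shown below. It assumes that the "gradient" channel is the difference between the density fields of two consecutive TO iterations and that binary accuracy is computed after thresholding densities at 0.5; both are assumptions for illustration, and the DNN itself is omitted.

```python
import numpy as np

def make_input(history, k):
    # history: array of shape (n_iters, H, W) holding TO density fields.
    # Assumption: the "gradient" channel is the change between iterations k-1 and k.
    density = history[k]
    change = history[k] - history[k - 1]
    return np.stack([density, change], axis=0)  # (2, H, W) network input

def binary_accuracy(pred, target, threshold=0.5):
    # Fraction of pixels whose solid/void state (after thresholding) matches the target.
    return float(np.mean((pred > threshold) == (target > threshold)))
```

A network trained on such pairs would map `make_input(history, 5)` to the final density field, and `binary_accuracy` would score its thresholded prediction against the converged TO result.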

In a different kind of approach, Keshavarzzadeh et al. [54] address the problem of generalizing across different scales and domains. They represent shapes parametrically in both 2D and 3D using their proposed “Disjunctive Normal Shape Model” (DNSM) [54], which provides a platform for resolution-independent shape reconstruction. They then train an NN model to generate optimal topologies in the DNSM space given boundary conditions and other problem-specific information; these topologies can then be reconstructed at any resolution using the DNSM. They demonstrate that their DNSM method overcomes the super-resolution problem across a variety of problems and domains.

5.2 Microstructure, Nanostructure, and Metamaterials

Many design applications require designing not only the topology or shape of an artifact but also the material properties within it. Inverse design of materials is one of the key elements of Computational Materials Science. The most common approach to developing inverse materials design frameworks is to establish Process-Structure-Property (PSP) links, i.e., to understand how a particular material processing approach affects the material’s microstructure and how that microstructure affects its physical properties [128]. Designing material microstructures for direct use is difficult, since some fabrication process must then produce a material with the target microstructure. A common goal in computational materials science is microstructure image “reconstruction,” in other words, generating microstructure images that exhibit certain characteristics. Bostanabad et al. [128] give an overview of the Microstructure Characterization and Reconstruction (MCR) field. Reconstruction can accelerate downstream tasks such as augmenting the training data of networks that model PSP links, and better PSP models can in turn enable more accurate generative material design pipelines. Many classes of DGMs have been applied to this reconstruction task, including GANs [64], VAEs [80], and Convolutional Deep Belief Networks (CDBNs) [129] [103, 102, 106]. Other studies, such as Tan et al. [63], attempt to bridge the gap between microstructure and properties in generative tasks using trained black-box surrogates. Most research focuses on 2D microstructure images, though several studies also consider 3D voxelizations [66, 68].

Since reconstruction often involves mimicking existing microstructures, several papers have incorporated style transfer [119, 120] into a DGM architecture. Style transfer is, in essence, a loss between two images that attempts to capture the difference in “style” between them. It is typically calculated by comparing statistics (commonly Gram matrices) of the intermediate feature maps of an auxiliary, usually pretrained, convolutional neural network. Cang et al. [80], for example, propose a VAE with style transfer targeted at applications where only a small set of training data is available. Li et al. [57] also utilize style transfer in their transfer learning reconstruction framework. In another approach using style transfer, this time with a vanilla GAN, Yang et al. [64] extend the reconstruction task to inverse design on the structure-property link. After training their GAN with a style transfer loss and an additional loss to penalize mode collapse, they treat the noise vectors as design variables and optimize them with Bayesian optimization, using the generator to map design vectors to microstructure images. The authors choose to optimize microstructures for energy absorption, which can be evaluated from images using coupled-wave analysis [130]; this provides a convenient structure-property link that would be harder to obtain for many other objectives.
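The Gram-matrix formulation of a style loss, as popularized by neural style transfer, can be sketched in a few lines. This is a generic illustration rather than the loss of any specific paper cited here: in practice the feature maps come from intermediate layers of a pretrained CNN, and normalization constants and layer weights vary between implementations. Random arrays stand in for the CNN activations.

```python
import numpy as np

def gram_matrix(features):
    # features: (C, H, W) activations from one CNN layer -> (C, C) Gram matrix.
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (h * w)

def style_loss(feats_a, feats_b, weights=None):
    # Weighted sum over layers of the squared Frobenius distance between Gram matrices.
    if weights is None:
        weights = [1.0] * len(feats_a)
    loss = 0.0
    for wgt, fa, fb in zip(weights, feats_a, feats_b):
        diff = gram_matrix(fa) - gram_matrix(fb)
        loss += wgt * float(np.sum(diff ** 2))
    return loss
```

Because the Gram matrix sums over spatial positions, it captures which features co-occur rather than where they occur; this is why such a loss suits microstructures, where statistical texture matters more than exact pixel placement.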

More recently, Fokina et al. [104] adapt StyleGAN [7] to microstructure image synthesis. Other studies impose the “style” of generated microstructure images by enforcing physical properties. For example, Chen et al. [89] generate Random Heterogeneous Material (RHM) microstructure images using a cGAN conditioned on target images. Their cGAN uses an augmented loss based on matching perimeter, volume, and Euler characteristic. DGM studies in microstructure design have also used other advanced methods from computer vision, such as super-resolution [58] and image translation [67, 4, 124].
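Descriptors like those used by Chen et al. [89] can be computed directly from a binary microstructure image. The sketch below is an assumption about how they might be defined (volume fraction as the solid pixel fraction, perimeter as the count of solid-void pixel edges, and the Euler characteristic as V - E + F of the union of closed solid pixels, which treats diagonally touching pixels as connected); the definitions used in [89] may differ. As written, these counts are not differentiable, so using them inside a GAN loss would require a differentiable surrogate.

```python
import numpy as np

def descriptors(img):
    # Volume fraction, perimeter, and Euler characteristic of a 2D binary image.
    img = np.asarray(img, dtype=bool)
    p = np.pad(img, 1)  # pad with background (False)
    volume_fraction = float(img.mean())
    # Perimeter: number of pixel edges separating solid from void.
    perimeter = int(np.sum(p[1:, :] != p[:-1, :]) + np.sum(p[:, 1:] != p[:, :-1]))
    # Euler characteristic chi = V - E + F of the union of closed solid pixels.
    faces = int(img.sum())
    vertices = int(np.sum(p[:-1, :-1] | p[:-1, 1:] | p[1:, :-1] | p[1:, 1:]))
    h_edges = np.sum(p[:-1, 1:-1] | p[1:, 1:-1])
    v_edges = np.sum(p[1:-1, :-1] | p[1:-1, 1:])
    euler = vertices - int(h_edges + v_edges) + faces
    return {"volume_fraction": volume_fraction, "perimeter": perimeter, "euler": euler}

def descriptor_mismatch(img, target):
    # Squared-error mismatch between the descriptors of two images.
    d, t = descriptors(img), descriptors(target)
    return sum((d[k] - t[k]) ** 2 for k in d)
```

For example, a 3x3 solid ring around a single void pixel has one connected component and one hole, so its Euler characteristic is 1 - 1 = 0.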

Although 2D images are a widely used means to represent microstructures, Zhang et al. [65] expand the microstructure reconstruction task to the 3D domain. Their ScaffoldGAN method generates scaffold materials to mimic real-world examples of human bone scaffolds as well as foam metal scaffolds in both 3D and 2D data. They employ the conventional GAN approach with style loss for better visual similarity between generated scaffolds and real ones. However, the authors additionally introduce a novel “structural loss” term to specifically address spatial coherence, a limitation of GANs in emulating scaffolds. This additional loss helps them achieve better results that mimic the features of the data more realistically. Other studies also consider DGMs on 3D microstructures, such as Mosser et al. [66] and Liu et al. [68].

Photonics and phononics:

Within microstructure design, the design of materials with photonic or phononic properties is an area of interest in numerous industries including sensing, communications, and display technology. The work by Yang et al. [64] is an example of a subfield of generative microstructure design targeting the development of microstructures with particular photonic or phononic properties. Molesky et al. [131] also provide a review of inverse design in nanophotonics.

In the photonics and phononics subfield, performance evaluations such as the one used in [64] are frequently used to incorporate performance into DGMs. For example, several papers optimize designs using a learned mapping from the latent space of a trained autoencoder to the property space. Li et al. [81], Liu et al. [82], and Wang et al. [105] train an autoencoder, a VAE, and a Gaussian Mixture VAE [132], respectively, on images of microstructures. They map the latent variables to the property space using a DNN, a CNN, and a Gaussian Process Regressor, respectively. Li et al. [81] directly optimize the properties using the DNN, while Liu et al. [82] and Wang et al. [105] optimize using a Genetic Algorithm.
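The latent-space optimization pattern shared by these works, in which a search algorithm queries a learned latent-to-property model, can be sketched with a basic genetic algorithm. The `surrogate` function below is a hypothetical stand-in for a trained regressor (DNN, CNN, or Gaussian Process), and the operators (binary tournament selection, blend crossover, Gaussian mutation, and elitism) and their settings are illustrative choices, not those of the cited papers.

```python
import numpy as np

def surrogate(z):
    # Hypothetical stand-in for a trained latent-to-property regressor (higher is better).
    target = np.array([0.5, -1.0, 2.0])
    return -np.sum((z - target) ** 2, axis=-1)

def genetic_search(fitness, dim, pop_size=40, generations=60, sigma=0.3, bounds=3.0, seed=0):
    rng = np.random.default_rng(seed)
    pop = rng.uniform(-bounds, bounds, size=(pop_size, dim))
    for _ in range(generations):
        fit = fitness(pop)
        elite = pop[np.argmax(fit)].copy()
        # Binary tournament selection: keep the better of two random individuals.
        i, j = rng.integers(pop_size, size=(2, pop_size))
        parents = np.where((fit[i] > fit[j])[:, None], pop[i], pop[j])
        # Blend crossover between each parent and its neighbor in the array.
        alpha = rng.uniform(size=(pop_size, 1))
        children = alpha * parents + (1 - alpha) * np.roll(parents, 1, axis=0)
        # Gaussian mutation, clipped to the latent-space bounds.
        children += sigma * rng.standard_normal(children.shape)
        pop = np.clip(children, -bounds, bounds)
        pop[0] = elite  # elitism: never lose the best latent vector found so far
    fit = fitness(pop)
    return pop[np.argmax(fit)], float(fit.max())
```

In a full pipeline, the best latent vector would then be passed through the decoder to obtain the corresponding microstructure image.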

Though optimizing latent variables is a common approach, several other DGMs have been proposed for photonics/phononics design that account for performance in other ways. For instance, Malkiel et al. [56] use a parametric representation and a bidirectional DNN to learn mappings in both directions between nanostructures and the property space. Ma et al. [91] propose a VAE-based framework consisting of feature extraction, prediction, recognition, and generation networks. The proposed method supports both forward prediction of properties from a metamaterial structure and the inverse (generative) task of predicting structures from properties. Furthermore, the framework supports self-supervised learning, in which the model trains on unlabeled metamaterial pattern images without corresponding property labels. The authors also demonstrate transfer learning to other microstructure shape classes, as well as the inverse design of multiple microstructures forming a meta-mirror with desired properties. DGMs have also been applied to nanoscale photonic devices, such as the optical broadband power splitter of Tang et al. [88].

Unit-cell-based metamaterials:

Research in generative design for microstructures has also focused on the development of unit cell structures for metamaterials. For instance, Wang et al. [32] fit a VAE to metamaterial unit cells and demonstrate that latent space parameters can reflect the physical properties of the unit cells. They then demonstrate several downstream tasks using their VAE, such as diverse subset selection and generation, targeted generation to match desired stiffness matrix values, and metamaterial family design. The authors also demonstrate several 2D macro-optimization runs to design arrays of unit cells to match macro-level deflection targets in an approach similar to classic TO. Xue et al. [83] train a VAE to generate unit cells, then perform Bayesian Optimization in the latent space of the VAE to attain desired macroscopic elastic properties.
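Bayesian Optimization in a VAE latent space, as used by Xue et al. [83], can be sketched with a Gaussian Process surrogate and an expected improvement (EI) acquisition function. This is a minimal illustration under several assumptions: the GP uses a fixed RBF kernel without hyperparameter tuning, EI is maximized over random candidate points, and `hypothetical_property` stands in for decoding a latent vector into a unit cell and evaluating its elastic properties.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def rbf(a, b, length=1.0):
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length ** 2)

def gp_posterior(x_train, y_train, x_query, noise=1e-6):
    # Zero-mean GP with a fixed unit-variance RBF kernel (hyperparameters not tuned).
    k = rbf(x_train, x_train) + noise * np.eye(len(x_train))
    k_s = rbf(x_train, x_query)
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_s.T @ alpha
    v = np.linalg.solve(chol, k_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def expected_improvement(mu, sigma, best, xi=0.01):
    # Expected improvement over the best observation, for maximization.
    imp = mu - best - xi
    z = imp / sigma
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return imp * cdf + sigma * pdf

def latent_bayes_opt(f, dim, n_init=5, n_iter=20, bound=2.0, n_cand=2000, seed=0):
    rng = np.random.default_rng(seed)
    z = rng.uniform(-bound, bound, size=(n_init, dim))
    y = np.array([f(zi) for zi in z])
    for _ in range(n_iter):
        y_n = (y - y.mean()) / (y.std() + 1e-9)  # standardize observations
        cand = rng.uniform(-bound, bound, size=(n_cand, dim))
        mu, sd = gp_posterior(z, y_n, cand)
        z_next = cand[np.argmax(expected_improvement(mu, sd, y_n.max()))]
        z = np.vstack([z, z_next])
        y = np.append(y, f(z_next))
    return z[np.argmax(y)], float(y.max()), y

def hypothetical_property(z):
    # Stand-in for "decode z with the VAE, then evaluate the decoded unit cell".
    return -float(np.sum((z - np.array([0.3, -0.7])) ** 2))
```

Production BO libraries typically fit kernel hyperparameters and optimize the acquisition function with gradient-based or multi-start methods; the random-candidate search here trades efficiency for brevity.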

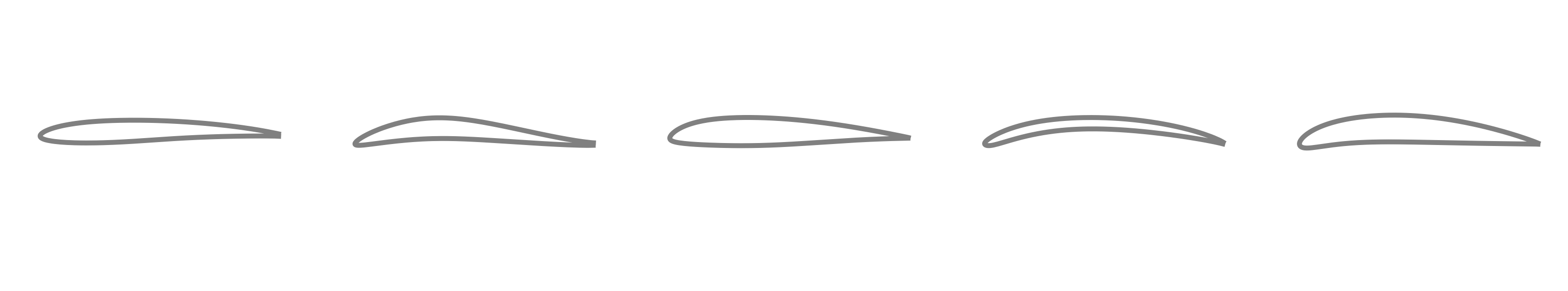

5.3 2D Shape Synthesis

Designing features with critical geometric considerations is a task that appears in several engineering fields such as aerospace and automobile design. DGMs have been widely applied to these problems. Within aerospace, the design of an airfoil, which is the cross-sectional shape of a wing, is of particular interest to many researchers. Airfoils have a wide variety of uses in engineering domains such as propeller, rotor, and turbine blade design. Since airfoil performance parameters are of key interest, most of the research focuses on performance-aware conditional generation. For example, Yilmaz and German [74] apply a cGAN to the airfoil design problem, conditioning their network on various stall parameters. While the overwhelming majority of research in this domain uses DGMs trained to learn shape parameterization based on spline interpolation of Cartesian points, equation-based parameterization is also sometimes used. For example, Li et al. [94] propose a performance-aware RL framework where the RL agent learns the optimal equation coefficients using Proximal Policy Optimization (PPO) [133].

Other works generalize their applications to general 2D shape synthesis. For example, Chen and Fuge [75] propose Bézier GAN, a framework that learns shape representations through Bézier curves, featuring InfoGAN style conditioning [17]. Chen et al. [76] expand on this work with Bayesian Optimization to maximize lift/drag ratio. Classic test data for 2D shape synthesis methods are the UIUC airfoil dataset as well as artificially-designed shapes like superformulas [134]. While the emphasis of certain papers is on shapes or airfoils themselves, several papers primarily propose methodology advancements that they choose to demonstrate on the 2D shape synthesis domain. For example, Chen and Fuge [33] address the challenging problem of multi-component design generation using a GAN to synthesize parts using inter-part dependencies. The authors assume inter-part dependencies in a design are known and propose modeling them using directed acyclic graphs. They propose a hierarchical adaptation of the InfoGAN using a single discriminator for the entire design and one generator/auxiliary network pair for each part in the design, which they term the hierarchical GAN (HGAN). The method is tested on a medley of synthetic datasets generated using BézierGAN [75] and demonstrated to form meaningful and interpretable latent spaces. Although the paper assumes that part dependency graphs for the object class are well defined, this paper takes a significant step towards multi-component design synthesis and interpretable GANs.
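Bézier GAN represents each shape through a Bézier curve whose control points and weights are produced by the generator. The sketch below only shows how a rational Bézier curve is evaluated from given control points and weights, using evenly spaced curve parameters for simplicity; the published architecture's layer details, including how curve parameters are sampled, are not reproduced here.

```python
import numpy as np
from math import comb

def rational_bezier(control_points, weights, n_points=100):
    # Evaluate a rational Bezier curve at n_points evenly spaced parameters t in [0, 1].
    p = np.asarray(control_points, dtype=float)   # (n+1, 2) control points
    w = np.asarray(weights, dtype=float)          # (n+1,) positive weights
    n = len(p) - 1
    t = np.linspace(0.0, 1.0, n_points)[:, None]  # (n_points, 1)
    i = np.arange(n + 1)[None, :]                 # (1, n+1)
    binom = np.array([comb(n, k) for k in range(n + 1)], dtype=float)[None, :]
    bern = binom * t ** i * (1.0 - t) ** (n - i)  # Bernstein basis, (n_points, n+1)
    num = (bern * w) @ p                          # weighted sum of control points
    den = (bern * w).sum(axis=1, keepdims=True)   # normalizing denominator
    return num / den
```

The second test case below shows why weights matter: with the middle weight set to cos(45°), the rational quadratic curve reproduces a quarter of the unit circle exactly, which a polynomial (unit-weight) Bézier curve cannot.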

Several other papers use the 2D shape synthesis domain to address the challenges of performance evaluation in DGMs. Dering et al. [95], for example, apply an iterative retraining approach to boat sketches using an adaptation of the Long Short-Term Memory (LSTM)-based Sketch-RNN [135] as the generator. To evaluate candidate designs, the performance is scored using a simulated environment in a game engine, within which the “behavior” (motion) of the design is learned.

A recent work by Chen and Ahmed [15] addresses both performance evaluation and design novelty through their proposed Performance Augmented Diverse GAN (PaDGAN) framework. Conventional GANs are trained to mimic the distribution of their training data and, as a consequence, are penalized for generating novel designs. PaDGAN expands on the conventional GAN architecture by modeling design performance and design diversity jointly using a Determinantal Point Process (DPP) kernel [136]. By promoting diversity, PaDGAN also helps address mode collapse in GANs (see Sec. 2.1). The authors demonstrate that PaDGAN can generate high-quality, previously unseen designs for the UIUC airfoil database, using the lift-to-drag ratio as the quality metric. Follow-up work [137] extends this approach to multi-objective generation.
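The core idea of a DPP-based quality-diversity objective is that the determinant of a kernel matrix grows when the items in a batch are both dissimilar and high-quality. A minimal sketch in the spirit of PaDGAN follows, assuming an RBF similarity, strictly positive quality scores, and a quality exponent `gamma`; PaDGAN's exact kernel, quality estimator, and loss weighting may differ.

```python
import numpy as np

def dpp_quality_diversity(x, quality, length=1.0, gamma=1.0, jitter=1e-6):
    # Log-determinant of a quality-weighted DPP kernel over a batch of designs.
    x = np.asarray(x, dtype=float)
    q = np.asarray(quality, dtype=float)  # assumed strictly positive scores
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    similarity = np.exp(-0.5 * d2 / length ** 2)  # RBF similarity, 1 on the diagonal
    kernel = similarity * np.outer(q, q) ** gamma + jitter * np.eye(len(x))
    _, logdet = np.linalg.slogdet(kernel)
    return float(logdet)  # larger means a more diverse and/or higher-quality batch
```

A training objective in this style could add the negative of this log-determinant (averaged over the batch size) to the usual generator loss, so that the generator is rewarded for batches that are both diverse and high-performing.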

5.4 3D Shape Synthesis

3D object generation using deep learning is an active field in computer science, with significant research efforts being dedicated to generating realistic-looking shapes and objects. 3D shapes and objects are typically represented by voxels, point clouds, or meshes. Advancements in 3D shape synthesis in the computer graphics community have leaned heavily on GANs or Autoencoders, as well as other machine learning advancements like Recurrent Neural Networks (RNNs), Transformers, and Graph Neural Networks (GNNs). This research is tangentially relevant to engineering design but is typically focused more on visual appearance and aesthetics rather than design considerations like functional performance derived from simulations or manufacturability. For this reason, we do not discuss these methods in detail and opt to list a few of the many noteworthy papers in this field from 2016 to 2021 for the reader to further explore at their discretion: [138, 139, 140, 141, 142, 143]. It is important to note that many of these proposed DGM architectures could be adapted to the design domain too. Despite the prominence of 3D object generation papers in the computer graphics community, a few have arisen within the engineering design community as well. In an early work, for example, Brock et al. [84] implement a 3D cVAE to reconstruct and interpolate between voxelized models from the ModelNet-10 Dataset.

The majority of 3D shape synthesis work in engineering design, however, incorporates some form of design performance consideration. Zhang et al. [92] use a Genetic Algorithm (GA) to optimize latent design embeddings of a trained VAE variant called the Variational Shape Learner (VSL) [144], which they demonstrate on the optimization of 3D aircraft models. Shu et al. [69] take an iterative retraining approach, retraining a GAN on high-performance models evaluated using Computational Fluid Dynamics (CFD). The authors apply the proposed method to point cloud aircraft models from ShapeNet, with minimization of aerodynamic drag as the performance objective. They use a standard GAN loss and a discriminator architecture from [145]. Nobari et al. [77] introduce another self-augmentation approach to train on skewed or sparse datasets. They couple it with a “range loss” that encourages designs to comply with design constraints on parameter bounds, and apply their approach to generate 3D aircraft models.
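A penalty on parameter-bound violations, of the kind a “range loss” is meant to provide, can be sketched as a hinge. The exact formulation of Nobari et al.'s range loss is not given in this review, so the function below is an assumed, commonly used form: zero when every parameter lies within its bounds and growing linearly with the size of any violation.

```python
import numpy as np

def range_penalty(params, lower, upper):
    # Hinge penalty: zero inside [lower, upper], linear in the size of any violation.
    params = np.asarray(params, dtype=float)
    below = np.maximum(np.asarray(lower) - params, 0.0)
    above = np.maximum(params - np.asarray(upper), 0.0)
    return float(np.mean(np.sum(below + above, axis=-1)))  # averaged over the batch
```

Added to a generator loss with some weight, such a term is inactive for feasible designs and pushes infeasible ones back toward the allowed parameter ranges.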

5.5 Other Applications

In this section, we discuss several application domains for DGMs with only a few relevant papers identified in our search.

Manufacturability:

Synthesizing manufacturable designs is an important, albeit less-explored area in DGMs. Greminger [100] proposes approaching the problem of manufacturability using an MSG-GAN architecture [146] adapted to the 3D domain. The author synthetically generates topologies that are manufacturable by a 3-axis mill, trains the GAN to generate similar topologies, then optimizes generated topologies using TO. A key challenge in GAN-based synthesis of manufacturable designs is a lack of annotated datasets with design and manufacturing process details.

Fluid flow shapes:

DGMs have also been used to generate flow shapes. For example, Lee et al. [96] apply a Double Deep Q-Network (DoubleDQN) [147] RL framework to learn the geometry of a microfluidic channel to generate target flow shapes. The authors also propose methods in which a human designer can participate in the design process alongside the RL agent to impart design knowledge or constraints. Other approaches that integrate humans into the design generation process are discussed next.

Integrating humans into the generation process:

One element of design that is often underemphasized in DGMs for design is human interpretation and design input during the generative process. Though datasets are often created with some human input, humans are not directly involved in the training of most DGMs. Burnap et al. [90] note that numerical performance measures often do not correspond to a human’s perception of design quality, and study this phenomenon on automobile images generated with a conditional VAE. Involving humans during training is one potential way to combine human expertise and intuition with the detailed information contained in datasets. For example, Wang et al. [78] look at the problem of generating samples that are visually pleasing to a given human subject. To do this, they train a modified version of the Auxiliary Classifier GAN (ACGAN) [148], which classifies the type of image being generated while learning to generate images. The authors, however, do not opt for a cGAN architecture. Instead, they condition the GAN on Electroencephalography (EEG) signals from human subjects, training an encoder that transforms EEG signals into a set of input features for the GAN. By exposing human subjects to the images as they are generated, the encoder introduces the human response to the design into the generation process. This effectively allows a human subject to extract more visually pleasing samples from the GAN model. Several other studies, such as [73, 85, 96], propose frameworks that incorporate humans into the design process.

Kinematic synthesis:

In machine design, synthesizing a mechanism that traces specific motion curves or transmits power is a common task. Kinematic synthesis involves selecting the size, type, and configuration of mechanism components to achieve such a goal. Several works have applied DGMs to kinematic synthesis problems. Deshpande and Purwar [85], for example, propose a VAE-based method for planar linkage synthesis. They deterministically parameterize both coupler paths and linkages, then generate sample coupler paths from a variety of linkages (four-bar, slider-crank, and Stephenson-type six-bar). In the first of their two studies, the authors train a VAE to reconstruct trajectories. They then propose a method in which humans interact with the trained VAE to customize linkage paths to their design goals by visualizing the effect of various latent space perturbations and selecting one that matches their design vision. In the second study, the authors expand on the first with a cVAE architecture, feeding in the linkage as the data sample and the coupler curve as the condition vector. In a similar work, Sharma and Purwar [86] explore spatial linkage synthesis of 5-SS mechanisms using VAEs. In other work, the same authors treat paths themselves as guiding mediums for linkage mechanism design, first training models to encode paths and then using these models to search a dataset of mechanisms for solutions matching specific paths [93].
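To make the idea of generating coupler paths from linkage parameters concrete, the sketch below traces the coupler curve of a planar four-bar linkage by solving the loop-closure condition as a circle-circle intersection at each crank angle. It is a generic kinematics example, not the parameterization of Deshpande and Purwar [85]; the link lengths in the test form an assumed Grashof crank-rocker (1 + 4 ≤ 3.5 + 3, with the shortest link as the crank), so the crank can rotate fully.

```python
import numpy as np

def four_bar_coupler_curve(a, b, c, d, r, alpha, n=360, branch=1.0):
    """Trace the coupler point of a planar four-bar linkage.

    Ground pivots A=(0, 0) and D=(d, 0); input crank AB (length a) makes a full
    turn; coupler BC has length b; rocker DC has length c. The coupler point P
    sits at distance r from B, rotated by alpha from the direction B->C.
    Returns arrays B, C, P of shape (n, 2).
    """
    theta = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    B = np.stack([a * np.cos(theta), a * np.sin(theta)], axis=1)
    BD = np.array([d, 0.0]) - B
    L = np.linalg.norm(BD, axis=1, keepdims=True)
    u = BD / L
    perp = np.stack([-u[:, 1], u[:, 0]], axis=1)
    # C is an intersection of the circles |C - B| = b and |C - D| = c.
    x = (b ** 2 - c ** 2 + L ** 2) / (2.0 * L)
    h2 = b ** 2 - x ** 2
    if np.any(h2 < 0):
        raise ValueError("linkage cannot be assembled at every crank angle")
    C = B + x * u + branch * np.sqrt(h2) * perp  # branch = +1 or -1 picks the assembly mode
    e = (C - B) / b  # unit vector along the coupler
    ca, sa = np.cos(alpha), np.sin(alpha)
    e_rot = np.stack([ca * e[:, 0] - sa * e[:, 1], sa * e[:, 0] + ca * e[:, 1]], axis=1)
    return B, C, B + r * e_rot
```

Pairs of (linkage parameters, coupler path) produced this way are the kind of data on which a VAE or cVAE for path synthesis could be trained, for example after normalizing the paths for position, scale, and orientation.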

Other studies have approached kinematic synthesis through Reinforcement Learning. Vermeer et al. [107] use Deep Q-Learning to synthesize planar linkage mechanisms that produce straight-line motion, showing that RL can be effective for generating linkage mechanisms.

Truss design:

Raina et al. [108] propose an RL-based approach to truss design that learns from a dataset of sequential human design decisions [149]. They first train an autoencoder to map trusses to and from a design embedding. Sequences of design embeddings are then used to predict a heatmap of possible next design states using a supervised transition network. Interestingly, the authors convert their parametric representation into images to train the autoencoder, and convert decoded images back when using its results. Finally, a rule-based agent selects among possible next design steps. Even without knowledge of design metrics and performance, the agents were found to generate designs competitive with those of humans. Puentes et al. [109] expand on this work by learning action-sequence heuristics instead of individual design actions. Raina et al. [97] further expand on this work with a goal-based reinforcement learning agent.

Hierarchical product design synthesis:

Some works have applied DGMs to multi-component products, typically with a well-known hierarchical structure. Stump et al. [34], for example, propose a unique approach that simultaneously combines RL, Recurrent Neural Networks (RNNs), grammars, and physics-based simulation. In their approach, the RNN learns to generate modular sailing crafts by sampling discrete selections from a predefined shape grammar. The training of the RNN is detailed in [110]. The control policy of the craft is then optimized using RL in a physics-based simulated sailing environment. Regenwetter et al. [87, 150] explore the full synthesis of bicycles, a diverse class of products typically consisting of numerous hierarchical components. The authors demonstrate full generative synthesis of bikes using VAEs for both image and parametric representations. The authors note that their detailed design parameterization allows AI-generated designs to be physically fabricated.

Procedural content generation:

Lopez et al. [98] explore procedural content generation in a specific virtual reality (VR) context. They introduce a reinforcement learning model that learns to generate 3D VR content that users can explore in a VR environment, specifically targeting manufacturing environments that are physically valid and feasible. Cunningham et al. [99] extend this method to generate content for multiple contexts rather than one at a time, which enables the integration of user-specific parameters.

6 Datasets

In this section, we present commonly used datasets that have been, or have the potential to be, used to train DGMs for data-driven design tasks. We provide a more detailed list online (https://decode.mit.edu/datasets/). While the datasets listed are not comprehensive, we aim to note key types of datasets that have been commonly used in engineering design applications. We hope that researchers will create larger, well-annotated datasets and make them public for the community to use.

6.1 Topology Optimization Datasets

Most papers on DGMs for Topology Optimization generate their own datasets, which are often not publicly available. The most common method for dataset generation is Solid Isotropic Material with Penalisation (SIMP), which has several publicly available software implementations. A few TO datasets, however, are open source. Sosnovik and Oseledets [52] generate a dataset of 10,000 artificially generated topologies, providing both final and intermediate topologies from the optimization process (https://github.com/ISosnovik/top). Each sample contains a 40x40 image of the topology at each of the 100 steps of Topology Optimization, for a total of one million images. Nie et al. [71] provide the dataset used to train their TopologyGAN framework (https://github.com/zhenguonie/2020_TopologyGAN). Unlike the former, this dataset consists only of final topologies, but it contains 49,078 topologies at a resolution of 64x128, generated from 42 unique boundary conditions. Both datasets use the ToPy [151] implementation of SIMP.
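The “penalization” in SIMP refers to interpolating the Young's modulus as a power of the element density, which makes intermediate densities structurally inefficient and pushes the optimizer toward solid-void designs. The sketch below shows the widely used modified SIMP interpolation with a small stiffness floor `e_min`; the default penalty exponent of 3 is a common choice, and whether ToPy uses exactly this variant is not stated here.

```python
import numpy as np

def simp_modulus(rho, e0=1.0, e_min=1e-9, penal=3.0):
    # Modified SIMP interpolation: E(rho) = E_min + rho**p * (E0 - E_min).
    rho = np.asarray(rho, dtype=float)
    return e_min + rho ** penal * (e0 - e_min)

def simp_modulus_derivative(rho, e0=1.0, e_min=1e-9, penal=3.0):
    # dE/drho, the factor that enters compliance sensitivities during optimization.
    rho = np.asarray(rho, dtype=float)
    return penal * rho ** (penal - 1.0) * (e0 - e_min)
```

With `penal=3`, a half-dense element provides only about 12.5% of the solid stiffness while still consuming half of the material, which is what discourages gray regions in the final topologies.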

6.2 Microstructure Datasets

Well-established technologies such as optical microscopy (OM) and scanning electron microscopy (SEM) are often used to visualize material microstructures. As such, numerous datasets of microstructure scan images are publicly available, such as [152], [153], and [154]. Numerous datasets are also available for the design of composite materials of various types, such as the NanoMine nanopolymer composite database (https://github.com/tetherless-world/nanomine-ontology), which contains over 20,000 datapoints [155]. Compiled lists of materials science datasets (https://github.com/sedaoturak/data-resources-for-materials-science) and synthetic microstructure datasets are also available. For example, Yang et al. [64] provide a trained GAN model that generates synthetic microstructure images (https://github.com/zyz293/GAN_Materials_Design).

6.3 Design Geometry Datasets

The UIUC airfoil database (https://m-selig.ae.illinois.edu/ads/coord_database.html) has been used as a case study in several generative design research frameworks [76, 15, 75]. The database details nearly 1,600 real-world airfoil designs using coordinates of points on the surface. Since the original data provides inconsistent numbers of coordinates along the top and bottom surfaces, Chen et al. [76] propose a method to standardize the data with B-spline interpolation over the airfoil, which is also used in other works [33, 15].
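A minimal sketch of B-spline-based standardization is shown below, using SciPy's parametric spline routines. It assumes the coordinates are ordered along the surface (e.g., trailing edge, over the upper surface to the leading edge, and back along the lower surface) and simply resamples a fixed number of points uniformly in the chord-length spline parameter; the number of points, spacing, and smoothing used by Chen et al. [76] may differ. Consecutive duplicate points should be removed first, since they give a degenerate parameterization.

```python
import numpy as np
from scipy.interpolate import splprep, splev

def resample_airfoil(coords, n_points=100):
    # Fit an interpolating (s=0) parametric cubic B-spline through the ordered points,
    # then resample it at n_points evenly spaced values of the spline parameter.
    coords = np.asarray(coords, dtype=float)
    tck, _ = splprep([coords[:, 0], coords[:, 1]], s=0)
    u_new = np.linspace(0.0, 1.0, n_points)
    x, y = splev(u_new, tck)
    return np.stack([x, y], axis=1)
```

Every airfoil then has the same number of points, so the whole database can be stored as a single fixed-shape array suitable for training a generative model.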

6.4 3D Object Datasets

ShapeNet (https://shapenet.org/) [156] is one of the most commonly used 3D model datasets, consisting of over 51,300 3D models of 55 object categories. PartNet [157] expands on ShapeNet with fine-grained hierarchical semantic annotations for the component parts of ShapeNet objects. Princeton ModelNet (https://modelnet.cs.princeton.edu/) [158] is another commonly used 3D model dataset, consisting of 127,915 CAD models of 662 object categories. Numerous works discussed in this review use ShapeNet or ModelNet models [69, 77, 92, 84].

Kim et al. [159] introduce a dataset of 58,696 models of mechanical components from 68 classes called the Mechanical Components Benchmark (MCB) (https://bit.ly/3ne4gwv). The MCB contains a hierarchical label tree grouping components into subclasses at different levels, for example Components → Fasteners → Nuts → Wingnuts. Models are represented as point clouds, voxels, and 2D views.

6.5 CAD and CAD-based Datasets

Willis et al. [160] introduce two datasets of Autodesk Fusion models (https://github.com/AutodeskAILab/Fusion360GalleryDataset). One dataset, intended for reconstruction tasks, contains 8,625 models, and the other, intended for segmentation, contains 35,680 models. The reconstruction dataset is particularly interesting for generative tasks, as it contains information governing the sequential CAD operation steps taken to generate a part.

Regenwetter et al. [87, 150] introduce a dataset called BIKED (https://decode.mit.edu/projects/biked/) consisting of mixed data extracted from 4,512 bicycle CAD models. The dataset includes bicycle assembly images, segmented subcomponent images, and “parametric” data. The “parametric” data consists of 2,395 mixed-type design parameters describing both high-level and low-level characteristics. BIKED provides an advantage over conventional 3D object datasets for generative tasks in that synthesized designs contain the parametric information necessary to physically fabricate them. BIKED’s parametric data is used in [87] for bicycle synthesis and its image data in [161] for generating novel designs. The FRAMED dataset [162] expands on BIKED with structural performance data, such as weight, safety factors, and deflections under various loads, for all 4,512 models as well as for artificially generated bicycle frames (https://decode.mit.edu/projects/framed/).

6.6 Metamaterials Datasets

Wang et al. [32] introduce a dataset of 248,396 2D unit cells represented as 50×50 pixel matrices. Stiffness tensor components are provided for each unit cell, and the dataset can also be used for TO research. Chan et al. [163] introduce a dataset of 3,000 3D isosurface unit cells sampled from 30 level-set functions, along with the corresponding 3D elastic tensor components. Wang et al. [164] introduce a dataset of 795 unit cells generated from 10 lattice models, with associated stiffness tensors. All three datasets are available at https://ideal.mech.northwestern.edu/research/software/.

6.7 Sketch Datasets

QuickDraw [165] (https://github.com/googlecreativelab/quickdraw-dataset) is a sketch dataset of 50 million doodles from 345 categories. Google collected the doodles from user-drawn sketches in an interactive sketching game. QuickDraw data is used in several of the works discussed [166, 95]. Toh & Miller [167] introduce a dataset of 934 innovative milk frother design sketches with associated text descriptions (https://sites.psu.edu/creativitymetrics/2018/07/18/milkfrother/), which could potentially be used to train DGMs, perhaps in combination with Natural Language Processing (NLP).

6.8 Sequential Human Design Datasets

McComb et al. [149] provide a tabular truss design dataset (https://www.sciencedirect.com/science/article/pii/S2352340918302014?via%3Dihub) recorded during a truss design activity carried out by sixteen human teams. Sequential design operations, such as joint and member placement, are recorded using geometric parameters such as joint coordinates and member sizes. Performance metrics such as safety factors and weights are also included. While this dataset can serve as a general truss design dataset, it is primarily intended as a resource for studying or mimicking the human design process. We note that the Autodesk Fusion reconstruction dataset mentioned previously [160] can also be used for learning sequential design tasks.

7 Discussion, Challenges and Future Work

When applying DGMs to engineering design, the ‘standard’ objective of mimicking the training data is often insufficient, or even counterproductive. Real designs are instead governed by specific objectives and constraints, often including novelty or creativity. Objectives such as real-world performance metrics, novelty, and constraint satisfaction may therefore make better training objectives. In the following sections, we discuss the challenges of performance evaluation, constraint violation, and incorporating novelty, along with pathways to potential solutions. We further discuss some general challenges for DGMs in engineering design, such as the limited availability of data, the lack of benchmark problems and metrics, and the delayed adoption of cutting-edge methods from other research communities. Our discussion is supported by observations from our literature review.

7.1 Design Performance Evaluation

Incorporating performance evaluation into DGMs is one of the key stepping stones toward practical applications of ML in design. Three major challenges in performance evaluation are fidelity, cost, and differentiability.

1. Fidelity: evaluation methods lacking fidelity may result in DGM-generated designs that do not meet specifications.
2. Cost: high computational cost precludes compatibility with methods that heavily sample performance values.
3. Differentiability: a lack of differentiability makes it difficult to incorporate evaluation into the training of machine learning models that rely on gradient-based optimization.

Physical evaluation, which means building a product and testing its performance in the real world, typically has the highest fidelity but is rarely adopted in DGMs due to its prohibitive cost. Qualitative human evaluation of designs has also been investigated in several papers [85, 73, 96], although this evaluation is typically not focused on performance. Medium-fidelity evaluations, such as numerical simulations, often deliver satisfactory fidelity but can be costly and are rarely differentiable. Methods that do incorporate medium-fidelity performance evaluation, such as [69, 62], do not rely on performance score gradients; instead, they alternate between (re)training and performance evaluation, typically repeating this retraining only a handful of times.
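To make the alternating scheme concrete, the following is a minimal sketch, not the method of [69] or [62]. It assumes a toy two-parameter design space, a hypothetical `black_box_score` function standing in for a costly simulation, and a Gaussian fitted to the training designs standing in for the DGM; all three are illustrative assumptions. Each round samples designs, scores them without using any gradients, and retrains the generator on the best performers, which makes this toy loop closely resemble the cross-entropy method.

```python
import numpy as np

TARGET = np.array([1.0, -2.0])  # hypothetical optimum of the toy objective


def black_box_score(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a costly, non-differentiable simulation.

    Returns one score per row of x; higher is better.
    """
    return -np.sum((x - TARGET) ** 2, axis=1)


def fit_generator(designs: np.ndarray):
    """Toy 'generator': a Gaussian fitted to the current training designs."""
    mean = designs.mean(axis=0)
    # Small diagonal jitter keeps the covariance positive definite.
    cov = np.cov(designs, rowvar=False) + 1e-6 * np.eye(designs.shape[1])
    return mean, cov


def alternate_train_evaluate(data, rounds=5, n_samples=200, keep=50, seed=0):
    """Alternate sampling, black-box evaluation, and retraining."""
    rng = np.random.default_rng(seed)
    mean, cov = fit_generator(data)
    for _ in range(rounds):
        samples = rng.multivariate_normal(mean, cov, size=n_samples)
        scores = black_box_score(samples)            # only values, no gradients
        elite = samples[np.argsort(scores)[-keep:]]  # best-scoring designs
        mean, cov = fit_generator(elite)             # retrain on them
    return mean, cov


initial_designs = np.random.default_rng(1).normal(0.0, 3.0, size=(100, 2))
mean, cov = alternate_train_evaluate(initial_designs)
```

Because the simulator is only queried and never differentiated, any solver could be substituted for `black_box_score`; the price is that every round costs `n_samples` evaluations, which is consistent with such loops being run only a handful of times.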

Low-cost evaluation methods such as surrogate models can be incorporated directly into the training objective and are by far the most common performance evaluation method seen in DGMs [15, 20, 77, 18, 70, 79, 60]. Unfortunately, surrogate models can be brittle and generalize poorly to designs that differ from the data on which the surrogate was trained. This is a particular concern when the surrogate's training data is unevenly distributed across the performance space [77], and when novelty and performance are both incorporated into DGM training objectives, since rewarding novelty pushes generated designs away from the data the surrogate has seen. Many approaches have attempted to improve the accuracy of low-fidelity surrogate models. For example, self-supervised data augmentation [77] uses the DGM itself to generate samples in sparse regions (through optimization [137] or conditioning [77]), which can then be used to train the low-fidelity models to be more accurate, in turn improving the methods that rely on them for design generation. Multi-fidelity modeling is another promising approach, which builds surrogate models that augment a few costly high-fidelity samples with low-fidelity samples to attain higher-fidelity surrogates at minimal expense.
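A common multi-fidelity formulation corrects a cheap model with a discrepancy term learned from a few expensive samples, roughly f_high(x) ≈ ρ·f_low(x) + δ(x). The sketch below is a deliberately simplified least-squares version of that idea (a linear δ, no Gaussian-process co-kriging). The functions `f_low` and `f_high` are hypothetical analytic stand-ins for a cheap and a costly simulator, chosen so that the correction is exactly representable. The corrected model is compared with a cubic fit to the same four high-fidelity samples.

```python
import numpy as np


def f_low(x):
    """Hypothetical cheap but biased simulator."""
    return np.sin(8.0 * x) + 0.5 * x - 0.2


def f_high(x):
    """Hypothetical accurate but costly simulator (queried only a few times)."""
    return np.sin(8.0 * x) + x


def fit_multi_fidelity(x_hi, y_hi):
    """Fit y_hi ~= rho * f_low(x) + c + d * x by linear least squares."""
    A = np.column_stack([f_low(x_hi), np.ones_like(x_hi), x_hi])
    (rho, c, d), *_ = np.linalg.lstsq(A, y_hi, rcond=None)
    return lambda x: rho * f_low(x) + c + d * x


x_hi = np.array([0.0, 0.3, 0.7, 1.0])  # only four expensive evaluations
y_hi = f_high(x_hi)

mf_model = fit_multi_fidelity(x_hi, y_hi)
hf_only = np.poly1d(np.polyfit(x_hi, y_hi, 3))  # baseline: high-fidelity data alone

x_test = np.linspace(0.0, 1.0, 101)
err_mf = np.max(np.abs(mf_model(x_test) - f_high(x_test)))
err_hf = np.max(np.abs(hf_only(x_test) - f_high(x_test)))
```

With only four expensive evaluations, the cubic fit cannot capture the oscillation that the low-fidelity model already encodes, whereas the corrected model inherits it. Real discrepancies are rarely this simple, which is why practical methods typically model δ(x) with a more flexible regressor.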

Advances have also been made in using machine learning to improve and accelerate medium-fidelity physics simulations such as Finite Element Analysis [168, 169, 170, 171, 172] and Computational Fluid Dynamics [173, 174, 175, 172]. Although these works are not yet robust enough to serve as a drop-in alternative to conventional simulations, they provide a proof of concept for machine-learning-based acceleration, and even replacement, of such simulations.

Numerous studies have also proposed crowdsourcing to evaluate designs synthesized by generative machine learning methods [90, 176, 166] in place of physical or physics-based evaluation. Although the fidelity of crowdsourced evaluation depends heavily on the task and on the expertise of the crowd, its high cost and lack of differentiability are fairly universal.

In summary, performance evaluation in current DGMs is largely constrained by an inherent fidelity-versus-cost tradeoff; however, several directions show promise in enabling faster and more accurate evaluation methods that may escape this limitation.

7.2 Feasibility, Constraints, and Manufacturability

One key component of design performance is satisfying explicit design constraints, as well as implicit constraints such as physical feasibility and manufacturability. DGMs are difficult to rely upon for satisfying explicit design constraints, since they are generally probabilistic and may generate completely invalid designs. Many researchers have pointed to this issue [177, 33, 103]. One potential solution is to develop inexpensive and reliable validation methods; however, this is a challenging task that may require significant human input.
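As an illustration of what an inexpensive validation step might look like, the sketch below filters generated designs against explicit bound and inequality constraints before they are used further. The three-parameter design vector, its bounds, and both inequality constraints are hypothetical; real constraints would come from the design domain, and implicit constraints such as manufacturability are usually far harder to encode this way.

```python
import numpy as np

# Hypothetical design vector x = (length, width, wall_thickness), in millimeters.
LOWER = np.array([10.0, 5.0, 0.5])
UPPER = np.array([200.0, 100.0, 10.0])


def inequality_constraints(x: np.ndarray) -> np.ndarray:
    """Return g(x) with one column per constraint; feasible when all g <= 0."""
    length, width, thickness = x[:, 0], x[:, 1], x[:, 2]
    return np.column_stack([
        width - length,           # width must not exceed length
        2.0 * thickness - width,  # two walls must leave room for a cavity
    ])


def validity_mask(x: np.ndarray, tol: float = 0.0) -> np.ndarray:
    """Boolean mask of designs that satisfy every bound and inequality."""
    in_bounds = np.all((x >= LOWER) & (x <= UPPER), axis=1)
    satisfied = np.all(inequality_constraints(x) <= tol, axis=1)
    return in_bounds & satisfied


generated = np.array([
    [120.0, 40.0, 2.0],  # feasible
    [50.0, 80.0, 2.0],   # violates width <= length
    [100.0, 4.0, 3.0],   # width below its lower bound; walls too thick
])
mask = validity_mask(generated)
valid_designs = generated[mask]
```

Such a filter only rejects invalid samples after generation; it does not make the DGM itself respect the constraints, so it is best viewed as a safeguard rather than a solution.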

Another underlying issue lies in design representation, which is discussed in detail in Sec. 3. Representations such as images often have no clear translation to representations with practical uses. Other representations, such as the various types of 3D models, can realistically be fabricated only with additive manufacturing, which precludes many design domains. For ML-generated designs to be physically fabricated and used in practical applications, this ‘domain gap’ between generated representations and fabricable designs must be overcome [103, 87]. Though a few works have investigated manufacturability [100], we observed that most of the papers we reviewed do not model it. Other works have applied DGMs to parameterizations that encode parametric design data similar to what would be used in manufacturing drawings. However, these same works note that parametric data of this sort is challenging to learn and generate [87].

7.3 Creativity and Novelty

Whereas creativity and novelty are essential aspects of the classic design process, DGMs rarely consider either explicitly. Most DGMs learn to mimic data covering the existing, already explored portions of the design space. While this emulative behavior helps maintain realism and sample quality, it discourages DGMs from generating creative or novel designs [178]. For DGMs to progress toward more human-like design, advances must be made both in modeling creativity and in developing architectures that promote it.

Several recent works [137, 15, 161] have proposed methods to encourage creativity and novelty in DGMs. In their framework, CreativeGAN, Nobari et al. focus on identifying novelty and guiding DGMs toward it by directly introducing novel features into typical designs, thereby expanding the design space and the novelty of the DGM’s data. Creativity in machine learning has also been explored outside the design community. For example, Creative Adversarial Networks (CAN) [178] add a style-ambiguity term to the generator's training objective that rewards images a style classifier cannot confidently assign to any established style, encouraging the generation of surprising images. Readers are directed to Franceschelli et al.’s survey on the topic for more detail [179].
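For readers unfamiliar with CAN, the sketch below shows a style-ambiguity loss written from our reading of the CAN formulation [178]; treat the exact form as an assumption and consult the original paper before relying on it. Given a style classifier's per-style probabilities for a generated image, the loss is lowest when every one of the K styles receives probability 1/K, so minimizing it pushes the generator away from any single established style. In CAN this term is combined with an adversarial term that keeps samples realistic; that term is omitted here.

```python
import numpy as np


def style_ambiguity_loss(style_probs: np.ndarray, eps: float = 1e-12) -> float:
    """Style-ambiguity loss, sketched after the CAN formulation (assumed form).

    style_probs: array of shape (batch, K) holding a style classifier's
    probability for each of K established styles, per generated image.
    Returns the batch mean of
        -sum_k [ (1/K) * log(p_k) + (1 - 1/K) * log(1 - p_k) ],
    which is minimized when every p_k equals 1/K.
    """
    p = np.clip(style_probs, eps, 1.0 - eps)  # avoid log(0)
    k = p.shape[1]
    per_image = -np.sum(
        (1.0 / k) * np.log(p) + (1.0 - 1.0 / k) * np.log(1.0 - p), axis=1
    )
    return float(per_image.mean())


ambiguous = np.full((1, 4), 0.25)                 # no style preferred
confident = np.array([[0.97, 0.01, 0.01, 0.01]])  # clearly one style
loss_ambiguous = style_ambiguity_loss(ambiguous)
loss_confident = style_ambiguity_loss(confident)
```

An image the classifier places firmly in one style incurs a higher loss than one it cannot place, which is the sense in which the term rewards departure from established styles.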

7.4 Evaluating Model Performance & Benchmark Problems

Readers may have noted that many of the works discussed have nearly identical methodologies, yet each is applied to a specific dataset and compared against only a few baselines. This may be attributed to the large variety of design applications, compared with domains such as computer vision, and to a tendency of design researchers to solve their particular problem rather than seek solutions that generalize across many. However, this makes any claim of a ‘state-of-the-art’ algorithm dependent on the specific application. Without a good understanding of state-of-the-art methods that are broadly applicable across engineering domains, practitioners will struggle to select models for practical deployment. While some works in the design community have specifically introduced benchmark problems and datasets [87, 162], there is still a need for larger, higher-quality, and more numerous datasets and benchmarks.

The difficulty of establishing a state of the art stems from the lack of benchmark problems and performance metrics, both within the DGM field and within design automation as a whole. Design datasets tend to be small, restricted in domain, and sparse in distribution, as we discuss in Section 7.5. Furthermore, many methods are developed on proprietary datasets. Finally, the widely differing design representations used across the field make it difficult to establish standardized model performance benchmarks, even if good datasets were available. Many authors in the field note these problems as well, finding it difficult to compare their approaches with existing ones [60, 177].
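As an illustration of the kind of standardized metrics that such benchmarks could report, the sketch below computes three simple scores for a set of generated design vectors: the fraction of valid designs, diversity as the mean pairwise distance between generated designs, and novelty as the mean distance from each generated design to its nearest training design. These metric definitions are illustrative assumptions rather than an established benchmark, and Euclidean distance on raw parameter vectors is only meaningful if the parameters are comparably scaled.

```python
import numpy as np


def pairwise_distances(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distances between the rows of a (n, d) and b (m, d)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def scorecard(generated: np.ndarray, training: np.ndarray, valid_mask: np.ndarray) -> dict:
    """Validity, diversity, and novelty for n >= 2 generated designs."""
    n = len(generated)
    # The diagonal is zero, so dividing by n * (n - 1) averages over distinct pairs.
    diversity = pairwise_distances(generated, generated).sum() / (n * (n - 1))
    # Distance from each generated design to its closest training design.
    novelty = pairwise_distances(generated, training).min(axis=1).mean()
    return {
        "validity": float(np.mean(valid_mask)),
        "diversity": float(diversity),
        "novelty": float(novelty),
    }


training = np.array([[0.0, 0.0], [1.0, 0.0]])
generated = np.array([[0.0, 0.0], [0.0, 3.0]])
scores = scorecard(generated, training, valid_mask=np.array([True, False]))
```

Reporting several such scores together, rather than a single number, makes the tradeoffs between realism, diversity, and novelty visible when comparing methods.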

7.5 Data Limitations and Quality

Data sparsity is one of the greatest challenges facing the data-driven design community and is a particular concern for researchers developing data-hungry DGMs. Broadly, there are three data-related problems. The first is a general lack of data in many design domains. Although datasets continue to cover more and more design fields, many fields still lack publicly available data. Some data representations are also underrepresented in current datasets, notably graphs, as discussed in Sec. 3. The second key problem that designers face in applying DGMs is the insufficient size of current datasets. Many of the latest breakthroughs in deep learning, notably in computer vision and natural language processing, owe their success to very large models, which are notoriously data-hungry, require millions or billions of training examples, and cannot be properly utilized with smaller datasets. Focusing resources on generating very large, publicly available datasets or on effective data augmentation methods would open doors for the design community to leverage larger deep models. The last major limitation in existing data is sparsity and bias, which are common in many design datasets. How the data is distributed in the performance space can pose challenges for many DGMs, especially in inverse design problems [77]. Diversity is important to ensure that the data covers the design space as evenly as possible and to avoid bias. Keeping diversity and bias avoidance in mind will help researchers generate better datasets.
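One generic way to mitigate uneven coverage of the performance space during training, not drawn from the works reviewed here, is to reweight samples by the inverse frequency of their performance bin. The sketch below assumes a single scalar performance value per design, equal-width bins, and performance values that are not all identical; each occupied bin then contributes equal total weight.

```python
import numpy as np


def inverse_frequency_weights(performance: np.ndarray, n_bins: int = 5):
    """Per-sample weights that give every occupied performance bin equal total weight.

    Assumes performance values are not all identical (otherwise the bin edges collapse).
    Returns (weights, counts); the weights sum to 1.
    """
    edges = np.linspace(performance.min(), performance.max(), n_bins + 1)
    # Digitizing against the interior edges maps values to bins 0 .. n_bins - 1.
    bins = np.digitize(performance, edges[1:-1])
    counts = np.bincount(bins, minlength=n_bins)
    occupied = np.count_nonzero(counts)
    weights = 1.0 / (occupied * counts[bins])
    return weights, counts


# Four designs crowd the low-performance end; two sit at the high end.
performance = np.array([0.0, 0.05, 0.1, 0.15, 0.9, 1.0])
weights, counts = inverse_frequency_weights(performance)
```

Reweighting cannot create information that is absent from empty bins, so it complements, rather than replaces, the collection of more diverse data.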

We strongly encourage researchers to develop diverse datasets, publicly release these datasets, and establish benchmark problems for future works. We further encourage researchers to test their methods on other researchers’ data, publicly release their benchmarking results, and acknowledge state-of-the-art methods when possible.

7.6 Other DGMs