Deep Generative Framework for Interactive 3D Terrain Authoring and Manipulation

Abstract

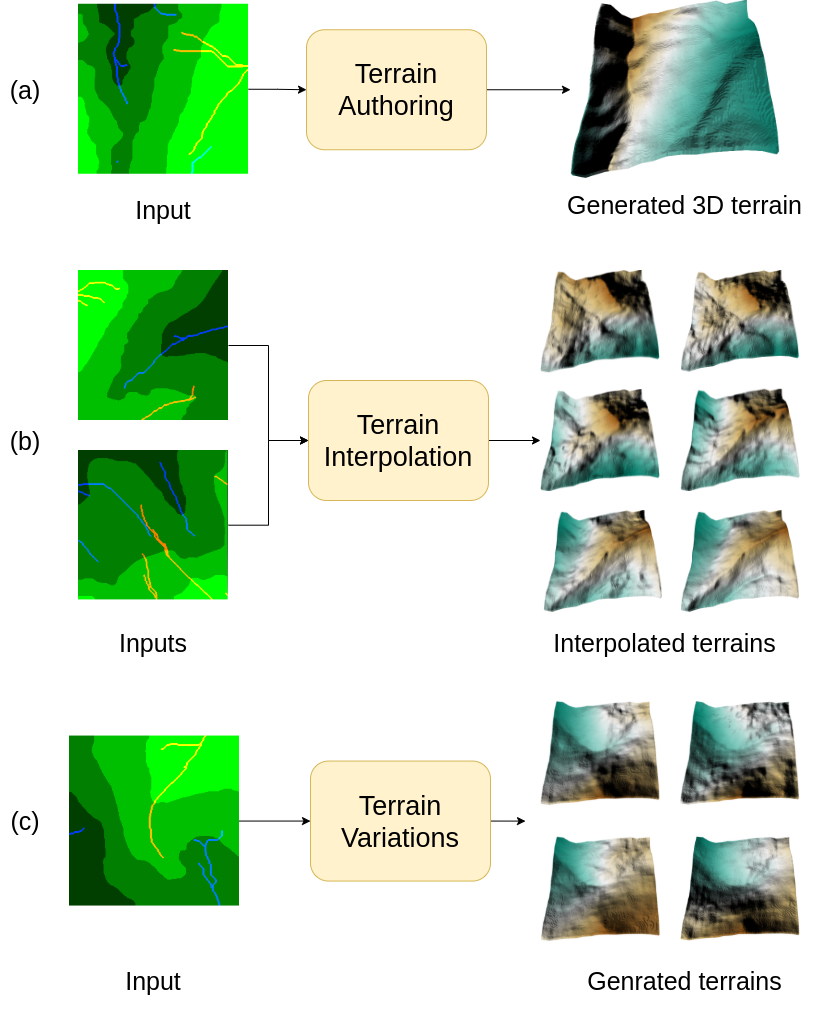

Automated generation and (user) authoring of the realistic virtual terrain is most sought for by the multimedia applications like VR models and gaming. The most common representation adopted for terrain is Digital Elevation Model (DEM). Existing terrain authoring and modelling techniques have addressed some of these and can be broadly categorised as: procedural modeling, simulation method, and example-based methods. In this paper, we propose a novel realistic terrain authoring framework powered by a combination of VAE and generative conditional GAN model. Our framework is an example-based method that attempt to overcome the limitations of existing methods by learning a latent space from real world terrain dataset. This latent space allows us to generate multiple variants of terrain from a single input as well as interpolate between terrains, while keeping the generated terrains close to real world data distribution. We also developed an interactive tool, that lets the user generate diverse terrains with minimalist inputs. We perform thorough qualitative and quantitative analysis and provide comparison with other SOTA methods. We intend to release our code/tool to academic community.

1 Introduction

Terrain modelling aims to create a digital representation of the real world topography and is useful in both scientific applications of land surface processes like flooding, soil erosion as well as virtual terrain rendering in graphics and computer vision applications.

In particular, automated generation and (user) authoring of the realistic virtual terrain is most sought for by the multimedia applications like Virtual Reality (VR) models and gaming. The real world terrains undergo a range of natural transformations such as erosion, weathering, and landslides over the years. This leads to various complex topographies such as hills, mountain ranges, canyons, plateaus, and plains. Some of these significant and unique terrain features are apparent at different scales. All these contribute to making the terrain generation and authoring a challenging task. The most common representation adopted for terrain is Digital Elevation Model (DEM). DEM is a discrete grid representation of the ground elevation and its quality is dependent on the resolution or scale of the grid or pixel.

Existing terrain authoring and modelling techniques have addressed some of these and can be broadly categorised as: procedural modeling, simulation method, and example-based methods [7]. Procedural modeling use algorithms to reproduce the effects of physical phenomena. Simulation methods perform simulations of real-world phenomena such as thermal and hydraulic erosion, weathering and land formation where the modelling of the processes and its interactions involving physical elements lead to the formation of the targeted terrains. Example-based methods use information extracted from real world examples. This method provides better user control over the generated outputs.

Recent advancements in deep learning enabled the ability to learn diverse terrain features for tasks like terrain amplification [13], modifications [20], etc. In the context of deep learning based automated terrain authoring, the literature is very sparse. One of the most relevant example-based terrain authoring method proposed trains a conditional Generative Adversarial Network (cGAN), referred in this work as TSynthNet [10], on large set of real world terrain data to generate realistic virtual terrains from hand drawn user inputs. However they provide a limited user control and generate a single terrain for given input. Additionally, their method allows the use of either drawings of level-sets or ridge/valley strokes (with altitude cues). However, these two representations are complementary and jointly provide richer information for terrain authoring task. One major limitation of their method is that they fail to learn a generative latent space and hence are unable to perform terrain manipulation tasks.

In this paper, we propose a novel realistic terrain authoring framework powered by a combination of Variational Auto-encoder (VAE), and conditional GAN model. Our framework attempt to overcome the limitations of TSynthNet [10] by learning a latent space from real world terrain dataset. This latent space allows us to generate multiple variants of terrain from a single input as well as interpolate between terrains, while keeping the generated terrains close to real world data distribution. We also developed an interactive tool, that lets the user model diverse terrains with minimalist inputs. We perform thorough qualitative and quantitative analysis and provide comparison with TSynthNet [10] to show superiority of our method over SOTA. We summarise our contributions as follows:

-

•

We propose a novel terrain authoring framework, powered by VAE and cGAN and provide thorough empirically evaluation for the same on real world dataset.

-

•

We propose to learn a generative latent space for topographic maps using VAE that enables terrain interpolation and automated variant terrain generation.

-

•

We design a novel VAE loss function to exploit sparse topographic features like ridge/valley lines.

Example-based methods use real world examples or user defined sketches for terrain synthesis. Texture-based terrain synthesis technique takes a set of input images and produces an output with similar characteristics by deriving from common features in input data. [6] is a texture-based method that takes user constraints in the form of points, curves and painted terrains. [16] lets user edit existing terrains by providing sketch silhouettes. [5] combines interactive and procedural methods to let user render complex waterfall scenes. They also allow user to edit existing terrains to model waterfalls. Sketch-based methods are often tedious as it requires manual editing whereas example-based methods are limited by dataset and cannot generate new ones unless present in the input.

Generative methods in deep learning attempts task of generating unique, diverse data. [19] is one of the earliest unsupervised generative model. [18] use convolution neural networks (CNNs) to compress input data into a low dimensional latent vector and generate images from these vectors. VAE [12] maps the input data on to a probabilistic distribution, by learning their parameters for each input. They manage to model the entire dataset on to latent space. The data points similar to each other lie close on this learnt latent space. It is possible to sample data similar to each other by sampling from the learnt distribution. One can also traverse this latent space to interpolate between two data points. GANs [8] use a combination of generative and adversarial networks for training. The adversarial loss used makes sure that the generated sample distribution and true data distribution are close to each other. [17] use CNNs with GANs to generate unique images from vectors sampled from a known distribution. Conditional GANs (cGAN) [15] use auxiliary information along with input to to have better control over generated output. Pix2pix [11] is a cGAN which performs image to image translation. They also provide an application of translating hand drawn input into meaningful image. [4, 9] are a face editing models that first learn a local feature embedding for each component of face, then further user generative network to generate images with incorporated editing. Learning a latent space gives more flexibility for authoring and editing applications. TSynthNet [10] is one of the first to use a deep learning model for example based terrain synthesis. They use a cGAN model borrowed from [11] to generate terrains from hand drawn topographic maps (either level-sets or ridge/valley lines).

Please refer to supplementary material for detailed discussion on other related works in the literature.

2 Methodology

We propose a realistic virtual terrain authoring framework for generating terrains in the form of Digital Elevation Models (DEMs) from hand drawn sketches. Our two-stage framework attempt to learn the topographical structure of real-world terrains from existing datasets and generate plausible DEM from input sketches that can be thought of as topographic maps primarily consisting of DEM level-sets and ridge/valley lines representing underlying abstract topological features of the terrain. In the first stage, we aim to learn a generative latent space for topographic maps using a Variational Auto-encoder (VAE) [12] model from real world terrain dataset. We extract ground truth topographic maps from real world terrain data (see Section 3.1) and autoregress using VAE to learn the latent space. Learning such a latent space enables two key use-cases of proposed method, namely, automated generation of multiple variants of terrain from a single user input sketch, and interpolate between two terrains, while keeping the generated virtual terrains closer to real world dataset consisting of realistic topographical features. The output of this stage is the latent topographic map depicting generated level-sets and ridge/valley lines. Subsequently, this output is fed to the second stage which consists of conditional Generative Adversarial Network (cGAN) (Pix2pix [11]) model that generates plausible DEM output. Although our second stage is inspired from TSynthNet [10], we propose to combine DEM level-sets and ridge/valley line strokes into a single topographic map as these two representations are complementary and jointly provide richer information for terrain authoring task. We propose a novel VAE loss to emphasize the sparse ridge/valley lines in the joint topographic map representation. Figure 2 show the overview of proposed two-stage framework.

2.1 Stage 1: Latent Space Learning

Let be our (hand drawn) input sketches representing a rough topographic map. We assume that these inputs belong to some parametric distribution and each terrain is generated by some random process, involving an unobserved random variable . Calculating is hard as is intractable. Hence, we use the VAE to approximate another distribution such that it is similar to learns the distribution parameters and , from which the latent vector is sampled. We use a re-parameterization trick to sample as , where is sampled from a Standard Normal distribution. This sampled vector is fed to the decoder which predicts , that is the reconstruction of original input .

| (1) |

| (2) |

We propose a novel auto-regressive reconstruction loss between VAE input and output by modifying the traditional Binary Cross Entropy (BCE) loss (Equation 1) to emphasize the ridge/valley lines in the topographic map. Here, we represent the input topographic map as RGB image where the Green channel () is dedicated to represent level-sets while the Red () and Blue () channels are used to represent ridge and valley lines, respectively. A similar representation exist for output (reconstructed) topographic map (). We propose to give higher weightage to the loss on red and blue channels so as to give more importance to red and blue channels representing sparse ridge and valley lines/strokes in the topographic map sketches. This is desired in our joint representation of topographic map as ridge/valley lines/strokes are relatively sparse (occupying fewer pixels) in comparison to dense level-sets maps (green channel). This helps the model learn sharp features of river valleys and hill ridges. Additionally, a traditional KL divergence loss (Equation 3) ensure that the probability distribution of latent vector follows a Standard Normal distribution.

| (3) |

Thus, the final VAE loss (Equation 4) is a combination of reconstruction (Equation 2) and KL divergence loss (Equation 3). The parameter in Equation 4 is the weighting of the latent loss which is set to 0.65, is weight of red and blue channels in reconstruction loss which is set to 5, and are the mean and variance learnt by the network.

| (4) |

The VAE output is a topographic map, which is further fed to our second stage for generating the plausible DEM for completion of terrain authoring task. However, apart from traditional terrain authoring task, the key importance of our multi-stage framework is that the VAE based learning of latent space also enables performing interpolation of terrains as well as generating novel variants of a given terrain, as explained below.

2.1.1 Terrain Interpolation

We can use the latent space for interpolation between two terrains in the lower dimensional (latent) feature space (). Given input (topographic map) sketches and , we can obtain their corresponding latent representations in terms of means & and standard deviations & . We can subsequently interpolate between the of these two inputs as where () is an interpolation parameter defining degree of dominance of over in the interpolated output topographic map. The interpolated topographic map output is fed to the second stage to get the final DEM.

2.1.2 Generating Terrain Variants

We can further use this learnt latent space for sampling new terrain variant for automated variant generation. Given an input , we can obtain corresponding and vectors from the encoder. Subsequently, we can sample new vectors as , where we can vary parameter to get different output terrains () with variations in terrain topographic features as compare to input . The generated topographic map variant is fed to the second stage to get the final DEM.

2.2 Stage 2: DEM Generation

The aim of this stage is to generate the DEM given user topographic map sketch or in our case generated sketch from previous stage. Similar to TSynthNet [10], we adapt Pix2pix [11], a conditional GAN model for this task. Traditionally, GAN [8] are based on the idea of making a given noise distribution to match the desired data distribution. The conditional GANs [15] conditions both generator and discriminator on some auxiliary information to have better control over the generated output. The generator network attempts to learn this mapping while the discriminator learns to discriminate the generated data from ground truth data. Thus, the overall network is trained such that both generator and discriminator reach a Nash equilibrium by playing a two player minmax game while optimizing the value functions.

Let’s represent ground truth DEM as and as generated/predicted DEM by the Pix2pix model for the input topographic map sketch . Let be the feature embedding learnt by the generator encoder, the value function can be defined as:

| (5) |

Our model uses hand drawn input topographic sketch maps as an auxiliary information in order to produce plausible DEMs. We use L1 loss for reconstruction from the generator i.e., . So the final loss for cGAN training becomes:

| (6) |

3 Experimental Setup

3.1 Dataset

We use popular DEM dataset used by other relevant works in the literature e.g., [3, 14] which is part of publicly available high-resolution DEMs of mountain ranges named Pyrenees [1] and Tyrol [2], respectively. DEM patches with a resolution of 2m/pixel has been used as ground truth elevation maps. Original DEM tiles were split into 200x200 pixels. Instead of using the whole dataset of 22000 image patches for training and 11000 image patches for testing, we randomly sample 3000 image patches for training and 878 image patches for testing. More details about the dataset can be referred from [14]. We prepare the training dataset by extracting the topographic map input sketches from DEMs (please refer to supplementary material for details).

3.2 Implementation Details

Our VAE model (in stage 1) is a 12 layer network with 6 layers in encoder and 6 layers in decoder. The latent space dimension is set to 128. All the layers of encoder consists of a 3x3 convolution with stride of 2 and padding of 1, followed by Batch Normalization and using Leaky ReLU non-linearity. Due to stride of 2, the image resolution reduces by two after each layer, and we double the number of channels in each layer starting from 32 in first and 1024 in the final layer of encoder. We then flatten the output of encoder and pass it through fully connected layer to get 128 dimension mean and log variance. This mean and log variance is then used to sample and then the decoder is used to reconstruct the input. Each decoder layer consists of Transposed Convolution with stride of 2 and input and output padding of 1, followed by batch normalization and leaky ReLU as activation. Decoder also consists of 6 layers, so we reach the same resolution as input. At the end we have a convolution layer with 3x3 kernel, stride of 1, padding of 1 and output channels 3. Adam optimizer was used to update the parameters with learning rate of and an exponential scheduler with gamma set to while training the VAE with a batch size of for epochs.

Our conditional GAN generator (in stage 2) is a U-net inspired Pix2pix architecture [11]. This model was trained using Adam optimizer with learning rate of , set to and as for both Generator and Discriminator. We use a batch size of here.

We used 3000 terrain patches (of 256256) for training and 878 terrain patches (of 256256) for testing both stage 1 and 2 models of our method. All our experiments were performed on a single Nvidia GTX 1080Ti. The network implementations were done using Pytorch.

4 Results & Discussion

4.1 Qualitative Evaluation

We demonstrate the result of the proposed pipeline in Figure 4. The figure shows the comparison between rendered ground truth and predicted DEM draped with corresponding satellite images. This shows that the model is able to generate realistic terrains close to the ground truth. Some more examples of diverse terrains generated are shown in Figure 6.

Generating Terrain Variants:

We utilise the latent space created by VAE to generate different samples from the same input. Different terrains generated from the latent space encoding of the same input topographic map are shown in Figure 5. The red circled regions shows the variation in the generated terrains as compared to the original terrain. We can observe the generated terrains have realistic but slightly different topographical features from that of input terrain. This provides the user the flexibility to generate multiple terrain DEMs and use them for large scale generation of virtual terrain maps.

Terrain Interpolation:

The latent space can also be used for automated fusion of topographic features across two terrains. Figure 3 shows an example interpolation of two input terrains in the latent space for different values of parameter. This enables the user to generate new virtual terrain maps by combining a given pair of input terrain maps.

Please refer to supplementary video for 3D visualization of qualitative results.

4.2 Quantitative Evaluation & Ablation Study

| Method | MSE |

|---|---|

| TSynthNet [10] | 30.157 |

| Only VAE | 57.265 |

| Our model (VAE+cGAN) | 28.4024 |

| Our model (VAE+cGAN) without modified VAE loss | 73.611 |

We perform quantitative analysis and compare our model with TSynthNet [10]. Additionally, we also compare our method with two variants namely, “Only VAE” and “VAE+cGAN without modified VAE loss”. We attempted to learn the DEM generation using only VAE in the former model. The latter is an ablative variant of our model without modified VAE loss. We train all these models with same train/test split on terrain dataset (see subsection 3.1) and reported Mean Squared Error (MSE) as the evaluation metric.

We provide quantitative evaluation results in Table 1 . We can observe that we obtained superior performance with MSE of from our model (VAE+cGAN with modified VAE loss). The ablative comparison with VAE+cGAN without modified VAE loss (i.e., MSE value of ) show that our proposed modified VAE loss that account for ridge/valley lines significantly improves the performance of our method. Only VAE model (i.e., single stage VAE based DEM generation) also performs inferior (with MSE of ) to our model justifying need of two stage framework. In comparison to TSynthNet [10], our model yield lower MSE value. It is important to note that apart from achieving lower MSE than TSynthNet on terrain generation task, our method also enables terrain interpolation and variant generation using the learnt VAE latent space. Thus, our model yield superior quantitative performance as compared to other methods/variants.

We also performed two additional ablation experiments to justify our proposed method. In the first experiment, we retain the same architecture as our method but train it differently. Here we first train the VAE of first stage but during training of cGAN in the second stage, we let the gradients back propagate all way to decoder (while VAE encoder weights are kept frozen). However, we achieved an MSE of quiet inferior to our model. In the second experiment we initially train the stage 1 VAE to learn the latent space. In the second stage we again learn another VAE but with borrowing and freezing the encoder weights from first stage and train only the decoder for generating DEMs. Thus, it is still a two stage pipeline with two VAEs. We obtained an MSE of with this variant. These ablative studies justifies choice of our proposed two stage framework and learning strategy.

4.3 User Study

We performed a detailed user study involving users. In order to test the degree to which our model can generate realistic terrains, we presented users (students trained in geographical information system course) with set of generated (reconstructed) and ground truth terrain pairs overlaid with satellite images, similar to ones shown in Figure 6 and asked them to differentiate between the two. The outcome was that users were unable to decisively differentiate and choose the real terrain only of the time. Total of the users agreed that the terrains generated are very realistic while said that it is fairly realistic.

In the second experiment, we provide the user with a simple interface to draw input sketches. The designed interface is simple with controls such as Clear, Save, Exit. We provide the option to vary brush thickness so that the dense level-sets can be drawn with only few strokes. The user interface and the DEM generated for a hand draw user input is shown in Figure 7. The input can also be interactively edited to get desired output. Figure 8 shows visualization of generated terrains during an interactive terrain editing session. We asked the users several questions regarding the interface and the application such as 1) whether the input is intuitive, 2) if it is easy to express one’s intent, 3) if the terrains generated follow the sketches etc. users said that the input is very intuitive while users agreed that it is fairly intuitive. of the user strongly agree that the generated terrain follow the input sketches, while the remaining fairly agree. All the users strongly agree that the system is fast and reactive. When asked to rate on a scale of 1 to 5, on how easy it was to express one’s intent, of the users gave a rating of 5, gave a rating of 3 and gave a rating of 2. We observe that the users were able to generate DEMs with ease after a couple of attempts.

5 Conclusion

We proposed a novel realistic terrain authoring framework powered by a combination of Variational Auto-encoder and conditional GAN model. Our framework learns a generative latent space from real world terrain dataset. This latent space allows us to generate multiple variants of terrain from a single input as well as interpolate between terrains, while keeping the generated terrains close to real world data distribution. While a preliminary interactive tool has been developed and used here, we further intend to provide user control to generate the terrain variants and interpolated terrains. The thorough qualitative and quantitative analysis and comparison with other SOTA methods supports the superior outcome of our approach.

References

- [1] Institut cartogràfic i geològic de catalunya (icc). http://www.icc.cat/vissir3. Online Accessed: February 2, 2019.

- [2] Südtiroler bürgernetz geokatalog (sbg). http://geokatalog.buergernetz.bz.it/geokatalog. Online Accessed: February 2, 2019.

- [3] Oscar Argudo, Antoni Chica, and Carlos Andujar. Terrain super-resolution through aerial imagery and fully convolutional networks. In Computer Graphics Forum, volume 37, pages 101–110. Wiley Online Library, 2018.

- [4] Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. Deepfacedrawing: Deep generation of face images from sketches. ACM Trans. Graph., 39(4), July 2020.

- [5] Arnaud Emilien, Pierre Poulin, Marie-Paule Cani, and Ulysse Vimont. Interactive procedural modelling of coherent waterfall scenes. Comput. Graph. Forum, 34(6):22–35, Sept. 2015.

- [6] J. Gain, B. Merry, and P. Marais. Parallel, realistic and controllable terrain synthesis. Computer Graphics Forum, 34(2):105–116, 2015.

- [7] Eric Galin, Eric Guérin, Adrien Peytavie, Guillaume Cordonnier, Marie-Paule Cani, Bedrich Benes, and James Gain. A review of digital terrain modeling. Computer Graphics Forum, 38(2):553–577, 2019.

- [8] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial networks, 2014.

- [9] Shuyang Gu, Jianmin Bao, Hao Yang, Dong Chen, Fang Wen, and Lu Yuan. Mask-guided portrait editing with conditional gans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2019.

- [10] Eric Guérin, Julie Digne, Eric Galin, Adrien Peytavie, Christian Wolf, Bedrich Benes, and Benoit Martinez. Interactive example-based terrain authoring with conditional generative adversarial networks. ACM Transactions on Graphics (proceedings of Siggraph Asia 2017), 36(6), 2017.

- [11] Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. Image-to-image translation with conditional adversarial networks. CoRR, abs/1611.07004, 2016.

- [12] Diederik P Kingma and Max Welling. Auto-encoding variational bayes, 2014.

- [13] Ashish Kubade, Diptiben Patel, Avinash Sharma, and K. S. Rajan. Afn: Attentional feedback network based 3d terrain super-resolution, 2020.

- [14] Ashish A Kubade, Avinash Sharma, and K S Rajan. Feedback neural network based super-resolution of dem for generating high fidelity features. In IGARSS 2020 - 2020 IEEE International Geoscience and Remote Sensing Symposium, pages 1671–1674, 2020.

- [15] Mehdi Mirza and Simon Osindero. Conditional generative adversarial nets, 2014.

- [16] Flora Ponjou Tasse, Arnaud Emilien, Marie-Paule Cani, Stefanie Hahmann, and Neil Dodgson. Feature-based terrain editing from complex sketches. Computers and Graphics, 45:101–115, Dec. 2014.

- [17] Alec Radford, Luke Metz, and Soumith Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks, 2016.

- [18] D. E. Rumelhart, G. E. Hinton, and R. J. Williams. Learning Internal Representations by Error Propagation, page 318–362. MIT Press, Cambridge, MA, USA, 1986.

- [19] Ruslan Salakhutdinov and Geoffrey Hinton. Deep boltzmann machines. In David van Dyk and Max Welling, editors, Proceedings of the Twelth International Conference on Artificial Intelligence and Statistics, volume 5 of Proceedings of Machine Learning Research, pages 448–455, Hilton Clearwater Beach Resort, Clearwater Beach, Florida USA, 16–18 Apr 2009. PMLR.

- [20] Monia Santini, Salvatore Grimaldi, Fernando Nardi, Andrea Petroselli, and Maria Cristina Rulli. Pre-processing algorithms and landslide modelling on remotely sensed dems. Geomorphology, 113(1-2):110–125, 2009.