Deep Generative Attacks and Countermeasures for Data-Driven Offline Signature Verification

Abstract

This study investigates the vulnerabilities of data-driven offline signature verification (DASV) systems to generative attacks and proposes robust countermeasures. Specifically, we explore the efficacy of Variational Autoencoders (VAEs) and Conditional Generative Adversarial Networks (CGANs) in creating deceptive signatures that challenge DASV systems. Using the Structural Similarity Index (SSIM) to evaluate the quality of forged signatures, we assess their impact on DASV systems built with Xception, ResNet152V2, and DenseNet201 architectures. Initial results showed False Accept Rates (FARs) ranging from to across all models and datasets. However, exposure to synthetic signatures significantly increased FARs, with rates ranging from to . The proposed countermeasure, i.e., retraining the models with real + synthetic datasets, was very effective, reducing FARs between and . These findings emphasize the necessity of investigating vulnerabilities in security systems like DASV and reinforce the role of generative methods in enhancing the security of data-driven systems.

1 Introduction

Handwritten signatures have long been a cornerstone of identity verification, utilized extensively in legal, financial, and medical domains. Despite their widespread use, the manual verification of signature authenticity remains a laborious and impractical process, especially at large scales. The rise in sophisticated signature frauds further complicates manual verification, underscoring the need for automated solutions. Consequently, Automated Signature Verification (ASV) has been a vibrant field of research for several decades [1, 2]. Traditional machine learning classifiers, such as Hidden Markov Models, Support Vector Machines, and Neural Networks, have been employed with notable success, achieving commendable error rates [1, 2]. More recently, the advent of data-driven approaches, particularly Convolutional Neural Networks (CNNs) and Transformer-based architectures, has significantly enhanced representation learning and improved verification accuracy [3, 4, 2, 5, 6, 7]. CNN-based models, in particular, have demonstrated lower error rates across diverse datasets, affirming their scalability and generalizability [3, 8].

The threat of signature forgery poses substantial risks to various sectors, potentially leading to severe financial losses, legal disputes, and breaches of confidential information. These risks highlight the critical need for robust ASV systems to resist sophisticated forgeries. The literature differentiates between writer-dependent and writer-independent models within the domain of ASV. This study focuses on writer-dependent models, which are meticulously tailored to individual users [1]. Furthermore, ASV systems are classified based on data acquisition techniques into online and offline verification systems. This paper concentrates on offline ASV systems, which rely on static signature images while deferring the exploration of online systems to future research [9, 10, 2, 1].

Like other biometric systems, ASVs are vulnerable to various types of attacks, which can be categorized as either random or skilled based on the attacker’s knowledge and intent [10]. Random forgeries are executed without prior knowledge of the target signature. In contrast, skilled forgeries involve deliberate attempts to replicate or mimic the target signature with varying degrees of sophistication, from rudimentary imitations to highly practiced and refined copies [11]. The increasing complexity of these attacks necessitates the development of innovative defensive measures.

Ballard et al. [12] expanded the classification of attacks to include naive, trained, and generative methods, highlighting the critical role of generative attacks in evaluating ASV security. While there has been some research into the generation of synthetic signatures [13, 5], the potential of advanced generative techniques, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), remains largely unexplored [14, 15]. Therefore, this study explores the application of contemporary Deep Generative Models (DGMs) to challenge data-driven ASV systems and develop effective countermeasures. The primary contributions are as follows:

- Identified a correlation between the SSIM of the generated images and the attack success rates measured by False Accept Rates (FAR), thereby introducing controlled and explainable attack mechanisms.

- Enhanced model performance by incorporating SSIM-optimized synthetic forgeries into the training process.

2 Related work

Previous studies explored the use of deep convolutional neural networks (CNNs) with Siamese architectures for offline signature verification [3, 23]. These studies showed improvements in verification performance across various datasets, including reduced error rates on the CEDAR dataset. Other architectures, such as Inception [24], VGG16 [25], and DenseNet121 [26], have also been used effectively. Yusnur et al. [27] implemented CNNs with triplet loss, focusing on ResNet-18 and DenseNet-121. In this study, we use the latest versions of these architectures to establish baseline models.

Besides signature verification, automatic signature generation has been researched for several years. Ferrer et al. [28, 29] focused on generating forgeries and new identity signatures, developing a two-stage method mimicking the human handwriting process. Diaz et al. [30] introduced a cognitive-inspired algorithm that accounts for human spatial and motor variabilities in signing. Maruyama et al. [5] proposed a method to capture common writer variability traits and introduced a way to assess the quality of generated signatures using feature vectors. Further details of applications of signature generation can be found in [30].

Recent surveys have highlighted the threat posed by generated adversarial examples in offline signature verification and the need to develop countermeasures [2]. For instance, Hafemann et al. [31] identified vulnerabilities in CNN-based verifiers to deceptive inputs. Szegedy et al. [32] noted the susceptibility of neural network-based ASVs to perturbed signatures. Bird [33] explored synthetic forgeries generated using Conditional GANs and robotic arms, documenting false acceptance rates and recommending system training with synthetic forgeries as a countermeasure. In contrast, Li et al. [34] suggested that while adversarial inputs are detectable by humans, they failed to deceive ASVs. Tolosana et al. [35] investigated the use of Variational Autoencoders (VAEs) for online handwriting synthesis but did not evaluate the threat they posed to ASVs. Our research instead focuses on offline signature verification, providing further insight into generative adversarial threats. In particular, we employ state-of-the-art generators, namely Conditional GANs and VAEs, to challenge baseline models implemented using Xception, DenseNet201, and ResNet152V2 across three different datasets, each in a different language. We also emphasize controlling the quality of synthetic signatures to devise effective countermeasures, as detailed in Section 3.5.

The presented attack scenarios simulate black-box injection attacks to evaluate ASV vulnerabilities, keeping real-world scenarios in mind where attackers do not have detailed knowledge of the baseline models. Please see Section 3.3 for more details.

3 Design of experiments

Figure 1 provides an overview of the experimental setup. The components and corresponding sub-components are described in the subsequent paragraphs. The dataset and implementation details are available via GitHub (https://github.com/axn0684/DeepGenerativeAttack).

3.1 Datasets

To test our attack and countermeasure approaches, we used three different datasets, each consisting of signatures from a different language:

CEDAR This widely used dataset in the offline ASV domain [17] includes genuine and forged signatures for each of the users, totaling genuine and forged signatures. The signatures were at dpi gray-scale and were binarized using a gray-scale histogram. Salt-and-pepper noise removal and slant normalization were also applied to preprocess the signature images.

BHSig260Bengali This dataset consists of sets of handwritten offline signatures. Each set comprises genuine and skilled forgeries. All signatures were collected using a flatbed scanner with a resolution of dpi in gray-scale and stored in TIFF format. A histogram-based threshold was applied for binarization to convert data to two-tone images. The Bengali script has more curved shapes compared to Hindi Devanagari. It also includes unique nasal consonants and sibilants not found in Hindi, while vowel forms have a horizontal orientation.

BHSig260Hindi This dataset consists of sets of handwritten offline signatures. The components and acquisition of each set are the same as the BHSig260-Bengali dataset. The angular Devanagari script is used for Hindi signatures. It contains distinct consonant clusters that are absent in Bengali. The Hindi vowel shapes have a more diagonal and vertical orientation than Bengali. While both datasets contain Indian script signatures, the writing styles differ due to variations in the Bengali and Hindi character sets.

Random signatures To train the ASVs, Otsu preprocessing and normalization proposed in [36], were applied on the original datasets besides transforming the signatures into a binary array of size x. Thus, to generate random signatures, we created arrays of size filled with randomly selected values of either or . These random signatures were used to launch random signature attacks and evaluate ASVs’ vulnerability to such attacks.
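As a rough illustration of the random signatures described above, the following minimal sketch fills a binary array with uniformly chosen 0/1 pixels. The function name `random_signature`, the seed, and the 8 x 16 size are placeholders chosen for this example and are not the settings used in the experiments.

```python
import random


def random_signature(height, width, rng):
    """Return a height x width binary image with pixels drawn uniformly from {0, 1}."""
    return [[rng.randint(0, 1) for _ in range(width)] for _ in range(height)]


rng = random.Random(0)   # fixed seed so the sketch is reproducible
HEIGHT, WIDTH = 8, 16    # placeholder size, NOT the image size used in the paper
attack_samples = [random_signature(HEIGHT, WIDTH, rng) for _ in range(5)]
```

Each element of `attack_samples` can then be passed through the same preprocessing and verification path as a real signature image.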

DGM-assisted synthetic signatures We used VAE [35] and CGAN [16] to create six synthetic datasets from the forgeries of each public dataset. Our generation method differs from that of Bird et al. [33], who supplied conditional GANs with multiple images labeled genuine and forgery to generate GAN-based images. We trained individual models for each generative architecture and generated nine synthetic samples for each original image. Each of the six synthetic datasets was divided into training and testing parts to avoid data leakage. The training part comprised signatures generated from references drawn from the training set of human forgeries, while the testing part comprised signatures generated from references drawn from the testing set of human forgeries. We describe the generators below.

CGANs are an extension of the vanilla GAN [14]. They consist of two components: a generator and a discriminator. The generator creates synthetic data samples, while the discriminator distinguishes between real and generated data. The key difference in CGANs is the introduction of additional conditional variables, such as labels or tags, to both the generator and the discriminator. This conditional information provides control over the types of data the generator creates. In this study, CGANs were trained on existing datasets to create synthetic forgeries of each public dataset. Mathematically, the objective function of a CGAN is expressed as:
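For reference, the standard conditional GAN formulation from the literature is a two-player minimax game between the generator $G$ and the discriminator $D$. The symbol names below ($x$ for a real sample, $z$ for a noise vector, $y$ for the conditioning variable) follow common convention and are assumed here rather than taken from this paper:

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x \mid y)\right] + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z \mid y))\right)\right]$$

The first term rewards the discriminator for recognizing real samples under condition $y$, and the second rewards it for rejecting generated ones, while the generator is trained to make the second term small.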

VAEs are generative models consisting of two main parts: an encoder and a decoder. The encoder compresses input data, in this case, images, into a lower-dimensional latent space while the decoder reconstructs the original data from these compressed representations. VAEs use a variational inference approach, introducing a probabilistic layer that models the latent space as a distribution, typically Gaussian, rather than deterministic values. In this study, VAEs were trained on images from three human forgery datasets. The encoder was designed to map signature images to a lower-dimensional latent space, capturing the essential features and variations of the signatures. The decoder was trained to generate signature images from the latent representations.

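Mathematically, VAEs optimize an objective function that includes a reconstruction loss and a regularization term. The objective function of VAE is expressed as:

$$\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - \mathrm{KL}\left(q_\phi(z \mid x) \,\|\, p(z)\right)$$

Here, $x$ represents the input data, which are human forgeries, $z$ is a sample from the latent space, $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, $p(z)$ is the prior over the latent space, and KL represents the Kullback-Leibler divergence.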

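Quality of synthetic signatures was evaluated using the Structural Similarity Index Measure (SSIM) [19]. SSIM considers luminance (brightness), contrast, and the structure of pixels in the images. It calculates three key components: luminance ($l$), contrast ($c$), and structure ($s$), each designed to capture specific aspects of similarity. These components are computed using mean ($\mu$) and standard deviation ($\sigma$) values of pixel intensities within the windows. The SSIM score, denoted by $\mathrm{SSIM}(x, y)$, is computed as follows:

$$\mathrm{SSIM}(x, y) = l(x, y) \cdot c(x, y) \cdot s(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $\sigma_{xy}$ is the covariance of the two windows, $C_1$ and $C_2$ are small stabilizing constants, and the three components are weighted equally.

The value of SSIM ranges from $-1$ to $1$, where $1$ indicates perfect structural similarity, $0$ denotes no similarity, and negative values indicate dissimilarity.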
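To make the SSIM computation concrete, here is a minimal pure-Python sketch that treats the whole image as a single window. This is a simplification: SSIM as usually implemented slides a local window over the image and averages the per-window scores. The function name `ssim_global` is hypothetical, and the constants `k1=0.01` and `k2=0.03` are the conventional defaults from the SSIM literature, assumed here rather than taken from the experiments.

```python
def ssim_global(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equally sized 2-D images (lists of rows)."""
    px = [v for row in x for v in row]
    py = [v for row in y for v in row]
    if not px or len(px) != len(py):
        raise ValueError("images must be non-empty and the same size")
    n = len(px)
    mu_x = sum(px) / n
    mu_y = sum(py) / n
    var_x = sum((a - mu_x) ** 2 for a in px) / n
    var_y = sum((b - mu_y) ** 2 for b in py) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(px, py)) / n
    c1 = (k1 * data_range) ** 2   # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2   # stabilizes the contrast/structure term
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


checker = [[0, 1], [1, 0]]
inverted = [[1, 0], [0, 1]]
same_score = ssim_global(checker, checker)       # identical images
opposite_score = ssim_global(checker, inverted)  # pixel-wise inverted image
```

Comparing an image with itself gives the maximum score, while comparing it with its pixel-wise inversion gives a negative score, matching the interpretation of the range given above.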

VAE-generated datasets achieved an average of across three datasets. In contrast, the average for CGAN-generated datasets was . Details are presented and discussed in Table 1.

One could argue that CGANs should generally outperform VAEs when generating synthetic signatures. However, this was not the case in our experimental setup, likely because (1) we generated new signatures from a single reference signature; (2) GANs suffer when training data is limited, mainly because the discriminator memorizes the training set [37]; and (3) the VAE objective has inherent regularization (the KL divergence term) that prevents over-fitting to the single reference sample, and VAEs presumably offer a more stable training process and a smooth latent space.

SSIMs vs. FARs (attack success) We used a fixed number of epochs to generate the synthetic signatures, and the resulting SSIM values are presented in Table 1. VAE achieved average SSIMs of 0.942, 0.510, and 0.938 for the Bengali, CEDAR, and Hindi datasets, respectively. With the same number of epochs, CGAN achieved average SSIMs of 0.104, 0.126, and 0.108 for the Bengali, CEDAR, and Hindi datasets, respectively.

Observing the stark difference between the damages (FARs) caused by the VAE-generated and CGAN-generated signatures (see Figure 5), we investigated the relationship between SSIM and the false accept rates achieved by the generated signatures. A strong negative correlation was found between SSIM and the false accept rates, as demonstrated in Figure 2, which offers insights into controlling the quality of the generated signatures and, in turn, the ability to attack.
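One simple way to quantify such a relationship is a Pearson correlation coefficient between per-dataset (or per-sample) SSIM and FAR. The sketch below assumes Pearson correlation as the measure, which the text does not specify, and the numbers in `toy_ssim` and `toy_far` are made-up illustrative values, not results from the experiments.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Toy values for illustration only; they are NOT measurements from the paper.
toy_ssim = [0.1, 0.3, 0.5, 0.7, 0.9]
toy_far = [0.80, 0.55, 0.40, 0.20, 0.05]
r = pearson_r(toy_ssim, toy_far)
```

A value of `r` close to -1 would indicate that FAR falls almost linearly as SSIM rises, which is the kind of pattern described above.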

The uncovered relationship between SSIM and FARs led us to generate an SSIM-controlled dataset for effective attacks and developing countermeasures. Since attackers can always obtain impostor signature samples, we centered the data generation process around impostor samples. In other words, we used impostor samples as the reference for data generation via DGMs.

SSIM-controlled generation Our objective was to generate signatures that deviate significantly from the impostor samples so they could be misclassified as genuine, thereby increasing the FARs. Specifically, we aimed to generate signature samples from forgeries with the lowest SSIM.

We generated five signatures per user from the impostor samples at different epochs. Upon plotting the results, we observed that CGAN could only generate signatures with very low SSIM. In contrast, VAE could generate signatures with a wide range of SSIMs (see Table 1 and Figure 3).

| Dataset | VAE | CGAN | VAE SSI-tuned |
|---|---|---|---|
| Bengali | 0.942 | 0.104 | 0.048 |
| CEDAR | 0.510 | 0.126 | 0.045 |
| Hindi | 0.938 | 0.108 | 0.045 |

Considering the high SSIM images generated by VAE compared to CGAN, we decided to retrain VAE with the SSIM-controlled countermeasure (see Table 1). Using this approach to attack the baselines, we generated signatures with SSIM less than to validate our observation of the relationship between SSIM and FAR.

The VAE models were retrained with the same dataset until the generated signatures reached an SSIM that could be considered insignificant. Specifically, instead of training our deep-learning baselines for a fixed number of epochs and using the final generator to produce signatures, we sampled one signature every epochs during the training. If the signatures were within the threshold (in this case, ), we stopped the training and kept the sampled signature. If, after epochs, no signature met the criteria, the model was retrained from scratch. This process was repeated times for each signature to obtain generated samples, similar to the uncontrolled dataset. We named this dataset the VAE-SSI-Controlled dataset.
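The stopping rule described above can be sketched as a small control loop. Everything here is a stand-in: `make_model`, `train_one_epoch`, `sample`, and `ssim_to_reference` are hypothetical callbacks for the generator code, and the threshold, sampling interval, and epoch limits in the toy run are placeholders rather than the values used in the experiments.

```python
def ssim_controlled_sample(make_model, train_one_epoch, sample, ssim_to_reference,
                           threshold, sample_every, max_epochs, max_restarts):
    """Train, sample periodically, and stop once a sample's SSIM is below the threshold."""
    for _ in range(max_restarts):
        model = make_model()                      # retrain from scratch on each restart
        for epoch in range(1, max_epochs + 1):
            train_one_epoch(model)
            if epoch % sample_every == 0:
                candidate = sample(model)
                if ssim_to_reference(candidate) < threshold:
                    return candidate, epoch       # keep the sampled signature
    return None, None                             # no sample met the criterion


# Toy stand-ins so the control flow can be run without a real generator.
def toy_make_model():
    return {"epochs": 0}


def toy_train_one_epoch(model):
    model["epochs"] += 1


def toy_sample(model):
    return model["epochs"]                        # the "signature" is just the epoch count


def toy_ssim(candidate):
    return 1.0 - 0.1 * candidate                  # arbitrary toy score, not real SSIM behavior


result = ssim_controlled_sample(toy_make_model, toy_train_one_epoch, toy_sample,
                                toy_ssim, threshold=0.5, sample_every=2,
                                max_epochs=10, max_restarts=3)
```

Repeating this call once per required sample for each reference signature would yield the controlled dataset. A real run would replace the toy callbacks with the VAE training step and an SSIM computation against the reference forgery.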

The new VAE-SSI-Controlled dataset further confirmed our observation of a negative correlation between SSIM and FAR, as it achieved higher FARs than the epoch-guided ones. The epoch-guided dataset is referred to as the VAE dataset, while the SSI-controlled dataset will be referred to as VAE-SSI-Controlled hereafter.

3.2 Baseline implementation

The baselines were created using three advanced Deep Convolutional Neural Network architectures: Xception, ResNet152V2, and DenseNet201. These architectures were chosen primarily because their previous versions demonstrated effectiveness in the literature for signature verification [38, 39, 20]. They mainly served as feature extractors to train user-specific signature authentication models. Since the focus is on writer-dependent models, a baseline was created for each user using their signatures.

Preprocessing We applied the Otsu method [36] for signature preprocessing. Subsequently, the images were resized to using the bi-cubic interpolation method and normalized before being input for feature extraction. The same preprocessing technique was also applied during testing and attacking.

Training and testing the authentication models We trained individual models for each user using two-thirds of their genuine and forged signatures. The remaining one-third of each user’s dataset and the synthetic signatures generated from their genuine and forged signatures were used for testing. The authentication module consists of a simple dense layer with nodes using the ReLU activation function and a dense layer with two nodes representing the output layer for genuine and impostor signatures. These layers use the Sigmoid activation function. We employed binary cross-entropy with a Stochastic Gradient Descent (SGD) optimizer and a learning rate of with momentum of . The resulting models are referred to as Vanilla Baselines to distinguish them from those obtained after retraining.
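The per-user two-thirds/one-third split can be sketched as follows. Whether the samples were shuffled before splitting is not stated, so the shuffle, the seed, and the function name `split_two_thirds` are assumptions made for this example, and the file names are placeholders.

```python
import random


def split_two_thirds(samples, rng):
    """Shuffle a user's samples and return (train, test) with a 2/3 : 1/3 split."""
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = (2 * len(shuffled)) // 3
    return shuffled[:cut], shuffled[cut:]


rng = random.Random(42)                                      # fixed seed for reproducibility
user_genuine = [f"genuine_{i:02d}.png" for i in range(12)]   # placeholder file names
train_genuine, test_genuine = split_two_thirds(user_genuine, rng)
```

The same function would be applied separately to each user's genuine and forged signatures so that both classes keep the same train/test proportion.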

3.3 Threat model

This paper considers black-box attacks, similar to those presented in [40] for deceiving gait, touch, face, and voice-based verification. The attackers do not require access to the details of the baseline models. However, the attackers need access to the samples the verifiers accept. Legitimate samples can be obtained through theft or using the membership inference technique presented in [41].

3.4 Attack evaluation scenarios

We used four attack scenarios to assess the baseline models’ attack susceptibility. The impacts of each attack were measured using False Acceptance Rates (FARs). The higher the FAR, the more successful the attack.

Vanilla or zero-effort attack The Vanilla Attack involves traditional impostor testing with human forgeries in the corresponding datasets. This attack is the primary scenario used to evaluate baseline performance, facilitate comparison with previous studies, and highlight the performance of various other attacks.

Random attack We adopted this attack from [40, 42], which studied random vector attacks for deceiving gait, touch, face, and voice-based verification. Following this approach, we generated random images to attack and evaluate each baseline.

VAE and CGAN attack In this scenario, we used VAE and CGAN-generated signatures to test if they could bypass the signature verification process. Unlike VAE-generated signatures, CGAN-generated signatures had more significant disparities from the reference images (see Figure 3). We hypothesized and expected CGAN-generated signatures to produce a higher FAR because the generated images were farther from the impostor images, resulting in a higher chance of being classified as genuine. This hypothesis was further investigated by exploring the relationship between the similarity of the generated and original images and the corresponding false accept rates (FAR).

3.5 Countermeasures

Retraining with synthetic datasets We propose synthetic data-augmented retraining of DASVs to enhance their robustness against the studied attacks. Each DASV was retrained using random signatures, DGM-assisted signatures, and human forgeries as impostor samples. This process resulted in three new models: random-assisted, VAE-assisted, and CGAN-assisted. These retrained models were then evaluated using the same attack scenarios and metrics. Separate datasets were used for retraining and testing to avoid data leakage.

For random-assisted systems, the random signatures were used in retraining. Similarly, the VAE and CGAN datasets were divided into training and testing sets for synthetic dataset-assisted retraining. We refer to the retrained models as VAE-assisted and CGAN-assisted verification models. These models were tested on synthetic signatures from the testing datasets to ensure the retraining set remained unseen. This process aimed to improve system performance in unfamiliar contexts.

SSIM-Tuning While evaluating the baseline models under GAN-assisted attack scenarios, we observed a strong negative correlation between SSIM and FAR (specified in Section 4.2). In other words, lower SSIM values (indicating greater distance from the reference human forgeries) corresponded to higher FARs, as these signatures were more likely to be accepted as genuine. Based on this observation, we posit that training models with low-SSIM signatures, treated as impostors, would increase impostor sample variance, reducing FAR and enhancing robustness against various attack scenarios.

We decided to generate SSIM-controlled signatures for retraining the baselines. Among CGAN and VAE, VAE was more suitable for SSIM-controlled tasks due to its ability to generate images with a wide range of SSIMs (see Figure 3). The new VAE models were trained with the same dataset settings until the generated images reached an insignificant SSIM. Instead of training our deep-learning baselines with a fixed number of epochs, we sampled one image every epochs. If the image was within the threshold , we stopped the training and kept the sampled image. The model was retrained from scratch if no image met the criteria after epochs. This process was repeated times for each signature image to obtain generated samples, similar to the uncontrolled dataset.

Generating images with the desired SSIM took several iterations, but the process did converge. Because the procedure is somewhat ad hoc, we will investigate in future work whether it can be simplified.

The new VAE SSI-controlled dataset further confirmed our observation of a negative correlation between SSIM and FAR. The new VAE SSI dataset posed a greater threat to the baseline than the initial VAE dataset and was used to test or retrain the models. This paper refers to the VAE SSI-tuned dataset as VAE SSI to differentiate it from the initial VAE dataset.

3.6 Performance evaluation

The performance of the baseline models was assessed under two distinct settings. The first setting evaluated the models’ proficiency in accepting genuine signatures, while the second focused on rejecting non-genuine signatures. Performance in the first setting was quantified using False Reject Rates (FRR), probabilistically complementary to True Accept Rates (TAR). The models’ ability to reject non-genuine signatures was measured using False Accept Rates (FAR), probabilistically complementary to True Reject Rates (TRR), across the four attack scenarios described above. For easier comparison between different systems, the Half Total Error Rate (HTER), the average of FAR and FRR, is also reported.
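The three metrics can be computed directly from accept/reject decisions. The sketch below is a minimal illustration: the function name `error_rates` and the toy decision lists are made up for this example and do not correspond to any reported result.

```python
def error_rates(genuine_accepted, impostor_accepted):
    """Compute (FAR, FRR, HTER) from lists of booleans, where True means 'accepted'."""
    if not genuine_accepted or not impostor_accepted:
        raise ValueError("both decision lists must be non-empty")
    frr = genuine_accepted.count(False) / len(genuine_accepted)   # genuine samples rejected
    far = impostor_accepted.count(True) / len(impostor_accepted)  # impostor samples accepted
    hter = (far + frr) / 2                                        # average of the two error rates
    return far, frr, hter


# Toy decisions for illustration only: 1 of 10 genuine samples rejected,
# 2 of 10 impostor samples accepted.
toy_genuine = [True] * 9 + [False]
toy_impostor = [False] * 8 + [True] * 2
far, frr, hter = error_rates(toy_genuine, toy_impostor)
```

Under each attack scenario, only the impostor list changes (human forgeries, random images, or generated signatures), so the FRR stays fixed for a given model while the FAR varies with the attack.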

4 Results and discussion

4.1 Baseline performance

Figure 4 presents the performance of the three baseline models, namely Xception, ResNet152V2, and DenseNet201, across the CEDAR, BHSig260-Bengali, and BHSig260-Hindi datasets. All three models consistently achieved error rates below 6% in both FAR and FRR. DenseNet201 was the top performer with an HTER of 1.81%, followed by Xception (3.52%) and ResNet152V2 (4.43%) for the CEDAR dataset. For BHSig260-Bengali, DenseNet201 achieved an HTER of 0.44%, followed by ResNet152V2 (1.65%) and Xception (1.65%). For BHSig260-Hindi, DenseNet201, ResNet152V2, and Xception obtained HTERs of 1.73%, 3.66%, and 4.26%, respectively. The performance closely followed the results presented in the literature [24, 26].

4.2 Attack performance

The effects of the described attack scenarios, measured using FAR, are presented in Figure 5.

Random attacks Figure 5 reveals that although our baseline systems exhibited low FARs against skilled forgeries, they were highly vulnerable to even random attacks across all three models. In particular, ResNet152V2 trained on the Bengali dataset had the highest FAR under random attacks. On average, the other models’ FARs under random attacks also exceeded 30.5%.

CGAN-assisted attacks CGAN-generated signatures with lower SSIM caused substantial damage to the baseline ASVs when used as attack samples. Specifically, the CGAN-based attack achieved a % FAR across the ASVs and datasets. This error rate far exceeded that of original human forgeries. The reason is that the CGAN-generated signatures were far from skilled forgeries and presumably closer to genuine signatures, resulting in higher FARs. In contrast, the VAE-generated signatures were closer to the human forgeries, resulting in rejections by the ASVs and causing lower FARs than the CGAN-generated signatures.

VAE-assisted attacks Presented in Figure 5, the attack results using VAE datasets closely mirrored our anticipated outcomes. We observed that most of the results from VAE-generated signatures came close to the original forgery samples across all datasets, with a slight exception for those trained on CEDAR. Specifically, DenseNet201, ResNet152V2, and Xception trained on Vanilla Bengali had FARs of , and for Vanilla attacks, compared to , and for VAE attacks, respectively. Similarly, for the models trained on Vanilla Hindi, the FARs for Vanilla attacks were , and , compared to , and for VAE attacks. CEDAR-trained models were more affected by this type of attack, with significantly higher FARs of , and resulting from the VAE attacks.

There exist several other generation methods, as listed in [43]. We posit that different synthetic signature generation methods will differ in both their attack impact and their effect on robustness.

4.3 Countermeasure and performance

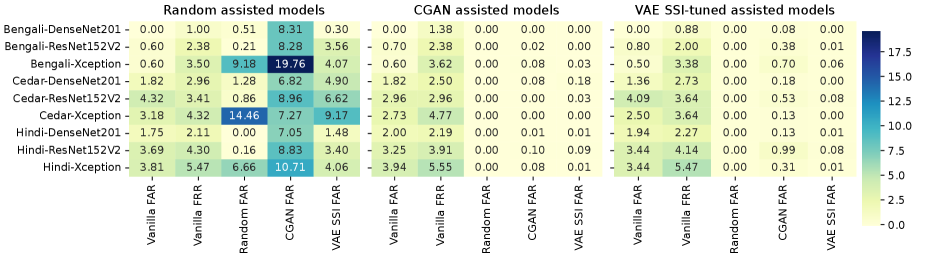

Random-assisted models The first heatmap (five columns from the left) in Figure 6 suggests that while the FAR and FRR tested on Human Genuine and Human Forgeries experienced slight changes, random-assisted models demonstrated higher robustness to random and generated attacks (compare the FARs in Figure 5 and Figure 6).

CGAN-assisted models The second heatmap (five columns in the middle) in Figure 6 suggests that the CGAN-assisted models showed high robustness against each attack. In particular, the FAR dropped to 0% for all random attacks and stayed under 0.10% for CGAN attacks and under 0.18% for the Vanilla attack in the worst case.

VAE-assisted models The third heatmap (five columns on the right) shown in Figure 6 suggests that the VAE-assisted retraining drastically improved the robustness of the models under all the attacks. In particular, VAE-assisted models also obtained FAR on random attack. The models also show significant improvements in CGAN attacks, despite not as much as CGAN-assisted models and VAE-SSI attacks. Particularly, the FAR falls in the range of 0.08 - 0.99% for CGAN attacks and 0.0-0.08% for VAE-SSI attacks. The models’ FAR on VAE-SSI attacks was slightly lower, with an average of , compared to for CGAN-assisted models.

The results support the hypothesis presented in [40] that mixing in synthetic signatures that lie farther from the human forgeries (negative samples) increases the variance of the impostor class and, in turn, reduces the false accept rates.

4.4 Implications

The research revealed severe vulnerabilities in data-driven ASVs to random and deep generative attacks. In other words, the existing data-driven ASVs failed to detect state-of-the-art generative forgeries to an alarming extent. The proposed countermeasure, i.e., retraining the ASVs using SSIM-tuned generated signatures, effectively reduced the impact of zero-effort, random, VAE, and CGAN-assisted attacks. This research thus emphasizes the need for continuous study of emerging threats to ASVs and possible countermeasures, which would support the widespread adoption of data-driven automatic signature verification and its trustworthiness among users.

5 Conclusion and future work

This study evaluated the vulnerabilities of data-driven offline ASVs to random and generative attacks and proposed effective countermeasures. Two advanced deep generative models, Variational Autoencoders (VAEs) and Conditional Generative Adversarial Networks (CGANs), were used to generate synthetic signatures that challenged baseline ASVs. The study demonstrated that CGAN-generated signatures, with lower SSIM, caused significant FARs, highlighting a critical security risk. The findings revealed a strong negative correlation between SSIM and FAR, indicating that lower-quality synthetic forgeries are more likely to be accepted as genuine signatures. This insight led to the development of a novel countermeasure involving SSIM-tuned retraining. Incorporating synthetic forgeries with controlled SSIM into the training process significantly improved the robustness of ASVs against various attack scenarios.

The retrained models, particularly those using VAE-SSIM-tuned signatures, exhibited FARs below 1% under CGAN and VAE-SSI attacks, demonstrating the effectiveness of our approach. This study underscores the importance of continuously evaluating and enhancing ASVs to mitigate evolving threats. In the future, we plan to investigate additional image quality metrics, such as the Fréchet Inception Distance (FID) and Universal Image Quality Index (UIQI), to better understand their relationship with attack success rates. Additionally, we aim to explore the potential of using genuine human signature datasets to improve system robustness further. Finally, extending our research to online signature verification and writer-independent models will be crucial for developing comprehensive and resilient ASVs.

6 Acknowledgment

We thank the anonymous reviewers for their invaluable feedback and comments, which significantly improved this manuscript. We are also grateful to The Bucknell Program for Undergraduate Research (PUR) for supporting An Ngo.

References

- [1] Luiz G. Hafemann, Robert Sabourin, and Luiz S. Oliveira. Offline handwritten signature verification — literature review. In 2017 Seventh International Conference on Image Processing Theory, Tools and Applications (IPTA), 2017.

- [2] M. Muzaffar Hameed, Rodina Ahmad, Miss Laiha Mat Kiah, and Ghulam Murtaza. Machine learning-based offline signature verification systems: A systematic review. Signal Processing: Image Communication, 2021.

- [3] Luiz G. Hafemann, Robert Sabourin, and Luiz S. Oliveira. Learning features for offline handwritten signature verification using deep convolutional neural networks. Pattern Recognition, 2017.

- [4] Elias N. Zois, Ilias Theodorakopoulos, and George Economou. Offline handwritten signature modeling and verification based on archetypal analysis. In 2017 IEEE International Conference on Computer Vision (ICCV), pages 5515–5524, 2017.

- [5] Teruo M. Maruyama, Luiz S. Oliveira, Alceu S. Britto, and Robert Sabourin. Intrapersonal parameter optimization for offline handwritten signature augmentation. IEEE Transactions on Information Forensics and Security, 2021.

- [6] Huan Li, Ping Wei, Zeyu Ma, Changkai Li, and Nanning Zheng. Offline signature verification with transformers. In 2022 IEEE International Conference on Multimedia and Expo (ICME), pages 1–6, 2022.

- [7] Huan Li, Ping Wei, Zeyu Ma, Changkai Li, and Nanning Zheng. Transosv: Offline signature verification with transformers. Pattern Recognition, 145, 2024.

- [8] Nurullah Çalik, Onur Can Kurban, Ali Rifat Yilmaz, Tülay Yildirim, and Lütfiye Durak Ata. Large-scale offline signature recognition via deep neural networks and feature embedding. Neurocomputing, 359:1–14, 2019.

- [9] Moises Diaz, Miguel A. Ferrer, Donato Impedovo, Muhammad Imran Malik, Giuseppe Pirlo, and Réjean Plamondon. A perspective analysis of handwritten signature technology. ACM Comput. Surv., Jan 2019.

- [10] Carlos Gonzalez-Garcia, Ruben Tolosana, Ruben Vera-Rodriguez, Julian Fierrez, and Javier Ortega-Garcia. Introduction to presentation attacks in signature biometrics and recent advances, 2023.

- [11] Walid Bouamra, Chawki Djeddi, Brahim Nini, Moises Diaz, and Imran Siddiqi. Towards the design of an offline signature verifier based on a small number of genuine samples for training. Expert Systems with Applications, 2018.

- [12] Lucas Ballard, Daniel Lopresti, and Fabian Monrose. Forgery quality and its implication for behavioural biometric security. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 37:1107–1118, 2007.

- [13] M. A. Ferrer, M. Diaz, C. Carmona-Duarte, and R. Plamondon. idelog: Iterative dual spatial and kinematic extraction of sigma-lognormal parameters. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(1):114–125, 2020.

- [14] Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial networks. 2014.

- [15] Diederik P Kingma and Max Welling. Auto-encoding variational bayes. CoRR, 2013. doi: 10.48550/ARXIV.1312.6114.

- [16] Mehdi Mirza and Simon Osindero. Conditional generative adversarial nets, 2014.

- [17] Meenakshi K. Kalera, Sargur N. Srihari, and Aihua Xu. Offline signature verification and identification using distance statistics. Int. J. Pattern Recognit. Artif. Intell., 18:1339–1360, 2004.

- [18] Srikanta Pal, Alireza Alaei, Umapada Pal, and Michael Blumenstein. Performance of an off-line signature verification method based on texture features on a large indic-script signature dataset. In 2016 12th IAPR Workshop on Document Analysis Systems (DAS), pages 72–77, 2016.

- [19] Zhou Wang, A.C. Bovik, H.R. Sheikh, and E.P. Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE Transactions on Image Processing, 2004.

- [20] Gao Huang, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q. Weinberger. Densely connected convolutional networks. CVPR, 2017.

- [21] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Identity mappings in deep residual networks, 2016.

- [22] François Chollet. Xception: Deep learning with depthwise separable convolutions. CoRR, abs/1610.02357, 2016.

- [23] Sounak Dey, Anjan Dutta, J. Ignacio Toledo, Suman K. Ghosh, Josep Llados, and Umapada Pal. Signet: Convolutional siamese network for writer independent offline signature verification, 2017.

- [24] Christian Szegedy, Vincent Vanhoucke, Sergey Ioffe, Jonathon Shlens, and Zbigniew Wojna. Rethinking the inception architecture for computer vision. CoRR, abs/1512.00567, 2015.

- [25] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [26] Muhammed Mutlu Yapıcı, Adem Tekerek, and Nurettin Topaloğlu. Deep learning-based data augmentation method and signature verification system for offline handwritten signature. Pattern Anal. Appl., 2021.

- [27] Yusnur Muhtar, Wenxiong Kang, Aliya Rexit, Mahpirat, and Kurban Ubul. A survey of offline handwritten signature verification based on deep learning. In 2022 3rd PRML, 2022.

- [28] Miguel A. Ferrer, Moises Diaz-Cabrera, and Aythami Morales. Synthetic off-line signature image generation. In 2013 International Conference on Biometrics (ICB), 2013.

- [29] Miguel A. Ferrer, Moises Diaz-Cabrera, and Aythami Morales. Static signature synthesis: A neuromotor inspired approach for biometrics. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(3):667–680, 2015.

- [30] Moisés Díaz Cabrera, Miguel Angel Ferrer-Ballester, George S. Eskander, and Robert Sabourin. Generation of duplicated off-line signature images for verification systems. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

- [31] Luiz G Hafemann, Robert Sabourin, and Luiz S Oliveira. Characterizing and evaluating adversarial examples for offline handwritten signature verification. IEEE Transactions on Information Forensics and Security, 14(8):2153–2166, 2019.

- [32] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. International Conference on Learning Representations, 2014.

- [33] Jordan J Bird. Robotic and generative adversarial attacks in offline writer-independent signature verification. arXiv preprint arXiv:2204.07246, 2022.

- [34] Haoyang Li, Heng Li, Hansong Zhang, and Wei Yuan. Black-box attack against handwritten signature verification with region-restricted adversarial perturbations. Pattern Recognition, 111:107689, 2021.

- [35] Ruben Tolosana, Paula Delgado-Santos, Andres Perez-Uribe, Ruben Vera-Rodriguez, Julian Fierrez, and Aythami Morales. Deepwritesyn: On-line handwriting synthesis via deep short-term representations. In AAAI Conf. on Artificial Intelligence (AAAI), February 2021.

- [36] Nobuyuki Otsu. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1):62–66, 1979.

- [37] Shengyu Zhao, Zhijian Liu, Ji Lin, Jun-Yan Zhu, and Song Han. Differentiable augmentation for data-efficient gan training. In Advances in Neural Information Processing Systems (NeurIPS), 2020.

- [38] Jahandad, Suriani Mohd Sam, Kamilia Kamardin, Nilam Nur, and Norliza Mohamed. Offline signature verification using deep learning convolutional neural network (cnn) architectures googlenet inception-v1 and inception-v3. Procedia Computer Science, 2019.

- [39] Neha Sharma, Sheifali Gupta, Puneet Mehta, Xiaochun Cheng, Achyut Shankar, Prabhishek Singh, and Soumya Ranjan Nayak. Offline signature verification using deep neural network with application to computer vision. Journal of Electronic Imaging, 31(4):041210, February 2022.

- [40] Benjamin Zhao, Hassan Asghar, and Mohamed Ali Kaafar. On the resilience of biometric authentication systems against random inputs. Network and Distributed System Security Symposium, 2020.

- [41] Reza Shokri, Marco Stronati, and Vitaly Shmatikov. Membership inference attacks against machine learning models. CoRR, abs/1610.05820, 2016.

- [42] Jun Hyung Mo and Rajesh Kumar. ictgan: An attack mitigation technique for random-vector attack on accelerometer-based gait authentication systems. In 2022 IEEE International Joint Conference on Biometrics (IJCB), pages 1–9, 2022.

- [43] Sam Bond-Taylor, Adam Leach, Yang Long, and Chris G. Willcocks. Deep generative modeling: A comparative review of vaes, gans, normalizing flows, energy-based and autoregressive models. IEEE Transactions on Pattern Analysis and Machine Intelligence, Nov 2022.