Deep evidential fusion with uncertainty quantification and reliability learning for multimodal medical image segmentation

Abstract

Single-modality medical images generally do not contain enough information to reach an accurate and reliable diagnosis. For this reason, physicians commonly rely on multimodal medical images for comprehensive diagnostic assessments. This study introduces a deep evidential fusion framework designed for segmenting multimodal medical images, leveraging the Dempster-Shafer theory of evidence in conjunction with deep neural networks. In this framework, features are first extracted from each imaging modality using a deep neural network, and features are mapped to Dempster-Shafer mass functions that describe the evidence of each modality at each voxel. The mass functions are then corrected by the contextual discounting operation, using learned coefficients quantifying the reliability of each source of information relative to each class. The discounted evidence from each modality is then combined using Dempster’s rule of combination. Experiments were carried out on a PET-CT dataset for lymphoma segmentation and a multi-MRI dataset for brain tumor segmentation. The results demonstrate the ability of the proposed fusion scheme to quantify segmentation uncertainty and improve segmentation accuracy. Moreover, the learned reliability coefficients provide some insight into the contribution of each modality to the segmentation process.

keywords:

Dempster-Shafer theory , Evidence theory , Medical image processing , Deep learning , Decision-level fusion[inst1]organization=Université de technologie de Compiègne, CNRS, Heudiasyc,city=Compiègne, country=France

[inst2]organization=Université de Rouen Normandie, Quantif, LITIS,city=Rouen, country=France \affiliation[inst3]organization=Université de Rouen Normandie, Centre Henri Becquerel,city=Rouen, country=France \affiliation[inst4]organization=Institut universitaire de France,city=Paris, country=France

1 Introduction

Recent advances in medical imaging technologies have facilitated the acquisition of multimodal data such as Positron Emission Tomography (PET)/Computed Tomography (CT) and multi-sequence Magnetic Resonance Imaging (MRI). Images from a single modality provide partial insight into cancer and other abnormalities within the human body. Multimodal medical image analysis, which integrates information from diverse medical imaging modalities, significantly contributes to a comprehensive understanding of intricate medical conditions [90]. It encompasses factors such as the location, size, and extent of pathological structures. Medical image segmentation based on the fusion of multimodal medical information allows clinicians to better delineate anatomical structures, lesions and abnormalities, thus enhancing the effectiveness of disease detection, diagnosis, and treatment planning.

Multimodal medical image fusion strategies can be implemented at different levels [84]. At the lowest pixel level, multimodality images are concatenated as a single input. Alternatively, features can be extracted from different modalities and combined for further modeling and reasoning (feature-level fusion). Finally, in the decision-level approach, partial decisions are made independently based on each modality and aggregated to obtain a final decision. Though recent developments in multimodal medical image analysis have yielded promising experimental results, conventional multimodal medical image fusion strategies still suffer from some limitations. It is often difficult to explain why a given strategy works in a given context, and to quantify decision uncertainty in a reliable way. Moreover, most approaches are based on optimistic assumptions about data quality and, contrary to clinical knowledge, they treat images from different modalities as equally reliable when segmenting tumors, which may lead to biased or wrong decisions.

The success of information fusion depends on the relevance and complementarity of input information, the existence of prior knowledge about the information sources, and the expressive power of the uncertainty model employed [65, 24, 61]. Given that the quality of input information and prior knowledge is intricately tied to the data collection stage, a lot of work has been devoted to modeling uncertainties in a faithful way [39]. As a critical factor in the information fusion process [1, 38], accurate uncertainty quantification must be regarded as a primary objective to achieve precise multimodal medical image segmentation.

Early methods for quantifying uncertainty essentially relied on probabilistic models, often integrated with Bayesian inference or sampling techniques to estimate uncertainty across various parameters or variables [34, 56]. The advent of deep neural networks has sparked renewed interest in uncertainty estimation [1], leading to the development of methods such as Monte-Carlo dropout [29] and deep ensembles [45]. However, it is important to note that these probabilistic models rely on assumptions about the underlying data distribution, and improper distributions can result in inaccurate uncertainty estimations. Furthermore, uncertainty quantification via inference or sampling algorithms heavily relies on computational approximations and may lack rigorous theoretical justification [6, 7]. These and other limitations motivate the search for alternative approaches for uncertainty quantification for information fusion and decision-making applications.

Instead of making strong assumptions on actual data distribution, non-probabilistic methods use alternative mathematical frameworks or representations such as possibility theory [87, 21] and Dempster-Shafer theory (DST) [15, 69, 20] to quantify uncertainty. In particular, the latter formalism is an evidence-based information modeling, reasoning, and fusion framework that can be used with both supervised [16, 80, 79] and unsupervised learning [48, 19], providing an effective way to handle imperfect (i.e., imprecise, uncertain, and conflicting) data. Compared to possibility theory, DST allows the quantification of both aleatory and epistemic uncertainty while providing a powerful mechanism for combining multiple unreliable pieces of information [61].

In multimodal medical image segmentation, effectively combining uncertain information from diverse sources presents a significant challenge. Some learning-based approaches propose addressing conflicting decisions by introducing learnable weights [50, 4, 71]. The term “weight” in those approaches usually refers to the importance of information. In contrast, reliability pertains to the trustworthiness of the information and needs to be carefully analyzed in different medical situations. Four major approaches have been used to provide reliability coefficients: 1) modeling the reliability of sources using a degree of consensus [14]; 2) modeling expert opinions using probability distributions [12]; 3) using external domain knowledge or contextual information to model reliability coefficients [26]; 4) learning the reliability coefficients from training data [25, 62], which is a very general approach that does not require any prior domain knowledge or expert opinions. In this work, we consider an even more flexible approach in which the reliability of each image modality is described by several coefficients, one for each ground truth value. The reliability coefficient for source and class is then defined as one’s belief that the information from source is reliable, if the true class is .

In this paper, we introduce a new approach to multimodal medical image segmentation combining DST with deep neural networks111This paper is an extended version of the short paper presented at the 25th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2022) [36]. This extended version includes a much more detailed description and explanation of the fusion framework, an improved optimization strategy with a two-part loss function, as well as extended results with a second dataset for lymphoma segmentation and an additional transformer-based feature-extraction module.. The proposed fusion scheme comprises multiple encoder-decoder-based feature extraction modules, DST-based evidence-mapping modules, and a multimodality evidence fusion module. The evidence-mapping modules transform the extracted features into mass functions representing the evidence from each imaging modality about the class of each voxel. These mass functions are then corrected by a contextual discounting operation, and the discounted pieces of evidence are combined by Dempster’s rule of combination. The whole framework is trained end-to-end by minimizing a loss function quantifying the errors before and after the fusion of information from each modality. Our main contributions are, thus, the following:

-

1.

We propose a new hybrid fusion architecture for multimodal medical images composed of feature extraction, evidence-mapping, and combination modules.

-

2.

Within this architecture, we integrate mechanisms for (i) quantifying segmentation uncertainty using Dempster-Shafer mass functions, (ii) correcting these mass functions to account for the relative reliability of each imaging modality using context discounting, and (iii) combining corrected mass functions from different sources to reach final segmentation decisions.

-

3.

We introduce an improved two-part loss function making it possible to optimize the segmentation performance of each individual source modality together with the overall performance of the combined decisions.

-

4.

Through extensive experiments with two real medical image datasets, we show that the proposed decision-level fusion scheme improves segmentation reliability and quality as compared to alternative pixel-level methods for exploiting different image modalities.

-

5.

We show that the learned reliability coefficients provide some insight into the contribution of each imaging modality in the segmentation process.

The rest of this paper is organized as follows. Background information and related work are first recalled in Section 2. Our approach is then introduced in Section 3, and experimental results are reported in Section 4. Finally, Section 5 concludes the paper and presents some directions for further research.

2 Related work

The basic concepts of DST and its application to classification are first recalled in Section 2.1. The contextual discounting operation, which plays a central role in our approach, is described separately in Section 2.2. The evidential neural network model used in this paper is then introduced in Section 2.3, and related work on multimodal medical image fusion is briefly reviewed in Section 2.4.

2.1 Dempster-Shafer theory

Let be the finite set of possible answers to some question, called the frame of discernment. Evidence about a variable taking values in can be represented by a mass function , such that

Each subset such that is called a focal set of . The mass represents a share of a unit mass of belief allocated to focal set , which cannot be allocated to any strict subset of . The mass can be interpreted as a degree of ignorance. Full ignorance is represented by the vacuous mass function verifying . If all focal sets are singletons, then is said to be Bayesian; it is equivalent to a probability distribution.

Belief and plausibility functions

The information provided by a mass function can also be represented by a belief function or a plausibility function from to defined, respectively, as:

and

for all , where denotes the complement of . The quantity can be interpreted as a degree of support for , while is a measure of lack of support against . The contour function associated to is the function that maps each element of to its plausibility, i.e.,

As shown below, this function can be easily computed when combining several pieces of evidence; it plays an important role in decision-making.

Dempster’s rule

In DST, the beliefs about a certain question are established by aggregating independent pieces of evidence represented by belief functions over the same frame of discernment [69]. Given two mass functions and derived from two independent items of evidence, the mass function representing the pooled evidence is defined as

| (1a) | |||

| for all , and . The coefficient is the degree of conflict between and , | |||

| (1b) | |||

This operation is called Dempster’s rule of combination. It is commutative and associative. The combined mass function is called the orthogonal sum of and . Mass functions and can be combined if and only if . Let , and denote the contour functions associated with, respectively, , and . The following equation holds:

| (2) |

The complexity of calculating the combined contour function using (2) is linear in the cardinality of , whereas computing the combined mass function using (1) has, in the worst-case, exponential complexity.

Conditioning

Given a mass function and a nonempty subset of such that , the conditional mass function is defined as the orthogonal sum of and the mass function such that . Conversely, given a conditional mass function given (expressing one’s beliefs in a context where it is only known that the truth lies in ), its conditional embedding [73] is the least precise mass function on such that ; it is obtained by transferring each mass to , for all . Conditional embedding is a form of “deconditioning”, i.e., it performs the inverse of conditioning.

Plausibility-probability transformation

Once a mass function representing the combined evidence has been computed, it is often used to make a decision. Decision-making methods in DST are reviewed in [18]. Here, we will use the simplest method [11], which consists in computing a probability distribution on by normalizing the plausibilities of the singletons,

| (3) |

Once probabilities have been computed, a decision can be made by maximizing the expected utility. We note that this method fits well with Dempster’s rule, as the plausibility of the singletons can be easily computed from (2) without computing the whole combined mass function.

2.2 Modeling the reliability of evidence

In the DST framework, the reliability of a source of information can be taken into account using the discounting operation, which transforms a mass function into a weaker, less informative one and thus allows us to combine information from unreliable sources [69]. Let be a mass function on and a real number in interpreted as the degree of belief that the source mass function is reliable. The discounting operation [69] with discount rate transforms mass function into a less informative one defined as a weighted sum of and the vacuous mass function , with coefficients and :

| (4) |

In the rest of this paper, we will refer to as a reliability coefficient. When , we accept the mass function provided by the source and take it as a description of our knowledge; when , we reject it and are left with the vacuous mass function .

The discounting operation plays an important role in many applications of DST, where it makes it possible to take into account “meta-knowledge” about the reliability of a source of information. It can be justified as follows [74]. Assume that is provided by a source that may be reliable () or not (). If the source is reliable, we adopt its opinion as ours, i.e., we set . If it is not reliable, then it leaves us in a state of total ignorance, i.e., . Furthermore, assume that we have the following mass function on : and , i.e., our degree of belief that the source is reliable is equal to . Then, combining the conditional embedding of with yields precisely in (4), after marginalizing on .

Contextual discounting

In [58], the authors generalize the discounting operation using the notion of contextual discounting, which makes it possible to account for richer metaknowledge about the reliability of a source in different contexts, i.e., conditionally on different hypotheses regarding the variable of interest. In the corresponding refined model, and are defined as before, but our beliefs about the reliability of the source are now defined by coefficients , one for each state in . More specifically, we have conditional mass functions defined by and , for . In this model, is, thus, the degree of belief that the source of information is reliable, given that the true state is . As shown in [58], combining the conditional embeddings of and for by Dempster’s rule yields the following discounted mass function,

| (5) |

for all , where is the vector of all reliability coefficients, and a product of terms is equal to 1 if the index set is empty. In many applications, we actually do not need to compute the whole mass function (5): we can compute only the associated contour function , which is all we need for decision-making. As shown in [58], this contour function is equal to

| (6) |

It can be computed in linear time with respect to the size of , instead of exponential time for . An evidential nearest neighbor rule based on the contextual discounting operation was introduced in [22].

Example 1

Consider a simplified diagnostic problem in which a patient may have one of two diseases denoted by and . Assume that is a heart disease while is a lung disease. A cardiologist examines the patient and describes his opinion by the following mass function on : , , , i.e., his degrees of belief in and are, respectively, 0.7 and 0.2. Furthermore, suppose that the cardiologist is fully reliable to diagnose heart diseases (), i.e., if the true state of the patient is , the physician’s opinion can be fully trusted, whereas he is only 60% reliable to diagnose lung diseases (), i.e., if is the true disease, there only is only 60% chance that the physician’s diagnostic is relevant. Applying formula (5) to gives the following discounted mass function:

The contour function of the original mass function is

After contextual discounting, we get

We can check that and , which is consistent with (6).

2.3 Evidential neural network

In [16], Denœux proposed a DST-based evidential neural network (ENN) classifier in which mass functions are computed based on distances between the input vector and prototypes. As shown in Figure 1, the ENN model comprises a prototype activation layer, a mass calculation layer, and a combination layer.

The prototype activation layer comprises units, whose weight vectors are prototypes in input space. The activation of unit in the prototype layer is

| (7) |

where and are two parameters. Each quantity can be interpreted as a degree of similarity between input vector and prototype .

The second hidden layer computes mass functions representing the evidence of each prototype , using the following equations:

| (8a) | ||||

| (8b) | ||||

where is the membership degree of prototype to class , and . The mass function can thus be seen as a discounted Bayesian mass function, with a discount rate ; its focal sets are singletons and . The mass assigned to increases with the distance between and . Finally, the third layer combines the mass functions using Dempster’s rule (1). The output mass function is a discounted Bayesian mass function that summarizes the evidence of the prototypes.

The idea of applying the above model to features extracted by a convolutional neural network (CNN) was first proposed by Tong et al. in [79]. In this approach, the ENN module becomes an “evidential layer”, which is plugged into the output of a CNN instead of the usual softmax layer. The feature extraction and evidential modules are trained simultaneously. Huang et al. applied the ENN model to medical image segmentation within a deep evidential segmentation network [37].

Remark 1

The approach described in this section should not be confused with the “evidential deep learning” approach introduced in [68] and applied to brain tumor segmentation in [93]. The latter approach is based on learning the parameters of a Dirichlet distribution that represents second-order uncertainty on the class probabilities. Although the parameters of the Dirichlet distribution can be formally identified to a mass function whose focal sets are the singletons and the whole frame , this is actually a Bayesian approach that learns a probability distribution over the class probabilities through a suitable loss function.

2.4 Multimodal medical image fusion

Multimodal medical image fusion can be performed at the pixel, feature or decision level. Pixel-level fusion is the traditional approach; it can be conducted directly in the spatial domain or indirectly through the application of transformations and representations. The fusion of high-level features is typically performed by a neural network learning a shared representation or a joint embedding space derived from multimodal features. Decision fusion consists in pooling decisions made independently from different image modalities; it can be performed with traditional or deep-learning approaches. In the following, we review previous work on multimodal medical image fusion, emphasizing the distinction between traditional and deep-learning approaches.

2.4.1 Traditional approaches

Traditional fusion methods aim at combining relevant information (either pixels themselves or low-level image features) from multiple images to produce a single fused image with enhanced features for further analysis. Four main approaches have been proposed: multi-scale transformation, sparse representation extraction, edge-preserving filters, and meta-heuristic optimization. The first three approaches focus on effective image representation, while the last one aims at combining the represented features efficiently.

The multi-scale transform approach decomposes images into different scales or frequency components using techniques such as wavelet transform [72], contourlet transforms [86], pyramid transforms [23] or curvelet transform [3], allowing relevant features from each source image to be combined. Sparse representation extraction assumes that multimodal images can be represented as a sparse linear combination of basis functions; search techniques such as dictionary learning [43] or sparse coding with dictionary learning [81] are used to obtain the sparse image representation and to merge images focusing on the most important features. Edge-preserving filters ensure the preservation of edges while smoothing images to ensure the fusion of critical features without blurring [75]. Commonly used filters include bilateral filters [47], guided filters [59], anisotropic diffusion [82], and total variation minimization [91]. The three above approaches can be used independently or in combination, which often yields better results. For example, in [35], Hu et al. propose a multimodal medical image fusion method based on separable dictionary learning and Gabor filtering; in [83], Wang et al. describe a multimodal medical image fusion method using Laplacian pyramid and adaptive sparse representations; in [51], Liu et al. introduce a general image fusion framework based on multi-scale transform and sparse representation.

In addition to studying effective image representations, a complementary research direction has been to design meta-heuristic optimization algorithms allowing one to find the best fusion parameters for combining features obtained by different transform, sparse or fitting algorithms. Many approaches use meta-heuristic optimization techniques such as genetic algorithms [3], particle swarm optimisation [77] or ant colony optimisation [70].

2.4.2 Deep learning approaches

Recent advances in deep learning have allowed breakthroughs in medical image fusion by making it to learn a joint embedding or a shared representation space from multiple features. Recent techniques include adversarial learning [67], co-training [89], multi-kernel learning [57], multi-task learning [52], etc. These methods exploit the ability of neural networks to extract meaningful representations and perform fusion in high-level feature spaces with learnable feature fusion rules. These approaches enable more sophisticated and robust image fusion, capable of handling complex relationships and producing high-quality fused image features. Here, we summarize three important models commonly used for multi-model medical image fusion.

Convolutional Neural Networks

Convolutional neural networks (CNNs) are widely used in image processing due to their strong feature representation capability. Within CNNs, various fusion operations can be used to effectively integrate information from different imaging modalities. Such operations include but are not limited to, concatenation, element-wise addition and multiplication, weighted sum, max pooling, etc. Fusion can occur at different stages of the network, i.e., early, middle, or late stages.

Early fusion stacks different modalities along a channel dimension and feeds into a single CNN [49]. This is the simplest operation but it requires high image registration quality. In the case of middle fusion, separate CNN branches are employed to extract features from each modality, which are subsequently concatenated at the feature level or fused in a particular common representation space. More recently, transformer-based CNN architectures, such as the Vision Transformer (ViT) [33], have also demonstrated considerable versatility in handling diverse types of data with the introduction of an attention mechanism [46]. CNNs can also be integrated with some traditional fusion ideas to obtain more robust fusion results using, e.g., the multiscale transformer [76] or multiscale residual pyramid attention network [28].

In contrast to the emphasis on image pixels or features in earlier fusion techniques, later fusion places greater importance on the aggregation of high-level decisions. It integrates information derived from preliminary classifications with the application of appropriate fusion rules. Approaches can be classified into two main categories: 1) hard fusion methods, which merge logical information membership values, such as model ensembling with majority or average voting [42]; and 2) soft fusion methods, where classifiers assign numerical values to reflect their confidence in decisions, as exemplified by fuzzy voting [32, 27].

Encoder-Decoder Networks

Encoder-decoder networks are another type of convolutional neural network commonly used for image segmentation and reconstruction. Within the encoder-decoder network, multiple encoders are used to extract deep features from each modality. These features are subsequently integrated either through a straightforward concatenation process or through a latent layer or learnt joint embedding space. The fused features are then passed to the decoder to produce the final image. Compared with CNNs, the Encoder-decoder architecture offers a more structured and effective fusion framework with enhanced feature representation, precise spatial alignment, and flexible and effective fusion strategies. Multimodal Transformer (MMT) [85] is one of the most sophisticated forms of multimodal encoder-decoder networks that employ self-attention mechanisms to integrate and process multimodal data in an effective manner; nnFormer [92] has been identified as the most advanced model for multimodal MRI brain tumor segmentation.

Generative Adversarial Networks

Generative Adversarial Networks (GANs), composed of a generator and a discriminator, are capable of learning complex relationships between disparate modalities through the generation of highly realistic images via unsupervised adversarial training [30]. In the context of multimodal medical image fusion, the generator learns to generate a fused image that combines the semantic features of the inputs from different modalities. The discriminator guides the generator to produce high-quality fused images by distinguishing between the fused and the real images. GAN-based fusion methods are particularly useful for advanced medical image fusion tasks where the quality and realism of the fused image are of paramount importance, such as the combination of structural and functional imaging modalities. For example, in [88], the authors propose a conditional generative adversarial network with a transformer for multimodal image fusion by introducing a wavelet fusion module to maintain long-distance dependencies across domains; in [67], the authors introduce an unsupervised medical fusion generative adversarial network to generate an image with CT bone structure and MRI soft tissue contrast by fusing CT and MRI image sequences.

Although a lot of research has been devoted to the study of multimodal medical image segmentation and promising experimental results have been obtained, modeling the reliability of each modality in a given context and quantifying the uncertainty on the outcome of the fusion process remain challenging research questions. In this paper, we address these questions using a deep evidential fusion framework combining deep learning with DST, and taking into account the reliability of each of the modalities being combined. The proposed decision-fusion framework is described in detail in the following section.

3 Proposed framework

The main idea of this paper is to hybridize a deep evidential fusion framework with uncertainty quantification and reliability learning for multimodal medical image segmentation under the framework of DST. The architecture of the system is described in Section 3.1, and the loss function used to train the whole framework end-to-end is presented in Section 3.2.

3.1 Architecture

The proposed framework is depicted in Figure 2. Features are first extracted from different modalities using independent encoder-decoder feature-extraction (FE) modules. The features from each modality are then transformed into mass functions using evidence mapping (EM) modules. Finally, mass functions are discounted and combined in a multi-modality evidence fusion (MMEF) module. These modules are described in greater detail below.

3.1.1 Feature-extraction (FE) module

Deep neural network architectures have been shown to be very powerful for extracting relevant information from high-dimensional data. Our approach is compatible with any deep FE architecture. The baseline model considered in this paper is UNet [41], a foundational medical image segmentation model. As illustrated in Figure 3, a UNet-based feature extraction module incorporates residual connections within each layer, following the same architecture as in [37]. Each layer of the module comprises encoding and decoding paths, connected by skip connections. In the encoding path (represented by blue blocks), the data undergoes downsampling through stride convolutions, while the decoding path (represented by green blocks) employs stride transpose convolutions for upsampling. The bottom layer, represented by the gray block, serves as the base connection without performing any down or up-sampling of the data. In Section 4.3, in addition to UNet, we will also consider the more recent nnUNet [40] and nnFormer [92] models as alternative FE modules. The settings of these modules will be described in Section 4.1.

3.1.2 Evidence mapping (EM) module

The EM module is based on the ENN architecture recalled in Section 2.3. It is identical to that described in [37]. As illustrated in Figure 2, we have one such module for each modality. The input to each module is a tensor containing the features extracted for each voxel. The prototypes are, thus, vectors in the -dimensional space of features extracted from modality images by the FE module. As explained in Section 2.3, a prototype layer first computes the similarities between feature vectors and prototypes using (7). The next layer computes mass functions for each prototype using (8) (see Figure 1). Finally, the prototype-based mass functions are combined by Dempster’s rule (1) in a third layer. Denoting by the set of classes, the EM module thus computes, for each voxel and modality , a mass function222Throughout this paper, we use an upper index to denote modalities, and lower indices and to denote, respectively, voxels and classes. with focal sets , and . The mass is a measure of the segmentation uncertainty for classifying voxel in the image of modality .

3.1.3 Multi-modality evidence fusion (MMEF) module

This module first transforms the contour functions from the EM modules using the contextual discounting operation recalled in Section 2.2. The contour function for voxel and modality is obtained from mass function as

Using (6), the discounted contour function is given by

| (9) |

where is the vector of discounting (reliability) coefficients for modality . We recall that represents our degree of belief that the modality is reliable when it is known that the actual class of voxel is . From (2), the combined contour function at voxel can then be computed up to a multiplicative constant by multiplying the contour functions for the modalities as

where is the vector of reliability coefficients for the the classes and modalities. Finally, the predicted probability distribution for voxel after combining evidence from the modalities is obtained from (3) as

| (10) |

The learnable parameters in this module are the reliability coefficients in vector .

3.2 Loss function

The whole framework is optimized by minimizing the following loss function,

where

-

1.

The term is the Dice loss quantifying the segmentation performance of each source modality independently, with

(11) where is the number of voxels, and if voxel belongs to class , and otherwise;

-

2.

The term quantifies the segmentation performance after combination:

(12) where is the predicted probability distribution for voxel given by (10).

The learnable parameters are the weights of the FE module, the prototypes and associated parameters , and of the EM module, and the reliability coefficients in the MMEF module. Learning the reliability coefficients is an original feature of our approach. As shown in Sections 4.2 and 4.3, these coefficients can allow us to gain some insight into the multi-modality segmentation process.

4 Experiments and results

In this section, the proposed framework described in Section 3 is applied to two real multimodal medical image datasets. The experimental settings are first described in Section 4.1. The results on the two datasets are then reported in Sections 4.2 and 4.3.

4.1 Experimental settings

Datasets

The proposed framework was tested on two multimodal medical image datasets.

The PET-CT lymphoma dataset contains 3D images from 173 patients who were diagnosed with large B-cell lymphomas and underwent PET-CT examination333The study was approved as a retrospective study by the Henri Becquerel Center Institutional Review Board.. For lymphoma segmentation, PET imaging helps identify active tumor sites by highlighting areas of increased metabolic activity. In contrast, CT imaging provides anatomical information about the size, shape, location, and surrounding structures of lymphoma tumors. While PET image makes it possible to obtain functional information about the tumor and surrounding tissues, CT images provide complementary anatomical details allowing for more accurate segmentation. The lymphomas in mask images were delineated manually by experts and considered as ground truth. Figure 4 shows an example of PET and CT images of a patient with lymphomas. The PET and CT images and the corresponding mask images have different sizes and spatial resolutions due to the use of different imaging machines and operations. For CT images, the size varies from to . For PET images, the size varies from to .

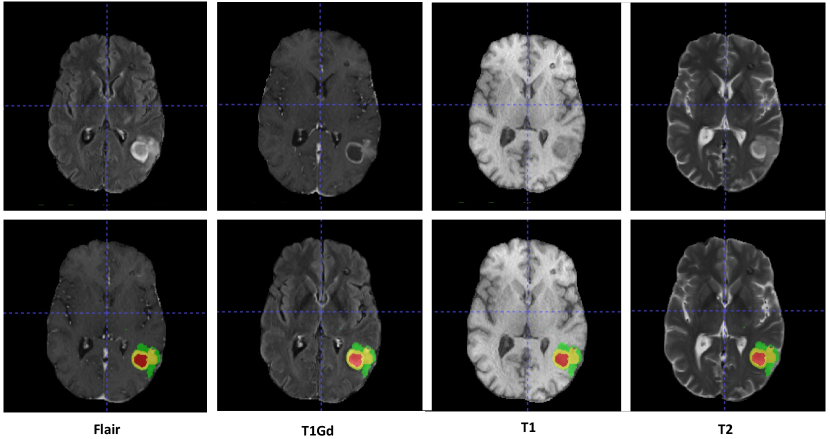

The multi-MRI brain tumor dataset was made available for the BraTS2021 challenge [5]. The original BraTS2021 dataset comprises training, validation, and test sets with, respectively, 1251, 219, and 570 cases. There are four modalities: FLAIR, T1Gd, T1, and T2 with voxels. Figure 5 shows examples of four-modality MRI slices for one patient. The appearance of brain tumors varies in different modalities [5]. T1Gd MRI images are obtained following the administration of a gadolinium-based contrast agent that enhances areas with disrupted blood-brain barrier such as tumor regions, making tumors appear hyperintense (bright) and improving the visibility of tumor margins. FLAIR MRI images suppress the signal from cerebrospinal fluid (CSF), highlighting pathological changes while suppressing the CSF signal. T2 MRI images are sensitive to tissue water content and provide good contrast between soft tissues. Tumors with increased water content often appear hyperintense (bright) on T2 images. T1 MRI images are crucial for identifying tumor location and structural details by their excellent anatomical detail. Annotations of scans comprise gadolinium (GD)-enhancing tumor (ET), necrotic and non-enhancing tumor core (NRC/NET), and peritumoral edema (ED). The task of the BraTS2021 challenge was to segment the images into three overlapping regions: ET, tumor core (TC, the union of ET and NRC/NET), and whole tumor (WT, the union of ET, NRC/NET, and ED). In this work, we evaluated the segmentation performances with respect to these three overlapping regions to allow a fair comparison with other state-of-the-art methods. Additionally, we also compared the results with respect to the three original non-overlapping tumor regions to highlight the impact of contextual discounting on subregion segmentation.

Pre-processing

For the PET-CT dataset, we first normalized the PET, CT and mask images: (1) for PET images, we applied a random intensity shift and scale to each channel with a shift value of 0 and scale value of 0.1; (2) for CT images, the shift and scale values were set to 1000 and 1/2000; (3) for mask images, the intensity value was normalized into the interval by replacing the outside value by . We then resized the PET and CT images to by linear interpolation and mask images to by nearest neighbor interpolation. Lastly, CT and PET images were registered using B-spline interpolation. Following [37], we randomly divided the 173 scans into subsets of size 138, 17, and 18 for, respectively, training, validation, and test. The training process was then repeated five times to test the stability of our framework, with different data used exactly once as the validation and test data.

For the BraTS2021 dataset, we used the same pre-processing operation as in [60]. We first performed a min-max scaling operation and clipped intensity values to standardize all volumes; we then cropped/padded the volumes to a fixed size of by removing the unnecessary background (the cropping/padding operation was only applied to training data). No data augmentation technique was applied, and no additional data was used in this study. Since the ground truth labels are unavailable for the validation and test sets, we trained and tested our framework with the training set. Following [60], we randomly divided the 1251 training scans into subsets of 834, 208, and 209 cases for training, validation, and testing, respectively. The process was repeated five times to test the stability of our framework. All the preprocessing methods mentioned in this paper can be found in the SimpleITK [53] toolkit.

All the compared methods used the same dataset composition and pre-processing operations. They were implemented in Python with the PyTorch-based medical image framework MONAI444More details about how to use those models can be found in MONAI core tutorials https://monai.io/started.html##monaicore..

Parameter initialization and learning

At the FE stage, the number of filters in UNet was set to with kernel size equal to five and convolutional strides equal to for layers from left to right. For nnUNet used in Section 4.3, the kernel size was set to and the upsample kernel size was set to with strides . For nnFormer used in Section 4.3, the crop size was set to with embedding dimension set to and the number of heads was set to . The number of extracted features was for the PET-CT lymphoma dataset and for the multi-MRI BraTS2021 dataset.

To train our fusion framework, we proceeded in three steps. First, FE modules (i.e., UNet, nnUNet, or nnFormer) were pre-trained independently for each modality during 50 epochs. Then, the weights of the FE modules were fixed, and the parameters of the EM and MMEF modules were optimized. Finally, the whole framework was fine-tuned for a few epochs. The initial values of parameters and in the EM modules were set to 0.5 and 0.01, and the membership degrees were initialized randomly by drawing uniform random numbers, and normalizing. We used, prototypes for the PET-CT lymphoma dataset, and prototypes for the more complex multi-MRI BraTS2021 dataset. These prototypes were randomly initialized from a normal distribution with zero mean and an identity covariance matrix. Details about the initialization of the EM module can be found in [37]. The reliability coefficients in the MMEF module were initialized at 0.5.

For both datasets, we used the Adam optimization algorithm with an early stopping strategy: training was stopped when there was no improvement in performance on the validation set during ten epochs. The initial learning rate was set to . The batch size was set to 4. For all the compared methods, the model with the best performance on the validation set was saved as the final model for testing555The code is available at https://github.com/iWeisskohl/Deep-evidential-fusion..

Evaluation criteria

Although many authors have shown that segmentation performance can be improved by merging multimodal medical images into deep neural networks [63, 2], the reliability of information sources and the quality of uncertainty quantification have rarely been investigated. Here, the former issue will be addressed by analyzing the reliability coefficients defined in Section 3.1.3. To assess the quality of uncertainty quantification, we will use three metrics: the Brier score [9], the negative log-likelihood (NLL), and Expected Calibration Error (ECE) [31]. These metrics provide a robust evaluation framework for the uncertainty of the segmentation results, with smaller values indicating better performance. Their definitions are recalled below.

The Brier Score and NLL are defined, respectively, as

and

where is the ground truth of voxel , is the predicted probability of voxel , and is the number of voxels.

The ECE measures the correspondence between predicted probabilities and ground truth. The output normalized plausibilities of the model are first discretized into equally spaced bins , ( in this paper). The accuracy of bin is defined as

where is the predicted class label for voxel and is the indicator function. The average confidence of bin is defined as

The ECE is the weighted average of the difference in accuracy and confidence of the bins:

A model is perfectly calibrated when for all , in which case .

Since our dataset has imbalanced foreground and background proportions, we only considered voxels belonging to the foreground or tumor region to calculate the above three indices. For the PET-CT lymphoma dataset, focusing only on the tumor region is not easy since the lymphomas are scattered throughout the whole body. Thus, we focused on the foreground region for this dataset. For the BraTS2021 dataset, we followed the suggestion from [66] to focus on the tumor region for the reliability evaluation. For each patient in the test set, we defined a bounding box covering the foreground or tumor region and calculated the corresponding values in this bounding box. For all segmentation performance criteria, the reported results were obtained by calculating the criteria for each test 3D scan and then averaging over the patients.

In addition to evaluating segmentation reliability, we also measured segmentation accuracy using the Dice score. In a segmentation task, the Dice score measures the volume of the overlapping region of the predicted object and the ground truth object as

where , , and denote, respectively, the numbers of true positive, false positive, and false negative voxels.

4.2 Segmentation results on the PET-CT lymphoma dataset

Segmentation uncertainty

The results concerning uncertainty estimation are reported in Table 1. Our model (MMEF-UNet) was compared to

-

1.

UNet with a softmax decision layer (the baseline);

- 2.

-

3.

ENN-UNet, composed of UNnet as the FE module and the EM module in place of the softmax layer; this is the architecture studied in [37];

-

4.

RBF-UNet, an alternative model composed of UNnet and a radial-basis function (RBF) module in place of the softmax layer; as shown in [37], this model makes it possible to compute output belief functions that are similar to those computed by ENN-UNet.

We can remark that approaches 1 to 4 above implement pixel-level fusion, whereas our approach is based on decision-level fusion. As for uncertainty quantification, UNet, UNet-MC and UNet-Ensemble are probabilistic methods. UNet only computes point estimates of class probabilities without taking into account second-order uncertainty. UNet-MC applies dropout during both training and inference, sampling multiple forward passes to estimate uncertainty by averaging the predictions. UNet-Ensemble quantifies uncertainty by averaging the predictions obtained from multiple independently-trained models. In contrast, ENN-UNet and RBF-UNet are evidential methods: they both calculate belief functions to represent segmentation evidence and uncertainty under the DST framework. For UNet-MC, the dropout rate was set to 0.2 and the number of samples was set to five; we averaged the five output probabilities at each voxel as the final output of the model. For UNet-ensembles, the number of samples was set to five; the five output probabilities were then averaged at each voxel as the final output of the model. The settings of ENN-UNet and RBF-ENN are the same as those reported in [37].

From Table 1, we can see that Monte-Carlo dropout and deep ensembles do not significantly improve the segmentation reliability as compared to the baseline UNet model, as shown, e.g., by the higher NLL values. In contrast, the addition of the EM module to the FE module, as implemented in ENN-UNet, brings a significant improvement, particularly according to NLL; the RBF-UNet model yields similar results. The decision-fusion framework MMEF-UNet brings an additional improvement according to all three criteria (ECE, Brier score, NLL) and outperforms the other models: specifically, we observe decreases of 1.1%, 0.9%, and 13% in ECE, Brier score, and NLL, respectively, as compared to UNet. We can conclude that, compared to the baseline model, both the EM and MMEF modules contribute to a higher segmentation reliability.

| Model | ECE | Brier score | NLL | Dice score |

| UNet | 0.0563.6 | 0.0653.9 | 0.3108.8 | 0.7703.2 |

| UNet-MC | 0.0534.6 | 0.0624.9 | 0.4008.7 | 0.8011.1 |

| UNet-Ensemble | 0.0637.6 | 0.0644.0 | 0.3437.2 | 0.8026.7 |

| ENN-UNet | 0.0503.5 | 0.0623.9 | 0.1911.4 | 0.8057.1 |

| RBF-UNet | 0.0513.3 | 0.0610.9 | 0.1931.3 | 0.8026.9 |

| MMEF-UNet (ours) | 0.0451.3 | 0.0562.7 | 0.1801.3 | 0.811 |

These findings are, to some extent, confirmed by Figure 6, which shows the calibration plots (also known as reliability diagrams) for the compared methods on the lymphoma dataset. Calibration plots are graphical representations showing how well the probabilistic predictions of a segmentation model are calibrated, i.e., how well confidence matches accuracy. In the left graph of Figure 6, we can see that the curve corresponding to UNet-Ensemble is closer to the diagonal than those of UNet and UNet-MC, which indicates better calibration. Looking at the right graph in Figure 6, we can see that the three DST-based models, ENN-UNet, RBF-UNet, and MMEF-UNet, have better calibration performance than the probabilistic ones, as shown by their calibration curves closer to the diagonal. Among them, MMEF-UNet shows the best calibration performance as ENN-UNet is slightly overconfident, while RBF-UNet is slightly underconfident.

Segmentation accuracy

The segmentation accuracy was measured by the Dice score, as shown in Table 1. Compared with the baseline model UNet, our proposal MMEF-UNet significantly increases segmentation performance, as shown by a 4.1% increase in the Dice score. Compared with the two DST-based deep evidential segmentation methods, MMEF-UNet has a higher Dice score (although the difference with ENN-UNet is not statistically significant). Figure 7 shows an example of visualized segmentation results obtained by UNet, ENN-UNet, RBF-UNet, and MMEF-UNet. We can see that UNet and RBF-UNet are more conservative (they correctly detect only a subset of the tumor voxels), while ENN-UNet is more radical (some of the voxels that do not belong to tumors are predicted as tumors). In contrast, the tumor regions predicted by MMEF-UNet better overlap the ground-truth tumor region, especially for the isolated lymphomas, which is also reflected by the promising Dice score value. These conclusions are consistent with the calibration trends displayed in Figure 6.

Analysis of reliability coefficients

| background | lymphomas | |

|---|---|---|

| PET | 0.9998.9 | 0.9964.5 |

| CT | 0.8631.8 | 0.9758.9 |

Table 2 reports the learned reliability coefficients. We can see that they are higher for the PET modality. This is consistent with domain knowledge, as mentioned in Section 4.1: PET images provide functional information about tumor activity and make it possible to identify active tumor sites, whereas CT images essentially provide detailed anatomical information (e.g., size, shape, and location) about lymph nodes and surrounding tissues and are used as a complement to PET images. This is also confirmed by the results presented in Table 3, showing that the performance of UNet and ENN-UNet with either CT alone or PET alone, the latter configuration yielding better results.

| Model | ECE | Brier score | NLL | Dice score |

|---|---|---|---|---|

| UNet (CT) | 0.1334.9 | 0.1579.8 | 0.5713.6 | 0.5442.8 |

| UNet (PET) | 0.0604.0 | 0.0684.0 | 0.3488.2 | 0.7642.9 |

| ENN-UNet (CT) | 0.1318.5 | 0.1561.0 | 0.5213.2 | 0.5432.7 |

| ENN-UNet (PET) | 0.0504.9 | 0.0645.4 | 0.1952.2 | 0.7813.5 |

4.3 Segmentation results on the multi-MRI BraTS2021 dataset

Segmentation uncertainty

For the BraTS2021 dataset, we tested the segmentation performance of our fusion framework with UNet as well as two alternative FE modules: nnUNet and nnFormer. The nnUNet model was reported to have the best performance in the BraTS2021 challenge [54] and nnFormer is now one of the state-of-the-art brain tumor segmentation models. The complete frameworks with nnUNet and nnFormer as a feature extractor are referred to, respectively, as MMEF-nnUNet and MMEF-nnFormer. We compared our results with three baseline models: UNet, nnUNet, and nnFormer, and three Monte Carlo-based uncertainty segmentation models: UNet-MC, nnUNet-MC, and nnFormer-MC. Since the results obtained in Section 4.2, as well as those reported in [37] have shown that ENN-UNet and RBF-UNet yield similar results, here we only compared the performance of the ENN-based models, i.e., ENN-UNet, ENN-nnUNet and ENN-nnFormer. Moreover, we did not test the performance of deep ensemble models because applying them to larger-scale datasets exceeds our computation resources.

| Model | ECE | Brier score | NLL |

|---|---|---|---|

| UNet | 0.0711.8 | 0.1411.8 | 2.4752.2 |

| UNet-MC | 0.0671.3 | 0.1354.5 | 2.2647.3 |

| ENN-UNet | 0.0651.3 | 0.1304.5 | 2.2503.6 |

| MMEF-UNet (ours) | 0.0601.3 | 0.1152.2 | 2.1894.1 |

| Model | ECE | Brier score | NLL |

|---|---|---|---|

| nnUNet | 0.0532.2 | 0.1094.5 | 1.8237.3 |

| nnUNet-MC | 0.0511.8 | 0.1074.5 | 1.8105.8 |

| ENN-nnUNet | 0.0531.8 | 0.1094.9 | 1.8048.2 |

| MMEF-nnUNet (ours) | 0.0511.3 | 0.1022.7 | 1.7485.9 |

| Model | ECE | Brier score | NLL |

|---|---|---|---|

| nnFormer | 0.0551.6 | 0.1113.2 | 1.9175.5 |

| nnFormer-MC | 0.0531.8 | 0.1073.6 | 1.7566.1 |

| ENN-nnFormer | 0.0551.4 | 0.1103.6 | 1.9077.0 |

| MMEF-nnFormer (ours) | 0.0520.6 | 0.1031.2 | 1.7872.2 |

As with the lymphoma dataset, we used the ECE, Brier score, and NLL metrics to assess segmentation uncertainty. The results with UNet, nnUNet and nnFormer in the FE module are presented, respectively, in Tables 4, 5 and 6. We can see that our fusion model consistently outperforms the baseline models with all three FE models and across all uncertainty evaluation metrics, although the differences are more significant when UNet is used as a feature extractor. Indeed, the fusion mechanism can be expected to have a smaller impact when information sources are more informative. Overall, MMEF-nnUNet achieves the highest segmentation reliability with the lowest ECE, Brier score, and NLL values, and MMEF-nnFormer yields the second-best results.

Segmentation accuracy

Segmentation accuracy was evaluated by the Dice score for the three overlapping regions, ET, TC, and WT, as well as by the mean Dice score. The results with UNet, nnUNet and nnFormer as feature extractors are reported, respectively, in Tables 7, 8 and 9. Again, we can see that our fusion strategy improves segmentation accuracy for all three FE models. Overall, the highest segmentation accuracy was achieved by MMEF-nnFormer, with an increase of 1.5 % in the mean Dice score compared with the second-best method, ENN-nnFormer.

We also report the Dice score for the segmentation of the three original tumor regions: ED, ET, and NRC/NET in Table 10. As we can see, the baseline nnFormer shows good performance for segmenting ED and ET, while it does not perform as well for segmenting NRC/NET. Indeed, the lack of clear contrast, the similar signal intensities to normal brain tissue, the infiltrative growth patterns, and the need for multi-modal data make the segmentation of NRC/NET inherently more challenging compared to ED and ET. When the MMEF-nnFormer approach was applied, the Dice scores for the ED, ET, and NRC/NET improved by 0.6%, 1.6%, and 6.5%, respectively. The substantial improvement in NRC/NET segmentation is particularly encouraging, as it demonstrates the effectiveness of the proposed fusion method for delineating fuzzy tumor boundaries and solving challenging segmentation tasks.

| Model | ET | TC | WT | Mean |

|---|---|---|---|---|

| UNet | 0.8079.4 | 0.8258.5 | 0.8816.7 | 0.8377.2 |

| UNet-MC | 0.8121.3 | 0.8321.1 | 0.8866.3 | 0.8438.9 |

| ENN-UNet | 0.8101.3 | 0.8421.1 | 0.8965.4 | 0.8499.4 |

| MMEF-UNet (ours) | 0.8331.2 | 0.8547.2 | 0.9074.9 | 0.8645.8 |

| Model | ET | TC | WT | Mean |

|---|---|---|---|---|

| nnUNet | 0.7914.9 | 0.8505.8 | 0.9123.5 | 0.8514.4 |

| nnUNet-MC | 0.8024.4 | 0.8604.9 | 0.9164.4 | 0.8594.9 |

| ENN-nnUNet | 0.8079.8 | 0.8691.9 | 0.9155.4 | 0.8639.8 |

| MMEF-nnUNet (ours) | 0.8329.8 | 0.8732.6 | 0.9181.3 | 0.8754.4 |

| Model | ET | TC | WT | Mean |

| nnFormer | 0.8393.8 | 0.8782.9 | 0.9152.4 | 0.8771.7 |

| nnFormer-MC | 0.8373.7 | 0.8774.5 | 0.9142.9 | 0.8762.3 |

| ENN-nnFormer | 0.8369.8 | 0.8825.6 | 0.9145.2 | 0.8783.2 |

| MMEF-nnFormer (ours) | 0.8547.5 | 0.9115.4 | 0.9142.3 | 0.8934.8 |

| Model | ED | ET | NRC/NET | Mean |

|---|---|---|---|---|

| nnFormer | 0.8175.0 | 0.8393.8 | 0.7407.2 | 0.7992.5 |

| MMEF-nnFormer (ours) | 0.8233.3 | 0.8557.5 | 0.8057.3 | 0.8284.7 |

Figures 8 and 9 show two segmentation cases when using nnFormer as the feature extractor. Figure 8 shows an easy segmentation case where only one tumor type is present. Both the Flair and T1Gd images exhibit good segmentation performance with only a few mislabeled voxels. It is surprising to see that concatenating multimodal medical images as the input for nnFormer resulted in worse outcomes, with the most mislabeled voxels. This might be due to the hard fusion strategy of nnFormer, i.e., image concatenation, which cannot mitigate the impact of noisy information. Consequently, the fused results are sometimes not as good as those from single-modality inputs. The proposed MMEF-nnFormer approach achieves the best performance, with fewer mislabeled voxels compared to other methods. Figure 9 illustrates a challenging segmentation scenario involving a tumor with ED, ET, and NRC/NET components. We can remark that the FLAIR image alone provides sufficient information to accurately segment ED, which is consistent with domain knowledge. Overall, the MMEF-nnFormer model yields the best results in this case. This example illustrates the ability of our method to improve segmentation accuracy by appropriately weighting and combining information from different modalities.

Analysis of reliability coefficients

We first recall some clinical domain knowledge of MRI images in segmenting brain tumors:

-

1.

T1Gd images are particularly useful for delineating tumor boundaries by making tumor regions hyperintense (bright);

-

2.

FLAIR images help delineate tumor boundaries, assess tumor infiltration into surrounding brain tissue, and are particularly sensitive to peritumoral edema, which appears hyperintense (bright) on FLAIR sequences;

-

3.

T2 images help delineate tumor extent, identify peritumoral edema, and assess the relationship between the tumor and surrounding brain structures;

-

4.

Tumors typically appear hypointense (dark) on T1 images, while the contrast between the tumor and surrounding normal brain tissue may not always be sufficient for accurate segmentation.

Figure 10 shows the learned reliability coefficients estimated by MMEF-nnFormer, for the four modalities and the three tumor classes. It can be seen that the evidence from the T1Gd modality is reliable when the true class is ED, ET, or NRC/NET, with all the reliability values greater than 0.9. In contrast, the evidence from the FLAIR modality is more reliable for the ED class with a high-reliability coefficient of 0.879 against, respectively, 0.26 and 0.39 for ET and NRC/NET. The evidence from the T2 modality shows similar reliability in segmenting the three classes with a reliability coefficient of around 0.5. The evidence from the T1 modality is the least reliable one, compared with the other three MRI modalities. These results are consistent with domain knowledge about these modalities as reported in [5] and recalled at the beginning of this section, i.e., T1Gd images are useful for delineating tumor boundaries, FLAIR images are sensitive to ED, and T1 images are not sufficient for accurate tumor segmentation. This transparency and explainability of the decision-making process can be expected to enhance end-users’ trust and can be seen as significant advantages of the proposed multimodal evidence fusion approach, as opposed to the “black box” nature of conventional deep learning segmentation models.

4.4 Discussion

In the following, we provide some discussion about the generalizability, computational complexity, and limitations of our approach.

Generalizability

The main advantage of our framework is its ability to model and learn the reliability of each image modality, which can be crucial when dealing with diverse, potentially noisy, or low-quality data. While multimodal medical image segmentation tasks are the focus of this paper, the proposed deep evidential fusion framework can be applied to a broader range of challenging medical tasks involving heterogeneous data sources. For instance, in medical tasks such as diagnosing dementia or Alzheimer’s disease, various heterogeneous medical data are available [10]. These data can include lower-quality brain MRI images due to brain degeneration, textual data on disease history and progression, time-series data on blood-brain-barrier integrity, cerebrovascular information, and other relevant physiological measures. Traditional models struggle to effectively address this heterogeneous data within a single neural network [13], and recent work also proposed to address data heterogeneity with model ensembles and hard decision fusion [78]. Our deep evidential fusion framework could be well-suited to analyze such heterogeneous medical tasks. By learning the reliability coefficients for each of the modalities, our model can effectively combine the evidence from heterogeneous sources to reach a more informed and explainable diagnostic decision.

Beyond medical image processing, our approach could be applied to multimodal data fusion in other domains, such as reviewed in [44] and [8]. As examples of potential application domains where heterogeneous data need to be processed to make decisions, we can mention remote sensing and earth observations, in which light detection and ranging (LiDAR), synthetic aperture radar (SAR), and hyperspectral images need to be combined for, e.g., improved classification of objects. As noted in [44], SAR and LiDAR use different electromagnetic frequencies and thus interact differently with materials and surfaces. It would thus be beneficial to apply different discounting (reliability) coefficients to these sensor data depending on the nature of the objects of interest. This conjecture needs, of course, to be validated experimentally, which goes beyond the scope of this paper.

Computational complexity

Although the operations of DST have, in the worst case, exponential complexity, the mass functions computed in the EM module have only focal sets, where is the number of classes, and the contextual discounting operation computed in the MMEF is applied to the contour function, as explained in Section 3.1.3. Consequently, the number of operations performed in the EM and MMEF modules is only linear in the number of classes. More precisely, as shown in [16], each forward and backward propagation for one voxel and one modality in the EM module has complexity , where is the number of prototypes, is the number of features extracted by the FE module, and is the number of classes. In the MMEF module, the discounting of the mass functions for each voxel using (9) and their combination using (10) can be performed in operations, and the backward pass (gradient calculation) requires the same computational effort. Overall, the complexity of our model is, thus, similar to that performed in standard neural network architectures based on weighted sums. In terms of computing times, pre-training each of the FE modules with the nnFormer architecture took approximately one hour on our machine666All models were trained on an NVIDIA A100-SXM4 graphics card with 40 GB GPU memory. for the BraTS2021 dataset, and training the whole system end-to-end took 2.3 hours. The total training time (6.4 hours) is slightly less than that of nnFormer with the four modalities (7.8 hours). As far as state-of-the-art uncertainty quantification techniques are concerned, Monte Carlo dropout does not significantly impact training time, while the deep ensemble method is notoriously time-consuming because it implies training several models. Overall, our framework based on DST and decision fusion is at least as efficient as alternative uncertainty quantification approaches.

Limitations

Our approach is based on combining high-level information extracted from each modality by the FE and EM modules in the form of mass functions. It, thus, has all the advantages and limitations of decision-level fusion approaches. On the plus side, it is highly modular and can still provide sensible results when only some of the modalities are available. This advantage is not crucial in multimodal image segmentation applications because all modalities are usually available, but it can matter in other potential applications such as remote sensing, as mentioned above. Another advantage of decision fusion is that the fusion process is simple and transparent, as already discussed in Sections 4.2 and 4.3. On the minus side, decision-level fusion is, at least in principle, suboptimal because it does not consider all input data globally: we can always construct a classification task in which a single classifier trained with a set of features will perform better than a combination of classifiers trained with each of the features. The good performances of our approach reported in Sections 4.2 and 4.3 show that this potential suboptimality is not an issue in the considered medical image segmentation applications, but it could be in other applications. Another limitation of our approach is that, to keep computations simple, we do not combine the whole discounted mass functions in the MMEF module, but only the contour functions. As a result, the output at each voxel is not a full mass function (with focal sets), which prevents us from harnessing the full power of DST, such as some of the decision rules reviewed in [18]. This and other limitations will be addressed in future work.

5 Conclusion

We have proposed a deep decision-level fusion architecture for multi-modality medical image segmentation. In this approach, features are first extracted from each modality using a deep neural network such as UNet. An evidence-mapping module based on prototypes in feature space then computes a Dempster-Shafer mass function at each voxel. To account for the varying reliability of different information sources in different contexts, the mass functions are transformed using the contextual discounting operation before being combined by Dempster’s rule. The whole framework is trained end-to-end by minimizing a loss function that quantifies prediction error both at the modality level and after fusion.

This model has been evaluated using two real-world datasets for lymphoma segmentation in PET-CT images and brain tumor segmentation in multi-MRI images. In both cases, our approach has been shown to allow for better uncertainty quantification and image segmentation as compared to various alternative schemes based on pixel-level fusion. In particular, as compared to UNet, nnUNet or nnFormer alone with a softmax layer, the introduction of the evidential mapping module (computing the mass functions) improves the results, and the decision-level fusion scheme with contextual discounting brings an additional improvement. Furthermore, the values found for the reliability coefficients are consistent with domain knowledge, which suggests that these coefficients can provide useful insight into the fusion process.

This work can be extended in many directions. First, as discussed in Section 4.4, our DST-based fusion approach can be applied to a variety of learning tasks in which several sources of information must be combined. In the biomedical domain, it could be applied to fuse heterogeneous data such as signals, personal information, biomarkers, gene information, etc. In remote sensing, a potential application could be, e.g., the fusion of Lidar, SAR and hyperspectral data. References [44] and [8] mention many other applications in which multimodal data fusion plays an important role, including human-machine interaction, meteorological monitoring using weather radar and satellite data, or concrete structural monitoring through fusing ultrasonic, impact echo, capacitance, and radar. From a theoretical point of view, our approach could be extended in several directions. As mentioned in Section 4.4, we could combine not only the contour functions from the EM module but the whole mass functions, which would allow us to compute richer outputs that could be exploited within more sophisticated decision strategies such as partial classification [55], or further combined with other data. We could also consider other mass-function correction methods making it possible to account for more diverse meta-knowledge about information sources such as proposed, e.g. in [62], and/or other combination rules such as the cautions rule [17] or variants with learnable parameters as used in [64].

Acknowledgements

This work was supported by the China Scholarship Council (No. 201808331005). It was carried out in the framework of the Labex MS2T, which was funded by the French Government, through the program “Investments for the Future” managed by the National Agency for Research (Reference ANR-11-IDEX-0004-02).

References

- [1] M. Abdar, F. Pourpanah, S. Hussain, D. Rezazadegan, L. Liu, M. Ghavamzadeh, P. Fieguth, X. Cao, A. Khosravi, U. R. Acharya, et al. A review of uncertainty quantification in deep learning: Techniques, applications and challenges. Information fusion, 76:243–297, 2021.

- [2] G. Andrade-Miranda, V. Jaouen, O. Tankyevych, C. C. Le Rest, D. Visvikis, and P.-H. Conze. Multi-modal medical transformers: A meta-analysis for medical image segmentation in oncology. Computerized Medical Imaging and Graphics, 110:102308, 2023.

- [3] M. Arif and G. Wang. Fast curvelet transform through genetic algorithm for multimodal medical image fusion. Soft Computing, 24(3):1815–1836, 2020.

- [4] C. Asha, S. Lal, V. P. Gurupur, and P. P. Saxena. Multi-modal medical image fusion with adaptive weighted combination of NSST bands using chaotic grey wolf optimization. IEEE Access, 7:40782–40796, 2019.

- [5] U. Baid, S. Ghodasara, S. Mohan, M. Bilello, E. Calabrese, E. Colak, K. Farahani, J. Kalpathy-Cramer, F. C. Kitamura, S. Pati, et al. The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv preprint arXiv:2107.02314, 2021.

- [6] M. Bauer, M. Van der Wilk, and C. E. Rasmussen. Understanding probabilistic sparse gaussian process approximations. Advances in neural information processing systems, 29, 2016.

- [7] Y. Bengio, I. Goodfellow, and A. Courville. Deep learning, volume 1. MIT press Cambridge, MA, USA, 2017.

- [8] R. Bokade, A. Navato, R. Ouyang, X. Jin, C.-A. Chou, S. Ostadabbas, and A. V. Mueller. A cross-disciplinary comparison of multimodal data fusion approaches and applications: Accelerating learning through trans-disciplinary information sharing. Expert Systems with Applications, 165:113885, 2021.

- [9] G. W. Brier et al. Verification of forecasts expressed in terms of probability. Monthly weather review, 78(1):1–3, 1950.

- [10] C. Bycroft, C. Freeman, D. Petkova, G. Band, L. T. Elliott, K. Sharp, A. Motyer, D. Vukcevic, O. Delaneau, J. O’Connell, et al. The uk biobank resource with deep phenotyping and genomic data. Nature, 562(7726):203–209, 2018.

- [11] B. R. Cobb and P. P. Shenoy. On the plausibility transformation method for translating belief function models to probability models. International Journal of Approximate Reasoning, 41(3):314–330, 2006.

- [12] R. Cooke et al. Experts in uncertainty: opinion and subjective probability in science. Oxford University Press, 1991.

- [13] Y. Dai, D. Qiu, Y. Wang, S. Dong, and H.-L. Wang. Research on computer-aided diagnosis of Alzheimer’s disease based on heterogeneous medical data fusion. International Journal of Pattern Recognition and Artificial Intelligence, 33(05):1957001, 2019.

- [14] F. Delmotte, L. Dubois, and P. Borne. Context-dependent trust in data fusion within the possibility theory. In 1996 IEEE International Conference on Systems, Man and Cybernetics. Information Intelligence and Systems, volume 1, pages 538–543. IEEE, 1996.

- [15] A. P. Dempster. Upper and lower probability inferences based on a sample from a finite univariate population. Biometrika, 54(3-4):515–528, 1967.

- [16] T. Denœux. A neural network classifier based on Dempster-Shafer theory. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans, 30(2):131–150, 2000.

- [17] T. Denœux. Conjunctive and disjunctive combination of belief functions induced by non distinct bodies of evidence. Artificial Intelligence, 172:234–264, 2008.

- [18] T. Denœux. Decision-making with belief functions: A review. International Journal of Approximate Reasoning, 109:87–110, 2019.

- [19] T. Denœux. NN-EVCLUS: neural network-based evidential clustering. Information Sciences, 572:297–330, 2021.

- [20] T. Denœux, D. Dubois, and H. Prade. Representations of uncertainty in artificial intelligence: Beyond probability and possibility. In P. Marquis, O. Papini, and H. Prade, editors, A Guided Tour of Artificial Intelligence Research, volume 1, chapter 4, pages 119–150. Springer Verlag, 2020.

- [21] T. Denœux, D. Dubois, and H. Prade. Representations of uncertainty in artificial intelligence: Probability and possibility. In P. Marquis, O. Papini, and H. Prade, editors, A Guided Tour of Artificial Intelligence Research, volume 1, chapter 3, pages 69–117. Springer Verlag, 2020.

- [22] T. Denœux, O. Kanjanatarakul, and S. Sriboonchitta. A new evidential k-nearest neighbor rule based on contextual discounting with partially supervised learning. International Journal of Approximate Reasoning, 113:287–302, 2019.

- [23] J. Du, W. Li, B. Xiao, and Q. Nawaz. Union laplacian pyramid with multiple features for medical image fusion. Neurocomputing, 194:326–339, 2016.

- [24] D. Dubois, W. Liu, J. Ma, and H. Prade. The basic principles of uncertain information fusion. an organised review of merging rules in different representation frameworks. Information Fusion, 32:12–39, 2016.

- [25] Z. Elouedi, K. Mellouli, and P. Smets. Assessing sensor reliability for multisensor data fusion within the transferable belief model. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 34(1):782–787, 2004.

- [26] S. Fabre, A. Appriou, and X. Briottet. Presentation and description of two classification methods using data fusion based on sensor management. Information Fusion, 2(1):49–71, 2001.

- [27] P. H. Foo and G. W. Ng. High-level information fusion: An overview. J. Adv. Inf. Fusion, 8(1):33–72, 2013.

- [28] J. Fu, W. Li, J. Du, and Y. Huang. A multiscale residual pyramid attention network for medical image fusion. Biomedical Signal Processing and Control, 66:102488, 2021.

- [29] Y. Gal and Z. Ghahramani. Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In international conference on machine learning, pages 1050–1059. PMLR, 2016.

- [30] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K. Weinberger, editors, Advances in Neural Information Processing Systems, volume 27. Curran Associates, Inc., 2014.

- [31] C. Guo, G. Pleiss, Y. Sun, and K. Q. Weinberger. On calibration of modern neural networks. In International Conference on Machine Learning, pages 1321–1330. PMLR, 2017.

- [32] D. L. Hall, M. McNeese, J. Llinas, and T. Mullen. A framework for dynamic hard/soft fusion. In 2008 11th International Conference on Information Fusion, pages 1–8. IEEE, 2008.

- [33] K. Han, Y. Wang, H. Chen, X. Chen, J. Guo, Z. Liu, Y. Tang, A. Xiao, C. Xu, Y. Xu, et al. A survey on vision transformer. IEEE transactions on pattern analysis and machine intelligence, 45(1):87–110, 2022.

- [34] G. E. Hinton and D. Van Camp. Keeping the neural networks simple by minimizing the description length of the weights. In Proceedings of the sixth annual conference on Computational learning theory, pages 5–13, 1993.

- [35] Q. Hu, S. Hu, and F. Zhang. Multi-modality medical image fusion based on separable dictionary learning and gabor filtering. Signal Processing: Image Communication, 83:115758, 2020.

- [36] L. Huang, T. Denoeux, P. Vera, and S. Ruan. Evidence fusion with contextual discounting for multi-modality medical image segmentation. In Medical Image Computing and Computer Assisted Intervention–MICCAI 2022: 25th International Conference, Singapore, September 18–22, 2022, Proceedings, Part V, pages 401–411. Springer, 2022.

- [37] L. Huang, S. Ruan, P. Decazes, and T. Denœux. Lymphoma segmentation from 3d PET-CT images using a deep evidential network. International Journal of Approximate Reasoning, 149:39–60, 2022.

- [38] L. Huang, S. Ruan, and T. Denoeux. Application of belief functions to medical image segmentation: A review. Information fusion, 91:737–756, 2023.

- [39] L. Huang, S. Ruan, Y. Xing, and M. Feng. A review of uncertainty quantification in medical image analysis: Probabilistic and non-probabilistic methods. Medical Image Analysis, 97:103223, 2024.

- [40] F. Isensee, J. Petersen, A. Klein, D. Zimmerer, P. F. Jaeger, S. Kohl, J. Wasserthal, G. Koehler, T. Norajitra, S. Wirkert, et al. nnu-net: Self-adapting framework for u-net-based medical image segmentation. arXiv preprint arXiv:1809.10486, 2018.

- [41] E. Kerfoot, J. Clough, I. Oksuz, J. Lee, A. P. King, and J. A. Schnabel. Left-ventricle quantification using residual u-net. In International Workshop on Statistical Atlases and Computational Models of the Heart, pages 371–380. Springer, 2018.

- [42] S. U. R. Khan, M. Zhao, S. Asif, and X. Chen. Hybrid-net: A fusion of densenet169 and advanced machine learning classifiers for enhanced brain tumor diagnosis. International Journal of Imaging Systems and Technology, 34(1):e22975, 2024.

- [43] M. Kim, D. K. Han, and H. Ko. Joint patch clustering-based dictionary learning for multimodal image fusion. Information fusion, 27:198–214, 2016.

- [44] D. Lahat, T. Adali, and C. Jutten. Multimodal data fusion: An overview of methods, challenges, and prospects. Proceedings of the IEEE, 103(9):1449–1477, 2015.

- [45] B. Lakshminarayanan, A. Pritzel, and C. Blundell. Simple and scalable predictive uncertainty estimation using deep ensembles. Advances in neural information processing systems, 30, 2017.

- [46] H. Li and X.-J. Wu. Crossfuse: A novel cross attention mechanism based infrared and visible image fusion approach. Information Fusion, 103:102147, 2024.

- [47] X. Li, F. Zhou, H. Tan, W. Zhang, and C. Zhao. Multimodal medical image fusion based on joint bilateral filter and local gradient energy. Information Sciences, 569:302–325, 2021.

- [48] C. Lian, S. Ruan, T. Denœux, H. Li, and P. Vera. Joint tumor segmentation in PET-CT images using co-clustering and fusion based on belief functions. IEEE Transactions on Image Processing, 28(2):755–766, 2019.