Deep Contrastive Graph Learning with Clustering-Oriented Guidance

Abstract

Graph Convolutional Network (GCN) has exhibited remarkable potential in improving graph-based clustering. To handle the general clustering scenario without a prior graph, these models estimate an initial graph beforehand to apply GCN. Throughout the literature, we have witnessed that 1) most models focus on the initial graph while neglecting the original features. Therefore, the discriminability of the learned representation may be corrupted by a low-quality initial graph; 2) the training procedure lacks effective clustering guidance, which may lead to the incorporation of clustering-irrelevant information into the learned graph. To tackle these problems, the Deep Contrastive Graph Learning (DCGL) model is proposed for general data clustering. Specifically, we establish a pseudo-siamese network, which incorporates auto-encoder with GCN to emphasize both the graph structure and the original features. On this basis, feature-level contrastive learning is introduced to enhance the discriminative capacity, and the relationship between samples and centroids is employed as the clustering-oriented guidance. Afterward, a two-branch graph learning mechanism is designed to extract the local and global structural relationships, which are further embedded into a unified graph under the cluster-level contrastive guidance. Experimental results on several benchmark datasets demonstrate the superiority of DCGL against state-of-the-art algorithms.

Introduction

Graph-based clustering achieves dominant performance due to its capability on capturing data manifold. It uses an undirected graph to learn the pair-wise relations between samples, and infers the cluster labels from the adjacency structure. Recently, neural networks are widely used in data clustering (Xie, Girshick, and Farhadi 2016; Shaham et al. 2018; Bo et al. 2020; Ren et al. 2022). Among a variety of network structures, Graph Convolutional Network (GCN) (Kipf and Welling 2016a) has shown a prominent ability on exploring the relationship among graph-type data, and realized good results on graph-based clustering (Zhang et al. 2022).

GCN-based clustering methods learn the data representation with the input graph and data matrix. For general clustering tasks, there is no prior graph that records the relationships of samples. Hence, an initial graph needs to be constructed as the input of GCN. Although this approach has demonstrated promising results, it gives rise to some issues. Firstly, as observed in the Graph Auto-Encoder (GAE) framework (Li, Zhang, and Zhang 2021; Zhao et al. 2022) and a series of graph-structured data clustering models (Wang et al. 2019; Tu et al. 2021; Liu et al. 2022c), the training procedure relies on the input graph heavily, and pays insufficient attention to the original features. Therefore, if there are many connected samples from different classes in the initial graph, the embedding obtained by multilayer aggregation would be ambiguous, leading to a loss of discriminative capacity. This phenomenon is called as representation collapse. Secondly, GCN-based models emphasize the representational ability of the learned graph embedding, but lack clustering-oriented guidance, such as the distribution of centroids. As a result, the output of GCN may contain clustering-irrelevant information, while the cluster structure is not fully captured, which is essential for data clustering.

To address these issues, we propose the Deep Contrastive Graph Learning (DCGL) model for the general clustering task without a prior graph. As shown in Fig. 1, DCGL consists of a pseudo-siamese network, wherein one branch handles both the data matrix and the input graph with GCN, while the other branch encodes the data matrix with an encoder. During this stage, feature-level contrastive learning models the distances between the estimated centroids and samples, such that the discriminative characteristics are explored. Then, the outputs are employed to construct the Local Propinquity Graph (LPG) and Global Diffusion Graph (GDG) with different neighborhood sizes respectively. After that, the graphs are mapped into the cluster space, where cluster-level contrastive learning is used to push away the different centroids. In this way, the cluster structure is better maintained. The main contributions of this paper are summarized as follows:

-

•

A deep unsupervised graph learning model is established to cluster general data, which takes a pseudo-siamese network to analyze structural dependencies and node attributes concurrently. By considering the original features explicitly and updating the learned graph adaptively, the reliance on the initial graph is alleviated.

-

•

Two clustering-oriented contrastive learning strategies are devised to provide training guidance. Feature-level contrastive learning aims to preserve the discriminability of each node, and cluster-level contrastive learning promotes a clear distribution of centroids.

-

•

The graph learning procedure is divided into two branches to capture the data manifold from local and global perspectives respectively. Both the local and global graphs are mapped into the cluster space to search a unified cluster structure.

Related Work

Graph Structure Learning

Graph learning is an important topic in many practical applications (Liu et al. 2023; Li 2022a, b). -Nearest Neighbor (KNN) is the most traditional graph learning way. This strategy builds the adjacency relation based on the sample distance, and then calculates the weight by a specific formula (Cai et al. 2010; Li et al. 2017a, b). The graph from simple KNN reserves limited manifold information, so some advanced graph learning theories are raised, such as adaptive neighbor learning (Nie, Wang, and Huang 2014; Kang et al. 2019), self-expression (Elhamifar and Vidal 2013; Kang, Peng, and Cheng 2017), and consistency propagation (Huang et al. 2022; Tan et al. 2023).

In recent years, Graph Neural Network (GNN) has presented reliable competence in deep graph structure learning. GLCN (Jiang et al. 2019) exploits a unified framework for executing graph learning and graph convolution. IDGL (Chen, Wu, and Zaki 2020) leverages graph regularization terms to enhance learning quality. Pro-GNN (Jin et al. 2020) employs alternating optimization to realize the mutual regularization of graph learning and network update. GRCN (Yu et al. 2020) predicts edges and regulates weights adaptively by residual link. VIB-GSL (Sun et al. 2022) quotes a novel theory of variational information bottleneck to structure an adjacency graph. The above influential models have shown impressive accuracy in experiments. However, most of the deep models are tailored for some kind of supervised scenario, which is usually node classification. SUBLIME (Liu et al. 2022d) is described as the first attempt at unsupervised deep graph learning. In this paper, we plan to develop an unsupervised procedure to support graph-based clustering.

GNN-Based Data Clustering

To date, a series of GNN-based models have achieved excellent performance in graph-structured data clustering (Liu et al. 2022b). DAEGC (Wang et al. 2019) uses a new GAE based on graph attention network to learn pivotal node features. SDCN (Bo et al. 2020) performs GCN and auto-encoder simultaneously for better cluster learning. DFCN (Tu et al. 2021) applies a representation fusion module to integrate the topology structure and node attributes. DCRN (Liu et al. 2022c) utilizes the graph augmentation technology to explore different levels of latent characteristics, and then merges them into a consensus cluster representation.

In the above methods, the final cluster embedding relies on the input graph strongly, which means that the initial graph has a decisive influence on clustering performance. Therefore, the aforesaid models are not appropriate for the general clustering scenario without a reliable graph structure. Very lately, some modified GAEs (Li, Zhang, and Zhang 2021; Zhang et al. 2022) are established to handle general data, where the adjacency relation is updated automatically. However, GAE fails to preserve the discriminative characteristics within the original features. Moreover, most models lack clustering-oriented training guidance, and the learned graph may be unsuitable for clustering.

Contrastive Learning

As an effective tool in visual processing (Chen et al. 2020), contrastive learning has been applied to many tasks in the branch of GNN successfully, such as graph representation learning (Hassani and Khasahmadi 2020; Zhu et al. 2021; Mo et al. 2022) and graph-structured data clustering (Zhao et al. 2021; Liu et al. 2022a; Yang et al. 2023). In practical application, contrastive learning is usually joined with graph augmentation. Among them, graph augmentation aims to derive multiple data views from node-level and topology-level. Contrastive learning is used to maximize the mutual information between different views to generate the final node representation, which means pulling correlative semantic contents closer while pushing outlying features away. In this article, we introduce clustering-oriented guidance into contrastive learning methodologies, ensuring enhanced discriminability between samples and pursuing a distinguishable cluster structure.

Deep Contrastive Graph Learning

In this section, the proposed model is introduced. The pipeline of DCGL is illustrated in Fig. 1. It uses a presudo-siamese network to construct the local and global graphs, and maps them into the cluster space by siamese graph convolution.

Notations: in this paper, vectors and matrices are written in lowercase and uppercase letters respectively. For a data matrix , both and represent the -th sample. , , and denote , , and Frobenius norm respectively.

Preliminary Work

Given a dataset with categories, it is necessary to construct an initial graph as the input of GCN.

The initial graph indicates the relationship between two samples by the corresponding weight. Intuitively, a small Euclidean distance reflects a large weight. Therefore, the objective is expressed as

| (1) |

where is the trade-off parameter, is the desired graph, and refers to the -th column of . The second term aims to avoid the trivial solution .

Problem (1) can be decoupled into each column of separately. Denoting a column vector that satisfies , the sub-problem for can be written as

| (2) |

Define as the result of sorting in ascending order. Stipulating that only the weights of neighbors are updated, problem (2) has a closed-form solution as

| (3) |

where indicates . The final graph is obtained by , and the mathematical deduction can be found in (Nie, Wang, and Huang 2014).

With the scheme of Eq. (1), each sample connects with its neighbors adaptively. Moreover, the specific closed-form solution avoids the parameter selection of , and only the neighbor number needs to be tuned.

Pseudo-Siamese Network

After building the previous stage, the input for GCN is obtained, including data representation and initial graph . GCN mainly pays attention to the data relationship, but neglects the samples’ original attributes, and encounters the risk of representation collapse. To preserve the original features, we parallel GCN with an auto-encoder, leading to a pseudo-siamese network, which is formulated as

| (4) |

where is the normalized graph, , , refers to the diagonal degree matrix that satisfies , and and captures the structure and attributes respectively. The classical Mean-Square Error is used to train the auto-encoder

| (5) |

The above formulation focuses on data representation, and the training procedure lacks cluster-oriented guidance. Therefore, we design the following feature-level contrastive learning to improve the representations’ adaptability to clustering tasks.

Feature-Level Contrastive Learning.

In contrastive learning, the selection of positive and negative samples determines the final effect directly. For an anchor , the universal consensus (Zhu et al. 2021; Liu et al. 2022d) is to treat its embedding in the other branch as the positive sample (), and all the rest as negative samples, which may misjudges too many samples in the same category as negative. Differently, we employ a rough clustering result to guide the construction of sample pairs. For each anchor, the centroids of other clusters are taken as negative ones.

Specifically, -means is executed on to acquire centroids. Given any node , the centroids of other clusters constitute the negative sample set . On this basis, the InfoNCE loss (Oord, Li, and Vinyals 2018) is employed for feature-level contrastive learning

| (6) |

where computes the cosine similarity, and is the temperature parameter.

Eq. (6) pushes samples away from incorrect centroids, ensuring the discriminability of the learned representation.

Local Propinquity Graph Learning.

Replacing the in Eq. (1) with the learned , a new graph is derived. This graph learning problem can be converted to trace form as

| (7) |

where denotes the trace of a square matrix, and is the Laplace matrix of . The above formulation infers the manifold structure based on the pair-wise similarity, so we term as Local Propinquity Graph (LPG). With a small value of , only the very close samples will be connected, such that the final graph contains fewer incorrect connections. To detect more potential connections, we adopt a staged growth for the neighbor number, which means adding with a fixed increment after a constant interval.

Siamese Graph Convolution

The optimization of LPG focuses on the local dependencies between nodes. Considering that some samples of the same class may are not neighbors in Euclidean space, we propose to learn the global similarity with an extra graph, and fuse both the local and global graphs to achieve an agreement on cluster structure.

Global Diffusion Graph Learning.

Contrary to LPG, the new graph encodes the global topological relationship of samples, which requires more links between nodes. Firstly, the structural and attributed nodes are combined to generate a comprehensive representation

| (8) |

Then, the integrated is used as the data matrix to construct a graph with Eq. (1), which further exploits the original features. The neighbor number for is fixed as the upper bound of the learning procedure of LGP, where denotes the operation of round down.

Finally, Personalized PageRank graph diffusion (Hassani and Khasahmadi 2020) is introduced to transport the topological similarity through nodes, so as to further capture the global structure. The mathematical expression is

| (9) |

where is the transport rate, and is the degree matrix of . The corresponding closed-form solution is

| (10) |

where is the identity matrix.

is called Global Diffusion Graph (GDG). Compared with the straightforward inner product, the construction of GDG focalizes manifold information of each cluster, rather than the holistic sample space.

Cluster-Level Contrastive Learning.

With both the local graph and global graph , contrastive learning is performed within the cluster space.

We assume that both graphs should share the same cluster structure. To this end, the graphs and embeddings are fed into a siamese GCN to get the cluster indicators

| (11) |

where and are the normalized graphs of and . The softmax function is employed to activate the last layer of GCN. In this way, each element of and records the probability that a sample belongs to a cluster. To emphasize the optimization of LPG, both and use the structural representation as input.

Then, the cluster-level embedding is calculated as

| (12) |

where and consist of the cluster centroids. Eq. (12) projects the cluster indicators into centroid matrices. Afterward, the centroids are used as anchors, and contrastive learning is conducted to search a shared centroid distribution

| (13) |

where

| (14) |

Eq. (13) pushes the centroids of different clusters away, and ensures an explicit cluster structure. The siamese graph convolution module does not involve the initial graph , so the impact of the initial graph quality is reduced. Moreover, both cluster-level and feature-level contrastive learning employ centroids to leverage cluster structure, and further provide training guidance to facilitate clustering.

Input: Data matrix with samples, cluster number , initial neighbor number , neighbor number update interval , maximum iterations , parameters , , .

Output: Clustering result for .

Raw

Epoch-6

Epoch-12

Epoch-18

Epoch-24

Final

Overall Model and Optimization

The overall loss function of DCGL is

| (15) |

where and are the trade-off parameters.

The optimizer for DCGL is Adam (Kingma and Ba 2014), and the corresponding parameters are predefined with the authors’ recommendation. The temperature in contrastive learning is set as 0.5. The parameter in Eq. (10) is fixed to 0.2. The convergence condition of DCGL is that the neighbor amount of LPG reaches the upper bound. Algorithm 1 displays the complete flow of DCGL, and the final result is obtained by performing NCut (Shi and Malik 2000) on the converged LPG.

Experiments

Benchmark Datasets

Seven publicly available datasets are collected as benchmarks, including regular record types TOX-171 and Isolet, image types ORL, YaleB, PIE and USPS, and text type TR41. In YaleB, only the previous 30 images of each class are retained. All samples are normalized with norm. The details are exhibited in Table 1.

Evaluation Metrics

Two metrics named accuracy (ACC) and normalized mutual information (NMI) are used to evaluate the clustering performance. The mathematical formulas of ACC and NMI can be found in (Kong, Ding, and Huang 2011). Higher metric values indicate better clustering performance.

Performance Comparison

Competitors.

Thirteen state-of-the-art clustering methods are applied for performance comparison, including six traditional algorithms -means (Hartigan and Wong 1979), STSC (Zelnik-Manor and Perona 2004), CAN (Nie, Wang, and Huang 2014), PCAN (Nie, Wang, and Huang 2014), RGC (Kang et al. 2019) and GEMMF (Chen and Li 2022), and seven deep models DAEGC (Wang et al. 2019), DCRN (Liu et al. 2022c), DEC (Xie, Girshick, and Farhadi 2016), VGAE (Kipf and Welling 2016b), SpectralNet (Shaham et al. 2018), SUBLIME (Liu et al. 2022d) and AdaGAE (Li, Zhang, and Zhang 2021).

Except for DAEGC and DCRN, the other methods have been attested to be appropriate for non-graph-type data clustering. All codes are downloaded from online resources uploaded by authors.

Settings.

The hyper-parameters of competitors are set as the recommendations of the authors. For example, the graph regularization weight of GEMMF is searched from . The probability of dropout layers is from . For those methods that require an initial graph, a KNN graph via Gaussian kernel weighting (Cai et al. 2010) is constructed as input, and the number of neighbors is selected from .

For DCGL, the hyper-parameters , , and are fixed to , , and respectively. The neighbor number for LPG rises every 6 epochs. The maximum epoch number is . To ensure objectivity, the random seed is fixed before code execution, and each algorithm is repeated 10 times.

| Dataset | Type | Samples | Dimension | Classes |

| TOX-171 | Record | 171 | 5748 | 4 |

| ORL | Image | 400 | 1024 | 40 |

| TR41 | Text | 878 | 7454 | 10 |

| YaleB | Image | 1140 | 1024 | 38 |

| Isolet | Record | 1560 | 617 | 26 |

| PIE | Image | 2856 | 1024 | 64 |

| USPS | Image | 9298 | 256 | 10 |

| Method | TOX-171 | ORL | TR41 | YaleB | Isolet | PIE | USPS | |||||||

| ACC | NMI | ACC | NMI | ACC | NMI | ACC | NMI | ACC | NMI | ACC | NMI | ACC | NMI | |

| -means | 41.51 | 12.40 | 51.50 | 71.07 | 66.74 | 67.87 | 11.58 | 21.48 | 59.23 | 75.43 | 23.28 | 53.56 | 67.49 | 61.35 |

| STSC | 49.71 | 26.35 | 65.50 | 77.70 | 72.67 | 68.16 | 49.04 | 62.29 | 58.91 | 76.62 | 86.80 | 93.51 | 66.44 | 80.03 |

| CAN | 32.75 | 10.28 | 56.00 | 72.04 | 51.59 | 35.61 | 39.74 | 52.50 | 60.77 | 77.38 | 91.81 | 97.02 | 74.10 | 76.89 |

| PCAN | 45.03 | 21.66 | 61.00 | 74.18 | 62.30 | 53.97 | 45.09 | 49.73 | 57.24 | 69.87 | 85.68 | 87.88 | 65.41 | 76.16 |

| RGC | 50.88 | 28.60 | 59.50 | 77.11 | 64.24 | 64.06 | 31.32 | 43.68 | 63.53 | 77.92 | 40.62 | 68.29 | 72.00 | 78.93 |

| GEMMF | 43.86 | 13.19 | 64.75 | 79.29 | 58.89 | 57.74 | 31.40 | 51.84 | 63.53 | 78.23 | 41.81 | 71.35 | 69.96 | 66.43 |

| DAEGC | 46.20 | 16.90 | 59.50 | 76.09 | 61.62 | 57.87 | 23.86 | 42.86 | 59.68 | 73.11 | 39.57 | 71.48 | 70.43 | 59.77 |

| DCRN | 36.84 | 12.89 | 34.25 | 60.10 | 62.30 | 54.14 | 15.61 | 34.03 | 15.06 | 36.99 | 17.75 | 47.59 | 18.12 | 5.27 |

| DEC | 49.71 | 23.65 | 49.25 | 69.33 | 23.46 | 10.88 | 21.84 | 35.60 | 52.76 | 62.85 | 30.22 | 59.62 | 76.34 | 66.72 |

| VGAE | 49.71 | 22.99 | 56.75 | 74.99 | 72.78 | 64.25 | 27.19 | 40.60 | 63.59 | 77.06 | 38.41 | 63.17 | 66.66 | 60.78 |

| SpectralNet | 46.78 | 20.55 | 53.50 | 72.66 | 34.62 | 21.63 | 19.91 | 33.66 | 56.03 | 66.21 | 79.03 | 87.87 | 49.31 | 39.70 |

| SUBLIME | 51.46 | 26.87 | 68.75 | 82.29 | 65.49 | 66.85 | 47.72 | 62.41 | 64.41 | 76.64 | 82.35 | 90.15 | 59.14 | 64.50 |

| AdaGAE | 49.71 | 30.60 | 69.50 | 84.40 | 55.81 | 59.46 | 67.02 | 80.68 | 69.49 | 80.83 | 97.97 | 98.18 | 76.44 | 74.63 |

| DCGL | 51.46 | 34.75 | 75.50 | 86.91 | 73.23 | 69.80 | 81.93 | 86.18 | 71.73 | 80.85 | 99.86 | 99.87 | 78.32 | 80.87 |

Results.

The best clustering results of each algorithm are recorded in Table 2. We summarize the viewpoints that 1) STSC, GEMMF, VGAE, and SpectralNet perform worse than other graph-based methods relatively. They keep the graph structure fixed during iteration, so the final performance is limited by the initial graph quality. This reveals that adaptive graph learning is beneficial to clustering; 2) both DAEGC and DCRN provide unsatisfactory results. These models assume that the initial graph is highly reliable, and learn the cluster embeddings mainly based on the adjacency relation. The experimental results attest that such graph-type data clustering models are not suitable for general clustering tasks; 3) the deep models for general data clustering, i.e., SUBLIME, AdaGAE, and DCGL, realize a noticeable improvement. This verifies the potential of deep graph-based clustering. The good performance of SUBLIME and DCGL also demonstrates the feasibility of contrastive learning on processing non-vision data; 4) DCGL achieves better clustering compared to GCN-based competitors, which reflects the effects of restraining representation collapse and mining the original features. Besides, the difference between DCGL and GAE-based models mainly dates from the siamese graph convolution module that bootstraps a clear cluster structure gradually. With the cooperation of clustering-oriented mechanisms, DCGL is guided to preserve clustering-relevant information, and presents superior performance compared to other algorithms.

Visualization Analysis

To display the effect of DCGL intuitively, we use -SNE (Van der Maaten and Hinton 2008) to visualize the embeddings from when clustering YaleB. Only the front 10 clusters are processed for ease of observation. The visualization result is shown in Fig. 2, where each point is plotted as its actual label value. It can be seen that the samples of the same category are gradually compact with iteration, which indicates the practicability of DCGL on data clustering.

Ablation Studies

In this part, we first validate the effectiveness of two contrastive learning modules in DCGL, and then explore the impact of clustering-oriented mechanisms. Afterward, we analyze the impact of hyper-parameters.

Impact of Contrastive Learning.

DCGL-w/F

DCGL

Define DCGL-w/F and DCGL-w/C as the DCGL variants without feature-level and cluster-level contrastive learning respectively. Since the outputs of and are only related to cluster-level contrastive learning, DCGL-w/C means the entire expurgation of the siamese graph convolution module. Table 3 shows the ablation comparisons. Obviously, DCGL still presents the best clustering performance, which proves the effectiveness of each contrastive learning strategy.

The performance of DCGL-w/F is deteriorated compared to DCGL, which demonstrates the discriminability of the embeddings learned by DCGL. For a better illustration, we calculate the cosine similarity matrix of the data representation from . The heatmap on Pie is displayed in Fig. 3, where only the large values are preserved to facilitate observation. In the result of DCGL, the nodes across diverse classes have lower similarity, so we can conclude that feature-level contrastive learning is beneficial to improving discriminability.

DCGL-w/C

DCGL

DCGL exceeds DCGL-w/C on all benchmark datasets, which means the graph generated by DCGL maintains a clearer cluster structure. To verify the efficiency of cluster-level contrastive learning on graph optimization, we plot the converged LPGs from DCGL-w/C and DCGL on ORL in Fig. 4, where all non-zero elements are substituted with 1 to emphasize the adjacency relation. It can be seen that the graph obtained by DCGL exhibits a more distinct block diagonal structure, indicating more links between samples of the same category.

| Dataset | Metric | DCGL-w/F | DCGL-w/C | DCGL |

| TOX-171 | ACC | 47.95 | 42.11 | 51.46 |

| NMI | 24.17 | 24.84 | 34.75 | |

| ORL | ACC | 70.50 | 64.00 | 75.50 |

| NMI | 83.72 | 81.17 | 86.91 | |

| TR41 | ACC | 61.73 | 63.21 | 73.23 |

| NMI | 64.85 | 65.39 | 69.80 | |

| YaleB | ACC | 75.18 | 77.54 | 81.93 |

| NMI | 82.89 | 84.23 | 86.18 | |

| Isolet | ACC | 69.23 | 67.63 | 71.73 |

| NMI | 79.63 | 78.92 | 80.85 | |

| PIE | ACC | 99.68 | 98.35 | 99.86 |

| NMI | 99.69 | 99.65 | 99.87 | |

| USPS | ACC | 76.75 | 76.98 | 78.32 |

| NMI | 80.19 | 79.09 | 80.87 |

TOX-171

ORL

YaleB

Isolet

Impact of Clustering Guidance.

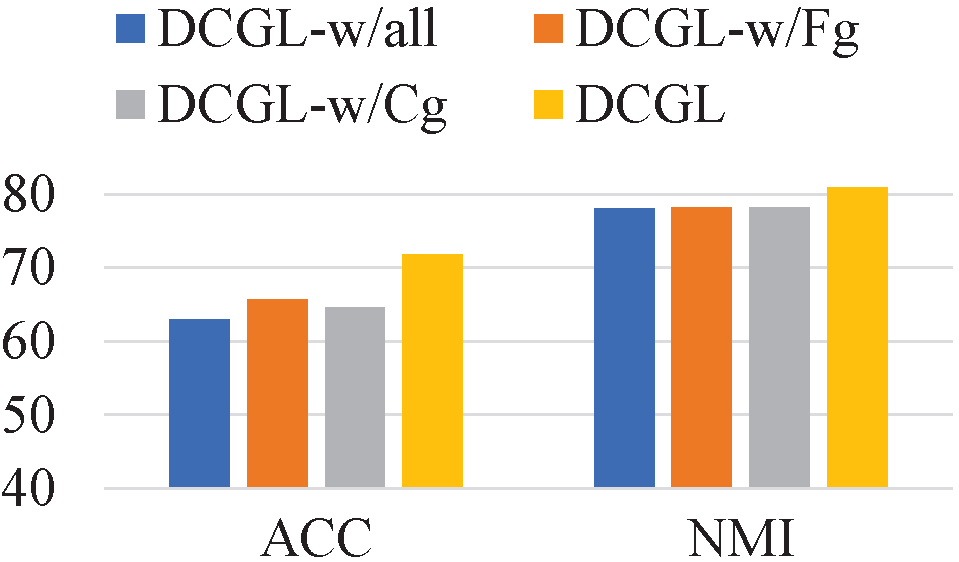

In the proposed contrastive learning strategies, feature-level and cluster-level guidance are introduced into the construction of sample pairs and the selection of anchors. To investigate the effectiveness of the above clustering guidance, we design three variants of DCGL with different contrastive learning approaches, termed as DCGL-w/Fg, DCGL-w/Cg, and DCGL-w/all. Among them, DCGL-w/Fg discards the feature-level guidance, and regards all non-corresponding nodes of the anchor in the other branch as negative samples in feature-level contrastive learning. DCGL-w/Cg removes the cluster-level guidance by taking the output embeddings of and as anchors directly. DCGL-w/all has the above two alterations.

Fig. 5 presents the comparison results. We can deduce that 1) the evident degradation of DCGL-w/all confirms the significance of clustering guidance; 2) the decline of DCGL-w/Fg implies that the discriminability is decreased without the feature-level guidance; 3) the disparity between DCGL-w/Cg and DCGL demonstrates that contrastive learning within the cluster space is helpful to perceive a clear cluster distribution.

Sensitivity of Hyper-Parameters and .

Finally, we focus on the influence of trade-off parameters and . We tune both and over the range of , and keep other experimental environments. Fig. 6 exhibits the clustering ACC of DCGL with different parameter combinations. It is observed that 1) the performance under too small and is not outstanding, which manifests the failure of related mechanisms, and again attests to the effectiveness of the designed modules; 2) the influence of is more salient relatively, because is associated with graph learning and the final cluster partitioning directly; 3) the setting of moderate and larger is likely to bring satisfactory clustering results. Overall, DCGL is not particularly sensitive to parameter selection in an appropriate range.

ORL

TR41

Isolet

PIE

Conclusion

In this paper, we establish the Deep Contrastive Graph Learning (DCGL) model for non-graph data clustering. DCGL adopts a pseudo-siamese network to learn both the structural and attributed representations. Two clustering-oriented contrastive learning strategies are also designed to capture clustering-relevant information. Concretely, feature-level contrastive learning is performed to push each node away from the centroids of other clusters, to retain the discriminative features of each class. Besides, cluster-level contrastive learning is conducted to search an explicit centroid distribution shared by both the learned local and global graphs, such that a clear cluster structure is achieved. Extensive experiments on benchmark datasets demonstrate the superiority of DCGL against state-of-the-art competitors.

Acknowledgments

This work was supported by the National Key Research and Development Program of China (No. 2022YFC2808000) and by the National Natural Science Foundation of China (No. 61871470).

References

- Bo et al. (2020) Bo, D.; Wang, X.; Shi, C.; Zhu, M.; Lu, E.; and Cui, P. 2020. Structural deep clustering network. In Proceedings of the Web Conference 2020, 1400–1410.

- Cai et al. (2010) Cai, D.; He, X.; Han, J.; and Huang, T. S. 2010. Graph regularized nonnegative matrix factorization for data representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(8): 1548–1560.

- Chen and Li (2022) Chen, M.; and Li, X. 2022. Entropy minimizing matrix factorization. IEEE Transactions on Neural Networks and Learning Systems, 34(11): 9209–9222.

- Chen et al. (2020) Chen, T.; Kornblith, S.; Norouzi, M.; and Hinton, G. 2020. A simple framework for contrastive learning of visual representations. In International Conference on Machine Learning, 1597–1607.

- Chen, Wu, and Zaki (2020) Chen, Y.; Wu, L.; and Zaki, M. 2020. Iterative deep graph learning for graph neural networks: Better and robust node embeddings. Advances in Neural Information Processing Systems, 33: 19314–19326.

- Elhamifar and Vidal (2013) Elhamifar, E.; and Vidal, R. 2013. Sparse subspace clustering: Algorithm, theory, and applications. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(11): 2765–2781.

- Hartigan and Wong (1979) Hartigan, J. A.; and Wong, M. A. 1979. Algorithm AS 136: A k-means clustering algorithm. Journal of the Royal Statistical Society: Series C (applied statistics), 28(1): 100–108.

- Hassani and Khasahmadi (2020) Hassani, K.; and Khasahmadi, A. H. 2020. Contrastive multi-view representation learning on graphs. In International Conference on Machine Learning, 4116–4126.

- Huang et al. (2022) Huang, S.; Wu, H.; Ren, Y.; Tsang, I.; Xu, Z.; Feng, W.; and Lv, J. 2022. Multi-view subspace clustering on topological manifold. Advances in Neural Information Processing Systems, 35: 25883–25894.

- Jiang et al. (2019) Jiang, B.; Zhang, Z.; Lin, D.; Tang, J.; and Luo, B. 2019. Semi-supervised learning with graph learning-convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 11313–11320.

- Jin et al. (2020) Jin, W.; Ma, Y.; Liu, X.; Tang, X.; Wang, S.; and Tang, J. 2020. Graph structure learning for robust graph neural networks. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 66–74.

- Kang et al. (2019) Kang, Z.; Pan, H.; Hoi, S. C.; and Xu, Z. 2019. Robust graph learning from noisy data. IEEE Transactions on Cybernetics, 50(5): 1833–1843.

- Kang, Peng, and Cheng (2017) Kang, Z.; Peng, C.; and Cheng, Q. 2017. Twin learning for similarity and clustering: A unified kernel approach. In Proceedings of the AAAI Conference on Artificial Intelligence, 2080–2086.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Kipf and Welling (2016a) Kipf, T. N.; and Welling, M. 2016a. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907.

- Kipf and Welling (2016b) Kipf, T. N.; and Welling, M. 2016b. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308.

- Kong, Ding, and Huang (2011) Kong, D.; Ding, C.; and Huang, H. 2011. Robust nonnegative matrix factorization using l21-norm. In Proceedings of the 20th ACM International Conference on Information and Knowledge Management, 673–682.

- Li (2022a) Li, X. 2022a. Multi-modal cognitive computing. SCIENTIA SINICA Informationis, 53(1): 1–32.

- Li (2022b) Li, X. 2022b. Vicinagearth security. Communication of the CCF, 18(11): 44–52.

- Li et al. (2017a) Li, X.; Chen, M.; Nie, F.; and Wang, Q. 2017a. Locality adaptive discriminant analysis. In International Joint Conference on Artificial Intelligence, 2201–2207.

- Li et al. (2017b) Li, X.; Chen, M.; Nie, F.; and Wang, Q. 2017b. A multiview-based parameter free framework for group detection. In Proceedings of the AAAI Conference on Artificial Intelligence, 4147–4153.

- Li, Zhang, and Zhang (2021) Li, X.; Zhang, H.; and Zhang, R. 2021. Adaptive graph auto-encoder for general data clustering. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(12): 9725–9732.

- Liu et al. (2022a) Liu, L.; Kang, Z.; Ruan, J.; and He, X. 2022a. Multilayer graph contrastive clustering network. Information Sciences, 613: 256–267.

- Liu et al. (2022b) Liu, Y.; Jun, X.; Sihang, Z.; Siwei, W.; Xifeng, G.; Xihong, Y.; Ke, L.; Wenxuan, T.; Wang, L. X.; et al. 2022b. A survey of deep graph clustering: Taxonomy, challenge, and application. arXiv preprint arXiv:2211.12875.

- Liu et al. (2022c) Liu, Y.; Tu, W.; Zhou, S.; Liu, X.; Song, L.; Yang, X.; and Zhu, E. 2022c. Deep graph clustering via dual correlation reduction. In Proceedings of the AAAI Conference on Artificial Intelligence, 7603–7611.

- Liu et al. (2023) Liu, Y.; Yang, X.; Zhou, S.; Liu, X.; Wang, S.; Liang, K.; Tu, W.; and Li, L. 2023. Simple contrastive graph clustering. IEEE Transactions on Neural Networks and Learning Systems.

- Liu et al. (2022d) Liu, Y.; Zheng, Y.; Zhang, D.; Chen, H.; Peng, H.; and Pan, S. 2022d. Towards unsupervised deep graph structure learning. In Proceedings of the Web Conference 2022, 1392–1403.

- Mo et al. (2022) Mo, Y.; Peng, L.; Xu, J.; Shi, X.; and Zhu, X. 2022. Simple unsupervised graph representation learning. In Proceedings of the AAAI Conference on Artificial Intelligence, 7797–7805.

- Nie, Wang, and Huang (2014) Nie, F.; Wang, X.; and Huang, H. 2014. Clustering and projected clustering with adaptive neighbors. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 977–986.

- Oord, Li, and Vinyals (2018) Oord, A. v. d.; Li, Y.; and Vinyals, O. 2018. Representation learning with contrastive predictive coding. arXiv preprint arXiv:1807.03748.

- Ren et al. (2022) Ren, Y.; Pu, J.; Yang, Z.; Xu, J.; Li, G.; Pu, X.; Yu, P. S.; and He, L. 2022. Deep clustering: A comprehensive survey. arXiv preprint arXiv:2210.04142.

- Shaham et al. (2018) Shaham, U.; Stanton, K.; Li, H.; Nadler, B.; Basri, R.; and Kluger, Y. 2018. SpectralNet: Spectral clustering using deep neural networks. arXiv preprint arXiv:1801.01587.

- Shi and Malik (2000) Shi, J.; and Malik, J. 2000. Normalized cuts and image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 22(8): 888–905.

- Sun et al. (2022) Sun, Q.; Li, J.; Peng, H.; Wu, J.; Fu, X.; Ji, C.; and Philip, S. Y. 2022. Graph structure learning with variational information bottleneck. In Proceedings of the AAAI Conference on Artificial Intelligence, 4165–4174.

- Tan et al. (2023) Tan, Y.; Liu, Y.; Huang, S.; Feng, W.; and Lv, J. 2023. Sample-level multi-view graph clustering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 23966–23975.

- Tu et al. (2021) Tu, W.; Zhou, S.; Liu, X.; Guo, X.; Cai, Z.; Zhu, E.; and Cheng, J. 2021. Deep fusion clustering network. In Proceedings of the AAAI Conference on Artificial Intelligence, 9978–9987.

- Van der Maaten and Hinton (2008) Van der Maaten, L.; and Hinton, G. 2008. Visualizing data using t-SNE. Journal of Machine Learning Research, 9(11).

- Wang et al. (2019) Wang, C.; Pan, S.; Hu, R.; Long, G.; Jiang, J.; and Zhang, C. 2019. Attributed graph clustering: A deep attentional embedding approach. In International Joint Conference on Artificial Intelligence, 3670–3676.

- Xie, Girshick, and Farhadi (2016) Xie, J.; Girshick, R.; and Farhadi, A. 2016. Unsupervised deep embedding for clustering analysis. In International Conference on Machine Learning, 478–487.

- Yang et al. (2023) Yang, X.; Liu, Y.; Zhou, S.; Wang, S.; Tu, W.; Zheng, Q.; Liu, X.; Fang, L.; and Zhu, E. 2023. Cluster-guided contrastive graph clustering network. In Proceedings of the AAAI Conference on Artificial Intelligence, 10834–10842.

- Yu et al. (2020) Yu, D.; Zhang, R.; Jiang, Z.; Wu, Y.; and Yang, Y. 2020. Graph-revised convolutional network. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 378–393.

- Zelnik-Manor and Perona (2004) Zelnik-Manor, L.; and Perona, P. 2004. Self-tuning spectral clustering. Advances in Neural Information Processing Systems, 17.

- Zhang et al. (2022) Zhang, H.; Shi, J.; Zhang, R.; and Li, X. 2022. Non-graph data clustering via bipartite graph convolution. IEEE Transactions on Pattern Analysis and Machine Intelligence.

- Zhao et al. (2021) Zhao, H.; Yang, X.; Wang, Z.; Yang, E.; and Deng, C. 2021. Graph debiased contrastive learning with joint representation clustering. In International Joint Conference on Artificial Intelligence, 3434–3440.

- Zhao et al. (2022) Zhao, J.; Guo, J.; Sun, Y.; Gao, J.; Wang, S.; and Yin, B. 2022. Adaptive graph convolutional clustering network with optimal probabilistic graph. Neural Networks, 156: 271–284.

- Zhu et al. (2021) Zhu, Y.; Xu, Y.; Yu, F.; Liu, Q.; Wu, S.; and Wang, L. 2021. Graph contrastive learning with adaptive augmentation. In Proceedings of the Web Conference 2021, 2069–2080.