Decentralized Inference with Graph Neural Networks in Wireless Communication Systems

Abstract

Graph neural network (GNN) is an efficient neural network model for graph data and is widely used in different fields, including wireless communications. Different from other neural network models, GNN can be implemented in a decentralized manner during the inference stage with information exchanges among neighbors, making it a potentially powerful tool for decentralized control in wireless communication systems. The main bottleneck, however, is wireless channel impairments that deteriorate the prediction robustness of GNN. To overcome this obstacle, we analyze and enhance the robustness of the decentralized GNN during the inference stage in different wireless communication systems in this paper. Specifically, using a GNN binary classifier as an example, we first develop a methodology to verify whether the predictions are robust. Then, we analyze the performance of the decentralized GNN binary classifier in both uncoded and coded wireless communication systems. To remedy imperfect wireless transmission and enhance the prediction robustness, we further propose novel retransmission mechanisms for the above two communication systems, respectively. Through simulations on the synthetic graph data, we validate our analysis, verify the effectiveness of the proposed retransmission mechanisms, and provide some insights for practical implementation.

Index Terms:

Machine learning, graph neural network, decentralized algorithm, retransmission, robustness.1 Introduction

With the explosion of data traffic and the enhanced capability of big data processing, the machine learning (ML) technique has been regarded as a potential enabler for the sixth-generation (6G) wireless communications [1]. Recently, a large number of works turn to various ML techniques to facilitate different applications in wireless communication systems, such as physical layer design [2, 3, 4, 5], resource allocation[6, 7, 8, 9, 10, 11], and networking [12, 13]. In these works, ML is generally utilized for two purposes. On the one hand, it has been employed as fast approximations of heavy computations in existing algorithms. For example, the authors in [7, 8] have made use of the imitation learning technique to replace the pruning strategy in the branch-and-bound algorithm, a widely-used algorithm for mixed-integer wireless resource allocation problems with exponential complexity in the worst case. On the other hand, ML has also been used to develop novel low-complexity heuristic algorithms. For example, the authors in [9] and [10] have proposed ML-based link scheduling algorithms for device-to-device networks by using deep neural networks (DNNs) and the graph embedding technique, respectively.

Amongst other ML techniques, the graph neural network (GNN) recently attracts much attention from both the academia and the industry. As the name implies, GNN is a kind of neural network model especially proposed for graph data [14, 15]. It can learn the representation of an input graph in the node/graph level via message passing between nodes. Because of its satisfactory performance and good interpretability, GNN has become a widely applied graph analytical tool in various fields, such as computer vision [16], traffic engineering [17], and chemical industry [18]. By observations, GNN has several properties that highly match with the characteristics/requirements of the applications in wireless communication systems. First, GNN can efficiently exploit the graph data for different learning tasks while wireless networks can be naturally modeled as graphs, where nodes and edges correspond to wireless devices and links, respectively. Secondly, GNN can process input graphs of different sizes. This generalization ability is in accord with the dynamic nature of wireless communication systems. Finally, after a well-trained GNN model is obtained111The training process of the GNN can be conducted in either centralized or decentralized manner by using different training techniques. Since using a well-trained GNN model, i.e., the inference stage of the GNN, is the focus of this paper, we assume that a well-trained GNN model is available throughout this paper., it can be used in a decentralized manner for inference to achieve decentralized management/control. Meanwhile, decentralized control and resource management often serves as an appealing alternative especially for large-scale wireless communication systems [47, 48, 49], which does not depend on the centralized controller, and thus avoiding concurrent communication, possibly severe traffic jam, the computation limitation, low scalability and flexibility, and single point of failure. Note that most standard neural networks widely used in existing works, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), requires central processing during the inference stage.

To employ the inference stage of a well-trained GNN model decentrally in wireless communication systems, information exchanges among neighboring nodes through wireless channels are inevitable. However, wireless transmission over noisy and fading channels is generally imperfect, which leads to stochastic errors in received signals, and eventually decreases the prediction accuracy and deteriorates the robustness of the GNN model. This is the main bottleneck concerning decentralized inference with GNN in wireless systems. Therefore, the goal of this paper is to analyze and enhance the robustness of the decentralized GNN during the inference stage in different wireless communication systems, making the prediction results not only accurate but also robust to transmission errors.

1.1 Related Work

1.1.1 Related Work in Machine Learning Field

GNNs, as the generalization of traditional DNNs to the graph data, inherit both advantages and disadvantages of DNNs. Specifically, GNNs have powerful representation ability, but are vulnerable to adversarial attacks during the inference stage222Adversarial attacks can also happen during the training stage of GNNs, which is out of the scope of this paper.. Note that adversarial attacks refer to the manually designed perturbations on the node/edge features or the topology of the graph data, which aim at fooling GNNs to get incorrect predictions. Therefore, there exist a surge of works in the ML field about enhancing the robustness of GNNs against adversarial attacks during the inference stage [19, 20, 21, 22, 23, 24, 25]. Existing adversarial defense methods can be categorized into three types [19]: (i) adversarial training that injects adversarial samples into the training set such that the GNN model can correctly predict the label of testing samples with adversarial attacks [20, 21], (ii) adversary detection that explores the intrinsic differences of adversarial edges/nodes and then cleans edges/nodes to get correct predictions [22, 23], and (iii) model improvement that focuses on improving the GNN model itself to make it robust against the adversarial attacks [24, 25]. There also exist some works that theoretically analyze the robustness of GNNs under adversarial attacks [26, 27]. However, existing adversarial attacks in literature are manually designed based on certain criteria, which are quite different from the random and stochastic errors caused by imperfect wireless transmission. Therefore, all aforementioned works about defending adversarial attacks may not be directly applied to the decentralized GNN in wireless communication systems. This motivates us to study and enhance the robustness of the decentralized GNN in wireless communication systems.

1.1.2 Related Work in Wireless Communication Field

In the wireless communication field, related works can be divided into two categories. The first category of works focus on using GNNs for different problems in wireless communication systems, such as link scheduling [10], power allocation [28, 29] and user association [30]. In these works, GNNs are generally used in the centralized manner. Recently, some works have used GNNs for decentralized control [31, 32, 33]. However, they generally assume perfect information exchanges between nodes. In contrast, the second category of works examine how wireless transmission affects ML performances [34, 35, 36, 37, 38, 39]. To be more specific, they focus on the training process of ML models and analyze the convergence performance of training algorithms, such as the stochastic gradient descent (SGD) algorithm, over noisy wireless channels. Based on the analysis, resource management algorithms [35, 36], learning parameter setting methods [37, 38], as well as scheduling mechanisms [39] are proposed to achieve different goals, such as trade-off between communication and computation resources, faster convergence, or better ML performance. These existing methods are also applicable to the decentralized training of the GNN in wireless communication systems. However, the characteristics of the training and inference stages of ML are very different. Specifically, training an ML model using algorithms, such as SGD, is a stochastic process, which is inherently tolerant of noisy data. On the contrary, using an ML model for prediction is a definite process and is more vulnerable to noise. Therefore, these aforementioned works are not applicable in our considered problem, which motivates the study in this paper.

1.2 Contributions and Outline

As far as we know, we are among the first to study robust decentralized inference with GNNs in wireless communication systems. To summarize, our main contributions are as follows.

-

•

We formulate and solve a robustness verification problem to check whether the predictions of the decentralized GNN binary classifier in wireless communication systems are robust.

-

•

We analyze the performance of the decentralized GNN binary classifier in both uncoded and coded communication systems by examining the robustness verification problem.

-

•

We develop two novel retransmission mechanisms based on the combining technique to deal with the impairments of wireless transmission and enhance the robustness of the decentralized GNN binary classifier in uncoded and coded communication systems, respectively.

-

•

We conduct extensive simulations through which we reveal the effectiveness of our proposed retransmission mechanisms and shed lights on practical implementation.

The rest of this paper is organized as follows. In Section 2, we give a brief overview of GNNs. In Section 3, we introduce the binary classification problem in wireless communication systems and discuss a corresponding GNN binary classifier as well as its decentralized implementation. In Section 4, we formulate and solve the robustness verification problem. Based on this, we analyze and enhance the robustness of the decentralized GNN binary classifier in uncoded and coded communication systems in Sections 5 and 6, respectively. Extensive testing results are presented in Section 7. Finally, we conclude the paper and provide directions for future work in Section 8.

1.3 Notations

Throughout this paper, vectors are denoted in bold lower-case letters, such as , while matrices are denoted in bold upper-case letters, such as . Moreover, we use to denote the -th entry in vector and to denote the entry in the -th row and -th column of matrix . We also use and to denote the -th row and the -th column in , respectively. Given two sets, and , corresponds to a sliced matrix from , which contains the rows corresponding to the indices in and the columns corresponding to the indices in . Furthermore, given two matrices and , if they have the same number of rows, we can stack them in column and the column-stacking result is denoted as . Similarly, if they have the same number of columns, we can stack them in row and the row-stacking result is denoted as . For example, assuming that the dimensions of and are both , then we have

2 Overview of Graph Neural Networks

GNNs, to some extent, are inspired by the success of CNNs that reduce the number of parameters in fully-connected DNNs with convolution filters. However, the convolution operation in CNNs only works for grid-like image data over the Euclidean space. Therefore, GNNs are proposed by redefining the convolution operation for graphs [14, 15]. To discuss the typical architecture of GNNs, we consider a graph with the node set and the edge set . The goal of GNNs is to learn a state embedding vector for each node by taking the graph adjacency matrix, node features, and edge features (if available) as inputs. Note that is a hyperparameter called hidden state dimension whose value is manually set. Then the state embedding vector is used to generate the output of node , i.e., . Generally, a GNN is constructed with a sequence of layers. In the -th layer, the hidden state of node , denoted as , is first updated by collecting the neighborhood information as follows

| (1) |

where is called the local transition function in the -th layer. Note that is the same for all nodes in and generally realized through a multi-layer perception (MLP), whose learning parameters are denoted as . Meanwhile, , , are the features of node , its edges, and its neighbors, respectively. Also, denotes the hidden states of the neighbors of node in the -th layer. After the update in (1), the output of node in the -th layer, denoted as , is computed as

| (2) |

where is called the local output function in the -th layer, which defines how the output of each node is produced. Similar to , is again the same for all nodes in and generally realized through an MLP with learning parameters, 333Eq. (1) and (2) are general formulas of the local transition and output functions. The specific design of these two functions can be different in various GNN models by omitting some input components, adding extra components, choosing different techniques to combine input components, or selecting different MLP structures..

As mentioned above, GNN has many special properties that match the characteristics and requirements of wireless communication systems. One important property is that the inference stage of the GNN can be naturally implemented in a decentralized manner. Specifically, the operations in (1) and (2) only need the information of node (, , and ) and its one-hop neighbors ( and ), where the one-hop neighbors’ information can be obtained by local information exchanges. In other words, operations in each layer of the GNN are entirely local. However, in wireless communication systems, the information exchanges among neighbors are achieved through noisy and fading channels, whose impairments lead to imperfect transmission and deteriorate the robustness of decentralized predicted results. This is the main bottleneck for the decentralized inference with the GNN. Analyzing the performance of the decentralized GNN in different wireless communication systems and overcoming the above obstacles to achieve robust predictions are the focus of this paper.

3 System Model and GNN Binary Classifier

For simplicity, we focus on a decentralized GNN binary classifier in the subsequent discussion of this paper444The proposed analysis and retransmission mechanism can be generalized to more complicated problems by modifying the GNN structure and the formulation of the robustness verification problem accordingly.. In this section, we first introduce the binary classification problem in wireless communication systems. Then, we discuss the architecture of a GNN binary classifier for this problem as well as its decentralized implementation in wireless communication systems.

3.1 Binary Classification Problem

As shown in Fig. 1, we consider a binary classification problem in the wireless communication system that can be widely found in literature [6, 9, 10, 49] and modeled as a graph , with the node set and the edge set . Specifically, this system has nodes. These nodes can be base stations, access points, edge servers, or mobile devices according to specific applications. To enable local computation, we assume that each device is equipped with sufficient storage and computing capability. Meanwhile, two nodes are neighbors and connected by an edge if they can communicate with each other through the wireless channel. In this paper, we assume that each node knows its neighbors in the system, which can be achieved by neighbor discovery algorithms such as [50]. Moreover, each node in can be classified into two classes and correspondingly labeled as 1 or -1. The label refers to binary indicators in wireless communication systems, such as the power allocation indicator in the power control problem [6], the link activation indicator in the wireless link scheduling problem [9], and the subcarrier assignment indicator for the subcarrier assignment problem [49]. Furthermore, each node keeps a vector that includes the needed internal characteristics for the classification task, such as the transmit power limit or the QoS requirement for the power control problem [6]. For the sake of storage and digital communication, this vector is quantized into a binary node feature vector, , where is called the node feature dimension and is manually set based on the requirements of the quantization precision. In this paper, we do not take the detailed quantization process into consideration and assume that is already available at each node.

3.2 Wireless Communication Model

Assume that node and node are neighbors. Then, when node transmits a -bit signal to node , the transmission takes time slots that is defined as a symbol block. Furthermore, we assume that the transmission of is not only contaminated by the additive white Gaussian noise (AWGN) that is independent and identically distributed (i.i.d.) over different time slots, but also impaired by the pathloss, the shadowing, and the block-Rayleigh fading, which indicates that the channel gain remains static within a symbol block but is i.i.d over different blocks. According to [39], we further assume that the receiver can estimate the signal-to-interference-plus-noise ratio (SINR) of the received signal from each neighbor .

Two kinds of communication systems, uncoded and coded communication systems, are considered in this paper. If the transmitted signal from node , i.e., , is uncoded, node can use to estimate the bit error rate (BER), , of the received signal. On the other hand, if is coded before transmission, we assume that it can be correctly decoded by node if , where is the preset transmit data rate of node . Otherwise, the received signal is said to be in outage and the whole packet will be discarded.

3.3 Architecture of GNN Binary Classifier

The aforementioned binary classification problem can be solved by a GNN binary classifier. The operations of the GNN binary classifier are as follows. First, the hidden states of all nodes are updated as

| (3) |

where is the node feature matrix, which is obtained by row-stacking node features of all nodes in . Also, is the matrix of learning parameters, is the ReLU function, and is the embedding output matrix that is obtained by row-stacking the embedding output vectors of all nodes, i.e., . Furthermore, is called graph filter, which defines how to integrate neighborhood information. It is generally a variant of the graph adjacency matrix . There are three popular graph filters for GNNs as follows

-

•

Unnormalized graph filters [40]: , where is the identity matrix.

-

•

Normalized graph filters [41]: , where is the degree matrix.

-

•

Random walk graph filters [42]: .

As mentioned in Section 3.1, the neighborhood information is available for each node. Therefore, the needed graph filter information can be assumed to be available for each node as well.

After the operation in (3), the hidden states of all nodes are input into a fully-connected layer for binary classification. The specific operation is given by

| (4) |

where is the predicted output vector of all nodes, with each entry indicating the probability that the corresponding node is labeled as 1. Also, is the sigmoid function, is the vector of learning weights, and is the bias. We use to denote the set of all learning parameters in the GNN binary classifier.

3.4 Decentralized Implementation of GNN Binary Classifier

As mentioned above, decentralized management/control is preferred in wireless communication systems. Therefore, in this part, we discuss the decentralized implementation of the above GNN binary classifier in the wireless communication system. As a premise, we assume that the GNN binary classifier is already well-trained and each node keeps a copy of it, i.e. , which indicates that the operations and parameters of the GNN model are available at each node. In the remaining part of this subsection, we introduce how each node uses to predict its label in a decentralized manner.

Note that the operations (3)-(5) of the GNN binary classifier introduced in the previous subsection are given in the centralized manner, where the graph filter and the node feature matrix of all the nodes are needed. To better illustrate the decentralized implementation of this GNN binary classifier, we need to manifest the operations in (3)-(5) for each single node . First, we denote the set of one-hop neighbors of node as . We further define the sliced graph filter, , as , which keeps the graph filter entries needed by node to integrate information from its neighbors. Similarly, we define the sliced node feature matrix, , as , which contains the node features of nodes in , i.e., . With these newly defined notations, the operations in (3)-(5) can be rewritten for node as

| (7) | ||||

| (8) |

| (9) |

where , is the embedding output vector of node , is the predicted output value of node , and is the binary predicted label of node . Thus, the overall operations at node is given by

| (10) |

From (10), the graph filter information, the node feature , and the neighbors’ node features are needed for node to get its predicted label. As mentioned above, the graph filter information and are already available at node . Given that can be obtained by information exchanges with its neighbors, the central unit is not needed and node can obtain its predicted label in a decentralized manner.

The specific process of the decentralized prediction for each node is as follows. First, node sends transmission requests to each node in . After receiving the request from node , each node in transmits its node feature to node through wireless channels as shown in Fig. 1. Given that is binary and has a length of , we utilize the binary phase shift keying (BPSK) modulation and the transmission of takes time slots or a symbol block. We denote the signal received by node from node as , whose SINR is denoted as . Due to imperfect wireless transmission, is generally not equal to . By row stacking for all nodes in , node gets an estimated sliced node feature matrix, . Then node inputs the graph filter information, , and into its local GNN copy . By the operations in (10), the decentrally predicted label of node is finally obtained as .

4 Robustness Verification Problem

As mentioned above, the received sliced node feature matrix, , is generally not equal to the true node feature matrix, , due to imperfect wireless transmission. It indicates a possibility that the predicted label of node , , is not robust. Therefore, we need to develop a methodology to verify whether a predicted label is robust before studying the performance of the decentralized GNN binary classifier in wireless communication systems. To this end, we investigate the robustness verification problem in this section.

4.1 Problem Formulation

Given that and are not available during the inference stage, we cannot directly compare with for robustness verification. However, for each received signal , its SINR is available at node , based on which the possible number of errors in can be estimated. Therefore, we introduce a new vector, , as the local error bound vector of node , where each entry represents the maximum number of tolerable errors in . Obviously, the value of is highly related to the specific communication model. In the remaining part of this section, we assume that the value of is available and more details about estimating the value of will be discussed in Section 5. Under this assumption, it is obvious that the true sliced node feature matrix, , must satisfy the following constraints

| (11) |

where means the L0 norm of the input vector. Therefore, we can search all possible matrices satisfying constraints in (11), and then pass them through the GNN model. If the predicted labels with all possible matrices are always the same as , then the true predicted label must be equal to . In this sense, is considered robust.

Accordingly, the robustness verification problem555The proposed “robustness verification problem” does not belong to the robust optimization field, where the objective and constraints are uncertain [43]. can be formulated as follows

| (12) |

subject to

| (12a) | |||

| (12b) |

where is the optimization variable. If , the predicted label is robust.

Remark 1: If , always has the same sign as and is also equal to due to the fact that the predicted label can only be 1 or -1.

4.2 Problem Transformation

Obviously, Problem (12) is intractable for two main reasons. On the one hand, it is a combinatorial optimization problem due to the constraint (12b). On the other hand, even if we relax the binary variables into continuous ones in , the optimization problem is still non-convex due to non-linear activation functions in the GNN binary classifier, i.e., the ReLU function and the sigmoid function. In this subsection, we transform Problem (12) into a more tractable form with different approximation and relaxation techniques.

4.2.1 Transformation on Sigmoid Function

According to the definition of the sigmoid function and the binary label transformation rule, the predicted label always has the same sign as . Therefore, Problem (12) can be simplified as

| (13) |

| (13a) |

where the non-linear sigmoid function is removed. Obviously, always has the same sign as . Therefore, by solving problem (13), we can also verify that the predicted label is robust if .

4.2.2 Approximation on ReLU Function

To deal with the non-linearity brought by the ReLU function, we adopt the linear approximation of the ReLU function proposed in [44].

First, we denote the input to the ReLU function in (13a) as and rewrite (13a) into

| (14) |

| (15) |

Then we try to bound the value of each entry in vector . Specifically, for , we can find its upper bound, , according to [26] as

| (16) |

| (17) | |||

where is a function that can find the -th largest entry in the input vector, denotes element-wise product, , and . The first two terms in (16) correspond to the value of if . Meanwhile, the last term in (16) denotes the maximal increase on the value of for any feasible constrained by (12a) and (12b), which can be explained as follows. First, the value of depends on the aggregation over all neighbors, which accounts for the summation over . Moreover, for each neighbor and each bit in , if (i) and , or (ii) and , flipping brings an increase on . These two conditions can be overall written as , which appears as the input of in (17). According to the constraint (12a), we can at most flip bits in . Therefore, we pick the top- possible increase to get the upper bound, which accounts for the summation over . Based on the analysis in [26], the upper bound defined in (16) and (17) is the tightest under constraints (12a) and (12b). Similarly, we can also find the tightest lower bound, , for as

| (18) |

| (19) | |||

After bounding as , we can rewrite or approximate the ReLU function in (15) based on the signs of and . Specifically, if and have the same signs, say or , the ReLU function is reduced into the following linear functions

| (20) |

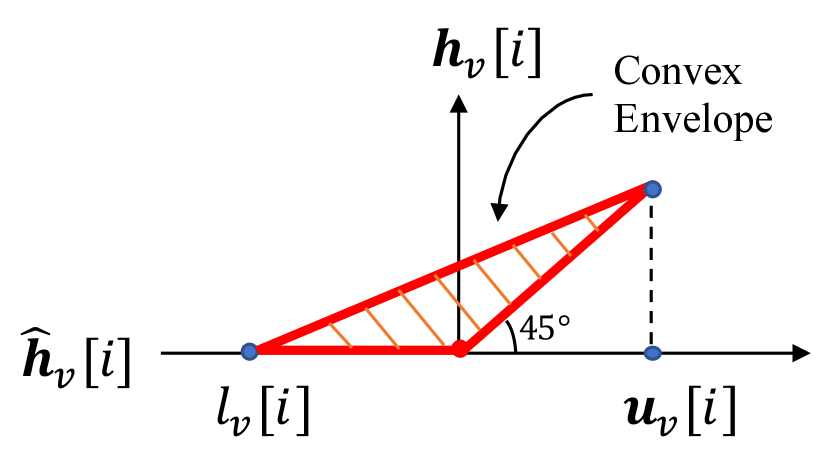

where and are the index sets of the entries in whose upper and lower bounds are both larger or smaller than 0, respectively. On the other hand, if , the ReLU function cannot be directly simplified. To avoid the non-linearity, we can replace it with its convex envelope proposed in [44] and the operation can be approximated as

| (21) |

where is the index set of the entries in whose upper and lower bounds differ in signs. A graphical illustration of the ReLU function’s convex envelope can be found in Fig. 2.

Based on the linear approximations in (20) and (21) for the ReLU function, Problem (13) can be transformed into

| (22) |

By observation, the feasible region of Problem (13) is a subset of that of Problem (22), which indicates that the minimum of the objective function in Problem (22) is a lower bound of that in Problem (13), i.e., . If , then . Therefore, by solving problem (22), we can also verify that the predicted label is robust if .

4.2.3 Relaxation on Binary Variables

Up to this point, Problem (22) is still intractable because is binary (see Eq. (12b)). Therefore, we relax it into the continuous variable between 0 and 1. Then Problem (22) is further relaxed as

| (23) |

| (23a) | |||

| (23b) |

where means the L1 norm of the input vector. It is obvious that Problem (23) is a linear programming problem, and the minimum of whose objective function is a lower bound of that of Problem (22), i.e., . Therefore, the predicted label is robust if . Note that the solution to Problem (23) is not equivalent to the original problem (12). However, we only focus on the sign instead of the value of the objective function. Therefore, the above series of approximation and relaxation meet our needs. By solving Problem (23), we will be able to rule out any non-robust predicted labels and thus guarantee robust predictions of the decentralized GNN binary classifier, which accords with the goal of this paper. In the following, we will solve Problem (23).

4.3 Dual Problem and Feasible Solution

As mentioned above, Problem (23) is a linear programming problem. Therefore, we can use duality theory to solve it. Specifically, the dual problem of Problem (23) is given by

| (24) | |||

subject to

| (24a) |

| (24b) |

| (24c) |

| (24d) |

| (24e) |

| (24f) |

where indicates the trace of the input matrix. The proof is available in Appendix A.

Note that the above dual problem has the similar form to the problems in [26] and [44]. Following these two works, we can easily find a good suboptimal solution for Problem (24). As mentioned above, since we only focus on whether the objective function in Problem (24) is positive, it is not necessary to get its actual value. Therefore, instead of optimizing over all possible values of , we can set as

| (27) |

According to the simulation results in [26], the choice in (27) is a good suboptimal solution of the original problem (12) and can efficiently help us verify the robustness of the predicted labels. After the value of is fixed, , , and all become constant. Then, Problem (24) is rewritten as

| (28) |

subject to

| (28a) |

| const | ||||

| (28b) |

Again, by using the duality theory, the optimal solution for is given by

| (29) |

whose detailed proof can be found in Appendix B. With the closed-form solutions given in (27) and (29), the value of the objective function in Problem (24) denoted as can be obtained with low complexity. If , the predicted label is robust.

5 Robustness Verification in Wireless Communication Systems

In the sections above, we have introduced the decentralized GNN binary classifier and formulated a robustness verification problem to verify whether its predicted label is robust. Note that the premise of employing the robustness verification problem is that the local error bound vector, , is available, which can be estimated from received SINRs and depends on specific communication systems. Therefore, in this section, we study how to obtain and justify the robustness of the decentralized GNN binary classifier in uncoded and coded communication systems, respectively.

5.1 Robustness Verification in Uncoded Communication Systems

In uncoded communication systems, node is able to estimate the BER, , of each received signal from its corresponding SINR according to Section 3.2. Given that the BPSK modulation is adopted, the BER of the received signal is given by [45]

| (30) |

where is the Q-function defined as 666Our analysis can be generalized to other modulation modes by changing (30) accordingly.. By i.i.d. assumption for the channel impairment, follows the Binomial distribution, . On the other hand, the error-tolerance of the decentralized GNN binary classifier is monotonic. Specifically, if it is robust to a received signal with more errors (large ), it is also robust to a received signal with fewer errors (small ). Based on the statistical characteristics of and the error-tolerance monotonicity, we propose a new metric, robustness probability, to measure the robustness of the decentralized GNN binary classifier in uncoded communication systems.

As the name implies, the robustness probability of node , denoted as , indicates the probability that is robust with the received signals . To give the formula of , we further introduce a new variable , which is the solution of the following optimization problem

| (31) |

subject to

| (31a) |

where is defined at the end of Section 4.3. Based on the error-tolerance monotonicity, Problem (31) can be solved by the bisection algorithm. From the definition of , if holds for all neighbors of node , is robust. Therefore, we define as . Combined with the fact that , the robustness probability of node is given by

| (32) |

In practice, a specific robustness requirement is generally imposed on node . In other words, the robustness probability of node is supposed to be no smaller than a given target robustness probability, . If , the robustness requirement is satisfied. Otherwise, retransmission is needed to enhance the prediction robustness, which will be further discussed in Section 6.1.

5.2 Robustness Verification in Coded Communication Systems

As mentioned in Section 3.2, in coded communication systems, a transmitted signal can be correctly decoded by the receiver if it is not in outage. Therefore, and if . Otherwise, the whole transmission packet corresponding to is discarded. In this case, we estimate as a zero vector for the computation of 777If outages happen, the corresponding signal needs to be estimated, even with random guess, to facilitate the robustness verification process. and the corresponding maximum number of tolerable errors is , i.e., . Overall, the value of in coded communication systems is given by

| (35) |

After getting based on (35), we solve the robustness verification problem (12) and check whether . If it is, is robust and the robustness indicator of node is set as 1, where the robustness indicator is a binary metric to measure the robustness of the decentralized GNN binary classifier in coded communication systems. Otherwise, the robustness indicator of node is set as 0 and retransmission is needed, which will be discussed in Section 6.2.

6 Retransmission Mechanism for Robustness Enhancement

When the given robustness requirement is not satisfied, retransmission is needed to enhance the prediction robustness. As mentioned in Section 3.2, the channel gain remains static within a symbol block but is i.i.d over different blocks. The channel diversity over different blocks can be exploited by utilizing the maximal-ratio combining (MRC) technique. Specifically, if is transmitted for rounds (one initial transmission and retransmissions) from node to node , all received copies of can be combined by the MRC technique at node and a received signal denoted as is obtained. The effective received SINR of is

| (36) |

where is the SINR of the signal received by node from node for the -th transmission and is assumed known at node according to Section 3.2. Obviously, retransmission can contribute to decreasing the BER and the outage probability for both uncoded and coded communication systems. This further enhances the robustness of the decentralized GNN binary classifier.

However, traditional retransmission mechanisms aim at reliable transmission and will come to an end only when the target BER is met and no neighbor is in outage for uncoded and coded communication systems, respectively. This will inevitably induce high communication overhead especially for dense networks. To alleviate communication burden of achieving robust predictions, in the following, we adopt the MRC technique to re-design retransmission mechanisms with novel retransmission criteria and stopping rules for the decentralized GNN binary classifier in uncoded and coded communication systems, respectively.

6.1 Retransmission in Uncoded Communication Systems

In this part, we develop the retransmission mechanism when the predicted label of node does not satisfy the robustness requirement, i.e., , in uncoded communication systems. First, we need to develop a criterion to check whether a neighbor of node needs to do retransmission. According to (30), node can estimate the BER of each received signal based on the received SINR. Generally, when is large, the probability of will be small, which further leads to a small robustness probability of node . Obviously, we need to find out those received signals with large BER and ask the corresponding neighbors to do retransmission. To achieve this goal, we first introduce a new variable, , which is called BER bound and is the solution to the following equation

| (37) |

Based on the above definition, can be interpreted as the maximum BER of each received signal to guarantee that the robustness probability of is no smaller than . In this way, we can use as a threshold to check whether retransmission is needed. Specifically, if , node needs to retransmit to node .

With the above retransmission criterion and the MRC technique, the retransmission mechanism for the decentralized GNN binary classifier in uncoded communication systems is summarized as Algorithm 1 and described as follows. After the initial transmission and prediction, if , node requests each neighbor with to retransmit their node features. By using the MRC technique, node coherently combines the received copies for each retransmitted signal, computes their corresponding effective SINR as (36), and updates the received sliced feature matrix . Then, node re-predicts its label and re-computes the robustness probability . The whole transmission process ends when finally becomes no smaller than .

6.2 Retransmission in Coded Communication Systems

In this part, we develop the retransmission mechanism when the predicted label of node is not robust, i.e., , in coded communication systems. Obviously, not all received signals but only those in outage need to be retransmitted. In other words, if , node needs to retransmit to node . By using the MRC technique, all received copies of are coherently combined and the effective SINR is given by (36), which is used to check whether the effective received signal is in outage. Specifically, after transmissions, if , it indicates that and . Otherwise, and . In this way, and are updated, which enables node to re-predict its label and re-solve Problem (12) to check the robustness of the newly-obtained predicted label. If it is robust, the whole transmission process ends. The retransmission mechanism for the decentralized GNN binary classifier in coded communication systems is summarized as Algorithm 2.

7 Simulation Results

In this section, we use simulation results to show the performance of the decentralized GNN binary classifier in wireless communication systems and validate the effectiveness of the proposed retransmission mechanisms. We also pay attention to the impact of some key parameters about the network design and retransmission mechanisms to shed lights on practical implementation.

7.1 Simulation Setup

We conduct the simulation on the synthetic random geometric graph data. To be more specific, we consider a 2,000 m by 2,000 m two-dimensional square area with nodes, which are uniformly distributed in the area. Moreover, we set the communication radius as 500 m, which indicates that two nodes are connected if their distance is smaller than 500 m. Furthermore, each graph corresponds to a binary classification problem in a wireless communication system and a specific GNN binary classifier is generated to solve it. Following [46], each entry of the parameters in set is independently generated from the Gaussian distribution . In a similar way, for all nodes in the graph, each entry of their node features is independently generated by following the Bernoulli distribution whose successful probability is 0.3. After that, the true labels of all nodes are obtained by using the GNN binary classifier in (6), which suggests that the misclassification rate will be 0 if the wireless transmission is perfect. For simplicity, we assume that all nodes have the same target robustness probability, and transmit at the same data rate and power, i.e., . And we do not consider interference among neighbors in the following simulation. Unless otherwise stated, the values of these three parameters are preset as 80%, 1 bit/s/Hz, and 0.1 w, respectively. Throughout this section, presented results are the average performance over all nodes in 200 testing graphs. Our simulation parameters are summarized in Table I.

| Parameter | Value | ||

|---|---|---|---|

| Square Area | 2,000 m 2,000 m | ||

| Communication Radius | 500 m | ||

| Bandwidth | 10 MHz | ||

| Noise Spectral Density | -174 dBm/Hz | ||

| Path Loss Model | 128.1+37.6log(d[km]) | ||

| Shadowing Standard Deviation, | 8 dB | ||

|

80% | ||

|

1 bit/s/Hz | ||

|

0.1 w | ||

|

32 | ||

|

32 | ||

| Graph Filter, |

|

7.2 Simulation Results in Uncoded Communication Systems

7.2.1 The Performance without Retransmission

First, we test the performance of the decentralized GNN binary classifier in uncoded communication systems without retransmission. Specifically, we pay attention to the misclassification rate and the percentage of robust nodes without retransmission. The results are summarized in Figs. 3(a) and 3(b), where “w/o” is the abbreviation for “without”. From the figures, it can be seen that when the transmit power is small, the imperfect transmission would lead to about 11%-13% incorrect predictions. With the increase of the transmit power, the received SINR increases, which further decreases the BER of the received signal. Therefore, the misclassification rate decreases and the percentage of robust nodes increases. Moreover, both metrics change at a decreasing speed when the transmit power increases, indicating that increasing power only leads to a limited performance enhancement for the decentralized GNN binary classifier. Without other mechanisms, it takes extremely high power consumption to achieve the robustness requirement, which suggests the necessity of the retransmission mechanism especially for power-limited systems.

| 0.1 | 0.5 | 1.0 | 1.5 | 2.0 | |

|---|---|---|---|---|---|

| Uncoded | 1.52 | 1.47 | 1.42 | 1.42 | 1.42 |

| Coded | 2.02 | 2.00 | 1.94 | 1.99 | 1.59 |

7.2.2 The Performance with Retransmission

To show the performance of the decentralized GNN in uncoded communication systems with retransmission, we focus on the misclassification rate with retransmission and the number of average needed transmission rounds to achieve the robustness requirement. The results are summarized in Figs. 3(a) and 3(c), where “w/” is the abbreviation for “with”. From Fig. 3(a), after each node achieving the preset robustness requirement , the misclassification rate is around 0, which suggests that the retransmission mechanism proposed in Section 6.1 can efficiently decrease incorrect predictions and enhance the prediction robustness. Furthermore, Fig. 3(c) suggests that the number of average needed transmission rounds to achieve the robustness requirement decreases with the transmit power. The reason has been discussed in Section 7.2.1. Larger transmit powers bring about fewer errors, and therefore fewer transmission rounds are needed to achieve the preset . Undoubtedly, both metrics are closely related to the value of , which will be further discussed later.

To further validate the advantage of the proposed retransmission mechanism, we compare it with the traditional retransmission mechanism where the retransmission will stop until the BER of the received signals from all neighbors are smaller than a given threshold, which is manually set in advance. To achieve a fair comparison, the BER threshold of the traditional retransmission mechanism should be set to be comparable with the proposed retransmission mechanism, i.e., . However the value of changes for each transmission. We notice that its value varies from to during the above simulation. Therefore, to be conservative about our performance gain, we set the BER threshold of the traditional retransmission mechanism as in the following simulation. Specifically, we pay attention to the gain in terms of the number of average needed transmission rounds, #Transmission, and the results are summarized in Table II, where refers to the ratio between the #Transmission of the traditional and the proposed retransmission mechanisms. The results suggest that our proposed retransmission mechanism is superior to the traditional retransmission mechanism especially when the transmit power is small.

7.2.3 The Impact of Target Robustness Probability

In uncoded communication systems, the performance highly depends on the value of the target robustness probability as mentioned above. Therefore, in this part, we study the impact of with fixed transmit power w and the simulation results are summarized in Fig. 4. Obviously, with the increase of , the misclassification rate with retransmission decreases and finally converges to 0 (for ) as suggested in Fig. 4(a). Also, fewer nodes can achieve the robustness requirement without retransmission as indicated by Fig. 4(b) and eventually more transmission rounds are needed as shown in Fig. 4(c). Furthermore, it is observed that all three metrics change very sharply when increasing from 0 to , which indicates that a low robustness requirement already helps a lot to enhance the prediction accuracy. Another important observation is that the number of average needed transmission rounds increases very quickly when . Given that the misclassification rate converges to 0 when reaches 80%, there is no need to set larger than 80% for robust predictions, which does not enhance the prediction accuracy but leads to extra communication overhead. Obviously, the threshold value (80% in this test setting) varies with different applications, but the key insight concerning the trade-off between prediction accuracy and communication overhead when selecting the value of always holds. Indeed, it should be reasonably chosen according to the specific accuracy requirement in practice.

7.3 Simulation Results in Coded Communication Systems

7.3.1 The Performance without Retransmission

Similar to Section 7.2.1, we use the misclassification rate and the percentage of robust nodes without retransmission as two metrics to show the performance of the decentralized GNN binary classifier in coded communication systems without retransmission. The results are summarized in Figs. 5(a) and 5(b). From the figures, it can be seen that the impact of the transmit power is similar to that in uncoded systems. However, by comparing the simulation results in Figs. 3 and 5, with the same transmit power, the decentralized GNN in coded communication systems has a smaller misclassification rate and a larger percentage of robust nodes, which indicates the benefits of coding before transmission.

7.3.2 The Performance with Retransmission

In this part, we study the performance of the decentralized GNN binary classifier in coded systems with retransmission. Different from uncoded communication systems where retransmission can only guarantee that the predicted label is robust with a probability , the predicted label in coded communication systems with retransmission is definitely robust, i.e., equals the true label. This indicates that the misclassification rate with retransmission is always 0 in coded communication systems. Therefore, we only use the number of average needed transmission rounds to achieve robustness as a metric in this part and the results are summarized in Fig. 5(c). Meanwhile, the comparison with the traditional retransmission mechanism where the retransmission will stop until no neighbor is in outage is presented in Table II.

Similar to the analysis in Section 7.2.2, the number of average needed transmission rounds decreases with the transmit power and is only 50% of that with the traditional retransmission mechanism. Moreover, with the same transmit power, coded communication systems need fewer transmission rounds to achieve robustness requirements than uncoded communication systems. This again validates the superiority of coded systems in terms of prediction robustness.

7.3.3 The Impact of Transmit Data Rate

Transmit data rate is an important parameter in coded communication systems, which directly influences the outage probability and the time consumption. Therefore, we study the impact of transmit data rate on the robustness of coded systems. The results are summarized in Fig. 6, where the effective data rate per transmission round in Fig. 6(d) is defined as the ratio between the transmit data rate and the number of needed transmission rounds to achieve robustness. Obviously, it is negatively related to the time consumption of the decentralized GNN binary classifier to achieve robustness (Algorithm 2).

From Figs. 6(a) and 6(b), larger transmit data rate would deteriorate the prediction robustness (larger misclassification rates and smaller percentages of robust nodes without retransmission). With the same preset transmit power (0.1 w), larger transmit data rate would lead to higher outage probability of received signals. Accordingly, more transmission rounds are needed to achieve robustness with larger transmit data rate as shown in Fig. 6(c). Furthermore, the effective transmit data rate per transmission round first increases and then decreases with the transmit data rate as can be seen in Fig. 6(d). From this figure, a suitable value of (that maximizes the effective data rate) should be 3.5 bit/s/Hz for this test setting, which is variant in different applications. The results indicate that the selection of the transmit data rate also involves various trade-offs (similar to the conclusion in Section 7.2.3 about ), which should be reasonably chosen for practical implementation.

7.4 The Impact of Network Parameters

To get more insights about the wireless network design and practical implementation, we study the impact of two important network parameters, i.e., the node density and the node feature dimension, in this section.

7.4.1 The Impact of Node Density

First, we study the impact of the node density on the decentralized GNN binary classifier in wireless communication systems. To this end, we conduct simulation on graphs with different numbers of nodes located in a fixed area size (2,000m 2,000m). The results are summarized in Figs. 3, 4, 5, and 6. From these figures, increasing the node density would deteriorate the performance of the decentralized GNN binary classifier. Specifically, in both uncoded and coded communication systems, larger node densities lead to larger misclassification rates and smaller percentages of robust nodes without retransmission. When retransmission mechanism is used, the predicted results must satisfy the target robustness probability. Therefore, the misclassification rate with retransmission are always around 0 regardless of the node density and the corresponding curves are close to each other. However the corresponding communication overhead is different. Specifically, more transmission rounds are needed for networks with larger node densities to achieve a given robustness requirement. This can be explained as follows. The node density directly influences the number of neighbors of each node in the graph, i.e., node degree . As shown in Table III, larger node densities correspond to larger node degrees. For uncoded communication systems, larger node degrees lead to smaller robustness probability for given SINR or BER of the received signal based on (32). On the other hand, for coded communication systems, larger node degrees may increase the number of neighbors in outage, which deteriorates the prediction robustness. Overall, networks with small node densities are preferred in terms of prediction robustness. Nowadays, however, ultra-dense networks (UDNs) are prevalent, where neighborhood sampling technique can be utilized to enhance the prediction robustness of the decentralized GNN.

| Number of Nodes, | 50 | 100 | 150 | 200 |

|---|---|---|---|---|

| Average Node Degree | 7.61 | 15.56 | 23.33 | 31.15 |

7.4.2 The Impact of Node Feature Dimension

Besides the node density mentioned above, the node feature dimension is also a key parameter for the practical network design, which directly corresponds to the length of the packet to be transmitted. In the following, we study the impact of the node feature dimension on the decentralized GNN binary classifier in wireless communication systems with . The simulation results are summarized in Figs. 7 and 8.

As can be seen, the impact of the node feature dimension for uncoded and coded communication systems are different. From Fig. 7, larger node feature dimensions deteriorate the robustness of the decentralized GNN binary classifier in uncoded communication systems (larger misclassification rates and smaller percentages of robust nodes without retransmission). Again, this can be explained based on the definition of robustness probability in (32). From (32), when SINR or BER of the received signal is fixed, increasing leads to smaller robustness probability. Accordingly, more transmission rounds are needed for networks with larger node feature dimensions to achieve a given robustness requirement, as depicted in Fig. 7(c).

However, the robustness of the decentralized GNN binary classifier in coded communication systems does not change with . The reason is that packet-level errors are considered in coded communication systems and the length of each packet does not matter (so long as its transmission can be completed within the coherence time). Based on the above simulation results, for uncoded communication systems, we need to constraint the node feature dimension. As mentioned in Section 3.1, the node feature dimension is related to the quantization precision. Higher-precision quantization on the internal characteristics leads to larger node feature dimension but alleviates the difficulty of learning a good GNN model. Therefore, there exists a trade-off between the training difficulty and the prediction robustness for the decentralized GNN binary classifier in uncoded communication systems.

7.5 Comparison between Uncoded and Coded Communication Systems

Up to this point, we have analyzed and tested the performance of the decentralized GNN binary classifier and the corresponding retransmission mechanisms in both uncoded and coded communication systems. As a summary of the discussion in this section, we highlight some major differences between the two communication systems in Table IV. Overall speaking, coded communication systems are superior to uncoded communication systems for the robust implementation of the GNN binary classifier. Undoubtedly, transmission with coded signals induces overhead in other aspects, such as the decoding delay. Choosing specific transmission mode is important for specific wireless applications.

| System Model | Unoded | Coded | ||

|---|---|---|---|---|

| Error | Bit-level (BER) | Packet-level (Outage) | ||

| Robustness Metric | Robustness Probability | Robustness Indicator | ||

|

Larger | Smaller | ||

|

Larger | Smaller | ||

|

More | Fewer | ||

|

Limited | Strong |

8 Conclusion

GNN is a potentially powerful tool for a wide range of applications in wireless communication systems. Different from other widely used neural network models, the inference stage of the GNN can be naturally used in a decentralized manner with information exchanges among neighbors over wireless channels. However, wireless transmission is generally imperfect due to the noise and fading impairments, which is the main bottleneck of the decentralized GNN. Therefore, by using the GNN binary classifier as an example, this paper analyzes the performance the decentralized GNN during the inference stage in wireless communication systems and develops retransmission mechanisms to enhance the prediction robustness. We first develop a methodology to check whether the predicted labels are robust. Then we analyze the performance of the decentralized GNN binary classifier in both uncoded and coded communication systems. Furthermore, we develop novel retransmission mechanisms to enhance the prediction robustness in the above two communication systems. To verify our analysis and the effectiveness of the proposed retransmission mechanisms, we conduct simulations on synthetic graph data. The results suggest that our proposed retransmission mechanisms efficiently enhance the robustness of the decentralized GNN. We also provide some insights into related wireless networks design and practical implementation. The proposed analysis, retransmission mechanisms, and corresponding insights are applicable to any GNN based decentralized binary control/management in wireless communication systems.

As far as we know, we are among the first to study the robust decentralized inference with GNNs over noisy wireless channels. The current work represents a first step towards this goal and some open problems still need further investigation. First, this paper focuses on a single-layer GNN binary classifier, where nodes only need to exchange node features with each other. For multi-layer GNNs, however, hidden states at different layers also need to be shared among neighbors through wireless channels, for which the synchronization among neighbors cannot be neglected. Therefore, designing effective synchronization protocol is an important future direction to extend the analysis in this paper. Furthermore, we assume the existence of a well-trained GNN model and the accuracy of the decentralized GNN with perfect transmission is 100% to simplify the analysis. However, this assumption may not hold in practice. Besides imperfect transmission, incorrect predictions may be caused by the limited generalizability of the GNN model on unseen samples as well. How to analyze the robustness of the decentralized GNN with limited generalizability to testing samples is worth future investigation. Moreover, all unqualified neighbors are asked to re-transmit in our proposed retransmission mechanism and the robustness is re-checked after all retransmission is done. In practice, the retransmission is generally done in serial. It is possible that after retransmission from some (but not all) unqualified neighbors, the robustness can be achieved and the order of retransmission may matter. Investigating reasonable order of retransmission to reduce the communication overhead is an interesting topic. Finally, we only consider uncoded and simplified coded communication systems in this paper for theoretical analysis. However, error correction codes, cyclic redundancy check or other more complicated coding mechanisms which can help detect and correct errors are usually adopted in practical systems. These mechanisms definitely influence the robustness of the decentralized GNN. One can also consider other techniques, such as the joint source-channel coding and the error correction mechanism, instead of the retransmission mechanism to further enhance the robustness of the decentralized GNN in wireless communication systems.

References

- [1] W. Saad, M. Bennis, and M. Chen, “A vision of 6G wireless systems: Applications, trends, technologies, and open research problems,” IEEE Netw., vol. 34, no. 3, pp. 134–142, May/Jun. 2020.

- [2] T. O’Shea and J. Hoydis, “An introduction to deep learning for the physical layer,” IEEE Trans. Cogn. Commun. Netw., vol. 3, no. 4, pp. 563–575, Dec. 2017.

- [3] F. Liang et.al., “An iterative BP-CNN architecture for channel decoding,” IEEE J. Sel. Topics Signal Process., vol. 12, no. 1, pp. 144–159, Feb. 2018.

- [4] Z. Qin et.al., “Deep learning in physical layer communications,” IEEE Wireless Commun., vol. 26, no. 2, pp. 93–99, Apr. 2019.

- [5] Q. Hu et.al., “Iterative algorithm induced deep-unfolding neural networks: Precoding design for multiuser MIMO systems,” IEEE Trans. Wireless Commun., vol. 20, no. 2, pp. 1394–1410, Feb. 2021.

- [6] H. Sun et.al., “Learning to optimize: Training deep neural networks for interference management,” IEEE Trans. Signal Process., vol. 66, no. 20, pp. 5438–5453, Oct. 2018.

- [7] Y. Shen et.al., “LORM: Learning to optimize for resource management in wireless networks with few training samples,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 665–679, Jan. 2020.

- [8] M. Lee, G. Yu, and G. Y. Li, “Learning to branch: Accelerating resource allocation in wireless networks,” IEEE Trans. Veh. Technol., vol. 69, no. 1, pp. 958–970, Jan. 2020.

- [9] W. Cui et.al., “Spatial deep learning for wireless scheduling,” IEEE J. Sel. Areas Commun., vol. 37, no. 6, pp. 1248–1261, Jun. 2019.

- [10] M. Lee et.al., “Graph embedding based wireless link scheduling with few training samples,” IEEE Trans. Wireless Commun., vol. 20, no. 4, pp. 2282–2294, Apr. 2021.

- [11] H. Ye, G. Y. Li, and B.-H. F. Juang, “Deep reinforcement learning based resource allocation for V2V communications,” IEEE Trans. Veh. Technol., vol. 68, no. 4, pp. 3163–3173, Apr. 2019.

- [12] M. Wang, Y. Cui, X. Wang, S. Xiao, and J. Jiang, “Machine learning for networking: WorkFlow, advances and opportunities,” IEEE Netw., vol. 32, no. 2, pp. 92–99, Mar./Apr. 2018.

- [13] E. Zeydan et al., “Big data caching for networking: Moving from cloud to edge,” IEEE Commun. Mag., vol. 54, no. 9, pp. 36–42, Sep. 2016.

- [14] Z. Wu et.al., “A comprehensive survey on graph neural networks,” arXiv preprint arXiv:1901.00596, Dec. 2019.

- [15] J. Zhou et.al., “Graph neural networks: A review of methods and applications,” arXiv preprint arXiv:1812.08434, Jul. 2019.

- [16] D. Xu et.al., “Scene graph generation by iterative message passing,” in Proc. of CVPR, vol. 2, 2017.

- [17] H. Yao et.al., “Deep multi-view spatial-temporal network for taxi demand prediction,” in Proc. of AAAI, 2018, pp. 2588–2595.

- [18] A. Fout et.al., “Protein interface prediction using graph convolutional networks,” in Proc. of NIPS, 2017, pp. 6530–6539.

- [19] W. Jin et.al., “Adversarial attacks and defenses on graphs: A review and empirical study,” arXiv preprint arXiv:2003.00653, 2020.

- [20] H. Dai et.al., “Adversarial attack on graph structured data,” in Proc. of ICML, Jul. 2018, pp. 1115-1124.

- [21] K. Xu et.al., “Topology attack and defense for graph neural networks: An optimization perspective,” in Proc. of IJCAI, 2019, pp. 3961–3967.

- [22] V. N. Ioannidis et.al., “Graphsac: Detecting anomalies in large-scale graphs,” arXiv preprint arXiv:1910.09589, 2019.

- [23] Y. Zhang et.al., “Comparing and detecting adversarial attacks for graph deep learning,” in Proc. Int. Conf. Learning Representation, May 2019.

- [24] D. Zhu, et.al., “Robust graph convolutional networks against adversarial attacks,” in Proc. of KDD, Aug. 2019, pp. 1399–1407.

- [25] M. Jin et.al., “Power up! robust graph convolutional network against evasion attacks based on graph powering,” arXiv preprint arXiv:1905.10029, 2019.

- [26] D. Zügner and S. Günnemann, “Certifiable robustness and robust training for graph convolutional networks,” in Proc. of KDD, Aug. 2019, pp. 246–256.

- [27] A. Bojchevski and S. Günnemann, “Certifiable robustness to graph perturbations,” in Proc. of NIPS, 2019, pp. 8317–8328.

- [28] M. Eisen and A. R. Ribeiro, “Optimal wireless resource allocation with random edge graph neural networks,” IEEE Trans. Signal Process., vol. 68, pp. 2977–2991, Apr. 2020.

- [29] Y. Shen et.al., “Graph neural networks for scalable radio resource management: Architecture design and theoretical analysis,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 101–115, Jan. 2021.

- [30] R. Liu et.al., “User association for millimeter-wave networks: A machine learning approach,” IEEE Trans. Commun., vol. 67, no. 7, pp. 4162–4174, Jul. 2020.

- [31] E. Tolstaya et.al., “Learning decentralized controllers for robot swarms with graph neural networks,” in Proc. Conf. Robot Learn., 2020, pp. 671–682.

- [32] Q. Li, F. Gama, A. Ribeiro, and A. Prorok, “Graph neural networks for decentralized multi-robot path planning,” in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst., Oct. 2020, pp. 1–8.

- [33] F. Gama, E. Tolstaya, and A. Ribeiro, “Graph neural networks for decentralized controllers,” arXiv preprint arXiv:2003.10280v2, 2020.

- [34] W. Y. B. Lim, et. al., “Federated learning in mobile edge networks: A comprehensive survey,” IEEE Commun. Surveys Tuts., vol. 22, no. 3, pp. 2031–2063, Apr. 2020.

- [35] M. Chen et.al., “Convergence time optimization for federated learning over wireless networks,” IEEE Trans. Wireless Commun., vol. 20, no. 4, pp. 2457–2471, Apr. 2021.

- [36] C. Dinh et al., “Federated learning over wireless networks: Convergence analysis and resource allocation,” arXiv preprint arXiv:1910.13067, 2019.

- [37] J. Ren, G. Yu, and G. Ding, “Accelerating DNN training in wireless federated edge learning system,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 219 –232, Jan. 2021.

- [38] S. Wang et. al., “Adaptive federated learning in resource constrained edge computing systems,” IEEE J. Sel. Areas Commun., vol. 37, no. 6, pp. 1205–1221, Jun. 2019.

- [39] D. Liu et.al., “Wireless data acquisition for edge learning: Data-importance aware retransmission,” IEEE Trans. Wireless Commun., vol. 20, no. 1, pp. 406–420, Jan. 2021.

- [40] K. Xu et.al., “How powerful are graph neural networks,” in Proc. Int. Conf. Learning Representation, 2019, pp. 1–17.

- [41] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in Proc. Int. Conf. Learning Representation, Apr. 2017.

- [42] G. Puy et.al., “Unifying local and non-local signal processing with graph CNNs,” arXiv preprint arXiv:1702.07759, Feb. 2017.

- [43] A. Ben-Tal, L. El Ghaoui, and A. Nemirovski, Robust Optimization, ser. Princeton Series in Applied Mathematics. Princeton, NJ: Princeton University Press, 2009.

- [44] E. Wong and J. Z. Kolter, “Provable defenses against adversarial examples via the convex outer adversarial polytope,” in Proc. Int. Conf. Mach. Learn., 2018, pp. 5283–5292.

- [45] A. Goldsmith, Wireless Communications. New York, NY, USA: Cambridge Univ. Press, 2005.

- [46] S. Zhang et.al., “Fast Learning of graph neural networks with guaranteed generalizability: One-hidden-layer case,” in Proc. Int. Conf. Mach. Learn., 2020, pp. 11268–11277.

- [47] S. Agarwal, R. H. Katz, S. V. Krishnamurthy, and S. K. Dao, “Distributed power control in ad hoc wireless networks,” in Proc. IEEE Int. Symp. Pers., Indoor, and Mobile Radio Commun., vol. 2, Oct. 2001, pp. 59–66.

- [48] C. W. Sung and K. K. Leung, “A generalized framework for distributed power control in wireless networks,” IEEE Trans. Info. Theory, vol. 51, no. 7, pp. 2625–2635, July 2005.

- [49] A. Zappone, E. Jorswieck, and A. Leshem, “Distributed resource allocation for energy efficiency in MIMO OFDMA wireless networks,” IEEE J. Sel. Areas Commun., vol. 34, no. 12, pp. 3451–3465, Dec 2016.

- [50] W. Sun, Z. Yang, X. Zhang, and Y. Liu, “Energy-efficient neighbor discovery in mobile ad hoc and wireless sensor networks: A survey,” IEEE Commun. Surveys Tuts., vol. 16, no. 3, pp. 1448–1459, Mar. 2014.

Appendix A Dual Problem for Problem (23)

We reformulate Problem (23) as follows

| (38) |

subject to

| (38a) |

| (38b) |

| (38c) |

| (38d) |

| (38e) |

| (38f) |

| (38g) |

| (38h) |

| (38i) |

Note that constraint (38f) can be simply eliminated from the optimization. Then the dual problem of Problem (38) is given by

| (39) |

subject to

| (39a) |

| (39b) |

| (39c) |

| (39d) |

| (39e) |

| (39f) |

| (39g) |

We know that either or must be zero, which correspond to upper and lower bound of , respectively. According to (39e), if , then we have

Similarly, if ,

Therefore, we have . Combined (39c), the following term in the objective function of Problem (A) can be rewritten as which is constant.

To further simplify the objective function of Problem (A), we introduce a new vector, , which satisfies . Therefore, constraint (39d) can be rewritten as

| (40) | ||||

Similarly, we introduce a new matrix, , which satisfies the following equations

In this way, constraint (39a) can be rewritten as Based on the above transformation, we know that and the following term in the objective function of Problem (A) can be rewritten as

| (41) | |||

where

| (42) | |||

In fact, (41) can be transformed into a more tractable form. When , if , holds. Similarly, if , . Under this condition, to maximize the objective function, should be set as its lower bound and

| (43) | ||||

Similar analysis can be conducted when . Overall, we have Therefore, (41) can be rewritten as

where indicates the trace of the input matrix. Based on the above transformations, Problem (A) finally can be transformed into Problem (24) in Section 4.3.

Appendix B Solution for Problem (26)

Problem (26) can be reformulated as

| (44) |

subject to

| (44a) |

| (44b) |

The dual problem of Problem (44) is given by

| (45) |

subject to (44b),

| (45a) |

| (45b) |

The optimal solution of the above problem is very obvious. Specifically, for , its optimal solution is obtained by setting corresponding to the top- largest entries in as 1 and others as 0.

Because is the dual variable corresponding to constraint (44a), the following equation holds

| (46) | |||

If , holds, which indicates that . Meanwhile, also means that is among the top- largest entries in . Therefore, is no larger than all top- largest entries in . Similarly, if , it must be the case that and . According to (46), must equal to 0, which suggests that is not among the top- largest entries in under this condition. Therefore, should be larger than all the that is not among the top- largest entries in . Overall, the solution of is the -largest entry in .