Decentralized Concurrent Learning with Coordinated Momentum and Restart

Abstract

This paper studies the stability and convergence properties of a class of multi-agent concurrent learning (CL) algorithms with momentum and restart. Such algorithms can be integrated as part of the estimation pipelines of data-enabled multi-agent control systems to enhance transient performance while maintaining stability guarantees. However, characterizing restarting policies that yield stable behaviors in decentralized CL systems, especially when the network topology of the communication graph is directed, has remained an open problem. In this paper, we provide an answer to this problem by synergistically leveraging tools from graph theory and hybrid dynamical systems theory. Specifically, we show that under a cooperative richness condition on the overall multi-agent system’s data, and by employing coordinated periodic restart with a frequency that is tempered by the level of asymmetry of the communication graph, the resulting decentralized dynamics exhibit robust asymptotic stability properties, characterized in terms of input-to-state stability bounds, and also achieve a desirable transient performance. To demonstrate the practical implications of the theoretical findings, three applications are also presented: cooperative parameter estimation over networks with private data sets, cooperative model-reference adaptive control, and cooperative data-enabled feedback optimization of nonlinear plants.

keywords:

Concurrent Learning, Data-driven Optimization, Multi-Agent Systems, Hybrid Dynamical Systems1 INTRODUCTION

Concurrent Learning (CL) techniques have emerged as powerful data-driven tools for designing estimation and learning dynamics in a wide range of applications where persistence of excitation (PE) conditions are either impractical or infeasible [1, 2]. These techniques have demonstrated their utility in diverse fields, including parameter estimation in batteries [3], exoskeleton robotic systems [4, 5], mobile robots and aerial vehicles [6], extremum seeking algorithms [7], and reinforcement learning controllers [8, 9]. In these applications, extensive datasets containing historical recorded measurements of the relevant system signals are often available and can be leveraged for estimation purposes. When these datasets are “sufficiently rich”, they can be seamlessly integrated into dynamic estimation algorithms, enabling (uniform) exponential convergence to the unknown parameters even in the absence of PE conditions.

However, relaxations of PE conditions can lead to suboptimal transient performance, particularly in terms of slow convergence rates that depend on the “level of richness” of the dataset used by the algorithm. Since datasets readily available in applications may exhibit prohibitively small levels of richness, there is a growing need for the development of enhanced CL techniques that can accelerate the convergence rate of the estimation dynamics while maintaining the desirable stability and robustness properties.

1.1 Literature Review

One promising direction to alleviate the slow convergence issue in decision-making algorithms is the incorporation of momentum with dynamic damping, see [3, 10, 11]. For single-agent gradient-based dynamics with momentum, the use of decreasing damping has been shown to play a crucial role in inducing favorable acceleration properties [12, 13, 14, 15]. However, it has also been shown that stability bounds in terms of functions may not exist for such systems unless the damping coefficients are persistently exciting [16], a condition that precludes vanishing coefficients. Furthermore, it is well-known that, without proper tuning, the use of momentum can lead to undesirable oscillations [17]. To address potential instability issues and to eliminate oscillatory behaviors, restart mechanisms that reset the momentum have been developed for single-agent systems using adaptive [17, 18] and periodic policies (usually called “scheduled”) with carefully selected frequencies [13, 19, 20, 17]. Recent works have also investigated the development of similar momentum-based algorithms in multi-agent systems, including distributed continuous-time heavy-ball dynamics with constant damping [21], limiting equations of stochastic recursive algorithms as multi-agent flows with momentum [22], and decision-making algorithms with momentum for high-order multi-agent systems [23, 24, 25]. However, existing approaches have primarily focused on undirected network topologies. Additionally, the incorporation of momentum and restarting mechanisms in decentralized concurrent learning algorithms has remained unexplored. Such algorithms are essential when a network of agents seeks to collaboratively and efficiently learn a common model by sharing local estimates with neighboring agents, without revealing their private data. Applications of these algorithms span various domains, including source seeking in autonomous mobile robots [26], adaptive formation control of robotic teams [27], and cooperative adaptive control [28].

1.2 Contributions

Motivated by the previous background, in this paper we study the synthesis and analysis of decentralized concurrent learning dynamics with momentum and restart for general directed graphs. In particular, we consider a model that extends the centralized dynamics studied in [13, 14], and [19] to cases where each agent implements its own restart policy and shares information only with neighbors characterized by the topology of the communication graph. To assess the impact of the topology of graph on the stability properties of the dynamics, we exploit analytical tools from graph theory and hybrid dynamical system’s theory [29]. Using these tools, this paper makes the following primary contributions:

(1) We first introduce a class of multi-agent concurrent learning (CL) algorithms that incorporate momentum and a centralized restarting mechanism. We demonstrate that if: (a) the graph is strongly connected, (b) the overall data collected by the agents satisfies a “cooperative richness condition,” and (c) the restarting frequency exceeds a certain threshold that encodes the “asymmetry” of the communication graph, then the resulting error estimation dynamics are input-to-state stable [30] with respect to measurement noise and model error approximations. Furthermore, the convergence is exponential with rates assignable via the restarting period. These results are presented in Theorem 1.

(2) Next, by leveraging the robustness properties of the dynamics, we interconnect the momentum-based concurrent learning algorithms with a decentralized coordinated restarting mechanism, enabling a fully decentralized implementation. The resulting dynamical systems are also globally stable and exhibit convergence rates consistent with Theorem 1 following an initial synchronization phase of the restarting times. These results are presented in Theorem 2.

(3) Finally, we present three applications of the proposed momentum-based CL algorithms with restart within the context of data-enabled control: (a) cooperative parameter estimation without persistently exciting regressors in networks where nodes have private data with heterogeneous informativity properties; (b) data-enabled cooperative model-reference adaptive control; (c) data-enabled cooperative feedback-optimization. By employing (hybrid) Lyapunov-based techniques, we show that the resulting closed-loop systems exhibit favorable stability and convergence properties, which are also illustrated via numerical examples.

2 Preliminaries

Notation: We use to denote a closed ball of appropriate dimension in the Euclidean space, of radius , and centered at the origin. Let be the matrix with all entries equal to zero except the entry, which is equal to one. Let be the vector of all ones, and be the identity matrix. Given , we let denote their concatenation. We use to denote the standard basis of . A matrix is represented in terms of its entries as , with being its entry. Similarly, we use to represent a block matrix in terms of its blocks, and use to build a block diagonal matrix from the set of matrices . Given a vector we let represent a diagonal matrix with diagonal entries given by the entry of . A matrix is said to be nonnegative () if for all . The spectral radius of a matrix is denoted by . We use for the vector 2-norm, for the matrix norm induced by , and to denote the distance of a vector to a closed set . With a slight abuse of notation, given a matrix we define the semi-norm . Given a set-valued mapping , the domain of is the set . A function is of class () if it is continuous, strictly increasing, and satisfies . It is said to be of class (), if additionally as . A function is of class () if it is nondecreasing in its first argument, nonincreasing in its second argument, for each , and for each .

Graph Theory: For a directed graph (digraph) , we denote by a directed edge from node to node , we call node an in-neighbor of node , and we call node an out-neighbor of node . We consider digraphs that do not have self-arcs. A weighted Laplacian matrix associated with satisfies the following: the off-diagonal entries are such that if is an edge, and otherwise; the diagonal entries are determined such that every row of sums to zero, and all its nonzero eigenvalues have positive real part [31, Lemma 6.5]. A digraph is strongly connected if for any two distinct nodes and , there is a path from to . The Laplacian matrix of a strongly connected digraph satisfies [31, Ch. 6].

Hybrid Dynamical Systems: In this paper, we work with dynamical systems that combine continuous-time and discrete-time dynamics. Such systems are called hybrid dynamical systems (HDS) [29]. The dynamics of a HDS with state are represented as:

| (1a) | |||

| (1b) | |||

where is an exogenous input, is called the flow map, is called the jump map, is called the flow set, and is called the jump set. We use to denote the data of the HDS . These systems generalize purely continuous-time systems () and purely discrete-time systems (). Time-varying systems can also be represented as (1) by using an auxiliary state with dynamics and . Solutions to system (1) are parameterized by a continuous-time index , which increases continuously during flows, and a discrete-time index , which increases by one during jumps. Therefore, solutions to (1) are defined on hybrid time domains (HTDs). Solutions to (1) are required to satisfy , with being locally essentially bounded and Lebesgue measurable for each . To establish this correspondence, a hybrid input in (1) is obtained from a suitable continuous-time input by using (with some abuse of notation) during the flows (1a) for each fixed , and by keeping constant during the jumps (1b). For a precise definition of hybrid time domains and solutions to HDS of the form (1), we refer the reader to [32]. To simplify notation, in this paper we use , and we let . The stability properties of HDS will be studied using the following notion.

Definition 1

Given a closed set , a HDS of other form (1) is said to be input-to-state stable (ISS) with respect to if there exist and such that every maximal solution pair ) to satisfies:

| (2) |

for all and all . If (2) holds with , the set is said to be uniformly globally asymptotically stable (UGAS). If additionally, for some constants , the set is said to be uniformly globally exponentially stable (UGES).

3 Problem Formulation

We consider a decentralized learning problem in a multi-agent system (MAS), where a group of agents seeks to collaboratively estimate a common model characterized by a parameter . The agents share information with each other via a directed communication network modeled by a strongly connected digraph , where is the set of nodes, and is the set of edges. We assume that each agent has access to both real-time and past recorded measurements of a signal of the form

| (3) |

where represents an unknown and possibly time-varying disturbance, and represents a regressor function (or basis functions), which is assumed to be continuous, uniformly bounded, and known to the agent. These assumptions are typical in single-agent [9, 10, 3, 2] and distributed CL problems [7, 28].

3.1 Model Description and Key Assumptions

To estimate , we consider a decentralized momentum-based concurrent learning (DMCL) algorithm with the following update rule for the local estimate of each agent:

where is a dynamic, non-decreasing coefficient, with rate of growth bounded by , and which satisfies

where are tunable parameters. The auxiliary state captures the incorporation of momentum, and it satisfies

where is a tunable gain, is a suitable mapping described below, and is the entry of the adjacency matrix of the graph modeling the flow of information betwen agent and its neighbors. The key components of the DMCL dynamics are explained below:

-

(a)

The function has the general form:

(4) where and are tunable constants.

-

(b)

The function in (4) is given by

(5) and it incorporates the real-time information available to the agent, where is given by (3), , and is a time-varying disturbance.

-

(c)

The function in (4) is given by

(6) and it incorporates past recorded measurements of the signal in (3) and the regressor , obtained at a sequence of times , where , and where and models the persistent disturbances occurring during data collection.

-

(d)

The last term in the dynamics of captures the exchange of information between agent and its neighbors. Note that, in general, we have that .

To study the DMCL dynamics, the data matrix associated to the agent is defined as:

| (7) |

Rather than assuming that every matrix is positive definite, as in standard single-agent concurrent learning (CL) [9], we will assume a weaker “cooperative” richness condition on the overall data of the network [33, Def. 2].

Assumption 1

There exists a constant , such that

| (8) |

Moreover, the graph is strongly connected.

If (8) holds, the data is said to be cooperatively sufficiently rich (CSR).

Remark 1

Assumption 1 allows for some agents to have uninformative data (e.g., ) provided other agent’s data is sufficiently rich data to satisfy (8), see also [34]. This is an important relaxation for large-scale MAS where, unlike standard CL [9], it might be unreasonable to assume that every node’s data satisfies . Moreover, note that in the DMCL dynamics agents do not share their data with other agents in the system. In fact, only the local estimates are shared with the neighboring agents. This prevents the direct solution of the estimation problem using “single-shot” techniques, and instead calls for recursive algorithms that preserve the privacy of the individual data.

3.2 Connections to Accelerated Gradient Flows

The form of the DMCL dynamics is closely related to the accelerated gradient flows with momentum studied in [13, 35, 14, 24], which have the general form

and where is a suitable convex cost function and is a time-varying coefficient. Indeed, using the vectors , , the parameter error coordinates , , and the Laplacian matrix of the graph , the DMCL dynamics with a centralized coefficient can be written as the following dynamical system:

| (9) |

where is given by

| (10) |

and where , and where and are block-diagonal matrices given by:

and is given by:

| (14) |

However, while similar decentralized algorithms have been studied in [23, 24, 25], the DMCL dynamics do not describe a standard gradient flow with momentum due to the lack of symmetry on , i.e., the right-hand side of (10) cannot be expressed as the gradient of a potential function, a property that usually plays a crucial role in the stability properties of momentum-based dynamics.

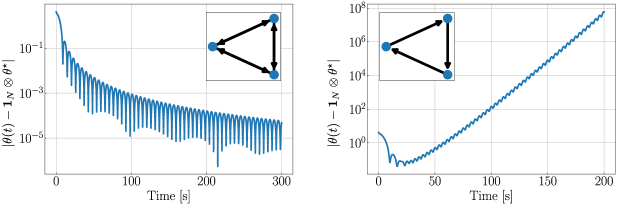

The following example highlights some of the challenges that can arise when momentum is used and the multi-agent system (MAS) has a communication topology characterized by a directed graph.

Example 1

Consider a multi-agent system with three agents, i.e., . We let and , and for simplicity we assume that all agents use the same coefficient , with and . We consider regressors with collected data satisfying Assumption 1, and the parameter . The DMCL dynamics are implemented using . The left plot of Figure 1 shows the evolution in time (in logarithmic scale) of the estimation error when the graph is fully connected. As observed, the estimation error converges to zero, which is consistent with the stability results of [13, Thm. 3] and the fact that in this case, the DMCL dynamics describe an accelerated gradient system. Now, suppose that the communication graph is given by a directed cycle graph, as shown on the inset of the right plot of Figure 1. In this case, the same DMCL algorithm ceases to be a momentum-based gradient flow and it exhibits the instability issue shown in the plot. The right plot, however, reveals a promising solution to the instability issue in asymmetric graphs. Stability can be restored by implementing a well-designed restart mechanism that accounts for the graph’s asymmetry. The details of this mechanism will be elaborated upon in the following sections.

3.3 DMCL with Coordinated Restart

To address the instability observed in Example 1, while simultaneously inducing suitable convergence rates achieved via momentum, we can incorporate restart mechanisms into the algorithm. Such mechanisms persistently reset the momentum and the dynamic coefficients whenever exceeds the upper bound . The resets are performed according to the following discrete-time updates:

| (15) |

where is a pre-defined parameter indicating the restart policy of agent . It has been shown that this approach can curtail the oscillations of momentum-based algorithms [13, 17] and also “robustify” their stability properties with respect to persistent disturbances [19]. Indeed, note that the policy implies , which implies . For multi-agent systems with undirected graphs, similar restart mechanisms of the form (15) have been studied in [25, 24]. However, the effectiveness of restarting in the context of multi-agent systems with directed graphs has remained largely unexplored, and, as suggested by Example 1 the extension is non-trivial. Indeed, from the behavior observed in Figure 1 it should be clear that a “slow” restart policy (e.g., when is large) will not be able to stabilize the system. However, a “fast” restart policy (e.g., when is small) would keep approximately constant, thus reducing the effectiveness of using momentum. The right plot of Figure 1 shows the emerging behavior of the DMCL algorithm when restart is implemented by each node of the network with a “suitable” frequency and in a coordinated manner. While similar phenomena has been recently observed in game-theoretic problems [24], the use of momentum and restart in decentralized CL problems, and its dependence on the data of the system, the topology of the graph, and the perturbed models (3) have remained largely unexplored. This observation motivates the main research problem that we study in this paper:

Problem 1

Characterize the restart mechanisms that: a) robustly stabilize the DMCL algorithm in directed networks; b) achieve ISS with respect to the disturbances in (3); c) induce network-wide acceleration properties in the MAS.

In the next section we provide an answer to Problem 1 using tools from hybrid dynamical systems and graph theory.

4 Main Results

To tackle Problem 1, we first consider a centralized restart mechanism that makes use of a common state that satisfies . This centralized assumption simplifies the analysis and will be relaxed in the subsequent subsections to encompass decentralized implementations. For the purpose of analysis, we also use an auxiliary state with dynamics to model any explicit dependence on time .

4.1 Centralized Restart: Hybrid Systems Model

When using a common coefficient to coordinate the restart of the DMCL algorithm, the resulting dynamical system can be modeled by the following differential inclusion, in vectorial form, with state :

| (16) |

whenever , and where , the function is given by

| (17) |

and the vectors and are defined as

where . The maps and above are defined as

| (18a) | ||||

| (18b) | ||||

where the functions were defined in (5)-(6) for all . Since and are allowed to evolve in , while , in (16), the flow set is defined as:

| (19) |

which guarantees that during flows the state remains in the interval . To incorporate restarts into the DMCL algorithm, each time meets the condition , it is reset to , and, all the states are updated as in (15). Therefore, using

| (20) |

with , the discrete-time updates of the states of the hybrid system can be written in vectorial form as

| (21) |

which are executed whenever are in the jump set , defined as follows:

| (22) |

Therefore, the overall discrete-time dynamics of the system with state can be written as:

| (23) |

By combining (16) and (23), the DMCL algorithm with centralized restart can be viewed as a HDS of the form (1), with data

| (24) |

Note that in this centralized HDS the jump set (22) only imposes conditions on the state . Namely, a restart is triggered whenever . If for all time, then the HDS would model a DMCL algorithm with scheduled periodic restart, where the time between two consecutive restarts is . However, the differential inclusion in (16) also allows us to consider scenarios where is not constant but rather is any absolutely continuous function (between restarts) satisfying , which includes functions that remain constant for arbitrarily long periods of time.

Before presenting our first main result, we introduce two technical propositions that play important roles in our results. All the proofs are presented in Section 6.

Proposition 1

Suppose that Assumption 1 holds. Then, there exists a unit vector such that:

-

(a)

The entries of satisfy:

(25) -

(b)

and with .

- (c)

-

(d)

There exists a class- function such that

(28) , where and is the largest singular value of .

Remark 2

By construction, if the Laplacian is symmetric, then . However, if is asymmetric, then in general we have . For the purpose of illustration, Figure 2 presents four examples of different graphs and their corresponding numerical values of .

4.2 Input-to-State Stability of

With Propositions 1-2 at hand, we are now ready to present the first main result of this paper, which provides conditions to stabilize the DMCL algorithm using a centralized restart. In particular, we study the stability properties of with respect to the closed set , where

| (30) |

which precisely describes the situation where all agent’s estimates are equal to the true parameter .

Theorem 1

Suppose that Assumption 1 holds, and let the constants (, , , ) be given by Proposition 1. If the restart parameters satisfy and

| (31) |

then the following hold:

-

(a)

For any restart policy the HDS renders the set ISS with respect to the input .

-

(b)

If for all , and , then, for every initial condition , every solution-input pair of , and every with , the sampled sequence of estimates satisfies

(32) where , and .

The main result of Theorem 1 reveals the impact of the asymmetry of on the resetting parameter . In particular, the following observations are in order:

(1) When is symmetric (i.e., ) and the DMCL dynamics do not use real-time data (i.e., ), condition (31) reduces to , which can always be satisfied using any positive constant , recovering the results of [19, Thm. 2] in the context of optimization.

(2) In general, the more “informative” is the collective data in the overall system (i.e., the larger is in (8)), the larger the parameter will be, thus providing more flexibility to increase the upper bound .

(3) The ISS result implies that the trajectories of the algorithm will converge to a neighborhood of the true parameter , where the size of the neighborhood shrinks as the disturbances shrink in (3). When , the result establishes asymptotic convergence to the true parameter.

(4) Lastly, when , the bound (32) characterizes the “accelerated” convergence properties of towards the true model . Since the rate of convergence is -dependent, the rate of convergence depends on . In particular, following similar steps as in the centralized case [19], the “optimal” value of that minimizes the contraction coefficient over a given window of time can be computed as .

Next, the following corollary leverages the expression of to obtain convergence bounds that parallel those obtained for centralized single-agent systems [19].

Corollary 1

The bound from Corollary 1 implies that, as , the convergence of towards is of order , for all . We complete this section with a corollary for the case , which guarantees the ISS properties of , but not convergence bounds of the form (32).

Corollary 2

4.3 Decentralized Restart: Synchronization

Since a central coordinator might not exist in large-scale networks, in this section, we study decentralized restart strategies based on each agent implementing an individual dynamic coefficient . To simplify our discussion, in this section we assume that and for all , and that , which allows us to remove the state variable and its associated dynamics. However, all our results can be extended to the case when time-varying regressors are included.

When each agent implements its own coefficient , the continuous-time DMCL dynamics (16) become

| (33) |

where the main state is now , , , is given by (18b), and the flow set is given by

| (34) |

In this case, restarts of the form (15) with occur whenever at least one of the agents satisfies the condition . This behavior can be modeled by the following jump set:

| (35) |

However, note that this approach would lead to uncoordinated restarts of the individual dynamics of the agents across the system. For example, for any time window , one can select equidistant initial conditions , where , which result in solutions experiencing restarts during this time window, each restart separated by intervals of flow of length . Therefore, as , asynchronous restarts would occur more often, hindering the advantages of incorporating momentum into the flows of the algorithm to accelerate the overall system.

To address this issue, and inspired by the synchronization algorithms of [36], we integrate the restart dynamics (15) of each agent with a decentralized coordination mechanism for the states . Specifically, each agent performs individual restarts of the form (15) when . However, the agents also implement the following additional discrete-time updates whenever their neighbors satisfy the condition :

| (36) |

where is a tunable parameter. To incorporate these additional discrete-time updates into the overall jump map of the system, consider the set-valued map

which is defined to be non-empty if and only if and . In words, the mapping captures the resets of the individual states of agent via (15), and also the updates of its neighbors via (36). The overall jump-map of the multi-agent hybrid system can then be defined using the outer-semicontinuous hull of 111The outer-semicontinuous hull of a set-valued mapping is the unique set-valued mapping satisfying , where stands for the closure., denoted leading to

| (37) |

Note that system (37) preserves the sparsity property of the graph .

The decentralized continuous-time dynamics (33) and the decentralized discrete-time dynamics (37) comprise the overall DMCL algorithm with restarts studied in this paper. This algorithm is fully modeled by the HDS

| (38) |

with state .

The following theorem provides a decentralized version of Theorem 1. In this case, stability of is studied with respect to the “synchronized” set , and the stability properties of the overall state are studied with respect to

| (39) |

For simplicity, we state the result for the case , but we comment on the robustness properties of the dynamics.

Theorem 2

Remark 3 (Nominal Robustness)

Since the hybrid system is nominally well-posed in the sense of [29, Ch. 6], the UGES properties of the DMCL algorithm are preserved, in a semi-global practical sense, under arbitrarily small additive perturbations on states and dynamics. This property is crucial for the use of in practical applications where dynamic disturbances are unavoidable.

Remark 4 (Strong Robustness via ISS)

The techniques employed to proof Theorem 2 can be further utilized to obtain ISS of provided that originates from a dynamical system evolving in a compact set. We omit this extension due to space constraints.

Remark 5

To the best of the author’s knowledge, Theorems 1-2 and the respective corollaries, are the first stability results for momentum-based CL algorithms implemented in multi-agent systems with general directed graphs. We note that in the literature of centralized CL, other accelerated algorithms have been studied using finite-time and fixed-time stability tools [3, 37, 38]. However, as shown in the comparison presented in [3], when the “level of richness” of the data (i.e., in Assumption 1) is “low”, momentum-based methods can yield better transient performance compared to other first-order non-smooth techniques. For decentralized problems defined over networks, we are not aware of finite-time or fixed-time CL algorithms that are stable under Assumption 8. A natural progression for future research involves developing such algorithms and comparing them with the DMCL algorithms proposed in this paper.

5 Applications in Estimation, Control, and Model-free Feedback Optimization

In this section, we apply the DMCL algorithm with restart in three different applications.

5.1 Hybrid Cooperative Identification Over Digraphs

First, we validate Theorem 2 in an cooperative estimation problem defined in a multi-agent system with , , and , for all . To implement the DMCL algorithm with coordinated restarts, we parameterize using the regressor and . To satisfy Assumption 1 with , each agent records five measurements of . We implement the hybrid system and plot the resulting trajectories of the estimation error in the left plot of Figure 3, using , and a fully connected graph. We also show with dashed lines the trajectory obtained when using the first-order decentralized CL dynamics of [7]. Since the graph is symmetric, in this case can be selected arbitrarily large to tune the convergence rate of the dynamics (see inequality (31)). The simulations start from a non-synchronized initial condition and rapidly achieve synchronization. Trajectories related to different choices of are also shown to illustrate the impact of the restart period on the convergence rate. Next, we let be a cycle digraph, for which . The resetting parameter is selected to satisfy inequality (31), and the resulting trajectories are shown in the right plot of Figure 3. In this case, the best transient performance is obtained as approaches the upper bound .

5.2 Data-Enabled Hybrid Cooperative MRAC

A key advantage of the robust stability results presented in Theorems 1 and 2, is that the DMCL dynamics can be interconnected with other systems for the solution of feedback control problems. To illustrate this application, we consider a multi-agent dynamical system, where each agent has individual dynamics of the form:

| (40) |

where models structured uncertainty parameterized by a common vector , and a regressor that is known by each agent . The agent’s goal is to be able to asymptotically track a common bounded reference despite the uncertainty in their model.

5.2.1 Two-Time Scale Hybrid Dynamics

To solve the tracking problem we use a two-time scale approach. First, we introduce a reference model , where is assumed to be Hurwitz. Following the ideas of [9], each agent implements a model-reference adaptive control (MRAC) law that incorporates three elements: (1) an adaptive component , where is the individual estimate of ; (2) a state-feedback component ; and (3) a feed-forward term designed such that ; see Figure 4 for an illustration of the control law. Using , and the error coordinates , the error dynamics for agent become:

| (41) |

where , for all . We make the assumption that that system (41) has no finite escape times from all initial conditions, and that Assumption 1 holds. To cooperatively estimate , we interconnect (41) with the DMCL algorith with restart given by (38) with flow map

| (42) |

where the pair is given by (33), and where is a tunable parameter.

To study the stability of the interconnected system, we will first analyze the system under a centralized timer , and we interpret the closed-loop system as a two-time scale hybrid dynamical system with the DMCL algorithm having continuous-time dynamics operating in a faster time scale compared to (41). Since is Hurwitz, for each there exists such that , i.e., system (41) is UGES when . Similarly, by Theorem 1, the momentum-based hybrid dynamics render the set UGES via a Lyapunov function . We can then study the interconnection of both systems using the Lyapunov function , with . From the proof of Theorem 1, during jumps satisfies because . On the other hand, during flows of the closed-loop system the function satisfies

| (43) | ||||

| (44) |

where we used the quadratic lower bounds of , and the boundedness of the regressors to obtain . From here the result follows by completing squares and taking sufficiently large such that . Since , and the jumps are periodic, we obtain UGES of the set , where is given by (39). The stability properties for the decentralized case follow now by leveraging the absence of finite escape times, and by using the reduction principle as in the proof of Theorem 2.

5.2.2 Numerical Example

To illustrate the previous result, we consider a multi-agent system with agents, where the communication graph is a directed cycle graph, see the inset in Figure 4. We consider open-loop unstable individual dynamics characterized by matrices and the parameterized uncertainty , with and , for all . For the MRAC controllers, we consider a second order reference model with natural frequency and damping ratio equal to , a state-feedback gain , and a feed-forward term , for all . Each agent records two measurements of and at times . The corresponding data matrices are not individually rich, which precludes the direct application of standard CL techniques [9]. However, the collective data satisfies the CSR condition (1) with . To regulate the state to zero, we choose , , , and to avoid instability in the estimation due to the asymmetry of the graph. We let each agent implement an MRAC controller interconnected with the hybrid dynamics and show the resulting trajectories in Figure 4. As observed, the DMCL algorithm with restart yields better transient performance compared to traditional first-order cooperative approaches without momentum [7]. Note that these results are obtained using decentralized recorded (i.e., batch) data, as opposed to real-time PE data. The latter might require potentially extreme transient excursions of some states whenever the parameter estimation is accelerated, which is a well-known challenge in real-time adaptive control, see [39].

5.3 Data-Enabled Hybrid Cooperative Feedback Optimization

Consider a multi-agent system with dynamics

| (45) |

where is the state, is the input, and is the output. The set is assumed to be compact and convex for all . We consider the setting where agents seek to cooperatively find, in real-time and in a model-free manner, an optimal input that maximizes their individual outputs at steady state. This scenario describes a classic data-enabled model-free feedback optimization or extremum-seeking problem [7]. To guarantee that this problem is well-posed, the function is assumed to be globally Lipschitz in both arguments, and we also assume there exists a smooth function , such that for each fixed , the system renders the equilibrium point UGES, uniformly on . Since the function describes the steady-state input-to-state mapping of (45), the optimization problem that each agent seeks to solve can be written as

| (46) |

where the response maps are assumed to be unknown, continuously differentiable, strongly convex, common across the network; and parametrizable as , for all , where is a known continuous and bounded regressor. Functions that satisfy these conditions are common in source seeking problems, where a group of mobile robots seeks to cooperatively find the maximizer of a common potential field using intensity measurements, see [7]. In the more general case, we note that, by the universal approximation property of smooth functions, the above assumption on always holds on compact sets, modulo a small residual error that is also bounded on compact sets. In this case (i.e., non-zero approximation error), our result still holds but now in a “semi-global practical” sense, provided that the bound on the residual approximation error is sufficiently small, a property that can always be achieved by increasing the complexity (i.e., number of basis functions, etc) of the approximator.

5.3.1 Three-Time Scale Hybrid Dynamics

To solve the model-free feedback optimization problem using recorded data that is distributed among the agents, we use a three-time scale approach. Let be the vector whose entries are the solutions to the optimization problems defined in (46). To steer towards , we consider the following feedback optimization dynamics:

| (47) |

where is the Jacobian matrix of , the function is the Euclidean projection on the set , is a tunable parameter, and is the individual estimate of , which will be recursively updated using the DMCL algorithm with restart, modeled by the hybrid system ; refer to Figure 5 for an illustration of the overall control scheme.

To study the stability properties of the closed-loop system, we modeled the overall dynamics as a three-time scale system, where the plant dynamics (45) operate at a faster time scale, the DMCL dynamics with restart operate in a medium time scale, and the optimization dynamics (47) operate at the slowest time scale. Such time scale separation can be induced by an appropriate tuning of the gains in (47) and in (42). By the stability assumptions on the plant dynamics (45), and by using a standard converse Lyapunov theorem [40, Thm. 4.14], there exists a Lyapunov function , and constants , for , such that , , and for all and . Similarly, by the proof of Theorem 2, and since the HDS satisfies the hybrid basic conditions [41, Ch.6], there exists a quadratic Lyapunov function that decreases exponentially fast during flows and jumps of , provided that the data matrices are CSR. Additionally, since the static-map (46) is convex, the optimization dynamics (47) with reduced to a projected gradient flow that renders UGES the point via the quadratic Lyapunov function , which satisfies [42, Thm. 4]. Using these individual quadratic-type Lyapunov functions, and the global Lipschitz properties of the vector fields (45), (42), and (47), we can now use the Lyapunov function to establish exponential stability of the closed-loop system by following, recursively, the exact same steps of [40, Ch. 11.5], and using sufficiently small gains and .

5.3.2 Numerical Example

Consider a multi-vehicle system with vehicles, seeking to collaboratively locate the source of a potential field that is only accessible via intensity measurements. The vehicles share information via a communication graph characterized again by a cycle. We assume the plant dynamics (45) have the form with matrices , and quadratic output where , and for all . The sets are given by where , with being the standard matrix that rotates a vector by an angle . In this case, the steady-state input-to-output map (46) reduces to , and each agent uses the vector of basis functions , where the parameter is assumed to be unknown. To implement the DMCL dynamics with restart, each agent has access to only two points of data . In this way, while the collected data is not persistently exciting for each agent, the collective data satisfies Assumption 1 with . Using these data and the parameters , , , , and , we simulate the closed-loop system comprised of the plant dynamics, the optimization dynamics in (47), and the hybrid dynamics . Figure 5 shows the resulting trajectories of the vehicles, converging to the maximizers of in . Figure 6 shows the evolution in time of the parameter estimation error and the control signals. It can be observed that, given the low richness of the collected data (small ), the proposed decentralized concurrent learning algorithm with momentum achieves faster convergence compared to the first-order cooperative estimation approach of [7].

6 Proofs

In this section, we present the proofs and analyses of our main results.

6.1 Proof of Proposition 1

For the purpose of clarity, we divide the proof of Proposition 1 into multiple lemmas.

Lemma 1

Proof: Items (a)-(b) follow directly by [43, Prop. 1]. To show item (c), we use the expressions in (26) and (27), and by direct substitution we obtain:

Applying a left-multiplication by and a right-multiplication by leads to

| (48) |

and since , and , we obtain:

Finally, we show that . Indeed, since and is given by (6), we have:

which implies .

Lemma 2

There exists such that (28) holds.

Proof: Consider the following matrix:

| (49) |

and recall that for any symmetric matrix we have [44, Cor. 10.4.2] and [44, Fact 7.12.9], where and are the maximum eigenvalue and the maximum singular value of , respectively. By using these facts, together with the sub-multiplicativity of the matrix norm, we obtain that:

| (50) |

Since is uniformly bounded, there exists such that for all and all . Combining this fact with the diagonal structure of leads to . By using this bound in (50) we obtain:

The result follows using , which is clearly a class- function.

6.2 Proof of Proposition 2

We divide the proof into two lemmas:

Proof: We present the proof step-by-step.

(a) First, note that since , , with , and trivially. Then, since and it follows that .

(b) Let the eigenvalues of the matrix be organized as and let be the eigenvector that corresponds to the eigenvalue and satisfies . It follows that .

(c) Let , and let

where the vectors denote the standard basis in . Note that the matrices and characterize the null space and the range space of , respectively.

(d) Let be a unit vector, which we can write as

| (51) |

where and satisfy

(e) Since can be written as , and , we have that

which leads to

| (52) |

Using (51) and (52), we obtain

where , is given by Assumption 1, and are defined in (25), and . Moreover, since , and using , we obtain:

| (53) |

(f) On the other hand, we have that

| (54) |

Since by the construction of in (27) we have , the above bounds imply that , where

| (55) |

(g) Next, we study (55) and we show that this lower bound is indeed positive. Since, by item (a), , without loss of generality we can assume that the first term in the brackets in (55) is non-negative. Indeed, suppose by contradiction that such term is negative. Then, since , we can take as a non-negative lower bound for , and since only if , we obtain that for such the first term in the brackets is indeed positive.

(h) To get a closed form of the expression in (55), let . In the variable, (55) becomes:

where the constants are given by . Further simplifying, we obtain

| (56) |

We argue that the intersection point of the trigonometric curves solves the min-max problem (56).

(i) To establish the existence of such , we use the following facts: (i) , (ii) , , , (ii) , , . Since and are continuous functions, the previous conditions imply the existence of a point such that . Moreover, since is decreasing on with , , , , and , it follows that the intersection point is in fact the minimum of the point-wise maximum of and . See Figure 7 for an illustration of this step.

(j) By computing the intersection point , we obtain

Substituting the values of , and , establishes the existence of a positive lower bound on the constant that satisfies , given by

| (57) |

where , with

and . Note that since , which implies that .

Lemma 4

Let be the largest eigenvalue of . Then, under Assumption 1, the matrix satisfies

| (58) |

6.3 Proof of Theorem 1

We follow a (hybrid) Lyapunov-based approach to study the HDS with input , in the error coordinates

where , , and . In these new coordinates, the HDS with input becomes

where , with given by (10). For this system, we will study stability properties with respec to the set , where

| (59) |

6.3.1 Proof of Theorem 1-(a)

We establish item (a) of Theorem 1 via a sequence of lemmas. The following lemma follows directly from the uniform boundedness assumption on the regressors and the definition of in (14).

Lemma 5

There exist such that for all .

Next, we consider the Lyapunov function

| (60) |

and we study its behavior during flows and jumps of . and present a lemma and two auxiliary propositions.

Lemma 6

There exist constants such that

for all .

Proof: Since, by the definition of , we always have , we just need to study . To establish the lower bound, and using the definition of the norm , and since for all , we directly obtain that and . Therefore, , where . To establish the upper bound, we use (25) together with the fact that to obtain that , where we also used the fact that . Using Lemma 4, we obtain , with .

Lemma 7

Suppose that ; then, there exists and such that satisfies for all .

Proof: By direct computation, we have:

| (61) |

where

for all , where

and where we used the fact that . Using the definitions of , , and , and Lemma 10 in the Appendix, it follows that , for all , all , and all , with

| (62) |

and . Using the Cauchy-Schwartz inequality to upper-bound the last term in (61), and since and for all , we obtain:

| (63) |

for all and all , where the last inequality follows from the fact that for all . Setting for and using the lower bound of Lemma 6, the expression in (63) yields:

| (64) |

The result follows by setting and letting be defined as .

Lemma 8

Suppose that ; then,

where , and .

Proof: Using the definition of the jump map , for all we have:

| (65) |

where . By Lemma 11 in the Appendix, the change of during jumps, given by , satisfies:

where we also used the fact that whenever . It then follows that for all . When , the previous inequality yields , wich in turn implies that .

By the construction of the dynamics of , every solution to is guaranteed to have intervals of flow with a duration of at least between any two consecutive jumps. Combining this fact with Lemmas 6, 7, and 8, it follows that renders the set ISS with respect to the input . The ISS property of the HDS with respect to follows directly by employing the change of coordinates .

6.3.2 Proof of Theorem 1-(b)

Let the initial condition satisfy , and let be a maximal solution pair to from the initial condition , and satisfying for all . By Lemma 7, we have that for all such that . Let

| (66) |

and let . Then, letting for every , and via the comparison lemma, it follows that for all such that . On the other hand, from Lemma 8, it follows that , which iterating over yields:

| (67) |

for all such that and where we have used that . Since if and Proposition 8 holds for all , it follows that for all , meaning that

| (68) |

for all . The bounds (67) and (68), together with Lemma 6 and the time-invariance of , imply that for all , where we also used the fact that for all . The bound (32), is obtained by evaluating the above bound at the hybrid times , noting that , and via the change of coordinates .

6.4 Proof of Theorem 2

The proof uses the reduction principle for hybrid systems [29, Corollary 7.24]. First, note that, by construction, satisfies the hybrid basic conditions [29, Assump. 6.5]. Since the flow map is globally Lipschitz in , the HDS does not exhibit finite escape times. To study the stability properties of the system, we first intersect the flow set , the jump set , and the values of the jump map with a compact set . Since already evolves in a compact set, we take only to restrict the states . The new restricted system is denoted as . Since the dynamics of the state are independent of , we can directly use [36, Prop. 1-(a)] to conclude that, under condition (b) of Theorem 2: 1) the set is UGAS for the HDS , and 2) converges to before the hybrid time . It follows that, for all solutions , and all times such that and , the restricted synchronized HDS behaves as having the centralized master timer of Section 4. Next, we intersect the data of the HDS with the set . For this restricted HDS, denoted , Theorem 1 guarantees UGES of the set when . By invoking the reduction principle of [29, Corollary 7.24], we conclude UGES of the set for the HDS . Since this system has bounded solutions, and was arbitrary large, for each compact set of initial conditions of system , we can select sufficiently large such that the restriction in does not affect the solutions from , obtaining UGES of for the original hybrid system . Now, since the convergence of to occurs in finite time after which the stability properties are characterized by Theorem 1, we obtain that is UGES for .

6.5 Proof of Corollary 1

First, note that for any . Therefore, since , the bound (32) implies the following slightly looser bound when :

| (69) |

where and come from Lemma 6. Following similar ideas to [17, 19], and using the definition of , we solve the following optimization problem to maximize the rate of contraction over any window of time :

Computing the derivative of with respect to , and equating to zero, we obtain: , which is the unique minimizer of . By substituting in (69), we obtain

| (70) |

Thus, to have for a given , it suffices to have that Moreover, note that the right hand side of (70) is of order .

6.6 Proof of Corollary 2

7 Conclusion

In this paper, we explored decentralized concurrent learning dynamics with momentum and coordinated resetting for multi-agent systems over directed graphs. The proposed approach utilizes intermittent coordinated resets to enable collective convergence to a common parameter estimate, even with asymmetric information flow. Using Lyapunov theory for hybrid systems, we established input-to-state stability properties for the momentum-based dynamics, subject to a cooperative richness condition on the data matrices and a topology-dependent lower bound on the resetting frequency. We demonstrated the effectiveness of the proposed dynamics in cooperative adaptive control, showcasing their advantages in accelerated convergence and enhanced transient behavior compared to first-order adaptation algorithms.

Acknowledgments

The authors would like to thank Bob Bitmead for fruitful discussions on transient performance and fundamental limitations in adaptive control and batch-based adaptive dynamics.

References

- [1] R. Kamalapurkar, B. Reish, G. Chowdhary, and W. E. Dixon, “Concurrent learning for parameter estimation using dynamic state-derivative estimators,” IEEE Trans. on Automatic Control, vol. 62, no. 7, pp. 3594–3601, 2017.

- [2] K. G. Vamvoudakis, M. F. Miranda, and J. P. Hespanha, “Asymptotically stable adaptive–optimal control algorithm with saturating actuators and relaxed persistence of excitation,” IEEE transactions on neural networks and learning systems, vol. 27, no. 11, pp. 2386–2398, 2015.

- [3] D. E. Ochoa, J. I. Poveda, A. Subbaraman, G. S. Schmidt, and F. R. Pour-Safaei, “Accelerated concurrent learning algorithms via data-driven hybrid dynamics and nonsmooth ODEs,” Learning for Dynamics and Control, pp. 866–878, 2021.

- [4] J. Casas, C.-H. Chang, and V. H. Duenas, “Switched adaptive concurrent learning control using a stance foot model for gait rehabilitation using a hybrid exoskeleton,” IFAC-PapersOnLine, vol. 55, no. 41, pp. 187–192, 2022.

- [5] J. Casas, C.-H. Chang, and V. H. Duenas, “Switched concurrent learning adaptive control for treadmill walking using a lower limb hybrid exoskeleton,” IEEE Trans. on Ctrl. Systems Technology, 2023.

- [6] G. V. Chowdhary and E. N. Johnson, “Theory and flight-test validation of a concurrent-learning adaptive controller,” Journal of Guidance, Control, and Dynamics, vol. 34, no. 2, pp. 592–607, 2011.

- [7] J. I. Poveda, M. Benosman, and K. G. Vamvoudakis, “Data-enabled extremum seeking: A cooperative concurrent learning-based approach,” International Journal of Adaptive Control and Signal Processing, vol. 35, no. 7, pp. 1256–1284, 2021.

- [8] D. E. Ochoa and J. I. Poveda, “Accelerated continuous-time approximate dynamic programming via data-assisted hybrid control,” IFAC-PapersOnLine, vol. 55, no. 12, pp. 561–566, 2022.

- [9] G. Chowdhary and E. Johnson, “Concurrent learning for convergence in adaptive control without persistency of excitation,” in 49th IEEE Conf. on Decision and Control (CDC), pp. 3674–3679, IEEE, 2010.

- [10] J. H. Le and A. R. Teel, “Concurrent learning in high-order tuners for parameter identification,” in 2022 IEEE 61st Conference on Decision and Control (CDC), pp. 2159–2164, IEEE, 2022.

- [11] T. Nguyen, R. Baraniuk, A. Bertozzi, S. Osher, and B. Wang, “Momentumrnn: Integrating momentum into recurrent neural networks,” Advances in Neural Information Processing Systems, vol. 33, pp. 1924–1936, 2020.

- [12] M. Muehlebach and M. I. Jordan, “Optimization with momentum: Dynamical, control-theoretic, and symplectic perspectives,” J. Mach. Learn. Res., vol. 22, Jan 2021.

- [13] W. Su, S. Boyd, and E. J. Candès, “A differential equation for modeling nesterov’s accelerated gradient method: Theory and insights,” J. Mach. Learn. Res., vol. 17, p. 5312–5354, jan 2016.

- [14] A. Wibisono, A. C. Wilson, and M. I. Jordan, “A variational perspective on accelerated methods in optimization,” proceedings of the National Academy of Sciences, vol. 113, no. 47, pp. E7351–E7358, 2016.

- [15] H. H. N. Nguyen, T. Nguyen, H. Vo, S. Osher, and T. Vo, “Improving neural ordinary differential equations with nesterov’s accelerated gradient method,” Advances in Neural Information Processing Systems, vol. 35, pp. 7712–7726, 2022.

- [16] J. I. Poveda and A. R. Teel, “The heavy-ball ODE with time-varying damping: Persistence of excitation and uniform asymptotic stability,” in 2020 American Control Conference (ACC), pp. 773–778, IEEE, 2020.

- [17] B. O’donoghue and E. Candès, “Adaptive restart for accelerated gradient schemes,” Foundations of computational mathematics, vol. 15, no. 3, pp. 715–732, 2015.

- [18] V. Roulet and A. d’Aspremont, “Sharpness, restart and acceleration,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [19] J. I. Poveda and N. Li, “Robust hybrid zero-order optimization algorithms with acceleration via averaging in time,” Automatica, vol. 123, p. 109361, 2021.

- [20] B. Wang, T. Nguyen, T. Sun, A. L. Bertozzi, R. G. Baraniuk, and S. J. Osher, “Scheduled restart momentum for accelerated stochastic gradient descent,” SIAM Journal on Imaging Sciences, vol. 15, no. 2, pp. 738–761, 2022.

- [21] Y. Yu, B. Açıkmeşe, and M. Mesbahi, “Mass–spring–damper networks for distributed optimization in non-Euclidean spaces,” Automatica, vol. 112, p. 108703, 2020.

- [22] N. M. Boffi and J.-J. E. Slotine, “A continuous-time analysis of distributed stochastic gradient,” Neural computation, vol. 32, no. 1, pp. 36–96, 2020.

- [23] C. Sun and G. Hu, “A continuous-time Nesterov accelerated gradient method for centralized and distributed online convex optimization,” arXiv preprint arXiv:2009.12545, 2020.

- [24] D. E. Ochoa and J. I. Poveda, “Momentum-based Nash set-seeking over networks via multi-time scale hybrid dynamic inclusions,” IEEE Transactions on Automatic Control, 2023.

- [25] D. E. Ochoa, J. I. Poveda, C. A. Uribe, and N. Quijano, “Robust optimization over networks using distributed restarting of accelerated dynamics,” IEEE Ctrl. Systems Letters, vol. 5, no. 1, pp. 301–306, 2020.

- [26] S. Z. Khong, Y. Tan, C. Manzie, and D. Nešić, “Multi-agent source seeking via discrete-time extremum seeking control,” Automatica, vol. 50, no. 9, pp. 2312–2320, 2014.

- [27] X. Chen and Y. Li, “Smooth formation navigation of multiple mobile robots for avoiding moving obstacles,” International Journal of Control, Automation, and Systems, vol. 4, no. 4, pp. 466–479, 2006.

- [28] W. Chen, C. Wen, S. Hua, and C. Sun, “Distributed cooperative adaptive identification and control for a group of continuous-time systems with a cooperative pe condition via consensus,” IEEE Trans. on Automatic Control, vol. 59, no. 1, pp. 91–106, 2013.

- [29] R. Goebel, R. G. Sanfelice, and A. R. Teel, Hybrid dynamical systems. Princeton University Press, 2012.

- [30] E. D. Sontag and Y. Wang, “On characterizations of the input-to-state stability property,” Systems & Control Letters, vol. 24, no. 5, pp. 351–359, 1995.

- [31] F. Bullo, Lectures on network systems, vol. 1. CreateSpace, 2018.

- [32] C. Cai and A. R. Teel, “Characterizations of input-to-state stability for hybrid systems,” Systems & Ctrl. Ltrs., vol. 58, no. 1, pp. 47–53, 2009.

- [33] J. I. Poveda, K. G. Vamvoudakis, and M. Benosman, “CODES: Cooperative data-enabled extremum seeking for multi-agent systems,” in 2019 IEEE 58th Conference on Decision and Control (CDC), pp. 2988–2993, IEEE, 2019.

- [34] M. U. Javed, J. I. Poveda, and X. Chen, “Excitation conditions for uniform exponential stability of the cooperative gradient algorithm over weakly connected digraphs,” IEEE Control Systems Letters, 2021.

- [35] A. C. Wilson, B. Recht, and M. I. Jordan, “A Lyapunov analysis of accelerated methods in optimization.,” J. Mach. Learn. Res., vol. 22, pp. 113–1, 2021.

- [36] M. U. Javed, J. I. Poveda, and X. Chen, “Scalable resetting algorithms for synchronization of pulse-coupled oscillators over rooted directed graphs,” Automatica, vol. 132, p. 109807, 2021.

- [37] H. Ríos, D. Efimov, J. A. Moreno, W. Perruquetti, and J. G. Rueda-Escobedo, “Time-varying parameter identiffication algorithms: Finite and fixed-time convergence,” IEEE Transactions on Automatic Control, vol. 62, no. 7, pp. 3671–3678, 2017.

- [38] F. Tatari, M. Mazouchi, and H. Modares, “Fixed-time system identification using concurrent learning,” IEEE Transactions on Neural Networks and Learning Systems, vol. 34, no. 8, pp. 1–11, 2021.

- [39] Z. Zang and R. R. Bitmead, “Transient bounds for adaptive control systems,” in 29th IEEE conference on decision and control, pp. 2724–2729, IEEE, 1990.

- [40] H. K. Khalil, Nonlinear systems; 3rd ed. Upper Saddle River, NJ: Prentice-Hall, 2002.

- [41] R. Goebel, R. G. Sanfelice, and A. R. Teel, Hybrid Dynamical Systems: Modeling, Stability and Robustness. Princeton University Press, 2012.

- [42] X.-B. Gao, “Exponential stability of globally projected dynamic systems,” IEEE Transactions on Neural Networks, vol. 14, no. 2, pp. 426–431, 2003.

- [43] H. Zhang, Z. Li, Z. Qu, and F. L. Lewis, “On constructing Lyapunov functions for multi-agent systems,” Automatica, vol. 58, pp. 39–42, 2015.

- [44] D. S. Bernstein, Matrix mathematics: theory, facts, and formulas. Princeton university press, 2009.

- [45] R. A. Horn and C. R. Johnson, Matrix analysis. Cambridge University Press, 2012.

Appendix A Auxiliary Lemmas

Lemma 9

Consider the following block triangular matrix:

Suppose that is non-singular. Then, the minimum singular value of , , satisfies

Proof. First, since the inverse of the block triangular matrix is given by

we can upper-bound the 2-norm matrix of :

| (71) |

Then, since the minimum singular value of a matrix is the inverse of the 2-norm of the inverse matrix, i.e., , we can use (71) to obtain the result.

Lemma 10

Proof: First, we show that the matrix-valued function is positive-definite uniformly over , , and . To do this, we decompose as follows:

| (76) |

where

and

Using the definition of , and the fact that for all , we obtain

| (77) |

Also, it follows that

| (78) |

for all , where

| (79) |

Note that since condition (31) holds by assumption. Therefore, since

it follows that the matrix is positive definite uniformly over , , and [45, Theorem 7.7.7].

Now, we establish the matrix inequality (74). To do so, we use the bounds (77) and (78) for (76) to obtain that

| (80) |

where is the upper block triangular matrix

By applying Lemma 9 on the matrix , and using (80) together with the fact that has full column rank and thus that , we obtain

where we have used the fact that the induced 2-norm is sub-multiplicative and that and . This completes the proof.