DCT Approximations Based on Chen’s Factorization

In this paper, two 8-point multiplication-free DCT approximations based on the Chen’s factorization are proposed and their fast algorithms are also derived. Both transformations are assessed in terms of computational cost, error energy, and coding gain. Experiments with a JPEG-like image compression scheme are performed and results are compared with competing methods. The proposed low-complexity transforms are scaled according to Jridi-Alfalou-Meher algorithm to effect 16- and 32-point approximations. The new sets of transformations are embedded into an HEVC reference software to provide a fully HEVC-compliant video coding scheme. We show that approximate transforms can outperform traditional transforms and state-of-the-art methods at a very low complexity cost.

Keywords

Approximate DCT,

Chen’s factorization,

Fast algorithms,

Image and video compression,

Low-complexity transforms

1 Introduction

Discrete transforms are very useful tools in digital signal processing and compressing technologies [10, 23]. In this context, the discrete cosine transform (DCT) plays a key role [2] since it is a practical approximation for the Karhunen-Loève transform (KLT) [15]. The KLT has the property of being optimal in terms of energy compaction when the intended signals are modeled after a highly correlated first-order Markov process [10]. This is a widely accepted supposition for natural images [17].

Particularly, the DCT type II (DCT-II) of order 8 is a widely employed method [10], being adopted in several industry standards of image and video compression, such as JPEG [17], MPEG-1 [40], MPEG-2 [25], H.261 [26], H.263 [27], H.264 [39], and the state-of-the-art HEVC [38]. Aiming at the efficient computation of the DCT, many fast algorithms have been reported in literature [12, 33, 43, 53]. Nevertheless, these methods usually need expensive arithmetic operations, as multiplications, and an arithmetic on floating point, demanding major hardware requirements [32].

An alternative to the exact DCT computation is the use of DCT approximations that employ integer arithmetic only and do not require multiplications [10, 23]. In this context, several approximations for the DCT-II were proposed in literature. Often the elements of approximate transform matrices are defined over the set [20, 14]. Relevant methods include the signed DCT (SDCT) [20] and the Bouguezel-Ahmad-Swamy (BAS) series of approximations [7, 8, 9, 3].

Transform matrices with elements in have null multiplicative complexity [5]. Thus their associate hardware implementations require only additions and bit-shifting operations [3]. This fact renders such multiplierless approximations suitable for hardware-software implementations on devices/sensors operating at low computational power and severe energy consumption constraints [11, 39, 40].

In this work, we aim at the following goals:

-

•

the proposition of new multiplication-free approximations for the 8-point DCT-II, based on Chen’s algorithm [12];

-

•

the derivation of fast algorithms for the introduced transforms;

-

•

a comprehensive assessment of the new approximations in terms of coding and image compression performance compared to popular alternatives;

-

•

the extension of the proposed 8-point transforms to 16- and 32-point DCT approximations by means of the scalable recursive method proposed in [29]; and

-

•

the embedment of the obtained approximations into an HEVC-compliant reference software [28].

This paper unfolds as follows. Section 2 presents Chen’s factorization for the 8-point DCT-II. In Section 3, we present two novel 8-point multiplierless transforms and their fast algorithms. The proposed approximations are assessed and mathematically compared with competing methods in Section 4. Section 5 provides comprehensive image compression analysis based on a JPEG-like compression scheme. Several images are compressed and assessed for quality according to the approximate transforms. Section 6 extends the 8-point transforms to 16- and 32-point DCT approximations and considers a real-world video encoding scheme based on these particular DCT approximations. Final conclusions and remarks are drawn in Section 7.

2 Chen’s factorization for the DCT

Chen et al. [12] proposed a fast algorithm for the DCT-II based on a factorization for the DCT type IV (DCT-IV). These two versions of the DCT differ in the sample points of the cosine function used in their transformation kernel [48, 10]. The -th element of the -point DCT-II and DCT-IV transform matrices, respectively denoted as and , are given by:

where and

In the following, let the zero matrix of order , the identity and the counter-identity matrix, which is given by:

In [47], Wang demonstrated that the 8-point DCT-II matrix possesses the following factorization:

| (1) |

where and are permutation and pre-addition matrices given by, respectively:

Additionally, Chen et al. suggested in [12] that the matrix admits the following factorization:

| (2) |

where

with and .

Replacing (2) in (1) and expanding the factorization, we obtain:

| (3) |

where

with . The expression in (3) is referred to as Chen’s factorization for the 8-point DCT-II.

Without any fast algorithm, the computation of the DCT-II requires 64 multiplications and 56 additions. Using the Chen’s factorization in (3) the arithmetic complexity is reduced to 16 multiplications and 26 additions. The quantities , , and , presented in , , and , are irrational numbers and demand non-trivial multiplications.

For the sake of notation, hereafter the DCT-II is referred to as DCT.

3 Proposed DCT approximations

In this section, new approximations for the DCT are sought. To this end, we notice that the factorization (3) naturally induces the following mapping:

| (4) |

where is the space of 88 matrices with real-valued entries, , , and . Now the matrices , , and are seen as matrix functions, where the constants in (3) are understood as parameters:

| (5) |

In particular, for the values

| (6) | ||||

we have . In the following, we vary the values of parameters , , and aiming at the derivation of low-complexity matrices whose elements are restricted to the set .

To facilitate our approach, we consider the signum and round-off functions, respectively, given by:

where is the floor function for . These functions coincide with their definitions implemented in C and MATLAB computer languages. When applied to vectors or matrices, and operate entry-wise.

Thereby, considering the values in (6) and applying directly the functions above, we obtain the approximate vectors shown below:

Then, the following matrices are generated according to (5):

which are explicitly given by:

Invoking the factorization from (3), we define the following new transforms:

| (7) | ||||

| (8) |

The numerical evaluation of (7) and (8) reveals the following matrix transforms:

Above transformations have simple inverse matrices. Direct matrix inversion rules applied to (7) and (8) furnish:

| (9) | ||||

| (10) |

where

and

Figure 1 depicts the signal flow graphs (SFG) of the proposed fast algorithm for the transform and its inverse . Figure 1(a) and 1(b) are linked to (8) and (10), respectively. The SFGs for the and are similar and were suppressed for brevity.

3.1 Orthogonality and near orthogonality

The proposed transforms lead to nonorthogonal approximations for the DCT. This is also the case for the well-known SDCT [20] and the BAS approximation described in [7]. Indeed, for image/video processing, orthogonality is not strict requirement; being near orthogonality sufficient for very good energy compaction properties.

To quantify how close a given matrix is to an orthogonal matrix, we adopt the deviation from diagonality measure [16], which is described as follows. Let be a square matrix. The deviation from diagonality of is given by:

where denotes the Frobenius norm [51, p. 115]. For diagonal matrices, the function returns zero. Therefore for a full rank low-complexity transformation matrix , we can measure its closeness to orthogonality by calculating .

Both the 8-point SDCT [20] and the BAS approximation proposed in [7] are nonorthogonal and good DCT approximations. Their orthogonalization matrices have deviation from diagonality equal to 0.20 and 0.1774, respectively. Comparatively, matrices and have deviation from diagonality equal to and , respectively. Because these values are smaller than those for the SCDT and BAS approximations, the proposed transformations are more “nearly orthogonal” than such approximations.

In [45], the problem of deriving DCT approximations based on nonorthogonal matrices was addressed. The approach consists of a variation of the polar decomposition method as described in [21]. Let be a full rank low-complexity transformation matrix. If satisfies the condition:

| (11) |

where is a diagonal matrix, then it is possible to derive an orthonormal approximation linked to . This is accomplished by means of the polar decomposition [21]:

where is a positive definite matrix and denotes the matrix square root operation [22].

From the computational point-of-view, it is desirable that be a diagonal matrix [13]. In this case, the computational complexity of is the same as that of , except for the scaling factors in the diagonal matrix . Moreover, in several applications, such scaling factors can be embedded into related computation step. For example, in JPEG-like compression the quantization step can absorb the diagonal elements [3, 9, 31, 14].

Since the transformations and do not satisfy (11), one may consider approximating itself by replacing the off-diagonal elements of by zeros, at the expense of not obtaining a precisely orthogonal approximation . Therefore, we consider the following approximate matrix for :

where returns the diagonal matrix from its matrix argument. Thus, the near orthogonal approximations for the DCT-II associated to the proposed transforms are given by:

where

Notice that and derive from (3.1).

4 Performance assessment and computational cost

To measure the proximity of the new multiplierless transforms with respect to the exact DCT, we elected the total error energy [14] as figure of merit. We also considered the coding gain relative to the KLT [18] as the measure for coding performance evaluation. For comparison, we separated the classical approximations SDCT [20] and BAS [7]—which are nonorthogonal—as well as the HT [42] and the WHT [23], both orthogonal.

4.1 Performance measures

4.1.1 Total error energy

The total error energy is a measure of spectral similarity between the exact DCT and the considered approximate DCT [14]. Although originally defined over the spectral domain [20], the total error energy for a given DCT approximation matrix can be written as:

where is the exact DCT matrix and denotes the Frobenius norm [51]. The total error energy measurements for all discussed approximations are listed in Table 1.

| SDCT | BAS | WHT | HT | |||

|---|---|---|---|---|---|---|

The proposed approximation presents the lower total error energy among all considered transforms, at the same time that requires only 22 additions. The BAS transform, which possess the smallest arithmetic cost among the considered methods, presents a considerably higher total error energy. Thus, and SDCT outperform BAS. Comparatively, HT and WHT are less suitable approximations for the DCT.

4.1.2 Coding gain relative to the KLT

For coding performance evaluation, images are assumed to be modeled after a first-order Markov process with correlation coefficient , where [32, 10, 18]. Natural images satisfy the above assumptions [32]. Then, the -th element of the covariance matrix of the input signal is given by [10].

Let and be the th rows of and , respectively. Thus, the coding gain of is given by:

where , returns the sum of the elements of its matrix argument [35], operator is the element-wise matrix product [41], , and is the Euclidean norm. For orthogonal transforms, the unified coding gain collapses into the usual coding gain as defined in [10, 18].

High coding gain values indicate better energy compaction capability into the transform domain [32]. In this sense, the KLT is optimal [10, 15]. Thus, an appropriate measure for evaluating the coding gain is given by [18]:

where denotes the coding gain corresponding to the KLT. For example, for and , the coding gains linked to the KLT and DCT are 8.8462 dB and 8.8259 dB, respectively [10]. Hence, the DCT coding gain relative to the KLT is .

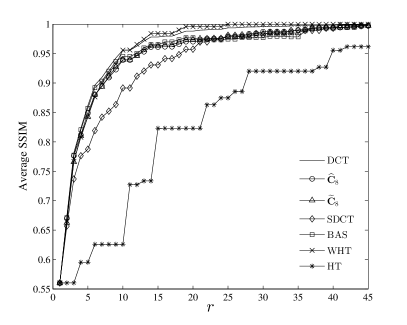

Figure 2 compares the values of coding gain relative to the KLT for the considered transforms in the range . As expected, the DCT has the smallest difference with respect to the KLT, followed by the HT and WHT (both with the same values). Roughly orthogonal transforms tend to show better coding gain performance when compared to nonorthogonal. The proposed transforms and could outperform the SDCT and BAS, both nonorthogonal transformations. As , the approximation performs as well as the HT and WHT. This scenario is realistic for image compression, because natural images exhibit high inter-pixel correlation [17].

4.2 Computational cost

The low-complexity matrices associated to the proposed approximations and their inverses possess multiplierless matrix factorizations as shown in (7), (8), (9), and (10). Therefore, the only truly multiplicative elements are the ones found in the diagonal matrices and . However, in the context of image and video coding, such diagonal matrices can easily absorbed in the quantization step [3, 9, 31, 14]. As a consequence, in that context, the introduced approximations can be understood as fully multiplierless operations.

Table 2 presents the arithmetic complexity of the considered transforms. The computational cost of the exact DCT according to the Chen’s factorization (cf. (3)) [12] and the integer DCT for HEVC [34] are also included for comparison. The proposed approximation requires only 22 additions. On the other hand, the computational cost of is comparatively larger.

5 Low-complexity image compression

In this section, we describe a JPEG-like image compression computational experiment. Proposed transformations are evaluated in terms of their data compression capability.

5.1 JPEG-like compression

We considered a set of 30 standard 8-bit 512512 gray scale images obtained from [1]. The images were subdivided into 88 blocks. Each block was submitted to the 2-D transformation given by [44]:

where is a particular transformation. The 64 obtained coefficients in were arranged according to the standard zig-zag sequence [46]. The first coefficients were retained, being the remaining coefficients discarded. We adopted . Then for each block , the 2-D inverse transform was applied to reconstruct the compressed image:

Finally, the resulting image was compared with the original image according to the peak signal-to-noise ratio (PSNR) [24] and the structural similarity index (SSIM) [50, 49]. The absolute percentage error (APE) for such metrics relative to the exact DCT was also considered. The PSNR is the most commonly used measure for image quality evaluation. However, the SSIM measure consider visual human system characteristics which are not considered by PSNR [50]. Following the methodology proposed in [14], the average values of the measures over the 30 standard images were computed. It leads to statistically more robust results when compared with single image analysis [30].

5.2 Results

Results of the still image compression experiment are presented in Figure 4 and 4. In Figure 4(b), the curve corresponding to the HT was suppressed because it presents excessively high values in comparison with other curves. In terms of PSNR, outperforms both the SDCT and the BAS approximations; and provides similar results as to those furnished by the WHT, but at a lower computational cost. For SSIM results, both transforms and show similar results. In terms of PSNR, the proposed approximation performs closely to the WHT. Figure 3(a) and 3(b) show that is superior to the WHT at high compression ratios ().

A qualitative analysis is displayed in Figure 5. Standard Elaine image was compressed and reconstructed according to the SDCT, WHT, HT, BAS, and the proposed approximation for visual inspection. Compressed image resulted from the exact DCT is also exhibited. All images were compressed with , which represents a removal of approximately of the transformed coefficients. The visual analysis of the obtained images shows the superiority of the proposed transform over the SDCT in image compression. Furthermore, Table 3 lists the PSNR and SSIM values for the Elaine image, for . The PSNR and SSIM values are listed for two additional images (Lenna and Boat images). The proposed transform outperforms the HT, WHT, and SDCT approximations in terms of PSNR and SSIM and BAS approximation in terms of PSNR for the Elaine and Lenna images.

5.3 Blocking artifact analysis

A visually undesirable effect in image compression is the emergence of blocking artifacts [17, p. 573]. Figure 6 shows a qualitative comparison in terms of blocking artifact resulted from , WHT, and BAS. The proposed approximation effected a lower degree of blocking artifact comparatively with the WHT and BAS.

6 HEVC-compliant video encoding

In this section, we aim at demonstrating the practical real-world applicability of the proposed DCT approximations for video coding. However, the HEVC standard requires not only an 8-point transform, but also 4-, 16-, and 32-point transforms. In order to derive Chen’s DCT approximations for larger blocklengths, we considered the algorithm proposed by Jridi-Alfalou-Meher (JAM) [29]. We embedded these low-complexity transforms into a publicly available reference software compliant with the HEVC standard [28].

The JAM algorithm consists of a scalable method for obtaining higher order transforms from an 8-point DCT approximation. An -point DCT approximation , where is a power of two, is recursively obtained through:

where

and is an resulting from expanding the identity matrix by interlacing it with zero rows. The matrix is a permutation matrix and does not introduce any arithmetic cost. Matrix contributes with only additions. Furthermore, the scaling factor can be merged into the image compression quantization step and does not contribute to the arithmetic complexity of the transform. Thus, the additive complexity of is equal to twice the additive complexity of plus additions [29].

6.1 Chen’s DCT approximations for large blocklengths

In its original form, the JAM algorithm employs the 8-point DCT approximation introduced in [14]. In this section, we adapt the JAM algorithm to derive DCT approximations based on Chen’s algorithm for arbitrary power-of-two () blocklengths. We are specially interested in 16- and 32-point low-complexity transformations for subsequent embedding into the HEVC standard. Let be a power of two. We introduce the Chen’s signed and rounded transformations, respectively, according to the following recursion:

| (12) |

Based on (7) and (8), and admit the following factorizations:

Thus, applying the factorizations above in (12) and expanding them, we obtain:

| (13) | ||||

| (14) |

where

Their inverse transformations possess the following factorizations:

| (15) | ||||

| (16) |

where

In particular, for , we have from (7) and (8) that , , , , , , and therefore it yields:

where

The near orthogonal DCT approximations linked to the proposed low-complexity matrices are given by (cf. Section 3.1):

where and .

Because the entries of and and their inverses are in the set , we have that the matrices in (13), (14), (15) and (16) also have entries in . Moreover, (13), (14), (15) and (16) can also be recursively obtained from (7), (8), (9), and (10). Thus, and are low-complexity DCT approximations for blocklength . The arithmetic complexity of the proposed 16- and 32-point Chen’s approximations and transformations prescribed in the HEVC standard are presented in Table 4. In terms of hardware implementation, the circuitry corresponding to and and their inverses can be re-used for the hardware implementation of both the direct and inverse Chen’s DCT approximations for larger blocklengths.

6.2 Results

The proposed approximations were embedded into the HEVC reference software [28]. For video coding experiments we considered two set of videos, namely: (i) Group A, which consider eleven CIF videos from [52]; (ii) Group B, with six standard video sequence, one for each class specified in the Common Test Conditions (CTC) document for HEVC [6]. Such classification is based on the resolution, frame rate and, as consequence, the main applications of these kind of media [36]. All test parameters were set according to the CTC document, including the quantization parameter (QP) that assumes values in {22, 27, 32, 37}. As suggested in [29], we selected the Main profile and All-Intra mode for our experiments.

The PSNR measurements—already furnished by the reference software—were obtained for each video frame and YUV channel. The overall PSNR was obtained from each frame according to [37]. We averaged the PSNR values for the first 100 frames of all videos in each group. Figure 7 shows the average PSNR in terms of the quantization parameter (QP) for each set of 8-, 16-, and 32-point transforms: , , and the original transforms in the HEVC standard. Results in Figure 7 show no significant degradation in terms of PSNR regardless of the video group. The proposed approximations resulted in essentially the same frame quality while having a very low computational cost when compared to those originally employed in HEVC.

Additionally, we computed the Bjøntegaard delta PSNR (BD-PSNR) and delta rate (BD-Rate) [19, 4] for compressed videos considering all discussed 8- to 32-point transformations. The first 11 rows of Table 5 present the results for the Group A whilst the remaining ones correspond to Group B. We report a negligible impact in video quality associated to the results from the modified HEVC with the approximate transforms. Similar to the still images experiments, performed better than with a degradation of no more than 0.70dB and 0.58dB for Groups A and B, respectively. These declines in PSNR represent an increase of 10.63% and 7.02% in bitrate, respectively.

| Video information | BD-PSNR (dB) | BD-Rate (%) | ||

| Akiyo | 0.4600 | 0.2990 | ||

| Bowing | 0.5301 | 0.4316 | ||

| Coastguard | 0.7596 | 0.7026 | ||

| Container | 0.4075 | 0.3750 | ||

| Foreman | 0.2263 | 0.1627 | ||

| Hall_monitor | 0.2754 | 0.1952 | ||

| Mobile | 0.2752 | 0.2629 | ||

| Mother_daughter | 0.4202 | 0.3384 | ||

| News | 0.2539 | 0.1975 | ||

| Pamphlet | 0.4253 | 0.3680 | ||

| Silent | 0.3029 | 0.2399 | ||

| PeopleOnStreet | 0.5350 | 0.4734 | ||

| BasketballDrive | 0.3372 | 0.2531 | ||

| RaceHorses | 0.6444 | 0.5781 | ||

| BlowingBubbles | 0.2563 | 0.1986 | ||

| KristenAndSara | 0.4651 | 0.3807 | ||

| BasketballDrillText | 0.1984 | 0.1565 | ||

As a qualitative example, Figure 6.2 displays particular frames of Silent and PeopleOnStreet video sequences after compression according to the original HEVC and to the modified versions of HEVC based on the proposed transforms. Visual inspection shows no sign of image quality degradation.

7 Conclusion

We introduced two new multiplierless DCT approximations based on the Chen’s factorization. The suggested approximations were assessed and compared with other well-known approximations. The proposed transformation presented low total error energy and very close similarity to the exact DCT. Furthermore, presents very close coding gain when compared to the optimal KLT. The approximation outperformed the SDCT, BAS, and HT as tools for JPEG-like still image compression at a lower computational cost. Adapting the JAM scalable method, we also proposed low-complexity Chen’s DCT approximations and , were is a power of two; we also provided fast algorithms for their implementations. The introduced 8-, 16-, and 32-point approximations were embedded into an HEVC reference software and assessed for video compression. Finally, the proposed low-complexity transforms are suitable for image and video coding, being a realistic alternative for efficient and low complexity image/video coding.

Acknowledgments

This research was partially supported by CAPES, CNPq, and FAPERGS, Brazil.

References

- [1] The USC-SIPI Image Database, University of Southern California, Signal and Image Processing Institute., 2011.

- [2] N. Ahmed, T. Natarajan, and K. R. Rao, Discrete Cosine Transform, IEEE Trans. Comput., C-23 (1974), pp. 90–93.

- [3] F. M. Bayer and R. J. Cintra, DCT-like transform for image compression requires 14 additions only, Electron. Lett., 48 (2012), pp. 919–921.

- [4] G. Bjøntegaard, Calculation of average PSNR differences between RD-curves, in 13th VCEG Meeting, Austin, TX, USA, Apr 2001. Document VCEG-M33.

- [5] R. E. Blahut, Fast Algorithms for Signal Processing, Cambridge University Press, 2010.

- [6] F. Bossen, Common test conditions and software reference configurations, 2013. Document JCT-VC L1100.

- [7] S. Bouguezel, M. O. Ahmad, and M. N. S. Swamy, A multiplication-free transform for image compression, in 2nd International Conference on Signals, Circuits and Syst. (SCS), Nov. 2008, pp. 1–4.

- [8] , A novel transform for image compression, in IEEE 53rd International Midwest Symposium on Circuits Syst. (MWSCAS), Aug. 2010, pp. 509–512.

- [9] , A low-complexity parametric transform for image compression, in IEEE International Symposium on Circuits Syst. (ISCAS), May 2011, pp. 2145–2148.

- [10] V. Britanak, P. C. Yip, and K. R. Rao, Discrete Cosine and Sine Transforms: General Properties, Fast Algorithms and Integer Approximations, Elsevier Science, 2010.

- [11] T. S. Chang, C. S. Kung, and C. W. Jen, A simple processor core design for DCT/IDCT, IEEE Trans. Circuits Syst. Video Technol., 10 (2000), pp. 439–447.

- [12] W. H. Chen, C. Smith, and S. Fralick, A fast computational algorithm for the Discrete Cosine Transform, IEEE Trans. Commun., 25 (1977), pp. 1004–1009.

- [13] R. J. Cintra, An integer approximation method for discrete sinusoidal transforms, Circuits, Syst., and Signal Process., 30 (2011), pp. 1481–1501.

- [14] R. J. Cintra and F. M. Bayer, A DCT approximation for image compression, IEEE Signal Process. Lett., 18 (2011), pp. 579–582.

- [15] M. Effros, H. Feng, and K. Zeger, Suboptimality of the Karhunen-Loève transform for transform coding, IEEE Trans. Inf. Theory, 50 (2004), pp. 1605–1619.

- [16] B. N. Flury and W. Gautschi, An algorithm for simultaneous orthogonal transformation of several positive definite symmetric matrices to nearly diagonal form, SIAM J. Sci. Stat. Comput., 7 (1986), pp. 169–184.

- [17] R. C. Gonzalez and R. E. Woods, Digital image processing, Prentice-Hall, Inc., Upper Saddle River, NJ, USA, 3rd ed., 2006.

- [18] J. Han, Y. Xu, and D. Mukherjee, A butterfly structured design of the hybrid transform coding scheme, in Picture Coding Symposium, 2013, pp. 1–4.

- [19] P. Hanhart and T. Ebrahimi, Calculation of average coding efficiency based on subjective quality scores, J. Vis. Commun. Image R., 25 (2014), pp. 555–564.

- [20] T. I. Haweel, A new square wave transform based on the DCT, Signal Process., 81 (2001), pp. 2309–2319.

- [21] N. J. Higham, Computing the polar decomposition with applications, SIAM J. Sci. Stat. Comput., 7 (1986), pp. 1160–1174.

- [22] N. J. Higham, Computing real square roots of a real matrix, Linear Algebra Appl., 88–89 (1987), pp. 405–430.

- [23] K. J. Horadam, Hadamard matrices and their applications, Cryptography Commun., 2 (2010), pp. 129–154.

- [24] Q. Huynh-Thu and M. Ghanbari, Scope of validity of PSNR in image/video quality assessment, Electron. Lett., 44 (2008), pp. 800–801.

- [25] International Organisation for Standardisation, Generic coding of moving pictures and associated audio information – Part 2: Video, ISO/IEC JTC1/SC29/WG11 - coding of moving pictures and audio, ISO, 1994.

- [26] International Telecommunication Union, ITU-T recommendation H.261 version 1: Video codec for audiovisual services at kbits, tech. rep., ITU-T, 1990.

- [27] , ITU-T recommendation H.263 version 1: Video coding for low bit rate communication, tech. rep., ITU-T, 1995.

- [28] Joint Collaborative Team on Video Coding (JCT-VC), HEVC reference software documentation, 2013. Fraunhofer Heinrich Hertz Institute.

- [29] M. Jridi, A. Alfalou, and P. K. Meher, A generalized algorithm and reconfigurable architecture for efficient and scalable orthogonal approximation of DCT, IEEE Trans. Circuits Syst. I, Reg. Papers, 62 (2015), pp. 449–457.

- [30] S. M. Kay, Fundamentals of Statistical Signal Processing, Volume I: Estimation Theory, vol. 1 of Prentie Hall Signal Processing Series, Prentice Hall, Upper Saddle River, NJ, 1993.

- [31] K. Lengwehasatit and A. Ortega, Scalable variable complexity approximate forward DCT, IEEE Trans. Circuits Syst. Video Technol., 14 (2004), pp. 1236–1248.

- [32] J. Liang and T. D. Tran, Fast multiplierless approximations of the DCT with the lifting scheme, IEEE Trans. Signal Process., 49 (2001), pp. 3032–3044.

- [33] C. Loeffler, A. Ligtenberg, and G. S. Moschytz, Practical fast 1-D DCT algorithms with 11 multiplications, in International Conference on Acoust., Speech, Signal Process. (ICASSP), May 1989, pp. 988–991.

- [34] P. K. Meher, S. Y. Park, B. K. Mohanty, K. S. Lim, and C. Yeo, Efficient Integer DCT Architectures for HEVC, IEEE Trans. Circuits Syst. Video Technol., 24 (2014), pp. 168–178.

- [35] J. K. Merikoski, On the trace and the sum of elements of a matrix, Linear Algebra Appl., 60 (1984), pp. 177–185.

- [36] M. Naccari and M. Mrak, Chapter 5 - perceptually optimized video compression, in Academic Press Library in signal Processing Image and Video Compression and Multimedia, vol. 5, Elsevier, 2014, pp. 155–196.

- [37] J.-R. Ohm, G. J. Sullivan, H. Schwarz, T. K. Tan, and T. Wiegand, Comparison of the coding efficiency of video coding standards - including high efficiency video coding (HEVC), IEEE Trans. Circuits Syst. Video Technol., 22 (2012), pp. 1669–1684.

- [38] M. T. Pourazad, C. Doutre, M. Azimi, and P. Nasiopoulos, HEVC: The new gold standard for video compression: How does HEVC compare with H.264/AVC?, IEEE Consum. Electron. Mag., 1 (2012), pp. 36–46.

- [39] A. Puri, X. Chen, and A. Luthra, Video coding using the H.264/MPEG-4 AVC compression standard, Signal Process.: Image Commun., 19 (2004), pp. 793–849.

- [40] N. Roma and L. Sousa, Efficient hybrid DCT-domain algorithm for video spatial downscaling, EURASIP J. Adv. Signal Process, 2007 (2007), pp. 1–16.

- [41] G. A. F. Seber, A Matrix Handbook for Statisticians, John Wiley & Sons, Inc, 2008.

- [42] J. Seberry, B. J. Wysocki, and T. A. Wysocki, On some applications of Hadamard matrices, Metrika, 62 (2005), pp. 221–239.

- [43] N. Suehiro and M. Hatori, Fast algorithms for the DFT and other sinusoidal transforms, IEEE Trans. Acoust., Speech, Signal Process., 34 (1986), pp. 642–644.

- [44] T. Suzuki and M. Ikehara, Integer DCT based on direct-lifting of DCT-IDCT for lossless-to-lossy image coding, IEEE Trans. Image Process., 19 (2010), pp. 2958–2965.

- [45] C. Tablada, F. Bayer, and R. Cintra, A class of DCT approximations based on the Feig-–Winograd algorithm, Signal Process., 113 (2015), pp. 38–51.

- [46] G. Wallace, The JPEG still picture compression standard, IEEE Trans. Consum. Electron., 38 (1992), pp. 18–34.

- [47] Z. Wang, Reconsideration of: A fast computational algorithm for the Discrete Cosine Transform, IEEE Trans. Commun., 31 (1983), pp. 121–123.

- [48] , Fast algorithms for the discrete W transform and for the Discrete Fourier Transform, IEEE Trans. Acoust., Speech, Signal Process., 32 (1984), pp. 803–816.

- [49] Z. Wang and A. C. Bovik, Mean squared error: Love it or leave it? A new look at signal fidelity measures, IEEE Signal Process. Mag., 26 (2009), pp. 98–117.

- [50] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, Image quality assessment: from error visibility to structural similarity, IEEE Trans. Image Process., 13 (2004), pp. 600–612.

- [51] D. Watkins, Fundamentals of Matrix Computations, Pure and Applied Mathematics: A Wiley Series of Texts, Monographs and Tracts, Wiley, 2004.

- [52] Xiph.Org Foundation, Xiph.org video test media, 2014.

- [53] W. Yuan, P. Hao, and C. Xu, Matrix factorization for fast DCT algorithms, in IEEE International Conference on Acoust., Speech, Signal Process. (ICASSP), vol. 3, May 2006, pp. 948–951.