© 2021 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works.

Data-free mixed-precision quantization using novel sensitivity metric

Abstract

Post-training quantization is a representative technique for compressing neural networks, making them smaller and more efficient for deployment on edge devices. However, an inaccessible user dataset often makes it difficult to ensure the quality of the quantized neural network in practice. In addition, existing approaches may use a single uniform bit-width across the network, resulting in significant accuracy degradation at extremely low bit-widths. To utilize multiple bit-width, sensitivity metric plays a key role in balancing accuracy and compression. In this paper, we propose a novel sensitivity metric that considers the effect of quantization error on task loss and interaction with other layers. Moreover, we develop labeled data generation methods that are not dependent on a specific operation of the neural network. Our experiments show that the proposed metric better represents quantization sensitivity, and generated data are more feasible to be applied to mixed-precision quantization.

Index Terms— Deep Learning, Quantization, Data Free

1 Introduction

(a) Data generation

(b) Profiling for quantization

(c) Sensitivity measurement

(d) Mixed-precision inference

In recent years, deep neural networks have simplified and enabled applications in numerous domains, especially for vision tasks [1, 2, 3]. Meanwhile, there is a need to minimize the memory footprint and reduce the network computation cost to deploy on edge devices. Significant efforts have been made to reduce the network size or accelerate inference of the neural network [4, 5, 6]. Several approaches exist to this problem, and quantization has been studied as one of the most reliable solutions. In the quantization process, low-bit representations of both weights and activations introduce quantization noise, which results in accuracy degradation. To alleviate the accuracy loss, retraining or fine-tuning methods are developed by exploiting extensive training datasets [7, 8, 9].

However, these methods are not applicable in many real-world scenarios, where the user dataset is inaccessible due to confidential or personal issues [10]. It is impossible to train the network and verify the quality of a quantized neural network without a user dataset. Although post-training quantization is a frequently suggested method to address this problem [11, 12], a small dataset is often required to decide the optimal clipping range of each layer. The credential statistics of layers are important to ensure the performance of the quantized network in increasing task loss at ultra-low-bit precision.

Considering that most quantization approaches have used uniform bit allocation across the network, the mixed-precision quantization approach takes a step further in preserving the accuracy by lifting the limit of those approaches [13, 14]. As a necessity, several sensitivity measurement methods for maximizing the effects of mixed-precision have also been proposed because it is difficult to determine which sections of the network are comparatively less susceptible to quantization [15, 16, 17]. To measure the quantization robustness of activations and weights, it is necessary to analyze the statistics generated during forward and backward processes by using a reliable dataset. The prior sensitivity metrics use the difference between outputs of the original model and the quantized model when quantizing each layer separately [10, 15]. However, this approach does not consider the interaction of quantization error and other layers. It cannot be neglected because a lower bit quantization implies a larger quantization error. Other prior studies require severe approximations to compute higher-order derivatives efficiently [16, 18].

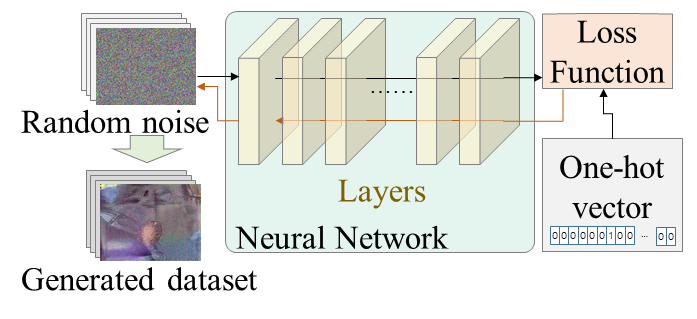

In this work, we provide a straightforward method to compute the layer-wise sensitivity for mixed-precision quantization, considering the interaction of quantization error. In addition, we propose a data generation method, which is effective in the process of post-training mixed-precision quantization, as shown in Figure 1. Prior works [10, 19] use statistics of a particular operation (such as batch normalization), and it is impotent when the networks that do not have the specific operation explicitly. The proposed synthetic data engineering approach is independent of network structures. Moreover, it generates labeled data to verify the quantized network.

The remainder of this paper is organized as follows. Section 2 describes the data generation method and proposes a sensitivity measurement metric. Section 3 provides an experimental demonstration of mixed-precision quantization using the proposed metric and generated data, and the results are compared with previous approaches.

2 Methodology

2.1 Data Generation

For a general data generation method, we seek to avoid any dependency on a specific operation in a convolutional network. As shown in Figure 1(a), we first forward a noisy image initialized from a uniform distribution in the range between 0 and 255, inclusive. To produce a set of labeled data, we use a set of class-relevant vectors/matrices, which means one-hot vectors per class in the classification task. In the forward pass, the loss is computed as

| (1) |

where is the trainable input feature, is the set of class-relevant vectors/matrices, called the golden set, is the neural network, and is an activation function (i.e., softmax) used to compute cross-entropy loss () with the golden set. In addition, we reinforce the loss by maximizing the activation of output of network to enhance the efficiency of data generation process by referring the prior works for model interpretation [20, 21]. The calculated loss is propagated in the backward pass and generates input feature for each class, . Finally, we have crafted synthetic data for each class after several iterations of forward and backward processes.

Figure 2 demonstrates the reliability of crafting labeled data for each class by showing the top-2 confidences per class of synthetic data. All the generated data are used not only to measure the layer-wise sensitivity of the network but also to obtain the statistics of activations to improve the quality of the post-training quantized network.

2.2 Sensitivity Metric

The objective of mixed-precision quantization is to allocate appropriate bits to each layer to reduce the cost (e.g., bit operations [22]) of neural network models while suppressing the task loss growth. The sensitivity metric is an important factor for finding the optimal point between the effects of quantization error and cost. First, we would like to measure the effect of quantization error on the loss of a network that has total layers when weights of layer , are quantized through quantization function into -bit. Given input data and quantized neural network , we consider the Euclidean distance between and as the , the loss of network output, for sensitivity measurement instead of task loss. We can define the effect of quantization error of on as follows:

| (2) |

where denotes the total size of the dataset, and are gradients for the quantization error. is not variable in post-training quantization; thus, we can represent Eq. 2 as weight gradients of quantized parameters by using the chain rule as follows:

| (3) |

The effects of the activation’s quantization error on is represented as activation gradients of quantized activation by applying the derivation of the formula shown in the previous equations. Subsequently, we calculate the expectation for the effects of the quantized network on by using the geometric mean of of -bit quantized activation and quantized weights , which is formulated as

| (4) |

The gradients of the converged single-precision neural network are not changed for the same data. To measure the effect of on other connected layers, we observe the gradient perturbations of and , which are caused by . Consequently, we can measure the effect of quantization error on other layers and loss of the network together, i.e., sensitivity of quantization by using when we only quantize activations or weights of a layer for which we would like to measure the sensitivity. Expressing the single-precision as , we have

| (5) | ||||

for quantization sensitivity metric for . It is straightforward by using the information of back-propagation of quantized network and considers the effect of quantization error on other layers.

3 Experiments

In this section, we first demonstrate the effectiveness of the proposed data generation method in post-training quantization. Then, we show that the proposed sensitivity metric represents the quantization sensitivity of the layer effectively by using our generated data. Finally, sensitivity value by using various datasets indicates that the proposed data generation method is also credible in sensitivity measurement.

To demonstrate our methodology, we use classification models VGG19, ResNet50, and InceptionV3 on the ImageNet validation dataset.

| Model | Dataset | Top-1 | Top-5 |

| VGG19 | ImageNet | 72.31 | 90.81 |

| Noise | 3.45 | 10.45 | |

| ZeroQ | - | - | |

| Proposed | 71.84 | 90.53 | |

| ResNet50 | ImageNet | 75.89 | 92.74 |

| Noise | 11.28 | 29.88 | |

| ZeroQ | 75.47 | 92.62 | |

| Proposed | 75.68 | 92.72 | |

| InceptionV3 | ImageNet | 77.14 | 93.43 |

| Noise | 62.49 | 85.00 | |

| ZeroQ | 76.85 | 93.31 | |

| Proposed | 76.83 | 93.26 |

3.1 Efficacy of Data Generation Method

We evaluate our method using the generated data to determine the clipping range of each layer in post-training quantization. Our experiments exploit a simple symmetric quantization approach, which uses the maximum value of activation as the clipping range of each layer. Hence, maximum values are extremely crucial for confining the dynamic range to utilize the bit-width efficiently, preventing a severe accuracy drop in low-bit quantization.

For our proposed method, input images are initialized according to uniform distribution, which follows standardization or normalization. To generate data, we use Adam optimizer to optimize the loss function with a learning rate of 0.04, and we found that =1 works best empirically.

For InceptionV3, input data are initialized according to (0, 255), which follows standardization with mean and variance by considering that the model requires synthetic data to be converted into the range of [-1, 1] through preprocessing. For VGG19 and ResNet50, input data are initialized according to (0, 255), which follows normalization with the factor of 255 because they require the range of [0, 1].

Table 1 shows the empirical results for ImageNet, random noise, and ZeroQ [10]. In the experiment, ImageNet data are produced by choosing one image per class from the training data, and we generate 1000 data samples randomly using the existing method and the proposed method. As one can see, our method shows similar or higher performances over existing data-free method, having less than 0.5% differences from the results of ImageNet cases. As shown in VGG19, although the existing research is hard to generalize, the method maintains sound performance regardless of the network structure.

3.2 Comparison of Sensitivity Metrics

(a) ResNet50 A32W{32,4}

(b) ResNet50 A{32, 4}W32

(c) InceptionV3 A32W{32,4}

(d) InceptionV3 A{32, 4}W32

To verify several metrics in weight sensitivity, we measure the task loss by switching floating-point weights in the order of layers with higher sensitivity values, where all weights are quantized to 4-bit, and activations do not have quantization error. We denote this experiment as A32W{32,4}. A{32,4}W32 indicates the evaluation of the metrics in activation sensitivity when switching the 4-bit quantized activation to floating-point, where weights are single-precision. ZeroQ [10] measures the KL divergence and [15] measures the Euclidean distance between the original model and the quantized model. ZeroQ [10] only measures the weight sensitivity. HAWQ-V2 [16] uses the average Hessian trace and L2 norm of quantization perturbation. We use the data generated by the proposed method for all metrics.

Figure 3 shows the results of ResNet50 and InceptionV3 over ImageNet dataset. Our sensitivity metric reliably and quickly lowered the task loss, which means that the proposed sensitivity metric is good at weights and activations that are comparatively more susceptible to quantization. To express the results of Figure 3 quantitatively, we calculate the relative area under the curve of task loss graph considering the result of the proposed metric as 1 and summarize it in Table 2. Our proposed method has the smallest area in all results.

| Model | Metric | A32W{32,4} | A{32,4}W32 |

| ResNet50 | Proposed | 1 | 1 |

| L2 [15] | 1.22 | 1.11 | |

| ZeroQ(KLD) | 1.14 | 18.48 | |

| HAWQ-V2 | 1.42 | 2.09 | |

| InceptionV3 | Proposed | 1 | 1 |

| L2 | 1.04 | 1.50 | |

| ZeroQ(KLD) | 1.69 | 8.06 | |

| HAWQ-V2 | 1.25 | 6.17 |

3.3 Sensitivity According to Dataset Type

To show the importance of the data in measuring the sensitivity, we evaluate the proposed sensitivity metric over the different datasets of Section 3.1 on the 4-bit quantized network that clipped the activation range using ImageNet dataset. We measure the task loss as in Section 3.2. The proposed dataset is the most similar in sensitivity to the ImageNet dataset, as shown in Table 3. Notably, the proposed data generation method provides reliable statistics similar to the original training dataset. For InceptionV3, preprocessed ImageNet data are in the range of [-2.03, 2.52]. However, the range of the preprocessed data from [10] is measured as [-11.50, 11.21], while that of our data is in [-2.35, 2.36], whose maximum value is almost similar to that of the ImageNet dataset. These similar statistics are seen in ResNet50. The incorrect statistics of the activation data corresponding to the original training dataset implies inaccurate sensitivity measurement [10]. Thus, our generation method can be used for reliable sensitivity measurement.

| Model | Dataset | A32W{32,4} | A{32,4}W32 |

| ResNet50 | Proposed | 1 | 1 |

| ImageNet | 0.74 | 1.60 | |

| Noise | 1.35 | 6.76 | |

| ZeroQ | 1.11 | 3.17 | |

| InceptionV3 | Proposed | 1 | 1 |

| ImageNet | 1.07 | 2.48 | |

| Noise | 1.36 | 3.54 | |

| ZeroQ | 1.47 | 2.63 |

4 Conclusion

In this paper, we proposed an effective data generation method for post-training mixed-precision quantization. Our approach is to train the random noise to generate data by using class-relevant vectors. It is not only independent of network structure but also provides a labeled dataset. We demonstrate that the generated data are sufficient to ensure the quality of post-training quantization. Furthermore, we proposed a novel sensitivity metric, which is important to optimize bit allocation. The proposed sensitivity metric considers the effect of quantization on other relative layers and task loss together using the gradient perturbation of the quantized neural network. Comparisons of sensitivity metrics were made to show the extent to which a layer with high sensitivity, measured with sensitivity metrics of other methods, affects task loss. The proposed sensitivity metric outperformed other metrics to stand for the effect of quantization error. We leave optimizing bit allocation using the proposed metric and applying to other tasks as part of future work.

References

- [1] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the Inception architecture for computer vision,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2818–2826.

- [2] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, and A. C. Berg, “SSD: Single shot multibox detector,” in European conference on computer vision. Springer, 2016, pp. 21–37.

- [3] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in Proceedings of the European conference on computer vision (ECCV), 2018, pp. 801–818.

- [4] A. Howard, M. Sandler, G. Chu, L.-C. Chen, B. Chen, M. Tan, W. Wang, Y. Zhu, R. Pang, V. Vasudevan, Q. V. Le, and H. Adam, “Searching for mobilenetv3,” arXiv preprint arxiv:1905.02244, 2019.

- [5] G. Hinton, O. Vinyals, and J. Dean, “Distilling the knowledge in a neural network,” arXiv preprint arXiv:1503.02531, 2015.

- [6] S. Han, H. Mao, and W. J. Dally, “Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding,” arXiv preprint arXiv:1510.00149, 2015.

- [7] S. Jung, C. Son, S. Lee, J. Son, J.-J. Han, Y. Kwak, S. J. Hwang, and C. Choi, “Learning to quantize deep networks by optimizing quantization intervals with task loss,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019.

- [8] J. Choi, Z. Wang, S. Venkataramani, P. I.-J. Chuang, V. Srinivasan, and K. Gopalakrishnan, “PACT: Parameterized clipping activation for quantized neural networks,” arXiv preprint arXiv:1805.06085, 2018.

- [9] D. Zhang, J. Yang, D. Ye, and G. Hua, “LQ-Nets: Learned quantization for highly accurate and compact deep neural networks,” in 15th European Conference on Computer Vision, 2018.

- [10] Y. Cai, Z. Yao, Z. Dong, A. Gholami, M. W. Mahoney, and K. Keutzer, “ZeroQ: A novel zero shot quantization framework,” in 17th Computer Vision and Pattern Recognition, 2020.

- [11] P. Nayak, D. Zhang, and S. Chai, “Bit efficient quantization for deep neural networks,” arXiv preprint arXiv:1910.04877, 2019.

- [12] M. Nagel, M. van Baalen, T. Blankevoort, and M. Welling, “Data-free quantization through weight equalization and bias correction,” in 2019 international Conference on Computer Vison, 2019.

- [13] K. Wang, Z. Liu, Y. Lin, J. Lin, and S. Han, “HAQ: Hardware-aware automated quantization with mixed precision,” in The 16th Computer Vision and Pattern Recognition, 2019.

- [14] B. Wu, Y. Wang, P. Zhang, Y. Tian, P. Vajda, and K. Keutzer, “Mixed precision quantization of ConvNets via differentiable neural architecture search,” arXiv preprint arxiv:1812.00090, 2018.

- [15] W. Zhe, J. Lin, V. Chandrasekhar, and B. Girod, “Optimizing the bit allocation for compression of weights and activations of deep neural networks,” in 2019 IEEE International Conference on Image Processing (ICIP). IEEE, 2019, pp. 3826–3830.

- [16] Z. Dong, Z. Yao, Y. Cai, D. Arfeen, A. Gholami, M. W. Mahoney, and K. Keutzer, “HAWQ-V2: Hessian aware trace-weighted quantization of neural networks,” arXiv preprint arxiv:1911.03852, 2019.

- [17] P. Molchanov, A. Mallya, S. Tyree, I. Frosio, and J. Kautz, “Importance estimation for neural network pruning,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 11 264–11 272.

- [18] Z. Dong, Z. Yao, A. Gholami, M. W. Mahoney, and K. Keutzer, “HAWQ: Hessian aware quantization of neural networks with mixed-precision,” in 2019 Intenational Conference on Computer Vison, 2019.

- [19] H. Yin, P. Molchanov, J. M. Alvarez, Z. Li, A. Mallya, D. Hoiem, N. K. Jha, and J. Kautz, “Dreaming to distill: Data-free knowledge transfer via DeepInversion,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 8715–8724.

- [20] M. T. Alexander Mordvintsev, Christopher Olah, “Inceptionism: Going deeper into neural networks,” 2015. [Online]. Available: https://ai.googleblog.com/2015/06/inceptionism-going-deeper-into-neural.html

- [21] F. M. Graetz, “How to visualize convolutional features in 40 lines of code,” 2019. [Online]. Available: https://towardsdatascience.com/how-to-visualize-convolutional-features-in-40-lines-of-code-70b7d87b0030

- [22] M. van Baalen, C. Louizos, M. Nagel, R. A. Amjad, Y. Wang, T. Blankevoort, and M. Welling, “Bayesian bits: Unifying quantization and pruning,” arXiv preprint arXiv:2005.07093, 2020.