Data-driven computation methods for quasi-stationary distribution and sensitivity analysis

Abstract.

This paper studies computational methods for quasi-stationary distributions (QSDs). We first proposed a data-driven solver that solves Fokker-Planck equations for QSDs. Similar as the case of Fokker-Planck equations for invariant probability measures, we set up an optimization problem that minimizes the distance from a low-accuracy reference solution, under the constraint of satisfying the linear relation given by the discretized Fokker-Planck operator. Then we use coupling method to study the sensitivity of a QSD against either the change of boundary condition or the diffusion coefficient. The 1-Wasserstein distance between a QSD and the corresponding invariant probability measure can be quantitatively estimated. Some numerical results about both computation of QSDs and their sensitivity analysis are provided.

Key words and phrases:

quasi-stationary distribution, Monte Carlo simulation, data-driven computation, coupling method1. Introduction

Many models in various applications are described by Markov chains with absorbing states. For example, any models with mass-action kinetics, such as ecological models, epidemic models, and chemical reaction models, are subject to the population-level randomness called the demographic stochasticity, which leads to extinction in finite time. There are also many dynamical systems that have interesting short term dynamics but trivial long term dynamics, such as dynamical systems with transient chaos [15]. A common way of capturing asymptotical properties of these transient dynamics is the quasi-stationary distribution (QSD), which is the conditional limiting distribution conditioning on not hitting the absorbing set yet. However, most QSDs do not have a closed form. So numerical solutions are necessary in various applications.

Computational methods for QSDs are not very well developed. Although the relation between QSD and the Fokker-Planck equation is well known, it is not easy to use classical PDE solver to solve QSDs because of the following two reasons. First a QSD is the eigenfunction of the Fokker-Planck operator whose eigenvalue is unknown. The cost of solving eigenfunction of a discretized Fokker-Planck operator is considerably high. Secondly the boundary condition of the Fokker-Planck equation is unknown. We usually have a mixture of unbounded domain and unknown boundary value at the absorbing set. As a result, Monte Carlo simulations are more commonly used. However the efficiency of the Monte Carlo simulation is known to be low. To get the probability density function, one needs to deal with undesired noise associated to the Monte Carlo simulation. Methods like the kernel density estimator can smooth the solution but also introduce undesired diffusions to the solution, especially when a QSD is highly concentrated at the vicinity of some low-dimensional sets.

The first goal of this paper is to extend the data-driven Fokker-Planck solver developed by the first author to the case of QSDs [7]. Similar to [7], we need a reference solution generated by the Monte Carlo simulation. Then we discretize the Fokker-Planck operator in a numerical domain without the boundary condition. Because of the lack of boundary conditions, the discretization only gives an underdetermined linear system, denoted by . Then we minimize in the null space of . As shown in [8], this optimization problem projects the error terms of to a low dimensional linear subspace, which significantly reduces its norm. Our numerical simulations show that this data-driven Fokker-Planck solver can tolerate very high level of spatially uncorrelated error, so the accuracy of does not have to be high. The main difference between QSD solver and the Fokker-Planck solver is that we need a killing rate to find the QSD, which is obtained by a Monte Carlo simulation. We find that the QSD is not very sensitive against small error in the estimation of the killing rate.

The second goal of this paper is to study the sensitivity of QSDs. Some modifications of either the boundary condition or the model parameters can prevent the Markov process from hitting the absorbing state in finite time. So the modified process would admit an invariant probability measure instead of a QSD. It is important to understand the difference between the QSD of a Markov process and the invariant probability measure of its modification. For example, many ecological models do not consider demographic noise because the population size is large and the QSD is much harder to study. But would the demographic noise completely change the asymptotical dynamics? More generally, a QSD captures the transient dynamics of a stochastic differential equation. If we separate a domain from the global dynamics by imposing reflecting boundary condition, how would the local dynamics be different from the corresponding transient dynamics? All these require some sensitivity analysis with quantitative bounds.

The way of sensitivity analysis is similar to [8]. We need both finite time error and the rate of contraction of the transition kernel of the Markov process. The finite time error is given by both the killing rate and the change of model parameters (if any). Both cases can be estimated easily. The rate of contraction is estimated by the data-driven method proposed in [17]. We design a suitable coupling scheme for the modified Markov process that admits an invariant probability measure. Because of the coupling inequality, the exponential tail of the coupling time can be used to estimate the rate of contraction. The sensitivity analysis is demonstrated by several numerical examples. We can find that the invariant probability measure of the modified process is a better approximation of the QSD if (i) the speed of contraction is faster and (ii) the killing rate is lower.

The organization of this paper is as follows. A short preliminary about QSD, Fokker-Planck equation, simulation method, and coupling method is provided in Section 2. Section 3 introduces the data-driven QSD solver. The sensitivity analysis of QSD is given in Section 4. Section 5 is about numerical examples.

2. Preliminary

In this section, we provide some preliminaries to this paper, which are about the quasi-stationary distribution (QSD), the Fokker-Planck equation, the coupling method, and numerical simulations of the QSD.

2.1. Quasi-stationary distribution

We first give definition of the QSD and the exponential killing rate of a Markov process with an absorbing state. Let be a continuous-time Markov process taking values in a measurable space . Let be the transition kernel of such that for all . Now assume that there exists an absorbing set . The complement is the set of allowed states.

The process is killed when it hits the absorbing set, implying that for all , where is the hitting time of set . Throughout this paper, we assume that the process is almost surely killed in finite time, i.e. .

For the sake of simplicity let (resp. ) be the probability conditioning on the initial condition (resp. the initial distribution ).

Definition 2.1.

A probability measure on is called a quasi-stationary distribution(QSD) for the Markov process with an absorbing set , if for every measurable set

| (2.1) |

or equivalently, if there is a probability measure exists such that

| (2.2) |

in which case we also say that is a quasi-limiting distribution.

Remark 2.1.

In the analysis of QSD, we are particularly interested in a parameter , called the killing rate of the Markov process. If the distribution of the killing time has an exponential tail, then is the rate of this exponential tail. The following theorem shows that the killing time is exponentially distributed when the process starts from a QSD[5].

Theorem 2.1.

Let be a QSD and when starting from , the killing time is exponentially distributed, that is,

where is called the killing rate of .

Throughout this paper, we assume that admits a QSD denoted by with a strictly positive killing rate .

2.2. Fokker-Planck equation

Consider a stochastic differential equation

| (2.3) |

where and is killed when it hits the absorbing set ; is a continuous vector field; is an matrix-valued function; and is the white noise in . The following well known theorem shows the existence and the uniqueness of the solution of equation (2.3).

Theorem 2.2.

There are quite a few recent results about the existence and convergence of QSD. Since the theme of this paper is numerical computations, in this paper we directly assume that admits a unique QSD on set that is also the quasi-limit distribution. The detailed conditions are referred in [18, 13, 20, 9, 21].

Let be the probability density function of . We refer [2] for the fact that satisfies

| (2.4) |

where , and is the killing rate.

2.3. Simulation algorithm of QSD

It remains to review the simulation algorithm for QSDs. In order to compute the QSD, one needs to numerically simulate a long trajectory of . Once hits the absorbing state, a new initial value is sampled from the empirical QSD. The re-sampling step can be done in two different ways. We can either use many independent trajectories that form an empirical distribution [3] or re-sample from the history of a long trajectory [19]. In this paper we use the latter approach.

Let be a long numerical trajectory of the time- sample chain of , where is an numerical approximation of , then the Euler-Maruyama numerical scheme is given by

| (2.5) |

where , is a -dimensional normal random variable.

Another widely used numerical scheme is called the Milstein scheme, which reads

where is a matrix with its -th component being the double It integral

and is a vector of left operators with -th component

Remark 2.2.

Under suitable assumptions of Lipschitz continuity and linear growth conditions for and , the Euler-Maruyama approximation provides a convergence rate of order 1/2, while the Milstein scheme is an order 1 strong approximation[14].

For simplicity, we introduce the algorithm for , specifically, we solve in equation (2.4) numerically on a 2D domain . Firstly, we construct an grid on with grid size . Each small box in the mesh is denoted by . Let be the numerical solution on that we are interested in, then can be considered as a vector in . Each element approximates the density function at the center of each , with coordinate . Generally speaking, we count the number of falling into each box and set the normalized value as the approximate probability density at . As we are interested in the QSD, the main difference from the general Monte Carlo is that the Markov process will be regenerated as the way of its empirical distribution uniformly once it is killed. The details of simulation is shown in Algorithm 1 as following.

Sometimes the Euler-Maruyama method underestimates the probability that moves to the absorbing set, especially when is close to . This problem can be fixed by introducing the Brownian bridge correction. We refer to [4] for details. For a sample falling into a small box which are closed to , the probability of them falling into the trap is relatively high. In fact, this probability is exponentially distributed and the rate is related to the distance from . Let be the Brownian Bridge on the interval . In the 1D case, the law of the infimum and the supremum of the Brownian Bridge can be computed as follows: for every

| (2.6) |

where , and is the strength coefficient of Brownian Bridge. This means that at each step , if , one can compute, with the help of the above properties, a Bernoulli random variable with the parameter

2.4. Coupling Method

The coupling method is used for the sensitivity analysis of QSDs.

Definition 2.2.

(Coupling of probability measures) Let and be two probability measures on a probability space . A probability measure on is called a coupling of and , if two marginals of coincide with and respectively.

The definition of coupling can be extended to any two random variables that take value in the same state space. Now consider two Markov processes and with the same transition kernel . A coupling of and is a stochastic process on the product state space such that

(i) The marginal processes and are Markov processes with the transition kernel ;

(ii) If , we have for all .

The first meeting time of and is denoted as , which is called the coupling time. The coupling is said to be successful if the coupling time is almost surely finite, i.e. .

In order to give an estimate of the sensitivity of the QSD, we need the following two metrics.

Definition 2.3.

(Wasserstein distance) Let be a metric on the state space . For probability measures and on , the Wasserstein distance between and for is given by

| (2.7) |

In this paper, without further specification, we assume that the 1-Wasserstein distance is induced by

Definition 2.4.

(Total variation distance) Let and be probability measures on . The total variation distance of and is

Lemma 2.3.

(Coupling inequality) For the coupling given above and the Wasserstein distance induced by the distance given in (2.7), we have

Proof.

By the definition of Wasserstein distance,

∎

Consider a Markov coupling where and are two numerical trajectories of the stochastic differential equation described in (2.5). Theoretically, there are many ways to make stochastic differential equations couple. But since numerical computation always has errors, two numerical trajectories may miss each other when the true trajectories couple. Hence we need to apply a mixture of the following coupling methods in practice.

Independent coupling. Independent coupling means the noise term in the two marginal processes and are independent when running the coupling process . That is

where and are independent random variables for each .

Reflection coupling Two Wiener processes meet less often than the 1D case when the state space has higher dimensions. This fact makes the independent coupling less effective. The reflection coupling is introduced to avoid this case. Take the Euler-Maruyama scheme of the SDE

as an example, where is a constant matrix. The Euler-Maruyama scheme of reads as

where is a standard Wiener process. The reflection coupling means that we run as

while run as

where is a projection matrix with

Nontechnically, reflecting coupling means that the noise term is reflected against the hyperplane that orthogonally passes the midpoint of the line segment connecting and . In particular, when the state space is 1D.

Maximal coupling Above coupling schemes can bring moves close to when running numerical simulations. However, a mechanism is required to make with certain positive probability. That’s why the maximal coupling is involved. One can couple two trajectories whenever the probability distributions of their next step have enough overlap. Denote and as the probability density functions of and respectively. The implementation of the maximal coupling is described in the following algorithm.

For discrete-time numerical schemes of SDEs, we use reflection coupling when and are far away from each other, and maximal coupling when they are sufficiently close. The threshold of changing coupling method is in our simulation, that is, the maximal coupling is applied when the distance between and is smaller than the threshold.

3. Data-driven solver for QSD

Recall that the probability density function of QSD solves the Fokker-Planck equation . The QSD solver consists of three components: an estimator of the killing rate , a Monte Carlo simulator of QSD that produces a reference solution, and an optimization problem similar as in [16].

3.1. Estimation of

Let be a long numerical trajectory of as described in Algorithm 1. Let be recordings of killing times of the numerical trajectory such that hits at when running Algorithm 1. Note that is an 1D vector and each element in is a sample of the killing time. It is well known that if the QSD exists for a Markov process, then there exists a constant such that

Therefore, we can simply assume that the killing times be exponentially distributed and the rate can be approximated by

One pitfall of the previous approach is that Algorithm 1 only gives a QSD when the time approaches to infinity. It is possible that has not converged close enough to the desired exponential distribution. So it remains to check whether the limit is achieved. Our approach is to check the exponential tail in a log-linear plot. After having , it is easy to choose a sequence of times and calculate for each . Then is an estimator of . Now let (resp. ) be the upper (resp. lower) bound of the confidence interval of such that

where , and [1]. If for each , we accept the estimate . Otherwise we need to run Algorithm 1 for longer time to eliminate the initial bias in .

3.2. Data driven QSD solver.

The data driven solver for the Fokker-Planck equation introduced in [16] can be modified to solve the QSD for the stochastic differential equation (2.3). We use the same 2D setting in Section 2.3 to introduce the algorithm. Let the domain and the boxes be the same as defined in Section 2.3. Let u be a vector in such that approximates the probability density function at the center of the box . As introduced in [7], we consider u as the solution to the following linear system given by the spatial discretization of the Fokker-Planck equation (2.4) with respect to each center point:

| (3.1) |

where is an matrix, which is called the discretized Fokker-Planck operator, and is the killing rate, which can be obtained by the way we mentioned in previous subsection. More precisely, each row in describes the finite difference scheme of equation (2.4) with respect to a non-boundary point in the domain .

Similar to [16], we need the Monte Carlo simulation to produce a reference solution v, which can be obtained via Algorithm 1 in Section 2. Let be a long numerical trajectory of time - sample chain of process produced by Algorithm 1, and let such that

It follows from the ergodicity of (2.3) that v is an approximate solution of equation (2.4) when the trajectory is sufficiently long. However, as discussed in [16], the trajectory needs to be extremely long to make v accurate enough. Noting that the error term of v has little spatial correlation, we use the following optimization problem to improve the accuracy of the solution.

| (3.2) |

The solution to the optimization problem (3.2) is called the least norm solution, which satisfies , within . [16]

An important method called the block data-driven solver is introduced in [7], in order to reduce the scale of numerical linear algebra problem in high dimensional problems. By dividing domain into blocks and discretizing the Fokker-Planck equation, the linear constraint on is

where is an matrix. The optimization problem on is

where is a reference solution obtained from the

Monte-Carlo simulation. Then the numerical solution to Fokker-Planck

equation (3.1) is collage of all

on all blocks. However, the optimization problem ”pushes” most error

terms to the boundary of domain, which makes the solution is less

accurate near the boundary of each

block. Paper

citedobson2019efficient introduced the overlapping

block method and the shifting blocks method to reduce the interface error. The overlapping block method enlarges the

blocks and set the interior solution restricted to the original block

as new solution, while the shifting block method moves the interface

to the interior by shifting all blocks and recalculate the solution.

Note that in Section 3.1, we assume that is a pre-determined value given by the Monte Carlo simulation. Theoretically one can also search for the minimum of with respect to both and v. But empirically we find that the result is not as accurate as using the killing rate from the Monte Carlo simulation, possibly because has too much error.

One natural question is that how the simulation error in would affect the solution to the optimization problem (3.2). Some linear algebraic calculation shows that the optimization problem (3.2) is fairly robust against small change of .

Theorem 3.1.

Let and be the solution to the optimization problem (3.2) with respect to killing rates and respectively, where . Then

where is the smallest singular value of .

Proof.

Let be an perturbation matrix. Let , and . Since and , it is sufficient to prove

Note that

and since is , we can neglect it when we consider the inverse matrix of , then

and without considering the high order term , we can see

Consider the singular value decomposition(SVD) of matrix , i.e. , wherein is an matrix and both are orthogonal. Then , where , and

Note that . Since and are two generalized inverse of ,

where

| (3.3) |

and , hence we conclude that

∎

Remark 3.1.

It is very difficult to estimate the minimum singular value of matrix analytically, even for the simplest case when the Fokker-Planck equation is just a heat equation. But empirically we find that is usually not very large. For example, for the gradient flow with a double well potential is Section 5.4 is , and for the “ring example” in Section 5.3 is only .

4. Sensitivity analysis of QSD

A stochastic differential equation has a QSD usually because it has a natural absorbing state. For example, in ecological models, this absorbing state is the natural boundary of the domain at which the population of some species is zero. Obviously invariant probability measures are easier to study than QSDs. One interesting question is that if we slightly modify the equation such that it does not have absorbing states any more, how can we quantify the difference between QSD and the invariant probability measure after the modification? This is called the sensitivity analysis of QSDs.

In this section, we focus on the difference between the QSD of a stochastic differential equation and the invariant probability measure of a modification of , denoted by . For the sake of simplicity, this paper only compares the QSD (resp. invariant probability measure) of the numerical trajectory of (resp. ), denoted by (resp. ). Denote the QSD (resp. invariant probability measure) of (resp. ) by (resp. ) and the QSD (resp. invariant probability measure) of the original SDE (resp. ) by (resp. ). The sensitivity of invariant probability measure against time discretization has been addressed in [8]. When the time step size of the time discretization is small enough, the invariant probability measure is close to the numerical invariant probability measure . The case of QSD is analogous. Hence is usually a good approximation of .

We are mainly interested in the following two different modifications of .

Case 1: Reflection at One easy way to modify to eliminate the absorbing state is to add a reflecting boundary. This method preserves the local dynamics but changes the boundary condition. More precisely, the trajectory of follows that of until it hits the boundary . Without loss of generality assume is a smooth manifold embedded in . If but , then is the mirror reflection of against the boundary of . Still take the 2D case as an example. Let . Without loss of generality, suppose first hits the absorbing set , then

Case 2: Demographic noise in ecological models. We would also like to address the case of demographic noise in a class of ecological models, such as population model and epidemic model. For the sake of simplicity consider an 1D birth-death process with some environment noise

| (4.1) |

where . It is known that for suitable , the probability of hitting zero in finite time is zero [7]. However, if we take the demographic noise, i.e., the randomness of birth/death events, into consideration, the birth-death process becomes

| (4.2) |

where is a small parameter that is proportional to -th power of the population size, is the new parameter that address the separation of environment noise and demographic noise. For example, if the steady state of is around , we can choose . Different from equation (4.1), equation (4.2) usually can hit the boundary in finite time with probability one. Therefore, equation (4.1) admits an invariant probability measure while equation (4.2) has a QSD. One very interesting question is that, if is sufficiently small, how different is the invariant probability measure of equation (4.1) from the QSD of equation (4.2)? This is very important in the study of ecological models because theoretically every model is subject a small demographic noise. If the invariant probability measure is dramatically different from the QSD after adding a small demographic noise term, then the equation (4.1) is not a good model due to high sensitivity, and we must study the equation (4.2) directly.

We roughly follow [8] to carry out the sensitivity analysis of QSD. Here we slightly modify such that if , we immediately re-sample from the QSD . This new process, denoted by , admits an invariant probability measure . Now denote the transition kernel of and by and respectively. Let be a fixed constant. Let be the 1-Wasserstein distance defined in Section 2. Motivated by [12], we can decompose via the following triangle inequality:

If the transition kernel has enough contraction such that

for some , then we have

Therefore, in order to estimate , we need to look for suitable numerical estimators of the finite time error and the speed of contraction of . The finite time error can be easily estimated in both cases. And the speed of contraction comes from the geometric ergodicity of the Markov process . If our numerical estimation gives

then we set . As discussed in [8], this is a quick way to estimate , and in practice it does not differ from the “true upper bound” very much. The “true upper bound” of in [8] comes from the extreme value theory, which is much more expensive to compute.

4.1. Estimation of contraction rate

Similar as in [17], we use the following coupling method to estimate the contraction rate . Let be a Markov process in such that and are two copies of . Let the first passage time to the “diagonal” hyperplane be the coupling time, which is denoted by . Then by Lemma 2.3

As discussed in [17], we need a hybrid coupling scheme to make sure that two numerical trajectories can couple. Some coupling methods such as reflection coupling or synchronous coupling are implemented in the first phase to bring two numerical trajectories together. Then we compare the probability density function for the next step and couple these two numerical trajectories with the maximal possible probability (called the maximal coupling). After doing this for many times, we will have many samples of denote by . We use the exponential tail of to estimate the contraction rate . More precisely, we look for a constant such that

if the limit exists. See Algorithm 3 for the detail of implementation of coupling. Note that we cannot simply compute the contraction rate start from because only the tail of coupling time can be considered as exponential distributed. In practice, we apply the same method as we compute the killing rate in section 3.1. After having , it is easy to choose a sequence of times and calculate for each . Then is an estimator of . Now let (resp. ) be the upper (resp. lower) bound of the confidence interval of such that

where , and . Let be the largest time that we can still collect available samples. If there exist constants and such that for each , we say that the exponential tail starts at . We accept the estimate of the exponential tail with rate if the confidence interval is sufficiently small, i.e., the estimate of coupling probability at is sufficiently trustable. Otherwise we need to run Algorithm 1 for longer time to eliminate the initial bias in .

4.2. Estimator of error terms

It remains to estimate the finite time error . As we mentioned in the beginning of this section, we will consider two different cases and estimate the finite time errors respectively.

4.2.1. Case 1: Reflection at

Recall that the modified Markov process reflects at the boundary when it hits the boundary. Hence two trajectories

are identical if we set the same noise in the simulation process. only differs from when hits the boundary . When and hit the boundary, is resampled from , and reflects at the boundary. Hence the finite time error is bounded from above by the killing probability within the time interval when starting from .

In order to sample initial value from the numerical invariant measure , we consider a long trajectory . The distance between and the modified trajectory is recorded after time , then we let and restart. See the following algorithm for the detail.

When the number of samples is sufficiently large, are from a long trajectory of the time- skeleton of . Hence they are approximately sampled from . The error term for estimates . Let be the probability measure on that is supported by the hyperplane such that for any . Since the pushforward map is a coupling, it is easy to see that the output of Algorithm 4 gives an upper bound of .

From the analysis above, we have the following lemma, which gives an upper bound of the finite time error .

Lemma 4.1.

For the Wasserstein distance induced by the distance given in (2.7), we have

where is the initial value with distribution .

Proof.

Note that is a coupling of and . From the definition of Wasserstein distance, we have

the inequality in the last step comes from the definition . ∎

4.2.2. Case 2: Impact of a demographic noise

Another common way of modification in ecological models is to add a demographic noise. Let be the numerical trajectory of the SDE with an additive demographic noise . Let be the modification of that resample from whenever hitting so that it admits as an invariant probability measure. Let be the numerical trajectory of the SDE without demographic noise so that admits an invariant probability measure. We have trajectories

Here we assume that has zero probability to hit the absorbing set in finite time. Different from the Case 1, we will need to study the effect of the demographic noise. When estimating the finite time error , we still need to sample the initial value from and record the distance between these two trajectories and up to time . The distance between and can be decomposed into two parts: one is from the killing and resampling, the other is from the demographic noise. The first term is the same as in Case 1. The second term is due to the nonzero demographic noise that can separate and before the killing. In a population model, this effect is more obvious when one species has small population, because when . See the description of Algorithm 5 for the full detail.

When is sufficiently large, are from a long trajectory of the time- skeleton of . Hence they are approximately sampled from . The error term for estimates . A similar coupling argument shows that the output of Algorithm 5 is an upper bound of .

For each initial value , denote

Similar as in Case 1, the following lemma gives an upper bound for the finite time error .

Lemma 4.2.

For the Wasserstein distance induced by the distance given in (2.7), we have

where is the initial value with distribution and .

Proof.

Note that is a coupling of and . From the definition of the Wasserstein distance, we have

according to the definition of . ∎

5. Numerical Examples

5.1. Ornstein–Uhlenbeck process

The first SDE example is the Ornstein–Uhlenbeck process :

| (5.1) |

where and are parameters, is a constant. In addition, is a Wiener process, and is the strength of the noise. In our simulation, we set and the absorbing set . In addition, we apply the Monte Carlo simulation with mesh points on the interval .

We first need to use Algorithm 1 to estimate the survival rate . Our simulation uses Euler-Maruyama scheme with and sample size and depending on the setting. All samples of killing times are recorded to plot the exponential tail. The mean of killing times gives an estimate . The exponential tail of vs. , the upper and lower bound of the confidence interval, and the plot of are compared in Figure 1. We can see that the plot of falls in the confidence interval for all . Hence the estimate of is accepted.

.

Furthermore, we would like to show the robustness of our data-driven QSD solver. The QSD is not explicit given so we use very large sample size ( samples) and very small step size () to obtain a much more accurate solution, which is served as the benchmark. Then we compare the numerical solutions obtained by the Monte Carlo method and the data-driven method for QSD with and samples, respectively. The result is shown in the first column of Figure 2. The data-driven solver performs much better than the Monte Carlo approximation for samples. It takes samples for the direct Monte Carlo sampler to produce a solution that looks as good as the QSD solver. Similar as the data-driven Fokker-Planck solver, our data-driven QSD solver can tolerate high level error in Monte Carlo simulation that has small spatial correlation.

It remains to check the effect of Brownian Bridge. We apply different time step sizes and for each trajectory. We use samples for and samples for to make sure that the number of killing events (for estimating the killing rate) are comparable. When , the error is small with and without Brownian bridge correction. But Brownian bridge correction obviously improves the quality of solution when . See the lower left panel of Figure 2. This is expected because, with larger time step size, the probability that the Brownian bridge hits the absorbing set gets higher.

5.2. Wright-Fisher Diffusion

The second numerical example is the Wright-Fisher diffusion model, which describes the evolution of colony undergoing random mating, possible under the additional actions of mutation and selection with or without dominance [11]. The Wright-Fisher model is an SDE

where is a Wiener process and is the absorbing set. By the analysis of [11], the Yaglom limit, i.e., the QSD, satisfies

The goal of this example is to show the effect of Brownian bridge when the coefficient of noise is singular at the boundary. Since the Euler-Maruyama scheme only has an order of accuracy , in the simulation, we apply the Milstein scheme, which reads

One difficulty of using the Brownian bridge correction in this model is that the coefficient of the Brownian motion is vanishing at the boundary. Recall that the strength coefficient of Brownian bridge is denoted by . Since the coefficient of the Brownian motion is vanishing, the original strength coefficient can dramatically overestimate the probability of hitting the boundary. In this example, it is not possible to explicitly calculate the conditional distribution of the Brownian bridge that starts from and ends at . But we find that empirically can fix this problem. Since a similar vanishing coefficient of the Brownian motion also appears in many ecological models, we will implement this modified Brownian bridge correction when simulating these models.

The effect of Brownian bridge is shown in the left side of Figure 3. We compare the solutions obtained via Monte Carlo method and the data-driven method with Brownian Bridge by running samples on with time step size . The Monte Carlo approximation is far from the true density function of Beta(1,2) near , while the use of the original Brownian Bridge only makes things worse. The modified Brownian Bridge solves this boundary effect problem reasonably well. The output of the data-driven QSD solver has a similar result (Figure 3 Right). Let and . One can see that the numerical QSD is much closer to the true distribution if we replace the strength coefficient of the Brownian bridge by the modified strength coefficient .

5.3. Ring density function

Consider the following stochastic differential equation:

where and are independent Wiener processes. In the simulation, we set the strength of noise .

We first look at the approximation obtained by Monte Carlo method with mesh points on the domain . The simulation uses step size and samples. (See upper left panel in Figure 4). The Monte Carlo approximation has too much noise to be useful. The quality of this solution can be significantly improved by using the data-driven QSD solver. See upper right panel in Figure 4.

The simulation result shows the estimated rate of killing . We use this example to test the sensitivity of solution u against small change of the killing rate. We compare the approximations obtained by setting the killing rate be , and respectively. Heat maps of the difference between QSDs with “correct” and “wrong” killing rates are shown in two middle panels in Figure 4. It shows that difference brought by “wrong” rates is only , which can be neglected. This result coincides with the analysis in Theorem 3.1 in this paper.

Finally, we would like to emphasis that the data-driven QSD solver can tolerate very high level of spatially uncorrelated noise in the reference solution . For example, if we use the same long trajectory with samples that generates the top left panel of Figure 4, but only select samples with intervals of steps of the numerical SDE solver, the Monte Carlo data becomes very noisy (Bottom left panel of Figure 4). However, longer intermittency between samples also reduces the spatial correlation between samples. As a result, the output of the QSD solver has very little change except at the boundary grid points, because the optimization problem (3.2) projects most of error to the boundary of the domain. (See bottom right panel of Figure 4.) This result highlights the need of high quality samplers. A Monte Carlo sampler with smaller spatial correlation between samples can significantly reduce the number of samples needed in the data-driven QSD solver.

5.4. Sensitivity of QSD: 1D examples

In this subsection, we use 1D examples to study the sensitivity of QSDs against changes on boundary conditions. Consider an 1D gradient flow of the potential function with an additive noise perturbation

| (5.2) |

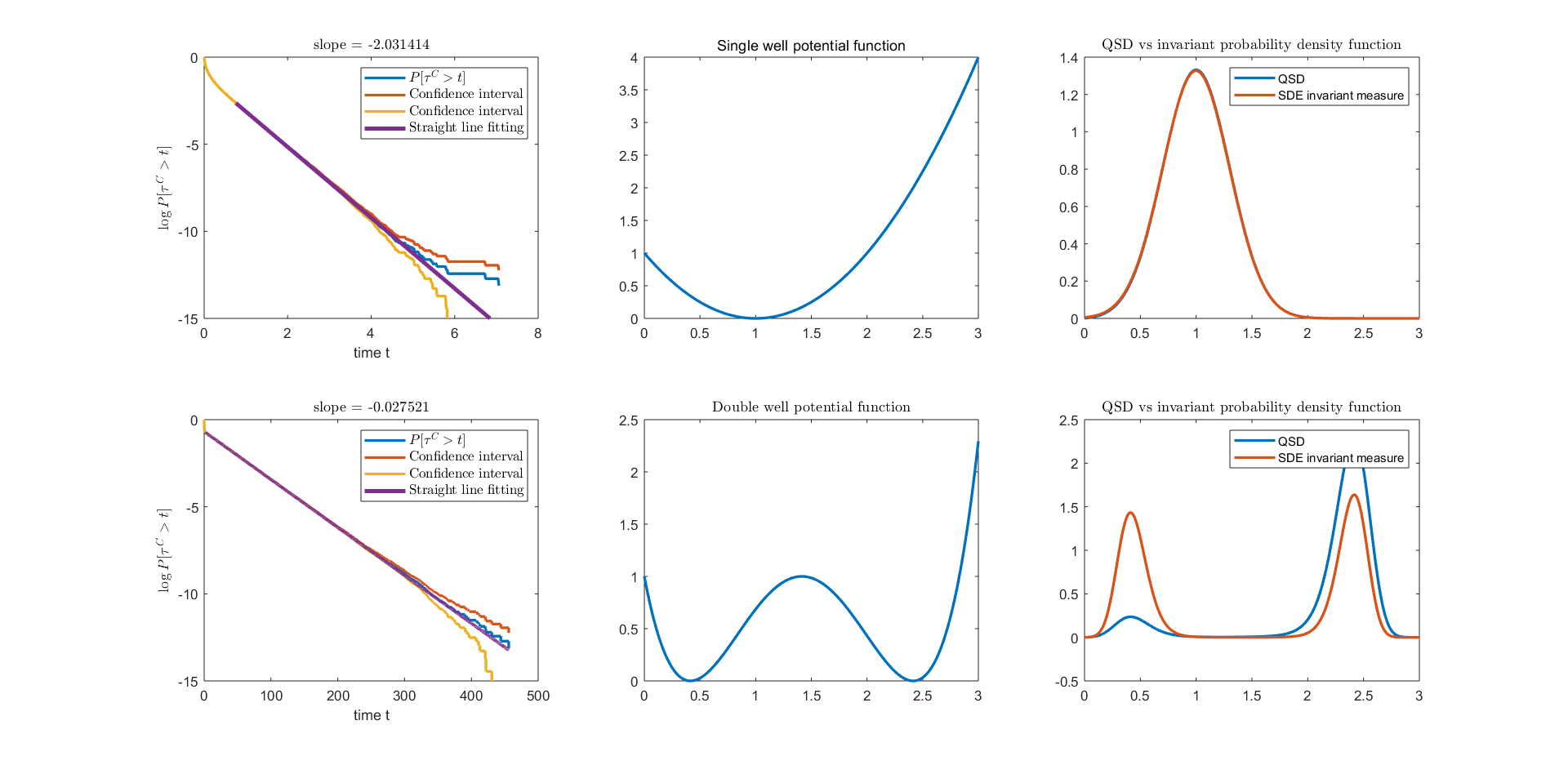

Let be the absorbing state of . So if , admits a QSD, denoted by . If we let the stochastic process reflect at , the modified stochastic process, denoted by , admits an invariant probability measure denoted by . We will compare the sensitivity of against the change of boundary condition for two different cases whose speed of mixing are different, namely a single well potential function and a double well potential function.

We choose a single well potential function and a double well potential function . The values of minima of both and are zero. The values of and at the absorbing state are . And the height of the barrier between two local minima of is . The strength of noise is in both examples. See Figure 5 middle column for plots of these two potential functions. In both cases, the QSD and the invariant probability measure are computed on the domain . To further distinguish these two cases, we denote the QSD of equation (5.2) with absorbing state and potential function (resp. ) by (resp. ) and the invariant probability measure of equation (5.2) with reflection boundary at and potential function (resp. ) by (resp. ). Probability measures and (resp. and ) are compared in Figure 5 right column. We can see that the QSD and the invariant probability measure have small difference for the single well potential function . But they look very different for the double well potential function . With the double well potential function, there is a visible difference between probability density functions of and . The density function of QSD is much smaller than the invariant probability measure around the left local minimum because this local minimum is closer to the absorbing set, which makes killing and regeneration more frequent when is near this local minimum. In other words, the QSD of equation (5.2) with respect to the double well potential is very sensitive against the change at the boundary.

The reason of the high sensitivity is illustrated by the coupling argument. We first run Algorithm 3 with 8 independent long trajectories with length of and collect the coupling times. The slope of exponential tail of the coupling time gives the rate of contraction of . The versus plot is demonstrated in log-linear plot in Figure 5 left column. The slope of exponential tail is for the single well potential , and for the double well potential case. Then we run Algorithm 4 to estimate the finite time error for both cases. Since the single well potential case has a much faster coupling speed, we can choose . The output of Algorithm 4 is . This gives an estimate . The double well potential case converges much slower. We choose to make sure that the denominator is not too small. As a result, Algorithm 4 gives an approximation , which means . This is consistent with the right column seen in Figure 5, the QSD of the double well potential is much more sensitive against a change of the boundary condition than the single well potential case.

5.5. Lotka-Volterra Competitive Dynamics

In this example, we focus on the effect of demographic noise on the classical Lotka-Volterra competitive system. The Lotka-Volterra competitive system with some environmental fluctuation has the form

| (5.3) |

Here is the per-capita growth rate of species and is the per-capita competition rate between species and . More details can be found in [10]. Model parameters are chosen to be . Let be the union of x-axis and y-axis. For suitable and , and can coexist such that the probability of hits in finite time is zero. So equation (5.3) admits an invariant probability measure, denoted by .

As a modification, we add a small demographic noise term to equation (5.4). The equation becomes

| (5.4) |

It is easy to see that equation (5.4) can exit to the boundary . It admits a QSD, denoted by .

In order to study the effect of demographic noise, we compare , the numerical invariant measure of equation (5.3), and , the QSD of equation (5.4). In our simulation, we fix the strength of demographic noise as and compare and at two different levels of the environment noise and respectively. The coefficient and in equation (5.3) satisfies for to match the effect of the additional demographic noise. Compare Figure 6 and Figure 7, one can see that has significant concentration at the boundary when .

The result for is shown in Figure 6. Left bottom of Figure 6 shows the invariant measure. The QSD is shown on right top of Figure 6. The total variation distance between these two measures are shown at the bottom of Figure 6. The difference is very small and it just appears around boundary. This is reasonable because with high probability, the trajectories of equation (5.4) moves far from the absorbing set in both cases, which makes the regeneration events happen less often. This is consistent with the result of Lemma 4.2. We compute the distribution of the coupling time. The coupling time distribution and its exponential tail are shown in Figure 6 Top Left. Then we use Algorithm 5 to compute the finite time error. To better match two trajectories given by equations (5.3) and (5.4), we separate the noise term in equation (5.3) into for , where and use the same Wiener process trajectory as and in equation (5.4) for . Let . The finite time error caused by the demographic noise is . As a result, the upper bound given in Lemma 4.2 is . Note that as seen in Figure 6, this upper bound actually significantly overestimates the distance between the invariant probability measure and the QSD. The empirical total variation distance is much smaller that our theoretical upper bound. This is because the way of matching and is very rough. A better approach of matching those noise terms will likely lead to a more accurate estimation of the upper bound of the error.

The results for are shown in Figure 7. The total variation distance between these two measures are shown at the bottom of Figure 7. It is not hard to see the difference is significantly larger than case . The reason is that trajectories of equation (5.4) have high probability moving along the boundary in this parameter setting. This makes the probability of falling into the absorbing set much higher. Same as above, we compute the distribution of the coupling time and demonstrate the coupling time distribution (as well as the exponential tail) in Figure 7 Top Left. The coupling in this example is slower so we choose to run Algorithm 5. The probability of killing before is approximately and the total finite time error caused by the demographic noise is . As a result, the upper bound given in Lemma 4.2 is . This is consistent with the numerical finding shown in Figure 7 Bottom Right.

5.6. Chaotic attractor

In this subsection, we consider a non-trivial 3D example that has interactions between chaos and random perturbations, called the Rossler oscillator. The random perturbation of the Rossler oscillator is

| (5.5) |

where , and and are independent Wiener processes. The strength of noise is chosen to be . This system is a representative example of chaotic ODE systems appearing in many applications of physics, biology and engineering. We consider equation (5.5) restricted to the box . Therefore, it admits a QSD supported by . In this example, a grid with mesh points is constructed on .

It is very difficult to use traditional PDE solver to compute a large scale 3D problem. To analyze the QSD of this chaotic system, we apply the blocked version of the Fokker-Planck solver studied in [7]. More precisely, a big mesh is divided into many “blocks”. Then we solve the optimization problem (3.2) in parallel. The collaged solution is then processed by the “shifting block” technique to reduce the interface error, which means the blocks are reconstructed such that the center of new blocks cover the boundary of old blocks. Then we let the solution from the first found serve as the reference data, and solve optimization problem (3.2) again based on new blocks. See [7] for the full details of implementation. In this example, the grid is further divided into blocks. We run the “shifting block” solver for 3 repeats to eliminate the interface error. The reference solution is generated by a Monte Carlo simulation with samples. The killing rate is . Two “slices” of the solution, as seen in Figure 8, are then projected to the xy-plane for the sake of easier demonstration. See the caption of Figure 8 for the coordinates of these two “slices”. The left picture in Figure 8 shows the projection of the solution has both dense and sparse parts that are clearly divided. An outer ”ring” with high density appears and the density decays quickly outside this ”ring.” The right picture in Figure 8 demonstrates the solution has much lower density when -coordinate is larger than 1.

6. Conclusion

In this paper we provide some data-driven methods for the computation of quasi-stationary distributions (QSDs) and the sensitivity analysis of QSDs. Both of them are extended from the first author’s earlier work about invariant probability measures. When using the Fokker-Planck equation to solve the QSD, we find that the idea of using a reference solution with low accuracy to set up an optimization problem still works well for QSDs. And the QSD is not very sensitively dependent on the killing rate, which is given by the Monte Carlo simulation when producing the reference solution. The data-driven Fokker-Planck solver studied in this paper is still based on discretization. But we expect the mesh-free Fokker-Planck solver proposed in [7] to work for solving QSDs. In the sensitivity analysis part, the focus is on the relation between a QSD and the invariant probability measure of a “modified process”, because many interesting problems in applications fall into this category. The sensitivity analysis needs both a finite time truncation error and a contraction rate of the Markov transition kernel. The approach of estimating the finite time truncation error is standard. The contraction rate is estimated by using the novel numerical coupling approach developed in [17]. The sensitivity analysis of QSDs can be extended to other settings, such as the sensitivity against small perturbation of parameters, or the sensitivity of a chemical reaction process against its diffusion approximation. We will continue to study sensitivity analysis related to QSDs in our subsequent work.

References

- [1] Alan Agresti and Brent A Coull. Approximate is better than “exact” for interval estimation of binomial proportions. The American Statistician, 52(2):119–126, 1998.

- [2] William J Anderson. Continuous-time Markov chains: An applications-oriented approach. Springer Science & Business Media, 2012.

- [3] Russell R Barton and Lee W Schruben. Uniform and bootstrap resampling of empirical distributions. In Proceedings of the 25th conference on Winter simulation, pages 503–508, 1993.

- [4] Michel Benaim, Bertrand Cloez, Fabien Panloup, et al. Stochastic approximation of quasi-stationary distributions on compact spaces and applications. Annals of Applied Probability, 28(4):2370–2416, 2018.

- [5] Pierre Collet, Servet Martínez, and Jaime San Martín. Quasi-stationary distributions: Markov chains, diffusions and dynamical systems. Springer Science & Business Media, 2012.

- [6] John N Darroch and Eugene Seneta. On quasi-stationary distributions in absorbing discrete-time finite markov chains. Journal of Applied Probability, 2(1):88–100, 1965.

- [7] Matthew Dobson, Yao Li, and Jiayu Zhai. An efficient data-driven solver for fokker-planck equations: algorithm and analysis. arXiv preprint arXiv:1906.02600, 2019.

- [8] Matthew Dobson, Yao Li, and Jiayu Zhai. Using coupling methods to estimate sample quality of stochastic differential equations. SIAM/ASA Journal on Uncertainty Quantification, 9(1):135–162, 2021.

- [9] Pablo A Ferrari, Servet Martínez, and Pierre Picco. Existence of non-trivial quasi-stationary distributions in the birth-death chain. Advances in applied probability, pages 795–813, 1992.

- [10] Alexandru Hening and Yao Li. Stationary distributions of persistent ecological systems. arXiv preprint arXiv:2003.04398, 2020.

- [11] Thierry Huillet. On wright–fisher diffusion and its relatives. Journal of Statistical Mechanics: Theory and Experiment, 2007(11):P11006, 2007.

- [12] James E Johndrow and Jonathan C Mattingly. Error bounds for approximations of markov chains used in bayesian sampling. arXiv preprint arXiv:1711.05382, 2017.

- [13] Ioannis Karatzas and Steven Shreve. Brownian motion and stochastic calculus, volume 113. springer, 2014.

- [14] Peter E Kloeden and Eckhard Platen. Stochastic differential equations. In Numerical Solution of Stochastic Differential Equations, pages 103–160. Springer, 1992.

- [15] Ying-Cheng Lai and Tamás Tél. Transient chaos: complex dynamics on finite time scales, volume 173. Springer Science & Business Media, 2011.

- [16] Yao Li. A data-driven method for the steady state of randomly perturbed dynamics. arXiv preprint arXiv:1805.04099, 2018.

- [17] Yao Li and Shirou Wang. Numerical computations of geometric ergodicity for stochastic dynamics. Nonlinearity, 33(12):6935, 2020.

- [18] Bernt Øksendal. Stochastic differential equations. In Stochastic differential equations, pages 65–84. Springer, 2003.

- [19] Christian Robert and George Casella. Monte Carlo statistical methods. Springer Science & Business Media, 2013.

- [20] Erik A Van Doorn. Quasi-stationary distributions and convergence to quasi-stationarity of birth-death processes. Advances in Applied Probability, pages 683–700, 1991.

- [21] Erik A van Doorn and Pauline Schrijner. Geomatric ergodicity and quasi-stationarity in discrete-time birth-death processes. The ANZIAM Journal, 37(2):121–144, 1995.