DAOT: Domain-Agnostically Aligned Optimal Transport for Domain-Adaptive Crowd Counting

Abstract.

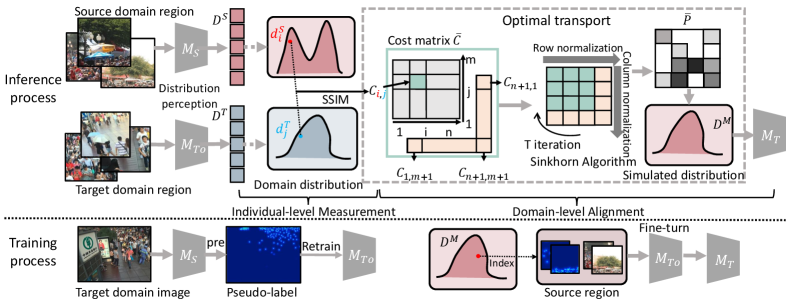

Domain adaptation is commonly employed in crowd counting to bridge the domain gaps between different datasets. However, existing domain adaptation methods tend to focus on inter-dataset differences while overlooking the intra-differences within the same dataset, leading to additional learning ambiguities. These domain-agnostic factors, e.g., density, surveillance perspective, and scale, can cause significant in-domain variations, and the misalignment of these factors across domains can lead to a drop in performance in cross-domain crowd counting. To address this issue, we propose a Domain-agnostically Aligned Optimal Transport (DAOT) strategy that aligns domain-agnostic factors between domains. The DAOT consists of three steps. First, individual-level differences in domain-agnostic factors are measured using structural similarity (SSIM). Second, the optimal transfer (OT) strategy is employed to smooth out these differences and find the optimal domain-to-domain misalignment, with outlier individuals removed via a virtual “dustbin” column. Third, knowledge is transferred based on the aligned domain-agnostic factors, and the model is retrained for domain adaptation to bridge the gap across domains. We conduct extensive experiments on five standard crowd-counting benchmarks and demonstrate that the proposed method has strong generalizability across diverse datasets. Our code will be available at: https://github.com/HopooLinZ/DAOT/.

1. Introduction

Crowd counting is a critical task in multimedia and computer vision (Jiang et al., 2021; Xu et al., 2022; Zhong et al., 2022; Han et al., 2023; Yang et al., 2023; Xie et al., 2023) that aims to estimate the number of people in surveillance images or videos. Three types of methods are commonly used for crowd counting: detection-based (Arteta et al., 2014; Wang et al., 2021), regression-based (Bernardo and Smith, 2001), and density map-based (Cheng et al., 2021; Ma et al., 2022; Yan et al., 2022; Zhou et al., 2022; Liu et al., 2020b; Liang et al., 2021; Lin et al., 2022; Liu et al., 2023a). Detection-based methods are unsuitable for estimating the number of people in dense crowds due to occlusion issues, while regression-based methods are less affected by occlusion but cannot obtain the approximate position distribution of people. The emergence of density map-based methods has addressed the shortcomings of the previous two types of methods and has become the mainstream method for crowd counting. However, most crowd counting methods rely on extensive data annotation and cannot be applied to open scenes. The effect of directly training models with labeled datasets and transferring them to unlabeled scenes is often unsatisfactory due to domain gap issues. As a consequence, unsupervised domain adaptation (UDA) methods (Wang et al., 2019b, a; Gao et al., 2021; Tang et al., 2021; Cho et al., 2022) have been widely applied in crowd counting to bridge the domain gap.

UDA methods in crowd counting cover Synthetic-Real (Syn-Real) or Real-Real scenarios. However, Syn-Real methods often require significant computational resources and cannot capture real crowd characteristics accurately. For the more practical Real-Real cross-domain problem, previous works propose Gaussian process-based iterative learning (Sindagi et al., 2020), and combine detection and density estimation with dual knowledge transfer (Liu et al., 2020a). Nevertheless, these methods prioritize improving their knowledge and fall short in exploring inter-domain relations. To exploit the inter-domain relation, ASNet (Zou et al., 2021) use discriminators to evaluate the similarity between the source and target domains at various levels of granularity, while FGFD (Zhu et al., 2022) utilize inter-domain invariant information for model migration. However, as depicted in Fig. 1, many factors within the same domain, e.g., density, perspective, and scale, exhibit more significant intra-domain differences than inter-domain differences, limiting the ability to discriminate or find similarities between single images across domains. Some research also focuses on domain generalization, e.g., C2MoT (Wu et al., 2021) and DGCC (Du et al., 2022). In C2MoT, only domain-invariant information is explored, but not domain-specific information. In DGCC, although domain information is divided into domain invariant features and domain-specific features, they are considered mutually exclusive and the factual domain-specific features are not explored.

In this paper, we have three key discoveries related to the cross-domain issue in the context of crowd counting. As depicted in Fig. 1, factors that are domain-agnostic, e.g., density, perception, and scale, can lead to more significant variations within the same dataset compared to those observed across different domains. This because many of these factors are not unique to a particular domain and can vary widely even within the same dataset.

Meanwhile, we observe that domain-agnostic factors are misaligned across domains, and the cross-domain performance tends to improve as the different domains become more distributionally consistent. We visualize the distribution of density from UCF-QNRF (Q), NWPU-Crowd (N), ShanghaiTech PartB (A), and ShanghaiTech PartB (B) (Zhang et al., 2016) at the top of Fig. 2. We discover that the frequency distribution of A and Q are more similar, with a higher frequency of samples with a medium crowd density range. Similarly, the frequency distribution of N and B are also more similar, with a higher frequency of samples with a low crowd density range. Moreover, we notice that the performance of the model (Liang et al., 2021) in different cross-domain settings varies significantly. Specifically, the N2B setting outperforms the Q2B setting, and Q2A outperforms N2B, with Q2A even approaching the A2A performance. This discovery indicates that misalignment of domain-agnostic factors at the domain level significantly impacts the model’s cross-domain performance (Liang et al., 2021).

Furthermore, we discover that the effect of domain misalignment on cross-domain performance can outweigh the impact of the quantity of data available in the source domain. As shown in the bottom of Fig. 2, the Q (Idrees et al., 2018) contains 1,535 images, while the N (Wang et al., 2020a) contains 5,109 images. However, when the same settings are applied to the target domain A (Zhang et al., 2016), which consists of 182 images, N performs worse than Q. This discovery underscores the criticality of aligning domain-agnostic factors, including compensating for insufficient data quantity, in optimizing cross-domain model performance.

Based on the above three discoveries, we posit that the domain gap is not solely dependent on inter-domain factors at the image level, but is strongly influenced by the misalignment of domain-agnostic factors at the domain level. In this paper, the domain factors can be concretely represented as the crowd distribution, as this encompasses information such as density, perspective, scale, and other related factors. The objective of this paper is to address domain adaptation by resolving the misalignment of domain-agnostic factors that contribute to the domain gap. Therefore, we propose a three-step domain-adaption approach in an align-and-transfer manner. In the first step, we measure the differences in domain-agnostic factors at the individual level using structural similarity (SSIM) as an indicator. In the second step, we smooth out these domain-agnostic differences. For this purpose, we introduce the optimal transport (OT) strategy to find the optimal domain-to-domain transformation. To handle outlier individuals in the misalignment measurement process, we augment each domain with a virtual column representing a “dustbin” individual, where outlier individuals are removed. Finally, we can transfer knowledge based on the aligned domain-agnostic factors. By aligning the domain-agnostic factors across domains through the optimal domain-to-domain transformation, we retrain the model (Liang et al., 2021) for domain adaptation and bridge the gap across domains.

To summarize, the main contributions are threefold:

-

•

We propose a new perspective on the impact of domain-agnostic factors in crowd counting, highlighting their significant influence on in-domain variations and inferior cross-domain performance due to misalignment at the domain level. This discovery provides a better understanding of the domain gap and the importance of domain alignment for improving cross-domain performance.

-

•

We design a novel domain adaptation method, called Domain-agnostically Aligned Optimal Transport (DAOT), to bridge the domain gap by aligning domain-agnostic factor misalignments between the source and target domains.

-

•

We demonstrate the effectiveness and strong generalizability of the proposed DAOT through extensive experiments on five crowd counting datasets. Experimental results indicate that DAOT outperforms state-of-the-art methods.

2. Related Work

2.1. Optimal Transport

It is well-known that optimal transport (OT) (Peyré et al., 2019; Panaretos and Zemel, 2019) has been widely applied in domain adaptation tasks, particularly in generating target domain data by finding the optimal distance between the source and target domain feature distributions. However, most of these methods (Damodaran et al., 2018; Xu et al., 2020; Chai et al., 2022) focus on generating images similar to those in the target domain, which requires finding the optimal distance between the generated and target images. In contrast, the proposed DAOT differs from traditional methods of OT in domain adaptation, as we establish correspondence between source and target domain distributions without generating new images or altering source domain images, similar to a feature-matching task (Jin et al., 2021).

Compared to point-to-point matching methods (Sarlin et al., 2020; Han et al., 2022; Wang et al., 2023), the proposed DAOT has a broader scope as it involves region-to-region matching. Moreover, we use a distance measurement method that emphasizes spatial structure, namely SSIM. Note that we do not perform person-level matching, as opposed to DR.VIC (Han et al., 2022). Our focus is not on the existence of a matching relation between the query and gallery, but on specific matching and transfer relations.

In crowd counting, OT has been used as a loss function, with DM-Count (Wang et al., 2020b) being one of the first proposed methods. Several methods (Song et al., 2021; Lin and Chan, 2023) have adopted this type of loss. Other than loss functions, we use OT to find the best match rather than simply measuring the difference.

2.2. Unsupervised Domain Adaptation

Most UDA crowd counting methods (Wang et al., 2019b, a; Gao et al., 2021; Liu et al., 2023b; Xie et al., 2023) focus on classifying individual images into either the source or target domain, and therefore aim to bridge the gap between the source and target domains on a per-image basis. However, in Real-Real domain adaptation tasks, many images may have greater intra-domain differences than inter-domain ones. Addressing only the differences within individual images may not be sufficient to fundamentally bridge the gap between domains. Instead, we propose a domain adaptation method based on aligning the overall distribution of data in the source and target domains, rather than focusing solely on individual images. Several crowd counting methods (Zou et al., 2021; Zhu et al., 2022) consider that there are similarities between source and target domains and thus adapt between domains by finding similarities. However, individual similarity retrieval has shifted. Perhaps the found source and target distributions do have strong similarities, but due to the existence of retrieval bias, much of the information in the source distribution is not fully utilized, and only the distribution with the highest similarity is left. In essence, this approach is unable to fully simulate the entire data domain, while the proposed DAOT seeks to maximize the search for transfer relation between the two distributions. Apart from the methods that seek to find the similarities between domains, other methods (Wu et al., 2021; Du et al., 2022) attempt to find common knowledge between them. However, in our opinion, many images in different domains differ significantly in density, scale, and perception, making it difficult to find common knowledge. Even within the same domain, it is challenging to find common knowledge. We believe that rich data is essential for the model to learn knowledge and to have stronger generalization abilities. Therefore, instead of seeking common knowledge, we bridge the domain gap by aligning domain-agnostic factor misalignment between the source and target domains.

3. Proposed Method

3.1. Problem Definition

In this paper, we consider domain adaptation as a problem of aligning domain-agnostic factors between domains, and we employ a region-level distribution-perception method, where the distribution is used to describe the domain-agnostic factors. As shown in Fig. 3, the source domain region is set to , and the target domain region is set to . The model trained with labeled data in the source domain is defined as , the initial model in the target domain is defined as , with the training labels using the pseudo-labels predicted by the source domain model on the target domain data set. And the regions in the two domains are distribution-perception to obtain the source domain distribution and the target domain distribution , respectively. In Sec. 3.2, the distance matrix is calculated using SSIM to measure the distance between each distribution and extended to form the cost matrix , and the final obtained simulated distribution in Sec. 3.3 is defined as . The main method for domain alignment is optimal transport (OT).

The principle behind OT is to minimize the cost of transporting one distribution to another, where the cost is usually defined as the distance between the two distributions. The OT problem can be formulated as a linear programming problem, where the objective function is to minimize the cost of transportation subject to some constraints. Therefore, the first step is to obtain a probability transition matrix that expresses the transfer between two distributions, called the cost matrix . Then, based on the cost matrix, the Sinkhorn algorithm (Sinkhorn and Knopp, 1967; Cuturi, 2013; Peyré et al., 2019) to find the optimal solution solved to find the optimal solution for transporting between the distributions. In our paper, local distribution matching can be achieved by solving an augmented version of Kantorovich’s OT problem (Peyré et al., 2019). And denotes the discrete of and .

| (1) |

where is a transportation matrix. represents the distance between each element in the source and target domains, in Eq. (2).

3.2. Individual-Level Measurement

We believe that domain-agnostic factors are variable in both inter-domains and intra-domain, which makes individual images not exclusively belong to a particular domain, while misalignment of these factors at the domain level lead to the domain gap. Therefore, this paper aims to bridge the domain gap by aligning domain-agnostic factors. To achieve domain-level alignment, we measure individual-level differences using the following algorithm.

3.2.1. Describing Domain-Agnostic Factors

Due to the diversity of distributions increasing with scale, it is challenging to align at the individual level without altering the image itself. Thus, we align the regions in the image to address this issue. Therefore, we divides the whole image in the source and target domains into different regions and forms the sets and . Subsequently, the distributions and of the source and target domains are obtained after distribution perception of the source domain model and the preliminary target domain model . We utilize FIDT (Liang et al., 2021) as our location-sensitive distribution-perception model.

3.2.2. Structural Similarity and Cost Matrix

The solution of the transfer matrix between the distributions heavily relies on the distance metric function between the distributions. In the crowd counting distribution distance metric, due to the huge and dense crowd, it possesses rich brightness, contrast, and structural variation. The SSIM similarity can fully combine this information, and the calculated similarity is not only the value of the vector but the structural properties in the whole distribution.

For a given source domain distribution set and target domain distribution set , we can calculate an SSIM distance, , between each element and . The formula is defined as Eq. (2).

| (2) |

| (3) |

where and separately denote the mean of and standard deviation of . is covariance of and . We set and as and . as the final cost matrix, the shape of .

| (4) |

where is used to represent the distance between source domain region distribution and target domain region distribution. As shown in Fig. 4, to ensure the reliability of the distance scores, we set , , and as “dustbin” column to remove unreliable rows and columns, where represents SSIM value between the distribution of each source domain sample and a distribution consisting of all ones, while represents SSIM value between the distribution of each target domain sample and the same distribution consisting of all ones. represents the SSIM value between a matrix consisting of all zeros and the same distribution consisting of all ones, which serves as a threshold for determining the reliability of each element in the distance matrix . If the minimum value in any row or column of exceeds , the corresponding row or column is zeroed out to filter out unreliable elements and enhance the Sinkhorn algorithm’s robustness.

3.3. Domain-Level Alignment

In this paper, the inter-domain adaptation task is transformed into an inter-domain domain-agnostic factor alignment problem, and further, the alignment of domain-agnostic factors is considered as finding the optimal mapping between domain-level distributions, which are used to describe domain-agnostic factors at the domain level. It is also the solution of Eq. (1). The function we use to find the optimal transport is Sinkhorn (Sinkhorn and Knopp, 1967). The ultimate goal of the OT problem is to find a matrix that optimally transfers the source distribution to the target distribution so that the product of . This is essentially a linear programming problem Eq. (1) with constraints Eq. (4), the constraints ensure that the probabilities of mapping between the source and target distributions sum up to one for each element, and vice versa.

3.3.1. Sinkhorn Algorithm

Sinkhorn Algorithm (Sinkhorn and Knopp, 1967; Peyré et al., 2019) is applied to solve for , which represents the cost of transporting each element from the source domain to the target domain. The algorithm computes the optimal transport plan from the source domain to the target domain and returns a mapping between the indices of the source and target elements that minimizes the overall cost of the transport plan. In Sec. 3.3, the cost matrix , between the domains has been obtained.

This algorithm is a differentiable version of the Hungarian algorithm (Munkres, 1957), which is typically used for bipartite matching. It involves iterative normalizing along rows and columns, similar to row and column Softmax, and the DAOT for calculating discrete is SSIM. This means minimizing Eq. (1). Specifically, subject to the constraints that the marginals of match the given weights:

| (5) |

where the algorithm updates the rows of as follows:

| (6) |

where is an index variable used for summation, it represents that for the -th source sample and the -th target sample, we need to sum over all , which represents the -th sample in the source domain. Therefore, the formula can be understood as computing the transition probability between the -th source sample and the -th target sample and using information from all source domain samples to calculate it. And then updates the columns of by applying the same operation to the transpose of . The regularization parameter prevents division by zero. This row and column scaling process is repeated iteratively until the resulting transport plan converges to a stable solution. The symbol ”” in Eq. 6 indicates the final output of the Sinkhorn Algorithm. The algorithm stops at , following the convention in (Cuturi, 2013).

3.3.2. Final Mapping and Target Model

After obtaining the optimal transport plan using the Sinkhorn algorithm, we can use it to obtain a mapping between the source and target domains. As shown in Fig. 4, this mapping represents the correspondence between the source and target samples, and can be used to train a model that can translate samples from the source to the target domain. To obtain the mapping, we find the index in the source domain that has the highest probability of being paired with each target sample , which can be computed as . We consider the correspondences between the source and target samples only if the corresponding probability value is non-zero. This procedure gives us the mapping between the source and target samples with non-zero correspondence values.

Finally, we obtain the distribution to fine-tune the initial model and obtain the final target domain model .

| Method | backbone | Type | AD | A2B | A2Q | Q2A | Q2B | N2A | N2B | J2B | J2Q | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | ||||

| Cycle GAN (Zhu et al., 2017) | CycleGAN | Syn | ● | 25.4 | 39.7 | 257.3 | 400.6 | 143.3 | 204.3 | 25.4 | 39.7 | 143.3 | 204.3 | 25.4 | 39.7 | 25.4 | 39.7 | 257.3 | 400.6 |

| GP (Sindagi et al., 2020) | ResNet50 | Syn | ● | 12.8 | 19.2 | 210.0 | 351.0 | 121.0 | 181.0 | 12.8 | 19.2 | 121.0 | 181.0 | 12.8 | 19.2 | 12.8 | 19.2 | 210.0 | 351.0 |

| DACC (Gao et al., 2021) | CycleGAN | Syn | ● | 13.1 | 19.4 | 203.5 | 343.0 | 112.4 | 176.9 | 13.1 | 19.4 | 112.4 | 176.9 | 13.1 | 19.4 | 13.1 | 19.4 | 203.5 | 343.0 |

| BLA (Gong et al., 2022) | VGG16 | Syn | ● | 11.9 | 18.9 | 198.9 | 316.1 | 99.3 | 145.0 | 11.9 | 18.9 | 99.3 | 145.0 | 11.9 | 18.9 | 11.9 | 18.9 | 198.9 | 316.1 |

| CDCC (Liu et al., 2022) | CycleGAN | Syn | ● | 11.4 | 17.3 | 198.3 | 332.9 | 109.2 | 168.1 | 11.4 | 17.3 | 109.2 | 168.1 | 11.4 | 17.3 | 11.4 | 17.3 | 198.3 | 332.9 |

| SPN+L2SM (Xu et al., 2019) | VGG16 | Real | ○ | 21.2 | 38.7 | 227.2 | 405.2 | 73.4 | 119.4 | - | - | - | - | - | - | - | - | - | - |

| BL (Ma et al., 2019) | VGG16 | Real | ○ | 15.9 | 25.8 | 135.8 | 252.6 | 69.8 | 123.8 | 15.3 | 26.5 | - | - | - | - | - | - | - | - |

| RBT (Liu et al., 2020a) | VGG16 | Real | ● | 13.4 | 29.3 | 175.0 | 294.8 | - | - | - | - | - | - | - | - | - | - | - | - |

| D2CNet (Cheng et al., 2021) | VGG16 | Real | ● | 21.6 | 34.6 | 126.8 | 245.5 | 61.2 | 100.8 | 10.2 | 19.1 | 93.3 | 167.1 | 11.1 | 16.0 | 27.4 | 43.2 | 171.4 | 302.2 |

| ASNet (Zou et al., 2021) | VGG16 | Real | ● | 13.6 | 23.2 | - | - | - | - | - | - | - | - | - | - | - | - | - | - |

| C2MoT (Wu et al., 2021) | VGG19 | Real | ● | 12.4 | 21.1 | 125.7 | 218.3 | - | - | - | - | - | - | - | - | - | - | 135.3 | 251.9 |

| FGFD (Zhu et al., 2022) | HRNet | Real | ● | 12.7 | 23.3 | 124.1 | 242.0 | 70.2 | 118.4 | 12.5 | 20.3 | 83.2 | 138.1 | 10.2 | 18.6 | 13.4 | 20.9 | 166.7 | 320.0 |

| DGCC (Du et al., 2022) | VGG16 | Real | ● | 12.6 | 24.6 | 119.4 | 216.6 | 67.4 | 112.8 | 12.1 | 20.9 | - | - | - | - | - | - | - | - |

| FIDT (Liang et al., 2021) | HRNet | Real | ○ | 16.0 | 26.9 | 129.3 | 254.1 | 72.6 | 132.3 | 12.5 | 21.7 | 83.6 | 138.1 | 9.8 | 17.0 | 14.2 | 27.3 | 166.6 | 282.4 |

| DAOT (Ours) | HRNet | Real | ● | 10.9 | 18.8 | 113.9 | 215.6 | 67.0 | 128.4 | 11.3 | 19.6 | 79.9 | 135.0 | 8.8 | 14.8 | 10.5 | 17.4 | 146.5 | 255.2 |

4. Experimental Result

4.1. Datasets and Implementation Details

Shanghai Tech (Zhang et al., 2016) is a benchmark for crowd counting contains 1,198 scene images and 330,165 labeled head positions, which is subdivided into PartA (A) and PartB (B). A is densely populated and contains 300 training images and 182 testing images; B is relatively sparser and contains 400 training images and 316 testing images.

UCF-QNRF (Q) (Idrees et al., 2018) is a challenging crowd counting dataset with rich scenes and varying viewpoints, densities, and illumination conditions. It contains 1,535 images of dense crowd scenes, with 1,201 training images and 334 testing images.

NWPU-Crowd (N) (Wang et al., 2020a) has 5,109 high resolution images and annotated entities 2,133,238. The large range of annotated entity numbers for a single image is extremely challenging. Additionally, some images have object annotation number of 0.

JHU-CROWD++ (J) (Sindagi et al., 2020) contains 4,372 images with a total of 1.51 million annotations, including some based on extreme weather variation and light variation, and introduces negative samples of entities annotated with zero.

Loss Function. We use the loss function proposed in FIDT (Liang et al., 2021) for training, where is set to 1 following FIDT.

| (7) |

Evaluation Metrics. In this paper, the evaluation metrics used are mean absolute error (MAE) and root mean square error (RMSE) introduced in (Zhang et al., 2016). MAE assesses model accuracy, while RMSE evaluates model robustness.

Optimal Transport. In A2Q setting, the regularization parameter is set to , while in Q2B setting, it is set to . All other settings are set to . The algorithm stops at .

Retraining. We use adaptive moment estimation (Adam) (Kingma and Ba, 2015) to optimize the model with the learning rate of , and the weight decay is set as . We set the size of the training batch to .

4.2. Comparison with the State-of-the-Arts

To evaluate the effectiveness of DAOT, this subsection compares it with other state-of-the-art cross-domain methods. We conduct quantitative evaluations through five sets of adaptation experiments, reporting the error between the predicted density maps and ground-truth maps on the target set for each dataset pair. We compare DAOT with three types of counting methods: 1) state-of-the-art counting methods without domain adaptation (Xu et al., 2019; Cheng et al., 2021), 2) Syn-Real domain adaptation methods (Zhu et al., 2017; Wang et al., 2019a; Sindagi et al., 2020; Gao et al., 2021; Gong et al., 2022; Liu et al., 2022), and 3) Real-Real domain adaptation methods (Zou et al., 2021; Wu et al., 2021; Du et al., 2022; Zhu et al., 2022), as well as our baseline method, FIDT (Liang et al., 2021). Notably, the source domains for Syn-Real methods are large-scale synthetic GCC (Wang et al., 2019a).

As shown in Table 1, the proposed DAOT achieves the best performance in five groups’ experiments (A2B, A2Q, N2A, N2B, and J2B) and obtained the second-best performance among the other groups (Q2A, Q2B, and J2Q). Especially with a significant improvement over other methods in the setting of A2B and N2B. The DAOT outperforms the baseline in all 8 settings and achieves better results than the similarity-based FGFD (Zhu et al., 2022) method in multiple settings. Compared with D2CNet (Cheng et al., 2021), the proposed DAOT is slightly lower than D2CNet, indicating that D2CNet is more suitable for the group with the source domain as Q, while the DAOT has a stronger generalization ability. Compared with C2MoT (Wu et al., 2021), the DAOT is slightly lower than C2MoT, but the amount of data used for retraining is much lower than that of C2MoT, and C2MoT is more dependent on large amounts of data. When the target domain is B, DAOT often achieves better performance, which proves that it has a stronger fitting ability for sparse density distribution. In the setting of A2Q and N2B with aligned domain-agnostic factors, the proposed DAOT method can further improve the excellent performance.

4.3. Ablation Study

Ablation Study on Region Size The transition relation between entire images across domains is limited, while the transition relation between regions exists extensively across different domains. As the region size decreases, the accuracy of distance measurement between distributions will decrease. As the region size increases, the transition relation between distributions gradually decreases. As shown in Fig. 5, as the region size decreases from the entire image to 256, the MAE and RMSE metrics correspondingly improve. However, as the region size continues to decrease beyond 256, MAE and RMSE begin to exhibit a rising trend.

Ablation Study on Various Factors Performance To achieve domain adaptation in crowd counting, we transform it into a domain-agnostic factors alignment problem and adopt optimal transport (OT) to find the best transfer distance between distributions. In Table 2, using all factors achieves the best performance in all experiments. OT significantly improved performance, especially in Q2A experiment. Using only pseudo-labels and SSIM achieves the second-best performance, showing a slight improvement over using only source-domain data. This suggests that adjusting the density distribution misalignment can bridge the domain gap.

| Source | Pre. | SSIM | OT | A2B | A2Q | Q2A | Q2B | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | ||||

| ● | ○ | ○ | ○ | 16.0 | 26.9 | 129.3 | 254.1 | 72.6 | 132.3 | 12.5 | 21.7 |

| ○ | ● | ○ | ○ | 13.1 | 27.1 | 120.5 | 216.8 | 76.9 | 138.4 | 13.7 | 22.6 |

| ○ | ● | ● | ○ | 11.0 | 19.6 | 116.5 | 219.6 | 76.4 | 138.1 | 12.3 | 24.3 |

| ○ | ● | ● | ● | 10.9 | 18.8 | 113.9 | 215.6 | 67.0 | 128.4 | 11.3 | 19.6 |

| Metric | A2B | A2Q | Q2A | Q2B | ||||

|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | |

| Source | 16.0 | 26.9 | 129.3 | 254.1 | 72.6 | 132.3 | 12.5 | 21.7 |

| ED | 14.4 | 25.3 | 125.6 | 218.3 | 68.1 | 130.0 | 14.1 | 25.9 |

| KL | 11.2 | 21.0 | 114.7 | 218.9 | 80.7 | 150.3 | 13.3 | 27.0 |

| SSIM | 10.9 | 18.8 | 113.9 | 215.6 | 67.0 | 128.4 | 11.3 | 19.6 |

Ablation Study on Distance Metric This paper aims to find the optimal transport between distributions, by measuring the distribution distance between the source and target domains before using OT. The OT ultimately obtains a more reasonable distribution distance. The distance measurement method is crucial for the final solution, and we conduct ablation studies on different similarity metrics, which are shown in Table 3. The three distance metric methods have all shown some improvement compared to using source domain data alone, especially when using SSIM.

4.4. Qualitative Analysis

Analysis of t-SNE Visualization To more intuitively demonstrate the changes in domain-agnostic factors before and after domain adaptation, we visualize Fig. 6. In A2B, the clustering of the overall image distribution shows the most obvious difference, with the distribution of B more concentrated in the lower left corner. This indicates that there is more similarity in the crowd distribution between A and B, but the range of A is wider. In the regional clustering, most of the data in the regions of A and B overlap, but the distribution range of A is still wider than that of B. From the third and fourth columns, it can be seen that although the simulated distribution comes entirely from the source domain, it is highly overlapped with the target domain and more concentrated than the distribution of the source domain.

In A2Q, the clustering of the overall image distribution shows higher overlap, but the distribution of Q is more concentrated in the center. In regional clustering, the distribution of Q shows a more obvious clustering phenomenon. In the visualizations of the third and fourth columns, it can be clearly seen that the simulated distribution is closer to the target domain.

Analysis of Cross-Domain Density Map Prediction To visualize the cross-domain performance of different methods in a straightforward manner, we visualize Fig. 7. The figure illustrates that the proposed DAOT method outperforms other methods in various scenarios, ranging from dense to sparse (B2A), from large-scale to small-scale (Q2A), and even from small-scale dense to large-scale (A2Q). Particularly in Q2A setting, the DAOT prediction is very close to the ground truth. In the first two rows (Q2A and A2Q), there are fewer erroneous classifications of the background, and in the third row (A2B), the estimation of the crowd is more accurate. We have highlighted these parts with red boxes.

Analysis of Distribution Match The final output distribution has a matching relation with the initial target domain distribution, and we visualize the distribution matching in Fig. 11. We demonstrate the retrieved source domain regions using different methods on the target domain regions ranging from dense to sparse. As shown in the figure, the DAOT achieves superior performance across all density levels, even in scenarios with few people or only background. Compared with the method using only SSIM, the proposed DAOT achieves better performance in the second column with denser scenarios, and has better perception simulation for the densest scenario. Compared with the diffusion-based FGFD (Zhu et al., 2022), it achieves better results in the densest and sparsest scenarios, and has better simulation for the location of pedestrians.

5. Conclusion

In crowd counting domain adaptation task, it has been observed that the intra-domain differences are larger than the inter-domain differences in many data domains. However, even for large-scale source domains, the model’s performance in cross-domain tasks is consistently inferior to that in the source domain. Our research has revealed that this is mainly due to the misalignment of domain-agnostic factors across domains, which strongly influences the cross-domain performance of the model. Therefore, we propose to bridge the inter-domain gap in crowd counting by aligning the misalignment of domain-agnostic factors using optimal transportation in both the source and target domains. Our approach is the first domain adaptation method for crowd counting that considers the entire data domain, unlike previous methods that deal with individual distributions. We treat the overall domain distribution as a whole and achieve the best performance on multiple datasets. This work provides a new perspective on crowd counting domain adaptation, and we believe that solving the problem of domain misalignment will benefit not only cross-domain tasks but also other related applications.

Acknowledgements.

This work was supported in part by the National Natural Science Foundation of China (62271361 and 62171325), and the Department of Science and Technology, Hubei Provincial People’s Government (2021CFB513), Guangdong Distinguished Young Scholar (2023B1515020097), and Singapore MOE Tier 1 Fund (MSS23C002).References

- (1)

- Arteta et al. (2014) Carlos Arteta, Victor S. Lempitsky, J. Alison Noble, and Andrew Zisserman. 2014. Interactive Object Counting. In Proc. Eur. Conf. Comput. Vis. 504–518.

- Bernardo and Smith (2001) José M. Bernardo and Adrian F. M. Smith. 2001. Bayesian Theory. Meas. Sci. Technol. 12 (2001), 221–222.

- Chai et al. (2022) Liangyu Chai, Yongtuo Liu, Wenxi Liu, Guoqiang Han, and Shengfeng He. 2022. CrowdGAN: Identity-Free Interactive Crowd Video Generation and Beyond. IEEE Trans. Pattern Anal. Mach. Intell. 44, 6 (2022), 2856–2871.

- Cheng et al. (2021) Jian Cheng, Haipeng Xiong, Zhiguo Cao, and Hao Lu. 2021. Decoupled Two-Stage Crowd Counting and Beyond. IEEE Trans. Image Process. 30 (2021), 2862–2875.

- Cho et al. (2022) Yoonki Cho, Woo Jae Kim, Seunghoon Hong, and Sung-Eui Yoon. 2022. Part-based Pseudo Label Refinement for Unsupervised Person Re-identification. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 7298–7308.

- Cuturi (2013) Marco Cuturi. 2013. Sinkhorn Distances: Lightspeed Computation of Optimal Transport. In Adv. Neural Inf. Process. Syst. 2292–2300.

- Damodaran et al. (2018) Bharath Bhushan Damodaran, Benjamin Kellenberger, Rémi Flamary, Devis Tuia, and Nicolas Courty. 2018. DeepJDOT: Deep Joint Distribution Optimal Transport for Unsupervised Domain Adaptation. In Proc. Eur. Conf. Comput. Vis. 467–483.

- Du et al. (2022) Zhipeng Du, Jiankang Deng, and Miaojing Shi. 2022. Domain-general Crowd Counting in Unseen Scenarios. arXiv:2212.02573 (2022).

- Gao et al. (2021) Junyu Gao, Tao Han, Yuan Yuan, and Qi Wang. 2021. Domain-adaptive crowd counting via high-quality image translation and density reconstruction. IEEE Trans. Neural Networks Learn. Syst. (2021), 1–13.

- Gong et al. (2022) Shenjian Gong, Shanshan Zhang, Jian Yang, Dengxin Dai, and Bernt Schiele. 2022. Bi-level Alignment for Cross-Domain Crowd Counting. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 7532–7540.

- Han et al. (2022) Tao Han, Lei Bai, Junyu Gao, Qi Wang, and Wanli Ouyang. 2022. DR.VIC: Decomposition and Reasoning for Video Individual Counting. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 3073–3082.

- Han et al. (2023) Zongbo Han, Changqing Zhang, Huazhu Fu, and Joey Tianyi Zhou. 2023. Trusted Multi-View Classification With Dynamic Evidential Fusion. IEEE Trans. Pattern Anal. Mach. Intell. 45, 2 (2023), 2551–2566.

- Idrees et al. (2018) Haroon Idrees, Muhmmad Tayyab, Kishan Athrey, Dong Zhang, Somaya Al-Máadeed, Nasir M. Rajpoot, and Mubarak Shah. 2018. Composition Loss for Counting, Density Map Estimation and Localization in Dense Crowds. In Proc. Eur. Conf. Comput. Vis. 544–559.

- Jiang et al. (2021) Kui Jiang, Zhongyuan Wang, Peng Yi, Chen Chen, Zheng Wang, Xiao Wang, Junjun Jiang, and Chia-Wen Lin. 2021. Rain-Free and Residue Hand-in-Hand: A Progressive Coupled Network for Real-Time Image Deraining. IEEE Trans. Image Process. 30 (2021), 7404–7418.

- Jin et al. (2021) Yuhe Jin, Dmytro Mishkin, Anastasiia Mishchuk, Jiri Matas, Pascal Fua, Kwang Moo Yi, and Eduard Trulls. 2021. Image Matching Across Wide Baselines: From Paper to Practice. Int. J. Comput. Vis. 129, 2 (2021), 517–547.

- Kingma and Ba (2015) Diederik P. Kingma and Jimmy Ba. 2015. Adam: A Method for Stochastic Optimization. In Proc. Int. Conf. Learn. Represent. 1–11.

- Liang et al. (2021) Dingkang Liang, Wei Xu, Yingying Zhu, and Yu Zhou. 2021. Focal Inverse Distance Transform Maps for Crowd Localization. IEEE Trans. Multimedia (2021), 1–13.

- Lin et al. (2022) Hui Lin, Zhiheng Ma, Rongrong Ji, Yaowei Wang, and Xiaopeng Hong. 2022. Boosting Crowd Counting via Multifaceted Attention. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 19596–19605.

- Lin and Chan (2023) Wei Lin and Antoni B. Chan. 2023. Optimal Transport Minimization: Crowd Localization on Density Maps for Semi-Supervised Counting. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 21663–21673.

- Liu et al. (2022) Weizhe Liu, Nikita Durasov, and Pascal Fua. 2022. Leveraging Self-Supervision for Cross-Domain Crowd Counting. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 5331–5342.

- Liu et al. (2023a) Yongtuo Liu, Sucheng Ren, Liangyu Chai, Hanjie Wu, Dan Xu, Jing Qin, and Shengfeng He. 2023a. Reducing Spatial Labeling Redundancy for Active Semi-Supervised Crowd Counting. IEEE Trans. Pattern Anal. Mach. Intell. 45, 7 (2023), 9248–9255.

- Liu et al. (2020a) Yuting Liu, Zheng Wang, Miaojing Shi, Shin’ichi Satoh, Qijun Zhao, and Hongyu Yang. 2020a. Towards unsupervised crowd counting via regression-detection bi-knowledge transfer.. In Proc. ACM Int. Conf. Multimedia. 129–137.

- Liu et al. (2020b) Yongtuo Liu, Qiang Wen, Haoxin Chen, Wenxi Liu, Jing Qin, Guoqiang Han, and Shengfeng He. 2020b. Crowd Counting Via Cross-Stage Refinement Networks. IEEE Trans. Image Process. 29 (2020), 6800–6812.

- Liu et al. (2023b) Yongtuo Liu, Dan Xu, Sucheng Ren, Hanjie Wu, Hongmin Cai, and Shengfeng He. 2023b. Fine-grained Domain Adaptive Crowd Counting via Point-derived Segmentation. In Proc. IEEE Int. Conf. Multimedia and Expo.

- Ma et al. (2022) Yu-Jen Ma, Hong-Han Shuai, and Wen-Huang Cheng. 2022. Spatiotemporal Dilated Convolution With Uncertain Matching for Video-Based Crowd Estimation. IEEE Trans. Multimedia 24 (2022), 261–273.

- Ma et al. (2019) Zhiheng Ma, Xing Wei, Xiaopeng Hong, and Yihong Gong. 2019. Bayesian Loss for Crowd Count Estimation With Point Supervision. In Proc. IEEE/CVF Int. Conf. Comput. Vis. 6141–6150.

- Munkres (1957) James Munkres. 1957. Algorithms for the assignment and transportation problems. J. Soc. Ind. Appl. Math. 5, 1 (1957), 32–38.

- Panaretos and Zemel (2019) Victor M. Panaretos and Yoav Zemel. 2019. Statistical aspects of Wasserstein distances. Annu. Rev. Stat. Its Appl. 6 (2019), 405–431.

- Peyré et al. (2019) Gabriel Peyré, Marco Cuturi, et al. 2019. Computational optimal transport: With applications to data science. Found. Trends Mach. Learn. 11, 5–6 (2019), 355–607.

- Sarlin et al. (2020) Paul-Edouard Sarlin, Daniel DeTone, Tomasz Malisiewicz, and Andrew Rabinovich. 2020. SuperGlue: Learning Feature Matching With Graph Neural Networks. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 4937–4946.

- Sindagi et al. (2020) Vishwanath A Sindagi, Rajeev Yasarla, and Vishal M Patel. 2020. Jhu-crowd++: Large-scale crowd counting dataset and a benchmark method. IEEE Trans. Pattern Anal. Mach. Intell. 44, 5 (2020), 2594–2609.

- Sinkhorn and Knopp (1967) Richard Sinkhorn and Paul Knopp. 1967. Concerning nonnegative matrices and doubly stochastic matrices. Pac. J. Math. 21, 2 (1967), 343–348.

- Song et al. (2021) Qingyu Song, Changan Wang, Zhengkai Jiang, Yabiao Wang, Ying Tai, Chengjie Wang, Jilin Li, Feiyue Huang, and Yang Wu. 2021. Rethinking Counting and Localization in Crowds: A Purely Point-Based Framework. In Proc. IEEE/CVF Int. Conf. Comput. Vis. 3345–3354.

- Tang et al. (2021) Geyu Tang, Xingyu Gao, Zhenyu Chen, and Huicai Zhong. 2021. Unsupervised adversarial domain adaptation with similarity diffusion for person re-identification. Neurocomputing 442 (2021), 337–347.

- Wang et al. (2020b) Boyu Wang, Huidong Liu, Dimitris Samaras, and Minh Hoai Nguyen. 2020b. Distribution Matching for Crowd Counting. In Adv. Neural Inf. Process. Syst.

- Wang et al. (2023) Haiping Wang, Yuan Liu, Qingyong Hu, Bing Wang, Jianguo Chen, Zhen Dong, Yulan Guo, Wenping Wang, and Bisheng Yang. 2023. RoReg: Pairwise Point Cloud Registration With Oriented Descriptors and Local Rotations. IEEE Trans. Pattern Anal. Mach. Intell. 45, 8 (2023), 10376–10393.

- Wang et al. (2019b) Li Wang, Yongbo Li, and Xiangyang Xue. 2019b. CODA: Counting Objects via Scale-Aware Adversarial Density Adaption. In Proc. IEEE Int. Conf. Multimedia Expo. 193–198.

- Wang et al. (2020a) Qi Wang, Junyu Gao, Wei Lin, and Xuelong Li. 2020a. NWPU-crowd: A large-scale benchmark for crowd counting and localization. IEEE Trans. Pattern Anal. Mach. Intell. 43, 6 (2020), 2141–2149.

- Wang et al. (2019a) Qi Wang, Junyu Gao, Wei Lin, and Yuan Yuan. 2019a. Learning From Synthetic Data for Crowd Counting in the Wild. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 8198–8207.

- Wang et al. (2021) Xiao Wang, Zheng Wang, Wu Liu, Xin Xu, Jing Chen, and Chia-Wen Lin. 2021. Consistency-Constancy Bi-Knowledge Learning for Pedestrian Detection in Night Surveillance. In Proc. ACM Int. Conf. Multimedia. 4463–4471.

- Wu et al. (2021) Qiangqiang Wu, Jia Wan, and Antoni B. Chan. 2021. Dynamic Momentum Adaptation for Zero-Shot Cross-Domain Crowd Counting. In Proc. ACM Int. Conf. Multimedia. 658–666.

- Xie et al. (2023) Haiyang Xie, Zhengwei Yang, Huilin Zhu, and Zheng Wang. 2023. Striking a Balance: Unsupervised Cross-Domain Crowd Counting via Knowledge Diffusion. In Proc. ACM Int. Conf. Multimedia.

- Xu et al. (2019) Chenfeng Xu, Kai Qiu, Jianlong Fu, Song Bai, Yongchao Xu, and Xiang Bai. 2019. Learn to Scale: Generating Multipolar Normalized Density Maps for Crowd Counting. In Proc. IEEE/CVF Int. Conf. Comput. Vis. 8381–8389.

- Xu et al. (2020) Renjun Xu, Pelen Liu, Liyan Wang, Chao Chen, and Jindong Wang. 2020. Reliable Weighted Optimal Transport for Unsupervised Domain Adaptation. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 4393–4402.

- Xu et al. (2022) Xin Xu, Xin Yuan, Z. Wang, Kai Zhang, and Ruimin Hu. 2022. Rank-in-Rank Loss for Person Re-identification. ACM Trans. Multimedia Comput. Commun. Appl. 18, 2s (2022), 1–21.

- Yan et al. (2022) Zhaoyi Yan, Ruimao Zhang, Hongzhi Zhang, Qingfu Zhang, and Wangmeng Zuo. 2022. Crowd Counting Via Perspective-Guided Fractional-Dilation Convolution. IEEE Trans. Multimedia 24 (2022), 2633–2647.

- Yang et al. (2023) Zhengwei Yang, Xian Zhong, Zhun Zhong, Hong Liu, Zheng Wang, and Shin’ichi Satoh. 2023. Win-Win by Competition: Auxiliary-Free Cloth-Changing Person Re-Identification. IEEE Trans. Image Process. 32 (2023), 2985–2999.

- Zhang et al. (2016) Yingying Zhang, Desen Zhou, Siqin Chen, Shenghua Gao, and Yi Ma. 2016. Single-Image Crowd Counting via Multi-Column Convolutional Neural Network. In Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. 589–597.

- Zhong et al. (2022) Xian Zhong, Shidong Tu, Xianzheng Ma, Kui Jiang, Wenxin Huang, and Zheng Wang. 2022. Rainy WCity: A Real Rainfall Dataset with Diverse Conditions for Semantic Driving Scene Understanding. In Proc. Int. Joint Conf. Artif. Intell. 1743–1749.

- Zhou et al. (2022) Joey Tianyi Zhou, Le Zhang, Jiawei Du, Xi Peng, Zhiwen Fang, Zhe Xiao, and Hongyuan Zhu. 2022. Locality-Aware Crowd Counting. IEEE Trans. Pattern Anal. Mach. Intell. 44, 7 (2022), 3602–3613.

- Zhu et al. (2022) Huilin Zhu, Jingling Yuan, Zhengwei Yang, Xian Zhong, and Zheng Wang. 2022. Fine-Grained Fragment Diffusion for Cross Domain Crowd Counting. In Proc. ACM Int. Conf. Multimedia. 5659–5668.

- Zhu et al. (2017) Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros. 2017. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proc. IEEE/CVF Int. Conf. Comput. Vis. 2242–2251.

- Zou et al. (2021) Zhikang Zou, Xiaoye Qu, Pan Zhou, Shuangjie Xu, Xiaoqing Ye, Wenhao Wu, and Jin Ye. 2021. Coarse to Fine: Domain Adaptive Crowd Counting via Adversarial Scoring Network. In Proc. ACM Int. Conf. Multimedia. 2185–2194.

Appendix

Appendix A Experiment on Virtual Data

To demonstrate the significant impact of the misalignment between the source and target domains on the cross-domain performance, we simulated a dataset (from A and Q) similar to the A test set distribution as the training set and evaluated it on the test set.

As shown in Fig. 9 and Table 4, when the crowd distribution in the training data is similar to that in the test set, the model’s performance is also close to the non-cross-domain performance. Even in cases where the data distribution is closer, less data has achieved better performance.

| Setting | A2A | V2A |

|---|---|---|

| Amount of Training Data | 300 | 295 |

| MAE | 70.9 | 67.0 |

| RMSE | 140.7 | 134.0 |

Appendix B Visualization of density map

We show in Fig. 10 the detailed visualization of the cross-domain experiments on A2Q, J2Q, A2B, and N2B. Each column stands for the crowd image, the corresponding Ground truth, the density maps produced by the Baseline method FIDT and the results predicted by our DAOT method. Our method has been demonstrated to outperform the baseline approach in scenes with varying crowd densities, including dense-to-sparse (A2B), sparse-to-dense (A2Q), and dense-to-dense (J2Q, N2B) scenes. The primary reason is that our method leverages the alignment of domain-agnostic factors between the source and target domains, facilitating effective model migration. Specifically, our method is able to learn to match the density, surveillance perspective, scale, and other relevant factors in a domain-agnostic way, which improves the ability of the model to generalize to new domains with different crowd distributions. This lets the model perceive the distribution and background better, leading to more accurate crowd counting.

Appendix C Visualization of various factors alignment

We visualize the distribution matching results in Fig. 11 under different settings, arranged from sparse to dense from top to bottom. Our method aligns the distributions in terms of density, head scale, and perception in each group, as demonstrated by the visualizations in Fig. 11, which are arranged from sparse to dense. Notably, in the third row of A2Q, the crowds in the two patches on the left and right are extremely similar in density, scale, perception, and even background factors. Additionally, the first row of A2B and Q2A shows similar background matching in the absence of people. These results demonstrate that our method can align various factors of crowd distributions.

Appendix D Regularization Term

The OT regularization term enhances the smoothness and stability of the OT plan by penalizing the cost function. It usually measures the distance or divergence between the transport plan and a reference distribution, often a prior distribution. This regularization prevents overfitting, improves stability and robustness, and allows incorporating prior knowledge or constraints when dealing with noisy or high-dimensional data.

As shown in Table 5, we varied from to 1. When was between 1 and 10, the number of mapped distribution data points was less than 100. We also experimented with set as and 0 in four groups. With , the final optimal mapping data points were all less than 50, and with , no mapping was obtained in any of the four groups, highlighting the importance of the regularization term. Different values for the regularization term slightly affected the performance of the four groups. A2B and Q2A achieved the best performance with , A2Q performed best with , and Q2B achieved its best performance with .

| Reg-term | A2B | A2Q | Q2A | Q2B | ||||

|---|---|---|---|---|---|---|---|---|

| MAE | RMSE | MAE | RMSE | MAE | RMSE | MAE | RMSE | |

| 10.9 | 18.8 | 118.5 | 214.9 | 67.0 | 128.4 | 12.4 | 21.4 | |

| 11.5 | 20.3 | 113.9 | 215.6 | 72.4 | 136.2 | 12.8 | 22.9 | |

| 11.9 | 20.2 | 116.3 | 217.3 | 73.7 | 142.1 | 11.3 | 19.6 | |

| 11.1 | 18.4 | 121.1 | 219.7 | 72.1 | 139.1 | 13.0 | 23.1 | |

Appendix E Analysis of Domain Alignment

To demonstrate the density frequency before and after the transfer, we visualize frequency distribution histograms of the source and target domain distributions and the simulated distribution histograms after transformation, as shown in Fig. 12. Since our distribution transfer is at the regional level, we show distribution transfer when the region is set to 256. Before distribution transfer, the overall distribution of datasets A and B is concentrated in range of 0 to 150, so we show frequency distribution histograms before and after the transfer for images with 0 to 150 people. From the figure, it can be seen that A has a very high peak around 40, while the distribution of B peaks at around 10 and exhibits a sudden increase in a few specific regions. After distribution transfer, the distribution is closer to the distribution of B, peaking at around 10 and trending similarly to B overall.