DAMe: Personalized Federated Social Event Detection with Dual Aggregation Mechanism

Abstract.

Training social event detection models through federated learning (FedSED) aims to improve participants’ performance on the task. However, existing federated learning paradigms are inadequate for achieving FedSED’s objective and exhibit limitations in handling the inherent heterogeneity in social data. This paper proposes a personalized federated learning framework with a dual aggregation mechanism for social event detection, namely DAMe. We present a novel local aggregation strategy utilizing Bayesian optimization to incorporate global knowledge while retaining local characteristics. Moreover, we introduce a global aggregation strategy to provide clients with maximum external knowledge of their preferences. In addition, we incorporate a global-local event-centric constraint to prevent local overfitting and “client-drift”. Experiments within a realistic simulation of a natural federated setting, utilizing six social event datasets spanning six languages and two social media platforms, along with an ablation study, have demonstrated the effectiveness of the proposed framework. Further robustness analyses have shown that DAMe is resistant to injection attacks.

1. Introduction

Social Event Detection (SED) aims to pinpoint unusual occurrences that involve specific times, locations, people, content, etc., in the real world from social media platforms (Peng et al., 2022). Traditionally, individual platforms collect their own data to train SED models. However, users tend to post content across various platforms driven by personal preferences (e.g., linguistic preferences (Ren et al., 2021) and social affiliations (Ren et al., 2022b)). Consequently, the models trained individually by each platform are susceptible to their inherent biases, leading to a limited scope and incomplete detection of events. Meanwhile, due to privacy concerns, existing regulations prohibit organizations from sharing data without user consent (Sui et al., 2020), making it unfeasible to centralize data for training purposes. In such scenarios, the most straightforward solution is implementing Federated Learning (FL) (McMahan et al., 2017). In FL, each client (participant) trains a local model using their private data, while the central server facilitates information exchange among clients by iteratively aggregating the locally uploaded model weights. This paper initiates the study on Federated Social Event Detection (FedSED).

Implementing SED through FL necessitates considering its inherent characteristics and challenges. Firstly, FedSED aims to facilitate clients in achieving better performance on their respective data. Unlike traditional FL (McMahan et al., 2017; Li et al., 2020), which prioritizes training a global model with optimal performance across all data, FedSED aims to facilitate information sharing among clients within a federated framework, thereby enhancing the performance of local models on respective data. This requires prioritizing client demands as the primary driving force throughout the federated process. Secondly, social data sourced from various clients inhibit significant heterogeneity. In practical FL scenarios (Li et al., 2020; Huang et al., 2023), data from different clients often exhibit non-independent and non-identically distributed characteristics (referred to as non-IID). This non-IID nature of the data leads to clients converging in different directions, a phenomenon known as “client-drift”. While non-IID has been a longstanding issue in FL (Huang et al., 2021), it is further aggravated in the FedSED context since data from various platforms can differ in formats, languages, contents, etc. Consequently, addressing the challenges posed by non-IID social data, including multilingualism and multiplatform discrepancies, is paramount in FedSED.

Given FedSED’s objective of enhancing local performance and addressing inherent heterogeneity, personalized federated learning (pFL) approaches based on aggregation appear to be promising solutions. Firstly, in model-level aggregation (Luo and Wu, 2022; Zhang et al., 2020), clients have access to the local models of all other clients and perform aggregation in their own preferred manner. However, this line of approaches is encumbered by substantial communication overhead and privacy concerns, given that model parameters could be leveraged to recover private data (Zhu et al., 2019; Zhao et al., 2020). Secondly, layer-level aggregation, (Sun et al., 2021) where clients learn strategies for selecting parameters from the global or local models as their respective local models. Nevertheless, such a binary selection fails to capture the essential information and struggles to learn effective strategies that meet local objectives. Thirdly, parameter-level aggregation, such as FedALA (Zhang et al., 2023), where clients learn aggregation weights for each parameter of the global and local models. Nonetheless, attaining the optimal solution for all parameters proves to be highly challenging, if not practically unachievable. Furthermore, the aggregation of the global model relies on the FedAvg strategy, which falls short in delivering the most advantageous knowledge to individual clients.

Expanding on the preceding discussion, we outline three crucial perspectives in developing a pFL framework for SED: 1) On the server side, when dispatching the global model to each client, it is imperative to strike a balance between maximizing the information available for client personalization and mitigating potential heterogeneity issues that may arise. 2) On the client side, it is essential to retain a portion of local characteristics while integrating the knowledge provided by the server to prevent deviation from local objectives or local overfitting; 3) In entirety, achieving a level of consensus between the global and local models on the representation of the same event is important to mitigate the impact of heterogeneity.

In light of the abovementioned perspectives for developing the FedSED framework, this work proposes a novel Dual Aggregation Mechanism for Personalized Federated Social Event Detection, namely DAMe. The framework aims to enhance the performance of local models on local data through the collaborative efforts of the server and clients. DAMe comprises three components: local aggregation, global aggregation, and global-local alignment. For local aggregation, we employ Bayesian optimization to explore the ideal aggregation weight. This process facilitates the integration of extensive global knowledge while preserving the unique local characteristics to a great extent. For global aggregation, we construct a client graph on the server side and minimize the 2D structural entropy within it. Through this process, an optimal aggregation strategy is acquired to maximize the external knowledge available to each client while reducing global heterogeneity. For global-local alignment, we propose a global-local event-centric constraint to align the local event representation with the global event representation. This ensures that the local models acquire improved representations of social messages. We evaluate the proposed framework using six social event datasets covering six languages and two social media platforms. The experiments are conducted within a realistic simulation of a natural federated setting. Our experimental results and ablation study underscore the efficacy of DAMe for FedSED, with the potential to extend to other applications. Further robustness analysis confirms that DAMe is resilient to federated injection attacks.

In summary, the contributions of this work are as follows:

-

•

We pioneer the study on Federated Social Event Detection (FedSED) and present a pFL framework that satisfies the objective of the task.

-

•

We propose a novel dual aggregation mechanism that maximizes the transfer of external knowledge from the server to the clients while enabling the clients to retain a portion of their own characteristics during local learning.

-

•

We devise a global-local event-centric constraint to learn better message representation, meanwhile preventing local overfitting and “client-drift”.

-

•

Extensive experiments conducted in natural federated settings have corroborated our proposed framework’s effectiveness and demonstrated its robustness against federated injection attacks.

2. Related Work

2.1. Social Event Detection

Social event detection, aiming to identify potential social events from social streams, is a longstanding and challenging task. Recent SED methods primarily rely on Graph Neural Networks (GNNs) (Peng et al., 2021, 2019; Cao et al., 2021; Cui et al., 2021; Ren et al., 2021, 2022a; Wei et al., 2023; Ren et al., 2023). These approaches construct message graphs to represent social message data, integrating various attributes that complement each other and serve independent roles in propagating and aggregating text semantics. For instance, KPGNN (Cao et al., 2021) builds an event message graph using user, keyword, and entity attributes, then employs inductive Graph Attention Networks (GAT) to learn message representations. Furthermore, some approaches adopt multi-view learning strategies to enhance the feature learning process. MVGAN (Cui et al., 2021), for instance, learns message features from both semantic and temporal views and incorporates an attention mechanism to fuse them. ETGNN (Ren et al., 2023) focuses on learning representations from co-hashtag, co-entity, and co-user views. However, current works have not yet explored methods utilizing federated learning to enhance the comprehensiveness and accuracy of SED.

2.2. Federated Learning

Federated Learning (FL), an advanced paradigm for decentralized data training, has garnered significant attention in recent years (Zhang et al., 2021). FedAvg (McMahan et al., 2017) achieves collaborative learning across decentralized devices by locally training models and aggregating parameters on a central server. On that basis, FedProx (Li et al., 2020) introduces regularization that enhances model convergence and generalization by considering the smoothness of the global model in FL.

Due to the statistical heterogeneity in FL, a centralized global model may lower the performance of certain participants (Zhang et al., 2023). Hence, personalized FL has garnered significant attention (Tan et al., 2022). We categorize pFL methods into three types. Fine-Tuning-based Methods involve learning a global model, which clients then fine-tune on their respective sides to achieve personalization (Fallah et al., 2020; Collins et al., 2021; Yu et al., 2024). For instance, Per-FedAvg (Fallah et al., 2020) regards the global model as an initial shared model, allowing all clients to fine-tune it with local data to fit their specific needs. Personalized Layer/Model-based Methods offer flexibility in model architecture, allowing for variations such as sharing specific layers or training additional local models (T Dinh et al., 2020; Li et al., 2021a; Yang et al., 2023; Liu et al., 2023). For example, FedACK (Yang et al., 2023) employs GAN-based knowledge distillation for cross-model and cross-lingual social bot detection. Aggregation-based Methods achieve personalization through server centrally aggregates specialized global models for the participants or clients directly exchange parameters among themselves in a decentralized setting, allowing them to select the information they desire (Huang et al., 2021; Li et al., 2021b; Zhang et al., 2020; Luo and Wu, 2022; Sun et al., 2021; Chen et al., 2022; Zhang et al., 2023).

Previous studies have overlooked the possibility of servers providing a suitable model while allowing clients to integrate helpful knowledge. To this end, this work focuses on personalization to enhance local performance via global and local aggregation.

3. Preliminaries

In this section, we outline the problem formulations of Federated Learning (FL) and Social Event Detection (SED), then define the threat model in the federated setting.

3.1. Federated Learning

This paper considers FL with one central server and clients. The dataset , locally collected by client , remains inaccessible to others. Below is an overview of the training process for the classic FL algorithm FedAvg (McMahan et al., 2017):

- Step 1:

-

Initialization. At the initial communication round , all local model parameters of clients are initialized as the global model parameter: , where and denotes the model parameters of client and the server at round , respectively.

- Step 2:

-

Client Update. Each client trains the model on their private dataset with task objective . Then, upload the trained local model parameters to the server.

- Step 3:

-

Server Execute. The server aggregates the received parameters by , where denotes the number of data samples of client , and is the total number of data samples across all clients. Then, the server distributes the new global model parameters to clients in the following round.

Iterate Steps 2 and 3 continuously until the final communication round. The global objective of the overall FL process is:

| (1) |

3.2. Social Event Detection

Given a collection of social messages , a social event detection algorithm aims to learn a model from , where is the model parameter, is the set of events (all labels).

3.3. Threat Model

Current federated paradigms are vulnerable to injection attacks (Lyu et al., 2022). In this work, the threat model is defined by the presence of malicious clients deliberately injecting backdoors within the training data (data poisoning attack) or uploading corrupted parameters to the server (model poisoning attack) to sabotage the FL process (e.g., performance, collaboration). Such attacks could have profound repercussions on the global model and threaten FL’s reliability and accuracy. We analyze the robustness of the proposed framework against injection attacks in Section 6.2.

4. Methodology

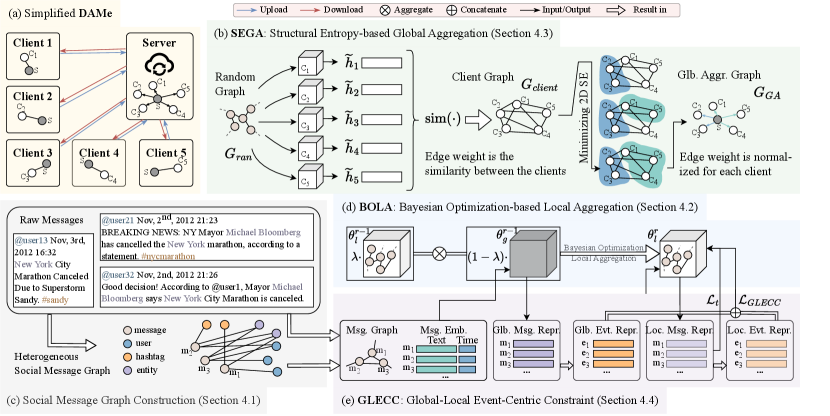

This section presents the proposed framework, which consists of four key components: backbone model of SED, local aggregation, global aggregation, and global-local alignment. The overall framework is illustrated in Figure 1.

4.1. Backbone Social Event Detection Model

For FedSED, we apply GAT (Graph Attention Network) as our backbone SED model and map the text encoding of different languages into a shared vector space.

Social Message Graph Construction

As illustrated in Figure 1(c), attributes (including users, hashtags, and entities) are extracted from messages and connected with their corresponding messages, forming a heterogeneous social graph. Then, the heterogeneous social graph is projected onto a homogeneous social graph by retaining the original message nodes and adding edges connecting message nodes with shared attributes. In this graph, nodes represent messages, and edges signify the associations between messages.

Social Message Representation

The message embedding is obtained by concatenating the message’s textual and temporal embedding. The temporal embedding corresponds to the message’s timestamp in the OLE date format. Regarding textual embedding, accommodating the linguistic differences among clients is essential for FL. Consequently, all clients utilize the pre-trained language model, SBERT-based (Sentence-BERT (Reimers and Gurevych, 2019)) multilingual model (Reimers and Gurevych, 2020) to encode the textual content of messages. This implementation ensures that messages in diverse languages reside within a unified feature space.

4.2. Local Aggregation via Bayesian Optimization

We introduce a local aggregation mechanism, where clients learn a strategy that incorporates global knowledge while preserving their local characteristics rather than being directly overridden by the global model. The following optimization problem is formulated to describe the local aggregation process at the -th communication round for client :

| (2) |

where and denote the local and global model parameters, respectively. represents the aggregation weight (the weight of local preservation). Local aggregation strives to determine the optimal or near-optimal weight that allows clients to acquire the maximum amount of knowledge. The process of is described in Section 4.4.

Bayesian Optimization (BO) algorithm (Frazier, 2018) is a widely employed approach for optimizing functions with costly or challenging direct evaluations. We utilize BO for determining the aggregation weight for Local Aggregation (BOLA), as shown in Figure 1(d). BOLA is accomplished through a three-step BO procedure: first defining the objective function, then modeling it using a Bayesian statistical model, and finally determining the subsequent sampling position by an acquisition function.

4.2.1. Objective Function

The objective function for a single round of local aggregation can be formulated as follows (symbols denoting the -th round and client are omitted for simplicity):

| (3) |

suggesting that the aggregation weight can be evaluated by observing the task-specific performance of the aggregated model on private datasets , e.g., NMI score for SED performance. is controlled by a hyperparameter to reduce search space.

4.2.2. Bayesian statistical model

To model the object function (Equation 3), Gaussian Process regression (GPR) is applied. Specifically, for any finite set of points , the joint distribution of the corresponding function values follows a multivariate Gaussian distribution. Consequently, is characterized as a Gaussian process, denoted as:

| (4) |

where and denote the mean and kernel functions, respectively. The learnable parameters in these functions can be estimated through maximum likelihood estimation.

Applying Bayes’ rule, we obtain a joint probability distribution:

| (5) |

where denotes the current optimal value of , which serves as the objective of the optimization process. , , and . Based on the joint distribution of and , the conditional distribution is as follows:

| (6) | ||||

Through the above equations, it is observed that the posterior distribution’s statistical properties and are modeled using GPR on the prior distribution’s mean function and covariance function .

4.2.3. Acquisition function

The acquisition function is used to determine the next aggregation weight. In this work, we apply the Expected Improvement (EI) (Mockus, 1974; Jones et al., 1998) criterion and the Upper Confidence Bound (UCB) (SRINIVAS, 2010) as acquisition functions.

Expected Improvement (EI)

The calculation of the objective function necessitates processing the entire dataset , which makes obtaining the next weight’s objective function value costly. Therefore, we calculate the expected improvement value of the next weight with the aim of maximizing it. EI is computed as:

| (7) |

where denotes the expectation computed under the posterior distribution (Equation 6). denotes the optimal at the -th step, and denotes the optimal result during the first iterations.

Upper Confidence Bound (UCB)

The UCB algorithm chooses the weight with the highest upper confidence bound for exploration, aiming to converge towards weights with higher actual reward values. It is defined as:

| (8) |

Here, is a learnable parameter derived from theoretical analysis that increases over time. denotes the standard deviation function.

Given the intricate and non-convex nature of the objective function (Hoffman et al., 2011), we employ a mixed acquisition strategy of incorporating EI and UCB.

4.3. Global Aggregation via 2D Structural Entropy Minimization

Under the federated framework described in Section 3.1, personalized global aggregation aims to provide clients with maximum external information by producing global models that can benefit individual clients more. The server needs an aggregation strategy that considers client heterogeneity and individual characteristics to maximize external knowledge for all clients. To achieve this objective, we construct a client graph based on clients’ similarity. By minimizing the two-dimensional Structural Entropy (2DSE) of , a graph capturing the internal similarities among clients is obtained, finalizing the Global Aggregation strategy for each client (SEGA). This process is demonstrated in Figure 1(b).

is an undirected, fully connected, weighted graph consisting of nodes corresponding to clients, with their similarities as edge weights. The similarity between client models can be estimated by providing them with the same input and measuring the similarity between their respective outputs. On this basis, the server first generates a random graph as input to all client models (Holland et al., 1983). With graph pooling (Lee et al., 2019), the server obtains different client models’ representations of the same graph, and the similarity between client and is calculated as:

| (9) |

where is the averaged output of all node embeddings in the input graph and .

Upon constructing the client graph , we minimize the 2DSE of the graph, resulting in a partitioned graph, which serves as the basis for the aggregation strategy. Suppose forms partitions of nodes in , where is the total number of partitions, represents the set of client nodes, and denotes the -th partition that contains specific client node(s). The 2DSE of the client graph is calculated as follows (Cao et al., 2024; Li and Pan, 2016):

| (10) | ||||

where denotes the number of total partitions, denotes the number of client nodes in partition , denotes the degree of the -th client node in , computes the volume, and denotes the sum of degrees of edges with one endpoint in partition . The objective of the minimization process is to assign each client node to a distinct partition . Specifically, each client node is initially treated as an individual partition. New partitions are formed by iteratively merging different partitions. The changes in the 2DSE before and after merging are observed to identify the partitioning scheme that yields the lowest overall 2DSE and generates the desired partitions. We leverage the greedy strategy in (Li and Pan, 2016) to minimize 2DSE. The difference in 2DSE before and after merging and into is calculated as follows:

| (11) | ||||

where the calculation of , , and follows Equation 10, to compute the 2DSE of partition under the partion . denotes the 2DSE of obtained by merging and into . Note that we always merge the two partitions with the smallest SE until all SE , thus obtaining the final partitions . Based on the final partition, the global aggregation strategy aims to aggregate information within each partition. Specifically, in the -th partition , all client nodes are connected by edges weighted according to their similarities. For all nodes in the partition, the global model for client is obtained by:

| (12) |

where represents the node within the same partition as , is the local model of client , and is the normalized weight between client and client , computed as:

| (13) |

4.4. Local Optimization

In Section 4.2, we introduced a local aggregation strategy that aggregates and into . This section describes the local optimization of with local data, maintaining the proximity between the local and the global models while preventing overfitting to the local data. The overall process is shown in Figure 1(e).

Step 1: Triplet Loss

Essentially, the objective of SED is to maximize similarity among messages belonging to the same event. Current approaches in SED (Cao et al., 2021) employ contrastive triplet loss to guide the optimization process. The triplet loss is computed as:

| (14) |

where is a set of constructed triples, represents the anchor sample, represents the positive sample (i.e., a sample from the same class as the anchor sample), and represents the negative sample (i.e., a sample from a different class than the anchor sample). calculates the Euclidean distance between samples, denotes the representation of message , is the margin parameter, and denotes global or local.

Step 2: Global-Local Event-Centric Constraint

In FedSED, data among clients exhibits non-IID characteristics. This non-IID nature leads to “client-drift”, resulting in low model utility. Building on this observation, our study introduces an Event-Centric Constraint that aligns the Global and Local models closer (GLECC). Firstly, the client obtains message representations from global model and aggregated model . Then, we learn the event representation from and based on the representations of the messages within each event:

| (15) |

where is the total number of messages in event , and denotes global or local. Finally, GLECC is calculated with pairwise loss (Ren et al., 2022a) as:

| (16) |

where denotes the number of events in the current batch. By pulling closer the representations of the same event in both global and local models, the server and client establish a mutual consensus on representation learning. This alignment mitigates the risk of overfitting to local data, preventing divergence from the global context, as well as the tendency to solely pursue the global objective while disregarding local characteristics.

Step 3: Overall Loss

The overall loss during the optimization process is calculated as follows:

| (17) |

where is calculated as:

| (18) |

suggesting that the local model should only learn from the global model when the global model demonstrates better performance on local data. Note that, the global model remains fixed throughout the whole process, training into .

5. Experimental Setups

This section introduces the experiment setups. We outline the following research questions (RQs) as guidelines for our experiments:

-

•

RQ1: Compared to existing FL approaches, can the proposed DAMe improve local performance?

-

•

RQ2: Is the proposed framework robust (able to withstand injection attacks) in the setting of federated learning?

-

•

RQ3: How does each component of the proposed framework contribute to the overall performance?

-

•

RQ4: Regarding computation and communication, is the proposed framework efficient?

5.1. Datasets

We conducted experiments on 6 datasets, spanning 6 languages and 2 platforms. Table 1 presents the statistics of all datasets. The English Twitter (McMinn et al., 2013) dataset, the French Twitter (Mazoyer et al., 2020) dataset, and the Arabic Twitter (Alharbi and Lee, 2021) dataset are publicly available. We collect the rest of the datasets from Weibo in Chinese and Twitter in Japanese and German. First, we extracted key events from Wikipedia111https://en.wikipedia.org/wiki/Portal:Current_events pages in multiple languages for 2018. Subsequently, we retrieved relevant posts from Twitter or Weibo using event keywords and crawled them to construct the datasets. The events listed on Wikipedia pages in different languages are customized according to users’ preferences in that language, making the obtained dataset closely resemble real-world distributions.

5.2. Federated Setting

Prior works (Liu et al., 2023; Yang et al., 2023) often partition a single dataset into multiple clients to mimic the federated setting. However, such practices fail to capture the non-IID nature of real-world data. This study breaks this cycle by treating each dataset as an independent client in the experiments. This enables us to replicate the complexities of real-world data distribution across platforms more accurately. By preserving the inherent non-IID characteristics of the data, we aim to enhance the fidelity of our federated learning experiments and provide insights that are more applicable to practical scenarios. In our experiments, we utilize a setup consisting of one server and six clients, each with social message data in distinct languages.

| Dataset | # Messages | # Events | Avg. Length |

|---|---|---|---|

| English Twitter | 68,841 | 503 | 27.03 |

| French Twitter | 64,516 | 257 | 51.77 |

| Arabic Twitter | 9,070 | 7 | 53.18 |

| Japanese Twitter | 60,530 | 189 | 38.66 |

| German Twitter | 90,091 | 179 | 36.76 |

| Chinese Weibo | 71,846 | 221 | 30.60 |

5.3. Baselines

In addition to Local training without parameter sharing, we compare DAMe with two categories of FL methods in the task of SED: (1) Classic FL methods: FedAvg (McMahan et al., 2017) aggregates a weighted global model for all clients. FedProx (Li et al., 2020) introduce regularization to alleviate disparities between the global and local models. (2) Personalized FL methods: Per-FedAvg (Fallah et al., 2020) fine-tunes the global model on the client side to achieve personalization. In Ditto (Li et al., 2021a), each client learns an additional personalized model by incorporating a proximal term to extract information from the updated global model. SFL (Chen et al., 2022) constructs a client graph on the server and aggregates personalized models for each client via structural information. In APPLE (Luo and Wu, 2022), clients have access to all other clients’ models and aggregates locally. FedALA (Zhang et al., 2023) dynamically aggregates the global and local parameters at a fine-grained level based on the local objective.

| Clients | English Twitter | French Twitter | Arabic Twitter | Japanese Twitter | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Metrics | NMI | AMI | ARI | NMI | AMI | ARI | NMI | AMI | ARI | NMI | AMI | ARI |

| Local | .69 .00 | .52 .00 | .13 .00 | .69 .00 | .62 .00 | .15 .00 | .55 .00 | .55 .00 | .50 .00 | .60 .00 | .50 .00 | .11 .01 |

| FedAvg | .73 .00 | .58 .00 | .20 .01 | .74 .00 | .68 .00 | .21 .01 | .64 .00 | .64 .00 | .58 .00 | .64 .00 | .56 .00 | .18 .01 |

| FedProx | .71 .01 | .55 .01 | .16 .02 | .70 .01 | .64 .01 | .17 .00 | .59 .01 | .59 .01 | .54 .02 | .62 .00 | .53 .00 | .14 .01 |

| Per-FedAvg | .72 .00 | .56 .00 | .17 .01 | .70 .00 | .64 .00 | .18 .01 | .62 .01 | .62 .00 | .57 .00 | .62 .00 | .53 .00 | .15 .01 |

| Ditto | .73 .00 | .58 .00 | .20 .01 | .73 .00 | .68 .00 | .21 .01 | .63 .02 | .62 .02 | .55 .02 | .64 .00 | .56 .00 | .18 .01 |

| SFL | .69 .00 | .52 .00 | .12 .00 | .69 .00 | .62 .00 | .15 .00 | .56 .00 | .56 .00 | .50 .01 | .60 .00 | .50 .00 | .11 .00 |

| APPLE | .71 .00 | .55 .00 | .16 .01 | .70 .00 | .63 .00 | .16 .00 | .70 .02 | .69 .01 | .73 .01 | .61 .01 | .52 .03 | .12 .00 |

| FedALA | .74 .00 | .59 .00 | .21 .00 | .74 .00 | .69 .00 | .22 .00 | .63 .00 | .63 .00 | .57 .00 | .65 .00 | .56 .00 | .20 .01 |

| DAMe (Ours) | .75 .00 | .61 .00 | .21 .00 | .76 .00 | .70 .00 | .23 .02 | .86 .00 | .86 .00 | .89 .00 | .67 .00 | .59 .00 | .25 .01 |

| promotion | .01 | .02 | .01 | .02 | .01 | .01 | .16 | .17 | .16 | .02 | .03 | .05 |

| Clients | German Twitter | Chinese Weibo | Average on all clients | Avg. Gain (compare to Local) | ||||||||

| Metrics | NMI | AMI | ARI | NMI | AMI | ARI | NMI | AMI | ARI | NMI | AMI | ARI |

| Local | .41 .00 | .32 .00 | .04 .00 | .47 .00 | .34 .00 | .05 .00 | .57 | .48 | .16 | - | - | - |

| FedAvg | .46 .00 | .39 .01 | .08 .01 | .53 .00 | .43 .00 | .11 .00 | .62 | .55 | .23 | .04 | .06 | .07 |

| FedProx | .43 .00 | .35 .00 | .06 .00 | .48 .01 | .37 .00 | .07 .00 | .59 | .51 | .19 | .02 | .03 | .03 |

| Per-FedAvg | .44 .00 | .36 .00 | .06 .00 | .49 .00 | .38 .00 | .08 .01 | .60 | .51 | .20 | .02 | .03 | .04 |

| Ditto | .46 .00 | .39 .00 | .08 .00 | .53 .00 | .43 .00 | .11 .01 | .62 | .54 | .22 | .04 | .06 | .07 |

| SFL | .41 .00 | .32 .00 | .04 .00 | .47 .00 | .35 .00 | .05 .00 | .57 | .48 | .16 | .00 | .00 | .00 |

| APPLE | .43 .01 | .35 .01 | .06 .00 | .48 .00 | .36 .00 | .06 .00 | .61 | .52 | .22 | .01 | .02 | .01 |

| FedALA | .46 .00 | .39 .00 | .08 .00 | .53 .00 | .43 .00 | .12 .01 | .63 | .55 | .23 | .05 | .06 | .09 |

| DAMe (Ours) | .48 .00 | .40 .00 | .09 .00 | .56 .00 | .46 .00 | .16 .01 | .68 | .60 | .30 | .07 | .09 | .14 |

| promotion | .02 | .01 | .01 | .03 | .03 | .04 | .05 | .05 | .07 | .02 | .03 | .05 |

5.4. Implementation Details

The experiments are implemented using the PyTorch framework and run on a machine with eight NVIDIA Tesla A100 (40G) GPUs. We randomly sample 70%, 20%, and 10% for training, testing, and validation as common studies on SED (Cao et al., 2021; Ren et al., 2022a). For all methods, we employ the SED model in Section 4.1 as the backbone model. The backbone model consists of two layers of GAT, where each node in the batch aggregates messages from 800 direct neighbors and 100 one-hop neighbors. We set the mini-batch size to 2000, the learning rate to be , the margin for the contrastive triplet loss to 3, and employed the Adam optimizer. During the FL process, we perform 50 communication rounds, and each client conducts local training for 1 epoch, a compromise value across all baselines (Zhang et al., 2023; Chen et al., 2022).

The baseline methods are implemented based on open-source implementations on Github222https://github.com/dawenzi098/SFL-Structural-Federated-Learning333https://github.com/zs2847037826zs/PFL-Non-IID/tree/master. For SFL, the client-wise relation graph is constructed based on the distances between model parameters using Euclidean distance. It is observed that FedALA failed to converge and entered a state of deadlock after the initial communication round. Therefore, we set a local patience of 10 for FedALA. We set the number of epochs for local training to 50.

For the robustness analysis, we conducted injection attacks involving model poisoning444https://github.com/RingBDStack/KPGNN and data poisoning555https://github.com/thunlp/HiddenKiller following (Zhang et al., 2022) and (Qi et al., 2021), respectively.

All methods utilize k-means clustering. All experiments are repeated 5 times to mitigate the uncertainty of deep learning methods. We report the average value and standard deviation of the 5 repetitions. All implementations are available at https://github.com/XiaoyanWork/DAMe.

5.5. Evaluation Metric

Technically, SED involves learning representations of social messages and clustering them into specific events. We evaluate the performance of all methods using three commonly used metrics for clustering tasks: Normalized Mutual Information (NMI) (Estévez et al., 2009), Adjusted Mutual Information (AMI) (Vinh et al., 2009), and Adjusted Rand Index (ARI) (Vinh et al., 2009). These metrics quantify the similarity between the detected and ground truth clusters. A higher score of these metrics indicates better message representation.

6. Experimental Results

6.1. RQ1: Federated Performance

This section investigates the performance of SED with various FL systems. The result is demonstrated in Table 2. The results show that DAMe has outperformed all baseline methods on all metrics for each client dataset. Especially on the Arabic dataset, which suffers from limited data samples, DAMe surpasses the baseline methods by at least 0.16, 0.17, and 0.16 regarding NMI, AMI, and ARI, respectively. This observation indicates that DAMe has the potential to integrate most external knowledge from other clients, thereby promoting local performance to the greatest extent. It is observed that compared to local training, DAMe achieves an average gain of 0.07, 0.09, and 0.14 in NMI, AMI, and ARI, respectively, surpassing all FL baseline methods. These results highlight that the proposed framework meets the objective of FedSED, which is to enhance the performance of individual clients and benefit all clients involved. Moreover, in comparison to the pFL methods, the results reveal the following: (1) When comparing methods that directly override the local model with the global model (SFL, PerFedAvg, Ditto), these methods encounter challenges in achieving satisfactory local performance. This is because, in each communication round, training is perceived as starting from scratch on the client side, initialized with the global parameters. In the case of PerFedAvg, fine-tuning on the client side with local data necessitates an additional training round after the FL process. However, during local fine-tuning, there is a risk of catastrophic forgetting, whereby the learned information from the global model can be lost. As for SFL, which aggregates the global model using structural information on the server side, does not significantly contribute to local performance improvement. This is because the objective is to personalize the model with local data, and a model that integrates the majority of external knowledge and overrides the local model disregards the importance of local characteristics, which are crucial factors in personalization. Consequently, SFL does not surpass local training in most cases. (2) When comparing DAMe with methods that locally aggregate models (FedALA and APPLE), it is observed that, for APPLE, exposing each client to other clients’ local models does not necessarily lead to improved local performance. This is because clients cannot accurately determine which models are useful or possess similar distributions to their own. As for FedALA, the performance is relatively satisfactory due to its parameter-level aggregation, which aids in identifying relevant knowledge within the global model. However, FedALA’s approach of dispatching the global model based on weighted averages of data samples does not provide clients with the most advantageous information they require. In contrast, our proposed framework addresses this limitation by considering client similarity during the global aggregation process. This allows the server to dispatch an aggregated global model better aligned with each client’s specific needs. To sum up, the proposed dual aggregation mechanism significantly enhances the performance of local training. This is achieved by considering the local characteristics and providing clients with the knowledge they require the most. The dual aggregation mechanism is crucial in improving local performance to a paramount extent.

| Clean | Model Poisoning | Data Poisoning | |

|---|---|---|---|

| French Twitter | .76 .00 | .75 .00 | .75 .00 |

| Arabic Twitter | .86 .00 | .85 .07 | .85 .00 |

| Japanese Twitter | .67 .00 | .67 .00 | .67 .00 |

| German Twitter | .48 .00 | .47 .00 | .45 .00 |

| Chinese Weibo | .56 .00 | .55 .02 | .55 .00 |

| Average | .69 | .66 | .65 |

6.2. RQ2: Robustness Analysis

To evaluate DAMe’s robustness, we investigate its resistance to two types of attacks: model poisoning attacks and data poisoning attacks. In both attacks, the malicious client possesses the English dataset and aims to compromise the FL process. In the model poisoning attack, the client uploads manipulated parameters to the server (Zhang et al., 2022), resulting in a model with no practical utility. In the data poisoning attack, the client injects backdoors into its own data (Qi et al., 2021) and trains a local model using the poisoned data. Consequently, the injected backdoor affects the model parameters and corrupts the global model. In traditional settings, the server integrates all received models, including the poisoned model, without differentiation, which decreases the accuracy of the global model. Moreover, this corrupted global model is dispatched to individual clients, overriding their local models and compromising their local training. However, the proposed dual mechanism has advantages against such attacks. With BOLA, clients can determine the proportion of the dispatched global model that should be integrated into their local training. This enables them to avoid incorporating potentially poisoned parameters. Additionally, SEGA, implemented on the server side, leverages the client-uploaded models to compute the similarity between clients’ models. This similarity calculation enables the server to develop a dispatching strategy, ensuring that models containing poison parameters are not distributed to clients. From the result in Table 3, it is evident that the performance of the DAMe on all datasets demonstrates a negligible perturbation in performance. This observation indicates that DAMe is resilient to injection attacks in FL. Regarding injection attacks, DAMe has the capability to disregard model injection, as it selectively focuses on relevant information that is essential for clients (while disregarding irrelevant noise).

6.3. RQ3: Analysis on DAMe

This section presents an ablation study to identify the contribution of the components in DAMe and analyze the effect of the modules.

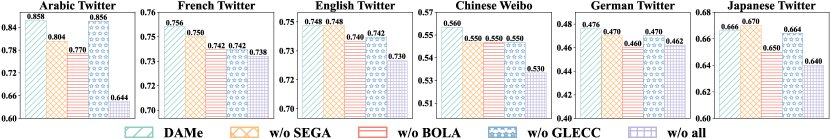

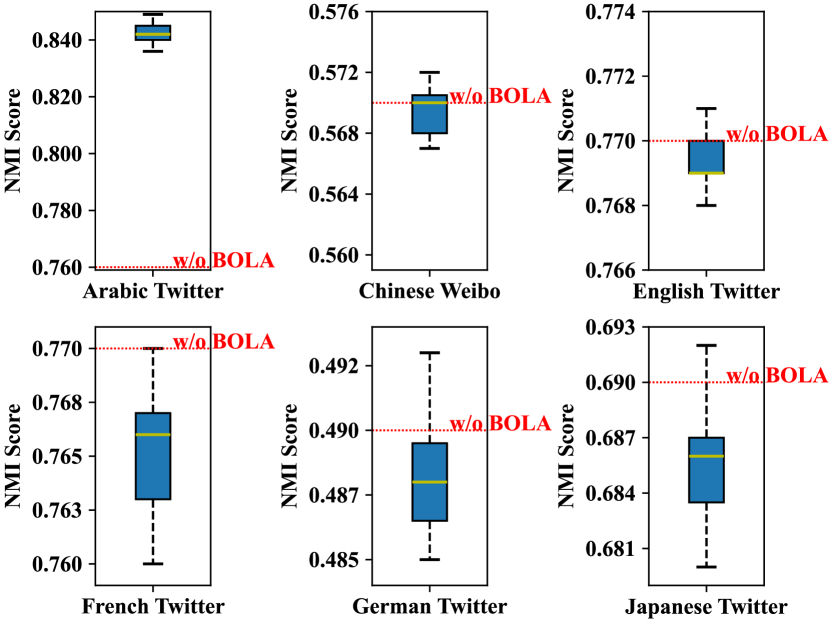

The results of the ablation study are presented in Figure 2. The findings demonstrate that all proposed components enhance the performance of FedSED. Specifically, BOLA exhibits a substantial influence on performance improvement. This indicates that in pFL settings, it is crucial to empower clients to determine how much they incorporate knowledge from the global model, while preserving their individual characteristics during training. Moreover, SEGA significantly improves performance and surpasses the baseline SFL, aggregating the global model based on structural information within a client graph. This finding suggests that our approach, which leverages client similarity and minimizes the 2DSE of the client graph for global aggregation, effectively identifies pertinent knowledge that boosts local performance. Lastly, GLECC also plays a role in improving overall performance. This implies that aligning the global and local representations of the same event is advantageous for achieving a consensus between the global and local models, thereby mitigating the inherent heterogeneity.

We analyze the Bayesian search space in the last communication round of the FL process and present the visualization of BOLA in Figure 3 . For all clients, DAMe achieves the best performance. For French, German, and Japanese Twitter, DAMe without BOLA achieves performance above the first quartile of the weight distribution in the search space of the Bayesian optimization algorithm but consistently lags behind the upper bound. This observation highlights the significant benefits of utilizing BOLA to search for local aggregation weights, thereby enhancing local performance.

6.4. RQ4: Overhead Analysis

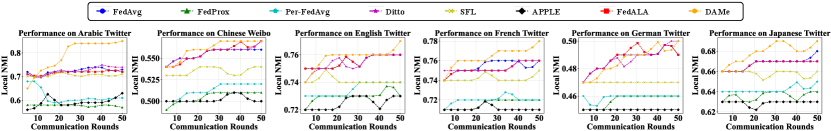

Here, we delve into the crucial factors in FL, including the convergence, computation and communication overhead of the proposed framework and baseline methods.

Convergence As depicted in Figure 4, the proposed DAMe demonstrates stable convergence. This stability can be attributed to DAMe’s tailored design for the task of SED, which focuses on learning message representations and performing clustering. DAMe stands out as the first work to consider the characteristics of the SED task, unlike baseline methods, which serve as a general framework for FL. This task-specific approach allows DAMe to better suit the requirements of SED, enhancing its performance and convergence compared to the less specialized FL baselines.

Computation Overhead The second column in Table 4 presents the time consumption of all methods during the entire training process. It is observed that DAMe without the GLECC module has the shortest time for training in the FL setting, shorter than DAMe, since it omit the process of obtaining the global and local event representation. Moreover, Per-FedAvg and Ditto exhibit the longest training times, surpassing DAMe’s duration by 1.5 times. This can be attributed to their approaches of local fine-tuning or training additional local models, which significantly prolongs the training process. However, contrasting the results in Table 2, these methods do not demonstrate substantial performance improvements. In this regard, DAMe demonstrates success in such scenarios by achieving notable task performance while maintaining reasonable computational overhead.

Communication Overhead The communication overhead is shown in the last column in Table 4. In most scenarios, the methods adhere to the centralized FL setting, where a single server communicates with all clients, leading to a consistent communication overhead across the same parameter scales. However, APPLE adopts a decentralized FL setting, where all clients interact with each other to determine a local aggregation strategy. This significantly increases the communication overhead as the number of clients grows. In our experiment, where , the communication overhead of APPLE is 3.5 times higher compared to other methods.

| Computation | Communication | ||

| (Total time) | (Time/round) | (Param./round) | |

| Local | 1402 min | - | - |

| FedAvg | 1522 min | 30 min | |

| FedProx | 1902 min | 38 min | |

| Per-FedAvg | 2945 min | 59 min | |

| Ditto | 2890 min | 58 min | |

| SFL | 1555 min | 31 min | |

| APPLE | 1568 min | 31 min | |

| FedALA | 2725 min | 55 min | |

| DAMe | 1896 min | 38 min | |

| w/o BOLA | 1688 min | 33 min | |

| w/o SEGA | 1612 min | 32 min | |

| w/o GLECC | 1530 min | 31 min | |

7. Conclusion

In this paper, we initiate the study on FedSED and propose DAMe, a personalized federated framework for social event detection that incorporates two aggregation strategies. In DAMe, the server provides clients with maximum external knowledge with a structural entropy-based global aggregation strategy; clients leverage received knowledge and retain local characteristics to the greatest extent by a Bayesian optimization-based local aggregation strategy. Moreover, the local optimization process is guided by an event-centric constraint that mitigates the issues arising from heterogeneity, while preventing overfitting to the local data. Extensive experiments on six SED datasets across six languages and two platforms have demonstrated the effectiveness of DAMe. Further robustness analyses have shown that DAMe is resistant to federated injection attacks.

Acknowledgements.

This work is supported by the National Key Research and Development Program of China (No.2023YFF0905302), and the Yunnan Provincial Major Science and Technology Special Plan Projects (No.202302AD080003).References

- (1)

- Alharbi and Lee (2021) Alaa Alharbi and Mark Lee. 2021. Kawarith: an Arabic Twitter corpus for crisis events. In Proceedings of the Sixth Arabic Natural Language Processing Workshop. 42–52.

- Cao et al. (2021) Yuwei Cao, Hao Peng, Jia Wu, Yingtong Dou, Jianxin Li, and Philip S. Yu. 2021. Knowledge-preserving incremental social event detection via heterogeneous gnns. In Proceedings of the Web Conference 2021. 3383–3395.

- Cao et al. (2024) Yuwei Cao, Hao Peng, Zhengtao Yu, and S Yu Philip. 2024. Hierarchical and incremental structural entropy minimization for unsupervised social event detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 38. 8255–8264.

- Chen et al. (2022) F Chen, G Long, Z Wu, T Zhou, and J Jiang. 2022. Personalized Federated Learning With a Graph. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence. International Joint Conferences on Artificial Intelligence, 1–8.

- Collins et al. (2021) Liam Collins, Hamed Hassani, Aryan Mokhtari, and Sanjay Shakkottai. 2021. Exploiting shared representations for personalized federated learning. In International conference on machine learning. PMLR, 2089–2099.

- Cui et al. (2021) Wanqiu Cui, Junping Du, Dawei Wang, Feifei Kou, and Zhe Xue. 2021. MVGAN: Multi-view graph attention network for social event detection. ACM Transactions on Intelligent Systems and Technology (TIST) 12, 3 (2021), 1–24.

- Estévez et al. (2009) Pablo A Estévez, Michel Tesmer, Claudio A Perez, and Jacek M Zurada. 2009. Normalized mutual information feature selection. IEEE Transactions on neural networks 20, 2 (2009), 189–201.

- Fallah et al. (2020) Alireza Fallah, Aryan Mokhtari, and Asuman Ozdaglar. 2020. Personalized federated learning with theoretical guarantees: A model-agnostic meta-learning approach. Advances in Neural Information Processing Systems 33 (2020), 3557–3568.

- Frazier (2018) Peter I Frazier. 2018. A tutorial on Bayesian optimization. arXiv preprint arXiv:1807.02811 (2018), 1–22.

- Hoffman et al. (2011) Matthew Hoffman, Eric Brochu, Nando De Freitas, et al. 2011. Portfolio Allocation for Bayesian Optimization.. In UAI. 327–336.

- Holland et al. (1983) Paul W Holland, Kathryn Blackmond Laskey, and Samuel Leinhardt. 1983. Stochastic blockmodels: First steps. Social networks 5, 2 (1983), 109–137.

- Huang et al. (2023) Wenke Huang, Guancheng Wan, Mang Ye, and Bo Du. 2023. Federated graph semantic and structural learning. In Proc. Int. Joint Conf. Artif. Intell. 3830–3838.

- Huang et al. (2021) Yutao Huang, Lingyang Chu, Zirui Zhou, Lanjun Wang, Jiangchuan Liu, Jian Pei, and Yong Zhang. 2021. Personalized cross-silo federated learning on non-iid data. In Proceedings of the AAAI conference on artificial intelligence, Vol. 35. 7865–7873.

- Jones et al. (1998) Donald R Jones, Matthias Schonlau, and William J Welch. 1998. Efficient global optimization of expensive black-box functions. Journal of Global optimization 13 (1998), 455–492.

- Lee et al. (2019) Junhyun Lee, Inyeop Lee, and Jaewoo Kang. 2019. Self-attention graph pooling. In International conference on machine learning. PMLR, 3734–3743.

- Li and Pan (2016) Angsheng Li and Yicheng Pan. 2016. Structural information and dynamical complexity of networks. IEEE Transactions on Information Theory 62, 6 (2016), 3290–3339.

- Li et al. (2021a) Tian Li, Shengyuan Hu, Ahmad Beirami, and Virginia Smith. 2021a. Ditto: Fair and robust federated learning through personalization. In International conference on machine learning. PMLR, 6357–6368.

- Li et al. (2020) Tian Li, Anit Kumar Sahu, Manzil Zaheer, Maziar Sanjabi, Ameet Talwalkar, and Virginia Smith. 2020. Federated optimization in heterogeneous networks. Proceedings of Machine learning and systems 2 (2020), 429–450.

- Li et al. (2021b) Xin-Chun Li, De-Chuan Zhan, Yunfeng Shao, Bingshuai Li, and Shaoming Song. 2021b. Fedphp: Federated personalization with inherited private models. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, 587–602.

- Liu et al. (2023) Yi Liu, Xiaohan Bi, Lei Li, Sishuo Chen, Wenkai Yang, and Xu Sun. 2023. Communication Efficient Federated Learning for Multilingual Neural Machine Translation with Adapter. In Findings of the Association for Computational Linguistics: ACL 2023. 5315–5328.

- Luo and Wu (2022) Jun Luo and Shandong Wu. 2022. Adapt to Adaptation: Learning Personalization for Cross-Silo Federated Learning. In IJCAI: proceedings of the conference, Vol. 2022. 2166–2173.

- Lyu et al. (2022) Lingjuan Lyu, Han Yu, Xingjun Ma, Chen Chen, Lichao Sun, Jun Zhao, Qiang Yang, and Philip S. Yu. 2022. Privacy and robustness in federated learning: Attacks and defenses. IEEE transactions on neural networks and learning systems (2022), 1–21.

- Mazoyer et al. (2020) Béatrice Mazoyer, Julia Cagé, Nicolas Hervé, and Céline Hudelot. 2020. A french corpus for event detection on twitter. (2020), 1–8.

- McMahan et al. (2017) Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. 2017. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics. PMLR, 1273–1282.

- McMinn et al. (2013) Andrew J McMinn, Yashar Moshfeghi, and Joemon M Jose. 2013. Building a large-scale corpus for evaluating event detection on twitter. In Proceedings of the 22nd ACM international conference on Information & Knowledge Management. 409–418.

- Mockus (1974) Jonas Mockus. 1974. On Bayesian methods for seeking the extremum. In Proceedings of the IFIP Technical Conference. 400–404.

- Peng et al. (2019) Hao Peng, Jianxin Li, Qiran Gong, Yangqiu Song, Yuanxing Ning, Kunfeng Lai, and Philip S. Yu. 2019. Fine-grained event categorization with heterogeneous graph convolutional networks. In Proceedings of the 28th International Joint Conference on Artificial Intelligence. 3238–3245.

- Peng et al. (2021) Hao Peng, Jianxin Li, Yangqiu Song, Renyu Yang, Rajiv Ranjan, Philip S. Yu, and Lifang He. 2021. Streaming social event detection and evolution discovery in heterogeneous information networks. ACM Transactions on Knowledge Discovery from Data (TKDD) 15, 5 (2021), 1–33.

- Peng et al. (2022) Hao Peng, Ruitong Zhang, Shaoning Li, Yuwei Cao, Shirui Pan, and Philip S. Yu. 2022. Reinforced, incremental and cross-lingual event detection from social messages. IEEE Transactions on Pattern Analysis and Machine Intelligence 45, 1 (2022), 980–998.

- Qi et al. (2021) Fanchao Qi, Yangyi Chen, Mukai Li, Yuan Yao, Zhiyuan Liu, and Maosong Sun. 2021. ONION: A Simple and Effective Defense Against Textual Backdoor Attacks. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing. 9558–9566.

- Reimers and Gurevych (2019) Nils Reimers and Iryna Gurevych. 2019. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). 3982–3992.

- Reimers and Gurevych (2020) Nils Reimers and Iryna Gurevych. 2020. Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP). 4512–4525.

- Ren et al. (2022a) Jiaqian Ren, Lei Jiang, Hao Peng, Yuwei Cao, Jia Wu, Philip S. Yu, and Lifang He. 2022a. From known to unknown: quality-aware self-improving graph neural network for open set social event detection. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management. 1696–1705.

- Ren et al. (2022b) Jiaqian Ren, Lei Jiang, Hao Peng, Lingjuan Lyu, Zhiwei Liu, Chaochao Chen, Jia Wu, Xu Bai, and Philip S Yu. 2022b. Cross-network social user embedding with hybrid differential privacy guarantees. In Proceedings of the 31st ACM international conference on information & knowledge management. 1685–1695.

- Ren et al. (2023) Jiaqian Ren, Hao Peng, Lei Jiang, Zhiwei Liu, Jia Wu, Zhengtao Yu, and Philip S. Yu. 2023. Uncertainty-guided Boundary Learning for Imbalanced Social Event Detection. IEEE Transactions on Knowledge and Data Engineering (2023), 1–14.

- Ren et al. (2021) Jiaqian Ren, Hao Peng, Lei Jiang, Jia Wu, Yongxin Tong, Lihong Wang, Xu Bai, Bo Wang, and Qiang Yang. 2021. Transferring knowledge distillation for multilingual social event detection. arXiv preprint arXiv:2108.03084 (2021), 1–31.

- SRINIVAS (2010) N SRINIVAS. 2010. Gaussian process optimization in the bandit setting: No regret and experimental design. In Proceedings of the International Conference on Machine Learning, 2010. 1–17.

- Sui et al. (2020) Dianbo Sui, Yubo Chen, Jun Zhao, Yantao Jia, Yuantao Xie, and Weijian Sun. 2020. Feded: Federated learning via ensemble distillation for medical relation extraction. In Proceedings of the 2020 conference on empirical methods in natural language processing (EMNLP). 2118–2128.

- Sun et al. (2021) Benyuan Sun, Hongxing Huo, Yi Yang, and Bo Bai. 2021. Partialfed: Cross-domain personalized federated learning via partial initialization. Advances in Neural Information Processing Systems 34 (2021), 23309–23320.

- T Dinh et al. (2020) Canh T Dinh, Nguyen Tran, and Josh Nguyen. 2020. Personalized federated learning with moreau envelopes. Advances in Neural Information Processing Systems 33 (2020), 21394–21405.

- Tan et al. (2022) Alysa Ziying Tan, Han Yu, Lizhen Cui, and Qiang Yang. 2022. Towards personalized federated learning. IEEE Transactions on Neural Networks and Learning Systems (2022), 1–17.

- Vinh et al. (2009) Nguyen Xuan Vinh, Julien Epps, and James Bailey. 2009. Information theoretic measures for clusterings comparison: is a correction for chance necessary?. In Proceedings of the 26th annual international conference on machine learning. 1073–1080.

- Wei et al. (2023) Yifan Wei, Fangyu Lei, Yuanzhe Zhang, Jun Zhao, and Kang Liu. 2023. Multi-view graph representation learning for answering hybrid numerical reasoning question. arXiv preprint arXiv:2305.03458 (2023).

- Yang et al. (2023) Yingguang Yang, Renyu Yang, Hao Peng, Yangyang Li, Tong Li, Yong Liao, and Pengyuan Zhou. 2023. FedACK: Federated adversarial contrastive knowledge distillation for cross-lingual and cross-model social bot detection. In Proceedings of the ACM Web Conference 2023. 1314–1323.

- Yu et al. (2024) Xiaoyan Yu, Tongxu Luo, Yifan Wei, Fangyu Lei, Yiming Huang, Peng Hao, and Liehuang Zhu. 2024. Neeko: Leveraging Dynamic LoRA for Efficient Multi-Character Role-Playing Agent. arXiv preprint arXiv:2402.13717 (2024).

- Zhang et al. (2021) Chen Zhang, Yu Xie, Hang Bai, Bin Yu, Weihong Li, and Yuan Gao. 2021. A survey on federated learning. Knowledge-Based Systems 216 (2021), 106775.

- Zhang et al. (2023) Jianqing Zhang, Yang Hua, Hao Wang, Tao Song, Zhengui Xue, Ruhui Ma, and Haibing Guan. 2023. Fedala: Adaptive local aggregation for personalized federated learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 37. 11237–11244.

- Zhang et al. (2020) Michael Zhang, Karan Sapra, Sanja Fidler, Serena Yeung, and Jose M Alvarez. 2020. Personalized Federated Learning with First Order Model Optimization. In International Conference on Learning Representations. 1–17.

- Zhang et al. (2022) Zaixi Zhang, Xiaoyu Cao, Jinyuan Jia, and Neil Zhenqiang Gong. 2022. Fldetector: Defending federated learning against model poisoning attacks via detecting malicious clients. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 2545–2555.

- Zhao et al. (2020) Bo Zhao, Konda Reddy Mopuri, and Hakan Bilen. 2020. idlg: Improved deep leakage from gradients. arXiv preprint arXiv:2001.02610 (2020).

- Zhu et al. (2019) Ligeng Zhu, Zhijian Liu, and Song Han. 2019. Deep leakage from gradients. Advances in neural information processing systems 32 (2019), 1–11.