Karthikeya S Parunandi1, Aayushman Sharma2, Suman Chakravorty3 and Dileep Kalathil4

11institutetext: Texas AM University, College Station TX 77843, USA

11email: [email protected]1, 11email: [email protected]2, 11email: [email protected]3, and 11email: [email protected]4

D2C 2.0: Decoupled Data-Based Approach for Learning to Control Stochastic Nonlinear Systems via Model-Free ILQR

Abstract

In this paper, we propose a structured linear parameterization of a feedback policy to solve the model-free stochastic optimal control problem. This parametrization is corroborated by a decoupling principle that is shown to be near-optimal under a small noise assumption, both in theory and by empirical analyses. Further, we incorporate a model-free version of the Iterative Linear Quadratic Regulator (ILQR) in a sample-efficient manner into our framework. Simulations on systems over a range of complexities reveal that the resulting algorithm is able to harness the superior second-order convergence properties of ILQR. As a result, it is fast and is scalable to a wide variety of higher dimensional systems. Comparisons are made with a state-of-the-art reinforcement learning algorithm, the Deep Deterministic Policy Gradient (DDPG) technique, in order to demonstrate the significant merits of our approach in terms of training-efficiency.

keywords:

Machine Learning, Motion and Path Planning, Optimization and Optimal Control1 INTRODUCTION

It is well-known that a general solution to stochastic optimal control problem is computationally intractable. Hence, most methods rely on approximated dynamic programming methods (See, for e.g., [1]). In case of an unknown system model, the problem is further complicated. This problem of learning to optimally control an unknown general nonlinear system has been well-studied in the stochastic adaptive control literature [2] [3]. A very similar problem is also addressed in the field of reinforcement learning (RL) except that it specifically aims to solve a discrete-time stochastic control process (Markov Decision Process), more often with an infinite horizon formulation [4]. Also, RL has attracted a great attention with its recent success, especially in its sub-domain of deep RL [5] [6] [7].

Most methods in the RL can be divided into model-based and model-free approaches, based on whether a model is constructed before solving for an optimal policy or not, respectively. Among them, model-based RL typically requires lesser number of samples and can be global or local model-based. Global models are typically approximated with Gaussian Processes (GPs) or Neural Networks, and hence can be inaccurate in some portions of its state space. Local models (such as in [8][9]) in contrast offer more sample efficient and accurate solutions. On the other hand, model-free RL, since has to bypass learning the system model requires substantially more number of training samples to train the policy [10]. While an overwhelming number of successful works in the recent past have relied on a complex parameterizations such as by using neural networks [7] [11][12] [13], they are challenged with non-determinism in reproducing the results and in benchmarking against existing methods [14][15]. Recent works such as by [16] showed that a simple linear parameterization can be very effective and can result in performances competitive to that of the former methods. The current work, aimed at deriving a simple but structured, sample-efficient and thereby a fast policy, falls under the local model-based regime, though we won’t explicitly build the system model but rather deal with its gradients in the neighborhood of a nominal trajectory.

In the prequel of this work, called D2C [17], instead of solving for the optimal closed-loop policy via dynamic programming as in typical RL techniques, our approach searches for a computationally tractable policy that is nonetheless near-optimal to second order in a noise parameter . This is done via decoupling of the open and the closed loops, wherein we first solve for an optimal open-loop trajectory, and then design an optimal linear feedback law to stabilize the linear perturbations about the nominal trajectory. The success of this approach was previously demonstrated in [18][19] for model-based problems. This way of decoupling results in a compact parameterization of the feedback law in terms of an open loop control sequence and a linear feedback policy, and thereby, constitutes a novel approach to the RL problem via the decoupled search.

The contributions of this sequel paper are as follows:

i) We incorporate a data-efficient, and hence, significantly faster way to compute the gradients in the ILQR framework in a model free fashion. This, in turn, is incorporated in our D2C framework to solve for the optimal open-loop trajectory. This is in contrast to the ‘direct’ gradient-descent based approach introduced in the earlier version of our algorithm (D2C 1.0).

ii) We make a comparison with a state-of-the-art reinforcement learning algorithm - DDPG [7] as well as D2C 1.0, and show the significant merit of the current method, termed D2C 2.0, in terms of its computational efficiency.

iii) D2C 2.0 also constitutes a novel approach to solving complex robotic motion planning problems under uncertainty in a data efficient model-free fashion.

2 RELATED WORK

Differential Dynamic Programming (DDP) [20] is a class of iterative algorithms for trajectory optimization. One salient aspect of DDP is that it exhibits quadratic convergence for a general non-linear optimal control problem and can be solved in a single step for linear systems. In spite of its origins in the 1960s, it gained popularity only in the last decade due to the success of the modified algorithm called ILQR [21]. Though DDP (theoretically) guarantees a quadratic convergence, some of the terms in it involve computing second order derivatives of the system dynamical models. Since the dynamical models of most systems are multivariable vector-valued functions and their second order derivatives being third order tensors, DDP in its original form was not effective for practical implementation. ILQR [22] [23] dropped these terms and introduced regularization schemes [24][25] to handle the absence of these computationally expensive terms. This resulted in a faster and stable convergence to the optimal solution.

One advantage that ILQR has in terms of solving it in a model-free manner is that this algorithm is explicit in terms of system model and their first order derivatives. Earlier works such as by [25] and [26] employed finite differencing in computing the Jacobians in ILQR. Typically, a forward Euler scheme is chosen to independently determine each element (i.e, gradient) in a Jacobian matrix. [9] presented ILQR-LD by learning the dynamics through Locally Weighted Projection Regression (LWPR) approximation of the model first and then obtaining a corresponding analytical Jacobian. Their work also demonstrated a better computational efficiency over finite differences.

Spall introduced the ‘Simultaneous Perturbation Stochastic Approximation’ (SPSA) [27] method that evaluates the function only twice to calculate the Jacobian of the cost function. In this paper, a similar formulation is derived to compute the Jacobians of the system model online, in a model-free fashion, through the least squares method in a central-difference scheme.

The rest of the paper is organized as follows. Section 3 introduces the terminology and provides the problem statement. In section 4, we present a near-optimal decoupling principle that forms the basis of our algorithm. Section 5 describes our algorithm - D2C 2.0 in detail. In Sections 6 and 7, we provide results and a discussion of the application of the D2C 2.0 to several benchmark examples, along with comparisons to DDPG and the D2C technique.

3 PROBLEM FORMULATION

Let represent the state of a system and be the control signal at time respectively. Let denote the deterministic state transition model of the system. Let be the policy to be applied on the system. Let be the time-indexed incremental cost function for all and be the terminal cost function. Let represent the cost-to-go function under the policy and be the corresponding action-value function, both at time . Finally, let us denote the derivatives of a variable at time w.r.t. the state or the control by placing respective variables in the subscript. For example, . Unless stated otherwise, they are evaluated at the nominal state or control at time .

Now, let us define the notion of process noise in the system through an important parameter called ‘noise scaling factor’, denoted by . We assume that the noise is additive and white, zero-mean Gaussian. Hence, the state evolution equation of our stochastic system is represented as , where and is zero-mean Gaussian distributed.

Let us define the notion of a ‘nominal’ trajectory, given a policy , as follows - the nominal control actions of the policy are the control actions output by the policy when all the noise variables . Let us represent the nominal trajectory variables with bars over them and , both at time . So, the nominal trajectory is given by, , where is the time horizon, and , where .

Using the above definitions, the problem of stochastic optimal control that we consider is to find the set of time-varying optimal control policies (subscripts denote the time-indices) that minimize the finite horizon total expected cumulative cost,

| (1) |

such that the system model i.e, , relevant constraints and boundary conditions are satisfied. Since the current approach deals with model-free problem, the system model and its constraints are assumed to be implicitly satisfied in the data that the system/agent gathers from its environment (through a simulator or a real world experiment).

4 A NEAR-OPTIMAL DECOUPLING PRINCIPLE

Note : The results provided in this particular section are restated from our prequel paper on D2C primarily for the reader’s convenience and for the sake of completeness.

In this section, we describe a near-optimal decoupling principle which provides the theoretical foundation for our D2C technique.

Assuming that and are sufficiently smooth, let us describe the linearized dynamics about the nominal trajectory corresponding to the policy as follows. The state and control perturbations, given by and respectively, evolve as follows:

| (2) | ||||

| (3) |

where , , and , , are higher order terms in respective Taylor’s expansions. By substituting (3) in (2), we have

| (4) |

where . Now, similarly expanding the cost function under the policy about the nominal trajectory as , where and results in

| (5) |

Lemma 1: The state perturbation equation (4) can be equivalently separated into and , and is .

Proof: Proof is provided in the supplementary file.

From (5) and lemma-1, we obtain

| (6) |

Proposition 1: Given a feedback policy satisfying the smoothness requirements,

| (7) | ||||

| (8) |

Proof: Proof is provided in the supplementary file.

Noting that , the above proposition entails the following observations:

i) Observation 1: The cost-to-go along the nominal trajectory given by is within of the expected cost-to-go of the policy .

ii) Observation 2: Given the nominal control sequence , the variance of the cost-to-go is overwhelmingly determined by the linear feedback law by the variance of the linear perturbation of the cost-to-go, , under the linear closed loop system dynamics .

Proposition 1 and the above observations suggest that an optimal open-loop control sequence with a suitable linear feedback law (for the perturbed linear system) wrapped around it is approximately optimal. The following subsection summarizes the problems to be solved for each of them.

4.1 Decoupled Policy for Feedback Control

Open-Loop Trajectory Design: The open-loop trajectory is designed by solving the noiseless equivalent of the stochastic optimal control problem (1), as shown below:

Feedback Law: A linear feedback law is determined by solving for the optimal feedback gains that minimize the variance corresponding to the linear perturbation system around the optimal nominal trajectory found from the above, as follows:

Albeit convex, the above problem does not have a standard solution. However, since we are really only interested in a good variance rather than the optimal variance, we can solve an inexpensive time-varying LQR problem as a surrogate to reduce the variance of the cost-to-go. The corresponding problem can be posed as follows:

Remark 4.1.

In fact, if the feedback gain is designed carefully rather than the heuristic LQR above, then one can obtain near-optimality. This requires some more developments, however, we leave this result out of this paper because of the paucity of space.

5 ALGORITHM

This section presents our decoupled data-based control (D2C-2.0) algorithm. There are two main steps involved in solving for the requisite policy:

-

1.

Design an open-loop nominal trajectory by solving the optimization problem via a model-free ILQR based approach without explicitly making use of an analytic model of the system. This is described in subsection 5.1. This is the primary difference from the prequel D2C where we used a first order gradient based technique to solve the optimal open loop control problem.

-

2.

Determine the parameters of the time-varying linear perturbation system about its optimal nominal trajectory and design an LQR controller based on the system identification. This step is detailed in subsection 5.2.

5.1 Open-Loop Trajectory Design

An open-loop trajectory is designed by solving the corresponding problem in subsection 4.1. However, since we are solving it in a model-free manner, we need a suitable formulation that can efficiently compute the solution.

In this subsection, we present an ILQR based model-free method to solve the open-loop optimization problem. The advantage with ILQR is that the equations involved in it are explicit in system dynamics and their gradients. Hence, in order to make it a model-free algorithm, it is sufficient if we could explicitly obtain the estimates of Jacobians. A sample-efficient way of doing it is described in the following subsubsection. Since ILQR/DDP is a well-established framework, we skip the details and instead present the essential equations in algorithm 1, algorithm 2 and algorithm 3, where we also reflect the choice of our regularization scheme.

5.1.1 Estimation of Jacobians in a Model-Free Setting

From the Taylor’s expansions of ‘f’ about the nominal trajectory on both the positive and the negative sides, we obtain the following central difference equation:

| (9) |

Multiplying by on both sides to the above equation:

Assuming that is invertible (which will later be proved to be true by some assumptions), let us perform inversions on either sides of the above equation as follows:

| (10) |

Equation (10) solves for Jacobians, and , simultaneously. It is noted that the above formulation requires only 2 evaluations of , given the nominal state and control (). The remaining terms (also, the error in the evaluation) are of the order that is quadratic in and . The following extends equation (10) to be used in practical implementations (sampling).

We are free to choose the distribution of and . We assume both are i.i.d. Gaussian distributed random variables with zero mean and a standard deviation of . This ensures that is invertible. More on the advantage of using this distribution will be elaborated in the next paragraph. Let be the number of samples for each of the random variables, and , as and , respectively. Then is given by the following :

| (11) |

Let us consider the terms in the matrix . . Similarly, , and . From the definition of sample variance, we can write the above matrix as

Given that we have high enough number of samples ‘’ (typically, slightly more than ‘’ are sufficient), the above approximation holds good. Since the inversion of an identity matrix is trivial and always exists, the above matrix is invertible in equation (11). Thus, one can calculate and this way during the backward pass. For the sake of convenience in the later sections, let us refer to this method as ‘Linear Least Squares by Central Difference (LLS-CD)’. The entire algorithm to determine the optimal nominal trajectory in a model-free fashion is summarized together in Algorithm 1, Algorithm 3 and Algorithm 2.

5.2 Feedback Law

As discussed in the previous section, a time-varying LQR feedback law is used as a surrogate for the minimum variance problem. The LQR gains can be fully determined if , , and are known. The first two are the tuning parameters in the cost function. Determining and for a linear system is a system identification problem that can be solved by ‘LLS-CD’ as described under subsubsection 5.1.1.

6 SIMULATIONS

This section reports the implementation details of D2C-2.0 in simulation on various systems and their training results. We train the systems with varying complexities in terms of the dimension of the state and the action spaces. The physical models of the system are deployed in the simulation platform ‘MuJoCo-2.0’ [28] as a surrogate to their analytical models. The models are imported from the OpenAI gym [29] and Deepmind’s control suite [30].

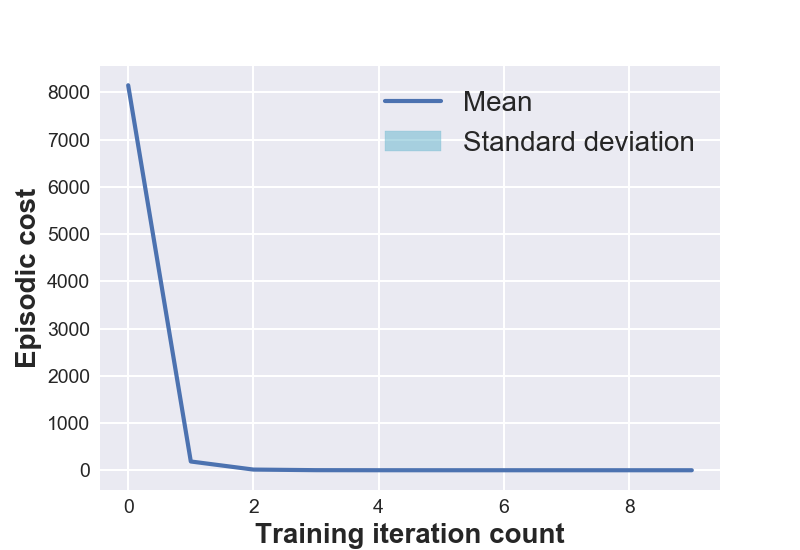

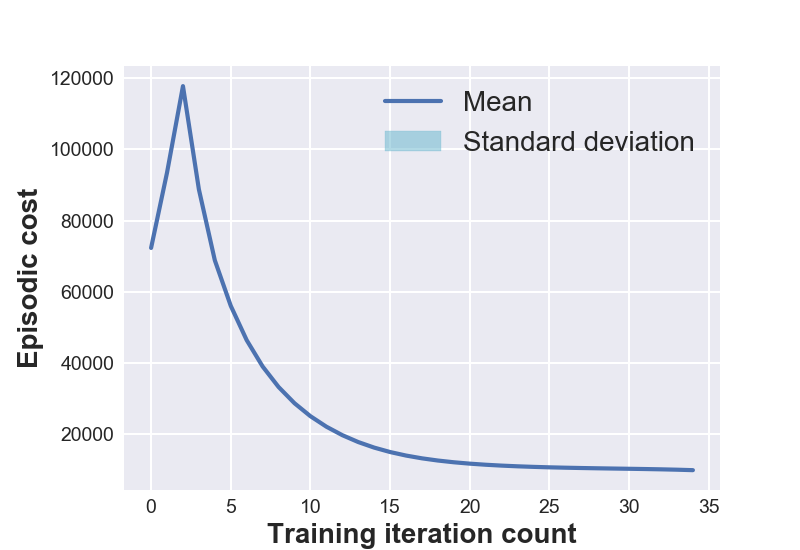

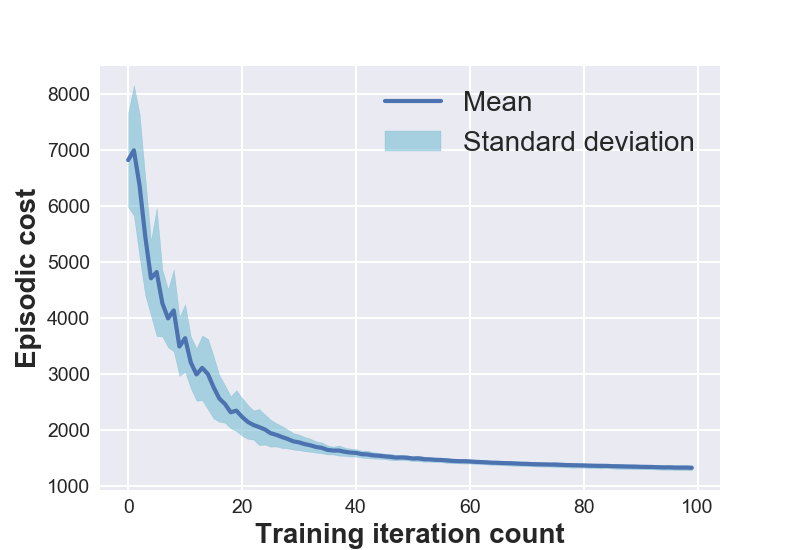

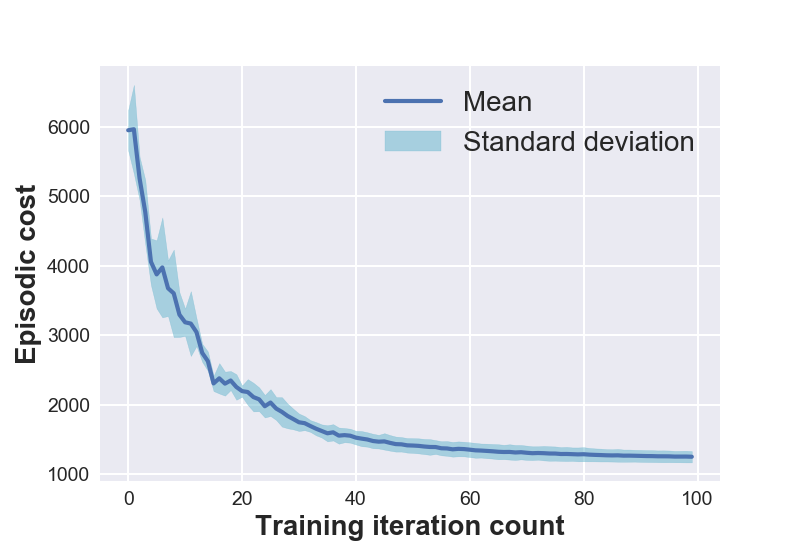

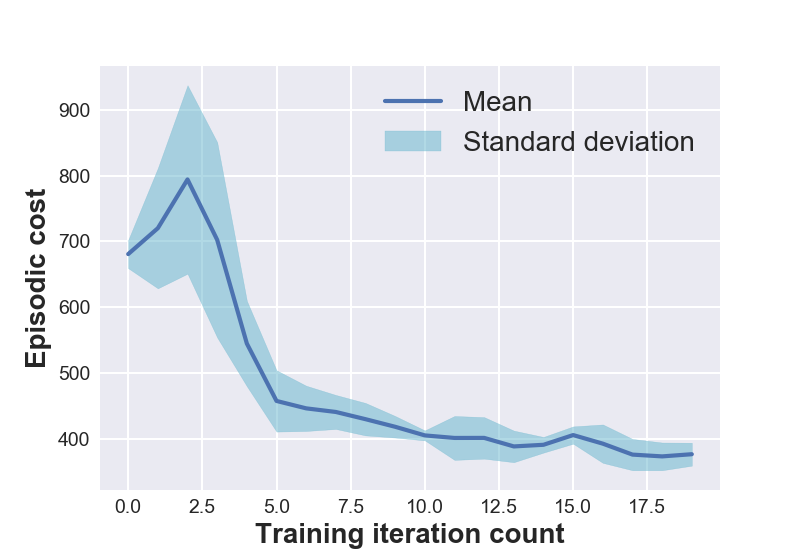

Figure 1 (left) shows the open-loop training plots of various systems under consideration. The data is averaged over 5 experimental runs to reflect the training variance and the repeatability of D2C-2.0 over multiple training sessions. One might notice that the episodic cost is not monotonically decreasing during training. This is because of the flexibility provided in training for the episodic cost to stay within a ‘band’ around the cost at the previous iteration. Though this might momentarily increase the cost in subsequent training iterations, it is observed to have improved the overall speed of convergence.

7 DISCUSSION

In this section, we compare D2C-2.0 with D2C and DDPG, and discuss the merits and demerits of each of the methods.

The manuscript on D2C provided an in-depth analysis on the comparison of D2C with DDPG. We have shown that training in D2C is much more sample-efficient and fares well w.r.t. the training time and the reproducibility of the resulting policy.

| Training time (in sec.) | ||||

| System | D2C | |||

| Open- | Closed- | DDPG | D2C- | |

| loop | loop | 2.0 | ||

| Inverted | 12.9 | 2261.15 | 0.33 | |

| Pendulum | ||||

| Cart pole | 15.0 | 1.33 | 6306.7 | 1.62 |

| 3-link | ||||

| Swimmer | 4001.0 | 4.6 | 38833.64 | 186.2 |

| 6-link | ||||

| Swimmer | 3585.4 | 16.4 | 88160 | 127.2 |

| Fish | 6011.2 | 75.6 | 124367.6 | 54.8 |

Table 1 shows the training times of D2C, DDPG and D2C-2.0. It is evident from the table that for simple and lower dimensional systems such as pendulum and cart pole, the policy can be almost calculated online (parallelization could make it much faster). It is due to the tendency of ILQR/DDP to quickly converge if the linearization of system models has bigger basin of attraction (such as pendulum, which is, in fact, often approximated to be linear about its resting state). Moreover, for a higher dimensional system such as in the case of a robotic fish, D2C-2.0 took 54.8 seconds while the original D2C took around 6096 seconds, which is approximately 90 times faster. The table makes it evident that D2C-2.0 clearly exhibits much faster convergence when compared to both D2C and DDPG, while D2C is much faster than DDPG. The primary reason for the much larger training times in DDPG is due to the complex parametrization of the feedback policy in terms of deep neural networks which runs into hundreds of thousands of parameters while D2C has a parametrization, primarily the open loop control sequence which is of dimension , where is the number of inputs and is the time horizon of the problem, that runs from tens to a few thousands (see Table 2).

| System | No. of | No. of | No. of | No. of |

|---|---|---|---|---|

| steps | actuators | parameters | parameters | |

| optimized | optimized | |||

| in D2C | in DDPG | |||

| Inverted | 30 | 1 | 30 | 244002 |

| Pendulum | ||||

| Cart pole | 30 | 1 | 30 | 245602 |

| 3-link | 1600 | 2 | 3200 | 251103 |

| Swimmer | ||||

| 6-link | 1500 | 5 | 7500 | 258006 |

| Swimmer | ||||

| Fish | 1200 | 6 | 7200 | 266806 |

A major reason behind the differences in training efficiencies stems from their formulation. D2C is based on gradient descent, which is linearly convergent. It is a direct and generic method of solving an optimization problem. On the other hand, the equations involved in D2C-2.0 arise from the optimality conditions that inherently exploit the recursive optimal structure and the causality in the problem. Moreover, the equations are explicit in the Jacobians of the system model. As a result, it is possible to solve for it independently and in a sample efficient manner, at every time-step of each iteration. However, one should expect that as we consider very high dimensional systems, the algorithmic complexity of D2C-2.0 could outgrow that of D2C. This is because it is in terms of its computational complexity. Also, D2C, by the nature of its formulation does not need the state of the system, and hence, is generalizable to problems with partial state observability.

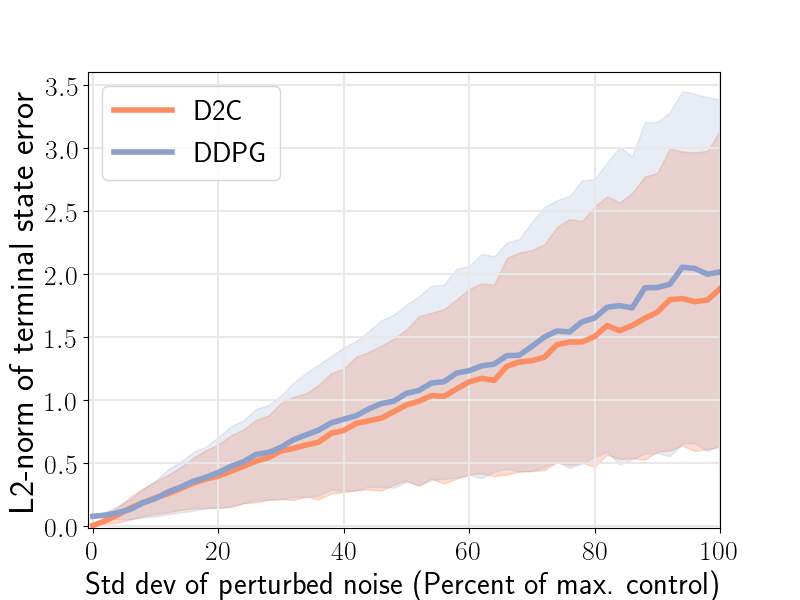

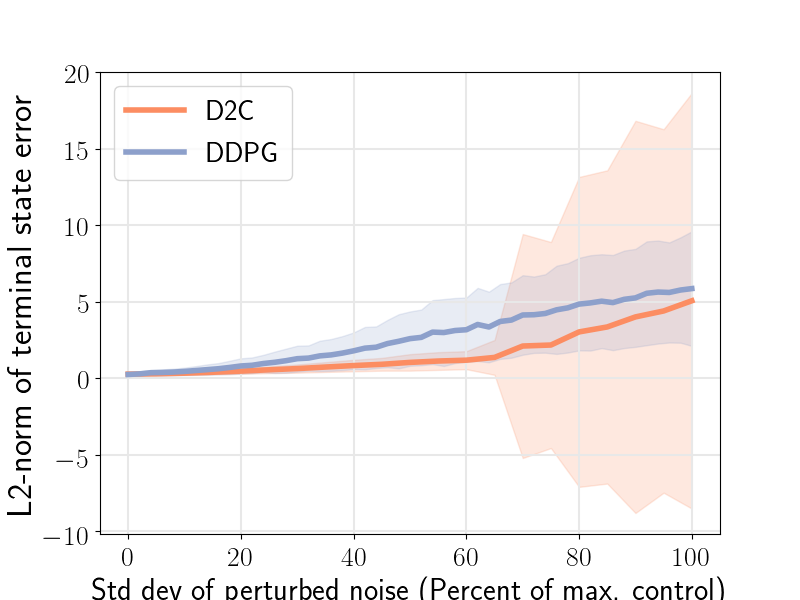

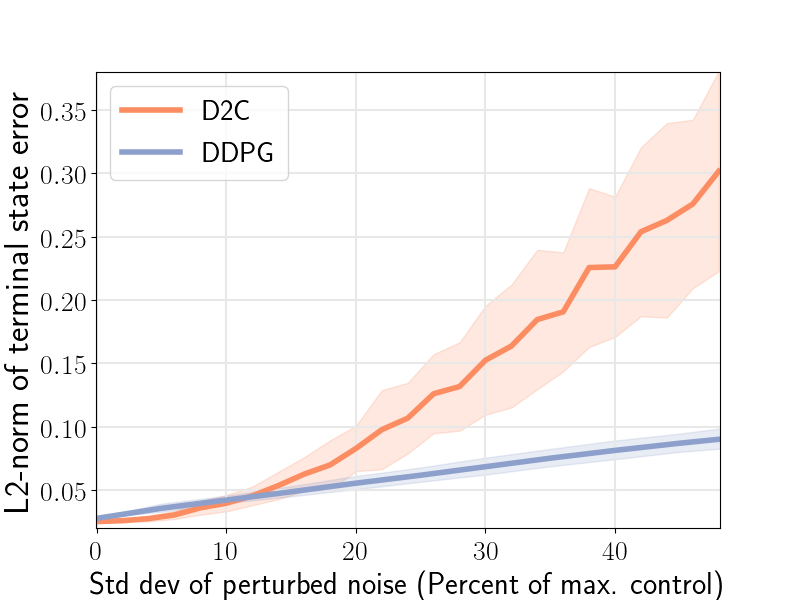

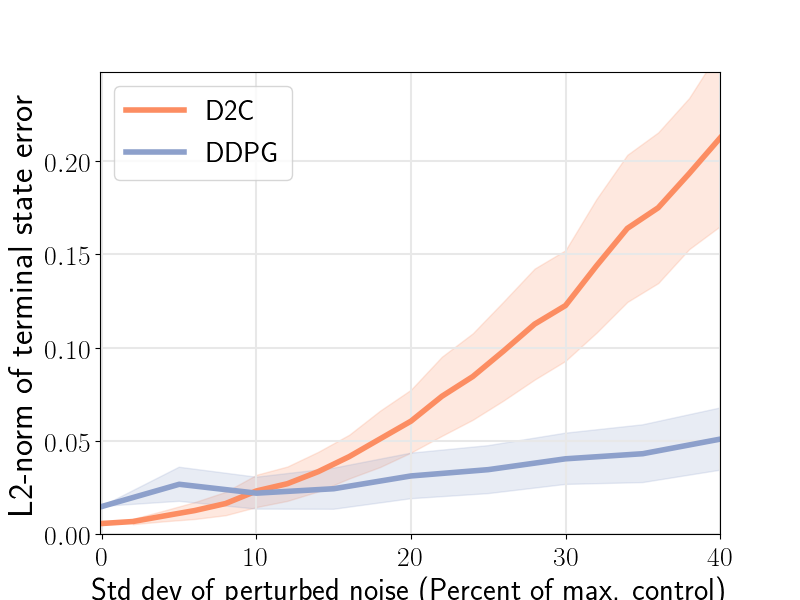

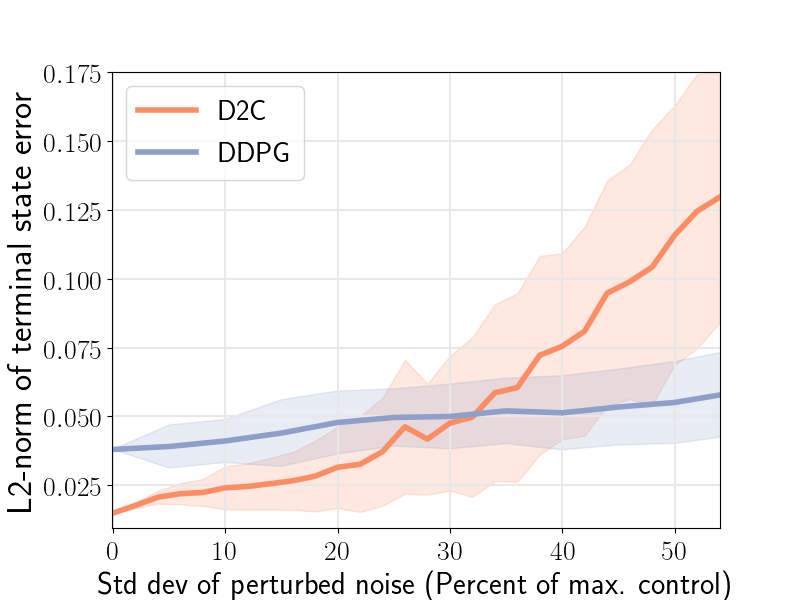

The closed-loop performance of D2C-2.0 w.r.t. that of DDPG is shown in the figure 1 (right). Noise is injected into the system via the control channel by varying the noise scaling factor- and then, measuring the performance. We note here that DDPG fails to converge for the robotic fish problem, and thus, the nominal performance () is poor. We see that the performance of D2C is better than that of DDPG when the noise levels are lower, however, the performance of DDPG remains relatively similar for higher levels of noise while that of D2C degrades. In our opinion, this closed loop performance aspect of the methods needs to be studied in more detail in order to make a definitive judgement, and is left for future work. For instance, by the time DDPG overtakes D2C in terms of the average performance, the actual performance of both systems is quite poor, and thus, it is not clear if this can be construed as an advantage.

Now, as mentioned in section 2, earlier works have attempted to tackle the idea of model-free ILQR and have mostly confined to finite-differences for Jacobian computation. Table 3 shows the comparison of per-iteration computational times between ‘finite-differences’ and ‘linear least squares with central difference formulation’. It is clearly evident that, as the dimension of the state space increases, the method of finite-differences requires many more function evaluations, and hence, our LLS-CD method is much more efficient.

| System | FD | LLS-CD |

| Inverted | ||

| Pendulum | 0.017 | 0.033 |

| Cart pole | 0.0315 | 0.0463 |

| Acrobot | 0.554 | 0.625 |

| 3-link | ||

| Swimmer | 4.39 | 1.86 |

| 6-link | ||

| Swimmer | 14.43 | 1.278 |

| Fish | 34.75 | 2.74 |

8 Conclusion

In order to conclude the discussion that started the global vs local policy approximation dilemma in section 1, how well deep RL methods extrapolate their policy to the entire continuous state and action spaces remains an open question. Now, consider that the results shown in the Table 1 are based on serial implementations on an off-the-shelf computer and D2C-2.0 can be highly parallelizable. By looking at the order of magnitude of numbers in the same table, we expect with reasonable augmentation of computational power by parallelization, D2C-2.0 could offer a near-real time solution in high dimensional problems. In such cases, one could rely on the near-optimal policy described in this paper for low noises, and re-solve for the open-loop trajectory online by the approach presented in this paper along with the attendant feedback, whenever the noise takes the method out of the region of attraction of the linear feedback policy. This will be our motivation for the future work along with addressing the following limitation.

Limitations: D2C-2.0, in its current form, is not reliable for hybrid models such as legged robots that involve impacts. Primarily, the issue is in the feedback design which requires smoothness of the underlying dynamics while legged systems are only piece-wise smooth. Future work shall model impacts to tractably incorporate them in order to estimate the required gradients. This seems plausible as iLQR has been used to design controllers for such systems in the literature [25].

References

- [1] W. B. Powell (2007). Approximate Dynamic Programming: Solving the curses of dimensionality. John Wiley & Sons.

- [2] P.A. Ioannou and J. Sun (2012). Robust adaptive control, Courier Corporation.

- [3] K.J. Astrom and B. Wittenmark (2012). Adaptive control, Courier Corporation.

- [4] R.S. Sutton, and A.G. Barto (2018). Reinforcement learning: An introduction, MIT press.

- [5] V. Mnih, K. Kavukcuoglu, D. Silver, A. Rusu, J. Veness, M. Bellemare, A. Graves, M. Riedmiller, A. Fidjeland, G. Ostrovski, S. Petersen, C. Beattie, A. Sadik, I. Antonoglou, H. King, D. Kumaran , D. Wierstra, S. Legg, D. Hassabis (2015). Human-level control through deep reinforcement learning, Nature. vol. 518, pp. 529-33.

- [6] D. Silver, A. Huang, C.J. Maddison, A. Guez, L. Sifre, G. Van Den Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot et al. (2016). Mastering the game of go with deep neural networks and tree search, Nature, vol. 529, no. 7587, pp. 484.

- [7] T.P. Lillicrap, J.J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, and D. Wierstra (2016). Continuous control with deep reinforcement learning, International Conference on Learning Representations.

- [8] V. Kumar, E. Todorov, and S. Levine (2016). Optimal Control with Learned Local Models: Application to Dexterous Manipulation, International Conference for Robotics and Automation (ICRA).

- [9] D. Mitrovic, S. Klanke, and S. Vijayakumar (2010). Adaptive Optimal Feedback Control with Learned Internal Dynamics Models, In: Sigaud O., Peters J. (eds) From Motor Learning to Interaction Learning in Robots. Studies in Computational Intelligence, vol 264. Springer, Berlin, Heidelberg, pp. 65-84.

- [10] P. Abbeel, M. Quigley, and A.Y. Ng (2006). Using inaccurate models in reinforcement learning, Proceedings of the 23rd international conference on Machine learning (ICML ’06). ACM, New York, NY, USA, 1-8. DOI: https://doi.org/10.1145/1143844.1143845.

- [11] Y. Wu, E. Mansimov, S. Liao, R. Grosse and J. Ba (2017). Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation. 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA.

- [12] J. Schulman, S. Levine and P. Moritz, M. I. Jordan and P. Abbeel (2015). Trust Region Policy Optimization. arXiv:1502.05477.

- [13] J. Schulman, F. Wolski, P. Dhariwal, A. Radford and O. Klimov (2017). Proximal Policy Optimization Algorithms. arXiv:1707.06347.

- [14] P. Henderson, R. Islam, P. Bachman, J. Pineau, D. Precup, and D. Meger (2018). Deep reinforcement learning that matters, in Thirty Second AAAI Conference on Artificial Intelligence.

- [15] M. G. D. P. Riashat Islam, Peter Henderson (2017). Reproducibility of benchmarked deep reinforcement learning tasks for continuous control, Reproducibility in Machine Learning Workshop, ICML.

- [16] A. Rajeswaran, K. Lowrey, E. V. Todorov, and S. M. Kakade (2017). Towards generalization and simplicity in continuous control, Advances in Neural Information Processing Systems, pp. 6550-6561.

- [17] R. Wang, K.S. Parunandi, D. Yu, D. Kalathil and S. Chakravorty (2019). Decoupled Data Based Approach for Learning to Control Nonlinear Dynamical Systems, arXiv:1904.08361, under review for IEEE Tr. Aut. Control.

- [18] M. Rafieisakhaei, S. Chakravorty, and P.R. Kumar (2017). A Near-optimal separation principle for nonlinear stochastic systems arising in robotic path planning and control, IEEE Conference on Decision and Control(CDC).

- [19] K. S. Parunandi, S. Chakravorty (2019). T-PFC: A Trajectory-Optimized Perturbation Feedback Control Approach, IEEE Robotics and Automation Letters, vol. 4, pp. 3457-3464.

- [20] D. Jacobsen, and D. Mayne (1970). Differential Dynamic Programming, Elsevier.

- [21] W. Li and E. Todorov (2007). Iterative linearization methods for approximately optimal control and estimation of non-linear stochastic system, International Journal of Control, vol. 80, no. 9, pp. 1439-1453.

- [22] W. Li, and E. Todorov (2004). Iterative Linear Quadratic Regulator Design for Nonlinear Biological Movement Systems, ICINCO.

- [23] E. Todorov, and W. Li (2005). A generalized iterative LQG method for locally-optimal feedback control of constrained nonlinear stochastic systems. Proceedings of the 24th American Control Conference, volume 1 of (ACC 2005), pp. 300 - 306.

- [24] Z. Xie, C. K. Liu, and K. Hauser (2017). Differential dynamic programming with nonlinear constraints, International Conference on Robotics and Automation (ICRA), pp. 695-702.

- [25] Y. Tassa, T. Erez, and E. Todorov (2012). Synthesis and stabilization of complex behaviors through online trajectory optimization, International Conference on Intelligent Robots and Systems, pp. 4906-4913.

- [26] S. Levine, and V. Koltun (2013). Guided policy search, Proceedings of the 30th International Conference on International Conference on Machine Learning - Volume 28 (ICML’13), Sanjoy Dasgupta and David McAllester (Eds.), Vol. 28. JMLR.org III-1-III-9.

- [27] J. C. Spall (1998). An overview of the Simultaneous Perturbation Method for efficient optimization, Johns Hopkins APL Technical Digest, vol. 19, no. 4, pp. 482-492.

- [28] T. Emanuel, E. Tom, and Y. Tassa (2012). Mujoco: A physics engine for model-based control, IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5026-5033.

- [29] G. Brockman, V. Cheung, L. Pettersson, J. Schneider, J. Schulman, J. Tang, and W.Zaremba (2016). OpenAI Gym, preprint :1606.01540.

- [30] T. Yuval, Y. Doron, A. Muldal, T. Erez, Y. Li, D. de Las Casas, D. Budden, A. Abdolmaleki, J. Merel, A. Lefrancq, T. Lillicrap, and M. Riedmiller (2018). Deepmind control suite, :1801.00690.

9 Supplementary

Proof of Lemma 1:

We proceed by induction. The first general instance of the recursion occurs at . It can be shown that:

| (12) |

Noting that and are second and higher order terms, it follows that is .w

Suppose now that where is . Then:

| (13) |

Noting that is and that is by assumption, the result follows.

Proof of Proposition 1:

From (6), we get,

| (14) |

The first equality in the last line of the equations before follows from the fact that , since its the linear part of the state perturbation driven by white noise and by definition . The second equality follows from the fact that is an function. Now,

| (15) |

Since is , is an function. It can be shown that is as well (proof is given in Lemma 2). Finally is an function because is an function. Combining these, we will get the desired result.

Lemma 2: Let , be as defined in (6). Then, is an function.

Proof of Lemma 2:

In the following, we suppress the explicit dependence on for and for notational convenience.

Recall that , and . For notational convenience, let us consider the scalar case, the vector case follows readily at the expense of more elaborate notation. Let us first consider . We have that . Then, it follows that:

| (16) |

where represents the coefficient of the second order term in the expansion of . A similar observation holds for in that:

| (17) |

where is the coefficient of the second order term in the expansion of . Note that and . Therefore, it follows that we may write:

| (18) |

for suitably defined coefficients . Therefore, it follows that

| (19) |

for suitably defined . Therefore:

| (20) |

Taking expectations on both sides:

| (21) |

Break , assuming . Then, it follows that:

| (22) |

where the first and last terms in the first equality drop out due to the independence of the increment from , and the fact that and . Since is the state of the linear system , it may again be shown that:

| (23) |

where represents the state transitions operator between times and , and follows from the closed loop dynamics. Now, due to the independence of the noise terms , it follows that regardless of .

An analogous argument as above can be repeated for the case when . Therefore, it follows that .

DDPG Algorithm: Deep Deterministic Policy Gradient (DDPG) is a policy-gradient based off-policy reinforcement learning algorithm that operates in continuous state and action spaces. It relies on two function approximation networks one each for the actor and the critic. The critic network estimates the value given the state and the action taken, while the actor network engenders a policy given the current state. Neural networks are employed to represent the networks.

The off-policy characteristic of the algorithm employs a separate behavioural policy by introducing additive noise to the policy output obtained from the actor network. The critic network minimizes loss based on the temporal-difference (TD) error and the actor network uses the deterministic policy gradient theorem to update its policy gradient as shown below:

Critic update by minimizing the loss:

Actor policy gradient update:

The actor and the critic networks consists of two hidden layers with the first layer containing 400 (’relu’ activated) units followed by the second layer containing 300 (’relu’ activated) units. The output layer of the actor network has the number of (’tanh’ activated) units equal to that of the number of actions in the action space.

Target networks one each for the actor and the critic are employed for a gradual update of network parameters, thereby reducing the oscillations and a better training stability. The target networks are updated at . Experience replay is another technique that improves the stability of training by training the network with a batch of randomized data samples from its experience. We have used a batch size of 32 for the inverted pendulum and the cartpole examples, whereas it is 64 for the rest. Finally, the networks are compiled using Adams’ optimizer with a learning rate of 0.001.

To account for state-space exploration, the behavioural policy consists of an off-policy term arising from a random process. We obtain discrete samples from Ornstein-Uhlenbeck (OU) process to generate noise as followed in the original DDPG method. The OU process has mean-reverting property and produces temporally correlated noise samples as follows:

where indicates how fast the process reverts to mean, is the equilibrium or the mean value and corresponds to the degree of volatility of the process. is set to 0.15, to 0 and is annealed from 0.35 to 0.05 over the training process.