Cyclops: Open Platform for Scale Truck Platooning

Abstract

Cyclops, introduced in this paper, is an open research platform for everyone who wants to validate novel ideas and approaches in self-driving heavy-duty vehicle platooning. The platform consists of multiple 1/14 scale semi-trailer trucks equipped with associated computing, communication and control modules that enable self-driving on our scale proving ground. The perception system for each vehicle is composed of a lidar-based object tracking system and a lane detection/control system. The former maintains the gap to the leading vehicle, and the latter keeps the vehicle within the lane by steering control. The lane detection system is optimized for truck platooning, where the field of view of the front-facing camera is severely limited due to the small gap to the leading vehicle. This platform is particularly amenable to validating mitigation strategies for safety-critical situations. Indeed, the simplex architecture is adopted in the computing modules, enabling various fail-safe operations. In particular, we illustrate a scenario where the camera sensor fails in the perception system, but the vehicle can still operate at reduced capacity until it comes to a graceful stop. Details of Cyclops, including 3D CAD designs and algorithm source code, are released for those who want to build similar testbeds.

I Introduction

Vehicle platooning is an automation technology that coordinates multiple vehicles so that short longitudinal inter-vehicle distances are maintained while the vehicles travel at high speed [1]. The leading vehicle (LV) is usually driven by a human driver, while the following vehicles (FVs) drive autonomously based on perception sensors and wireless communications between vehicles [2]. Its potential benefits include (i) reduced fuel consumption and emissions, (ii) better road utilization, and (iii) enhanced safety [3] [4].

There have been many national-level projects for vehicle platooning, including SARTRE [5] [6] and ENSEMBLE [7] in the EU, and TROOP [8] in Korea. We are partly engaged in one such project, which develops safety scenarios for heavy-duty truck platooning and in which the developed system must be validated against potentially fatal scenarios on public highways. The scenarios include (i) emergency braking, (ii) rogue vehicles cutting in, and (iii) loss of wireless connections. Because of the heavy weight of the semi-trailer trucks involved, we found it far too dangerous to put full-size trucks directly into such scenarios in the early validation stages. Although Hardware-in-the-Loop (HiL) simulators are employed, they are mostly used for validating control algorithms, not for validating countermeasures for safety-critical scenarios.

With this motivation, we developed a scale truck platooning testbed, shown in Fig. 1, which is named Cyclops because the single front-facing camera on the tractor unit is reminiscent of the one-eyed giant in Greek mythology. Our testbed includes three autonomous semi-trailer trucks, each of which is equipped with perception sensors, computing platforms, and wireless communication devices. On these truck platforms, we developed a platooning system whose perception and control systems closely resemble those of a full-size platooning system. It retains most of the real-world challenges in platooning but significantly reduces the cost of implementing safety scenarios. Thus, it allows us to test and validate safety countermeasures at lower cost in the early development stage, even before the full-size trucks are ready. The platooning system operates in our scale truck proving ground, shown in Fig. 2, where various platooning scenarios can be rapidly developed and validated.

The perception system employs one camera sensor and one single-plane lidar sensor. The camera detects the ego lane for lateral control, while the lidar measures the longitudinal distance and the lateral location of the preceding trailer. Regarding the perception system, we were drawn to a challenge that is unique to truck platooning. Since FVs closely follow their preceding trailers, the rear end of the trailer significantly occludes the camera’s view, as was also reported in the case of full-size trucks [9]. The occlusion adversely affects the lane detection performance because the trailer’s rear end creates noise that is hard to distinguish from lane markings. To reduce this noise, our perception system dynamically adjusts the region of interest (ROI) in the camera image based on the distance to the preceding trailer, keeping the ROI free from the noise.

For the platooning control, the following three algorithms are developed: (i) velocity control, (ii) gap control, and (iii) lane keeping control. The first makes the vehicle move at the reference velocity. The second keeps the distance to the preceding truck at the desired gap reference. Gap control uses the distance to the preceding trailer measured by the lidar sensor and the reference velocity of the preceding truck received through wireless communications. Finally, the third uses the camera sensor to determine the ego truck’s lateral location and adjusts the steering angle accordingly.

We conducted a case study of a camera sensor failure on the developed testbed. Since our platooning system relies on the camera for lateral control, a camera failure leads to a catastrophic event. Considering this hazardous safety scenario, we developed a mitigation method that temporarily uses the lidar sensor so that the FV follows the center point of the preceding trailer, instead of following the lane, until a graceful stop. With this case study, we argue that our testbed is useful in validating safety algorithms and platooning scenarios.

We share in this paper our experience gained while developing the scale truck platooning testbed and release all the implementation details, including the algorithm source code (all the design materials and the source code are available at https://github.com/hyeongyu-lee/scale_truck_control). We hope that our testbed can be reproduced by other research groups interested in truck platooning systems.

II Truck and System Design

II-A System Design

Fig. 3 shows the overall architecture of our testbed, where three trucks (LV, FV1, and FV2) are connected to each other and to a remote control center through a wireless network. The control center communicates directly with LV, providing the target velocity and gap distance. The target velocity is used as the reference velocity of LV, while the target gap distance is communicated to FV1 and then relayed to FV2 for velocity and gap control. Note that every truck in Cyclops is autonomous, which is not the case in full-size truck platooning, where the LV is usually human-driven.

In FV1 and FV2, the perception system receives the front-facing camera images and the lidar data. The camera image is used for detecting the lane curvature, while the lidar points are used to locate the preceding trailer’s rear end. The following information is then delivered to the control system: (i) the lane curvature, (ii) the gap distance, (iii) the current velocity from the encoder sensor, (iv) the target gap distance given by the command center, and (v) the preceding truck’s reference velocity. The lane keeping controller decides the steering angle, which is applied to the steering motor through the steering motor controller. The gap controller generates the reference velocity needed to meet the target gap, which is then passed to the velocity controller that drives the driving motor through the driving motor controller. The reference velocity generated by the gap controller is also communicated to the following truck through V2V communication for its gap control.

LV and FVs share the same hardware and software architecture with a slight difference. Since LV does not need the gap controller, it is replaced with the emergency braking controller between the obstacle detection and the velocity controller. Since our testbed does not allow any other surrounding vehicles except the trucks at this moment, LV automatically enforces an emergency stop when it detects an obstacle in the driving direction, which in turn triggers emergency stops in FV1 and FV2. Additionally, the command center can also enforce an emergency stop by a remote command.

Table I: Hardware components

| Component | Item |
|---|---|
| Tractor | 1/14 MAN 26.540 6x4 XLX |
| Trailer | 40-Foot Container 1/14 Semi-trailer |
| Camera | ELP-USBFHD04H-BL180 |
| Lidar | RPLidar A3 |
| Encoder | Magnetic Encoder Pair Kit |
| High-level controller | Nvidia Jetson AGX Xavier |
| Low-level controller | OpenCR 1.0 |
| Driving motor | TBLM-02S 21.5T |
| Steering motor | SANWA SRM-102 |
| Battery | BiXPower 96Wh BP90-CS10 |
| Antenna | ipTIME N007 |

II-B Truck Design

Fig. 4 shows one of our 1/14 scale semi-trailer trucks. As the truck body, we use an RC truck that is popular among RC enthusiasts. Three such trucks were developed, one designated as LV and the others as FVs. The figure highlights nine essential components such as sensors and computing platforms. The hardware components are listed in Table I. More specifically, each truck is equipped with a front-facing camera with a 180-degree field of view (FoV) on top of the tractor. A single-plane lidar sensor with a 25 m detection range is hidden inside the truck cabin. An encoder that counts wheel rotations is installed at the tractor’s front wheel to measure the vehicle velocity. We use two heterogeneous computing platforms for the high-level and low-level control, respectively. For the high-level controller, we use an Nvidia Jetson AGX Xavier located inside the trailer, while the low-level controller is an OpenCR embedded computer attached at the back of the tractor. For the driving motor, we use an 1800 KV BLDC motor. For the steering motor, we use a servo motor with a torque of 3.3 kg·cm. All the electronic components are powered by a 96 Wh battery in the trailer. We use the Wi-Fi network interface of the Nvidia Jetson AGX Xavier for wireless communication, which is reinforced by an additional high-performance antenna installed on the trailer.

III Perception and Control

This section presents our perception and control methods for truck platooning. Our platooning system has two modes of operation: the LV mode and the FV mode. We implicitly assume the FV mode in this section and state explicitly when the LV mode is being described.

III-A Lane Detection

The objective of lane detection is to find the curvature of the desired path from the front-facing camera. For that, a camera image goes through the following steps: (i) pre-processing, which crops the trapezoidal ROI and removes noise with a Gaussian blur; (ii) conversion into a binarized bird’s eye view image; (iii) a sliding window-based search to detect the left and right lanes; (iv) fitting of the desired path’s curvature by combining the left and right lane curvatures. The final curvature is expressed as a second-order polynomial.
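Step (iii) can be illustrated with a small sketch. It is a simplified, pure-Python version of a sliding-window search on a binarized bird's eye view image, not the released implementation: the function names, the window count, and the window width are assumptions made for illustration, and the second-order polynomial fit of step (iv) is left out.

```python
def lane_base(binary, x_lo, x_hi):
    """Column with the most lane pixels in the bottom half, searched in [x_lo, x_hi)."""
    height = len(binary)
    col_sums = [sum(binary[r][x] for r in range(height // 2, height))
                for x in range(x_lo, x_hi)]
    return x_lo + col_sums.index(max(col_sums))


def sliding_window_centers(binary, x_start, n_windows=4, half_width=2, min_pixels=1):
    """Trace one lane line from the bottom of the image to the top.

    binary is a list of rows of 0/1 values with row 0 at the top.
    Returns one (row_center, x_center) point per window, bottom first.
    """
    height, width = len(binary), len(binary[0])
    window_h = height // n_windows
    x_current = x_start
    centers = []
    for w in range(n_windows):
        row_hi = height - w * window_h      # exclusive lower edge of the window
        row_lo = row_hi - window_h
        x_lo = max(0, x_current - half_width)
        x_hi = min(width, x_current + half_width + 1)
        xs = [x for r in range(row_lo, row_hi)
              for x in range(x_lo, x_hi) if binary[r][x]]
        if len(xs) >= min_pixels:           # re-center on the pixels that were found
            x_current = round(sum(xs) / len(xs))
        centers.append(((row_lo + row_hi - 1) / 2.0, x_current))
    return centers


def center_path(left, right):
    """Midpoints between matching left and right window centers."""
    return [(row, (xl + xr) / 2.0) for (row, xl), (_, xr) in zip(left, right)]
```

The midpoints returned by `center_path` are the kind of points to which a second-order polynomial would then be fitted to obtain the desired path.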

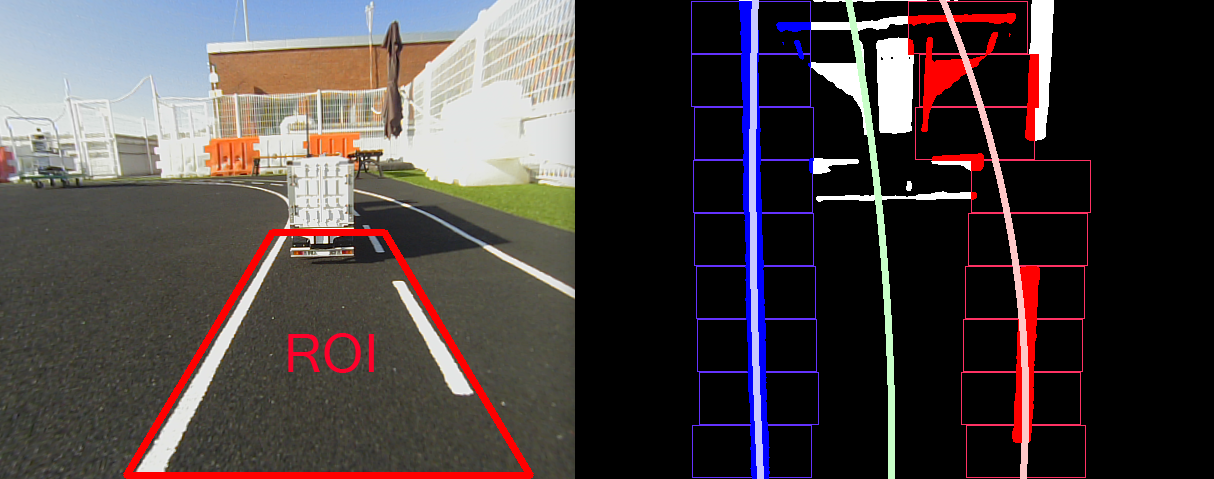

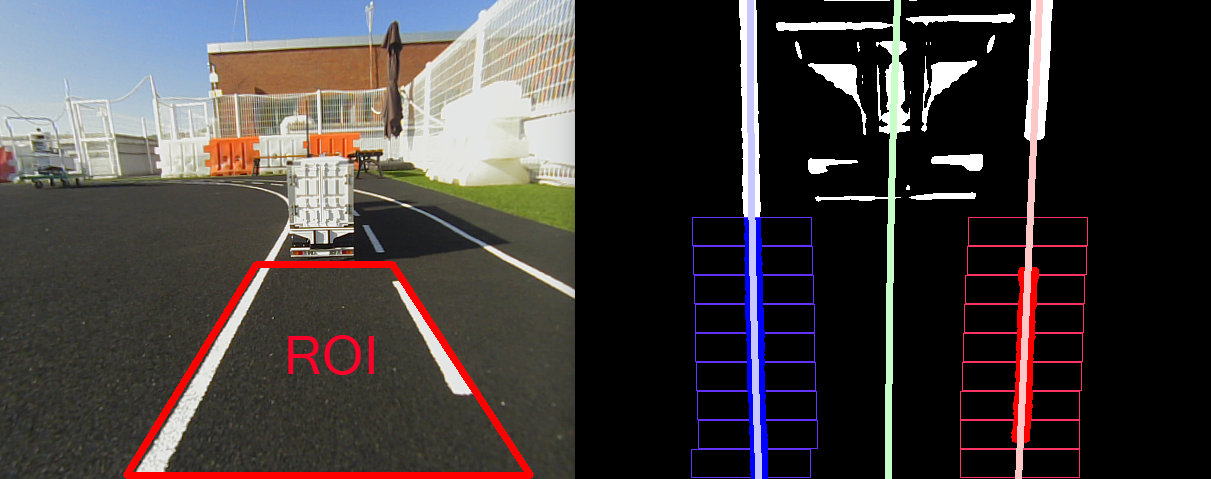

The above lane detection method has been widely used in many studies [10, 11, 12] because it is robust and lightweight enough to be implemented in small embedded systems. Unfortunately, the method does not perform well in the platooning scenario due to the minimal gap to the preceding truck. Fig. 5(a) shows a forward view image and its lane detection result with the static ROI method, where the trapezoidal ROI is fixed. The binary image indicates that even a slight inclusion of the trailer’s rear end in the ROI badly affects the lane detection result. The noise caused by the preceding trailer is a known issue that has been reported for platooning with full-size trucks [9]. In our testbed, the effect is even more pronounced because the trailer’s color happens to be white.

To eliminate the noise caused by the trailer at a close distance, we cut off the trailer portion in the bird’s eye view image. For that, we use the gap information from the lidar sensor. By actual measurements, we found that the ratio between the number of vertical pixels and the longitudinal distance is 490 pixels per 1.0 m. With this mapping, Fig. 5(b) shows the adapted ROI, which precisely removes the noise and provides a significantly improved lane detection result. This adaptation logic is added to our lane detection module so that it can dynamically determine the optimal ROI.
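The gap-to-pixel mapping can be sketched as follows. Only the 490 pixels per metre ratio comes from the measurement above; the function names, the safety margin, and the assumption that the bottom image row corresponds to zero distance are illustrative.

```python
PIXELS_PER_METER = 490  # measured ratio reported in the text


def roi_top_row(gap_m, image_height_px, margin_px=10):
    """First bird's-eye-view row that is kept in the ROI.

    Assumes the bottom row is the road right in front of the truck and
    that rows further up are further away. margin_px is a hypothetical
    safety margin below the trailer's rear end.
    """
    trailer_row = image_height_px - round(gap_m * PIXELS_PER_METER)
    if trailer_row <= 0:
        return 0  # trailer is beyond the top of the image: keep every row
    return min(image_height_px, trailer_row + margin_px)


def crop_rows(birdseye, gap_m, margin_px=10):
    """Drop the rows at and above the preceding trailer's rear end."""
    top = roi_top_row(gap_m, len(birdseye), margin_px)
    return birdseye[top:]
```

For a 480-row image and a 0.6 m gap, the trailer starts 294 rows above the bottom edge, so only the lower part of the image is searched for lanes; at a 1.2 m gap the trailer falls outside the image and the full image is kept.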

III-B Obstacle Detection

Obstacle detection provides the gap distance to the preceding truck. We use a single-plane lidar sensor that provides a set of points on the nearest objects over the full 360 degrees. To detect the preceding truck, we use only the front-facing 60 degrees. For the obstacle detection, we employ the well-known obstacle_detector ROS package [13], which detects and tracks nearby objects by clustering the 2D lidar points. The tracking result is obtained as a circle whose center and diameter correspond to the middle point and the horizontal length of the preceding trailer’s rear end, respectively.
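The front-sector restriction can be sketched as below. This is a crude stand-in that reduces the filtered points to a gap, a lateral center, and a width; it does not reproduce the clustering and tracking of the obstacle_detector package. The scan format (angle in degrees with 0 pointing straight ahead, range in metres) and all function names are assumptions.

```python
import math


def front_sector(scan, fov_deg=60.0):
    """Keep (angle_deg, range_m) returns within +/- fov_deg / 2 of straight ahead."""
    half = fov_deg / 2.0
    kept = []
    for angle_deg, range_m in scan:
        wrapped = (angle_deg + 180.0) % 360.0 - 180.0  # map to [-180, 180)
        if abs(wrapped) <= half and range_m > 0.0:
            kept.append((wrapped, range_m))
    return kept


def rear_end_estimate(scan, fov_deg=60.0):
    """Return (gap, lateral_center, width) of the points ahead, or None."""
    points = front_sector(scan, fov_deg)
    if not points:
        return None
    xs = [r * math.cos(math.radians(a)) for a, r in points]  # forward distance
    ys = [r * math.sin(math.radians(a)) for a, r in points]  # lateral offset
    gap = min(xs)                                # nearest forward distance
    lateral_center = (min(ys) + max(ys)) / 2.0   # middle of the lateral extent
    width = max(ys) - min(ys)                    # horizontal length
    return gap, lateral_center, width
```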

III-C Velocity Control

In this section, we introduce the velocity control, which consists of the feed-forward plus anti-windup proportional-integral (FF+AWPI) control, the velocity saturation function, and the inverse of the nonlinear function that relates the motor command to the truck velocity. A subscript on each variable and function identifies the truck it belongs to: LV, FV1, or FV2. The FF+AWPI control calculates the velocity control input such that

[Equation (1): FF+AWPI velocity control law]

where the quantities involved are the sampling time, the velocity control input saturated by the saturation function, the positive constants that serve as control parameters, and the saturated velocity reference. The velocity tracking error is given as

[Equation (2): velocity tracking error]

where the remaining term is the measured velocity of the truck.

The saturation function represents the limit on the velocity that a truck can generate. It is given as

[Equation (3): velocity saturation function]

where the bound is the maximum velocity that a truck can generate.
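As an illustration of how a feed-forward term, a PI term, anti-windup, and the saturation fit together, the following sketch assumes a common back-calculation form of anti-windup. It should not be read as the exact control law (1): the gain names `kp`, `ki`, and `ka`, the sign of the tracking error, and the lower saturation bound of zero are all assumptions.

```python
def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


class VelocityController:
    """Feed-forward + PI control with back-calculation anti-windup (assumed form)."""

    def __init__(self, kp, ki, ka, ts, v_max):
        self.kp, self.ki, self.ka = kp, ki, ka  # illustrative gain names
        self.ts = ts           # sampling time [s]
        self.v_max = v_max     # maximum velocity the truck can generate [m/s]
        self.integral = 0.0    # integrator state

    def step(self, v_ref, v_meas):
        """Return the saturated velocity control input for one sample."""
        error = v_ref - v_meas                       # tracking error (assumed sign)
        u = v_ref + self.kp * error + self.integral  # feed-forward + P + I
        u_sat = clamp(u, 0.0, self.v_max)            # lower bound 0 is an assumption
        # Back-calculation: bleed the integrator while the output is saturated.
        self.integral += self.ts * (self.ki * error - self.ka * (u - u_sat))
        return u_sat
```

With a persistent error that keeps the output saturated, the integrator settles at a finite value instead of growing without bound, which is the purpose of the anti-windup term.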

The motor command and the velocity of the truck have a nonlinear relation, so the inverse of the nonlinear function is used to make the truck behave as a linear system from the controller’s point of view. The nonlinear function is obtained by fitting experimental data for the motor command and the truck velocity to a quadratic function, and is given by

[Equation (4): quadratic fit between the motor command and the truck velocity]

where the coefficients are design parameters. From (4), the inverse function is derived as

[Equation (5): inverse of the nonlinear function]

Finally, the output of the inverse function is the motor command, which is applied to the driving motor.
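The inversion of a quadratic fit can be sketched as follows, assuming the fit expresses velocity as a function of the motor command with coefficients `a`, `b`, and `c`. These names, and the choice of the root on the branch where velocity increases with the command, are assumptions made for illustration; the coefficient values used in the usage note are hypothetical and are not those of Table II.

```python
import math


def velocity_from_command(u, a, b, c):
    """Assumed quadratic fit: velocity produced by motor command u."""
    return a * u * u + b * u + c


def command_from_velocity(v, a, b, c):
    """Invert the quadratic on the branch where velocity increases with u."""
    if a == 0.0:
        if b == 0.0:
            raise ValueError("fit is constant and cannot be inverted")
        return (v - c) / b                      # degenerate linear case
    disc = b * b - 4.0 * a * (c - v)
    if disc < 0.0:
        raise ValueError("requested velocity is outside the range of the fit")
    return (-b + math.sqrt(disc)) / (2.0 * a)   # root on the increasing branch
```

For example, with hypothetical coefficients `a=0.5`, `b=1.0`, `c=-0.2`, a command of 1.0 maps to a velocity of 1.3, and `command_from_velocity(1.3, 0.5, 1.0, -0.2)` recovers the command 1.0.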

III-D Gap Control

The gap control is used only by the FVs. Its goal is to make the gap to the preceding truck closely follow the gap reference. The gap control is composed of the feed-forward plus proportional-derivative (FF+PD) control and the velocity reference saturation function. The FF+PD control is given in the following form:

[Equation (6): FF+PD gap control law]

where the feed-forward term is the saturated velocity reference of the preceding truck, received via wireless communication. The positive constants are control parameters, and the gap tracking error is obtained as

[Equation (7): gap tracking error]

from the gap reference and the measured gap to the preceding truck.

The velocity reference saturation function limits the velocity reference to the velocity limit of the lane, and is represented as

[Equation (8): velocity reference saturation function]

where the bound is the velocity limit of the lane. Finally, the saturated velocity reference serves as the velocity reference for the velocity control.
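A minimal sketch of an FF+PD gap controller is given below. It assumes that the error is the measured gap minus the gap reference, so that a gap that is too large speeds the truck up, and that the derivative is a backward difference. The sign convention, the gain names, and the lower bound of zero on the velocity reference are assumptions rather than the exact forms of (6) to (8).

```python
class GapController:
    """Feed-forward + PD gap control with a saturated velocity reference (assumed form)."""

    def __init__(self, kp, kd, ts, v_limit):
        self.kp, self.kd = kp, kd   # illustrative gain names
        self.ts = ts                # sampling time [s]
        self.v_limit = v_limit      # velocity limit of the lane [m/s]
        self.prev_error = None      # no derivative on the first sample

    def step(self, v_ref_preceding, gap_ref, gap_meas):
        """Return the saturated velocity reference for the velocity controller."""
        error = gap_meas - gap_ref  # positive when the gap is too large (assumed sign)
        if self.prev_error is None:
            d_error = 0.0
        else:
            d_error = (error - self.prev_error) / self.ts
        self.prev_error = error
        v_ref = v_ref_preceding + self.kp * error + self.kd * d_error
        return min(max(v_ref, 0.0), self.v_limit)  # lower bound 0 is an assumption
```

When the measured gap equals the reference, the output is simply the preceding truck's velocity reference, which is the role of the feed-forward term.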

III-E Lane Keeping Control

In this section, we introduce the lane keeping control, which maintains the truck within the lane. The lane keeping control is given by

[Equation (9): lane keeping control law]

where the output is the steering angle, the inputs are the estimated lateral error and the preview lateral error, and the control parameters are varied according to the truck velocity. Because a given steering angle produces a larger lateral change at a higher velocity, the control parameters must be smaller at higher velocities.

In (9), the preview lateral error is obtained with the camera sensor. The lateral error, however, cannot be obtained directly with the camera sensor due to its limited sight, so we estimate it such that

[Equation (10): lateral error estimate]

where the quantities involved are the preview distance and the angle between the direction in which the truck moves and the preview point on the desired path, which is obtained with the camera sensor.
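The velocity-dependent gains can be illustrated with a simple gain-scheduling sketch. It assumes that the steering angle is a weighted sum of the two lateral errors and that each gain is looked up from a table by linear interpolation. The table format, the function names, and the steering limit are hypothetical, and the lateral error estimate (10) is not reproduced here.

```python
def interpolate_gain(velocity, table):
    """Piecewise-linear lookup in a list of (velocity, gain) pairs sorted by velocity."""
    if velocity <= table[0][0]:
        return table[0][1]
    for (v0, g0), (v1, g1) in zip(table, table[1:]):
        if velocity <= v1:
            t = (velocity - v0) / (v1 - v0)
            return g0 + t * (g1 - g0)
    return table[-1][1]  # hold the last gain above the table range


def steering_angle(e_lat, e_preview, velocity, k1_table, k2_table, max_angle):
    """Weighted sum of the two lateral errors with velocity-scheduled gains."""
    k1 = interpolate_gain(velocity, k1_table)
    k2 = interpolate_gain(velocity, k2_table)
    delta = k1 * e_lat + k2 * e_preview            # assumed sign convention
    return max(-max_angle, min(max_angle, delta))  # hypothetical steering limit
```

Tables whose gains decrease with velocity reproduce the behaviour described above, where a faster truck receives a smaller steering correction for the same error.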

IV Experiments

This section first evaluates the platooning performance of our scale truck platforms with two scenarios. The first scenario speeds up LV while maintaining a constant gap between trucks, and the second reduces the gap between trucks while maintaining a constant platoon velocity. We then evaluate the effectiveness of our dynamic ROI method by measuring how it affects lateral control stability. However, as mentioned in the previous section, the front-facing camera sensor cannot effectively measure the lateral error, which is essential for evaluating the lane keeping control. As an alternative, we install an extra downward-facing camera on the side of each trailer, which is dedicated to measuring the lateral error.

For all the trucks, the velocity control parameters in (1) are chosen identically. The parameters of the nonlinear function in (4) are selected as listed in Table II. The gap control parameters in (6) are also chosen identically for FV1 and FV2. The lane keeping control parameters of all the trucks are designed as shown in Fig. 6. The maximum velocity in (3) is set to 2 m/s, and the lane velocity limit in (8) is set to 1.4 m/s. In the following graphs, the red areas indicate that LV is in a curved road segment, whereas the white areas indicate straight road segments.

[Table II: parameters of the nonlinear function in (4)]

IV-A Performance of Platooning with Three Trucks

Fig. 7 shows the evaluation results for the first platooning scenario (Scenario 1). Fig. 7(a) shows how LV follows the given reference velocity as it ramps up from 0.6 m/s to 1.2 m/s. Although the results show slight overshoots upon velocity changes, they do not last long, resulting in satisfactory longitudinal control performance. Fig. 7(b) shows the measured gaps between trucks while the reference gap is held constant at 1.2 m. The results show that all the trucks maintain the desired gap well despite the acceleration of the platoon. The lateral errors, meanwhile, increase once the velocity rises above about 1 m/s, but all the trucks remain safely within the lane.

In Fig. 8, the second scenario (Scenario 2) is validated, where the reference gap ramps down from 1.2 m to 0.6 m while a constant velocity of 1.0 m/s is maintained. Fig. 8(a) shows that the LV velocity is well maintained at 1.0 m/s. Fig. 8(b) shows that the measured gaps closely track the changing reference gap. However, in Fig. 8(c), we can see that the lateral errors become significant when the reference gap is at its smallest (i.e., 0.6 m). As mentioned before, this lateral safety problem occurs when the gap is so short that the camera’s view is significantly occluded by the preceding trailer.

IV-B Effectiveness of Dynamic ROI

We apply the dynamic ROI method to the lane detection module to solve the lateral stability problem. Fig. 9 compares FV2’s lateral errors with static ROI and dynamic ROI while maintaining the 0.6 m gap between FV1 and FV2. In the figure, the lateral error with dynamic ROI is visibly smaller than that with static ROI. Moreover, with the static ROI method, FV2 leaves the lane at the 51.4-second point. In contrast, the truck remains safely within the lane with dynamic ROI. Thus, we claim that dynamic ROI is an effective method to enhance lateral stability and safety.

V Case Study: Camera Sensor Failure

To show how our testbed can help develop safety scenarios, we conducted a case study of developing a mitigation method for a camera sensor failure scenario on our testbed. Since the lateral control depends on the camera sensor, its failure can be catastrophic. In such a fatal scenario, one possible method is to use the remaining lidar sensor to follow the preceding trailer’s center, even without knowing the lane curvature. By this method, the platoon can buy enough time until it slowly stops while maintaining minimal safety.

For the experiment, we implemented a fault injection scenario that simulates a cut wire from the camera in FV1. In such a case, the last image is repeatedly fed into the perception system, so the failure can be detected by comparing the current image with previous images, assuming that the truck’s velocity is not zero. The truck then enters a fail-safe mode that no longer uses the lane detection result but instead follows the center of the preceding truck. LV is also notified of the failure so that the entire platoon enters a fail-safe mode, slowing down until a graceful stop.
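The detection idea, identical images while the truck is moving, can be sketched as follows. The class name, the number of repeated frames required, and the minimum speed threshold are hypothetical, and frames are compared as raw bytes for simplicity.

```python
class FrozenFrameDetector:
    """Flag a camera failure when identical frames repeat while the truck moves."""

    def __init__(self, max_repeats=5, min_speed=0.05):
        self.max_repeats = max_repeats  # hypothetical number of repeats tolerated
        self.min_speed = min_speed      # below this speed [m/s] the truck counts as stopped
        self.last_frame = None
        self.repeats = 0

    def update(self, frame, speed):
        """frame is the raw image as bytes; returns True once a failure is declared."""
        moving = abs(speed) > self.min_speed
        if moving and self.last_frame is not None and frame == self.last_frame:
            self.repeats += 1
        else:
            self.repeats = 0  # a new image, or a stopped truck, resets the count
        self.last_frame = frame
        return self.repeats >= self.max_repeats
```

Requiring a non-zero velocity avoids false alarms when the truck is stationary and the scene legitimately does not change.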

Fig. 10 compares FV1’s lateral error for two scenarios. One is without any failure mitigation method, and the other is with our failure mitigation method. For the measurement, the platoon drives at a velocity of 0.8 m/s with a gap of 0.8 m. After LV reaches a straight road segment, a fault is injected into FV1’s camera. The figure shows that the truck quickly goes out of the lane without the mitigation method, whereas our mitigation method makes it keep its lane even though the lateral oscillation is slightly amplified.

VI Related Work

Truck platooning technology has been developed through national flagship projects [1, 3, 5, 6, 14, 15, 16]. Most studies thus far have dealt with control issues [17, 18, 19, 20], fuel economy [21, 22, 23], and safety issues [24, 25, 26]. To the best of our knowledge, our study is one of the first attempts to implement a scale truck platooning testbed.

VII Conclusion

We presented Cyclops, a scale truck platooning testbed intended as an open research platform for everyone interested in truck platooning technology. Our platooning system uses wireless communications as well as camera and lidar sensors to maintain a constant gap at a given platooning velocity. We implemented perception and control systems optimized for the platooning system’s unique challenges caused by the minimal gap between trucks. With our scale trucks, we successfully demonstrated control stability up to 1.2 m/s, corresponding to about 60 km/h in actual trucks. We can also safely reduce the gap to 0.6 m, which corresponds to 8.4 m in actual trucks, at a velocity of 1.0 m/s. Furthermore, we conducted a case study that validates a camera failure mitigation method that is difficult to realize with heavy-duty trucks due to the high risks involved. We hope our testbed can benefit many researchers through the release of all the details of our work on GitHub.
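The full-size equivalents quoted above follow from multiplying by the scale factor of 14, under the assumption that both velocity and distance scale linearly with length. The symbols below are introduced only for this illustration.

```latex
v_{\text{full}} = 14 \times 1.2\ \text{m/s} = 16.8\ \text{m/s} \approx 60.5\ \text{km/h},
\qquad
d_{\text{full}} = 14 \times 0.6\ \text{m} = 8.4\ \text{m}
```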

In the future, we plan to extend our work by adding more complex safety scenarios such as network failures and computer failures.

Acknowledgment

This work was supported by Institute of Information and communications Technology Planning and Evaluation (IITP) grant funded by the Korea government (MSIT) (No. 2014-3-00065, Resilient Cyber-Physical Systems Research). J.-C. Kim is the corresponding author of this paper.

References

- [1] C. Bergenhem, S. Shladover, E. Coelingh, C. Englund, and S. Tsugawa, “Overview of platooning systems,” in Proceedings of the 19th ITS World Congress, 2012, pp. 1–8.

- [2] C. Bergenhem, E. Hedin, and D. Skarin, “Vehicle-to-vehicle communication for a platooning system,” Procedia-Social and Behavioral Sciences, vol. 48, pp. 1222–1233, 2012.

- [3] S. Tsugawa, S. Jeschke, and S. E. Shladover, “A review of truck platooning projects for energy savings,” IEEE Transactions on Intelligent Vehicles, vol. 1, no. 1, pp. 68–77, Mar. 2016.

- [4] J. Lioris, R. Pedarsani, F. Y. Tascikaraoglu, and P. Varaiya, “Doubling throughput in urban roads by platooning,” IFAC-PapersOnLine, vol. 49, no. 3, pp. 49–54, 2016.

- [5] T. Robinson, E. Chan, and E. Coelingh, “Operating platoons on public motorways: An introduction to the sartre platooning programme,” in Proceedings of the 17th world congress on intelligent transport systems, 2010, pp. 1–11.

- [6] C. Bergenhem, Q. Huang, A. Benmimoun, and T. Robinson, “Challenges of platooning on public motorways,” in Proceedings of the 17th world congress on intelligent transport systems, 2010, pp. 1–12.

- [7] L. Konstantinopoulou, A. Coda, and F. Schmidt, “Specifications for Multi-Brand Truck Platooning,” in Proceedings of the 8th International Conference on Weigh-In-Motion (ICWIM8), 2019, pp. 8–17.

- [8] Y. Lee, T. Ahn, C. Lee, S. Kim, and K. Park, “A novel path planning algorithm for truck platooning using V2V communication,” Sensors, vol. 20, no. 24, p. 7022, Dec. 2020.

- [9] T.-W. Kim, W.-S. Jang, J. Jang, and J.-C. Kim, “Camera and radar-based perception system for truck platooning,” in Proceedings of the 20th International Conference on Control, Automation and Systems (ICCAS), 2020, pp. 950–955.

- [10] Y. Fan, W. Zhang, X. Li, L. Zhang, and Z. Cheng, “A robust lane boundaries detection algorithm based on gradient distribution features,” in Proceedings of the 8th International Conference on Fuzzy Systems and Knowledge Discovery (FSKD), 2011, pp. 1714–1718.

- [11] X. Zhang, M. Chen, and X. Zhan, “A combined approach to single-camera-based lane detection in driverless navigation,” in Proceedings of the IEEE/ION Position, Location and Navigation Symposium (PLANS), 2018, pp. 1042–1046.

- [12] M. R. Haque, M. M. Islam, K. S. Alam, H. Iqbal, and M. E. Shaik, “A computer vision based lane detection approach,” International Journal of Image, Graphics and Signal Processing, vol. 11, no. 3, pp. 27–34, Mar. 2019.

- [13] M. Przybyla, “obstacle_detector,” https://github.com/tysik/obstacle_detector, 2018.

- [14] S. Tsugawa, S. Kato, and K. Aoki, “An automated truck platoon for energy saving,” in Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2011, pp. 4109–4114.

- [15] J. B. Michael, D. N. Godbole, J. Lygeros, and R. Sengupta, “Capacity analysis of traffic flow over a single-lane automated highway system,” Journal of Intelligent Transportation System, vol. 4, no. 1-2, pp. 49–80, 1998.

- [16] J. Carbaugh, D. N. Godbole, and R. Sengupta, “Safety and capacity analysis of automated and manual highway systems,” Transportation Research Part C: Emerging Technologies, vol. 6, no. 1-2, pp. 69–99, 1998.

- [17] R. Rajamani, H.-S. Tan, B. K. Law, and W.-B. Zhang, “Demonstration of integrated longitudinal and lateral control for the operation of automated vehicles in platoons,” IEEE Transactions on Control Systems Technology, vol. 8, no. 4, pp. 695–708, July 2000.

- [18] J. Ploeg, D. P. Shukla, N. van de Wouw, and H. Nijmeijer, “Controller synthesis for string stability of vehicle platoons,” IEEE Transactions on Intelligent Transportation Systems, vol. 15, no. 2, pp. 854–865, Apr. 2014.

- [19] A. Alam, J. Mårtensson, and K. H. Johansson, “Experimental evaluation of decentralized cooperative cruise control for heavy-duty vehicle platooning,” Control Engineering Practice, vol. 38, pp. 11–25, May 2015.

- [20] B. Besselink and K. H. Johansson, “String stability and a delay-based spacing policy for vehicle platoons subject to disturbances,” IEEE Transactions on Automatic Control, vol. 62, no. 9, pp. 4376–4391, Sep. 2017.

- [21] A. Alam, B. Besselink, V. Turri, J. Martensson, and K. H. Johansson, “Heavy-duty vehicle platooning for sustainable freight transportation: A cooperative method to enhance safety and efficiency,” IEEE Control Systems Magazine, vol. 35, no. 6, pp. 34–56, Dec. 2015.

- [22] V. Turri, B. Besselink, and K. H. Johansson, “Cooperative look-ahead control for fuel-efficient and safe heavy-duty vehicle platooning,” IEEE Transactions on Control Systems Technology, vol. 25, no. 1, pp. 12–28, Jan. 2017.

- [23] A. Al Alam, A. Gattami, and K. H. Johansson, “An experimental study on the fuel reduction potential of heavy duty vehicle platooning,” in Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems (ITSC), 2010, pp. 306–311.

- [24] E. van Nunen, D. Tzempetzis, G. Koudijs, H. Nijmeijer, and M. van den Brand, “Towards a safety mechanism for platooning,” in Proceedings of the IEEE Intelligent Vehicles Symposium (IV), 2016, pp. 502–507.

- [25] T. Bijlsma and T. Hendriks, “A fail-operational truck platooning architecture,” in Proceedings of the IEEE Intelligent Vehicles Symposium (IV), 2017, pp. 1819–1826.

- [26] E. van Nunen, F. Esposto, A. K. Saberi, and J.-P. Paardekooper, “Evaluation of safety indicators for truck platooning,” in Proceedings of the IEEE Intelligent Vehicles Symposium (IV), 2017, pp. 1013–1018.