CS-AF: A Cost-sensitive Multi-classifier Active Fusion Framework for Skin Lesion Classification

Abstract

Convolutional neural networks (CNNs) have achieved state-of-the-art performance in skin lesion analysis. Compared with a single CNN classifier, combining the results of multiple classifiers via fusion approaches has been shown to be more effective and robust. Since skin lesion datasets are usually limited and statistically biased, designing an effective fusion approach requires considering not only the performance of each classifier on the training/validation dataset, but also the relative discriminative power (e.g., confidence) of each classifier regarding an individual sample in the testing phase, which calls for an active fusion approach. Furthermore, in skin lesion analysis, the data of certain classes (e.g., the benign lesions) is usually abundant, making them an over-represented majority, while the data of some other classes (e.g., the cancerous lesions) is deficient, making them an underrepresented minority. It is more crucial to precisely identify the samples from an underrepresented (i.e., in terms of the amount of data) but more important minority class (e.g., certain cancerous lesions). In other words, misclassifying a more severe lesion as a benign or less severe lesion should incur a relatively higher cost (e.g., money, time and even lives). To address such challenges, we present CS-AF, a cost-sensitive multi-classifier active fusion framework for skin lesion classification. In the experimental evaluation, we prepared 96 base classifiers (of 12 CNN architectures) on the ISIC research datasets. Our experimental results show that our framework consistently outperforms the static fusion competitors.

keywords:

Deep Neural Networks; Multi-classifier Fusion; Active Fusion; Ensemble Learning; Cost-sensitive Classification; Skin Lesion Analysis.

1 Introduction

Deep learning (DL) has achieved great success in many applications related to skin lesion analysis. For instance, Zhang et al. [1] have shown that convolutional neural networks (CNNs) achieve state-of-the-art performance in skin lesion classification. Also, with the development of various deep learning techniques, numerous classifier designs have been proposed to tackle the skin lesion classification problem. These designs may have different CNN architectures, use different sizes of training data, use different subsets or class distributions of the training data, or use different feature sets. For instance, as shown in the ISIC Challenges [2, 3, 4], several CNN architectures have been used in skin lesion analysis, including ResNet, Inception, DenseNet, PNASNet, etc. Because of such differences (i.e., CNN architectures, subsets of the training data, feature sets, etc.), those classifiers tend to perform differently under different conditions (e.g., different subsets or class distributions of data). There is no one-size-fits-all solution for designing a single classifier for skin lesion classification. It is therefore necessary to investigate multi-classifier fusion techniques to perform skin lesion classification under different conditions.

Designing an effective multi-classifier fusion approach for skin lesion classification requires addressing two challenges. First, since the datasets are usually limited and statistically biased [2, 3, 4], multi-classifier fusion must consider not only the performance of each classifier on the training/validation dataset, but also the relative discriminative power (e.g., confidence) of each classifier regarding an individual sample in the testing phase. This challenge requires an active fusion approach that is capable of tuning the weight assigned to each classifier dynamically and adaptively, depending on the characteristics of the given samples in the testing phase. Second, in most real-world skin lesion datasets [2, 3, 4], the data of certain classes (e.g., the benign lesions) is abundant, making them an over-represented majority, while the data of some other classes (e.g., the cancerous lesions) is deficient, making them an underrepresented minority. It is therefore more crucial to precisely identify the samples from an underrepresented (i.e., in terms of the amount of data) but more important minority class (e.g., certain cancerous lesions). For instance, a deadly cancerous lesion (e.g., melanoma) that rarely appears during examinations should almost never be misclassified as a benign or other less severe lesion (e.g., dermatofibroma). Specifically, misclassifying a more severe lesion as a benign or less severe lesion should incur a relatively higher cost (e.g., money, time and even lives). Hence, it is also important to enable such a “cost-sensitive” feature in the design of an effective multi-classifier fusion approach for skin lesion classification.

In this work, we propose CS-AF, a cost-sensitive multi-classifier active fusion framework for skin lesion classification, where we define two types of weights: the objective weights and the subjective weights. The objective weights are designed according to the classifiers’ reliability in recognizing particular skin lesions, which is determined by the prior knowledge obtained through the training phase. The subjective weights are designed according to the relative confidence of the classifiers when recognizing a specific previously “unseen” sample (i.e., individuality), and are calculated from the posterior knowledge obtained through the testing phase. While designing the objective weights, we also utilize a customizable cost matrix to enable the “cost-sensitive” feature in our fusion framework, where, given a sample, different outputs (i.e., the correct prediction or all kinds of errors) of a classifier result in different costs. For instance, the cost of misclassifying melanoma as benign should be much higher than that of misclassifying benign as melanoma. In the experimental evaluation, we trained 96 base classifiers as the input of our fusion framework, utilizing twelve CNN architectures, four class distributions and two data split ratios on the ISIC research datasets for skin image analysis [2, 3, 4]. Our experimental results show that our CS-AF framework consistently outperforms the static fusion competitors in terms of accuracy, and always achieves lower total cost.

To summarize, our work has the following contributions:

We present a novel and effective cost-sensitive multi-classifier active fusion framework, CS-AF. To the best of our knowledge, this is the first work to apply active fusion for skin lesion analysis, and show its advantages over the conventional static fusion approaches.

Our framework is the first to define the simple but effective objective/subjective weights and the customizable cost matrix, which enables the “cost-sensitive” feature for skin lesion analysis.

A comprehensive experimental evaluation using twelve popular and effective CNN architectures has been conducted on the most popular skin lesion analysis benchmark, the ISIC research datasets [2, 3, 4]. For the sake of reproducibility and the convenience of future studies on fusion approaches in skin lesion analysis, we have released our prototype implementation of CS-AF, information regarding the experiment datasets and the code of our comparison experiments (https://tinyurl.com/tabzxec).

2 Related Work

2.1 Multi-classifier Fusion

Fusion approaches have been widely applied in numerous applications, such as skin lesion analysis [5, 6], human activity recognition [7, 8], active authentication [9], facial recognition [10, 11, 12], botnet detection [13, 14, 15] and community detection [16, 17]. According to whether the weights are dynamically/adaptively assigned to each classifier, multi-classifier fusion approaches are divided into two categories: (i) static fusion, where the weight assigned to each participating classifier is fixed after its initial assignment, and (ii) active fusion, where the weights are adaptively tuned depending on the characteristics of the given samples in the testing phase. Many conventional approaches, such as bagging [18], boosting [19, 20] and stacking [21], are static fusion. To date, a few methods attempting to conduct active fusion have also been proposed [22, 23, 24]. For instance, Ren et al. [22] propose to use the decision credibility, evaluated by fuzzy set theory and a cloud model, to determine the real-time weight of a base classifier regarding the current sample in the testing phase. META-DES [23] defines five distinct sets of meta-features to measure the level of competence of a classifier for the classification of input samples, and proposes to train a meta-classifier to determine the rank or weight of a base classifier when facing input samples. DES-MI [24] proposes an active fusion approach where the weights are determined by placing more emphasis on the classifiers that are more capable of classifying examples in the underrepresented regions of the whole sample distribution. In our work, we propose a novel active fusion approach that leverages the “reliability” (Section 3.3) and the “individuality” (Section 3.4) of the base classifiers to assign the weights dynamically and adaptively.

2.2 Fusion of CNNs for Skin Lesion Analysis

Convolutional neural networks (CNNs) have achieved state-of-the-art performance [2, 3, 4] in skin lesion analysis since 2016 (i.e., the ISIC 2016 Challenge [2]), where nearly all the teams employed CNNs in either the feature extraction or the classification procedure. Recently, approaches that apply fusion to CNNs to tackle skin lesion classification have been proposed [25, 26, 5]. For instance, Marchetti et al. [25] present a fusion-of-CNNs framework for the classification of melanomas versus nevi or lentigines, where five fusion approaches were implemented to fuse 25 different CNN classifiers, trained on the same dataset for the same problem, into a single decision. Bi et al. [26] propose another CNN fusion framework to tackle the classification of melanomas versus seborrheic keratosis versus nevi, where three ResNet classifiers were trained for three different classification problems by fine-tuning ImageNet-pretrained CNNs: the original three-class problem and two binary classifiers (i.e., melanoma versus both other lesion classes and seborrheic keratosis versus both other lesion classes). Perez et al. [5] conduct a comparison study between two fusion strategies for melanoma classification: selecting the classifiers at random (i.e., among 125 models over 9 CNN architectures), and selecting the classifiers depending on their performance on a validation dataset. To summarize, most of the existing approaches use static fusion for skin lesion analysis. However, as discussed in Section 1, since skin lesion datasets are usually limited and statistically biased [2, 3, 4], it is necessary to enable active fusion for this problem. To the best of our knowledge, our work is the first to design and apply an active fusion approach to skin lesion classification.

2.3 Cost-sensitive Machine Learning

A variety of cost-sensitive machine learning approaches have been proposed to tackle the class imbalance issue in pattern classification and learning problems. Mollineda et al. [27], a comprehensive study on the class imbalance issue, divides most of the cost-sensitive machine learning approaches into two categories: data-level and algorithmic-level. The data-level approaches usually manipulate the data distribution by over-sampling the minority classes or under-sampling the majority classes. For instance, SMOTE [28] is an over-sampling technique proposed to address the over-fitting problem by synthesizing more samples of the minority classes. Several variants of the SMOTE approach [29, 30, 31, 32] have also been proposed to solve this issue. The algorithmic-level approaches directly re-design the machine learning algorithms to minimize a customizable loss function that enables the “cost-sensitive” feature of the classifier on a certain dataset (e.g., improving the sensitivity of the classifier towards minority classes). For instance, Zhang et al. [33] propose an extreme learning machine (ELM) based evolutionary cost-sensitive classification approach, where the cost matrix is automatically identified for a specific task (i.e., which errors cost more). Iranmehr et al. [34] extend the standard loss function of the support vector machine (SVM) to consider both the class imbalance (i.e., the cost) and the classification loss. Khan et al. [35] propose a cost-sensitive deep neural network framework that automatically learns the “cost-sensitive” feature representations for both the majority and minority classes, where during the training phase, the proposed framework performs a joint optimization of the class-dependent costs and the deep neural network parameters. In this work, we enable the “cost-sensitive” feature in the process of multi-classifier fusion, and employ it in the skin lesion classification problem.

3 Methodology

3.1 Multi-classifier Fusion

In multi-classifier fusion, we define a classification space, as shown in Figure 1, where there are $M$ classes and $K$ classifiers. Let $C = \{c_1, c_2, \dots, c_K\}$ denote the set of base classifiers and $L = \{l_1, l_2, \dots, l_M\}$ denote the set of classes. Let $p_{kj}(x)$ denote the posterior probability of a given sample $x$ identified by classifier $c_k$ as belonging to class $l_j$, where $1 \le k \le K$ and $1 \le j \le M$. Hence, all the posterior probabilities form a $K \times M$ decision matrix as follows:

$$
P(x) =
\begin{bmatrix}
p_{11}(x) & \cdots & p_{1M}(x) \\
\vdots & \ddots & \vdots \\
p_{K1}(x) & \cdots & p_{KM}(x)
\end{bmatrix}
\tag{1}
$$

Since the importance of different classifiers might be different, we assign a weight $w_k$ to the decision vector (i.e., posterior probabilities vector) of each classifier $c_k$, where $1 \le k \le K$. Let $S_j(x)$ denote the weighted sum, over all the classifiers, of the posterior probabilities that sample $x$ belongs to class $l_j$. Then, we have

$$
S_j(x) = \sum_{k=1}^{K} w_k \, p_{kj}(x)
\tag{2}
$$

The final decision (i.e., class) of sample $x$ is determined by the maximum posterior probabilities sum:

$$
l(x) = \arg\max_{1 \le j \le M} S_j(x)
\tag{3}
$$

Conventional multi-classifier fusion approaches either use the same weight for all the classifiers (i.e., average fusion) or use static weights that are not changed after their initial assignment during the training phase. As illustrated in Figure 1, our weights (i.e., $w_k$) contain two components: (i) the objective weight $w_k^{o}$, which is static and determined by the prior knowledge obtained through the training phase (Section 3.3), and (ii) the subjective weight $w_k^{s}$, which is dynamic and calculated from the posterior knowledge obtained through the testing phase (Section 3.4). For simplicity, we assign the same weight to both $w_k^{o}$ and $w_k^{s}$ when combining them.
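The weighted fusion rule described in this section can be sketched as follows. This is a minimal illustration rather than the released implementation: the function names, the toy posterior values and the 0.5/0.5 split between the objective and subjective components are assumptions made for the example.

```python
import numpy as np


def combine_weights(objective: np.ndarray, subjective: np.ndarray) -> np.ndarray:
    """Combine the two weight components with equal shares.

    The 0.5/0.5 split is an assumption made for this sketch.
    """
    return 0.5 * objective + 0.5 * subjective


def fuse(decision_matrix: np.ndarray, weights: np.ndarray) -> int:
    """Return the index of the class with the largest weighted posterior sum.

    decision_matrix: shape (K, M); row k holds the posteriors of classifier k.
    weights: shape (K,); one fusion weight per classifier.
    """
    scores = weights @ decision_matrix  # shape (M,): weighted sum per class
    return int(np.argmax(scores))


# Toy example with 3 classifiers and 4 classes (all values are made up).
P = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.20, 0.60, 0.10, 0.10],
    [0.25, 0.55, 0.10, 0.10],
])
w = combine_weights(np.array([0.6, 0.2, 0.2]), np.array([0.5, 0.3, 0.2]))

weighted_decision = fuse(P, w)               # favours the trusted classifier
uniform_decision = fuse(P, np.ones(3) / 3)   # plain average fusion
```

In this toy case the weighted rule and the uniform rule pick different classes, which is the situation in which the choice of weights matters.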

3.2 Cost-sensitive Problem Formulation

As discussed in Section 1, given a sample, different outputs (i.e., the correct prediction or all kinds of errors) of a classifier should result in different costs. For instance, misclassifying a more severe lesion as a benign or less severe lesion should incur a relatively higher cost. Let $c_{pq}$ denote the cost of classifying an instance belonging to class $p$ into class $q$. Then, for $M$ classes, we obtain an $M \times M$ cost matrix as follows:

$$
\mathrm{Cost} =
\begin{bmatrix}
c_{11} & \cdots & c_{1M} \\
\vdots & \ddots & \vdots \\
c_{M1} & \cdots & c_{MM}
\end{bmatrix}
\tag{4}
$$

Let $W$ be a fusion weight vector, and $\Omega$ be the fusion weight vector space, where $W \in \Omega$. The goal of cost-sensitive multi-classifier fusion is to find the $W^{*} \in \Omega$ that minimizes the average cost of the fusion approach’s outcomes over all the testing samples. In Section 4.3, we provide certain principles for designing a good cost matrix. We also provide two examples of cost matrices that emphasize different skin lesion classes, based on our literature references and those principles.
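The quantity being minimized, the average cost over the testing samples, can be illustrated with a short sketch. The function name, the three-class cost matrix and the labels below are hypothetical and only serve to show how a cost matrix is looked up per (true class, predicted class) pair.

```python
import numpy as np


def average_cost(y_true, y_pred, cost_matrix) -> float:
    """Mean cost over samples; entry (p, q) is the cost of predicting q for true class p."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cost_matrix = np.asarray(cost_matrix)
    # Fancy indexing picks one cost per (true, predicted) pair.
    return float(cost_matrix[y_true, y_pred].mean())


# Hypothetical 3-class cost matrix: rows are true classes, columns are predictions.
example_costs = np.array([
    [1, 20, 50],
    [5, 2, 10],
    [4, 6, 3],
])
true_labels = [0, 0, 1, 2]
fused_predictions = [0, 2, 1, 1]

avg = average_cost(true_labels, fused_predictions, example_costs)
```

Comparing this value across candidate weight vectors is one straightforward way to rank them under a given cost matrix.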

3.3 Computing the Objective Weights

The objective weights are designed according to the classifiers’ reliability to recognize the particular skin lesions, which is determined by the prior knowledge obtained through the training phase. In the training phase, we separate all the labelled data into two parts: training data and validation data. As shown in Figure 2, computing the objective weights in our framework contains three steps:

Classifier build. We prepare a set of base classifiers, which might have different CNN architectures, use different sizes of training data, or use different subsets or class distributions of the training data. In this step, we trained 96 base classifiers; more details are given in Section 4.2.

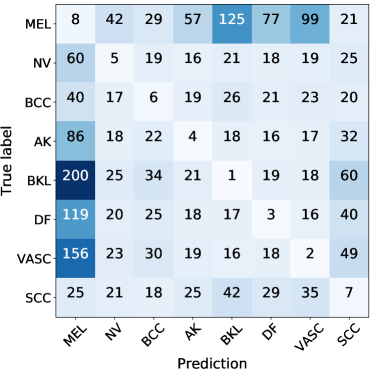

Reliability validation. Let $R_k$ denote the reliability of a base classifier $c_k$, which describes the average recognition performance of the classifier on the validation data. Higher accuracy and fewer errors on the validation data usually mean higher reliability. Hence, we use the confusion matrix of each base classifier on the same validation dataset as its reliability, where a confusion matrix [36] is a table that is often used to describe the performance of a classifier on a set of validation data for which the true values are known. It allows easy identification of confusion between classes, e.g., one class being commonly mislabeled as another. Many performance measures can be computed from the confusion matrix (e.g., F-scores). As such, we use $r^{k}_{pq}$ to denote the probability of base classifier $c_k$ classifying an instance belonging to class $p$ into class $q$.

Cost-sensitive adjustment. As described in Section 3.2, we would like to enable the “cost-sensitive” feature in the design of our objective weights. As shown in Figure 3, for each classifier $c_k$, we use an element-wise multiplication between its reliability $R_k$ (confusion matrix) and the customized cost matrix (Section 3.2) to formulate a cost-sensitive confusion matrix, where all the results/errors in the confusion matrix have been adjusted based on the cost matrix. Then, we use the micro-average F1-score [37] of the cost-sensitive confusion matrix to define the objective weight $w_k^{o}$ of each base classifier, so that all the objective weights are automatically normalized to $[0, 1]$.
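The three steps above can be sketched for a single classifier as follows. This is an illustration under stated assumptions: the confusion matrix is row-normalized so that each entry estimates the probability of predicting class q for true class p, and the micro-average F1-score of the adjusted matrix is computed as its diagonal mass divided by its total mass (which is what micro-averaging reduces to for single-label classification). The function name and all numbers are hypothetical.

```python
import numpy as np


def objective_weight(confusion_counts, cost_matrix) -> float:
    """Objective weight of one classifier from its validation confusion matrix.

    Assumes every class appears at least once in the validation data,
    so that each row of the confusion matrix has a non-zero sum.
    """
    counts = np.asarray(confusion_counts, dtype=float)
    # Row-normalise: entry (p, q) estimates P(predict q | true class p).
    reliability = counts / counts.sum(axis=1, keepdims=True)
    # Cost-sensitive confusion matrix via element-wise multiplication.
    adjusted = reliability * np.asarray(cost_matrix, dtype=float)
    # Micro-averaged F1: TP is the diagonal mass, FP and FN are both the
    # off-diagonal mass, so F1 = 2TP / (2TP + FP + FN) = TP / total.
    true_positive = np.trace(adjusted)
    off_diagonal = adjusted.sum() - true_positive
    return float(2 * true_positive / (2 * true_positive + 2 * off_diagonal))


# Hypothetical 2-class validation results and cost matrix.
val_confusion = [[8, 2], [1, 9]]
toy_costs = [[1, 4], [2, 1]]

w_cost_sensitive = objective_weight(val_confusion, toy_costs)
w_plain = objective_weight(val_confusion, [[1, 1], [1, 1]])  # no cost adjustment
```

With an all-ones cost matrix the weight reduces to the mean per-class recall, and penalizing errors more heavily lowers the weight of a classifier that makes them.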

3.4 Computing the Subjective Weights

The subjective weights are designed according to the relative confidence of the classifiers while recognizing a specific previously “unseen” image (i.e., individuality), which are calculated by the posterior knowledge obtained through the testing phase. As shown in Figure 4, computing the subjective weights in our framework contains three steps:

Sample evaluating/testing. Each sample is evaluated/tested through all the classifiers to obtain the corresponding decision vectors (i.e., the soft labels).

Individuality calculation. We consider the individuality of a classifier as its relative class discriminative power regarding a given individual sample. A classifier can easily identify the class of a given sample in the testing phase if the posterior probabilities of the corresponding decision vector are highly concentrated in one class, in which case the misclassification rate would also be low. On the contrary, if the distribution of the posterior probabilities is close to uniform, the classifier has difficulty discriminating the class of the given sample. Also, different classifiers present different distributions of the posterior probabilities in their decision vectors when testing the same sample. Hence, we define the individuality of a classifier using the posterior probabilities distribution as follows:

| (5) |

where is the largest posterior probability value in .

Normalization. Since the subjective weights are relative values among all the base classifiers, we normalize each individuality to the subjective weight as follows:

| (6) |

where and .
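The sketch below illustrates the general idea of turning per-sample confidence into normalized subjective weights. It does not reproduce Eq. (5) or Eq. (6): the individuality score used here (the largest posterior minus the mean of the remaining posteriors) and the sum-to-one normalization are stand-ins chosen for illustration, and all names and values are hypothetical.

```python
import numpy as np


def individuality(decision_vector) -> float:
    """Stand-in concentration score, NOT the paper's Eq. (5).

    Returns 0 for a uniform decision vector and 1 for a one-hot vector.
    """
    p = np.asarray(decision_vector, dtype=float)
    p_max = p.max()
    rest_mean = (p.sum() - p_max) / (p.size - 1)
    return float(p_max - rest_mean)


def subjective_weights(decision_matrix) -> np.ndarray:
    """Normalise individuality scores so they sum to 1 (assumed normalisation)."""
    scores = np.array([individuality(row) for row in decision_matrix])
    total = scores.sum()
    if total == 0.0:
        # Every classifier is completely undecided: fall back to equal weights.
        return np.full(len(scores), 1.0 / len(scores))
    return scores / total


# Three classifiers looking at the same test sample (made-up posteriors).
sample_decisions = np.array([
    [0.25, 0.25, 0.25, 0.25],  # undecided
    [0.70, 0.10, 0.10, 0.10],  # fairly confident
    [1.00, 0.00, 0.00, 0.00],  # fully confident
])
w_subjective = subjective_weights(sample_decisions)
```

The undecided classifier receives no weight for this sample, while the more concentrated decision vectors receive proportionally more.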

4 Experimental Evaluation

We conducted our experiments on the ISIC Challenge 2019 dataset [38, 39, 3] and utilized 12 CNN architectures to evaluate the performance of our proposed CS-AF framework. Two examples of cost matrices that emphasize different skin lesion classes (i.e., cancerous lesion classes vs. benign lesion classes) have been designed to evaluate the effectiveness of the “cost-sensitive” feature of our proposed CS-AF framework. Furthermore, extensive comparisons have been made among Max Voting Fusion (static), Average Fusion (static), AF (i.e., active fusion without the “cost-sensitive” feature) and our CS-AF framework. The presented results show that our approach consistently outperforms the baseline static fusion approaches (i.e., Max Voting and Average Fusion) in terms of the overall accuracy and the total cost, and is consistently better than AF in terms of the total cost under different conditions.

4.1 Experiment Dataset

In our experiments, we utilized the well-known ISIC Challenge 2019 dataset [38, 39, 3]. Since the ground truth of the original testing data was not available, we only employed the original training data, without meta-data, in our experimental evaluation. This dataset (i.e., the original training data of the ISIC Challenge 2019) contains 25,331 images in total, coming from 3 source datasets: BCN_20000 [38], HAM10000 [39] and MSK [3]. It depicts 8 skin lesion diseases (i.e., 8 classes): melanoma (MEL, 4,522 images), melanocytic nevus (NV, 12,875 images), basal cell carcinoma (BCC, 3,323 images), actinic keratosis (AK, 867 images), benign keratosis (BKL, 2,624 images), dermatofibroma (DF, 239 images), vascular lesion (VASC, 253 images) and squamous cell carcinoma (SCC, 628 images). We split the entire 25,331 images into training (80%), validation (5%) and testing (15%) datasets.

Table 1: Class distributions (number of images and share of the dataset) of the four training datasets.

| Skin Lesion | Dist-1 | Dist-2 | Dist-3 | Dist-4 |
|---|---|---|---|---|
| MEL | 3,662 (18.1%) | 2,509 (12.4%) | 5,052 (22.5%) | 604 (2.7%) |
| SCC | 502 (2.5%) | 2,510 (12.4%) | 4,331 (19.3%) | 1,200 (5.4%) |
| BCC | 2,670 (13.2%) | 2,494 (12.4%) | 3,781 (16.9%) | 1,812 (8.1%) |
| NV | 10,235 (50.5%) | 2,512 (12.4%) | 3,032 (13.5%) | 2,529 (11.3%) |
| AK | 705 (3.5%) | 2,564 (12.5%) | 2,463 (11.0%) | 3,150 (14.1%) |
| DF | 188 (1%) | 2,444 (12.3%) | 1,871 (8.3%) | 3,702 (16.9%) |
| VASC | 194 (1%) | 2,522 (12.5%) | 1,262 (5.6%) | 4,334 (19.3%) |
| BKL | 2,099 (10.4%) | 2,612 (13.0%) | 626 (2.8%) | 5,056 (22.6%) |

Table 2: Accuracy (%) of the 96 base classifiers on the testing dataset.

| CNN Architectures | Dist-1 | Dist-1 Sub-70 | Dist-2 | Dist-2 Sub-70 | Dist-3 | Dist-3 Sub-70 | Dist-4 | Dist-4 Sub-70 |
|---|---|---|---|---|---|---|---|---|
| PNASNet-5-Large [40] | 78.48 | 76.53 | 81.14 | 80.73 | 77.01 | 75.21 | 78.76 | 75.34 |
| NASNet-A-Large [41] | 78.35 | 76.71 | 79.80 | 78.31 | 76.00 | 75.36 | 76.12 | 74.32 |
| ResNeXt101-32x16d [42] | 79.47 | 76.96 | 83.09 | 80.08 | 80.18 | 77.14 | 79.47 | 77.47 |
| SENet154 [43] | 80.31 | 77.72 | 81.19 | 76.33 | 79.04 | 74.04 | 78.76 | 76.43 |
| Dual Path Net-107 [44] | 76.61 | 74.51 | 79.10 | 77.92 | 76.07 | 70.88 | 77.24 | 74.80 |
| Xception [45] | 74.63 | 74.07 | 78.53 | 75.19 | 76.46 | 72.93 | 75.82 | 74.30 |
| Inception-V4 [46] | 76.76 | 74.22 | 80.11 | 77.45 | 77.09 | 75.37 | 75.89 | 74.10 |
| InceptionResNet-V2 [46] | 77.58 | 76.64 | 70.81 | 77.39 | 77.77 | 76.12 | 76.48 | 74.01 |
| SE-ResNeXt101-32x4d [43] | 77.45 | 77.21 | 79.87 | 78.41 | 75.38 | 74.68 | 75.60 | 74.33 |
| ResNet152 [47] | 75.69 | 73.23 | 79.27 | 75.01 | 76.00 | 74.96 | 75.77 | 74.45 |
| Inception-V3 [48] | 75.16 | 73.82 | 79.52 | 78.83 | 73.69 | 72.41 | 75.62 | 72.07 |
| EfficientNet-b7 [49] | 67.31 | 63.07 | 74.10 | 71.28 | 71.81 | 68.75 | 71.48 | 67.37 |

To evaluate the performance of our proposed CS-AF framework using base classifiers that are trained on datasets with different class distributions, we designed 4 training datasets that have different class distributions. For instance, one training dataset could have a balanced class distribution, and the other training datasets could have class distributions that are unbalanced in different ways. The details (i.e., class distributions) of each training dataset are shown in Table 1 and described below:

1. Dist-1: This training dataset follows the class distribution of the original training dataset of the ISIC Challenge 2019 dataset.

2. Dist-2: This training dataset contains an evenly distributed number of samples for all classes.

3. Dist-3: This training dataset contains more samples for cancerous lesion classes, and fewer samples for benign lesion classes.

4. Dist-4: This training dataset contains fewer samples for cancerous lesion classes, and more samples for benign lesion classes (i.e., the opposite order of class distributions to that in Dist-3).

To generate training datasets satisfying the different class distributions described above, we utilized data augmentation techniques to generate more images for the skin lesion classes lacking images, and randomly sampled smaller portions from the classes with superfluous images. The main data augmentation techniques utilized are rotation (by 45, 90, 135, 180, 225, 270 and 315 degrees, respectively), horizontal flip, or the combination of both. We also utilized the same strategy to generate the validation and testing datasets, to ensure that the numbers of samples of all classes are equal, with approximately 200 samples of each class in the validation dataset and approximately 500 samples of each class in the testing dataset.
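The resampling strategy described above can be sketched as a plan of (image, augmentation) pairs per class. This is a simplified illustration: the function name is hypothetical, the plan only records which transform to apply rather than touching pixels, and extra samples are drawn with replacement, so duplicates are possible in this sketch.

```python
import random

ROTATIONS = [45, 90, 135, 180, 225, 270, 315]

# Each augmentation is (rotation_in_degrees, horizontal_flip):
# 7 rotations, 1 flip only, and 7 rotation-plus-flip combinations.
AUGMENTATIONS = (
    [(deg, False) for deg in ROTATIONS]
    + [(0, True)]
    + [(deg, True) for deg in ROTATIONS]
)


def resample_class(image_ids, target, rng):
    """Return `target` (image_id, augmentation) pairs for one class.

    An augmentation of None means the original image is used unchanged.
    """
    if target <= len(image_ids):
        # Class has superfluous images: keep a random subset of originals.
        return [(img, None) for img in rng.sample(image_ids, target)]
    # Class lacks images: keep all originals and add augmented copies.
    plan = [(img, None) for img in image_ids]
    for _ in range(target - len(image_ids)):
        plan.append((rng.choice(image_ids), rng.choice(AUGMENTATIONS)))
    return plan


rng = random.Random(0)
under_sampled = resample_class(list(range(10)), 4, rng)
over_sampled = resample_class(list(range(3)), 10, rng)
```

Applying the same routine with a per-class target taken from the desired distribution yields one training dataset per distribution.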

In addition, to evaluate the performance of our proposed CS-AF framework using base classifiers that are trained on different subsets of the training dataset, for each of the four training datasets with different class distributions, we randomly selected 70% of it to produce another sub-dataset, namely, Sub-70. Therefore, in our experimental evaluation, there are 8 different training datasets in total (i.e., Dist-1, Dist-1 Sub-70, Dist-2, Dist-2 Sub-70, Dist-3, Dist-3 Sub-70, Dist-4 and Dist-4 Sub-70).

4.2 Base Classifiers Preparation

We prepared the base classifiers to evaluate the performance of the fusion approaches using 12 different popular CNN architectures (as shown in Table 2) with parameters pre-trained on ImageNet [50]. Different CNN architectures have different input image sizes:

1. 331×331: PNASNet-5-Large, NASNet-A-Large.

2. 320×320: ResNeXt101-32x16d.

3. 299×299: Xception, Inception-V4, Inception-V3, InceptionResNet-V2.

4. 224×224: SENet154, Dual Path Net-107, SE-ResNeXt101-32x4d, EfficientNet-b7, ResNet152.

All the base classifiers were fine-tuned by stochastic gradient descent (SGD) with learning rate and momentum 0.9. The learning rate was decayed by a factor of 0.1 every 20 epochs. We stopped the training process either after 40 epochs or when the validation accuracy failed to improve for 7 consecutive epochs. Our experiments were implemented using PyTorch, running on a server with 4 GTX 1080Ti 11 GB GPUs. To keep the same batch size of 32 in each evaluation, and due to the memory constraint of a single GPU, certain CNN architectures were trained with more GPUs:

1. 2 GPUs: SENet154, EfficientNet-b7, Dual Path Net-107.

2. 4 GPUs: PNASNet-5-Large, ResNeXt101-32x16d, NASNet-A-Large.
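The learning-rate schedule and the two stopping rules above can be sketched in plain Python, independent of any training framework. This is an illustration under assumptions: the decay is read as a multiplicative step of 0.1 applied every 20 epochs, "improve" is read as a strict increase over the best validation accuracy so far, and the initial learning rate passed in the example is a placeholder, not the value used in the experiments.

```python
MAX_EPOCHS = 40        # hard limit on training length
PATIENCE = 7           # consecutive epochs without improvement before stopping
LR_DECAY_FACTOR = 0.1  # multiplicative decay (assumed interpretation)
LR_DECAY_EVERY = 20    # epochs between decays (assumed interpretation)


def learning_rate(initial_lr: float, epoch: int) -> float:
    """Step-decayed learning rate for a 0-based epoch index."""
    return initial_lr * (LR_DECAY_FACTOR ** (epoch // LR_DECAY_EVERY))


def epochs_run(val_accuracies) -> int:
    """Number of epochs trained before either stopping rule fires."""
    best = float("-inf")
    stale = 0
    for epoch, accuracy in enumerate(val_accuracies[:MAX_EPOCHS], start=1):
        if accuracy > best:
            best, stale = accuracy, 0
        else:
            stale += 1
        if stale >= PATIENCE:
            return epoch  # early stop: no improvement for PATIENCE epochs
    return min(len(val_accuracies), MAX_EPOCHS)


# Placeholder initial learning rate, chosen only for the example.
lr_start = learning_rate(0.01, 0)
lr_late = learning_rate(0.01, 20)
stopped_early = epochs_run([0.5] + [0.4] * 20)
ran_full = epochs_run(list(range(50)))
```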

Table 2 illustrates the performance (i.e., accuracy) of all 96 base classifiers on the testing dataset.

4.3 Design of the Cost Matrix

As discussed in Section 3.2, an appropriate cost matrix is needed to enable the “cost-sensitive” feature in our active fusion framework. Hence, we established several principles for designing an adequate cost matrix:

1. All the costs should be positive, since the cost matrix is multiplied element-wise with the confusion matrix, and we do not want non-positive values in our cost-sensitive confusion matrix.

2. The cost of the correct predictions should depend on the relative severity of the corresponding disease. For instance, it should be more valuable (i.e., lower cost) to classify a more severe disease (e.g., melanoma) correctly. To determine the relative severity relationships among all eight skin lesion classes and better design our cost matrix, we referred to the literature regarding the severity of those diseases [51, 52, 53, 54, 55, 56, 57, 58, 59]. For simplicity, and to enable the evaluation of our work, based on those references, we heuristically ordered the severity of the 8 skin lesion classes (from the most severe to the least severe) as follows: melanoma (MEL), squamous cell carcinoma (SCC), basal cell carcinoma (BCC), melanocytic nevus (NV), actinic keratosis (AK), dermatofibroma (DF), vascular lesion (VASC), benign keratosis (BKL).

3. The relative costs of different incorrect predictions should be based on their relative severity. For instance, misclassifying melanoma (i.e., a deadly cancerous lesion) as benign keratosis should result in a much higher cost than the opposite scenario.

4. The cost of correct predictions should be no more than the cost of incorrect predictions.

As such, we designed two examples of cost matrices that emphasize different skin lesion classes (i.e., cancerous lesions vs. benign lesions) to evaluate our work, as illustrated in Figure 5a (i.e., Cost Matrix A) and Figure 5b (i.e., Cost Matrix B).

Let us take the design of Cost Matrix A, which emphasizes cancerous lesions (i.e., the cost of misclassifying a cancerous lesion is much higher than that of misclassifying a benign lesion), as an example. Firstly, we assign the cost of the correct prediction of each skin lesion class, i.e., the diagonal entries of the cost matrix defined in Section 3.2, according to the relative disease severity, where predicting a more severe skin lesion class correctly should result in a lower cost. For instance, we set the cost of the correct prediction of MEL (i.e., the most severe class) to 1, and the cost of the correct prediction of BKL (i.e., the least severe class) to 8. Secondly, to calculate the relative cost of each incorrect prediction, we follow the equation below:

| (7) |

where as defined in Section 3.2, denote the cost of classifying an instance belonging to class into class . For instance, if the cost of correct prediction of MEL is 1 and the cost of correct prediction of BKL is 8, the cost of misclassifying an instance belonging to MEL into BKL would be . Last but not least, to ensure the cost of correct predictions are always no more than the cost of incorrect predictions, without loss of generality, we normalized the costs of misclassifications to integers between 16 and 200, using min-max scaling. Figure 5a shows the final result of our designed Cost Matrix A.

To evaluate our framework under different cost matrices, we also designed Cost Matrix B (as shown in Figure 5b), which emphasizes benign lesions (i.e., the cost of misclassifying a benign lesion is much higher than that of misclassifying a cancerous lesion). Cost Matrix B follows the same design steps as Cost Matrix A, except that it uses exactly the reverse order of severity. For instance, in the design of Cost Matrix B, melanoma becomes the “least severe” class and benign keratosis becomes the “most severe” class.

Our two examples of cost matrices are only intended for our experimental evaluation. To utilize our framework, other researchers can create a cost matrix according to their own requirements or their own domain expert knowledge.
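The overall construction of such a cost matrix (diagonal costs from a severity ranking, off-diagonal costs rescaled to integers between 16 and 200 by min-max scaling) can be sketched as follows. Two parts of this sketch are assumptions: the intermediate diagonal costs 2 to 7 are filled in from the severity order, and the raw misclassification cost is a placeholder ratio supplied by the caller, which is not claimed to match Eq. (7).

```python
CLASSES = ["MEL", "SCC", "BCC", "NV", "AK", "DF", "VASC", "BKL"]


def build_cost_matrix(diagonal, raw_cost, low=16, high=200):
    """Build a cost matrix from diagonal costs and a raw misclassification rule.

    diagonal[p] is the cost of correctly predicting class p.
    raw_cost(c_true, c_pred) is a caller-supplied placeholder rule; its values
    are min-max scaled to integers in [low, high]. Assumes the raw costs are
    not all identical.
    """
    n = len(diagonal)
    raw = {
        (p, q): raw_cost(diagonal[p], diagonal[q])
        for p in range(n) for q in range(n) if p != q
    }
    smallest, largest = min(raw.values()), max(raw.values())
    matrix = [[0] * n for _ in range(n)]
    for p in range(n):
        for q in range(n):
            if p == q:
                matrix[p][q] = diagonal[p]
            else:
                scaled = low + (raw[(p, q)] - smallest) * (high - low) / (largest - smallest)
                matrix[p][q] = round(scaled)
    return matrix


# Diagonal costs 1..8 follow the severity order (MEL = 1, BKL = 8);
# the intermediate values are an assumption of this sketch.
diagonal_a = list(range(1, 9))
# Placeholder rule: mistaking a severe class for a mild one costs the most.
cost_a = build_cost_matrix(diagonal_a, lambda c_true, c_pred: c_pred / c_true)
```

Reversing `diagonal_a` and reusing the same routine gives a matrix in the spirit of Cost Matrix B, with the emphasis moved to the benign classes.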

4.4 Experimental Procedure

As described in Section 4.2, we have prepared 96 base classifiers. To evaluate the effectiveness of our active fusion approach extensively, we use different subsets of the 96 base classifiers to form a fusion model. Specifically, given a fusion approach, each time, we randomly select classifiers among all 96 base classifiers to form a fusion model and evaluate its performance, where . For each specific , we will repeat the random selection experiments for 100 times, and use the averaged performance as the final results.
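The repeated random-selection procedure can be sketched as below. The names are hypothetical, the subset size in the example is an arbitrary illustrative value, and `evaluate` stands for whatever routine fuses the selected classifiers and returns a performance figure.

```python
import random
from statistics import mean


def repeated_subset_evaluation(pool, subset_size, evaluate, repeats=100, seed=0):
    """Average a metric over `repeats` random subsets of the classifier pool."""
    rng = random.Random(seed)
    scores = []
    for _ in range(repeats):
        subset = rng.sample(pool, subset_size)  # drawn without replacement
        scores.append(evaluate(subset))
    return mean(scores)


# Stand-in pool of 96 classifier identifiers and a dummy metric.
classifier_pool = list(range(96))
subsets_seen = []


def dummy_metric(subset):
    subsets_seen.append(tuple(subset))
    return len(subset)


averaged = repeated_subset_evaluation(classifier_pool, 12, dummy_metric)
```

Fixing the seed makes the selected subsets reproducible, so that all fusion approaches can be compared on the same random draws.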

4.5 Evaluate the Effectiveness of CS-AF

To demonstrate the effectiveness of our approach, we compare two CS-AF implementations (using two different cost matrices to compute the objective weights) with three other fusion approaches. The contestants are:

1. Max Voting Fusion is a static approach that combines the predictions of multiple base classifiers and outputs only the class that receives the most votes as the final prediction.

2. Average Fusion is another static approach that averages the decision vectors of multiple base classifiers and uses the averaged decision vector to make the final prediction.

3. AF is a baseline active fusion approach, obtained by removing the cost-sensitive adjustment step from CS-AF when calculating the objective weights.

4. CS-AF (Cost Matrix A) is our approach with its objective weights computed using Cost Matrix A.

5. CS-AF (Cost Matrix B) is our approach with its objective weights computed using Cost Matrix B.
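The two static baselines can be sketched in a few lines. This is an illustrative sketch rather than our experimental code: the decision vectors are hypothetical softmax-like outputs, and breaking ties in favor of the smallest class index is an assumption.

```python
from collections import Counter


def argmax(vector):
    """Index of the largest entry (first index on ties)."""
    return max(range(len(vector)), key=lambda i: vector[i])


def max_voting_fusion(decision_vectors):
    """Each classifier votes for its top class; the most-voted class wins."""
    votes = Counter(argmax(v) for v in decision_vectors)
    best = max(votes.values())
    # Assumption: ties are broken in favor of the smallest class index.
    return min(c for c, n in votes.items() if n == best)


def average_fusion(decision_vectors):
    """Average the decision vectors, then predict the top class of the mean."""
    n = len(decision_vectors)
    num_classes = len(decision_vectors[0])
    mean = [sum(v[k] for v in decision_vectors) / n for k in range(num_classes)]
    return argmax(mean)


# Hypothetical decision vectors from three base classifiers over three classes.
decision_vectors = [
    [0.40, 0.35, 0.25],
    [0.45, 0.40, 0.15],
    [0.05, 0.90, 0.05],
]
print(max_voting_fusion(decision_vectors))  # class 0: two of three votes
print(average_fusion(decision_vectors))     # class 1: highest mean score
```

In this example the two static approaches disagree: two weakly confident classifiers outvote one highly confident classifier under max voting, whereas averaging lets the confident classifier dominate. Neither approach accounts for how reliable each classifier is on a given class or sample.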

Given a contestant fusion approach, we evaluate its effectiveness in terms of (i) its averaged accuracy on our testing dataset (shown in Figure 6a), (ii) its total cost on our testing dataset as specified by Cost Matrix A (shown in Figure 6b), and (iii) its total cost on our testing dataset as specified by Cost Matrix B (shown in Figure 6c). The total cost is calculated as the sum of the element-wise product between the confusion matrix obtained on our testing dataset and the specified cost matrix. By definition, a better fusion approach yields higher accuracy on the testing dataset and lower total cost under a given cost matrix. From the results (Figure 6), we observe that:

1. Compared with the best-performing base classifier, ResNeXt101-32x16d (see Table 2), our two CS-AF implementations and AF consistently achieve 2%-5% higher accuracy on the testing dataset.

2. For all the fusion approaches, as more base classifiers are involved, the accuracy tends to increase and the total cost tends to decrease.

3. In terms of accuracy, our two CS-AF implementations and AF consistently outperform the static fusion approaches (Max Voting and Average), and CS-AF (Cost Matrix B) consistently achieves the best accuracy. This indicates that our proposed active fusion approach is effective at improving the overall accuracy.

4. In terms of total cost, CS-AF consistently outperforms the other fusion approaches (Max Voting, Average and AF). Specifically, when the total cost is calculated using Cost Matrix A, CS-AF (Cost Matrix A) always achieves the lowest total cost, and when it is calculated using Cost Matrix B, CS-AF (Cost Matrix B) always achieves the lowest total cost. This demonstrates that our proposed cost-sensitive active fusion approach adapts to different cost matrices and is optimized to achieve the lowest total cost.
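The total-cost metric used in this comparison (the sum of the element-wise product between a confusion matrix and a cost matrix) can be sketched as follows. Both matrices below are hypothetical three-class examples, not our results, and the convention that rows index the true class and columns index the predicted class is an assumption of this sketch.

```python
def total_cost(confusion, cost):
    """Sum of the element-wise product of a confusion matrix and a cost matrix.

    Assumed convention: entry [i][j] refers to instances of true class i
    that were predicted as class j.
    """
    if len(confusion) != len(cost) or any(
        len(conf_row) != len(cost_row)
        for conf_row, cost_row in zip(confusion, cost)
    ):
        raise ValueError("confusion and cost matrices must have the same shape")
    return sum(
        count * unit_cost
        for conf_row, cost_row in zip(confusion, cost)
        for count, unit_cost in zip(conf_row, cost_row)
    )


# Hypothetical three-class confusion matrix and cost matrix.
confusion = [
    [50, 2, 3],
    [4, 30, 1],
    [6, 0, 20],
]
cost = [
    [1, 40, 60],
    [20, 2, 30],
    [16, 18, 3],
]
print(total_cost(confusion, cost))  # 636
```

Because the diagonal of the cost matrix holds small but non-zero costs for correct predictions, correct classifications also contribute to the total, although far less than misclassifications do.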

4.6 Analyze the “Cost-sensitive” Feature of CS-AF

As discussed above, our proposed CS-AF can adapt to different cost matrices and is optimized to achieve the lowest total cost under a specified cost matrix; we refer to this property as “cost-sensitive”. In this section, we analyze how this property, under a given cost matrix, influences the performance of CS-AF on individual skin lesion classes and thereby reduces the total cost. We evaluate per-class performance using sensitivity and specificity, defined as follows:

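$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{8}$$

where $TP$ denotes the number of true positives and $FN$ denotes the number of false negatives.

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{9}$$

where $TN$ denotes the number of true negatives and $FP$ denotes the number of false positives.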
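For a multi-class problem, the per-class quantities in Eqs. (8) and (9) can be obtained from the confusion matrix by treating each class in turn as the positive class (one-vs-rest). The sketch below illustrates this; the confusion matrix is a hypothetical three-class example, and rows are assumed to index the true class while columns index the predicted class.

```python
def per_class_sensitivity_specificity(confusion):
    """Return a (sensitivity, specificity) pair for every class, one-vs-rest.

    Assumed convention: confusion[i][j] counts instances of true class i
    that were predicted as class j.
    """
    num_classes = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                                 # true c, predicted other
        fp = sum(confusion[r][c] for r in range(num_classes)) - tp  # other, predicted c
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn) if tp + fn else float("nan")
        specificity = tn / (tn + fp) if tn + fp else float("nan")
        results.append((sensitivity, specificity))
    return results


# Hypothetical three-class confusion matrix.
confusion_matrix = [
    [50, 2, 3],
    [4, 30, 1],
    [6, 0, 20],
]
for label, (sens, spec) in enumerate(
    per_class_sensitivity_specificity(confusion_matrix)
):
    print(f"class {label}: sensitivity={sens:.3f}, specificity={spec:.3f}")
```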

Figure 7 and Figure 9 illustrate the per-class sensitivity and specificity results of CS-AF (Cost Matrix A) and CS-AF (Cost Matrix B), respectively. We observe that:

1. Compared with CS-AF (Cost Matrix B), CS-AF (Cost Matrix A) tends to achieve higher sensitivity on the more severe cancerous lesion classes (i.e., melanoma, squamous cell carcinoma and basal cell carcinoma), and lower sensitivity on the less severe benign lesion classes (i.e., benign keratosis, vascular lesion, dermatofibroma, actinic keratosis and melanocytic nevus).

2. Compared with CS-AF (Cost Matrix A), CS-AF (Cost Matrix B) tends to achieve higher specificity on those more severe cancerous lesion classes, and lower specificity on those less severe benign lesion classes.

As described in Section 4.3, Cost Matrix A emphasizes cancerous lesions (i.e., the cost of misclassifying a cancerous lesion is much higher than that of misclassifying a benign lesion), while Cost Matrix B emphasizes benign lesions (i.e., the cost of misclassifying a benign lesion is much higher than that of misclassifying a cancerous lesion). When Cost Matrix A is used to compute the objective weights of our CS-AF implementation (i.e., CS-AF (Cost Matrix A)), it tends to increase the TP and FP of cancerous lesion classes and decrease the FN and TN of benign lesion classes, thus resulting in higher sensitivity and lower specificity for cancerous lesion classes. CS-AF (Cost Matrix B) behaves analogously. Therefore, the observations demonstrate that our proposed CS-AF is “cost-sensitive”: its performance on individual skin lesion classes can be flexibly influenced by, or adapted to, a well-designed cost matrix.

5 Conclusion

In this paper, we propose CS-AF, a cost-sensitive multi-classifier active fusion framework for skin lesion classification, in which we define two types of weights: the objective weights, which are designed according to the classifiers’ reliability in recognizing particular skin lesions, and the subjective weights, which are designed according to the relative confidence of the classifiers when recognizing a specific previously “unseen” image (i.e., individuality). We also enable the “cost-sensitive” feature in our framework by incorporating a customizable cost matrix in the design of the objective weights. In the experimental evaluation, we trained 96 classifiers of 12 CNN architectures as the base classifiers, and compared our CS-AF framework with two static fusion approaches (i.e., Max Voting Fusion and Average Fusion) and a baseline active fusion approach, AF. Our experimental results show that our CS-AF framework consistently outperforms the static fusion competitors in terms of accuracy, and always achieves lower total cost. We also demonstrated our “cost-sensitive” feature using two example cost matrices. In our future work, we plan to (i) investigate and incorporate other metrics (i.e., other than F1-score) in the design of the objective weights; (ii) design a learning-based approach to determine the subjective weights; and (iii) employ and evaluate our CS-AF framework in other medicine-related applications.

Acknowledgments

Effort sponsored in part by United States Special Operations Command (USSOCOM), under Partnership Intermediary Agreement No. H92222-15-3-0001-01. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the United States Special Operations Command.

References

- [1] J. Zhang, Y. Xie, Y. Xia, C. Shen, Attention residual learning for skin lesion classification, IEEE transactions on medical imaging 38 (9) (2019) 2092–2103.

- [2] D. Gutman, N. C. Codella, E. Celebi, B. Helba, M. Marchetti, N. Mishra, A. Halpern, Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (isbi) 2016, hosted by the international skin imaging collaboration (isic), arXiv preprint arXiv:1605.01397 (2016).

- [3] N. C. Codella, D. Gutman, M. E. Celebi, B. Helba, M. A. Marchetti, S. W. Dusza, A. Kalloo, K. Liopyris, N. Mishra, H. Kittler, et al., Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (isbi), hosted by the international skin imaging collaboration (isic), in: 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), IEEE, 2018, pp. 168–172.

- [4] N. Codella, V. Rotemberg, P. Tschandl, M. E. Celebi, S. Dusza, D. Gutman, B. Helba, A. Kalloo, K. Liopyris, M. Marchetti, et al., Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (isic), arXiv preprint arXiv:1902.03368 (2019).

- [5] F. Perez, S. Avila, E. Valle, Solo or ensemble? choosing a cnn architecture for melanoma classification, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019, pp. 0–0.

- [6] N. N. Di Zhuang, K. Chen, J. M. Chang, Saia: Split artificial intelligence architecture for mobile healthcare systems, arXiv preprint arXiv:2004.12059 (2020).

- [7] D. Tao, L. Jin, Y. Yuan, Y. Xue, Ensemble manifold rank preserving for acceleration-based human activity recognition, IEEE transactions on neural networks and learning systems 27 (6) (2014) 1392–1404.

- [8] D. Zhuang, J. M. Chang, Utility-aware privacy-preserving data releasing, arXiv preprint arXiv:2005.04369 (2020).

- [9] P.-Y. Wu, C.-C. Fang, J. M. Chang, S.-Y. Kung, Cost-effective kernel ridge regression implementation for keystroke-based active authentication system, IEEE transactions on cybernetics 47 (11) (2016) 3916–3927.

- [10] C. Ding, D. Tao, Trunk-branch ensemble convolutional neural networks for video-based face recognition, IEEE transactions on pattern analysis and machine intelligence 40 (4) (2017) 1002–1014.

- [11] H. Nguyen, D. Zhuang, P.-Y. Wu, M. Chang, Autogan-based dimension reduction for privacy preservation, Neurocomputing (2019).

- [12] D. Zhuang, S. Wang, J. M. Chang, Fripal: Face recognition in privacy abstraction layer, in: 2017 IEEE Conference on Dependable and Secure Computing, IEEE, 2017, pp. 441–448.

- [13] L. Mai, D. K. Noh, Cluster ensemble with link-based approach for botnet detection, Journal of Network and Systems Management 26 (3) (2018) 616–639.

- [14] D. Zhuang, J. M. Chang, Peerhunter: Detecting peer-to-peer botnets through community behavior analysis, in: 2017 IEEE Conference on Dependable and Secure Computing, IEEE, 2017, pp. 493–500.

- [15] D. Zhuang, J. M. Chang, Enhanced peerhunter: Detecting peer-to-peer botnets through network-flow level community behavior analysis, IEEE Transactions on Information Forensics and Security 14 (6) (2018) 1485–1500.

- [16] A. Tagarelli, A. Amelio, F. Gullo, Ensemble-based community detection in multilayer networks, Data Mining and Knowledge Discovery 31 (5) (2017) 1506–1543.

- [17] D. Zhuang, M. J. Chang, M. Li, Dynamo: Dynamic community detection by incrementally maximizing modularity, IEEE Transactions on Knowledge and Data Engineering (2019).

- [18] L. Breiman, Bagging predictors, Machine learning 24 (2) (1996) 123–140.

- [19] R. E. Schapire, The strength of weak learnability, Machine learning 5 (2) (1990) 197–227.

- [20] Y. Freund, R. E. Schapire, A desicion-theoretic generalization of on-line learning and an application to boosting, in: European conference on computational learning theory, Springer, 1995, pp. 23–37.

- [21] D. H. Wolpert, Stacked generalization, Neural networks 5 (2) (1992) 241–259.

- [22] F. Ren, Y. Li, M. Hu, Multi-classifier ensemble based on dynamic weights, Multimedia Tools and Applications 77 (16) (2018) 21083–21107.

- [23] R. M. Cruz, R. Sabourin, G. D. Cavalcanti, T. I. Ren, Meta-des: A dynamic ensemble selection framework using meta-learning, Pattern recognition 48 (5) (2015) 1925–1935.

- [24] S. García, Z.-L. Zhang, A. Altalhi, S. Alshomrani, F. Herrera, Dynamic ensemble selection for multi-class imbalanced datasets, Information Sciences 445 (2018) 22–37.

- [25] M. A. Marchetti, N. C. Codella, S. W. Dusza, D. A. Gutman, B. Helba, A. Kalloo, N. Mishra, C. Carrera, M. E. Celebi, J. L. DeFazio, et al., Results of the 2016 international skin imaging collaboration international symposium on biomedical imaging challenge: Comparison of the accuracy of computer algorithms to dermatologists for the diagnosis of melanoma from dermoscopic images, Journal of the American Academy of Dermatology 78 (2) (2018) 270–277.

- [26] L. Bi, J. Kim, E. Ahn, D. Feng, Automatic skin lesion analysis using large-scale dermoscopy images and deep residual networks, arXiv preprint arXiv:1703.04197 (2017).

- [27] R. Mollineda, R. Alejo, J. Sotoca, The class imbalance problem in pattern classification and learning, in: II Congreso Espanol de Informática (CEDI 2007). ISBN, 2007, pp. 978–84.

- [28] N. V. Chawla, K. W. Bowyer, L. O. Hall, W. P. Kegelmeyer, Smote: synthetic minority over-sampling technique, Journal of artificial intelligence research 16 (2002) 321–357.

- [29] K.-J. Wang, B. Makond, K.-H. Chen, K.-M. Wang, A hybrid classifier combining smote with pso to estimate 5-year survivability of breast cancer patients, Applied Soft Computing 20 (2014) 15–24.

- [30] H. Han, W.-Y. Wang, B.-H. Mao, Borderline-smote: a new over-sampling method in imbalanced data sets learning, in: International conference on intelligent computing, Springer, 2005, pp. 878–887.

- [31] C. Bunkhumpornpat, K. Sinapiromsaran, C. Lursinsap, Safe-level-smote: Safe-level-synthetic minority over-sampling technique for handling the class imbalanced problem, in: Pacific-Asia conference on knowledge discovery and data mining, Springer, 2009, pp. 475–482.

- [32] T. Maciejewski, J. Stefanowski, Local neighbourhood extension of smote for mining imbalanced data, in: 2011 IEEE Symposium on Computational Intelligence and Data Mining (CIDM), IEEE, 2011, pp. 104–111.

- [33] L. Zhang, D. Zhang, Evolutionary cost-sensitive extreme learning machine, IEEE transactions on neural networks and learning systems 28 (12) (2016) 3045–3060.

- [34] A. Iranmehr, H. Masnadi-Shirazi, N. Vasconcelos, Cost-sensitive support vector machines, Neurocomputing 343 (2019) 50–64.

- [35] S. H. Khan, M. Hayat, M. Bennamoun, F. A. Sohel, R. Togneri, Cost-sensitive learning of deep feature representations from imbalanced data, IEEE transactions on neural networks and learning systems 29 (8) (2017) 3573–3587.

- [36] S. Visa, B. Ramsay, A. L. Ralescu, E. Van Der Knaap, Confusion matrix-based feature selection., MAICS 710 (2011) 120–127.

- [37] V. Van Asch, Macro-and micro-averaged evaluation measures [[basic draft]], Belgium: CLiPS 49 (2013).

- [38] M. Combalia, N. C. Codella, V. Rotemberg, B. Helba, V. Vilaplana, O. Reiter, A. C. Halpern, S. Puig, J. Malvehy, Bcn20000: Dermoscopic lesions in the wild, arXiv preprint arXiv:1908.02288 (2019).

- [39] P. Tschandl, C. Rosendahl, H. Kittler, The ham10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions, Scientific data 5 (2018) 180161.

- [40] C. Liu, B. Zoph, M. Neumann, J. Shlens, W. Hua, L.-J. Li, L. Fei-Fei, A. Yuille, J. Huang, K. Murphy, Progressive neural architecture search, in: Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 19–34.

- [41] B. Zoph, Q. V. Le, Neural architecture search with reinforcement learning, arXiv preprint arXiv:1611.01578 (2016).

- [42] S. Xie, R. Girshick, P. Dollár, Z. Tu, K. He, Aggregated residual transformations for deep neural networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1492–1500.

- [43] J. Hu, L. Shen, G. Sun, Squeeze-and-excitation networks, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 7132–7141.

- [44] Y. Chen, J. Li, H. Xiao, X. Jin, S. Yan, J. Feng, Dual path networks, in: Advances in neural information processing systems, 2017, pp. 4467–4475.

- [45] F. Chollet, Xception: Deep learning with depthwise separable convolutions, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1251–1258.

- [46] C. Szegedy, S. Ioffe, V. Vanhoucke, A. A. Alemi, Inception-v4, inception-resnet and the impact of residual connections on learning, in: Thirty-first AAAI conference on artificial intelligence, 2017.

- [47] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [48] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, Z. Wojna, Rethinking the inception architecture for computer vision, in: Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2818–2826.

- [49] M. Tan, Q. V. Le, Efficientnet: Rethinking model scaling for convolutional neural networks, arXiv preprint arXiv:1905.11946 (2019).

- [50] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, L. Fei-Fei, ImageNet: A Large-Scale Hierarchical Image Database, in: CVPR09, 2009.

- [51] Dana-Farber Cancer Institute, What’s the difference between melanoma and skin cancer?, https://blog.dana-farber.org/insight/2019/12/difference-between-melanoma-and-skin-cancer/ (2019 (accessed March 1, 2020)).

- [52] H. Godman, What are the prognosis and survival rates for melanoma by stage?, https://www.healthline.com/health/melanoma-prognosis-and-survival-rates (2017 (accessed March 1, 2020)).

- [53] A. Oakley, Mole, https://dermnetnz.org/topics/mole/ (2016 (accessed March 1, 2020)).

- [54] American Academy of Dermatology Association, Types of skin cancer, https://www.aad.org/public/diseases/skin-cancer/types/common (2020 (accessed March 1, 2020)).

- [55] WebMD, Understanding actinic keratosis – the basics, https://www.webmd.com/skin-problems-and-treatments/understanding-actinic-keratosis-basics (2019 (accessed March 1, 2020)).

- [56] Mayo Clinic Staff, Seborrheic keratosis, https://www.mayoclinic.org/diseases-conditions/seborrheic-keratosis/symptoms-causes/syc-20353878 (2019 (accessed March 1, 2020)).

- [57] NYU Langone Health, Surgery for vascular malformations in the torso & limbs, https://nyulangone.org/conditions/vascular-malformations-in-the-torso-limbs-in-adults/treatments/surgery-for-vascular-malformations-in-the-torso-limbs (2020 (accessed March 1, 2020)).

- [58] NYU Langone Health, Types of vascular malformations in the torso & limbs, www.nyulangone.org/conditions/vascular-malformations-in-the-torso-limbs-in-adults/types (2020 (accessed March 1, 2020)).

- [59] University of California San Francisco Health, Basal cell carcinoma and squamous cell carcinoma, http://www.ucsfhealth.org/conditions/basal-cell-and-squamous-cell-carcinoma (2020 (accessed March 1, 2020)).