Crossing-Domain Generative Adversarial Networks for Unsupervised Multi-Domain Image-to-Image Translation

Abstract.

State-of-the-art techniques in Generative Adversarial Networks (GANs) have shown remarkable success in image-to-image translation from peer domain X to domain Y using paired image data. However, obtaining abundant paired data is a non-trivial and expensive process in the majority of applications. When there is a need to translate images across domains, if the training is performed between every two domains, the complexity of the training will increase quadratically. Moreover, training with data from two domains only at a time cannot benefit from data of other domains, which prevents the extraction of more useful features and hinders the progress of this research area. In this work, we propose a general framework for unsupervised image-to-image translation across multiple domains, which can translate images from domain to any a domain without requiring direct training between the two domains involved in image translation. A byproduct of the framework is the reduction of computing time and computing resources, since it needs less time than training the domains in pairs as is done in state-of-the-art works. Our proposed framework consists of a pair of encoders along with a pair of GANs which learns high-level features across different domains to generate diverse and realistic samples from. Our framework shows competing results on many image-to-image tasks compared with state-of-the-art techniques.

1. Introduction

In this work, we define multi-domain as multiple datasets or several subsets of one dataset that are applied to complete the same task, but these datasets (or subsets) have different statistical biases. As some examples, images taken at Alps in the summer and in the winter are considered as two different domains, while faces with hair and faces with eyeglasses form another two different domains. Under this domain definition, for faces with black hair and faces with yellow hair, the black hair and yellow hair are two different attributes of the same domain. In multi-domain learning, each sample is drawn from a domain specific distribution and has a label , with signifying from domain , signifying not from domain .

Image-to-image translation is the task of learning to map images from one domain to another, e.g., mapping grayscale images to color images (Cao et al., 2017), mapping images of low resolution to images of high resolution (Ledig et al., 2017), changing the seasons of scenery images (Zhu et al., 2017), and reconstructing photos from edge maps (Isola et al., 2017). The most significant improvement in this research field came with the application of Generative Adversarial Networks (GANs) (Goodfellow et al., 2014; Liu and Tuzel, 2016).

The image-to-image translation can be performed in supervised (Isola et al., 2017) or unsupervised way (Zhu et al., 2017), with the unsupervised one becoming more popular since it does not need to collect ground-truth pairs of samples. Despite the quick progress of research on image-to-image translation, state-of-the-art results for unsupervised translation are still not satisfactory. In addition, existing research generally focuses on image-to-image translation between two domains, which is limited by two drawbacks. First, the translation task is specific to two domains, and the model has to be retrained when there is a need to perform image translation between another pair of similar domains. Second, it can not benefit from the features of multiple domains to improve the training quality. We take the most representative work in this research field CycleGAN (Zhu et al., 2017) as an example to illustrate the first limitation. The translation between two image domains and is achieved with two generators, and . However, this model is inefficient in completing the task of multi-domain image translation. To derive mappings across all domains, it has to train generators, as shown in Fig. 1a.

To enable more efficient multi-domain image translation with unsupervised learning where image pairing across domains is not predefined, we propose Crossing-Domain GAN (CD-GAN), which is a multi-domain encoding generative adversarial network that consists of a pair of encoders and a pair of generative adversarial networks (GANs). We would like the encoders to efficiently encode the information of all domains to form a high-level feature space with an encoding process, then images of different domains will be translated by decoding the high-level features with a decoding process. CD-GAN achieves this goal with the integrated use of three techniques. First, the two encoders are constrained by a weight sharing scheme, where the two encoders (or the two generators) share the same weights at both the highest-level layers and the lowest-level layers. This ensures that the two encoders can encode common high-level semantics as well as low-level details to obtain the feature space, based on which generators can decode the high-level semantics and low-level details correctly to generate images of different domains. Second, we use a selected or existing label to guide the generator to generate images of a corresponding domain from the high-level features learnt. Third, we propose an efficient training algorithm that jointly train the model across domains by randomly selecting two domains to train at each iteration.

Different from (Liu and Tuzel, 2016) where only weights at high-level layers of generators are shared, in CD-GAN, we propose the concurrent sharing of the lowest-level and the highest-level layers at both the encoders and the generators to improve the quality of image translation between any two domains. The sharing of highest layers between two encoders helps to enable more flexible cross-domain image translation, while the sharing of the lowest layers across domains helps improve the training quality by taking advantage of the transferring learning across domains.

The contributions of our work are as follows:

-

•

We propose CD-GAN that learns mappings across multiple domains using only two encoder-generator pairs.

-

•

We propose the concurrent use of weight-sharing at highest-level and lowest-level layers of both encoders and generators to ensure that CD-GAN generates images with sufficient useful high-level semantics and low-level details across all domains.

-

•

We leverage domain labels to make a conditional GAN training that greatly improves the performance of the model.

-

•

We introduce a cross-domain training algorithm that efficiently and sufficiently trains the model by randomly taking samples from two of domains at a time. CD-GAN can fully exploit data from all domains to improve the training quality for each individual domain.

Our experiment results demonstrate that when trained on more than two domains, our method achieves the same quality of image translation between any two domains as compared to directly training for translation between the pair. However, our model is established with much less training time and can generate better quality images for a given amount of time. We also show how CD-GAN can be successfully applied to a variety of unsupervised multi-domain image-to-image translation problems.

The remainder of this paper is organized as follows. Section 2 reviews the relevant research for image-to-image translation problems. Section 3 describes our model and training method in details. Section 4 presents our evaluation metrics, experimental methodology, and the evaluation results of the model’s accuracy and efficiency on different datasets. Finally, we discuss some limitations of our work and conclude our work in Section 5.

2. Related Work

2.1. Generative Adversarial Networks (GANs)

GANs (Goodfellow et al., 2014) were introduced to model a data distribution using independent latent variables. Let be a random variable representing the observed data and be a latent variable. The observed variable is assumed to be generated by the latent variable, i.e., , where can be explicitly represented by a generator in GANs. GANs are built on top of neural networks, and can be trained with gradient descent based algorithms.

The GAN model is composed of a discriminator , along with the generator . The training involves a min-max game between the two networks. The discriminator is trained to differentiate ‘fake’ samples generated from the generator from the ‘real’ samples from the true data distribution . The generator is trained to synthesize samples that can fool the discriminator by mistaking the generated samples for genuine ones. They both can be implemented using neural networks.

At the training phase, the discriminator parameters are firstly updated, followed by the update of the generator parameters . The objective function is given by:

| (1) | ||||

The samples can be generated by sampling , then , where is a prior distribution, for example, a multivariate Gaussian.

2.2. Image-To-Image Translation

Image-to-image translation problem is a kind of image generation task that given an input image of domain X, the model maps it into a corresponding output image of another domain Y. It learns a mapping between two domains given sufficient training data (Isola et al., 2017). Early works on image-to-image translation mainly focused on tasks where the training data of domain are similar to the data of domain (Gupta et al., 2012; Liu et al., 2008), and the results were often unrealistic and not diverse.

In recent years, deep generative models have shown increasing capability of synthesizing diverse, realistic images that capture both fine-grained details and global coherence of natural images (Gregor et al., 2015; Radford et al., 2015; Kingma and Welling, 2014). With Generative Adversarial Networks (GANs) (Isola et al., 2017; Zhu et al., 2017; Kim et al., 2017), recent studies have already taken significant steps in image-to-image translation. In (Isola et al., 2017), the authors use a conditional GAN on different image-to-image translation tasks, such as synthesizing photos from label maps and reconstructing objects from edge maps. However, this method requires input-output image pairs for training, which is in general not available in image-to-image translation problems. For situations where such training pairs are not given, in (Zhu et al., 2017), the authors proposed CycleGAN to tackle unsupervised image-to-image translation. With a pair of Generators and , the model not only learns a mapping using an adversarial loss, but constrains this mapping with an inverse mapping . It also introduces a cycle consistency loss to enforce , and vice versa. In settings where paired training data are not available, the authors showed promising qualitative results. The authors in (Kim et al., 2017) and (Yi et al., 2017) use similar idea to solve the unsupervised image-to-image translation tasks.

These approaches only tackle the problems of translating images between two domains, and have two major drawbacks. First, when applied to domains, these approaches need generators to complete the task, which is computationally inefficient. To train all models, it would either require a significant amount of time to complete if the training is performed on one GPU, or it will require a lot of hardware and computing resources if training is run over multiple GPUs. Second, as each model is trained with only two datasets, the training cannot benefit from the data of other domains.

Our work is inspired by multimodal learning (Ngiam et al., 2011), which shows that data features can be better extracted using one modality if multiple modalities are present at feature learning time. The intuition of our method is that if we can encode the information of different domains together and generate a high-level feature space, it would be possible to decode the high-level features to build images of different domains. In this work, rather than generating images from random noise, we incorporate an encoding process into a GAN model. The image-to-image translation can be achieved by first encoding real images into high-level features, and then generating images of different domains using the high-level features through a decoding process. The encoding process and the decoding process are constrained by a weight-sharing technique that both the highest layer and the lowest layer are shared across the two encoders as well as the two generators. Sharing the high-level layers makes sure that the generated images are semantically correct, while sharing the low-level layers ensures that important low-level features be captured and transferred between domains. Our model is trained end-to-end using data from all domains.

3. Cross-Domain Generative Adversarial Network

To conduct unsupervised multi-domain image-to-image translation, a direct approach is to train a CycleGAN for every two domains. While this approach is straightforward, it is inefficient as the number of training models increases quadratically with the number of domains. If we have domains, we have to train generators, as shown in Fig. 1a. In addition, since each model only utilizes data from two domains and to train, the training cannot benefit from the useful features of other domains.

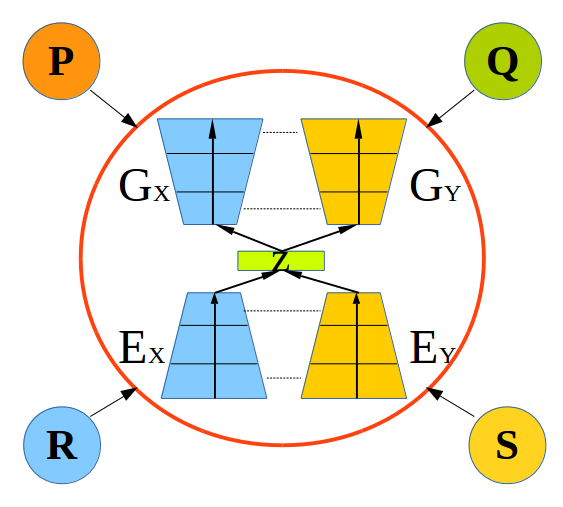

To tackle these two problems, a possible way is to encode useful information of all domains into common high level features, and then to decode the high-level features into images of different domains. Inspired by work (Ngiam et al., 2011) from mutimodal learning, where training data are from multiple modalities, we propose to build a multi-domain image translation model that can encode information of multiple domains into a set of high-level features, and then use features in to reconstruct data of different domains or to do image-to-image translation. The overview of the model applied to 4 domains is shown in Fig. 1b, where only one model is used.

In this section, we first present our proposed CD-GAN model, then describe how image translation can be performed across domains, and finally introduce our cross-domain training method.

3.1. CD-GAN with Double Layer Sharing

We first describe how to apply our model to multi-domain image-to-image translation in general then illustrate it using two domains as an example. As shown in Fig. 2a, our proposed CD-GAN model consists of a pair of encoders followed by a pair of GANs. Taking domain and as an example, the two encoders and encode domain information from and into a set of high-level features contained in a set . Then from a high-level feature in space , we can generate images that fall into domain or . The generated images are then evaluated by the corresponding discriminators and to see whether they look real and cannot be identified as generated ones. For example, following the red arrows, the input image is first encoded into a high-level feature , then is decoded to generate the image . The image is the translated image in domain . Similar processes exist for image .

Our model is also constrained by a reconstruction process shown in Fig. 2b. For example, following the red arrows, the input image is first encoded into a high-level feature , then is decoded to generate the image , which is a reconstruction of the input image. Similar processes exist for image .

Learning with deep neural networks involves hierarchical feature representation. In order to support flexible cross-domain image translation and also to improve the training quality, we propose the use of double-layer sharing where the highest-level and the lowest-level layers of the two encoders share the same weights and so does the two generators. By enforcing the layers that decode high-level features in GANs to share weights, the images generated by different generators can have some common high-level semantics. The layers that decode low-level details then map the high-level features to images in individual domains.

Sharing weights of low-level layers has the benefit of transferring low-level features of one domain to the other, thus making the image-to-image translation more close to real images in the respective domains. Besides, sharing layers reduces the complexity of the model, making it more resistant to the over-fitting problem.

3.2. Conditional Image Generation

In state-of-the-art techniques, like CycleGAN, each domain is described by a specific generator, thus there is no need to inform the generator which domain the input image is generated to. However, in our model, multiple domains share two generators. For an input image, we have to include an auxiliary variable to guide the generation of image for a specific domain. The only information we have is the domain labels. To make use of this information, the inputs of the model are not images , , but image pairs and where the labels and inform the generators which domains to generate an image for. These image pairs are not the same as the image pairs of supervised image-to-image generation tasks, which are . Thus no matter which domain images are the input, the model can always generate images of a domain of interest.

We denote the data distributions as and . As illustrated in Fig. 2, our model includes four mappings, two translation mappings , and two reconstruction mappings , . The translation mappings constrain the model by a GAN loss, while the reconstruction mappings constrain the model by a reconstruction loss. To further constrain the auxiliary variable, we introduce a classification loss by applying a classifier to classify the real or generated images into different domains. The intuition is that if images are generated with the guidance of the auxiliary variable, then it can be correctly classified into the domain specified by the auxiliary variable. Next, we introduce these model losses in more details as follows.

GAN Losses Following the translation mapping , we can translate image from domain to of domain using , . With the purpose of improving the quality of the generated samples, we apply adversarial loss. We express the objective as:

| (2) | ||||

where tries to generate images that look similar to images from domain , while aims to distinguish between translated samples and real samples . The similar adversarial loss for is

| (3) | ||||

The total GAN loss is:

| (4) |

Reconstruction Loss The reconstruction mappings , encourage the model to encode enough information to the high-level feature space from each domain. The input can then be reconstructed by the generators. The reconstruction process of domain is , . Similar reconstruction process exists for domain . With distance as the loss function, the reconstruction loss is:

| (5) | ||||

Latent Consistency Loss With only the above losses, the encoding part is not well constrained. We constrain the encoding part using a latent consistency loss. Although is translated to , which is in domain , is still semantically similar to . Thus, in the latent space , the high-level feature of should be close to that of . Similarly, the high-level feature of in domain should be close to the high-level feature of in domain . The latent consistency loss is the following:

| (6) | ||||

Classification Loss We consider domains as categories in the classification problems. We use a network , which is an auxiliary classifier, on top of the general discriminator to measure whether a sample (real or generated) belongs to a specific fine-grained category. The output of the classifier represents the posterior probability . Specifically, there are four classification losses, i.e., for real data , , and generated data , . For image-label pairs (, ) and (, ) with and our goal is to translate to with label , and to translate to with label . The four classification losses are:

| (7) | ||||

This loss can be used to optimize discriminators , , generators , , and encoders , .

Cycle Consistency Loss Although the minimization of GAN losses ensures that produce a sample similar to samples drawn from , the model still can be unstable and prone to failure. To tackle this problem, we further constrain our model with a cycle-consistency loss (Zhu et al., 2017). To achieve this goal, we want mapping from domain to domain and then back to domain to reproduce the original sample, i.e., and . Thus, the cycle-consistency loss is:

| (8) | ||||

Final Objective of CD-GAN To sum up, the goal of our approach is to minimize the following objective:

| (9) |

where , , and signify encoders , , generators , , and discriminators , , and , , , control the relative importance of the losses. Same as solving a regular GAN problem, training the model involves the solving of a min-max problem, where ,, , and aim to minimize the objective, while and aim to maximize it.

| (10) |

3.3. Cross-Domain Training

Our proposed model has two encoder-generator pairs, but we have data from domains. To train the model using samples of all domains equally, we introduce a cross-domain training algorithm. As shown in Fig. 1b, there are 4 domains. At each iteration, we randomly select two domains and , and feed training data of these two domains into the model. At the next iteration, we might take another two domains and , and perform the same training. We train the model using all data samples of domains at every epoch for several iterations. The training algorithm is shown in Algorithm 1. Cross-domain training ensures the model to learn a generic feature representation of all domains by training the model equally on independent domains.

4. experiment

In this section, we conduct experiments over three datasets to compare our proposed model with reference models in terms of image translation quality and efficiency.

4.1. Datasets

To evaluate the scalability and effectiveness of our model, we test it on a variety of multi-domain image-to-image translation tasks using the following datasets:

Alps Seasons dataset (Anoosheh et al., 2017) is collected from images on Flickr. The images are categorized into four seasons based on the provided timestamp of when it was taken. It consists of four categories: Spring, Summer, Fall, and Winter. The training data consists of 6053 images of four seasons, while the test data consists of 400 images.

Painters dataset (Zhu et al., 2017) includes painting images of four artists Monet, Van Gogh, Cezanne, and Ukiyo-e. We use 2851 images as the training set, and 200 images as the test set.

CelebA dataset (Liu et al., 2015) contains ten thousand identities, each of which has twenty images, i.e., two hundred thousand images in total. Each image in CelebA is annotated with 40 face attributes. We resize the initial size images to . We randomly select 4000 images as test set and use all remaining images for training data.

We run all the experiments on a Ubuntu system using an Intel i7-6850K, along with a single NVIDIA GTX 1080Ti GPU.

4.2. Reference Models

We compare the performance of our proposed CD-GAN with that of two reference models:

CycleGAN (Zhu et al., 2017) This method trains two generators and in parallel. It not only applies a standard GAN loss respectively for and , but applies forward and backward cycle consistency losses which ensure that an image from domain be translated to an image of domain , which can then be translated back to the domain , and vice versa.

DualGAN (Yi et al., 2017) This method uses a dual-GAN mechanism, which consists of a primal GAN and a dual GAN. The primal GAN learns to translate images from domain to domain , while the dual-GAN learns to invert the task. Images from either domain can be translated and then reconstructed. Thus a reconstruction loss can be used to train the model.

UNIT (Liu et al., 2017) This method consists of two VAE-GANs with a fully shared latent space. To complete the task of image-to-image translation between domains, it needs to be trained times.

DB (Hui et al., [n. d.]) This method addresses the multi-domain image-to-image translation problem by introducing domain-specific encoders/decoders to learn an universal shared-latent space.

4.3. Evaluation Metrics

There is a challenge to evaluate the quality of synthesized images (Salimans et al., 2016). Recent works have tried using pre-trained semantic classifiers to measure the realism and discriminability of the generated images. The idea is that if the generated images look to be more close to real ones, classifiers trained on the real images will be able to classify the synthesized images correctly as well. Following (Zhang et al., 2016; Isola et al., 2017; Wang and Gupta, 2016), to evaluate the performance of the models in classifying generated images quantitatively, we apply the metric classification accuracy. For each experiment, we generate enough number of images of different domains, then we use a pre-trained classifier which is trained on the training dataset to classify them to different domains and calculate the classification accuracy.

4.4. Network Architecture and Implementation

The design of the architecture is always a difficult task (Radford et al., 2015). To get a proper model architecture, we adopt the architecture of the discriminator from (Isola et al., 2017) which has been proven to be proficient in most image-to-image generation tasks. It has 6 convolutional layers. We keep the discriminator architecture fixed and vary the architectures of the encoders and generators. Following the design of the architectures of the generators in (Isola et al., 2017), we use two types of layers, the regular convolutional layers and the basic residual blocks (He et al., 2016). Since the encoding process is the inverse of the decoding process, we use the same layers for them but put the layers in the inverse orders. The only difference is the first layer of the encoder and the last layer of the generator. We apply channels (corresponding to different filters) for the first layer of the encoders, but channels for the last layer of the generators since the output images have only RGB channels. We gradually change the number of convolutional layers and the number of residual blocks until we get a satisfying architecture. We don’t apply weight sharing initially. The performance of different architectures is evaluated on the Painters dataset and shown in Fig. 3. We can see that when the model has 3 regular convolutional layers and 4 basic residual blocks, the model has the best performance. We keep this architecture fixed for other datasets.

We then vary the number of weight-sharing layers in the encoders and the generators. We change the number of weight-sharing layers from 1 to 4. Sharing 1 layer means sharing the highest layer and the lowest layers in the encoder pair. Sharing 2 layers means sharing the highest and lowest two layers. The same sharing method applies for the generator pair (not including the output layer). The results are shown in table 1. We found that sharing 1 layer is enough to have a good performance.

| # of shared layers | acc. % (Painters) | acc. % (Alps Seasons) |

| 0 | 49.75 | 29.95 |

| 1 | 52.54 | 33.78 |

| 2 | 52.81 | 33.54 |

| 3 | 51.13 | 33.06 |

In summary, for the testbed evaluation, we use two encoders each consisting of 3 convolutional layers and 4 basic residual blocks. The generators are composed with 4 basic residual blocks and 3 fractional-strided convolutional layers. The discriminators consist of a stack of 6 convolutional layers. We use LeakyReLU for nonlinearity. The two encoders share the same parameters on their layers 1 and 7, while the two generators share the same parameters on layers 1 and 6, which is the lowest-level layer before the output layer. The details of the networks are given in table 2. We evaluate various network architectures in the evaluation parts. We fix the network architecture as in Table 2.

| Layer | Encoders | Generators | Discriminators |

|---|---|---|---|

| 1 | |||

| 2 | |||

| 3 | |||

| 4 | |||

| 5 | |||

| 6 | |||

| 7 |

We use ADAM (Kingma and Ba, 2014) for training, where the training rate is set to 0.0001 and momentums are set to 0.5 and 0.999. Each mini-batch consists of one image from domain and one image from domain . Our model has several hyper-parameters. The default values are , , , and . The hyper-parameters of the baselines are set to the suggested values by the authors.

4.5. Quantitative Results

We evaluate our model on different datasets and compare it with baseline models.

4.5.1. Comparison on Painters Dataset

To compare the proposed model with baseline models Painters dataset, we first train the state-of-the-art VGG-11 model (Simonyan and Zisserman, 2014) on training data and get a classifier of accuracy 94.5%. We then score synthesized images by the classification accuracy against the domain labels these photos were synthesized from. We generate around 4000 images for every 5 hours and the classification accuracies are shown in Fig. 4.

We can see that our model achieves the highest classification accuracy of 52.5% when using both the highest layer and lowest layer sharing, with the training time less than the other reference models in reaching the peak.

4.5.2. Comparison on Alps Seasons Dataset

We train VGG-11 model on training data of Alps Seasons dataset and get a classifier of accuracy 85.5% trained on the training data. We then classify the generated images by our model and the classification accuracies are shown in Fig. 5.

Similar to Fig. 4, our model achieves the highest classification accuracy of 33.8% with the training time less than the baseline models in reaching the peak.

4.6. Analysis of the loss function

We compare the ablations of our full loss. As GAN loss and cycle consistency loss are critical for the training of unsupervised image-to-image translation, we keep these two losses as the baseline model and do the ablation experiments to see the importance of other losses.

| Loss | acc.% (Painters) | acc. % (Alps Seasons) |

|---|---|---|

| Baseline | 35.23 | 20.81 |

| Baseline + R | 36.86 | 21.59 |

| Baseline + LCL | 44.42 | 25.05 |

| Baseline + C | 43.63 | 24.01 |

| Baseline + R + LCL | 45.79 | 27.19 |

| Baseline + R + C | 44.82 | 26.63 |

| Baseline + LCL + C | 50.74 | 32.51 |

| Baseline + R + LCL + C | 52.54 | 33.78 |

As shown in Tabel 3, the reconstruction loss is least important with accuracy improvement of about 4.6% on Painters dataset and 3.7% on Alps Seasons dataset. The latent consistency loss brings the model an accuracy improvement of 26.1% on Painters dataset and 20.4% on Alps Seasons dataset. The accuracy is improved by 23.8% on Painters dataset and 15.4% on Alps Seasons dataset by the classification loss .

4.7. Qualitative Results

We demonstrate our model on three unsupervised multi-domain image-to-image translation tasks.

Painting style transfer (Fig. 6) We train our model on Painters dataset and use it to generate images of size . The model can transfer the painting style of a specific painter to the other painters, e.g., transferring the images of Cezanne to images of other three painters Monet, Ukiyoe and Vangogh. In Fig. 7, we also compare our model with other reference models when given the same test image.

Season transfer (Fig. 8) The model is trained on the Alps Seasons dataset. We use the trained model to generate images of different seasons. For example, we generate an image of summer from an image of spring and vice versa. In Fig. 9, we also compare our model with other reference models when given the same test image.

Attribute-base face translation (Fig. 10) We train the model on CelebA dataset for attribute-based face translation tasks. We choose 4 attributes, black hair, blond hair, brown hair, and gender. We then use our model to generate images with these attributes. For example, we transfer an image with a man wearing black hair to a man with blond hair, or transfer a man to a woman.

5. Conclusion

In this paper, we propose a Cross-Domain Generative Adversarial Networks (CD-GAN), a novel and scalable model to conduct unsupervised multi-domain image-to-image translation. We show its capability of translating images from one domain to many other domain using several datasets. It still has some limitations. First, training could be unstable due to the training problem of GAN model. Second, the diversity of the generated images are constrained by the cycle consistency loss. We plan to address these two problems in the future work.

References

- (1)

- Anoosheh et al. (2017) A. Anoosheh, E. Agustsson, R. Timofte, and L. Van Gool. 2017. ComboGAN: Unrestrained Scalability for Image Domain Translation. ArXiv e-prints (Dec. 2017). arXiv:cs.CV/1712.06909

- Cao et al. (2017) Yun Cao, Zhiming Zhou, Weinan Zhang, and Yong Yu. 2017. Unsupervised Diverse Colorization via Generative Adversarial Networks. In ECML/PKDD (1) (Lecture Notes in Computer Science), Vol. 10534. Springer, 151–166.

- Goodfellow et al. (2014) Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014. Generative Adversarial Nets. In Advances in Neural Information Processing Systems 27, Z. Ghahramani, M. Welling, C. Cortes, N. D. Lawrence, and K. Q. Weinberger (Eds.). Curran Associates, Inc., 2672–2680. http://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf

- Gregor et al. (2015) K. Gregor, I. Danihelka, A. Graves, D. Jimenez Rezende, and D. Wierstra. 2015. DRAW: A Recurrent Neural Network For Image Generation. ArXiv e-prints (Feb. 2015). arXiv:cs.CV/1502.04623

- Gupta et al. (2012) Raj Kumar Gupta, Alex Yong-Sang Chia, Deepu Rajan, Ee Sin Ng, and Huang Zhiyong. 2012. Image Colorization Using Similar Images. In Proceedings of the 20th ACM International Conference on Multimedia (MM ’12). ACM, New York, NY, USA, 369–378. https://doi.org/10.1145/2393347.2393402

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. 2016. Deep Residual Learning for Image Recognition. In CVPR. IEEE Computer Society, 770–778.

- Hui et al. ([n. d.]) L. Hui, X. Li, J. Chen, H. He, C. gong, and J. Yang. [n. d.]. Unsupervised Multi-Domain Image Translation with Domain-Specific Encoders/Decoders. ArXiv e-prints ([n. d.]). arXiv:1712.02050

- Isola et al. (2017) Phillip Isola, Jun-Yan Zhu, Tinghui Zhou, and Alexei A. Efros. 2017. Image-to-Image Translation with Conditional Adversarial Networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), 5967–5976.

- Kim et al. (2017) Taeksoo Kim, Moonsu Cha, Hyunsoo Kim, Jung Kwon Lee, and Jiwon Kim. 2017. Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. In Proceedings of the 34th International Conference on Machine Learning (Proceedings of Machine Learning Research), Doina Precup and Yee Whye Teh (Eds.), Vol. 70. PMLR, International Convention Centre, Sydney, Australia, 1857–1865. http://proceedings.mlr.press/v70/kim17a.html

- Kingma and Ba (2014) D. P. Kingma and J. Ba. 2014. Adam: A Method for Stochastic Optimization. ArXiv e-prints (Dec. 2014). arXiv:cs.LG/1412.6980

- Kingma and Welling (2014) Diederik P. Kingma and Max Welling. 2014. Auto-Encoding Variational Bayes. In Proceedings of the Second International Conference on Learning Representations (ICLR 2014).

- Ledig et al. (2017) Christian Ledig, Lucas Theis, Ferenc Huszar, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew P. Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, and Wenzhe Shi. 2017. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, July 21-26, 2017. 105–114. https://doi.org/10.1109/CVPR.2017.19

- Liu et al. (2017) Ming-Yu Liu, Thomas Breuel, and Jan Kautz. 2017. Unsupervised Image-to-Image Translation Networks. In Advances in Neural Information Processing Systems 30.

- Liu and Tuzel (2016) Ming-Yu Liu and Oncel Tuzel. 2016. Coupled Generative Adversarial Networks. In Advances in Neural Information Processing Systems 29, D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, and R. Garnett (Eds.). Curran Associates, Inc., 469–477. http://papers.nips.cc/paper/6544-coupled-generative-adversarial-networks.pdf

- Liu et al. (2008) Xiaopei Liu, Liang Wan, Yingge Qu, Tien-Tsin Wong, Stephen Lin, Chi-Sing Leung, and Pheng-Ann Heng. 2008. Intrinsic Colorization. ACM Trans. Graph. 27, 5, Article 152 (Dec. 2008), 9 pages. https://doi.org/10.1145/1409060.1409105

- Liu et al. (2015) Ziwei Liu, Ping Luo, Xiaogang Wang, and Xiaoou Tang. 2015. Deep Learning Face Attributes in the Wild. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV) (ICCV ’15). IEEE Computer Society, Washington, DC, USA, 3730–3738. https://doi.org/10.1109/ICCV.2015.425

- Ngiam et al. (2011) Jiquan Ngiam, Aditya Khosla, Mingyu Kim, Juhan Nam, Honglak Lee, and Andrew Y. Ng. 2011. Multimodal Deep Learning. In Proceedings of the 28th International Conference on International Conference on Machine Learning (ICML’11). Omnipress, USA, 689–696. http://dl.acm.org/citation.cfm?id=3104482.3104569

- Radford et al. (2015) A. Radford, L. Metz, and S. Chintala. 2015. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. ArXiv e-prints (Nov. 2015). arXiv:cs.LG/1511.06434

- Salimans et al. (2016) Tim Salimans, Ian Goodfellow, Wojciech Zaremba, Vicki Cheung, Alec Radford, Xi Chen, and Xi Chen. 2016. Improved Techniques for Training GANs. In Advances in Neural Information Processing Systems 29, D. D. Lee, M. Sugiyama, U. V. Luxburg, I. Guyon, and R. Garnett (Eds.). Curran Associates, Inc., 2234–2242. http://papers.nips.cc/paper/6125-improved-techniques-for-training-gans.pdf

- Simonyan and Zisserman (2014) K. Simonyan and A. Zisserman. 2014. Very Deep Convolutional Networks for Large-Scale Image Recognition. ArXiv e-prints (Sept. 2014). arXiv:cs.CV/1409.1556

- Wang and Gupta (2016) X. Wang and A. Gupta. 2016. Generative Image Modeling using Style and Structure Adversarial Networks. ArXiv e-prints (March 2016). arXiv:cs.CV/1603.05631

- Yi et al. (2017) Z. Yi, H. Zhang, P. Tan, and M. Gong. 2017. DualGAN: Unsupervised Dual Learning for Image-to-Image Translation. In 2017 IEEE International Conference on Computer Vision (ICCV). 2868–2876. https://doi.org/10.1109/ICCV.2017.310

- Zhang et al. (2016) R. Zhang, P. Isola, and A. A. Efros. 2016. Colorful Image Colorization. ArXiv e-prints (March 2016). arXiv:cs.CV/1603.08511

- Zhu et al. (2017) J. Y. Zhu, T. Park, P. Isola, and A. A. Efros. 2017. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In 2017 IEEE International Conference on Computer Vision (ICCV). 2242–2251. https://doi.org/10.1109/ICCV.2017.244